Abstract

A crucial problem in achieving high-throughput materials growth with machine learning, such as Bayesian optimization (BO), and automation techniques has been the lack of an appropriate way to handle missing data caused by experimental failures. Here, we propose a BO algorithm that complements the missing data in optimizing materials growth parameters. The proposed method provides a flexible optimization algorithm that searches a wide multi-dimensional parameter space. We demonstrate the effectiveness of the method with simulated data as well as in its implementation for actual materials growth, namely machine-learning-assisted molecular beam epitaxy (ML-MBE) of SrRuO3, which is widely used as a metallic electrode in oxide electronics. Through exploitation and exploration in a wide three-dimensional parameter space, while complementing the missing data, we attained a tensile-strained SrRuO3 film with a high residual resistivity ratio of 80.1, the highest among tensile-strained SrRuO3 films ever reported, in only 35 MBE growth runs.


Introduction

Recent advances in materials informatics exploiting machine-learning techniques, such as Bayesian optimization (BO) and artificial neural networks, offer an opportunity to accelerate materials research1,2,3,4,5. In particular, data-driven decision-making approaches, in which machine-learning models are incrementally updated with newly measured data, have achieved high throughput in experiments6,7,8,9,10. BO is a sample-efficient approach for global optimization11. It has proven useful for streamlining the optimization of synthesis conditions for bulk12 and thin-film materials13. Further acceleration of experimental optimization by designing Gaussian process (GP) models that leverage prior knowledge, such as physical models, is also in progress14,15. Taking thin-film growth as an example, the BO algorithm proposes the growth conditions to be examined in the next growth run, which enables automatic optimization of the growth parameters. By combining such algorithms with automated growth16,17,18 and characterization apparatuses, the entire growth process has been automated. These approaches are collectively referred to as autonomous materials synthesis19,20,21,22,23,24.

The missing data problem is a general issue encountered in materials informatics when analyzing real materials data25,26. Since missing data are common in various materials databases, appropriate handling of missing data is vital for accelerating materials research. This problem is also critical in optimizing the conditions for materials growth, where missing data arise in the growth parameter space whenever the target material cannot be obtained because the growth parameters are far from optimal. One possible solution is to restrict the search space of growth parameters so that it excludes the experimental failures that lead to missing data. Such restriction may be carried out empirically based on the experience and intuition of researchers and/or the information available in databases and the literature. However, there is no guarantee that the optimal growth parameters for the target material lie within such a small parameter space. Therefore, to maximize the benefit of high-throughput materials growth, including autonomous materials synthesis, it is essential to search a wide parameter space while complementing the missing data generated by unsuccessful growth runs, e.g., when the designated phase is not formed.

This study proposes a BO method capable of handling missing data even when a target material has not formed because the growth parameters are far from optimal. We set the evaluation value of each missing data point to the worst evaluation value obtained up to that time. This imputation of experimental failures enables us to search a wide parameter space while avoiding unstable parameter regions. We demonstrate the effectiveness of the BO method with experimental failure first by simulation with virtual data and then through implementation for real materials growth, namely machine-learning-assisted molecular beam epitaxy (ML-MBE) of itinerant ferromagnetic perovskite SrRuO3 thin films, where we used the residual resistivity ratio (RRR) as the evaluation value. Through exploitation and exploration in a wide three-dimensional parameter space, we achieved an RRR of 80.1, the highest ever reported among tensile-strained SrRuO3 films, in only 35 MBE growth runs. Notably, tensile-strained SrRuO3 thin films obtained by epitaxial strain have shown a higher Curie temperature than bulk or compressive-strained films27. The proposed method provides a flexible optimization algorithm for a wide multi-dimensional parameter space that accounts for experimental failure, and it will enhance the efficiency of high-throughput materials growth and autonomous materials growth.

Results and discussion

Simulation with virtual data

Our parameter search problem is described as follows. Given a multidimensional parameter denoted by vector x, an experimental trial returns its evaluation y. In materials growth optimization, x and y represent the growth parameters and a physical property used to evaluate the grown materials, respectively. Examples of such physical properties include electrical resistance and X-ray diffraction intensity; the choice of evaluation metric should be determined by the purpose of the study. We assume additive observation noise, y = S(x) + e, where e follows the normal distribution \(e \sim \mathcal{N}(0, \sigma^2)\). This noise in y corresponds to experimental fluctuations of the physical property of interest among materials prepared nominally under the same conditions. Here, the relationship between the parameter and the evaluation, denoted by the function S(⋅), is unknown a priori. Our goal is to find x* that maximizes the corresponding evaluation S(x*). Since the underlying S is unknown, we sequentially carry out experimental trials with parameters that are likely to return better evaluations given past observations. That is, we choose a parameter xn whose result \(y_n \approx S(\mathbf{x}_n)\) is predicted to be high given the data observed so far, \(\{(\mathbf{x}_1, y_1), \ldots, (\mathbf{x}_{n-1}, y_{n-1})\}\), where xn and yn are the nth parameter and evaluation values, respectively. Bayesian optimization is adopted for this sequential parameter search (see the Methods section ‘Bayesian optimization with experimental failure’ for details).

Our technical challenge is how to handle experimental failures: evaluation yn may not be returned for a specified parameter xn. To meet this challenge, we need to satisfy two requirements. First, if the target material has not formed under certain synthesis conditions, the optimization procedure should avoid subsequent failures. Second, even if a parameter xn turns out to be a failure, the prediction model should be updated, because the lack of evaluation yn itself provides information. For example, if xn led to a failure, parameters at a distance from the failed xn should be favored, because xn is either far from the optimal parameter region or a condition under which another, undesired material is stabilized. Thus, a failure can guide the sequential optimization by encouraging exploration of other parameter regions.

This paper examines two approaches to coping with experimental failures. The first approach is called the floor padding trick: when the experiment with parameter xn fails, the floor padding trick uses the worst value observed so far, namely, yn is complemented by \(\min_{1 \le i < n} y_i\) taken over the successful observations. This simple trick informs the search algorithm that the attempted parameter xn performed poorly. At the same time, the method is adaptive and automatic: the past experiments determine how bad a failure should count. In contrast, a naïve alternative is to assign a predetermined constant value to failures, which may require careful tuning of the padding constant. The floor padding trick fulfills both requirements discussed above: the worst evaluation helps avoid parameters near the failure and updates the prediction model as well. The other approach is a binary classifier of failures: in addition to the prediction model for the evaluation value yn, we employ a classifier to predict whether a given parameter xn leads to a failure. The binary classifier meets the first requirement of avoiding subsequent failures. Nevertheless, the second requirement remains unaddressed, since the classifier may not affect the evaluation prediction when the two are distinct models. Thus, floor padding or naïve constant padding is combined with the binary classifier to update the prediction model accordingly.
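The two padding rules can be sketched as follows. This is a minimal Python illustration, not the authors' code: `pad_failures` and its arguments are hypothetical names, failures are encoded as NaN (as in the Methods), `mode="constant"` corresponds to the @0/@−1 style baselines, and `first_guess` stands in for the "reasonable guess" used when no successful observation exists yet. The floor value is recomputed from the whole history on every call, so an earlier padded value can drop when a worse success arrives.

```python
import math
from typing import List, Sequence


def pad_failures(ys: Sequence[float], mode: str = "floor", constant: float = 0.0,
                 first_guess: float = 0.0) -> List[float]:
    """Replace failed evaluations (NaN) before fitting the prediction model.

    mode="floor":    each failure gets the worst successful value observed so far.
    mode="constant": each failure gets the fixed value `constant` (naive baseline).
    `first_guess` is used in floor mode when no success has been observed yet.
    """
    successes = [y for y in ys if not math.isnan(y)]
    if mode == "floor":
        fill = min(successes) if successes else first_guess
    elif mode == "constant":
        fill = constant
    else:
        raise ValueError(f"unknown mode: {mode}")
    return [fill if math.isnan(y) else y for y in ys]


nan = float("nan")
print(pad_failures([10, nan, 15]))                           # failure padded with 10
print(pad_failures([10, nan, 15, 5]))                        # same failure now padded with 5
print(pad_failures([10, nan, 15], mode="constant", constant=-1))
```

The second call reproduces the situation described in the Methods: once a worse success (5) is observed, the padded value for the earlier failure follows it downward, which is what makes the rule adaptive.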

We designed several methods by combining the floor padding trick and the binary classifier. These methods are compared using simulated functions, in which the evaluation is calculated by artificial functions (see the Methods section ‘Simulated functions’ for details). The methods are designed to investigate the efficiency of various combinations of the floor padding trick (abbreviated as ‘F’) and the binary classifier (abbreviated as ‘B’), as summarized in Table 1. When F is active, the method uses floor padding for experimental failures, whereas without F, the baseline uses a predetermined constant value. Similarly, when B is active, the method constructs a binary classifier28 to predict failures in addition to the prediction model of the evaluation, both of which are based on the Gaussian process29 (see the Methods section ‘Bayesian optimization with experimental failure’ for details). In this work, each method searched the three types of simulated parameter spaces (Fig. 1) until 100 observations were queried. This search process was repeated five times to account for randomness. For each process, five search points were randomly chosen as the initial observations. The observation noise variance was set to \(\sigma^2 = 0.005\).
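A minimal sketch of the simulation bookkeeping, under stated assumptions: `observe` returns NaN inside a failure region and otherwise adds Gaussian noise with variance 0.005 to a test function, while `best_so_far` and `summarize_runs` compute the kind of curves and min/max bands plotted in Figs. 2–4. All names are hypothetical, and tracking the best *observed* (noisy) value rather than the noise-free S(x) is our assumption.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

NOISE_VAR = 0.005  # observation noise variance used in the simulations


def observe(S: Callable[[Sequence[float]], float],
            fails: Callable[[Sequence[float]], bool],
            x: Sequence[float], rng: random.Random) -> float:
    """Simulated experiment: NaN for a failure, otherwise S(x) plus Gaussian noise."""
    if fails(x):
        return float("nan")
    return S(x) + rng.gauss(0.0, math.sqrt(NOISE_VAR))


def best_so_far(ys: Sequence[float]) -> List[float]:
    """Running maximum of successful evaluations (NaN until the first success)."""
    best = float("nan")
    curve = []
    for y in ys:
        if not math.isnan(y) and (math.isnan(best) or y > best):
            best = y
        curve.append(best)
    return curve


def summarize_runs(runs: Sequence[Sequence[float]]) -> List[Tuple[float, float, float]]:
    """(mean, worst, best) of the best-so-far value at each step across runs.

    Assumes equal-length runs that each already contain a success at every step
    being summarized; NaN entries are not handled.
    """
    curves = [best_so_far(r) for r in runs]
    return [(sum(v) / len(v), min(v), max(v)) for v in zip(*curves)]
```

The mean column corresponds to the averaged curves, and the worst/best columns to the shaded areas.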

Figure 2 shows the parameter search results for the Circle function. Each curve indicates the best evaluation value averaged over five runs as a function of the number of observations, whereas the shaded areas represent the best and worst evaluations among the five runs for each method. A curve that rises with fewer observations indicates a better search algorithm, since it requires fewer resources to reach a high evaluation. A vertically narrow shaded area means that the method performs robustly against randomness in the search process, such as the initial choice of parameters and observation noise. First, we observe that the choice of the constant value used to replace failed evaluations affects the curves in Fig. 2b. In particular, the slope of the curves at the early stage is sensitive to the choice of the constant; for example, baseline @0 shows a quick improvement at first, while @−1 improves more slowly. Appropriate tuning of this value may be difficult in general and may depend on the experience and knowledge of individual researchers. As shown in Fig. 2a, method F with the floor padding trick shows an initial improvement as quick as that of @0 without careful tuning of the constant. That said, the final average evaluation of F is lower than that of the best baseline, @−1. Second, method FB, which combines floor padding with the binary classifier, improves the evaluation more slowly (Fig. 2a). The binary classifier alleviates the sensitivity to the choice of the constant value for handling failures, in that the discrepancy between the curves in Fig. 2c is smaller than that in Fig. 2b. However, the initial improvements in the evaluation of B@0 and B@−1 are inferior to that of F (Fig. 2a), and their final evaluations are also exceeded by @−1 (Fig. 2b).

Best evaluation values averaged over five runs as a function of the number of observations. Shaded areas indicate the range of the best and worst values of five runs. a Methods F and FB that adaptively replace failed evaluations by the floor padding. b Baseline methods that use a constant value for the failed evaluations. Method @0 used the fixed value of 0 when the experiment failed, whereas @−1 used −1. c Methods with classifier while padding a constant to failures. B@0 used a value of 0 as the evaluation when the experiment failed, whereas B@−1 used −1.

Figure 3 shows the results for the Hole function. The overall tendency of the two tricks is similar to that of Circle: the floor padding leads to quick improvements of the evaluation in the early part of the search process (Fig. 3a). We observe sensitivity to the choice of padding constant in Fig. 3b: the baseline of @−1 quickly improved the evaluation and reached a high evaluation on average at the end, whereas @0 struggled to find good parameters. Note that a favorable choice of the padding constant depends on the function: baseline @0 showed quicker improvements for the Circle function (Fig. 2b), while @−1 performed better for the Hole function (Fig. 3b). Method F with the floor padding trick gave competitive curves at early stages for both functions compared to the baseline methods. Another similarity to the Circle result is that method B shows less sensitivity to the choice of constant in Fig. 3c and, at the same time, slower improvement compared to method F in Fig. 3a or @−1 in Fig. 3b with a well-chosen constant. Method FB, the combination of the floor padding and binary classifier, was comparable to method F, as shown in Fig. 3a.

Best evaluation values averaged over five runs as a function of the number of observations. Shaded areas indicate the range of the best and worst values of five runs. a Methods F and FB that adaptively replace failed evaluations by the floor padding. b Baseline methods that use a constant value for the failed evaluations. Method @0 used the fixed value of 0 when the experiment failed, whereas @−1 used −1. c Methods with classifier while padding a constant to failures. B@0 used a value of 0 as the evaluation when the experiment failed, whereas B@−1 used −1.

Figure 4 shows the optimization results for the Softplus function, whose maximum lies on the boundary of the failed region. Despite this difficult location of the maximum, all the methods demonstrated steady improvements during the search process. However, methods with the binary classifier, such as FB, sometimes got stuck at a suboptimal value, as suggested by the wider shaded area in Fig. 4a. One reason is that the classifier made the exploration conservative: the proportion of failed observations was 11.2% for method F over the five trials, whereas it was 7.4% for FB. This indicates that FB tried to avoid failures and missed the maximum at the boundary. A comparison of F, @0, and @−1 showed the efficacy of method F without the effort of choosing a padding value.

Best evaluation values averaged over five runs as a function of the number of observations. Shaded areas indicate the range of the best and worst values of five runs. a Methods F and FB that adaptively replace failed evaluations by the floor padding. b Baseline methods that use a constant value for the failed evaluations. Method @0 used the fixed value of 0 when the experiment failed, whereas @−1 used −1. c Methods with classifier while padding a constant to failures. B@0 used a value of 0 as the evaluation when the experiment failed, whereas B@−1 used −1.

Our simulation experiments confirmed the efficacy of the floor padding: this trick is beneficial for initial quick search progress and sidesteps the difficult choice of padding constants. As for the binary classifier, this approach also alleviates the sensitivity to the choice of constant, though the search progress can be slowed. Furthermore, combining it with the floor padding was not as effective as expected. Thus, we adopted method F for the ML-MBE growth of SrRuO3 films to make the most of limited resources for experiments.

Application to ML-MBE of SrRuO3 films

To demonstrate the applicability and effectiveness of the BO method with experimental failure in actual materials growth, we applied method F to our recently developed ML-MBE13 of SrRuO3 films on DyScO3 (110) substrates. The itinerant 4d ferromagnetic perovskite SrRuO3 is one of the most promising materials for oxide electronics owing to its high metallic conduction, chemical stability, compatibility with other perovskite oxides, and ferromagnetism with strong uniaxial magnetic anisotropy30,31,32,33,34,35,36,37,38,39. In addition, the recent observation of Weyl fermions in SrRuO3 points to this material as an appropriate platform for integrating two emerging fields: topology in condensed matter and oxide electronics40,41,42,43,44. The RRR value, defined as the ratio of the resistivity at 300 K [ρ(300 K)] to that at 4 K [ρ(4 K)], is a good measure of the purity of metallic systems and, accordingly, of the quality of single-crystalline SrRuO3 thin films35,39,45,46,47. For practical applications of SrRuO3, such as electrodes in dynamic random access memory, high-quality SrRuO3 films are necessary48,49. From the viewpoint of fundamental physics, high-quality SrRuO3 films are also indispensable, because only SrRuO3 films with RRR values above 20 have enabled the observation of quantum transport of Weyl fermions41,42. Thus, we adopted RRR as the evaluation value.

Figure 5 shows the flow of the ML-MBE growth using the BO with experimental failure (see the Methods section ‘ML-MBE growth and sample characterizations’ for details). For the growth of high-quality SrRuO3, fine-tuning of the growth conditions (the supplied Ru/Sr flux ratio, growth temperature, and O3-nozzle-to-substrate distance) is important13,49. In a previous study13, to simplify the intricate search space of entangled growth conditions, we ran the BO for a single growth condition while keeping the other conditions fixed. In addition, to avoid experimental failures, the search range was restricted to the growth parameter range within which the SrRuO3 phase had formed. In contrast, in the present study, we ran the BO algorithm directly in the three-dimensional space. Following method F, when the SrRuO3 phase had not formed, we set the RRR value of those samples to the worst experimental RRR value obtained up to that time, instead of reducing the search range. The search ranges for the Ru flux rate, growth temperature, and O3-nozzle-to-substrate distance were 0.25–0.50 Å/s, 700–900 °C, and 10–50 mm, respectively. Here, the Ru/Sr flux ratio is determined by the Ru flux rate, as the Sr flux rate was fixed at 0.98 Å/s. The O3-nozzle-to-substrate distance is a parameter controlling the oxidation strength.
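To make the search space concrete, the sketch below maps the three ML-MBE growth parameters onto the unit cube and back, assuming the normalization in the Methods is the usual min–max scaling onto [0, 1]. It is illustrative Python only: the dictionary keys and function names are hypothetical, and only the numerical ranges come from the text. As a check, the best growth condition reported in this study (0.365 Å/s, 826 °C, 22 mm) corresponds to the normalized point (0.46, 0.63, 0.30).

```python
from typing import Dict, List, Sequence, Tuple

# Search ranges from the text: Ru flux rate (Å/s), growth temperature (°C),
# O3-nozzle-to-substrate distance (mm). The key names are illustrative only.
BOUNDS: Dict[str, Tuple[float, float]] = {
    "ru_flux_A_per_s": (0.25, 0.50),
    "growth_temp_C": (700.0, 900.0),
    "o3_distance_mm": (10.0, 50.0),
}


def normalize(x: Sequence[float]) -> List[float]:
    """Map physical growth parameters to [0, 1] via (x - lower) / (upper - lower)."""
    return [(v - lo) / (hi - lo) for v, (lo, hi) in zip(x, BOUNDS.values())]


def denormalize(u: Sequence[float]) -> List[float]:
    """Map a point of the unit cube (e.g., an EI maximizer) back to growth conditions."""
    return [lo + t * (hi - lo) for t, (lo, hi) in zip(u, BOUNDS.values())]


print(normalize([0.365, 826.0, 22.0]))  # best reported condition
print(denormalize([0.5, 0.5, 0.5]))     # centre of the search space
```

Working in normalized coordinates lets a single set of kernel length scales be meaningful across parameters whose physical units differ by orders of magnitude.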

a Schematic illustration of our multisource oxide MBE setup. EIES: Electron Impact Emission Spectroscopy. b Resistivity vs. the temperature curve of the SrRuO3/DyScO3 film with a RRR of 80.1, as an example. c Growth conditions for four samples, as an example. d Two-dimensional plots of EI values at the O3-nozzle-to-substrate distance of 22.5 mm obtained from the collected data for 18 samples, as an example.

Figure 6 shows how the BO algorithm predicts RRR values for unseen parameter configurations and acquires new data points. The process starts with five random initial growth parameters (Fig. 6a) and adds experimental RRR values to update the Gaussian process regression (GPR) model with 18 (Fig. 6b) and 37 (Fig. 6c) samples. Two-dimensional plots of the predicted RRR, standard deviation s, and EI values at the O3-nozzle-to-substrate distance at which the highest EI value was obtained are shown in the lower panels (Fig. 6d–l). Among the five random initial growth parameters, the SrRuO3 phase had not formed at a Ru flux rate of 0.47 Å/s, growth temperature of 832 °C, and O3-nozzle-to-substrate distance of 25 mm (Fig. 6a). Thus, we set the RRR value of this sample to the worst experimental value at that time (13.1). This imputation of experimental failure enabled a direct search of the wide three-dimensional parameter space. The prediction from the five initial samples yielded the highest EI at an O3-nozzle-to-substrate distance 1.5 mm larger than that for the highest experimental RRR value at that time (Fig. 6a). The RRR obtained at the next set of growth parameters (RRR = 35) was slightly larger than the highest RRR of the five initial samples (RRR = 33.4). The region with relatively small s of the predicted RRR expanded as the number of experimental samples increased from five to 37 (Fig. 6g–i), indicating that the accuracy of the prediction had increased. The small s values result in lower EI values (Fig. 6j–l), which suggests that only limited room remains to improve the RRR value by further modifying the growth parameters. Through this exploitation and exploration of the materials growth data with experimental failure in the three-dimensional parameter space, the highest RRR value increased and exceeded 80 in only 35 MBE growth runs (Fig. 7).
The highest experimental RRR of 80.1 was achieved at a Ru flux rate of 0.365 Å/s, growth temperature of 826 °C, and O3-nozzle-to-substrate distance of 22 mm. This is the highest RRR ever reported among tensile-strained SrRuO3 films27,35. Obtaining the target material with such a high RRR in so few optimization runs demonstrates the effectiveness of this method utilizing the floor padding trick for high-throughput materials growth.

a–c Experimental RRR values in the three-dimensional growth parameter space for 5 (a), 18 (b), and 37 (c) samples. The purple spheres indicate the NaN points at which the SrRuO3 phase was not obtained. The red spheres indicate the next conditions at which the highest EI values were obtained. The red planes indicate the cutting plane of the O3-nozzle-to-substrate distance at which the highest EI value was obtained. d–l Two-dimensional plots of predicted RRR values (d–f), standard deviation s values (g–i), and EI values (j–l) at the O3-nozzle-to-substrate distance of 15.5 mm (d, g, j), 22.5 mm (e, h, k), and 19.5 mm (f, i, l), which were obtained from the collected data for 5 (d, g, j), 18 (e, h, k), and 37 (f, i, l) samples, respectively. The O3-nozzle-to-substrate distance was that at which the highest EI value was obtained.

In summary, we presented a BO algorithm that complements missing data. We designed several methods by combining the floor padding trick and the binary classifier and compared them in simulation experiments. The simulations confirmed the efficacy of the floor padding trick, whereas the binary classifier slowed the search in the early stages. Imputing missing data with the worst experimental RRR value obtained up to that time allows us to search a wide parameter space directly and will enhance the time and cost efficiency of materials growth and autonomous materials synthesis. In the growth parameter optimization for high-quality tensile-strained SrRuO3 thin films, an experimental RRR of 80.1 was achieved in only 35 ML-MBE runs with the floor padding trick. The proposed method, which provides a flexible optimization algorithm for a wide multi-dimensional parameter space that accounts for experimental failure, will play an essential role in the growth of various materials.

Methods

Bayesian optimization with experimental failure

Here, we describe our parameter search method based on Bayesian optimization. Our notation uses italic characters for scalar values, bold lower-case symbols for vectors, and bold upper-case characters for matrices. Supplementary Fig. 1 outlines our method. Let \(x_{n,d}\) denote the dth element of the parameter in the nth trial; that is, the D-dimensional parameter is written as a column vector \(\mathbf{x}_n = [x_{n,1}, \ldots, x_{n,D}]^\top\), where \(\cdot^\top\) is the transpose operator. We assume the search space is bounded; namely, every element has minimum and maximum values \(\underline{x}_d\) and \(\bar{x}_d\), respectively. The nth evaluation \(y_n\) is a real number when the experiment succeeds and is denoted by ϕ otherwise; in our implementation, ϕ = NaN (not a number) for experimental failure. The parameter is first normalized as

\[ \tilde{x}_{n,d} = \frac{x_{n,d} - \underline{x}_d}{\bar{x}_d - \underline{x}_d}, \quad d = 1, \ldots, D, \tag{1} \]

such that all elements of \(\widetilde{\mathbf{x}}_n\) fall between 0 and 1. This facilitates the training of the Gaussian process used as the prediction model. The floor padding trick preprocesses the evaluations as follows. Let \(y_1, \ldots, y_{n-1}\) be the evaluations observed so far. The failed evaluations are replaced with the worst successful value:

\[ \tilde{y}_{n'} = \begin{cases} y_{n'} & \text{if } y_{n'} \ne \phi, \\ \min_{1 \le i \le n-1,\ y_i \ne \phi} y_i & \text{if } y_{n'} = \phi, \end{cases} \tag{2} \]

for \(n^\prime = 1, \ldots ,n - 1\). If y1 = ϕ, then \(\tilde y_1 = 0\) or any other reasonable guess of low evaluation. Note that the padded value may vary later; for example, when we have observed \(y_1 = 10,y_2 = \phi ,y_3 = 15\), \(\tilde y_2\) is padded with the worst observed value 10. If we later obtain the observations as \(y_1 = 10,y_2 = \phi ,y_3 = 15,y_4 = 5\), then we use \(\tilde y_2 = 5\) to determine the fifth experimental condition.

We start the search by trying a random initial point x1 to obtain the first evaluation y1; depending on the budget for the total number of experiments, we may instead start with multiple initial points. We then iterate three steps: updating the prediction model, deciding the parameter for the next experiment, and receiving its evaluation through the actual experimental process.

Given the n−1 preprocessed observations \(\mathcal{D}_{n-1} := \{\widetilde{\mathbf{x}}_i, \tilde{y}_i\}_{i=1}^{n-1}\) obtained so far, we fit the Gaussian process to predict the evaluation in the unexplored parameter region and to decide which parameter to try next. The predictive evaluation \(\tilde{y}'\) at the normalized parameter \(\widetilde{\mathbf{x}}'\) given \(\mathcal{D}_{n-1}\) follows the normal distribution50 \(p(\tilde{y}' \mid \widetilde{\mathbf{x}}', \mathcal{D}_{n-1}) = \mathcal{N}(m(\widetilde{\mathbf{x}}'), s^2(\widetilde{\mathbf{x}}'))\), where the mean and variance are calculated as follows:

\[ m(\widetilde{\mathbf{x}}') = \mathbf{k}_{n-1}(\widetilde{\mathbf{x}}')^\top \left(\mathbf{K}_{n-1} + \sigma^2 \mathbf{I}\right)^{-1} \tilde{\mathbf{y}}_{n-1}, \tag{3} \]

\[ s^2(\widetilde{\mathbf{x}}') = k(\widetilde{\mathbf{x}}', \widetilde{\mathbf{x}}') - \mathbf{k}_{n-1}(\widetilde{\mathbf{x}}')^\top \left(\mathbf{K}_{n-1} + \sigma^2 \mathbf{I}\right)^{-1} \mathbf{k}_{n-1}(\widetilde{\mathbf{x}}'). \tag{4} \]

Here, \(k(\widetilde{\mathbf{x}}', \widetilde{\mathbf{x}}')\) is the kernel function evaluated at \(\widetilde{\mathbf{x}}'\), \(\mathbf{k}_{n-1}(\widetilde{\mathbf{x}}')\) is the kernel vector, \(\mathbf{K}_{n-1}\) is the kernel matrix, \(\tilde{\mathbf{y}}_{n-1} = [\tilde{y}_1, \ldots, \tilde{y}_{n-1}]^\top\), \(\sigma^2\) is the observation noise variance, and \(\mathbf{I}\) is an identity matrix of appropriate size. The mean \(m(\widetilde{\mathbf{x}}')\) and standard deviation \(s(\widetilde{\mathbf{x}}')\) of the predictive distribution in Eqs. (3) and (4) are computed with matrix and vector operations. The kernel vector is defined with the kernel function \(k(\cdot, \cdot)\): \(\mathbf{k}_{n-1}(\widetilde{\mathbf{x}}') = [k(\widetilde{\mathbf{x}}', \widetilde{\mathbf{x}}_1), \ldots, k(\widetilde{\mathbf{x}}', \widetilde{\mathbf{x}}_{n-1})]^\top\). The element in the ith row and jth column of \(\mathbf{K}_{n-1}\) is \(k_{ij} = k(\widetilde{\mathbf{x}}_i, \widetilde{\mathbf{x}}_j)\). The kernel represents the similarity between two parameters: when \(\widetilde{\mathbf{x}}_i\) and \(\widetilde{\mathbf{x}}_j\) are close to each other, \(k_{ij}\) takes a large value, which leads to a high correlation between \(\tilde{y}_i\) and \(\tilde{y}_j\). For Bayesian optimization, the radial basis function (RBF) kernel and the Matérn 5/2 kernel are popular choices. With the distance \(\Delta_{ij} = \sqrt{\sum_{d=1}^{D} (\tilde{x}_{i,d} - \tilde{x}_{j,d})^2 / s_d}\), the RBF kernel is defined as \(k_{ij} = A \exp(-\Delta_{ij}^2)\), and the Matérn 5/2 kernel as \(k_{ij} = A \left(1 + \sqrt{5}\Delta_{ij} + \frac{5}{3}\Delta_{ij}^2\right) \exp(-\sqrt{5}\Delta_{ij})\). Here, \(A > 0\) and \(s_d > 0\) are kernel hyperparameters, usually tuned to maximize the likelihood of the observations \(\mathcal{D}_{n-1}\)29.
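The predictive mean and standard deviation can be sketched in NumPy as follows, using the scaled distance Δij and the RBF and Matérn 5/2 kernels exactly as defined in this section. This is a minimal illustration under assumptions: the hyperparameters A and s_d and the noise variance are passed in as fixed values rather than tuned by maximizing the likelihood, the function names are hypothetical, and a Cholesky factorization is used as one standard way to apply the inverse of the noisy kernel matrix.

```python
import numpy as np


def scaled_distance(X1: np.ndarray, X2: np.ndarray, s: np.ndarray) -> np.ndarray:
    """Delta_ij = sqrt(sum_d (x_id - x_jd)^2 / s_d) for all pairs of rows."""
    diff = X1[:, None, :] - X2[None, :, :]
    return np.sqrt(np.sum(diff ** 2 / s, axis=-1))


def rbf_kernel(X1, X2, A, s):
    """k_ij = A exp(-Delta_ij^2)."""
    return A * np.exp(-scaled_distance(X1, X2, s) ** 2)


def matern52_kernel(X1, X2, A, s):
    """k_ij = A (1 + sqrt(5) Delta + 5/3 Delta^2) exp(-sqrt(5) Delta)."""
    d = scaled_distance(X1, X2, s)
    return A * (1.0 + np.sqrt(5.0) * d + 5.0 / 3.0 * d ** 2) * np.exp(-np.sqrt(5.0) * d)


def gp_posterior(X, y, Xq, kernel, noise_var):
    """Predictive mean m(x') and standard deviation s(x') at the query rows Xq."""
    K = kernel(X, X) + noise_var * np.eye(len(X))         # K_{n-1} + sigma^2 I
    Kq = kernel(Xq, X)                                    # row j is k_{n-1}(x'_j)^T
    prior_var = np.diag(kernel(Xq, Xq))                   # k(x', x')
    L = np.linalg.cholesky(K)                             # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))   # (K + sigma^2 I)^{-1} y
    mean = Kq @ alpha
    v = np.linalg.solve(L, Kq.T)                          # L^{-1} k_{n-1}(x')
    var = prior_var - np.sum(v ** 2, axis=0)
    return mean, np.sqrt(np.maximum(var, 0.0))           # clip tiny negative round-off
```

In a search loop, `Xq` would hold candidate points in the normalized cube, and the returned mean and standard deviation would feed the acquisition function.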

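Once the prediction model is constructed, we choose the parameter that is most promising for producing a high evaluation value in the next experiment, using the expected improvement (EI) criterion. EI is defined as follows:

\[ a_{\mathrm{EI}}(\widetilde{\mathbf{x}}') = \int \left(\tilde{y}' - \bar{y}_{n-1}\right) \mathbb{I}_{\tilde{y}' \ge \bar{y}_{n-1}}\, p(\tilde{y}' \mid \widetilde{\mathbf{x}}', \mathcal{D}_{n-1})\, \mathrm{d}\tilde{y}', \tag{5} \]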

where \(\mathbb{I}_{\tilde{y}' \ge \bar{y}_{n-1}}\) is the indicator function, which equals 1 when \(\tilde{y}' \ge \bar{y}_{n-1}\) and 0 otherwise, and \(\bar{y}_{n-1} = \max_{1 \le i < n} \tilde{y}_i\) is the best evaluation so far. Intuitively, EI measures the expected gain over the best evaluation observed so far. Thus, the next parameter is the maximizer of \(a_{\mathrm{EI}}(\widetilde{\mathbf{x}}')\) over the search space \(0 \le \tilde{x}_d' \le 1\) for all d. The floor padding trick can also be combined with acquisition functions other than the expected improvement (see Supplementary Note ‘Floor padding trick with other acquisition function’ for details).
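For a Gaussian predictive distribution, the EI integral has the well-known closed form \((m - \bar{y}_{n-1})\Phi(z) + s\,\varphi(z)\) with \(z = (m - \bar{y}_{n-1})/s\), where Φ and φ are the standard normal CDF and PDF. A minimal Python sketch using the standard library's `statistics.NormalDist` follows; the function name is hypothetical. It also shows numerically why a small predictive standard deviation s lowers EI when the predicted mean does not exceed the incumbent, as observed for Fig. 6.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def expected_improvement(mean: float, std: float, best: float) -> float:
    """Closed-form EI for a predictive N(mean, std^2) against the incumbent `best`."""
    improvement = mean - best
    if std <= 0.0:
        return max(improvement, 0.0)  # deterministic limit: plain positive gain
    z = improvement / std
    return improvement * _STD_NORMAL.cdf(z) + std * _STD_NORMAL.pdf(z)


# Same predicted mean as the incumbent, different uncertainty:
print(expected_improvement(0.0, 1.0, 0.0))
print(expected_improvement(0.0, 2.0, 0.0))  # larger s gives larger EI
```

The next experimental condition would then be the candidate in the normalized cube that maximizes this value, with `mean` and `std` taken from the Gaussian process.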

Supplementary Fig. 2 compares the floor padding trick with an approach that ignores failed observations. By replacing each failure with the worst evaluation, the floor padding trick lowers both the predicted mean (Supplementary Fig. 2a and b) and the prediction uncertainty (Supplementary Fig. 2c and d) around the failed observations ‘−’. As a result, the floor padding trick guided the search inward, away from past failures (Supplementary Fig. 2f). In contrast, ignoring failures triggered an outward search (Supplementary Fig. 2e) because of the high predicted mean and moderate standard deviation there. These results indicate that the prediction model should take failure information into account, since otherwise the search would repeatedly recommend parameters in failed regions. Thus, the floor padding trick, or at least padding with a sufficiently small constant, is necessary to cope with experimental failures.

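The EI function is modified when the binary classifier is adopted to predict experimental failures. The modified criterion takes the form

\[ a_{\mathrm{EI}}(\widetilde{\mathbf{x}}')\, p(y' \ne \phi \mid \widetilde{\mathbf{x}}', \mathcal{D}_{n-1}). \tag{6} \]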

This is the product of the original EI and the predicted probability that \(\widetilde{\mathbf{x}}'\) does not result in a failure ϕ. Assuming that the occurrence of experimental failure is independent of the evaluation value, this modified EI amounts to the expected gain over the best evaluation obtained so far, counted only when the experiment succeeds. This assumption is reflected in our use of distinct models for the classifier and the evaluation prediction.

This formulation is an extension of the constrained BO28, where the objective is given as

\[ \max_{\mathbf{x}} S(\mathbf{x}) \quad \text{subject to} \quad C(\mathbf{x}) \le c, \tag{7} \]

where C(x) ≤ c is a constraint. The constraint function C(x) is unknown but observable: an observation consists of (x, y, z) with y = S(x) and z = C(x) (observation noise is omitted for clarity). Two Gaussian processes are used to predict y' and z' for an unseen x'. The resulting EI of the constrained BO28 is the product of the expected improvement in y' and the probability that z' ≤ c.
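The product structure of the constrained-BO criterion and of the classifier-weighted EI can be sketched as below; the EI helper repeats the standard closed form so the block runs on its own. For the constrained case we assume, as is usual, that the constraint GP gives \(z' \sim \mathcal{N}(\mu_z, \sigma_z^2)\), so that \(P(z' \le c) = \Phi((c - \mu_z)/\sigma_z)\). For the binary classifier, `p_success` stands in for \(p(y' \ne \phi \mid \widetilde{\mathbf{x}}', \mathcal{D}_{n-1})\) produced by some trained classifier. All function names are hypothetical.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def expected_improvement(mean: float, std: float, best: float) -> float:
    """Closed-form EI for a Gaussian prediction N(mean, std^2)."""
    gain = mean - best
    if std <= 0.0:
        return max(gain, 0.0)
    z = gain / std
    return gain * _STD_NORMAL.cdf(z) + std * _STD_NORMAL.pdf(z)


def probability_feasible(mean_z: float, std_z: float, c: float) -> float:
    """P(z' <= c) for a Gaussian constraint prediction z' ~ N(mean_z, std_z^2)."""
    if std_z <= 0.0:
        return 1.0 if mean_z <= c else 0.0
    return _STD_NORMAL.cdf((c - mean_z) / std_z)


def constrained_ei(mean, std, best, mean_z, std_z, c):
    """Constrained-BO criterion: EI in y' times the probability that z' <= c."""
    return expected_improvement(mean, std, best) * probability_feasible(mean_z, std_z, c)


def classifier_weighted_ei(mean, std, best, p_success):
    """Modified EI with a failure classifier: EI times p(y' != phi)."""
    if not 0.0 <= p_success <= 1.0:
        raise ValueError("p_success must be a probability")
    return expected_improvement(mean, std, best) * p_success
```

Because a classifier trained by approximate inference rarely outputs probabilities near 0 or 1, this weighting only partially suppresses EI in failed regions, consistent with the attenuation the text reports for Supplementary Fig. 3.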

The binary classifier replaces the constraint part. Instead of handling the continuous variable z, the classifier is fitted to the binary outcome y = ϕ or y ≠ ϕ. We can still use a Gaussian process with the same kernel explained above, while variational inference is employed in training to approximate the likelihood of binary observations29. Supplementary Fig. 3 shows how the EI function is modified. The probability of success \(p(y' \ne \phi \mid \widetilde{\mathbf{x}}', \mathcal{D}_{n-1})\) is lowered by failed observations (Supplementary Fig. 3a), which directly discourages exploration of failed regions in subsequent trials. Because of the approximate variational inference, the probability estimated by the binary classifier is not sharp; that is, it does not reach 1 around successful observations or fall to 0 around failures. This attenuates the variation in the EI function, as shown in Supplementary Fig. 3b and c.

Simulated functions

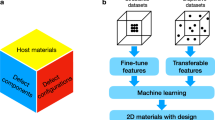

This section explains the setup of the functions used in the simulation experiments. Figure 1 depicts the three functions. For all functions, the search space is the two-dimensional square \([-1, 1]^2\). The Circle and Softplus functions fail when \(x_1^2 + x_2^2 > 1\), whereas the Hole function fails when \(|x_1| < L\) and \(|x_2| < L\), or when \(x_1^2 + x_2^2 > 1\). With a slight abuse of notation, \(x_d\) denotes the dth element of the parameter vector, with the observation index omitted. The hole length is set to \(L = \frac{\sqrt{\pi - 2}}{2}\) so that the failed region covers half of the search space. The Circle function is defined to have four peaks:

where \(G_i\left( {{{\mathbf{x}}}} \right) = \mathop {\sum}\nolimits_{d = 1}^2 {a_{id}} \left| {x_d - c_{id}} \right|\) with center

and scale being

The peaks are connected by ridges, which may make it easier to find the top by following them. The function \(S_{\mathrm{Circle}}(\mathbf{x})\) is normalized by \(Z_{\mathrm{Circle}} = 1.53\) such that its maximum is 1 at \(\mathbf{x} = [0.7, 0]^{\top}\). The Hole function is defined similarly, but its ridges are tilted:

where \(H_i\left( {{{\mathbf{x}}}} \right) = \mathop {\sum}\nolimits_{d = 1}^2 {a_{id}} \left| {z_{id}} \right|\) with rotated vector

Center coordinates were slightly moved to avoid touching the failure boundary:

whereas the scale \(a_i\) is the same as that used for the Circle function. Since the peaks are disconnected, the search is expected to be more difficult. The function \(S_{\mathrm{Hole}}(\mathbf{x})\) is normalized by \(Z_{\mathrm{Hole}} = 1.85\) such that its maximum is 1 at \(\mathbf{x} = [0.75, 0]^{\top}\). We also investigate whether replacing failed observations with low values affects the optimization when the optimum lies on the boundary between the successful and failed regions. To this end, we used the following Softplus function:

where \(Z_{\mathrm{sp}} = 1.63\) is a normalization factor such that the maximum of the function is 1 at \(\mathbf{x} = \left[\frac{1}{\sqrt{2}}, \frac{1}{\sqrt{2}}\right]^{\top}\). As shown in Fig. 1c, this optimum lies on the boundary between the successful and failed regions.
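The failure regions of the simulated functions are simple to encode, and doing so makes the choice of L easy to check. The search square has area 4, and the unit disk excludes an area of 4 − π. The square hole of side 2L adds (2L)² = π − 2, so failures cover an area of 2, which is half of the search space.

The minimal NumPy sketch below checks this by Monte Carlo. It assumes each point is a row of a two-column array. The function names are illustrative, not taken from the authors' code.

```python
import numpy as np

L_HOLE = np.sqrt(np.pi - 2.0) / 2.0  # half side length of the square hole


def fails_circle(x):
    """Failure mask for the Circle and Softplus functions: outside the unit disk."""
    x = np.atleast_2d(np.asarray(x, dtype=float))
    return (x ** 2).sum(axis=1) > 1.0


def fails_hole(x):
    """Failure mask for the Hole function: inside the square hole or outside the disk."""
    x = np.atleast_2d(np.asarray(x, dtype=float))
    in_hole = (np.abs(x[:, 0]) < L_HOLE) & (np.abs(x[:, 1]) < L_HOLE)
    return in_hole | fails_circle(x)


rng = np.random.default_rng(0)
samples = rng.uniform(-1.0, 1.0, size=(200_000, 2))
print(f"Circle/Softplus failure fraction: {fails_circle(samples).mean():.3f}")  # close to 1 - pi/4
print(f"Hole failure fraction: {fails_hole(samples).mean():.3f}")               # close to 0.5
```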

Impact of the padding for failed observations on prediction

Our method replaces failed observations with inferior values (small values, for maximization problems) to construct the prediction model. While this may distort the fit of the prediction model to the shape of the objective function, we empirically confirmed that the distorted prediction remains useful for optimization. For example, Supplementary Fig. 4 depicts the predicted mean of the Hole function given 100 observations. These 100 observations were collected sequentially by BO with each of the padding methods F, @0, and @−1. Overall, the incrementally chosen observations reproduce the four-peak structure well, which is what matters for the maximization problem. In contrast, the function shape is poorly reproduced in low-value regions. This is partly due to the replacement of failure values and partly because such regions are less important for maximization and are therefore sampled sparsely.
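The padding step can be sketched in Python, assuming failures are recorded as `None` in a list of observations ordered by run. Two readings in this sketch are assumptions:

- F is taken to be floor padding, where each failure is replaced with the worst successful value observed up to that run. This mirrors the rule used for the MBE growth, where failed samples were assigned the worst RRR obtained by that time.
- @0 and @−1 are taken to be padding with the constants 0 and −1.

The name `pad_failures` is hypothetical.

```python
import math
from typing import List, Optional


def pad_failures(y: List[Optional[float]], constant: Optional[float] = None) -> List[float]:
    """Replace failed runs (None) with inferior values for a maximization problem.

    constant=None -> floor padding: use the worst successful value seen so far.
    constant=c    -> constant padding: use c for every failure (e.g. 0 or -1).
    """
    padded: List[float] = []
    worst_so_far = math.inf  # minimum over successful observations so far
    for value in y:
        if value is not None:
            worst_so_far = min(worst_so_far, value)
            padded.append(float(value))
        elif constant is not None:
            padded.append(float(constant))
        elif math.isinf(worst_so_far):
            raise ValueError("floor padding needs a success before the first failure")
        else:
            padded.append(worst_so_far)
    return padded


runs = [1.0, None, 0.5, None, 2.0]        # hypothetical values; None marks a failure
print(pad_failures(runs))                 # [1.0, 1.0, 0.5, 0.5, 2.0]
print(pad_failures(runs, constant=-1.0))  # [1.0, -1.0, 0.5, -1.0, 2.0]
```

Floor padding needs no prior knowledge of the objective's range. A fixed constant such as 0 or −1 is only "inferior" if it lies below the values actually observed.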

ML-MBE growth and sample characterizations

Epitaxial tensile-strained SrRuO3 films with a thickness of 60 nm were grown on DyScO3 (DSO) (110) substrates in a custom-designed molecular beam epitaxy (MBE) system equipped with multiple e-beam evaporators (Fig. 5a). Rare-earth scandate DyScO3 has the GdFeO3-type structure, a distorted perovskite structure in which the (110) face corresponds to the pseudocubic (001) face27. Since the in-plane lattice parameter of the pseudocubic (001) face of DyScO3 (3.944 Å) is larger than that of bulk SrRuO3 (3.93 Å), SrRuO3 films grown epitaxially on DyScO3 substrates are under tensile strain. Detailed information about the MBE system is given elsewhere51,52,53. We precisely controlled the elemental fluxes, even for elements with high melting points such as Ru (2,250 °C), by monitoring the flux rates with an electron-impact-emission-spectroscopy sensor and feeding the results back to the power supplies of the e-beam evaporators. Oxidation during growth was carried out with ozone (O3) gas (~15% O3 + 85% O2) introduced through an alumina nozzle pointed at the substrate. For high-quality SrRuO3 growth, fine-tuning of the growth conditions (the ratio of the Ru flux to the Sr flux, the growth temperature, and the local ozone pressure at the growth surface) is important13,49. To systematically vary the Ru-to-Sr flux ratio, we changed the Ru flux while keeping the Sr flux at 0.98 Å/s. The growth temperature was controlled by the heater shown in Fig. 5a. The local ozone pressure at the growth surface was adjusted by changing the O3-nozzle-to-substrate distance while keeping the O3 gas flow rate at ~2 sccm.

We ran the BO algorithm in this three-dimensional space. The search ranges for the Ru flux rate, growth temperature, and O3-nozzle-to-substrate distance were 0.25–0.50 Å/s, 700–900 °C, and 10–50 mm, respectively. Each parameter was searched on equally spaced grid points: 50 points (0.005 Å/s interval), 100 points (2 °C interval), and 80 points (0.5 mm interval), respectively. The typical deviations of the actual values from the nominal values were ±0.005 Å/s, ±1 °C, and ±0.2 mm for the respective growth parameters. Since the three-dimensional parameter space consisted of 400,000 (50 × 100 × 80) points, a point-by-point trial over the entire space was unrealistic, as only several runs can be carried out per day with a typical MBE system.

The crystal structure of the films was monitored by in-situ reflection high-energy electron diffraction (RHEED) after growth. When diffraction from the SrRuO3 phase was indiscernible and/or diffraction from SrO or RuO2 precipitates (impurity phases) appeared, we set the RRR value of that sample to the worst experimental RRR value obtained up to that time. To determine the RRR of the samples, their electrical resistivity was measured using a standard four-probe method with Ag electrodes deposited on the SrRuO3 surface without any additional processing. The distance between the two voltage electrodes was 2 mm.
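The tensile strain stated for these films can be estimated roughly from the quoted lattice parameters. This estimate assumes the SrRuO3 film is fully coherent with the substrate, so that its in-plane lattice parameter equals the pseudocubic DyScO3 value. The symbols \(a_{\mathrm{DyScO_3}}\) and \(a_{\mathrm{SrRuO_3}}\) are introduced only for this estimate. The nominal in-plane strain is then

\[
\varepsilon = \frac{a_{\mathrm{DyScO_3}} - a_{\mathrm{SrRuO_3}}}{a_{\mathrm{SrRuO_3}}} = \frac{3.944 - 3.93}{3.93} \approx 3.6 \times 10^{-3} \approx 0.36\%.
\]

This is the lattice mismatch; any partial relaxation of the film would reduce the actual strain below this value.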

Here, the black-box function RRR = S(x) is the target function specific to our SrRuO3 films, and x represents the growth parameters (Ru flux rate, growth temperature, and O3-nozzle-to-substrate distance). We used the data set \({{{\mathcal{D}}}}_{n - 1}: = \left\{ {\widetilde {{{\mathbf{x}}}}_i,\tilde y_i} \right\}_{i = 1}^{n - 1}\) (Fig. 5c), obtained from the past n−1 MBE growths and RRR measurements (Fig. 5b) of SrRuO3 films, to construct a model that predicts the value of S(x) at an unseen x. To this end, we used Gaussian process regression (GPR) to estimate the mean m and standard deviation s at an arbitrary parameter value x (see the Methods section ‘Bayesian optimization with experimental failure’ for details). Specifically, GPR predicts the value of S(x) as a Gaussian-distributed variable \({{{\mathcal{N}}}}\left( {m\left( {{{\mathbf{x}}}} \right),s^2\left( {{{\mathbf{x}}}} \right)} \right)\), where m and s depend on x and the n−1 observations. In short, m(x) represents the expected value of RRR and s(x) its uncertainty at x. To account for the inherent noise in the RRR of SrRuO3 films grown under nominally identical conditions, the variance of the observation noise \(\sigma^2\) in the GPR model was set to 0.02 or 0.002. We used the Matérn 5/2 kernel, as in materials science8 and the BO literature11, because it fits functions with steep gradients well. After five initial MBE growth runs with randomly chosen growth parameters and the corresponding RRR measurements, we iterated the following routine. First, the GPR model was updated using the data set available at that time (Fig. 5c). Subsequently, to assign the growth parameters for the next run, we calculated the EI54 (Fig. 5d).
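One iteration of this routine can be sketched with NumPy and SciPy. This is a compact sketch, not the authors' implementation, which is in the repository listed under Code availability. It makes several assumptions:

- The three growth parameters are rescaled to [0, 1].
- RRR values are standardized before fitting, so that the noise variance σ² = 0.02 is applied on that scale.
- Kernel hyperparameters are fixed (length scale 0.2, unit signal variance) rather than optimized.
- The candidate grid is a coarse 11 × 11 × 11 stand-in for the 50 × 100 × 80 grid.
- The RRR values are hypothetical, and failed runs are assumed to have been padded already.

All names are illustrative.

```python
import numpy as np
from scipy.stats import norm


def matern52(A, B, length_scale=0.2):
    """Matérn 5/2 kernel with unit signal variance between rows of A and B."""
    d = np.sqrt(((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1))
    r = np.sqrt(5.0) * d / length_scale
    return (1.0 + r + r ** 2 / 3.0) * np.exp(-r)


def gpr_predict(X, y, Xs, noise_var=0.02, length_scale=0.2):
    """GP posterior mean m(x) and std s(x) at Xs given observations (X, y)."""
    K = matern52(X, X, length_scale) + noise_var * np.eye(len(X))
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    Ks = matern52(X, Xs, length_scale)
    m = Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    s = np.sqrt(np.maximum(1.0 - (v ** 2).sum(axis=0), 1e-12))
    return m, s


def expected_improvement(m, s, y_best):
    """EI for maximization."""
    z = (m - y_best) / s
    return (m - y_best) * norm.cdf(z) + s * norm.pdf(z)


def propose_next(X, rrr, candidates, noise_var=0.02, length_scale=0.2):
    """Index of the candidate maximizing EI, plus the EI values."""
    y = (rrr - rrr.mean()) / rrr.std()  # assumption: standardized RRR
    m, s = gpr_predict(X, y, candidates, noise_var, length_scale)
    ei = expected_improvement(m, s, y.max())
    return int(np.argmax(ei)), ei


rng = np.random.default_rng(0)
X = rng.uniform(size=(5, 3))                    # five random initial growths
rrr = np.array([10.0, 25.0, 18.0, 30.0, 12.0])  # hypothetical RRR values
ticks = np.linspace(0.0, 1.0, 11)
grid = np.stack(np.meshgrid(ticks, ticks, ticks, indexing="ij"), axis=-1).reshape(-1, 3)
idx, ei = propose_next(X, rrr, grid)
print("next normalized growth parameters:", grid[idx])
```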

Data availability

The data that support the findings of this study are available from the corresponding authors upon reasonable request.

Code availability

The Python code used in this study is available at https://github.com/nttcslab/floor-padding-BO.

Change history

22 September 2022

A Correction to this paper has been published: https://doi.org/10.1038/s41524-022-00892-7

References

Mueller, T., Kusne, A. G. & Ramprasad, R. in Reviews in Computational Chemistry Vol. 29 (eds Parrill, A. L. & Lipkowitz, K. B.) (Wiley, 2015).

Lookman, T., Alexander, F. J. & Rajan, K. Information Science for Materials Discovery and Design (Springer, 2016).

Burnaev, E. & Panov, M. Statistical Learning and Data Sciences (Springer, 2015).

Agrawal, A. & Choudhary, A. N. Materials informatics and big data: Realization of the “fourth paradigm” of science in materials science. APL Mater. 4, 053208 (2016).

Rajan, K. Materials informatics. Mater. Today 8, 38–45 (2005).

Ueno, T. et al. Adaptive design of an X-ray magnetic circular dichroism spectroscopy experiment with Gaussian process modelling. npj Comput. Mater. 4, 4 (2018).

Ren, F. et al. Accelerated discovery of metallic glasses through iteration of machine learning and high-throughput experiments. Sci. Adv. 4, eaaq1556 (2018).

Wakabayashi, Y. K., Otsuka, T., Taniyasu, Y., Yamamoto, H. & Sawada, H. Improved adaptive sampling method utilizing Gaussian process regression for prediction of spectral peak structures. Appl. Phys. Express 11, 112401 (2018).

Li, X. et al. Efficient optimization of the performance of Mn2+-doped kesterite solar cell: machine learning aided synthesis of high efficient Cu2(Mn,Zn)Sn(S,Se)4 solar cells. Sol. RRL 2, 1800198 (2018).

Hou, Z., Takagiwa, Y., Shinohara, Y., Xu, Y. & Tsuda, K. Machine-learning-assisted development and theoretical consideration for the Al2Fe3Si3 thermoelectric material. ACS Appl. Mater. Interfaces 11, 11545–11554 (2019).

Snoek, J., Larochelle, H. & Adams, R. P. Practical Bayesian optimization of machine learning algorithms. in Advances in Neural Information Processing Systems 25 (2012); see also http://papers.nips.cc/paper/4522-practical-bayesian-optimization.

Xue, D. et al. Accelerated search for BaTiO3-based piezoelectrics with vertical morphotropic phase boundary using Bayesian learning. Proc. Natl Acad. Sci. USA 113, 13301–13306 (2016).

Wakabayashi, Y. K. et al. Machine-learning-assisted thin-film growth: Bayesian optimization in molecular beam epitaxy of SrRuO3 thin films. APL Mater. 7, 101114 (2019).

McDannald, A. et al. On-the-fly autonomous control of neutron diffraction via physics-informed Bayesian active learning. Appl. Phys. Rev. 9, 021408 (2022).

Ziatdinov, M. A. et al. Hypothesis learning in automated experiment: application to combinatorial materials libraries. Adv. Mater. 34, 2201345 (2022).

Sakai, J., Murakami, S. I., Hirama, K., Ishida, T. & Oda, Z. High-throughput and fully automated system for molecular-beam epitaxy. J. Vac. Sci. Technol. B 6, 1657 (1988).

O’Steen, M. et al. in Molecular Beam Epitaxy: From Research to Mass Production 649–675 (Elsevier Inc., 2018).

Tabor, D. P. et al. Accelerating the discovery of materials for clean energy in the era of smart automation. Nat. Rev. Mater. 3, 5–20 (2018).

Burger, B. et al. A mobile robotic chemist. Nature 583, 237–241 (2020).

Shimizu, R., Kobayashi, S., Watanabe, Y., Ando, Y. & Hitosugi, T. Autonomous materials synthesis by machine learning and robotics. APL Mater. 8, 111110 (2020).

Kusne, A. G. et al. On-the-fly closed-loop materials discovery via Bayesian active learning. Nat. Commun. 11, 5966 (2020).

Ament, S. et al. Autonomous materials synthesis via hierarchical active learning of nonequilibrium phase diagrams. Sci. Adv. 7, eabg4930 (2021).

Stach, E. et al. Autonomous experimentation systems for materials development: a community perspective. Matter 4, 2702–2726 (2021).

Li, Y., Xia, L., Fan, Y., Wang, Q. & Hu, M. Recent advances in autonomous synthesis of materials. ChemPhysMater 1, 77–85 (2022).

Butler, K. T., Davies, D. W., Cartwright, H., Isayev, O. & Walsh, A. Machine learning for molecular and materials science. Nature 559, 547–555 (2018).

Bertsimas, D., Pawlowski, C. & Zhuo, Y. D. From predictive methods to missing data imputation: an optimization approach. J. Mach. Learn. Res. 18, 1–39 (2018).

Wakabayashi, Y. K. et al. Wide-range epitaxial strain control of electrical and magnetic properties in high-quality SrRuO3 films. ACS Appl. Electron. Mater. 3, 2712–2719 (2021).

Gardner, J. R. et al. Bayesian optimization with inequality constraints. Proc. Mach. Learn. Res. 32, 2 (2014).

Nickisch, H. & Rasmussen, C. E. Approximations for binary Gaussian process classification. J. Mach. Learn. Res. 9, 2035–2078 (2008).

Randall, J. J. & Ward, R. The preparation of some ternary oxides of the platinum metals. J. Am. Chem. Soc. 81, 2629–2631 (1959).

Wakabayashi, Y. K. et al. Structural and transport properties of highly Ru-deficient SrRu0.7O3 thin films prepared by molecular beam epitaxy: Comparison with stoichiometric SrRuO3. AIP Adv. 11, 035226 (2021).

Wakabayashi, Y. K. et al. Single-domain perpendicular magnetization induced by the coherent O 2p-Ru 4d hybridized state in an ultra-high-quality SrRuO3 film. Phys. Rev. Mater. 5, 124403 (2021).

Eom, C. B. et al. Single-crystal epitaxial thin films of the isotropic metallic oxides Sr1-xCaxRuO3. Science 258, 1766–1769 (1992).

Izumi, M. et al. Magnetotransport of SrRuO3 thin film on SrTiO3 (001). J. Phys. Soc. Jpn. 66, 3893–3900 (1997).

Koster, G. et al. Structure, physical properties, and applications of SrRuO3 thin films. Rev. Mod. Phys. 84, 253 (2012).

Shai, D. E. et al. Quasiparticle mass enhancement and temperature dependence of the electronic structure of ferromagnetic SrRuO3 thin films. Phys. Rev. Lett. 110, 087004 (2013).

Takahashi, K. S. et al. Inverse tunnel magnetoresistance in all-perovskite junctions of La0.7Sr0.3MnO3/SrTiO3/SrRuO3. Phys. Rev. B 67, 094413 (2003).

Li, Z. et al. Reversible manipulation of the magnetic state in SrRuO3 through electric-field controlled proton evolution. Nat. Commun. 11, 184 (2020).

Siemons, W. et al. Dependence of the electronic structure of SrRuO3 and its degree of correlation on cation off-stoichiometry. Phys. Rev. B 76, 075126 (2007).

Chen, Y., Bergman, D. L. & Burkov, A. A. Weyl fermions and the anomalous Hall effect in metallic ferromagnets. Phys. Rev. B 88, 125110 (2013).

Takiguchi, K. et al. Quantum transport evidence of Weyl fermions in an epitaxial ferromagnetic oxide. Nat. Commun. 11, 4969 (2020).

Kaneta-Takada, S. et al. Thickness-dependent quantum transport of Weyl fermions in ultra-high-quality SrRuO3 films. Appl. Phys. Lett. 118, 092408 (2021).

Kaneta-Takada, S. et al. Quantum limit transport and two-dimensional Weyl fermions in an epitaxial ferromagnetic oxide. Preprint at https://arxiv.org/abs/2106.03292v1 (2021).

Lin, W. et al. Electric field control of the magnetic Weyl fermion in an epitaxial SrRuO3 (111) thin film. Adv. Mater. 2021, 2101316 (2021).

Thompson, J. et al. Enhanced metallic properties of SrRuO3 thin films via kinetically controlled pulsed laser epitaxy. Appl. Phys. Lett. 109, 161902 (2016).

Gan, Q., Rao, R. A. & Eom, C. B. Growth mechanisms of epitaxial metallic oxide SrRuO3 thin films studied by scanning tunneling microscopy. Appl. Phys. Lett. 71, 1171 (1997).

Jiang, J. C. et al. Effects of miscut of the SrTiO3 substrate on microstructures of the epitaxial SrRuO3 thin films. Mater. Sci. Eng. B 56, 152–157 (1998).

Popescu, D. et al. Feasibility study of SrRuO3/SrTiO3/SrRuO3 thin film capacitors in DRAM applications. IEEE Trans. Electron Devices 61, 2130–2135 (2014).

Kim, B. et al. Effects of the flux-controlled cation off-stoichiometry in SrRuO3 grown by molecular beam epitaxy on its physical and electrical properties. Mater. Lett. 281, 128375 (2020).

Rasmussen, C. E. & Williams, C. K. I. Gaussian Processes for Machine Learning (MIT Press, 2006).

Naito, M. & Sato, H. Stoichiometry control of atomic beam fluxes by precipitated impurity phase detection in growth of (Pr,Ce)2CuO4 and (La,Sr)2CuO4 films. Appl. Phys. Lett. 67, 2557 (1995).

Yamamoto, H., Krockenberger, Y. & Naito, M. Multi-source MBE with high-precision rate control system as a synthesis method sui generis for multi-cation metal oxides. J. Cryst. Growth 378, 184–188 (2013).

Wakabayashi, Y. K. et al. Ferromagnetism above 1000 K in a highly cation-ordered double-perovskite insulator Sr3OsO6. Nat. Commun. 10, 535 (2019).

Mockus, J., Tiesis, V. & Zilinskas, A. in Towards Global Optimisation Vol. 2 (eds Dixon, L. C. W. & Szego, G. P.) (Elsevier, 1978).

Author information


Contributions

Y.K.W. and T.O. conceived the idea, designed the experiments, and directed and supervised the project. T.O. and Y.K.W. implemented the Bayesian optimization algorithm. T.O. performed simulations with the virtual data. Y.K.W. carried out the ML-MBE growth and characterization of the samples. Y.K.W. and T.O. co-wrote the paper with input from all authors. Y.K.W. and T.O. contributed equally to this work.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wakabayashi, Y.K., Otsuka, T., Krockenberger, Y. et al. Bayesian optimization with experimental failure for high-throughput materials growth. npj Comput Mater 8, 180 (2022). https://doi.org/10.1038/s41524-022-00859-8
