Abstract

Recent advances in (scanning) transmission electron microscopy have enabled routine generation of large volumes of high-veracity structural data on 2D and 3D materials, naturally raising the challenge of using these data as starting inputs for atomistic simulations. In this fashion, theory will address experimentally emerging structures, as opposed to the full range of theoretically possible atomic configurations. However, this challenge is highly nontrivial due to the extreme disparity between the intrinsic timescales accessible to modern simulations and to microscopy, as well as the latencies of microscopy and simulations per se. Addressing this issue requires, as a first step, bridging the instrumental data flow and the physics-based simulation environment, to enable the selection of regions of interest and their exploration using physical simulations. Here we report the development of a machine learning workflow that directly bridges the instrument data stream into Python-based molecular dynamics and density functional theory environments, using pre-trained neural networks to convert imaging data to physical descriptors. The workflow identifies pathways to ensure structural stability and to compensate for the observational biases universally present in the data. We apply this approach to a graphene system to reconstruct the optimized geometry and simulate temperature-dependent dynamics, including adsorption of Cr as an ad-atom and graphene healing effects. Although demonstrated for graphene, the approach is universal and can be applied to other material systems.


Introduction

Over the last two decades, electron and scanning probe microscopies have become primary tools for studying physical and life-science systems at the atomic to mesoscopic length scales1,2,3,4,5. Advances in (scanning) transmission electron microscopy, (S)TEM, and scanning tunneling microscopy (STM) produce highly reliable structural and spectral data containing a wealth of information on the structures and functionalities of materials. In particular, aberration-corrected STEM, including single-atom EELS imaging, allows the study of single impurity atoms6, structures with grain boundaries7, orbital8,9 and magnetic phenomena10, plasmons11,12, phonons13, and even anti-Stokes excitations in complex materials14.

There also exist many detailed fundamental studies carried out with STM, exploring quantum corrals15, molecular cascades16, and quantum dots, along with investigations of surface chemistry17,18. STEM offers the potential for much higher-throughput imaging and data generation than STM, owing to the lower intrinsic latencies of electron-beam scanning and image acquisition compared with mechanical probe motion in STM. In both STM and STEM, the probe can induce changes in the material structure. In STM, this is often also associated with probe damage and is in most cases perceived as a negative effect, although controllable modifications of the surface by an STM probe are actively pursued for atomic fabrication and exploration of surface chemistry16,19,20,21. In STEM, by comparison, changes in the material structure do not affect the probe state, rendering this technique a powerful tool for exploring metastable chemical configurations and beam-, temperature-, and chemistry-induced processes22,23,24,25. Both techniques make it possible to manipulate atoms26,27 and their positions, and thereby solids and molecular systems, in controlled environments.

The images generated at different stages of STEM observations contain a wealth of information on materials structure, functionality, and chemical transformation pathways, encoded in the observed atomic positions. This information can be both qualitative and quantitative. Such high-resolution images provide a platform for building deep learning (DL) models for finding features28,29, predicting scalar functional quantities (such as values of ferroelectric polarization), or even constructing chemical or structural space maps. Comprehensive studies30,31,32,33,34,35 combining such datasets with modern DL techniques, such as convolutional networks and variational autoencoders as implemented in general machine learning (ML) frameworks36,37,38 or in ensemble settings, have already shown great potential to advance the physics-based understanding of materials by establishing causal relationships between structures and properties.

On the other hand, growing computational capabilities, including accessible CPUs/GPUs, efficient algorithms, and their implementations, have significantly boosted the advancement of physical simulations. Physical models ranging from first-principles theory and quantum Monte Carlo (MC) to finite-element methods, spanning quantum-mechanical to continuum scales, have yielded an abundance of insights into the structural, thermodynamic, and electronic properties of solids, glasses, and liquids. In general, atomistic simulations provide information at atomic length scales, where continuum theory breaks down and complex many-body quantum-mechanical theory is instead needed to model the behavior of each atom and how these atoms collectively give rise to the properties of a material under specific conditions. Even though such detailed studies have become quite common in the past decade, atomistic understanding does not readily translate to the macroscale, and hence there has been a consistent effort in the scientific community to merge multi-scale investigations performed across overlapping length and timescales. Comparing the nuances of the various simulation methods quickly reveals the primary challenges and the need to transfer knowledge between these models. For example, density functional theory (DFT) computations conventionally describe materials at 0 K, whereas molecular dynamics (MD) techniques overcome this limitation by including temperature-dependent behavior. The accuracy of MD simulations is often limited by how well the force fields represent particle–particle interactions, as well as by the trade-off between computational efficiency and simulation length. Consequently, a slow thermodynamic process such as diffusion cannot always be modeled with MD, and that is where MC methods become useful. MC simulations sample configurations of molecular systems stochastically, enabling researchers to study various mechanisms and steady-state properties, and even dynamics using kinetic MC. There also exist several advanced frameworks combining some of these methods, such as ab initio MD (AIMD), which integrates quantum-mechanical estimation of interatomic forces with classical Newtonian physics to move atoms from one instant to the next. Overall, irrespective of the simulations used to study the complex behavior of material systems, a colossal amount of data is generated. This is one of the many reasons why data-driven and ML approaches have become so popular in recent years across scientific domains.

There is a growing availability of databases39,40,41,42,43,44 collating simulation and experimental data across disciplines, which are being employed to accelerate the discovery of novel materials and to study advanced material functionalities. A variety of successful examples45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62 have been reported for technological applications in energy, catalysis, and photovoltaics; for pharmaceutical applications in drug design and reaction-mechanism mapping; and for advancing fundamental knowledge of materials behavior, including magnetism, ferroelectricity, and superconductivity. Other studies demonstrate how ML and DL techniques can help address technical challenges related to electronic structure and force fields. Most of these frameworks are built solely on theoretical (simulation-based) data or solely on experimental data, and only at times on combinations of these. The literature also contains direct comparisons of endpoint-like properties, such as polarization and magnetic structures, obtained from simulations and from measurements.

In addition, there are already comprehensive efforts in the materials community to bridge the gap between knowledge acquired from experiments and from theory, to go from observational to synthetic learning, and vice versa. A non-exhaustive list of such frameworks includes Ingrained63, EXSCLAIM64, BEAM65, and abTEM66. Collectively, these show how to use existing data from the literature to create labeled datasets, use image-based data and user-chosen parameters to find optimized fit-to-experiment structures via forward modeling, perform scalable data analyses and simulations on characterization data, compute potentials via DFT, and simulate standard imaging modes. There is also an extensive list of STEM examples modeling electron-beam effects in various materials systems, including atom assembly, atomic manipulation, and insertion67,68,69,70. Finally, multiple reports on 2D materials have explored the formation, dynamics, and stability of defects, edge reconstructions, and bond inversions using a combination of STEM observations and simulations71,72,73,74,75,76,77,78,79,80,81.

However, systematic studies using an across-the-board framework that maps directly between experimental observations and computational studies with DL approaches are still in their infancy. As a first relevant aspect, the timescales of STEM observations and of intrinsic molecular dynamics are strongly different: DFT and MD models can simulate systems of ångström to nanometer size for up to microseconds, at the cost of multiple CPU hours, whereas STEM images are typically acquired in a fraction of a second. The length and timescales of such simulations, analysis methods, and retrieval of observational data are compared in the chart in Fig. 1. At the same time, there is a significant disparity in latencies, with DFT or MD simulations often taking many hours to days or weeks, well above the timescale of STEM measurements.

While DFT and MD can address system dynamics on picosecond-to-microsecond timescales, electron microscopy generally explores dynamic changes over intervals from milliseconds to hours. This time disparity is further exacerbated by the fact that completing DFT/MD simulations for a sufficiently large system typically takes hours or days, far longer than the imaging time. Hence, dynamic integration of theory and experiment in a single workflow requires machine learning, both to identify (based on observations) the regions of potential interest and to accelerate simulations via proxy models, as well as theory to define proper embeddings that preserve the functionality of the material in models with a small number of atoms.

Hence, while theoretical methods can directly match experimental observables, there is a drastic mismatch in both the accessible and the computational timescales, making integration of the two highly nontrivial and necessitating strategies to deal with this mismatch and, importantly, the formulation of physics-relevant questions that can be addressed.

With these caveats, the first enabling step towards bridging these two areas is direct piping of the STEM data into the simulation environment. The crucial roadblocks to realizing this framework are listed below.

- Finding features such as atoms and defects (nearly identical objects) in a microscopic image with a DL model, and extending the model to recognize features in images acquired under different experimental conditions (which leads to out-of-distribution effects), is itself a challenge.
- Defining regions of interest, such as parts of the image showing defects, and determining the origins of such defects (which could be electron-beam induced) is also not trivial. This gives rise to an intractable number of possible initial states for the simulations.
- Importing atomic coordinates predicted by a DL model or obtained directly from experiments into simulations requires quantifying uncertainties at all stages of the framework. In other words, simulations initialized from predictions with high uncertainty may never converge.
- Ways to close the loop, in which information from theoretical simulations guides future experiments and on-the-fly analyses, have yet to be investigated.

In this work, we show how deep learning can bring together the knowledge learned from microscopic images (stage 1 in Fig. 2) and first-principles simulations to develop a comprehensive understanding of the physics (stages 2 and 3 in Fig. 2) of the materials of interest. The schematic of the entire workflow is shown in Fig. 2. Here, we focus on how deep convolutional neural networks can be employed to identify atomic features (type and position) in graphene and use them to construct supercells, perform DFT simulations to find the optimized geometry of the structures, and then study the temperature-dependent dynamics of system evolution with ad-atoms and defects. The results, along with the associated uncertainties in predictions at various levels obtained with this framework, may be used to evaluate and modify experimental conditions and regions of interest.

The figure shows the three primary stages of the framework. In Stage 1 (a), deep learning models take microscopic images (a STEM image of graphene) as inputs and identify features such as atoms, defects, and their positions. In Stage 2 (b), the coordinates are assembled to build a simulation object (c), followed by atomistic simulations in Stage 3 to study physical phenomena. The results of these simulations are then used to build and guide physics-informed experiments.

Results and discussion

Workflow and its implementations

The first stage of the workflow involves the application of DL to the experimental imaging of atoms. A standard DL workflow consists of preparing a single labeled training set, choosing a suitable neural network architecture, dividing the prepared set into training, test, and validation sets, and tuning the training parameters until optimal performance on the test and/or “holdout” set is achieved. Once labeling is accomplished, the DL models are used to find the features, in this case the positions and types of atoms (C) and defects (Si), and the uncertainties are determined in Stage 2 using AtomAI36.
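As a minimal sketch of the splitting step described above, assuming a random split at the level of whole frames and illustrative 80/10/10 fractions (the fractions, the seed, and the function name `split_frames` are hypothetical, not values reported in this work), the 100 labeled frames could be partitioned as follows.

```python
import random


def split_frames(n_frames, train_frac=0.8, val_frac=0.1, seed=0):
    """Randomly partition frame indices into disjoint train/val/test lists.

    The fractions and seed are illustrative assumptions; whatever is left
    after the training and validation sets becomes the holdout (test) set.
    """
    if not 0 < train_frac + val_frac < 1:
        raise ValueError("train_frac + val_frac must lie in (0, 1)")
    indices = list(range(n_frames))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    n_train = int(round(train_frac * n_frames))
    n_val = int(round(val_frac * n_frames))
    train = sorted(indices[:n_train])
    val = sorted(indices[n_train:n_train + n_val])
    test = sorted(indices[n_train + n_val:])
    return train, val, test


train_ids, val_ids, test_ids = split_frames(100)
print(len(train_ids), len(val_ids), len(test_ids))  # 80 10 10
```

Splitting whole frames, rather than individual pixels or patches, keeps strongly correlated pixels from the same image out of both the training and the holdout sets.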

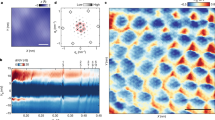

Figure 3 shows one of the STEM graphene image frames (a) and the corresponding C and Si atoms predicted by the DL model (b). We note that the associated uncertainties in such predictions may vary from one image frame to another. With minimal or no human intervention, it is also possible to ensure that all atoms are identified accurately. However, the focus of this framework is an automated transformation from microscopic images, via ML predictions, directly to simulation environments. As part of Stage 3, simulation objects (bulk, supercell, or surface) are created (c) using AtomAI utility functions. These objects are constructed such that the simulation cells (cubic or any Bravais lattice type of the user’s choice) accommodate all atoms with acceptable bond lengths under imposed periodic boundary conditions, as recognized from the specific sample view or perspective.
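The cell-construction step can be illustrated with a minimal sketch. It is not the AtomAI utility; it assumes positions are already in Å, a cubic cell, and a user-chosen vacuum padding (the function name `build_cubic_cell` and the 10 Å default are hypothetical). The cell edge is the largest extent of the atoms plus the padding, and the atoms are shifted so that their bounding box is centered in the cell.

```python
def build_cubic_cell(positions, vacuum=10.0):
    """Return (cell_edge, centered_positions) for a cubic periodic box.

    positions: list of (x, y, z) tuples in angstrom.
    vacuum: padding added to the largest extent, so periodic images are
            separated by at least `vacuum` along every Cartesian axis
            (atomic radii are ignored in this sketch).
    """
    xs, ys, zs = zip(*positions)
    extents = [max(c) - min(c) for c in (xs, ys, zs)]
    edge = max(extents) + vacuum
    # shift so the bounding-box centre sits at the cell centre
    centre = [(max(c) + min(c)) / 2 for c in (xs, ys, zs)]
    shift = [edge / 2 - c for c in centre]
    centered = [tuple(p[i] + shift[i] for i in range(3)) for p in positions]
    return edge, centered


edge, centered = build_cubic_cell([(0.0, 0.0, 0.0), (1.42, 0.0, 0.0), (2.13, 1.23, 0.0)])
print(round(edge, 2))  # 12.13
```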

The figure shows a STEM image frame (a) of graphene, all C atoms and defects (Si) as recognized by the DCNN (b), the corresponding simulation object (c), and an example patch (identified region of interest) used (d) to perform DFT and AIMD simulations. The blue atoms represent Si atoms, and the brown ones are C atoms. Here, we note that some atomic species were misclassified, but this did not affect the workflow because we cropped a high-certainty (and correctly classified) region. Such misclassifications are to be expected during actual real-time deployment of the workflow.

We note that a total of 2021 C and 22 Si atoms were detected in this image frame. While performing DFT computations with this many atoms is possible given advances in parallelized codes and computational power, it depends heavily on the availability of such resources. Furthermore, exploring the full structure may not even be interesting from a physics point of view. As an alternative, we can use our domain knowledge to identify several parts, or patches, such as those containing defects, and explore these regions of interest. In addition, the system is continuous within one image frame, meaning that the graphene sheet spans the full frame, in either the lattice or the amorphous phase. For the simulations, however, we retain only the lattice phase and treat the amorphous region as vacuum within the DFT framework. Hence, for any “cropped” structure we terminate all twofold-coordinated C atoms with H, irrespective of whether they were surrounded by the graphene lattice or the amorphous phase in the original structure, to maintain charge neutrality and stoichiometry. Figure 3d shows one of the identified patches used to perform simulations to obtain the optimized geometry and investigate electronic properties and temperature-dependent dynamical evolution.
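The H-termination rule can be sketched as follows. This is an illustration under stated assumptions rather than the authors’ code: the patch is taken as flat (z = 0), two C atoms are considered bonded below a hypothetical 1.7 Å cutoff, and each H is placed at the 1.09 Å C–H distance obtained in the full optimization, pointing away from the bisector of the two existing C–C bonds. The function name `add_terminating_h` is hypothetical.

```python
import math


def add_terminating_h(carbons, cutoff=1.7, ch_bond=1.09):
    """Return H positions terminating every twofold-coordinated C atom.

    carbons: list of (x, y) C positions in angstrom (planar patch assumed).
    cutoff: assumed C-C bonding distance threshold in angstrom.
    """
    hydrogens = []
    for i, (xi, yi) in enumerate(carbons):
        neighbours = [
            (xj, yj) for j, (xj, yj) in enumerate(carbons)
            if j != i and math.hypot(xj - xi, yj - yi) < cutoff
        ]
        if len(neighbours) != 2:
            continue  # only twofold-coordinated C atoms are terminated
        # sum of unit vectors from this C towards its two C neighbours
        sx = sy = 0.0
        for xj, yj in neighbours:
            d = math.hypot(xj - xi, yj - yi)
            sx += (xj - xi) / d
            sy += (yj - yi) / d
        norm = math.hypot(sx, sy)
        if norm < 1e-8:
            continue  # collinear neighbours: outward direction undefined
        # place H opposite to the bond bisector, at the C-H bond length
        hydrogens.append((xi - ch_bond * sx / norm, yi - ch_bond * sy / norm))
    return hydrogens


# a benzene-like hexagon: every C has two C neighbours, so six H are added
hexagon = [(1.42 * math.cos(k * math.pi / 3), 1.42 * math.sin(k * math.pi / 3)) for k in range(6)]
print(len(add_terminating_h(hexagon)))  # 6
```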

Details on DL models

For DL-based image analysis, individual images are labeled at the pixel level, where each pixel is assigned to an atom, an impurity, or the background. Recognizing features represented by individual pixels is referred to as semantic segmentation. This differs from a typical image-classification task on natural images, where the image is categorized as a whole. Each graphene image frame has dimensions of 896 × 896 pixels (height × width), and 100 such frames are combined to construct the training set. Based on the STEM scan size, each pixel corresponds to 0.104 Å, which is used to convert pixel positions into Cartesian coordinates. A U-Net82-type neural network, as used in this workflow, takes the images as input and outputs clean images of circular “blobs” on a uniform background, from which the (x,y) centers of atoms can be identified. At the initial stages of the DL workflow, atoms are classified based on the variation in intensity using a Gaussian mixture model. At later stages of training, this information is used to generate a multi-label segmentation mask for multi-class classification of both types of atoms present in the system. Here, the predictions are multi-class, representing the C and Si atoms. We used an ensemble setting35 so that an artifact-free model, or a subset of models, can be identified that does not predict any ‘unphysical features’ in experimental data; the ensemble can also provide robustness and pixel-wise uncertainty estimates as one transitions from one experiment to another. The uncertainty is estimated as the standard deviation of the ensemble predictions for each pixel, so our predictions within the DL framework yield spatial maps of uncertainty estimates. Regions characterized by high uncertainty may contain “unknown” defects or species that were not part of the training data. Such regions can then be investigated more closely and explored with DFT/MD (e.g., by adding functional groups). This procedure can be repeated multiple times to achieve a high detection rate for the entire dynamical dataset. In addition, the DL framework used here may also help to minimize out-of-distribution effects83 present in the observational space due to variations in experimental parameters. Using only atom positions predicted with high confidence is crucial before these DL coordinates are transformed into simulation objects, so that each simulation starts from a meaningful initial state.
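The post-processing described above can be sketched in plain Python. This is an illustrative stand-in, not the AtomAI implementation, and it rests on several assumptions: each ensemble member outputs a per-pixel probability map for one atom class; the ensemble-mean map is thresholded at a hypothetical 0.5; 4-connected pixels above threshold form one blob; the blob centroid is converted to Å with the 0.104 Å/pixel scale; and blobs whose mean pixel-wise ensemble standard deviation exceeds a hypothetical cutoff are discarded before anything is passed to a simulation.

```python
import statistics

PIXEL_SIZE_A = 0.104  # angstrom per pixel, from the STEM scan size


def ensemble_stats(maps):
    """Pixel-wise mean and standard deviation over ensemble probability maps.

    maps: list of 2D lists (one per ensemble member), all the same shape.
    """
    h, w = len(maps[0]), len(maps[0][0])
    mean = [[statistics.fmean([m[r][c] for m in maps]) for c in range(w)] for r in range(h)]
    std = [[statistics.pstdev([m[r][c] for m in maps]) for c in range(w)] for r in range(h)]
    return mean, std


def find_atoms(maps, threshold=0.5, max_std=0.2):
    """Return (x, y, blob_std) in angstrom for each sufficiently confident blob."""
    mean, std = ensemble_stats(maps)
    h, w = len(mean), len(mean[0])
    seen = [[False] * w for _ in range(h)]
    atoms = []
    for r0 in range(h):
        for c0 in range(w):
            if seen[r0][c0] or mean[r0][c0] < threshold:
                continue
            # flood fill collects every pixel belonging to this blob
            seen[r0][c0] = True
            stack, blob = [(r0, c0)], []
            while stack:
                r, c = stack.pop()
                blob.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < h and 0 <= cc < w and not seen[rr][cc]
                            and mean[rr][cc] >= threshold):
                        seen[rr][cc] = True
                        stack.append((rr, cc))
            blob_std = statistics.fmean([std[r][c] for r, c in blob])
            if blob_std > max_std:
                continue  # too uncertain to seed a simulation
            # unweighted centroid; columns map to x and rows to y
            x = statistics.fmean([c for _, c in blob]) * PIXEL_SIZE_A
            y = statistics.fmean([r for r, _ in blob]) * PIXEL_SIZE_A
            atoms.append((x, y, blob_std))
    return atoms
```

In a real frame, the same filtering would be applied per class (C and Si) on 896 × 896 maps, where an array library would be the practical choice; plain lists are used here only to keep the sketch dependency-free.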

Geometry reconstruction using first-principles computations

All first-principles computations are performed using DFT within the generalized gradient approximation (GGA); more details on the computations are given in the Methods section. To generalize this framework and make it more open-access, we first explored Python-based codes with computationally inexpensive algorithms to optimize the electronic structure and then used the resulting system to perform MD simulations. More details on this implementation can be found in the associated Jupyter notebook listed in the “Code availability” section. It is important to mention that appropriate geometries of such structures cannot be reconstructed with methods such as quasi-Newton algorithms and pair potentials for studying dynamics, as implemented in popular Python-based frameworks84,85.

Hence, we employed DFT within GGA using VASP to first obtain the optimized structures. Once the forces and stress tensors are minimized within the given criteria by solving the Kohn–Sham (KS) equations, the optimized geometry is imported into the AIMD environment. DFT is known to yield reasonable geometries, meaning lattice parameters, bond angles, bond lengths, and atomic coordinates for a bulk or surface cell, that are comparable to those obtained from experiments. We note that more detailed techniques, such as coupled cluster or even DFT with the inclusion of van der Waals interactions, would be more appropriate for capturing the dispersion interactions that are important for graphene surfaces. However, these are computationally demanding and beyond the scope of this paper, which focuses on establishing a workflow that goes from a microscopic image to simulations of the recognized features with the help of DL approaches.

We started by constructing a graphene supercell (a patch extracted from the full ~2000-atom supercell) of 91 C atoms with lattice parameters a = b = c = 25.786 Å and α = β = γ = 90°, in which all twofold-coordinated C atoms are terminated with H atoms. In addition to a full geometry optimization, in which all atomic positions (C and H), the cell volume, and the cell shape are relaxed to the ground state, we designed different configurations to perform selective dynamics within the DFT simulations. These include computations in which (a) the positions of all perimeter atoms are fixed, (b) the coordinates along a and b (x and y) are fixed and only coordinates along z are allowed to relax, and (c) the positions of all H atoms remain fixed. The resulting structures are shown in Fig. 4a–d. The average C–C and C–H bond lengths resulting from the full geometry optimization, corresponding to Fig. 4a, are 1.42 and 1.09 Å, respectively. For the simulations performed with selective dynamics, the C–C and C–H bond lengths vary between 1.43–1.50 Å and 0.94–1.04 Å, respectively. Such changes can be attributed to changes in the charge densities of the atoms, especially around the already existing defect regions86,87. We also observe a potential carbon-cluster defect region that was already present in the initial state (it may have formed during growth or been induced by the electron beam). The structures are all metallic in nature, as expected. Whether this region evolves into one of the common defects, such as a Stone–Wales (5-7-7-5) or 5-8-5 defect, at higher temperatures can be explored using AIMD simulations under various annealing conditions or environments. Comparing the x, y, and z coordinates of all C atoms obtained after the DFT simulation with those predicted by DL, the average percentage error in the coordinates along the x and y directions is below 5%, which is reasonable. The full list of coordinates from both predictions, along with point-by-point errors, can be found in Table 1 of the Supplementary Material. Overall, as this stage of the workflow shows, the optimized geometry of this representative patch can be reconstructed very well by DFT computations starting from the ML-predicted coordinates.
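The bond-length averages and the DL-versus-DFT coordinate comparison above can be sketched as follows, assuming both coordinate sets share the same atom ordering and origin. Percentage errors on absolute coordinates depend on the choice of origin, so this is meaningful only within one fixed frame. The 1.7 Å bonding cutoff and both function names are hypothetical.

```python
import math


def mean_bond_length(symbols, positions, pair=("C", "C"), cutoff=1.7):
    """Average distance over all atom pairs of the given species within cutoff (angstrom)."""
    target = sorted(pair)
    lengths = []
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if sorted((symbols[i], symbols[j])) != target:
                continue
            d = math.dist(positions[i], positions[j])
            if d < cutoff:
                lengths.append(d)
    return sum(lengths) / len(lengths) if lengths else float("nan")


def mean_percent_error(predicted, optimized, axis):
    """Mean of |optimized - predicted| / |predicted| * 100 along one axis (0 = x, 1 = y)."""
    errors = [
        abs(o[axis] - p[axis]) / abs(p[axis]) * 100
        for p, o in zip(predicted, optimized)
        if abs(p[axis]) > 1e-8  # a coordinate at the origin has no defined relative error
    ]
    return sum(errors) / len(errors) if errors else float("nan")


symbols = ["C", "C", "H"]
positions = [(0.0, 0.0, 0.0), (1.42, 0.0, 0.0), (-1.09, 0.0, 0.0)]
print(round(mean_bond_length(symbols, positions, ("C", "H")), 2))  # 1.09
```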

Temperature-dependent system evolutions using AIMD simulations

To study the temperature-dependent behavior of the fully relaxed system obtained from DFT at 0 K, we apply AIMD within VASP. A Nosé–Hoover thermostat controls the heat bath at temperatures of 300, 500, 700, 900, 1200, 2000, 3000, 4000, and 5000 K. The simulations are performed for 2 ps, and the remaining computational details can be found in the Methods section.
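For illustration, the temperature series above could be generated as a set of VASP INCAR fragments. This is a hedged sketch: IBRION = 0 (molecular dynamics), MDALGO = 2 (Nosé–Hoover thermostat), TEBEG/TEEND (start/end temperature), POTIM (time step in fs), NSW (number of ionic steps), and SMASS (Nosé mass parameter) are standard VASP tags, but the 1 fs time step and the SMASS value are assumptions, not the settings reported in the Methods. NSW is derived from the 2 ps duration, and electronic-structure tags are omitted; the function name `aimd_incar` is hypothetical.

```python
def aimd_incar(temperature_k, duration_ps=2.0, timestep_fs=1.0, smass=0.0):
    """Return the MD-related INCAR lines for an NVT AIMD run (sketch only)."""
    nsw = int(round(duration_ps * 1000.0 / timestep_fs))  # number of MD steps
    tags = {
        "IBRION": 0,             # molecular dynamics
        "MDALGO": 2,             # Nose-Hoover thermostat
        "TEBEG": temperature_k,  # starting temperature (K)
        "TEEND": temperature_k,  # same end temperature: constant target
        "POTIM": timestep_fs,    # time step in fs (assumed value)
        "NSW": nsw,
        "SMASS": smass,          # Nose mass parameter (assumed value)
    }
    return "\n".join(f"{key} = {value}" for key, value in tags.items()) + "\n"


TEMPERATURES_K = [300, 500, 700, 900, 1200, 2000, 3000, 4000, 5000]
incars = {t: aimd_incar(t) for t in TEMPERATURES_K}
print(incars[300])
```

Halving the time step to 0.5 fs would double NSW to 4000 for the same 2 ps trajectory, which is the main cost lever when heavier or faster-moving species are involved.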

Atomic displacements are largest in the x and y directions and increase with temperature, while the bond angles between C atoms inside the hexagonal, heptagonal, and pentagonal rings, along with the angles between shared atoms belonging to each type of ring, vary by 1–5° depending on the temperature, up to 1200 K. The distances between C atoms in the heptagonal and pentagonal rings present at 300 and 1200 K are marked and listed in Table 1. The resulting structures, however, do not show many changes or reconstructions relative to each other in this temperature range. Once the system is heated to 4000 K, the defects propagate and rearrange to form 5-7-7-5 defects. The bond lengths of the newly formed pentagonal and heptagonal rings are listed in Table 2. The average bond lengths in the pentagonal, hexagonal, and heptagonal rings at 300 K are 1.713, 1.443, and 1.447 Å, respectively. At 2000 K, only hexagonal rings remain, with a changed average bond length of 1.422 Å. As the temperature rises to 4000 K, the system fully reconstructs to form 5-7-7-5 defects, with average bond lengths of 1.430 and 1.606 Å in the seven- and five-membered rings, respectively.
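Ring-averaged bond lengths of the kind quoted above can be computed from a trajectory frame once each ring is given as an ordered list of atom indices. Ring perception itself is assumed to have been done beforehand, and the function names are hypothetical. Note that this sketch averages per-ring means, so a bond shared by two rings contributes to both; the averaging convention used for the values in the text is not specified here.

```python
import math


def ring_mean_bond_length(positions, ring):
    """Mean bond length around one ring.

    positions: sequence of (x, y, z) in angstrom.
    ring: atom indices in cyclic order; the last-to-first bond closes the ring.
    """
    n = len(ring)
    total = sum(
        math.dist(positions[ring[k]], positions[ring[(k + 1) % n]]) for k in range(n)
    )
    return total / n


def mean_by_ring_size(positions, rings):
    """Group rings by size (5, 6, 7, ...) and average their per-ring mean bond lengths."""
    groups = {}
    for ring in rings:
        groups.setdefault(len(ring), []).append(ring_mean_bond_length(positions, ring))
    return {size: sum(vals) / len(vals) for size, vals in sorted(groups.items())}
```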

Temperature-dependent system evolutions with ad-atoms

The presence of impurities is quite common in materials and can alter electronic properties as well as introduce point defects, making materials suitable for technological applications such as electronic and optoelectronic devices, gas sensors, biosensors, and batteries for energy storage. For graphene, two types of defects88,89,90 are common. One is the Stone–Wales defect, generated by a pure reconstruction of the graphene lattice (switching between pentagons, hexagons, and heptagons), in which no atoms are added or removed; the previous section explored this scenario. The other is defect reconstruction, which can originate either from removing an atom from its lattice position, such that the structure relaxes into a lower-energy state with a different bonding geometry, or from adding a foreign atom at bridge, hollow, or top sites, potentially leading to different bonding with the graphene atoms.

As example cases, we studied two scenarios at different temperatures: adding as ad-atoms (a) a transition-metal atom91, Cr, and (b) CH, CH2, and CH3 groups92. The first choice is mostly driven by previously studied metal–carbon binding effects, with direct applications in developing battery materials, whereas the second is motivated by the self-healing of graphene sheets observed under electron beams. For both studies, the configurational space to be explored is huge in terms of the initial positions of the ad-atoms, the distances between the atoms and the surface, and the bonds to be broken to see whether the graphene sheet can rearrange itself. This also creates a computational challenge for such a workflow to investigate all possible configurations. A sample-averaging technique can be employed to perform such studies93. For this project, we limited ourselves to probing a few representative cases. For the Cr ad-atom, we chose one configuration for each of the bridge, hollow, and top sites; for the CH groups, three different initial configurations were constructed by breaking bonds between C and H atoms and placing the molecules on top. The dynamics are then explored using AIMD simulations at 300 K.
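To make the three candidate sites concrete, here is a minimal sketch assuming an ideal graphene hexagon with the 1.42 Å C–C distance obtained above for the relaxed patch and a hypothetical 2.0 Å initial ad-atom height: the top site sits above a C atom, the bridge site above the midpoint of a C–C bond, and the hollow site above the center of a hexagon. The function name `graphene_sites` is hypothetical, and real initial configurations would be placed relative to the actual relaxed patch.

```python
import math


def graphene_sites(a_cc=1.42, height=2.0):
    """Return example top, bridge, and hollow ad-atom positions (x, y, z) in angstrom.

    Uses one ideal hexagon centred at the origin in the z = 0 plane;
    `height` is an assumed starting distance of the ad-atom above the sheet.
    """
    # in a regular hexagon the vertices lie at radius a_cc from the centre
    c0 = (a_cc, 0.0)
    c1 = (a_cc * math.cos(math.pi / 3), a_cc * math.sin(math.pi / 3))
    return {
        "top": (c0[0], c0[1], height),  # directly above a C atom
        "bridge": ((c0[0] + c1[0]) / 2, (c0[1] + c1[1]) / 2, height),  # above a C-C bond midpoint
        "hollow": (0.0, 0.0, height),  # above the hexagon centre
    }


for name, pos in graphene_sites().items():
    print(name, tuple(round(v, 3) for v in pos))
```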

A Cr atom is added to one of the bridge, hollow, and top sites in the graphene cell, as shown in Fig. 5a, c, e. Magnetism was not considered for the Cr atom. The ad-atom initially assigned to the bridge site tends to diffuse into the system, breaking bonds in the hexagonal rings at 300 K after 10 fs, as shown in Fig. 5b. At the hollow site, the metal atom forms a stable bond with the nearest C atoms, with an average bond length of 2.165 Å, and maintains this configuration for the entire simulation, as depicted in Fig. 5d. The ad-atom added to the top site (Fig. 5f) shows the largest displacement in the c direction, at a distance of 2.237 Å from the closest in-plane C atom. Comparing the total energies of −910.514, −920.305, and −923.351 eV for the bridge, top, and hollow sites, respectively, the hollow site is the most stable. We note that spin-polarized computations, along with detailed band-structure analyses, are needed to further account for the temperature dependence of the adsorption energies, which turn out to be above −8 eV in this nonmagnetic picture. The adsorption energy is lowest at the hollow site, in reasonable agreement with values reported in the literature. The C–Cr bond distances can also be varied, in which case the binding energy tends to increase as the distance decreases, leading towards chemical adsorption. This relates to the hybridization of the 3d orbitals of the metal ad-atom (applicable to other transition-metal atoms with partially unoccupied d states) with the p states of graphene, and to the localized behavior of these orbitals.
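The site comparison above reduces to simple arithmetic on the reported total energies, which is meaningful here because all three cells contain the same atoms. The sketch below ranks the sites relative to the most stable one and also writes out the conventional adsorption-energy definition, E_ads = E(graphene + ad-atom) − E(graphene) − E(isolated ad-atom). That definition is assumed rather than taken from this section, and the reference energies it needs are not given here, so they remain inputs. Both function names are hypothetical.

```python
# total energies (eV) reported above for the nonmagnetic Cr calculations
TOTAL_ENERGY_EV = {"bridge": -910.514, "top": -920.305, "hollow": -923.351}


def rank_sites(total_energies):
    """Sort sites from most to least stable, with energies relative to the best site (eV)."""
    ordered = sorted(total_energies.items(), key=lambda kv: kv[1])
    e_min = ordered[0][1]
    return [(site, round(e - e_min, 3)) for site, e in ordered]


def adsorption_energy(e_complex, e_substrate, e_adsorbate):
    """Conventional definition (assumed): more negative means stronger binding."""
    return e_complex - e_substrate - e_adsorbate


print(rank_sites(TOTAL_ENERGY_EV))
```

With these numbers, the top site lies about 3.05 eV and the bridge site about 12.84 eV above the hollow site.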

The well-studied healing process of graphene involves hydrocarbon groups present as impurities, whose C atoms interact with hole regions and participate in the reconstruction of hexagonal and other rings in the sheet. The results of AIMD simulations performed at room temperature, shown in Figs. 6, 7, show the interaction of C atoms with the ad-atoms to reconstruct the geometry. The holes heal completely or partially (not all hexagonal rings are recovered) in all cases, depending on variations in the energy landscape. Examples of such healing are observed for the CH3 molecule in all configurations after 2 ps. The corresponding adsorption energies are −3.709, −7.220, and −4.306 eV for the three systems displayed in Fig. 6, respectively. In the CH and CH2 cases, the molecules tend to attach or bond to the C atoms at this temperature rather than undergoing the rearrangements required for healing, although interactions of these molecules with the edge atoms can still be observed. Depending on the energy barriers, some atoms from these hydrocarbons are expected to remain in the environment rather than being adsorbed onto the surface, as also seen in the example of the CH3 molecule. The adsorption energies of the CH and CH2 molecules in these configurations vary between −7.018 and −14.549 eV. These results also show a strong dependence on the choice of the initial bond configuration and of the distances between the molecule and the surface, as well as on temperature. This is particularly important and opens avenues to explore electron-beam effects, where these mechanisms can be studied under a high-intensity beam that acts as a local heat source raising the temperature of the graphene surface.

Regions of interest and end-to-end workflow

From the above studies, it is evident that geometries can be reconstructed with first-principles simulations based on coordinates predicted by a DL framework trained on microscopic images. This can be expanded to incorporate edge computing: direct transfer of image-based data from microscopes via light edge devices such as the Nvidia Jetson series, analysis and training of DL networks on a GPU-based platform, simulations on CPU/GPU-based high-performance computing resources, and feedback to the human in the loop, all on the fly, to better guide experiments while learning from theoretical models. Furthermore, the simulation results obtained in this study can be used to choose regions of interest for studying chemical or physical adsorption, or healing mechanisms, under different experimental conditions. The fully constructed simulation cell can be sampled through randomly selected patches, and the similarity of the information they carry can be used to select regions to be investigated in subsequent runs. Such outputs can also be used to improve predictions with higher uncertainties. While the DL networks and the prediction of coordinates of the system of interest rely entirely on open-source packages, in the simulation stage it is up to the user to choose the level of theoretical accuracy and the corresponding potentials or force fields to enable the active causal loop. This framework thus also adheres to the FAIR37 principles, which are necessary to enable findability, accessibility, interoperability, and reusability of data in scientific domains.
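One possible way to realize the patch-sampling idea is sketched below. It is an assumption-laden illustration rather than the workflow’s implementation: patches are square windows of a hypothetical half-width centered on randomly chosen atoms; each patch is summarized by a small descriptor (atom count, Si count, and mean per-atom uncertainty); and the patch with the most Si atoms among those below a hypothetical uncertainty cutoff is proposed as the next region of interest. The function name `sample_patches` and all default values are hypothetical.

```python
import random


def sample_patches(atoms, n_patches=50, half_width=8.0, max_uncertainty=0.1, seed=0):
    """Propose a region of interest from randomly centred square patches.

    atoms: list of (symbol, x, y, uncertainty) with x, y in angstrom.
    Returns ((cx, cy), descriptor) for the chosen patch, or None if every
    sampled patch is too uncertain.
    """
    rng = random.Random(seed)
    best = None
    for _ in range(n_patches):
        _, cx, cy, _ = rng.choice(atoms)  # centre each patch on an existing atom
        inside = [
            a for a in atoms
            if abs(a[1] - cx) <= half_width and abs(a[2] - cy) <= half_width
        ]
        descriptor = {
            "n_atoms": len(inside),
            "n_si": sum(1 for a in inside if a[0] == "Si"),
            "mean_unc": sum(a[3] for a in inside) / len(inside),
        }
        if descriptor["mean_unc"] > max_uncertainty:
            continue  # avoid seeding simulations from low-confidence regions
        if best is None or descriptor["n_si"] > best[1]["n_si"]:
            best = ((cx, cy), descriptor)
    return best
```

A fuller version could compare descriptors between patches (e.g., by a similarity metric) to avoid re-simulating near-duplicate regions, in line with the similarity-based selection described above.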

In summary, we have established the first step towards direct on-the-fly data analytics and experiment augmentation in STEM by DFT and MD models. We emphasize that this vision, while actively discussed in the scientific community, is highly nontrivial due to the extreme disparity between the timescales experimentally accessible to STEM and those amenable to atomistic modeling, as well as the fundamentally different latencies of imaging and simulation. Matching the two therefore requires both the development of infrastructure linking the microscope data streams to the simulation environment and a solution to the coupled challenge of ML-based selection of regions of interest and simulation-based discovery, ultimately enabling feedback to the experiment. Here we have shown how an end-to-end workflow can be constructed to study several instances of graphene physics, from a microscopic image to simulations, with the help of DL. Although the simulations performed here could be carried out at a higher level of accuracy, they still demonstrate the efficacy of the framework, which relies on DL-based feature predictions from images to provide initial conditions for further theoretical studies.

Overall, this approach enables two significant advances for DL applications to both experiments and simulations. The first is the rapid exploration and analysis of images to extract features with associated uncertainties, together with a reasonable comparison between these predictions and computational simulations at different length scales. The second is the use of “the human in the loop”, together with results from observational and synthetic data, to improve the DL frameworks for better adaptability, even under experimental conditions that differ from those used in training. Finally, we propose that enabling this workflow will allow formulating specific physical and chemical challenges that push, but do not hopelessly exceed, the experimentally accessible regimes.

Methods

Sample preparation and imaging

The graphene sample used in this work was grown by atmospheric pressure chemical vapor deposition (AP-CVD), and STEM imaging was performed on a Nion UltraSTEM 200. All other details of the measurements can be found in ref. 35.

DFT and AIMD

Details on DFT simulations for the graphene movies: DFT calculations within the generalized gradient approximation (GGA) were performed using the projector augmented-wave (PAW) method and PAW-PBE potentials94, as implemented in the Vienna ab initio simulation package (VASP)95,96.

A graphene supercell of 91 C atoms (a patch extracted from the full 2,000-atom supercell) with lattice parameters a = b = c = 25.786 Å and α = β = γ = 90° was used as the initial structure for full geometry optimization. All twofold-coordinated C atoms were terminated with H atoms. The structure was optimized by relaxing the atomic positions until the Hellmann–Feynman forces were below 10⁻³ eV/Å. All geometry optimizations used a 400-eV plane-wave cutoff energy and a Monkhorst–Pack97 2 × 2 × 2 k-point mesh. Three constrained configurations were also optimized: (a) fixed positions of the perimeter atoms, (b) fixed coordinates along a and b (x and y), and (c) fixed positions of all H atoms.
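The H-termination of twofold-coordinated edge atoms can be illustrated with a short geometric sketch. It assumes a C–C bonding cutoff of 1.7 Å and places each H atom 1.09 Å from its C atom, opposite to the bisector of the two C–C bonds; both distances are typical values assumed here rather than parameters given in the paper, and `terminate_twofold` is a hypothetical helper, not a function from the authors' code.

```python
import math

CC_CUTOFF = 1.7  # Å, assumed C-C bonding cutoff
CH_BOND = 1.09   # Å, typical C-H bond length (assumption)


def neighbors(positions, cutoff=CC_CUTOFF):
    """Brute-force O(N^2) neighbour lists for atoms closer than `cutoff`."""
    nbrs = [[] for _ in positions]
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if math.dist(positions[i], positions[j]) < cutoff:
                nbrs[i].append(j)
                nbrs[j].append(i)
    return nbrs


def terminate_twofold(positions):
    """Return H positions capping every C atom that has exactly two C neighbours."""
    hydrogens = []
    for i, nb in enumerate(neighbors(positions)):
        if len(nb) != 2:
            continue
        # Sum of unit vectors pointing from atom i to its two neighbours
        s = [0.0, 0.0, 0.0]
        for j in nb:
            d = [positions[j][k] - positions[i][k] for k in range(3)]
            n = math.sqrt(sum(x * x for x in d))
            s = [s[k] + d[k] / n for k in range(3)]
        norm = math.sqrt(sum(x * x for x in s))
        if norm < 1e-8:  # collinear neighbours: no well-defined outward direction
            continue
        # Place H opposite to the bond bisector, i.e. pointing away from the flake
        hydrogens.append(tuple(positions[i][k] - CH_BOND * s[k] / norm for k in range(3)))
    return hydrogens
```

For a real patch, the returned H positions would be appended to the C coordinates before writing the input structure. The brute-force neighbor search is adequate for ~100 atoms, but a cell-list or library neighbor search would be preferable for the full supercell.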

Details on AIMD simulations for the graphene movies: ab initio MD (AIMD) simulations were performed using the projector augmented-wave (PAW) method and PAW-PBE potentials94, as implemented in the Vienna ab initio simulation package (VASP)95,96.

The fully optimized H-terminated structure obtained from DFT within GGA was used as the initial structure for all AIMD simulations. All computations used a 400-eV plane-wave cutoff energy with appropriate Monkhorst–Pack97 k-point meshes, at temperatures of 300, 500, 700, 900, and 1200 K, for 2000 time steps of 1 fs each (2 ps in total) with a Nosé–Hoover thermostat. To explore ad-atom adsorption, three sites were considered: bridge, hollow, and top. Metal ions such as Cr were placed at these sites to follow the evolution of the system at various temperatures. A further set of AIMD simulations added molecular groups such as CH, CH2, and CH3 to the surface to study temperature-dependent dynamics.
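The three ad-atom sites can be located geometrically on an ideal graphene lattice. The sketch below assumes a pristine sheet in the z = 0 plane with the standard C–C bond length of 1.42 Å; the default ad-atom height of 2.0 Å is an arbitrary illustrative value, not one from the paper, and `graphene_sites` is a hypothetical helper. In the defective patches studied here, the sites would instead be chosen relative to the local atoms.

```python
import math


def graphene_sites(d_cc=1.42, height=2.0):
    """Cartesian positions (Å) of the top, bridge and hollow sites above a graphene
    sheet in the z = 0 plane, with a sublattice-A carbon atom at the origin."""
    a = d_cc * math.sqrt(3.0)                    # lattice constant, ~2.46 Å
    a1 = (a, 0.0)
    a2 = (a / 2.0, a * math.sqrt(3.0) / 2.0)
    atom_a = (0.0, 0.0)
    # Sublattice-B atom: nearest neighbour of atom_a at distance d_cc
    atom_b = ((a1[0] + a2[0]) / 3.0, (a1[1] + a2[1]) / 3.0)
    # Hexagon centre: equidistant (d_cc) from six surrounding carbon atoms
    hollow = (2.0 * (a1[0] + a2[0]) / 3.0, 2.0 * (a1[1] + a2[1]) / 3.0)
    # Midpoint of the A-B bond
    bridge = ((atom_a[0] + atom_b[0]) / 2.0, (atom_a[1] + atom_b[1]) / 2.0)
    return {
        "top": (atom_a[0], atom_a[1], height),
        "bridge": (bridge[0], bridge[1], height),
        "hollow": (hollow[0], hollow[1], height),
    }
```

Each site position would then be translated to the chosen region of the optimized patch before the Cr atom is added and the AIMD run is started.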

The adsorption energy is calculated using the following equation:
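A conventional form of this definition, assumed here because it is consistent with the negative (favorable) adsorption energies reported above, is E_ads = E(graphene + adsorbate) − E(graphene) − E(adsorbate), where each term is the DFT total energy of the corresponding relaxed system. The short Python sketch below encodes that assumed convention; the function names are hypothetical.

```python
def adsorption_energy(e_complex, e_surface, e_adsorbate):
    """E_ads = E(surface + adsorbate) - E(surface) - E(adsorbate), all in eV.

    Assumed sign convention: a negative value means adsorption is favourable.
    """
    return e_complex - e_surface - e_adsorbate


def rank_sites(complex_energies, e_surface, e_adsorbate):
    """Return (site, E_ads) pairs sorted from most to least favourable."""
    results = {
        site: adsorption_energy(e, e_surface, e_adsorbate)
        for site, e in complex_energies.items()
    }
    return sorted(results.items(), key=lambda kv: kv[1])
```

With this sign convention, a more negative E_ads indicates stronger binding, so sorting in ascending order ranks configurations from most to least favorable.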

DFTB

Density functional based tight-binding (DFTB) and the extended tight-binding method enable simulations of large systems over relatively long timescales with reasonable accuracy, and they are considerably faster than typical ab initio DFT98. We incorporated the DFTB approach (version 21.1) into the workflow in the same way as described for DFT and AIMD. DFTB yielded reliable results for the graphene system (graphene–Si) on the full 2043-atom cell within a few hours. The optimized structure is shown in Fig. 1 of the Supplementary Material. Likewise, DFTB MD could be run for many picoseconds on the full system. This approximate DFT approach therefore permits the study of a more complete material system and the evaluation of non-local effects in self-healing and related processes.

Length and timescales for each step of the workflow

The deep learning models in this work were trained on a GPU (Nvidia Tesla K80) provided by the Google Colab platform. Training time varied with the training set size and network architecture. A typical U-Net model with a 2-3-3-4-3-3-2 architecture (the numbers give the convolutional layers in each U-Net block) takes ~0.5 h to train on ~4500 sub-images of 256 × 256 pixels. We generally train 20 models as an ensemble, either sequentially or in parallel depending on GPU availability. Feature prediction, together with creating a simulation object, takes a few seconds. A GPU with large memory is preferred to avoid memory issues during training or post-training analyses. For the 91-atom graphene supercell, a full geometry optimization takes up to ten CPU hours to converge, and the temperature-dependent AIMD simulations take a similar time. Geometry optimization of the full 2043-atom structure (with DFTB) converges within a few hours. This potentially reduces the overall time of the end-to-end workflow to a scale more amenable to feedback during a STEM experiment.
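The ensemble-training time quoted above follows from simple arithmetic. The sketch below assumes that models are distributed evenly over the available GPUs, that each GPU trains its share one model after another, and that per-model training time does not change when GPUs run in parallel; `ensemble_wall_time` is a hypothetical helper.

```python
import math


def ensemble_wall_time(n_models=20, hours_per_model=0.5, n_gpus=1):
    """Wall-clock hours to train an ensemble when models are split over n_gpus,
    each GPU training its share sequentially."""
    if n_gpus < 1:
        raise ValueError("n_gpus must be at least 1")
    rounds = math.ceil(n_models / n_gpus)  # the busiest GPU trains this many models
    return rounds * hours_per_model


for gpus in (1, 4, 20):
    print(gpus, "GPU(s):", ensemble_wall_time(n_gpus=gpus), "h")
```

With the values quoted above (20 models at ~0.5 h each), this gives about 10 h on a single GPU and 2.5 h on four GPUs, excluding data loading and any contention for shared resources.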

Data availability

The data used for all analyses are available through the Jupyter notebooks at https://github.com/aghosh92/ELIT.

Code availability

The functions used to simulate structures from DL predictions can be found at https://github.com/pycroscopy/atomai. Details of training the DL networks used in this work are available in the Jupyter notebook at https://github.com/aghosh92/ELIT. The Python-based implementations to construct simulation objects and perform MD simulations can be found at https://github.com/aghosh92/DCNN_MD. We typically initialize multiple independent AtomAI models with different random seeds, train them on separate GPUs, and combine them into an ensemble to obtain the feature predictions. The predictions are then imported into the CPU environment to perform DFT computations.
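The ensemble step described above can be sketched independently of any particular deep learning library. The code assumes that each trained model has already produced a per-pixel atom-probability map for the same image. It averages the maps, uses the per-pixel standard deviation as an uncertainty estimate, and converts 4-connected regions above a 0.5 threshold into atomic coordinates. The threshold, the connectivity rule, the `pixel_size` scale factor and the function names are assumptions for illustration; this is not the AtomAI API.

```python
import statistics
from collections import deque


def ensemble_stats(maps):
    """Per-pixel mean and population standard deviation over an ensemble of
    probability maps (2D lists of identical shape)."""
    h, w = len(maps[0]), len(maps[0][0])
    mean = [[statistics.fmean(m[i][j] for m in maps) for j in range(w)] for i in range(h)]
    std = [[statistics.pstdev([m[i][j] for m in maps]) for j in range(w)] for i in range(h)]
    return mean, std


def atom_centroids(prob, threshold=0.5, pixel_size=1.0):
    """(x, y) centroids, in units of pixel_size, of 4-connected regions where prob > threshold."""
    h, w = len(prob), len(prob[0])
    seen = [[False] * w for _ in range(h)]
    centroids = []
    for i in range(h):
        for j in range(w):
            if prob[i][j] <= threshold or seen[i][j]:
                continue
            # Breadth-first flood fill of one connected blob
            queue, pixels = deque([(i, j)]), []
            seen[i][j] = True
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w and not seen[rr][cc] and prob[rr][cc] > threshold:
                        seen[rr][cc] = True
                        queue.append((rr, cc))
            y = sum(p[0] for p in pixels) / len(pixels)
            x = sum(p[1] for p in pixels) / len(pixels)
            centroids.append((x * pixel_size, y * pixel_size))
    return centroids
```

Coordinates scaled from pixels to ångströms in this way are the kind of input from which a simulation cell can be built, while the uncertainty map flags regions where the ensemble members disagree.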

References

Pennycook, S. J. The impact of STEM aberration correction on materials science. Ultramicroscopy 180, 22–33 (2017).

Pennycook, S. J. Seeing the atoms more clearly: STEM imaging from the Crewe era to today. Ultramicroscopy 123, 28–37 (2012).

Gerber, C. & Lang, H. P. How the doors to the nanoworld were opened. Nat. Nanotechnol. 1, 3–5 (2006).

Barth, C., Foster, A. S., Henry, C. R. & Shluger, A. L. Recent trends in surface characterization and chemistry with high-resolution scanning force methods. Adv. Mater. 23, 477–501 (2011).

Bonnell, D. A. & Garra, J. Scanning probe microscopy of oxide surfaces: atomic structure and properties. Rep. Prog. Phys. 71, 044501 (2008).

Varela, M. et al. Spectroscopic imaging of single atoms within a bulk solid. Phys. Rev. Lett. 92, 095502 (2004).

Browning, N. D. et al. The influence of atomic structure on the formation of electrical barriers at grain boundaries in SrTiO3. Appl. Phys. Lett. 74, 2638–2640 (1999).

Nguyen, D. T., Findlay, S. D. & Etheridge, J. A menu of electron probes for optimising information from scanning transmission electron microscopy. Ultramicroscopy 184, 143–155 (2018).

Grillo, V. et al. Generation of nondiffracting electron Bessel beams. Phys. Rev. X 4, 7 (2014).

Grillo, V. et al. Observation of nanoscale magnetic fields using twisted electron beams. Nat. Commun. 8, 6 (2017).

Koh, A. L. et al. Electron energy-loss spectroscopy (EELS) of surface plasmons in single silver nanoparticles and dimers: influence of beam damage and mapping of dark modes. ACS Nano 3, 3015–3022 (2009).

Scholl, J. A., Koh, A. L. & Dionne, J. A. Quantum plasmon resonances of individual metallic nanoparticles. Nature 483, 421–427 (2012).

Senga, R. et al. Position and momentum mapping of vibrations in graphene nanostructures. Nature 573, 247–250 (2019).

Idrobo, J. C. et al. Temperature measurement by a nanoscale electron probe using energy gain and loss spectroscopy. Phys. Rev. Lett. 120, 095901 (2018).

Roushan, P. et al. Topological surface states protected from backscattering by chiral spin texture. Nature 460, 1106–1109 (2009).

Heinrich, A. J., Lutz, C. P., Gupta, J. A. & Eigler, D. M. Molecule cascades. Science 298, 1381–1387 (2002).

Batzill, M. & Diebold, U. The surface and materials science of tin oxide. Prog. Surf. Sci. 79, 47–154 (2005).

Acharya, D. P., Camillone, N. & Sutter, P. CO2 adsorption, diffusion, and electron-induced chemistry on rutile TiO2(110): a low-temperature scanning tunneling microscopy study. J. Phys. Chem. C 115, 12095–12105 (2011).

Schofield, S. R. et al. Atomically precise placement of single dopants in Si. Phys. Rev. Lett. 91, 136104 (2003).

Fuechsle, M. et al. A single-atom transistor. Nat. Nanotechnol. 7, 242–246 (2012).

Eigler, D. M., Lutz, C. P. & Rudge, W. E. An atomic switch realized with the scanning tunneling microscope. Nature 352, 600–603 (1991).

Kalinin, S. V. & Pennycook, S. J. Single-atom fabrication with electron and ion beams: From surfaces and two-dimensional materials toward three-dimensional atom-by-atom assembly. MRS Bull. 42, 637–643 (2017).

Markevich, A. et al. Electron beam controlled covalent attachment of small organic molecules to graphene. Nanoscale 8, 2711–2719 (2016).

Jiang, N. Electron beam damage in oxides: a review. Rep. Prog. Phys. 79, 016501 (2016).

Gonzalez-Martinez, I. G. et al. Electron-beam induced synthesis of nanostructures: a review. Nanoscale 8, 11340–11362 (2016).

Dyck, O., Kim, S., Kalinin, S. V. & Jesse, S. Placing single atoms in graphene with a scanning transmission electron microscope. Appl. Phys. Lett. 111, 113104 (2017).

Dyck, O., Kim, S., Jimenez-Izal, E., Alexandrova, A. N., Kalinin, S. V. & Jesse, S. Building structures atom by atom via electron beam manipulation. Small 14, 1801771 (2018).

Ziatdinov, M., Maksov, A. & Kalinin, S. V. Learning surface molecular structures via machine vision. npj Comput. Mater. 3, 1–9 (2017).

Ziatdinov, M., Dyck, O., Jesse, S. & Kalinin, S. V. Atomic mechanisms for the Si atom dynamics in graphene: chemical transformations at the edge and in the bulk. Adv. Funct. Mater. 29, 1904480 (2019).

Rashidi, M. & Wolkow, R. A. Autonomous scanning probe microscopy in situ tip conditioning through machine learning. ACS Nano 12, 5185–5189 (2018).

Ziatdinov, M. et al. Deep learning of atomically resolved scanning transmission electron microscopy images: chemical identification and tracking local transformations. ACS Nano 11, 12742–12752 (2017).

Gordon, O. M., Hodgkinson, J. E. A., Farley, S. M., Hunsicker, E. L. & Moriarty, P. J. Automated searching and identification of self-organized nanostructures. Nano Lett. 20, 7688–7693 (2020).

Horwath, J. P., Zakharov, D. N., Mégret, R. & Stach, E. A. Understanding important features of deep learning models for segmentation of high-resolution transmission electron microscopy images. npj Comput. Mater. 6, 1–9 (2020).

Lee, C.-H. et al. Deep learning enabled strain mapping of single-atom defects in two-dimensional transition metal dichalcogenides with sub-picometer precision. Nano Lett. 20, 3369–3377 (2020).

Ghosh, A., Sumpter, B. G., Dyck, O., Kalinin, S. V. & Ziatdinov, M. Ensemble learning-iterative training machine learning for uncertainty quantification and automated experiment in atom-resolved microscopy. npj Comput. Mater. 7, 1–8 (2021).

Ziatdinov, M., Ghosh, A., Wong, T. & Kalinin, S. V. AtomAI: a deep learning framework for analysis of image and spectroscopy data in (Scanning) transmission electron microscopy and beyond. Preprint at https://arxiv.org/pdf/2105.07485.pdf (2021).

Mons, B. et al. Cloudy, increasingly FAIR; revisiting the FAIR data guiding principles for the European Open Science Cloud. Inf. Serv. Use 37, 49–56 (2017).

Lin, R., Zhang, R., Wang, C., Yang, X. Q. & Xin, H. L. TEMImageNet training library and AtomSegNet deep-learning models for high-precision atom segmentation, localization, denoising, and deblurring of atomic-resolution images. Sci. Rep. 11, 1–15 (2021).

Jain, A. et al. The Materials Project: a materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

Hicks, D. et al. The AFLOW library of crystallographic prototypes: part 2. Comp. Mat. Sci. 161, S1–S1011 (2019).

Saal, J. E., Kirklin, S., Aykol, M., Meredig, B. & Wolverton, C. Materials design and discovery with high-throughput density functional theory: the open quantum materials database (OQMD). JOM 65, 1501–1509 (2013).

Mehl, M. J., Hicks, D., Toher, C., Levy, O., Hanson, R. M., Hart, G. & Curtarolo, S. The AFLOW library of crystallographic prototypes: Part 1. Comp. Mat. Sci. 136, S1–S828 (2017).

Kirklin, S., Saal, J. E., Meredig, B., Thompson, A., Doak, J. W., Aykol, M., Rühl, S. & Wolverton, C. The open quantum materials database (OQMD): assessing the accuracy of DFT formation energies. npj Comput. Mater. 1, 1–15 (2015).

Draxl, C. & Scheffler, M. The NOMAD laboratory: from data sharing to artificial intelligence. J. Phys. Mater. 2, 036001 (2019).

Jain, A. et al. The Materials Project: Accelerating Materials Design Through Theory-Driven Data and Tools (Springer, 2020).

Ghosh, A., Ronning, R. R., Nakhmanson, S. M. & Zhu, J.-X. Machine learning study of magnetism in uranium-based compounds. Phys. Rev. Mater. 4, 064414 (2020).

Ghosh, A. et al. Assessment of machine learning approaches for predicting the crystallization propensity of active pharmaceutical ingredients. CrystEngComm 21, 1215–1223 (2019).

Batra, R. Accurate machine learning in materials science facilitated by using diverse data sources. Nature 589, 524–525 (2021).

Botu, V., Batra, R., Chapman, J. & Ramprasad, R. Machine learning force fields: construction, validation, and outlook. J. Phys. Chem. C 121, 511–522 (2017).

Toher, C. et al. The AFLOW Fleet for Materials Discovery (Springer, 2020).

Pilania, G., Ghosh, A., Hartman, S. T., Mishra, R., Stanek, C. R. & Uberuaga, B. P. Anion order in oxysulfide perovskites: origins and implications. npj Comput. Mater. 6, 1–11 (2020).

Merz, K. M. Jr et al. Method and data sharing and reproducibility of scientific results. J. Chem. Inf. Model. 60, 5868–5869 (2020).

Chen, L. et al. Polymer informatics: current status and critical next steps. Mater. Sci. Eng. R. Rep. 144, 100595 (2021).

Meredig, B. et al. Combinatorial screening for new materials in unconstrained composition space with machine learning. Phys. Rev. B 89, 094104 (2014).

Artrith, N. et al. Best practices in machine learning for chemistry. Nat. Chem. 13, 505–508 (2021).

Isayev, O., Tropsha, A. & Curtarolo, S. Materials Informatics: Methods, Tools, and Applications. (John Wiley & Sons, 2019).

Isayev, O., Popova, M. & Tropsha, A. Methods, systems and non-transitory computer readable media for automated design of molecules with desired properties using artificial intelligence. US Patent 16,632,328 (2020).

Ramprasad, R., Batra, R., Pilania, G., Mannodi-Kanakkithodi, A. & Chiho, K. Machine learning in materials informatics: recent applications and prospects. npj Comput. Mater. 3, 1–13 (2017).

Schmidt, J., Marques, M. R., Botti, S. & Marques, M. A. Recent advances and applications of machine learning in solid-state materials science. npj Comput. Mater. 5, 1–36 (2019).

Snyder, J. C., Rupp, M., Hansen, K., Muller, K.-R. & Burke, K. Finding density functionals with machine learning. Phys. Rev. Lett. 108, 253002 (2012).

Stanev, V. et al. Machine learning modeling of superconducting critical temperature. npj Comput. Mater. 4, 1–14 (2018).

Sanvito, S. et al. Machine Learning and High-throughput Approaches to Magnetism (Springer, 2020).

Schwenker, E. Ingrained--An automated framework for fusing atomic-scale image simulations into experiments. Preprint at https://doi.org/10.1002/smll.202102960 (2021).

Schwenker, E. et al. EXSCLAIM!--An automated pipeline for the construction of labeled materials imaging datasets from literature. Preprint at https://arxiv.org/pdf/2103.10631.pdf (2021).

Lingerfelt, E. J. et al. BEAM: a computational workflow system for managing and modeling material characterization data in HPC environments. Procedia Comput. Sci. 80, 2276–2280 (2016).

Madsen, J. & Susi, T. The abTEM code: transmission electron microscopy from first principles. Open Res. Eur. 1, 24 (2021).

Dyck, O. et al. Building structures atom by atom via electron beam manipulation. Small 14, 1801771 (2018).

Dyck, O. et al. Doping of Cr in graphene using electron beam manipulation for functional defect engineering. ACS Appl. Nano Mater. 3, 10855–10863 (2020).

Dyck, O. et al. Doping transition-metal atoms in graphene for atomic-scale tailoring of electronic, magnetic, and quantum topological properties. Carbon 173, 205–214 (2021).

Dyck, O. et al. Electron-beam introduction of heteroatomic Pt–Si structures in graphene. Carbon 161, 750–757 (2020).

Lin, J., Zhang, Y., Zhou, W. & Pantelides, S. T. Structural flexibility and alloying in ultrathin transition-metal chalcogenide nanowires. ACS Nano 10, 2782–2790 (2016).

Sang, X. et al. In situ edge engineering in two-dimensional transition metal dichalcogenides. Nat. Commun. 9, 1–7 (2018).

Lehnert, T. et al. Electron-beam-driven structure evolution of single-layer MoTe2 for quantum devices. ACS Appl. Nano Mater. 2, 3262–3270 (2019).

Zhou, W. et al. Intrinsic structural defects in monolayer molybdenum disulfide. Nano Lett. 13, 2615–2622 (2013).

Hong, J. et al. Exploring atomic defects in molybdenum disulphide monolayers. Nat. Commun. 6, 1–8 (2015).

Susi, T. et al. Silicon–carbon bond inversions driven by 60-keV electrons in graphene. Phys. Rev. Lett. 113, 115501 (2014).

Yang, Z. et al. Direct observation of atomic dynamics and silicon doping at a topological defect in graphene. Angew. Chem. 126, 9054–9058 (2014).

Lee, J., Zhou, W., Pennycook, S. J., Idrobo, J. C. & Pantelides, S. T. Direct visualization of reversible dynamics in a Si6 cluster embedded in a graphene pore. Nat. Commun. 4, 1–7 (2013).

Robertson, A. W. et al. The role of the bridging atom in stabilizing odd numbered graphene vacancies. Nano Lett. 14, 3972–3980 (2014).

Robertson, A. W. et al. Stability and dynamics of the tetravacancy in graphene. Nano Lett. 14, 1634–1642 (2014).

He, Z. et al. Atomic structure and dynamics of metal dopant pairs in graphene. Nano Lett. 14, 3766–3772 (2014).

Ronneberger, O., Fischer, P. & Brox, T. U-net: convolutional networks for biomedical image segmentation. Med. Image Comput. Comput. Assist. Inter. 9351, 234–241 (2015).

Gulrajani, I. & Lopez-Paz, D. In search of lost domain generalization. Preprint at https://openreview.net/forum?id=lQdXeXDoWtI (2020).

Larsen, A. H. et al. The atomic simulation environment—a Python library for working with atoms. J. Phys. Condens. Matter 29, 273002 (2017).

Lingerfelt, D. B. et al. Nonadiabatic effects on defect diffusion in silicon-doped nanographenes. Nano Lett. 21, 236–242 (2021).

Warner, J. H. et al. Bond length and charge density variations within extended arm chair defects in graphene. ACS Nano 7, 9860–9866 (2013).

Skowron, S. T., Lebedeva, I. V., Popov, A. M. & Bichoutskaia, E. Energetics of atomic scale structure changes in graphene. Chem. Soc. Rev. 44, 3143–3176 (2015).

Aoki, H. & Dresselhaus, M. S. Physics of Graphene (Springer Science & Business Media, 2013).

Banhart, F., Kotakoski, J. & Krasheninnikov, A. V. Structural defects in graphene. ACS Nano 5, 26–41 (2011).

Liu, L., Qing, M., Wang, Y. & Chen, S. Defects in graphene: generation, healing, and their effects on the properties of graphene: a review. J. Mater. Sci. Technol. 31, 599–606 (2015).

Dyck, O., Yoon, M., Zhang, L., Lupini, A. R., Swett, J. L. & Jesse, S. Doping of Cr in graphene using electron beam manipulation for functional defect engineering. ACS Appl. Nano Mater. 3, 10855–10863 (2020).

Nakada, K. & Ishii, A. in Graphene Simulation (ed. Gong, J. R.) Ch. 1 (InTech, 2011).

Pártay, L. B., Bartók, A. P. & Csányi, G. Efficient sampling of atomic configurational spaces. J. Phys. Chem. B 114, 10502–10512 (2010).

Kresse, G. & Joubert, D. From ultrasoft pseudopotentials to the projector augmented-wave method. Phys. Rev. B 59, 1758 (1999).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169 (1996).

Kresse, G. & Hafner, J. Ab initio molecular dynamics for liquid metals. Phys. Rev. B 47, 558 (1993).

Monkhorst, H. J. & Pack, J. D. Special points for Brillouin-zone integrations. Phys. Rev. B 13, 5188 (1976).

Hourahine, B. et al. DFTB+, a software package for efficient approximate density functional theory based atomistic simulations. J. Chem. Phys. 152, 124101 (2020).

Acknowledgements

The development of the experiment-to-simulation pipeline was supported by the US Department of Energy (DOE), Office of Science, Office of Basic Energy Sciences Data, Artificial Intelligence Nanoscale Science Research (NSRC AI) Centers program (A.G., B.S., and S.V.K.). The STEM experiment was supported by the DOE, Office of Science, Basic Energy Sciences (BES), Materials Sciences and Engineering Division (O.D.). The development of deep learning models was supported by the Center for Nanophase Materials Sciences (CNMS), a DOE Office of Science User Facility at Oak Ridge National Laboratory (M.Z.).

Author information

Contributions

S.V.K., M.Z., and A.G. conceived the project. A.G. implemented the workflow and performed the simulations and all analyses. O.D. obtained the STEM data on graphene. M.Z. implemented the DL framework via the AtomAI and PyTorch libraries. B.S. ran MD simulations. A.G., M.Z., and S.V.K. wrote the drafts of the manuscript, while B.S. and O.D. contributed to the writing.

Ethics declarations

Competing interests

The authors declare no competing non-financial interests but the following competing financial interests. Sergei V. Kalinin is a member of the Editorial Board for npj Computational Materials. S.V.K. was not involved in the journal’s review of or decisions related to this manuscript. The remaining authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ghosh, A., Ziatdinov, M., Dyck, O. et al. Bridging microscopy with molecular dynamics and quantum simulations: an atomAI based pipeline. npj Comput Mater 8, 74 (2022). https://doi.org/10.1038/s41524-022-00733-7
