Abstract

We present a method to model interatomic interactions, in terms of energies and forces, in a computationally efficient way. The proposed model approximates the energy/forces using a linear combination of random features, thereby enabling fast parameter estimation by solving a linear least-squares problem. We discuss how random features based on stationary and non-stationary kernels can be used for energy approximation and provide results for three classes of materials, namely two-dimensional materials, metals and semiconductors. Force and energy predictions made using the proposed method are in close agreement with density functional theory calculations, with training time that is 96% lower than that of standard kernel models. Molecular dynamics calculations using random-features-based interatomic potentials are shown to agree well with experimental and density functional theory values. Phonon frequencies computed with random-features-based interatomic potentials are within 0.1% of the density functional theory results. Furthermore, the proposed random-features-based potential addresses scalability issues encountered in this class of machine learning problems.


Introduction

Classical molecular dynamics (MD) based on empirical force fields has emerged as a powerful technique for predicting material properties and behaviour at the atomistic scale. This is because empirical models of interatomic interactions enable significantly faster and more scalable computations than ab initio methods such as density functional theory (DFT)1. The central component of any MD simulation is the interatomic potential (IP), the function that determines interatomic interactions1,2. The choice of IP can significantly alter property predictions, thus affecting the insights derived from the corresponding MD simulations3,4. It has been shown that IPs based on physical and chemical theories can be sensitive to small changes in their parameters5,6. Furthermore, owing to their rigid, physically motivated functional forms, they cannot be generalized across different material systems. An alternative is to use IPs developed using machine learning (ML) tools7,8,9,10,11,12,13,14,15,16,17. These models make fewer assumptions about the functional form of the IP and can be easily generalized to different material systems10,18.

The most popular ML approaches for IP development include linear regression12,14, kernel regression models7,15, and neural networks8,11. All of these models take atomic descriptors, functions describing the local atomic environment, as inputs and predict the energy and its derivatives as outputs19,20. More recently, Zhang et al.21 and Jia et al.22 proposed neural-network-based IPs that use the atomic coordinates as input and learn the underlying descriptors through an embedding neural network; the embedding and prediction networks are trained end to end. Reviews of ML-based IPs can be found in refs. 16,17,23,24,25,26,27. The present study focuses on kernel regression models such as the Gaussian approximation potential (GAP)7. GAP is based on Gaussian processes and approximates the local atomic energy with a kernel defining the similarity between different atomic descriptors. This kernel-based IP has been used to predict energies and forces for a wide range of materials, including metals, semiconductors, and amorphous solids9,28,29,30,31,32. Another popular IP based on kernel regression is the Adaptive Generalizable Neighbourhood Informed (AGNI) potential, which has mainly been used for modelling interactions in metals15.

Parameter estimation in standard kernel methods typically costs \({\mathcal{O}}(N^{3})\), where N is the number of training points. In addition, standard kernel methods require storage and factorization of a dense N × N Gram matrix, which poses a significant computational and memory bottleneck for large N. In IP learning applications, N can easily be in the range of \(10^{6}\)–\(10^{7}\), requiring significant computational resources for parameter estimation. The large Gram matrix also hinders hyper-parameter tuning, where multiple model runs are required to identify good settings for hyper-parameters such as kernel length scales and the cutoff radius. Slow training becomes a bottleneck when more than one element is involved (e.g., alloys) or when active learning methods are used for training13,33. Another major disadvantage of kernel methods is that prediction at a new point costs \({\mathcal{O}}(ND)\), where D is the number of input features. Depending on the descriptors used, D can easily be of the order of \(10^{2}\), leading to slower MD run times. In the GAP approach, a sparse approximation is used to reduce the training complexity to \({\mathcal{O}}({N^{\prime}}^{2}N)\) and the prediction complexity to \({\mathcal{O}}(N^{\prime}D)\), where \(N^{\prime}\) denotes the number of sparse points7.

To address the high computational cost of applying ML to learn IPs, this study presents a method that approximates the local atomic energy as a linear combination of random features associated with a kernel. The basis functions used for energy modelling form a low-dimensional representation of the infinite-dimensional feature space associated with a kernel. The models presented here are tested using random features based on both stationary and non-stationary kernels. Energy and forces are predicted for three material systems: graphene, diamond, and tungsten. For each material system, the mean absolute error (MAE) of force predictions relative to the corresponding DFT results is of the order of \(10^{-2}\) eV/Å. The performance of IPs based on random features is compared against state-of-the-art ML-based IPs such as GAP7 and empirical IPs such as AIREBO34 and EAM35. Apart from providing better energy/force fittings, IPs based on random features reduce the training time by 96% compared to GAP. The main reason for this is the smaller number of parameters needed to fit the energy function of random-features-based IPs. The low-dimensional parameter space also has a direct impact on the computational cost of energy/force evaluations during MD runs, with fewer parameters leading to faster evaluations, as detailed in Supplementary Note 3.
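A back-of-the-envelope comparison illustrates the gap between full kernel training and a random-features fit. It assumes N = \(10^{6}\) training points and 8-byte floating-point storage. Purely for illustration, it also assumes M = \(10^{4}\) random features; this M is not a value reported in this study. Constant factors are ignored.

```latex
\begin{aligned}
\text{full kernel, Gram matrix storage: } & N^{2}\times 8\,\text{B} = (10^{6})^{2}\times 8\,\text{B} = 8\times 10^{12}\,\text{B} \approx 8\,\text{TB},\\
\text{full kernel, training: } & N^{3} = 10^{18}\ \text{operations},\\
\text{random features, } \mathbf{Z}^{T}\mathbf{Z}\ \text{storage: } & M^{2}\times 8\,\text{B} = (10^{4})^{2}\times 8\,\text{B} = 8\times 10^{8}\,\text{B} \approx 0.8\,\text{GB},\\
\text{random features, training: } & M^{2}N = 10^{8}\times 10^{6} = 10^{14}\ \text{operations}.
\end{aligned}
```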

Results

Random feature models

In this and the following sections, an atomic configuration refers to a particular group of atoms in an ordered or disordered state. The total number of atomic configurations in the training set is denoted by L, with the \(l\)th configuration having \(N_l\) atoms, where \(l = 1, 2, \ldots, L\). Each atom is described by rotationally and translationally invariant descriptors. A simple descriptor can be the distance between two atoms or functions of distance, as discussed by Behler11. More complex descriptors, such as the smooth overlap of atomic positions (SOAP)20, are built from a spherical-harmonic expansion of the local atomic neighbour density. The goal is to learn a model for energy and forces given the atomic descriptors.

The local atomic energy, \(E\), of an atom described by the descriptor \(\mathbf{q}\) can be approximated as follows

\[E(\mathbf{q})=\sum_{t=1}^{N} w_{t}\,K(\mathbf{q},\mathbf{q}^{t}), \qquad (1)\]

where \(K(\mathbf{q},\mathbf{q}^{t})\) is a kernel function measuring the similarity between atomic descriptors \(\mathbf{q}\) and \(\mathbf{q}^{t}\). The contribution of each training descriptor \(\mathbf{q}^{t}\) is weighted by a scalar weight \(w_t\), where the index \(t\) runs over all atoms in the training set. This model of energy approximation forms the basis of various ML-based interatomic potentials7. Two types of kernels are commonly used to approximate the atomic energy as per Eq. 1. The first are stationary kernels such as the squared exponential, used mostly for two-body and three-body descriptors. The second are non-stationary kernels such as the polynomial kernel, used mostly for SOAP descriptors. However, as mentioned earlier, the training cost and runtime associated with kernel methods can be exorbitant for large values of N and D. More specifically, the training data used to learn IP models can easily scale to 100,000 atomic configurations, which makes full kernel evaluation infeasible in practice.
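A minimal NumPy sketch of the kernel expansion in Eq. 1 makes the prediction cost explicit. It assumes a squared exponential kernel with a hypothetical length scale `ell`, synthetic descriptors, and already-fitted weights. Each new descriptor is compared with all N stored training descriptors. That is \({\mathcal{O}}(ND)\) work per prediction, and the whole training set must stay in memory.

```python
import numpy as np


def se_kernel(q, Q_train, ell):
    """Squared exponential kernel between one descriptor q (D,) and N training descriptors (N, D)."""
    sq_dist = np.sum((Q_train - q) ** 2, axis=1)  # O(N D) work per prediction
    return np.exp(-0.5 * sq_dist / ell**2)


def kernel_energy(q, Q_train, w, ell=1.0):
    """Local energy E(q) = sum_t w_t K(q, q^t); needs every stored training descriptor."""
    return float(w @ se_kernel(q, Q_train, ell))


# Hypothetical data: N training descriptors of dimension D and fitted weights w.
rng = np.random.default_rng(0)
N, D = 1000, 8
Q_train = rng.normal(size=(N, D))
w = rng.normal(size=N) / N
q_new = rng.normal(size=D)
print(kernel_energy(q_new, Q_train, w))
```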

In order to circumvent the issues associated with full kernel evaluation, we use the idea of random features. Kernel approximations based on random features have been used to solve a range of challenging problems in machine learning36,37,38. However, the use of these models to develop IPs has not been explored yet. In the following sections, we discuss how random features corresponding to stationary and non-stationary kernels can be used to efficiently learn IPs from DFT simulation datasets.

To approximate stationary kernels, we use random Fourier features (RFF). The main idea behind RFF is that stationary kernels can be approximated by a dot product of random features as follows

\[K(\mathbf{q},\mathbf{q}^{t})\approx\mathbf{z}(\mathbf{q})^{T}\mathbf{z}(\mathbf{q}^{t}), \qquad (2)\]

where \(\mathbf{z}(\mathbf{q})\) is a vector of random features associated with the atomic descriptor \({{{\bf{q}}}}\in {{\mathbb{R}}}^{D}\). For stationary kernels such as the squared exponential, these random features are obtained by invoking Bochner's theorem, which states that a continuous stationary kernel is positive definite if and only if it is the Fourier transform of a non-negative measure. Writing the kernel as the Fourier transform \(K({{{\bf{q}}}}-{{{{\bf{q}}}}}^{t})=\int p({{{\boldsymbol{\omega }}}}){{\rm{e}}}^{j{{{{\boldsymbol{\omega }}}}}^{T}({{{\bf{q}}}}-{{{{\bf{q}}}}}^{t})}{\rm{d}}{{{\boldsymbol{\omega }}}}\), and using the ideas presented by Rahimi and Recht39, the kernel can be approximated in the form of Eq. 2, with

and \(\boldsymbol{\omega}_m \sim p(\boldsymbol{\omega})\), \(m = 1, 2, \ldots, M\), are random vectors of length D (for details see algorithm 1). For different kernels, the distribution \(p(\boldsymbol{\omega})\) takes the specific forms detailed by Rahimi and Recht39. Detailed derivations of the above basis functions and generalization error bounds can be found in Rahimi and Recht39,40,41. The local atomic energy can be approximated using the random features as follows

\[E(\mathbf{q})=\sum_{m=1}^{M}\alpha_{m}z_{m}(\mathbf{q}), \qquad (4)\]

where \(\alpha_m\) are the model weights and \(z_m(\mathbf{q})\) denotes the \(m\)th component of the vector \(\mathbf{z}(\mathbf{q})\) defined in Eq. 3. A smooth function that zeroes out the energy contributions of atoms falling outside a cutoff radius can be used with the above energy model. The mathematical form of the cutoff function used in this work is described in the Methods section.
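As a worked check, assume the feature map takes the phase-offset form from Rahimi and Recht, with \(b_m\sim\mathcal{U}[0,2\pi]\). This is one standard choice for Eq. 3. The derivation below uses \(2\cos A\cos B=\cos(A-B)+\cos(A+B)\) and the symmetry of \(p(\boldsymbol{\omega})\). It shows why the dot product in Eq. 2 is then an unbiased estimate of the stationary kernel.

```latex
\begin{aligned}
z_m(\mathbf{q}) &= \sqrt{2/M}\,\cos(\boldsymbol{\omega}_m^{T}\mathbf{q}+b_m),
  \qquad \boldsymbol{\omega}_m\sim p(\boldsymbol{\omega}),\; b_m\sim\mathcal{U}[0,2\pi],\\
\mathbb{E}\big[z_m(\mathbf{q})\,z_m(\mathbf{q}^{t})\big]
  &= \frac{1}{M}\,\mathbb{E}\Big[\cos\big(\boldsymbol{\omega}^{T}(\mathbf{q}-\mathbf{q}^{t})\big)
     + \cos\big(\boldsymbol{\omega}^{T}(\mathbf{q}+\mathbf{q}^{t})+2b\big)\Big]
   = \frac{1}{M}\,K(\mathbf{q}-\mathbf{q}^{t}),\\
\mathbb{E}\big[\mathbf{z}(\mathbf{q})^{T}\mathbf{z}(\mathbf{q}^{t})\big]
  &= \sum_{m=1}^{M}\frac{1}{M}\,K(\mathbf{q}-\mathbf{q}^{t}) = K(\mathbf{q}-\mathbf{q}^{t}).
\end{aligned}
```

The expectation over \(b\) removes the second cosine. The Monte Carlo error of the estimate decays as \(1/\sqrt{M}\), which is why the number of random features controls the accuracy of the approximation.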

For many-body descriptors such as SOAP, a dot product (polynomial) kernel provides a better approximation of energy than a stationary kernel. A dot product kernel is of the form \(K(\mathbf{q},\mathbf{q}^{t})={(\mathbf{q}^{T}\mathbf{q}^{t}+r)}^{n}\), where \(n\in{\mathbb{N}}\) and \(r\in{\mathbb{R}}^{+}\). This kernel can be approximated using a random feature map (RFM) based on results from harmonic analysis42. According to Schoenberg's theorem, a dot product kernel \(K(\mathbf{q},\mathbf{q}^{t})=f(\mathbf{q}^{T}\mathbf{q}^{t})\) is positive definite for inputs of arbitrary dimension if and only if \(f\) is an analytic function whose Maclaurin expansion has only non-negative coefficients42. The \(m\)th component of the random feature map that provides an unbiased estimate of the dot product kernel can be written as

where \(\gamma\) is a randomly chosen scaled Maclaurin coefficient as defined in algorithm 2 and \(\boldsymbol{\omega}_j\in\{-1,1\}^{D}\) is a random vector. To reduce the variance due to random sampling, n copies of \(\boldsymbol{\omega}\) are generated and multiplied together in the preceding equation. Kar and Karnick42 emphasized that the coefficients of the Maclaurin expansion need to be non-negative; otherwise, the resulting kernel is indefinite. Similar to the stationary kernel approximation, the local atomic energy for a non-stationary kernel is modelled by a linear combination of these random features as follows

\[E(\mathbf{q})=\sum_{m=1}^{M}\beta_{m}z_{m}(\mathbf{q}), \qquad (6)\]

where βm are model weights to be estimated from the training data. Algorithms to generate random features for both stationary and non-stationary kernels are detailed in the Methods section.
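The random Maclaurin construction can be sketched in NumPy as follows. It follows Kar and Karnick's scheme with \(p=2\). Each feature draws a random degree \(N_m\) with \(P(N_m=k)=2^{-(k+1)}\) and multiplies \(N_m\) Rademacher projections. The product is then scaled by \(\sqrt{\gamma_m}\), where \(\gamma_m=a_{N_m}2^{N_m+1}\) and \(a_k=\binom{n}{k}r^{n-k}\) are the Maclaurin coefficients of \((t+r)^{n}\).

Two things here are assumptions rather than statements about the authors' algorithm 2: how this maps onto the γ and the n copies of ω above, and the function names `sample_rfm` and `rfm_features`.

```python
import numpy as np
from math import comb


def sample_rfm(D, M, n, r, rng):
    """Sample random Maclaurin features for K(q, q') = (q.q' + r)^n (Kar and Karnick, p = 2)."""
    # Random degree per feature with P(N_m = k) = 2^-(k+1); numpy's geometric starts at 1.
    N = rng.geometric(0.5, size=M) - 1
    # Maclaurin coefficients of (t + r)^n: a_k = C(n, k) r^(n-k) for k <= n, zero above n.
    a = np.array([comb(n, int(k)) * r ** (n - int(k)) if k <= n else 0.0 for k in N])
    gamma = a * 2.0 ** (N + 1)                       # scaled coefficient gamma_m
    omega = rng.choice([-1.0, 1.0], size=(M, n, D))  # Rademacher vectors in {-1, 1}^D
    # Feature m uses only its first min(N_m, n) Rademacher vectors.
    mask = np.arange(n)[None, :] < np.minimum(N, n)[:, None]
    return np.sqrt(gamma), omega, mask


def rfm_features(Q, scale, omega, mask):
    """z_m(q) = sqrt(gamma_m / M) * prod_j (omega_mj . q) for each row q of Q (B, D) -> (B, M)."""
    M = scale.shape[0]
    proj = np.einsum("mjd,bd->bmj", omega, Q)     # (B, M, n) Rademacher projections
    proj = np.where(mask[None, :, :], proj, 1.0)  # unused factors contribute 1
    return scale * np.prod(proj, axis=2) / np.sqrt(M)


rng = np.random.default_rng(0)
x = np.array([0.3, -0.2, 0.4])
y = np.array([0.1, 0.5, -0.2])
scale, omega, mask = sample_rfm(D=3, M=200_000, n=2, r=1.0, rng=rng)
zx, zy = rfm_features(np.stack([x, y]), scale, omega, mask)
print(zx @ zy, (x @ y + 1.0) ** 2)  # Monte Carlo estimate vs exact kernel
```

The example uses n = 2 rather than the order-4 kernel of this study only to keep the Monte Carlo check cheap. Higher orders generally need more features for the same accuracy.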

Parameter estimation

The energy of an atom can be modelled using random feature models as per Eqs. 4 and 6. The weight vectors, α and β, are estimated by minimizing the regularized least-squares loss function

where \(E_{i}^{l}\) denotes the predicted local energy of the \(i\)th atom in the \(l\)th atomic configuration and \(F_{ijk}^{l}\) denotes the predicted force in direction \(k\) for the atom pair \((i, j)\) in atomic configuration \(l\). The local energy is modelled using Eq. 4 and/or Eq. 6, while the predicted forces are obtained by differentiating the energy models as outlined in the Methods section. Quantities calculated using DFT (available in the training dataset) are marked by an asterisk: \(E^{l,*}\) denotes the DFT energy of configuration \(l\) and \(F_{ijk}^{l,*}\) denotes the DFT force in direction \(k\) for atom pair \((i, j)\). \(\lambda\) denotes the regularization parameter, which can be estimated by cross-validation. Since this loss function is quadratic in \(\boldsymbol{\alpha}\) and \(\boldsymbol{\beta}\), the weights can be estimated by solving a system of linear algebraic equations. The complexity of weight estimation through this method is \({\mathcal{O}}(M^{2}N)\), which is significantly lower than that of traditional full kernel methods (\({\mathcal{O}}(N^{3})\)). Similarly, the computational cost of prediction at a test point is \({\mathcal{O}}(M)\), compared to \({\mathcal{O}}(ND)\) for classical kernel methods. Here, N denotes the training set size, D the descriptor dimension, and M the total number of random features.
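With energies only, the regularized least-squares problem reduces to ridge regression. For brevity, the force terms of the loss are omitted and a single weight vector α stands in for both α and β. Since \(E^{l}=\sum_{i}\boldsymbol{\alpha}^{T}\mathbf{z}(\mathbf{q}_{i}^{l})\), each configuration contributes one row \(\boldsymbol{\phi}_l=\sum_i \mathbf{z}(\mathbf{q}_i^l)\). The weights then solve \((\boldsymbol{\Phi}^{T}\boldsymbol{\Phi}+\lambda\mathbf{I})\boldsymbol{\alpha}=\boldsymbol{\Phi}^{T}\mathbf{E}^{*}\).

The NumPy sketch below is a hypothetical illustration on synthetic features, not the released code. Force rows, obtained by differentiating the features, would be appended to \(\boldsymbol{\Phi}\) in the same way.

```python
import numpy as np


def fit_energy_weights(atom_features, energies, lam=1e-6):
    """Ridge estimate of random-feature weights from total energies only.

    atom_features: list of L arrays; entry l has shape (N_l, M) and holds z(q_i^l) for each atom.
    energies:      length-L sequence of DFT total energies E^{l,*}.
    """
    # E^l = sum_i alpha . z(q_i^l) = alpha . phi_l, with phi_l the summed feature vector.
    Phi = np.stack([Z.sum(axis=0) for Z in atom_features])  # (L, M)
    M = Phi.shape[1]
    A = Phi.T @ Phi + lam * np.eye(M)  # normal equations: O(M^2 L) to assemble
    rhs = Phi.T @ np.asarray(energies, dtype=float)
    return np.linalg.solve(A, rhs)     # O(M^3) to solve, independent of L


# Synthetic check: configurations with 2-5 atoms and known weights.
rng = np.random.default_rng(1)
M = 10
feats = [rng.normal(size=(int(rng.integers(2, 6)), M)) for _ in range(100)]
alpha_true = rng.normal(size=M)
E_star = np.array([Z.sum(axis=0) @ alpha_true for Z in feats])
alpha_hat = fit_energy_weights(feats, E_star, lam=1e-10)
print(np.max(np.abs(alpha_hat - alpha_true)))
```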

In the present study, we approximate the squared exponential kernel using RFFs. From the Fourier transform of this kernel, the corresponding probability distribution for \(\boldsymbol{\omega}\) is \(\boldsymbol{\omega}\sim{\mathcal{N}}(\mathbf{0},\sigma^{-1}\mathbf{I})\), where \(\sigma\) denotes the shape parameter of the kernel39 and \(\mathbf{I}\) denotes the D × D identity matrix. Random samples of \(\boldsymbol{\omega}\) drawn from this distribution are used to obtain \(\mathbf{z}(\mathbf{q})\) as defined in Eq. 3. To make this approach deterministic, \(\boldsymbol{\omega}\) can be generated from the above distribution using quasi-random sequences43. For dot product kernels of order 4, random features are obtained using the RFM described above.
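The sampling step described above can be sketched in NumPy as follows. This is an illustration under assumptions, not the released implementation. It uses the phase-offset feature map \(z_m(\mathbf{q})=\sqrt{2/M}\cos(\boldsymbol{\omega}_m^{T}\mathbf{q}+b_m)\), one standard choice from Rahimi and Recht. It draws \(\boldsymbol{\omega}\sim{\mathcal{N}}(\mathbf{0},\sigma^{-1}\mathbf{I})\), which corresponds to the kernel \(\exp(-\lVert\mathbf{q}-\mathbf{q}^{t}\rVert^{2}/(2\sigma))\). Pseudo-random draws are used here; a quasi-random generator could replace `rng.normal`. Prediction needs only the M sampled frequencies and phases, not the training set.

```python
import numpy as np


def sample_rff(D, M, sigma, rng):
    """Draw frequencies omega_m ~ N(0, sigma^{-1} I) and phases b_m ~ U[0, 2 pi]."""
    omega = rng.normal(scale=1.0 / np.sqrt(sigma), size=(M, D))
    b = rng.uniform(0.0, 2.0 * np.pi, size=M)
    return omega, b


def rff_features(Q, omega, b):
    """z(q) = sqrt(2/M) cos(omega q + b) for each row q of Q (n, D) -> (n, M)."""
    M = omega.shape[0]
    return np.sqrt(2.0 / M) * np.cos(Q @ omega.T + b)


def se_kernel(x, y, sigma):
    """Exact squared exponential kernel matching the sampling distribution above."""
    return np.exp(-np.sum((x - y) ** 2) / (2.0 * sigma))


rng = np.random.default_rng(0)
D, M, sigma = 5, 20_000, 2.0
omega, b = sample_rff(D, M, sigma, rng)
x, y = rng.normal(size=D), rng.normal(size=D)
zx, zy = rff_features(np.stack([x, y]), omega, b)
print(zx @ zy, se_kernel(x, y, sigma))  # random-feature estimate vs exact kernel
```

With M = 20,000 features the two printed values typically agree to within a few hundredths. The local energy of Eq. 4 is then simply `alpha @ z` for a fitted weight vector `alpha`.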

Data

We tested IPs based on random features on three classes of materials, namely metals, semiconductors, and 2D materials. Datasets for tungsten, diamond and graphene were obtained from libatoms.org, as accessed on March 3, 2019. The data consist of energies and forces for a range of configurations computed with first-principles methods, details of which can be found in refs. 7,28,31. For graphene, we used the same training and validation datasets as Rowe et al.28. For tungsten, we used GAP5 as the training set and GAP6 as the test set31; the atomic configurations included in GAP5 and GAP6 are described in ref. 31. The diamond data were randomly partitioned into training and test sets. For diamond and tungsten, we used SOAP descriptors, and thus only the RFM model was applied to these datasets. To isolate the performance of the RFF model, we employed two-body and three-body descriptors for the graphene dataset only. The descriptor parameters are kept the same as in refs. 7,28,31.

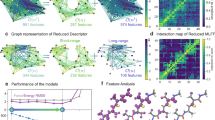

Figure 1 shows the predicted forces versus the DFT-computed forces for graphene, diamond, and tungsten. As can be seen from Fig. 1, the predicted forces closely agree with the DFT-computed values. The R-squared value for these plots exceeds 0.99, indicating an excellent fit between predicted and true forces. The MAEs of energy and force predictions for these three classes of materials are given in the insets of Fig. 1. Energy and force fittings exhibit low MAE, with energy fittings approaching the chemical accuracy of 1 kcal/mol (0.043 eV).

Fig. 1: Forces predicted through random features versus the DFT-computed values. Data were obtained from libatoms.org. The mean absolute errors in energy (Etest) and force (Ftest) prediction for the corresponding test sets are shown in the insets (a, c, e). Histograms of the force prediction errors are provided for graphene (b), diamond (d), and tungsten (f).

Graphene potential

The performance of IPs based on random features was also compared against existing classical IPs. On the graphene dataset, Rowe et al.28 compared the GAP IP with classical IPs as well as other DFT methods. We computed the same error metric for RFF, i.e., the root mean squared error of in-plane and out-of-plane forces, and found these values to be 0.070 and 0.044 eV/Å, respectively. Though these errors are slightly higher than those of GAP, they are significantly lower than those of the other IPs28.

Apart from the excellent energy/force fittings, RFFs offer a computational advantage, discussed here. Table 1 compares the MAE in energy and forces predicted by the GAP and RFF potentials for two-body (GAP(2B), RFF(2B)) and three-body (GAP(3B), RFF(3B)) descriptors. Table 1 shows that the RFF model provides a much better energy fitting than GAP while maintaining comparable force-fitting accuracy. In particular, the MAE of energy fittings with three-body descriptors is two orders of magnitude lower than that of GAP(3B), while both IPs exhibit similar performance for force fittings. The last column of Table 1 shows the time taken to train each potential on the same dataset. RFF-based IPs not only provide better energy and force fittings but also outperform GAP in terms of training time. The main drawback of the RFF potential is that the number of parameters required for energy/force fittings is higher than for the corresponding GAP potentials, which results in slower prediction of energy/forces during MD runs.

To reduce the number of parameters, we constrained the samples of \(\boldsymbol{\omega}\) to be mutually orthogonal. The importance of orthogonal random numbers has been discussed in previous works, and corresponding performance bounds can be found in refs. 44,45,46. We refer to the RFF model with orthogonal random numbers as O-RFF; its performance metrics are detailed in Table 1. With orthogonal random numbers, the number of parameters was reduced by one order of magnitude while the accuracy of energy/force predictions was maintained. Furthermore, training time is reduced by approximately 96% compared to RFF, leading to significant computational savings. Supplementary Note 2 details the convergence of energy and force errors as the number of random features is increased in O-RFF-based IPs. For both the two-body and three-body descriptors, the error drops at a much faster rate and plateaus earlier when orthogonal random numbers are used.
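The text does not specify how orthogonality is imposed. The sketch below assumes one standard construction, the orthogonal random features of Yu et al.; this is not necessarily the scheme used in this study. Blocks of D mutually orthogonal frequency vectors come from the QR decomposition of a Gaussian matrix. Each row is then rescaled by a chi-distributed norm, so it keeps the marginal distribution of a plain RFF frequency. The function name is hypothetical.

```python
import numpy as np


def orthogonal_frequencies(D, M, sigma, rng):
    """Draw M frequency vectors in blocks of D mutually orthogonal rows (orthogonal random features)."""
    blocks = []
    for _ in range(int(np.ceil(M / D))):
        Qmat, R = np.linalg.qr(rng.normal(size=(D, D)))
        Qmat = Qmat * np.sign(np.diag(R))  # sign fix so Qmat is uniformly (Haar) distributed
        # Row norms of a Gaussian matrix are chi(D) samples; rescaling keeps each row marginally N(0, I).
        norms = np.linalg.norm(rng.normal(size=(D, D)), axis=1)
        blocks.append(norms[:, None] * Qmat.T)
    W = np.vstack(blocks)[:M]
    return W / np.sqrt(sigma)  # covariance sigma^{-1} I, matching the plain RFF sampler


rng = np.random.default_rng(0)
W = orthogonal_frequencies(D=4, M=10, sigma=2.0, rng=rng)
G = W[:4] @ W[:4].T  # Gram matrix of the first block: diagonal up to round-off
print(W.shape, np.round(G, 6))
```

The orthogonal frequencies can be used in place of the Gaussian draws in the RFF feature map without any other change.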

To test the RFF-based IP, an extensive set of structural, thermal, and elastic MD simulations was performed on monolayer graphene using the Atomic Simulation Environment (ASE) library47. Predictions made with the RFF-based IP were compared against those obtained from classical and GAP potentials. Calculations involving GAP and classical potentials were performed using the QUIP framework within the ASE library47. The ability to reproduce DFT and experimental values is also assessed, and the comparison with RFF-based predictions is presented in Table 3.

The first set of MD simulations that we analyzed concerns structural properties such as cohesive energy and lattice constant. The in-plane lattice parameter was obtained by fitting the Birch-Murnaghan equation of state (EOS)48 to energy-volume data, and the corresponding minimum-energy configuration provided the cohesive energy of the graphene sheet. Table 3 shows that the RFF prediction of cohesive energy is close to that of GAP and lies within 1% of the DFT prediction, whereas the AIREBO prediction deviates by 4% from the DFT value. It is worth noting that both GAP and RFF over-predict the cohesive energy compared to the experimental value of −7.73 eV.

We also consider the elastic properties of the monolayer graphene sheet. The elastic constants C11 and C12 were computed by fitting the elastic tensor to stress-strain data. Independent normal and shear strained configurations were generated, and the corresponding stress tensors were computed after force relaxation. For stress calculations, we took the thickness of the graphene sheet to be 3.34 Å. The simulations reveal that C11 predicted by RFF is within 0.2% of the GAP prediction. The value of C12 obtained from RFF is 221.93 GPa, a deviation of 12% from the GAP prediction. Compared to the experimental value, the RFF-computed C11 is underestimated by 8%. This deviation can be addressed by including more strained configurations during training.

Table 3 also shows the properties predicted using the O-RFF potential. The O-RFF values of lattice constant and cohesive energy are in close agreement with those of the full RFF model, while C11 and C12 differ from the RFF values by 2% and 3.2%, respectively.

Another important quantity of interest is the phonon dispersion curve of graphene. To model thermal transport properties such as the thermal expansion coefficient and specific heat, it is critical to determine phonon frequencies accurately. Previous studies have shown that classical IPs fail to reproduce experimental or DFT phonon dispersion curves. Among ML-based IPs, GAP has been shown to accurately reproduce experimentally obtained phonon frequencies28. In the present study, we compared the phonon frequencies obtained from DFT with those obtained using the GAP, RFF, and O-RFF potentials. Phonon dispersion curves and frequencies were obtained using the small displacement method as implemented in the ASE library47. The DFT calculations were performed through ASE with VASP as the underlying calculator.

From Fig. 2c, it is evident that the RFF predictions closely match the DFT phonon dispersion curves. We also compared the phonon frequencies obtained from RFF and GAP. The absolute error in frequencies predicted by both potentials is less than 7 meV, confirming that the RFF-based IP can accurately reproduce the phonon dispersion curve within experimental tolerance. Figure 2e compares the phonon dispersion curves obtained from O-RFF and DFT, and the absolute error in frequencies is shown in Fig. 2f. The O-RFF predictions also closely match the DFT phonon dispersion curve, with an accuracy of 6 meV.
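The small displacement method can be illustrated on a toy model. The sketch assumes a periodic one-dimensional chain with nearest-neighbour springs, which is a hypothetical stand-in for an IP force call; it does not use ASE's implementation. Atom 0 is displaced by ±δ, and force constants follow from central differences of the resulting forces. The 1 × 1 dynamical matrix at each wavevector k then gives the frequency. For this chain, the result can be checked against the analytic dispersion \(\omega(k)=2\sqrt{K/m}\,|\sin(ka/2)|\).

```python
import numpy as np


def chain_forces(u, K):
    """Harmonic nearest-neighbour forces for a periodic 1D chain with displacements u."""
    return K * (np.roll(u, -1) - 2.0 * u + np.roll(u, 1))


def phonon_frequencies(k_points, n_cells=8, K=1.0, m=1.0, a=1.0, delta=1e-3):
    """Small-displacement method: finite-difference force constants -> omega(k)."""
    # Displace atom 0 by +delta and -delta; central difference gives Phi_0j = -dF_j/du_0.
    u = np.zeros(n_cells)
    u[0] = delta
    f_plus = chain_forces(u, K)
    u[0] = -delta
    f_minus = chain_forces(u, K)
    phi = -(f_plus - f_minus) / (2.0 * delta)
    # Minimum-image positions of atom j relative to atom 0 in the periodic supercell.
    j = np.arange(n_cells)
    x = a * (j - n_cells * (j > n_cells // 2))
    # Dynamical matrix (1x1 here): D(k) = (1/m) sum_j Phi_0j exp(i k x_j).
    dyn = (phi[None, :] * np.exp(1j * np.outer(k_points, x))).sum(axis=1).real / m
    return np.sqrt(np.clip(dyn, 0.0, None))  # clip tiny negative round-off at k = 0


k = np.linspace(-np.pi, np.pi, 9)
print(np.round(phonon_frequencies(k), 6))
```

In a real calculation, `chain_forces` would be replaced by force calls to the IP on a supercell, and the dynamical matrix would be 3n × 3n for n atoms per cell.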

Fig. 2: Phonon dispersion curves obtained by DFT (solid lines) and by GAP (a), RFF (c), and O-RFF (e). The absolute error in frequency prediction between DFT and the respective potentials is shown in (b), (d), and (f). The maximum absolute error in frequency prediction is 7 meV for RFF and 6 meV for O-RFF.

Diamond and tungsten potentials

For the diamond and tungsten data, Fig. 3 shows the dependence of the MAE in (a) energy and (b) forces on the number of random features. For both material systems, the MAE in energy and force fittings clearly decreases. Performance is compared against the GAP IP, where dashed lines show the corresponding MAE in energy and forces. As the number of random features is increased, the errors in force fittings (Fig. 3b) for both diamond and tungsten fall below the GAP benchmarks. The MAE in energy fittings for both materials also decreases as the number of random features increases, but at a different rate from the error in force fittings. Supplementary Note 1 details the variation of energy and force errors as the training sample size is increased; both errors converge with increasing training sample size.

The accuracy of force fittings for RFM potentials is also compared to that of classical IPs. We considered the diamond data and obtained forces using the AIREBO34, AIREBO-M49, and Tersoff50 potentials. The force predictions of each potential are compared against DFT, and the MAE is presented in Table 2. RFM has the lowest MAE for both the training and test sets.

To validate the proposed RFM potential, we performed a series of MD simulations and compared the results to DFT and experimental values. To perform MD simulations, we implemented RFM potentials using the open-source ASE library47. We start the performance analysis of the RFM potential by assessing the structural properties of diamond and tungsten. From the energy-volume curves, the ground state energy and the corresponding lattice constant are obtained for each material system. Figure 4 shows energy-volume curves for diamond and BCC tungsten using both RFM and GAP7,31 potentials; RFM matches the GAP-predicted energies with high accuracy for both material systems. Lattice constants are listed in Table 3 and compared to DFT and experimental values. The error in lattice constant prediction relative to DFT is within 0.01% for both material systems. We also obtained the bulk modulus by fitting the Birch-Murnaghan equation to the energy-volume data; for both material systems the values lie close to the DFT values.
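For the bulk modulus fit, the third-order Birch-Murnaghan energy is a cubic polynomial in \(x=V^{-2/3}\), so the fit can be done by linear least squares. In the minimal NumPy sketch below, the function names are hypothetical and the data are synthetic rather than RFM energies. The equilibrium volume comes from the root of \(dE/dx\) inside the sampled range, and \(B_0=V_0\,d^{2}E/dV^{2}\) is evaluated at \(V_0\).

```python
import numpy as np


def birch_murnaghan(V, E0, V0, B0, B0p):
    """Third-order Birch-Murnaghan energy E(V)."""
    eta2 = (V0 / V) ** (2.0 / 3.0)
    return E0 + 9.0 * V0 * B0 / 16.0 * ((eta2 - 1.0) ** 3 * B0p + (eta2 - 1.0) ** 2 * (6.0 - 4.0 * eta2))


def fit_birch_murnaghan(V, E):
    """Fit E(V) as a cubic in x = V^(-2/3); return (E0, V0, B0)."""
    x = V ** (-2.0 / 3.0)
    p = np.polynomial.Polynomial.fit(x, E, 3).convert()  # power-basis coefficients in x
    dp, d2p = p.deriv(1), p.deriv(2)
    # Equilibrium: dE/dV = p'(x) dx/dV = 0, so take the real root of p'(x) in the sampled range.
    roots = dp.roots()
    roots = roots[np.isreal(roots)].real
    x0 = roots[(roots >= x.min()) & (roots <= x.max())][0]
    V0 = x0 ** (-1.5)
    # Since p'(x0) = 0, d2E/dV2 = p''(x0) (dx/dV)^2 at the minimum.
    dxdV = -(2.0 / 3.0) * V0 ** (-5.0 / 3.0)
    return p(x0), V0, V0 * d2p(x0) * dxdV**2


V = np.linspace(9.0, 11.5, 15)             # synthetic volumes (Å^3 per atom)
E = birch_murnaghan(V, -7.9, 10.0, 0.6, 4.5)
E0_fit, V0_fit, B0_fit = fit_birch_murnaghan(V, E)
print(E0_fit, V0_fit, B0_fit * 160.2177)   # B0 converted from eV/Å^3 to GPa
```

With energies in eV and volumes in Å³, \(B_0\) comes out in eV/Å³, and 1 eV/Å³ ≈ 160.2 GPa.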

Using linear elasticity theory, the elastic constants C11, C12, and C44 were computed for both diamond and tungsten. Pristine atomic configurations were strained by up to 1%, and the corresponding stresses were computed. The results for RFM and GAP are given in Table 3 and compared to the corresponding DFT and experimental results. For tungsten, the values of C11 and C44 are within 4% of the DFT values. C12 is slightly overpredicted: the RFM value is 225.07 GPa, while the corresponding DFT value is 203 GPa. For diamond, the elastic constants were within 3% of the DFT values, except for C44. We also computed mechanical properties such as Young's modulus and Poisson's ratio and found them to be in excellent agreement with the DFT results (see Table 3). As a further validation, we simulated defects such as vacancies in tungsten; Table 3 shows that RFM-based potentials can model vacancy formation with accuracy comparable to DFT.
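For a cubic crystal, the strain-stress procedure reduces to three linear fits. A uniaxial strain \(\varepsilon_{xx}\) gives C11 and C12 from \(\sigma_{xx}\) and \(\sigma_{yy}\). An engineering shear strain \(\gamma_{yz}\) gives C44 from \(\sigma_{yz}\).

In the sketch below, the stress function is a hypothetical linear-elastic stand-in for relaxed stress calculations with the potential, and the stiffness values are illustrative only. The [100] Young's modulus and Poisson's ratio use the standard single-crystal cubic expressions. These may differ from the definitions behind the values in Table 3.

```python
import numpy as np


def cubic_stiffness(C11, C12, C44):
    """6x6 Voigt stiffness matrix of a cubic crystal."""
    C = np.zeros((6, 6))
    C[:3, :3] = C12
    np.fill_diagonal(C[:3, :3], C11)
    C[3:, 3:] = C44 * np.eye(3)
    return C


def fit_cubic_constants(stress_fn, max_strain=0.01, n=5):
    """Estimate C11, C12, C44 by linear fits of stress against small applied strains.

    stress_fn maps a Voigt strain vector (engineering shear strains) to a Voigt stress vector.
    """
    strains = np.linspace(-max_strain, max_strain, n)
    normal = np.array([stress_fn(np.array([e, 0, 0, 0, 0, 0])) for e in strains])
    shear = np.array([stress_fn(np.array([0, 0, 0, g, 0, 0])) for g in strains])
    C11 = np.polyfit(strains, normal[:, 0], 1)[0]  # d(sigma_xx)/d(eps_xx)
    C12 = np.polyfit(strains, normal[:, 1], 1)[0]  # d(sigma_yy)/d(eps_xx)
    C44 = np.polyfit(strains, shear[:, 3], 1)[0]   # d(sigma_yz)/d(gamma_yz)
    return C11, C12, C44


def youngs_poisson_100(C11, C12):
    """Young's modulus and Poisson's ratio along [100] of a cubic crystal."""
    return (C11 - C12) * (C11 + 2 * C12) / (C11 + C12), C12 / (C11 + C12)


# Hypothetical stand-in for relaxed stress calculations: exact linear elasticity (GPa).
C_true = cubic_stiffness(500.0, 200.0, 150.0)
C11, C12, C44 = fit_cubic_constants(lambda eps: C_true @ eps)
print(C11, C12, C44, youngs_poisson_100(C11, C12))
```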

Discussion

The present study provides a computationally efficient approach to model interatomic interactions. The generalized linear models presented in this study provide energy and force predictions with DFT-level accuracy (force MAE below 0.03 eV/Å relative to DFT). By providing potentials for three different classes of materials, we have shown that the same functional form, as presented in Eqs. 4 and 6, can be used to model a wide range of material classes. MD simulations performed with this approach gave results within 7% of the experimental values or DFT predictions.

In certain applications, material behaviour is accurately represented by a combination of two-body, three-body, and many-body descriptors. In those cases, a linear combination of RFF and RFM can easily be applied. We also note that the approach is not restricted to the descriptors discussed in this study: the algorithms used to generate random features (RFF/RFM) can equally be applied to Behler's symmetry functions19, bispectrum coefficients12, or the descriptors used in the AGNI framework15.

The major advantage of RFF/RFM-based IPs is their low memory requirement compared to standard kernel methods such as GAP and AGNI. Prediction with RFF/RFM potentials does not require storing the training set and costs \({\mathcal{O}}(M)\), where M is the number of random features. This low memory requirement makes RFF/RFM-based IPs more scalable than standard kernel methods, and the advantage is achieved without sacrificing prediction accuracy, as the MD simulation results show.

The potential's functional form can be easily extended to model complex interactions involving different material classes. Such interactions are hard to capture with classical IPs, as these are tuned to very specific chemical compositions, bonding types, and surface geometries. On the other hand, ML-based potentials can face challenges with descriptor and parameter identification for large datasets involving different elements. In light of these issues, RFF/RFM-based potentials are a worthy candidate for modelling such complex interactions accurately and efficiently. Not only have these potentials been shown to provide forces with DFT-level accuracy, but their simple generalized linear form also enables faster training and prediction of energy/forces than standard kernel methods. To test this hypothesis, we developed O-RFF potentials for two material systems: (a) Li-Si-P-S51 and (b) Li-P-S-Sn51. The data for both material systems were obtained from the DeepMD library (http://dplibrary.deepmd.net/). For both systems, the forces agree well with DFT calculations, with an MAE between DFT and O-RFF forces of around 0.08 eV/Å. Detailed results are presented in Supplementary Note 4.

One major issue that we encountered was the slow run time of RFF-based IPs compared to classical IPs. The main reason is the expensive evaluation of cos and sin functions at every time step. We tackled this issue by introducing potentials based on orthogonal random numbers, which reduced the number of required random features by more than half. For different graphene sheets, Supplementary Fig. 3 (see Supplementary Note 3) shows the average time taken by 10 steps of an NVT ensemble for O-RFF, RFF, and GAP28 IPs. The run time of O-RFF is considerably lower than that of both the GAP and RFF IPs, but remains two orders of magnitude higher than that of classical IPs such as AIREBO34. Other ways to reduce the run time would be to include a sparsity-inducing regularizer in the objective function or to use Kronecker matrix algebra52. This is the focus of an ongoing study.

ML-based IPs can outperform classical empirical IPs by using a generic functional form with DFT-level accuracy for energy/force prediction. However, the main disadvantage of ML-based IPs is the computational time needed to train the model and to predict energy/forces during MD runs. Kernel-based methods are also memory intensive, as they must store the training configurations for prediction. The present study addresses these limitations by providing a linear-model-based IP using random features for stationary and non-stationary kernels. Energy and force fittings are comparable to DFT predictions. The low-dimensional parameter space and linear functional form make these models computationally much faster than DFT. The time to learn an IP is reduced by 96% compared to GAP, allowing faster hyper-parameter search and optimization.

Methods

Algorithms to generate random features

The algorithms used to generate random features for both the models are presented below

Cutoff radius

The cutoff function, denoted by fcut(rij), is a smooth function that zeroes out the energy contributions for atoms falling outside a cutoff radius and is as described below

where \(r_{ij}\) denotes the distance between atoms \(i\) and \(j\), and \(r_{\mathrm{cut}}\) is the cutoff radius. The smoothness of the cutoff can be tuned through the parameter \(w_{\mathrm{cut}}\). For three-body descriptors such as \([{r}_{ij}+{r}_{ik},{({r}_{ij}-{r}_{ik})}^{2},{r}_{jk}]\), the cutoff function takes the form \(f_{\mathrm{cut}}(r_{ij})f_{\mathrm{cut}}(r_{ik})\).
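The sketch below assumes one common smooth form for \(f_{\mathrm{cut}}\), similar to the cosine taper used in GAP-style potentials; it may not match the equation used in this work. Under this assumption, \(w_{\mathrm{cut}}\) is the width of the transition region. The function equals 1 for \(r_{ij}\le r_{\mathrm{cut}}-w_{\mathrm{cut}}\) and \(\tfrac{1}{2}[\cos(\pi(r_{ij}-r_{\mathrm{cut}}+w_{\mathrm{cut}})/w_{\mathrm{cut}})+1]\) in the transition region. It is 0 beyond \(r_{\mathrm{cut}}\). The three-body weight is the product stated above.

```python
import numpy as np


def f_cut(r, r_cut, w_cut):
    """Assumed smooth cutoff: 1 inside r_cut - w_cut, cosine taper to 0 at r_cut, 0 beyond."""
    r = np.asarray(r, dtype=float)
    taper = 0.5 * (np.cos(np.pi * (r - r_cut + w_cut) / w_cut) + 1.0)
    return np.where(r <= r_cut - w_cut, 1.0, np.where(r <= r_cut, taper, 0.0))


def f_cut_three_body(r_ij, r_ik, r_cut, w_cut):
    """Three-body weight f_cut(r_ij) * f_cut(r_ik)."""
    return f_cut(r_ij, r_cut, w_cut) * f_cut(r_ik, r_cut, w_cut)


# Hypothetical cutoff radius of 5 Å with a 1 Å transition region.
print(f_cut([3.0, 4.0, 4.5, 5.0, 6.0], r_cut=5.0, w_cut=1.0))
```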

Expression for forces for RFF and RFM models

This section outlines the steps involved in computing forces from the potential energy expressions. The forces are the negative gradient of the energy, and the corresponding expressions were obtained through the chain rule. The derivative of the energy contribution of the RFF features (see Eq. 4) with respect to the descriptor \(\mathbf{q}\) can be written as

where

It can be easily verified that for descriptor q with dimension D

where

and

Here ωm(i) denotes the ith component of the vector ωm. Following the same line of reasoning, the gradient of the RFM features (Eq. 6 in the main text) can be written as

The final step in obtaining the forces is to multiply ∇zm(q) by the derivative (Jacobian) of the descriptor q with respect to the atomic position vector r. This Jacobian depends on the choice of descriptor and must be derived separately for each one.
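The following is a sketch of this chain rule. It assumes RFF features of the form z(q) = M^(-1/2)[cos(Ωq), sin(Ωq)], whose normalization may differ from Eq. 4. For these features, ∇cos(ωm·q) = −sin(ωm·q) ωm and ∇sin(ωm·q) = cos(ωm·q) ωm. For illustration, the descriptor is the single pair distance q = [r_ij], whose gradient with respect to r_i is (r_i − r_j)/r_ij. The weights are random placeholders, not fitted values, and all function names are hypothetical.

```python
import numpy as np


def rff_features(q, omegas):
    """z(q) = sqrt(1/M) [cos(omegas @ q), sin(omegas @ q)], with M = len(omegas)."""
    proj = omegas @ q
    return np.concatenate([np.cos(proj), np.sin(proj)]) / np.sqrt(len(omegas))


def rff_jacobian(q, omegas):
    """Row m is grad_q of feature m; shape (2M, D)."""
    proj = omegas @ q
    d_cos = -np.sin(proj)[:, None] * omegas  # d cos(w.q)/dq = -sin(w.q) w
    d_sin = np.cos(proj)[:, None] * omegas   # d sin(w.q)/dq =  cos(w.q) w
    return np.vstack([d_cos, d_sin]) / np.sqrt(len(omegas))


def pair_energy_and_forces(r_i, r_j, weights, omegas):
    """E = weights @ z(q) with the 1-D descriptor q = [r_ij].

    Chain rule: F_i = -(dE/dq) (dq/dr_i), and F_j = -F_i by symmetry.
    """
    d = r_i - r_j
    r_ij = np.linalg.norm(d)
    q = np.array([r_ij])
    energy = weights @ rff_features(q, omegas)
    de_dq = weights @ rff_jacobian(q, omegas)  # shape (1,)
    f_i = -de_dq[0] * (d / r_ij)               # dq/dr_i = (r_i - r_j) / r_ij
    return energy, f_i, -f_i


rng = np.random.default_rng(2)
omegas = rng.normal(scale=2.0, size=(40, 1))  # 1-D descriptor, bandwidth 0.5
weights = rng.normal(size=80)                 # placeholder, not fitted weights
r_i, r_j = np.zeros(3), np.array([1.1, 0.4, -0.3])
energy, f_i, f_j = pair_energy_and_forces(r_i, r_j, weights, omegas)
print(energy, f_i, f_j)
```

Only the last two lines of `pair_energy_and_forces` depend on the descriptor. For the three-body descriptor, `d / r_ij` would be replaced by that descriptor's Jacobian with respect to each atom's position.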

Phonon dispersion DFT calculation

Plane-wave DFT calculations were performed with the Vienna Ab initio Simulation Package (VASP)53,54 to obtain energies and forces. Exchange and correlation were treated within the generalized gradient approximation (GGA) in the Perdew-Burke-Ernzerhof (PBE) formulation, and the interaction between the valence electrons and the ionic cores was described by projector augmented-wave (PAW) pseudopotentials55,56,57. A 7 × 7 × 1 supercell of graphene was constructed for the calculations, and Brillouin-zone sampling used a 4 × 4 × 1 k-point mesh generated with the Monkhorst-Pack scheme58. An additional support grid was used to evaluate the augmentation charges, and non-spherical contributions related to the density gradient inside the PAW spheres were included.
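As an illustration of how the settings above map onto VASP input, the sketch below writes the corresponding INCAR tags: GGA = PE for PBE, ADDGRID for the support grid, and LASPH for the non-spherical gradient contributions. It also writes a 4 × 4 × 1 Monkhorst-Pack KPOINTS file. PAW potentials are selected through the POTCAR file rather than the INCAR. The unshifted mesh is an assumption. Settings not stated in the text are deliberately omitted, including the plane-wave cutoff, the convergence criteria, and the 7 × 7 × 1 supercell geometry in POSCAR. The helper names are hypothetical.

```python
def incar_text():
    """INCAR tags matching the settings described above (all other tags omitted)."""
    tags = {
        "GGA": "PE",          # PBE exchange-correlation functional
        "ADDGRID": ".TRUE.",  # additional support grid for augmentation charges
        "LASPH": ".TRUE.",    # non-spherical gradient contributions in PAW spheres
    }
    return "".join(f"{key} = {value}\n" for key, value in tags.items())


def kpoints_text(n1, n2, n3):
    """Automatic Monkhorst-Pack mesh in VASP KPOINTS format (unshifted, by assumption)."""
    return f"Automatic mesh\n0\nMonkhorst-Pack\n{n1} {n2} {n3}\n0 0 0\n"


print(incar_text())
print(kpoints_text(4, 4, 1))
```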

Data availability

The data used to train the interatomic potentials were obtained from libatoms.org (accessed March 3, 2019).

Code availability

A software library for reproducing the results presented here is available at https://github.com/g7dhaliwal/RandomFeatures.

References

Allen, M. P. & Tildesley, D. J. Computer Simulation of Liquids (Oxford University Press, 2017).

Griebel, M., Knapek, S. & Zumbusch, G. Numerical Simulation in Molecular Dynamics Vol. 5 (Society for Industrial and Applied Mathematics, 2007).

Becker, C. A., Tavazza, F. & Levine, L. E. Implications of the choice of interatomic potential on calculated planar faults and surface properties in nickel. Philos. Mag. 91, 3578–3597 (2011).

Becker, C. A., Tavazza, F., Trautt, Z. T. & de Macedo, R. A. B. Considerations for choosing and using force fields and interatomic potentials in materials science and engineering. Curr. Opin. Solid State Mater. Sci. 17, 277–283 (2013).

Dhaliwal, G., Nair, P. B. & Singh, C. V. Uncertainty analysis and estimation of robust AIREBO parameters for graphene. Carbon 142, 300–310 (2019).

Dhaliwal, G., Nair, P. B. & Singh, C. V. Uncertainty and sensitivity analysis of mechanical and thermal properties computed through embedded atom method potential. Comput. Mater. Sci. 166, 30–41 (2019).

Bartók, A. P., Payne, M. C., Kondor, R. & Csányi, G. Gaussian approximation potentials: The accuracy of quantum mechanics, without the electrons. Phys. Rev. Lett. 104, 136403 (2010).

Behler, J. & Parrinello, M. Generalized neural-network representation of high-dimensional potential-energy surfaces. Phys. Rev. Lett. 98, 146401 (2007).

Nyshadham, C. Machine-learned multi-system surrogate models for materials prediction. npj Comput. Mater. 5, 51 (2019).

Ramprasad, R., Batra, R., Pilania, G., Mannodi-Kanakkithodi, A. & Kim, C. Machine learning in materials informatics: Recent applications and prospects. npj Comput. Mater. 3, 54 (2017).

Behler, J. Representing potential energy surfaces by high-dimensional neural network potentials. J. Phys.: Condens. Matter 26, 183001 (2014).

Thompson, A. P., Swiler, L. P., Trott, C. R., Foiles, S. M. & Tucker, G. J. Spectral neighbor analysis method for automated generation of quantum-accurate interatomic potentials. J. Comput. Phys. 285, 316–330 (2015).

Podryabinkin, E. V. & Shapeev, A. V. Active learning of linearly parametrized interatomic potentials. Comput. Mater. Sci. 140, 171–180 (2017).

Shapeev, A. V. Moment tensor potentials: A class of systematically improvable interatomic potentials. Multiscale Modeling Simul. 14, 1153–1173 (2016).

Botu, V., Batra, R., Chapman, J. & Ramprasad, R. Machine learning force fields: Construction, validation, and outlook. J. Phys. Chem. C 121, 511–522 (2016).

Hansen, K. Assessment and validation of machine learning methods for predicting molecular atomization energies. J. Chem. Theory Comput. 9, 3404–3419 (2013).

Mueller, T., Kusne, A. G. & Ramprasad, R. Machine learning in materials science: Recent progress and emerging applications. Rev. Comput. Chem. 29, 186–273 (2016).

Behler, J. Perspective: Machine learning potentials for atomistic simulations. J. Chem. Phys. 145, 170901 (2016).

Behler, J. Atom-centered symmetry functions for constructing high-dimensional neural network potentials. J. Chem. Phys. 134, 074106 (2011).

Bartók, A. P., Kondor, R. & Csányi, G. On representing chemical environments. Phys. Rev. B 87, 184115 (2013).

Zhang, L. et al. End-to-end symmetry preserving inter-atomic potential energy model for finite and extended systems. Proceedings of the 32nd International Conference on Neural Information Processing Systems, 4441–4451 (2018).

Jia, W. et al. In SC20: International Conference for High Performance Computing, Networking, Storage and Analysis 1–14 (IEEE, 2020).

Rupp, M. Machine learning for quantum mechanics in a nutshell. Int. J. Quantum Chem. 115, 1058–1073 (2015).

Liu, Y., Zhao, T., Ju, W. & Shi, S. Materials discovery and design using machine learning. J. Materiomics 3, 159–177 (2017).

Mishin, Y. Machine-learning interatomic potentials for materials science. Acta Mater. 214, 116980 (2021).

Deringer, V. L., Caro, M. A. & Csányi, G. Machine learning interatomic potentials as emerging tools for materials science. Adv. Mater. 31, 1902765 (2019).

Behler, J. Four generations of high-dimensional neural network potentials. Chem. Rev. 121, 10037–10072 (2021).

Rowe, P., Csányi, G., Alfè, D. & Michaelides, A. Development of a machine learning potential for graphene. Phys. Rev. B 97, 054303 (2018).

Deringer, V. L. & Csányi, G. Machine learning based interatomic potential for amorphous carbon. Phys. Rev. B 95, 094203 (2017).

Bartók, A. P., Kermode, J., Bernstein, N. & Csányi, G. Machine learning a general-purpose interatomic potential for silicon. Phys. Rev. X 8, 041048 (2018).

Szlachta, W. J., Bartók, A. P. & Csányi, G. Accuracy and transferability of Gaussian approximation potential models for tungsten. Phys. Rev. B 90, 104108 (2014).

Rosenbrock, C. W. Machine-learned interatomic potentials for alloys and alloy phase diagrams. npj Comput. Mater. 7, 1–9 (2021).

Li, Z., Kermode, J. R. & De Vita, A. Molecular dynamics with on-the-fly machine learning of quantum-mechanical forces. Phys. Rev. Lett. 114, 096405 (2015).

Stuart, S. J., Tutein, A. B. & Harrison, J. A. A reactive potential for hydrocarbons with intermolecular interactions. J. Chem. Phys. 112, 6472–6486 (2000).

Zhou, X. Atomic scale structure of sputtered metal multilayers. Acta Mater. 49, 4005–4015 (2001).

Xiong, K. & Wang, S. The online random Fourier features conjugate gradient algorithm. IEEE Signal Process. Lett. 26, 740–744 (2019).

Nelsen, N. H. & Stuart, A. M. The random feature model for input-output maps between Banach spaces. SIAM J. Sci. Comput. 43, A3212–A3243 (2021).

Hung, T. H. & Chien, P. A random Fourier feature method for emulating computer models with gradient information. Technometrics 63, 1–10 (2020).

Rahimi, A. & Recht, B. Random Features for Large-Scale Kernel Machines. Adv. Neural. Inf. Process. Syst. 1177–1184 (2008).

Rahimi, A. & Recht, B. In 2008 46th Annual Allerton Conference on Communication, Control, and Computing 555–561 (IEEE, 2008).

Avron, H., Kapralov, M., Musco, C., Musco, C., Velingker, A. & Zandieh, A. In Proceedings of the 34th International Conference on Machine Learning Vol. 70, 253–262 (JMLR. org, 2017).

Kar, P. & Karnick, H. Random feature maps for dot product kernels. Artif. Intell. Stat. 583–591 (2012).

Niederreiter, H. Random Number Generation and Quasi-Monte Carlo Methods (SIAM, 1992).

Yu, F. X. X., Suresh, A. T., Choromanski, K. M., Holtmann-Rice, D. N. & Kumar, S. Orthogonal random features. Adv. Neural. Inf. Process. Syst. 1975–1983 (2016).

Choromanski, K. M., Rowland, M. & Weller, A. The Unreasonable Effectiveness of Structured Random Orthogonal Embeddings. Adv. Neural. Inf. Process. Syst. 219–228 (2017).

Choromanski, K. et al. The geometry of random features. Int. Conf. Artif. Intell. Stat. 1–9 (2018).

Larsen, A. H. The atomic simulation environment – a Python library for working with atoms. J. Phys.: Condens. Matter 29, 273002 (2017).

Birch, F. Finite elastic strain of cubic crystals. Phys. Rev. 71, 809 (1947).

O’Connor, T. C., Andzelm, J. & Robbins, M. O. AIREBO-M: A reactive model for hydrocarbons at extreme pressures. J. Chem. Phys. 142, 024903 (2015).

Tersoff, J. Modeling solid-state chemistry: Interatomic potentials for multicomponent systems. Phys. Rev. B 39, 5566 (1989).

Huang, J. et al. Deep potential generation scheme and simulation protocol for the Li10GeP2S12-type superionic conductors. J. Chem. Phys. 154, 094703 (2021).

Evans, T. W. & Nair, P. B. Scalable Gaussian processes with grid-structured eigenfunctions (GP-GRIEF). Int. Conf. Mach. Learn. 1416–1425 (2018).

Kresse, G. & Hafner, J. Ab initio molecular dynamics for liquid metals. Phys. Rev. B 47, 558 (1993).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169 (1996).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 77, 3865 (1996).

Blöchl, P. E. Projector augmented-wave method. Phys. Rev. B 50, 17953 (1994).

Kresse, G. & Joubert, D. From ultrasoft pseudopotentials to the projector augmented-wave method. Phys. Rev. B 59, 1758 (1999).

Monkhorst, H. J. & Pack, J. D. Special points for Brillouin-zone integrations. Phys. Rev. B 13, 5188 (1976).

Li, L., Reich, S. & Robertson, J. Defect energies of graphite: Density-functional calculations. Phys. Rev. B 72, 184109 (2005).

Behera, H. & Mukhopadhyay, G. In AIP Conference Proceedings, Vol. 1313, 152–155 (AIP, 2010).

Baskin, Y. & Meyer, L. Lattice constants of graphite at low temperatures. Phys. Rev. 100, 544 (1955).

Shulenburger, L. & Mattsson, T. R. Quantum Monte Carlo applied to solids. Phys. Rev. B 88, 245117 (2013).

Shin, H. et al. Cohesion energetics of carbon allotropes: Quantum Monte Carlo study. J. Chem. Phys. 140, 114702 (2014).

Lee, C., Wei, X., Kysar, J. W. & Hone, J. Measurement of the elastic properties and intrinsic strength of monolayer graphene. Science 321, 385–388 (2008).

Wei, X., Fragneaud, B., Marianetti, C. A. & Kysar, J. W. Nonlinear elastic behavior of graphene: Ab initio calculations to continuum description. Phys. Rev. B 80, 205407 (2009).

Wang, R., Wang, S., Wu, X. & Liang, X. First-principles calculations on third-order elastic constants and internal relaxation for monolayer graphene. Phys. B: Condens. Matter 405, 3501–3506 (2010).

Lide, D. R. CRC Handbook of Chemistry and Physics Vol. 85 (CRC Press, 2004).

Ochs, T., Beck, O., Elsässer, C. & Meyer, B. Symmetrical tilt grain boundaries in body-centred cubic transition metals: An ab initio local-density-functional study. Philos. Mag. A 80, 351–372 (2000).

Wang, J., Zhou, Y., Li, M. & Hou, Q. A modified W–W interatomic potential based on ab initio calculations. Model. Simul. Mater. Sci. Eng. 22, 015004 (2013).

Marinica, M. C. Interatomic potentials for modelling radiation defects and dislocations in tungsten. J. Phys.: Condens. Matter 25, 395502 (2013).

Grünwald, E. et al. Young’s modulus and Poisson’s ratio characterization of tungsten thin films via laser ultrasound. Mater. Today.: Proc. 2, 4289–4294 (2015).

Donohue, J. Structures of the Elements (John Wiley and Sons, Inc., 1974).

Mounet, N. & Marzari, N. First-principles determination of the structural, vibrational and thermodynamic properties of diamond, graphite, and derivatives. Phys. Rev. B 71, 205214 (2005).

McSkimin, H. & Andreatch Jr, P. Elastic moduli of diamond as a function of pressure and temperature. J. Appl. Phys. 43, 2944–2948 (1972).

McSkimin, H., Andreatch Jr, P. & Glynn, P. The elastic stiffness moduli of diamond. J. Appl. Phys. 43, 985–987 (1972).

Hebbache, M. First-principles calculations of the bulk modulus of diamond. Solid State Commun. 110, 559–564 (1999).

Klein, C. A. & Cardinale, G. F. Young’s modulus and Poisson’s ratio of CVD diamond. Diam. Relat. Mater. 2, 918–923 (1993).

Finnis, M. & Sinclair, J. A simple empirical n-body potential for transition metals. Philos. Mag. A 50, 45–55 (1984).

Acknowledgements

This research is supported by grants from the Natural Sciences and Engineering Research Council of Canada, Hart Professorship, Canada Research Chairs programme, the University of Toronto, and Compute Canada. The authors would like to thank Abu Anand for providing DFT calculated phonon dispersion curves.

Author information


Contributions

Conceptualization, G.D., P.B.N., and C.V.S.; methodology, G.D. and P.B.N.; software, G.D.; formal analysis, G.D.; investigation, G.D. and P.B.N.; data curation, G.D.; writing—original draft, G.D.; writing—review and editing, G.D., P.B.N., and C.V.S.; visualization, G.D.; supervision, P.B.N. and C.V.S.; project administration, C.V.S.; funding acquisition, C.V.S.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dhaliwal, G., Nair, P.B. & Singh, C.V. Machine learned interatomic potentials using random features. npj Comput Mater 8, 7 (2022). https://doi.org/10.1038/s41524-021-00685-4

