Abstract

Crystallographic defects can now be routinely imaged at atomic resolution with aberration-corrected scanning transmission electron microscopy (STEM) at high speed, with the potential for vast volumes of data to be acquired in relatively short times or through autonomous experiments that can continue over very long periods. Automatic detection and classification of defects in STEM images are needed to handle these data efficiently. However, as with many other object detection and identification tasks in artificial intelligence, detecting and identifying defects in STEM images is challenging. Furthermore, crystal structures with many atoms per unit cell and low symmetry are difficult to deal with. Previous methods for defect detection and classification were based on supervised learning, which requires human-labeled data. In this work, we develop an approach for defect detection with unsupervised machine learning based on a one-class support vector machine (OCSVM). We introduce two schemes of image segmentation and data preprocessing, both of which use the Patterson function of each segment as the input. We demonstrate that this method can be applied to various defects, such as point and line defects in 2D materials and twin boundaries in 3D nanocrystals.


Introduction

Crystallographic defects are interruptions of periodic patterns in crystals and play a key role in defining materials properties. For example, point defects in 2D materials such as vacancies and interstitials can introduce strain to the lattice and modify the electronic properties; line defects such as dislocations and planar defects such as grain boundaries and twin boundaries in 3D materials can determine their mechanical properties. Studying crystallographic defects requires knowledge of their structure at atomic resolution, which has benefited greatly from characterization by transmission electron microscopy and scanning probe microscopy.

The current trend of applying machine learning (ML) to electron microscopy demands an ‘AI crystallographer’ that can automatically detect, identify, and analyze structural properties such as crystallographic defects from scanning transmission electron microscopy (STEM) images1. Previous work demonstrated the detection and classification of defects based on supervised learning methods where human-labeled data from experiments or simulations are used to train the models2,3,4,5,6,7. In this case, a certain level of prior knowledge about the crystal structure and atomic arrangements of various defects is always required for the training, whether through weak supervision with limited experimental data2 or via standard supervision with a large set of simulated data3,4,5,6,7. These trained models are usually limited to the crystal structures in the training datasets and cannot easily be generalized to other crystal structures. In addition, labeling training sets can become costly when the crystal structure is complex or a large variety of defects exist (e.g., dynamic defects that arise from electron-beam irradiation). Apart from supervised ML, automatic detection of crystal defects has also been approached by explicit programming with a set of rules derived from graph theory8. While extremely powerful, this algorithm will become less efficient as the number of atoms in the unit cell increases. Currently, a reliable method of unsupervised ML for detection of crystallographic defects in STEM images is lacking.

In a typical application of ML to STEM images, an atomic-resolution image is segmented into multiple subimages of n × m pixels2, which are flattened into vectors (that can each be thought of as the multidimensional coordinate of a data point) of nm × 1 pixels2. These data points become the inputs of the model to be trained. If each subimage consists of one unit cell, then the majority of the subimages will show one class of atomic arrangement, which is similar to the perfect unit cell, and the subimages that contain crystallographic defects will be outliers. From this perspective, the detection of crystallographic defects can be turned into a problem of outlier detection in the context of one-class classification. Here, we explore the application of the one-class support vector machine (OCSVM)9, which has been widely used for outlier detection in industry (such as fast fault diagnosis in manufacturing and cyber security analyses), to the detection of crystallographic defects.
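As an illustration of the segmentation just described, the following minimal NumPy sketch crops an n × m window around each of a set of known centres and flattens it into one data point. It assumes the centres have already been found (e.g., by an atom-finding step); the helper `segment_and_flatten` is hypothetical and is not taken from the authors' code.

```python
import numpy as np

def segment_and_flatten(image, centres, n, m):
    """Crop an n x m window around each (row, col) centre and flatten it.

    Windows that would extend past the image edge are skipped. Returns an
    array of shape (k, n * m): one row (data point) per kept subimage.
    """
    rows = []
    for r, c in centres:
        r0, c0 = int(round(r)) - n // 2, int(round(c)) - m // 2
        if r0 < 0 or c0 < 0 or r0 + n > image.shape[0] or c0 + m > image.shape[1]:
            continue  # incomplete window at the border
        rows.append(image[r0:r0 + n, c0:c0 + m].reshape(-1))  # nm-long vector
    return np.asarray(rows)

# Example: a synthetic 64 x 64 image cut into 8 x 8 windows on a regular grid
rng = np.random.default_rng(0)
image = rng.random((64, 64))
centres = [(r, c) for r in range(4, 64, 8) for c in range(4, 64, 8)]
X = segment_and_flatten(image, centres, 8, 8)
print(X.shape)  # (64, 64): 64 subimages, each flattened to 64 values
```

Each row of `X` is one point in the nm-dimensional input space that the one-class classifier later sees.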

In the basic view of the mathematics behind OCSVM, data points are mapped from the input space by kernels (such as Gaussian kernels) into a feature space where the majority of the data points lie inside a hypersphere located away from the origin of the feature space. This differs from most unsupervised learning algorithms, which aim to estimate the true probability density in the feature space from training examples (the density can then be used for classification or regression). The OCSVM addresses a subproblem of density estimation: it computes a decision function whose sign defines the boundary of the hypersphere, while minimizing the volume of the hypersphere9,10,11. Partly because it targets this simpler problem, the OCSVM succeeds in difficult cases, such as detecting a small fraction of outliers. In addition, with adjusted parameters the OCSVM can be used as an unsupervised binary classifier even when the outliers (or data points in the other class) occupy a much larger fraction of the feature space.
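The sketch below shows how an OCSVM separates a small fraction of outliers from a dominant class, using scikit-learn's `OneClassSVM` (which wraps LIBSVM) with a Gaussian (RBF) kernel. The synthetic data and the values `nu=0.05` and `gamma="scale"` (scikit-learn's default) are assumptions for illustration only; the sign of `decision_function` plays the role of the sign function described above.

```python
import numpy as np
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)
# Synthetic data (illustrative assumption): 500 "normal" points in a tight
# cluster, plus 10 isolated outliers scattered on a ring of radius 5-6.
normal = rng.normal(0.0, 0.5, size=(500, 2))
angles = rng.uniform(0.0, 2.0 * np.pi, size=10)
radii = rng.uniform(5.0, 6.0, size=10)
outliers = np.column_stack([radii * np.cos(angles), radii * np.sin(angles)])
X = np.vstack([normal, outliers])

# Gaussian (RBF) kernel; nu bounds the fraction of training points left outside
clf = OneClassSVM(kernel="rbf", nu=0.05, gamma="scale").fit(X)

f = clf.decision_function(X)  # signed score: negative outside the learned boundary
labels = clf.predict(X)       # +1 = normal class, -1 = outlier
print("flagged:", int(np.sum(labels == -1)), "of", len(X))
print("ring outliers caught:", int(np.sum(labels[500:] == -1)), "of 10")
```

All ten isolated points should receive negative scores, while the total fraction of flagged points stays bounded by ν.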

In order to apply an OCSVM to the defect-detection problem, we must ensure that most (or at least a large fraction) of the subimages lie in a single class (called the normal class), which is associated with the unit cell. The common practice in segmentation is to enclose the neighborhood of every single atom in each subimage2,3,5. However, when the unit cell contains more than one unique atom site, this segmentation produces more than one major class of data points, which violates the requirement for applying OCSVM. We therefore redesigned the segmentation and data preprocessing and introduce two segmentation schemes that yield one major class of data points. In the first scheme, we crop the neighborhood of the unique maximum/minimum intensity site in the projection of the unit cell/repeat unit (e.g., the central hole of the honeycomb lattice or the brightest atom column in the image of a unit cell). The first scheme is applicable to a wide range of crystal structures, although it imposes limitations on the crystal symmetry. To remove these limitations, we developed a second scheme in which we crop Fourier-filtered images, with each cropped image centered at the position of an atom column. In the first scheme, the running time of the algorithm does not increase with the number of atoms in the unit cell, whereas in the second scheme the running time is proportional to the number of Fourier-filtered images used for the one-class classification, which increases with the number of atoms in the unit cell. To make the features in each subimage independent of translation, we apply the Patterson function12 as a feature-extraction descriptor in both schemes; it depends only on the interatomic vectors (irrespective of the signs of their directions).
Many other descriptors are available in machine vision, and each usually has strengths over the others in a particular context (for a comparison of various descriptors in machine vision, see ref. 13). The Patterson function was chosen because it was developed for, and is widely used in, crystallography, which matches the context here. A recent preprint also presents a similar idea of using Patterson maps as inputs to neural networks to solve the phase problem14.

In the Results, we present two demonstrations: the first includes point and line defects in a 2D crystal; the second includes a twin boundary as an example of planar defects in a 3D nanocrystal. Based on the same principles, additional examples, including the detection of dislocations, are provided in the Supplementary Information. The workflows in the present work enable detection of defects in STEM images (even in a single image) without any labeled training sets and return the coordinates of the defects in the images. This can be useful for studying new and/or complicated crystal structures with defects for which not enough labeled data are available to apply supervised learning effectively. It should also be noted that the OCSVM in the current work only distinguishes defects from nondefects (or one domain from another) and does not distinguish between different types of defects. Further work will be required to achieve such a distinction, for example by clustering the segments or considering the defect coordinates.

Results

Demonstration for bilayer (MoW)Te2

Here, we apply defect detection using the first scheme of segmentation and data preprocessing (see Methods section for more details) to the image of bilayer Mo0.91W0.09Te2. An experimental STEM image is shown in Fig. 1a that contains both pristine areas and regions with electron-beam-induced defects. In order to apply an OCSVM to locate defects, we need to ensure that there is only one type of unit cell present. However, a large fraction of image segments will contain dopants at the different sites, which could violate this requirement. Therefore, we need to exclude the influence of dopants before applying OCSVM to identify defects such as displaced or absent atom columns.

Fig. 1: a An ADF-STEM image acquired at 100 kV. b, c Classification of atom columns by applying GMM to integrated intensities of all atom columns. W dopants and Te vacancies (1 or 2 Te atoms missing) are labeled on top of the Gaussian-smoothed image of a. d Image of atomic coordinates derived from b. e, f Detection of defect sites with absent or displaced atom columns by applying OCSVM to d. Red dots indicate centers of segments that are predicted to be outliers. f shares the same region as c. The scale bars represent 3 nm. Panels a and d are adapted with permission from ref. 38, © 2021 The authors.

In the absence of defects, there are four types of atomic arrangement in an atomic column of the bilayer material: Mo-Te-Te, Te-Te-Mo, W-Te-Te, or Te-Te-W. A column with a W atom is significantly brighter than one with a Mo atom, whereas the atomic stacking in the column does not significantly change its intensity. In this way, we can distinguish the two types of columns (Mo vs. W) in regions without structural defects.

We find two kinds of defects in the STEM image in Fig. 1a: one is associated with vacancies (one or two Te atoms missing), which generates lower contrast columns at the expected locations; the other kind of defect is associated with displaced atoms or missing columns.

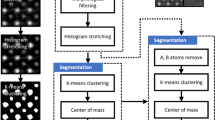

The procedure undertaken is as follows:

Step 1: We classified all the atom columns according to their integrated intensities into three classes (i.e., columns with Mo, W, and missing atoms) using the Gaussian mixture model (GMM)15, an unsupervised learning algorithm for data clustering that has been introduced previously in a similar context16. Details of the GMM are given in Supplementary Note 1. Atom columns in STEM images of 2D transition metal chalcogenides have previously been classified by several groups using traditional approaches17,18. After identifying the atom columns with dopants and vacancies, we replaced each atom column with a standard 2D Gaussian function and generated an image of atomic coordinates.
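A minimal sketch of Step 1 under stated assumptions: scikit-learn's `GaussianMixture` stands in for the GMM, the integrated intensities are synthetic, and `render_coordinates` (with a hypothetical width `sigma=1.5` pixels) is an illustrative helper for generating the image of atomic coordinates, not the authors' implementation.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(1)
# Hypothetical integrated column intensities (arbitrary units), not measured values
intensities = np.concatenate([
    rng.normal(0.6, 0.03, 20),   # dimmer columns (Te atoms missing)
    rng.normal(1.0, 0.03, 300),  # Mo-containing columns
    rng.normal(1.4, 0.03, 30),   # brighter W-containing columns
]).reshape(-1, 1)

gmm = GaussianMixture(n_components=3, n_init=5, random_state=0).fit(intensities)
# Relabel components by increasing mean intensity: 0 = vacancy, 1 = Mo, 2 = W
rank = np.argsort(np.argsort(gmm.means_.ravel()))
classes = rank[gmm.predict(intensities)]

def render_coordinates(shape, coords, sigma=1.5):
    """Atom-coordinate image: an identical 2D Gaussian at each (row, col) position."""
    yy, xx = np.mgrid[0:shape[0], 0:shape[1]]
    img = np.zeros(shape)
    for r, c in coords:
        img += np.exp(-((yy - r) ** 2 + (xx - c) ** 2) / (2.0 * sigma ** 2))
    return img

coord_image = render_coordinates((32, 32), [(8, 8), (8, 24), (24, 16)])
print(np.bincount(classes))  # number of columns assigned to each class
```

Because every column is re-rendered with the same Gaussian, intensity differences due to chemistry are removed and only positions remain, which is what the later steps rely on.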

Step 2: The image of atomic coordinates was segmented and preprocessed according to the first scheme in the Methods section. Principal component analysis (PCA) was applied to reduce the dimension of each subimage, where nine principal components represented 98% of the variability in the features. This step shows the benefit of the Patterson function: it reduces the effect of segmentation errors and cuts the number of significant principal components from 36 to 9, which indicates that the variability in the input space due to segmentation errors has been dramatically reduced (Fig. 2).
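The 98% criterion can be expressed directly in scikit-learn by passing a fraction to `PCA(n_components=...)`. The sketch below assumes a synthetic image stack whose members differ mainly in amplitude, so a single component suffices; with real data the retained number (nine in this work) depends on the dataset, and `significant_components` is a hypothetical helper name.

```python
import numpy as np
from sklearn.decomposition import PCA

def significant_components(stack, variance=0.98):
    """Flatten a (k, n, m) stack and keep just enough principal components
    to explain the requested fraction of the variance."""
    X = stack.reshape(len(stack), -1)
    pca = PCA(n_components=variance, svd_solver="full")
    coeffs = pca.fit_transform(X)  # significant PCA coefficients per subimage
    return pca, coeffs

# Hypothetical stack: 200 noisy copies of one 12 x 12 motif with random amplitudes
rng = np.random.default_rng(0)
motif = rng.random((12, 12))
stack = rng.uniform(0.5, 1.5, (200, 1, 1)) * motif + 0.01 * rng.standard_normal((200, 12, 12))
pca, coeffs = significant_components(stack)
print(pca.n_components_, np.cumsum(pca.explained_variance_ratio_)[-1])
```

Plotting `pca.explained_variance_ratio_` on a logarithmic axis gives a scree plot like those in Fig. 2c, d.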

Fig. 2: a Subimage of the atom-coordinate image (Fig. 1d) in which the unit cell is not well centered, and b the Patterson function of the subimage; the Patterson function is always centrosymmetric. PCA is performed on two datasets, one consisting of subimages like that in a and the other consisting of Patterson maps like that in b. c, d PCA scree plots, on a logarithmic scale, without (c) and with (d) Patterson mapping. A scree plot shows how much of the covariance of the data matrix (of size: length of the flattened vector for each image × number of images) each principal component accounts for. c The explained variance (%) drops slowly, and 36 principal components are needed to account for 98% of the covariance of the data matrix. d The explained variance (%) drops more rapidly than in c, and only 9 principal components are needed to account for 98%. This comparison shows that the highly dispersive covariance in c, caused by arbitrary shifts, is greatly reduced by the Patterson map.

After segmentation and preprocessing of data (see Methods section for details), we obtained training sets, X (image stacks or vectors of the significant PCA coefficients), which consist of the shift-invariant information about the relative atom positions in each unit cell, allowing us to apply an OCSVM to the datasets.

Step 3: We applied the OCSVM implementation of the LIBSVM library19 within scikit-learn15. Two free parameters, ν and γ, needed to be selected. The ν parameter is an upper bound on the fraction of outliers, and γ controls the width of the Gaussian kernel in OCSVM. For γ we chose the default value:

We selected ν at the knee point of the curve of the distance D(ν)20,21 (Fig. 3a, b), defined as the difference between the median values of the decision function for the normal class and for the outliers:

\[ D(\nu) = \mathrm{median}\left[ f\left( X_{\mathrm{normal}} \right) \right] - \mathrm{median}\left[ f\left( X_{\mathrm{outlier}} \right) \right] \]

where \(f\left( X \right)\) is the decision function and \(f\left( X \right) = 0\) defines the boundary of the hypersphere. The histogram of \(f\left( X \right)\) shows the distribution of the signed distances of data points to the boundary in the feature space (Fig. 3c). For optimal values of γ and ν, the histogram should show an approximately Gaussian distribution of distances for the normal set, \(f\left( {X_{{\mathrm{normal}}}} \right)\), and an abrupt change near the boundary (as shown in Fig. 3c). We also checked the result of outlier detection for values of γ ranging from 0.5 to 3 times the default value (with the optimal ν varying with γ) and confirmed that the results were similar in this case. In some other cases (see Supplementary Figs. 2,3 for more examples), the optimal value of γ can be 5–6 times the default value, and the metric \(D\left( \nu \right)\)20,21 can also be used to select the optimal γ. This completes the determination of the two free parameters.
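The knee-point selection of ν can be sketched as follows. `d_of_nu` evaluates D(ν), the distance between the median decision-function values of the normal class and of the outliers (Fig. 3a, b), over a grid of ν values, and `knee_index` picks the point farthest from the chord joining the curve's end points, a simple heuristic in the spirit of, but not necessarily identical to, the knee-detection methods of refs. 20,21. The data, the ν grid, and both helper names are assumptions.

```python
import numpy as np
from sklearn.svm import OneClassSVM

def d_of_nu(X, nus, gamma="scale"):
    """D(nu) = median decision score of the normal class minus that of the outliers."""
    D = []
    for nu in nus:
        f = OneClassSVM(kernel="rbf", nu=nu, gamma=gamma).fit(X).decision_function(X)
        normal, outlier = f[f >= 0], f[f < 0]
        D.append(np.median(normal) - np.median(outlier) if outlier.size else 0.0)
    return np.asarray(D)

def knee_index(x, y):
    """Index of the curve point farthest from the chord joining its end points."""
    x = (np.asarray(x, float) - np.min(x)) / np.ptp(x)  # rescale both axes to [0, 1]
    y = (np.asarray(y, float) - np.min(y)) / np.ptp(y)
    dx, dy = x[-1] - x[0], y[-1] - y[0]
    dist = np.abs(dy * (x - x[0]) - dx * (y - y[0])) / np.hypot(dx, dy)
    return int(np.argmax(dist))

# Hypothetical data: a tight normal cluster plus a few isolated points
rng = np.random.default_rng(0)
angles = rng.uniform(0.0, 2.0 * np.pi, 10)
ring = 5.5 * np.column_stack([np.cos(angles), np.sin(angles)])
X = np.vstack([rng.normal(0.0, 0.5, (500, 2)), ring])

nus = np.linspace(0.01, 0.3, 15)
D = d_of_nu(X, nus)
nu_opt = nus[knee_index(nus, D)]
print(f"chosen nu = {nu_opt:.3f}")
```

The chord-distance rule needs no labels, consistent with the label-free optimization described in Step 4.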

Fig. 3: a, b The upper bound on the fraction of outliers, ν, is chosen at the knee point of the curve \(D(\nu )\), the distance between the median values of the decision function for the normal class and for the outliers. c Histogram of the decision function, showing the density of data points at different signed distances to the hypersphere boundary (zero on the horizontal axis). Outliers lie on the negative side. d–f Distribution of data points in the PCA eigenspace. The axis labels PC1, PC2, and PC3 denote the first three significant principal components; outliers are labeled in purple.

Step 4: With the optimized hyperparameters ν and γ, outlier detection can be carried out on the datasets of either the preprocessed image stack or their significant PCA coefficients. The centers of the outlier segments are labeled by red dots in the image of atomic coordinates (Fig. 1d–f), most of which can be verified by eye as defects associated either with displaced atoms or with the absence of columns. A few sites are predicted to be defects but appear normal by eye; these should be attributed to errors in the unsupervised prediction. To estimate the accuracy of the prediction, we counted the numbers of correctly (true) and incorrectly (false) detected defects (positives), as well as the numbers of true and false nondefects (negatives), in Fig. 1d and summarized the results in Table 1. The balanced accuracy (a suitable metric for datasets with imbalanced proportions of positive and negative events) can be calculated directly from the values in Table 1 (see details of the calculation in Supplementary Note 2) and was determined to be 98.1%. Such a high accuracy depends on the choice of the two hyperparameters, and overfitting can be an issue if the same hyperparameter values are used for a different dataset. However, the hyperparameters can be optimized on a case-by-case basis, and the optimization does not require any labels. We reproduced the high balanced accuracy for a different STEM image that was four times larger by optimizing the hyperparameters with the same heuristic approach as in Step 3 (see Supplementary Figs. 2,3 for details).
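Assuming the standard definition of balanced accuracy (the mean of the true-positive and true-negative rates; the exact procedure of Supplementary Note 2 is not reproduced here), the calculation from the four counts of a table like Table 1 is short. The counts below are hypothetical and are not the values of Table 1.

```python
def balanced_accuracy(tp, fp, tn, fn):
    """Balanced accuracy = mean of sensitivity (TPR) and specificity (TNR)."""
    sensitivity = tp / (tp + fn)   # fraction of true defects that were detected
    specificity = tn / (tn + fp)   # fraction of normal sites correctly left unflagged
    return 0.5 * (sensitivity + specificity)

# Hypothetical counts for illustration only (not the values in Table 1)
print(round(balanced_accuracy(tp=45, fp=5, tn=950, fn=0), 4))  # 0.9974
```

For comparison, a detector that flags nothing would score 95.5% plain accuracy on these hypothetical counts but only 50% balanced accuracy, which is why the balanced metric suits rare defects.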

The distribution of outliers can also be visualized in PCA space (Fig. 3d–f), where the outliers lie far from the center of the single cluster (the normal class). Since PCA is an orthogonal linear transformation, the visibility of defects as outliers in PCA space suggests that the task of detecting defects from atomic-resolution images is well suited to the SVM architecture. In general, SVMs without customized kernels cannot handle highly nonlinear mappings, where deep neural networks would likely have an advantage, but otherwise they are accurate and efficient. Such visualization also helps in interpreting the result.

In this demonstration, there is a very small fraction (~3%) of defect sites in the image and only one major class in PCA space. As the fraction of defects increases, some defects with similar configurations may form another group/cluster in PCA space. In this scenario, a GMM can be applied to the data points to identify and remove the minor group in PCA space before Step 3 (see Supplementary Fig. 4). A demonstration of this scenario for an image stack of 100 frames can be found in Supplementary Note 3.

Demonstration for ZrO2

In the previous example for (MoW)Te2, we demonstrated how the combination of the Patterson function and an OCSVM allows detection of point and line defects in a 2D crystal. To complete the demonstration for other kinds of defects, we turn to a planar defect in a ZrO2 nanoparticle that consists of two domains separated by a twin boundary. ZrO2 has a monoclinic crystal structure22, which often poses a challenge for segmentation.

The procedure for data analysis is as follows: (1) a Friedel pair of fast Fourier transform (FFT) spots with the shortest distance, which corresponds to the unit cell length in one direction, is selected (Fig. 4b) for Fourier filtering to generate an inverse FFT (IFFT) image (Fig. 4c); (2) the IFFT image is segmented and preprocessed according to the second scheme of data segmentation and preprocessing in the Methods section; (3) OCSVM is applied to the image stack at the optimized ν determined from the knee point of the \(D(\nu )\) curve (Fig. 4d); (4) the histogram of the decision function (Fig. 4e) is computed and used for clustering. After processing, the atom columns are clustered into two groups (labeled in different colors in Fig. 4f) separated by a boundary in real space, which appears to be consistent with the twin boundary identified by eye.
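The text does not specify how the histogram of the decision function is turned into two clusters. One simple possibility, swapped in here as an assumption, is Otsu's method, which places the threshold where the between-class variance of the histogram is largest. The decision-function values below are synthetic, and `otsu_threshold` is a hypothetical helper.

```python
import numpy as np

def otsu_threshold(values, bins=64):
    """Histogram threshold that maximises the between-class variance (Otsu's method)."""
    counts, edges = np.histogram(values, bins=bins)
    centres = 0.5 * (edges[:-1] + edges[1:])
    w0 = np.cumsum(counts)                    # population at or below each candidate cut
    w1 = w0[-1] - w0                          # population above the cut
    s0 = np.cumsum(counts * centres)
    m0 = s0 / np.maximum(w0, 1)               # mean of the lower class
    m1 = (s0[-1] - s0) / np.maximum(w1, 1)    # mean of the upper class
    between = w0 * w1 * (m0 - m1) ** 2        # (unnormalised) between-class variance
    return edges[int(np.argmax(between)) + 1]

# Hypothetical decision-function values for columns in two domains
rng = np.random.default_rng(0)
f = np.concatenate([rng.normal(-1.0, 0.1, 200), rng.normal(1.0, 0.1, 100)])
t = otsu_threshold(f)
domain = (f > t).astype(int)   # 0 / 1 domain label per atom column
print(t, np.bincount(domain))
```

Mapping `domain` back onto the column coordinates would give a two-color map like Fig. 4f.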

Fig. 4: a A STEM image of a ZrO2 nanoparticle recorded at 100 kV. b FFT of the atom-coordinate image of a. c Fourier-filtered image formed by the IFFT of the circled spots in b, which belong to one domain (red coordinate system in b) but not the other (yellow coordinate system). d Optimized ν selected from the knee point of the \(D(\nu )\) curve. e Two separated clusters of data points detected using OCSVM with the optimized ν parameter. f The two classes of data points in e mapped onto the atom-coordinate image in two different colors. Each color corresponds to a single domain; the boundary between the colors is the detected twin boundary. The scale bars in (a, c) represent 3 nm, and the scale bar in b represents 1/nm. Panel a is adapted with permission from ref. 38, © 2021 The authors.

This procedure can be repeated for other pairs of FFT spots. If multiple grains/domains are present in a STEM image, the method can be used to separate them and to associate each pair of FFT spots with specific grains/domains. In addition, if dislocations are present in a STEM image, the method can be used to detect them, since dislocations appear as interruptions of the periodic fringes in Bragg-filtered images23. See Supplementary Fig. 7 for a demonstration of detecting dislocations.

Discussion

Although the application of deep learning to electron microscopy has made considerable progress in the collection, compression and analysis of big data24,25,26, the power of simpler algorithms (without neural networks) should not be overlooked in some applications. Here we explored the use of OCSVM for detecting crystallographic defects in STEM images, where the defects and normal unit cells become almost linearly separable after mapping with the Gaussian kernel in OCSVM. Two schemes for image segmentation and data preprocessing are introduced (see Methods section). Image segmentation in the first scheme can efficiently deal with crystals that have a hole in the center of the unit cell. Segmentation in the second scheme can in principle deal with any type of unit cell, and the size of the subimages can be chosen based on the average inter-planar spacing; the second scheme therefore addresses one of the major issues with traditional segmentation methods, in which the window size is usually a user-defined free parameter whose choice can significantly affect the results, especially in difficult cases such as oblique Bravais lattices. Data preprocessing in both schemes uses the Patterson function as a descriptor, which yields translation-invariant features. Applying the Patterson function greatly reduces the effect of segmentation errors and results in subimages with shift-invariant structural information, which are then suitable for the application of OCSVM. We expect that similar schemes are likely to benefit other ML algorithms as well.

Although the OCSVM literature indicates that the result can be sensitive to the choice of the two free parameters γ and ν, we found that the default value of γ in the open-source library LIBSVM19 and the choice of ν based on the \(D(\nu )\) curve20,21 worked well for both examples here, provided that each input image was normalized before training.

Although the image of atomic coordinates in the first demonstration shows no Z-contrast (where Z is the atomic number of the element), the OCSVM can also work for STEM images that exhibit Z-contrast, provided that the dopants are excluded, since dopants complicate the one-class classification.

For rigid 3D crystals in which the crystal lattice is not highly distorted, the separation between outliers and the normal class is large (Fig. 4e), so the classification is easier than for 'soft' materials. Here, by 'soft' materials we refer to cases such as the 2D material example, where varying amounts of lattice distortion are observed in many places; the boundary between the outliers (defects) and the normal class (normal unit cells) therefore becomes more ambiguous, as shown in Fig. 3c. We note that such ambiguity is also common in human judgement.

Our approach can be applied to rapidly screen a large number of STEM images to detect crystallographic defects, which may be beneficial when analyzing in situ data27,28,29,30. For example, the modification of 2D materials by moving atoms with the electron beam in STEM31,32 requires rapid detection of defects to guide the atom-by-atom fabrication33. When combined with automatic scan control systems34, automatically identifying and classifying different types of defects will be another important step toward this goal.

Another application is to improve crystal structure reconstruction from noisy atomic-resolution images of beam-sensitive materials such as metal-organic frameworks (MOFs). With the development of extremely fast and sensitive detectors in recent years, rapid imaging of the structural details of such materials has become feasible. Nevertheless, beam-induced defects in these structures are still difficult to avoid. Using OCSVM to remove subimages of beam-damaged unit cells or local structures should, in principle, clean up the image stack used for averaging in crystal structure reconstruction.

In addition, the second scheme of image segmentation and data preprocessing can also be followed by other ML algorithms, such as variational autoencoders (VAEs)35 that accept training data with noise and carry out approximate Bayesian inference. For example, a modified version of VAE has been applied to denoise image data36 and we note that datasets prepared with scheme two (in Methods section) are essentially Patterson maps of the noisy Bragg-filtered images, meaning that this approach should be adaptable to other applications, such as the ML-based determination of strain components.

Methods

Sample preparation and STEM experiments

The Mo0.91W0.09Te2 sample was exfoliated from a bulk crystal grown by chemical vapor transport (CVT). An annular dark field (ADF)-STEM image was recorded with a Nion UltraSTEM 200 operated at 100 kV with a nominal beam current of ~25 pA. A convergence angle of 30 mrad was used, and the ADF detector had an angular range of 80–350 mrad.

The ZrO2 sample was prepared from a dense ZrO2 substrate (purchased from Fuel Cell Materials) by in situ lift-out on a Hitachi NB5000 focused ion-beam (FIB) instrument. The final thinning step was performed on a Fishione Nanomill model 1040 at 900 eV for 10 min on each side of the foil to remove potential ion-beam damage. The atomic-resolution ADF-STEM image (Fig. 4a) was acquired using an aberration-corrected Nion UltraSTEM 100 operated at 100 kV.

Segmentation and data preprocessing

Two schemes of image segmentation and data preprocessing were developed for different situations. The first scheme is suited to cases where a rapid algorithm is needed for tracing defects within a single grain/domain in which the maximum or minimum intensity is located at one site of the unit cell. For example, the centrosymmetric pore in the middle of a graphene honeycomb satisfies this requirement. Such structural features facilitate segmentation of a STEM image into subimages, each of which contains one unit cell. To remove the limits imposed by the symmetry of the crystal structure in the first scheme, we developed a second scheme, which may be somewhat slower than the first but can be applied to any type of unit cell without restrictions on crystal symmetry. In addition, the second scheme can be applied to STEM images that include multiple domains/grains. Both schemes use the Patterson function to map each subimage to the inputs of the ML algorithm (i.e., OCSVM). These two schemes can also be combined with other ML algorithms in different contexts.

Scheme one

First, the positions of the holes are located by peak-finding algorithms and refined by minimizing the summed intensity over the neighborhood. Second, square subimages centered at the holes are cropped and collated. Third, to eliminate arbitrary shifts of the central hole in each subimage due to segmentation errors, each subimage is transformed by the Patterson function into a new subimage. The stack of new subimages forms the training set, also known as the input space, for the unsupervised learning.
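A sketch of the first two steps of Scheme one with SciPy's `ndimage` filters, assuming that the holes are dark: the neighbourhood-summed intensity is computed with a box filter, and local minima of that sum are taken as refined hole centres. Merging peak finding and refinement into one step is a simplification, and the window sizes and helper names are hypothetical. The resulting stack would then be passed through the Patterson transform.

```python
import numpy as np
from scipy import ndimage

def locate_holes(image, neighbourhood=5, separation=7):
    """Hole centres: pixels whose neighbourhood-summed intensity is a local minimum."""
    # uniform_filter returns the neighbourhood mean, proportional to the summed intensity
    local = ndimage.uniform_filter(image.astype(float), size=neighbourhood, mode="nearest")
    is_min = local == ndimage.minimum_filter(local, size=separation, mode="nearest")
    # discard flat background plateaus, which are trivially "minimal"
    return np.argwhere(is_min & (local < local.mean()))

def crop_stack(image, centres, size):
    """Stack of size x size subimages centred on the holes (border holes skipped)."""
    h = size // 2
    ny, nx = image.shape
    subs = [image[r - h:r - h + size, c - h:c - h + size]
            for r, c in centres
            if r - h >= 0 and c - h >= 0 and r - h + size <= ny and c - h + size <= nx]
    return np.stack(subs)

# Synthetic test image: a bright background with four dark "holes" at known positions
yy, xx = np.mgrid[0:40, 0:40]
image = np.ones((40, 40))
for r, c in [(10, 10), (10, 30), (30, 10), (30, 30)]:
    image -= np.exp(-((yy - r) ** 2 + (xx - c) ** 2) / 8.0)

holes = locate_holes(image)
stack = crop_stack(image, holes, 15)
print(holes.tolist(), stack.shape)
```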

The translational invariance of the Patterson function is illustrated in Fig. 5. An image of four 2D Gaussian functions representing four different atoms is created, with the image intensity representing the charge density, \(\rho ( {\boldsymbol{r}} )\) or \(\rho (x,y)\) (Fig. 5a). In 2D, the Patterson function is defined as:

\[ P(u,v) = \frac{1}{A}\sum_{h}\sum_{k}\left| F_{hk} \right|^{2}\cos\left[ 2\pi \left( hu + kv \right) \right] \]

where \(F_{hk}\) is the structure factor (Fourier transform) of the charge density ρ, A is the area of the cell, and (u, v) is the interatomic vector. When the atoms are shifted by a common vector inside the image (Fig. 5c), the interatomic vectors are unchanged: the shift only multiplies each \(F_{hk}\) by a phase factor, leaving \(\left| F_{hk} \right|^{2}\) and hence the Patterson function invariant (Fig. 5b, d, f). Therefore, taking the Patterson function of the original subimages is a promising way to obtain training data from which the translational dependence has been removed.

Fig. 5: a An artificially created unit cell consisting of four different atoms. b The Patterson function of the unit cell in a. c The unit cell in a shifted by the vector \({\boldsymbol{r}}_0\). d The Patterson function of the shifted unit cell. e Difference between the two unit cells in a and c. f Difference between the two Patterson functions in b and d, which is zero. g–l The above procedure repeated for two inverse FFT images (g, i) that differ by a phase shift; no difference is observed between their Patterson functions (h, j), as shown in l. Periodic boundary conditions are applied when calculating the Patterson functions.

Here, to apply the Patterson function to STEM images, we take \(\rho ({\boldsymbol{r}})\) to be the image intensity. By the same argument, this removes the effect of image segmentation errors (arbitrary shifts of the content within a subimage). It should be noted that a periodic boundary condition is implicitly applied to the Patterson function.
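The invariance argument can be checked numerically. The sketch below computes the Patterson map of a sampled density as its periodic autocorrelation via the FFT (by the Wiener–Khinchin theorem this is the inverse transform of \(|F|^2\), i.e., the structure-factor sum up to a constant normalization), so the periodic boundary condition is built in. The four-atom density is a made-up stand-in for Fig. 5a, and `patterson` is a hypothetical helper name.

```python
import numpy as np

def patterson(rho):
    """Patterson map of a 2D density with periodic boundaries.

    ifft2(|F|^2) equals the circular autocorrelation sum_r rho(r) rho(r + u).
    """
    F = np.fft.fft2(rho)
    return np.fft.ifft2(np.abs(F) ** 2).real

# Four Gaussian "atoms" with different weights (illustrative values)
yy, xx = np.mgrid[0:32, 0:32]
atoms = [(8, 8, 1.0), (8, 14, 0.7), (15, 10, 0.5), (12, 20, 0.3)]
rho = sum(w * np.exp(-((yy - r) ** 2 + (xx - c) ** 2) / 4.0) for r, c, w in atoms)

shifted = np.roll(rho, shift=(5, -3), axis=(0, 1))  # common shift r0 of all atoms
P = patterson(rho)
P_minus = np.roll(np.flip(P), 1, axis=(0, 1))        # P evaluated at -u (periodic indices)
print(np.allclose(P, patterson(shifted)), np.allclose(P, P_minus))  # True True
```

The first check is the translation invariance of Fig. 5f; the second is the centrosymmetry P(u) = P(−u) noted in Fig. 2.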

Scheme two

First, the IFFT is computed for a selected and masked pair of spots, \(hkl\) and \(\bar h\,\bar k\,\bar l\), in the FFT. This step is called Bragg filtering and is similar to the method used to produce a single-phase image in geometric phase analysis (GPA)37. Second, the whole IFFT image is rotated so that the fringes are parallel to either the horizontal axis or the vertical axis. Third, the rotated IFFT image is segmented into square subimages with a side length equal to the inter-planar spacing of (\(hkl\)). Each subimage is centered on an atom column, and subimages centered at different atoms in the same unit cell show different phases of the oscillating fringes. Fourth, to ensure the existence of one major class, we eliminate this phase difference by taking the Patterson function of each subimage, creating a stack of Patterson-mapped, segmented IFFT images for each \(hkl\). The removal of the phase difference by applying the Patterson function to the segmented IFFT images is illustrated in Fig. 5g–l.
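A minimal sketch of Bragg filtering and phase removal on a synthetic image, assuming the fringes are already axis-aligned (so the rotation step is skipped) and simplifying the centring of windows on atom columns to two arbitrary offsets. The mask radius, the spot offset `g`, and the helper names `bragg_filter` and `patterson` are hypothetical.

```python
import numpy as np

def bragg_filter(image, g, radius=2):
    """Keep only circular masks around the Friedel pair +g / -g in the centred FFT,
    then inverse-transform. g is an integer (row, col) offset from the FFT centre."""
    ny, nx = image.shape
    F = np.fft.fftshift(np.fft.fft2(image))
    yy, xx = np.mgrid[0:ny, 0:nx]
    mask = np.zeros((ny, nx), dtype=bool)
    for gy, gx in (g, (-g[0], -g[1])):
        mask |= (yy - (ny // 2 + gy)) ** 2 + (xx - (nx // 2 + gx)) ** 2 <= radius ** 2
    return np.fft.ifft2(np.fft.ifftshift(F * mask)).real

def patterson(a):
    """Periodic autocorrelation (Patterson map) of a 2D array via the FFT."""
    return np.fft.ifft2(np.abs(np.fft.fft2(a)) ** 2).real

# Synthetic image with two sets of fringes; keep only the vertical fringes (period 16 px)
yy, xx = np.mgrid[0:64, 0:64]
image = np.cos(2 * np.pi * 4 * xx / 64) + 0.5 * np.cos(2 * np.pi * 6 * yy / 64)
fringes = bragg_filter(image, g=(0, 4))

# Two windows one fringe period wide, offset by 5 px: same fringes, different phase
a, b = fringes[0:16, 0:16], fringes[0:16, 5:21]
print(np.allclose(a, b), np.allclose(patterson(a), patterson(b)))  # False True
```

Choosing the window side equal to the fringe period makes each window exactly one period long, so a phase offset becomes a circular shift, which the Patterson map removes.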

After segmentation and preprocessing of data, we obtain the training sets (image stacks), which consist of the shift-invariant information about the relative atom positions in each unit cell, allowing us to apply an OCSVM to the datasets.

Data availability

All the datasets are freely available at https://doi.org/10.5281/zenodo.5520169.

Code availability

All the codes are freely available at https://github.com/DrYGuo/Defect-detection-in-atomic-resolution-image-via-unsupervised-learning-with-translational-invariance.

References

Ziatdinov, M. AtomAI: Deep and machine learning for atom-resolved data. GitHub https://github.com/pycroscopy/atomai (2020).

Ziatdinov, M. et al. Deep learning of atomically resolved scanning transmission electron microscopy images: chemical identification and tracking local transformations. ACS Nano 11, 12742–12752 (2017).

Vasudevan, R. et al. Mapping mesoscopic phase evolution during E-beam induced transformations via deep learning of atomically resolved images. npj Comput. Mater. 4, 30 (2018).

Li, W. et al. Automated defect analysis in electron microscopic images. npj Comput. Mater. 4, 36 (2018).

Maksov, A. et al. Deep learning analysis of defect and phase evolution during electron beam-induced transformations in WS2. npj Comput. Mater. 5, 12 (2019).

Lin, R. et al. TEMImageNet training library and AtomSegNet deep-learning models for high-precision atom segmentation, localization, denoising, and deblurring of atomic-resolution images. Sci. Rep. 11, 5386 (2021).

Lee, C. H. et al. Deep learning enabled strain mapping of single-atom defects in two-dimensional transition metal dichalcogenides with sub-picometer precision. Nano Lett. 20, 3369–3377 (2020).

Ovchinnikov, O. S. et al. Detection of defects in atomic-resolution images of materials using cycle analysis. Adv. Struct. Chem. Imag. 6, 3 (2020).

Scholkopf, B., Williamson, R., Smola, A., Shawe-Taylor, J. & Platt, J. Support vector method for novelty detection. Adv. Neural Inf. Process. Syst. 12, 582–588 (2000).

Scholkopf, B., Platt, J. C., Shawe-Taylor, J., Smola, A. J. & Williamson, R. C. Estimating the support of a high-dimensional distribution. Neural Comput. 13, 1443–1471 (2001).

Tax, D. M. J. & Duin, R. P. W. Support vector data description. Mach. Learn. 54, 45–66 (2004).

Patterson, A. L. A Fourier series method for the determination of the components of interatomic distances in crystals. Phys. Rev. 46, 372–376 (1934).

Mikolajczyk, K. & Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 27, 1615–1630 (2005).

Hurwitz, D. From Patterson maps to atomic coordinates: training a deep neural network to solve the phase problem for a simplified case. Preprint at https://arxiv.org/ftp/arxiv/papers/2003/2003.13767.pdf (2020).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Vasudevan, R. K. et al. Investigating phase transitions from local crystallographic analysis based on statistical learning of atomic environments in 2D MoS2-ReS2. Appl. Phys. Rev. 8, 011409 (2021).

Gong, Y. J. et al. Band gap engineering and layer-by-layer mapping of selenium-doped molybdenum disulphide. Nano Lett. 14, 442–449 (2014).

Dumcenco, D. O., Kobayashi, H., Liu, Z., Huang, Y. S. & Suenaga, K. Visualization and quantification of transition metal atomic mixing in Mo1-xWxS2 single layers. Nat. Commun. 4, 2351 (2013).

Chang, C. C. & Lin, C. J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2, 27 (2011).

Ghafoori, Z. et al. Efficient unsupervised parameter estimation for one-class support vector machines. IEEE Trans. Neural Netw. Learn. Syst. 29, 5057–5070 (2018).

Ghafoori, Z., Rajasegarar, S., Erfani, S. M., Karunasekera, S. & Leckie, C. A. Unsupervised parameter estimation for one-class support vector machines. In Advances in Knowledge Discovery and Data Mining (PAKDD 2016) (eds Bailey, J., Khan, L., Washio, T., Dobbie, G., Huang, J. & Wang, R.) Lecture Notes in Computer Science, vol. 9652 (Springer, Cham, 2016).

McCullough, J. D. & Trueblood, K. N. The crystal structure of baddeleyite (monoclinic ZrO2). Acta Cryst. 12, 507–511 (1959).

Carlton, C. E. & Ferreira, P. J. Are dislocations present in nanoparticles?: Fourier filtering of images obtained from in-situ TEM nanoindentation. Microsc. Microanal. 15, 736–737 (2009).

Zheng, S. et al. Super-compression of large electron microscopy time series by deep compressive sensing learning. Patterns 2, 100292 (2021).

Ding, G. et al. A joint deep learning model to recover information and reduce artifacts in missing-wedge sinograms for electron tomography and beyond. Sci. Rep. 9, 12803 (2019).

Wang et al. 0.7 Å resolution electron tomography enabled by deep learning aided information recovery. Adv. Intell. Syst. 2, 2000152 (2020).

Sang, X. H. et al. In situ atomistic insight into the growth mechanisms of single layer 2D transition metal carbides. Nat. Commun. 9, 2266 (2018).

Leiter, R., Li, Y. L. & Kaiser, U. In-situ formation and evolution of atomic defects in monolayer WSe2 under electron irradiation. Nanotechnology 31, 495704 (2020).

Hashimoto, A., Suenaga, K., Gloter, A., Urita, K. & Iijima, S. Direct evidence for atomic defects in graphene layers. Nature 430, 870–873 (2004).

Xin, H. L. L., Niu, K. Y., Alsem, D. H. & Zheng, H. M. In situ TEM study of catalytic nanoparticle reactions in atmospheric pressure gas environment. Microsc. Microanal. 19, 1558–1568 (2013).

Susi, T. et al. Silicon-carbon bond inversions driven by 60-keV electrons in graphene. Phys. Rev. Lett. 113, 115501 (2014).

Susi, T., Meyer, J. C. & Kotakoski, J. Manipulating low-dimensional materials down to the level of single atoms with electron irradiation. Ultramicroscopy 180, 163–172 (2017).

Kalinin, S. V., Borisevich, A. & Jesse, S. Fire up the atom forge. Nature 539, 485–487 (2016).

Sang, X. H. et al. Dynamic scan control in STEM: spiral scans. Adv. Struct. Chem. Imag. 2, 6 (2016).

Kingma, D. P. & Welling, M. Auto-encoding variational Bayes. Preprint at https://arxiv.org/abs/1312.6114 (2013).

Im, D. J., Ahn, S., Memisevic, R. & Bengio, Y. Denoising criterion for variational auto-encoding framework. In Proc. Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17) 2059–2065 (2017).

Hÿtch, M. J., Snoeck, E. & Kilaas, R. Quantitative measurement of displacement and strain fields from HREM micrographs. Ultramicroscopy 74, 131–146 (1998).

Guo, Y. et al. Automatic detection of crystallographic defects in STEM images by unsupervised learning with translational invariance. Microsc. Microanal. 27, 1460–1462 (2021).

Acknowledgements

This effort is primarily based upon work supported by the U.S. Department of Energy (DOE), Office of Science, Basic Energy Sciences (BES), Materials Sciences and Engineering Division (Y.G., S.V.K., and A.R.L.). Electron microscopy with Nion UltraSTEM 100 and TEM sample preparation were performed at the Oak Ridge National Laboratory’s Center for Nanophase Materials Sciences (CNMS), a U.S. Department of Energy Office of Science User Facility. S.V.K. and A.V.D. acknowledge support through the Materials Genome Initiative funding allocated to NIST.

Disclaimer

Certain commercial equipment, instruments, or materials are identified in this paper to specify the experimental procedure adequately. Such identification is not intended to imply recommendation or endorsement by the National Institute of Standards and Technology, nor is it intended to imply that the materials or equipment identified are necessarily the best available for the purpose.

Author information

Contributions

Y.G. conceived the ideas and wrote the Python code for the paper. Y.G. and A.R.L. wrote the manuscript. S.V.K. supervised Y.G. on machine learning and Python programming, and contributed to discussions. A.R.L. supervised Y.G. on performing electron microscopy. Y.G. and Q.G. performed the electron microscopy. H.C., K.X., and Y.G. prepared the TEM samples of (MoW)Te2, and Q.G. prepared the TEM sample of ZrO2 nanoparticles. S.K. and A.V.D. grew the crystal of (MoW)Te2. All authors agreed on the final draft.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guo, Y., Kalinin, S.V., Cai, H. et al. Defect detection in atomic-resolution images via unsupervised learning with translational invariance. npj Comput Mater 7, 180 (2021). https://doi.org/10.1038/s41524-021-00642-1

DOI: https://doi.org/10.1038/s41524-021-00642-1
