Abstract

Artificial Intelligence (AI) is currently experiencing a bloom driven by deep learning (DL) techniques, which rely on networks of connected simple computing units operating in parallel. The low communication bandwidth between memory and processing units in conventional von Neumann machines does not support the requirements of emerging applications that rely extensively on large sets of data. More recent computing paradigms, such as high parallelization and near-memory computing, help alleviate the data communication bottleneck to some extent, but paradigm- shifting concepts are required. Memristors, a novel beyond-complementary metal-oxide-semiconductor (CMOS) technology, are a promising choice for memory devices due to their unique intrinsic device-level properties, enabling both storing and computing with a small, massively-parallel footprint at low power. Theoretically, this directly translates to a major boost in energy efficiency and computational throughput, but various practical challenges remain. In this work we review the latest efforts for achieving hardware-based memristive artificial neural networks (ANNs), describing with detail the working principia of each block and the different design alternatives with their own advantages and disadvantages, as well as the tools required for accurate estimation of performance metrics. Ultimately, we aim to provide a comprehensive protocol of the materials and methods involved in memristive neural networks to those aiming to start working in this field and the experts looking for a holistic approach.

Similar content being viewed by others

Introduction

The development of sophisticated artificial neural networks (ANNs) has become one of the highest priorities of technological companies and governments of wealthy countries, as they can boost the fabrication of artificial intelligence (AI) systems that generate economic and social benefits in multiple fields (e.g., logistics, commerce, health care, national security, etc.)1. ANNs are able to compute and store the huge amount of electronic data produced (either by humans or machines), and to execute complex operations with them. Examples of electronic products that contain ANNs with which we interact in our daily lives are those that identify biometric patterns (e.g., face, fingerprint) for access control in smartphones2 or online banking apps3, and those that identify objects in images from social networks4 and security/traffic cameras5. Beyond image recognition, other examples are the engines that convert speech to text in computers and smartphones6, natural language processing as for example the novel automated chat system chat-GPT7, and those that provide accurate recommendations for online shopping based on previous behaviours from ourselves and/or people in our network8.

ANNs can be understood as the implementation of a sequence of mathematical operations. The structure of ANNs consists of multiple nodes (called neurons) interconnected to each other (by synapses), and the learning is implemented by adjusting the strength (weight) of such connections. Modern ANNs are implemented via software in general-purpose computing systems based on a central processing unit (CPU) and a memory —the so-called Von Neumann architecture9. However, in this architecture a large amount of the energy consumption and computing time is related to continuous data exchange between both units, which is not efficient. The computing time can be accelerated by using graphics processing units (GPUs) to implement the ANNs (see Fig. 1a), as these can perform multiple operations in parallel10,11,12. However, this approach consumes even more energy, which requires large computing systems and thereby cannot be integrated in mobile devices. Another option is to use field programable gate arrays (FPGAs), which consume much less energy than GPUs while providing an intermediate computing efficiency between CPUs and GPUs13,14,15,16,17. A survey carried out by Guo et al.18 on the existing hardware solutions for ANN implementation and their performance is condensed in Fig. 1b.

a The increase in computing power demands over the past four decades expressed in petaFLOPS per days. Until 2012, computing power demand doubled every 24 months; recently this has shortened to approximately every 2 months. The colour legend indicates different application domains10. Mehonic, A., Kenyon, A.J. Brain-inspired computing needs a master plan. Nature 604, 255–260 (2022), reproduced with permission from SNCSC. b A comparison of neural network accelerators for FPGA, ASIC, and GPU devices in terms of speed and power consumption. GOP/s giga operations per second, TOP/s tera operations per second.

In the past few years, some companies and universities have presented application specific integrated circuits (ASICs) based on the complementary metal oxide semiconductor (CMOS) technology that are capable to compute and store information in the same unit. This allow such ASICs to perform multiple operations in parallel very fast, making them capable of mimicking, directly in the hardware, the behaviour of the neurons and synapses in the ANN. A comprehensive list of these ASICs comprising those such as the Google TPU19, Amazon inferentia20, Tesla NPU21, etc., are summarized in ref. 22. Such integrated circuits can be grouped in two categories. On one hand, dataflow processors are custom-designed processors for neural network inference and training. Since neural network training and inference computations can be entirely deterministically laid out, they are amenable to dataflow processing in which computations, memory accesses, and inter-ALU communications actions are explicitly/statically programmed or placed-and-routed onto the computational hardware. On the other hand, processor in memory (PIM) accelerators integrate processing elements with memory technology. Among such PIM accelerators are those based on an analogue computing technology that augments flash memory circuits with in-place analogue multiply-add capabilities. Please refer to the references for the Mythic23 and Gyrfalcon24 accelerators for more details on this innovative technology.

Previously mentioned ANNs and those reported in detail in the survey presented in ref. 22 belongs to the subgroup of so-called deep neural networks (DNNs). In a DNN the information is represented with values that are continuous in time and can achieve high data recognition accuracy by using at least two layers of nonlinear neurons interconnected by adjustable synaptic weights25. Conversely, there is an alternative information codification which gave birth to another type of ANNs, the Spiking Neural Networks (SNN). In SNNs the information is coded with time-dependent spikes, which remarkably reduces the power consumption compared to DNNs26. Moreover, the functioning of SNNs is more similar to the actual functioning of biological neural networks, and it can help to understand complex mammal’s neural systems. Intel probably has the most extensive research program for evaluating the commercial viability of SNN accelerators with their Loihi technology27,28, and Intel Neuromorphic Development Community29. Among the applications that have been explored with Loihi are target classification in synthetic aperture radar and optical imagery30, automotive scene analysis31, and spectrogram encoder27. Further, one company, Innatera, has announced a commercial SNN processor32. Also, the platforms developed by IBM (TrueNorth33), and Tsingshua34 are well known examples of the research effort of both the industry and the academia in this field.

However, fully-CMOS implementations of ANNs require tens of devices to simulate each synapse, which threatens energy and area efficiency, and thereby renders large-scale systems impractical. As a result, the performance of CMOS-based ANNs is still very far from that of biological neural networks. To emulate the complexity and ultra-low power consumption of biological neural networks, hardware platforms for ANNs must achieve an ultra-high integration density (>1 Terabyte per cm2) and low energy consumption (<10 fJ per operation)35.

Recent studies have proposed that the use of memristive devices to emulate the synapses may accelerate ANN computational tasks while reducing the overall power consumption and footprint36,37,38,39,40,41,42. Memristive devices are materials systems whose electrical resistance can be adjusted to two or more stable (i.e., non-volatile) states by applying electrical stresses43. Memristive devices that exhibit two non-volatile states are already being commercialized as standalone memory44,45, although their global market is still small (~621 million USD by 2020, i.e., ~0.5% of the 127-billion-worth standalone memory market46). However, memristive devices can also exhibit three disruptive attributes particularly suitable for the hardware implementation of ANNs: i) the possibility to program multiple non-volatile states (up to ~10047,48, and even ~100049), ii) a low-energy consumption for switching (~10fJ per state transition with zero-static consumption when idle50), and iii) a scalable structure appropriate for matrix integration (often referred to as crossbar51) and even 3D stacking52. Moreover, the switching time can be as short as 85 ps42.

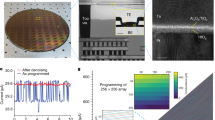

So far, several groups and companies have claimed the realization of hybrid CMOS/memristor implementations of ANNs53,54,55,56,57,58,59,60,61, —from now on, memristive ANNs— with performance that is superior to that of fully-CMOS counterparts. However, most of those studies in fact only measured the figures-of-merit of one/few devices and simulated the accuracy of an ANN via software62,63,64,65,66,67 in such type of studies the connection between the memristors fabricated and the ANN is relatively weak. Few studies went beyond that and built/characterized crossbar arrays of memristive devices48,68,69,70, but that are still very far from real full-hardware implementations of all the mathematical operations required by the ANN. The most advanced studies in this field have reported fully integrated memristor-based compute-in-memory systems48,53,54,55,58,59,61,71,72,73, but a systematic description of essential details on the device structure or circuit architecture are generally lacking in these reports.

In this article we provide a comprehensive step-by-step description of the hardware implementation of memristive ANNs for image classification —the most studied application often used to benchmark performance, describing all the necessary building blocks and the information processing flow. For clarity, we consider relatively simple networks, being the multilayer perceptron the most complex case. We take into account the challenges arising at both the device and circuit levels and discuss a SPICE-based approach for their study in the design stage, as well as the required circuital topologies for the fabrication of a memristive ANN.

Structure of memristor-based ANNs

Figure 2 shows a flowgraph depicting the generalized structure of an ANN; it has multiple inputs (for single channel images like indexed color, grayscale and bitmap images, there are as many inputs as pixels the image to classify has) and several outputs (as many as types/classes of images the ANN will recognize). As it can be seen, the ANN consists of multiple mathematical operations (green boxes), such as vector matrix multiplication (VMM), activation function, and softargmax function. Among all the critical operations in the ANN, the VMM is the most complex and demanding, and it is carried out multiple times both during the training process and inference. Hence, the development of new hardware for ANN implementation is strongly oriented to realize VMM operations in a more efficient way. Interestingly, the VMM operation —often understood as multiply and accumulate (MAC) routine—can be implemented using a crossbar array of memory elements. Those memory devices could be either charge-based memories as well as resistance-based memories25,74.

Green blocks (3, 5, 7 and 8) indicate the required mathematical operations (such as the VMM or activation functions). Red blocks (1, 2, 4, 6, 9, 11, 12, 13, 14, 15, 16) identify the required circuits for signal adaptation and/or conversion. The data path followed during the inference (or forward pass) is indicated by the red arrows/lines. The data path followed for in-situ training is indicated by the blue arrows/lines. The data path followed under ex-situ training is shown by the yellow arrows/lines. For each box, the upper (colored) part indicates the name of the function to realize by the circuital block, and the bottom part indicates the type of hardware required. The box titled successive neural layers would encompass multiple sub-blocks with a structure similar to the group titled First neural layer. 1S1R stands for 1Selector 1 Resistor while 1R stands for 1 Resistor. UART, SPI and I2C are well known communication standards. RISC stands for Reduced Instruction Set Computer.

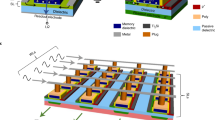

Before explaining memristive hardware for ANN, in this paragraph we describe the state of the art of CMOS hardware for ANNs, to provide the author with a comprehensive picture of the different technologies available for hardware based ANNs. Among charge-based memories, SRAM cells (a bi-stable transistor structure typically made of two CMOS inverters connected back-to-back which retains a charge concentration, see Fig. 3a for an example of the structure of a crossbar array of 6T SRAM) have been widely used for VMM75,76,77. If the elements of the input vector and the weight matrix are limited to signed binary values, the multiply operation is simplified to a combination of XNOR and ADD functions carried out directly through SRAM cells. An example of this is the work by Khwa et al., which reports a compute in memory system based on a crossbar array of 6T SRAM memory cells as binary synaptic connections that uses binary inputs/outputs78. The proposed circuit comprises 4 kb synapses fabricated in a 65 nm CMOS process and reported an energy efficiency of 55.8 TOPS per W. In cases where x is non-binary, one approach is to employ capacitors in addition to the SRAM cells76,77,79, involving a three-step process. However, a major drawback of SRAM memories is their volatile nature. Due to the low field-effect transistor barrier height (0.5 eV), the charge constantly needs to be replenished from an external source and hence SRAM always needs to be connected to a power supply. An alternative memory element for VMM operation is the flash memory cell80,81, in which the charge storage node is coupled to the gate of a FET with charge stored either on a conductive electrode surrounded by insulators (floating gate) or in discrete traps within a defective insulator layer (charge trapping layer). Unlike in SRAM, the barrier height of the storage node is sufficiently high for long-term data retention. Also, flash-based VMM operates in a slightly different manner than SRAM-based VMM. In Flash-based VMM, each memory element contribute a different amount to the current in each column of the crossbar depending on the voltage applied to the input or crossbar row and matrix element are stored as charge on the floating gate81 (i.e., multiplication) and all the currents in a column are instantaneously summed (i.e., accumulation) by Kirchhoff’s currents law. Because the devices can be accessed in parallel along a BL, NOR Flash has generally been preferred over NAND Flash for in-memory computing. This is the case of the work by Fick et al from the company Mythic23, which relies on a 1024×1024 NOR Flash array to develop an analogue matrix processor for human pose detection in real time video processing. However, there is recent work describing the use of 3D NAND, consisting of vertically stacked layers of serially connected FLASH devices, whereby each layer of the array encodes a unique matrix82. This approach could help to overcome the scalability issue of NOR Flash, which is difficult to scale beyond the 28 nm technology node. The proposed 3D-aCortex accelerator83 is a fully CMOS implementation that relies on a commercial 3D-NAND flash crossbar array as synaptic element. Partial outputs from multiple crossbars are temporally aggregated and digitized using digital counters, shared by all the crossbars along a row of the grid, avoiding the communication overhead of performing these reductions across multiple levels of hierarchy. The entire 3D array shares a global memory and a column of peripheral circuits, increasing its storage efficiency. This is however still theoretical and is yet to be fabricated. Nonetheless, the write operation on flash memories requires high voltages (typically >10 V) and entails significant latency (>10 µs) due to the need to overcome the storage node barriers. These problems can be potentially solved using resistance-based memories, or memristors as memory element at the intersections of the crossbar, as they can realize the multiplication operation by Ohm’s Law (I=V·G, where I is current, V is the input voltage and G is the conductance of each memristor), while reducing the energy consumption and area footprint as well as providing CMOS compatible operation voltages. The structure of memristive crossbar arrays for VMM is depicted in Fig. 3b, c: a common integration option is to place a CMOS transistor in series with the memristor to control the current through it (Fig. 3b) in a so called 1 transistor 1 resistor (1T1R) structure, while the highest integration density would be achieved by a crossbar comprising no transistors, i.e., considering cells usually referred to as 1 resistor/memristor (1R or 1M) structures or passive crossbar (Fig. 3c). When using crossbar arrays of memristors to perform VMM operations, additional circuitry might be needed at the input and output to sense and/or convert electrical signals (see red boxes in Fig. 2). Examples of such circuits are digital-to-analogue (DAC), analogue-to-digital (ADC) converters and transimpedance amplifiers (TIA). Note that other studies employed implementations slightly different from this scheme, i.e., combining or avoiding certain blocks to save area and/or reduce power consumption (see Table 1).

a Full-CMOS SRAM (Static Random Access Memory) crossbar array, b Hybrid memristor/CMOS 1T1R crossbar array and c Full-memristive passive crossbar array. All cases assume a crossbar array integration structure which performs the Multiply-and-Accumulate (MAC) by exploiting the Kirchhoff’s law of currents. The use of memristors allows a smaller footprint per synapse as a lower number of smaller devices is employed. Passive crossbar arrays of memristors allow the highest possible integration density, yet they are still an immature technology with plenty of room for optimization. a290 Yamaoka, M. Low-Power SRAM. In: Kawahara, T., Mizuno, H. (eds) Green Computing with Emerging Memory. Springer, New York, NY (2013), reproduced with permission from SNCSC. b is adapted with permission under CC BY 4.0 license from ref. 54. c is adapted with permission under CC BY 4.0 license from ref. 93. F is the feature size of the litography and the energy estimation is on the cell-level. FEOL and BEOL stands for Front End Of Line and Back End Of Line, respectively.

In the following subsections we describe in detail all the circuital blocks required for a truly full-hardware implementation of a memristive ANN. To provide both a clear global picture and detailed explanations, the titles of the sub-sections correspond to the names of the blocks in Fig. 2.

Image capture hardware (block 1) and input vector conformation (block 3)

An image (or pattern) is a collection of pixels with different colours arranged in a matrix form (referred as p×p in this article). In this work, we will consider grayscale images, in which the colour of those pixels can be codified by one single value. However, in coloured images, each pixel is represented by 3 (in RGB encoding) or 4 (in CMYK encoding) values, this arranged in a tensor fashion, i.e., p×p×3 or p×p×4. Both the training and testing of an ANN for image classification are conducted by presenting large datasets of images to its inputs. In a real ANN each image could come directly from an embedded camera (block 1), or it could be provided as a file by the user (block 2). Depending on the format of the image (e.g., black/white, 8-bit *.bmp, 24-bit *.bmp, *.jpg, *.png, among many others) the range of possible colours (encoded as numerical values) for each pixel will be different. Each of the above mentioned approaches to feed images to the neural network implies different hardware overhead. For the case of on-the-fly image classification, a CMOS imager is necessary to capture the input images82,83. For instance, ref. 84 uses a 480×330 pixel image sensor, with each pixel consisting of a photo diode and four transistors that generates an analogue signal whose amplitude is proportional to the light intensity. Then a 5×6 pixel binary image is generated by mapping 96×55 neighbourhood pixels into one pixel in the binary image. A similar approach is considered in ref. 85 where a 640×480 pixels image is captured by an image sensor and then resized to a 16×16 image. The resizing procedure and the need of such a procedure will be covered later in this Sub-section. Both cases consider an FPGA in order interface the image acquisition system (i.e. CMOS image sensor and the resizing algorithm) with the memristor crossbar and its peripheral circuitry. On the other hand, some studies exclusively focused on the memristor crossbar use an on-chip communication interface to acquire the image from a computer (e.g. ref. 54 uses a serial communication port) already shaped in the required input format.

Regarding the input images, there are multiple datasets of images online available for ANN training and testing. Some of the most commonly used ones are: 1) MNIST (Modified National Institute of Standards and Technology), which is basically a dataset containing 70,000 greyscale images showing handwritten numbers from 0 to 9 (i.e., around 7,000 for each number); 60,000 of them used for training and 10,000 for testing86; 2) CIFAR (Canadian Institute for Advanced Research), which contains 60, 000 color images divided into 10 classes for CIFAR-10 and 100 classes for CIFAR-10087; 3) ImageNet, one of the largest image datasets, which consists of over 1.2 million labelled from 1000 classes for the ImageNet competition88. MNIST is a good starting point, since this simple dataset can be classified with even small neural networks. For benchmarking a device or a chip, it is essential to evaluate the accuracy of standard deep neural network models like VGG89 and ResNet90 on CIFAR and ImageNet dataset by utilizing architecture-level simulation and realistic hardware statistics91,92. For clarity, here we illustrate with MNIST dataset. The number of types/classes of images (referred to as m in this article) in the MNIST dataset is 10. The images are compressed in a *.idx3-ubyte file that can be opened with MATLAB; each of them comes in grayscale and with a resolution of 28×28 pixels. In Python, the MNIST images can be found embedded in a library named Keras. The training images are used to let the ANN understand the characteristic features of each pattern (i.e., the numbers), and the testing images are presented to the ANN (after training) to be classified. A few examples of these images can be seen in Fig. 4a, where the X and Y axis stand for the pixel index. Pixel’s brightness is codified in 256 grey levels between 0 (fully OFF, black) and 255 (fully ON, white). In the MNIST dataset, each of the 60,000 p×p training images is represented as a p2×1 column vector, and all these vectors are horizontally concatenated to render a p2×60,000 matrix. Similarly, the test dataset consists of a p2×10,000 matrix. In both cases, each of the p2 pixels must be fed to the crossbar array for further processing.

a Samples of the MNIST dataset of handwritten numeric digits considered in this article. In all cases images are represented in 28 × 28 px. Pixel brightness (or intensity) is codified in 256 levels ranging from 0 (fully OFF, black) to 1 (fully ON, white). b Readability loss as the resolution decreases from 28 × 28 pixels (case I) to 8 × 8 (case IV). c Schematic representation of the unrolling of the image pixels. Note that each of the n image columns of pixels are vertically concatenated to reach a n2 × 1 column vector. It is then scaled by VREAD to produce a vector of analogue voltages that is fed to the ANN.

As previously mentioned, the simplest ANN architectures (multi-layer perceptrons) should have as many inputs as pixels there are in the images to be classified. In software based ANNs, this is not a challenge. However, the available inputs in hardware ANNs are limited by the maximal size of the memristor crossbar. In the literature, such a challenge has been tackled considering different approaches: For instance, given the MNIST dataset in which images have a resolution of 28 × 28 pixels one option is to implement the synaptic layer using multiple crossbars to fit the 784 inputs (e.g., 13 64 × 64 or 4 256 × 256 crossbars would be needed93). However, for research efforts focused on the device level, this is usually out of reach as requires a non-straightforward CMOS – memristor integration. Another option is to consider more complex neural networks, such as the convolutional neural networks (CNN)55. LeNet-5 (a kind of CNN) first layer is 25 × 6, which can be implemented with a 64×64 crossbar. In fact, image classification tasks in modern deep learning usually rely on a convolutional layer. As for the previous case, this is not easy to implement for research projects centred on the device level as it also requires complex hybrid CMOS – memristor integration. Nonetheless, in some cases, the first convolutional layers are implemented on software and off-chip to reduce the image dimensionality and then the resulting feature vector is feed to the memristive part of the ANN. Note that in this case, device non-idealities are not equally represented through-out the network, and their influence is only assessed for the fully-connected part55. Finally, other option is to rescale each of the images of the original MNIST dataset (in this work, represented by block 3). For example, if our crossbar has 64 inputs, then the image would have to be rescaled from 28 × 28 to 8 × 8 (i.e., 64 pixels); the size of the rescaled image will be referred as n×n. The rescaling can be easily done via software, using for example MATLAB and its Deep Learning Toolbox as language/platform to carry out this type of computational operations, or Python altogether with the TensorFlow, Keras or Pytorch libraries. However, and as shown in Fig. 4b, the aggressively rescaled images becomes barely readable and therefore the entire dataset is changed and so it is the benchmark, i.e. inference results obtained for the 8×8 MNIST rescaled images should only be compared with 8×8 MNIST results and not with the original MNIST benchmark results. This is similar to using a custom-made dataset. With this in mind, and provided the frequent use of this methodology in the literature, we will consider its usage yet stressing the aforementioned considerations, and we encourage authors not to rescale the image dataset if aiming to compare their results against the original datasets.

As an example, Supplementary Algorithm 1 shows the MATLAB code used for image dataset rescaling from 28 × 28 to 8 × 8 pixels. Before downscaling the images, each of them needs to be reshaped from a p2×1 column vector to a p×p matrix, using the MATLAB function reshape(). Then, the image is resized to the desired n×n size in pixels by the MATLAB function imresize()94. This function receives as argument the desired down-sampling method, which in this example was selected to be the bi-cubic interpolation (as in other articles in the field of memristive ANNs54). The results of the rescaling for a single image are shown in Fig. 4b. Note that using this method, values outside the [0, 1] range are expected. Thereby, the downscaled image is processed and any output value exceeding such range is truncated to 0 or 1. The re-scaled images are then reshaped back to the n2×1 column vector representation format and stored in a new matrix. Now this image can be used as input in the crossbar array of memristors.

Input driving circuits (Block 4)

The colour of each pixel in the image (represented as n2 × 1 column) is codified as a voltage that is applied to a row in the crossbar (i.e., word-line), as depicted in Fig. 4c, resulting in a vector V of analogue voltages Vi. If the image is black-white (i.e., 2 possible values), the values of the voltage Vi of each pixel will be 0 and VREAD (VREAD being a reference voltage defined by the application); however, the colour of each pixel can also range within a greyscale, which leads to a range of analogue voltages. For instance, the colour of each pixel in the 8-bits p×p images of the MNIST dataset (and hence, the colour of each pixel in the resized n×n image to be input to the crossbar) varies within a greyscale of 28 = 256 possible values (codified in binary representation from 00000000 to 11111111), meaning that the voltages to be applied to each input of the crossbar may take values such as 0V, VREAD/256, 2·VREAD/256, etcetera until VREAD. Hence, an 8-bit digital-to-analogue converter (DACs) is necessary for each input to convert the 8-bits-code into a single voltage. When the ANN is employed to recognize other types of images codified with a different format (e.g., 24-bit), DACs of different resolution are needed. The format in which the images are presented depends on the ultimate application of the network, i.e., ANNs for plate number identification may work well with black/white (i.e., 1-bit) images, and ANNs for object identification may need to consider 24 bits (16.7 million) colours. Examples of DACs often employed in memristive ANNs are displayed in Fig. 5: N-bit weighted Binary (Fig. 5a), Current-steering DAC Fig. 5b, Memristive-DAC (Fig. 5c), N-bit R-2R DAC (Fig. 5d) and Pulse Width Modulation (PWM)-based DAC (Fig. 5e).

Deciding the resolution of the DACs at the input of each row of the crossbar is a critical factor affecting power consumption, area, and output impedance of the ANN —lowering impedance is important to realize large crossbars. Conventional high-resolution DACs with a low output impedance comprise a DAC core with an operational amplifier (in a buffer configuration) as output stage in order to lower the output resistance. As such, the power dissipation of the DAC can be divided into the switching/leakage power of the digital DAC core and the static/dynamic power of the operational amplifier. On one hand, the power dissipation of the digital DAC core can be estimated as PD = fDCDV2+Pleakage, where fD is the output frequency, CD is the parasitic capacitance, V is the supply voltage, and Pleakage is the leakage power that depends on the technology node, and for a 65 nm technology with a 1 V power supply is of several pico-Watts in an inverter. On the other hand, the power dissipation of the analogue block can be estimated by assuming a class-AB follower stage, with an efficiency of 50%. In this scenario the static power of this block equals its dynamic power and the addition of them can be computed as PA=nV/R2, where n is the number of memristors to drive and R is their minimum resistance. Below frequencies of roughly 100 MHz, PA is dominant, whereas above this threshold, the dissipated power during the switching makes PD bigger than PA.

Regarding the silicon area required for the DACs, this is mainly defined by the DAC resolution, which in turn is limited by device noise element matching. For DAC relying in resistors, the major noise source is from the CMOS operational amplifier in the output stage95, and it can be minimized using larger transistors (both in width and length) for the differential input pair. Similarly, to maximize the matching between the reference resistors, wider devices are encouraged, ultimately contributing to the increase in the silicon area required per DAC.

To minimize silicon area and power consumption, the lower the DAC resolution the better. As a result, apart from amplitude-based encoding for crossbar inputs, time-encoding schemes are also considered96. For instance, in pulse-width modulation (PWM) schemes, inputs are codified in different pulse widths (0 s, TREAD/256 s, 2·TREAD/256 s, etc. until TREAD). This allows overcoming device non-linearity but suffers from low throughput57. Alternatively, in the so-called bit-serial encoding97 approaches, high-resolution crossbar inputs are presented as a stream of voltage pulses with constant amplitude and width48,56. For example, to represent 16-bit crossbar inputs, m-bit voltage signals are streamed to the crossbar row over 16/m time cycles98. After VMM calculation, the partial products (the outputs of each time step) are accumulated together to form the final output value. Also, many papers55,60,99,100, have explored the case of ANNs with binarized inputs, as they employ the simplest DACs (1-bit). In the case of the 1-bit input stream, DACs can also be replaced by inverters followed by an output amplifier to allow the inverter to drive all the devices connected to it98. In addition, the computation with time-encoded inputs is less affected by the noise variations, which mostly affect the amplitude of the input signals rather than the pulse width. However, the disadvantage of time-encoding schemes is the reduction of computation speed and hardware overhead required for partial sums computation96.

An alternative to keep a high throughput and still employ a low-resolution DAC is using approximate computing101. When using low-resolution DACs (1-, 2- or 3-bit) there is a higher chance of multiple inputs requiring the same driving voltage, which allows sharing DACs among several lines, and thereby saving both power and area. However, one has to keep in mind that the output resistance of the DAC limits the number wordlines that can be biased. Also, this approach requires the use of analogue multiplexers (block 11) in between the input driving circuits and the memristor crossbar which leads to additional control circuit overhead. The problem of using low-resolution DACs at the input of the crossbar is a loss in the accuracy of the VMM operation. Hence, there is an inherent trade-off between all these variables. The accuracy loss can also be reduced by exploiting software-based training techniques for quantized neural networks.

VMM core (Block 5)

The voltages generated by each DAC (which represent the colour of each pixel of the rescaled n2 × 1 image) are applied at the inputs (rows) of the n2 × m crossbar array of memristors. The conductance of each memristor within the crossbar describes the synaptic connection between each input neuron (ith) and each output neuron (jth). This scheme is used in various papers54,102. However, some others consider also a bias term added to the weighted sum fed to the neuron57. This can be done digitally and off-chip, or in the analogue domain. If done analogue, an additionally row in the crossbar is needed, thereby requiring a crossbar of (n2+1) × m. This operation produces a row vector of size 1 × m (see Eq. 1). In a conventional Von Neumann computing system, VMM is performed by doing each sub-operation (multiplications and sums) sequentially, which is time consuming; moreover the calculation time increases quadratically with the dimensionality of the input arrays103, or in the case of using the so-called Big-O notation, the VMM algorithm has a time complexity of ~O(n2). Memristor crossbars (such as the one shown in Fig. 6a) allow performing VMM much more easily and faster because all the sub-operations are carried out in parallel. In the crossbar, the brightness (colour) of each pixel in each image is codified in terms of analogue voltages and applied to the input rows (also called wordlines and connected to the memristor’s top electrodes), while the output columns (also called bitlines and connected to the memristor’s bottom electrodes) are grounded through a transimpedance amplifier (see Fig. 6b for an idealized representation). Then, the VMM is performed in an analogue fashion, as the current flowing through each memristor will be given by the voltage applied to the line and the conductance of each memristor (Iij = gij·Vi). Note that in a pair {i,j} i stands for the crossbar row, and j for the crossbar column. Then, the currents flowing through the memristors connected to a given bitline are summed and sensed to form the output vector. Let us consider the following notation to better explain this idea:

a Sketch of the crossbar array structure. Red and blue arrows exemplify the electron flow through the memristors connecting the top (Word lines -WL-) and bottom lines (Bit lines -BL-). Different memristor resistance states are schematically represented (High Resistance State -HRS- to Low Resistance State -LRS-). The dashed blue line depicts the so-called sneak path problem. The parasitic wire resistance is indicated for WLi and BLi. Reproduced with permission under CCBY 4.0 license from ref. 253. b Equivalent circuit representation of the CPA sketched in a, showing the input voltages, output currents and TIA blocks that translates the output CPA current to a vector of analogue voltages. In this case the circuit was simplified by ignoring the line resistances. Finally, two different realizations of the memristive-based ANN synaptic layer are shown in c – unbalanced – and d – balanced –.

For the classification of the MNIST images with a n×n pixel resolution with an ANN, multiple VMM operations are required, in which the matrix of conductances gij in Eq. 1 is defined based on the matrix \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}\) of synaptic weights, which has a size of n2 × 10, and all the numbers that form it are real numbers (\({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}\in {{\mathbb{R}}}^{{n}^{2}\times 10}\)) with both positive and negative values being possible —the way in which \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}\) is calculated is described in detail in section ANN training and synaptic weight update (Blocks 2, 11-15): Learning algorithm. As the negative values cannot be represented directly with memristors, some strategies have been adopted. Reference 104 added an extra column in the crossbar (named reference column, see blue arrow in Fig. 6c) with all its memristors set to 0.5·GLRS, so totalling n2 × (m + 1) memristors in the crossbar. Then, the total current at the {j} output of the crossbar is obtained by subtracting the current generated by the reference column {ref} to the current generated from a {j} column (see Fig. 6c). This concept is mathematically represented in Eq. 2.

where gref stands for the 0.5·GLRS conductances of the reference column and \({{g}^{{\prime} }}_{{{{{{\rm{i}}}}}},{{{{{\rm{j}}}}}}}\) is calculated in such a way that devices with a conductance above 0.5·GLRS produce positive synaptic weights, and those with a conductance below 0.5·GLRS produce negative synaptic weights104. This strategy has two disadvantages: on one hand, one can only employ half of the states exhibited by the memristor for the positive weights and the other half for the negative weights, thus reducing the range between the maximum and minimum weight. On the other hand, routing the reference column to the rest of the crossbar columns to make the corresponding subtraction operation, is not trivial. Another strategy is to use two memristors per synaptic weight, resulting in two crossbars of n2 × 10 (20n2 synapses)105,106. Within this approach, Eq. 2 could be re-written as

Where the positive and negative conductances are codified by a pair of two adjacent memristors (\({{g}^{+}}_{{{{{{\rm{i}}}}}},{{{{{\rm{j}}}}}}}\) and \({{g}^{-}}_{{{{{{\rm{i}}}}}},{{{{{\rm{j}}}}}}}\)), each of them set to a positive value of conductance. This representation method, shown in Fig. 6d, has been chosen in this study because it doubles the range of conductance levels of the crossbar, making it less susceptible to noise and variability104.

To calculate the required conductance value for each of the memristors in the pair, we begin by splitting WM into two matrices \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{+}}}}}}}\) and \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{-}}}}}}}\) as:

each of them containing only positive weights, so that \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}{{{{{\boldsymbol{=}}}}}}{{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{+}}}}}}}{{{{{\boldsymbol{-}}}}}}{{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{-}}}}}}}\). The matrix in the left side (\({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}\), containing both positive and negative values) can be represented as a difference between the two matrices in the right side (\({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{+}}}}}}}\) and \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{-}}}}}}}\), both containing only positive numbers). Thereby, by applying Eq. 4, we obtain \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{+}}}}}}}\) by replacing all the negative elements from \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}\) by 0, while \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{-}}}}}}}\) was obtained by first multiplying matrix \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}\) by -1 and then replacing al the negative values by 0.

In the next step, the conductance matrices \({{{{{{\bf{G}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{+}}}}}}}\) and \({{{{{{\bf{G}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{-}}}}}}}\) (Equation 5) to be mapped into the crossbars are calculated by employing a linear transformation,107,108:

here \({G}_{\min }\) and \({G}_{\max }\) are the minimal and maximal conductance values of the memristors in the crossbar, and \(\max \left\{\right.{{{{{\bf{W}}}}}}_{{{{{{\bf{M}}}}}}}\)} and \(\min \left\{\right.{{{{{\bf{W}}}}}}_{{{{{{\bf{M}}}}}}}\)} are the maximum and minimum values in WM. At this point, it is critical to note that this mapping strategy presents the synaptic weights from WM to a continuum of conductance values in the range \(\left[{G}_{\min },{G}_{\max }\right]\). However, it has been widely reported109,110,111, that the more states one memristor has, the more difficult to identify them, due to the inherent variability. Moreover, depending on the material and fabrication methods, some memristor devices can have only a limited number of stable conductance states. To deal with these non idealities, advanced mapping techniques have been proposed in the literature and they are summarized in Supplementary Note 1 and Supplementary Note 2, the latter focused on mitigating the heat-induced drift of synaptic weights. Thereby, when considering a device with a number x of states, each position of the resulting conductance matrices should have only x possible values. In order to exploit the entire dynamic range of the memristors (which would make easier to identify each conductance value), we consider \({G}_{\max }={G}_{{{{{{\rm{LRS}}}}}}}\) and \({G}_{\min }={G}_{{{{{{\rm{HRS}}}}}}}\), being GLRS and GHRS the conductance of the most and least conductive states (respectively). In this way, the synaptic weights in the \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{+}}}}}}}\) and \({{{{{{\bf{W}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{-}}}}}}}\) matrices are converted to conductance values within the range \(\left[{G}_{{{{{{\rm{HRS}}}}}}},{G}_{{{{{{\rm{LRS}}}}}}}\right].\)The following example illustrates the procedure to convert the WM matrix returned by the MATLAB training phase (i.e., a matrix of real values in the range [−5, 5]) into two crossbar arrays of memristors (considering that each memristor can have 6 linearly distributed resistive states at GHRS, 0.2·GLRS, 0.4·GLRS, 0.6·GLRS, 0.8·GLRS and GLRS):

First, the ex-situ training produces a matrix of n2×m synaptic weights:

Second, the synaptic weights are represented as the difference between two matrices:

Third, the weights are rounded to the closest state among the x available states:

Finally, the quantized weights are mapped to a conductance value:

The output value caused by a negative synaptic weight is achieved by subtracting the current flowing through the memristors connected to bitline i in \({{{{{{\bf{G}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{-}}}}}}}\) matrix from that in the corresponding bitline i in \({{{{{{\bf{G}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{+}}}}}}}\) matrix.

Sensing electronics (Block 6)

Once the input voltages are applied to the inputs (rows) of the crossbar, currents at the outputs (columns) are almost instantaneously generated, which need to be sensed. There are three widely used sensing modes for the output voltages112. The simplest approach is the use of a sensing resistor (Fig. 7a). However, grounding the bitlines through a resistor might alter the potential applied to the bitline, which will no longer be 0 volts, adding variability and thus altering the read over the sensing resistor100,112. To sense low currents without this problem, one option is to use trans-impedance amplifiers (TIA, see Fig. 7b). In this case, the crossbar bitlines are grounded through a TIA implemented with an operational amplifier or an operational transconductance amplifier which ensures the bitline potential to remain at 0 V. Although very popular102,113,114,115,116, this approach might be limited for the case of the smallest technology nodes implementations as the gain and bandwidth of the amplifiers are limited by the intrinsic transistor gain95,117. An alternative is to replace the TIA block by a charge-based accumulation circuit. This strategy was used to cope with pulse width modulation encoding that excludes the utilization of one TIA. Note that the same approach could be used along with other encoding techniques such as digitization of inputs and pulse amplitude modulation. In its most basic implementation, it is very similar to the use of a sensing resistor but replacing the resistor by a capacitor (see Fig. 7c). The capacitor then develops a voltage which is proportional to the integrated current flowing through it. As such, this method adds the time-dimension to the process of sensing the outputs: the current must be integrated over a constant and well-defined period of time to generate an output voltage. Note that in many cases, to reduce the current to be integrated (and thus the size of the integration capacitors), current divider circuits57 or differential pair integrators118 are considered (see Fig. 7d).

In all cases, the goal is to translate a current signal into a voltage signal. a The sensing resistor is the simplest case, as it translates current into voltage directly by the Ohm’s law. b The use of a TIA allows to connect the crossbar columns to 0 volts and operate with lower output currents. As well as in the resistor-based approach, the current voltage conversion is linear when operating the TIA within its linear range and the output voltage signal is immediately available as soon as the output of the TIA settles. c For currents below the nano-ampere regime, charge integration is the most suitable option for current-voltage conversion. This can be achieved by using a capacitor. As such, the measurement is not instantaneous as a constant, controllable integration time is required before the measurement. d To minimize the area requirements of the integration capacitor, the use of a current divider allows to further reduce the current and, with it, the size of the required capacitor. The tradeoff in this case is with precision (mainly due to transistor mismatch) and output voltage dynamic range.

Finally, note that the design choice of the sensing circuit will depend on the input signals to the memristor crossbar, as shown in Fig. 8. Assuming that the input signals of both positive and negative cells are of the same polarity, an independent sensing/transducing circuit is required for both the positive and negative bitline. Then a subtractor circuit (implemented for instance with an operational amplifier, as shown in Fig. 8a) generates an output voltage proportional to the current difference. On the contrary, when it is possible to apply input signals of different polarity to the \({{{{{{\bf{G}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{-}}}}}}}\) and \({{{{{{\bf{G}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{+}}}}}}}\) matrix, the sensing electronics can be simplified, as by connecting the i bitlines from the \({{{{{{\bf{G}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{-}}}}}}}\) and \({{{{{{\bf{G}}}}}}}_{{{{{{\bf{M}}}}}}}^{{{{{{\boldsymbol{+}}}}}}}\) directly performs the substraction in terms of currents, and thereby only one sensing amplifier is needed (as shown by the single transimpedance amplifier in Fig. 8b).

a Assuming that voltage inputs are unipolar (that is, only negative or positive), it is required to first transduce the current signals into voltage and then add an operational amplifier in a subtractor configuration. b If bipolar signals can be applied in the inputs, by biasing the negative synaptic weights with a voltage or opposite polarity, summing the resulting currents in a common node (Kirchhoff’s Law for Current) already solves the subtraction operation, and only one transimpedance amplifier is required per column.

Activation function (Block 7)

Ideally, the output current of each bitline (column) pair in a crossbar-based implementation of a VMM is a linear-weighted sum of all the wordlines (rows) connected to such column. Since a combination of linear functions results in a new linear function, complex nonlinear relationships could not be replicated by an ANN regardless of the number of the linear neural layers considered. This problem can be overcome by introducing a non-linearity transformation on the weighted sum output by each column. This is done by the so-called neuron activation functions, and the most common are: Sigmoid (also called Logistic)119,120, Hyperbolic Tangent120 and Rectified Linear Unit (ReLU)120,121. Also, for the particular case of pattern classification tasks, the output values of the VMM performed by the last neural layer have the added requirement of being mapped to the [−1, 1] or [0, 1] range as they indicate the probability of the input to belong to each class. To this end, the gap difference between the value of the most active output (column) and the rest needs to be compressed and the differences among the less active outputs, amplified. It must be noted, that although not necessary in the case of neural networks implemented in the software domain, in the case of neural networks based on memristor-VMM cores, the elements of the input vectors to each neural layer must be within a range of analogue voltages. For this reason, ReLU activation functions, which are by definition unbounded activation functions [0, ∞), needs to be slightly modified with an upper limit to prevent the alteration of the synaptic weights recorded in the neural layer memristors.

All these activation functions could be realized either in software or hardware, and each implementation has its own virtues and drawbacks. In this study, software-based implementations refer to the designs, where the calculation of the activation functions and processing of intermediate outputs between ANN layers is performed in a separate hardware unit outside the crossbar. This hardware unit can be an CPU, FPGA, microcontroller, microprocessor or printed circuit board (PCB) depending on how crossbar architecture is integrated with the other processing units. Hardware-based implementations refer to the integration of the memristive crossbars and activation function units into the same chip. In software based implementations, the output of each crossbar column needs to be converted to the digital domain using an ADC (which remarkably increases the area and power consumption) and then sent for the further processing. This is the most commonly used approach on research prototypes developed as technology demonstrators due to its versatility, as the activation function can be implemented and changed by simply modifying the software code55,100,113,114,115,122,123. In the context of future product development, reconfigurable ASICs are proposed for post analogue-digital signal processing. Conversely, hardware ASIC-based implementations of activation functions integrated into the same chip as a crossbar cannot be changed once the circuit is fabricated. Such activation functions can be implemented in both digital and analogue domains. Digital domain processing leads to the ADC overhead (same as for software-based implementations) but is less affected by the noise and transistor mismatches. Digital domain implementation of a ReLU activation integrated into the sensing circuit is shown in124,125. In general, analogue CMOS implementations of the activation functions require a smaller number of transistors and help to avoid analogue to digital conversion at this stage. Analogue CMOS implementations of the activation functions are shown in Fig. 9 (see Fig. 9a for the Sigmoid activation function and Fig. 9b for the ReLU activation function). Even though such designs cannot be reconfigured when fabricated, this weakness is compensated by a much reduced power consumption (estimated in ref. 102 for a 65 nm CMOS node to be roughly 30 times lower). References 119,126, presented analogue CMOS implementations of Sigmoid, ReLU and Hyperbolic Tangent activation functions within ANNs and Generative Adversarial Networks (GAN), respectively.

Since ANNs need to have a very large number of activations to achieve high accuracy, the reduced power consumption of such custom-made analogue CMOS activation functions could still be excessive. Using a compact and energy-efficient nano device implementing the non-linear activation functions could further advance the performance and integration density of memristive ANNs. Reference 121 proposed the use of a vanadium dioxide (VO2) Mott insulator device (which is heated up by joule power dissipation) to achieve the desired ReLU function (see Fig. 9c), and reference 127 proposed the use of a periodically-poled thin-film lithium niobate nanophotonic waveguide to implement this function in optical ANNs. Even though such designs are promising as a small energy-efficient solution for implementing the activation functions, their efficient integration with the other peripheral circuits and CMOS components is still an open challenge.

SoftArgMax function (Block 8)

Instead of the activation functions previously described, the final synaptic layer in an ANN as those here covered, uses a different block. In this case it is necessary to have a block that detects which is the most active output of the crossbar (i.e., which column drives the highest current). This block (often named SoftArgMax function or SoftArgMax activation function) with as many inputs as bitlines has the memristor crossbar, basically implements Eq. 10:

which indicates that the ith element of the vector Z is the maximum among all the elements of Z, and thereby identifies the input pattern as a member of class i. The input vector Z represents the crossbar outputs. This behaviour is achieved by combining two functions, the argmax() and the softmax() functions, shown in Eqs. 11 and 12, respectively.

It could be argued that such a behaviour (i.e. identifying the largest output of the network) could be achieved directly by the argmax() function without the need of the softmax() operation. This is because as indicated in Eq. 11, argmax() is an operation that finds the argument that gives the maximum value from a target function. So, for inference-only accelerators it is acceptable to fed the output of the activation functions directly to the argmax() function, omitting the softmax() function. Some studies proposed to implement the argmax() function via hardware128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148, which could be beneficial to reduce the total transistor count and power consumption while at the same time increasing the throughput. In this regard, there are two possibilities: to use of a CMOS digital block128,129,130,131, or to use a CMOS analogue block132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148, which can either operate with a current or voltage input (see Fig. 10a, b, respectively). Note that this blocks in fact implement the so-called winner-takes-all function, widely used in SNNs and particularly in unsupervised competitive learning (this could be regarded as similar to the argmax() function but with the addition of lateral inhibition). The use of a digital block is simpler and more robust (it can be easily written in Verilog or VHDL), but it presents the big drawback of requiring an ADC at each output (i.e., column) of the crossbar.

a WTA CMOS block with voltage input291. The gate terminal of transistor Q5, and the source terminals of transistors Q6 and Q7 are common to all WTA cells. b WTA CMOS block with current input148. Node Vcom is common to all WTA cells. In both cases, the output voltage of the WTA cell with the highest input voltage/current is driven to the positive reference voltage (VDD), while the output voltage of the remaining WTA cells is driven to ground. The number of cells in the WTA module is the same to the number of classes of images to identify by the ANN.

Yet, it is recommended (even for inference-only) to consider the softmax() function as well, as it turns the vector formed by the output of the activation functions to a vector of probabilities, where the probabilities of each value are proportional to the relative scale of each value in the vector (the summatory of the probabilities of all elements is equal to 1). Note that the ith output of the softmax() function is determined not only by the value (z) ith input but also by the value of the other jth inputs. Furthermore, for training-capable accelerators, it is usually not possible to omit the softmax() function, as it is required for calculating the loss function, which determines the way in which the synaptic connections are adjusted. This process is done by backpropagating the gradient of each mathematical function of the network, to the previous layer (the details of these procedure will be further described in section ANN training and synaptic weight update (Blocks 2, 11-15): Learning algorithm). Since the gradient of the argmax() function is always zero, its usage without the softmax() function would result in no update of the synaptic weights. Most studies implement this block via software54,57,85, which uses a digitalized representation of the voltage signal provided by the preceding activation function (discussed in section Activation function (Block 7)). This approach requires the use of an ADC at the output of the activation function for each column (analogue hardware). This digitized vector is read by a Python57 or MATLAB54 routine running on a PC or FPGA85 and the highest valued element is identified. Although these examples are essentially proofs-of-concept focusing on the hardware implementation of ANNs, it could be argued that future systems-on-chip including both in-memory-computing tiles and conventional Von Neumann cores could rely on the latter ones for implementing functions such as softargmax() function on the digitized vector provided by the in-memory-computing tiles57. Note that in some cases, the activation function is also implemented digitally and thereby the ADC block is placed right after the sensing electronics discussed in section Sensing electronics (Block 6).

Analogue to digital converters (Block 9)

In the cases in which ADCs are needed (either between the output of the crossbar and the activation function block or between the activation function block and the softargmax() block), the most important metrics to consider are: (i) their resolution (as it affects the accuracy), (ii) sampling frequency (fs) (affects throughput or in other words, the number of operations per second), and iii) surface area on the die (limits the available silicon area to be destined to synaptic weights, that is the 1T1R structures, which thus affects cost).

The resolution of ADC required to represent all possible outputs of the VMM operation depends on input precision \(K\) (DAC resolution), number of crossbar rows \(N\), and precision of the weights cells \(M\) (conductance resolution), and can be calculated as \({{{{{\rm{ceil}}}}}}({\log }_{2}(({2}^{K}-1)* ({2}^{M}-1)*N))\)96. For example, 1-bit memristors (binary weights) and binary inputs (1-bit) in a 256 × m crossbar requires at least a resolution of 8-bit to discriminate all output levels. 5-bit memristors with the same vector dimension and binary inputs require a 13-bit ADC, which represents a serious design challenge to preserve energy consumption/area efficiency and thereby requires a careful cost and overhead analysis149 since all these metrics are strongly linked. For instance, based on refs. 150,151,152, increasing 1-bit resolution or increasing the throughput by doubling the sampling frequency results in a 4× increase in power consumption (particularly for highly scaled CMOS technology nodes, where the power consumption is usually bounded by the thermal noise153). Similarly, cutting the power consumption by half or adding 1-bit resolution comes at the expense of 25% more silicon area. Moreover, ADC can consume up to 70–90% of the on-chip area of the crossbar-based computation unit, including memristive crossbar and peripheral circuits, and up to 80-88% of energy55,154,155. In summary, ADCs are commonly the largest and most power-hungry circuit block in a memristive neural network55,156. For these reasons, many authors focusing on the optimization of the 1T1R memory cell structures have opted for using off-the-shelf integrated circuits, assembled in printed circuit boards54,85, as in this way they can avoid the limitations posed by the trade-offs between resolution, area and power of the ADCs. Nonetheless, for full on-chip integration of memristive neural network, the impact of ADC resolution on VMM accuracy needs to be carefully evaluated to identify the lowest ADC resolution (and thereby required Silicon area) while preserving the neural network accuracy91,92.

Overall, the choice of ADC architecture depends on the needs of the application and proper system-level design can be very helpful to identify the required ADC performance. As a rule of thumb, ADCs with higher resolutions are slower and less power efficient, whereas the ADCs with a higher sampling frequency have worse energy efficiency and lower resolution. Thereby, if the focus is set on achieving high-resolution (>10-bit) successive approximation register (SAR-ADC, Fig. 11a) or delta-sigma (ΔΣ-ADC, Fig. 11b) can be utilized as they have small form factors and the best signal-to-noise and distortion ratio (SNDR). Furthermore, SAR-ADC and controlled oscillator-based ADCs (Current-Controlled-Oscillators -CCO, see Fig. 11c- and Voltage-Controlled-Oscillators -VCO, Fig. 11d-) are more suitable to smaller technology node implementations95,117. In this regard, and unlike the more commonly used VCO-based ADCs, CCO-based ADCs such as the one proposed by Khaddam-Aljameh et al.71 (see Fig. 11c) eliminate the need for additional conversion cycles and are amenable to trading off precision with latency. As such, this approach facilitates having one converter per column of the crossbar, thus minimizing the overall latency as no resource sharing will be required. On the contrary, if the focus is set on the sampling frequency (with reading times in the order of 10 ns), low-resolution/high-speed-flash ADC (Fig. 11e) can be applied via time multiplexing to minimize die area as for instance ADCs with at least 8-bit resolution are necessary to achieve high (>90%) classification accuracy in a ResNET50-1.5 ANN used to classify the ImageNET157 database or in a multi-layer perceptron to classify the breast cancer screening database57. This approach requires the use of analogue multiplexers (block 11).

In general, the reduction of ADC overhead is one of the main challenges in memristor-based ANN hardware design. One way to address this problem is approximate computation or using lower precision ADCs than required96,158. The other method is sharing a single ADC across several columns or using a single ADC per crossbar tile159,160. However, ADC sharing requires additional multiplexers and sample-and-hold circuits and also increases latency96 (i.e. more time is required to process each input pattern, thus reducing the throughput of the ANN). In binarized networks, ADC can be replaced by a 1-bit comparator96 or ADC-like multi-level sense amplifier158.

Having introduced the interplay between crossbar size, input vector resolution, memristor’s available levels and ADC resolution, and how the ADC resolution impacts the Silicon area, it is worth discussing how these set a constraint for how the memristive ANN will handle input vectors with bipolar (positive and negative) elements. The obvious approach i) is to design the DAC circuits with the capability of providing both positive and negative voltages161. This means doubling the number of DAC output levels, and thereby increasing the DAC resolution in 1 bit (with the associated increase in the Silicon area cost as explained in Section Input driving circuits (Block 4)). Nourazar et al suggest in162 the use of an analogue inverter with low output impedance which is alternatively connected to the DAC output or bypassed based on the sign bit. Nonetheless, increasing the input DACs resolution by 1 bit, also means increasing the output ADCs by 1 bit, as the number of levels to be distinguished doubles. Therefore, not only the system becomes more sensitive and error-prone, but also its power consumption increases exponentially as the resolution of DACs and ADCs increase163,164. An alternative to avoid the Silicon area and power consumption is to apply the positive and negative inputs in two separate read phases with unipolar voltages and subtracting the resulting ADC outputs via digital post-processing. This is similar to what the platform ISAAC160 does, which provides 16-bit signed data to the crossbar in 16 cycles (one bit per cycle) in 2’s complement format. Despite being an appealing solution from the cost side, this approach comes with an inevitable reduction of throughput as at least two separate read phase must be employed to complete a single VMM product.

ANN training and synaptic weight update (Blocks 2, 11-15)

Apart from driving the input and output signals, to perform a fruitful VMM operation, it is fundamental to set the conductance of the memristors in the crossbars to the required values. In the context of ANNs, the process of determining such values is called training or learning, and it can be classified based on i) the nature of the training algorithm, and on ii) how the selected algorithm is implemented. First, regarding the nature of the training algorithm, the typical method of choice for classification problems (as the example discussed here) is supervised learning. Supervised learning is a machine learning approach that is defined by the use of labelled datasets, i.e., the training and test data are paired with the correct label. For the MNIST dataset, this means that an image displaying the number ‘9’ is paired with a tag with the value ‘9’. By using labelled inputs and outputs, the model can measure its accuracy and learn over time. Other learning approaches include unsupervised learning165, semi-supervised learning, adversarial learning and reinforcement learning, but their hardware implementation is much more complex. Note that most of the literature claiming unsupervised learning with memristive devices used software166, and we are only aware of a few works53,116,167, that demonstrated hardware-based unsupervised learning. Second, and concerning how the learning algorithm is implemented, this could be done ex situ, that is, using an idealized model of the network written in software (blocks 2, 11-14) and writing the synaptic weights to the conductances once the training is finished or in situ, that is, using the memristive ANN to compute the VMM operations (blocks 12-15) and progressively updating the concuctance values during the training process. In the following sub-sections the basics of the supervised learning, the difference between ex-situ and in-situ training and the procedure to tune the memristor conductance will be further discussed.

Learning algorithm

During the supervised learning, we compute the output of the ANN when presenting an input vector from the training dataset. Such output is then compared against the label associated to the input vector to determine the network’s error. For the case of ANN with n2 inputs, m outputs and no hidden layers, such error is a function of the n2m synaptic weights of the network (\({{\mathbb{R}}}^{{n}^{2}m}{\mathbb{\to }}{\mathbb{R}}\)), often called loss function. In order to reduce the error, the synaptic weights are updated periodically after a number z of input vectors (images) are presented to the network. Then, the learning procedure can be understood as a multivariate optimization problem, where the synaptic weights must be adjusted to values that minimize the loss function. To achieve this goal two families of algorithms could be employed: gradient-free and gradient-based algorithms (as shown in Fig. 12a). Gradient-free methods such as the Particle Swarm optimization168, Genetic Algorithms169 and Simulated Annealing170 algorithms are more demanding from a computational point of view, and hence, they are rarely employed for ANN training, by which they lie beyond the scope of this article.

a Simplified organization of the most common terms reported in the literature, differentiating between gradient based and gradient free training tools. For the gradient-based tools, we propose an organization of the algorithms for (i) gradient computation, (ii) optimization and (iii) learning rate. b Illustration of the gradient descent method, for a trivial 2 × 1 neural network trained with supervised learning.

To understand the basics of the gradient-based algorithms, let us consider an example in which the loss function is a convex bivariate-function, which describes the error of the output (against the labels) for a small network with only two inputs and one output (and thereby 2 synaptic weights, as presented in Fig. 12b), that is \({{\mathbb{R}}}^{2}{\mathbb{\to }}{\mathbb{R}}\). The gradient for such a function indicates, for a random point x1 = (w1,w2), the direction in which the loss increases. Using the information provided by the gradient, we can take a step by advancing contrary to the gradient to a new point x2 = (w’1,w’2) and expect a lower loss. We can then repeat the same action and make a further step in the direction opposite to the gradient for the point x2 and reach a new point x3 = (w”1,w”2). Such a process will continue iteratively until ideally finding that the gradient is 0, or at least lower than a termination criterion. Within the field of supervised training, each of these iterations is called Epoch. At this point (assuming that we managed to avoid the local minima) we would have found the values for w1 and w2 that minimizes the loss function. A frequently used loss function for training ANNs is the cross-entropy loss, which is calculated as follows:

were pi is the probability of each class for a certain input pattern (calculated with the softmax function), and yi is 1 only for the class with the highest probability and 0 otherwise. However, when generalizing these concepts to \({{\mathbb{R}}}^{{n}^{2}m}{\mathbb{\to }}{\mathbb{R}}\), a plethora of challenges and varieties appear, depending on: i) how the required gradient of the loss function is computed, ii) how the loss function is evaluated, iii) how the direction in which to advance is determined, and iv) what is the size of the step in each iteration (among other factors).

In most ANNs, the gradient of the loss function is normally computed by the backpropagation algorithm171. Then the evaluation of the loss function could be done deterministically or stochastically. For a deterministic evaluation, all the samples in the train dataset are presented to the network and the loss is computed as the average loss over all the samples. For the stochastic evaluation, the loss is estimated by presenting one single input vector, which introduces a higher degree of variability but speeds up the training process. Alternatively, the use of batches has been also proposed to help reducing the variability, by computing the loss over a batch of input vectors. In other words, under deterministic evaluation of the loss function and considering the MNIST dataset, every Epoch supposes the presentation of 60,000 images. Instead, during stochastic evaluation, every Epoch may consist in presenting 1 image. Note that for the sake of comprehensiveness, and to provide the most complete overview as possible to potential readers who are not already familiar with the field of deep learning, we list both deterministic and stochastic optimization methods. However, deterministic methods are rarely (if ever) used in modern deep learning frameworks, with stochastic optimizers being the de facto standard for the entire community. The reason for this is the high computational burden involved in sending the entire dataset to compute the gradient.

For each case (deterministic/stochastic) there are different algorithms to determine the optimum direction in which search for the minima based on the information provided by the gradient. These are the so-called optimization algorithms. For the case of deterministic evaluation, common optimization algorithms are the following: (i) Gradient Descent165 (the simplest one and closest to the previous paragraph’s explanation) and its variants (Gradient Descent with Momentum165), (ii) Newton (analytically complex, as besides the gradient it also requires the Hessian matrix associated of the loss function) and Quasi-Newton methods (which operates over an approximation of the Hessian matrix to simplify the problem computation, as the Broyden–Fletcher–Goldfarb–Shanno Quasi-Newton172), (iii) Conjugate Gradient methods (an intermediate between the Gradient descent and the Newton methods which avoids the use of the Hessian matrix and instead makes use of the conjugated direction of the gradient, e.g. Scaled Conjugate Gradient173, Conjugate Gradient with Powell-Beale restarts174, Fletcher-Powell Conjugate Gradients175 and Polak-Ribiere Conjugate Gradient165,175). Alternatively, other methods are the Levenberg-Marquardt176 (uses the Jacobian matrix instead of the Hessian Matrix), Resilient Backpropagation177 and One Step Secant178, but these are more demanding from a computational point of view. For stochastic evaluation, the most common optimization algorithms are: the i) Stochastic Gradient Descent179 (the stochastic equivalent of the Gradient Descent165 method previously mentioned, assuming that one epoch consists of only 1 training input vector) and Mini-batch Gradient Descent180 (which is a generalization of the stochastic gradient descent method for Epoch sizes greater than 1 and smaller than the entire dataset) and ii) the Manhattan Update Rule181 (synaptic weights are updated by increasing or reducing them depending on the gradient direction, but the step is equal for all of them).

The size of the step made in each Epoch to update the synaptic weights is critical because it severely affects the probability of the algorithm to converge, as well as the convergence time, i.e., a large step value will cause the learning not to converge, while small values will result in a sometimes-unacceptable learning time. The simplest approach is to consider a fixed step, although the most advanced learning methods rely in a variable step that is auto-adjusted based on a variety of metrics. In particular, for the case of deterministic evaluation of the loss function the Variable Learning Rate Gradient Descent is often employed165, and for stochastic evaluation of the loss function using a mini-batch of images diverse methods have been employed, including Adaptive Gradient Algorithm (or AdaGrad)182, Root Mean Square Propagation (or RMSProp)183, Adaptive Moment Estimation (or Adam)184 and Adadelta185.

Each training algorithm has different mathematical characteristics, which can severely change the accuracy and computing time. For this reason, before employing any of them to compute the 60,000 images of the MNIST dataset, we conduct a small test (called k-fold cross validation) in which a small number of training images and the accuracy depending on the training algorithm is recorded. As an example, Supplementary Algorithm 2 shows the detailed MATLAB code used for this k-fold cross validation using 100 images. The small number of training images is partitioned into k groups: k-1 groups are effectively used to train the network, while the remaining group is used to validate the training results. Then, this process is repeated r times, in each of them using a new set of k groups formed by the same small group of images (100 in this example) but shuffled in each repetition. The idea behind this approach is to check whether the trained accuracy depends on the set of data used for the training or not. In this example we divided the 100 images in 5 groups (k=5), leading to 80 images for training and 20 for validation (which are different in each repetition), and the accuracy of the ANN was recorded for every repetition (r=10 in this example) for each training algorithm. For brevity, we considered only the algorithms for the deterministic evaluation of the cost function provided in the MATLAB Deep Learning toolbox. This implied in total 110 trainings for the 100 images. The result of these tests are reported in Fig. 13a, b, which shows that the Scaled Conjugate Gradient and the Levenberg-Marquardt learning algorithms176 provide the highest accuracy; however, the first one is much faster, and for this reason it is the one selected for this example. It is also clear from Fig. 13a, that apart from a lower accuracy, the accuracy obtained with Gradient Descent with Momentum is highly dependent on the training and testing datasets. Further details concerning each training algorithm lie beyond the scope of this article, as we focus on the crossbar-based implementation of the ANN.