Abstract

Finding the ground state of a quantum many-body system is a fundamental problem in quantum physics. In this work, we give a classical machine learning (ML) algorithm for predicting ground state properties with an inductive bias encoding geometric locality. The proposed ML model can efficiently predict ground state properties of an n-qubit gapped local Hamiltonian after learning from only \({{{{{{{\mathcal{O}}}}}}}}(\log (n))\) data about other Hamiltonians in the same quantum phase of matter. This improves substantially upon previous results that require \({{{{{{{\mathcal{O}}}}}}}}({n}^{c})\) data for a large constant c. Furthermore, the training and prediction time of the proposed ML model scale as \({{{{{{{\mathcal{O}}}}}}}}(n\log n)\) in the number of qubits n. Numerical experiments on physical systems with up to 45 qubits confirm the favorable scaling in predicting ground state properties using a small training dataset.

Introduction

Finding the ground state of a quantum many-body system is a fundamental problem with far-reaching consequences for physics, materials science, and chemistry. Many powerful methods1,2,3,4,5,6,7 have been proposed, but classical computers still struggle to solve many general classes of the ground state problem. To extend the reach of classical computers, classical machine learning (ML) methods have recently been adapted to study this and related problems both empirically and theoretically8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35. A recent work36 proposes a polynomial-time classical ML algorithm that can efficiently predict ground state properties of gapped geometrically local Hamiltonians, after learning from data obtained by measuring other Hamiltonians in the same quantum phase of matter. Furthermore, ref. 36 shows that under a widely accepted conjecture, no polynomial-time classical algorithm can achieve the same performance guarantee. However, although the ML algorithm given in ref. 36 uses a polynomial amount of training data and computational time, the polynomial scaling \(\mathcal{O}(n^{c})\) has a very large degree c. Here, \(f(x)=\mathcal{O}(g(x))\) denotes that f(x) is asymptotically upper bounded by g(x) up to constant factors with respect to the limit n → ∞. Moreover, when the prediction error ϵ is small, the amount of training data grows exponentially in 1/ϵ, indicating that a very small prediction error cannot be achieved efficiently.

In this work, we present an improved ML algorithm for predicting ground state properties. We consider an m-dimensional vector x ∈ [−1, 1]^m that parameterizes an n-qubit gapped geometrically local Hamiltonian given as

\[H(x)=\sum_{j=1}^{L} h_{j}(\overrightarrow{x}_{j}), \tag{1}\]

where x is the concatenation of constant-dimensional vectors \(\overrightarrow{x}_{1},\ldots,\overrightarrow{x}_{L}\) parameterizing the few-body interactions \(h_{j}(\overrightarrow{x}_{j})\). Let ρ(x) be the ground state of H(x) and O be a sum of geometrically local observables with ∥O∥∞ ≤ 1. We assume that the geometry of the n-qubit system is known, but we do not know how \(h_{j}(\overrightarrow{x}_{j})\) is parameterized or what the observable O is. The goal is to learn a function h*(x) that approximates the ground state property \(\mathrm{Tr}(O\rho (x))\) from a classical dataset,

\[(x_{\ell}, y_{\ell}), \quad \ell = 1,\ldots,N, \tag{2}\]

where \(y_{\ell}\approx \mathrm{Tr}(O\rho (x_{\ell}))\) records the ground state property for xℓ ∈ [−1, 1]^m sampled from an arbitrary unknown distribution \(\mathcal{D}\). Here, \(y_{\ell}\approx \mathrm{Tr}(O\rho (x_{\ell}))\) means that yℓ has additive error at most ϵ. If \(y_{\ell}=\mathrm{Tr}(O\rho (x_{\ell}))\), the rigorous guarantee improves.

The setting considered in this work is very similar to that in ref. 36, but we assume the geometry of the n-qubit system to be known, which is necessary to overcome the sample complexity lower bound of \(N=n^{\Omega (1/\epsilon )}\) given in ref. 36. Here, f(x) = Ω(g(x)) denotes that f(x) is asymptotically lower bounded by g(x) up to constant factors. One may compare the setting to that of finding ground states using adiabatic quantum computation37,38,39,40,41,42,43,44. To find the ground state property \(\mathrm{Tr}(O\rho (x))\) of H(x), this class of quantum algorithms requires the ground state ρ0 of another Hamiltonian H0 stored in quantum memory, explicit knowledge of a gapped path connecting H0 and H(x), and an explicit description of O. In contrast, here we focus on ML algorithms that are entirely classical, have no access to quantum state data, and have no knowledge about the Hamiltonian H(x), the observable O, or the gapped paths between H(x) and other Hamiltonians.

The proposed ML algorithm uses a nonlinear feature map x ↦ ϕ(x) with a geometric inductive bias built into the mapping. At a high level, the high-dimensional vector ϕ(x) contains nonlinear functions for each geometrically local subset of coordinates in the m-dimensional vector x. Here, the geometry over coordinates of the vector x is defined using the geometry of the n-qubit system. The ML algorithm learns a function h*(x) = w* ⋅ ϕ(x) by training an ℓ1-regularized regression (LASSO)45,46,47 in the feature space. An overview of the ML algorithm is shown in Fig. 1. We prove that given ϵ = Θ(1), the improved ML algorithm can use a dataset size of

\[N=\mathcal{O}(\log (n)) \tag{3}\]

to learn a function h*(x) with an average prediction error of at most ϵ,

\[\mathop{\mathbb{E}}_{x\sim \mathcal{D}}\left| h^{*}(x)-\mathrm{Tr}(O\rho (x))\right|^{2}\le \epsilon, \tag{4}\]

with high success probability. Here, the notation f(x) = Θ(g(x)) denotes that \(f(x)=\mathcal{O}(g(x))\) and f(x) = Ω(g(x)) both hold; hence, f(x) is asymptotically equal to g(x) up to constant factors.

Fig. 1: Overview of the proposed ML algorithm. Given a vector x ∈ [−1, 1]^m that parameterizes a quantum many-body Hamiltonian H(x), the algorithm uses a geometric structure to create a high-dimensional vector \(\phi (x)\in \mathbb{R}^{m_{\phi }}\). The ML algorithm then predicts properties or a representation of the ground state ρ(x) of Hamiltonian H(x) using the mϕ-dimensional vector ϕ(x).

The sample complexity \(N=\mathcal{O}(\log (n))\) of the proposed ML algorithm improves substantially over the sample complexity of \(N=\mathcal{O}(n^{c})\) in the previously best-known classical ML algorithm36, where c is a very large constant. The computational time of both the improved ML algorithm and the ML algorithm in ref. 36 is \(\mathcal{O}(nN)\). Hence, the logarithmic sample complexity N immediately implies a nearly linear computational time. In addition to the reduced sample complexity and computational time, the proposed ML algorithm works for any distribution over x, while the best previously known algorithm36 works only for the uniform distribution over [−1, 1]^m. Furthermore, when we consider the scaling with the prediction error ϵ, the best known classical ML algorithm in ref. 36 has a sample complexity of \(N=n^{\mathcal{O}(1/\epsilon )}\), which is exponential in 1/ϵ. In contrast, the improved ML algorithm has a sample complexity of \(N=\log (n)\,2^{\mathrm{polylog}(1/\epsilon )}\), which is quasi-polynomial in 1/ϵ.

We also discuss a generalization of the proposed ML algorithm to predicting ground state representations when trained on classical shadow representations48,49,50,51,52. In this setting, the proposed ML algorithm yields the same reduction in sample and time complexity compared to ref. 36 for predicting ground state representations.

Results

The central component of the improved ML algorithm is the geometric inductive bias built into our feature mapping \(x\in {[-1,1]}^{m}\mapsto \phi (x)\in {{\mathbb{R}}}^{{m}_{\phi }}\). To describe the ML algorithm, we first need to present some definitions relating to this geometric structure.

Definitions of the geometric inductive bias

We consider n qubits arranged at locations, or sites, in a d-dimensional space, e.g., a spin chain (d = 1), a square lattice (d = 2), or a cubic lattice (d = 3). This geometry is characterized by the distance \({d}_{{{{{{{{\rm{qubit}}}}}}}}}(i,{i}^{{\prime} })\) between any two qubits i and \({i}^{{\prime} }\). Using the distance dqubit between qubits, we can define the geometry of local observables. Given any two observables OA, OB on the n-qubit system, we define the distance dobs(OA, OB) between the two observables as the minimum distance between the qubits that OA and OB act on. We also say an observable is geometrically local if it acts nontrivially only on nearby qubits under the distance metric dqubit. We then define S(geo) as the set of all geometrically local Pauli observables, i.e., geometrically local observables that belong to the set {I, X, Y, Z}⊗n. The size of S(geo) is \({{{{{{{\mathcal{O}}}}}}}}(n)\), linear in the total number of qubits.

With these basic definitions in place, we now define a few more geometric objects. The first object is the set of coordinates in the m-dimensional vector x that are close to a geometrically local Pauli observable P. This is formally given by

\[I_{P}\triangleq \left\{c\in \{1,\ldots,m\}:\ d_{\mathrm{obs}}(h_{j(c)},P)\le \delta_{1}\right\}, \tag{5}\]

where hj(c) is the few-body interaction term in the n-qubit Hamiltonian H(x) whose parameters \(\overrightarrow{x}_{j(c)}\) include the variable xc ∈ [−1, 1], and δ1 is an efficiently computable hyperparameter that is determined later. Each variable xc in the m-dimensional vector x corresponds to exactly one interaction term \(h_{j(c)}=h_{j(c)}(\overrightarrow{x}_{j(c)})\), where the parameter vector \(\overrightarrow{x}_{j(c)}\) contains the variable xc. Intuitively, IP is the set of coordinates that have the strongest influence on the function \(\mathrm{Tr}(P\rho (x))\).
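As an illustration of how such a set of nearby coordinates might be computed, the following Python sketch works on an open 1D chain with one coupling per nearest-neighbour bond. The chain geometry, the helper names (`qubit_distance`, `obs_distance`, `local_coordinates`), and the rule "keep coordinate c when its interaction term lies within distance δ1 of P" are assumptions made for this example, not the authors' implementation.

```python
def qubit_distance(i, k):
    """Distance between sites i and k on an open 1D chain (assumed geometry)."""
    return abs(i - k)


def obs_distance(support_a, support_b):
    """Minimum distance between the qubits that two observables act on."""
    return min(qubit_distance(i, k) for i in support_a for k in support_b)


def local_coordinates(term_supports, pauli_support, delta1):
    """Indices c whose interaction term lies within delta1 of the Pauli P.

    term_supports[c] lists the qubits acted on by the interaction term that
    coordinate x_c parameterizes (one coordinate per term in this toy case).
    """
    return [
        c
        for c, support in enumerate(term_supports)
        if obs_distance(support, pauli_support) <= delta1
    ]


# Chain of 8 qubits; coordinate c parameterizes the bond (c, c + 1).
bonds = [(i, i + 1) for i in range(7)]

# P acts on qubits 3 and 4; keep bonds at distance <= 1 from P.
I_P = local_coordinates(bonds, pauli_support=(3, 4), delta1=1)
print(I_P)  # [1, 2, 3, 4, 5]
```

The size of the returned list depends only on δ1 and the lattice dimension, not on the chain length, which is the locality that the later counting arguments rely on.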

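The second geometric object is a discrete lattice over the space [−1, 1]^m associated to each subset IP of coordinates. For any geometrically local Pauli observable P ∈ S(geo), we define XP to contain all vectors x that take on value 0 for coordinates outside IP and take on a set of discrete values for coordinates inside IP. Formally, this is given by

\[X_{P}\triangleq \left\{x\in [-1,1]^{m}:\ x_{c}=0\ \text{if}\ c\notin I_{P},\quad x_{c}\in \{0,\pm \delta_{2},\pm 2\delta_{2},\ldots,\pm 1\}\ \text{if}\ c\in I_{P}\right\}, \tag{6}\]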

where δ2 is an efficiently computable hyperparameter to be determined later. The definition of XP is meant to enumerate all sufficiently different vectors for coordinates in the subset IP ⊆ {1, …, m}.
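To make the size of this discrete lattice concrete, here is a small Python sketch that enumerates it for a toy case. It assumes that the discrete values inside IP are the evenly spaced grid 0, ±δ2, ±2δ2, …, ±1 and that 1/δ2 is an integer; the function names are hypothetical.

```python
from itertools import product


def grid_values(delta2):
    """Grid 0, +-delta2, +-2*delta2, ..., +-1 (assumes 1/delta2 is an integer)."""
    k = round(1 / delta2)
    return [j / k for j in range(-k, k + 1)]


def lattice_points(m, I_P, delta2):
    """Yield every vector that is zero outside I_P and grid-valued inside I_P."""
    for combo in product(grid_values(delta2), repeat=len(I_P)):
        x = [0.0] * m
        for c, value in zip(I_P, combo):
            x[c] = value
        yield tuple(x)


# Seven coordinates in total, three of them close to P, grid spacing 0.5.
points = list(lattice_points(m=7, I_P=[2, 3, 4], delta2=0.5))
print(len(points))  # (1 + 2 / delta2) ** len(I_P) = 5 ** 3 = 125
```

Under these assumptions the lattice has (1 + 2/δ2)^|IP| points, independent of m, so the number of points per Pauli observable does not grow with the system size.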

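Now given a geometrically local Pauli observable P and a vector x in the discrete lattice XP ⊆ [−1, 1]^m, the third object is a set Tx,P of vectors in [−1, 1]^m that are close to x for coordinates in IP. This is formally defined as

\[T_{x,P}\triangleq \left\{x^{\prime}\in [-1,1]^{m}:\ -\frac{\delta_{2}}{2} < x_{c}-x_{c}^{\prime}\le \frac{\delta_{2}}{2},\ \forall c\in I_{P}\right\}. \tag{7}\]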

The set Tx,P is defined as a thickened affine subspace close to the vector x for coordinates in IP. If a vector \({x}^{{\prime} }\) is in Tx,P, then \({x}^{{\prime} }\) is close to x for all coordinates in IP, but \({x}^{{\prime} }\) may be far away from x for coordinates outside of IP. Examples of these definitions are given in Supplementary Figs. 1 and 2.

Feature mapping and ML model

We can now define the feature map ϕ taking an m-dimensional vector x to an mϕ-dimensional vector ϕ(x) using the thickened affine subspaces \(T_{x^{\prime},P}\) for every geometrically local Pauli observable P ∈ S(geo) and every vector \(x^{\prime}\) in the discrete lattice XP. The dimension of the vector ϕ(x) is given by \(m_{\phi }=\sum_{P\in S^{\mathrm{(geo)}}}| X_{P}|\). Each coordinate of the vector ϕ(x) is indexed by \(x^{\prime}\in X_{P}\) and P ∈ S(geo) with

\[\phi (x)_{x^{\prime},P}\triangleq \mathbb{1}\left[x\in T_{x^{\prime},P}\right], \tag{8}\]

which is the indicator function checking if x belongs to the thickened affine subspace. Recall that this means each coordinate of the mϕ-dimensional vector ϕ(x) checks if x is close to a point \({x}^{{\prime} }\) on a discrete lattice XP for the subset IP of coordinates close to a geometrically local Pauli observable P.
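The indicator features can be evaluated without ever building the full mϕ-dimensional vector, because for each P at most one lattice point is close to x on the coordinates in IP. The sketch below is a minimal Python illustration of that idea. It assumes an evenly spaced grid with spacing δ2, represents each lattice point by its integer grid indices, and picks one tie-breaking convention at cell boundaries; the names `active_features` and `predict` are hypothetical.

```python
import math


def active_features(x, local_sets, delta2):
    """Return the keys (P, lattice point) whose indicator feature equals 1.

    local_sets maps a label for P to its coordinate set I_P. A lattice point
    is stored as a tuple of integer grid indices j, standing for j * delta2.
    """
    active = set()
    for label, I_P in local_sets.items():
        # Nearest grid index per local coordinate (ties resolved upward here).
        snapped = tuple(math.floor(x[c] / delta2 + 0.5) for c in I_P)
        active.add((label, snapped))
    return active


def predict(weights, x, local_sets, delta2):
    """Evaluate w . phi(x) by summing the weights of the active features."""
    keys = active_features(x, local_sets, delta2)
    return sum(weights.get(key, 0.0) for key in keys)


local_sets = {"Z0Z1": [0, 1], "Z1Z2": [1, 2]}
x = [0.3, -0.8, 0.1]
print(sorted(active_features(x, local_sets, delta2=0.5)))
# [('Z0Z1', (1, -2)), ('Z1Z2', (-2, 0))]

weights = {("Z0Z1", (1, -2)): 0.25, ("Z1Z2", (-2, 0)): -0.1}
print(predict(weights, x, local_sets, delta2=0.5))
```

Changing a coordinate outside IP leaves the feature for P untouched, which is how the geometric inductive bias enters the model in this sketch.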

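The classical ML model we consider is an ℓ1-regularized regression (LASSO) over the ϕ(x) space. More precisely, given an efficiently computable hyperparameter B > 0, the classical ML model finds an mϕ-dimensional vector w* from the following optimization problem,

\[\min_{\substack{\mathbf{w}\in \mathbb{R}^{m_{\phi }}\\ \parallel \mathbf{w}\parallel_{1}\le B}}\ \frac{1}{N}\sum_{\ell=1}^{N}\left| \mathbf{w}\cdot \phi (x_{\ell })-y_{\ell }\right|^{2}, \tag{9}\]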

where \({\{({x}_{\ell },{y}_{\ell })\}}_{\ell=1}^{N}\) is the training data. Here, xℓ ∈ [−1, 1]m is an m-dimensional vector that parameterizes a Hamiltonian H(x) and yℓ approximates \({{{{{{{\rm{Tr}}}}}}}}(O\rho ({x}_{\ell }))\). The learned function is given by h*(x) = w* ⋅ ϕ(x). The optimization does not have to be solved exactly. We only need to find a w* whose function value is \({{{{{{{\mathcal{O}}}}}}}}(\epsilon )\) larger than the minimum function value. There is an extensive literature53,54,55,56,57,58,59 improving the computational time for the above optimization problem. The best known classical algorithm58 has a computational time scaling linearly in mϕ/ϵ2 up to a log factor, while the best known quantum algorithm59 has a computational time scaling linearly in \(\sqrt{{m}_{\phi }}/{\epsilon }^{2}\) up to a log factor.
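For readers who want to see an ℓ1-regularized fit in action, the following is a minimal pure-Python sketch of cyclic coordinate descent. Note that it solves the penalized form, minimizing (1/2N)‖y − Xw‖² + λ‖w‖₁, which is a standard relative of the ℓ1-constrained problem with bound B; it is a swapped-in textbook solver, not one of the algorithms cited above. The function names and the toy data are assumptions for this example.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t (the proximal map of t * |.|)."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_cd(X, y, lam, n_iter=200):
    """Minimise (1/2N)||y - Xw||^2 + lam * ||w||_1 by coordinate descent."""
    N, d = len(X), len(X[0])
    w = [0.0] * d
    residual = list(y)  # residual = y - Xw, kept up to date
    col_sq = [sum(X[i][j] ** 2 for i in range(N)) / N for j in range(d)]
    for _ in range(n_iter):
        for j in range(d):
            if col_sq[j] == 0.0:
                continue  # feature never active in the training set
            # Correlation of feature j with the residual that excludes w[j].
            rho = sum(X[i][j] * (residual[i] + X[i][j] * w[j]) for i in range(N)) / N
            new_wj = soft_threshold(rho, lam) / col_sq[j]
            delta = new_wj - w[j]
            if delta != 0.0:
                for i in range(N):
                    residual[i] -= X[i][j] * delta
                w[j] = new_wj
    return w


# Two one-hot features; the second one carries only a weak signal.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
y = [1.0, 0.05, 1.0, 0.05]
w = lasso_cd(X, y, lam=0.1)
print(w)  # first weight is shrunk to about 0.8, second is set to zero
```

The ℓ1 penalty drives weakly supported coefficients exactly to zero, which is why a sparse weight vector over a very large feature space can still be learned from few samples.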

Rigorous guarantee

The classical ML algorithm given above yields the following sample and computational complexity. This theorem improves substantially upon the result in ref. 36, which requires \(N=n^{\mathcal{O}(1/\epsilon )}\). The proof idea is given in the “Methods” section, and the detailed proof is given in Supplementary Sections 1, 2, 3. Using the proof techniques presented in this work, one can show that the sample complexity \(N=\log (n/\delta )\,2^{\mathrm{polylog}(1/\epsilon )}\) also applies to any sum of few-body observables O = ∑jOj with ∑j∥Oj∥∞ ≤ 1, even if the operators {Oj} are not geometrically local.

Theorem 1

(Sample and computational complexity). Given \(n,\,\delta > 0,\,\frac{1}{e} > \epsilon > 0\) and a training data set \(\{x_{\ell },y_{\ell }\}_{\ell=1}^{N}\) of size

\[N=\log (n/\delta )\,2^{\mathrm{polylog}(1/\epsilon )}, \tag{10}\]

where xℓ is sampled from an unknown distribution \(\mathcal{D}\) and \(| y_{\ell }-\mathrm{Tr}(O\rho (x_{\ell }))| \le \epsilon\) for any observable O with eigenvalues between −1 and 1 that can be written as a sum of geometrically local observables. With a proper choice of the efficiently computable hyperparameters δ1, δ2, and B, the learned function h*(x) = w* ⋅ ϕ(x) satisfies

\[\mathop{\mathbb{E}}_{x\sim \mathcal{D}}\left| h^{*}(x)-\mathrm{Tr}(O\rho (x))\right|^{2}\le \epsilon \tag{11}\]

with probability at least 1 − δ. The training and prediction time of the classical ML model are bounded by \({{{{{{{\mathcal{O}}}}}}}}(nN)=n\log (n/\delta ){2}^{{{{{{{{\rm{polylog}}}}}}}}(1/\epsilon )}\).

The output yℓ in the training data can be obtained by measuring \({{{{{{{\rm{Tr}}}}}}}}(O\rho ({x}_{\ell }))\) for the same observable O multiple times and averaging the outcomes. Alternatively, we can use the classical shadow formalism48,49,50,51,52,60 that performs randomized Pauli measurements on ρ(xℓ) to predict \({{{{{{{\rm{Tr}}}}}}}}(O\rho ({x}_{\ell }))\) for a wide range of observables O. We can also combine Theorem 1 and the classical shadow formalism to use our ML algorithm to predict ground state representations, as seen in the following corollary. This allows one to predict ground state properties \({{{{{{{\rm{Tr}}}}}}}}(O\rho (x))\) for a large number of observables O rather than just a single one. We present the proof of Corollary 1 in Supplementary Section 3B.

Corollary 1

Given \(n,\,\delta > 0,\,\frac{1}{e} > \epsilon > 0\) and a training data set \(\{x_{\ell },\sigma_{T}(\rho (x_{\ell }))\}_{\ell=1}^{N}\) of size

\[N=\log (n/\delta )\,2^{\mathrm{polylog}(1/\epsilon )}, \tag{12}\]

where xℓ is sampled from an unknown distribution \(\mathcal{D}\) and σT(ρ(xℓ)) is the classical shadow representation of the ground state ρ(xℓ) using T randomized Pauli measurements. For \(T=\tilde{\mathcal{O}}(\log (n)/\epsilon^{2})\), the proposed ML algorithm can learn a ground state representation \(\hat{\rho }_{N,T}(x)\) that achieves

\[\mathop{\mathbb{E}}_{x\sim \mathcal{D}}\left| \mathrm{Tr}(O\hat{\rho }_{N,T}(x))-\mathrm{Tr}(O\rho (x))\right|^{2}\le \epsilon \tag{13}\]

for any observable O with eigenvalues between −1 and 1 that can be written as a sum of geometrically local observables with probability at least 1 − δ.

We can also show that the problem of estimating ground state properties for the class of parameterized Hamiltonians \(H(x)=\sum_{j}h_{j}(\overrightarrow{x}_{j})\) considered in this work is hard for non-ML algorithms that cannot learn from data, assuming the widely believed conjecture that NP-complete problems cannot be solved in randomized polynomial time. This is a manifestation of the computational power of data studied in ref. 61. The proof of Proposition 1 in ref. 36 constructs a parameterized Hamiltonian H(x) that belongs to the family of parameterized Hamiltonians considered in this work and hence establishes the following.

Proposition 1

(A variant of Proposition 1 in ref. 36). Consider a randomized polynomial-time classical algorithm \(\mathcal{A}\) that does not learn from data. Suppose that, for any smooth family of gapped 2D Hamiltonians \(H(x)=\sum_{j}h_{j}(\overrightarrow{x}_{j})\) and any single-qubit observable O, \(\mathcal{A}\) can compute ground state properties \(\mathrm{Tr}(O\rho (x))\) up to a constant error averaged over x ∈ [−1, 1]^m uniformly. Then, NP-complete problems can be solved in randomized polynomial time.

This proposition states that even under the restricted setting of only 2D Hamiltonians and single-qubit observables, predicting ground state properties is a hard problem for non-ML algorithms. When one considers higher-dimensional Hamiltonians and multi-qubit observables, the problem only becomes harder because one can embed low-dimensional Hamiltonians in higher-dimensional spaces.

Numerical experiments

We present numerical experiments to assess the performance of the classical ML algorithm in practice. The results illustrate the improvement of the algorithm presented in this work compared to those considered in ref. 36, the mild dependence of the sample complexity on the system size n, and the inherent geometry exploited by the ML models. We consider the classical ML models previously described, utilizing a random Fourier feature map62. While the indicator function feature map was a useful tool to obtain our rigorous guarantees, random Fourier features are more robust and commonly used in practice. Moreover, we still expect our rigorous guarantees to hold with this change because Fourier features can approximate any function, which is the central property of the indicator functions used in our proofs. Furthermore, we determine the optimal hyperparameters using cross-validation to minimize the root-mean-square error (RMSE) and then evaluate the performance of the chosen ML model using a test set. The models and hyperparameters are further detailed in Supplementary Section 4.
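As a rough picture of what a geometrically local random Fourier feature map can look like, the Python sketch below applies generic cosine and sine random features to each local sub-vector of x and concatenates the results. The Gaussian frequency distribution, the scale parameter `gamma`, the number of features, and all function names are assumptions chosen for illustration; the exact map and hyperparameters used in the experiments are those of Supplementary Section 4, which this sketch does not reproduce.

```python
import math
import random


def make_rff(dim, n_features, gamma, rng):
    """Build a cos/sin random Fourier feature map on R^dim.

    Frequencies are drawn i.i.d. from a Gaussian with standard deviation
    gamma (an assumed choice, corresponding to a Gaussian kernel).
    """
    omegas = [[rng.gauss(0.0, gamma) for _ in range(dim)] for _ in range(n_features)]

    def phi(z):
        out = []
        for omega in omegas:
            t = sum(o * v for o, v in zip(omega, z))
            out.extend((math.cos(t), math.sin(t)))
        return out

    return phi


def local_rff(x, local_sets, maps):
    """Concatenate the features of each local sub-vector (x_c for c in I_P)."""
    feats = []
    for label, I_P in local_sets.items():
        feats.extend(maps[label]([x[c] for c in I_P]))
    return feats


rng = random.Random(0)
local_sets = {"P_a": [0, 1], "P_b": [1, 2, 3]}
maps = {label: make_rff(len(I_P), 4, 1.0, rng) for label, I_P in local_sets.items()}
features = local_rff([0.2, 1.5, 0.7, 1.9], local_sets, maps)
print(len(features))  # 2 local sets * 4 frequencies * (cos, sin) = 16
```

A linear model with an ℓ1 penalty can then be trained on these features in place of the indicator features.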

For these experiments, we consider the two-dimensional antiferromagnetic random Heisenberg model consisting of 4 × 5 = 20 to 9 × 5 = 45 spins as considered in previous work36. In this setting, the spins are placed on sites in a 2D lattice. The Hamiltonian is

\[H=\sum_{\langle ij\rangle }J_{ij}\left(X_{i}X_{j}+Y_{i}Y_{j}+Z_{i}Z_{j}\right), \tag{14}\]

where the summation ranges over all pairs 〈ij〉 of neighboring sites on the lattice and the couplings {Jij} are sampled uniformly from the interval [0, 2]. Here, the vector x is a list of all couplings Jij so that the dimension of the parameter space is m = O(n), where n is the system size. The nonnegative interval [0, 2] corresponds to antiferromagnetic interactions. To minimize the Heisenberg interaction terms, nearby qubits have to form singlet states. While the square lattice is bipartite and lacks the standard geometric frustration, the presence of disorder makes the ground state calculation more challenging as neighboring qubits will compete in the formation of singlets due to the monogamy of entanglement63.
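The parameter vector for this model can be generated with a few lines of Python. The sketch below enumerates nearest-neighbour pairs on a rectangular lattice and samples one coupling per pair uniformly from [0, 2]. Open boundary conditions, the row-major site numbering, and the function names are assumptions made for this example.

```python
import random


def lattice_edges(rows, cols):
    """Nearest-neighbour pairs <ij> on an open rows x cols square lattice."""
    edges = []
    for r in range(rows):
        for c in range(cols):
            i = r * cols + c  # row-major site index
            if c + 1 < cols:
                edges.append((i, i + 1))     # horizontal bond
            if r + 1 < rows:
                edges.append((i, i + cols))  # vertical bond
    return edges


def sample_couplings(edges, rng):
    """Draw one antiferromagnetic coupling J_ij in [0, 2] per edge."""
    return {edge: rng.uniform(0.0, 2.0) for edge in edges}


rng = random.Random(0)
for rows in (4, 9):
    edges = lattice_edges(rows, 5)
    x = sample_couplings(edges, rng)
    print(rows * 5, "spins,", len(x), "couplings")
# 20 spins, 31 couplings
# 45 spins, 76 couplings
```

The number of couplings grows linearly with the number of spins, consistent with m = O(n).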

We trained a classical ML model using randomly chosen values of the parameter vector x = {Jij}. For each parameter vector of random couplings sampled uniformly from [0, 2], we approximated the ground state using the same method as in ref. 36, namely with the density-matrix renormalization group (DMRG)64 based on matrix product states (MPS)65. The classical ML model was trained on a data set \(\{x_{\ell },\sigma_{T}(\rho (x_{\ell }))\}_{\ell=1}^{N}\) with N randomly chosen vectors xℓ, where each xℓ is paired with a classical representation σT(ρ(xℓ)) created from T randomized Pauli measurements48. For a given training set size N, we conduct 4-fold cross validation on the N data points to select the best hyperparameters, train a model with the best hyperparameters on the N data points, and test the performance on a test set of size N. Further details are discussed in Supplementary Section 4.

The ML algorithm predicted the classical representation of the ground state for a new vector x. These predicted classical representations were used to estimate two-body correlation functions, i.e., the expectation value of

\[C_{ij}=\frac{1}{3}\left(X_{i}X_{j}+Y_{i}Y_{j}+Z_{i}Z_{j}\right) \tag{15}\]

for each pair of qubits 〈ij〉 on the lattice. Here, we are using the combination of our ML algorithm with the classical shadow formalism as described in Corollary 1, leveraging this more powerful technique to predict a large number of ground state properties.

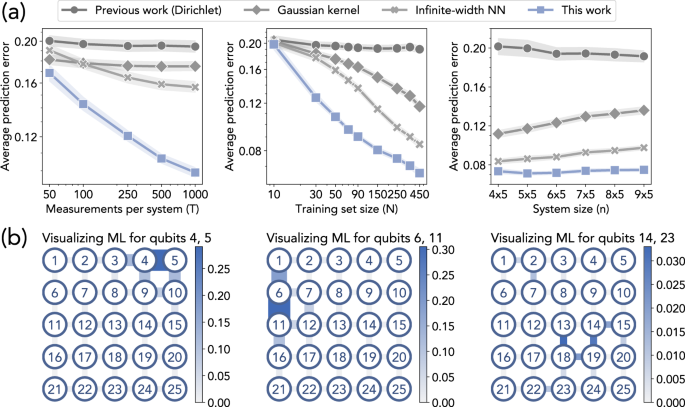

In Fig. 2A, we can clearly see that the ML algorithm proposed in this work consistently outperforms the ML models implemented in ref. 36, which include the rigorous polynomial-time learning algorithm based on the Dirichlet kernel proposed in ref. 36, Gaussian kernel regression66,67, and infinite-width neural networks68,69. Figure 2A (Left) and (Center) show that as the number T of measurements per data point or the training set size N increases, the prediction performance of the proposed ML algorithm improves faster than that of the other ML algorithms. This observation reflects the improvement in the sample complexity dependence on the prediction error ϵ. The sample complexity in ref. 36 depends exponentially on 1/ϵ, but Theorem 1 establishes a quasi-polynomial dependence on 1/ϵ. From Fig. 2A (Right), we can see that the ML algorithms do not yield a substantially worse prediction error as the system size n increases. This observation matches the \(\log (n)\) sample complexity in Theorem 1, but not the poly(n) sample complexity proven in ref. 36. These improvements are also relevant when comparing the ML predictions to actual correlation function values. Figure 3 in ref. 36 illustrates that, for the average prediction error achieved in that work, the predictions by the ML algorithm match the simulated values closely. In this work, we emphasize that significantly less training data is needed to achieve the same prediction error as in ref. 36 and to agree with the simulated values.

Fig. 2. a Prediction error. Each point indicates the root-mean-square error for predicting the correlation function in the ground state (averaged over Heisenberg model instances and each pair of neighboring spins). We present log-log plots for the scaling of prediction error ϵ with T and N: the slope corresponds to the exponent of the polynomial function ϵ(T), ϵ(N). The shaded regions show the standard deviation over different spin pairs. b Visualization. We plot how much each coupling Jij contributes to the prediction of the correlation function over different pairs of qubits in the trained ML model. Thicker and darker edges correspond to higher contributions. We see that the ML model learns to utilize the local geometric structure.

An important step for establishing the improved sample complexity in Theorem 1 is that a property on a local region R of the quantum system only depends on parameters in the neighborhood of region R. In Fig. 2B, we visualize where the trained ML model focuses when predicting the correlation function over a pair of qubits. A thicker and darker edge is considered more important by the trained ML model. Each edge of the 2D lattice corresponds to a coupling Jij. For each edge, we sum the absolute values of the coefficients in the ML model that correspond to a feature that depends on the coupling Jij. We can see that the ML model learns to focus only on the neighborhood of a local region R when predicting the ground state property.
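The per-edge importance described here is a simple aggregation over the trained coefficients. The following Python sketch shows one way to compute it; the dictionary layout, the feature identifiers, and the function name `edge_importance` are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def edge_importance(weights, feature_edges):
    """Sum |coefficient| over all features that depend on each coupling.

    weights[k] is the trained coefficient of feature k, and feature_edges[k]
    lists the lattice edges (couplings J_ij) that feature k depends on.
    """
    importance = defaultdict(float)
    for k, coefficient in weights.items():
        for edge in feature_edges[k]:
            importance[edge] += abs(coefficient)
    return dict(importance)


# Toy model with three features on a four-site chain.
weights = {"f0": 0.5, "f1": -0.25, "f2": 0.0}
feature_edges = {
    "f0": [(0, 1), (1, 2)],
    "f1": [(1, 2)],
    "f2": [(2, 3)],
}
print(edge_importance(weights, feature_edges))
# {(0, 1): 0.5, (1, 2): 0.75, (2, 3): 0.0}
```

Edges with a larger total would be drawn thicker and darker in a plot of this kind.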

Discussion

The classical ML algorithm and the advantage over non-ML algorithms as proven in ref. 36 illustrate the potential of using ML algorithms to solve challenging quantum many-body problems. However, the classical ML model given in ref. 36 requires a large amount of training data. Although the need for a large dataset is a common trait in contemporary ML algorithms70,71,72, one would have to perform an equally large number of physical experiments to obtain such data. This makes the advantage of ML over non-ML algorithms challenging to realize in practice. The sample complexity \(N=\mathcal{O}(\log n)\) of the ML algorithm proposed here illustrates that this advantage could potentially be realized after training with data from a small number of physical experiments. The existence of a theoretically backed ML algorithm with a \(\log (n)\) sample complexity raises the hope of designing good ML algorithms to address practical problems in quantum physics, chemistry, and materials science by learning from the relatively small amount of data that we can gather from real-world experiments.

Despite the progress in this work, many questions remain to be answered. Recently, powerful machine learning models such as graph neural networks have been used to empirically demonstrate a favorable sample complexity when leveraging the local structure of Hamiltonians in the 2D random Heisenberg model29,30. Is it possible to obtain rigorous theoretical guarantees for the sample complexity of neural-network-based ML algorithms for predicting ground state properties? An alternative direction is to notice that the current results have an exponential scaling in the inverse of the spectral gap. Is the exponential scaling a fundamental nature of this problem? Or do there exist more efficient ML models that can efficiently predict ground state properties for gapless Hamiltonians?

We have focused on the task of predicting local observables in the ground state, but many other physical properties are also of high interest. Can ML models predict low-energy excited state properties? Could we achieve a sample complexity of \(N={{{{{{{\mathcal{O}}}}}}}}(\log n)\) for predicting any observable O? Another important question is whether there is a provable quantum advantage in predicting ground state properties. Could we design quantum ML algorithms that can predict ground state properties by learning from far fewer experiments than any classical ML algorithm? Perhaps this could be shown by combining ideas from adiabatic quantum computation37,38,39,40,41,42,43,44 and recent techniques for proving quantum advantages in learning from experiments73,74,75,76,77. It remains to be seen if quantum computers could provide an unconditional super-polynomial advantage over classical computers in predicting ground state properties.

Methods

We describe the key ideas behind the proof of Theorem 1. The proof is separated into three parts. The first part in Supplementary Section 1 describes the existence of a simple functional form that approximates the ground state property \({{{{{{{\rm{Tr}}}}}}}}(O\rho (x))\). The second part in Supplementary Section 2 gives a new bound for the ℓ1-norm of the Pauli coefficients of the observable O when written in the Pauli basis. The third part in Supplementary Section 3 combines the first two parts, using standard tools from learning theory to establish the sample complexity corresponding to the prediction error bound given in Theorem 1. In the following, we discuss these three parts in detail.

Simple form for ground state property

Using the spectral flow formalism78,79,80, we first show that the ground state property can be approximated by a sum of local functions. First, we write O in the Pauli basis as \(O=\sum_{P\in \{I,X,Y,Z\}^{\otimes n}}\alpha_{P}P\). Then, we show that for every geometrically local Pauli observable P, we can construct a function fP(x) that depends only on coordinates in the subset IP of coordinates that parameterizes interaction terms hj near the Pauli observable P. The function fP(x) is given by

\[f_{P}(x)\triangleq \alpha_{P}\,\mathrm{Tr}\left(P\rho (\chi_{P}(x))\right), \tag{16}\]

where χP(x) ∈ [−1, 1]^m is defined as χP(x)c = xc for coordinates c ∈ IP and χP(x)c = 0 for coordinates c ∉ IP. The sum of these local functions fP can be used to approximate the ground state property,

\[\mathrm{Tr}(O\rho (x))\approx \sum_{P\in S^{\mathrm{(geo)}}}f_{P}(x). \tag{17}\]

The approximation only incurs an \(\mathcal{O}(\epsilon )\) error if we consider \(\delta_{1}=\Theta (\log^{2}(1/\epsilon ))\) in the definition of IP. The key point is that correlations decay exponentially with distance in the ground state of a gapped local Hamiltonian; therefore, the properties of the ground state in a localized region are not sensitive to the details of the Hamiltonian at points far from that localized region. Furthermore, the local function fP is smooth. The smoothness property allows us to approximate each local function fP by a simple discretization,

\[f_{P}(x)\approx \sum_{x^{\prime}\in X_{P}}f_{P}(x^{\prime})\,\mathbb{1}\left[x\in T_{x^{\prime},P}\right]. \tag{18}\]

One could also use other approximations for this step, such as Fourier approximation or polynomial approximation. In fact, we apply a Fourier approximation instead in the numerical experiments, as discussed in Supplementary Section 4. For simplicity of the proof, we consider a discretization-based approximation with δ2 = Θ(1/ϵ) in the definition of \(T_{x^{\prime},P}\) to incur at most an \(\mathcal{O}(\epsilon )\) error. The point is that, for a sufficiently smooth function fP(x) that depends only on coordinates in IP and a sufficiently fine lattice over the coordinates in IP, replacing x by the nearest lattice point (based only on coordinates in IP) causes only a small error. Using the definition of the feature map ϕ(x) in Eq. (8), we have

\[\mathrm{Tr}(O\rho (x))\approx \sum_{P\in S^{\mathrm{(geo)}}}\sum_{x^{\prime}\in X_{P}}f_{P}(x^{\prime})\,\mathbb{1}\left[x\in T_{x^{\prime},P}\right]=\mathbf{w}^{\prime}\cdot \phi (x), \tag{19}\]

where \(\mathbf{w}^{\prime}\) is an mϕ-dimensional vector indexed by \(x^{\prime}\in X_{P}\) and P ∈ S(geo) given by \(\mathbf{w}_{x^{\prime},P}^{\prime}=f_{P}(x^{\prime})\). The approximation is accurate if we consider \(\delta_{1}=\Theta (\log^{2}(1/\epsilon ))\) and δ2 = Θ(1/ϵ). Thus, we can see that the ML algorithm with the proposed feature mapping indeed has the capacity to approximately represent the target function \(\mathrm{Tr}(O\rho (x))\). As a result, we have the following lemma.

Lemma 1

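(Training error bound). The function given by \(\mathbf{w}^{\prime}\cdot \phi (x)\) achieves a small training error:

\[\frac{1}{N}\sum_{\ell=1}^{N}\left| \mathbf{w}^{\prime}\cdot \phi (x_{\ell })-y_{\ell }\right|^{2}\le 0.53\,\epsilon. \tag{20}\]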

This lemma follows from the two facts that \({{{{{{{{\bf{w}}}}}}}}}^{{\prime} }\cdot \phi (x)\,\approx \,{{{{{{{\rm{Tr}}}}}}}}(O\rho (x))\) and \({{{{{{{\rm{Tr}}}}}}}}(O\rho ({x}_{\ell }))\,\approx \,{y}_{\ell }\).

Norm inequality for observables

The efficiency of an ℓ1-regularized regression depends greatly on the ℓ1-norm of the vector \(\mathbf{w}^{\prime}\). Moreover, the ℓ1-norm of \(\mathbf{w}^{\prime}\) is closely related to the observable O = ∑jOj given as a sum of geometrically local observables with ∥O∥∞ ≤ 1. In particular, again writing O in the Pauli basis as \(O=\sum_{Q\in \{I,X,Y,Z\}^{\otimes n}}\alpha_{Q}Q\), the ℓ1-norm \(\parallel \mathbf{w}^{\prime}\parallel_{1}\) is closely related to \(\sum_{Q}\left\vert \alpha_{Q}\right\vert\), which we refer to as the Pauli 1-norm of the observable O. While it is well known that

\[\sum_{Q}{\left\vert \alpha_{Q}\right\vert }^{2}=\frac{\mathrm{Tr}(O^{2})}{2^{n}}\le \parallel O\parallel_{\infty }^{2}, \tag{21}\]

there do not seem to be many known results characterizing \({\sum }_{Q}\left\vert {\alpha }_{Q}\right\vert\). To understand the Pauli 1-norm, we prove the following theorem.

Theorem 2

(Pauli 1-norm bound). Let \(O=\sum_{Q\in \{I,X,Y,Z\}^{\otimes n}}\alpha_{Q}Q\) be an observable that can be written as a sum of geometrically local observables. We have

\[\sum_{Q}\left\vert \alpha_{Q}\right\vert \le C\parallel O\parallel_{\infty }, \tag{22}\]

for some constant C.
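As a simple sanity check (an illustrative example that is not taken from the source), consider the average magnetization, which is a sum of n single-qubit observables with Pauli coefficients 1/n each:

\[O=\frac{1}{n}\sum_{i=1}^{n}Z_{i},\qquad \parallel O\parallel_{\infty }=1,\qquad \sum_{Q}\left\vert \alpha_{Q}\right\vert =n\cdot \frac{1}{n}=1,\qquad \sum_{Q}{\left\vert \alpha_{Q}\right\vert }^{2}=n\cdot \frac{1}{n^{2}}=\frac{1}{n}.\]

In this example the Pauli 1-norm equals ∥O∥∞ for every system size n, even though the number of nonzero Pauli coefficients grows with n. This is the kind of size-independent control of the Pauli 1-norm by the spectral norm that the theorem concerns.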

A series of related norm inequalities are also established in ref. 81. However, the techniques used in this work differ significantly from those in ref. 81.

Prediction error bound for the ML algorithm

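Using the construction of the local function fP(xc, c ∈ IP) given in Eq. (16) and the vector \(\mathbf{w}^{\prime}\) defined in Eq. (19), we can show that

\[\parallel \mathbf{w}^{\prime}\parallel_{1}\le \sum_{P\in S^{\mathrm{(geo)}}}| X_{P}| \left\vert \alpha_{P}\right\vert \le {\left(1+\frac{2}{\delta_{2}}\right)}^{\mathrm{poly}(\delta_{1})}\sum_{P\in S^{\mathrm{(geo)}}}\left\vert \alpha_{P}\right\vert. \tag{23}\]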

The second inequality follows by bounding the size of our discrete subset XP and noticing that ∣IP∣ = poly(δ1). The norm inequality in Theorem 2 then implies

\[\parallel \mathbf{w}^{\prime}\parallel_{1}\le 2^{\mathrm{poly}\log (1/\epsilon )}, \tag{24}\]

because ∥O∥∞ ≤ 1 and \({\delta }_{1}=\Theta ({\log }^{2}(1/\epsilon )),{\delta }_{2}=\Theta (1/\epsilon )\). This shows that there exists a vector \({{{{{{{{\bf{w}}}}}}}}}^{{\prime} }\) that has a bounded ℓ1-norm and achieves a small training error. The existence of \({{{{{{{{\bf{w}}}}}}}}}^{{\prime} }\) guarantees that the vector w* found by the optimization problem with the hyperparameter \(B\ge \parallel {{{{{{{{\bf{w}}}}}}}}}^{{\prime} }{\parallel }_{1}\) will yield an even smaller training error. Using the norm bound on \({{{{{{{{\bf{w}}}}}}}}}^{{\prime} }\), we can choose the hyperparameter B to be \(B={2}^{{{{{{{{\rm{poly}}}}}}}}\log (1/\epsilon )}\). Using standard learning theory46,47, we can thus obtain

with probability at least 1 − δ. The first term is the training error for w*, which is no larger than the training error of 0.53ϵ for \(\mathbf{w}^{\prime}\) from Lemma 1. Thus, the first term is bounded by 0.53ϵ. The second term is determined by B and \(m_{\phi}\), where we know that \(m_{\phi}\le|S^{(\mathrm{geo})}|\left(1+\frac{2}{\delta_{2}}\right)^{\mathrm{poly}(\delta_{1})}\) and \(|S^{(\mathrm{geo})}|=\mathcal{O}(n)\). Hence, with a training data size of

\[N=\mathcal{O}\left(\log(n/\delta)\,2^{\mathrm{polylog}(1/\epsilon)}\right),\]

we can achieve a prediction error of ϵ with probability at least 1 − δ for any distribution \(\mathcal{D}\) over \([-1,1]^{m}\).
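To make the role of the hyperparameter B concrete, the following is a minimal sketch of an ℓ1-norm-constrained least-squares fit. It assumes the optimization takes the form of minimizing the mean squared training error subject to ∥w∥1 ≤ B, and solves it by projected gradient descent with a sort-based projection onto the ℓ1 ball. This is an illustration rather than the authors' implementation: the binary feature matrix `Phi`, the sparse vector `w_true`, the noise level, and all function names are hypothetical stand-ins.

```python
import numpy as np

def project_l1_ball(v, radius):
    """Euclidean projection of v onto the set {w : ||w||_1 <= radius}."""
    if np.abs(v).sum() <= radius:
        return v.copy()
    u = np.sort(np.abs(v))[::-1]          # magnitudes in decreasing order
    css = np.cumsum(u)
    ks = np.arange(1, len(u) + 1)
    rho = np.nonzero(u * ks > css - radius)[0][-1]
    theta = (css[rho] - radius) / (rho + 1)  # soft-threshold level
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)

def train_error(Phi, y, w):
    """Mean squared training error (1/N) * sum_l |w . phi(x_l) - y_l|^2."""
    return np.mean((Phi @ w - y) ** 2)

def fit_l1_constrained(Phi, y, B, n_iter=500):
    """Projected gradient descent for least squares subject to ||w||_1 <= B."""
    N, m = Phi.shape
    # Lipschitz constant of the gradient of the mean squared error.
    lipschitz = 2.0 * np.linalg.norm(Phi, 2) ** 2 / N
    w = np.zeros(m)
    for _ in range(n_iter):
        grad = 2.0 * Phi.T @ (Phi @ w - y) / N
        w = project_l1_ball(w - grad / lipschitz, B)
    return w

# Hypothetical data: far more features than samples, sparse ground truth.
rng = np.random.default_rng(0)
N, m = 60, 100
Phi = rng.integers(0, 2, size=(N, m)).astype(float)  # stand-in binary features
w_true = np.zeros(m)
w_true[[3, 17, 42]] = [0.8, -0.5, 0.2]
y = Phi @ w_true + 0.01 * rng.standard_normal(N)

B = np.abs(w_true).sum()                  # choose B >= ||w_true||_1
w_star = fit_l1_constrained(Phi, y, B)

print("l1 norm of fit      :", np.abs(w_star).sum())
print("training error (fit):", train_error(Phi, y, w_star))
print("training error (0)  :", train_error(Phi, y, np.zeros(m)))
```

Because `w_true` is feasible whenever B is at least its ℓ1-norm, the exact constrained minimizer cannot have a larger training error than `w_true`, mirroring the argument made for \(\mathbf{w}^{\prime}\) and w* in the text. The iterate returned after a fixed number of steps only approximates that minimizer.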

Data availability

Source data are available for this paper. All data can be found or generated using the source code at https://github.com/lllewis234/improved-ml-algorithm (ref. 83).

Code availability

Source code for an efficient implementation of the proposed procedure is available at https://github.com/lllewis234/improved-ml-algorithm (ref. 83).

Change history

26 February 2024

A Correction to this paper has been published: https://doi.org/10.1038/s41467-024-46164-4

References

Hohenberg, P. & Kohn, W. Inhomogeneous electron gas. Phys. Rev. 136, B864–B871 (1964).

Kohn, W. Nobel lecture: Electronic structure of matter—wave functions and density functionals. Rev. Mod. Phys. 71, 1253–1266 (1999).

Ceperley, D. & Alder, B. Quantum Monte Carlo. Science 231, 555–560 (1986).

Sandvik, A. W. Stochastic series expansion method with operator-loop update. Phys. Rev. B 59, R14157–R14160 (1999).

Becca, F. & Sorella, S. Quantum Monte Carlo Approaches for Correlated Systems. (Cambridge University Press, 2017).

White, S. R. Density matrix formulation for quantum renormalization groups. Phys. Rev. Lett. 69, 2863–2866 (1992).

White, S. R. Density-matrix algorithms for quantum renormalization groups. Phys. Rev. B 48, 10345–10356 (1993).

Carleo, G. et al. Machine learning and the physical sciences. Rev. Mod. Phys. 91, 045002 (2019).

Carrasquilla, J. Machine learning for quantum matter. Adv. Phys. X 5, 1797528 (2020).

Deng, D.-L., Li, X. & Das Sarma, S. Machine learning topological states. Phys. Rev. B 96, 195145 (2017).

Carrasquilla, J. & Melko, R. G. Machine learning phases of matter. Nat. Phys. 13, 431 (2017).

Carleo, G. & Troyer, M. Solving the quantum many-body problem with artificial neural networks. Science 355, 602–606 (2017).

Torlai, G. & Melko, R. G. Learning thermodynamics with Boltzmann machines. Phys. Rev. B 94, 165134 (2016).

Nomura, Y., Darmawan, A. S., Yamaji, Y. & Imada, M. Restricted Boltzmann machine learning for solving strongly correlated quantum systems. Phys. Rev. B 96, 205152 (2017).

van Nieuwenburg, E. P. L., Liu, Y.-H. & Huber, S. D. Learning phase transitions by confusion. Nat. Phys. 13, 435 (2017).

Wang, L. Discovering phase transitions with unsupervised learning. Phys. Rev. B 94, 195105 (2016).

Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O. & Dahl, G. E. Neural message passing for quantum chemistry. International conference on machine learning. PMLR (2017).

Torlai, G. et al. Neural-network quantum state tomography. Nat. Phys. 14, 447–450 (2018).

Vargas-Hernández, R. A., Sous, J., Berciu, M. & Krems, R. V. Extrapolating quantum observables with machine learning: inferring multiple phase transitions from properties of a single phase. Phys. Rev. Lett. 121, 255702 (2018).

Schütt, K. T., Gastegger, M., Tkatchenko, A., Müller, K.-R. & Maurer, R. J. Unifying machine learning and quantum chemistry with a deep neural network for molecular wavefunctions. Nat. Commun. 10, 1–10 (2019).

Glasser, I., Pancotti, N., August, M., Rodriguez, I. D. & Cirac, J. I. Neural-network quantum states, string-bond states, and chiral topological states. Phys. Rev. X 8, 011006 (2018).

Caro, M. C. et al. Out-of-distribution generalization for learning quantum dynamics. Preprint at arXiv https://doi.org/10.48550/arXiv.2204.10268 (2022).

Rodriguez-Nieva, J. F. & Scheurer, M. S. Identifying topological order through unsupervised machine learning. Nat. Phys. 15, 790–795 (2019).

Qiao, Z., Welborn, M., Anandkumar, A., Manby, F. R. & Miller III, T. F. OrbNet: deep learning for quantum chemistry using symmetry-adapted atomic-orbital features. J. Chem. Phys. 153, 124111 (2020).

Choo, K., Mezzacapo, A. & Carleo, G. Fermionic neural-network states for ab-initio electronic structure. Nat. Commun. 11, 2368 (2020).

Kawai, H. & Nakagawa, Y. O. Predicting excited states from ground state wavefunction by supervised quantum machine learning. Mach. Learn.: Sci. Technol. 1, 045027 (2020).

Moreno, J. R., Carleo, G. & Georges, A. Deep learning the Hohenberg-Kohn maps of density functional theory. Phys. Rev. Lett. 125, 076402 (2020).

Kottmann, K., Corboz, P., Lewenstein, M. & Acín, A. Unsupervised mapping of phase diagrams of 2D systems from infinite projected entangled-pair states via deep anomaly detection. SciPost Phys. 11, 025 (2021).

Wang, H., Weber, M., Izaac, J. & Yen-Yu Lin, C. Predicting properties of quantum systems with conditional generative models. Preprint at arXiv https://doi.org/10.48550/arXiv.2211.16943 (2022).

Tran, V. T. et al. Using shadows to learn ground state properties of quantum Hamiltonians. Machine Learning and Physical Sciences Workshop at the 36th Conference on Neural Information Processing Systems (NeurIPS) (2022).

Mills, K., Spanner, M. & Tamblyn, I. Deep learning and the Schrödinger equation. Phys. Rev. A 96, 042113 (2017).

Saraceni, N., Cantori, S. & Pilati, S. Scalable neural networks for the efficient learning of disordered quantum systems. Phys. Rev. E 102, 033301 (2020).

Huang, C. & Rubenstein, B. M. Machine learning diffusion Monte Carlo forces. J. Phys. Chem. A 127, 339–355 (2022).

Rupp, M., Tkatchenko, A., Müller, K.-R. & Von Lilienfeld, O. A. Fast and accurate modeling of molecular atomization energies with machine learning. Phys. Rev. Lett. 108, 058301 (2012).

Faber, F. A. et al. Prediction errors of molecular machine learning models lower than hybrid DFT error. J. Chem. Theory Comput. 13, 5255–5264 (2017).

Huang, H.-Y., Kueng, R., Torlai, G., Albert, V. V. & Preskill, J. Provably efficient machine learning for quantum many-body problems. Science 377, eabk3333 (2022).

Farhi, E., Goldstone, J., Gutmann, S. & Sipser, M. Quantum computation by adiabatic evolution. Preprint at arXiv https://doi.org/10.48550/arXiv.quant-ph/0001106 (2000).

Mizel, A., Lidar, D. A. & Mitchell, M. Simple proof of equivalence between adiabatic quantum computation and the circuit model. Phys. Rev. Lett. 99, 070502 (2007).

Childs, A. M., Farhi, E. & Preskill, J. Robustness of adiabatic quantum computation. Phys. Rev. A 65, 012322 (2001).

Aharonov, D. et al. Adiabatic quantum computation is equivalent to standard quantum computation. SIAM Rev. 50, 755–787 (2008).

Barends, R. et al. Digitized adiabatic quantum computing with a superconducting circuit. Nature 534, 222–226 (2016).

Albash, T. & Lidar, D. A. Adiabatic quantum computation. Rev. Mod. Phys. 90, 015002 (2018).

Du, J. et al. NMR implementation of a molecular hydrogen quantum simulation with adiabatic state preparation. Phys. Rev. Lett. 104, 030502 (2010).

Wan, K. & Kim, I. Fast digital methods for adiabatic state preparation. Preprint at arXiv https://doi.org/10.48550/arXiv.2004.04164 (2020).

Santosa, F. & Symes, W. W. Linear inversion of band-limited reflection seismograms. SIAM J. Sci. Stat. Comput. 7, 1307–1330 (1986).

Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. 58, 267–288 (1996).

Mohri, M., Rostamizadeh, A. & Talwalkar, A. Foundations of Machine Learning. (The MIT Press, 2018).

Huang, H.-Y., Kueng, R. & Preskill, J. Predicting many properties of a quantum system from very few measurements. Nat. Phys. 16, 1050–1057 (2020).

Elben, A. et al. Mixed-state entanglement from local randomized measurements. Phys. Rev. Lett. 125, 200501 (2020).

Elben, A. et al. The randomized measurement toolbox. Nat. Rev. Phys. 5, 9–24 (2023).

Wan, K., Huggins, W. J., Lee, J. & Babbush, R. Matchgate shadows for fermionic quantum simulation. Commun. Math. Phys. 404, 1–72 (2023).

Bu, K., Koh, D. E., Garcia, R. J. & Jaffe, A. Classical shadows with Pauli-invariant unitary ensembles. Npj Quantum Inf. 10, 6 (2024).

Efron, B., Hastie, T., Johnstone, I. & Tibshirani, R. Least angle regression. Ann. Stat. 32, 407–499 (2004).

Daubechies, I., Defrise, M. & De Mol, C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57, 1413–1457 (2004).

Combettes, P. L. & Wajs, V. R. Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 4, 1168–1200 (2005).

Cesa-Bianchi, N., Shalev-Shwartz, S. & Shamir, O. Efficient learning with partially observed attributes. J. Mach. Learn. Res. 12, 2857–2878 (2011).

Friedman, J., Hastie, T. & Tibshirani, R. Regularization paths for generalized linear models via coordinate descent. J. Stat. Softw. 33, 1 (2010).

Hazan, E. & Koren, T. Linear regression with limited observation. In Proceedings of the 29th International Conference on Machine Learning, 1865–1872 (2012).

Chen, Y. & de Wolf, R. Quantum algorithms and lower bounds for linear regression with norm constraints. Leibniz Int. Proc. Inf. 38, 1–21 (2023).

Van Kirk, K., Cotler, J., Huang, H.-Y. & Lukin, M. D. Hardware-efficient learning of quantum many-body states. Preprint at arXiv https://doi.org/10.48550/arXiv.2212.06084 (2022).

Huang, H.-Y. et al. Power of data in quantum machine learning. Nat. Commun. 12, 1–9 (2021).

Rahimi, A. & Recht, B. Random features for large-scale kernel machines. In Proceedings of the 20th International Conference on Neural Information Processing Systems, 1177–1184 (2007).

Liu, L., Shao, H., Lin, Y.-C., Guo, W. & Sandvik, A. W. Random-singlet phase in disordered two-dimensional quantum magnets. Phys. Rev. X 8, 041040 (2018).

White, S. R. Density matrix formulation for quantum renormalization groups. Phys. Rev. Lett. 69, 2863 (1992).

Schollwoeck, U. The density-matrix renormalization group in the age of matrix product states. Ann. Phys. 326, 96–192 (2011).

Cortes, C. & Vapnik, V. Support-vector networks. Mach. Learn. 20, 273–297 (1995).

Murphy, K. P. Machine Learning: A Probabilistic Perspective. (MIT press, 2012).

Jacot, A., Gabriel, F. & Hongler, C. Neural tangent kernel: Convergence and generalization in neural networks. In NeurIPS, pp. 8571–8580 (2018).

Novak, R. et al. Neural tangents: Fast and easy infinite neural networks in python. In International Conference on Learning Representations (2020).

Brown, T. et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 33, 1877–1901 (2020).

Deng, J. et al. ImageNet: a large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp. 248–255. (IEEE, 2009).

Saharia, C. et al. Photorealistic text-to-image diffusion models with deep language understanding. Adv. Neural Inf. Process. Syst. 35, 36479–36494 (2022).

Aharonov, D., Cotler, J. S. & Qi, X.-L. Quantum algorithmic measurement. Nat. Commun. 13, 887 (2022).

Chen, S., Cotler, J., Huang, H.-Y. & Li, J. Exponential separations between learning with and without quantum memory. In 2021 IEEE 62nd Annual Symposium on Foundations of Computer Science (FOCS), pp. 574–585. (IEEE, 2022).

Huang, H.-Y., Flammia, S. T. & Preskill, J. Foundations for learning from noisy quantum experiments. Preprint at arXiv https://doi.org/10.48550/arXiv.2204.13691 (2022).

Huang, H.-Y., Kueng, R. & Preskill, J. Information-theoretic bounds on quantum advantage in machine learning. Phys. Rev. Lett. 126, 190505 (2021).

Huang, H.-Y. et al. Quantum advantage in learning from experiments. Science 376, 1182–1186 (2022).

Bachmann, S., Michalakis, S., Nachtergaele, B. & Sims, R. Automorphic equivalence within gapped phases of quantum lattice systems. Commun. Math. Phys. 309, 835–871 (2012).

Hastings, M. B. & Wen, X.-G. Quasiadiabatic continuation of quantum states: the stability of topological ground-state degeneracy and emergent gauge invariance. Phys. Rev. B 72, 045141 (2005).

Osborne, T. J. Simulating adiabatic evolution of gapped spin systems. Phys. Rev. A 75, 032321 (2007).

Huang, H.-Y., Chen, S. & Preskill, J. Learning to predict arbitrary quantum processes. PRX Quantum 4, 040337 (2022).

Onorati, E., Rouzé, C., França, D. S. & Watson, J. D. Efficient learning of ground and thermal states within phases of matter. Preprint at arXiv https://doi.org/10.48550/arXiv.2301.12946 (2023).

Lewis, L. et al. Improved machine learning algorithm for predicting ground state properties. improved-ml-algorithm. https://doi.org/10.5281/zenodo.10154894 (2023).

Acknowledgements

The authors thank Chi-Fang Chen, Sitan Chen, Johannes Jakob Meyer, and Spiros Michalakis for valuable input and inspiring discussions. We thank Emilio Onorati, Cambyse Rouzé, Daniel Stilck França, and James D. Watson for sharing a draft of their new results on efficiently predicting properties of states in thermal phases of matter with exponential decay of correlation and in quantum phases of matter with local topological quantum order82. LL is supported by Caltech Summer Undergraduate Research Fellowship (SURF), Barry M. Goldwater Scholarship, and Mellon Mays Undergraduate Fellowship. HH is supported by a Google PhD fellowship and a MediaTek Research Young Scholarship. JP acknowledges support from the U.S. Department of Energy Office of Science, Office of Advanced Scientific Computing Research (DE-NA0003525, DE-SC0020290), the U.S. Department of Energy, Office of Science, National Quantum Information Science Research Centers, Quantum Systems Accelerator, and the National Science Foundation (PHY-1733907). The Institute for Quantum Information and Matter is an NSF Physics Frontiers Center.

Author information

Contributions

H.H. and J.P. conceived the project. L.L. and H.H. developed the mathematical aspects of this work. L.L., H.H., S.L., and V.T. conducted the numerical experiments and wrote the open-source code. L.L., H.H., R.K., and J.P. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lewis, L., Huang, HY., Tran, V.T. et al. Improved machine learning algorithm for predicting ground state properties. Nat Commun 15, 895 (2024). https://doi.org/10.1038/s41467-024-45014-7
