Abstract

Near-eye displays are a fundamental technology for next-generation computing platforms for augmented reality and virtual reality. However, challenges remain in delivering immersive and comfortable visual experiences to users, such as achieving a compact form factor, resolving the vergence-accommodation conflict, and achieving high resolution with a large eyebox. Here we show a compact holographic near-eye display concept that combines the advantages of waveguide displays and holographic displays to overcome these challenges on the path towards true 3D holographic augmented reality glasses. By modeling the coherent light interactions and propagation via the waveguide combiner, we demonstrate control of the output wavefront using a spatial light modulator located at the input coupler side. The proposed method enables 3D holographic displays via exit-pupil expanding waveguide combiners, providing a large software-steerable eyebox. It also offers additional advantages such as resolution enhancement by suppressing phase discontinuities caused by the pupil replication process. We build prototypes to verify the concept with experimental results and conclude the paper with a discussion.


Introduction

Near-eye display technology is evolving rapidly along with the pursuit of next generation computing platforms. For augmented reality (AR)1 in particular, various see-through near-eye display architectures have been invented and explored in the recent decades. Examples include birdbath type displays, curved mirror type displays, retinal projection displays, and pin mirror displays2,3,4. Among the plethora of architectures, waveguide image combiners (or exit-pupil expanding waveguides) remain a leading candidate for augmented reality glasses in the industry because of their compact form factor5,6. Additionally, there has been significant effort to realize 3D holographic displays that provide realistic visual experiences7. In this work, we propose a display architecture that combines the advantages of both waveguide displays and holographic displays, enabling the path towards true 3D holographic AR glasses.

In near-eye display applications, the waveguide image combiner, or waveguide display, refers to a thin, transparent slab that guides light in a total internal reflection (TIR) mode and replicates the exit pupils delivered to the user’s eye. These waveguides can be designed using different types of light coupling elements. Geometric waveguides use partially reflective surfaces inside the slab to re-direct and extract the light from the waveguide5,8,9,10. Diffractive waveguides may utilize surface relief gratings, volume Bragg gratings, polarization gratings, and metasurface or geometric phase elements as in/out-couplers11,12,13. TIR propagation keeps the optical path inside the waveguide unobstructed, and no bulky projector or imaging optics need to be placed in front of the user’s eye. The image projector of a waveguide display is typically located at the temple side with an infinity corrected lens, providing high resolution images. The most distinctive advantage of a waveguide is its étendue expansion capability through pupil replication14. This provides a sufficient eyebox with a fairly large field of view, whereas many other architectures suffer from a trade-off between the two imposed by limited étendue. Such advantages have made waveguide displays the leading technology for AR displays in recent years4.

Despite the advantages of waveguide displays, there are some limitations to be addressed. First, waveguides can only convey a fixed depth, typically as infinity conjugate images. If finite-conjugate images are projected into the waveguide, the pupil replication process produces copies of different optical paths and aberrations that create severe ghost noise, which is often called focus spread effect6. Generating natural focus cues and addressing the vergence-accommodation conflict7,15 are among the challenging goals of AR in the pursuit of realistic and comfortable visual experiences. Dual or multi-imaging plane waveguide architectures have been studied16,17, but inherently lead to a bulkier form factor and diminished performance, along with added hardware restrictions. Additionally, achieving sufficient brightness with conventional light sources, such as micro LEDs18, is challenging due to the low efficiency of waveguide image combiners. Although laser light sources could greatly reduce the loss from coupling efficiency, their use with waveguides is restricted because coherent light interaction during TIR propagation leads to artifacts and significant image quality degradation.

Meanwhile, holographic display technology is believed to be the ultimate 3D display approach, which modulates the wavefront of light using spatial light modulators (SLMs)7. It also offers unique benefits such as aberration-free, high-resolution images, per-pixel depth control, ocular parallax depth cues, vision correction functionality19,20,21, as well as a large color gamut. Recently, a lot of progress has been made in the field of computer-generated hologram (CGH) rendering, garnering more attention from the industry22,23,24,25,26,27,28,29,30,31,32,33,34,35,36. Several conventional issues with holographic displays, including speckle, image quality, and heavy computational load, have been shown to be resolved with the help of enhanced CGH rendering models and the increased computing power of recent graphics processing units (GPUs)37,38,39,40. However, designing a compact architecture for near-eye holographic displays remains an unsolved problem due to limited étendue27,41. Retinal projection type designs have been explored with a holographic projector at the temple side that projects the hologram via oblique free-space projection to the eyepiece combiner22,42,43. However, such configurations have limited space and angular bandwidth to transmit enough étendue from the temple side to the eyepiece even with mechanical pupil steering42,43, making the ergonomic glasses form factor an even more ambitious goal.

There have been early efforts to use waveguides as an illumination source to produce a projection pattern or image formed by an out-coupler grating with an embedded hologram pattern44,45,46. Because only a static image could be displayed and no information was carried inside the waveguide until out-coupled, this approach was not suitable for augmented reality display purposes, but represented a very early stage attempt to combine waveguides and holograms together.

Recently, researchers have attempted to implement dynamic holographic displays using light guiding slabs47, with further efforts being made to compensate for aberrations and improve image quality48,49,50. While they share similar motivations for transmitting holograms via waveguides, there are fundamental limitations on scalability because the method is not intended to support pupil replication; in other words, the focus spread effect remains unsolved. The light guiding slab must be thick enough to avoid replication; otherwise the overlapped wavefront becomes scrambled, creating severe artifacts such as multiple ghost images and low contrast. As a result, thick substrates (3 mm47 to 8 mm49) are chosen for such architectures, which would not be suitable for a true glasses form factor. Additionally, the eyebox and field of view are fundamentally limited to be small in such architectures6.

In this study, we present a compact near-eye display system titled waveguide holography, which combines the merits of waveguide image combiners and holographic displays. Our approach fundamentally differs from previous works47,48,49,50 as it addresses the focus spread effect of exit-pupil expanding waveguides. The core idea is to model the coherent light interaction inside exit-pupil expanding waveguides as a propagation with multi-channel kernels. Precise model calibration is enabled by a complex wavefront capturing system and algorithm based on phase-shifting digital holography. As a result, we demonstrate that the out-coupled wavefront from the waveguide can be precisely controlled by modulating the input wavefront using our model.

We experimentally verify the capability of displaying full 3D images and the étendue expansion, which enables a large software-steered eyebox. In addition, we demonstrate that our method offers enhanced resolution beyond the limit of conventional waveguide displays. We present a detailed analysis of architecture design and scalability in the Supplementary Material (see Supplementary Figs. 4-8), and conclude in the Discussion section with some limitations as well as directions for future work.

Results

Architecture

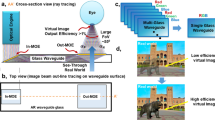

Figure 1a illustrates the architecture of the proposed system, while Fig. 1b, c illustrate the compact prototype and benchtop prototype, respectively. The system consists of a collimated laser light source, a spatial light modulator (SLM), an exit-pupil expanding waveguide with surface relief gratings, and linear polarizers laminated on the SLM and the out-coupler of the waveguide. Compared with the conventional waveguide display, the major difference is that the image projector is replaced with the hologram projection module. The SLM is placed without any projection lens, eliminating the need for a physical propagation distance and achieving a lightweight design. The benchtop prototype is built on the optical table with the same architecture and similar specifications, except that the SLM is relayed with a de-magnifying 4-f imaging system. The benchtop prototype is useful for iterating design parameters and benchmarking the performance, while the compact prototype showcases the form factor. More details and further miniaturization methods are provided in the Methods section.

a Conceptual illustration of the proposed display architecture using the exit-pupil expanding waveguide (WG). The hologram projection module consists of an SLM, a linear polarizer (LP), a half wave plate (HWP), and a beam splitter (BS) for the illumination path. Apertures with different colors indicate the software-steered eyebox, which can be formed at arbitrary positions in 3D space at the out-coupler side, fully exploiting the étendue expansion capability of the waveguide. b A photograph of the compact prototype for the proof-of-concept. The SLM size can be further reduced since the active area is only as large as the input coupler size, which is 20% of the total SLM area. c A benchtop prototype built for design iteration and benchmarking the performance. L1 and L2: lenses for the 4-f imaging system, M: mirror. See Methods for the details of the system implementation. Graphics rendered by Eric Davis.

The input light is modulated by the SLM and coupled by the in-coupler grating into the waveguide. The light propagates in a total internal reflection mode and is diffracted by an exit-pupil expanding (EPE) grating and an out-coupler grating that are typically designed as leaky gratings14. This pupil-replication process generates manifold shifted copies of the wavefront with different optical paths inside the waveguide. These copies interfere with each other, so the phase and intensity of the final output wavefront are intricately scrambled. In conventional waveguide image combiners, these phenomena are understood as coherence artifacts that should be avoided. However, we fully exploit this coherent interaction of light to precisely shape the output wavefront using the spatial light modulator of the hologram projection module.

Note that the étendue of transmitted light is expanded by the exit-pupil expanding waveguide, but the bandwidth of information is unchanged. Thus, controlling the entire output wavefront by modulating only the input wavefront is fundamentally an over-constrained problem. To overcome the shortage of information bandwidth, we take advantage of the fact that most of the output wavefront does not enter the eye pupil. We set a virtual target aperture in the eyebox domain as a region of interest (ROI) for wavefront shaping, and this aperture can be computationally steered to match the size and 3D location of the user’s eye pupil. This idea is similar to some of the previous CGH generation algorithms51. With the aid of eye tracking, the system can fully utilize the expanded étendue and achieve, without mechanical steering, a software-steered eyebox as large as conventional waveguide displays can provide.

Modeling of hologram propagation in waveguides

In order to model the coherent light interaction inside the exit-pupil expanding waveguide, we start by assuming that the waveguide can be approximated as a linear shift invariant (LSI) system. The light in-coupling and out-coupling process of the waveguide can be simplified as a combination of three major optical interactions: optical propagation in the waveguide substrate; total internal reflection at the substrate boundary; and first-order diffraction at the gratings. All three interactions are linear operators with complex-valued input and output. Also, the spatial shift invariance property can be satisfied under the assumption of homogeneous grating profiles; in other words, each grating has neither optical power nor a boundary. Although the gratings do have physical boundaries, this condition is approximately satisfied for light paths that do not encounter grating boundaries. The LSI assumption offers key advantages by allowing the waveguide system to be modeled within the Fourier optics framework. First, all the complicated interactions can be simplified as a single convolution operation. This interpretation is computationally efficient compared to tracking all the light interactions of different paths inside the waveguide, which involves manifold operations with heavy computation. Second, based on the assumption, we build a differentiable forward model that is useful for model calibration and CGH rendering. The analytic derivation of the convolution kernel and its gradient is provided in the Supplementary Material and Supplementary Fig. 1.
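As a minimal sketch of the single-convolution view, the NumPy snippet below applies a toy kernel made of three shifted, attenuated copies and checks that one FFT-based convolution reproduces the explicit sum over copies. The kernel is only a stand-in for pupil replication, not the analytic kernel derived in the Supplementary Material; the grid size, shifts, and weights are illustrative assumptions.

```python
import numpy as np

def lsi_propagate(u_in, kernel):
    """Apply an LSI system as one circular convolution, evaluated with FFTs."""
    return np.fft.ifft2(np.fft.fft2(u_in) * np.fft.fft2(kernel))

rng = np.random.default_rng(0)
n = 64
# Phase-only input field, as a phase SLM would produce.
u_in = np.exp(1j * rng.uniform(0.0, 2.0 * np.pi, size=(n, n)))

# Hypothetical kernel: three replicas, shifted in steps of 8 px and weakened.
shifts = [0, 8, 16]
weights = [1.0, 0.6 * np.exp(0.7j), 0.36 * np.exp(1.4j)]
kernel = np.zeros((n, n), dtype=complex)
for s, w in zip(shifts, weights):
    kernel[0, s] = w

u_out = lsi_propagate(u_in, kernel)

# Reference: explicitly sum the shifted copies (path-by-path bookkeeping).
u_direct = sum(w * np.roll(u_in, s, axis=1) for s, w in zip(shifts, weights))
max_error = np.max(np.abs(u_out - u_direct))
```

The FFT route costs the same regardless of how many replicas the kernel contains, whereas the explicit sum grows with the number of paths.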

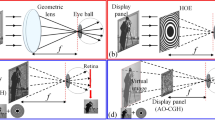

Despite the advantages of the LSI assumption, typical waveguides are not perfect shift invariant systems in practice. A plethora of factors violate the spatial shift invariance assumption, such as the non-uniformity of the grating, the surface flatness of the substrate, or the slant angle of the slab. In particular, physical boundaries of the grating introduce clipping of the wavefront and edge diffraction, resulting in different optical paths depending on the position in the input domain. In addition, defects in the grating and unwanted scattering from particles or dust all contribute to invalidating the LSI approximation. Therefore, we introduce the multi-channel convolution model with complex apertures to handle the spatially variant nature of the system. Our model pipeline, illustrated in the upper part of Fig. 2, consists of the multi-channel kernels h and the complex apertures Q and R; their visualization is presented in Fig. 3. All the apertures and kernels are complex-valued 2D matrices, and their sizes depend on the input SLM size and output ROI size. Aperture Q is intended to model the in-coupler of the waveguide, and also helps to select a different convolution path depending on the position at the in-coupler. Each h is intended to emulate a different possible light interaction path inside the waveguide, which is the main source of the spatial variance. Aperture R additionally calibrates the intensity and phase fluctuation of the resultant field after the convolution, possibly caused by the out-coupler grating or EPE grating. By merging the output wavefronts from all the paths as a linear complex-valued summation, the model acquires the capacity to capture spatially variant properties. The linear summation also induces a smooth transition between adjacent positions, which prevents the model from becoming too sensitive while still maintaining its differentiable property.
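The structure described here can be sketched as follows, assuming for simplicity that the input and output share one grid and that each convolution is circular (the actual model ties the matrix sizes to the SLM and ROI sizes). The function name, array shapes, and random parameters are hypothetical; in practice Q, h, and R would be learned during calibration.

```python
import numpy as np

def multichannel_forward(u_in, Q, h, R):
    """Return sum_k R[k] * (h[k] conv (Q[k] * u_in)) using circular FFT convolution.

    u_in: (ny, nx) complex input field; Q, h, R: (K, ny, nx) complex arrays.
    """
    masked = Q * u_in[None, :, :]                    # per-channel input aperture
    spectrum = np.fft.fft2(masked) * np.fft.fft2(h)  # fft2 acts on the last two axes
    branches = np.fft.ifft2(spectrum)                # per-channel convolution
    return np.sum(R * branches, axis=0)              # complex-valued linear merge

rng = np.random.default_rng(1)
K, n = 4, 32

def random_complex(shape):
    return rng.normal(size=shape) + 1j * rng.normal(size=shape)

# Random placeholders standing in for calibrated parameters.
Q, h, R = (random_complex((K, n, n)) for _ in range(3))
u1 = np.exp(1j * rng.uniform(0, 2 * np.pi, (n, n)))
u2 = np.exp(1j * rng.uniform(0, 2 * np.pi, (n, n)))

# The model stays linear in the input field even though it is shift variant.
lhs = multichannel_forward(2.0 * u1 + 1j * u2, Q, h, R)
rhs = 2.0 * multichannel_forward(u1, Q, h, R) + 1j * multichannel_forward(u2, Q, h, R)
linearity_error = np.max(np.abs(lhs - rhs))
```

Because Q and R multiply the field pointwise, shifting the input no longer simply shifts the output, which is how this structure departs from a pure LSI system while remaining differentiable.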

The upper part represents the proxy path that models the waveguide propagation system, while the middle part represents the real-world path, i.e., the experimental pipeline that measures the output complex wavefront from the waveguide and is used to generate the dataset for model calibration. Once the model is calibrated, a free space propagation can be added to render the CGH. Robot images are rendered by the Tech Art team at Meta.

a Visualizations of optimized model parameters as gray-scale amplitude and color map phase images: multi-channel complex apertures Q (top), multi-channel kernels h (middle), and R (bottom). b Comparison of the measured output wavefront and the wavefront estimated using the model. The orange and red insets are sampled at the same position in the eyebox domain, as are the blue and green insets. Magnified images of the insets are displayed on the right side for visual comparison. c The ablation analysis result of complex wavefront estimation performance, with standard deviations, for variations of the modeling (left), structures of the multi-channel model (middle), and scaling of the model size by changing the ROI size and the number of channels (right).

We also add parameters to model the SLM response and the physical propagation of wavefronts in front of the waveguide model. The SLM modeling consists of a crosstalk kernel and a spatially varying phase response function. The physical propagation includes free space propagation, as well as 3D tilt and homography changes arising from alignment mismatch and aberration. Details are provided in the Methods section and Supplementary Material.

Model calibration using complex wavefront camera

The real-world path of Fig. 2 illustrates the complex wavefront dataset acquisition pipeline. We implement a Mach-Zehnder type phase-shifting interferometer system at the out-coupler side of the waveguide that captures the interferogram of the output wavefront from the waveguide and the plane reference beam52,53. The complex wavefront is then retrieved using the phase-shifting algorithm. We call the interferometer system the wavefront camera for convenience. Random phase input is used for generating the dataset since it contains all the frequency components uniformly. After the data acquisition is finished, the loss during the training stage is calculated as the L1 norm between the estimated complex field and the measured complex field in the dataset.
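A minimal sketch of the retrieval and the loss is given below. It assumes a four-step scheme with reference phase shifts of 0, π/2, π, and 3π/2 and a real plane reference of known amplitude; the text does not state the number of steps, so this is one common choice rather than the setup actually used. The function names and the mean-based normalization of the L1 loss are also assumptions.

```python
import numpy as np

def four_step_retrieve(frames, ref_amplitude):
    """Recover the object field from four interferograms taken with reference
    phase shifts 0, pi/2, pi, 3*pi/2 and a real plane reference of known amplitude."""
    i0, i1, i2, i3 = frames
    return ((i0 - i2) + 1j * (i1 - i3)) / (4.0 * ref_amplitude)

def l1_complex_loss(u_est, u_meas):
    """Mean absolute difference between two complex fields."""
    return np.mean(np.abs(u_est - u_meas))

rng = np.random.default_rng(2)
n = 32
u_true = rng.uniform(0.2, 1.0, (n, n)) * np.exp(1j * rng.uniform(0, 2 * np.pi, (n, n)))
ref_amplitude = 1.5

# Simulated intensity-only camera frames: |object + phase-shifted reference|^2.
deltas = [0.0, 0.5 * np.pi, np.pi, 1.5 * np.pi]
frames = [np.abs(u_true + ref_amplitude * np.exp(1j * d)) ** 2 for d in deltas]

u_meas = four_step_retrieve(frames, ref_amplitude)
retrieval_error = np.max(np.abs(u_meas - u_true))
loss_same = l1_complex_loss(u_true, u_meas)
```

In this noise-free simulation the differences between frames cancel the intensity terms, leaving the real and imaginary parts of the field directly.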

We emphasize that the complex wavefront capture is one of the key factors that enables the precise training of the waveguide propagation model. Compared with generic free space propagation, the light propagation inside the waveguide generates complicated overlapping and coherent interference of replicated wavefronts. By measuring the intensity only, it is difficult to infer the waveguide kernels and complex apertures in the model, as the useful information is buried in the noisy interference pattern. With the wavefront camera, access to the phase information makes it possible to retrieve the coherent light interaction in the waveguide.

Also, our method offers a one-time calibration for a large 3D eyebox area. Once the model is trained, the pupil size, location, and position can be freely selected within the ROI by cropping a different area from the estimated wavefront. Additionally, the eye relief of the eyebox can be changed by numerically propagating the wavefront. This is a significant difference from conventional camera-in-the-loop calibration methods, which are not practical for calibrating all the possible pupil locations and sizes separately. The size of the ROI of the model can be selected by scaling the model size to its area. An eyebox of up to 7 mm square could be fitted to the model, mainly restricted by the sensor size of the wavefront camera.
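The two operations mentioned here, cropping a pupil from the estimated wavefront and numerically changing the eye relief, can be sketched as below. The angular spectrum method is used as one standard choice of numerical propagator; the text does not name the propagator, and the sampling pitch, grid size, and distances are illustrative assumptions.

```python
import numpy as np

def angular_spectrum(u, wavelength, pitch, z):
    """Propagate a sampled complex field by distance z (metres) in free space."""
    ny, nx = u.shape
    fx = np.fft.fftfreq(nx, d=pitch)
    fy = np.fft.fftfreq(ny, d=pitch)
    FX, FY = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    H = np.where(arg > 0, np.exp(1j * kz * z), 0.0)  # drop evanescent components
    return np.fft.ifft2(np.fft.fft2(u) * H)

def crop_pupil(u, center, size):
    """Select a square pupil of `size` pixels around `center` = (row, col)."""
    cy, cx = center
    half = size // 2
    return u[cy - half:cy - half + size, cx - half:cx - half + size]

rng = np.random.default_rng(4)
wavelength = 532e-9   # metres, the design wavelength given in Methods
pitch = 8e-6          # hypothetical sampling pitch of the estimated wavefront
u_roi = np.exp(1j * rng.uniform(0, 2 * np.pi, (128, 128)))

u_shifted = angular_spectrum(u_roi, wavelength, pitch, 5e-3)   # move eye relief by 5 mm
pupil = crop_pupil(u_shifted, center=(40, 90), size=32)        # pick one pupil position

# Propagating back by the same distance should return the original field.
u_back = angular_spectrum(u_shifted, wavelength, pitch, -5e-3)
roundtrip_error = np.max(np.abs(u_back - u_roi))
```

Both steps operate on the already estimated wavefront, which is why no additional capture is needed when the pupil position or eye relief changes.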

Ablation analysis

Figure 3b illustrates the estimation result of the output complex wavefront. To evaluate the contribution of the different elements constituting the model, ablation analysis is performed as presented on the left of Fig. 3c, where the ROI is set as 3.5 mm square. First, the kernel-only model consists of only a single h kernel, while the physical propagation module, Q, R, and DC components are omitted. Then we add the physical propagation module to the kernel-only model. The single-channel model is the full pipeline including h, Q, R, and DC components as shown in Fig. 2. We use peak signal-to-noise ratio (PSNR) and complex PSNR (c-PSNR) values to evaluate the similarity between the estimated wavefront and the measured wavefront. The complex PSNR is calculated by concatenating the real and imaginary parts of the complex wavefront to form a real-valued matrix. A higher value indicates that the model predicts the output wavefront with higher precision. The c-PSNR tends to be lower than the PSNR because even a slight change in the phase offset results in a large error distance in the complex number domain. The result shows that the complex apertures and DC component help to enhance the fidelity of the model. Also, it can be verified that the multi-channel model significantly boosts the performance compared with the single-channel model. The gain eventually saturates, as the 9-channel and 16-channel models do not show a noticeable difference.
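The two metrics can be sketched as follows. The text specifies only the concatenation of real and imaginary parts for the c-PSNR; taking the amplitude for the plain PSNR and using the maximum reference amplitude as the peak value are assumptions made here for illustration.

```python
import numpy as np

def psnr(est, ref, peak):
    """Peak signal-to-noise ratio in dB between two real-valued arrays."""
    mse = np.mean((est - ref) ** 2)
    return 10.0 * np.log10(peak**2 / mse)

def amplitude_psnr(u_est, u_ref):
    """PSNR on amplitudes only (assumed definition of the plain PSNR)."""
    peak = np.max(np.abs(u_ref))
    return psnr(np.abs(u_est), np.abs(u_ref), peak)

def complex_psnr(u_est, u_ref):
    """PSNR on the real and imaginary parts stacked into one real matrix."""
    peak = np.max(np.abs(u_ref))
    stack = lambda u: np.concatenate([u.real, u.imag], axis=0)
    return psnr(stack(u_est), stack(u_ref), peak)

rng = np.random.default_rng(5)
shape = (64, 64)
u_ref = rng.uniform(0.5, 1.0, shape) * np.exp(1j * rng.uniform(0, 2 * np.pi, shape))

# Estimate with a small global phase offset plus weak complex noise.
noise = 0.01 * (rng.normal(size=shape) + 1j * rng.normal(size=shape))
u_est = u_ref * np.exp(0.1j) + noise

a_psnr = amplitude_psnr(u_est, u_ref)
c_psnr = complex_psnr(u_est, u_ref)
```

In this toy case the phase offset leaves the amplitudes almost unchanged but moves every sample in the complex plane, so `c_psnr` comes out lower than `a_psnr`, matching the behaviour described above.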

We also tested the contribution of each multi-channel parameter Q, h, and R. When Q is set as a single channel (1 − N − N), all the kernels h share the same input complex aperture; therefore the model loses the capacity to handle the spatially variant property described above. As a result, the fidelity of prediction drops significantly, as shown in Fig. 3c. Meanwhile, when R is set as a single channel (N − N − 1), the PSNR drop is relatively slight. This result aligns with the physical intuition of the modeling, as R is expected to capture the wavefront modulation at the out-coupler side or outside of the waveguide, which we expect to show a more homogeneous response than the interactions inside the waveguide.

On the right of Fig. 3c, we show the scalability of the model by varying the size of the output ROI. The size of the ROI does not significantly affect the model performance, which aligns with the assumptions used in the modeling. At the in-coupler side, channels that share a similar convolution path can be grouped spatially, as shown in the shape of Q. The pupil replication process then extends the receptive field of each channel to the entire out-coupler area. Therefore, the required number of channels does not depend on the out-coupler domain, but is predominantly decided in the in-coupler domain.

CGH rendering

Once the model training is finished, the CGH can be calculated by adding a numerical propagation at the end of the model pipeline with a parameterized input phase of the SLM, as illustrated in Fig. 2. The input phase is initialized as random and propagates through the forward path to generate the output retinal image. The loss is calculated as an L1 norm of the difference between the target image and the model output. For 3D contents, the loss can be calculated at multiple depths and added together. Focal stacks or light field images can be used to promote the accurate blurring effect or ocular parallax24,54,55,56. The loss is backpropagated to update the input phase, and the whole process is iterated until the estimated result reaches a certain PSNR value.
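The iterative loop can be illustrated with a deliberately simplified sketch. Several things differ from the pipeline described above and are assumptions: the calibrated waveguide model is replaced by a random all-pass transfer function `H`, there is no additional propagation to a retinal plane, the loss is a squared amplitude error rather than the L1 loss (so that plain gradient descent with a fixed step behaves smoothly), and the gradient is written out by hand instead of using automatic differentiation.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 32

# Stand-in for the calibrated model: an all-pass transfer function with random phase.
H = np.exp(1j * rng.uniform(0, 2 * np.pi, (n, n)))

def forward(phase):
    """Phase-only SLM field passed through the linear stand-in model."""
    return np.fft.ifft2(H * np.fft.fft2(np.exp(1j * phase)))

def adjoint(w):
    """Adjoint (conjugate transpose) of the linear part of `forward`."""
    return np.fft.ifft2(np.conj(H) * np.fft.fft2(w))

# Target amplitude: a bright square on a dim background, scaled to unit mean power
# so that its energy matches the unit-amplitude SLM field.
target = np.full((n, n), 0.2)
target[12:20, 12:20] = 1.0
target /= np.sqrt(np.mean(target**2))

def loss_and_grad(phase):
    u = forward(phase)
    amp = np.abs(u)
    loss = np.sum((amp - target) ** 2)
    w = (amp - target) * u / (amp + 1e-12)   # error carried on the output phase
    grad = 2.0 * np.imag(np.conj(np.exp(1j * phase)) * adjoint(w))
    return loss, grad

phase = rng.uniform(0, 2 * np.pi, (n, n))    # random initialization
step = 0.05
losses = []
for _ in range(300):
    loss, grad = loss_and_grad(phase)
    losses.append(loss)
    phase -= step * grad                     # gradient descent on the SLM phase
```

With a differentiable framework the hand-written gradient would be replaced by backpropagation, and a 3D target would add one loss term per depth plane.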

Experimental results

Figure 4 demonstrates the experimental results captured with the benchtop prototype, where the field of view is slightly less than 11 degrees diagonally, determined by the SLM pixel pitch. Further system details are provided in the Methods section and Supplementary Material. Capture is performed using two different methods. First, we place an imaging camera with a 3D-printed entrance pupil mask matching the exact size and position of the targeted ROI and capture the image directly. Second, we capture the complex wavefront in the eyebox domain using the wavefront camera and numerically propagate it to the image plane. The wavefront camera can avoid aberration from the camera lens and alignment error because numerical propagation replaces the physical aperture and camera lens. It also offers precise numerical refocusing capability with a much larger depth range. However, the phase-shifting process could add noise to the reconstructed image52. We use both capturing methods to evaluate the results. The first column of Fig. 4a is presented for comparison, where the waveguide module in the pipeline has been replaced with a generic wave propagation function that is agnostic to the waveguide. It is noteworthy that our model improves the image quality significantly even when the image is displayed at infinite depth, where there is no explicit presence of ghost noise. When finite-depth holograms are displayed without our method, the images suffer severely from ghost noise and aberration created by duplicated pupils. The second and third columns of Fig. 4a show the display results using our model captured with the imaging camera and wavefront camera, respectively, with the latter showing slightly higher resolution, albeit with marginally diminished contrast as previously discussed. The results verify that the focus spread artifacts are solved and holograms are reconstructed at the desired depths via the waveguide.

a The first column illustrates the results captured without our method, while the second and third columns illustrate the results with holograms generated using our model, captured with the imaging camera and wavefront camera, respectively. Finite depth images are displayed at 3 diopters (D) from the user’s pupil. The yellow/blue insets correspond to 1.3 degrees of field of view and the red inset corresponds to 10 arcmin. The limited fill factor of the SLM generates a DC noise at the center of the field of view. b Demonstration of the full depth range. The infinity diopter indicates the user’s pupil plane, and the image is captured directly by placing the camera sensor there without a lens. See Supplementary Figs. 9-14 for more results. Cat image by Lali Masriera (CC BY 2.0), Seattle skyline image by fiction-parade (CC BY-SA 2.0), bicycle image by Fiore Power (CC BY 2.0).

Figure 4b shows the holograms generated and captured over the full depth range from zero to infinite distance. We note that Fig. 4a, b are captured at arbitrarily selected eyebox positions that differ from each other. Once the model is calibrated, any size and 3D location of the eyebox can be chosen without additional pupil calibration. We provide more results demonstrating the large eyebox and the effect of pupil offset in the Supplementary Material.

3D display results are presented in Fig. 5. Display results of the compact prototype are presented in Fig. 5b, where the scene is captured through the waveguide to demonstrate the see-through quality. The 4K SLM used in the prototype exhibits a phase flicker artifact that compromises calibration accuracy and image quality, with further details elaborated in the Methods section. Figure 5c shows the temporally multiplexed 3D results captured with the wavefront camera. The CGH is rendered using a focal stack target (12 planes) with accurately rendered blur and occlusion. Since our waveguide is designed for a single wavelength, we showcase pseudo-color images by merging separately captured RGB channel images in order to facilitate the intuitive visual perception of the 3D effect and image quality. View Supplementary Movie 1 (ref. 57) for the continuous focus change.

a All fish and dandelion images are displayed with a single frame of 3D hologram and captured at different focus distances. b Augmented reality display results captured directly through the compact prototype glasses. The dandelion image is displayed at 3 diopters and the fish is displayed at 1 diopter. c Full 3D results captured with temporally multiplexed CGH (pseudo-color). RGB channel images are captured separately with the same wavelength and merged to facilitate the visual perception of the 3D effect and image quality. Each color channel has 3 sub-frames. View Supplementary Movie 1 (ref. 57) for the continuous focus change. Robot images are rendered by the Tech Art team at Meta.

In an ideal lossless waveguide, the angular resolution of the transmitted wavefront is decided by the number of the modes and mode spacing that a waveguide can support for the monochromatic light with wavelength of λ as:

where t is the thickness of the substrate and θT is the TIR angle field of view component. However, this does not hold in typical waveguide displays because a waveguide image combiner consists of leaky diffraction gratings with finite boundaries. Numerous beam clippings at the edges of the gratings during the pupil replication, along with clipping at the user’s eye pupil, reduce the effective numerical aperture and the resolution. This beam clipping effect has been an inevitable degradation factor that sets the fundamental limit of the resolution in waveguide display systems in most cases. Additionally, there are various non-idealities in the system that further degrade the resolution, such as aberration from the projection module or surface flatness.

We demonstrate that such resolution limitations can be overcome by adopting holographic displays, fully utilizing the coherent nature of light. With knowledge of the light interaction in the waveguide, the phase discontinuities caused by beam clippings can be stitched to achieve a smooth phase in the eyebox. Figure 6 illustrates the experimental results of displaying a tilted plane wave target, captured by the wavefront camera. Without our method, severe phase discontinuities are observed in the wavefront. With the optimization, it can be visually verified that the phase discontinuity is minimized over the pupil. Also, the amplitude is optimized to be more uniform, so that the output wavefront approaches an ideal infinite-conjugate plane wave. This effectively increases the numerical aperture of the display system and improves the resolution. The point spread function (PSF) and modulation transfer function (MTF) in Fig. 6 clearly visualize the improvement. The result shows that sub-arc-minute resolution is achieved with an over threefold increase in Strehl ratio. The Strehl ratio is calculated using the Mahajan formula58.
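For reference, the Mahajan approximation estimates the Strehl ratio from the variance of the pupil phase error, S ≈ exp(−σ²) with σ in radians. The sketch below compares it with the on-axis PSF peak ratio for a synthetic uniform-amplitude pupil and derives a PSF and MTF from a pupil field by Fourier transform. The random phase error, grid size, and zero-padding factor are illustrative assumptions, not measured data.

```python
import numpy as np

def strehl_mahajan(phase_error):
    """Mahajan approximation: S ~ exp(-sigma^2), sigma = RMS phase error in radians."""
    return np.exp(-np.var(phase_error))

def strehl_direct(phase_error):
    """On-axis PSF peak relative to a flat wavefront, for a uniform-amplitude pupil."""
    return np.abs(np.mean(np.exp(1j * phase_error))) ** 2

def psf_and_mtf(pupil_field, pad=4):
    """PSF as |FFT|^2 of the zero-padded pupil field; MTF as |FFT| of the PSF."""
    n = pupil_field.shape[0]
    padded = np.zeros((pad * n, pad * n), dtype=complex)
    padded[:n, :n] = pupil_field
    psf = np.abs(np.fft.fftshift(np.fft.fft2(padded))) ** 2
    psf /= psf.sum()
    mtf = np.abs(np.fft.fft2(psf))
    return psf, mtf / mtf[0, 0]

rng = np.random.default_rng(6)
phase_error = rng.normal(0.0, 0.3, (64, 64))   # hypothetical residual phase, 0.3 rad RMS

s_mahajan = strehl_mahajan(phase_error)
s_direct = strehl_direct(phase_error)
psf, mtf = psf_and_mtf(np.exp(1j * phase_error))
```

For small, roughly Gaussian phase errors the two Strehl estimates agree closely; large phase discontinuities across the pupil lower both.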

The top row and bottom row illustrate experimentally captured output wavefronts using a tilted plane wave as a target, which forms a single image point at an infinite distance, without and with our method, respectively. In the top row, the target tilted plane wave is used as the input wavefront for the waveguide, without the knowledge of the waveguide model. In the bottom row, the output wavefront is optimized to form the target tilted plane wave using our model. The carrier frequency is removed to visualize phase discontinuities by dividing with the target wavefront phase. On the right, the PSFs and MTFs are obtained from the measured wavefronts. In the PSF plots, a single pixel corresponds to 0.53 arcmin. The axis of MTF plot is in cycles per degree (cpd).

Discussion

The following outlines current limitations and challenges of our work as topics for future research. In the current prototypes, the SLM causes some artifacts such as DC noise and phase flickering. Also, the FOV is limited by the pixel pitch of the SLM. Using a projection lens could increase the FOV by sacrificing form factor; nevertheless, we choose to showcase the feasibility of the ultimate lens-free architecture, betting on future advancements in micro-display technology. With the growing expectations of AR/VR, there are ongoing efforts in academia and industry that aim to achieve breakthroughs in SLMs, such as a sub-micrometer pixel pitch59,60, complex modulation capability61, and high refresh rate62,63,64. Such breakthroughs will greatly benefit the performance and scalability of the proposed architecture. We discuss related details, including further miniaturization strategies and potential solutions for the DC noise, in the Supplementary Material.

Also, the calibration process is sensitive to mechanical perturbation by the nature of an interferometer system. Empirically, the system exhibits better robustness during the display stage than during the calibration data acquisition stage. Further investigation into the system’s mechanical sensitivity and improvement of the calibration algorithm would be beneficial.

From the modeling perspective, a more accurate representation of the waveguide system can be studied. Our model is built on the physical intuition of the waveguide propagation process and consists of interpretable parameters. Such an approach allows useful performance analysis, which will be helpful for understanding the system requirements and optimizing the design. A further improvement in the fidelity of modeling will lead to better calibration and display quality. On the other hand, computationally efficient modeling is another direction to be pursued. We observe some redundancies in the model parameters; for example, the kernels share similarities in amplitude and phase shapes, and the gain from increasing the number of channels saturates. Such redundancies can be reduced to shrink the size and computation load of the model.

The proposed methods can be adapted for wider applications. The multi-channel model can be modified to capture other aspects of light interaction, such as partial coherence modes or partially polarized light. The calibration method based on complex wavefront measurements may serve as a valuable tool for calibrating other intricate holographic display systems that use a coherent light source. Additionally, adoption of laser light sources for waveguide displays could potentially overcome the brightness and efficiency issues. We believe our contributions will facilitate more follow-up research to take further steps towards ultra-compact, true 3D holographic AR glasses.

Methods

Waveguide fabrication

The waveguide is fabricated on a glass substrate with a refractive index of 1.5 and a thickness of 1.15 mm. The substrate thickness is selected based on simulation to achieve good performance while retaining a thin form factor. The center TIR angle is set at 50 degrees with a center wavelength of 532 nm. The waveguide is designed to support 28 degrees of diagonal field of view with an outcoupler size of 16 × 12 mm. The waveguide samples are fabricated using a nano-imprinting method, which is suitable for mass production. The specifications of the surface relief gratings, such as shape, slant angle, and aspect ratio, are fine-tuned using rigorous simulations to achieve spatial and angular uniformity in the eyebox domain (see Supplementary Fig. 3). In general, targeting higher uniformity reduces the grating efficiency, so uniformity is traded against overall efficiency. We set the merit function to balance uniformity and efficiency, achieving over 5% end-to-end throughput efficiency on average while maximizing uniformity. The grating structure is designed as a saw-tooth shape to minimize unwanted diffraction orders65,66; however, it still diffracts some portion of the light into the −1st order. Therefore, we used a beam splitter in the hologram projection module, and the light source was expanded outside of the system. Further details are presented in the Supplementary Material.
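As a rough sanity check on the geometry quoted above, the following sketch computes the critical angle of the substrate, the lateral distance between successive TIR bounces on the same surface, and the grating period that would steer a normally incident beam to the center TIR angle. The surrounding medium (air), the normal-incidence first-order grating equation, and all function names are assumptions made for illustration; the actual grating design is described in the Supplementary Material.

```python
import math

# Values taken from the text above.
N_GLASS = 1.5           # substrate refractive index
THICKNESS_MM = 1.15     # substrate thickness
TIR_ANGLE_DEG = 50.0    # center TIR angle inside the glass
WAVELENGTH_NM = 532.0   # center wavelength


def critical_angle_deg(n_glass, n_outside=1.0):
    """Smallest internal angle (from the surface normal) that is totally reflected."""
    return math.degrees(math.asin(n_outside / n_glass))


def bounce_spacing_mm(thickness_mm, angle_deg):
    """Lateral distance between two consecutive hits on the same surface."""
    return 2.0 * thickness_mm * math.tan(math.radians(angle_deg))


def grating_period_nm(wavelength_nm, n_glass, angle_deg):
    """Period from n * sin(theta) = lambda / period.

    Assumption: normal incidence from air and first diffraction order.
    """
    return wavelength_nm / (n_glass * math.sin(math.radians(angle_deg)))


if __name__ == "__main__":
    print(critical_angle_deg(N_GLASS))
    print(bounce_spacing_mm(THICKNESS_MM, TIR_ANGLE_DEG))
    print(grating_period_nm(WAVELENGTH_NM, N_GLASS, TIR_ANGLE_DEG))
```

Under these assumptions the critical angle is about 41.8 degrees, so the 50-degree center angle sits above it, the bounce spacing is about 2.74 mm, and the period is about 463 nm.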

Implementation of prototypes

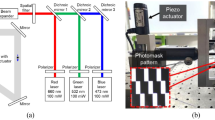

In the optical benchtop prototype, the light source is a 532 nm Cobolt Samba 1500 mW laser, the piezo actuator for phase-shifting digital holography is a Piezosystem Jena PZ-38, and the SLM is a Meadowlark E-series 1920 × 1200 device. The de-magnifying relay system uses lenses with focal lengths of 150 mm (L1) and 75 mm (L2). 62.5% of the SLM area (1200 × 1200 pixels) is used to generate input wavefronts. We built two wavefront cameras in the system: one for capturing the waveguide output wavefront, and the other for capturing the relayed SLM to optimize the SLM parameters and homography in the model. Both wavefront cameras share the same piezo actuator for phase shifting. A neutral density (ND) filter is used to attenuate the light intensity in the reference path for both wavefront cameras. Details of the calibration algorithm are presented in the Supplementary Material (see Supplementary Fig. 2). To capture the result images, a 3D-printed pupil aperture (3.4 mm square) and an imaging camera are mounted on 3-axis motorized stages and placed at a copy of the output wavefront, duplicated using a beam splitter. The benchtop prototype has an 11-degree diagonal field of view with a 16 × 12 mm eyebox. A photograph of the system is presented in Supplementary Fig. 7.
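The wavefront cameras described above rely on phase-shifting digital holography. The sketch below shows the textbook four-step variant for a single pixel: four interferograms are recorded with reference phase shifts of 0, π/2, π, and 3π/2, and the complex object field is recovered from their differences. The number of steps, the real-valued reference amplitude, and the function names are assumptions for illustration; the procedure actually used is given in the Supplementary Material.

```python
import cmath
import math

# Assumed reference phase shifts for a four-step scheme.
PHASE_STEPS = (0.0, math.pi / 2, math.pi, 3 * math.pi / 2)


def record_intensities(obj_field, ref_amp):
    """Simulate the four interferogram intensities seen by one camera pixel."""
    return [abs(obj_field + ref_amp * cmath.exp(1j * step)) ** 2
            for step in PHASE_STEPS]


def recover_field(intensities, ref_amp):
    """Recover the complex object field from four phase-shifted intensities.

    I0 - I2 = 4 * ref_amp * Re(obj) and I1 - I3 = 4 * ref_amp * Im(obj).
    """
    i0, i1, i2, i3 = intensities
    return complex(i0 - i2, i1 - i3) / (4.0 * ref_amp)


if __name__ == "__main__":
    true_field = 0.7 * cmath.exp(1j * 1.1)   # hypothetical object field
    frames = record_intensities(true_field, ref_amp=2.0)
    estimate = recover_field(frames, ref_amp=2.0)
    print(abs(estimate - true_field))        # reconstruction error, near zero
```

In practice the same arithmetic is applied to every camera pixel, and errors in the piezo step size translate directly into phase errors in the recovered wavefront.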

In the compact prototype, the 4-f relay system is eliminated; instead, we use a 4K SLM with 3840 × 2160 resolution and 3.74 µm pixel pitch, supporting a 12-degree field of view. Only about 20% of the SLM area (1300 × 1300 pixels) is actively used, while the other pixels are deactivated. The image quality degradation in the compact prototype is mainly caused by the SLM performance. The SLM has about 10% phase flicker, which severely degrades the fidelity of model calibration compared with the benchtop prototype, since the complex wavefront calibration method is highly sensitive to phase error. A poorly calibrated DC component causes interference pattern artifacts at far distances. Also, the SLM has more severe fringe field effects that further deteriorate the image quality. We identified that unfiltered high-order diffraction from the SLM is not a major cause of the quality degradation, as these orders are above the sampling rate for wavefront capturing. The compact prototype experiment is performed on the optical table, and a collimated laser is provided externally through a 5 mm beam splitter to the hologram projection module. For calibration, another beam splitter is placed at the eyebox of the waveguide to combine the reference beam.
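The link between pixel pitch and field of view in a lens-free configuration can be illustrated with the diffraction limit of a pixelated SLM. The sketch below assumes a wavelength of 532 nm for the compact prototype, a square Nyquist band with maximum spatial frequency 1/(2p) per axis, and hypothetical function names; it also checks the quoted active-area fraction.

```python
import math


def diffraction_fov_deg(wavelength_um, pixel_pitch_um, diagonal=False):
    """Full diffraction angle supported by a pixelated SLM without a lens.

    The maximum spatial frequency is 1/(2p) along one axis and
    sqrt(2)/(2p) along the diagonal of the square Nyquist band.
    """
    sin_half_angle = wavelength_um / (2.0 * pixel_pitch_um)
    if diagonal:
        sin_half_angle *= math.sqrt(2.0)
    return 2.0 * math.degrees(math.asin(sin_half_angle))


def active_area_fraction(active_px, panel_px):
    """Fraction of the panel covered by the active pixel region."""
    return (active_px[0] * active_px[1]) / (panel_px[0] * panel_px[1])


if __name__ == "__main__":
    # 532 nm is an assumption; 3.74 um pitch and the pixel counts are from the text.
    print(diffraction_fov_deg(0.532, 3.74))                 # per-axis angle
    print(diffraction_fov_deg(0.532, 3.74, diagonal=True))  # diagonal angle
    print(active_area_fraction((1300, 1300), (3840, 2160)))
```

With these assumptions the per-axis angle is about 8.2 degrees and the diagonal angle about 11.5 degrees, which is close to the 12 degrees quoted above if that figure is read as a diagonal value. The active-area fraction evaluates to roughly 0.20.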

Details of the algorithm

The physical propagation module in Fig. 2 consists of a crosstalk kernel of the adjacent SLM pixels, a spatially varying phase modulation function of the SLM (γ), free space propagation with 3D tilt67,68, and a homography transformation function. The crosstalk kernel has a size of 3 × 3, and γ is modeled as a polynomial with 18 coefficients. The free space propagation function has 3 parameters: two tilt angles and the propagation distance. The homography transformation function is up to second order, with 12 coefficients. The physical propagation module is calibrated in advance in the benchtop prototype using the wavefront camera placed at the relayed SLM, and the result is then used as the initial value to accelerate the model calibration. In the waveguide model, the size of Q is selected to cover the physical size of the in-coupler grating, which is 1200 × 1200. The sizes of R and DC are selected to be equal to the ROI size. The side length of kernel h is set to the sum of the side lengths of Q and R. For the model calibration, about a thousand captured wavefronts are used as a dataset. In the CGH rendering stage, the loss starts to converge at around 1000 iterations, and we run up to 3000 iterations. Further implementation details are provided in the Supplementary Material.
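Two of the building blocks named above can be sketched in a few lines: a 3 × 3 crosstalk convolution over neighboring SLM pixels and the angular spectrum transfer function for free space propagation. This is a minimal illustration in plain Python, not the authors' implementation. The zero-padded boundary, the dropping of evanescent components, and the function names are assumptions, and the tilt handling, band limiting, γ, and the homography are omitted.

```python
import cmath
import math


def apply_crosstalk(field, kernel):
    """Convolve a 2D complex field with a 3 x 3 kernel (zero padding assumed)."""
    rows, cols = len(field), len(field[0])
    out = [[0j] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0j
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r - dr, c - dc   # true convolution indexing
                    if 0 <= rr < rows and 0 <= cc < cols:
                        acc += kernel[dr + 1][dc + 1] * field[rr][cc]
            out[r][c] = acc
    return out


def asm_transfer(fx, fy, wavelength, distance):
    """Angular spectrum transfer function for one spatial frequency (fx, fy).

    All lengths share one unit. Evanescent components are set to zero,
    which is a simplifying assumption.
    """
    arg = 1.0 / wavelength ** 2 - fx ** 2 - fy ** 2
    if arg < 0.0:
        return 0j
    return cmath.exp(2j * math.pi * distance * math.sqrt(arg))


if __name__ == "__main__":
    identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
    print(apply_crosstalk([[1 + 0j, 2 + 0j, 3 + 0j]], identity))
    print(abs(asm_transfer(1e5, 0.0, 0.532e-6, 1e-3)))  # propagating: magnitude 1
```

In a full propagation step, the transfer function would multiply the 2D Fourier transform of the field, followed by an inverse transform. A learnable crosstalk kernel would start from the identity kernel shown in the usage example.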

Data availability

The source data used for Figs. 3 and 6 have been deposited in Figshare under accession code https://doi.org/10.6084/m9.figshare.22672519 (ref. 57).

Code availability

The code used for modeling the hologram propagation in waveguides will be made publicly available (on GitHub) along with the paper. Additional code is available from the corresponding authors upon request.

References

Azuma, R. A survey of augmented reality. Presence: Teleoperators virtual Environ. 6, 335–385 (1997).

Kress, B. & Starner, T. A review of head-mounted displays (hmd) technologies and applications for consumer electronics. In Proc. SPIE Defense, Secur. Sensing, Int. Soc. for Opt. Photonics (2013).

Lee, B., Jo, Y., Yoo, D. & Lee, J. Recent progresses of near-eye display for AR and VR. In Stella, E. (ed.) Multimodal Sensing and Artificial Intelligence: Technologies and Applications II, vol. 11785, 1178503, https://doi.org/10.1117/12.2596128. International Society for Optics and Photonics (SPIE, 2021).

Kress, B. C. Optical architectures for augmented-, virtual-, and mixed-reality headsets (Society of Photo-Optical Instrumentation Engineers, 2020).

Levola, T. 7.1: Invited paper: Novel diffractive optical components for near to eye displays. In SID Symposium Digest of Technical Papers, vol. 37, 64–67 (Wiley Online Library, 2006).

Kress, B. C. & Chatterjee, I. Waveguide combiners for mixed reality headsets: a nanophotonics design perspective. Nanophotonics 10, 41–74 (2021).

Chang, C., Bang, K., Wetzstein, G., Lee, B. & Gao, L. Toward the next-generation vr/ar optics: a review of holographic near-eye displays from a human-centric perspective. Optica 7, 1563–1578 (2020).

Amitai, Y. Substrate-guided optical devices (2010). US Patent 7,672,055.

Cheng, D., Wang, Y., Xu, C., Song, W. & Jin, G. Design of an ultra-thin near-eye display with geometrical waveguide and freeform optics. Opt. Exp. 22, 20705–20719 (2014).

Xu, M. & Hua, H. Methods of optimizing and evaluating geometrical lightguides with microstructure mirrors for augmented reality displays. Opt. Exp. 27, 5523–5543 (2019).

Kress, B. C. & Cummings, W. J. 11-1: invited paper: towards the ultimate mixed reality experience: Hololens display architecture choices. In SID symposium digest of technical papers, vol. 48, 127–131 (Wiley Online Library, 2017).

Gu, Y. et al. Holographic waveguide display with large field of view and high light efficiency based on polarized volume holographic grating. IEEE Photon. J. 14, 1–7 (2022).

Fernández, R. et al. Holographic waveguides in photopolymers. Opt. Exp. 27, 827–840 (2019).

Äyräs, P., Saarikko, P. & Levola, T. Exit pupil expander with a large field of view based on diffractive optics. J. Soc. Inf. Disp. 17, 659–664 (2009).

Erkelens, I. M. & MacKenzie, K. J. 19-2: Vergence-accommodation conflicts in augmented reality: Impacts on perceived image quality. SID Symp. Dig. Tech. Pap. 51, 265–268 (2020).

Shi, X. et al. Design of a dual focal-plane near-eye display using diffractive waveguides and multiple lenses. Appl. Opt. 61, 5844–5849 (2022).

Yoo, C. et al. Dual-focal waveguide see-through near-eye display with polarization-dependent lenses. Opt. Lett. 44, 1920–1923 (2019).

Erickson, A., Kim, K., Bruder, G. & Welch, G. F. Exploring the limitations of environment lighting on optical see-through head-mounted displays. In Symposium on Spatial User Interaction, Vol. 9, 1–8 (2020).

Nam, S.-W. et al. Aberration-corrected full-color holographic augmented reality near-eye display using a Pancharatnam-Berry phase lens. Opt. Exp. 28, 30836–30850 (2020).

Kim, D. et al. Vision-correcting holographic display: evaluation of aberration correcting hologram. Biomed. Opt. Exp. 12, 5179–5195 (2021).

Kavakli, K. et al. Pupil steering holographic display for pre-operative vision screening of cataracts. Biomed. Opt. Exp. 12, 7752–7764 (2021).

Maimone, A., Georgiou, A. & Kollin, J. S. Holographic near-eye displays for virtual and augmented reality. ACM Trans. Graph. 36, https://doi.org/10.1145/3072959.3073624 (2017).

Shi, L., Li, B., Kim, C., Kellnhofer, P. & Matusik, W. Towards real-time photorealistic 3d holography with deep neural networks. Nature 592, 234–239 (2021).

Choi, S., Gopakumar, M., Peng, Y., Kim, J. & Wetzstein, G. Neural 3d holography: Learning accurate wave propagation models for 3d holographic virtual and augmented reality displays. ACM Trans. Graph. 40, https://doi.org/10.1145/3478513.3480542 (2021).

Peng, Y., Choi, S., Padmanaban, N. & Wetzstein, G. Neural Holography with Camera-in-the-loop Training. ACM Trans. Graph. (SIGGRAPH Asia) (2020).

Akşit, K. et al. Perceptually guided computer-generated holography. In Advances in Display Technologies XII, vol. 12024, 11–14 (SPIE, 2022).

Kuo, G., Waller, L., Ng, R. & Maimone, A. High resolution Étendue expansion for holographic displays. ACM Trans. Graph. 39, https://doi.org/10.1145/3386569.3392414 (2020).

Chakravarthula, P., Peng, Y., Kollin, J., Fuchs, H. & Heide, F. Wirtinger holography for near-eye displays. ACM Trans. Graph. 38, 213 (2019).

Muhamad, R. K. et al. Jpeg pleno holography: scope and technology validation procedures. Appl. Opt. 60, 641–651 (2021).

Yang, F. et al. Perceptually motivated loss functions for computer generated holographic displays. Sci. Rep. 12, 1–12 (2022).

Park, J., Lee, K. & Park, Y. Ultrathin wide-angle large-area digital 3d holographic display using a non-periodic photon sieve. Nat. Commun. 10, 1–8 (2019).

An, J. et al. Slim-panel holographic video display. Nat. Commun. 11, 5568 (2020).

Peng, Y., Choi, S., Kim, J. & Wetzstein, G. Speckle-free holography with partially coherent light sources and camera-in-the-loop calibration. Sci. Adv. 7, eabg5040 (2021).

Kim, J. et al. Holographic glasses for virtual reality. In Proceedings of the ACM SIGGRAPH, Vol. 33, 1–8 (2022).

Matsushima, K. Computer-generated holograms for three-dimensional surface objects with shade and texture. Appl. Opt. 44, 4607–4614 (2005).

Kaczorowski, A., Gordon, G. S. D. & Wilkinson, T. D. Adaptive, spatially-varying aberration correction for real-time holographic projectors. Opt. Exp. 24, 15742–15756 (2016).

Chen, J.-S. & Chu, D. Improved layer-based method for rapid hologram generation and real-time interactive holographic display applications. Opt. Exp. 23, 18143–18155 (2015).

Shimobaba, T., Kakue, T. & Ito, T. Review of fast algorithms and hardware implementations on computer holography. IEEE Trans. Ind. Inform. 12, 1611–1622 (2015).

Lee, J. et al. Deep neural network for multi-depth hologram generation and its training strategy. Opt. Exp. 28, 27137–27154 (2020).

Mengu, D., Ulusoy, E. & Urey, H. Non-iterative phase hologram computation for low speckle holographic image projection. Opt. Exp. 24, 4462–4476 (2016).

Kozacki, T., Finke, G., Garbat, P., Zaperty, W. & Kujawińska, M. Wide angle holographic display system with spatiotemporal multiplexing. Opt. Exp. 20, 27473–27481 (2012).

Jang, C., Bang, K., Li, G. & Lee, B. Holographic near-eye display with expanded eye-box. ACM Trans. Graph. 37, https://doi.org/10.1145/3272127.3275069 (2018).

Park, J.-H. & Kim, S.-B. Optical see-through holographic near-eye-display with eyebox steering and depth of field control. Opt. Express 26, 27076–27088 (2018).

Suhara, T., Nishihara, H. & Koyama, J. Waveguide holograms: a new approach to hologram integration. Opt. Commun. 19, 353–358 (1976).

Backlund, J., Bengtsson, J., Carlström, C.-F. & Larsson, A. Incoupling waveguide holograms for simultaneous focusing into multiple arbitrary positions. Appl. Opt. 38, 5738–5746 (1999).

Huang, Z., Marks, D. L. & Smith, D. R. Out-of-plane computer-generated multicolor waveguide holography. Optica 6, 119–124 (2019).

Yeom, H.-J. et al. 3d holographic head mounted display using holographic optical elements with astigmatism aberration compensation. Opt. Express 23, 32025–32034 (2015).

Yeom, J., Son, Y. & Choi, K. Crosstalk reduction in voxels for a see-through holographic waveguide by using integral imaging with compensated elemental images. Photonics 8, https://doi.org/10.3390/photonics8060217 (2021).

Lin, W.-K., Matoba, O., Lin, B.-S. & Su, W.-C. Astigmatism and deformation correction for a holographic head-mounted display with a wedge-shaped holographic waveguide. Appl. Opt. 57, 7094–7101 (2018).

Lin, W.-K., Matoba, O., Lin, B.-S. & Su, W.-C. Astigmatism correction and quality optimization of computer-generated holograms for holographic waveguide displays. Opt. Express 28, 5519–5527 (2020).

Georgiou, A., Christmas, J., Collings, N., Moore, J. & Crossland, W. Aspects of hologram calculation for video frames. J. Opt. A: Pure Appl. Opt. 10, 035302 (2008).

Kim, M. K. Principles and techniques of digital holographic microscopy. SPIE Rev. 1, 018005 (2010).

Kim, M. K. Digital Holographic Microscopy, 149–190 (Springer New York, New York, NY, 2011).

Lee, B., Kim, D., Lee, S., Chen, C. & Lee, B. High-contrast, speckle-free, true 3d holography via binary cgh optimization. Sci. Reports 12, https://doi.org/10.1038/s41598-022-06405-2 (2022).

Kavaklı, K., Itoh, Y., Urey, H. & Akşit, K. Realistic defocus blur for multiplane computer-generated holography. arXiv preprint arXiv:2205.07030 https://doi.org/10.48550/arXiv.2205.07030 (2022).

Choi, S. et al. Time-multiplexed neural holography: a flexible framework for holographic near-eye displays with fast heavily-quantized spatial light modulators. In Proceedings of the ACM SIGGRAPH, Vol. 32, 1–8 (2022).

Jang, C., Bang, K., Chae, M., Lanman, D. & Lee, B. Supplementary data of waveguide holography, https://doi.org/10.6084/m9.figshare.22672519 (2023).

Mahajan, V. N. Strehl ratio for primary aberrations: some analytical results for circular and annular pupils. J. Opt. Soc. Am. 72, 1258–1266 (1982).

Kim, Y.-H. et al. Development of high-resolution active matrix spatial light modulator. Opt. Eng. 57, 061606 (2018).

Hwang, C.-S. et al. 21-2: Invited paper: 1 µm pixel pitch spatial light modulator panel for digital holography. In SID Symposium Digest of Technical Papers, vol. 51, 297–300 (Wiley Online Library, 2020).

Jang, S.-W. et al. Complex spatial light modulation capability of a dual layer in-plane switching liquid crystal panel. Sci. Rep. 12, https://doi.org/10.1038/s41598-022-12292-4 (2022).

Martínez-García, A., Moreno, I., Sanchez-Lopez, M. & Garcia-Martinez, P. Operational modes of a ferroelectric lcos modulator for displaying binary polarization, amplitude, and phase diffraction gratings. Appl. Opt. 48, 2903–2914 (2009).

Bartlett, T. A., McDonald, W. C. & Hall, J. N. Adapting texas instruments dlp technology to demonstrate a phase spatial light modulator. In OPTO (2019).

Serati, S., Xia, X., Mughal, O. & Linnenberger, A. High-resolution phase-only spatial light modulators with submillisecond response. Proc. SPIE Int. Soc. Opt. Eng. 5106, 138–145 (2003).

Tamir, T. & Peng, S.-T. Analysis and design of grating couplers. Appl. Phys. 14, 235–254 (1977).

Wang, B., Jiang, J. & Nordin, G. P. Compact slanted grating couplers. Opt. Exp. 12, 3313–3326 (2004).

Matsushima, K. & Shimobaba, T. Band-limited angular spectrum method for numerical simulation of free-space propagation in far and near fields. Opt. Exp. 17, 19662–19673 (2009).

Matsushima, K., Schimmel, H. & Wyrowski, F. Fast calculation method for optical diffraction on tilted planes by use of the angular spectrum of plane waves. J. Opt. Soc. Am. A 20, 1755–1762 (2003).

Acknowledgements

Giuseppe Calafiore, Heeyoon Lee, and Alexander Koshelev contributed to the design and fabrication of the waveguide used in this work. Clinton Smith designed the compact prototype and provided engineering support. We also thank Grace Kuo and Suyeon Choi for valuable discussions. Julia Majors provided proofreading and valuable feedback. Eric Davis rendered the graphics in Fig. 1c. In Fig. 4, we used the cute cat image by Lali Masriera (CC BY 2.0), the beautiful Seattle skyline image by fiction-parade (CC BY-SA 2.0), and the nice bicycle image by Fiore Power (CC BY 2.0). The 3D contents in Fig. 5c were rendered by the Tech Art team at Meta. The research is supported by Meta.

Author information

Contributions

C.J. and K.B. initiated the project, designed the architecture, conceived algorithms, and conducted the experiments. M.C. conducted the experiments. D.L. and B.L. advised and supervised the project. C.J. wrote the initial draft of the manuscript and all authors reviewed the manuscript.

Ethics declarations

Competing interests

C.J. and D.L. are currently employees of Meta. K.B. was an employee of Meta. We declare that M.C. and B.L. do not have any conflicts of interest.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jang, C., Bang, K., Chae, M. et al. Waveguide holography for 3D augmented reality glasses. Nat Commun 15, 66 (2024). https://doi.org/10.1038/s41467-023-44032-1

DOI: https://doi.org/10.1038/s41467-023-44032-1
