Abstract

The Maxwellian near-eye displays have attracted growing interest in various applications. By using a confined pupil, a Maxwellian display presents an all-in-focus image to the viewer where the image formed on the retina is independent of the optical power of the eye. Despite being a promising technique, current Maxwellian near-eye displays suffer from various limitations such as a small eyebox, a bulky setup and a high cost. To overcome these drawbacks, we present a holographic Maxwellian near-eye display based on computational imaging. By encoding a complex wavefront into amplitude-only signals, we can readily display the computed histogram on a widely-accessible device such as a liquid-crystal or digital light processing display, creating an all-in-focus virtual image augmented on the real-world objects. Additionally, to expand the eyebox, we multiplex the hologram with multiple off-axis plane waves, duplicating the pupils into an array. The resultant method features a compact form factor because it requires only one active electronic component, lending credence to its wearable applications.

Similar content being viewed by others

Introduction

Compact and portable, near-eye displays hold great promises in augmented reality (AR), virtual reality (VR) and mixed reality (MR) applications such as social communication, healthcare, education, and entertainment1,2. In the past decade, various optical schemes have been adopted in near-eye 3D displays. Representative implementations encompass light field displays3,4,5, freeform surfaces6,7, multi-focal-plane displays8,9, and holographic related display techniques including holographic waveguide10,11,12 and holographic optical element (HOE)13,14,15. Capable of providing depth cues that mimic the real world, these methods reduce the eye fatigue by alleviating the vergence-accomodation conflict (VAC)16. However, solving one problem creates another—because of complicated optics employed, current near-eye 3D displays generally suffer from a large form factor9 and/or a low resolution5, limiting their use in wearable devices.

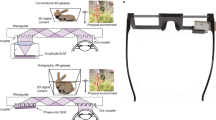

As an alternative approach, a Maxwellian display solves VAC while still maintaining a high resolution17 in a compact enclosure. In an imaging system, the beam width limited by the pupil diameter determines the depth of field (DOF) within which the image is considered in focus18. In Maxwellian displays, the chief rays emitted from the display panel converge at the eye pupil and then form an image at the retina (Fig. 1a). By using a narrow pencil beam, Maxwellian displays reduce the effective pupil size and thereby significantly increase the DOF—the image appears in focus at all depths. The ability of creating this all-in-focus image thus provides a simple solution to eliminate the VAC because the eye lens does not need to accommodate the virtual object.

Operating principle. (a) Maxwellian display using a geometric lens. (b) Maxwellian display using a HOE. (c) Holographic Maxwellian display based on direct complex wavefront encoding. (d) Holographic Maxwellian display based on computational complex hologram encoding. (The schematic image of “cameraman” in this figure is a popularly used standard test image that can be downloaded online from one of the test images database http://www.imageprocessingplace.com/root_files_V3/image_databases.htm).

Despite being a promising technique, Maxwellian displays have a major drawback in the eyebox size because of the small pupil employed. To expand the eyebox, there are generally two strategies: temporal multiplexing and spatial multiplexing. The temporal-multiplexing-based Maxwellian displays rapidly translate the pupil by using either a LED array19 or dynamic tilted mirrors20. Because the scanning must be performed within the flicker-fusion threshold time, the temporal-multiplexing-based Maxwellian displays face a fundamental trade-off between the image refreshing rate and eye box size. By contrast, the spatial-multiplexing-based Maxwellian displays21,22 use HOEs to simultaneously create multiple laterally-shifted, duplicated pupils, expanding the eyebox while maintaining the original frame rate. Additionally, because HOEs can be directly fabricated on waveguides, the resultant devices normally feature a compact form factor. Nonetheless, the fabrication of HOEs is nontrivial—the recording interference patterns from two coherent beams requires extensive alignment and stable optical setups. More problematically, the HOEs cannot be modified once they are fabricated. Alternatively, the spatial multiplexing can also be achieved through computer-generated holography (CGH)23, which computes and displays a histogram on an electronic device like a spatial light modulator. Despite being flexible in the hologram created, the current CGH implementations require additional eye pupil tracking devices24 or HOEs23, resulting in a bulky and complicated setup.

To overcome these limitations, herein we present a computational holographic Maxwellian near-eye display. After multiplexing different directional plane carrier waves to the virtual target image, we first compute a multiplexing complex hologram based on free-space Fresnel diffraction. This hologram converges the light rays into multiple pupils and produce Maxwellian all-in-focus images at each pupil location. Next, we encode this complex hologram into an amplitude image to facilitate its display on a commonly-accessible device such as a liquid-crystal display (LCD) or a digital light processing (DLP) display. Our method enables high-resolution Maxwellian display with an expanded eyebox in a compact configuration.

Principle and Method

We illustrate the operating principle of the Maxwellian near-eye display using a geometric lens and HOE (i.e., a diffractive lens) in Fig. 1(a,b), respectively. Under monochromatic illumination, the complex light wavefront right after the lens plane is A·exp(iφ), where A is the amplitude and φ is the phase. Herein the display panel produces the image amplitude A, and the geometric or diffractive lens generates the converging spherical phase φ. This complex wavefront propagates and converges to a focal spot at the pupil plane. Therefore, the key to create a holographic Maxwellian view is to modulate the wavefront in a way that combines the amplitude function of a target image and the phase function of a lens.

Figure 1(c,d) show two basic configurations of holographic Maxwellian near-eye displays, respectively. In Fig. 1(c), the complex hologram is a superimposed image of a real target image and a spherical phase. The light emitted from this hologram enters the eye pupil and forms an image on the retina. Because the target image is close to the eye (the focal length f is usually small in near-eye displays), the DOF is relatively small24. By contrast, in Fig. 1(d), the target image is virtual, and it locates at a far distance from the eye pupil. To compute the hologram, one need to forward propagate the wavefront from the virtual target image to the display panel. Because it yields a larger DOF than that presented in Fig. 1(c) 24, we adopt this method in experiments. Next, we encode this complex amplitude into an amplitude-only CGH (AO-CGH) using a holographic complex beam shaping technique25,26. The resultant AO-CGH can be displayed on a conventional micro-display device and provide a Maxwellian-view image. Because only a display panel is required, our method is simple and low cost. Moreover, it can be readily combined with various optical combiners such as a waveguide.

Calculation of complex holograms

We illustrate the basic model for calculating the complex hologram in Fig. 2(a). The system consists of a virtual image plane, a display plane, and an eye pupil plane. The virtual target image is compulsively multiplied by a spherical converging phase factor. This operation is equivalent to illuminating the image by virtual converging light rays which focus at the eye pupil. Therefore, the wavefront at the virtual image plane can be written as

where I(xv, yv) is the amplitude of the target image, k = 2π/λ is the wave number. d1 and d2 are the distances from the virtual image to the display plane and from the display plane to the eye pupil plane, respectively. The complex hologram at the display plane can be calculated from the virtual image using the forward Fresnel diffraction approximation27 as

Here H(xh, yh) denotes the complex-amplitude distribution of the hologram. Equation (2) can be numerically calculated by employing the “ARSS-Fresnel diffraction” algorithm28,29 which involves convolution using three fast Fourier transforms (FFTs). The resultant complex hologram can yield an sharp image on the retina plane through eye pupil filtering of the focal spot, visually equivalent to staring at a virtual image whose distance is dvirtual = d1 + d2 in front of the eye.

Computation and reconstruction of holograms. (a) Geometry model for computation. (b) Hologram multiplexing procedure. (c) Geometry model for reconstruction. (The schematic image of “cameraman” in this figure is a popularly used standard test image that can be downloaded online from one of the test images database http://www.imageprocessingplace.com/root_files_V3/image_databases.htm).

Eyebox expansion through pupil duplication

To expand the eyebox, we further calculate a multiplexing complex hologram to generate multiple laterally shifted pupils at the eye pupil plane. As illustrated in Fig. 2(b), we first calculate multiple complex sub-holograms at the display plane using Fresnel diffraction in Eq. 2. Each sub-hologram is associated with a duplicated pupil, and we calculate it by applying an individual plane carrier wave (blazing tilting phase) at angle (θx(i), θy(i)) to the virtual target image V(xv, yv). Here i denotes the sub-hologram index and the i-th sub-hologram is therefore calculated by:

Finally, we multiplex all sub-holograms into a composite hologram:

Adding different plane carriers to the virtual image allows independently steering the beam toward given directions. This operation enables computational modulation of multiple beams, converging them at different pupils simultaneously. We calculate the angles for the i-th pupil as θx(i) = xp(i)/(d1 + d2), θy(i) = yp(i)/(d1 + d2), where (xp(i), yp(i)) denotes the center coordinate of the i-th pupil. The distance between adjacent pupils (xp(i + 1)-xp(i), yp(i + 1)-yp(i)) is larger than the eye pupil size so the eye sees a single pupil at a time when it moves, thereby expanding the effective eyebox.

To display the complex hologram on the amplitude displays, we encode the complex amplitude given by Eq. (4) into an AO-CGH. Given a complex amplitude Hc(xh, yh) = a(xh, yh)·exp[iφ(xh, yh)], where the amplitude a(xh, yh) is a positive normalized function and the phase φ(xh, yh) takes values in the domain [−π, π], we can encode it into an interferometric AO-CGH with a normalized transmittance function

where c0 ≅ 1/2 is a normalization constant, (u0, v0) are the spatial frequencies of the linear phase hologram carrier, and b(xh, yh) is the bias function generally defined as b(xh, yh) = [1 + a2(xh, yh)]/2. Generation of the hologram transmittance in Eq. (5) is optically equivalent to recording the interference pattern formed by the complex hologram Hc(xh, yh) with an off-axis plane reference wave exp[i2π(u0xh + v0yh)]. The main spectral band of the encoded AO-CGH only presents the off-axis signal term (i.e., the desired complex amplitude to generate the pupil array) accompanied by the DC term and the conjugate of signal. The isolation of the off-axis signals from the DC and conjugate can be achieved through pupil filtering, as shown in Fig. 2(c), enabling high-quality image reconstruction on the retina. It is noteworthy the parameterization of Eq. 5 is not unique. For instance, rather than using its general definition, we can choose the bias b(xh, yh) in Eq. (5) in other forms to make the transmittance A(xh, yh) positive26.

It should be emphasized that in conventional Maxwellian display, the electronic screen displays the image amplitude while the refraction lens (or diffractive lens such as HOEs) alters the phase of the light. By contrast, in our method, we encode both the image amplitude and phase into a single hologram using wavefront modulation, eliminating the need for the focusing lens and thereby leading to a more compact form factor. Moreover, because the image is reconstructed by wavefront modulation, we can correct for the aberrations of the system simply by digitally adding an auxiliary phase to the wavefront, thereby offering more flexibilities in improving the image quality24.

Experiments and Results

To demonstrate our method, we built a prototype using only off-the-shelf optics. The system schematic and photograph are shown in Fig. 3(a,b), respectively. Our amplitude display consists of a phase spatial light modulator (SLM) (Meadowlark, 9.2 μm pixel pitch, 1920 × 1152 resolution) and a linear polarizer oriented 45° with respect to the x-axis30. We load AO-CGH with a 1024 × 1024 resolution into SLM, and we obliquely illuminate the SLM with a 532 nm laser beam. To characterize the image quality seen from each pupil, we translated an iris at the pupil plane. The resultant image is then captured by a digital camera which consists of a CMOS sensor (Sony Alpha a7s) and a varifocal lens (focal distance: 450 mm to infinity).

We used three test images (Fig. 4a–c) in our proof-of-concept experiments. To calculate the correspondent hologram, we set d1 = 350 mm, d2 = 150 mm, so the resultant virtual image is located at dvirtual = 350 mm + 150 mm = 500 mm in front of the eye. Figure 4(d) shows a representative AO-CGH associated with Fig. 4(a). The composite AO-CGH for eyebox expansion is calculated by multiplexing nine sub-holograms with different plane carrier waves, yielding a 3 × 3 pupil array at the eye pupil plane. The distance between adjacent pupils is 1 mm along both axes. By placing a monochromatic CCD (PointeGray Chameleon3) directly at the pupil plane, we captured the pupil array image (Fig. 4(f)), which closely matches with simulation (Fig. 4(e)). In our experiments, the distance between adjacent pupils is limited by the SLM pixel size. We could increase this separation by using a SLM with a smaller pixel pitch and thereby a larger diffraction angle.

We moved the iris to nine pupil locations. Figure 5 shows the captured images by the camera behind the iris, and in the insets we labeled the correspondent iris location at the eye pupil plane. Because of using an optical combiner, we see both the reconstructed image and real objects. We varied the focal distance of the camera from 2 diopters to 0.4 diopters to focus on a near and far real-world object, respectively, while the reconstructed virtual image is always in focus.

Figure 6 shows the captured images of other two test images (an Illinois logo and a grid) seen from nine pupil locations when the camera focused at the near and far real-world objects. And we show the dynamic focusing process in supplementary movies (see Movies 1 and 2), where we fixed the iris at the upper-left pupil location and continuously varied its focus from the near object (2 diopters) to the far object (0.4 diopters). Due to the extension of the DOF of the eye imaging system by limiting the display beam width in converging propagation, the reconstructed target images remain in focus during focus adjustment while the two real-world objects appear sharp and blurred alternatively, proving the presentation of the always-focused images as expected. Other two supplementary movies (see Movies 3 and 4) record the process when we moved the iris at the pupil plane along the direction indicated by the red arrows in Fig. 6(a,c), visualizing the smooth image transition when iris changes between adjacent pupils. These results imply that we have created a Maxwellian-view image with an expanded eyebox in this optical see-through setup. And this is the first time such a system has been demonstrated in a lensless compact configuration—only a simple amplitude display is used.

Images of test targets (“logo” and “grid”) seen through different pupils at varied focal distances. (a) Image of “logo” when camera focuses at a near object. (b) Image of “logo” when camera focuses at a far object (c) Image of “grid” when camera focuses at a near object. (d) Image of “grid” when camera focuses at a far object. See Supplementary Movies (Movies 1, 2, 3 and 4) for the dynamic focusing of camera and iris shifting.

Discussions

Light throughput

The light throughput of our system is mainly limited by both the display device and diffraction. First, in an amplitude display, each pixel modulates the light intensity by blocking the light transmission. For example, in our experiment, due to the use of a polarizer in front of the SLM, the light with only one polarization direction can pass. Similar to conventional amplitude displays, the light transmission at each pixel is determined by the voltage (or hologram gray-scale) response of SLM. Therefore, the light throughput varies pixelwise according to the displayed content. Additionally, for most liquid-crystal-based passive displays, the light experiences an additional loss due to the filling gap (fill factor) between pixels in either transmissive or reflective configuration. For example, the fill factor is 95.7% for the SLM used in our experiments. Although the light efficiency of amplitude displays is generally lower than that of their phase counterparts, the amplitude displays such as LCD and DLP are more accessible for consumer applications because of their low cost.

The second major light throughput loss is attributable to diffraction. Due to the pixelated structure of the amplitude displays, the light emitted from the display panel diffracts into different orders, each associated with a duplicated image. The common practice is to use only the zero-order diffraction (also known as SLM bandwidth) because it has the maximum energy (78% for the zero-order diffraction efficiency in our SLM). This efficiency can be improved by using an SLM with a smaller pixel.

Reconstruction efficiency

The functionality of our method hinges on our ability to control both the phase and amplitude of the light wavefront to produce multiple converging beams carrying the image information. The encoding of a complex wavefront into an AO-CGH reduces the reconstruction efficiency because the AO-CGH contains the target complex wavefront as well as the DC and conjugated terms. The reconstruction efficiency can be numerically estimated through simulating the wavefront propagation from the encoded AO-CGH to the retina plane via pupil filtering. Also, to avoid the crosstalk between the signals and DC term, the incident beam on the hologram must have a slightly converging wavefront to increase the diffraction angle of the SLM.

To calculate the reconstruction efficiency, we first set all pixel amplitude values of the AO-CGH to unity and calculated the total power, P0, of the reconstructed image with no pupil filtering. Next, we computed the power P1 of the reconstructed image with an encoded AO-CGH and pupil filtering. We define the reconstruction efficiency as P1/P0, and this ratio largely depends on the image content. For example, the calculated reconstruction efficiency is ~0.2% for the “letters” image (Fig. 4a), ~0.1% for the “logo” image (Fig. 4b), and ~0.7% for the “grid” image (Fig. 4c).

Eyebox size and field of view

We define the eyebox size as the area within which the Maxwellian-view image can be seen by the eye. In our proof-of-concept experiments, we form a 3 × 3 pupil array, and the distance between adjacent pupils is 1 mm. Therefore, the eyebox size is 3 mm × 3 mm. In general, when the eye pupil size is larger than the pupil spacing in pupil array, aliasing appears in the observed image where multiple duplicated Maxwellian-view images from two or more pupils overlap. In order to avoid image aliasing, the pupil distance must be greater than the physical eye pupil size, which varies from 1.5 mm to 8 mm dependent on the lighting condition. One possible solution is to update the AO-CGH by adjusting the plane carrier wave in Eq. (3) according to the detected eye pupil position and size from the pupil tracking device. The 1 mm pupil spacing in our experiments is limited by the small diffraction angle of the SLM, and this value can be increased for a smaller SLM pixel size.

In Fig. 7(a), we developed a theoretical framework to calculate the eyebox size. For simplicity, we used a one-dimensional model. Herein we denote the resolution and pixel pitch of the display as N and dx. The effective area of the display (AO-CGH) can be computed as L = Ndx, which is also the dimension of the DC term (LDC = L = Ndx). Provided that the desired signals (i.e., pupil array), DC, and conjugated terms occupy the full bandwidth of the zero-order diffraction (Lb = λd2/dx under paraxial approximation31), to separate the off-axial signals from the DC term, the dimension of signal area Ls (i.e., eye box) must be no greater than Lb/2- LDC/2, i.e.,

To increase the eyebox area, Eq. 6 implies that we can increase the distance (d2), decrease the resolution (N), or reduce the pixel size (dx). However, for near-eye displays, a small d2 and a large N are desired because they yield a compact form factor and a high resolution, respectively. Therefore, the practical approach is to use a small pixel size. For example, to achieve 3 mm pupil spacing in a 9 × 9 pupil array, i.e., Ls = 3 mm × 3 = 9 mm, the required dx is 3.7 μm in our current setup.

We calculated the field of view (FOV) of our system based on geometrical optics. As shown in Fig. 7(b), for each focal spot in the effective eyebox, the chief rays emitted from the virtual image (Lv) converge at the eye pupil via the display. The angle θ between the chief rays associated with the top and bottom of the virtual image defines the FOV. We assume this angle is approximately the same for all Maxwellian views seen from different pupils, and we calculate it as θ ≈ Lv/(d1 + d2).

The FOV depends on the virtual image dimension Lv, and it reaches the maximum when the chief rays associated with top and bottom pupil locations intercept the display screen edges as marked in Fig. 7(b). The maximum Lv-max and the correspondent FOV θmax can be derived based on the trapezoidal geometry as:

In our proof-of-concept experiments, the size of virtual image Lv = 24 mm. The FOV is calculated as θ ≈ Lv/(d1 + d2) = 24 mm/500 mm ≈ 2.75°, close to its maximum θmax ≈ 2.8° defined by Eq. (7).

Equation 7 indicates that, given distances d1 and d2, there is a trade-off between the FOV θmax and eyebox Ls—increasing the FOV would unfavorably reduce the eyebox. To maintain the desired eyebox, we can alternatively reduce the distance d2. However, to display the correspondent hologram, the required pixel pitch becomes much smaller. For example, to increase the FOV by a factor of two, the required pixel pitch is 3.2μm compared to 9.2μm in the current setup. Alternatively, rather than using a plane illumination, we can shine a convergent wavefront onto the SLM to increase the FOV at the expense of using an additional lens32.

Resolution and color reproduction

The resolution of the AO-CGH in our experiment is 1024 × 1024 which is the same as the virtual target image. However, during the image reconstruction through diffraction, the high frequency information is lost due to pupil filtering. Also, the multiplexing of duplicated perspective views in to a single hologram reduces the information content of each Maxwellian-view image. To quantitatively evaluate the relations, we numerically reconstructed the Maxwellian-view image from one pupil view in different pupil array cases, and calculated the root mean square error (RMSE) values (all of the calculated intensities are normalized in [0, 1]) between each simulated reconstruction and the original target image. Figure 8 shows the simulation results for the two test images with the RMSE values marked in each image. Yellow dashed circles in each image column indicate the selected pupil positions of the numerical reconstructions from the pupil array of 1 × 1, 2 × 2, 3 × 3 and 4 × 4 cases. The reconstructions as well as the enlarged details imply that the quality and resolution of the image from single Maxwellian view degrade when the number of pupils increases, which is quantitatively verified by the RMSE values in each simulation result.

Although beyond the scope of current work, our method has an advantage in reproducing colors. Conventional HOE-based Maxwellian displays suffer from chromatic aberrations because the recorded interference pattern is wavelength dependent, causing both on- and off- axial miss-alignment of RGB channels in color mixing. Our method alleviates this problem because the modulation of light beams is achieved by a single AO-CGH. To reproduce colors, we can load three independent AO-CGHs into the RGB channels of the display and display them simultaneously. Then the RGB light emitted from these holograms propagates independently and merges at the retina, creating a color representation.

Conclusions

In summary, we developed an optical see-through holographic Maxwellian near-eye display with an extended eyebox. We computationally generate an AO-CGH and display it on an amplitude-modulation device. The multiplexing of holograms on the AO-CGH enables pupil duplication, thereby significantly increasing the eyebox size. Because our system consists of only an amplitude display panel, it is simple and compact, lending an edge to its application in various wearable devices.

References

Carmigniani, J. et al. Augmented reality technologies, system and applications. Multimedia Tools Appl. 51, 341–377 (2011).

Rabbi, I. & Ullah, S. A survey on augmented reality challenges and tracking. Acta Graph. 24, 29–46 (2013).

Lee, S., Jang, C., Moon, S., Cho, J. & Lee, B. Additive light field displays. ACM Trans. Graph. 35, 1 (2016).

Xie, S., Wang, P., Sang, X. & Li, C. Augmented reality three-dimensional display with light field fusion. Opt. Express 24, 11483–11494 (2016).

Hua, H. & Javidi, B. A 3D integral imaging optical see-through head-mounted display. Opt. Express 22, 13484–13491 (2014).

Cheng, D., Wang, Y., Xu, C., Song, W. & Jin, G. Design of an ultra-thin near-eye display with geometrical waveguide and freeform optics. Opt. Express 22, 20705–20719 (2014).

Wei, L., Li, Y., Jing, J., Feng, L. & Zhou, J. Design and fabrication of a compact off-axis see-through head-mounted display using a freeform surface. Opt. Express 26, 8550–8565 (2018).

Liu, S., Li, Y., Zhou, P., Chen, Q. & Su, Y. Reverse-mode PSLC multi-plane optical see-through display for AR applications. Opt. Express 26, 3394–3403 (2018).

Cui, W. & Gao, L. Optical mapping near-eye three-dimensional display with correct focus cues. Opt. Lett. 42, 2475–2478 (2017).

Martinez, C., Krotov, V., Meynard, B. & Fowler, D. See-through holographic retinal projection display concept. Optica 5, 1200–1209 (2018).

Xiao, J., Liu, J., Lv, Z., Shi, X. & Han, J. On-axis near-eye display system based on directional scattering holographic waveguide and curved goggle. Opt. Express 27, 1683–1692 (2019).

Yoo, C. et al. Dual-focal waveguide see-through near-eye display with polarization-dependent lenses. Opt. Lett. 44, 1920–1923 (2019).

Yeom, H. J. et al. 3D holographic head mounted display using holographic optical elements with astigmatism aberration compensation. Opt. Express 23, 32025–32034 (2015).

Liu, S., Sun, P., Wang, C. & Zheng, Z. Color waveguide transparent screen using lens array holographic optical element. Opt. Commun. 403, 376–380 (2017).

Zhou, P., Li, Y., Liu, S. & Su, Y. Compact design for optical-see-through holographic displays employing holographic optical elements. Opt. Express 26, 22866–22876 (2018).

Hoffman, D. M., Girshick, A. R., Akeley, K. & Banks, M. S. Vergence - accommodation conflicts hinder visual performance and cause visual fatigue. J. Vis. 8, 1–30 (2008).

Westheimer, G. The Maxwellian view. Vision Res. 6, 669–682 (1966).

Green, D. G., Powers, M. K. & Banks, M. S. Depth of focus, eye size and visual acuity. Vision Res. 20, 827–835 (1980).

Hedili, M. K., Soner, B., Ulusoy, E. & Urey, H. Light-efficient augmented reality display with steerable eyebox. Opt. Express 27, 12572–12581 (2019).

Jang, C. et al. Retinal 3D: augmented reality near-eye display via pupil-tracked light field projection on retina. ACM Trans. Graph. 36, 190 (2017).

Lee, J., Kim, Y. & Won, Y. See-through display combined with holographic display and Maxwellian display using switchable holographic optical element based on liquid lens. Opt. Express 26, 19341–19355 (2018).

Kim, S. B. & Park, J. H. Optical see-through Maxwellian near-to-eye display with an enlarged eyebox. Opt. Lett. 43, 767–770 (2018).

Park, J. H. & Kim, S. B. Optical see-through holographic near-eye-display with eyebox steering and depth of field control. Opt. Express 26, 27076–27088 (2018).

Takaki, Y. & Fujimoto, N. Flexible retinal image formation by holographic Maxwellian-view display. Opt. Express 26, 22985–22999 (2018).

Zhang, Z., Liu, J., Gao, Q., Duan, X. & Shi, X. A full-color compact 3D see-through near-eye display system based on complex amplitude modulation. Opt. Express 27, 7023–7035 (2019).

Arrizón, V., Méndez, G. & Sánchez-de-La-Llave, D. Accurate encoding of arbitrary complex fields with amplitude-only liquid crystal spatial light modulators. Opt. Express 13, 7913–7927 (2005).

Chang, C., Qi, Y., Wu, J., Xia, J. & Nie, S. Speckle reduced lensless holographic projection from phase-only computer-generated hologram. Opt. Express 25, 6568–6580 (2017).

Shimobaba, T. et al. Aliasing-reduced Fresnel diffraction with scale and shift operations. J. Opt. 15, 536–544 (2013).

Shimobaba, T. & Ito, T. Random phase-free computer-generated hologram. Opt. Express 23, 9549–9554 (2015).

Harm, W., Jesacher, A., Thalhammer, G., Bernet, S. & Marte, M. R. How to use a phase-only spatial light modulator as a color display. Opt. Lett. 40, 581–584 (2015).

Goodman, J. W. Introduction to Fourier Optics (Roberts & Company Publishers, 2005).

Chang, C., Qi, Y., Wu, J., Xia, J. & Nie, S. Image magnified lensless holographic projection by convergent spherical beam illumination. Chin. Opt. Lett. 16, 100901 (2018).

Acknowledgements

This work was supported in part by discretionary funds from UIUC and a research grant from Futurewei Technologies, Inc.

Author information

Authors and Affiliations

Contributions

C.C. and L.G. conceived the initial idea. C.C. W.C. and J.P. performed the experiments and analysed the data. C.C wrote the first draft of the manuscript and L.G. provided major revisions. L.G. supervised the project. All authors discussed the results and commented on the manuscript. Correspondence and requests for materials should be addressed to L.G.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chang, C., Cui, W., Park, J. et al. Computational holographic Maxwellian near-eye display with an expanded eyebox. Sci Rep 9, 18749 (2019). https://doi.org/10.1038/s41598-019-55346-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-019-55346-w

This article is cited by

-

Metasurface wavefront control for high-performance user-natural augmented reality waveguide glasses

Scientific Reports (2022)

-

High-contrast, speckle-free, true 3D holography via binary CGH optimization

Scientific Reports (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.