Abstract

This study introduces a method that is applicable across various powder materials to predict process conditions that yield a product with a relative density greater than 98% by laser powder bed fusion. We develop an XGBoost model using a dataset comprising material properties of powder and process conditions, and its output, relative density, undergoes a transformation using a sigmoid function to increase accuracy. We deeply examine the relationships between input features and the target value using Shapley additive explanations. Experimental validation with stainless steel 316 L, AlSi10Mg, and Fe60Co15Ni15Cr10 medium entropy alloy powders verifies the method’s reproducibility and transferability. This research contributes to laser powder bed fusion additive manufacturing by offering a universally applicable strategy to optimize process conditions.

Similar content being viewed by others

Introduction

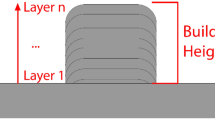

Laser powder bed fusion (L-PBF), also known as selective laser melting, is a high-precision additive manufacturing (AM) method to produce metal components that have superior mechanical properties, and that can use a wider range of metal feedstock materials than other AM methods1. During L-PBF, a layer of powdered metal is spread on a bed, then a laser is used to fuse the powder layer. The molten layer is allowed to cool rapidly, then another thin layer of powder is spread over it. This process is repeated until the desired part is fully constructed layer-by-layer. This process permits creation of intricate structures with high-quality material properties.

The material properties of parts created using L-PBF are heavily influenced by process parameters, such as laser power P [W], scan speed v [mm/s], hatch distance h [mm], and layer thickness t [mm]. Inappropriate combinations of these parameters can lead to keyhole formation or lack of fusion, both of which result in parts that have undesirable porosity and microstructure2. Pores can provide initiation sites for cracks within the structure of AM-produced parts3, so high porosity within the material can significantly compromise its mechanical properties. Consequently, effective L-PBF requires use of process parameters that yield parts with low porosity, i.e., high relative density \(\widetilde{\rho }\). \(\widetilde{\rho }\) depends on laser energy density Ed [J/mm3], calculated as4

Pores can occur due to lack of fusion occur when Ed is insufficient, or due to vaporization when Ed is excessive. However, the optimal Ed to achieve high \(\widetilde{\rho }\) varies among materials, and the \(\widetilde{\rho }\) of parts fabricated using the same material and Ed can differ depending on the process parameters used5 (Fig. 1). Because of this variability, the parameters must be tailored to the material to ensure the best possible results.

Description: The scatter plot highlights the variation in optimal energy density required to achieve high relative density for each material, as well as the differing relative densities achieved with the same energy density when different process parameters are used. The references of the data are given in the supplementary materials. Source data are provided as a Source Data file.

Numerous prior studies6,7,8,9,10,11,12,13,14 have used ML as a tool to predict and optimize part quality, such as \(\widetilde{\rho }\) by using process conditions to predict various properties of products. However, these studies have considered only one powder material for training, prediction, and model validation.

On the other hand, Cacace & Semeraro15 presented an intriguing approach to calculate the lack of fusion probability by integrating semi-analytical thermal model results with a geometric-based defect model. Their research offered an innovative method of establishing PBF process conditions for a variety of materials, with a focus on balancing porosity and productivity.

Despite the strengths of their approach, there are limitations in terms of time-efficiency and adaptability. Obtaining process conditions via their method can be time-consuming, and new regression models for melt pool depth and width are required for each material. Moreover, although Ed has been suggested as a simple criterion for calculating optimal process parameters across different materials, it has proven insufficiently reliable for this purpose5.

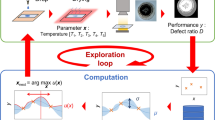

Our research aims to overcome these challenges by developing an ML model that optimizes \(\widetilde{\rho }\) simply, time-efficiently, and regardless of the material used. Our goal is to create an approach that enhances both accuracy and applicability across a wide array of materials.

In the present study, we seek to establish an ML model as a standard, aiming to replace Ed and the work of Cacace & Semeraro. To accomplish this goal, we trained a model that can determine whether the \(\widetilde{\rho }\) of a product is sufficiently high, when manufactured under specific process conditions using a particular powder. The model is trained using the material properties of the powder and the process parameters. We also developed a method that uses the model to suggest process parameters to achieve high \(\widetilde{\rho }\) in a product. To prove the reliability of the model and method, we predicted the optimal process conditions for STS 316 L, AlSi10Mg, and Fe60Co15Ni15Cr10 MEA, and experimentally verified the predicted process conditions.

This study presents three contributions. First, our method can propose optimized process parameters for any material, provided that its physical properties are known. This material-agnostic approach increases its applicability. Second, we modified the target values to facilitate the training of the ML model, which in turn improved its accuracy. Lastly, by using explainable AI (XAI) on the trained model, we analyzed the correlations among material properties, process parameters, and \(\widetilde{\rho }\), and obtained valuable insights into the relationships that affect these factors.

In this paper, we calculate \(\widetilde{\rho }\) as 100% minus area fraction of porosities, as measured using image analysis, aligning with the approach adopted in previous studies16,17,18. The decision to use image analysis over the Archimedes’ method was made primarily due to the potential inaccuracies associated with the latter. The Archimedes’ method calculates \(\widetilde{\rho }\) by dividing the product’s density, as measured by this method, by the ideal density inferred from the powder’s composition. However, this approach can lead to incorrect measurements of \(\widetilde{\rho }\) due to the evaporation of low-melting-point elements during product manufacturing, which can subsequently alter the composition. Given our focus on the porosity itself, we found image analysis, which directly relates to porosity, to be the more appropriate method for measuring \(\widetilde{\rho }\).

Results and discussion

Performance assessment of the developed ML model

To demonstrate that the present model is more accurate than both the simple regression model and the simple classification model, we compared their performance parameters, i.e., recall, precision, and accuracy (Table 1). Recall represents the proportion of positive instances that the model identifies out of all actual positive instances. Precision quantifies the proportion of true positive instances among all instances predicted as positive by the model. Accuracy represents the proportion of correct predictions made by the model, considering both true positive and true negative instances in relation to the total number of instances. The models were implemented using XGBoost19.

The present model is a regression model that uses a sigmoid function to transform the target value, then predicts the transformed value. In contrast, the simple regression model predicts the target value directly, and the simple classification model predicts the target value that is transformed by binary encoding.

The formula for transforming the target value in the present model is

where \({y}_{{present}}\) represents the target value, and \({y}_{{original}}\) denotes the original target value. A \(\widetilde{\rho }\) of 98% is high enough to ensure that product material properties are not severely compromised20,21,22, so we established 98% as our criterion for high \(\widetilde{\rho }\). When the \(\widetilde{\rho }\), which serves as the target value, is transformed using Eq. 2, \(\widetilde{\rho }\) ≥ 98% yield values between 0.5 and 1, and \(\widetilde{\rho }\) < 98% result in values between 0 and 0.5. In the present model, the predicted value is converted to 1 if it exceeds 0.5 and to 0 if it does not when evaluating recall, precision, and accuracy. For the regression model, the predicted value is transformed to 1 when it surpasses 98% and to 0 when it falls below 98% for the purpose of evaluating recall, precision, and accuracy.

The three models had differing strengths (Table 1). The simple regression model had the highest precision, and the simple classification model had the highest recall. The present model had higher accuracy than the other two models, but only moderately high precision and recall.

The simple regression model’s strength in precision stems from its tendency to underestimate the predicted value when dealing with data that have \(\widetilde{\rho }\) ≥ 98%. To substantiate this observation, we compared the averaged raw value and averaged prediction of two data sets: one with a \(\widetilde{\rho }\) below the average and another with a \(\widetilde{\rho }\) above the average. The average \(\widetilde{\rho }\) of all data sets used was 96.17%. The below-average data set had a mean \(\widetilde{\rho }\) = 88.4%, and a predicted mean \(\widetilde{\rho }\) = 88.9%. In contrast, the above-average dataset had a mean \(\widetilde{\rho }\) = 98.8%, and a predicted mean \(\widetilde{\rho }\) = 98.6%.

The simple regression model overestimated data that had \(\widetilde{\rho }\) below average, but underestimated data that had \(\widetilde{\rho }\) above average. This inclination to underestimate above-average \(\widetilde{\rho }\) increases the likelihood that the model will judge data with actual \(\widetilde{\rho }\) ≥ 98% as being <98%. Consequently, this tendency results in a decrease in recall and an increase in precision.

The simple classification model obtained results opposite to those of the simple regression model due to the classification model’s method of converting the target value to 1 when \(\widetilde{\rho }\) ≥ 98% and 0 otherwise. With 57.78% of the data having \(\widetilde{\rho }\) ≥ 98%, so more target values were 1 than 0. This difference in frequency causes the model to favor a judgment of 1, because it is more likely to be correct than a judgement of 0. In fact, the proportion of data predicted with \(\widetilde{\rho }\) ≥ 98% in the simple classification model was 58.7%, confirming that the predictions are biased, leading to lower precision and higher recall.

However, the present model mitigates these flaws by using a sigmoid function to normalize the target. The sigmoid-converted had an average \(\widetilde{\rho }\) = 0.5019. Because the model is technically a regression model, the problem of underestimating values when they are ≥ 0.5019 and overestimating them when they are <0.5019 persists. The dataset with above-average \(\widetilde{\rho }\) had average \(\widetilde{\rho }\) = 0.7654, and a predicted average \(\widetilde{\rho }\) = 0.7369. The dataset with below-average \(\widetilde{\rho }\) had average \(\widetilde{\rho }\) = 0.1445, and a predicted average \(\widetilde{\rho }\) = 0.1864.

However, the average \(\widetilde{\rho }\) of the entire dataset is close to the classification point of 0.5, so slight underestimations or overestimations do not significantly affect the classification result. This result occurs because not all data with \(\widetilde{\rho }\) ≥ 98% are underestimated, unlike the results of the regression model. In addition, the present model is not strictly a classification model, so it does not suffer from bias due to data frequency. Consequently, the present model achieves higher accuracy than the other two models, despite only moderately high recall and precision.

Furthermore, the reason that the present model is more accurate than the simple regression model and the simple classification model can be explained as follows. The simple regression model is somewhat inefficient to minimize the prediction error per training iteration to precisely match the process conditions that have \(\widetilde{\rho }\) ≥ 99.5% or ≤ 96%, when accurate matching of values is not necessary. The simple classification model has a different problem: for example, \(\widetilde{\rho }\) = 97.9999 is classified as 0, but \(\widetilde{\rho }\) = 98 is classified as 1, despite the negligible difference of 0.0001. In addition, both 100 and 98 are classified as 1, whereas both 97 and 80 are classified as 0. This binning into 1 or 0 loses information or trends that could be gleaned from the true values of \(\widetilde{\rho }\). This loss reduces the model’s accuracy and diminishes the accuracy when analyzing the model. Hence, the present model effectively overcomes these limitations of the other models and is therefore more reliable than they are for predicting \(\widetilde{\rho }\).

SHAP analysis of the input features

Shapley additive explanations (SHAP)23,24 analysis was conducted to uncover the correlations among process parameters (Ed, P, v, h, t), material properties of powder (thermal conductivity k [W/(m ⋅ K)], material density ρm [g/cm3], melting point TM [˚C], reflectivity R [%], and specific heat capacity Cp [J/(g ⋅ K)]), and \(\widetilde{\rho }\). The SHAP score quantifies the extent and direction of each feature’s contribution to the model’s prediction. The SHAP score enables detection of whether a particular feature increases or decreases the predicted value and the magnitude of its effect. The average of the absolute values of the SHAP score quantifies the effect of each feature on the model’s prediction; i.e., the importance of a given feature within the model.

The input features in the present model were composed of process parameters and material parameters of the powder. SHAP analysis (Fig. 2a) ranked the importance of process parameters as v > P > t > h, and the importance of material properties as k > ρm > TM > R > Cp. Although the degree of importance varied among the features, this analysis indicates that they all contribute to the prediction to some extent.

Description: a Average absolute SHAP scores of input features, highlighting the relative importance of process parameters and material properties of powder. Visualization of the SHAP main scores for b energy density, c scan speed, d laser power, e thermal conductivity, f density, g melting point, h reflectivity, i layer thickness, j hatch spacing, and k specific heat capacity and their effects on the model’s output, relative density. Source data are provided as a Source Data file.

The SHAP score can be divided into a main effect and an interaction effect. A main effect represents the isolated contribution of a single feature to the prediction, and signifies the individual impact of that feature. An interaction effect accounts for the combined influence of two features on the prediction, highlighting the contribution resulting from their interaction.

The contribution of each feature’s main effect on the model’s prediction \(\widetilde{\rho }\) was visualized by calculating the SHAP main values (Fig. 2b–k). The overall trend of these SHAP main values is represented by lines calculated using locally weighted regression, to clarify the tendencies.

Each process parameter had a distinct effect on \(\widetilde{\rho }\). When Ed was less than ~50 J/mm³, \(\widetilde{\rho }\) decreased significantly (Fig. 2b), possibly because energy delivered to the powder was insufficient to complete its fusion. When scan speed was excessively high or excessively low \(\widetilde{\rho }\) decreased (Fig. 2c), possibly because inappropriate scan speed leads to increased porosity caused by lack of fusion or vaporization. High P increased \(\widetilde{\rho }\) (Fig. 2d); at very low P, \(\widetilde{\rho }\) dropped substantially due to lack of fusion. Increasing t reduced predicted \(\widetilde{\rho }\) (Fig. 2i), because powders below the laser-irradiated area may not receive enough thermal energy melt. h reduced \(\widetilde{\rho }\) if h is too small or too large (Fig. 2j); we speculate that too-small h causes heat concentration and keyhole formation, whereas too-large h results in lack of fusion and increased porosity due to unmelted areas between scan tracks.

Material parameters also had distinct effects. As k decreased, \(\widetilde{\rho }\) increased (Fig. 2e). Low k means that thermal energy remains concentrated in the laser-irradiated area, so melting can be homogeneous. Increase in k causes increase in the Ed that is required to achieve homogeneous melting25. ρm had a threshold effect (Fig. 2f): above a certain level it reduced the predicted \(\widetilde{\rho }\). This result implies that ρm affects the model’s prediction by interactions with other inputs; this possibility will be analyzed later. TM did not seem to have a significant effect on the predicted \(\widetilde{\rho }\) (Fig. 2g), except that a very low TM did decrease the predicted \(\widetilde{\rho }\). Materials that have low TM also have low boiling points, so they are likely to vaporize; this process causes keyhole formation and reduced \(\widetilde{\rho }\). Increase in R decreased the predicted \(\widetilde{\rho }\) (Fig. 2h), because high R reduces energy transfer from the laser to the powder, so homogeneous melting is difficult to achieve. Cp also showed a threshold effect (Fig. 2k): above a certain level it reduced the predicted \(\widetilde{\rho }\). The effect of Cp is influenced by interactions with other inputs, and will be analyzed later.

SHAP analysis of interactions between input features

The contribution of input feature interactions to the model’s prediction was visualized by calculating the SHAP interaction values (Fig. 3). All computed SHAP interaction values are grouped based on whether one of the input features used in each interaction calculation has a high or low value. To clearly identify the trends within each group, a line to represent the tendency of the SHAP interaction scores was calculated using locally weighted regression.

Description: Visualization of the SHAP interaction values for a interaction between laser power and scan speed, b interaction between laser power and thermal conductivity, c interaction between scan speed and thermal conductivity, e interaction between hatch spacing and scan speed, f interaction between density and scan speed, and g interaction between heat capacity per volume and scan speed. d Visualization of the combined SHAP scores, representing the sum of SHAP interaction scores for scan speed and thermal conductivity, and the SHAP main scores for scan speed. h Visualization of the combined SHAP scores, representing the sum of SHAP interaction scores for scan speed and heat capacity per volume, and the SHAP main scores for scan speed. Source data are provided as a Source Data file.

The SHAP interaction values between P and v are illustrated in Fig. 3a. The SHAP interaction score was negative when low P was combined with high v; i.e., this interaction decreases predicted \(\widetilde{\rho }\). A lack-of-fusion phenomenon, which leads to a drop in \(\widetilde{\rho }\), occurs under these conditions. However, the SHAP interaction score was also negative when high P was combined with low v; i.e., this interaction also decreases predicted \(\widetilde{\rho }\). Under such conditions, keyhole formation, which reduces \(\widetilde{\rho }\), occurs. When P and v are appropriately balanced, their interaction increases \(\widetilde{\rho }\).

This analysis demonstrates that the trained ML model effectively recognizes the lack-of-fusion and keyhole-formation phenomena, which cause a drop in \(\widetilde{\rho }\). Consequently, the model’s deep understanding of the processes occurring in L-PBF enables insights through this analysis of interactions between input features. However, not all SHAP interaction scores exhibit a clear correlation. Only relationships between two features can be analyzed, so changes in the SHAP interaction score due to the influence of other features cannot be fully explained.

The interactions between input features and their corresponding averaged absolute SHAP scores were sorted in descending order (Table 2). Interaction analyses were performed only for interactions that had average absolute SHAP interaction scores ranking in the top 5. Interactions that involved Ed, a dependent input feature determined by other input features, are not displayed in Table 2 and are not included in the analysis.

The SHAP interaction values between k and P are displayed in Fig. 3b. For materials with high k, increasing P contributes to an increase in \(\widetilde{\rho }\), because a material with high k demands a higher P to achieve homogeneous melting, as heat dissipates more rapidly into the surroundings than with materials of low k when subjected to the same P. In contrast, for materials with low k, use of a low P increased the predicted \(\widetilde{\rho }\), possibly because adequate heat energy for melting is attained even at low P, because heat is retained near the irradiation site.

k and v showed a complex interaction (Fig. 3c), which is challenging to analyze using only the SHAP interaction scores. Consequently, an analysis was conducted using the sum of the SHAP interaction score for both features and the SHAP main score for v (‘SHAP Value’, Fig. 3d). For materials with high k, the range of appropriate v to increase \(\widetilde{\rho }\) is broader than the range for the materials with low k. In materials with low k, heat remains concentrated in the laser-irradiated area, and we suggest that lack of fusion may occur if v is too fast, and vaporization may occur if v is too slow. In contrast, materials that have high k are less prone to these effects, and therefore less sensitive to variation in v, than materials that have low k.

The SHAP interaction values between v and h are presented in Fig. 3e. At high v, increasing the h increased \(\widetilde{\rho }\), whereas at low v, reducing the h increased \(\widetilde{\rho }\). This finding contradicts the Ed equation (Eq. 1). If a certain material allows for a range of Ed, then reducing \(h\) to stay within that range would increase \(\widetilde{\rho }\) as v rises. However, the SHAP interaction value analysis suggests that the \(\widetilde{\rho }\) would be lowered in this case.

The reason for this discrepancy is that the SHAP score represents the contribution of the corresponding input feature or interaction to each predicted value. If both v and h are excessively large or small, Ed will be outside the appropriate range and will contribute to a significant decrease in \(\widetilde{\rho }\). For instance, when STS 316 L has process conditions with h = 0.15 mm and v ≥ 1200 mm/s, the Ed is outside the appropriate range, so the SHAP score of the Ed parameter rapidly decreases (Supplementary Fig 1).

As long as Ed remains within an appropriate range, increasing both v and h can increase \(\widetilde{\rho }\). In situations where P must be increased, increasing only one of v and h to maintain an adequate Ed may result in a slight decrease in \(\widetilde{\rho }\).

To better understand the interaction between v and ρm, a new input feature was created as heat capacity per volume Cp ⋅ ρm = Cv [J/(K∙cm3)] and a new model was trained with Cv and without Cp or ρm. Use of Cv and removal of Cp and ρm led to a slight loss of information represented by each input feature, so the model’s accuracy decreased to 85.6%. Furthermore, the complex trend of the SHAP interaction between v and Cv impedes the identification of the interaction by using only the SHAP interaction score. Therefore, analysis was conducted using the SHAP value obtained by adding the SHAP interaction value between v and Cv, and the SHAP main score of v.

The interactions between v and ρm (Fig. 3f) and between v and Cv (Fig. 3g) showed similar tendencies. This observation suggests that in the present model, ρm rather than Cp could be representing Cv. This effect can occur because ρm has a larger normalized distribution width than Cp. Variation in Cp across the materials in the dataset is relatively minor, so the suggestion that Cv is predominantly determined by ρm is plausible.

The SHAP main scores of ρm (Fig. 2f) and Cp (Fig. 2k) show that values above a certain threshold degrade \(\widetilde{\rho }\). This effect occurs because both ρm and Cp represent heat capacity per volume. A ρm or Cp implies a high Cv, so the necessary high temperature for melting in the required area cannot be easily achieved. Consequently, if ρm or Cp surpasses a certain level, lack of fusion is likely to occur, and this effect decreases \(\widetilde{\rho }\).

Increase in Cv indicates an increase in the amount of energy that is required to raise the temperature of a certain area (Fig. 3h); as a consequence, as Cv increases, the time required for the temperature to rise around the laser-irradiated area increases. Likewise, decrease in k slows the rate of increase in surrounding temperature. These effects suggest that the interaction between k and v when k is low shows a similar tendency to the interaction between Cv and v when Cv is high. Similarly, when k is high and Cv is low, the interaction between each feature and scan speed displays a similar tendency. In summary, the interactions between Cv and v exhibit opposite trends to the interactions between k and v, because the temperature rise behavior around the laser-irradiated area is contrary to each other.

Prediction of process conditions and experimental validation

We have developed a method to predict process parameters for a particular powder, which are expected to yield a product that has \(\widetilde{\rho }\) ≥ 98%, by inversely predicting the present model. The goal of this method is to suggest process parameters with the highest likelihood of yielding products that have \(\widetilde{\rho }\) ≥ 98%, as determined by the ML model. We introduced a fitness function

to evaluate the reliability of predictions made by the present model, which produces prediction values between 0 and 1.

To perform inverse prediction of the present model, we performed random search (details in Methods section), which is an advantageous metaheuristic technique to find multiple optimal values. This approach is preferable, because the goal of our method is to suggest several high-certainty process parameters, rather than only the parameter that has the highest certainty. All process condition predictions in this study were conducted with a fixed layer thickness of 0.05 mm, because it was the most suitable for the size of the powder used in this study (d50 = 46 μm).

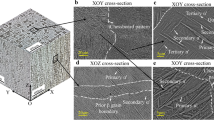

The validation was conducted using STS 316 L and AlSi10Mg, which are alloys included in the database used for model training. The process condition prediction and experimental verification for STS 316 L and AlSi10Mg (Tables 3 and 4) serve as tests to verify the model and method’s reproducibility. Optical micrographs (Fig. 4a, b) were obtained of the cut surfaces for the six STS 316 L specimens and the six AlSi10Mg specimens that had the highest \(\widetilde{\rho }\).

All 12 STS 316 L specimens had \(\widetilde{\rho }\) ≥ 98%; 11 had \(\widetilde{\rho }\) ≥ 99%. This result demonstrates the reliability of our model and method. The dataset for training the present model, including the test dataset, contains seven STS 316 L powder data entries26,27,28 with process conditions (141 ≤ P ≤ 398 W, 620 ≤ v ≤ 1540 mm/s, t = 0.05 mm, and 0.08 ≤ h ≤ 0.188 mm) (Supplementary Table 1), which are the ranges of process conditions in Table 3. Process condition No. 5 in Table 3 is similar to those in Supplementary Table 1, but the remaining conditions in Table 3 represent new, distinct process conditions. This result illustrates that our model and method effectively reproduce existing optimal processing conditions for the powder in use, and also generate novel optimal processing conditions.

On the other hand, compared to Fe-based alloys like STS 316 L, AlSi10Mg, an Al-based alloy, is more challenging for PBF processing. To get a more accurate reading of its relative density, we prepared multiple samples and averaged the values. The relative densities shown in Table 4 represent these average values. For more detailed information on each process and the exact relative densities, refer to Supplementary Table 2.

The results in Table 4 are promising: all 12 tested process conditions achieved an average \(\widetilde{\rho }\) over 98%. Impressively, 11 out of these 12 had average \(\widetilde{\rho }\) ≥ 99%. The dataset contains eight data entries29,30,31 for AlSi10Mg powder under specific process conditions (236 ≤ P ≤ 398 W, 980 ≤ v ≤ 2150 mm/s, t = 0.05 mm, and 0.08 ≤ h ≤ 0.148 mm) (Supplementary Table 3), which are the ranges of process conditions in Table 4. While process condition No. 9 in Table 4 is similar to those in Supplementary Table 3, the remaining conditions spotlight new and distinct process parameters. This reaffirms our model’s efficacy in both mirroring existing optimal conditions and pioneering novel ones.

To substantiate the transferability of our model and method, we conducted an experimental verification using Fe60Co15Ni15Cr10 MEA, which was not included in the database used to train the model. The prediction results of process conditions for Fe60Co15Ni15Cr10 MEA powder and the experimental verification of these conditions were arranged in order of decreasing \(\widetilde{\rho }\) of the manufactured specimens (Table 5). Optical micrographs (Fig. 4c) were obtained from the six Fe60Co15Ni15Cr10 MEA specimens had had the highest \(\widetilde{\rho }\).

To increase the reliability of our experimental results, we fabricated several specimens for each process condition, measured their \(\widetilde{\rho }\) (Supplementary Table 4), then averaged these values. For all 12 process conditions, specimens made with each process condition exhibited average \(\widetilde{\rho }\) ≥ 99%, which exceeds our established threshold of 98%. This result confirms the reliability and transferability of our model and method, and that they are applicable to generate several optimal process conditions that yield products with high \(\widetilde{\rho }\), regardless of the material used.

Our experimental results confirm that the method effectively generates process conditions that yield products with high \(\widetilde{\rho }\) for STS 316 L, AlSi10Mg, and Fe60Co15Ni15Cr10 MEA. However, our method does not assure optimal material properties, such as surface quality, other than \(\widetilde{\rho }\), because the primary focus of our model and method was to achieve high \(\widetilde{\rho }\). Consequently, although a specimen may achieve the desired \(\widetilde{\rho }\) under process conditions recommended by our method, other properties may be suboptimal. In future work, this limitation can be addressed by developing new models for other properties such as surface quality, and incorporating these models into our method.

In summary, we have successfully developed a method that can identify process parameters that yield a product that has \(\widetilde{\rho }\) ≥ 98%, in accordance with the properties of the powder used. This success was made possible by training a model that determines whether a product that was fabricated under specific process conditions and utilizing a particular powder has the \(\widetilde{\rho }\) ≥ 98%. By applying a sigmoid function to transform output, we mitigated bias in the dataset, and thereby increased the accuracy of our ML model. SHAP analysis provided insight into the model’s decision-making process, and reproduced well-understood interactions such as that between scan speed and laser power, while also uncovering knowledge regarding the effects of input features or interactions between these features on the \(\widetilde{\rho }\).

Our method, designed to generate process conditions conducive to high \(\widetilde{\rho }\), operates by using random search to inversely predict the present model. We substantiated the reproducibility and transferability of our model and method by predicting 12 process conditions for STS 316 L and AlSi10Mg powders of which data were included in the training dataset and Fe60Co15Ni15Cr10 MEA powder of which data were not included in the training dataset, respectively. Experimental validation affirmed that products fabricated using each powder and condition had \(\widetilde{\rho }\) ≥ 98%, and thus provided empirical evidence that our method can generate process conditions that lead to products with high \(\widetilde{\rho }\), even for previously unlearned alloys.

Methods

Dataset preparation

We collected 2167 process conditions for 50 metal powders and their corresponding \(\widetilde{\rho }\) from previous studies (references in supplementary materials) in which products were produced using respective process conditions and powders. The data include powder properties R, k, Cp, ρm. TM; process conditions P, v, t, h; and \(\widetilde{\rho }\).

In compiling our dataset, we favored \(\widetilde{\rho }\) data measured via image analysis but also included some data measured via the Archimedes method. This approach was adopted for two main reasons: firstly, the discrepancy between the two methods is typically minor within our low-porosity region of interest32,33, and the errors of relative densities far from 98% are disregarded as the relative density is normalized by the sigmoid function. Secondly, as supported by Halevy et al.34, larger datasets with some measurement errors are generally more beneficial for training and generalization than smaller, flawless datasets. It is important to note that that even data measured solely by image analysis might not be entirely free from experimental errors.

While other conditions including machine type and laser diameter could potentially serve as meaningful input features, we consciously decided to limit the number of input features due to the size of our dataset. Adding more input features would increase the dimensionality of the dataset’s input space, which, without a corresponding increase in data quantity, can lead to the “curse of dimensionality“35. This phenomenon can hinder effective learning and decrease the interpretability of the model36. As such, we focused on major process conditions, P, v, t, and h, as our model’s input features.

Our data compilation strategy ensures the dataset is complete, with no missing values. The powder properties were predominantly sourced from existing literature. When direct collection was not feasible, we computed the properties, as we will discuss in further detail later. The process conditions, P, v, t, and h, are typically reported in most PBF-related research publications. We ensured data quality by excluding any studies that did not provide these crucial details, thus maintaining the integrity of our dataset.

As we mentioned above, most powder properties were obtained from the literature37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54. But for some powders, Cp and TM were not available, so we estimated them by thermodynamic calculation using the TCFE2000 thermodynamic database and its upgraded version55,56,57,58,59 with Thermo-Calc software60. R of almost all alloy powders could not be found in the literature, we calculated R as a weighted arithmetic average of the composition, assuming that the alloy powder reflectivity followed the rule of mixture. R of pure elements for laser with a wavelength of 1 μm was also collected from literature61,62,63,64,65,66,67,68. For Fe60Co15Ni15Cr10 MEA, we calculated its properties or measured them experimentally. For MEA, we used thermodynamic calculation to determine its TM = 1461.75 °C, and computed its R = 66.15% as the weighted arithmetic average of the composition.

We further calculated the thermal conductivity of the MEA as

where \(\alpha\) = 3.739 mm2/s is thermal diffusivity, as measured using the laser flash technique (ASTM E1461, LFA 467, NETZSCH, Germany), ρm = 7.91 g/cm3 was determined using Archimedes’ principle (XP205, Mettler Toledo, USA), and Cp = 0.462 J/(g ⋅ K) was measured using a Differential Scanning Calorimeter (DSC, DSC8000, PerkinElmer, USA). The collected dataset is provided in Supplementary Data 1.

ML Model

In this study, we aimed to develop the most accurate model to address our problem; for this purpose, we selected the most suitable among a range of ML algorithms. We trained regression models with sigmoid-transformed outputs using several algorithms, all operating on the same training and test datasets. The highest test set accuracies were obtained using the Random Forest (86.2%) and XGBoost (85.8%) algorithms (Table 6).

To further improve these results, we optimized the hyperparameters of the Random Forest and XGBoost models, by using the hyperparameter optimization framework, Optuna69. We then trained 100 versions of each model, with shuffled training, validation, and test datasets. Comparing the average test set accuracy of the models, the Random Forest model achieved an accuracy of 85.7% and XGBoost achieved 87%. Consequently, we selected the XGBoost algorithm to train our final model. More details about the XGBoost model and the others are in the supplementary materials.

The XGBoost model was developed using the XGBoost library19, an open-source software library providing a gradient boosting framework. All other models were developed using scikit-learn70 a free software ML library for Python. The input data for all models was standardized using mean and standard deviation values. To accurately evaluate the models’ performances, we applied the holdout validation method. This technique randomly partitions the dataset into training, validation, and test sets. In our application, we allocated 70% of the data for training purposes, while the remaining 30% was equally partitioned between validation and testing, each constituting 15%.

Our primary model is an XGBoost model, which uses a tree structure, so we used tree SHAP (tree explainer)24 to calculate the SHAP23 scores. We analyzed our model by extracting the SHAP main scores and SHAP interaction scores by using the tree explainer function, which enables calculation of SHAP interaction values.

Method for prediction of process conditions

The method detailed here outlines how to identify process conditions that are expected to yield products that have \(\widetilde{\rho }\) ≥ 98%, considering the specific powder used, by inverse prediction of the present model. This technique incorporates a form of random search that involves three primary steps: random input generation, prediction, and selection.

The process initiates with the input of powder properties and the determination of process parameters, including which are fixed, and their values. Then the random-input-generation step produces even number of sets of inputs within the range of existing data for each input in the dataset. Subsequently, during the prediction step, these sets of inputs are standardized using the mean and standard deviation used during training, then fed into the model that had been trained to predict \(\widetilde{\rho }\).

The present model outputs values between 0 and 1, which are relative densities transformed by the sigmoid function, so they can be converted to Certainty (Eq. 3). The following step selects the half of the input sets that have the highest predicted value. However, any input sets that have process conditions that are deemed too similar to others are discarded before this selection process. The criterion for similarity is defined as:

where \({a}_{i}\) and \({b}_{i}\) represent the standardized process parameters of two input sets.

To replace discarded sets, new random input sets are generated. By repeating this process, we can identify process conditions that are expected to yield products that have high \(\widetilde{\rho }\). The iteration stops when the sets of inputs selected remain consistent for a certain number of iterations.

This method to predicts optimal process conditions considering the powder used, has been incorporated into a GUI program. This program, along with its user manual, is available for download at https://doi.org/10.5281/zenodo.838289071. For optimal printing results, we recommend using a layer thickness that exceeds the d50 size of the powder used in the process.

Experimental procedure

To evaluate the accuracy of our prediction method, we printed coupon samples of 316 L stainless steel, AlSi10Mg, and Fe60Co15Ni15Cr10 MEA, then measured their porosities. The metallic powder used for the L-PBF process was gas-atomized spherical powder with a size distribution of d50 = 46 μm (MK Inc., Republic of Korea). Using a commercial L-PBF machine (Concept Laser M2, GE Additive, USA), we printed cubic coupons of dimensions 10 × 10 × 10 mm³. Process parameters were selected for model validation (Tables 3, 5). Other process parameters: layer thickness of 50 μm, a laser spot size of 50 μm, a layer-by-layer rotation angle of 90°, and printing in N2 atmosphere, were maintained across all experiments.

The porosity of the samples was analyzed from the XZ-plane (where X denotes the powder coating direction and Z represents the building direction). The coupons were cut along this plane and subsequently polished mechanically to a 1200 mesh with emery paper. We then captured optical microscopic images of the polished sections and used ImageJ software to analyze and measure the porosity.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The raw data generated in this study are available within the article and its supplementary materials. The same dataset has been deposited in the Figshare repository under the https://doi.org/10.6084/m9.figshare.2420379972. There are no restrictions on data access. Source data are provided with this paper.

Code availability

The codes generated in this study, along with the GUI program designed to predict process conditions for various metallic powders, are available for download at https://doi.org/10.5281/zenodo.8382890.71

References

Bibb, R. Physical reproduction – rapid prototyping technologies. Med. Model https://doi.org/10.1533/9781845692001.59 (2006).

Ahmed, N., Barsoum, I., Haidemenopoulos, G. & Al-Rub, R. K. A. Process parameter selection and optimization of laser powder bed fusion for 316L stainless steel: a review. J. Manuf. Process. 75, 415–434 (2022).

Al-Maharma, A. Y., Patil, S. P. & Markert, B. Effects of porosity on the mechanical properties of additively manufactured components: a critical review. Mater. Res. Express 7, 122001 (2020).

Olakanmi, E. O., Cochrane, R. F. & Dalgarno, K. W. Densification mechanism and microstructural evolution in selective laser sintering of Al–12Si powders. J. Mater. Process. Technol. 211, 113–121 (2011).

Prashanth, K. G., Scudino, S., Maity, T., Das, J. & Eckert, J. Is the energy density a reliable parameter for materials synthesis by selective laser melting? Mater. Res. Lett. 5, 386–390 (2017).

Aoyagi, K., Wang, H., Sudo, H. & Chiba, A. Simple method to construct process maps for additive manufacturing using a support vector machine. Addit. Manuf. 27, 353–362 (2019).

Silbernagel, C., Aremu, A. & Ashcroft, I. Using machine learning to aid in the parameter optimisation process for metal-based additive manufacturing. Rapid Prototyp. J. 26, 625–637 (2020).

Suzuki, A., Shiba, Y., Ibe, H., Takata, N. & Kobashi, M. Machine-learning assisted optimization of process parameters for controlling the microstructure in a laser powder bed fused WC/Co cemented carbide. Addit. Manuf. 59, 103089 (2022).

Hertlein, N., Deshpande, S., Venugopal, V., Kumar, M. & Anand, S. Prediction of selective laser melting part quality using hybrid Bayesian network. Addit. Manuf. 32, 101089 (2020).

Liu, Q. et al. Machine-learning assisted laser powder bed fusion process optimization for AlSi10Mg: new microstructure description indices and fracture mechanisms. Acta Mater. 201, 316–328 (2020).

Rankouhi, B., Jahani, S., Pfefferkorn, F. E. & Thoma, D. J. Compositional grading of a 316L-Cu multi-material part using machine learning for the determination of selective laser melting process parameters. Addit. Manuf. 38, 101836 (2021).

Toprak, C. B. & Dogruer, C. U. Neuro-fuzzy modelling methods for relative density prediction of stainless steel 316L metal parts produced by additive manufacturing technique. J. Mech. Sci. Technol. 37, 107–118 (2023).

Liu, J. et al. A review of machine learning techniques for process and performance optimization in laser beam powder bed fusion additive manufacturing. J. Intell. Manuf. https://doi.org/10.1007/S10845-022-02012-0/METRICS (2022).

Sing, S. L., Kuo, C. N., Shih, C. T., Ho, C. C. & Chua, C. K. Perspectives of using machine learning in laser powder bed fusion for metal additive manufacturing. Virtual Phys. Prototyp. 16, 372–386 (2021).

Cacace, S. & Semeraro, Q. Fast optimisation procedure for the selection of L-PBF parameters based on utility function. Virtual Phys. Prototyp. 17, 125–137 (2022).

Wen, S. et al. High-density tungsten fabricated by selective laser melting: densification, microstructure, mechanical and thermal performance. Opt. Laser Technol. 116, 128–138 (2019).

Yamamoto, T., Hara, M. & Hatano, Y. Effects of fabrication conditions on the microstructure, pore characteristics and gas retention of pure tungsten prepared by laser powder bed fusion. Int. J. Refract. Met. Hard Mater. 95, 105410 (2021).

Ozsoy, A., Yasa, E., Keles, M. & Tureyen, E. B. Pulsed-mode selective laser melting of 17-4 PH stainless steel: effect of laser parameters on density and mechanical properties. J. Manuf. Process. 68, 910–922 (2021).

Chen, T. & Guestrin, C. XGBoost: A Scalable Tree Boosting System. in Proc. 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 785–794 (ACM, 2016).

Fonseca, E. B. et al. Assessment of laser power and scan speed influence on microstructural features and consolidation of AISI H13 tool steel processed by additive manufacturing. Addit. Manuf. 34, 101250 (2020).

Ren, Y. et al. Investigation of mechanical properties and microstructures of GH536 fabricated by laser powder bed fusion additive manufacturing. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 235, 155–165 (2021).

Kim, E. S., Haftlang, F., Ahn, S. Y., Gu, G. H. & Kim, H. S. Effects of processing parameters and heat treatment on the microstructure and magnetic properties of the in-situ synthesized Fe-Ni permalloy produced using direct energy deposition. J. Alloy. Compd. 907, 164415 (2022).

Lundberg, S. M., Allen, P. G. & Lee, S.-I. A Unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 30, (2017).

Lundberg, S. M. et al. From local explanations to global understanding with explainable AI for trees. Nat. Mach. Intell. 2, 56–67 (2020).

Buchbinder, D., Schleifenbaum, H., Heidrich, S., Meiners, W. & Bültmann, J. High power selective laser melting (HP SLM) of aluminum parts. Phys. Procedia 12, 271–278 (2011).

Delgado, J., Ciurana, J. & Rodríguez, C. A. Influence of process parameters on part quality and mechanical properties for DMLS and SLM with iron-based materials. Int. J. Adv. Manuf. Technol. 60, 601–610 (2012).

Sun, Z., Tan, X., Tor, S. B. & Yeong, W. Y. Selective laser melting of stainless steel 316L with low porosity and high build rates. Mater. Des. 104, 197–204 (2016).

Cherry, J. A. et al. Investigation into the effect of process parameters on microstructural and physical properties of 316L stainless steel parts by selective laser melting. Int. J. Adv. Manuf. Technol. 76, 869–879 (2015).

Hyer, H. et al. Understanding the laser powder bed fusion of AlSi10Mg alloy. Metallogr. Microstruct. Anal. 9, 484–502 (2020).

Hastie, J. C., Kartal, M. E., Carter, L. N., Attallah, M. M. & Mulvihill, D. M. Classifying shape of internal pores within AlSi10Mg alloy manufactured by laser powder bed fusion using 3D X-ray micro computed tomography: Influence of processing parameters and heat treatment. Mater. Charact. 163, 110225 (2020).

Wang, P., Salandari-Rabori, A., Dong, Q. & Fallah, V. Effect of input powder attributes on optimized processing and as-built tensile properties in laser powder bed fusion of AlSi10Mg alloy. J. Manuf. Process. 64, 633–647 (2021).

Paraschiv, A., Matache, G., Condruz, M. R., Frigioescu, T. F. & Pambaguian, L. Laser powder bed fusion process parameters’ optimization for fabrication of dense IN 625. Materials 15, 5777 (2022).

Kreitcberg, A., Brailovski, V. & Prokoshkin, S. New biocompatible near-beta Ti-Zr-Nb alloy processed by laser powder bed fusion: process optimization. J. Mater. Process. Technol. 252, 821–829 (2018).

Halevy, A., Norvig, P. & Pereira, F. The unreasonable effectiveness of data. IEEE Intell. Syst. 24, 8–12 (2009).

Bellman, R. Dynamic programming. Science 153, 34–37 (1966).

Li, J. et al. Feature selection: a data perspective. ACM Comput. Surv. 50, 94 (2017).

Aboulkhair, N. T., Everitt, N. M., Ashcroft, I. & Tuck, C. Reducing porosity in AlSi10Mg parts processed by selective laser melting. Addit. Manuf. 1–4, 77–86 (2014).

ASM Handbook Committee. ASM Handbook Volume 2: Properties and Selection: Nonferrous Alloys and Special-Purpose Materials (ASM International, 1990).

Qi, X., Takata, N., Suzuki, A., Kobashi, M. & Kato, M. Managing both high strength and thermal conductivity of a laser powder bed fused Al–2.5Fe binary alloy: Effect of annealing on microstructure. Mater. Sci. Eng. A 805, 140591 (2021).

Peckner, D. & Bernstein, I. M. Handbook of Stainless Steels (McGraw-Hill, 1977).

Welsch, G., Boyer, R. & Collings, E. W. Materials Properties Handbook: Titanium Alloys (ASM International, 1994).

ASM Handbook Committee. Metals Handbook Volume 3: Properties and Selection Stainless Steels, Tool Materials & Special-purpose Metal (ASM International, 1980).

Ma, H. et al. Influence of nano-diamond content on the microstructure, mechanical and thermal properties of the ZK60 composites. J. Magnes. Alloy. 10, 440–448 (2022).

Zhang, B. et al. An efficient framework for printability assessment in laser powder bed fusion metal additive manufacturing. Addit. Manuf. 46, 102018 (2021).

Zhang, Y. C., Franco, V., Peng, H. X. & Qin, F. X. Structure and magnetic study of Ni-Mn-Ga/Al composite with modified magnetocaloric properties and enhanced thermal conductivity. Scr. Mater. 201, 113956 (2021).

ASM Handbook Committee. ASM Handbook Volume 15: Casting (ASM International, 2008).

Avedesian, M. M. & Baker, H. ASM Specialty Handbook: Magnesium and Magnesium Alloys (ASM International, 1999).

Bauccio, M. ASM Metals Reference Book, 3rd Edition. (ASM International, 1993).

Ho, C. Y., Holt, J. M. & Mindlin, H. Structural Alloys Handbook: 1996 Edition (Cindas/Purdue Univ., 1996).

Boyer, H. E. & Gall, T. L. Metals Handbook: Desk Edition (ASM International, 1985).

Hyer, H. et al. Composition-dependent solidification cracking of aluminum-silicon alloys during laser powder bed fusion. Acta Mater. 208, 116698 (2021).

Aluminum Association. Aluminum Standards and Data (Aluminum Association, 2000).

Qi, Y. et al. A high strength Al–Li alloy produced by laser powder bed fusion: densification, microstructure, and mechanical properties. Addit. Manuf. 35, 101346 (2020).

Aluminum Association. Standards for Aluminum Sand and Permanent Mold Castings (Aluminum Association, 2000).

TCFE2000: The Thermo-Calc Steels Database, upgraded by B.-J. Lee, B. Sundman at KTH, KTH, Stockholm (1999).

Choi, W.-M. et al. A Thermodynamic modelling of the stability of sigma phase in the Cr-Fe-Ni-V high-entropy alloy system. J. Phase Equilibria Diffus. 39, 694–701 (2018).

Choi, W.-M. et al. A thermodynamic description of the Co-Cr-Fe-Ni-V system for high-entropy alloy design. Calphad 66, 101624 (2019).

Do, H.-S., Choi, W.-M. & Lee, B.-J. A thermodynamic description for the Co–Cr–Fe–Mn–Ni system. J. Mater. Sci. 57, 1373–1389 (2022).

Do, H.-S., Moon, J., Kim, H. S. & Lee, B.-J. A thermodynamic description of the Al–Cu–Fe–Mn system for an immiscible medium-entropy alloy design. Calphad 71, 101995 (2020).

Andersson, J. O., Helander, T., Höglund, L., Shi, P. & Sundman, B. Thermo-Calc & DICTRA, computational tools for materials science. Calphad 26, 273–312 (2002).

Wall, W. E. Optical Reflectivity and Auger Spectroscopy of Titanium and Titanium-oxygen Surfaces. (Georgia Institute of Technology, 1978).

Samsonov, G. V. Handbook of the Physicochemical Properties of the Elements (Springer Science & Business Media, 2012).

Coblentz, W. W. & Stair, R. Reflecting power of beryllium, chromium and several other metals. J. Res. Natl Bur. Stand. 2, 343–354 (1929).

Zhao, L. Surface polishing of niobium for superconducting radio frequency (SRF) cavity application. Diss. Theses, Masters Proj. https://doi.org/10.21220/nv91-e949 (2015).

Teodorescu, G. Radiative emissivity of metals and oxidized metals at high temperature. (2007).

Burgess, G. K. & Waltenberg, R. G. The emissivity of metals and oxides. (US Government Printing Office Washington, DC, USA, 1915).

Yu, Z., Geng, H. Y., Sun, Y. & Chen, Y. Optical properties of dense lithium in electride phases by first-principles calculations. Sci. Rep. 8, 1–14 (2018).

Ferraton, J. P., Ance, C., Kofman, R., Cheyssac, P. & Richard, J. Reflectance and thermoreflectance of gallium. Solid State Commun. 20, 49–52 (1976).

Akiba, T., Sano, S., Yanase, T., Ohta, T. & Koyama, M. Optuna: a next-generation hyperparameter optimization framework. (2019).

Pedregosa, F. et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Wang, J., Jeong, S. G., Kim, E. S., Kim H. S. & Lee, B.-J. Material-agnostic machine learning approach enables high relative density in powder bed fusion products., Zenodo, https://doi.org/10.5281/zenodo.8382890 (2023).

Wang, J., Jeong, S. G., Kim, E. S., Kim H. S. & Lee, B.-J. Dataset for the article named material-agnostic machine learning approach enables high relative density in powder bed fusion products., Figshare, https://doi.org/10.6084/m9.figshare.24203799 (2023).

Acknowledgements

This work has been financially supported by the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT, Korea (Grant No. NRF-2022R1A5A1030054 to B.-J.L. and Grant No. NRF-2022R1A2C2004331 to B.-J.L.).

Author information

Authors and Affiliations

Contributions

J.W.: conceptualization, methodology, software, validation, formal analysis, investigation, data curation, writing—original draft, visualization. S.G.J.: writing—review and editing, validation, formal analysis, investigation, resources. E.S.K.: conceptualization, investigation, resources. H.S.K.: supervision, project administration, funding acquisition. B.-J.L.: conceptualization, methodology, writing—review and editing, supervision, project administration, funding acquisition.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Source data

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, J., Jeong, S.G., Kim, E.S. et al. Material-agnostic machine learning approach enables high relative density in powder bed fusion products. Nat Commun 14, 6557 (2023). https://doi.org/10.1038/s41467-023-42319-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-023-42319-x

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.