Abstract

Quantum algorithms for simulating electronic ground states are slower than popular classical mean-field algorithms such as Hartree–Fock and density functional theory but offer higher accuracy. Accordingly, quantum computers have been predominantly regarded as competitors to only the most accurate and costly classical methods for treating electron correlation. However, here we tighten bounds showing that certain first-quantized quantum algorithms enable exact time evolution of electronic systems with exponentially less space and polynomially fewer operations in basis set size than conventional real-time time-dependent Hartree–Fock and density functional theory. Although the need to sample observables in the quantum algorithm reduces the speedup, we show that one can estimate all elements of the k-particle reduced density matrix with a number of samples scaling only polylogarithmically in basis set size. We also introduce a more efficient quantum algorithm for first-quantized mean-field state preparation that is likely cheaper than the cost of time evolution. We conclude that quantum speedup is most pronounced for finite-temperature simulations and suggest several practically important electron dynamics problems with potential quantum advantage.

Similar content being viewed by others

Introduction

Quantum computers were first proposed as tools for dynamics by Feynman1 and later shown to be universal for that purpose by Lloyd et al.2. Like those early papers, most work on this topic assumes that the advantage of quantum computers for dynamics is that they provide an approach to simulation with systematically improvable precision but without scaling exponentially. Here, we advance and analyze a different idea: certain (exact) quantum algorithms for dynamics may be more efficient than even classical methods that make uncontrolled approximations. By exact we mean that the time-evolved quantum state differs in 1-norm from the full configuration interaction dynamics within a basis by at most ϵ, regardless of the initial state, with a refinement cost scaling as \({{{{{{{\mathcal{O}}}}}}}}(\log (1/\epsilon ))\). We examine this in the context of simulating interacting fermions—systems of relevance in fields such as chemistry, physics, and materials science.

It is often the case that practically relevant ground-state problems in chemistry and materials science do not exhibit a strong correlation. For those problems, many classical heuristic methods work well3,4,5. Even for some strongly correlated systems, there are successful polynomial-scaling classical methods6. Here, we argue that even if electronic systems are well described by mean-field theory, quantum algorithms can achieve speedup over classical algorithms for simulating the time evolution of such systems. We focus on comparing to mean-field methods such as real-time time-dependent Hartree–Fock and density functional theory due to their popularity and well-defined scaling. Nonetheless, many of our arguments translate to advantages over other classical approaches to dynamics that are more accurate and expensive than mean-field methods. This is a sharp contrast to prior studies of quantum algorithms, which have focused on strongly correlated ground state problems such as FeMoCo7,8,9,10,11, P45012, chromium dimers13 and jellium14,15,16,17, assessing quantum advantage over only the most accurate and costly classical algorithms.

Quantum algorithms competitive with classical algorithms for efficient-to-simulate quantum dynamics have been analyzed in contexts outside of fermionic simulation. For example, work by Somma18 showed that certain one-dimensional quantum systems, such as harmonic oscillators, could be simulated with sublinear complexity in system size. Experimentally motivated work by Geller et al.19 also proposed simulating quantum systems in a single-excitation subspace, a task for which they suggested a constant factor speedup was plausible. However, neither work is connected to the context studied here.

In this work, we begin by analyzing the cost of classical mean-field dynamics and recent exact quantum algorithms in first quantization, focusing on why there is often a quantum speedup in the number of basis functions over classical mean-field methods. Next, we analyze the overheads associated with measuring quantities of interest on a quantum computer and introduce more efficient methods for measuring the one-particle reduced density matrix in the first quantization (which characterizes all mean-field observables). Then, we discuss the costs of preparing mean-field states on the quantum computer and describe new methods that make this cost likely negligible compared to the cost of time evolution. We conclude with a discussion of systems where these techniques might lead to a practical quantum advantage over classical mean-field simulations.

Results

Classical mean-field dynamics

Here we will discuss mean-field classical algorithms for simulating the dynamics of interacting systems of electrons and nuclei. Thus, we will focus on the ab initio Hamiltonian with η particles discretized using N basis functions, which can be expressed as

where \({a}_{\mu }^{({{{\dagger}}} )}\) is the fermionic annihilation (creation) operator for the μ-th orbital and integral values are given by

Here, V(r) is the external potential (perhaps arising from the nuclei) and ϕμ(r) represents a spatial orbital.

Exact quantum dynamics is encoded by the time-dependent Schrödinger equation given by

Mean-field dynamics, such as real-time time-dependent Hartree–Fock (RT-TDHF)20, employs a time-dependent variational principle within the space of single Slater determinants (i.e., anti-symmetrized product states) to approximate Eq. (4). Other methods with similar cost such as real-time time-dependent density functional theory (RT-TDDFT) rely on a relationship between the interacting system and an auxiliary non-interacting system to define dynamics within a space of single Slater determinants20,21,22. In both methods, there are η occupied orbitals, each expressed as a linear combination of N basis functions using the coefficient matrix, Cocc. The dimension of Cocc is N × η. These orbitals constitute a Slater determinant, requiring \({{{{{{{\mathcal{O}}}}}}}}(N\eta \log (1/\epsilon ))\) space for classical storage.

As a result of this approximation, we solve the following effective time-dependent equation for the occupied orbital coefficients that specify the Slater determinant Cocc(t) at a given moment in time:

where the effective one-body mean-field operator F(t), also known as the time-dependent Fock matrix, is

with \({{{{{{{\bf{P}}}}}}}}(t)={{{{{{{{\bf{C}}}}}}}}}_{{{{{{{{\rm{occ}}}}}}}}}(t){({{{{{{{{\bf{C}}}}}}}}}_{{{{{{{{\rm{occ}}}}}}}}}(t))}^{{{{\dagger}}} }\). While F(t) is an N × N dimensional matrix, we can apply it to Cocc(t) without explicitly constructing it, thus avoiding a space complexity of \({{{{{{{\mathcal{O}}}}}}}}({N}^{2}\log (1/\epsilon ))\). Using the most common methods of applying this matrix to update each of η occupied orbitals in Cocc(t) requires \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}({N}^{2}\eta )\) total operations. (Throughout the paper we use the convention that \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(\cdot )\) implies suppressing polylogarithmic factors).

However, a recent technique referred to as occ-RI-K by Head-Gordon and co-workers23, and similarly Adaptively Compressed Exchange (ACE)24,25 by Lin and co-workers, further reduces this cost. These methods leverage the observation that, when restricted to the subspace of the η occupied orbitals, the effective rank of the Fock operator scales as \({{{{{{{\mathcal{O}}}}}}}}(\eta )\). This gives an approach to updating the Fock operator that requires only

operations. Below we will use gate complexity and the number of operations interchangeably when discussing the scaling of classical algorithms. Although these techniques are not implemented in every quantum chemistry code, we regard them as the main point of comparison to quantum algorithms. We also note that RT-TDDFT with hybrid functionals26 has the same scaling as RT-TDHF. Simpler RT-TDDFT methods (i.e., those without exact exchange) can achieve better scaling, \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(N\eta )\) in a plane wave basis, but are often less accurate.

For finite-temperature simulation, one often needs to track M > η orbitals with appreciable occupations, increasing the space complexity to \({{{{{{{\mathcal{O}}}}}}}}(NM\log (1/\epsilon ))\). This increases the cost of occ-RI-K or ACE mean-field updates to \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(N{M}^{2})\). At temperatures well above the Fermi energy, most orbitals have appreciable occupations so M ≃ N. More expensive methods for dynamics that include electron correlation in the dynamics tend to scale at least linearly in the cost of ground state simulation at that level of theory. Thus, speedup over mean-field methods implies speedup over more expensive methods.

In recent years, by leveraging the nearsightedness of electronic systems27, linear-scaling methods have been developed that achieve updates scaling as \({{{{{{{\mathcal{O}}}}}}}}(N)\)28. For RT-TDHF and RT-TDDFT, linear scaling comes from the fact that the off-diagonal elements of P fall off quickly with distance for the ground state29 and some low-lying excited states30 in a localized basis. One can show that for gapped ground states, the decay rate is exponential, whereas for metallic ground states, it is algebraic27. However, often such asymptotic behavior only onsets for very large systems, and the onset can be highly system-dependent. This should be contrasted with the scaling analyzed above and the scaling of quantum algorithms (vide infra) that onsets already at modest system sizes. Furthermore, the nearsightedness of electrons does not necessarily hold for dynamics of highly excited states and at high temperatures. It has also been suggested that one can exploit a low-rank structure of occupied orbitals using the quantized tensor train format31. Assuming the compression of orbitals in real space is efficient such that the rank does not grow with system size or the number of grid points, the storage cost is reduced to \(\tilde{{{{{{{{\mathcal{O}}}}}}}}}(\eta )\), and the update cost is \(\tilde{{{{{{{{\mathcal{O}}}}}}}}}({\eta }^{2})\). It is unclear how well compression can be realized for dynamics problems and finite-temperature problems, and to our knowledge, it has never been deployed for those purposes. Due to these limitations, we do not focus on comparing quantum algorithms to classical linear-scaling methods or quantized tensor train approaches.

We now discuss how many time steps are required to perform time evolution using classical mean-field approaches. The number of time steps will depend on the target precision as well as the total unitless time

where t is the duration of time-evolution and ∥⋅∥ denotes the spectral norm. This dependence on the norm of F is similar to what would be obtained in the case of linear differential equations despite the dependence on Cocc; see Supplementary Note 1 for a derivation. We can upper bound T by considering its scaling on a local basis, and with open boundary conditions. We find

where \(\delta={{{{{{{\mathcal{O}}}}}}}}({(\eta /N)}^{1/3})\) is the minimum grid spacing when taking cell volume proportional to η. The first term comes from the Coulomb operator, and the second comes from the kinetic energy operator.

We briefly describe how this scaling for the norm is obtained and refer the reader to Supplementary Note 1 for more details. The 1/δ2 term is obtained from the kinetic energy term in hμν. When diagonalized, that term will be non-zero only when μ = ν with entries scaling as \({{{{{{{\mathcal{O}}}}}}}}(1/{\delta }^{2})\) due to the ∇2 in the expression for hμν. That upper bounds the spectral norm for this diagonal matrix, and the spectral norm is unchanged under a change of basis. The η2/3/δ comes from the sum in the expression for Fμν. To bound the tensor norm of (μν∣λσ) − (μσ∣λν)/2 we can bound the norms of the two terms separately. For each, the tensor norm can be upper bounded by noting that the summing over μν, λσ with normalized vectors corresponds to transformations of the individual orbitals in the integral defining (μν∣λσ). Since orbitals cannot be any more compact than width δ, the 1/∣r1 − r2∣ in the integral averages to give \({{{{{{{\mathcal{O}}}}}}}}(1/\delta )\). There is a further factor of η2/3 when accounting for η electrons that cannot be any closer than η1/3δ on average.

The number of time steps required to effect evolution to within error ϵ depends on the choice of time integrator. Many options are available32,33,34, and the optimal choice depends on implementation details like the basis set and pseudization scheme, as well as the desired accuracy35. In Supplementary Note 1, we argue that the minimum number of time steps t/Δt one could hope for by using an arbitrarily high order integration scheme of this sort is T1+o(1)/ϵo(1). In particular, for an order k integrator, the error can be bounded as \({{{{{{{\mathcal{O}}}}}}}}({(\parallel {{{{{{{\bf{F}}}}}}}}\parallel {{\Delta }}t)}^{k+1})\), with a possibly k-dependent constant factor that is ignored in this expression. That means the error for t/Δt time steps is \({{{{{{{\mathcal{O}}}}}}}}(t\parallel {{{{{{{\bf{F}}}}}}}}{\parallel }^{k+1}{{\Delta }}{t}^{k})\). To obtain error no more than ϵ, take \(({t/{{\Delta }}t})^{k}={{{{{{{\mathcal{O}}}}}}}}\left(\right.(t\parallel {{{{{{{\bf{F}}}}}}}}{\parallel }^{k+1}/\epsilon )\), so the number of time steps is \(t/{{\Delta }}t={{{{{{{\mathcal{O}}}}}}}}({T}^{1+1/k}/{\epsilon }^{1/k})\). Plugging Eq. (9) into Eq. (8) and multiplying the update cost in Eq. (7) by T1+o(1)/ϵo(1) time steps, we find the number of operations required for classical mean-field time-evolution is

Finally, when performing mean-field dynamics, the central quantity of interest is often the one-particle reduced density matrix (1-RDM). The 1-RDM is an N × N matrix defined as a function of time with matrix elements

The 1-RDM is the central quantity of interest because it can be used to reconstruct any observable associated with a Slater determinant efficiently. For more general states, one would also need higher order RDMs; however, all higher order RDMs can be exactly computed from the 1-RDM via Wick’s theorem when the wavefunction is a single Slater determinant36. Thus, when mean-field approximations work well, the time-dependent 1-RDM can also be used to compute multi-time correlators such as Green’s functions and spectral functions.

Exact quantum dynamics in first quantization

One of the key advantages of some quantum algorithms over mean-field classical methods is the ability to perform dynamics using the compressed representation of first quantization. First-quantized quantum simulations date back to refs. 37,38,39,40. They were first applied to fermionic systems in ref. 38 and developed for molecular systems in refs. 41,42. In first quantization, one encodes the wavefunction using η different registers (one for each occupied orbital), each of size \(\log N\) (to index the basis functions comprising each occupied orbital). The space complexity of first-quantized quantum algorithms is \({{{{{{{\mathcal{O}}}}}}}}(\eta \log N)\).

As described previously, mean-field classical methods require space complexity of \({{{{{{{\mathcal{O}}}}}}}}(N\eta \log (1/\epsilon ))\) where ϵ is the target precision. Thus, these quantum algorithms require exponentially less space in N. Usually, when one thinks of quantum computers more efficiently encoding representations of quantum systems, the advantage comes from the fact that the wavefunction might be specified by a Hilbert space vector of dimension \(\left(\begin{array}{l}N\\ \eta \end{array}\right)\) and could require as much space to represent explicitly on a classical computer. However, this alone cannot give exponential quantum advantage in storage in N over classical mean-field methods since mean-field methods only resolve entanglement arising from antisymmetry and do not attempt to represent wavefunction in the full Hilbert space. Instead, the scaling advantage these quantum algorithms have over mean-field methods is related to the ability to store the distribution of each occupied orbital over N basis functions, using only \(\log N\) qubits. But quantum algorithms require more than the compressed representations of first quantization in order to realize a scaling advantage over classical mean-field methods; they must also have sufficiently low gate complexity.

Here we review and tighten bounds for the most efficient known quantum algorithms for simulating the dynamics of interacting electrons. Early first-quantized algorithms for simulating chemistry dynamics such as refs. 41,42 were based on Trotterization of the time-evolution operator in a real space basis and utilized the quantum Fourier transform to switch between a representation where the potential operator was diagonal and the kinetic operator was diagonal. This enabled Trotter steps with gate complexity \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}({\eta }^{2})\) but the number of Trotter steps required for the approach of those papers scaled worse than linearly in N, η, the simulation time t and the desired inverse error in the evolution, 1/ϵ.

Leveraging recent techniques for bounding Trotter error43,44,45, in Supplementary Note 2 we show that using sufficiently high-order Trotter formulas, the overall gate complexity of these algorithms can be reduced to

This is the lowest reported scaling of any Trotter-based first-quantized quantum chemistry simulation. We remark that the N1/3η7/3t scaling is dominant whenever N < Θ(η3). In that regime, it represents a quartic speedup in basis size for propagation over the classical mean-field scaling given in Eq. (10). While efficient explicit circuits such as those in ref. 46 can be used to perform Trotter steps in this representation, more work would be required to determine the constant factors associated with the number of Trotter steps required. Prior analyses of the requisite Trotter number for chemistry have generally found that constant factors are low, but focused on different representations or lower order formulas16,47,48,49,50.

The first algorithms to achieve sublinear scaling in N were those introduced by Babbush et al.51. That work focused on first-quantized simulation in a plane wave basis and leveraged the interaction picture simulation scheme of ref. 52 to give gate complexity scaling as

When N > Θ(η4), this algorithm is more efficient than the Trotter-based approach. Since that is also the regime where the second term in Eq. (10) dominates that scaling, this represents a quintic speedup in N and a quadratic slowdown in η over mean-field classical algorithms. The work of Su et al.53 analyzed the constant factors in the scaling of this algorithm for use in ground state preparation via quantum phase estimation54. In Supplementary Note 3 of this work, we analyze the constant factors in the scaling of this algorithm when deployed for time evolution. Su et al.53 also introduced algorithms with the same scaling as Eq. (13) but in a grid representation (see Appendix K therein).

A key component of the algorithms of refs. 51,53 is the realization of block encodings55 with just \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(\eta )\) gates. The difficult part of block encoding is preparing a superposition state with amplitudes proportional to the square root of the Hamiltonian term coefficients. A novel quantum algorithm is devised in ref. 51, which scales only polylogarithmically in basis size. The N1/3 dependence of Eq. (13) enters via the number of times the block encoding must be repeated to perform time evolution, related to the norm of the potential operator. Suppose one can soften the Coulomb potential while retaining target precision for the simulation. In that case, the norm of the potential term can be reduced to polylogarithmic dependence on N (see Supplementary Note 4 for details). In that case, an exponential quantum advantage in N is possible.

We note that second-quantized algorithms outperform first-quantized quantum algorithms in gate complexity when N < Θ(η2). This is because while the best scaling Trotter steps in the first quantization require \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}({\eta }^{2})\) gates42, the best scaling Trotter steps in the second quantization require \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(N)\) gates. As recently shown in ref. 45, such approaches lead to a total gate complexity for Trotter-based second-quantized algorithms scaling as

In the limit that η = Θ(N), this approach has \({{{{{{{\mathcal{O}}}}}}}}({N}^{5/3})\) gate complexity, which is significantly less than the \({{{{{{{\mathcal{O}}}}}}}}({N}^{8/3})\) gate complexity of Trotter-based first-quantized quantum algorithms mentioned here, or the \({{{{{{{\mathcal{O}}}}}}}}({N}^{11/3})\) gate complexity of classical mean-field algorithms. (See Supplementary Note 5 for a discussion on the overall quantum speedup in different regimes of how N scales in η.) However, these second-quantized approaches generally require at least \({{{{{{{\mathcal{O}}}}}}}}(N)\) qubits. The approach used in ref. 45 to implement Trotter steps involves the fast multipole method56, which requires \({{{{{{{\mathcal{O}}}}}}}}(N\log N)\) qubits as well as the restriction to a grid-like basis. When using such basis sets, we expect N ≫ η, and so this space complexity would be prohibitive for quantum computers.

Methods such as fast multipole56, Barnes-Hut57, or particle-mesh Ewald58 compute the Coulomb potential in time \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(\eta )\) when implemented within the classical random access memory model. If the Coulomb potential could be computed with that complexity on a quantum computer it would speed up the first-quantized Trotter algorithms discussed here by a factor of \({{{{{{{\mathcal{O}}}}}}}}(\eta )\). However, it is unclear whether such algorithms extend to the quantum circuit model with the same complexity without unfavorable assumptions such as QRAM59,60, or without restricting the maximum number of electrons within a region of space (see Supplementary Note 5 for details). Thus, we exclude such approaches from our comparisons here.

Quantum measurement costs

In contrast to classical mean-field simulations, on a quantum computer, all observables must be sampled from the quantum simulation. There are a variety of techniques for doing this, with the optimal choice depending on the target precision in the estimated observable as well as the number and type of observables one wishes to measure. For example, when measuring W unit norm observables to precision ϵ one could use algorithms introduced in ref. 61 which require \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(\sqrt{W}/\epsilon )\) state preparations and \({{{{{{{\mathcal{O}}}}}}}}(W\log (1/\epsilon ))\) ancillae. Thus, to measure all \(W={{{{{{{\mathcal{O}}}}}}}}({N}^{2})\) elements of the 1-RDM to a fixed additive error in each element, this approach would require \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(N/\epsilon )\) circuit repetitions. While scaling optimally in ϵ for quantum algorithms, this linear scaling in N would decrease the speedup over classical mean-field algorithms.

Here we introduce a new classical shadows protocol for measuring the 1-RDM. Classical shadows were introduced in ref. 62 and adapted for second-quantized fermionic systems in refs. 63,64,65,66. Our approach is to apply a separate random Clifford channel to each of the η different \(\log N\) sized registers representing an occupied orbital. Applying a random Clifford on \(\log N\) qubits requires \({{{{{{{\mathcal{O}}}}}}}}({\log }^{2}\,N)\) gates; thus, \({{{{{{{\mathcal{O}}}}}}}}(\eta {\log }^{2}\,N)\) gates comprise the full channel (a negligible cost relative to time-evolution). In Supplementary Note 6, we prove that repeating this procedure \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(\eta /{\epsilon }^{2})\) times enables the estimation of all 1-RDM elements to within additive error ϵ. We also prove that this same procedure allows for estimating all higher-order k-particle RDMs elements with \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}({k}^{k}{\eta }^{k}/{\epsilon }^{2})\) circuit repetitions. In the next section and in Supplementary Note 7, we describe a way to map second-quantized representations to first quantization, effectively extending the applicability of these classical shadows techniques to second quantization as well.

To give some intuition for how this works, we consider the 1-RDM elements in first quantization:

where the subscript j indicates which of the η registers the orbital-ν to orbital-μ transition operator acts upon. Due to the antisymmetry of the occupied orbital registers in first quantization, we could also obtain the 1-RDM by measuring the expectation value of an operator such as \(\eta \left|p\right\rangle \,\,{\left\langle q\right|}_{1}\), which acts on just one of the η registers. Because \(\eta \left|p\right\rangle \,\,{\left\langle q\right|}_{1}\) has the Hilbert–Schmidt norm of \({{{{{{{\mathcal{O}}}}}}}}(\eta )\), the standard classical shadows procedure applied to this \(\log N\) sized register would require \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}({\eta }^{2}/{\epsilon }^{2})\) repetitions. But we can parallelize the procedure by also collecting classical shadows on the other η − 1 registers simultaneously. One way of interpreting the results we prove in Supplementary Note 6 is that, due to antisymmetry, these registers are anticorrelated. As a result, collecting shadows on all η registers simultaneously reduces the overall cost by at least a factor of η. To obtain W elements of the 1-RDM, one will need to perform an offline classical inversion of the Clifford channel that will scale as \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(W{\eta }^{2}/{\epsilon }^{2})\); of course, any quantum or classical algorithm for estimating W quantities must have gate complexity of at least W. However, this only needs to be done once and does not scale in t. As a comparison, the cost of computing 1-RDM classically without exploiting sparsity is \({{{{{{{\mathcal{O}}}}}}}}(W\eta )\).

When simulating systems that are well described by mean-field theory, all observables can be efficiently obtained from the time-dependent 1-RDM. However, for observables such as the energy that have a norm growing in system size or basis size, targeting fixed additive error in the 1-RDM elements will not be sufficient for fixed additive error in the observable. In such situations, it could be preferable to estimate the observable of interest directly using a combination of block encodings55 and amplitude amplification67 (see e.g., ref. 68). Assuming the cost of block encoding the observable is negligible to the cost of time-evolution (true for many observables, including energy), this results in needing \({{{{{{{\mathcal{O}}}}}}}}(\lambda /\epsilon )\) circuit repetitions, where λ is the 1-norm associated with the block encoding of the observable. For example, whereas there are many correlation functions with \(\lambda={{{{{{{\mathcal{O}}}}}}}}(1)\), for the energy \(\lambda={{{{{{{\mathcal{O}}}}}}}}({N}^{1/3}{\eta }^{5/3}+{N}^{2/3}{\eta }^{1/3})\)51. Multiplying that to the cost of quantum time-evolution further reduces the quantum speedup.

The final measurement cost to consider is that of resolving observables in time. In some cases, e.g., when computing scattering cross sections or reaction rates, one might be satisfied measuring the state of the simulation at a single point in time t. However, in other situations, one might wish to simulate time-evolution up to a maximum duration of t, but sample quantities at L different points in time. Most quantum simulation methods that accomplish this goal scale as \({{{{{{{\mathcal{O}}}}}}}}(L)\) (\({{{{{{{\mathcal{O}}}}}}}}(Lt)\) in the case where the points are evenly spaced in time). However, the work of ref. 61 shows that this cost can be reduced to \({{{{{{{\mathcal{O}}}}}}}}(\sqrt{L}t)\), but with an additional additive space complexity of \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(L)\). Either way, this is another cost that plagues quantum but not classical algorithms.

Quantum state preparation costs

Initial state preparation can be as simple or as complex as the state that one desires to begin the simulation in. Since the focus of this paper is outperforming mean-field calculations, we will discuss the cost of preparing Slater determinants within first quantization. For example, one may wish to start in the Hartree–Fock state (the lowest energy Slater determinant). Classical approaches to computing the Hartree–Fock state scale as roughly \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(N{\eta }^{2})\) in practice23,24. This is a one-time additive classical cost that is not multiplied by the duration of time-evolution so it is likely subdominant to other costs.

Quantum algorithms for preparing Slater determinants have focused on the Givens rotation approach introduced in ref. 69 for second quantization. That algorithm requires \({{{{{{{\mathcal{O}}}}}}}}(N\eta )\) Givens rotation unitaries. Such unitaries can be implemented with \({{{{{{{\mathcal{O}}}}}}}}(\eta \log N)\) gates in first quantization53,70, hence combining that with the sequence of rotations called for in ref. 69 gives an approach to preparing Slater determinants in first quantization with \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(N{\eta }^{2})\) gates in total, a relatively high cost. Unlike the offline cost to compute the occupied orbital coefficients, this state preparation cost would be multiplied by the number of measurement repetitions.

Here, we develop a new algorithm to prepare arbitrary Slater determinants in first quantization with only \(\widetilde{{{{{{{{\mathcal{O}}}}}}}}}(N\eta )\) gates. The approach is to first generate a superposition of all of the configurations of occupied orbitals in the Slater determinant while making sure that electron registers holding the label of the occupied orbitals are always sorted within each configuration so that they are in ascending order. This is necessary because, without such structure (or guarantees of something similar), the next step (anti-symmetrization) could not be reversible. For this next step, we apply the anti-symmetrization procedure introduced in ref. 71, which requires only \({{{{{{{\mathcal{O}}}}}}}}(\eta \log \eta \log N)\) gates (a negligible additive cost). Note that if one did not need the property that the configurations were ordered by the electron register, then it would be relatively trivial to prepare an arbitrary Slater determinant as a product state of η different registers, each in an arbitrary superposition over \(\log N\) bits (e.g., using the brute-force state preparation of ref. 72).

A high-level description of how the superposition of ordered configurations comprising the Slater determinant is prepared now follows, with details given in Supplementary Note 7. The idea is to generate the Slater determinant in second quantization in an ancilla register using the Givens rotation approach of ref. 69, while mapping the second-quantized representation to a first-quantized representation one second-quantized qubit (orbital) at a time. One can get away with storing only η non-zero qubits (orbitals) at a time in the second-quantized representation because the Givens rotation algorithm gradually produces qubits that do not require further rotations. Whenever one produces a new qubit in the second-quantized representation that does not require further rotations, one can convert it to the first-quantized representation, which zeros that qubit. Thus, the procedure only requires \({{{{{{{\mathcal{O}}}}}}}}(\eta )\) ancilla qubits—a negligible additive space overhead. A total of \({{{{{{{\mathcal{O}}}}}}}}(N\eta \log N)\) gates are required because for each of \({{{{{{{\mathcal{O}}}}}}}}(N)\) steps one accesses all \({{{{{{{\mathcal{O}}}}}}}}(\eta \log N)\) qubits of the first-quantized representation. In Supplementary Note 7, we show the Toffoli complexity can be further reduced to \({{{{{{{\mathcal{O}}}}}}}}(N\eta )\) with some additional tricks.

Finally, we note that quantum algorithms can also perform finite-temperature simulation by sampling initial states from a thermal density matrix in each realization of the circuit. For example, if the system is in a regime that is well treated by mean-field theory, one can initialize the system in a Slater determinant that is sampled from the thermal Hartree–Fock state73. Since the output of quantum simulations already needs to be sampled this does not meaningfully increase the number of quantum repetitions required. Such an approach would also be viable classically (and would allow one to perform simulations that only ever treat η occupied orbitals despite having finite temperature) but would introduce a multiplicative \({{{{{{{\mathcal{O}}}}}}}}(1/{\epsilon }^{2})\) sampling cost. For either processor, there is the cost of classically computing the thermal Hartree–Fock state, but this is a one-time cost not multiplied by the duration of time-evolution or \({{{{{{{\mathcal{O}}}}}}}}(1/{\epsilon }^{2})\).

Discussion

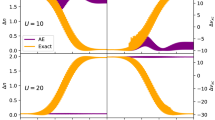

We have reviewed and analyzed costs associated with classical mean-field methods and state-of-the-art exact quantum algorithms for electron dynamics. We tightened bounds on Trotter-based first-quantized quantum simulations and introduced new and more efficient strategies for initializing Slater determinants in first quantization and for measuring RDMs via classical shadows. We compare these costs in Table 1. We plot the speedup of quantum algorithms relative to classical mean-field approaches when the goal is to sample the output of quantum dynamics at zero temperature in Fig. 1. We see that the best quantum algorithms deliver a seventh power speedup in particle number when N < Θ(η2), quartic in basis size when Θ(η2) < N < Θ(η3), super-quadratic in basis size when Θ(η3) < N < Θ(η4) and quintic in basis size but with a quadratic slowdown in η when N > Θ(η4). In extremal regimes of N < Θ(η5/4) and N > Θ(η4), the overall speedup in system size is super-quadratic (see Supplementary Note 5 for details). These are large enough speedups that quantum advantage may persist even despite quantum error-correction overhead74. Note that our analyses are based on derivable upper bounds for both classical and quantum algorithms over all possible input states. Tighter bounds derived over restricted inputs would give asymptotically fewer time steps required for both classical and quantum Trotter algorithms75.

Plot of the zero temperature dynamics quantum speedup ratio βC/βQ which assumes an Θ(ηα) relationship between problem size and basis size so that cost of the best exact quantum simulations of electron dynamics can be expressed as \(({\eta }^{{\beta }_{{{{{{{{\rm{Q}}}}}}}}}}t){(Nt/\epsilon )}^{o(1)}\) and the cost of the best classical mean-field algorithms for electron dynamics can be expressed as \(({\eta }^{{\beta }_{{{{{{{{\rm{C}}}}}}}}}}t){(Nt/\epsilon )}^{o(1)}\). These costs are compared under the same assumptions for sampling time-dynamics output as those mentioned in Table 1. See Supplementary Note 5 for more details.

The story becomes more nuanced when we wish to estimate ϵ-accurate quantities via sampling the quantum simulation output at L different time points. For observables with norm scaling as \({{{{{{{\mathcal{O}}}}}}}}(1)\) (e.g., simple correlation functions or single RDM elements), or those pertaining to amplitudes of the state (e.g., scattering amplitudes or reaction rates), the scaling advantages in system and basis size are maintained but at the cost of the quantum algorithm slowing down by a multiplicative factor of at least \({{{{{{{\mathcal{O}}}}}}}}(\sqrt{L}/\epsilon )\). When targeting the 1-RDM (which characterizes all observables within mean-field theory) we maintain speedup in N but at the cost of an additional linear slowdown in η. When measuring the total energy, the overall speedup becomes tenuous. Thus, the viability of quantum advantage with respect to zero-temperature classical mean-field methods depends sensitively on the target precision and particular observables of interest.

In terms of applications, we expect RT-TDHF to provide qualitatively correct dynamics whenever electron correlation effects are not pronounced. RT-TDDFT includes some aspects of electron correlation but the adiabatic approximation often creates issues76 and the method suffers from self-interaction error77. When the adiabatic approximation is accurate, self-interaction error is not pronounced, and the system does not exhibit a strong correlation, we expect RT-TDDFT to generate qualitatively correct dynamics. When there are many excited states to consider for spectral properties, it is often beneficial to resort to real-time dynamics methods instead of linear-response methods. Furthermore, we are often interested in real-time non-equilibrium electronic dynamics. This is the case for photo-excited molecules near metal surfaces78. The time evolution of electron density (i.e., the diagonal of the 1-RDM) near the molecule is of particular interest due to its implications for chemical reactivity and kinetics in the context of heterogeneous catalysis79. In this application, the simulation of nuclear degrees of freedom may be equally important, which we will leave for future analysis.

We see from Table 1 that prospects for quantum advantage are considerably increased at finite temperatures. Thus, a promising class of problems to consider for speedup over mean-field methods is the electronic dynamics of either warm dense matter (WDM)80,81,82,83 or hot dense matter (HDM)84. The WDM regime (where thermal energy is comparable to the Fermi energy) is typified by temperatures and densities that require the accurate treatment of both quantum and thermal effects85,86. These conditions occur in planetary interiors, experiments involving high-intensity lasers, and inertial confinement fusion experiments as the ablator and fuel are compressed into the conditions necessary for thermonuclear ignition. Ignition occurs in the hot dense matter (HDM) regime (where thermal energy far exceeds the Fermi energy). While certain aspects of these systems are conspicuously classical, they represent a regime that can be challenging to model, particularly the opacity of matter in stellar atmospheres87,88. Such astrophysical applications often require spectroscopic accuracy which is orders of magnitude more precise than chemical accuracy and necessitates a high ratio of N to η (the regime where the speedup of quantum algorithms relative to classical mean-field is most pronounced).

Another interesting context arises due to the conditions in which WDM is created in a laboratory. High-intensity ultrafast lasers or charged particle beams incident on condensed phase samples can be used to create these conditions on femtosecond time scales and the associated strong excitation and subsequent relaxation are well beyond the capabilities of mean-field methods89. Classical algorithms for HDM typically rely on an average atom description of the system in which the entire electronic structure is reduced to that of a single atom self-consistently embedded in a plasma90,91,92,93. While the level of theory applied to this atom can be sophisticated, the larger-scale structure of such a plasma is treated at a mean-field level. Identifying suitable observables for both of these regimes remains ongoing work.

Simulations in either the WDM or HDM regime typically rely on large plane wave basis sets and the inclusion of 10–100 times more partially occupied orbitals per atom than would be required at lower temperatures. Often, the attendant costs are so great that it is impractical to implement RT-TDDFT with hybrid functionals. Therefore, many calculations necessarily use adiabatic semi-local approximations, even on large classical high-performance computing systems80. Thus, the level of practically achievable accuracy can be quite low, and the prospect of exactly simulating the dynamics on a quantum computer is particularly compelling.

Although we have focused on assessing quantum speedup over mean-field theory, we view the main contribution of this work as more general. In particular, if exact quantum simulations are sometimes more efficient than classical mean-field methods, then all levels of theory in between mean-field and exact diagonalization are in scope for possible quantum advantage. Targeting systems that require more correlated calculations narrows the application space but improves prospects for quantum advantage due to the unfavorable scaling of the requisite classical algorithms. Thus, it may turn out that the domain of systems requiring, say, coupled cluster dynamics94,95,96,97, might be an even more ideal regime for practical quantum advantage, striking a balance in the trade-off between the breadth of possible applications and the cost of the classical competition.

Data availability

The authors declare that the data supporting the findings of this study are available within the paper.

Code availability

The source code for generating Fig. 1 is available from the corresponding authors upon request.

References

Feynman, R. P. Simulating physics with computers. Int. J. Theor. Phys. 21, 467–488 (1982).

Lloyd, S. Universal quantum simulators. Science 273, 1073–1078 (1996).

Bartlett, R. J. & Musial, M. Coupled-cluster theory in quantum chemistry. Rev. Mod. Phys. 79, 291–352 (2007).

Mardirossian, N. & Head-Gordon, M. Thirty years of density functional theory in computational chemistry: an overview and extensive assessment of 200 density functionals. Mol. Phys. 115, 2315–2372 (2017).

Lee, J., Pham, H. Q. & Reichman, D. R. Twenty years of auxiliary-field quantum Monte Carlo in quantum chemistry: an overview and assessment on main group chemistry and bond-breaking. J. Chem. Theory Comput. 18, 7024–7042 (2022).

Lee, S. et al. Evaluating the evidence for exponential quantum advantage in ground-state quantum chemistry. Nat. Commun. 14, 1952 (2023).

Reiher, M., Wiebe, N., Svore, K. M., Wecker, D. & Troyer, M. Elucidating reaction mechanisms on quantum computers. Proc. Natl Acad. Sci. USA 114, 7555–7560 (2017).

Li, Z., Li, J., Dattani, N. S., Umrigar, C. J. & Chan, G. K.-L. The electronic complexity of the ground-state of the FeMo cofactor of nitrogenase as relevant to quantum simulations. J. Chem. Phys. 150, 024302 (2019).

Berry, D., Gidney, C., Motta, M., McClean, J. & Babbush, R. Qubitization of arbitrary basis quantum chemistry leveraging sparsity and low rank factorization. Quantum 3, 208 (2019).

von Burg, V. et al. Quantum computing enhanced computational catalysis. Phys. Rev. Res. 3, 033055–033071 (2021).

Lee, J. et al. Even more efficient quantum computations of chemistry through tensor hypercontraction. PRX Quantum 2, 030305 (2021).

Goings, J. J. et al. Reliably assessing the electronic structure of cytochrome P450 on today’s classical computers and tomorrow’s quantum computers. Proc. Natl Acad. Sci. USA 119, e2203533119 (2022).

Elfving, V. E. et al. How will quantum computers provide an industrially relevant computational advantage in quantum chemistry? Preprint at http://arxiv.org/abs/2009.12472 (2020).

Babbush, R. et al. Low-depth quantum simulation of materials. Phys. Rev. X 8, 011044 (2018).

Babbush, R. et al. Encoding electronic spectra in quantum circuits with linear T complexity. Phys. Rev. X 8, 041015 (2018).

Kivlichan, I. D. et al. Improved fault-tolerant quantum simulation of condensed-phase correlated electrons via Trotterization. Quantum 4, 296 (2020).

McArdle, S., Campbell, E. & Su, Y. Exploiting fermion number in factorized decompositions of the electronic structure Hamiltonian. Phys. Rev. A 105, 012403 (2022).

Somma, R. D. Quantum simulations of one dimensional quantum systems. Preprint at https://arxiv.org/abs/1503.06319 (2015).

Geller, M. R. et al. Universal quantum simulation with prethreshold superconducting qubits: single-excitation subspace method. Preprint at http://arxiv.org/abs/1505.04990 (2015).

Dreuw, A. & Head-Gordon, M. Single-reference ab initio methods for the calculation of excited states of large molecules. Chem. Rev. 105, 4009–4037 (2005).

Runge, E. & Gross, E. K. Density-functional theory for time-dependent systems. Phys. Rev. Lett. 52, 997 (1984).

Van Leeuwen, R. Mapping from densities to potentials in time-dependent density-functional theory. Phys. Rev. Lett. 82, 3863 (1999).

Manzer, S., Horn, P. R., Mardirossian, N. & Head-Gordon, M. Fast, accurate evaluation of exact exchange: the occ-RI-K algorithm. J. Chem. Phys. 143, 024113 (2015).

Lin, L. Adaptively compressed exchange operator. J. Chem. Theory Comput. 12, 2242–2249 (2016).

Jia, W. & Lin, L. Fast real-time time-dependent hybrid functional calculations with the parallel transport gauge and the adaptively compressed exchange formulation. Comput. Phys. Commun. 240, 21–29 (2019).

Jia, W. & Lin, L. Fast real-time time-dependent hybrid functional calculations with the parallel transport gauge and the adaptively compressed exchange formulation. Comput. Phys. Commun. 240, 21–29 (2019).

Prodan, E. & Kohn, W. Nearsightedness of electronic matter. Proc. Natl Acad. Sci. USA 102, 11635–11638 (2005).

Kussmann, J., Beer, M. & Ochsenfeld, C. Linear-scaling self-consistent field methods for large molecules. WIREs Comput. Mol. Sci. 3, 614–636 (2013).

O’Rourke, C. & Bowler, D. R. Linear scaling density matrix real time TDDFT: propagator unitarity and matrix truncation. J. Chem. Phys. 143, 102801 (2015).

Zuehlsdorff, T. J. et al. Linear-scaling time-dependent density-functional theory in the linear response formalism. J. Chem. Phys. 139, 064104 (2013).

Khoromskaia, V., Khoromskij, B. & Schneider, R. QTT representation of the Hartree and exchange operators in electronic structure calculations. Comput. Methods Appl. Math. 11, 327–341 (2011).

Castro, A., Marques, M. A. & Rubio, A. Propagators for the time-dependent Kohn–Sham equations. J. Chem. Phys. 121, 3425–3433 (2004).

Jia, W., An, D., Wang, L.-W. & Lin, L. Fast real-time time-dependent density functional theory calculations with the parallel transport gauge. J. Chem. Theory Comput. 14, 5645–5652 (2018).

Kononov, A. et al. Electron dynamics in extended systems within real-time time-dependent density-functional theory. MRS Commun. 12, 1002–1014 (2022).

Shepard, C., Zhou, R., Yost, D. C., Yao, Y. & Kanai, Y. Simulating electronic excitation and dynamics with real-time propagation approach to TDDFT within plane-wave pseudopotential formulation. J. Chem. Phys. 155, 100901 (2021).

Shavitt, I. & Bartlett, R. J. Many-Body Methods in Chemistry and Physics: MBPT and Coupled-Cluster Theory (Cambridge University Press, 2009).

Wiesner, S. Simulations of many-body quantum systems by a quantum computer. Preprint at https://arxiv.org/abs/quant-ph/9603028 (1996).

Abrams, D. S. & Lloyd, S. Simulation of many-body Fermi systems on a universal quantum computer. Phys. Rev. Lett. 79, 2586 (1997).

Zalka, C. Efficient simulation of quantum systems by quantum computers. Fortschr. Phys. 46, 877–879 (1998).

Boghosian, B. M. & Taylor, W. Simulating quantum mechanics on a quantum computer. Phys. D Nonlinear Phenom. 120, 30–42 (1998).

Lidar, D. A. & Wang, H. Calculating the thermal rate constant with exponential speedup on a quantum computer. Phys. Rev. E 59, 2429–2438 (1999).

Kassal, I., Jordan, S. P., Love, P. J., Mohseni, M. & Aspuru-Guzik, A. Polynomial-time quantum algorithm for the simulation of chemical dynamics. Proc. Natl Acad. Sci. USA 105, 18681–18686 (2008).

Childs, A. & Su, Y. Nearly optimal lattice simulation by product formulas. Phys. Rev. Lett. 123, 050503 (2019).

Su, Y., Huang, H.-Y. & Campbell, E. T. Nearly tight Trotterization of interacting electrons. Quantum 5, 495 (2021).

Low, G. H., Su, Y., Tong, Y. & Tran, M. C. Complexity of Implementing Trotter Steps. PRX Quantum 4, 020323 (2023).

Cody Jones, N. et al. Faster quantum chemistry simulation on fault-tolerant quantum computers. N. J. Phys. 14, 115023 (2012).

Poulin, D., Hastings, M. B., Doherty, A. C. & Troyer, M. The Trotter step size required for accurate quantum simulation of quantum chemistry. Quantum Inf. Comput. 15, 361–384 (2015).

Babbush, R., McClean, J., Wecker, D., Aspuru-Guzik, A. & Wiebe, N. Chemical basis of Trotter-Suzuki errors in chemistry simulation. Phys. Rev. A 91, 022311 (2015).

Childs, A. M., Maslov, D., Nam, Y., Ross, N. J. & Su, Y. Toward the first quantum simulation with quantum speedup. Proc. Natl Acad. Sci. USA 115, 9456–9461 (2018).

Chan, H. H. S., Meister, R., Jones, T., Tew, D. P. & Benjamin, S. C. Grid-based methods for chemistry simulations on a quantum computer. Sci. Adv. 9, eabo7484 (2023).

Babbush, R., Berry, D. W., McClean, J. R. & Neven, H. Quantum simulation of chemistry with sublinear scaling in basis size. NPJ Quantum Inf. 5, 92 (2019).

Low, G. H. & Wiebe, N. Hamiltonian simulation in the interaction picture. Preprint at http://arxiv.org/abs/1805.00675 (2018).

Su, Y., Berry, D., Wiebe, N., Rubin, N. & Babbush, R. Fault-tolerant quantum simulations of chemistry in first quantization. PRX Quantum 4, 040332 (2021).

Aspuru-Guzik, A., Dutoi, A. D., Love, P. J. & Head-Gordon, M. Simulated quantum computation of molecular energies. Science 309, 1704 (2005).

Low, G. H. & Chuang, I. L. Hamiltonian simulation by qubitization. Quantum 3, 163 (2019).

Rokhlin, V. Rapid solution of integral equations of classical potential theory. J. Comput. Phys. 60, 187–207 (1985).

Barnes, J. & Hut, P. A hierarchical O(N log N) force-calculation algorithm. Nature 324, 446–449 (1986).

Darden, T., York, D. & Pedersen, L. Particle mesh Ewald: an N log N method for Ewald sums in large systems. J. Chem. Phys. 98, 10089–10092 (1993).

Childs, A. M., Leng, J., Li, T., Liu, J.-P. & Zhang, C. Quantum simulation of real-space dynamics. Quantum 6, 860 (2022).

Giovannetti, V., Lloyd, S. & Maccone, L. Quantum random access memory. Phys. Rev. Lett. 100, 160501 (2008).

Huggins, W. J. et al. Nearly optimal quantum algorithm for estimating multiple expectation values. Phys. Rev. Lett. 129, 240501 (2022).

Huang, H.-Y., Kueng, R. & Preskill, J. Predicting many properties of a quantum system from very few measurements. Nat. Phys. 16, 1050–1057 (2020).

Zhao, A., Rubin, N. C. & Miyake, A. Fermionic partial tomography via classical shadows. Phys. Rev. Lett. 127, 110504 (2021).

Wan, K., Huggins, W. J., Lee, J. & Babbush, R. Matchgate shadows for fermionic quantum simulation. Preprint at http://arxiv.org/abs/2207.13723 (2022).

O’Gorman, B. Fermionic tomography and learning. Preprint at http://arxiv.org/abs/2207.14787 (2022).

Low, G. H. Classical shadows of fermions with particle number symmetry. Preprint at https://arxiv.org/abs/2208.08964 (2022).

Brassard, G., Høyer, P., Mosca, M. & Tapp, A. In Quantum Computation and Information (eds Lomonaco, S. J. & Brandt, H. E.) Ch. 3, 53–74 (American Mathematical Society, 2002).

Rall, P. Quantum algorithms for estimating physical quantities using block encodings. Phys. Rev. A 102, 022408 (2020).

Kivlichan, I. et al. Quantum simulation of electronic structure with linear depth and connectivity. Phys. Rev. Lett. 120, 110501 (2018).

Delgado, A. et al. Simulating key properties of lithium-ion batteries with a fault-tolerant quantum computer. Phys. Rev. A 106, 032428 (2022).

Berry, D. W. et al. Improved techniques for preparing eigenstates of fermionic Hamiltonians. NPJ Quantum Inf. 4, 22 (2018).

Shende, V. V., Bullock, S. S. & Markov, I. L. Synthesis of quantum-logic circuits. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 25, 1000–1010 (2006).

Mermin, N. D. Stability of the thermal Hartree-Fock approximation. Ann. Phys. 21, 99–121 (1963).

Babbush, R. et al. Focus beyond quadratic speedups for error-corrected quantum advantage. PRX Quantum 2, 010103 (2021).

An, D., Fang, D. & Lin, L. Time-dependent unbounded Hamiltonian simulation with vector norm scaling. Quantum 5, 459 (2021).

Provorse, M. R. & Isborn, C. M. Electron dynamics with real-time time-dependent density functional theory. Int. J. Quantum Chem. 116, 739–749 (2016).

Cohen, A. J., Mori-Sa’nchez, P. & Yang, W. Insights into current limitations of density functional theory. Science 321, 792–794 (2008).

Tully, J. C. Chemical dynamics at metal surfaces. Annu. Rev. Phys. Chem. 51, 153–178 (2000).

Wang, R., Hou, D. & Zheng, X. Time-dependent density-functional theory for real-time electronic dynamics on material surfaces. Phys. Rev. B 88, 205126 (2013).

Baczewski, A. D., Shulenburger, L., Desjarlais, M., Hansen, S. & Magyar, R. X-ray Thomson scattering in warm dense matter without the chihara decomposition. Phys. Rev. Lett. 116, 115004 (2016).

Magyar, R. J., Shulenburger, L. & Baczewski, A. Stopping of deuterium in warm dense deuterium from Ehrenfest time-dependent density functional theory. Contrib. Plasma Phys. 56, 459–466 (2016).

Andrade, X., Hamel, S. & Correa, A. A. Negative differential conductivity in liquid aluminum from real-time quantum simulations. Eur. Phys. J. B 91, 1–7 (2018).

Ding, Y., White, A. J., Hu, S., Certik, O. & Collins, L. A. Ab initio studies on the stopping power of warm dense matter with time-dependent orbital-free density functional theory. Phys. Rev. Lett. 121, 145001 (2018).

Atzeni, S. & Meyer-ter Vehn, J. The Physics of Inertial Fusion: Beam Plasma Interaction, Hydrodynamics, Hot Dense Matter, Vol. 125 (Oxford University Press, 2004).

Graziani, F., Desjarlais, M. P., Redmer, R. & Trickey, S. B. Frontiers and Challenges in Warm Dense Matter, Vol. 96 (Springer, 2014).

Dornheim, T., Groth, S. & Bonitz, M. The uniform electron gas at warm dense matter conditions. Phys. Rep. 744, 1–86 (2018).

Bailey, J. E. et al. A higher-than-predicted measurement of iron opacity at solar interior temperatures. Nature 517, 56–59 (2015).

Nagayama, T. et al. Systematic study of l-shell opacity at stellar interior temperatures. Phys. Rev. Lett. 122, 235001 (2019).

Ralchenko, Y. Modern Methods in Collisional-Radiative Modeling of Plasmas, Vol. 90 (Springer, 2016).

Rozsnyai, B. F. Spectral lines in hot dense matter. J. Quant. Spectrosc. Radiat. Transf. 17, 77–88 (1977).

Starrett, C. et al. Average atom transport properties for pure and mixed species in the hot and warm dense matter regimes. Phys. Plasmas 19, 102709 (2012).

Starrett, C. & Saumon, D. Fully variational average atom model with ion-ion correlations. Phys. Rev. E 85, 026403 (2012).

Starrett, C. & Saumon, D. Electronic and ionic structures of warm and hot dense matter. Phys. Rev. E 87, 013104 (2013).

Huber, C. & Klamroth, T. Explicitly time-dependent coupled cluster singles doubles calculations of laser-driven many-electron dynamics. J. Chem. Phys. 134, 054113 (2011).

Sato, T., Pathak, H., Orimo, Y. & Ishikawa, K. L. Communication: Time-dependent optimized coupled-cluster method for multielectron dynamics. J. Chem. Phys. 148, 051101 (2018).

Shushkov, P. & Miller, T. F. Real-time density-matrix coupled-cluster approach for closed and open systems at finite temperature. J. Chem. Phys. 151, 134107 (2019).

White, A. F. & Chan, G. K.-L. Time-dependent coupled cluster theory on the Keldysh contour for nonequilibrium systems. J. Chem. Theory Comput. 15, 6137–6153 (2019).

Acknowledgements

The authors thank Alina Kononov, Garnet Kin-Lic Chan, Robin Kothari, Alicia Magann, Fionn Malone, Jarrod McClean, Thomas O’Brien, Nicholas Rubin, Henry Schurkus, Rolando Somma, and Yuan Su for helpful discussions. We thank Lin Lin for bringing our attention to the quantized tensor train format in ref. 31 and thank Yuehaw Khoo for a discussion related to this. D.W.B. worked on this project under a sponsored research agreement with Google Quantum AI. D.W.B. is supported by Australian Research Council Discovery Projects DP190102633 and DP210101367. A.D.B. acknowledges support from the Advanced Simulation and Computing Program and the Sandia LDRD Program. Some work on this project occurred while in residence at The Kavli Institute for Theoretical Physics, supported in part by the National Science Foundation under Grant No. NSF PHY-1748958. Sandia National Laboratories is a multi-mission laboratory managed and operated by National Technology and Engineering Solutions of Sandia, LLC, a wholly owned subsidiary of Honeywell International, Inc., for DOE’s National Nuclear Security Administration under contract DE-NA0003525.

Author information

Authors and Affiliations

Contributions

R.B. and J.L. conceived the original study, managed the overall collaboration, and wrote the first draft of the manuscript. All authors contributed to analyzing classical and quantum algorithms with significant contributions made by W.J.H. for Appendix F with help from A.Z., D.W.B., J.L., and S.F.U. for Appendix A, and R.B. and D.W.B. for other sections in Appendices. D.R.R., A.D.B., and H.N. discussed the results of the manuscript and contributed to the writing of the paper.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Ryan MacDonell, Jin-Peng Liu, and Tongyang Li for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Babbush, R., Huggins, W.J., Berry, D.W. et al. Quantum simulation of exact electron dynamics can be more efficient than classical mean-field methods. Nat Commun 14, 4058 (2023). https://doi.org/10.1038/s41467-023-39024-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-023-39024-0

This article is cited by

-

Drug design on quantum computers

Nature Physics (2024)

-

Trajectory sampling and finite-size effects in first-principles stopping power calculations

npj Computational Materials (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.