Abstract

Study design

A psychometrics study.

Objectives

To determine the intra- and inter-observer reliability of the Allen Ferguson (AF) system and the subaxial injury classification and severity scale (SLIC), two classification systems for subaxial cervical spine injury (SACI).

Setting

Online multinational study.

Methods

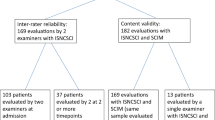

Clinico-radiological data of 34 randomly selected patients with traumatic SACI were distributed as PowerPoint presentations to 13 spine surgeons of the Spine Trauma Study Group of ISCoS from seven different institutions. They were asked to classify the patients using the AF and SLIC systems. A reference guide to the two systems had been mailed to them earlier. After 6 weeks, the same cases were re-presented to them in a different order for classification using both systems. Intra- and inter-observer reliability scores were calculated and analysed with the Fleiss kappa coefficient (κ value) for both systems and the intraclass correlation coefficient (ICC) for SLIC.

Results

The Allen Ferguson system displayed uniformly moderate inter- and intra-observer reliability. SLIC showed slight to fair inter-observer reliability and fair to substantial intra-observer reliability. The AF mechanistic types showed better inter-observer reliability than the SLIC morphological types. Within SLIC, the total SLIC score had the lowest inter-observer agreement and SLIC neurology had the highest intra-observer agreement.

Conclusion

This first external reliability study shows better reliability for AF than for the SLIC system. Among the SLIC variables, the DLC status and the total SLIC score had the least agreement. The low reliability highlights the need to improve the existing classification systems or to develop newer ones that address their limitations.

Introduction

Surgeons need a common language to document a fracture, grade the injury, decide the treatment for a particular injury pattern, determine prognosis and conduct research [1]. In practice, this common language is provided by a classification system. A classification system should work like a simple algorithm, based on clinico-radiological characteristics, that allows any injury to be identified. The need for a clinically reliable, accurate and appropriate classification system is well documented [2]. Mirza et al. have proposed what should be expected from an ideal classification system for spinal injury [3].

Many classification systems have been proposed for subaxial cervical spine injury (SACI), based on parameters such as injury morphology, injury mechanism, the anatomical structures responsible for fracture stability, and neurological status [4,5,6,7]. Each new classification system claims to be better than the existing ones [7, 8]. However, these systems are still far from ideal: each lacks one or more essential characteristics and, hence, none has gained universal acceptance [2, 3, 9]. The earliest classifications were elementary and were based on the injury mechanism presumed from plain radiographs. They did not take into account the underlying injury to ligamentous structures or the neurological status [4,5,6]. The more recent ones are more comprehensive and promising, but cumbersome to apply in day-to-day practice, if not impractical [9,10,11,12]. The commonly used SACI classifications lack a rigorous scientific basis and rest on the insights of the experienced surgeons and researchers who formulated them [2]. The literature has revealed intrinsic limitations even in the most recent classification systems [10].

The lead authors conducted a questionnaire survey of experts across the globe to ask whether the existing spine trauma classification systems for thoracolumbar injuries and SACI met their requirements and were practically feasible in their settings. In that survey, for SACI, 38% of the experts used AF, 35% used SLIC and 5% used the Cervical Spine Injury Severity Score [9].

A key component of any classification system is its inter-observer and intra-observer reliability. A classification system must be reliable to be universally accepted [8]. Only two studies to date have compared the reliability of the AF and subaxial injury classification and severity scale (SLIC) systems, and both involved at least one of the authors who developed the classification systems [7, 8]. The internal validation studies showed moderate agreement on SLIC, but an external validation study yielded poor agreement [12]. The internal validation was done by the same authors who devised the classification, which could have introduced bias.

To our knowledge, this is the first external reliability study comparing AF and SLIC, two of the most commonly used classification systems for SACI, that is, the first conducted without the involvement of any of the authors who developed either system. The objective of this study is to report and analyse the inter- and intra-observer reliability of AF and SLIC.

Methods

The study was undertaken in collaboration with a cohort of experienced spine surgeons (n = 13) of the Spine Trauma Study Group of the International Spinal Cord Society, from 7 institutions across 4 countries. The Institutional Ethics Committee approved the study without the need to obtain consent from the patients. A quick reference guide, including a review of the literature and details of various classification systems, especially AF and SLIC, was created and distributed to each reviewer. Clinico-radiological information of 34 randomly selected patients who had been admitted to a single institution with a diagnosis of traumatic SACI was collected from the hospital's database. Clinical data included the age and sex of the patient, mode of injury, injury level, associated non-spinal injuries and neurological information. The neurological information included motor and sensory scores, perianal sensation and voluntary anal contraction, assessed using the International Standards for Neurological Classification of Spinal Cord Injury criteria. A new identity number was assigned to each patient. The patients' radiological data were anonymised in the DICOM (Digital Imaging and Communications in Medicine) format. Images of the radiological data, along with the clinical data, were shared with the participants as a PowerPoint presentation through Dropbox. The clinical information shared did not include any personal identifiers, so all the shared data were anonymised and delinked. Only the Principal Investigator (PI) held the coding key that could identify the patients. Confidential handling of the data by the PI was ensured as per NIH (National Institutes of Health) good clinical practice guidelines. The experts were asked to classify each case using the AF and SLIC systems and to return the scoring sheets by e-mail.
For intra-observer reliability, the same cases were re-presented to the experts 6 weeks later in a different order to avoid recall bias, with a request to classify them once again using the two systems. Experts were blinded to the results of the first assessment. The first and final scoring sheets of the experts were collected and compiled for statistical analysis.

Statistical analysis

Inter-observer and intra-observer reliability for AF were calculated using Fleiss kappa. Inter-observer and intra-observer reliability for the individual components of SLIC were determined with both Fleiss kappa and the intraclass correlation coefficient (ICC). An ICC > 0.75 was taken as excellent, 0.4–0.75 as moderate and < 0.4 as poor reliability [13,14,15]. Agreement based on the kappa score was interpreted using the Landis and Koch criteria [15]. All statistics were computed using SPSS, version 13.0 (SPSS Inc., Chicago, IL).
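As an illustration of the kappa statistic described above, the following is a minimal Python sketch of Fleiss kappa for several raters assigning nominal categories, followed by a label lookup. It is not the SPSS procedure used in the study. The function names, the toy ratings and the input layout (one list of labels per case) are assumptions made for the example, and the cut-offs in `landis_koch` are the bands commonly attributed to Landis and Koch, which the text cites but does not list.

```python
from collections import Counter


def fleiss_kappa(ratings):
    """Fleiss kappa for a list of cases, each a list of labels (one per rater).

    Assumes every case was rated by the same number of raters (at least two).
    """
    n_cases = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(row) != n_raters for row in ratings):
        raise ValueError("each case needs the same number (>= 2) of ratings")

    categories = sorted({label for row in ratings for label in row})
    counts = [Counter(row) for row in ratings]

    # Observed agreement per case: proportion of agreeing rater pairs.
    per_case = [
        (sum(c[cat] ** 2 for cat in categories) - n_raters)
        / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_observed = sum(per_case) / n_cases

    # Chance agreement from the overall share of each category.
    shares = [sum(c[cat] for c in counts) / (n_cases * n_raters) for cat in categories]
    p_expected = sum(s ** 2 for s in shares)

    if p_expected == 1.0:
        return float("nan")  # only one category used: kappa is undefined
    return (p_observed - p_expected) / (1.0 - p_expected)


def landis_koch(kappa):
    """Map a kappa value to the commonly cited Landis and Koch label."""
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


# Hypothetical toy data: 3 cases, 2 raters, categories "A" and "B".
toy = [["A", "A"], ["A", "B"], ["B", "B"]]
k = fleiss_kappa(toy)
print(round(k, 3), landis_koch(k))
```

In this layout, inter-observer agreement would treat the raters' first-round labels for each case as one row, whereas intra-observer agreement would pair each rater's first- and second-round labels; how the study pooled raters for the intra-observer figure is not stated, so that step is left out of the sketch.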

Results

Only 10 of the original 13 experts returned scoring sheets for the second round. The inter-observer and intra-observer kappa scores for the AF classification were 0.42 and 0.55, respectively. SLIC morphology inter-observer and intra-observer kappa scores were 0.21 and 0.41, respectively, with ICC scores of 0.32 and 0.33, respectively. SLIC DLC status inter-observer and intra-observer kappa scores were 0.21 and 0.39, respectively, with ICC scores of 0.32 and 0.59, respectively. Similarly, SLIC neurology inter-observer and intra-observer kappa scores were 0.28 and 0.65, respectively, with ICC scores of 0.46 and 0.78, respectively. SLIC total inter-observer and intra-observer kappa scores were 0.15 and 0.23, respectively, with ICC scores of 0.41 and 0.16, respectively (Table 1). The kappa values were interpreted using the Landis and Koch criteria. The SLIC scale showed variable agreement, ranging from slight to substantial, whereas AF displayed uniformly moderate inter- and intra-observer agreement. The overall reliability of AF was better than that of SLIC. Intra-observer reliability was higher than inter-observer reliability for SLIC. The AF mechanistic types showed better reliability than the SLIC morphological types. Within SLIC, the total SLIC score had the lowest inter-observer agreement and SLIC neurology had the highest intra-observer agreement (Table 2).
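The interpretation of these scores can be reproduced with a short Python sketch that maps each reported value to a label. The kappa and ICC values are those reported above and the ICC thresholds are those given under Statistical analysis. The Landis and Koch cut-offs are the commonly cited bands and are an assumption here, since the text names the criteria without listing them; the dictionary and function names are likewise hypothetical.

```python
# Reported scores as (inter-observer, intra-observer) pairs.
KAPPA = {
    "AF": (0.42, 0.55),
    "SLIC morphology": (0.21, 0.41),
    "SLIC DLC status": (0.21, 0.39),
    "SLIC neurology": (0.28, 0.65),
    "SLIC total": (0.15, 0.23),
}
ICC = {
    "SLIC morphology": (0.32, 0.33),
    "SLIC DLC status": (0.32, 0.59),
    "SLIC neurology": (0.46, 0.78),
    "SLIC total": (0.41, 0.16),
}


def landis_koch(kappa):
    """Commonly cited Landis and Koch bands (assumed cut-offs)."""
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


def icc_band(icc):
    """ICC thresholds as stated in the Statistical analysis section."""
    if icc > 0.75:
        return "excellent"
    if icc >= 0.4:
        return "moderate"
    return "poor"


for name, (inter, intra) in KAPPA.items():
    line = f"{name}: kappa inter={landis_koch(inter)}, intra={landis_koch(intra)}"
    if name in ICC:
        icc_inter, icc_intra = ICC[name]
        line += f"; ICC inter={icc_band(icc_inter)}, intra={icc_band(icc_intra)}"
    print(line)
```

Under these bands, AF falls in the moderate range for both measures, while the SLIC kappa values span slight (total, inter-observer) to substantial (neurology, intra-observer), matching the summary in the text.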

Discussion

Classification systems improve communication between healthcare providers, aid treatment decision making and help in predicting prognosis [2]. They also provide a common language among medical professionals and thereby a tool for research [2, 3]. The same holds true for spine trauma classification systems. An ideal spine trauma classification system has to provide descriptive and prognostic information. Mirza et al. have highlighted the features an ideal classification system should have [3]. The main purposes of a classification system in spinal injuries are to grade severity based on radiological and clinical (neurological) characteristics, to identify the pathogenesis and mechanism of injury, to guide the choice of treatment and to predict the outcome (prognosis) [3]. A comprehensive spine trauma classification system must also be valid, reproducible (reliable), easy to use and readily applicable in clinical practice [2, 3]. Reliability is reproducibility by the same observer (intra-observer) and between observers (inter-observer) [13]. It can be measured statistically by kappa and ICC [13,14,15]. AF is a nominal system, so kappa was used to determine its reliability. SLIC and its components are on an ordinal scale with numerical scoring; hence, both ICC and kappa were used (Tables 3 and 4) [7, 8].

Several classification systems have been proposed for SACI. Holdsworth emphasised the significance of the posterior ligamentous complex and the zygapophyseal joint structure in his two-column theory of classification of thoracolumbar and cervical spine injuries [4]. His observations could not be verified or analysed, as the study was not conducted in a systematic manner [4, 16]. The AF system was formulated from a retrospective clinico-radiological analysis of closed indirect fractures and fracture-dislocations of the subaxial cervical spine, which suggested the probable mechanism of injury. That study, based on the radiological imaging of 165 patients with acute SACI, produced a classification system on the assumption that the injury vector and the posture of the cervical spine at the time of impact determine how kinetic energy is translated into fractures or dislocations. It is, therefore, uncertain to what degree the classification system can be used to predict neurological prognosis and guide treatment [5]. Another mechanistic classification was introduced by Harris et al., based on the correlation of radiographic and pathological features of experimentally induced cervical injuries in laboratory models with acute clinical injuries. This classification likewise neither guides management nor predicts outcome [6]. Moore's cervical spine injury severity score (CSISS) was derived from imaging findings and fracture stability. Though it guides treatment, it does not consider neurology [16, 17]. No validation or reliability studies were conducted during the development of the above-described classifications (i.e., the Holdsworth system, the Allen Ferguson system, the Harris system and CSISS). However, the SLIC system, developed by Vaccaro et al., seems to fulfil most of the criteria for an ideal classification system. It grades injury severity from both radiological and neurological perspectives and also guides treatment [7].
This classification was supported by the works of Patel et al. [18] and Dvorak et al. [19]. Vaccaro et al. [7] assessed the inter- and intra-observer reliability of the SLIC severity scale and compared it with that of the Harris and AF systems. In that study, the reliability (inter- and intra-observer) of SLIC was higher than that of Harris, but slightly lower than that of AF. The AF system fared as well as [7, 8] or even better than the SLIC system, especially when comparing the AF mechanistic and SLIC morphological types, as shown in our study. The advantage of the SLIC system is its scoring, which can guide treatment decisions. However, it is largely based on the insights of the experienced surgeons and researchers who formulated the classification [2]. The reliability of the total SLIC score, which guides treatment, was low in the study conducted by Vaccaro et al. [7] as well as in our study (Tables 1 and 2). Also, according to Van Middendorp et al., the SLIC management algorithm did not improve agreement among surgeons on decision making [12]. Thus, although quite a few classification systems have been developed for SACI, experts are not convinced that any of them meets the requirements well. Hence, none of them has gained widespread acceptance.

Only two studies published so far have compared the reliability of the two most commonly used classification systems, AF and SLIC [7, 8]. However, both involved at least one of the authors who developed the classification system. As far as we are aware, ours is the first reliability study comparing two of the most commonly used classification systems for SACI without the involvement of any of the authors who developed either system. In our study, AF had moderate inter- and intra-rater agreement and fared better than the total SLIC score (slight inter-rater and fair intra-rater agreement). SLIC had only fair inter-rater agreement for morphology, DLC status and neurological status, but better intra-observer reliability for morphology and neurological status, which were moderate and substantial, respectively. The DLC status and total SLIC score were the most difficult to objectify in the SLIC system and had the least agreement in our study. These findings are in concordance with those of Vaccaro et al. This could be due to the 'indeterminate' category of DLC integrity and to differences among the experts in interpreting DLC status on MRI [7]. According to some studies, the correlation between MRI and intraoperative DLC status is poor and has led to unnecessary surgical interventions [20, 21]. This limitation of the system has been accepted by the experts who developed it [7]. An external validation of the SLIC system found insufficient agreement on injury morphology and suggested that the reliability of SLIC is likely to improve with the implementation of unambiguous, true morphological characteristics of the injury [9]. So, better definitions of DLC status and even of fracture morphology, supported by more research, are likely to improve the reliability of the total SLIC score and, hence, of SLIC.

However, the results of our study contrast with those of Stone et al. [8], who found excellent inter- and intra-rater agreement for SLIC and moderate inter-rater to excellent intra-rater agreement for AF. Various reasons could have contributed to this difference in outcomes, including regional variations in familiarity with either classification system (the study by Stone et al. involved experts from three centres in the US and one in Brazil, whereas our study involved experts from three centres in India, one in Germany, one in Brazil and two in the US) and personal preferences for a certain classification system leading to observer bias. The involvement in the study by Stone et al. of one of the authors who developed SLIC could also have introduced bias into its results.

Our study highlights the need for additional studies evaluating the reliability and reproducibility of these spine trauma classification systems, involving clinicians with different levels of training at different centres. The low reliability also highlights the need to improve the existing classification systems or to develop newer ones that take the shortcomings of the current systems into consideration. The lead author had also conducted an online questionnaire survey among 42 spine experts across the globe to get their opinion on whether the existing trauma classification systems for SACI were realistically applicable and met their expectations. For classifying SACI, most of them used either AF (38%) or SLIC (35%). When asked which system they thought was more practical for their regular practice, the largest group (31%) opted for the AF system [9]. Even though the SLIC system is more comprehensive, experts may find it more cumbersome to use in their practice than the AF system. The AF system is simpler, but does not include all parameters, especially the neurological and ligamentous aspects. The SLIC system seems more comprehensive and promising, but its reliability is lower than that of the AF system, and surgeons feel a need for further research to make it simpler and, thereby, more reliable without losing its comprehensiveness. Newer classifications are being introduced for SACI. However, further research is needed to prove their reliability and usefulness [22, 23]. These include the AO Spine Subaxial Cervical Spine Injury Classification System being developed by Vaccaro et al. and the ABCD Classification System by Shousha et al. The new AO system has reduced the number of fracture subcategories and added modifiers as well as neurology to the classification system. However, the way this classification system guides the management of subaxial cervical spine injuries has not yet been published [22].
The ABCD system classifies SACI and proposes a management algorithm. Its main components are the morphology of the injury based on the two-column concept, the neurological status of the patient and the stability of the injury. However, this system is still under validation and no reliability studies have been conducted so far [23].

The strength of our study is that it is the first analysis conducted outside the American continent, involving experts from 7 institutions across 4 countries and experts other than those who formulated the classifications. Reliability may have been lower because the experts received no face-to-face training, although such training does not reflect a real-life scenario [24]. Also, as this was an online study, it is possible that the experts did not consult the reference guides that had earlier been sent to them by e-mail while they were participating. It may therefore be worthwhile to conduct a study with face-to-face training, in which the quick reference guides to the classification systems are handed over, and to assess whether this changes the outcome. This can be considered a limitation of this study. The other limitations include the small number of experts who responded both times (n = 10) and the presentation of the cases to the experts by e-mail, which does not reflect the real-life scenario, in which the clinician examines the patient personally and then analyses and interprets the radiographic data.

Conclusion

This is the first reliability study comparing two of the most commonly used classification systems for SACI without the involvement of any of the authors who developed either system. AF showed better reliability than SLIC, which may be due to the experts' familiarity with AF and its ease of use. Among the SLIC variables, the DLC status and total SLIC score had the least agreement. The low reliability highlights the need to improve the prevailing classification systems or to develop newer ones that address the limitations of the existing ones. Furthermore, research on fracture morphology and better definitions of DLC status are expected to improve the reliability of SLIC. In addition, research is required to evaluate the use of AF in guiding treatment and the correlation between AF mechanistic fracture types and their associated neurological outcomes. Considering all the flaws in the existing classifications, we are still far from an ideal classification for SACI. Hence, extensive multi-centric research is still required in this field.

References

Magerl F, Aebi M, Gertzbein S, Harms J, Nazarian S. A comprehensive classification of thoracic and lumbar spine injuries. Eur Spine J. 1994;3:1–2.

Van Middendorp JJ, Audigé L, Hanson B, Chapman JR, Hosman AJF. What should an ideal spinal injury classification system consist of? A methodological review and conceptual proposal for future classifications. Eur Spine J. 2010;19:1238–49.

Mirza SK, Mirza AJ, Chapman JR, Anderson PA. Classifications of thoracic and lumbar fractures: rationale and supporting data. J Am Acad Orthop Surg. 2002;10:364–77.

Holdsworth F. Fractures, dislocations, and fracture-dislocations of the spine. J Bone Jt Surg Am. 1970;52:1534–51.

Allen BL, Ferguson RL, Lehmann TR, O’Brien RP. A mechanistic classification of closed, indirect fractures and dislocations of the lower cervical spine. Spine. 1982;7:1–27.

Harris JH, Edeiken-Monroe B, Kopaniky DR. A practical classification of acute cervical spine injuries. Orthop Clin North Am. 1986;17:15–30.

Vaccaro AR, Hulbert RJ, Patel AA, Fisher C, Dvorak M, Lehman RA, et al. The subaxial cervical spine injury classification system: a novel approach to recognize the importance of morphology, neurology, and integrity of the disco-ligamentous complex. Spine. 2007;32:2365–74.

Stone AT, Bransford RJ, Lee MJ, Vilela MD, Bellabarba C, Anderson PA, et al. Reliability of classification systems for subaxial cervical injuries. Evid Based Spine Care J. 2010;1:19–26.

Chhabra HS, Kaul R, Kanagaraju V. Do we have an ideal classification system for thoracolumbar and subaxial cervical spine injuries: what is the expert’s perspective? Spinal Cord. 2015;53:42–48.

Aarabi B, Walters BC, Dhall SS, Gelb DE, John Hurlbert R, Rozzelle CJ, et al. Subaxial cervical spine injury classification systems. Neurosurgery. 2013;72:170–86.

Joaquim A, Lawrence B, Daubs M, Brodke D, Patel A. Evaluation of the subaxial injury classification system. J Craniovertebr Junct Spine. 2011;2:67.

Van Middendorp JJ, Audigé L, Bartels RH, Bolger C, Deverall H, Dhoke P, et al. The subaxial cervical spine injury classification system: an external agreement validation study. Spine J. 2013;13:1055–63.

Gwet K. Chance-corrected agreement coefficients. In: Gwet K, (ed). Handbook of inter-rater reliability: the definitive guide to measuring the extent of agreement among raters. 4th edn. Gaithersburg, MD: Advanced Analytics, LLC; 2014. p. 25–69.

McGinn T, Wyer PC, Newman TB, Keitz S, Leipzig R, Guyatt G. Tips for learners of evidence-based medicine: 3. Measures of observer variability (kappa statistic). CMAJ. 2004;171:1369–73.

Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–74.

Anderson PA, Moore TA, Davis KW, Molinari RW, Resnick DK, Vaccaro AR, et al. Cervical spine injury severity score: assessment of reliability. J Bone Jt Surg. 2007;89:1057–65.

Moore TA, Vaccaro AR, Anderson PA. Classification of lower cervical spine injuries. Spine. 2006;31:11–12.

Patel AA, Hurlbert RJ, Bono CM, Bessey JT, Yang N, Vaccaro AR. Classification and surgical decision making in acute subaxial cervical spine trauma. Spine. 2010;35:1–2.

Dvorak MF, Fisher CG, Fehlings MG, Rampersaud YR, Öner FC, Aarabi B, et al. The surgical approach to subaxial cervical spine injuries: an evidence-based algorithm based on the SLIC classification system. Spine. 2007;32:2620–9.

Rihn JA, Fisher C, Harrop J, Morrison W, Yang N, Vaccaro AR. Assessment of the posterior ligamentous complex following acute cervical spine trauma. J Bone Jt Surg Ser A. 2010;92:583–9.

Malham GM, Ackland HM, Varma DK, Williamson OD. Traumatic cervical discoligamentous injuries: correlation of magnetic resonance imaging and operative findings. Spine. 2009;34:2754–9.

Vaccaro AR, Koerner JD, Radcliff KE, Oner FC, Reinhold M, Schnake KJ, et al. AOSpine subaxial cervical spine injury classification system. Eur Spine J. 2016;25:2173–84.

Shousha M. ABCD classification system: a novel classification for subaxial cervical spine injuries. Spine. 2014;39:707–14.

Urrutia J, Zamora T, Yurac R, Campos M, Palma J, Mobarec S, et al. An independent inter-and intraobserver agreement evaluation of the AOSpine subaxial cervical spine injury classification system. Spine. 2017;42:298–303.

Author contributions

VK was responsible for designing the study, obtaining ethics committee approval and writing the manuscript. PKKY was responsible for the literature review and for extracting and analysing the data from different studies, and also contributed to writing the manuscript. HSC was responsible for designing and organising the study and analysing its results, and also contributed to writing the manuscript. APS, AN, GS, KDD, MLB, BM, NP, RA, ST, TB and VT took part in the study and provided feedback. No funding or grants were received for this study.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Kanagaraju, V., Yelamarthy, P.K.K., Chhabra, H.S. et al. Reliability of Allen Ferguson classification versus subaxial injury classification and severity scale for subaxial cervical spine injuries: a psychometrics study. Spinal Cord 57, 26–32 (2019). https://doi.org/10.1038/s41393-018-0182-z

DOI: https://doi.org/10.1038/s41393-018-0182-z
