Abstract

Synaptic plasticity configures interactions between neurons and is therefore likely to be a primary driver of behavioral learning and development. How this microscopic-macroscopic interaction occurs is poorly understood, as researchers frequently examine models within particular ranges of abstraction and scale. Computational neuroscience and machine learning models offer theoretically powerful analyses of plasticity in neural networks, but results are often siloed and only coarsely linked to biology. In this review, we examine connections between these areas, asking how network computations change as a function of diverse features of plasticity and vice versa. We review how plasticity can be controlled at synapses by calcium dynamics and neuromodulatory signals, the manifestation of these changes in networks, and their impacts in specialized circuits. We conclude that metaplasticity—defined broadly as the adaptive control of plasticity—forges connections across scales by governing what groups of synapses can and can’t learn about, when, and to what ends. The metaplasticity we discuss acts by co-opting Hebbian mechanisms, shifting network properties, and routing activity within and across brain systems. Asking how these operations can go awry should also be useful for understanding pathology, which we address in the context of autism, schizophrenia and Parkinson’s disease.


Introduction

Synaptic plasticity leads a double life. A great deal of research has addressed the biological substrates of plasticity, under the working hypothesis that changes in inter-neuronal communication subtend behavioral adaptation. While this connection has often been directly demonstrated, the mechanisms linking microscopic (e.g., synaptic) to macroscopic (behavioral and network) change have generally remained obscure. How are the diverse pairwise interactions between neurons related to network function? Which changes in these interactions bear on network computation and which don’t? How are different network, cellular, and sub-cellular needs and goals balanced via adaptation?

Answering these questions is complicated by the different approaches biological and computational researchers take to investigating plasticity. Biologically, diverse neuronal changes can directly impact how strongly the activity of one cell influences another. These include both pre- and postsynaptic modification, as well as intracellular alterations that interact with extracellular signals (e.g., neuromodulation via G-protein coupled receptors). More specifically, for example, cell responsiveness can be influenced by axonal changes [1, 2], spatial and electrotonic dendritic arbor remodelling [3,4,5,6], spine modification [5, 7,8,9,10,11,12,13,14], active-zone expansion and shrinkage [15], AMPA tetramer modification [16,17,18,19,20], calcium channel modifications [21,22,23], and many more mechanisms. From a computational perspective, in contrast, synapses are often reduced to single "weights", which are idealized as edges in graphs representing pairwise influence between neurons, and plasticity is cast as change of influence [24,25,26,27,28]. The forms of plasticity used in artificial neural networks are also largely selected to optimize the performance of certain functions, such as image recognition [28, 29], memory formation [30,31,32,33,34,35], or reinforcement learning [36]. Thus, while plasticity is increasingly well understood in both biological and computational terms, these literatures are often relatively siloed.

In this review, we examine relationships between the two, applying the general principle that integrating across scales and levels of analysis can facilitate progress in neuroscience [37]. We decompose the underlying problem into two sub-problems, examining (i) how cellular models of plasticity determine network changes, and (ii) how these changes interact with the functional, computational properties of those networks. The first is probably best understood from the perspective of unsupervised mathematical models of plasticity. The second is better understood in terms of gradients on error landscapes. Considering these along with the biology of plasticity suggests a significant role for metaplasticity, or "the plasticity of plasticity", in multi-scale adaptation. Specifically, the expression of, criteria for, and circumstances inducing plasticity can be adaptively controlled [38,39,40], and this flexibility theoretically allows metaplasticity to align adaptation across scales in the service of functional outcomes. This further suggests that the specific mechanisms of metaplasticity in different neural circuits and contexts will reflect network function.

Concretely, the logic of our review proceeds as follows: (1) Calcium and related signalling cascades are key regulators of Hebbian post-synaptic plasticity, and diverse processes impact both. This makes those processes, in part, plasticity controllers, and we review some of their key elements. (2) Hebbian learning algorithms have long been addressed by computational theories, which characterize their impacts on networks. Analyses of such learning rules and their neuromodulation have increasingly indicated how controlling Hebbian change can produce functional network outcomes, so we review these points. (3) Different brain areas are specialized, and this should be reflected in the metaplasticity they express. Scale also introduces forms of metaplasticity such as activity routing, which conditions neural population activity. These observations should be integrated with the former two points, so we review some potential connections between them. We conclude by discussing applications of these ideas to pathology, specifically Parkinson’s disease, autism, and schizophrenia.

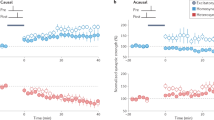

Diverse signals converge on Ca2+ as a plasticity controller

Experimentally, synaptic plasticity can be induced by many means, not all of which are naturalistic. Protocols that plausibly resemble in-vivo conditions include, for example, burst induction in presynaptic afferents, which can mimic endogenous hippocampal activity; spike-pair protocols, which can mimic correlated spiking; and spike-burst protocols, which can mimic diffuse drive generating strong responses [41,42,43,44,45,46,47,48,49,50] (Fig. 1a, b). Plasticity can also be induced and manipulated chemically, and with sub-threshold membrane currents. In-vivo and naturalistic manipulations are, however, increasingly the norm, and technical progress has improved control over key quantities, such as trans-membrane currents (see e.g. [51]). Synapse-potentiating protocols generally require considerable depolarization of post-synaptic cells, whereas depressing protocols require less.

a A pyramidal neuron with an afferent axon impinging on a dendrite, with two probes shown for stimulation and/or recording. b Three example stimulation protocols. Top: Burst inducing stimulation of an afferent connection (teal). Middle: STDP protocol, afferent stimulation (teal) paired with subsequent soma stimulation (purple). Bottom: A spike-burst protocol. Many other protocols exist, e.g. using different current injections, repetition timings and numbers, etc. c Phosphorylation of AMPARs changes their membrane densities and relative compositions of GluA1 to GluA2 subunits (shown in red and blue), mediating EPSC amplitudes. Similar changes in NR2A vs NR2B (as opposed to NR1) subunits of NMDARs modify their relative calcium permeability. Colors are visual guides. d Plasticity vs. Ca2+ concentration in canonical metaplasticity. A small amount of Ca2+ entering the postsynaptic cell induces LTD; larger amounts induce LTP. Changes in Ca2+ permeability change the amount of calcium delivered for a given depolarization, acting like a changeable ("floating") threshold, enforcing homeostasis, and inducing competitive learning. More realistic models are more sophisticated (see e.g., [56]) but this is a well established and reasonable first approximation.
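The three protocol types in Fig. 1b can be written down as event schedules. The sketch below is a minimal illustration only: the function names are hypothetical, and the parameter values (4 pulses per burst, 100 Hz within bursts, 5 Hz burst repetition, 1 Hz pairing, a 10 ms pre-to-post lag) are illustrative choices, not values taken from the studies cited above.

```python
def burst_protocol(n_bursts=10, pulses_per_burst=4, intra_hz=100.0, burst_hz=5.0):
    """Presynaptic stimulus times (s) for a theta-burst-like afferent protocol."""
    return [b / burst_hz + p / intra_hz
            for b in range(n_bursts) for p in range(pulses_per_burst)]


def spike_pair_protocol(n_pairs=60, pair_hz=1.0, delta_t=0.010):
    """Pre/post spike times (s); delta_t > 0 means the presynaptic spike leads."""
    pre = [k / pair_hz for k in range(n_pairs)]
    post = [t + delta_t for t in pre]
    return pre, post


def spike_burst_protocol(n_pairs=60, pair_hz=1.0, delta_t=0.010,
                         burst_size=3, intra_hz=100.0):
    """Each presynaptic spike is paired with a short postsynaptic burst."""
    pre = [k / pair_hz for k in range(n_pairs)]
    post = [t + delta_t + j / intra_hz for t in pre for j in range(burst_size)]
    return pre, post


pre, post = spike_pair_protocol(n_pairs=3)
print(pre, post)
```

Swapping the sign of `delta_t` turns the causal (pre-before-post) pairing into the anti-causal variant.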

There are many biological mechanisms by which neural activity (such as that generated by induction protocols) can potentiate or depress synapses. These can be roughly classified by whether they occur pre- or post-synaptically, by the time-scales at which they occur, and by the signals that activate them. They include changes in vesicle count and content, spine properties, active zone surface areas, receptor densities, or dendritic function and morphology, for example [52]. Lasting, input-dependent forms of plasticity generate "long-term potentiation" (LTP) or "long-term depression" (LTD) of synapses, which are measured as changes in the post-synaptic trans-membrane current elicited by pre-synaptic activity [15]. The induction protocols discussed above generally probe these types of plasticity.

The most studied varieties of LTD and LTP occur post-synaptically in glutamatergic synapses [15]. When a presynaptic neuron fires an action potential, glutamate traverses the synapse and binds to postsynaptic AMPA and NMDA receptors, opening ion channels permeable to sodium and calcium. Whereas sodium influx primarily depolarizes the post-synaptic cell, calcium ions initiate intra-cellular processes that produce lasting changes. These include modifying NMDA and AMPA receptor densities and subunit compositions, directly impacting future glutamatergic transmission [15, 19]. Both the direction (LTP or LTD) and the amount of plasticity depend on the amount of calcium that enters the cell (Fig. 1d) [15, 53,54,55,56]. While it is increasingly recognized that the location of calcium entry can be critical for different processes, and that synapses vary in their exact properties, this is a widely accepted first approximation [56].
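The calcium rule of thumb in Fig. 1d can be made concrete as a piecewise function. The following sketch is a caricature, assuming two hypothetical thresholds (`theta_d` for LTD onset and `theta_p` for LTP onset) and amplitudes in arbitrary calcium units; realistic models [56] use smooth functions and also weigh the timing and location of calcium entry.

```python
def calcium_to_dw(ca, theta_d=0.35, theta_p=0.55, a_ltd=0.5, a_ltp=1.0):
    """Signed weight change for a peak calcium level (arbitrary units).

    Thresholds and amplitudes are hypothetical illustration values.
    """
    if ca < theta_d:
        return 0.0                      # too little calcium: no lasting change
    if ca < theta_p:
        return -a_ltd                   # moderate calcium: LTD
    return a_ltp * (ca - theta_p)       # high calcium: LTP, growing with influx


for ca in (0.1, 0.45, 0.9):
    print(ca, calcium_to_dw(ca))
```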

There are many other molecules and phenomena that interact with both post-synaptic calcium concentration and the machinery it engages, however. Related findings can be loosely organized according to whether they mainly address trans-membrane elements (AMPARs, NMDARs, VGCCs, GPCRs), primary signalling molecules other than glutamate (DA, ACh, NE, 5-HT, BDNF, TNF, eCBs), intracellular molecular players (Ca2+, cAMP, CaMKII, PKA, PKC, PKMζ, IP3), or modulation by other features of neurons, such as dendrites (bAPs, spine clustering, endogenous spiking, electrotonic remodelling). This list is certainly not comprehensive, but it is diverse, and as we discuss below, many of the noted items modulate or are required for plasticity in particular circuits. The following subsections discuss these roles, but they also present a basic synthetic challenge to neuroscientists: What logic governs the mechanisms operating at any given synapse? Why are there so many "cooks in the kitchen" when it comes to calcium? Fortunately, post-synaptic calcium, as an approximate common currency, is compatible with ideas about synaptic plasticity arising from computational theories. The work we review therefore provides a cellular and molecular basis for comparison with abstract plasticity models (discussed in later sections), which are in turn theoretically linked to network functional and computational properties.

Ca2+, AMPARs, and NMDARs mediate canonical glutamatergic plasticity

As noted above, post-synaptic depolarization, the starting point for activity-dependent plasticity, is usually generated by inward Na+ and Ca2+ currents. In canonical LTP, Ca2+ currents contribute little to membrane depolarization but control plasticity. They arise when glutamate-bound NMDA receptors are (1) sufficiently depolarized, relieving their voltage-dependent Mg2+ block, and (2) activated by co-agonists such as glycine (e.g., [15, 57,58,59,60]). Calcium entry triggers a number of signalling cascades, three of which we refer to here as the CaM–CaMKII, AC–cAMP–PKA, and PLC–DAG–PKC pathways [15, 19, 61, 62]. Each molecule in these cascades is involved in multiple processes, but the kinases CaMKII, PKA, and PKC contribute to plasticity in significant part by phosphorylating AMPARs and initiating structural changes that preserve the consequences of AMPAR trafficking (see e.g., [15, 19, 63,64,65,66]). AMPARs are tetramers composed of GluA1, GluA2, GluA3, or GluA4 subunits, with properties and molecular interactions that vary with composition [67]. CaMKII, PKA, and PKC differentially phosphorylate these subunits, leading to receptor exo- and endocytosis as well as broader trafficking changes, and these signalling cascades ultimately modify membrane AMPAR density and composition (Fig. 1c) [15, 19, 66, 68].
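NMDAR gating behaves as a coincidence detector: calcium enters only when glutamate, co-agonist, and depolarization coincide. The sketch below assumes the commonly used Jahr and Stevens empirical fit for Mg2+ unblock, B(V) = 1 / (1 + [Mg2+]/3.57 mM · exp(-0.062 V)) with V in mV, and treats glutamate and co-agonist occupancy as simple fractions. It deliberately ignores channel kinetics and the calcium driving force, so its output is only a relative index of calcium entry, and the function names are hypothetical.

```python
import math


def mg_unblock(v_mv, mg_mm=1.0):
    """Fraction of NMDARs free of Mg2+ block at membrane potential v_mv (mV)."""
    return 1.0 / (1.0 + (mg_mm / 3.57) * math.exp(-0.062 * v_mv))


def nmdar_calcium_index(glu, coagonist, v_mv, mg_mm=1.0):
    """Relative Ca2+ entry: needs glutamate AND co-agonist AND depolarization.

    glu and coagonist are occupancy fractions in [0, 1]; kinetics and the
    Ca2+ driving force are deliberately ignored in this sketch.
    """
    return glu * coagonist * mg_unblock(v_mv, mg_mm)


rest = nmdar_calcium_index(1.0, 1.0, -70.0)    # ligands bound, cell at rest: mostly blocked
paired = nmdar_calcium_index(1.0, 1.0, -20.0)  # ligands bound plus depolarization
print(round(rest, 3), round(paired, 3))
```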

Long-timescale changes in synaptic activity can also modify the amount of glutamatergic transmission needed to initiate plasticity, thereby instantiating a type of "metaplasticity", or plasticity-of-plasticity [53,54,55,56]. Specifically, NMDARs are also tetramers, and are generally composed of two GluN1 subunits and two GluN2 family (GluN2A, GluN2B, GluN2C, or GluN2D) subunits [69]. As with AMPARs, different subunit compositions confer different properties on the resulting receptors. A key property that differs by composition is Ca2+ permeability, with the result that different post-synaptic receptor distributions will require greater or lesser activation to achieve a given integrated calcium flux. Importantly, NMDAR composition itself, and in particular the ratio of GluN2A to GluN2B subunits (also known as NR2A and NR2B subunits), can be modified by use-dependent post-synaptic signalling cascades. This determines how use impacts Ca2+ concentrations, and thereby AMPAR plasticity (Fig. 1c) [70,71,72,73,74,75,76,77,78,79]. Functionally, it produces a "floating threshold" for plasticity induction, which was notably predicted by computational theories of plasticity [53,54,55]. This threshold, along with the basic phenomenon of lesser and greater calcium influx producing LTD and LTP, is illustrated in Fig. 1d. One resulting function is homeostatic: depression becomes easier to induce at stronger synapses, whereas potentiation is favored at weaker ones. As we discuss below, more general forms of metaplasticity have many additional computational implications.
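The floating threshold can be illustrated by letting NMDAR subunit composition scale how much calcium a given input delivers. The sketch below assumes a hypothetical mapping in which a higher GluN2A:GluN2B ratio lowers calcium per unit of synaptic drive (`drive / (1 + ratio)`); the functional form and all numbers are illustrative only. The piecewise calcium rule of Fig. 1d is redefined here so the block stands alone.

```python
def calcium_to_dw(ca, theta_d=0.35, theta_p=0.55):
    """Piecewise calcium rule: no change, then LTD, then LTP (arbitrary units)."""
    if ca < theta_d:
        return 0.0
    return -0.5 if ca < theta_p else ca - theta_p


def calcium_from_drive(drive, nr2a_to_nr2b):
    """Hypothetical: more GluN2A relative to GluN2B -> less Ca2+ per unit drive."""
    return drive / (1.0 + nr2a_to_nr2b)


def ltp_drive_threshold(nr2a_to_nr2b, theta_p=0.55):
    """Synaptic drive needed to reach the LTP calcium level for a given ratio."""
    return theta_p * (1.0 + nr2a_to_nr2b)


drive = 1.0
for ratio in (0.5, 1.5):   # hypothetical ratios, e.g. after low vs. high recent use
    ca = calcium_from_drive(drive, ratio)
    print(ratio, ltp_drive_threshold(ratio), calcium_to_dw(ca))
```

The same input potentiates the synapse at the low ratio but depresses it at the high ratio, which is the sense in which the threshold "floats".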

Many processes converge on Ca2+ signalling

Beyond providing a floating threshold over long timescales, Ca2+-, PKA-, PKC-, and CaMKII-related pathways are increasingly recognized as flexibly altering plasticity on the basis of signals not mediated by NMDARs, which likely serve important computational ends as well. Specifically, various other trans-membrane players directly impact calcium, perhaps most notably voltage-gated calcium channels (VGCCs) and calcium-permeable AMPA receptors (CP-AMPARs). In addition, G-protein coupled receptors (GPCRs) modulate elements of the CaM–CaMKII, AC–cAMP–PKA, and PLC–DAG–PKC pathways, and this family includes receptors that respond to all the major neuromodulators. Muscarinic acetylcholine receptors (mAChRs), α- and β-adrenergic receptors (α-ARs and β-ARs), dopamine receptors (DARs), and serotonin receptors (5HTRs) are all GPCRs impacting these pathways, as are metabotropic glutamate receptors (mGluRs) and metabotropic GABA receptors (GABABRs) [80, 81]. As a result, neuromodulators have diverse impacts on plasticity. An important question is therefore: given broader theories of neuromodulators and the regulation of calcium-permeable channels, how are these impacts orchestrated in the service of computational goals? The best understood case is probably dopaminergic modulation of plasticity for reinforcement learning, but more generally, answers for particular circuits will require synthesizing functional observations across scales. To this end, we review some of the molecular aspects here.

From a computational perspective, coordinated, fine-grained spatial control of post-synaptic calcium is important because it can theoretically direct plasticity to specific stimuli or sets of post-synaptic inputs. VGCCs, along with other dendritic parameters, are strong candidates for mediating this capacity because of their roles in dendritic processing and Ca2+ regulation. Voltage-gated calcium channels form several subfamilies based on their pore-forming proteins (α1 subunits) [22]. The Cav1 family (Cav1.1, Cav1.2, Cav1.3, and Cav1.4) conducts L-type calcium currents, and Cav1.2 and Cav1.3 are generally located postsynaptically in dendrites and cell bodies [82,83,84]. Cav2.1, Cav2.2, and Cav2.3 conduct P/Q-, N-, and R-type currents, respectively, and are primarily located presynaptically. Cav2.1 and Cav2.2 are involved in vesicle exocytosis, and Cav2.1 participates in short-term synaptic facilitation and depression. Cav2.3 channels also appear to be located postsynaptically in some areas [85, 86]. The Cav3 family (Cav3.1, Cav3.2, and Cav3.3) conducts T-type calcium currents, which are involved in rhythmic and burst firing, particularly in the thalamus [22]. One would therefore expect relatively direct effects of Cav1.2 and Cav1.3 channels on post-synaptic glutamatergic LTP induction, via calcium currents, and potentially less direct effects of Cav3 family channels via bursting-related back-propagating action potentials (bAPs).

In line with these predictions, L-type calcium currents contribute to plasticity in a number of ways, several of which go beyond floating-threshold effects. Cav1.2 contributes to LTP at hippocampal Schaffer collateral synapses, for example [87,88,89,90], and blocking these currents in several circumstances either reduces or abolishes LTP that would otherwise have occurred. Cav1.2 channels also form highly localized signalling complexes with β2-adrenergic receptors and several members of the CaM–CaMKII and AC–cAMP–PKA cascades, such that adrenergic signalling up-regulates channel conductance and increases downstream second-messenger signalling [90, 91]. This promotes LTP under combined weak theta-burst and adrenergic stimulation, conditions that occur naturally in the hippocampus during exploratory behavior [92]. Moreover, NMDARs and VGCCs appear to regulate one another; chronic increases in Cav1.2 L-type currents lower NMDAR Ca2+ flux, for example [83, 86, 93]. Finally, L-type calcium currents also appear to contribute to the slow spike after-hyperpolarization and to spike-frequency adaptation, processes that regulate cell excitability and thereby plasticity [94,95,96,97]. Collectively, these observations suggest that VGCCs could be integrated into a holistic understanding of plasticity as exerting primary effects on induction thresholds, post-synaptic excitation, and dendritic processing (discussed below), along with various secondary effects. A key research goal will therefore be to determine the relative importance of each for plasticity at any given synapse.

Post-synaptic calcium is also directly manipulated by calcium-permeable AMPA receptors (CP-AMPARs) [20, 23]. Typically, AMPARs contain at least one post-transcriptionally "edited" GluA2 subunit, making them impermeable to calcium. CP-AMPARs, on the other hand, are typically GluA1 homomers. They are often inserted into the post-synaptic density during plasticity induction as a result of trafficking from endosomes, only to be subsequently removed [98,99,100]. Some neurons, however, appear to express long-lasting CP-AMPARs, including cortical and hippocampal interneurons [101,102,103,104]. The effects of either the transient or the long-lasting changes in calcium transmission are not well understood, but the broader calcium theory suggests they should lower the thresholds for inducing both synaptic depression (given weak additional input) and further potentiation (given strong additional input). Complicating the matter, intracellular polyamines block CP-AMPARs at significant depolarizations, making them voltage dependent [105, 106]. This voltage dependence appears to allow CP-AMPARs expressed by hippocampal interneurons to mediate "anti-Hebbian" plasticity [101, 103, 104], and may be important in principal-interneuron plasticity generally [102, 103, 107, 108]. (In this case, excitatory synapses onto inhibitory neurons are potentiated when pre-synaptic firing occurs without post-synaptic firing.) A number of regions have also been shown to express CP-AMPARs under pathological conditions, but here too, little is known about their precise effects on plasticity [21, 23]. As with VGCCs, it may therefore be reasonable to consider CP-AMPARs in terms of a first-order effect on calcium-based threshold change and diverse second-order effects. Whether these "second-order" effects are really secondary remains to be seen, but the idea that transiently expressed CP-AMPARs should facilitate plasticity induction (both LTP and LTD) in the short term appears plausible.
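The voltage dependence that makes CP-AMPAR plasticity anti-Hebbian can be caricatured in a few lines. This sketch assumes a hypothetical Boltzmann curve for polyamine block (half-block at -30 mV, slope 10 mV; neither value is taken from the cited studies) and treats Ca2+ entry through unblocked CP-AMPARs as the potentiating signal. Function names are illustrative.

```python
import math


def cp_ampar_unblocked(v_mv, v_half=-30.0, slope=10.0):
    """Fraction of CP-AMPARs not blocked by intracellular polyamines.

    Block increases with depolarization; v_half and slope are hypothetical.
    """
    return 1.0 / (1.0 + math.exp((v_mv - v_half) / slope))


def anti_hebbian_dw(pre_active, v_post_mv, lr=0.1):
    """Potentiation driven by presynaptic glutamate arriving while the
    postsynaptic cell is hyperpolarized (i.e., not firing)."""
    return lr * pre_active * cp_ampar_unblocked(v_post_mv)


print(anti_hebbian_dw(1.0, -70.0), anti_hebbian_dw(1.0, 0.0))
```

Presynaptic input paired with a silent, hyperpolarized postsynaptic cell yields the largest change, the opposite of the NMDAR-based Hebbian case.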

Finally, as noted above, a number of metabotropic, neuromodulatory receptors interact with the CaMKII, PKA, and PKC calcium signalling cascades, providing further levers for controlling plasticity [19, 62, 80, 81]. These G-protein coupled receptors (GPCRs) form a very large class of trans-membrane proteins. Much of the diversity of this class lies in the extracellular components, whereas intracellularly, GPCRs are characterized by their bound hetero-trimeric G proteins, composed of α, β, and γ subunits [80, 81]. Each subunit has a variety of subtypes as well. Extracellular ligand binding releases the αβγ trimer from the receptor and generally splits it further into a free α subunit and a free βγ dimer (often denoted Gα and Gβγ or similar). The Gα proteins can be clustered into families identified with Gαi (alpha types i, o, z, t), Gαs (types s, olf), Gαq (types q, 11, 14, 15), and Gα12 (types 12, 13). Of these, Gi inhibits adenylyl cyclase (AC), which lowers production of cAMP and thereby reduces the activity of PKA [19, 62]. Gs does the opposite, upregulating AC, and hence cAMP and PKA [19, 62]. Finally, Gq activates phospholipase C (PLC), which produces DAG and IP3; these respectively activate PKC and trigger Ca2+ release from the endoplasmic reticulum via IP3Rs [19, 62]. Metabotropic receptors for all of the major neurotransmitters bind these G-proteins (i.e., the Gi, Gs, and Gq families), which mediate complex intracellular activity. As one would predict from their actions on PKA, Gs coupled receptors generally appear to promote LTP, whereas Gi coupled receptors often promote LTD [62]. Gq coupled receptors show mixed effects on plasticity, with mGluRs being generally associated with LTD, whereas M1 AChRs are demonstrably involved in both LTD and LTP (discussed below). For any given receptor and context, the exact relationship to plasticity likely varies according to the balance of different G-protein mediated effects and the conditions under which they are exerted.
Nonetheless, because of their strong relationships with macroscopic theories of brain function, we now discuss several instances of DAR, mAChR, and β–AR modulated plasticity in further detail.
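The Gs/Gi/Gq logic above amounts to a small lookup from receptor to G-protein family to cascade. The sketch below encodes the canonical couplings mentioned in this section as a Python dictionary. It is a deliberate simplification: it ignores βγ signalling, the non-canonical Gs-coupled D2Rs reported in layer V [132], and the Gi/o-coupled group II/III mGluRs, and the receptor labels are our own shorthand.

```python
# Canonical couplings discussed in the text (simplified; see caveats above).
G_FAMILY = {
    "D1R": "Gs",
    "beta_AR": "Gs",
    "D2R": "Gi",
    "alpha1_AR": "Gq",
    "M1_AChR": "Gq",
    "mGluR_group_I": "Gq",
}


def cascade_drive(receptor_activation):
    """Map receptor activation levels to net PKA and PLC (PKC/IP3) drive.

    Gs raises AC -> cAMP -> PKA; Gi lowers it; Gq engages PLC -> DAG/IP3.
    """
    drive = {"PKA": 0.0, "PLC": 0.0}
    for receptor, level in receptor_activation.items():
        family = G_FAMILY[receptor]
        if family == "Gs":
            drive["PKA"] += level
        elif family == "Gi":
            drive["PKA"] -= level
        elif family == "Gq":
            drive["PLC"] += level
    return drive


# Co-activation of beta-ARs and M1 receptors, as at the V1 synapses discussed below.
print(cascade_drive({"beta_AR": 1.0, "M1_AChR": 0.5}))
```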

DA, ACh, and NE modulate both activity and plasticity

DA, ACh, and NE receptors all modulate intracellular signals involved in plasticity, and they also change cellular and network properties governing activity. These changes occur simultaneously in several systems, suggesting that the neuromodulators may generally act in concert, but how this occurs, and what it accomplishes, are not well understood. (Indeed, this article examines potential syntheses.) We consider network integration primarily in a later section, and for now continue with some of the cellular and molecular relationships.

Empirically, DA dependence is well established at cortico-striatal synapses, for example [10, 62, 109,110,111]. The striatum is the input region of the basal ganglia and is broadly recognized as having roles in action planning and execution, working memory and attention, and reward-based learning. Dopamine is believed to signal reward-prediction errors (RPEs) and motivational variables, so the DA dependence of plasticity is broadly in line with these theories [10, 62, 109,110,111,112,113]. This modulation acts differentially on the two primary types of neurons in the striatum, which express different dopamine receptors. The "direct pathway" medium spiny neurons (dMSNs) primarily express D1Rs, and these D1-MSNs are metabotropically rendered more excitable by DA. The "indirect pathway" projection neurons (iMSNs) primarily express D2Rs, and these D2-MSNs are metabotropically inhibited by it. Furthermore, the plasticity of cortico-striatal synapses onto each type of neuron is unidirectional or bidirectional depending on local DA concentration [114], with positive and negative RPEs preferentially driving reinforcement of the D1 and D2 pathways, respectively. These effects occur in part because D1Rs and D2Rs impinge on the calcium-cAMP-PKA signalling pathway via Gs and Gi family α subunits, respectively [115]. DA-based plasticity in MSNs is further gated by ACh, which is released by tonically active (cholinergic) interneurons [116,117,118], and requires co-agonism by endocannabinoids and adenosine [114]. Because DA appears to multiplex various signals, these additional requirements may serve to specify exactly when and how plasticity should occur, so that it responds only to relevant dopaminergically communicated information. More broadly, computational accounts of these pathways, which we discuss below, draw on theories of modulated Hebbian plasticity to suggest normative roles for the opponency and modulation noted here.
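A minimal three-factor caricature of this opponency multiplies a Hebbian pre-by-post term by the RPE, with opposite signs for the two pathways. The sketch below is a hypothetical simplification in the spirit of the computational accounts mentioned above, not any specific published model; the learning rate, the symmetric treatment of positive and negative RPEs, and the clipping of weights at zero are all assumptions.

```python
def striatal_update(w_d1, w_d2, pre, post_d1, post_d2, rpe, lr=0.1):
    """Opponent, DA-gated Hebbian update for cortico-striatal weights.

    Positive RPE (DA burst) strengthens active D1-MSN synapses; negative RPE
    (DA dip) strengthens active D2-MSN synapses. All parameters hypothetical.
    """
    w_d1 = max(0.0, w_d1 + lr * rpe * pre * post_d1)
    w_d2 = max(0.0, w_d2 - lr * rpe * pre * post_d2)
    return w_d1, w_d2


print(striatal_update(0.5, 0.5, 1.0, 1.0, 1.0, rpe=+1.0))  # D1 up, D2 down
print(striatal_update(0.5, 0.5, 1.0, 1.0, 1.0, rpe=-1.0))  # D1 down, D2 up
```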

DA-dependent plasticity has also been established in the PFC of mice, but is less well understood theoretically or empirically. Empirically, D1Rs are expressed in a bilaminar pattern across frontal cortex, with elevated density in layers I-III and V-VI, and low density in layer IV (in primates, with some results in rodents) [119,120,121,122,123]. These receptors appear to be predominantly located on dendrites of pyramidal neurons, but are also located pre-synaptically on principal cells targeting distal dendrites of other glutamatergic neurons, and on parvalbumin positive GABAergic interneurons [121, 124, 125]. Maximal frontal D1R concentrations appear in dlPFC, which hosts strong recurrent connectivity that is mediated, unusually, by NMDAR rather than AMPAR activity [126, 127]. Posterior regions are relatively devoid of both D1Rs and dopamine, with the significant exception of the lateral intraparietal area, which is also noted for its recurrent activity and its role in working memory [123, 126]. Occipital cortex, by contrast, hosts both an extremely low density of D1Rs and little to no dopaminergic innervation [123, 126]. D2Rs appear to be expressed relatively uniformly, in much smaller quantities, across layer V pyramidal neurons throughout cortex [119, 120]. In frontal regions, they have also been found on GABAergic parvalbumin positive interneurons [128,129,130,131]. The D2Rs in layer V pyramidal neurons were recently reported to be Gs coupled rather than Gi coupled, and hence to enhance, rather than reduce, cell excitability given dopamine application [132].

In terms of direct (rather than network) impacts, plasticity induction at excitatory L2/3 synapses onto L5 pyramidal neurons shows dopamine dependence in mice [130, 133]. In this work, DA application at several time-points after a spike-pairing protocol produced D1R-dependent Hebbian LTP. The longer-delay application (30 ms after pairing) was found to depend on both post-synaptic D1R activity and D2R-mediated reductions in GABAergic interneuron firing rates. The shorter-delay application (10 ms) required only the latter, demonstrating a clear case of activity dependence disentangled from post-synaptic GPCR signalling. These findings were subsequently extended by showing that switching to a post-before-pre pairing protocol, which would classically induce LTD, while concurrently applying dopamine produced LTP instead [133]. This effect depended on post-synaptic D1R activity, but not on pre-synaptic D2R activity.

In contrast with dopamine, acetylcholine and norepinephrine have been shown to modulate cortical plasticity in several sensory areas. NE and ACh dependence occurs at V1 L4 to L2/3 synapses, for example, where these neuromodulators appear to be required for plasticity induction in adult rodents [134,135,136]. The mAChRs present are Gq coupled (M1 family) and interact with the PLC-DAG-PKC signalling cascade to bias plasticity towards LTD [136,137,138]. Co-located β-adrenergic receptors are Gs coupled and interact with the AC-cAMP-PKA cascade to promote LTP [135, 136, 139, 140]. When both are activated, these effects combine to gate causal spike-timing-dependent plasticity specifically, meaning that LTP occurs when pre-synaptic input precedes post-synaptic depolarization, and LTD occurs when this order is reversed [136]. Notably, α1-adrenergic receptors are also present post-synaptically; they have a higher affinity for norepinephrine than β-adrenergic receptors, are Gq coupled, and, like M1-AChRs, have often been reported to facilitate LTD [135,136,137, 139, 141, 142]. In line with these points, one study found that low NE concentrations in isolation produced LTD, whereas high NE concentrations activated both receptor types and reinstated Hebbian STDP [135].
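The receptor-gating account above can be caricatured as an STDP kernel whose LTP and LTD lobes are switched on by Gs (β-AR) and Gq (M1 or α1) signalling. This sketch makes several assumptions beyond the text: exponential STDP windows with illustrative amplitudes and a 20 ms time constant, timing-independent LTP with Gs alone and timing-independent LTD with Gq alone, and hypothetical NE concentration thresholds standing in for the higher affinity of α1 receptors.

```python
import math


def receptors_for_ne(ne, alpha1_threshold=0.1, beta_threshold=1.0):
    """Hypothetical: low NE recruits only high-affinity alpha1 (Gq) receptors,
    high NE also recruits beta (Gs) receptors. Units are arbitrary."""
    return ne >= beta_threshold, ne >= alpha1_threshold   # (gs_on, gq_on)


def gated_stdp(dt_ms, gs_on, gq_on, a_plus=1.0, a_minus=0.5, tau_ms=20.0):
    """Weight change for one spike pair; dt_ms = t_post - t_pre."""
    kernel = math.exp(-abs(dt_ms) / tau_ms)
    if gs_on and gq_on:
        # Both cascades active: causal (pre-before-post) LTP, anti-causal LTD.
        return a_plus * kernel if dt_ms > 0 else -a_minus * kernel
    if gq_on:
        return -a_minus * kernel   # Gq alone: LTD regardless of order
    if gs_on:
        return a_plus * kernel     # Gs alone: LTP regardless of order
    return 0.0                     # no neuromodulation: plasticity gated off


for ne in (0.0, 0.5, 2.0):
    gs_on, gq_on = receptors_for_ne(ne)
    print(ne, gated_stdp(+10.0, gs_on, gq_on), gated_stdp(-10.0, gs_on, gq_on))
```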

Finally, all three neuromodulators (DA, ACh, NE) modify hippocampal plasticity as well. DA dependence has been noted at Schaffer collateral synapses, which connect CA3 to CA1, and in the perforant pathway, which connects entorhinal cortex to the dentate gyrus [143,144,145,146,147,148], whereas NE and ACh modulation have been reported at Schaffer collaterals [149,150,151,152]. β-adrenergic modulation was also found to extend the temporal window for timing-dependent LTP by making CA1 pyramidal cells more excitable [149]. More generally, NE has often been reported to facilitate hippocampal LTP via the preferential Gs coupling of β-adrenergic receptors, as in visual cortex [92, 134, 149, 153,154,155]. Reported ACh effects have been more varied, with some studies indicating facilitation of LTP [154, 156,157,158,159,160,161,162,163,164,165,166,167] and others showing LTD induction [168,169,170,171,172,173]. The latter, however, have generally observed LTD under weak or absent post-synaptic stimulation, whereas the former have tended to examine enhancement of LTP or conversion from LTD, a potentially critical difference.

Several recent studies have also investigated how spike timing affects these processes. In one, ACh converted bidirectional STDP at Schaffer synapses into unidirectional LTD, whereas retroactive application of dopamine transformed this into LTP [150], in line with earlier reports of hippocampal dopaminergic modulation [143, 146, 147]. This was at odds with another group’s report that inhibition of mAChRs converted causal LTP to LTD and prevented anti-causal LTD [151, 152], but the induction protocol used in the latter appears to have been significantly stronger. This strength discrepancy might mirror the general difference in LTP- vs LTD-biasing actions noted above, or may be mediated by the complexity of Gq signalling, specifically by different contextual implications of the IP3-Ca2+ and PLC-DAG-PKC cascades. For example, M1 activity can both enhance SK channel (small-conductance, calcium-activated, voltage-independent potassium channel) activity via IP3-based internal Ca2+ release [174] and inhibit it via PKC [166, 175]. ACh can facilitate LTP induced by theta-burst stimulation of Schaffer collaterals via the latter mechanism, because closing SK channels diminishes shunting current and enhances NMDAR Ca2+ flux [166, 175]. In fact, M1-AChRs can have a number of other impacts on K+, VGCC, and nonspecific cation channels [176], which makes the diversity of ACh-mediated plasticity results perhaps less surprising, and generally indicates we have much to learn about the matter.

Summary of cellular and molecular data

To summarize, a number of different fundamental processes modulate intracellular calcium signalling cascades, and thereby plasticity. Up-regulating calcium, CaMKII, and PKA pathways tends to facilitate LTP, whereas down-regulating calcium or PKA, or up-regulating PKC-related pathways, tends to facilitate LTD. This is compatible with a model of plasticity in which small but non-negligible amounts of calcium facilitate LTD and large amounts facilitate LTP. Although we did not address it above, one open question in this regard is exactly which properties of calcium fluxes, such as their amplitude, duration, or location, govern this behavior [56]. Nonetheless, a number of results are clear. Voltage-gated calcium channels contribute to these effects, at minimum, by modulating calcium directly, through their interactions with NMDARs, by changing cell excitability, and through complexes with β-adrenergic receptors. Calcium-permeable AMPA receptors also directly mediate calcium currents and are mostly expressed transiently, but may be long-lasting at some synapses. The implications of their short-term facilitation of calcium currents are not well understood, but presumably involve the same signalling cascades just described. These interactions are likely complicated by the fact that CP-AMPARs, being AMPARs, are themselves targets of those cascades, and by polyamine-based gating. Lastly, the major neuromodulatory systems all engage GPCR signalling. Gα subunits, which are categorized by family (Gi, Gs, Gq, and G12), engage the PKA, IP3, and PKC pathways (in addition to diverse effects we have not discussed). As a result, they are expected to directly modify plasticity induction, and indeed multiple areas, including striatum, prefrontal cortex, visual cortex, and hippocampus, display neuromodulated plasticity. Often this occurs as "gating" of LTD or LTP, or by converting one to the other.
Because these are Hebbian forms of plasticity, models of their function should build on research examining un-modulated Hebbian rules and knowledge of what modulating these can accomplish.
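The threshold picture just summarized (little calcium: no change; moderate calcium: LTD; high calcium: LTP) can be made concrete with a toy function. This is a minimal sketch under loudly stated assumptions: the step-shaped rule, the thresholds `theta_d` and `theta_p`, the amplitudes, and the idea of a neuromodulator acting as a multiplicative `gain` on calcium influx are all illustrative choices, not quantities from the studies reviewed. It shows how one induction protocol can be gated off, left as LTD, or converted to LTP purely by shifting calcium.

```python
def plasticity_from_calcium(ca, theta_d=0.35, theta_p=0.55, ltd=-0.5, ltp=1.0):
    """Hypothetical step-wise 'calcium control' rule (arbitrary units).

    Below theta_d: no lasting change; between theta_d and theta_p: LTD;
    at or above theta_p: LTP. All thresholds and amplitudes are assumptions.
    """
    if ca < theta_d:
        return 0.0
    if ca < theta_p:
        return ltd
    return ltp


def modulated_calcium(ca_baseline, gain):
    """Toy neuromodulator (assumed) that multiplicatively scales calcium influx."""
    return ca_baseline * gain


ca = 0.45  # an induction protocol that yields 'moderate' calcium
for gain in (0.5, 1.0, 1.5):
    print(gain, plasticity_from_calcium(modulated_calcium(ca, gain)))
# 0.5 0.0   (plasticity gated off)
# 1.0 -0.5  (LTD)
# 1.5 1.0   (converted to LTP)
```

A smooth, non-monotonic function of calcium would serve equally well; the step form is used only so that the gating and sign-conversion cases are easy to read off.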

Controlling plasticity dictates key network properties

How can we understand the impacts of the diverse biological phenomena reviewed above? One answer is to interpret them in light of mathematical models of network function and learning. Many of the modulations of LTP or LTD discussed so far specifically control Hebbian synaptic change. By this we mean change at an existing synapse determined by a presynaptic factor and a postsynaptic factor. As such, formal theories of Hebbian plasticity are highly relevant, and we review them here. Then we consider how metaplasticity can be exerted by augmenting Hebbian change with so-called "third" factors. These can represent reward or attention, and can mathematically model the calcium-based or PKA, PKC, and CaMKII impacts discussed above [62, 111, 177,178,179,180]. Mathematical analyses indicate that such modulation vastly expands the universe of resulting network functions. Collectively, these considerations indicate how important network properties can be related to metaplasticity, providing an interpretive framework for the calcium signalling observations discussed above and knitting local synaptic changes into functional network ones.

Unconditional Hebbian rate theories

The primary mathematical formulations of Hebbian plasticity are rate-based and spike-based, whereby synapses change according to time-averaged activities or according to timing relations among peri-synaptic depolarizations, respectively. A diversity of rate-based theories exists, but the Bienenstock-Cooper-Munro theory (BCM) is probably the best known and best validated in neuroscience [53, 55]. The canonical spike-based theory is termed "spike-timing-dependent plasticity" (STDP) [44, 45, 48, 49, 111, 180, 181]. Both theories are based on the idea that pre-synaptic activity producing post-synaptic activity should increase synaptic efficacy, i.e., on Hebb’s postulate.

Dependence on pre- and post-synaptic activity suggests Hebbian change should respond to covariances, an intuition which is appropriate across a range of models. In the most basic rate formulations, one set of neurons (principal cells in V1, say) receives feed-forward input from another (principal cells in thalamus). The simplest synaptic changes are products Δw = yx, where w denotes synaptic efficacy ("weight"), Δw denotes a change in efficacy, y denotes post-synaptic activity, and x denotes pre-synaptic activity. When one set of neurons drives a second set like this, covariance between pre- and post-synaptic activity primarily reflects covariation within the driving set. Since the main dimension in which a collection of data varies is termed its principal component (PC), this suggests that Hebbian synaptic plasticity transforms the weights (and hence receptive fields) to reflect principal components of the data, as illustrated in Fig. 2b [182,183,184,185]. When homeostatic elements such as floating plasticity thresholds are modelled, neurons develop more complex receptive fields like Gabor filters [53, 55]. Including inhibitory competition forces neurons’ receptive fields to specialize [186, 187], and heterosynaptic competition tends to modify the properties of those fields somewhat [188]. Top-down feedback and recurrent inputs generalize these models further, and since Δw = yx has interchangeable x and y, one might expect recurrent weights to become reciprocal, which is indeed common in cortical networks [189]. Symmetric connectivity stabilizes persistent activity within recurrent sub-networks [30, 187], or recruits similarly tuned neurons to excite one another (see Fig. 2d-f). Models of visual processing based on these ideas predicted aspects of cortical stimulus selectivity and map formation, such as visual tuning properties and stronger connections between similarly tuned neurons [190,191,192,193,194,195,196,197].
Hippocampal encoding of memories in recurrent activity also essentially relies on this logic, for example [35, 198].
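The claim that Hebbian updates align weights with the principal component can be checked numerically. This is a minimal sketch, assuming a single linear neuron and zero-mean 2-D input whose dominant axis is (1, 1)/√2. Because the bare product rule Δw = yx grows without bound, we swap in Oja's normalized variant, Δw = η y (x − y w), a standard stabilized Hebbian rule that is our choice here rather than one of the specific models cited. The names `make_data` and `oja_pc1`, the learning rate, and the input statistics are hypothetical.

```python
import math
import random


def make_data(n=2000, seed=1):
    """Hypothetical 2-D inputs whose dominant axis is (1, 1)/sqrt(2)."""
    rng = random.Random(seed)
    u = (1 / math.sqrt(2), 1 / math.sqrt(2))   # high-variance direction (sd 2.0)
    v = (1 / math.sqrt(2), -1 / math.sqrt(2))  # low-variance direction (sd 0.5)
    data = []
    for _ in range(n):
        a, b = rng.gauss(0, 2.0), rng.gauss(0, 0.5)
        data.append([a * u[0] + b * v[0], a * u[1] + b * v[1]])
    return data


def oja_pc1(samples, eta=0.005, epochs=20, seed=0):
    """Hebbian learning with Oja's normalization for one linear neuron."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.5, 0.5) for _ in range(len(samples[0]))]
    for _ in range(epochs):
        for x in samples:
            y = sum(wi * xi for wi, xi in zip(w, x))  # postsynaptic rate
            # Hebbian term y*x, minus Oja's decay y^2*w that keeps |w| near 1
            w = [wi + eta * y * (xi - y * wi) for wi, xi in zip(w, x)]
    return w


w = oja_pc1(make_data())
print([round(wi, 2) for wi in w])  # approximately +/-[0.71, 0.71]
```

The learned weight vector is a "matched filter" for the dominant input direction, in the sense of Fig. 2b; its overall sign is arbitrary.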

Blue indicates less activity or reduction in efficacy, and red indicates the opposite throughout. a A feed-forward network with two stimulus presentations, "trial 1" and "trial 2". Neurons 1-4 are connected to 5 and 6, and connections to 5 are shown with arrows. Synapses are strengthened from neurons with higher-than-average activity (red) and weakened from those with lower activity (blue). b The synapse updates arranged in an array, numbered according to neurons as (To,From). The principal component of the trial-by-trial variability in a is shown, also labelled by neuron. This vector is the same as the top row of the weight updates, illustrating how the update is a "matched filter" (parallel vector) for the PC. The response of unit 5 is the sum over all pairs of elements multiplied together, i.e. the sum of the weight (5,1) times the activity in unit 1, the weight (5,2) times the activity in unit 2, etc. c RPE-modulated feature detectors extract features from subsets of the data, picking up the covariance of input-output transformations with reward, or generating matched filters for PCs that drive rewarding output activity. Such updates can perform reinforcement learning. d Recurrent network with two stimulus presentations, and weight changes under symmetric and asymmetric update rules (assuming neuron 2 fires before neuron 1, in asymmetric case). e Weight matrix updates, under the same convention as in (b). Grey diagonal elements indicate that neurons don't innervate themselves. Grey off-diagonal elements show unchanged weights. Symmetric and antisymmetric updates are generated by rate-coded Hebbian, and causal STDP rules. Nilpotent updates can occur when an anti-symmetric update can't lower a weight any further. These are linked to so-called "non-normal" dynamics, of which transient synfire chains are an example.
f Activity propagation from unit 1 (with time represented as evolving downward) if weights were as shown in (e) (and neurons turn themselves off). In the symmetric case, 1 and 2 are mutually excitatory, passing spikes back and forth. In the latter cases, 1 excites 2, but is not reciprocally excited, so activity is transient.

Theoretical work on unconditional, rate-based Hebbian plasticity mainly proceeded from these foundations by examining Hebbian interactions with other important features of biology, especially homeostasis. Naive Hebbian dynamics are unstable, with weak synapses disappearing and strong synapses often increasing indefinitely. As noted above, the BCM rule posited a floating plasticity threshold partly to resolve this issue [53, 55], but other early approaches included modelling weights as bounded or conserved in aggregate, and plasticity as a diminishing function of strength [191, 199, 200]. Rough conservation in aggregate has largely been borne out in the form of "synaptic scaling" [201, 202], and remarkably there is also evidence that local excitatory-inhibitory balance and synaptic strength are conserved on dendritic sub-domains as well [5, 11]. Plasticity of neural excitability, inhibitory plasticity, and autonomous spine fluctuation dynamics have also been identified as contributors to homeostasis, and likely also serve computational roles [14, 107, 108, 203,204,205,206,207]. Synaptic scaling appears to be crucial for maintaining correlative relations between neurons, whereas network firing rate homeostasis is more strongly impacted by excitability changes, for example [206]. Inhibitory plasticity, on the other hand, may be fundamental for controlling detailed forms of excitatory-inhibitory balance, which in turn impact a number of important network properties such as the desynchronized states associated with attention and alertness [107, 108]. Finally, unconditional Hebbian plasticity in networks can also regularize learning (i.e., make it less flexible but more targeted) or otherwise bias networks towards certain representations [196, 208,209,210,211,212]. One example of this is aiding the development of systematic representations that facilitate generalization [208].
Though it is beyond our scope to address these in detail, we suspect that biological multiplexing of different types of plasticity has signatures in calcium pathway dynamics, and that investigating these will be a key area for cross-talk between theoretical and empirical research. Ideally, results from these areas would be rationalized along with other mechanisms by reverse-engineering accounts, as has been done to some extent with stability and representation learning (e.g., [210, 213, 214]).
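The stabilizing role of a floating threshold can be illustrated with a single synapse. This is a minimal sketch, assuming a linear neuron with constant input and a threshold θ that tracks a running average of y² (one common BCM variant); the rates, the `theta_rate` parameter, and the function names are hypothetical. Under the bare product rule the weight grows exponentially, whereas the sliding threshold pulls the output to the fixed point y = θ = y², i.e. y = 1 in these units.

```python
def bcm(steps=5000, eta=0.01, theta_rate=0.1, w0=0.5, x=1.0):
    """One synapse under a BCM-style rule with a sliding threshold (assumed form)."""
    w, theta = w0, 0.0
    for _ in range(steps):
        y = w * x                                # linear postsynaptic rate
        w += eta * x * y * (y - theta)           # LTD if y < theta, LTP if y > theta
        theta += theta_rate * (y * y - theta)    # threshold tracks recent mean of y^2
    return w * x, theta


def plain_hebb(steps=5000, eta=0.01, w0=0.5, x=1.0):
    """Same synapse under the bare product rule, dw = eta * y * x."""
    w = w0
    for _ in range(steps):
        w += eta * x * (w * x)                   # no homeostatic term
    return w


y_bcm, theta = bcm()
print(round(y_bcm, 3), round(theta, 3))  # settles near y = theta = 1
print(plain_hebb() > 1e6)                # True: unbounded growth
```

The threshold is updated faster than the weight (`theta_rate` > `eta`); in this toy setting that ordering is what keeps the fixed point stable.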

Unconditional Hebbian spike theories

Spike-timing models inherit basic properties from rate-based models [200, 215]. The canonical formulation of STDP computes the time difference between every pair of spikes occurring in a connected pair of neurons, translates each into an increment or decrement of the synaptic efficacy, adds these all together, and updates the synapse. Post-synaptically, neurons can accomplish this online by maintaining an "eligibility trace" of the times at which they received input, comparing this with their own activity, and modifying their synapses accordingly [180]. Physiologically, the eligibility trace is interpreted as calcium or other intra-cellular products which are elevated by synaptic input and decay over time. Backpropagating action potentials or other retrograde signals are interpreted as communicating post-synaptic activity to the synapse. The eligibility-trace formulation immediately suggests that there may be cases in which plasticity is retroactively expressed, by converting a latent change into an expressed one, and this has been widely observed [13, 62, 147, 150, 216,217,218,219]. This retroactive expression is also important for computational models of metaplasticity and may relate considerably to empirical "consolidation" and "synaptic tag and capture" ideas, although they have been little integrated thus far (see [15, 36, 180, 220,221,222,223,224,225], for example.)
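The online, eligibility-trace formulation can be sketched in a few lines. This is a minimal pair-based version under stated assumptions: each side keeps an exponentially decaying trace (a stand-in for calcium or other intracellular products), postsynaptic spikes read out the presynaptic trace to produce LTP, and presynaptic spikes read out the postsynaptic trace to produce LTD. Amplitudes, time constants (in ms), and the name `stdp_online` are illustrative, not values from the cited studies.

```python
import math


def stdp_online(pre_spikes, post_spikes, a_plus=0.01, a_minus=0.012,
                tau_plus=20.0, tau_minus=20.0):
    """Event-driven pair-based STDP with exponential traces (illustrative)."""
    # Spikes at identical times are processed post-first (a convention of
    # this sketch), so a simultaneous pair yields depression only.
    events = sorted([(t, "pre") for t in pre_spikes] +
                    [(t, "post") for t in post_spikes])
    x_pre = x_post = 0.0  # eligibility traces, decaying between events
    t_last = None
    dw = 0.0
    for t, kind in events:
        if t_last is not None:
            x_pre *= math.exp(-(t - t_last) / tau_plus)
            x_post *= math.exp(-(t - t_last) / tau_minus)
        t_last = t
        if kind == "pre":
            dw -= a_minus * x_post  # post-before-pre: depression
            x_pre += 1.0
        else:
            dw += a_plus * x_pre    # pre-before-post: potentiation
            x_post += 1.0
    return dw


print(stdp_online([10], [15]) > 0)  # True: causal pairing potentiates
print(stdp_online([15], [10]) < 0)  # True: anti-causal pairing depresses
```

Because the traces sum contributions from all earlier spikes, this reproduces the all-to-all pairwise sum described in the text without storing spike histories.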

A more general mathematical description involves the use of Volterra expansions [200]. The net plasticity induced by a sequence of spikes in a pair of neurons can be described as application of a "functional" to the pre- and post-synaptic neurons’ spike trains. The STDP functional takes two spike-trains and returns a synaptic weight change. The weight change depends on a relation quantifying the temporal order and proximity of pairs of spikes, called a kernel. STDP is determined by convolving the kernel with one of the spike trains, then taking the inner product of the result with the other spike train. This procedure is illustrated in Fig. 3A, as are a number of different potential pairwise kernels for Volterra expansions. These descriptions are motivated by the fact that Volterra expansions express the simplest pairwise interactions, and can be naturally expanded to include higher- or lower-order interactions as well. For example, they can model spike-triplet effects [226,227,228,229] or the impacts of unpaired output spiking [200], and can also be expanded to account for other temporal variables, such as membrane voltage deviations [230, 231].
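The pairwise (second-order) term described above, convolving a kernel with the presynaptic train and taking the inner product with the postsynaptic train, can be written directly on binned spike trains. This is a minimal sketch, assuming 1 ms bins, binary trains, and an exponential STDP window with illustrative parameters; `kernel` and `stdp_functional` are hypothetical names. Passing a different `k` swaps in another window, which is the "mix and match" flexibility discussed below.

```python
import math


def kernel(tau, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Canonical STDP window (assumed values); tau = t_post - t_pre in ms."""
    if tau > 0:
        return a_plus * math.exp(-tau / tau_plus)
    if tau < 0:
        return -a_minus * math.exp(tau / tau_minus)
    return 0.0


def stdp_functional(pre, post, k=kernel):
    """pre, post: binary spike trains on a 1 ms grid (lists of 0/1)."""
    T = len(pre)
    # (K * pre)(t) = sum_s K(t - s) pre(s): the kernel convolved with the pre train
    filtered = [sum(k(t - s) * pre[s] for s in range(T)) for t in range(T)]
    # inner product of the filtered train with the post train
    return sum(post[t] * filtered[t] for t in range(T))


pre = [1 if t in (5, 40) else 0 for t in range(60)]
post = [1 if t in (12, 30) else 0 for t in range(60)]
print(stdp_functional(pre, post))
```

Expanding the two sums shows the result equals the sum of K(t_post − t_pre) over every pre/post spike pair, i.e. the same quantity the trace-based formulation computes online.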

A STDP is established empirically by inducing pre- and post-synaptic spiking and observing synapse efficacy changes. This determines a "kernel" or window function, which can be used to model plasticity. (a-i) Kernel components can theoretically be mixed and matched, and in vivo they appear to be. The vertical axis is change in synaptic efficacy, horizontal is time from presynaptic to post-synaptic spike. The canonical STDP kernel is in (c), but unipolar LTP (a), unipolar LTD (i) and anti-causal STDP (g) also appear under conditions of neuromodulation. The NE, ACh and DA-dependent plasticity discussed in text can often be regarded as specifying these kernels. Hippocampal DA appears to convert canonical STDP (c) to unipolar LTP (a), whereas hippocampal ACh appears to convert canonical STDP (c) to unipolar LTD (i) in some circumstances, for example. In some PFC synapses, DA gates unipolar potentiation (a). Cases (d), (b), (f), and (h) also seem to arise in other conditions. See [62] for a detailed review, and references in text. Note that any particular effect may be protocol dependent. B Illustration of connectivity effects. Organizing synaptic efficacies as a matrix, updates based on different kernels have different mathematical properties. Unipolar LTP or LTD are both symmetric, classic STDP and inverted STDP are both anti-symmetric, and the remaining possibilities are called nilpotent. Symmetric matrices have real eigendecompositions, meaning roughly that networks with symmetric connections produce stable recurrent activity. Anti-symmetric network weights and nilpotent weights favor transient, "moving" activity, such as synfire chains. Because activities and weights in actual networks are rectified, asymmetric updates will often be rectified to nilpotent ones, and thereby push networks to have feed-forward sub-networks.
Here, a network of three neurons with positive symmetric initial weights between neurons 2 and 3 undergoes asymmetric updates based on the classic asymmetric STDP kernel to produce unidirectional connectivity from neuron 2 to 3. C Example application of the REINFORCE algorithm, specifying a three-factor plasticity rule, to a two-neuron network. On repeated trials, activity in unit 2 dictates network performance such that being closer than average to some hypothetical target T is rewarded, and being further is punished. These outcomes generate reward prediction errors relative to average reward, both of which serve to increase the weight from unit 1 to unit 2 under the equation for dW, the change in synapse strength.

The kernel description also provides flexibility in modeling timing and order dependence. In the standard case, kernels are constructed with spike-spike interactions that decay exponentially as a function of absolute time differences. These are also causal, so that pre-before-post spiking generates LTP, and post-before-pre spiking generates LTD, as noted above. This results in plasticity that recapitulates much (but far from all) empirical synaptic data [48, 49, 232]. The kernel approach suggests, however, that these aspects might be mixed and matched, for example to model forms of plasticity under which post-before-pre spiking does not generate change, or for which both temporal orders of spiking produce LTP. Indeed, beyond the canonical form of STDP, many such alternative forms have now been described [62]. The best understood of these is probably anti-Hebbian STDP at climbing-fiber homologous synapses onto Purkinje cells in the cerebellum-like structures of weakly electric fish. These are thought to play a role in signal cancellation [233,234,235], but debates about climbing-fiber plasticity more broadly appear unresolved [236]. Anti-Hebbian STDP has also been found in neuro- and inhibition-modulated preparations [62, 237], however, and in excitatory-inhibitory plasticity [101, 103, 104]. This may be related to network stability or competitive specialization, as theoretical work has suggested for anti-Hebbian plasticity broadly [213, 214], but a general theory is lacking. The impacts of different kernels, or their associations with, for example, different network connections between cell classes, are in need of greater exploration (but see [238, 239]). This is especially true because neuromodulation can change these kernels, as discussed below.
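The link between kernel shape and the structure of the resulting weight update (Fig. 3B) can be made concrete. This is a minimal sketch, assuming each neuron fires once, every neuron is both pre- and post-synaptic to every other (no self-connections), and entry (i, j) of the update is K(t_i − t_j), the change to neuron i's synapse from neuron j. The three kernels are idealized unipolar LTP, canonical STDP with equal LTP and LTD amplitudes, and causal-only LTP; the names and the time constant are hypothetical.

```python
import math

TAU = 20.0  # window time constant in ms (assumed)


def unipolar_ltp(tau):
    return math.exp(-abs(tau) / TAU)  # both orders potentiate (even kernel)


def canonical_stdp(tau):
    if tau == 0:
        return 0.0
    # odd kernel: causal LTP, anti-causal LTD of equal size
    return math.copysign(math.exp(-abs(tau) / TAU), tau)


def causal_ltp_only(tau):
    return math.exp(-tau / TAU) if tau > 0 else 0.0  # post-before-pre: no change


def update_matrix(spike_times, k):
    """Entry (i, j): change to the synapse onto neuron i from neuron j."""
    n = len(spike_times)
    return [[0.0 if i == j else k(spike_times[i] - spike_times[j])
             for j in range(n)] for i in range(n)]


def matmul(a, b):
    return [[sum(a[i][m] * b[m][j] for m in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def classify(m):
    n = len(m)
    if all(m[i][j] == m[j][i] for i in range(n) for j in range(n)):
        return "symmetric"
    if all(m[i][j] == -m[j][i] for i in range(n) for j in range(n)):
        return "antisymmetric"
    power = m
    for _ in range(n - 1):
        power = matmul(power, m)  # power = m ** n
    if all(v == 0.0 for row in power for v in row):
        return "nilpotent"
    return "other"


times = [5.0, 0.0, 12.0]  # neuron 2 fires before neuron 1; neuron 3 fires last
for k in (unipolar_ltp, canonical_stdp, causal_ltp_only):
    print(k.__name__, classify(update_matrix(times, k)))
# unipolar_ltp symmetric
# canonical_stdp antisymmetric
# causal_ltp_only nilpotent
```

With unequal LTP and LTD amplitudes the canonical kernel no longer gives an exactly antisymmetric update, and clipping the depressed entries at zero leaves the same strictly "earlier-to-later" pattern as the causal-only kernel, which is the rectification-to-nilpotent effect noted in the Fig. 3B caption.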

At the network level, canonical STDP predicts structured timing relations among groups of neurons. In networks with significant feed-forward pathways and similar transmission delays, canonical STDP predicts the existence of "synfire chains", groups of neurons that propagate activity as volleys of synchronous action potentials [240,241,242,243,244,245,246,247,248,249,250,251]. Structured asynchronous activity generalizes this idea. Specifically, when transmission delays, refractory activity, and other elements of biology are incorporated into spiking neural network models, STDP tends to produce overlapping ensembles of co-active neurons with conserved timing. Izhikevich referred to this as "polychronization", and showed that these ensembles are essentially intermingled synfire chains with timing offsets [245]. An interesting consequence of these models is that random membrane fluctuations, which drive resting state activity, interact with STDP to randomly shrink and enlarge the pools of neurons participating in different chains [245].

Synfire and polychronization ideas have suggested a number of hypotheses about in-vivo network function. Reproducible, non-stationary activity has often been considered as a potential substrate for compositional representation and routing, for example [246, 249, 251,252,253,254]. In recurrent networks, these properties can also be used as a form of active maintenance, providing spike-based, asymmetric counterparts to the Hopfield dynamics initially proposed to model memory [241, 242, 247, 250, 255]. More generally, modelling suggests that networks subject to STDP would need to co-opt polychronizing dynamics to fulfill their functions, because they would otherwise be subject to constant plasticity-induced degradation. This is a spike-timing-based example of regularization, which we noted above for unconditional Hebbian theories, and is perhaps best understood for cases of reward-modulated STDP [179, 256,257,258,259]. When, where, and how unconditional STDP interacts with more complex forms of learning remains a major open question, however, as was the case with rate models. One straightforward question, for example, is when and where symmetric vs asymmetric network restructuring occurs (see Fig. 2b, d–f) [230, 260].

More empirically, groups of neurons with conserved timing relations (as are generally predicted with STDP rules) have been identified in various circuits. For example, auditory cortex has long been recognized to encode stimuli with precise spike timing [261, 262], and several of the ideas discussed above seem to apply there. Primary auditory L5 neurons (excitatory and inhibitory, in rats) exhibit fluctuating spontaneous activity that is highly reminiscent of polychronization [263,264,265]. These transient sequences were most highly conserved at transitions from cortical down states to cortical up states, and became less conserved the longer the transient activity persisted (as predicted by a polychronization model). Furthermore, a subset of up-states propagated as travelling waves, and local groups of neurons with conserved timing relations were activated in stereotyped order, regardless of the direction of wave travel or whether their responses were initiated by the waves at all [263]. Follow-up work found that these stereotyped synfire-like responses provided a "vocabulary" of neural activity that stimuli could elicit, with the same pattern of decaying spike-time precision over stimulus presentation time [264, 265]. The computational analysis of spiking involved in these types of investigations is statistically non-trivial, however, hampering progress [266,267,268]. Complicating the matter further, more complex situations, such as spike-phase coupling to oscillatory local field potentials, are plausibly the more common contexts in which conserved timing, and the plasticity acting on it, should be investigated (see e.g. [269,270,271,272]). Nonetheless, the asymmetric activity generated by STDP is of significant interest in computational neuroscience for generating transients of population activity, such as sequences [273,274,275], and could reasonably be expected to either generate or adapt "hidden Markov model like" neural dynamics.

Metaplasticity makes Hebbian updates conditional

Metaplasticity co-opts the plasticity discussed above, generalizing Hebbian change by making it conditional. In the simplest case this means gating plasticity, such that it only happens under certain circumstances but is unchanged in form. A more complicated situation involves both gating plasticity and modulating its sign, i.e. converting LTP to LTD or vice versa. Finally, these can be combined with alterations in the magnitude of induced changes. This perspective is useful over and above thinking about different synapses as merely having different time-, history-, or neuromodulator-dependent forms of plasticity, because it leaves the Hebbian aspect intact and allows asking what controlling it in constrained ways can accomplish.

Mathematical analyses indicate that such control vastly expands the potential uses of plasticity [27, 28]. In computational theories of neural networks, there are several classes of algorithmic learning procedures, which are categorized by the informativeness of the feedback provided to the learning algorithm [27, 28]. Supervised learning entails providing maximally informative feedback, which instructs networks with the output that should have been produced, such as when a teacher corrects the way a student reads a word. Reinforcement learning requires ordinal feedback, indicating when network outputs are better or worse, making it a form of trial-and-error learning. Unsupervised learning occurs without feedback, and network connections come to reflect statistics of the training environment. Classical Hebbian rules, such as unmodulated spike-timing-dependent or BCM plasticity, are forms of unsupervised learning. Metaplasticity that flexibly controls Hebbian updates essentially converts this into reinforcement learning based on "goals" which are implicitly defined by the modulation.

In reinforcement learning, a neural network needs to determine the gradient of some function with respect to network parameters (e.g., weights) in order to change those parameters in a way that increases or decreases the value of the function [36, 177, 276,277,278]. This function is called the "error" or the "loss", and encodes the "goal" of the network. The gradient indicates what the best possible local change of weights is for improving performance. This situation is distinguished from supervised learning by the fact that the gradient must be estimated based on scalar evaluative feedback (e.g., reward outcomes rather than specific information about what should have been produced). The behavior being optimized is referred to as a policy, and in a neural network this policy is a function of synaptic strengths [36]. Mathematically, for a set of presynaptic neurons connected to a set of post-synaptic neurons the gradient is the average of a Hebbian three-factor update involving the pre-synaptic input, the post-synaptic output, and a reward-prediction error [177, 180]. The classical algorithm demonstrating this is known as the REINFORCE algorithm (Fig. 3C) [177], and it forms the basis for a number of works that have examined reinforcement learning via synaptic plasticity since (e.g. [110, 116, 212, 276,277,278,279,280,281,282,283,284,285,286,287,288,289]).
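REINFORCE as a three-factor rule can be sketched for the two-neuron case of Fig. 3C. The assumptions are ours, for illustration: unit 1 has a constant rate x = 1, unit 2 emits a Gaussian-distributed rate y with mean w·x and fixed noise σ, reward is −(y − T)², and the reward prediction error is reward minus a running-average baseline. The update multiplies presynaptic input, the deviation of postsynaptic output from its expected value, and the RPE; for this Gaussian policy its expectation equals the gradient of expected reward with respect to w, so w drifts toward T. The function name, learning rates, and target value are hypothetical.

```python
import random


def reinforce(target=2.0, steps=8000, eta=0.005, sigma=0.5, seed=0):
    """Two-unit network (assumed setup): learn w so unit 2's output nears target."""
    rng = random.Random(seed)
    w, baseline = 0.0, 0.0
    x = 1.0                                  # presynaptic rate (unit 1)
    for _ in range(steps):
        mean = w * x
        y = rng.gauss(mean, sigma)           # noisy postsynaptic rate (unit 2)
        reward = -(y - target) ** 2          # closer to target = more reward
        rpe = reward - baseline              # third factor: reward prediction error
        # pre (x) * post deviation (y - mean) * modulator (rpe)
        w += eta * rpe * (y - mean) * x / sigma ** 2
        baseline += 0.05 * (reward - baseline)  # running-average reward
    return w


print(round(reinforce(), 1))  # close to the target of 2.0
```

Subtracting the baseline does not change the expected update, only its variance; this is why the modulator can be an RPE rather than raw reward.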

When a network’s policy gradient is the expected value of a three-factor rule, and in particular has a scalar modulatory term, it is natural to interpret it as a prescription for metaplasticity. Modulation acts to turn Hebbian plasticity up or down, on or off, or to invert it depending on feedback from the environment. From the perspective of a local network, "the environment" can include neuromodulatory signals indicating arousal, attention, surprise, or reward, for example. This is a key connection, because the modulatory term in the RL formulation manipulates the "error" that the network is trying to minimize, or "reward" being maximized. (Mathematically speaking, we are informally considering the Helmholtz-Hodge decomposition of the flow generated by a plasticity rule, with modulation defining a transformation of the terms.) By implication, converging manipulation of intracellular calcium signalling pathways, which themselves control LTP and LTD, can be intuitively thought of as defining network-level reinforcement learning problems. The "error" or "reward" in these problems is not necessarily error or reward from the standpoint of the organism, but an arbitrary function evaluation related to the implicitly defined "purpose" of the local network (although one expects consistency conditions to complicate this intuition). The minimization steps used to solve these problems are selective applications of the same Hebbian updates that would otherwise lead to feature detectors, associative memory, sequential dynamics, etc. As a result, such algorithms operate in much the same way, with the caveats that unrewarding situations are ignored, the "feature detector"- or "associative memory"-seeking updates occur conditional on positive reward, and negative reward moves networks away from such associations. This informal account has technical caveats, but describes basic REINFORCE algorithms and more recent work on surprise-modulated plasticity accurately [177, 290, 291].
(Important details and more information on this topic can also be found in [177, 259, 276, 278, 279, 292,293,294,295,296].) Before illustrating these functional ideas in a broader biological context, we pause to discuss one of the critical connections with calcium dynamics.

Considering metaplasticity as reinforcement learning hinges on the capacity for neurons to distinguish between inputs requiring different responses [36, 177, 297]. While many mechanisms might allow this, dendritic signal integration is well positioned to do so [297, 298]. Both active and passive properties of dendrites control plasticity, and dendrites support diverse electrical signals including the generation of Ca2+ spikes, Na+ spikes, and "plateau potentials" [299,300,301]. Furthermore, calcium signals can be segregated between dendrites and spines [302,303,304], GABAergic interneurons can selectively shape dendritic branch currents [305, 306], and back-propagating action potentials can be differentially attenuated on the basis of morphology or GABAergic input [4, 307]. These mechanisms collectively allow complex control of local calcium via VGCCs and NMDARs. In one highly relevant study, specifically addressing stimulus disambiguation, branch-specific Ca2+ spikes related to two different tasks were shown to be gated by somatostatin-positive interneurons (SOMs) on the apical tufts of L5 pyramidal neurons in mouse motor cortex [308]. Behavioral learning and synaptic plasticity were both found to be causally related to this segregated activity, with interference between tasks arising when SOM-based separation of signals was disrupted [308]. Moreover, reactivation during sleep specifically reinforced such branch-specific localization of plasticity, in support of learning [309]. Elsewhere, spatially controlled dendritic plasticity has been shown to support functional linking of memories [310], consistent with the hypothesis that similar mechanisms may be at play broadly. While we cannot expand on these points in further detail here, they form a key connection between biology and theory.

Summary of metaplasticity and network computation

To recap, basic theories of unconditional Hebbian plasticity qualitatively account for various phenomena. They predict that feed-forward neural pathways, such as thalamo-cortical connections, should develop feature detectors. Local recurrence, as seen between L4 or L2/3 principal neurons, should enhance bidirectional connectivity among similarly tuned neurons, insofar as plasticity reflects symmetric Hebbian rules. To the extent asymmetric rules like classical STDP govern recurrent change, synapses are expected to become more asymmetric. In the former case, recurrent connectivity should produce stable dynamics, whereas in the latter, recurrence should produce sequence-like activity. These types of dynamics have been used to model memory, central pattern generation, and directional association. The implications of non-classical STDP rules, such as those using non-traditional Volterra kernels, are less well understood. When any of these processes are subject to metaplasticity, they are expected to retain some of their fundamental features, as well as gaining new ones.
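The contrast between stable and sequence-like recurrent dynamics can be seen in a toy network, in the spirit of Fig. 2f. This is a minimal sketch, assuming synchronous binary threshold units with no self-connections (so a neuron switches off unless re-excited): a feed-forward chain, whose weight matrix is nilpotent, passes a volley along once and falls silent, while its symmetrized counterpart keeps activity reverberating. The weights, the threshold, and the helper name `run` are illustrative.

```python
def run(weights, initial, steps=10, threshold=0.5):
    """Synchronous binary threshold dynamics; weights[i][j] = to i from j."""
    state = list(initial)
    history = [state]
    for _ in range(steps):
        # a unit is active next step only if its summed input exceeds threshold
        state = [1 if sum(w * s for w, s in zip(row, state)) > threshold else 0
                 for row in weights]
        history.append(state)
    return history


chain = [[0, 0, 0],      # feed-forward (nilpotent) chain: 1 -> 2 -> 3
         [1, 0, 0],
         [0, 1, 0]]
symmetric = [[0, 1, 0],  # same connections made reciprocal
             [1, 0, 1],
             [0, 1, 0]]

print(run(chain, [1, 0, 0])[:5])      # sequence 1, 2, 3, then silence
print(run(symmetric, [1, 0, 0])[-2:])  # activity still reverberating
```

The chain reproduces the transient, synfire-like case; the symmetric network shows the persistent activity associated with symmetric Hebbian updates.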

Theoretical work on modulated plasticity indicates that it can accomplish several things. Making the unconditional forms of plasticity just discussed conditional in an all-or-none way should produce essentially unsupervised results (feature extractors, Hopfield dynamics, synfire chains), but based only on the subset of the data for which plasticity is applied. More complex modulation by third factors can convert Hebbian forms of plasticity into reinforcement learning algorithms rather than unsupervised ones; these navigate down gradients of error functions that are implicitly defined by the modulatory inputs and the details of the plasticity rule in question. Lastly, fine-grained dendritic calcium dynamics appear critical for generating targeted plasticity that can make use of these theoretical possibilities.
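The first point, that all-or-none gating yields unsupervised structure drawn only from the gated subset of the data, can be illustrated with a gated Hebbian neuron. This is a minimal sketch, assuming two interleaved input contexts with different dominant axes and a binary modulator that is on only during context A; the rule is Oja's normalized Hebbian variant, chosen here for stability rather than taken from the cited work, and all names and statistics are hypothetical. Ungated, the neuron extracts the pooled principal component (context B's axis, which carries more variance); gated, it extracts context A's.

```python
import random


def make_contexts(n=2000, seed=3):
    """Hypothetical interleaved inputs: context A varies along x, B along y."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        data.append(([rng.gauss(0, 2.0), rng.gauss(0, 0.3)], True))   # context A
        data.append(([rng.gauss(0, 0.3), rng.gauss(0, 3.0)], False))  # context B
    rng.shuffle(data)
    return data


def gated_oja(data, use_gate=True, eta=0.002, epochs=10, seed=0):
    """Oja-normalized Hebbian rule, applied only when the modulator is on."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.5, 0.5), rng.uniform(-0.5, 0.5)]
    for _ in range(epochs):
        for x, gate in data:
            if use_gate and not gate:
                continue  # modulator 'off': no plasticity on this sample
            y = w[0] * x[0] + w[1] * x[1]
            w = [wi + eta * y * (xi - y * wi) for wi, xi in zip(w, x)]
    return w


data = make_contexts()
print([round(v, 2) for v in gated_oja(data, use_gate=True)])   # near +/-[1, 0]
print([round(v, 2) for v in gated_oja(data, use_gate=False)])  # near +/-[0, 1]
```

The neuron sees the same inputs in both runs; only the gate differs, which is the sense in which modulation selects what the Hebbian rule "learns about".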

Architectural specialization and metaplasticity

To this point, we have reviewed mechanisms for controlling plasticity and the local network properties they should be related to. But the function of plasticity likely depends on the networks and brain regions in which it occurs. A straightforward hypothesis is then that network specialization and divisions of labor across brain regions determine the implicit functional objectives discussed above, and that plasticity is controlled (via Ca2+, etc.) to attain these. For example, predictive coding theories hypothesize that the purpose of sensory systems is largely to transmit unexpected sensory information for further processing [311,312,313]. In this case "minimizing sensory prediction error" might define the relevant optimization problem that plasticity is used to solve. Acetylcholine and norepinephrine, which are related to attention, arousal, and orienting to external stimuli, widely modulate sensory plasticity and may tailor it to solving this problem. In regions more closely dedicated to controlling interactions with the world, behaving in reward-maximizing ways may be the main goal [314]. The cortico-basal-ganglia-thalamic system, which plays a prominent role in action selection and reinforcement learning, appears to support this, and cortico-striatal synaptic plasticity in particular is strongly modulated by dopaminergic reward prediction errors. As we discuss below, motor-associated cortex may operate similarly. Rather than merely being passive (if specialized) substrates for learning, however, large-scale networks also route information. This changes the activity observed by plasticity mechanisms, implicitly conditioning them further. Moreover, routing is also an effect of learning, especially at "high leverage" connections, as between cortical and subcortical areas. We review examples of these ideas along with neuromodulation-as-optimization here.

Arousal, surprise, and attention modulate sensory plasticity

A well-supported hypothesis regarding ACh and NE holds that they cooperatively tune sensory cortex to adaptively improve sensory processing [315,316,317]. Behaviorally, both acetylcholine and norepinephrine are associated with arousal, and norepinephrine is also closely associated with orienting behavior [318,319,320]. Acetylcholine, furthermore, is associated with directed attention [176, 321,322,323,324]. Cortically, the need for tuning may reflect configuration trade-offs, such as between ideal cortical states for performing detection versus discrimination [325,326,327]. Of these, the ideal state for sensory detection appears to be characterized by a number of coordinated changes, including modest network depolarization, diminished endogenous LFP fluctuations, decorrelated activity across neurons, and middling cortical response amplitudes to sensory stimuli [328,329,330,331,332]. Behavioral correlates of this state include alertness, attentiveness, wakefulness, and dilated pupils, which generally co-occur with increased task accuracy, decreased response biases, shorter response times and reduced response variability [328,329,330,331,332]. Correlative and causal evidence both suggest that rapid fluctuations in acetylcholine and norepinephrine drive the tuning process producing all of these outcomes, with some evidence suggesting that NE may relatively reliably precede ACh activity as well [328,329,330,331,332].

Both NE and ACh exert local network effects directly, through neuromodulation, and indirectly, through modified network inputs, as noted above. Acetylcholine projections appear to be fairly targeted, in the sense that different basal forebrain nuclei preferentially innervate different areas, whereas norepinephrine projections appear more uniform [333, 334]. Locally, the direct effects of ACh can be further subdivided into ionotropic and metabotropic components, since nicotinic acetylcholine receptors (nAChRs) are excitatory ligand-gated ion channels, whereas muscarinic receptors (mAChRs) are G-protein coupled. These local AChR effects are often coupled with attentional recruitment of feedback projections between cortical areas [335,336,337]. No ionotropic receptors for norepinephrine have been reported, but NE can exert ionotropic-like effects, for example through β2-adrenergic coupling to Cav1.2 channels and modification of K+, H-type, and A-type currents [142]. Indirect effects also arise from the coupling of activity in the locus coeruleus (the NE projection nucleus) to basolateral amygdala neurons with widespread projections, particularly to frontal cortex and hippocampus [15, 338, 339].

Of the "optimal cortical state" effects noted above, acetylcholine is most closely linked to desynchronizing network activity. In sensory circuits, it acts locally to depolarize vasoactive intestinal peptide positive interneurons (VIPs) in L2/3 and L5a via nAChRs [340,341,342,343,344]. These inhibit somatostatin positive interneurons (SOMs) and a subset of parvalbumin positive interneurons (PVs), resulting in disinhibition of principal cells [340,341,342,343,344]. Simultaneously, mAChRs and nAChRs upregulate excitability and activity in subsets of L2/3 and L5a SOMs that are not inhibited by VIPs, as well as in the majority of SOMs in L5 [345, 346]. These SOMs target PVs and pyramidal cells (PYs), and appear to have primarily disinhibitory effects on PYs [345, 346]. In concert with these actions, mAChRs appear to upregulate the excitability of local pyramidal cells and reduce their spike-frequency adaptation [347, 348]. Which of these components are necessary and which are sufficient for decorrelating local networks is not entirely clear; studies targeting VIPs and SOMs have each independently reported sufficiency, but joint engagement certainly appears adequate.
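The VIP-to-SOM-to-pyramidal disinhibitory chain described above can be sketched as a steady-state rate model. This is a toy under stated assumptions: a purely feedforward chain of rectified-linear units, hypothetical baseline inputs and weights, a single scalar `ach` standing in for nAChR drive onto VIP cells, and no PV cells, no cholinergic facilitation of SOMs, and no recurrent excitation.

```python
def relu(x):
    """Rectified-linear rate function."""
    return max(0.0, x)


def steady_state_rates(ach, i_vip=0.5, i_som=1.0, i_py=1.0, w_som_vip=1.0, w_py_som=0.8):
    """Fixed point of a feedforward VIP -> SOM -> PY chain (all parameters hypothetical).

    ach: scalar cholinergic drive onto VIP cells (stand-in for nAChR depolarization).
    """
    vip = relu(i_vip + ach)              # ACh depolarizes VIP interneurons
    som = relu(i_som - w_som_vip * vip)  # VIP cells inhibit SOM cells
    py = relu(i_py - w_py_som * som)     # SOM cells inhibit pyramidal cells
    return {"VIP": vip, "SOM": som, "PY": py}


for ach in (0.0, 0.5, 1.0):
    print(ach, steady_state_rates(ach))
```

With these made-up numbers, raising `ach` from 0 to 1 raises the pyramidal rate from 0.6 to 1.0 because SOM output is driven to zero. Only the sign of the effect is meaningful here: two inhibitory links in series yield net excitation of principal cells.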

The functional roles of norepinephrine in modulating perceptual processing are less clear. A diverse array of excitatory, inhibitory, and modulatory effects has long been reported, with an internal logic that has been difficult to determine [320, 349, 350]. In local networks, the primary differential effects of LC activity appear to be modified signal-to-noise ratios, neural gain, neural tuning profiles, and thalamocortical information transmission [350,351,352,353]. A major source of these changes, at least in rodent somatosensory cortex, appears to be modified interactions between the ventral posteromedial nucleus of the thalamus (VPm) and the thalamic reticular nucleus (TRN) [351]. Specifically, increases in LC activity were found to drive TRN-VPm interactions via α-adrenergic receptors and T-type calcium currents, biasing thalamic relay of trigeminal ganglion information towards tonic firing rather than bursting. This significantly increased the amount of decodable stimulus information that thalamic responses preserved from trigeminal ones [351]. These findings are consistent with previous work showing similar shifts of thalamocortical activity away from bursting and towards tonic spiking with noradrenergic activation [354, 355]. More broadly, they appear consistent with the idea that NE systematically modifies neural gains to improve sensory responsiveness [350]. As such, whereas the direct effects of NE on cortical state (via local adrenergic receptors) remain somewhat murky, NE may substantially increase perceptual information transmission.
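The gain idea can be illustrated with a toy sigmoidal unit, assuming, for illustration only, that NE acts as a multiplicative gain on a neuron's input-output function. Raising the gain increases the response difference between two nearby stimuli close to threshold, one simple sense in which gain changes can improve discriminability. The function names and numbers are hypothetical, and the sketch does not model thalamic burst versus tonic firing.

```python
import math


def response(x, gain, threshold=0.0):
    """Sigmoidal firing-rate response; `gain` scales the slope around threshold."""
    return 1.0 / (1.0 + math.exp(-gain * (x - threshold)))


def discriminability(x1, x2, gain):
    """Absolute response difference for two stimuli (a crude discriminability proxy)."""
    return abs(response(x1, gain) - response(x2, gain))


# Two nearby stimuli straddling threshold, under low vs. high (hypothetical) gain.
for gain in (1.0, 5.0):
    print(gain, round(discriminability(0.1, -0.1, gain), 4))
```

Here the response difference grows from roughly 0.05 to roughly 0.24 as gain increases. The same gain increase pushes responses to inputs far from threshold toward saturation, so the benefit is confined to the region around threshold.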

Why, given these observations, should sensory plasticity be modulated by ACh and NE? One possibility is that the changes discussed above maximize the fidelity of sensory responses at precisely the time when feature processing should be most plastic. Salient, arousing stimuli that animals attend to are also those they learn about most quickly [356], and this learning should probably be based on the most highly resolved percepts possible. In fact, this information-maximizing approach to plasticity, whereby an organism tries to extract as much information as possible from important sensory inputs, can be implemented by neuromodulated Hebbian rules based on surprise [290, 291]. Such rules are REINFORCE-like algorithms, as discussed above, in which the implicit function being minimized is (Bayesian) sensory surprise, a quantity that others have also considered in the context of cholinergic modulation [357]. These surprise-modulated plasticity rules push neurons to extract independent components of their inputs (as in independent component analysis, ICA), which have a long history in theoretical work on sensory processing [358]. Isomura and Toyoizumi, however, were the first to show how synapse-local information could be used to generate this plasticity, and the resulting neurons are directly interpretable as feature extractors, per our earlier discussion. Such an account would be compatible with the observation that either high concentrations of NE or moderate concentrations of NE coupled with ACh gated the sensory plasticity discussed above, for example [135]. This could be interpreted as promoting general responsiveness of plasticity to highly arousing conditions on the one hand, and targeted responsiveness to alert, directed attention on the other.
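To make the structure of surprise-modulated Hebbian plasticity concrete, the sketch below implements a generic three-factor rule in which a global surprise signal, taken relative to a baseline, scales a synapse-local pre-times-post term. This is an illustration under stated assumptions, not the specific rule of Isomura and Toyoizumi, and on its own it is not guaranteed to perform ICA; the Gaussian surprise model, function names, and parameter values are hypothetical.

```python
import math


def gaussian_surprise(x, mu, sigma):
    """Surprise (negative log-likelihood) of observation x under a Gaussian model."""
    return 0.5 * ((x - mu) / sigma) ** 2 + math.log(sigma * math.sqrt(2.0 * math.pi))


def surprise_gated_hebbian(w, pre, post, surprise, baseline, lr=0.01):
    """Three-factor update: w[i][j] += lr * (surprise - baseline) * post[i] * pre[j].

    The modulator is global (shared by all synapses), like a diffuse
    neuromodulatory signal; the Hebbian term is synapse-local.
    """
    modulator = surprise - baseline
    return [
        [w_ij + lr * modulator * post_i * pre_j for w_ij, pre_j in zip(row, pre)]
        for row, post_i in zip(w, post)
    ]


# One update for a single postsynaptic neuron with two inputs.
s = gaussian_surprise(3.0, mu=0.0, sigma=1.0)  # an unexpected observation
w_new = surprise_gated_hebbian([[0.0, 0.0]], pre=[1.0, 2.0], post=[0.5],
                               surprise=s, baseline=1.0, lr=0.1)
print(round(s, 3), w_new)
```

When surprise equals its baseline the weights do not change, and unsurprising (below-baseline) events reverse the sign of the update. This gating is what lets a single diffuse signal decide when local Hebbian terms are written into synapses.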

Dopamine based reinforcement learning adapts behavior

Reward-prediction-error-based modulation plays an especially plausible role in instantiating reinforcement learning algorithms at synapses onto neurons that control behavioral output [36, 177]. RL algorithms maximize reward attainment by using RPEs to improve action selection. Given the RPE theory of dopamine, this suggests that plasticity in the striatum, the motor cortex, and the cerebellum, along with pyramidal tract neurons across cortex, should be especially likely to exhibit dopaminergic modulation, because each affects motor behavior fairly directly. Striatum and frontal cortex, including motor cortex, express high densities of DARs, as noted above, and both cortico-striatal synapses and L5 pyramidal neurons in mPFC have been shown to exhibit dopamine-dependent plasticity [13, 114, 130, 133]. DAR expression has also been established in some areas of the cerebellum and in L5 throughout cortex, but these findings have received less attention.
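For reference, the sketch below shows the kind of simple policy-gradient learner referred to here (REINFORCE with a reward baseline), in which a reward prediction error (reward minus a running baseline) multiplies the gradient of the log-probability of the chosen action. The two-action task, reward function, and learning rates are hypothetical, and the baseline is a running average reward rather than a learned state-value critic.

```python
import math
import random


def softmax(prefs):
    """Numerically stable softmax over action preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]


def reinforce_step(prefs, baseline, reward_fn, rng, lr=0.1, baseline_lr=0.1):
    """One REINFORCE update with a reward baseline for a softmax policy."""
    probs = softmax(prefs)
    action = rng.choices(range(len(prefs)), weights=probs)[0]
    reward = reward_fn(action)
    rpe = reward - baseline  # reward prediction error relative to the baseline
    # d log pi(action) / d prefs[k] = 1[k == action] - probs[k]
    new_prefs = [
        p + lr * rpe * ((1.0 if k == action else 0.0) - probs[k])
        for k, p in enumerate(prefs)
    ]
    new_baseline = baseline + baseline_lr * rpe
    return new_prefs, new_baseline


# Hypothetical two-armed task: action 1 always pays 1, action 0 pays nothing.
rng = random.Random(0)
prefs, baseline = [0.0, 0.0], 0.0
for _ in range(500):
    prefs, baseline = reinforce_step(prefs, baseline, lambda a: 1.0 if a == 1 else 0.0, rng)
print(softmax(prefs))
```

Note that a single scalar error signal drives all preference changes; in this simple scheme there is no separate pathway for learning from worse-than-expected outcomes, which is the contrast drawn with cortico-striatal learning below.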

The cortico-striatal dopamine modulation discussed above has been integrated into neural network models of reinforcement learning [110, 280]. A major feature of cortico-striatal learning that distinguishes it from the simple policy gradient algorithms discussed so far (such as REINFORCE) is that the D1-MSN and D2-MSN pathways both operate as reinforcement learners. Furthermore, dopamine modulates both the extent of learning and the extent to which each pathway affects behavior (Fig. 4c–e). The Opponent Actor Learning (OpAL) model [287], which summarizes the computations of such networks, is an "actor-critic" model, meaning that it represents behavioral policies separately from assessments of how valuable states of the world are [36]. The ventral striatum (with associated cortical and subcortical areas) is hypothesized to track how rewarding states of the world are, and these estimates are compared with the outcomes of behavior to generate reward prediction errors (Fig. 4d). Cortico-striatal connections in the D1-MSN and D2-MSN pathways determine action selection. Notably, while these pathways are classically thought to have opposite effects on behavior, incorporating neuromodulated, activity-dependent plasticity rules renders them non-redundant. Indeed, plasticity rules that mimic those described in vitro above [114] give rise to specialized representations, whereby D1 MSNs discriminate between rewarding outcomes, while D2 MSNs specialize in developing a high-resolution policy for avoiding poor ones [287]. Many studies confirm the necessity and sufficiency of these opponent pathways (and synaptic transmission therein) for learning from positive and negative reward outcomes, respectively (e.g., [115, 359, 360]). At the intracellular level, this work implicates PKA, cAMP, and DARPP-32 in reinforcement learning [361], and genetic variation in such signaling predicts behavioral learning (including in humans, reviewed in [362]).
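A compact sketch of OpAL-style updates, following the structure described above: a critic value V yields a prediction error δ, Go weights change multiplicatively with δ and NoGo weights with −δ, and choice depends on the gain-weighted difference between the two. The class and parameter names, the initialization of G and N at 1, the single-state bandit setting, and the use of one parameter `rho` to stand in for tonic dopamine (β_G = β(1 + ρ), β_N = β(1 − ρ)) are assumptions for illustration, not necessarily the exact parameterization in [287].

```python
import math


class OpALAgent:
    """Minimal opponent actor learning sketch for a single-state bandit."""

    def __init__(self, n_actions, alpha_c=0.1, alpha_g=0.1, alpha_n=0.1, beta=1.0, rho=0.0):
        self.v = 0.0                  # critic: expected reward
        self.g = [1.0] * n_actions    # Go (D1) actor weights
        self.n = [1.0] * n_actions    # NoGo (D2) actor weights
        self.alpha_c, self.alpha_g, self.alpha_n = alpha_c, alpha_g, alpha_n
        # Hypothetical tonic-dopamine knob: rho > 0 favors Go, rho < 0 favors NoGo.
        self.beta_g = beta * (1.0 + rho)
        self.beta_n = beta * (1.0 - rho)

    def action_probs(self):
        """Softmax over Go-minus-NoGo action values."""
        act = [self.beta_g * g - self.beta_n * n for g, n in zip(self.g, self.n)]
        m = max(act)
        exps = [math.exp(a - m) for a in act]
        z = sum(exps)
        return [e / z for e in exps]

    def update(self, action, reward):
        """Critic and opponent actor updates; returns the prediction error."""
        delta = reward - self.v
        self.v += self.alpha_c * delta
        # Multiplicative (Hebbian-like) updates driven by opposite signs of delta.
        self.g[action] += self.alpha_g * self.g[action] * delta
        self.n[action] += self.alpha_n * self.n[action] * (-delta)
        return delta


agent = OpALAgent(n_actions=2)
delta = agent.update(action=0, reward=1.0)  # a better-than-expected outcome
print(delta, agent.g, agent.n, agent.action_probs())
```

Because G and N change multiplicatively, repeatedly rewarded actions accumulate large Go weights while repeatedly punished ones accumulate large NoGo weights; the β weights then determine which of these learned distinctions dominates choice.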

a Striatal medium spiny neuron plasticity and excitability depend on DA, ACh, adenosine, and NMDARs. Balance between the two pathways is mediated by DA. Figure follows Shen et al. 2008. b Cortico-basal-ganglia loops connect the cortex to the striatum recurrently and hierarchically. Figure follows Obeso et al. 2014. c Internal detail of a single loop, showing dual pathways from striatal D1- and D2-expressing neurons to different output structures along the direct and indirect pathways. D1 MSNs largely reside in the "Go" pathway, D2 MSNs in the "NoGo" pathway. GP denotes globus pallidus. d The Opponent Actor Learning model, which captures cortico-striatal contributions to reinforcement learning computationally. See text for details. e When dopamine is high, the biological details of the model recapitulate empirically observed enhancements in learning to pick the best among good options. When DA is low, the model recapitulates an enhanced ability to avoid bad options. These effects arise from the Hebbian, reward-prediction-error-modulated strengthening of Go and NoGo pathway MSNs: $\Delta G \propto G\,\delta$ and $\Delta N \propto -N\,\delta$, where $\delta$ is the reward prediction error. f Pathological feedback of low DA on enhanced NoGo learning.

Interestingly, DA-based plasticity in MSNs is further gated by ACh, which is released by tonically active striatal interneurons (TANs) [117, 118]. In theoretical models, this TAN gating of RPEs allows striatal learning rates to be adapted online according to uncertainty and reward volatility, a form of metaplasticity that resembles Bayesian learning [116]. Moreover, the plasticity that RPEs induce at a striatal medium spiny neuron is further tuned by the time delay between glutamatergic and dopaminergic activity at a given spine [13]. As such, spatiotemporal dynamics of dopamine signaling across the striatum, in the form of traveling waves, can support a form of credit assignment (that is, appropriately targeted plasticity) to the striatal region most relevant to the current behavioral context [113]. Beyond the evidence that opponent D1/D2 pathways mediate learning, recent theoretical work suggests that this metaplasticity scheme is adaptive, providing a lever by which online ("tonic") dopamine levels can rapidly re-weight the contributions of D1 or D2 MSNs to action selection, depending on which population is better suited to the task environment [363].
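Uncertainty- and volatility-dependent learning rates can be illustrated with a scalar Kalman filter tracking a drifting reward mean, a standard Bayesian benchmark. This is a swapped-in textbook technique for intuition, not the TAN model of [116]; the function names, the random-walk assumption, and the noise values are hypothetical. The gain `k` plays the role of a learning rate applied to the prediction error.

```python
def kalman_step(mean, var, reward, volatility, noise):
    """One Bayesian update of a reward estimate under a random-walk model.

    volatility: assumed variance of trial-to-trial drift in the true reward mean.
    noise: assumed variance of reward outcomes around that mean.
    Returns the updated mean, updated variance, and the effective learning rate.
    """
    var_pred = var + volatility        # uncertainty grows between trials
    k = var_pred / (var_pred + noise)  # adaptive learning rate (Kalman gain)
    rpe = reward - mean                # prediction error
    return mean + k * rpe, (1.0 - k) * var_pred, k


def steady_state_gain(volatility, noise, steps=500):
    """Iterate the variance recursion (independent of rewards) to its fixed point."""
    mean, var, k = 0.0, 1.0, 0.0
    for _ in range(steps):
        mean, var, k = kalman_step(mean, var, 0.0, volatility, noise)
    return k


for vol in (0.01, 0.5):
    print(vol, round(steady_state_gain(vol, noise=1.0), 3))
```

With these assumed values the asymptotic learning rate is roughly 0.1 in a stable environment and 0.5 in a volatile one, so the same prediction error produces larger updates when the world is changing. Adjusting the learning rate in this way is itself a form of metaplasticity.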

Finally, outside of striatal circuits, the reward prediction error theory of dopamine may also accord with the hypothesis that frontal cortex is an evolutionary expansion (and generalization) of motor cortex [364, 365]. From this perspective, DARs would be expected to be widespread in frontal cortex (as they are), because learning to take rewarding actions is the typical goal of reward-based reinforcement learning. Cortical L5 generally contains both intratelencephalic (IT) and pyramidal tract (PT) neurons, the former projecting to cortex and striatum and the latter to thalamic and lower motor regions [366]. Both classes of neuron are poorly understood in general, but the L5 pyramidal tract of motor cortex disynaptically controls voluntary muscle contraction [366]. A simple hypothesis is therefore that DA-ergic gating of L2/3 to L5 synapses potentiates cortical motor pathways involved in generating subsequent unpredicted reward. This may be in line with the observation that dopaminergic innervation of forelimb-associated cortex is substantially stronger than that of hindlimb-associated cortex in rodents, for example [367], and potentially with evidence that dopamine is required for motor learning in M1 [368, 369]. However, the finding that DAR mRNA is present in corticocortical, corticothalamic, and corticostriatal neurons, but not in corticospinal or corticopontine ones, complicates this idea [370].

Metaplasticity via activity routing within and across networks