THE PAPER IN BRIEF

• The brain offers ample inspiration for computer engineers, but such ‘neuromorphic’ devices can be disadvantaged by huge power consumption, limited endurance and considerable variability.

• One of the challenges associated with optimizing these devices involves ascertaining which brain characteristics to emulate.

• In a paper in Nature, Yan et al.1 report a type of synaptic transistor — a device named after its similarities to neural connections known as synapses — that maximizes performance through a ratcheting mechanism that is reminiscent of how neurons strengthen their synapses.

• The transistor could enable energy-efficient artificial-intelligence algorithms, and reproduce some of the many sophisticated behaviours of the brain.

FRANK H. L. KOPPENS: A new twist on synaptic transistors

At the heart of Yan and colleagues’ innovation lies the unusual behaviour of electrons that arises when materials of single-atom thickness are stacked together and then twisted relative to each other. The materials in question are bilayer graphene, which comprises two stacked layers of carbon atoms, sandwiched between two layers of the dielectric material hexagonal boron nitride (hBN)2. Both of these materials have hexagonal crystal structures, but the spacing between their atoms differs slightly. The overlapping hexagonal patterns create regions of constructive and destructive interference, resulting in a larger-scale pattern known as a moiré lattice.
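
As a rough illustration of how a slight difference in atomic spacing produces a much larger pattern, the sketch below evaluates the standard geometric expression for the moiré period of two overlaid hexagonal lattices. The graphene lattice constant (about 0.246 nm), the mismatch with hBN (about 1.8%) and the function name `moire_wavelength_nm` are assumptions introduced here for illustration; they are not values taken from Yan and colleagues' paper.

```python
import math

def moire_wavelength_nm(twist_deg, a_nm=0.246, mismatch=0.018):
    """Moiré period of two hexagonal lattices with a relative mismatch and twist.

    Uses lambda = (1 + d) * a / sqrt(2 * (1 + d) * (1 - cos(theta)) + d**2),
    where d is the fractional lattice mismatch and theta the twist angle.
    The default a_nm and mismatch are assumed textbook-style values.
    """
    theta = math.radians(twist_deg)
    d = mismatch
    return (1 + d) * a_nm / math.sqrt(2 * (1 + d) * (1 - math.cos(theta)) + d ** 2)

aligned = moire_wavelength_nm(0.0)   # crystal axes aligned
twisted = moire_wavelength_nm(5.0)   # rotated by a few degrees
print(f"aligned: {aligned:.1f} nm, twisted by 5 degrees: {twisted:.1f} nm")
```

Under these assumed numbers, the aligned case gives a period tens of times longer than the atomic spacing, and rotating the layers by a few degrees shortens it sharply.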

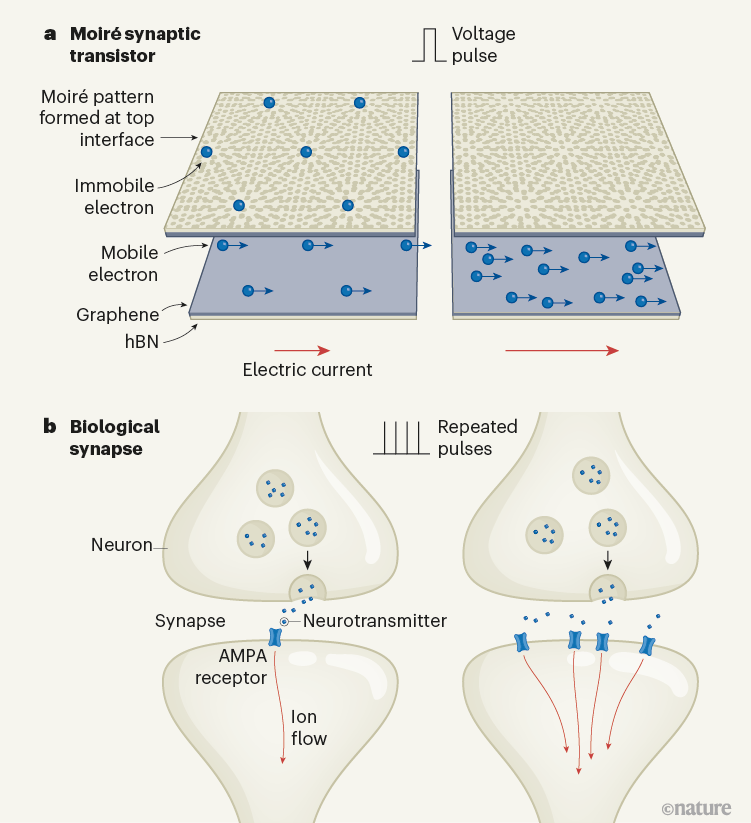

The moiré pattern modifies how electrons are distributed in bilayer graphene: it localizes them periodically throughout the crystal lattice (Fig. 1a). Electrons in the top layer of graphene are affected more by this periodic modulation because the crystal structure of this layer is aligned with that of the hBN above it, and this essentially makes the electrons immobile. By contrast, the hBN below the bottom graphene layer is rotated out of alignment with the graphene, resulting in a weaker electronic modulation3. The electrons in this layer are therefore mobile, and they contribute to the current flow.

Figure 1 | A transistor that imitates a biological synapse. Yan et al.1 built a device comprising two layers of graphene (each a single sheet of carbon atoms) and the dielectric material hexagonal boron nitride (hBN). a, The device is called a moiré synaptic transistor, because it shares similarities with synaptic connections between neurons, and because a ‘moiré’ pattern forms between the overlapping hexagonal crystal structures of the top layer of graphene and hBN. This pattern localizes electrons in the top graphene layer, but those in the bottom layer remain mobile. Applying a voltage pulse to the top gate (a component that regulates the number of electrons in the graphene system; not shown) results in a ratchet effect, through which an electric current is increased with successive pulses. b, This effect is reminiscent of the way in which repeated electrical stimulation can strengthen synapses by enriching protein complexes called AMPA receptors, leading to improved neurotransmitter efficacy and increased ion flow.

This asymmetry between layers makes the transistor function like a kind of ratchet, controlling the flow of mobile electrons and regulating the device’s electrical conductance, which is analogous to synaptic strength. The ratchet is controlled by two ‘gates’ above and below the structure, which regulate the number of electrons in the graphene system. When a voltage pulse is applied to the top gate, the initial rise in voltage adds immobile electrons to the top graphene layer. Once the electron energy levels in this layer are filled, mobile electrons are added to the bottom graphene layer. A subsequent decrease in voltage removes electrons from the top graphene layer, but the mobile electrons in the bottom layer remain. In this way, the voltage pulse changes the conductance in a manner that is reminiscent of the strengthening of a synaptic connection.
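
The sequence just described can be followed with a small bookkeeping sketch. It is a toy model under stated assumptions, not the device physics: gate-voltage changes are expressed directly as amounts of charge, the top layer is given a fixed hypothetical capacity, and the names `Layers` and `apply_gate_step` are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Layers:
    top: float     # immobile (localized) charge in the top graphene layer
    bottom: float  # mobile charge in the bottom layer; stands in for conductance

def apply_gate_step(state, delta, top_capacity):
    """Apply one gate change, given in charge units (a simplifying assumption).

    Rising edge: the top layer fills first, and any overflow goes to the bottom.
    Falling edge: the top layer empties first, and the bottom layer is touched
    only if the top layer runs out.
    """
    if delta >= 0:
        to_top = min(delta, top_capacity - state.top)
        return Layers(state.top + to_top, state.bottom + (delta - to_top))
    removal = -delta
    from_top = min(removal, state.top)
    from_bottom = min(removal - from_top, state.bottom)
    return Layers(state.top - from_top, state.bottom - from_bottom)

start = Layers(top=2.0, bottom=0.0)
peak = apply_gate_step(start, +3.0, top_capacity=4.0)   # rising edge of the pulse
after = apply_gate_step(peak, -3.0, top_capacity=4.0)   # falling edge of the pulse
print(start, peak, after)
```

In this toy, the mobile charge gained on the rising edge is still present after the falling edge, because the removal is taken from the top layer. The sketch covers a single pulse only and does not attempt to reproduce how the real device accumulates conductance over successive pulses.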

Read the paper: Moiré synaptic transistor with room-temperature neuromorphic functionality

What sets Yan and co-workers’ moiré device apart from existing synaptic transistors is that it can be easily tuned, a feature that shares similarities with synaptic behaviour observed in biological neural networks. This makes the transistor ideally suited to advanced artificial intelligence (AI) applications, particularly those involving ‘compute-in-memory’ designs that integrate processing circuitry directly into the memory array, to maximize energy efficiency. It could also allow information to be processed on devices located at the edge of a network, rather than in a centralized data centre, thereby enhancing the security and privacy of data.
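
To make the compute-in-memory idea concrete, the sketch below models an idealized crossbar array, a common arrangement in which each stored conductance acts as a weight and the array itself performs a matrix-vector multiplication. The crossbar layout, the function name `crossbar_output_currents` and the numbers are assumptions chosen for illustration; the text above does not specify an array design.

```python
def crossbar_output_currents(conductances, input_voltages):
    """Column currents of an idealized crossbar: I_j = sum_i V_i * G[i][j].

    conductances[i][j] is the device joining input row i to output column j.
    Wire resistance, noise and device nonlinearity are ignored (an assumption).
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(input_voltages, conductances):
        for j, g in enumerate(row):
            currents[j] += v * g  # Ohm's law per device, summed along the column
    return currents

# Hypothetical 3 x 2 array: conductances in microsiemens, inputs in volts.
G = [[1.0, 2.0],
     [0.5, 0.0],
     [2.0, 1.0]]
V = [0.1, 0.2, 0.0]
I = crossbar_output_currents(G, V)  # output currents in microamps
print(I)
```

Because the multiply-and-add happens where the weights are stored, no weight has to be fetched from a separate memory, which is the source of the energy saving that such designs aim for.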

Although the authors’ transistor represents an important leap forwards, it is not without its limitations. For instance, stacking the ultrathin materials requires sophisticated fabrication processes, which makes it challenging to scale up the technology for widespread industrial use. On a positive note, there are already methods for growing large-area bilayer graphene4 and hBN5, up to the typical 200- or 300-millimetre sizes used in the silicon industry. This sets the stage for an ambitious, yet timely, endeavour: the fully automated robotic assembly of large-area moiré materials.

If accomplished, this would make Yan and colleagues’ device easier to fabricate, and unlock other moiré-material innovations, such as quantum sensors, non-volatile computer memories and energy-storage devices. It would also bring us closer to integrating moiré synaptic transistors into larger, more complex neural networks — a crucial step towards realizing the full potential of these devices in real-world applications.

JAMES B. AIMONE & FRANCES S. CHANCE: Capturing the brain’s functionality

Yan and co-workers’ advance addresses a long-standing challenge at the intersection of neuroscience and computing: identifying which biophysical features of the immensely complicated brain are necessary for achieving functional neuromorphic computing, and which can be ignored. The authors have succeeded in emulating a characteristic of the brain that is particularly difficult to realize — its synaptic plasticity, which describes neurons’ ability to control the strength of their synaptic connections.

Existing synaptic transistors can be connected together in grid-like architectures that mimic neural networks. But dynamically reprogramming most of these devices remains unreliable or expensive, whereas the brain’s synapses can adapt reliably and robustly over time. Moreover, even if biological mechanisms of synaptic plasticity can be implemented in an artificial system, it remains unclear how to leverage these mechanisms to realize algorithms that can learn like biological systems do.

The authors’ moiré synaptic transistor brings the flexibility and control necessary for brain-like synaptic learning by providing a powerful way to tune its electrical conductance — a proxy for synaptic strength. The device’s asymmetric charge-transfer mechanism is reminiscent of processes known as long-term potentiation and long-term depression, in which pulsed electrical stimulation has the effect of strengthening a synapse (or weakening it, in the case of depression). The ratcheting of charge carriers can be considered analogous to the enrichment of protein complexes, known as AMPA receptors, at synapses during long-term potentiation and long-term depression6 (Fig. 1b).

Inspired by observed behaviours in biological synapses, Yan et al. showed that their device could be used to train neuromorphic circuits in a more ‘brain-like’ way than has previously been achieved with artificial synapse devices. Although the two gates in the moiré synaptic transistor could be used in a simple manner to fine-tune synaptic strength (or electrical conductance) directly, in biology, the control of synaptic learning is more nuanced. The authors recognized that aspects of this finer control could also be realized in their device.

Specifically, Yan et al. were able to tune the top and bottom gate voltages to make their moiré synaptic transistor exhibit input-specific adaptation, which is a phenomenon that allows a neuron to control its synaptic learning rates in response to averaged input. This mechanism is used when the eye is deprived (of adequate lighting, for example) to help the brain recall a stored pattern when presented with a similar one.

The authors’ moiré synaptic transistor could emulate this mechanism when programmed with a learning rule known as the Bienenstock–Cooper–Munro (BCM) model7, which sets a dynamically updated threshold for strengthening or weakening a synapse that depends on the neuron’s history. The BCM rule is an abstract algorithmic description of synaptic plasticity in the brain that has been connected to cognitive behaviours. By demonstrating that their device can implement this rule, Yan et al. have offered a pathway to recreating biorealistic plasticity in human-made hardware.
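
For readers unfamiliar with the BCM rule, a minimal sketch of one common textbook form is given below for a single input. The learning rate `lr`, the threshold time constant `tau` and the function name `bcm_step` are illustrative assumptions, and the sketch says nothing about how Yan et al. mapped the rule onto gate voltages.

```python
def bcm_step(w, x, theta, lr=0.01, tau=10.0):
    """One Euler step of a single-input BCM rule (textbook form, assumed here).

    y = w * x                       postsynaptic activity
    dw = lr * x * y * (y - theta)   strengthen if y > theta, weaken if y < theta
    theta relaxes towards y**2      sliding threshold set by recent activity
    """
    y = w * x
    w_new = w + lr * x * y * (y - theta)
    theta_new = theta + (y * y - theta) / tau
    return w_new, theta_new

# Same input and weight, but different activity histories (thresholds).
w_up, theta_up = bcm_step(w=1.0, x=1.0, theta=0.5)    # activity above threshold
w_down, theta_down = bcm_step(w=1.0, x=1.0, theta=2.0)  # activity below threshold
print(w_up, w_down)
```

The same stimulus strengthens the synapse in one case and weakens it in the other, which is the history dependence that the sliding threshold is meant to capture.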

Their work provides an opportunity for the BCM learning rule to act as a Rosetta Stone between theoretical neuroscience (much of which is based on BCM and similar models) and state-of-the-art neuromorphic computing. For example, the authors’ ingenious dual-gate control could be used to realize synaptic plasticity in the vestibulo-ocular reflex, the mechanism that stabilizes images on the retina as the head moves8. It will be interesting to see what other models of plasticity can be expressed, such as spike-timing dependent plasticity, in which the strengthening of a synapse is dependent on the timing of stimulation9.
