Abstract

Purpose:

There is uncertainty about when personalized medicine tests provide economic value. We assessed evidence on the economic value of personalized medicine tests and gaps in the evidence base.

Methods:

We created a unique evidence base by linking data on published cost–utility analyses from the Tufts Cost-Effectiveness Analysis Registry with data measuring test characteristics and reflecting where value analyses may be most needed: (i) tests currently available or in advanced development, (ii) tests for drugs with Food and Drug Administration labels with genetic information, (iii) tests with demonstrated or likely clinical utility, (iv) tests for conditions with high mortality, and (v) tests for conditions with high expenditures.

Results:

We identified 59 cost–utility analysis studies that examined personalized medicine tests (1998–2011). A majority (72%) of the cost/quality-adjusted life year ratios indicate that testing provides better health although at higher cost, with almost half of the ratios falling below $50,000 per quality-adjusted life year gained. One-fifth of the results indicate that tests may save money.

Conclusion:

Many personalized medicine tests have been found to be relatively cost-effective, although fewer have been found to be cost saving, and many available or emerging personalized medicine tests have not been evaluated. More evidence on value will be needed to inform decision making and assessment of genomic priorities.

Genet Med 2014:16(3):251–257

Introduction

Personalized medicine is increasingly being developed and used in clinical care, and thus the need to assess its value is inescapable. There is much debate and uncertainty on which personalized medicine tests provide economic value and how to balance the need for innovative new technologies with affordability. A recent editorial in the Journal of the American Medical Association noted that genomics has the potential to “bend the cost curve” by ensuring that the most effective treatment is used in the most appropriate patients—but that it is “too soon to know the extent of this potential benefit.”1 Decision makers and stakeholders need information on which tests provide relatively higher value in order to make appropriate decisions about where to invest efforts in development and adoption. These issues have recently emerged to the fore; for example, the National Institutes of Health has made the determination of genomic priorities and clinical actionability of genetic variants a high priority in a $14 million Funding Opportunity Announcement.2

Our objective is to assess available evidence on the economic value of personalized medicine testing, the gaps to address in the future, and possible approaches to filling those gaps. We measure value as cost-effectiveness, where the outcome of interest is the incremental impact on quality-adjusted life years (QALYs). This type of analysis, known as cost–utility analysis (CUA), is widely used and permits comparisons across diverse interventions, and there are sufficient numbers of such studies to conduct systematic analyses. Cost-effectiveness is only one input into decision making, but it is critical for stakeholders and decision makers to have information on the benefits and costs of technologies in order to make appropriate decisions such as where to invest in research, what technologies should be fast-tracked, and how to choose the most appropriate technology when multiple alternatives are available. Furthermore, it is important to use cost-effectiveness analysis (CEA) not only to evaluate current technologies but also to assess the potential value of emerging technologies in order to have information before decisions are made about their adoption.
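As a brief illustration of the cost per QALY measure described above, the following sketch computes an incremental ratio for a testing strategy versus a comparator. The function name and all numbers are hypothetical and are not drawn from any study in the registry.

```python
def cost_per_qaly(cost_new, cost_old, qaly_new, qaly_old):
    """Incremental cost per QALY gained for a new strategy versus a comparator."""
    delta_cost = cost_new - cost_old   # added cost of the new strategy (dollars)
    delta_qaly = qaly_new - qaly_old   # added quality-adjusted life years
    if delta_qaly == 0:
        raise ValueError("no difference in QALYs; the ratio is undefined")
    return delta_cost / delta_qaly


# Hypothetical example: testing adds $5,000 in cost and 0.25 QALYs per patient.
ratio = cost_per_qaly(15_000, 10_000, 8.5, 8.25)
print(f"${ratio:,.0f} per QALY gained")
```

A ratio computed this way is only interpretable when the new strategy yields more QALYs at higher cost; cost-saving or dominated strategies are usually reported as categories rather than as ratios.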

Our study adds to the existing literature by using data from a systematic registry of CEAs and linking these studies to published data sources that enable us to examine test characteristics and gaps in the evidence base. We used the most comprehensive and recent source of CEAs available, the Tufts Cost-Effectiveness Analysis Registry (CEAR). Because this registry compiles extensive data on each study using a systematic process and trained reviewers, it provides more valid and reproducible results than identifying and coding studies de novo. This registry, which covers studies published since 1976, has been used as a data source for almost 50 publications, including those in high-profile journals such as the Journal of the American Medical Association, the New England Journal of Medicine, and Health Affairs.3

Materials and Methods

Overview

No comprehensive data source includes all personalized medicine tests, all economic analyses of such tests, or both CEAs and test characteristics (Box 1). Therefore, we created a unique evidence base by linking multiple, best available published sources (Supplementary Data online). We chose measures based on our conceptual framework, which posits that analyses are needed of the personalized medicine tests that are available or soon to be available (i.e., clinically available or in advanced development; with demonstrated or potential clinical utility; where US Food and Drug Administration (FDA) drug labels include genetic information), and for conditions with high health burden (i.e., conditions with high mortality or health expenditures). There are few published sources of information on actual use of tests,4,5 and thus we could only assess whether tests were available for clinical use.

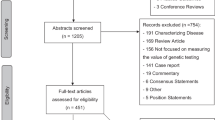

Figure 1 summarizes how we selected studies from the Tufts CEAR and linked these to five data sources measuring test characteristics and where value analyses may be most needed (details below).

Data sources

The CEAR is a comprehensive database abstracting and aggregating information on over 3,000 original, English-language, health-related CUAs published between 1976 and 2011 and indexed in PubMed (Supplementary Data online).6,7 CEAR data were used to assess the characteristics of published CUAs and then were linked to other data sources and measures to assess the availability of evidence on the cost-effectiveness of personalized medicine tests and gaps in the evidence base.

Tests available for clinical use or in advanced development. We identified tests that are clinically available or in advanced development from the Centers for Disease Control and Prevention’s (CDC) GAPP Finder website, a continuously updated, searchable database of genetic tests.8 We matched CUAs based on GAPP Finder information on disease, test, target population, trade name, and intended use.

Tests with FDA labels with genetic information. We identified tests for which there is an FDA label with genetic information from the FDA’s ongoing list of pharmacogenomic markers in drug labels.9 We matched CUAs with the labels if the CUA examined both the biomarker and the drug listed on the label.

Tests with demonstrated or likely clinical utility. We identified tests with “demonstrated” or “likely” clinical utility using the categories developed by the CDC.10,11 Tests with “demonstrated” clinical utility are those recommended by evidence-based panels. Tests with “likely” clinical utility have demonstrated analytic and clinical validity and hold promise for clinical utility, but evidence-based panels have not examined their use or have found insufficient evidence for their use. Note that several tests in the latter category are already in widespread use, e.g., gene expression–profiling tests for breast cancer. We matched CUAs based on information from the CDC website on test/application and scenario.

Tests examining conditions with high mortality or expenditures. We used two measures of health burden, similar to other such studies.12 We matched CUAs to measures of health burden using the condition name. We used PubMed to identify any conditions with high health burden that have relatively few genetic causes and excluded those from our analyses.

- Top causes of mortality (United States). From among the top 10 causes of mortality reported in the most recent National Vital Statistics reports,13 we used the top eight: heart disease; malignant neoplasms; chronic lower respiratory diseases; cerebrovascular diseases; Alzheimer disease; diabetes mellitus; nephritis, nephrotic syndrome, and nephrosis; and influenza and pneumonia. We excluded accidents and suicides as being generally unrelated to genetics.

- High-expenditure conditions (United States). From among the top 10 highest-expenditure conditions reported in the most recent Agency for Healthcare Research and Quality report,14 we used the top eight: heart conditions; cancer; mental disorders; chronic obstructive pulmonary disease/asthma; osteoarthritis and other joint disorders; hypertension; diabetes mellitus; and hyperlipidemia. We excluded trauma and back problems as generally being unrelated to genetics.
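The linkage of CUAs to health-burden categories by condition name can be sketched as follows. This is a minimal illustration only: the crosswalk entries, study identifiers, and function names are hypothetical, and the actual matching in the study may have relied on manual review rather than string lookup.

```python
from collections import defaultdict

# Top causes of mortality listed above (accidents and suicides excluded).
HIGH_MORTALITY = [
    "heart disease", "malignant neoplasms", "chronic lower respiratory diseases",
    "cerebrovascular diseases", "alzheimer disease", "diabetes mellitus",
    "nephritis, nephrotic syndrome, and nephrosis", "influenza and pneumonia",
]

# Hypothetical crosswalk from a CUA's disease label to a burden category.
CROSSWALK = {
    "breast cancer": "malignant neoplasms",
    "colorectal cancer": "malignant neoplasms",
    "atrial fibrillation": "heart disease",
}


def link_cuas(cuas, crosswalk, categories):
    """Group study ids by burden category; unmatched studies are dropped."""
    linked = defaultdict(list)
    for study_id, disease in cuas:
        category = crosswalk.get(disease.strip().lower())
        if category in categories:
            linked[category].append(study_id)
    return dict(linked)


def conditions_without_cuas(linked, categories):
    """Burden categories for which no CUA was linked (candidate gaps)."""
    return [c for c in categories if c not in linked]


# Hypothetical studies, each given as (study id, disease label).
cuas = [("s1", "Breast cancer"), ("s2", "HIV"), ("s3", "Atrial fibrillation")]
linked = link_cuas(cuas, CROSSWALK, HIGH_MORTALITY)
gaps = conditions_without_cuas(linked, HIGH_MORTALITY)
```

Keeping the crosswalk as explicit data makes each matching decision visible and easy for a second coder to audit.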

Study selection

A challenge to conducting reviews of personalized medicine tests is that there is no single search term, and previous reviews have used a variety of terms; for example, PubMed did not create the medical subject heading term for “individualized medicine” until 2010. Thus, we used a wide range and number of terms based on input from a reference librarian, expert opinion, the FDA list of biomarkers included in pharmaceutical labeling, and study abstracts. We selected CUAs for inclusion by searching the CEAR using 83 terms, including general terms (e.g., “personalized medicine”) and specific markers (e.g., BRCA for the breast cancer susceptibility gene; Supplementary Data online). We excluded studies that did not examine personalized medicine testing or newborn screening, studies of family history without reference to genetic testing, and studies of other biomarkers such as cholesterol. We validated the completeness of our search terms by comparing our results with those of previous reviews of CUAs of personalized medicine15,16,17,18,19,20,21 and by comparing with PubMed medical subject heading and key-word searches.

To assess variation in these studies, we further analyzed whether the CUAs examined somatic (acquired) mutations (such as those in cancer or HIV-induced mutations) or germline (inherited) mutations (such as those in BRCA). We also assessed the methodological quality of the CUAs as assigned by trained CEAR reviewers (1: lowest quality; 7: highest quality; see Supplementary Data online). Finally, we compared the CUAs of personalized medicine tests with CUAs of pharmaceuticals. We chose pharmaceuticals for comparison because these interventions are closely related to personalized medicine tests and because there are a large number of studies for analysis.

Two authors independently coded studies and resolved inconsistencies in conjunction with a third author. We used study titles, disease categories, and abstracts to code studies. The unit of analysis was the CUA study. Because CUAs may report multiple ratios, we calculated weighted QALYs to summarize the cost and utility of each study using a previously described method (Supplementary Data online). We used Microsoft Excel (Microsoft, Redmond, WA) for descriptive analyses and Stata version 12 (Stata, College Station, TX) for weighted analyses.
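One way to keep studies that report many ratios from dominating a summary is to weight each ratio by the inverse of the number of ratios in its study, so that every study contributes a total weight of one. The sketch below assumes that weighting scheme; the method actually used is described in the Supplementary Data and may differ. All names and data are hypothetical.

```python
from collections import Counter, defaultdict


def study_weights(ratios):
    """Weight each (study_id, category) ratio by 1 / ratios in that study."""
    counts = Counter(study_id for study_id, _ in ratios)
    return [1 / counts[study_id] for study_id, _ in ratios]


def weighted_shares(ratios):
    """Share of total weight falling in each result category."""
    totals = defaultdict(float)
    for (_, category), weight in zip(ratios, study_weights(ratios)):
        totals[category] += weight
    grand_total = sum(totals.values())
    return {category: total / grand_total for category, total in totals.items()}


# Hypothetical data: study A reports two ratios, study B reports one.
ratios = [("A", "cost saving"), ("A", "higher cost"), ("B", "higher cost")]
shares = weighted_shares(ratios)
```

Under this assumption, study A's two ratios each carry a weight of 0.5, so the two studies count equally in the summary.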

Results

We identified 59 CUAs that specifically analyzed the use of personalized medicine testing (Supplementary Data online). The earliest study was published in 1998, and the number of studies has increased over time, with 10 published in 2011. There is wide variation in the tests examined in the CUAs, with the most common being gene expression–profiling tests for breast cancer (n = 7). The most commonly examined conditions are cancer (particularly breast cancer, n = 14) and infectious diseases (particularly HIV/AIDS, n = 6). More than one-third (39%) of studies examined tests for somatic rather than germline mutations. The average score for methodological quality was 4.5 (on a scale from 1, lowest, to 7, highest). About 20% of studies were funded by industry (n = 11).

Distribution of cost–utility ratios

A majority (72%) of the cost/QALY ratios indicate that personalized medicine tests provide better health, although at higher cost, with almost half of ratios falling under $50,000 per QALY gained, a commonly used threshold, and 80% falling under $100,000 per QALY gained (Figure 2). Twenty percent of the results indicate that tests are cost saving (n = 22), and 8% indicate that tests may cost more without providing better health (n = 17).
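The result categories reported here can be made concrete with a small classifier over incremental cost and incremental QALYs. This is an illustrative sketch: the function name, category labels, and handling of boundary cases are assumptions, with only the $50,000 and $100,000 thresholds taken from the text.

```python
def classify_ratio(delta_cost, delta_qaly):
    """Assign one incremental result to a reporting category.

    delta_cost: added cost of testing versus the comparator (dollars).
    delta_qaly: added QALYs of testing versus the comparator.
    """
    if delta_qaly > 0 and delta_cost < 0:
        return "cost saving"
    if delta_qaly <= 0 and delta_cost > 0:
        return "higher cost without better health"
    if delta_qaly > 0:
        ratio = delta_cost / delta_qaly  # dollars per QALY gained
        if ratio < 50_000:
            return "better health, under $50,000 per QALY"
        if ratio < 100_000:
            return "better health, $50,000 to under $100,000 per QALY"
        return "better health, $100,000 or more per QALY"
    return "other"  # e.g., lower cost with worse health, or no difference


# Hypothetical results, each given as (added cost, added QALYs).
examples = [(-500, 0.1), (5_000, 0.25), (30_000, 0.5), (1_000, 0.0)]
labels = [classify_ratio(cost, qaly) for cost, qaly in examples]
```

Classifying by the signs of the two increments first avoids reporting a negative ratio, which would be ambiguous between a cost-saving test and a dominated one.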

There are vastly more CUAs of pharmaceuticals (n = 1,385) than CUAs of personalized medicine tests (n = 59). Although the number of CUAs of personalized medicine tests is increasing over time, in 2011 there were still far more published CUAs of pharmaceuticals (n = 148) than of personalized medicine tests (n = 10). The distribution of cost/QALY ratios for somatic versus germline mutations and for personalized medicine tests versus pharmaceuticals was similar (P = 0.18 and P = 0.96, respectively, weighted χ² analyses). Average quality scores (4.5) were similar to quality scores for pharmaceutical CUAs (4.5) and to those in a previous study of the quality of studies included in the complete Tufts Registry (4.31).22

Gap analyses

All of the tests defined by the CDC as having demonstrated clinical utility have associated cost–utility data (Figure 3). However, only about one-quarter of currently available tests and one-fifth of tests with likely clinical utility have associated cost–utility data. Only about one-tenth of drugs with FDA labels including genetic information and one-tenth of tests in advanced development have associated cost–utility data. About two out of three conditions with high mortality or with high expenditures have associated cost–utility data. Of the eight highest mortality conditions in the United States, three have no CUAs of associated tests (cerebrovascular diseases, Alzheimer disease, and influenza). Of the eight highest expenditure conditions, another three have no CUAs of associated tests (hypertension, hyperlipidemia, and osteoarthritis). There are several personalized medicine tests currently available for these conditions, e.g., testing for risk of stroke, Alzheimer disease, hypertension, familial hypercholesterolemia, and osteoarthritis.8
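The gap shares reported in this section amount to a coverage calculation: for each source list, the fraction of entries that have at least one linked CUA. A minimal sketch follows, with hypothetical test names and a hypothetical function name.

```python
def coverage(source_tests, tests_with_cua):
    """Return (share of source tests with a CUA, sorted list of uncovered tests)."""
    source = set(source_tests)
    if not source:
        raise ValueError("source list is empty")
    covered = source & set(tests_with_cua)
    return len(covered) / len(source), sorted(source - covered)


# Hypothetical source list and hypothetical set of tests with a linked CUA.
available_tests = ["test_a", "test_b", "test_c", "test_d"]
evaluated_tests = ["test_a", "test_x"]
share, uncovered = coverage(available_tests, evaluated_tests)
```

Returning the uncovered entries alongside the share keeps the specific gaps visible, not only their proportion.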

Discussion

We found that relatively few personalized medicine tests have been formally evaluated for their cost-effectiveness. Many tests currently available and many emerging tests have not been evaluated, and there is a lack of CEAs of tests for several conditions with high health burden. We found that a majority of the cost–utility ratios reported indicate that personalized medicine tests provide better health at higher cost, although there are relatively few studies demonstrating cost savings. It is often stated that personalized medicine saves money, and some have argued that personalized medicine must save money in order to be adopted; however, setting such a high bar is inconsistent with evaluation of other interventions. For example, a previous analysis of the Tufts CEAR, published in the New England Journal of Medicine, noted that relatively few preventive interventions actually save money.3

Our study adds to the previous literature by providing updated data and by explicitly examining gaps. In 2004, in one of the earliest reviews, our research group identified only 11 economic evaluation studies, and this number included other forms of economic evaluation in addition to CUAs.19 Other reviews have been conducted since that time,15,16,17,18,20,21 although these studies did not explicitly examine gaps. In addition, in the current study, we used an established and validated registry of CUA studies.

We focused only on CUA, which is the most recommended method according to widely adopted guidelines because it incorporates quality-of-life measures and enables standardized comparisons across studies.23 Our preliminary analyses of studies using cost per year of life saved (not quality adjusted) as the outcome suggest that our primary results would not be substantially different if we had also included these analyses; however, our inability to include CEAs other than CUAs means that our results are not representative of the “universe” of economic analyses of personalized medicine tests. We recognize that cost-effectiveness methods have limitations and that future analyses may have different results as the technologies evolve and mature. Future research should also consider examining other approaches to measuring value, which have not to date been used widely to examine personalized medicine, such as budget-impact analysis, value-of-information analysis, stated choice, and willingness-to-pay methods.24,25

Our study is constrained by the limitations of existing data sources. Because there is no comprehensive data source that includes all personalized medicine tests, we linked existing data sources that may each have limitations such as incomplete data. Moreover, no available registry includes all types of economic analyses and testing characteristics, and it would be infeasible to create such a registry ourselves due to the extensive resources required to identify and code studies. Although we used a validated registry and carefully defined inclusion and exclusion criteria, we may have missed some studies, and we cannot address possible publication bias. Finally, we recognize that many factors contribute to cost-effectiveness results, including how studies were conducted, the comparisons made, and whether appropriate utility weights were available. The primary purpose of our study was to provide an overall, descriptive review, and thus we conducted a structured review using systematic methods, but we did not pool the results or conduct a meta-analysis to formally assess heterogeneity. Future studies should examine reasons for differences in cost-effectiveness and the impact of heterogeneity.25,26

Policy implications

One reason why there are relatively few assessments of economic value is that many tests do not have widely accepted evidence of clinical utility (an impact on medical and nonmedical outcomes), and thus it is challenging to conduct definitive CEAs (Box 2). The issues surrounding the definition and measurement of clinical utility are major areas of debate not only for personalized medicine tests and cost-effectiveness but also for other new diagnostics. There are many different approaches to defining and evaluating clinical utility,27 and there is debate over the extent to which there must be demonstrated changes in patient outcomes from multiple randomized clinical trials to establish clinical utility. A National Human Genome Research Institute–sponsored colloquium of leaders in genomic medicine recently noted that, although evidence on utility is needed, the pace of innovation will soon “force clinicians and institutions to deal with genomic information for which evidence may be quite limited … evidentiary thresholds may thus need to be aligned with the intended use of the information, as the impact of genotype driven care on morbidity and mortality cannot be tested for every variant.”28 There are many examples of the complexities of determining clinical utility and cost-effectiveness, e.g., our previous work described the lag in coverage for gene expression profiling for breast cancer (Oncotype DX).29

One reason that clinical utility is not established before adoption is that there are no requirements for such evidence prior to FDA approval, certification under the Clinical Laboratory Improvement Amendments of 1988, or coverage decisions by the Centers for Medicare & Medicaid Services. Similarly, there are no requirements for assessment of economic value. To illustrate, our previous work has shown that the Medicare program has long struggled with whether and how to consider economic value in coverage decisions30 and that the program appears to cover a number of interventions that do not appear to be cost-effective.31

The catch-22 of value analyses, however, is that they are typically not conducted and published until after there is sufficient evidence of clinical utility, but by that time, the intervention is often already in widespread use and covered by payers, or conversely has been rejected even though it may provide reasonable value.32 Models of “what if” scenarios could be used to assess what interventions are most promising in terms of value and what data inputs and assumptions will drive the value—and thus direct research efforts. The Evaluation of Genomic Applications in Practice and Prevention initiative now uses “what if” scenario analysis to assess the likelihood that a test will provide sufficient clinical utility, allowing researchers to prioritize the tests that are reviewed and thus produce reviews more quickly.33 There are many examples of early CEAs informing adoption and coverage policies, including Medicare coverage decisions.23

Future research on appropriate methods for early evaluation is needed to facilitate the evaluation of interventions more rapidly so that information can be available prior to adoption. This issue is relevant not just for economic value but also for assessments of overall clinical utility. As noted in our study results, very few tests have demonstrated clinical utility as defined by the CDC, yet many tests without this evidence are in widespread use and many prescription drug labels contain pharmacogenomics information—but this has not translated into use of tests in clinical care. The current emphasis on comparative effectiveness studies through the Patient-Centered Outcomes Research Institute could facilitate development of evidence for more rapid decision making,34 although in our opinion it is unfortunate that the institute’s mandate does not include CEA or other economic measures of value or affordability.35

We recognize that some observers may be skeptical about the use of cost-effectiveness as a metric because of fears that its application will stifle innovation and lead to denials in access to care. We acknowledge that value as measured by dollars should not be used in isolation and that balancing all of the factors in adoption decisions is critical. However, it is unrealistic to expect that decisions on whether to adopt new technologies can be divorced from evidence on the value and affordability of those innovations. These issues are accelerating for personalized medicine tests as new technologies such as whole-genome sequencing become more available. Thus, our research group is developing approaches to assessing the value of these new technologies given the complexities they raise, such as the results they may provide on conditions that are not treatable (http://pharmacy.ucsf.edu/news/2013/03/11/1/).25 Our work has shown that stakeholders, such as health payers, want a broad range of information on new technologies in order to promote informed coverage decisions.36

In particular, there is a need for approaches that take into account clinical and economic uncertainty to prioritize where economic evaluations are most needed, in addition to addressing coverage and policy concerns. There should be continued efforts to develop comprehensive databases of personalized medicine tests that include economic information. The National Institutes of Health has created the Genetic Testing Registry, but test submissions are voluntary, and the registry does not include any information on value or cost-effectiveness. As we move into an era of “big data,” it will be increasingly feasible to link data sets and information so that they can be better used to assess value, and the NIH Genetic Testing Registry could be expanded to include measures of affordability and cost. In addition, future research is urgently needed to assess genomic priorities overall so that the variants that are “actionable” for clinical care can be determined, and, as noted previously, the National Institutes of Health is now seeking to fund such an effort. An article in Science37 noted that setting genomic priorities should consider public health implications. We similarly assert that public health implications must be considered in any societal assessment of genomic priorities and that these implications must include economic value and affordability—otherwise efforts to determine clinical actionability will be inadequate.

Currently, no one organization can or should be responsible for considering and determining the economic value of personalized medicine tests and other technologies. The responsibility for balancing benefits and costs has to be shared among patients, providers, industry, payers, professional organizations, and guideline groups. Although the assessment of cost-effectiveness is still often “verboten” because it is equated with “rationing,” a New England Journal of Medicine editorial noted that change is under way: “quietly, Washington policymakers have begun to concede the need to weigh health care’s benefits against its costs if our country is to avert fiscal disaster.”38 For example, the Choosing Wisely campaign by the American Board of Internal Medicine Foundation has enlisted 17 medical societies in an effort to provide higher-quality care that acknowledges our society’s finite health-care resources. There is still room for improvement; a recent review found that slightly more than half of the largest US physician societies explicitly consider costs in their clinical guidance documents; however, many societies ignore costs or remain vague in their approach for considering cost or cost-effectiveness (S. Pearson, personal communication).

It is critical, however, to carefully consider the true value of diagnostics and not to impede innovation in the course of considering economic value. As noted in a recent editorial by Gottlieb and Makower39 (Gottlieb has held senior roles in the federal government, including deputy commissioner of the FDA), it is important to consider and measure the downstream benefits of innovative new technologies and to consider not just the up-front costs of diagnostics but also their impact on the entire care episode.

In conclusion, the value of personalized medicine has been hotly debated—and continues to be debated—as stakeholders struggle to balance the need for innovative technologies with the need to provide high-value care. Our research highlights gaps in what is known about the value of personalized medicine tests—information that can be used to inform research priorities, clinical care, industry investment, and coverage and policy decisions.

Disclosure

The authors declare no conflict of interest.

References

1. Armstrong K. Can genomics bend the cost curve? JAMA 2012;307:1031–1032.

2. Clinically Relevant Genetic Variants Resource: A Unified Approach for Identifying Genetic Variants for Clinical Use (U01). http://grants.nih.gov/grants/guide/rfa-files/rfa-hg-12-016.html. Accessed 10 December 2012.

3. Cohen JT, Neumann PJ, Weinstein MC. Does preventive care save money? Health economics and the presidential candidates. N Engl J Med 2008;358:661–663.

4. Phillips KA. Closing the evidence gap in the use of emerging testing technologies in clinical practice. JAMA 2008;300:2542–2544.

5. Phillips KA, Liang SY, Van Bebber S; Canpers Research Group. Challenges to the translation of genomic information into clinical practice and health policy: Utilization, preferences and economic value. Curr Opin Mol Ther 2008;10:260–266.

6. Neumann PJ, Greenberg D, Olchanski NV, Stone PW, Rosen AB. Growth and quality of the cost-utility literature, 1976–2001. Value Health 2005;8:3–9.

7. Tufts Cost-Effectiveness Analysis Registry. http://www.cearegistry.org. Accessed 10 December 2012.

8. GAPP Finder. Version 1.0. http://www.hugenavigator.net/GAPPKB/topicStartPage.do. Accessed 2 May 2013.

9. Food and Drug Administration Table of Pharmacogenomic Biomarkers in Drug Labels. http://www.fda.gov/drugs/scienceresearch/researchareas/pharmacogenetics/ucm083378.htm. Accessed 2 May 2013.

10. Khoury MJ, Bowen MS, Burke W, et al. Current priorities for public health practice in addressing the role of human genomics in improving population health. Am J Prev Med 2011;40:486–493.

11. Centers for Disease Control and Prevention Genomic Tests by Levels of Evidence. http://www.cdc.gov/genomics/gtesting/tier.htm. Accessed 1 May 2013.

12. Neumann PJ, Rosen AB, Greenberg D, et al. Can we better prioritize resources for cost-utility research? Med Decis Making 2005;25:429–436.

13. Murphy S, Xu J, Kochanek K. Deaths: Preliminary data for 2010. National Center for Health Statistics: Hyattsville, MD, 2012.

14. Agency for Healthcare Research and Quality. Total Expenses and Percent Distribution for Selected Conditions by Type of Service: United States, 2009. Medical Expenditure Panel Survey Household Component Data. Generated interactively. http://meps.ahrq.gov/mepsweb/data_stats/tables_compendia_hh_interactive.jsp?_SERVICE=MEPSSocket0&_PROGRAM=MEPSPGM.TC.SAS&File=HCFY2009&Table=HCFY2009_CNDXP_C&_Debug=. Accessed 2 May 2013.

15. Beaulieu M, de Denus S, Lachaine J. Systematic review of pharmacoeconomic studies of pharmacogenomic tests. Pharmacogenomics 2010;11:1573–1590.

16. Carlson JJ, Henrikson NB, Veenstra DL, Ramsey SD. Economic analyses of human genetics services: a systematic review. Genet Med 2005;7:519–523.

17. Djalalov S, Musa Z, Mendelson M, Siminovitch K, Hoch J. A review of economic evaluations of genetic testing services and interventions (2004–2009). Genet Med 2011;13:89–94.

18. Giacomini M, Miller F, O’Brien BJ. Economic considerations for health insurance coverage of emerging genetic tests. Community Genet 2003;6:61–73.

19. Phillips KA, Van Bebber SL. A systematic review of cost-effectiveness analyses of pharmacogenomic interventions. Pharmacogenomics 2004;5:1139–1149.

20. Vegter S, Boersma C, Rozenbaum M, Wilffert B, Navis G, Postma MJ. Pharmacoeconomic evaluations of pharmacogenetic and genomic screening programmes: a systematic review on content and adherence to guidelines. Pharmacoeconomics 2008;26:569–587.

21. Wong WB, Carlson JJ, Thariani R, Veenstra DL. Cost effectiveness of pharmacogenomics: a critical and systematic review. Pharmacoeconomics 2010;28:1001–1013.

22. Neumann PJ, Fang CH, Cohen JT. 30 years of pharmaceutical cost-utility analyses: growth, diversity and methodological improvement. Pharmacoeconomics 2009;27:861–872.

23. Gold M, Siegel J, Russell L, Weinstein M. Cost-Effectiveness in Health and Medicine. Oxford University Press: New York, NY, 1996.

24. National Institutes of Health Common Fund Teleconference Executive Summary: Economics of Personalized Health Care and Prevention. http://commonfund.nih.gov/pdf/HealthEconomics_Personalized_Health_Care_summary_REV_9-28-12.pdf. Accessed 21 February 2013.

25. Phillips KA, Sakowski JA, Liang SY, Ponce NA. Economic Perspectives on Personalized Health Care and Prevention. Forum for Health Economics and Policy 2013;16(2):57–86.

26. Sculpher M. Subgroups and heterogeneity in cost-effectiveness analysis. Pharmacoeconomics 2008;26:799–806.

27. Burke W, Laberge AM, Press N. Debating clinical utility. Public Health Genomics 2010;13:215–223.

28. Manolio TA, Chisholm RL, Ozenberger B, et al. Implementing genomic medicine in the clinic: the future is here. Genet Med 2013;15:258–267.

29. Trosman JR, Van Bebber SL, Phillips KA. Coverage policy development for personalized medicine: private payer perspectives on developing policy for the 21-gene assay. J Oncol Pract 2010;6:238–242.

30. Neumann PJ, Chambers JD. Medicare’s enduring struggle to define “reasonable and necessary” care. N Engl J Med 2012;367:1775–1777.

31. Neumann PJ, Tunis SR. Medicare and medical technology–the growing demand for relevant outcomes. N Engl J Med 2010;362:377–379.

32. Greenberg D, Rosen AB, Olchanski NV, Stone PW, Nadai J, Neumann PJ. Delays in publication of cost utility analyses conducted alongside clinical trials: registry analysis. BMJ 2004;328:1536–1537.

33. Veenstra DL, Piper M, Haddow JE, et al. Improving the efficiency and relevance of evidence-based recommendations in the era of whole-genome sequencing: an EGAPP methods update. Genet Med 2013;15:14–24.

34. Deverka PA, Vernon J, McLeod HL. Economic opportunities and challenges for pharmacogenomics. Annu Rev Pharmacol Toxicol 2010;50:423–437.

35. Neumann PJ, Weinstein MC. Legislating against use of cost-effectiveness information. N Engl J Med 2010;363:1495–1497.

36. Trosman JR, Van Bebber SL, Phillips KA. Health technology assessment and private payers’ coverage of personalized medicine. J Oncol Pract 2011;7(suppl 3):18s–24s.

37. Merikangas KR, Risch N. Genomic priorities and public health. Science 2003;302:599–601.

38. Bloche MG. Beyond the “R word”? Medicine’s new frugality. N Engl J Med 2012;366:1951–1953.

39. Gottlieb S, Makower J. A role for entrepreneurs: an observation on lowering healthcare costs via technology innovation. Am J Prev Med 2013;44(1 suppl 1):S43–S47.

Acknowledgements

This study was funded by a Program Project Grant from the National Cancer Institute to K.A.P. (P01CA130818), an R01 grant from the National Human Genome Research Institute to K.A.P. (R01HG007063), and a research award from the Department of Clinical Pharmacy, University of California, San Francisco. The funding organizations and sponsors had no role in the design and conduct of the study, in the collection, management, analysis, and interpretation of the data, or in the approval of the manuscript. We are grateful for comments from Teja Thorat, M.Sc., MPH, at the Tufts Medical Center and from Deborah Marshall, PhD, at the University of Calgary; and for data assistance from Cynthia Liang, PharmD, and Gregory Balani, BA. Ms. Thorat, Ms. Liang, Mr. Balani, and Dr. Marshall received no financial compensation for their contributions.

Cite this article

Phillips, K., Ann Sakowski, J., Trosman, J. et al. The economic value of personalized medicine tests: what we know and what we need to know. Genet Med 16, 251–257 (2014). https://doi.org/10.1038/gim.2013.122
