Abstract

We consider the Dyson hierarchical graph  , that is a weighted fully-connected graph, where the pattern of weights is ruled by the parameter σ ∈ (1/2, 1]. Exploiting the deterministic recursivity through which

, that is a weighted fully-connected graph, where the pattern of weights is ruled by the parameter σ ∈ (1/2, 1]. Exploiting the deterministic recursivity through which  is built, we are able to derive explicitly the whole set of the eigenvalues and the eigenvectors for its Laplacian matrix. Given that the Laplacian operator is intrinsically implied in the analysis of dynamic processes (e.g., random walks) occurring on the graph, as well as in the investigation of the dynamical properties of connected structures themselves (e.g., vibrational structures and relaxation modes), this result allows addressing analytically a large class of problems. In particular, as examples of applications, we study the random walk and the continuous-time quantum walk embedded in

is built, we are able to derive explicitly the whole set of the eigenvalues and the eigenvectors for its Laplacian matrix. Given that the Laplacian operator is intrinsically implied in the analysis of dynamic processes (e.g., random walks) occurring on the graph, as well as in the investigation of the dynamical properties of connected structures themselves (e.g., vibrational structures and relaxation modes), this result allows addressing analytically a large class of problems. In particular, as examples of applications, we study the random walk and the continuous-time quantum walk embedded in  , the relaxation times of a polymer whose structure is described by

, the relaxation times of a polymer whose structure is described by  , and the community structure of

, and the community structure of  in terms of modularity measures.

in terms of modularity measures.

Similar content being viewed by others

Introduction

Most real-life networks display non-trivial features concerning their topological structure as well as the pattern of weights associated to their links. In fact, the arrangement of the connections between their constituent elements is typically neither purely regular nor purely random and, also, such connections are associated to weights accounting for wiring costs which may depend on the distance (e.g., a physical distance, a social distance) between elements to be linked1,2,3,4,5,6.

As well known, the topology and the distribution of weights have strong influence on the dynamical properties of the structure and on the dynamical properties of processes embedded in the structure itself. This is the case, for instance, for the dynamics of polymer networks (e.g., their response to external forces7), for diffusion and transport processes (e.g., epidemics in social networks8, chemical-kinetics9, quantum search algorithms10), for statistical mechanics models (e.g., ferromagnetic systems11,12, glasses13 and neural networks14).

Now, the topology and the pattern of weights of a given structure can be mathematically captured in terms of the Laplacian matrix, and most dynamical properties can be fully described through the Laplacian eigenvectors and eigenvalues (see e.g., the reviews15,16,17,18,19,20). Also, recent developments have shown how to approach analytically the solution of spin systems via methods based on the extension of the replica method where both the degree of nodes (via “hard constraints”) and the matrix spectrum (via a “soft constraint”) are prescribed21.

Beyond the above mentioned physically-driven applications, the study of the graph spectrum realizes increasingly rich connections with computer science (see e.g., ref. 22) and with many other areas of mathematics such as differential geometry (see e.g., refs 19 and 23). As a result, during the past few decades, the study of Laplacian eigenvalues has attracted an upsurge of interest. Among the (deterministic) structures for which the full knowledge of the spectrum has been achieved we can mention several examples of fractals24,25, of small-world networks26, and of scale-free graphs27, just to cite a few. However, in general, deriving the exact Laplacian spectrum for an arbitrary system is a challenging task and the use of deterministic structures is of much help to this aim.

Here we focus on a particular example of weighted graph, referred to as  , and we derive analytically its Laplacian spectrum. More precisely, the graph considered is a weighted, fully-connected network, originally introduced by Dyson28 to mimic a one-dimensional structure with long-range interactions. In fact, the weight Jij associated to the link connecting node i and node j scales as

, and we derive analytically its Laplacian spectrum. More precisely, the graph considered is a weighted, fully-connected network, originally introduced by Dyson28 to mimic a one-dimensional structure with long-range interactions. In fact, the weight Jij associated to the link connecting node i and node j scales as  , where dij is the distance between the nodes considered and σ is a positive tunable parameter that rules the decay of the interaction between nodes as their distance varies. This network is built deterministically and recursively, and we show that, given its building procedure, the coupling matrix J exhibits a block form which allows the analytical investigation of its spectrum. In fact, we are able to derive the full, exact Laplacian spectrum as well as its eigenvectors.

, where dij is the distance between the nodes considered and σ is a positive tunable parameter that rules the decay of the interaction between nodes as their distance varies. This network is built deterministically and recursively, and we show that, given its building procedure, the coupling matrix J exhibits a block form which allows the analytical investigation of its spectrum. In fact, we are able to derive the full, exact Laplacian spectrum as well as its eigenvectors.

These findings can be exploited in a wide range of problems, from structural problems (see e.g., ref. 29), such as the determination of the spanning trees, of the resistance distance and of the community structures, to dynamical problems as those mentioned above. In particular, here, we address a few applications concerning several different fields to show the effectiveness of the Laplacian spectrum.

First, we calculate the mean first-passage time for a random walker embedded in  , finding that the expected time to first reach a given node depends functionally on σ and it grows as the inhomogeneity of the pattern of weights is enhanced.

, finding that the expected time to first reach a given node depends functionally on σ and it grows as the inhomogeneity of the pattern of weights is enhanced.

Then, we consider a quantum particle moving in a potential described by  (namely the Hamiltonian which determines the time evolution is identified by the Laplacian of

(namely the Hamiltonian which determines the time evolution is identified by the Laplacian of  ) and we calculate the long-time average, finding that, in the limit of large size, it converges to 1/3, much above the equipartition limit 1/N expected for classical propagation, hence suggesting that

) and we calculate the long-time average, finding that, in the limit of large size, it converges to 1/3, much above the equipartition limit 1/N expected for classical propagation, hence suggesting that  is not well performing as far as coherent propagation is concerned.

is not well performing as far as coherent propagation is concerned.

Next, we consider  in the framework of generalized Gaussian structures and, based on the spectra, we calculate the structural average of the mean monomer displacement under applied constant force and the mechanical relaxation moduli, again highlighting the role of inhomogeneity in the coupling pattern.

in the framework of generalized Gaussian structures and, based on the spectra, we calculate the structural average of the mean monomer displacement under applied constant force and the mechanical relaxation moduli, again highlighting the role of inhomogeneity in the coupling pattern.

Finally, we exploit our results on the spectra of  to investigate its community structure. In fact, since the graph under study is regular, the knowledge of the second largest Laplacian eigenvalue allows us to estimate its modularity as a function of σ and of the partition considered.

to investigate its community structure. In fact, since the graph under study is regular, the knowledge of the second largest Laplacian eigenvalue allows us to estimate its modularity as a function of σ and of the partition considered.

The paper is organized as follows. In the section “The Laplacian spectrum and its applications” we review the basic definitions concerning the Laplacian matrix and in the section “Description of the hierarchical network” we review the hierarchical structure considered, discussing its building procedure and the block structure of its coupling matrix. Then, in the sections “Eigenvalues of the Dyson hierarchical graph” and “Eigenvectors” we derive analytically the eigenvalues and the eigenvectors, respectively, of the Laplacian matrix for the graph considered, while in the section “Examples of applications” we exploit these findings to derive some results concerning applications in several fields. Finally, the section “Conclusions” is left for conclusions, remarks and outlooks.

The Laplacian spectrum and its applications

The Laplacian matrix has a long history in science and there are several books and survey papers dealing with its mathematical properties and applications, see e.g., refs 15, 16, 17, 18, 19, 20. Here we just review some basic definitions in order to provide a background for the following analysis.

Let J be the generalized adjacency matrix (weight matrix) of an arbitrary graph  of size N, in such a way that the entry Jij of J is non null if i and j are adjacent in

of size N, in such a way that the entry Jij of J is non null if i and j are adjacent in  , otherwise Jij is zero. We call wi the weighted degree of node i which is defined as

, otherwise Jij is zero. We call wi the weighted degree of node i which is defined as  . Further, let W be the diagonal matrix, whose entries are given by Wij = wiδij. The Laplacian matrix of

. Further, let W be the diagonal matrix, whose entries are given by Wij = wiδij. The Laplacian matrix of  is then defined as

is then defined as

Given that L is semi-definite positive, the Laplacian eigenvalues are all real and non-negative. Also, they are contained in the interval [0, min{N, 2wmax}], where  . The set of all N Laplacian eigenvalues

. The set of all N Laplacian eigenvalues  is called the Laplacian spectrum. Following the definition (1), the smallest eigenvalue

is called the Laplacian spectrum. Following the definition (1), the smallest eigenvalue  is always null and corresponds to the eigenvector μ1 = eN, according to the Perron-Frobenius theorem. The second smallest eigenvalue is φ2 ≥ 0, and it equals zero only if the graph is disconnected. Thus, the multiplicity of 0 as an eigenvalue of L corresponds to the number of components of

is always null and corresponds to the eigenvector μ1 = eN, according to the Perron-Frobenius theorem. The second smallest eigenvalue is φ2 ≥ 0, and it equals zero only if the graph is disconnected. Thus, the multiplicity of 0 as an eigenvalue of L corresponds to the number of components of  .

.

The smallest non-zero Laplacian eigenvalue is often referred to as “spectral gap” (or also “algebraic connectivity”) and it provides information on the effective bipartitioning of a graph. Basically, a graph with a “small” first non-trivial Laplacian eigenvalue has a relatively clean bisection (i.e., the smaller the spectral gap and the smaller the relative number of edges required to be cut away to generate a bipartition), conversely, a large spectral gap characterizes non-structured networks, with poor modular structure (see e.g., refs 30 and 31).

The spectral gap has also remarkable effects on dynamical processes. For instance, let us consider a system of N oscillators represented by the nodes of  , which are interconnected pairwise by means of active links; the time evolution of the i-th oscillator is given by

, which are interconnected pairwise by means of active links; the time evolution of the i-th oscillator is given by  , where F and H are the evolution and the coupling functions, respectively, and β is a coupling constant. In this case a network exhibits good synchronizability if the eigenratio φN/φ2 is as small as possible and a small spectral gap is therefore likely to imply a poor synchronizability32.

, where F and H are the evolution and the coupling functions, respectively, and β is a coupling constant. In this case a network exhibits good synchronizability if the eigenratio φN/φ2 is as small as possible and a small spectral gap is therefore likely to imply a poor synchronizability32.

However, probably the easiest dynamical process affected by the underlying topology is the random walk. In this context, the spectral gap is associated with spreading efficiency: random walks move around quickly and disseminate fluently on graphs with large spectral gap in the sense that they are very unlikely to stay long within a given subset of vertices unless its complementary subgraph is very small33.

Finally, we mention at the relation between the number of spanning trees in a graph  and its Laplacian spectrum. Let us denote with

and its Laplacian spectrum. Let us denote with  the number of spanning trees in

the number of spanning trees in  : the Kirchhoff matrix-tree theorem states that (see e.g., refs 34 and 35)

: the Kirchhoff matrix-tree theorem states that (see e.g., refs 34 and 35)  . This formula turns out to be extremely useful in order to get bounds for

. This formula turns out to be extremely useful in order to get bounds for  even without having the exact spectrum of the related graph, or in order to estimate the number of spanning trees of complex graphs which can be defined as combinations of simpler graphs for which the exact spectrum is known (see e.g., ref. 36).

even without having the exact spectrum of the related graph, or in order to estimate the number of spanning trees of complex graphs which can be defined as combinations of simpler graphs for which the exact spectrum is known (see e.g., ref. 36).

For a weighted graph where  is the number of edges joining i and j, the previous formula for

is the number of edges joining i and j, the previous formula for  still holds. More generally, when

still holds. More generally, when  one can still look for the number of spanning trees on the bare topology (i.e., neglecting weights), or look for the minimum spanning tree(s), where the sum of the weights over all the edges making up the tree(s) is minimal (see e.g., ref. 37).

one can still look for the number of spanning trees on the bare topology (i.e., neglecting weights), or look for the minimum spanning tree(s), where the sum of the weights over all the edges making up the tree(s) is minimal (see e.g., ref. 37).

Beyond the purely mathematical point of view, deriving  is a key problem in many areas of experimental design for the synthesis of reliable communication networks where the links of the network are subject to failure38. Also, the number of spanning trees is related to the configurational integral (or partition function) Z of a Gaussian macromolecule, whose architecture is described by

is a key problem in many areas of experimental design for the synthesis of reliable communication networks where the links of the network are subject to failure38. Also, the number of spanning trees is related to the configurational integral (or partition function) Z of a Gaussian macromolecule, whose architecture is described by  ; in fact, one has

; in fact, one has  , where the proportionality constant does not depend on the topology but just on the number of monomers, on the temperature and on the spring constant between beads39.

, where the proportionality constant does not depend on the topology but just on the number of monomers, on the temperature and on the spring constant between beads39.

Further applications for the Laplacian spectrum will be reviewed and deepened in the section “Examples of applications” focusing on the hierarchical Dyson network.

Description of the hierarchical network

In this work we focus on a deterministic, weighted, recursively grown graph, referred to as  , originally introduced by Dyson to study the statistical-mechanics of spin systems beyond the mean-field scenario (corresponding to a fully-connected, unweighted embedding)28. The topological properties of this graph have been discussed in refs 14, 40 and 41, and here we briefly review them. The construction begins with 2 nodes, connected with a link carrying a weight J(1, 1, σ) = 4−σ. We refer to this graph as

, originally introduced by Dyson to study the statistical-mechanics of spin systems beyond the mean-field scenario (corresponding to a fully-connected, unweighted embedding)28. The topological properties of this graph have been discussed in refs 14, 40 and 41, and here we briefly review them. The construction begins with 2 nodes, connected with a link carrying a weight J(1, 1, σ) = 4−σ. We refer to this graph as  , and in the notation J(d, k, σ) we highlight the dependence on the graph iteration k and on the system parameter σ, also, d represents the iteration when the nodes considered first turn out to be connected. At the next step, one takes two replicas of

, and in the notation J(d, k, σ) we highlight the dependence on the graph iteration k and on the system parameter σ, also, d represents the iteration when the nodes considered first turn out to be connected. At the next step, one takes two replicas of  and connects the nodes pertaining to different replicas with links displaying a weight J(2, 2, σ) = 4−2σ; moreover, the weight on the existing links is updated as J(1, 1, σ) → J(1, 2, σ) = J(1, 1, σ) + J(2, 2, σ). This realizes the graph

and connects the nodes pertaining to different replicas with links displaying a weight J(2, 2, σ) = 4−2σ; moreover, the weight on the existing links is updated as J(1, 1, σ) → J(1, 2, σ) = J(1, 1, σ) + J(2, 2, σ). This realizes the graph  , which counts overall 4 nodes. At the generic k-th iteration, one takes two replicas of

, which counts overall 4 nodes. At the generic k-th iteration, one takes two replicas of  , insert 22k−1 new links, each carrying a weight J(k, k, σ) = 4−kσ, among nodes pertaining to different replicas, and the weights on existing links are updated as J(d, k − 1, σ) → J(d, k, σ) = J(d, k − 1, σ) + J(k, k, σ), for any d < k. If we stop the iterative procedure at the K-th iteration, the final graph

, insert 22k−1 new links, each carrying a weight J(k, k, σ) = 4−kσ, among nodes pertaining to different replicas, and the weights on existing links are updated as J(d, k − 1, σ) → J(d, k, σ) = J(d, k − 1, σ) + J(k, k, σ), for any d < k. If we stop the iterative procedure at the K-th iteration, the final graph  counts N = 2K nodes and the coupling between any pair of nodes can be expressed as

counts N = 2K nodes and the coupling between any pair of nodes can be expressed as

with d ∈ {1, …, K}. Remarkably, this iterative procedure allows for a definition of metric: two nodes are said to be at distance d if they occur to be first connected at the d-th iteration [Note: One can check that this metric is intrinsically ultrametric since, beyond the standard conditions for a well defined metric (dij ≥ 0;  ; dij = dji), the so-called ultrametric inequality (dij ≤ max(diz, dzj)) also holds.]. As a result, we can define a coupling matrix J associated to the network

; dij = dji), the so-called ultrametric inequality (dij ≤ max(diz, dzj)) also holds.]. As a result, we can define a coupling matrix J associated to the network  such that the entry Jij depends on the nodes (i, j) considered only through their distance dij, namely

such that the entry Jij depends on the nodes (i, j) considered only through their distance dij, namely

Given the building procedure of  , a generic node i has 2d−1 nodes at distance d ∈ {1, …, K}. Moreover, the total weight of the links stemming from a single node i can be written as

, a generic node i has 2d−1 nodes at distance d ∈ {1, …, K}. Moreover, the total weight of the links stemming from a single node i can be written as

Due to the symmetry underlying the network, wi does not depend on the site i, so one can simply write wi = w.

Beyond the coupling matrix J, one can introduce the Laplacian matrix L, which, as anticipated in Eq. 1, is defined as L = W − J, where W is a diagonal matrix with elements Wij = wδij.

Before concluding this section it is worth stressing that the parameter σ is bounded as 1/2 < σ ≤ 1. In a statistical mechanics context this ensures that the Dyson model is thermodynamically well defined13,14; in this context the lower bound σ > 1/2 ensures that the weight w remains finite in the limit N → ∞, while the upper bound σ ≤ 1 ensures that the heterogeneity in the coupling pattern is not too strong (namely, that the relaxation time, given by the inverse of the spectral gap, does not grow faster than the system size).

Eigenvalues of the Dyson hierarchical graph

In order to get familiar with the structure of the matrix J, it is convenient to write it down explicitly for a small value of K. In particular, for K = 3 it reads as

where we posed t = 4−σ and we highlighted with the superscript (N) the size of the matrix; in general, N = 2K and here N = 8. The block structure is evident and can be schematised as

where L(N/2) and R(N/2) are square matrices of size  . For such matrices, the determinant can be computed as

. For such matrices, the determinant can be computed as

Using the ultrametric structure of J(N), we can iterate this block decomposition. In fact, for example, after the first decomposition we obtain two matrices  and

and  such that

such that

Now, both  and

and  display the same block structure and for each an expression analogous to (7) holds in such a way that

display the same block structure and for each an expression analogous to (7) holds in such a way that

At this point, we pose

where, as stated previously, the superscript indicates the size of the matrices and, proceeding iteratively, we get a set of N/2 matrices of size 2 × 2 and referred to as  with l = 1, …, N/2.

with l = 1, …, N/2.

More precisely, at every n-th iteration, we can write 2n block matrices  of size N/2n, n = 1, …, K − 1, obtained as the sum or the difference of the 2n−1 matrices of the previous iteration. With this argument, we can state that our determinant is a product of N/2 determinants of matrices 2 × 2 as

of size N/2n, n = 1, …, K − 1, obtained as the sum or the difference of the 2n−1 matrices of the previous iteration. With this argument, we can state that our determinant is a product of N/2 determinants of matrices 2 × 2 as

Clearly, in order to compute the eigenvalues of J, we can again use this scheme, but taking as initial matrix J − λ I. In this case, with some algebra, we obtain the following

where pn is the determinant of a proper matrix of size N/2n × N/2n and it reads as

By plugging the previous expression for pn into Eq. 11 and solving for the roots of det(J − λ I) = 0, we get K + 1 distinct eigenvalues that can be written as

Notice that, as n increases, both λn and its multiplicity decreases.

Actually, we are mainly interested in the spectrum of the Laplacian matrix L = W − J, with W = wδij, where we recall that w is the weighted degree defined in Eq. 4. Now, denoting with φn the n-th eigenvalue of L, and exploiting the fact that W is proportional to the identity matrix, one can write

More precisely we have

Of course, since L is semidefinite positive, φn ≥ 0  , and, in particular, omitting the trivial eigenvalue φK = 0, we have

, and, in particular, omitting the trivial eigenvalue φK = 0, we have

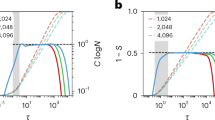

From Eq. 16 we notice that the larger the value of φn, and the larger its algebraic multiplicity (see also Fig. 1, panel a). Moreover, from Eq. 18 we see that φ0 decreases with σ (see Fig. 1, panel b) and, as a consequence, the spectrum covers a narrower interval (we recall that φK = 0 hence the spectrum width is given by φ0). However, the eigenratio φ0/φK−1 (which provides a more effective measure of the spectrum width) grows with σ implying poor synchronizability for large σ. This is consistent with the fact that large values of σ correspond to heterogeneous patterns of couplings41. Similarly, the spectral gap, corresponding to φK−1, decreases fast with σ (see Fig. 1, panel c) and, as a consequence, the spreading efficiency on  is weakened as the patter of couplings gets more heterogeneous.

is weakened as the patter of couplings gets more heterogeneous.

Panel a: Eigenvalue spectrum of L for K = 8 and for different values of σ. The multiplicity of an eigenvalue corresponds to the width of the related plateau and it grows with the magnitude of the eigenvalue. Moreover, the spectrum is broadened over a range which decreases with σ. Panel b: trend of the eigenvalue φ0 when σ varies in the interval (1/2, 1] for different values of K as shown in the legend. Since φ0 corresponds to the largest eigenvalue this also provides the span of the spectrum itself (notice that the smallest eigenvalue φK is always null). Panel c: trend of the eigenvalue φK−1 when σ varies in the interval (1/2, 1] for different values of K (the legend is the same as in panel b). Since φK−1 corresponds to the smallest non-null eigenvalue this also provides the spectral gap for  .

.

Equations 16 and 17 provide an explicit expression for the spectrum of the Laplacian matrix associated to the hierarchical graph under study. The Laplacian spectral density ρ(φ), where ρ(φ)dφ returns the fraction of eigenvalues laying in the interval (φ, φ + dφ), can be analytically recovered as follows. First, we determine the cumulative distribution Cum(φ): fixing an arbitrary value  , the overall number of eigenvalues smaller than or equal to

, the overall number of eigenvalues smaller than or equal to  is given by

is given by

where  is obtained by inverting Eq. 16, that is,

is obtained by inverting Eq. 16, that is,

and where  denotes the largest integer not greater than x. We stress that

denotes the largest integer not greater than x. We stress that  provides the index n such that φn is the eigenvalue closest to

provides the index n such that φn is the eigenvalue closest to  , with

, with  . Moreover, we remark that in Eq. 20, the index of the sum varies between

. Moreover, we remark that in Eq. 20, the index of the sum varies between  and K − 1: this is because, according to the notation introduced in Eq. 16, the eigenvalues decrease as n increases, so to find all the eigenvalues smaller than or equal to a fixed

and K − 1: this is because, according to the notation introduced in Eq. 16, the eigenvalues decrease as n increases, so to find all the eigenvalues smaller than or equal to a fixed  , it is necessary to consider the larger indices.

, it is necessary to consider the larger indices.

By merging the results (20) and (21), we can get a close-form expression for the cumulative distribution as

Of course, in the limit N → ∞, Cum(φ0) and Cum(φK−1) converge to the expected values, being Cum(φ0) = 1 − 1/N → 1, and Cum(φK−1) = 1/N → 0. The expression (22) is numerically checked in Fig. 2.

Finally, taking the derivative of Cum(φ), we get an estimate for ρ(φ):

and, using (20) and (21), we get

Thus, when  (i.e., for large sizes, and/or for σ close to 1, and/or in the upper part of the spectrum), the distribution scales as

(i.e., for large sizes, and/or for σ close to 1, and/or in the upper part of the spectrum), the distribution scales as  . This result is checked numerically in Fig. 3 (left panel): as expected, the comparison between the theoretical and the numerical estimates is successful especially in the case σ = 1, while for lower values deviations with respect to the power-law behavior emerge. Interestingly, as long as this picture holds, the expression in Eq. 24 allows us to get an estimate for the spectral dimension ds of

. This result is checked numerically in Fig. 3 (left panel): as expected, the comparison between the theoretical and the numerical estimates is successful especially in the case σ = 1, while for lower values deviations with respect to the power-law behavior emerge. Interestingly, as long as this picture holds, the expression in Eq. 24 allows us to get an estimate for the spectral dimension ds of  . In fact, recalling that in the small eigenvalue limit φ → 0 one has

. In fact, recalling that in the small eigenvalue limit φ → 0 one has  , we get

, we get

Left panel: The eigenvalue distribution ρ(φ)dφ for a system of size N = 2K with K = 10 is shown versus φ and for several choices of σ (the legend is the same as in Fig. 2). In particular, in this figure we show the histogram (symbols) derived from the exact spectrum, the theoretical estimate (solid line) given by Eq. 24, and the related scaling ρ(φ)dφ ~ φ1/(2σ−1) (dashed line). The comparison is successful especially for large values of φ and for σ not too small. Right panel: The eigenvalues for a system of size N = 2K with K = 10 are plotted in ascending order, for several choices of σ, as reported. The dashed lines highlight the expected behaviour according to the analytical estimate ~(K − i)2σ−1, following from Eq. 22. Again, one can see that the theoretical picture is quantitatively good just for large values of σ, while it turns out to be qualitatively correct in the whole range considered. In fact, a slower rate of growth for φi versus K − i implies a faster rate of growth for ρ(φ) versus φ, which in turns implies a larger spectral dimension, in agreement with Eq. 25.

In particular, ds(σ = 1) ≈ 2, and it monotonically grows as σ approaches its lower bound. In fact, as σ is reduced, the pattern of couplings gets more and more homogenous towards a mean-field scenario13,41. This result is further checked in Fig. 3 (right panel). We stress that the spectral dimension (when defined) generalises the Euclidean dimension to the case of non-translationally-invariant structures: in fact, this is a global property of the graph, related (beyond to the density of small eigenvalues of the Laplacian), for instance, to the infrared singularity of the Gaussian process, or to the (graph average) of the long-time tail of the random walk return probability; it also provides a consistent criterion on whether a continuous symmetry breaks down at low temperature (see e.g., ref. 42).

Finally, it is worth comparing the results found here for  with those pertaining to other examples of graphs. For instance, if we neglect the weights in

with those pertaining to other examples of graphs. For instance, if we neglect the weights in  we recover the complete graph (cg) of size N, often referred to as KN, where the Laplacian spectrum is given by φ(cg) = 0 with multiplicity 1 and φ(cg) = N with multiplicity N − 1. Beyond this peculiar case, as anticipated in the Introduction, there are several other examples of deterministically grown networks, exhibiting a non-trivial topology, for which the exact spectrum is known. In particular, we mention the Vicsek fractals (vf), where the spectral dimension is given by

we recover the complete graph (cg) of size N, often referred to as KN, where the Laplacian spectrum is given by φ(cg) = 0 with multiplicity 1 and φ(cg) = N with multiplicity N − 1. Beyond this peculiar case, as anticipated in the Introduction, there are several other examples of deterministically grown networks, exhibiting a non-trivial topology, for which the exact spectrum is known. In particular, we mention the Vicsek fractals (vf), where the spectral dimension is given by  , with f being a parameter which sets the coordination number of inner points43, and a class of small-world networks (sw) obtained by recursively joining together Kd graphs, where the spectral dimension is given by

, with f being a parameter which sets the coordination number of inner points43, and a class of small-world networks (sw) obtained by recursively joining together Kd graphs, where the spectral dimension is given by  , regardless of d26. For relatively sparse graphs, such as exactly decimable fractals (e.g., Sieprinski gasket, T-fractal), one can prove that ds < 242,44.

, regardless of d26. For relatively sparse graphs, such as exactly decimable fractals (e.g., Sieprinski gasket, T-fractal), one can prove that ds < 242,44.

Eigenvectors

Having computed the spectrum of L, we can now proceed with the calculation of its eigenvectors. As shown in the previous section, we have K + 1 distinct eigenvalues, each with its own algebraic multiplicity. We are looking for a system of linear independent vectors  , where μi is a N × 1 vector such that

, where μi is a N × 1 vector such that

with μiμj = δij and ||μi|| = 1,  .

.

Again, we can exploit the block structure of L to find these eigenvectors. In fact, for K = 3, and posing again t = 4−σ, L has the form

Let us start with the eigenvalue  (see Eq. 16): it has algebraic multiplicity N/2, so we have to find N/2 linear independent eigenvectors

(see Eq. 16): it has algebraic multiplicity N/2, so we have to find N/2 linear independent eigenvectors  of size N × 1, associated to this eigenvalue such that

of size N × 1, associated to this eigenvalue such that

Our ansatz is

that is, a vector that has all the entries equal to zero, but those at the position 2j − 1 an 2j, that are respectively equal to  and

and  , in order to obtain N/2 vectors with norm equal to 1. In this case, the left hand side of Eq. 26 becomes

, in order to obtain N/2 vectors with norm equal to 1. In this case, the left hand side of Eq. 26 becomes

Exploiting the structure of L, for every row i = 1, …, N, we have

Comparing this expression with (28) we get that the ansatz (29) is correct. In this way we find a set of N/2 independent eigenvectors associated to the eigenvalue φ0.

We are going to proceed analogously for the computation of the eigenvectors related to  for n = 1, …, K − 1, each with multiplicity N/2n+1. This implies that each of them is associated to 2K−n−1 linear independent eigenvectors. In particular, we claim that they have the form

for n = 1, …, K − 1, each with multiplicity N/2n+1. This implies that each of them is associated to 2K−n−1 linear independent eigenvectors. In particular, we claim that they have the form

where e is a one vector of size 2n such that ||e|| = 2n/2 and its first entry is either at the position 1 + i2n, or at the position 1 + (i + 1)2n of μj with i = 0, …, n − K − 1 and j = 1, …, 2K−n−1. With some algebra (as done previously in order to get (30) from (29)), we obtain

where n = 1, …, K − 1, and i = 0, …, K − n − 1. The previous equation can be further simplified if one recasts the right-hand term of (32) as

The last expression can be compared to φj (see Eq. 16) times μj (see Eq. 31), hence proving that the vectors defined in Eq. 31 are, in fact, eigenvectors.

Finally, according to the Frobenius-Perron theorem, for the zero eigenvalue with algebraic multiplicity equal to one, the corresponding eigenvector is  . In this way we have obtained a complete basis of N linearly independent eigenvectors related to the N eigenvalues, namely they form an orthonormal basis, that is

. In this way we have obtained a complete basis of N linearly independent eigenvectors related to the N eigenvalues, namely they form an orthonormal basis, that is

In particular, retaining the simple case K = 3, the orthonormal basis of eigenvectors can be written as

The generalization to larger sizes is straightforward.

We conclude this section observing that

and that

Examples of applications

In this section we exploit the results found in the Sections “Eigenvalues of the Dyson hierarchical graph” and “Eigenvectors” to derive information about several processes which can be defined on the graph  . These are just a few examples for illustrative purposes since, as underlined in the section “The Laplacian spectrum and its applications”, the exact knowledge of the whole Laplacian spectrum can be applied in a very wide range of fields and situations.

. These are just a few examples for illustrative purposes since, as underlined in the section “The Laplacian spectrum and its applications”, the exact knowledge of the whole Laplacian spectrum can be applied in a very wide range of fields and situations.

Random Walks

A simple random walk embedded on an arbitrary graph  is characterized by the transition matrix P = W−1J, that is, the probability that, in a given time step, the walker jumps from i to j is Pij = Jij/wi. Now, from the complete knowledge of the Laplacian spectrum one can derive the dynamical properties of the random walker and, in particular, one can calculate the mean time taken by a random walker to first reach a given node. This quantity plays a role in real situations such as transport in disordered media, neuron firing, spread of diseases and target search processes (see e.g., refs 44, 45, 46). Without loss of generality, we can fix the target site on the node j and focus on the mean first passage time (MFPT) from node i to node j, denoted as Tij. According to the definition of MFPT for random walks, we have Tjj = 0 and, for any

is characterized by the transition matrix P = W−1J, that is, the probability that, in a given time step, the walker jumps from i to j is Pij = Jij/wi. Now, from the complete knowledge of the Laplacian spectrum one can derive the dynamical properties of the random walker and, in particular, one can calculate the mean time taken by a random walker to first reach a given node. This quantity plays a role in real situations such as transport in disordered media, neuron firing, spread of diseases and target search processes (see e.g., refs 44, 45, 46). Without loss of generality, we can fix the target site on the node j and focus on the mean first passage time (MFPT) from node i to node j, denoted as Tij. According to the definition of MFPT for random walks, we have Tjj = 0 and, for any  ,

,

which can be rewritten in matrix form as

where  is the (N − 1)-dimensional unit vector (1, 1, …, 1)T;

is the (N − 1)-dimensional unit vector (1, 1, …, 1)T;  is the subvector of T obtained by deleting the j-th entry and whose i-th entry is Tij [Note: More precisely, the i-th entry of

is the subvector of T obtained by deleting the j-th entry and whose i-th entry is Tij [Note: More precisely, the i-th entry of  is Tij as long as i < j, while if i > j the entry corresponding to Tij is the (i − 1)-th one];

is Tij as long as i < j, while if i > j the entry corresponding to Tij is the (i − 1)-th one];  ,

,  , and

, and  are, respectively, the submatrices of P, W, and J obtained by deleting the j-th row and j-th column. With some passages it is possible to express Tij in terms of the spectra of L as (see ref. 27 for a detailed derivation)

are, respectively, the submatrices of P, W, and J obtained by deleting the j-th row and j-th column. With some passages it is possible to express Tij in terms of the spectra of L as (see ref. 27 for a detailed derivation)

Next, we can calculate the expected Tj to first reach the target node j, by averaging Tij over all possible starting sites, namely

By exploiting the result of Eq. 39 we can recast the previous expression in terms of the Laplacian eigenvalues and eigenvectors as well, namely (see again ref. 27 for a detailed derivation)

where s is the sum of the weighted degrees over all nodes, namely  .

.

In particular, for the graph  under study, we have that s = Nw, due to the homogeneity among nodes, and this also implies that Tj is actually independent of j. Moreover, exploiting Eq. 36, we can further simplify the general expression (41) as

under study, we have that s = Nw, due to the homogeneity among nodes, and this also implies that Tj is actually independent of j. Moreover, exploiting Eq. 36, we can further simplify the general expression (41) as

The sum appearing in Eq. 43 is not feasible for an explicit, general solution, yet, noticing that

we can provide Tj with a lower bound, that is,

The reliability of this bound is shown in Fig. 4: by varying K and σ we see that the bound is more accurate when the size is large (i.e.,  ) and when the pattern of weights is less homogeneous (i.e., σ far from 1/2). The leading term of Tlb scales as

) and when the pattern of weights is less homogeneous (i.e., σ far from 1/2). The leading term of Tlb scales as

By normalizing Tj with respect to the network size N mean we get quantities that are roughly comparable as the size is varied. Left panel: for the relatively small size N = 28 the lower bound Tlb does not provide a quantitatively good estimate for Tj. Central panel: when the size is relatively large as N = 216, the estimate is far improved. Right panel: when the size is very large as N = 232 ≈ 109, Tj ≈ Tlb, almost everywhere.

which evidences that (at least for large size and for σ far from its boundary values) the MFPT averaged over all starting nodes is well approximated by a linear growth with the size N, as corroborated by Fig. 5 (panel a). Moreover, one can show that (see also Fig. 4)

Panel a: Behavior of log2(Tj) with respect to K = log2(N). Different sets of data, corresponding to different choices of σ, are shown with different symbols, as explained by the legend. Data points are obtained by numerical evaluating Eq. 43, while solid lines represent the best linear fit. In fact, as evidenced in Fig. 4, the lower bound given by Tlb in Eq. 45 provides (in the limit of large size and for σ far from its boundary values) a good approximation for Tj and, in turn, in the limit of large size, Tlb scales linearly with N as shown in Eq. 46. More precisely, in our fitting function y = p1K + p2 we let the fit coefficients free and in the inset we compare the best-fit coefficient p1 with the unitary value expected from Eq. 46. The comparison is, within the error, satisfactory and further highlights that this approximation works better in the central region of the range σ ∈ (1/2, 1). Panel b: When σ = 1 an exact expression for Tj is achievable and here we compare the asymptotical analytical result, reported in Eq. 49, with the numerical evaluation of Tj from Eq. 43, as the size N = 2K varies, being K ∈ [8, 20]; notice the semilogarithmic scale plot. One can see that the numerical evaluation of Tj (circles) is well approximated by the function CN log2(N), where C = 1/6 (solid line). Panel c: The expected time Tj to reach a target set in the site j is plotted versus σ and for different choices of the graph size, as indicated by the legend. Data points are evaluated via the pseudo-inverse Laplacian method70 (in order to get a further check), while solid lines represent the numerical evaluation of Eq. 43. Notice that, in the limit of large size, Tj corresponds to the global first passage time τ.

and, for σ = 1, we are able to compute explicitly the sum in Eq. 42. In fact, fixing σ = 1, it becomes

Plugging this expression in Eq. 43, and posing σ = 1, we obtain the related exact value of Tj as

where in the last approximation we outlined the leading term being C = 1/6 (see also Fig. 5, panel b).

Therefore, by comparing the approximate result found for σ ∈ (1/2, 1) (see Eq. 46) and the exact result found for σ = 1 (see Eq. 49), we expect that Tj depends functionally on σ.

In the case the location of the target is unknown, one can still obtain a characteristic time by averaging Tj over all possible target locations, hence getting the so-called global first passage time, referred to as τ and defined as

By plugging Eq. 41 into Eq. 50, we get

which can be further developed by exploiting the previous results on eigenvalues (see Eqs 16 and 17). However, for the current graph, exploiting the homogeneity among the nodes, one can immediately write

where the last passage holds for large N (see Fig. 5, panel c).

To summarize, expected time Tj(σ) to reach a target placed in the arbitrary site j on  can be bounded as

can be bounded as

In general, as expected, Tj(σ) grows with σ since, when the pattern of weights is more heterogeneous (i.e., σ is large), the graph tends to get disconnected, the mixing time is large and reaching the farthest nodes takes more and more time.

The leading asymptotic dependence on the network size displayed by Tj(σ) can be compared to the scalings found for other structures displaying extensive connectivity (i.e., the coordination number of a subset of nodes diverges with the network size). For instance, for the class of pseudofractal networks studied in ref. 47, the MFPT scales sublinearly with the size N as long as the target node is central, while it scales linearly when the target node is peripheral; analogous results hold for the deterministic scale-free networks studied in ref. 48. Interestingly, a scaling ~N log N was evidenced for the global MFPT in the small-world exponential treelike networks studied in ref. 49, where a modular topology with loosely connections between different modules is also responsible for preventing the walker from exploring fluently the underlying space.

Quantum Walks

As stressed above, random walks constitute a basic example of dynamical process affected by the underlying topology. Their quantum-mechanical version, i.e. the quantum walks, constitute an advanced tool for building quantum algorithms and for modeling the coherent transport of mass, charge or energy (see e.g., refs 50, 51, 52), and display as well a strong dependence on the topological properties of the embedding structure and, accordingly, much of their properties can be expressed in terms of the Laplacian spectrum. Before deepening this point it is worth reviewing briefly a few definitions (here we just focus on the continuous time version of quantum walks, i.e., continuous-time quantum walks), while we refer to ref. 51 for an extensive treatment.

In such a quantum-mechanical context, the transport has to be formulated in Hilbert space and one assumes that the states |j〉 representing the nodes span the whole accessible Hilbert space; also, it is assumed that the states are orthonormal and complete, i.e.,  , and

, and  . The dynamics is then governed by a specific Hamiltonian H, such that the Schrödinger equation for the transition amplitude αk,j(t) from state |j〉 to state |k〉, reads

. The dynamics is then governed by a specific Hamiltonian H, such that the Schrödinger equation for the transition amplitude αk,j(t) from state |j〉 to state |k〉, reads

where ħ has been set equal to 1. The squared magnitude of the transition amplitude provides the quantum mechanical transition probability  . Now, the quantum-mechanical Hamiltonian H can be identified with the classical transfer matrix, i.e., H ≡ L53.

. Now, the quantum-mechanical Hamiltonian H can be identified with the classical transfer matrix, i.e., H ≡ L53.

The performance of the continuous-time quantum walk (CTQW) can be estimated in terms of the return probabilities πj,j(t): a quick temporal decay of these probabilities implies a fast transport through the network. In order to make a global statement on the performance, one considers the average return probability  given by (see ref. 51).

given by (see ref. 51).

where in the last passage we exploited the Cauchy-Schwarz inequality to get a lower bound for  which does not depend on the eigenvectors.

which does not depend on the eigenvectors.

For classical diffusion, the long-time limit of the transition probabilities reaches the equipartition value 1/N. In contrast, due to the unitary time evolution, for CTQW neither πi,j(t) nor  decay to a given value at long times, but rather oscillate around the corresponding long-time average

decay to a given value at long times, but rather oscillate around the corresponding long-time average  which, for

which, for  , is given by (see ref. 51)

, is given by (see ref. 51)

Remarkably, one can obtain a lower bound which does not depend on the eigenvectors and reads as (see refs 51 and 54)

namely,  is the sum of the squares of the normalized multiplicities. Therefore, the full knowledge of the Laplacian eigenvalue spectrum allows estimating (at least via bounds) whether the quantum transport on a given structure propagates relatively fast. Notice that, actually,

is the sum of the squares of the normalized multiplicities. Therefore, the full knowledge of the Laplacian eigenvalue spectrum allows estimating (at least via bounds) whether the quantum transport on a given structure propagates relatively fast. Notice that, actually,  just depends on the multiplicities of the distinct eigenvalues.

just depends on the multiplicities of the distinct eigenvalues.

Indeed, for quantum transport processes the degeneracy of the eigenvalues plays an important role, as the differences between eigenvalues determine the temporal behaviour, while for classical transport the long time behaviour is dominated by the smallest eigenvalue. Situations where only a few, highly-degenerate eigenvalues are present are related to slow CTQW dynamics, while when all eigenvalues are non-degenerate the transport turns out to be efficient (i.e., it spreads out fast)51.

However, in general, an excitation does not stay forever in the system where it was created; the excitation either decays (radiatively or by exciton recombination) or, e.g., in the case of biological light-harvesting systems, it gets absorbed at the reaction center, where it is transformed into chemical energy. In such cases, the total probability to find the excitation within the network is not conserved. Such loss processes can be modeled phenomenologically by modifying the Hamiltonian H appearing in Eq. 54. To fix the ideas, we consider networks where the excitation can only vanish at certain nodes, making up a set  with cardinality

with cardinality  . These nodes will be called trap-nodes or traps. The new Hamiltonian reads as

. These nodes will be called trap-nodes or traps. The new Hamiltonian reads as  , where

, where  can be written as a diagonal matrix with elements

can be written as a diagonal matrix with elements  as long as

as long as  , and zero otherwise;

, and zero otherwise;  represents the (tunable) absorbing rate.

represents the (tunable) absorbing rate.

As long as the absorbing rate  is small, i.e.,

is small, i.e.,  , this problem can be treated perturbatively getting that the mean survival probability

, this problem can be treated perturbatively getting that the mean survival probability  can be approximated by a sum of exponentially decaying terms:

can be approximated by a sum of exponentially decaying terms:

where M is the number of traps and γl is the imaginary part of the eigenvalue  of the perturbed Hamiltonian H′ (with approximation at first order), namely

of the perturbed Hamiltonian H′ (with approximation at first order), namely  51,55.

51,55.

Let us now resume the hierarchical graph  and exploit the results obtained in Sections “Eigenvalues of the Dyson hierarchical graph” and “Eigenvectors” to derive an estimate for

and exploit the results obtained in Sections “Eigenvalues of the Dyson hierarchical graph” and “Eigenvectors” to derive an estimate for  and for

and for  .

.

We start with  and notice that the estimate in Eq. 57 only accounts for the degeneracy of the eigenvalues which, as can be seen in Eqs 16 and 17, is not affected by σ, but only depends on the system size. As a result,

and notice that the estimate in Eq. 57 only accounts for the degeneracy of the eigenvalues which, as can be seen in Eqs 16 and 17, is not affected by σ, but only depends on the system size. As a result,  does not depend on σ. Moreover, exploiting the expression for φk obtained in Eqs 16 and 17, we can get that

does not depend on σ. Moreover, exploiting the expression for φk obtained in Eqs 16 and 17, we can get that  for a generic network of size N = 2K reads as

for a generic network of size N = 2K reads as

We can therefore derive that, no matter the system size and the value of σ, the long time average  is always finite and larger than 1/3. This means that the coherent transport on

is always finite and larger than 1/3. This means that the coherent transport on  tends to get stuck nearby the starting node. Of course, this stems from the fact that couplings decay exponentially fast with the distance among nodes. Actually, localization phenomena also occurs for the classical diffusion, where, as the size N gets larger and larger the mixing time grows exponentially and ergodicity breaks down in the limit N → ∞14,40,41. However, at finite size, for classical propagation the equipartition is eventually reached, while for the coherent propagation the localization effect is significant already at small size.

tends to get stuck nearby the starting node. Of course, this stems from the fact that couplings decay exponentially fast with the distance among nodes. Actually, localization phenomena also occurs for the classical diffusion, where, as the size N gets larger and larger the mixing time grows exponentially and ergodicity breaks down in the limit N → ∞14,40,41. However, at finite size, for classical propagation the equipartition is eventually reached, while for the coherent propagation the localization effect is significant already at small size.

Let us now move to  ; according to Eq. 58 it is possible to get a long time estimate in terms of the imaginary part γl of the eigenvalues of the perturbed Hamiltonian H′. This can be calculated as follows:

; according to Eq. 58 it is possible to get a long time estimate in terms of the imaginary part γl of the eigenvalues of the perturbed Hamiltonian H′. This can be calculated as follows:

where in the second passage we exploited the diagonal form of the matrix  and in the third passage we exploited the results found in Section “Eigenvectors”. More precisely, g is a vector, whose entry gl corresponds to the number of null entries in the eigenvector μl, namely

and in the third passage we exploited the results found in Section “Eigenvectors”. More precisely, g is a vector, whose entry gl corresponds to the number of null entries in the eigenvector μl, namely  , and δx,y is the Kronecker delta in such a way that the summation just returns the number of non-null entries in the eigenvector

, and δx,y is the Kronecker delta in such a way that the summation just returns the number of non-null entries in the eigenvector  that match a trap position. For instance, when M = 1, one finds

that match a trap position. For instance, when M = 1, one finds

or any other outcome obtained under the permutation of the elements pertaining to the same line (e.g., γ6 = 4/N, γ5 = γ7 = γ8 = 0). In general, in the presence of an arbitrary number M (M ≤ N) of traps, we have γ1 = γ = 2 = M/N, while for l > 2 the value of γl depends on the trap arrangement. Moreover, the smallest eigenvalue is null and the second smallest eigenvalue is M/N, whose multiplicity grows with M. Each null eigenvalue corresponds to a block of  which is trap free and this yields to a contribute to

which is trap free and this yields to a contribute to  which survives even at long times. Therefore, the trap arrangement which maximizes the survival probability is the one where traps are set as close as possible each other, while the trap arrangement which minimizes the survival probability is the one where traps are scattered broadly. This is rather intuitive as the quantum walk tends to remain localized around its starting point in such a way that if traps are assembled within a block, the walker can avoid them as there exist eigenstates living on different blocks, while if each block (even the smallest one, i.e. the dimer) is occupied by at least one trap the quantum walk can not avoid them56.

which survives even at long times. Therefore, the trap arrangement which maximizes the survival probability is the one where traps are set as close as possible each other, while the trap arrangement which minimizes the survival probability is the one where traps are scattered broadly. This is rather intuitive as the quantum walk tends to remain localized around its starting point in such a way that if traps are assembled within a block, the walker can avoid them as there exist eigenstates living on different blocks, while if each block (even the smallest one, i.e. the dimer) is occupied by at least one trap the quantum walk can not avoid them56.

Dynamics of polymer networks under external forces

Another field where the Laplacian spectrum is extensively exploited is polymer physics and, in particular, as for the investigation of the relationship between the topology of a polymer and its dynamics. To this purpose, the so-called generalized Gaussian structures are conveniently exploited. These are a generalization of the Rouse model7,57, meant for linear polymer chains, to systems with arbitrary topology. The polymer is modeled as a structure consisting of N beads (each bead corresponding to a node) connected by harmonic springs (we refer to ref. 7 for a discussion of the underlying assumptions).

Understanding how the underlying geometries of polymeric materials affect their dynamic behavior is becoming of increased importance as new polymeric materials with more and more complex architectures are synthesized7.

Let us consider a set of N beads, all subject to the same friction constant  with respect to the sorrounding viscous medium, and connected pairwise by harmonic strings. The pattern of the related string constants is provided by the matrix L in such a way that for the couple (i, j) it reads CLij, where C is a suitable constant. The equation describing the motion of the j-th bead is the following Langevin equation

with respect to the sorrounding viscous medium, and connected pairwise by harmonic strings. The pattern of the related string constants is provided by the matrix L in such a way that for the couple (i, j) it reads CLij, where C is a suitable constant. The equation describing the motion of the j-th bead is the following Langevin equation

where Rj(t) = (Xj(t), Yj(t), Zj(t)) represents the position in the space of the j-th bead at time t, L is the Laplacian matrix associated to the structure of the polymer, fj(t) is the thermal Gaussian noise [Note: More precisely, it is assumed that 〈fj(t)〉 = 0 and  , where kB is the Boltzmann constant, T is the temperature,

, where kB is the Boltzmann constant, T is the temperature,  is the friction constant, α and β represent the x, y, and z directions.], and Fj(t) is the sum of all external forces acting on the j-th bead.

is the friction constant, α and β represent the x, y, and z directions.], and Fj(t) is the sum of all external forces acting on the j-th bead.

One can show that, acting (from time t = 0 onwards) with a constant external force Fk(t) = Fδjk on a single bead j of the polymer in the, say, y direction, the mean displacement of a bead turns out to be (see ref. 7)

where  is the bond rate constant and

is the bond rate constant and  are the non-null eigenvalues of matrix L, with φ1 being the unique (for otherwise the polymer would be split in several parts) smallest eigenvalue 0.

are the non-null eigenvalues of matrix L, with φ1 being the unique (for otherwise the polymer would be split in several parts) smallest eigenvalue 0.

When the applied force is harmonic, namely  , say along the x direction, the related response function is the so-called complex dynamic (shear) modulus G*(ω), whose real, i.e. G′(ω), and imaginary, i.e., G″(ω), components are also referred to as the storage and the loss moduli, respectively7. In the generalized Gaussian structure model these are given by

, say along the x direction, the related response function is the so-called complex dynamic (shear) modulus G*(ω), whose real, i.e. G′(ω), and imaginary, i.e., G″(ω), components are also referred to as the storage and the loss moduli, respectively7. In the generalized Gaussian structure model these are given by

where ν represents the beads per unit volume.

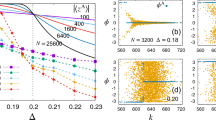

Let us now resume the graph  : as discussed previously, this represents a set of interconnected nodes where the coupling pattern exhibits hierarchy and modularity (see Fig. 6, right panel).

: as discussed previously, this represents a set of interconnected nodes where the coupling pattern exhibits hierarchy and modularity (see Fig. 6, right panel).

Left panel: Schematic representation of the polymer network described by  : N = 8 beads are pairwise coupled via couplings whose pattern exhibits a hierarchical structure. Right panel: Trend of the mean displacement of a bead 〈Y(t)〉 as a function of t ∈ [10−2, 106], for K = 8 and for different values of σ ∈ (1/2, 1], as shown by the legend. As highlighted analytically (see Eqs 66 and 67), for short and long times the mean displacement grows linearly with time and the rate of growth is independent of σ. On the other hand, in the intermediate time interval, the rate of growth is sub-linear and slower when the pattern of couplings is more homogeneous. The data points shown here are obtained by numerically evaluating Eq. 65, where we set

: N = 8 beads are pairwise coupled via couplings whose pattern exhibits a hierarchical structure. Right panel: Trend of the mean displacement of a bead 〈Y(t)〉 as a function of t ∈ [10−2, 106], for K = 8 and for different values of σ ∈ (1/2, 1], as shown by the legend. As highlighted analytically (see Eqs 66 and 67), for short and long times the mean displacement grows linearly with time and the rate of growth is independent of σ. On the other hand, in the intermediate time interval, the rate of growth is sub-linear and slower when the pattern of couplings is more homogeneous. The data points shown here are obtained by numerically evaluating Eq. 65, where we set  and γ = 2, lines are guide to eye. The inset shows a zoom on the long-time region where the two extremal cases σ = 0.5001 and σ = 1 are compared with the linear law y = t/N + τ/(Nw) (see Eq. 67, solid line).

and γ = 2, lines are guide to eye. The inset shows a zoom on the long-time region where the two extremal cases σ = 0.5001 and σ = 1 are compared with the linear law y = t/N + τ/(Nw) (see Eq. 67, solid line).

Exploiting the results found for the hierarchical graph under study (see Eqs 16 and 17), we can restate Eq. 62 as

In the limit of very short time, the terms to be summed up in Eq. 65 can be approximated as  , hence obtaining the linear scaling

, hence obtaining the linear scaling

Similarly, in the limit of very long times, we can write  and, recalling the results in Eq. 51, we get

and, recalling the results in Eq. 51, we get

Notice that the last term in the right hand side is proportional to the radius of gyration of the polymer considered58.

The results collected in Eqs 66 and 67 show that, in the limit of short and long times the mean displacement varies linearly with time, with a rate which is independent of σ. Therefore, the most interesting regime is the intermediate one, where the pattern of weights can possibly play a role. In fact, as shown in Fig. 6, this is the case and the resulting displacement is enhanced when the network is more inhomogeneous (i.e., σ is large).

As for the storage and loss moduli, exploiting the results of Section “Eigenvalues of the Dyson hierarchical graph”, Eqs 63 and 64 can be recast as

For several values of σ ∈ (1/2, 1], the behavior of G′ and of G″ has been evaluated numerically; the results for the case σ = 1 are shown in Fig. 7. As expected, G′ behaves as ω2 at low frequencies and as ω0 at high frequencies. In the intermediate regime the growth of G′ is smoothened and the width of such an intermediate scaling increases with σ. A similar behavior was evidenced for other unweighted networks displaying small-world features26,59. As for G″, it peaks at around ω ≈ 1, the exact value depending on the choice of σ. For relatively small frequencies, G″ is larger when the pattern of weights is more inhomogeneous (i.e., σ large), while for relatively large frequencies G″ is larger when the pattern of weights is more homogeneous (i.e., σ small). In any case, the expected scalings G″(ω) ~ ω and G″(ω) ~ ω−1 are recovered. This is again consistent with the results found for small-world structures in refs 26 and 59.

For the particular case σ = 1 we can estimate the asymptotic behaviour of G′ by upper-bounding the expression in Eq. 68, that is

The previous equation shows that G′(ω)|σ=1 scales at most linearly with N. Moreover, in the limit of low frequencies ( ), from Eq. 70 we can get G′(ω)|σ=1 ~ ω2N; this scaling is corroborated in Fig. 7 (left panel), where one can also see that this regime in the ω space shrinks with the size. In the opposite limit of high frequencies

), from Eq. 70 we can get G′(ω)|σ=1 ~ ω2N; this scaling is corroborated in Fig. 7 (left panel), where one can also see that this regime in the ω space shrinks with the size. In the opposite limit of high frequencies  , from Eq. 70 we get G′(ω)|σ=1 ~ ω0N0; again, the scaling is corroborated in Fig. 7 (left panel) and, this time, the width of this regime in the ω space does not depend on N.

, from Eq. 70 we get G′(ω)|σ=1 ~ ω0N0; again, the scaling is corroborated in Fig. 7 (left panel) and, this time, the width of this regime in the ω space does not depend on N.

Finally, as discussed in the section “Eigenvalues of the Dyson hierarchical graph”, the case σ = 1 corresponds to ds = 2 which is a critical value over which the polymeric structures collapse (however, under external forces, such polymers can unfold60, which makes this analysis reasonable). For the case ds = 2 the observables studied in this section display characteristic asymptotic behaviors in the intermediate time/frequency domain (see ref. 26 and references therein), namely 〈Y(t)〉 ~ log(t) and G′(ω) ~ G″(ω) ~ ω. These scalings have been successfully checked also for the graph  under study, as shown in Fig. 8.

under study, as shown in Fig. 8.

Three different sizes (N = 2K with K = 4, 8, 12) are compared and the theoretical expected behavior is also shown (dashed line). In particular, for the mean displacement the expected logarithmic scaling is highlighted by plotting the (natural) logarithm of 〈Y(t)〉 and comparing it with a linear trend.

Modularity

In graph theory the modularity Q is meant as a measure of the quality of a particular division of a network into a set of clusters (or groups, or communities)29,61,62. More precisely, for an unweighted graph, the modularity is the fraction of links falling within clusters minus the expected fraction in a network where links are placed at random (conditional on the given cluster memberships and the degrees of vertices). Thus, if the number of links within a group is no better than random, the modularity is zero. On the other hand, a modularity approaching one is indicative of a strong community structure, that is, a dense intra-group and a sparse inter-group connection pattern. Denoting with A the (unweighted) adjacency matrix of the graph, with  the degree of a node i, and with

the degree of a node i, and with  the number of edges in the graph, the modularity can be written as

the number of edges in the graph, the modularity can be written as

where  is the cluster to which node i is assigned. The previous expression can be extended to assess community divisions in weighted networks as

is the cluster to which node i is assigned. The previous expression can be extended to assess community divisions in weighted networks as

where  is the overall weight spread over all the edges. Of course, Eq. 73 recovers Eq. 72 as long as weights collapse to the value 1.

is the overall weight spread over all the edges. Of course, Eq. 73 recovers Eq. 72 as long as weights collapse to the value 1.

Let us write Eq. 73 in a more convenient way29. First, we notice that the terms to be summed up in Eq. 73 can be considered as elements of the N × N matrix M given by

Moreover, for a partitioning of the network into c clusters one can introduce the N × c “community matrix” S defined as follows. As anticipated, the c ∈ [1, N] disjoint, non-empty subsets are denoted by  ; clearly,

; clearly,  . Then, the first column of S is a vector

. Then, the first column of S is a vector  , where ek and 0k are, respectively, the ones and zeros vector of length k; the second column of S is a vector

, where ek and 0k are, respectively, the ones and zeros vector of length k; the second column of S is a vector  ; similarly for the remaining c − 2 columns. Notice that STS is a diagonal matrix where the k-th diagonal term is

; similarly for the remaining c − 2 columns. Notice that STS is a diagonal matrix where the k-th diagonal term is  and that, for an arbitrary couple of nodes (i, j),

and that, for an arbitrary couple of nodes (i, j),  . As a result, Eq. 73 can be recast as

. As a result, Eq. 73 can be recast as

A spectral upper bound Qub for the modularity can be found as (see ref. 29 for a complete derivation)

where  is the largest eigenvalue of M.

is the largest eigenvalue of M.

Now, the spectra of the modularity matrix is strongly related with the spectra of the weight matrix J (or, in the case of unweighted graphs, of the adjacency matrix A), see e.g., ref. 29. In particular, for regular graphs where  ,

,  , the eigenvalues

, the eigenvalues  of the modularity matrix M are equal to the eigenvalues {λi} of J (and, in turn, under a proper shift to eigenvalues {φi} of L), but the largest eigenvalue λK is replaced by the eigenvalue 0. This is proven rigorously for unweigthed graphs29 and the proof can be straightforwardly extended to regular weighted graphs.

of the modularity matrix M are equal to the eigenvalues {λi} of J (and, in turn, under a proper shift to eigenvalues {φi} of L), but the largest eigenvalue λK is replaced by the eigenvalue 0. This is proven rigorously for unweigthed graphs29 and the proof can be straightforwardly extended to regular weighted graphs.

Specifically, for the graph  under study, recalling the results in Eqs 13 and 14, the spectrum of M reads as

under study, recalling the results in Eqs 13 and 14, the spectrum of M reads as

and, of course, LJ = Nw/2. By plugging  in Eq. 76 we can estimate the modularity of a given partition

in Eq. 76 we can estimate the modularity of a given partition  . For instance, in the case of equipartition where there are c clusters of size N/c we get, no matter how clusters are chosen,

. For instance, in the case of equipartition where there are c clusters of size N/c we get, no matter how clusters are chosen,

If we choose as graph partitioning the one suggested by the pattern of weights, namely the one with c = N/2 clusters (i.e., the set of dimers), or the one with c = N/4 clusters, …, or the one with c = 2 clusters (i.e., the two main branches of the graph), we can obtain an exact expression for (75), as explained hereafter. We consider the matrix S and, computing the trace of the matrix STMS for different choices of l ∈ [1, K], we have

where, to lighten the notation, we wrote J(d) = J(d, K, σ) and we simply highlighted the dependence on the logarithmic size l of each cluster l = log2(N/c), l ∈ [1, K]; also, in the last passage we used t = 4−σ and the approximation follows the assumption  . Notice that Q(l, K, σ) is a concave function of l with a peak at l∗ which can be estimated by solving the following (see Eq. 80)

. Notice that Q(l, K, σ) is a concave function of l with a peak at l∗ which can be estimated by solving the following (see Eq. 80)

We can find the explicit solution for particular values of σ. For instance, setting σ = 1 we get

where the expansion holds for  ; more simply, we get that l∗(K, σ) ~ K/2, that is, the partition which maximizes the modularity when σ = 1 is the one where clusters have a size scaling as

; more simply, we get that l∗(K, σ) ~ K/2, that is, the partition which maximizes the modularity when σ = 1 is the one where clusters have a size scaling as  . From Eq. 81 one can also see that, by decreasing σ, the root l∗ grows. In Fig. 9 we compare Q and Qub for a network of generation K = 30. The latter provides a very good estimate for large values of l (i.e., clusters of large sizes) and over a range which increases with σ.

. From Eq. 81 one can also see that, by decreasing σ, the root l∗ grows. In Fig. 9 we compare Q and Qub for a network of generation K = 30. The latter provides a very good estimate for large values of l (i.e., clusters of large sizes) and over a range which increases with σ.

) and its upper bound Qub(l, K, σ>) (solid line) are evaluated from Eqs 77 and 79 for a system of generation K = 30 and plotted versus the logarithmic cluster size l ∈ [0, 30] for different values of σ.

) and its upper bound Qub(l, K, σ>) (solid line) are evaluated from Eqs 77 and 79 for a system of generation K = 30 and plotted versus the logarithmic cluster size l ∈ [0, 30] for different values of σ.Conclusions

In this work we considered the hierarchical graph  introduced by Dyson and we calculated the exact spectrum of its Laplacian matrix L.

introduced by Dyson and we calculated the exact spectrum of its Laplacian matrix L.

We recall that  is a weighted fully-connected graph, whose pattern of weights is ruled by the decay rate σ ∈ (1/2, 1]. A notion of metric can be defined in such a way that a couple of nodes (i, j) at distance dij are connected by a link associated to the weight

is a weighted fully-connected graph, whose pattern of weights is ruled by the decay rate σ ∈ (1/2, 1]. A notion of metric can be defined in such a way that a couple of nodes (i, j) at distance dij are connected by a link associated to the weight  . Thus, σ tunes the inhomogeneity of the pattern of weights (when σ → 1/2 the pattern is more homogeneous, while when σ = 1 distant nodes are only weakly connected).

. Thus, σ tunes the inhomogeneity of the pattern of weights (when σ → 1/2 the pattern is more homogeneous, while when σ = 1 distant nodes are only weakly connected).

Here, exploiting the deterministic recursivity through which  is built, we are able to derive explicitly the whole set of its Laplacian eigenvalues and eigenvectors. In this way, a large class of problems embedded in

is built, we are able to derive explicitly the whole set of its Laplacian eigenvalues and eigenvectors. In this way, a large class of problems embedded in  can be addressed analytically. In fact, the Laplacian matrix (also called the admittance matrix or Kirchhoff matrix, see e.g., refs 15 and 16) is a discrete analog of the Laplacian operator in multivariable calculus and it naturally arises in the analysis of dynamic processes (e.g., random walks) occurring on the graph but also in the investigation of the dynamical properties of connected structures themselves (e.g., vibrational structures and the relaxation modes)17,18,19,20. Also, several topological features (e.g., the number of spanning trees, the graph partitioning) can be quantified or, at least, bounded, through the Laplacian eigenvalues.

can be addressed analytically. In fact, the Laplacian matrix (also called the admittance matrix or Kirchhoff matrix, see e.g., refs 15 and 16) is a discrete analog of the Laplacian operator in multivariable calculus and it naturally arises in the analysis of dynamic processes (e.g., random walks) occurring on the graph but also in the investigation of the dynamical properties of connected structures themselves (e.g., vibrational structures and the relaxation modes)17,18,19,20. Also, several topological features (e.g., the number of spanning trees, the graph partitioning) can be quantified or, at least, bounded, through the Laplacian eigenvalues.

As examples of applications we studied the random walk moving isotropically in  , the continuous-time quantum walk moving in a potential given by

, the continuous-time quantum walk moving in a potential given by  , and the relaxation times of a polymer whose structure is described by

, and the relaxation times of a polymer whose structure is described by  .

.

As for the simple random walk, we found that the expected time Tj to first reach a given node j depends (super) linearly with the graph size N, the exact dependence being qualitatively affected by σ. In particular, when the pattern of weights is more inhomogeneous Tj grows faster with N, as expected due to the unlikelihood to reach the farthest nodes.

As for the quantum walk, we proved that the long time average, measuring how spread the walker finally gets, is finite and lower bounded by 1/3 even in the limit of large size. This value has to be compared with the equipartition limit 1/N holding for the classical case, suggesting that the coherent transport is not well performing on  . Also, in the presence of absorbing traps, according to their arrangement, surviving stationary states can be established.

. Also, in the presence of absorbing traps, according to their arrangement, surviving stationary states can be established.

Next, in the generalized Gaussian framework, we investigate the dynamics of polymers constituted by beads identified with the nodes of  and connected by elastic springs whose coupling is identified with the weights encoded by J. Using the Laplacian eigenvalues of