Abstract

With the prevalence of pre-trained language models (PLMs) and the pre-training–fine-tuning paradigm, it has been continuously shown that larger models tend to yield better performance. However, as PLMs scale up, fine-tuning and storing all the parameters is prohibitively costly and eventually becomes practically infeasible. This necessitates a new branch of research focusing on the parameter-efficient adaptation of PLMs, which optimizes a small portion of the model parameters while keeping the rest fixed, drastically cutting down computation and storage costs. In general, this line of work demonstrates that large-scale models can be effectively stimulated by the optimization of a few parameters. Despite the various designs, here we discuss and analyse the approaches under a more consistent and accessible term ‘delta-tuning’, where ‘delta’, a mathematical notation often used to denote changes, is borrowed to refer to the portion of parameters that are ‘changed’ during training. We formally describe the problem and propose a unified categorization criterion for existing delta-tuning methods to explore their correlations and differences. We also discuss the theoretical principles underlying the effectiveness of delta-tuning and interpret them from the perspectives of optimization and optimal control. Furthermore, we provide a holistic empirical study on over 100 natural language processing tasks and investigate various aspects of delta-tuning. With comprehensive study and analysis, our research demonstrates the theoretical and practical properties of delta-tuning in the adaptation of PLMs.

Main

With the revolutionary development in computing hardware, traditional statistical methods for modelling natural language have yielded their place to deep learning1 that heavily relies on tensor computation and huge data volume. Modern natural language processing (NLP) uses deep neural networks to implicitly model language distribution and capture language representations2,3,4. A standard pipeline involves encoding language into discrete tokens (tokenization) as model input, choosing a proper model architecture, designing corresponding tasks and training the network with the given corpora. Among these deep neural architectures, the transformer neural network4 produces state-of-the-art performances on a series of NLP applications. Subsequently, the advancement in pre-trained language models (PLMs) using deep transformers as their foundation has ushered in a new era of NLP. PLMs typically use heavily over-parameterized transformers as the base architecture and model natural language in bidirectional5, autoregressive6,7 or sequence-to-sequence8 manners on large-scale unsupervised corpora. Then for downstream tasks, task-specific objectives are introduced to fine-tune the PLMs for model adaptation. Notably, the increasing scale of PLMs (measured by the number of parameters) seems to be an irreversible trend, as constant empirical results show that larger models (along with more data) almost certainly lead to better performance. For example, with 175 billion parameters, Generative Pre-trained Transformer 3 (GPT-3)9 generates natural language of unprecedented quality and can conduct various desired zero-shot tasks with satisfactory results given appropriate prompts. Subsequently, a series of large-scale models such as Gopher10, Megatron-Turing Natural Language Generation (NLG)11 and Pathways Language Model (PaLM)12 have repeatedly shown effectiveness on a broad range of downstream tasks.

As the model scales, how to efficiently and effectively adapt large models to particular downstream tasks becomes an intriguing research issue. Although in-context learning has shown promising performance for PLMs such as GPT-3, fine-tuning still outperforms it under the task-specific setting. However, the predominant approach, full parameter fine-tuning, which initializes the model with the pre-trained weights, updates all the parameters and produces separate instances for different tasks, becomes impractical when dealing with large-scale models. In addition to the cost of deployment and computation, storing different instances for different tasks is extremely memory intensive. To further explore the practical application rate of large models (PLMs with over 1 billion parameters), we randomly select 1,200 published research papers from six recent NLP conferences (200 for each venue): Annual Meeting of the Association for Computational Linguistics (ACL) 2022, ACL 2021, Conference on Empirical Methods in Natural Language Processing (EMNLP) 2021, Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL) 2021, ACL 2020 and EMNLP 2020. Then we manually count the usage of PLMs in these peer-reviewed works, focusing on only the experimental part of the papers. According to the statistics in Extended Data Table 1, although the use of PLMs has become increasingly popular, only about 0.5–4% of research papers actually use large PLMs in their experiments. One of the reasons for their unpopularity is the unaffordable cost of deploying and experimentally validating large PLMs.

In fact, large PLMs with billions of parameters could be effectively driven by optimization of a few parameters, and a branch of parameter-efficient methods for model tuning arises. Although each of these approaches proposes distinct designs on the structure and location of trainable parameters in PLMs, they essentially tune a ‘delta’ in the adaptation phase, which refers to a small fraction of trainable parameters that can be placed anywhere in the PLM. We thus unify them under a more accessible term ‘delta-tuning’ that captures the essence of this branch of methods more precisely. In general, delta-tuning updates only a small number of parameters (inherently in the model or additionally introduced) while freezing the remaining parameters that account for the vast majority. Adapter tuning13 is among the earliest approaches to steer pre-trained models with a limited number of parameters. It inserts adapter modules with a bottleneck architecture between layers in PLMs, and only these inserted modules get updated during fine-tuning. BitFit14 updates the bias terms in PLMs while freezing the remaining modules. Low-rank adaptation (LoRA)15 decomposes the attention weight update into low-rank matrices to reduce the number of trainable parameters. The delta-tuning methods enable efficient tuning and practical usage for large pre-trained models and often achieve comparable results to the standard fine-tuning. For example, the vanilla fine-tuning of GPT-3 needs to update about 175,255 million parameters, which is almost infeasible in both industry and academia. However, if we tune only the injected low-rank decomposition matrices in each transformer layer15, only 37.7 million parameters will be involved in backpropagation. Delta-tuning not only provides a promising way to adapt large PLMs but also sheds light on the mechanisms behind such model adaptations. Compared with fine-tuning, delta-tuning makes model adaptation a considerably low-cost process. For instance, researchers find that the optimization problem of the adaptations for big models could be reparameterized into a low-dimensional ‘intrinsic subspace’16,17 and various NLP tasks could be handled by tuning only very few parameters in the subspace. The empirical evidence takes us one step closer to understanding how pre-trained models work and may even spawn new theoretical questions that are worth exploring.
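To make the gap between 175,255 million and 37.7 million parameters concrete, the following back-of-the-envelope sketch counts the trainable parameters of a low-rank update. The layer count, hidden size, rank and number of adapted matrices are assumptions chosen for illustration; they are not stated in this text, and they describe only one configuration that happens to be consistent with the 37.7 million figure.

```python
# Back-of-the-envelope count of LoRA trainable parameters.
# All architecture values below are assumptions for illustration.
N_LAYERS = 96            # assumed number of transformer layers in GPT-3
D_MODEL = 12288          # assumed hidden size of GPT-3
RANK = 8                 # assumed LoRA rank
ADAPTED_PER_LAYER = 2    # assumed: two attention projections adapted per layer

FULL_PARAMS = 175_255_000_000  # figure quoted in the text (175,255 million)


def lora_trainable_params(n_layers, d_model, rank, adapted_per_layer):
    """Each adapted d x d matrix gets two factors: (d x r) and (r x d)."""
    per_matrix = 2 * d_model * rank
    return n_layers * adapted_per_layer * per_matrix


lora_params = lora_trainable_params(N_LAYERS, D_MODEL, RANK, ADAPTED_PER_LAYER)
fraction = lora_params / FULL_PARAMS

print(f"LoRA trainable parameters: {lora_params:,}")
print(f"Share of full fine-tuning: {fraction:.4%}")
```

Under these assumptions the count is 37,748,736, that is, roughly 37.7 million, or about two hundredths of one per cent of the parameters updated by vanilla fine-tuning.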

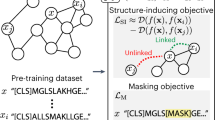

This Analysis attempts to comprehensively analyse recent advances in delta-tuning to establish a deeper understanding of this branch of methods (Methods). We formally describe the problem and categorize delta-tuning methods into addition-based, specification-based and reparameterization-based methods as illustrated in Fig. 4, then we comprehensively introduce the technical details and empirical conclusions of each method. To better understand the inner connections among the delta-tuning methods and the mechanisms of model adaptation, we develop theoretical analyses of delta-tuning by proposing theoretical frameworks from two different perspectives: optimization and optimal control. Our theoretical discussion is summarized as follows.

1. Optimization. Based on the knowledge of a low intrinsic dimension in a large PLM, we show that delta-tuning is essentially a subspace-optimization method with respect to the solution space or functional space. The discussion justifies the designs of the existing delta-tuning methods and explains some phenomena in the experiments.

2. Optimal control. Inspired by the relationship between deep learning and optimal control theories, we interpret delta-tuning as seeking optimal controllers for PLMs. We propose an optimal control framework that unifies different delta-tuning approaches. Our analysis provides theoretical references for the novel design of delta-tuning methods.

In terms of empirical studies, we carry out extensive and systematic experiments (Results) on over 100 NLP tasks to rigorously explore the performances, combinability, the power of scale, transferability and so on. Our main findings are summarized as follows.

1. Performance. Delta-tuning yields consistent and non-trivial performance on more than 100 NLP tasks, showing that it is an effective and lightweight alternative to conventional fine-tuning. Among several representative delta-tuning methods, no single algorithm predominantly outperforms the others.

2. Convergence. Training stability is also one of our focuses. Although the convergence of delta-tuning is generally not as fast as that of full parameter fine-tuning, we find that it is more sensitive to the delta structures than to the number of tunable parameters. Meanwhile, the larger the model is, the faster the training converges.

3. Efficiency. In terms of computational efficiency, which is the original motivation for the methods, delta-tuning could substantially improve computational and storage efficiency while achieving decent results, highlighting the promising practical value of adapting super-large PLMs.

4. Combinability. Combining multiple delta-tuning methods is more effective than a single method in most cases, although the optimal combination may vary for different PLM backbones, downstream tasks and data scales. This finding implies the existence of an optimal delta structure, and it is likely that such a structure cannot be designed by hand but could be generated automatically.

5. Power of scale. The power of scale (that is, both the performance and convergence are improved when the size of the PLM increases) is observed in all of the delta-tuning methods, even in unregulated neural modules. In other words, when the model size is large enough, only optimizing a random portion of parameters can achieve comparable performance to conventional fine-tuning.

6. Transferability. Existing delta-tuning methods could well support knowledge transfer, showing non-trivial transferability among downstream tasks of similar categories. The finding suggests that we could establish a common platform to share and migrate these lightweight delta objects (that is, the portion of the fine-tuned parameters).

We discuss the practicality and applications of delta-tuning from various perspectives in Supplementary Section 6, including efficient training and shareable checkpoints, multi-task learning, catastrophic forgetting mitigation and model-as-service. Hopefully, this Analysis will inspire research to advance the efficient adaptation of large language models.

Results

As an effective engine to stimulate large-size PLMs, delta-tuning presents an enormous practical potential for various real-world applications. We carried out systematic experiments to gain a deeper understanding of the attributes of different mainstream delta-tuning methods. Specifically, (1) we first conduct thorough comparisons among four representative delta-tuning methods and fine-tuning, covering the performance, convergence and the efficiency analysis. (2) We explore the combinability of three representative delta-tuning methods by comparing the performance under both the full-data and low-resource settings. We also explore the effects of manual templates and compare the generalization gap of different delta-tuning methods. Furthermore, we investigate (3) the scaling law and (4) the transferability of delta-tuning methods among different downstream tasks. The implementation details and tasks are described in Supplementary Sections 3 and 4.

Performance, convergence and efficiency

Experimental setting

We evaluate vanilla fine-tuning (FT) and four representative delta-tuning methods, including prompt-tuning (PT), prefix-tuning (PF), LoRA (LR) and adapter (AP). We follow the common practice for each delta-tuning implementation, and the training details are provided in Supplementary Section 3.1.

To cover broad and diverse NLP tasks, we select over 100 representative tasks from Huggingface datasets18. The selected tasks include text classification (for example, sentiment analysis and natural language inference), question answering (for example, machine reading comprehension and multi-choice question answering), conditional generation (for example, summarization and dialogue) and so on. We list the task details of each category in Supplementary Table 4. To handle different tasks with a single text-to-text PLM, we process the input and output of each task into the same sequence-to-sequence format. T5BASE and T5LARGE are two PLMs with the T5 architecture released by ref. 8. We choose T5BASE (ref. 8) as the mainly evaluated PLM backbone for different tuning methods, and we also report the performance of PT with T5LARGE (ref. 8).

Performance analysis

The overall results are listed in Table 1, from which we observe the following.

1. In general, despite the substantial reduction of tunable parameters, different delta-tuning methods are almost comparable to FT in performance in most cases. This demonstrates the potential of driving large-scale PLMs through parameter-efficient adaptation.

2. Despite having different design elements, PF, LR and AP are comparable to each other in performance. Specifically, each can show dominant performance (even better than FT) over others on certain tasks. According to the average results, the performances of all the methods are ranked as FT > LR > AP > PF > PT. Interestingly, the performance of the delta-tuning methods is not consistent with their number of tunable parameters, that is, at least on small PLMs, more tunable parameters do not necessarily lead to better performance, and the design of the structure for delta-tuning may play a greater role.

3. PT lags far behind other delta-tuning methods in most cases, despite being the easiest method to implement (that is, without modifying the internal structure of the model). Another interesting finding is that better PT performance is observed when the model size is enlarged to T5LARGE, which is aligned with previous findings on the power of scale for prompt-tuning19. However, as we show later, other delta-tuning methods also exhibit far better performance when the scale of the backbone PLM grows extremely large. The phenomenon implies that when the model size increases sharply, the design of the structure may become less important for delta-tuning methods.

Convergence analysis

In Fig. 1, Extended Data Fig. 1 and Supplementary Fig. 3, we visualize the performance of different delta-tuning methods (LR, AP and PF) and fine-tuning (FT) at different training steps to compare their convergence rate. We also report the convergence rate with respect to training time in Extended Data Fig. 2. As PT lags far behind other tuning methods in convergence, we do not visualize it in the figures. However, as mentioned in Methods, PT is the easiest method to implement and it is the desirable method to theoretically and empirically study the convergence issue across different sizes of PLMs. Our findings are summarized as follows.

1. The convergence rate of these tuning methods is ranked as: FT > AP ≈ LR > PF. Overall, FT converges the fastest.

2. We also find empirically that, (1) within a reasonably broad range, the performance and convergence of each delta-tuning method are not sensitive to the number of tunable parameters, but more sensitive to the structures of the methods, and (2) with the scale of PLM growing larger, the convergence of delta-tuning is also accelerated (see ‘The power of scale for delta-tuning’ section).

Fig. 1 caption: We apply early stopping to all methods. We choose three metrics: (1) exact match (EM), which measures the percentage of correctly predicted answers that exactly match the ground-truth answer; (2) classification F1, which is calculated as the harmonic mean of precision and recall; and (3) accuracy (ACC), which measures the percentage of correctly predicted instances out of all instances. The performance of PT is omitted as it lags far behind other tuning methods in both convergence and performance. The convergence rate of these tuning methods is ranked as: FT > AP ≈ LR > PF.
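The three metrics named above can be written down in a few lines. The sketch below is a minimal illustration rather than the evaluation code used in the experiments: it assumes that exact match compares raw strings without any normalization and that F1 is computed for a single positive class, neither of which is specified in the text.

```python
def exact_match(predictions, references):
    """Share of predicted answers identical to the ground-truth answer.

    Assumption: strings are compared as-is, with no normalization.
    """
    matches = sum(p == r for p, r in zip(predictions, references))
    return matches / len(references)


def accuracy(predictions, labels):
    """Share of correctly predicted instances out of all instances."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def binary_f1(predictions, labels, positive=1):
    """Harmonic mean of precision and recall for one positive class (assumed)."""
    pairs = list(zip(predictions, labels))
    tp = sum(p == positive and y == positive for p, y in pairs)
    fp = sum(p == positive and y != positive for p, y in pairs)
    fn = sum(p != positive and y == positive for p, y in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy data, invented for illustration only.
em = exact_match(["paris", "london"], ["paris", "berlin"])
acc = accuracy([1, 0, 1, 1], [1, 0, 0, 1])
f1 = binary_f1([1, 0, 1, 1], [1, 0, 0, 1])
print(em, acc, f1)
```

On the toy data the precision is 2/3 and the recall is 1, so the harmonic mean is 0.8, whereas the accuracy is 0.75; the two classification metrics can therefore rank methods differently on imbalanced data.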

To summarize, our experiments yield similar conclusions in convergence and overall performance. These conclusions are well supported by the fact that we used the same experimental and implementation set-up, the same model selection strategy and diverse tasks.

Efficiency analysis

Here we study the efficiency of delta-tuning from the perspectives of memory efficiency and computation efficiency. For memory efficiency, in Fig. 2 we compare the graphics processing unit (GPU) memory consumed by different delta-tuning methods and fine-tuning across different PLM scales. T5XL is the PLM with the T5 architecture released by ref. 8. Specifically, we choose three scales of the T5 model, that is, T5BASE, T5LARGE and T5XL, and test the peak GPU memory under different batch sizes. The static GPU memory, which leaves out intermediate tensors such as hidden states, is plotted at a batch size of 0. We use an NVIDIA A100 GPU (maximum GPU memory 39.58 GB) and the OpenDelta library for these experiments. For the cases that consume more GPU memory than a single A100 provides, we parallelize the model across multiple GPUs, which does not introduce additional memory consumption. We observe from the figure that under small batch sizes (for example, 1 and 8), delta-tuning saves up to 3/4 of the GPU memory; under large batch sizes (for example, 32 and 64), delta-tuning saves about 1/3–1/2 of the GPU memory. This demonstrates that delta-tuning saves GPU memory by removing the need for gradient computations for most of the parameters. Given that small batch sizes are preferred when utilizing big models, delta-tuning has great potential to apply to large-scale PLMs. Furthermore, among the investigated methods, BitFit is the most memory efficient.
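A rough accounting of static training memory helps explain why the saving is largest at small batch sizes. The sketch below is an estimate under stated assumptions, not a reproduction of the measurements in Fig. 2: it assumes 32-bit storage throughout and an Adam-style optimizer that keeps two moment tensors per trainable parameter, and the parameter counts are hypothetical.

```python
BYTES_FP32 = 4  # assumed: weights, gradients and optimizer states stored in fp32


def static_training_bytes(total_params, trainable_params):
    """Estimate memory excluding activations (intermediate tensors).

    Assumption: an Adam-style optimizer with two moment tensors
    per trainable parameter; frozen parameters need only their weights.
    """
    weights = total_params * BYTES_FP32
    gradients = trainable_params * BYTES_FP32
    optimizer_states = 2 * trainable_params * BYTES_FP32
    return weights + gradients + optimizer_states


# Hypothetical sizes, chosen only to illustrate the ratio.
total = 1_000_000_000   # parameters in the backbone
delta = 1_000_000       # trainable delta parameters

full_ft = static_training_bytes(total, total)
delta_ft = static_training_bytes(total, delta)
ratio = delta_ft / full_ft

print(f"fine-tuning:  {full_ft / 1e9:.2f} GB")
print(f"delta-tuning: {delta_ft / 1e9:.2f} GB")
print(f"ratio:        {ratio:.3f}")
```

Under these assumptions the static footprint of delta-tuning is about a quarter of that of fine-tuning, because gradients and optimizer states are kept only for the delta parameters. Activation memory, which grows with batch size and is needed by both approaches, is not modelled here, which is one plausible reason why the relative saving shrinks at larger batch sizes.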

In addition, although delta-tuning may converge slower than traditional fine-tuning, the computations of the tunable parameters in the optimizer are greatly reduced, which speeds up training. We compare the forwards time and the backwards time of prompt-tuning, BitFit, adapter tuning and fine-tuning in Extended Data Fig. 3, varying the input length. For a fair comparison, we keep the batch size the same. From the results, we can see that:

1. The structure of the delta-tuning methods could have a considerable impact on the time of a single forwards or backwards process. By greatly reducing the computations of the tunable parameters, the backwards time of delta-tuning methods is shorter than fine-tuning.

2. As the adapter injects additional neural modules to each layer of the transformer model, the path of data flow becomes longer and further leads to inference latency (longer forwards time).

Combinations of delta-tuning methods

Considering that different delta-tuning methods are compatible with each other, which means they could be applied on the same PLM together, we investigate whether such a combination would bring additional benefits. Specifically, we evaluate both simultaneous combination and sequential combination. We choose three representative delta-tuning methods, including prompt-tuning, BitFit and adapter, to explore the effects of their combinations. The training details are described in Supplementary Section 3.2.

Simultaneous combination

We first explore the effects of directly applying all the three delta-tuning methods simultaneously. RoBERTaLARGE is the PLM released by ref. 20 and GLUE21 is the official benchmark for language understanding ability evaluation. The experiments are conducted using RoBERTaLARGE on eight tasks of GLUE (full-data setting), and we report the performance on the official development sets. We also test the performance of RoBERTaLARGE under the few-shot setting, where we randomly sample 16 training examples per label to construct the new training set and development set, respectively. Similar to prompt-based fine-tuning22, we insert a natural language prompt template into the input text for each task, and the detailed implementations are described in Supplementary Section 3.2.

We list the results of simultaneous combination for RoBERTaLARGE in Table 2 (the results of T5BASE are listed in Extended Data Table 2, with discussions in Supplementary Section 3.2), from which we conclude that:

1. Under both the full-data setting and few-shot setting, introducing adapter into the combination almost always improves the average performance across GLUE tasks, regardless of whether manual templates are used.

2. Introducing prompt-tuning into the combination generally harms the average performance, showing that prompt-tuning may not be compatible with the other two delta-tuning methods.

3. Introducing BitFit into the combination generally improves the average performance.

4. Manual templates could substantially improve the zero-shot performance (from 23.7 to 43.4) by narrowing the gap between downstream tuning and pre-training. Under the few-shot setting, manual templates could also help boost the average performance evidently. However, when the training supervision is abundant (full-data setting), manual templates only show marginal improvements.

Sequential combination

In addition to the simultaneous combination, we further investigate the compatibility when the above three delta-tuning methods (prompt-tuning, BitFit and adapter) are sequentially introduced. Specifically, we split the whole tuning process into three stages. During each stage, we train an individual delta-tuning method for 6,000 steps; in the following stages, we freeze the tuned parameters in the previous stages and optimize only the newly introduced delta parameters. SST-2 (ref. 23) is a dataset that evaluates sentiment analysis ability. We experiment with RoBERTaLARGE on SST-2 with and without manual templates. The results are visualized in Extended Data Fig. 4, from which we observe that:

1. Under certain cases, the performance can be improved with the involvement of subsequent delta-tuning methods.

2. However, there does not exist an optimal sequential combination strategy that could dominate other combination strategies under different settings.
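The staged procedure described above can be summarized as a schedule in which each stage trains one delta method while all previously tuned deltas stay frozen. The sketch below only builds and prints such schedules; the function and field names are hypothetical, and no training is performed.

```python
from itertools import permutations

STEPS_PER_STAGE = 6000  # number of steps per stage, as stated in the text


def sequential_schedule(method_order):
    """Build one entry per stage for a given ordering of delta methods.

    The backbone PLM is frozen in every stage; only the newly
    introduced delta parameters are trainable.
    """
    schedule = []
    for stage, method in enumerate(method_order, start=1):
        schedule.append({
            "stage": stage,
            "trainable": [method],
            "frozen_deltas": list(method_order[:stage - 1]),
            "steps": STEPS_PER_STAGE,
        })
    return schedule


methods = ["prompt-tuning", "BitFit", "adapter"]
schedule = sequential_schedule(methods)
for entry in schedule:
    print(entry)

# Every ordering of the three methods is a candidate strategy.
all_orders = list(permutations(methods))
print(len(all_orders), "possible orderings")
```

Three methods give six possible orderings, each of which has to be trained and evaluated separately for every setting, which illustrates why the absence of a single dominant ordering makes the search for a good combination costly.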

Generalization gap

In addition, we report the generalization gap (train performance − dev performance) for RoBERTaLARGE under the full-data setting, with the results shown in Extended Data Table 3. We observe that:

1. The gap of a single delta-tuning method is always smaller than that of fine-tuning, which means over-parameterization may help better memorize (overfit) training samples. Among all the delta-tuning methods, prompt-tuning tends to have the smallest generalization gap. Considering that each delta-tuning method could already generalize well and achieve non-trivial performance on the development set, overfitting the training set may not be the prerequisite for good generalization.

2. In general, combining delta-tuning methods would enlarge the generalization gap, even to the extent that is comparable to fine-tuning, despite tuning far fewer parameters. This suggests that, for the investigated tasks, memorizing the training set may not require employing all of the parameters; in other words, a small model capacity during downstream adaptation may be enough for good memorization.

3. Utilizing manual templates generally would not influence the generalization gap.

Conclusion

The above experiments indicate that different delta-tuning methods have distinct functionalities for the optimization of PLMs; thus, combining them is generally conducive to the downstream performance. However, as shown in the above results, the optimal combination of delta-tuning methods may vary considerably under different settings. It would therefore be interesting to explore, in the future, the mechanisms behind the inductive biases brought by different delta-tuning methods under different cases. We also encourage future research to systematically report the performance of proposed delta-tuning methods on various PLM backbones under different settings.

The power of scale for delta-tuning

With the scale of the backbone PLM growing, prompt-tuning becomes more and more competitive in performance, and would even achieve comparable performance to fine-tuning for a PLM with over 10 billion parameters19; the convergence speed of prompt-tuning also benefits from the scaling law. In this section, we explore whether other delta-tuning methods also exhibit the power of scale. MNLI and QNLI are two natural language inference datasets, and T5SMALL and T5XXL are two PLMs with the T5 architecture released by ref. 8. Specifically, we experiment on the tasks of MNLI, QNLI and SST-2, choose three PLMs (T5SMALL, T5BASE and T5XXL) of increasing sizes, and evaluate the performance of five representative delta-tuning methods (adapter, LoRA, prefix-tuning, last-layer tuning and selective-module tuning). We describe the percentages of the tuned parameters for each method in all scales of the PLM in Supplementary Table 3. The training details are provided in Supplementary Section 3.3. The results are visualized in Fig. 3. From Fig. 3a–i, we observe that with the scale of the PLM growing, both the performance and the convergence of all delta-tuning methods are greatly improved. All delta-tuning methods tend to show comparable performance to fine-tuning, even for a small-scale PLM (T5BASE).

Fig. 3 caption: a–o, We perform all delta-tuning methods on different scales of T5: T5SMALL, T5BASE and T5XXL. We report the performance of Adapter in (a–c), LoRA in (d–f), Prefix-tuning in (g–i), Last-layer tuning in (j–l), and Selective-module tuning in (m–o). From this figure, we can observe that with the scale of T5 increasing, all delta-tuning methods could converge faster and achieve better performance on MNLI, QNLI and SST-2.

On the basis of the existing results, we further design two delta-tuning methods: last-layer tuning and selective-module tuning. For last-layer tuning, we optimize the last layer in the T5 encoder; for selective-module tuning, we randomly choose some modules (for example, the feed-forward layer, query/key/value matrix in the attention layer, or a layer norm) in the T5 model to be tunable. The results are visualized in Fig. 3j–l,m–o, from which we could conclude that:

1. Both methods show promising results, especially when the scale of the PLM is extremely large, with selective-module tuning slightly better than last-layer tuning. These results suggest that confining the optimization within a specific layer may not be a good strategy (for example, the case of prompt-tuning and last-layer tuning).

2. Furthermore, randomly choosing modules across different layers could achieve excellent performance when the scale of PLMs grows extremely large.
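The two module-selection strategies discussed above differ only in which parameters are marked as tunable. The sketch below illustrates that selection step on a list of module names; the naming scheme, the fraction of modules selected and the helper functions are hypothetical and do not correspond to the actual T5 implementation or to the settings used in the experiments.

```python
import random

# Hypothetical module names for a toy 4-layer encoder.
MODULE_TYPES = ["attention.q", "attention.k", "attention.v", "ffn", "layer_norm"]
N_LAYERS = 4
module_names = [
    f"encoder.layer.{i}.{m}" for i in range(N_LAYERS) for m in MODULE_TYPES
]


def last_layer_modules(names, n_layers):
    """Last-layer tuning: only modules in the final encoder layer are tunable."""
    prefix = f"encoder.layer.{n_layers - 1}."
    return {name for name in names if name.startswith(prefix)}


def random_modules(names, fraction, seed=0):
    """Selective-module tuning: a random subset across all layers is tunable.

    The fraction is an illustrative knob, not a value from the text.
    """
    rng = random.Random(seed)
    k = max(1, round(fraction * len(names)))
    return set(rng.sample(names, k))


last_layer = last_layer_modules(module_names, N_LAYERS)
selected = random_modules(module_names, fraction=0.2)

print("last-layer tuning:", sorted(last_layer))
print("selective-module tuning:", sorted(selected))
```

In an actual training loop, every parameter outside the chosen set would be frozen before optimization. The random selection can spread tunable modules over several layers, whereas last-layer tuning confines them to one.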

In general, the above results imply that the power of scale may be a common phenomenon for delta-tuning. We hypothesize that this phenomenon arises because larger PLMs generally have smaller intrinsic dimensionalities16; therefore, merely tuning minimal parameters could obtain a strong enough representation ability to achieve non-trivial performance in downstream tasks. Furthermore, the over-parameterization and large-scale pre-training may make PLMs less likely to get stuck in a local optimum during downstream optimization, and thus the convergence is accelerated.

Task-level transferability evaluation

Recent studies24,25,26 have demonstrated that prompt-tuning has excellent cross-task transferability. In this subsection, we explore the cross-task transferability of four delta-tuning methods (prompt-tuning, prefix-tuning, adapter and LoRA) with 12 tasks of 5 different types (sentiment analysis, natural language inference, paraphrase identification, question answering and summarization). We transfer the trained delta parameters to the unseen target tasks. More training and dataset details are provided in Supplementary Section 3.4.

In experiments, we report their relative performance (the ratio of zero-shot transferring performance to original performance). The results are shown in Extended Data Fig. 5, from which we can observe that:

1. For the tasks belonging to the same category, transferring tuned parameters among them generally performs well; for the tasks of different types, transferring delta parameters among them generally achieves poor performance.

2. We also find that transferring tuned parameters from the text generation tasks such as question answering and summarization can achieve non-trivial performance on sentiment analysis, indicating that text generation might be a complex task that includes the knowledge required to solve the sentiment analysis tasks. In general, the above results demonstrate that it is promising to utilize trained delta parameters for similar tasks through knowledge transfer.

Conclusion

This Analysis focuses on parameter-efficient methods, that is, delta-tuning, for PLMs. We first describe the problem and provide a categorization to survey the development of delta-tuning systematically. Captivated by the empirical evidence, we propose two frameworks to theoretically discuss delta-tuning from the optimization and optimal control perspectives. Our discussion sheds light on the theoretical references of a novel design for delta-tuning methods and hopefully could inspire a deeper understanding of model adaptation for PLMs. Empirically, we conduct extensive experiments across 100+ NLP tasks to fairly evaluate and explore the combinatorial property, influence of scale and transferability for delta-tuning. In terms of performance, delta-tuning can be slightly behind or comparable to fine-tuning on a wide range of tasks, and the gap shrinks as the model scales; in terms of efficiency, delta-tuning could considerably reduce storage space and memory usage, as well as accelerate backpropagation. In summary, delta-tuning shows considerable potential to stimulate large PLMs, and we hope that the paradigm can be further theoretically studied and empirically practiced.

Methods

Delta-tuning builds on the success of PLMs, which use deep transformers as the base structure and adopt pre-training objectives on large-scale unlabelled corpora. For more information about PLMs and transformers, see Supplementary Section 1 or related surveys27 and original papers4,5,8,9.

Given a pre-trained model \(\varTheta =\{w_{1},w_{2},...,w_{N}\}\) and training data \(\mathcal{D}\), the objective of PLM adaptation is to produce the adapted model \(\varTheta ^{\prime }=\{w_{1}^{\prime },w_{2}^{\prime },...,w_{M}^{\prime }\}\), where \(w_{i}\) is a model parameter. Define \(\Delta \varTheta\) as the change in the adapted model \(\varTheta ^{\prime }\) compared with \(\varTheta\), including the change in values and the number of elements. In vanilla fine-tuning, \(N=M\) and \(\Delta \varTheta =\nabla f_{\varTheta }(\mathcal{D})\) is the update value of all parameters in \(\varTheta\) with respect to training data, where \(f_{\varTheta }\) represents the resulting loss of applying model \(\varTheta\) to training data \(\mathcal{D}\). Note that in this case, we omit the small set of parameters brought by extra classification heads for downstream tasks. In delta-tuning, by contrast, \(\Delta \varTheta\) refers to the modification of a small number of parameters. Empirically, \(|\Delta \varTheta |=|\varTheta |\) in vanilla fine-tuning, while for delta-tuning, \(|\Delta \varTheta |\ll |\varTheta |\), where \(|\cdot |\) indicates the number of parameters involved.

To organize them under a unified framework, we categorize the delta-tuning methods into three groups according to the operations on the delta parameters (as illustrated in Fig. 4): addition-based, specification-based and reparameterization-based approaches.

- Addition-based methods introduce extra trainable neural modules or parameters that do not exist in the original model or process. In addition-based methods, M ≥ N and ΔΘ = {wN+1, wN+2, ..., wM}.

- Specification-based methods specify that certain parameters in the original model or process become trainable, whereas the others are frozen. Denote the set of trainable parameters as \({{{\mathcal{W}}}}\), then ΔΘ = {Δw1, Δw2, ..., ΔwN}. When \({w}_{i}\in {{{\mathcal{W}}}}\), Δwi is the incremental value from wi to \({w}_{i}^{{\prime} }\); otherwise, Δwi = 0.

- Reparameterization-based methods reparameterize existing parameters to a parameter-efficient form by transformation. Denote the set of parameters to be reparameterized as \({{{\mathcal{W}}}}\), and suppose that each \({w}_{i}\in {{{\mathcal{W}}}}\) is reparameterized with new parameters \(R({w}_{i})=\{{u}_{1},{u}_{2},...,{u}_{{N}_{i}}\}\), then \({{\Delta }}{{\varTheta }}=({{\varTheta }}\setminus {{{\mathcal{W}}}})\cup {{{\mathcal{U}}}}\), where \({{{\mathcal{U}}}}=\{{u}_{j}| \exists {w}_{i}\in {{{\mathcal{W}}}},{u}_{j}\in R({w}_{i})\}\).
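As a rough illustration of the three definitions above, the following sketch builds ΔΘ for a toy model stored as a name-to-value mapping. All parameter names, values and the choice of which parameters are trainable or reparameterized are hypothetical and serve only to mirror the set notation.

```python
# Toy model: Theta = {w1, ..., wN} with N = 4 (hypothetical values).
theta = {"w1": 0.5, "w2": -1.2, "w3": 0.8, "w4": 0.1}

# Addition-based: new parameters are appended, so M >= N and
# delta = {w_{N+1}, ..., w_M}. The original parameters stay frozen.
new_params = {"w5": 0.0, "w6": 0.0}
theta_added = {**theta, **new_params}
delta_addition = set(new_params)

# Specification-based: only parameters in the chosen set W may change;
# every other entry of delta is exactly zero.
trainable = {"w2", "w4"}
learned_shift = {"w2": 0.3, "w4": -0.05}  # stand-in for the result of training
delta_specification = {
    name: learned_shift[name] if name in trainable else 0.0 for name in theta
}
theta_specified = {name: theta[name] + delta_specification[name] for name in theta}

# Reparameterization-based: each w in W is replaced by proxy parameters R(w),
# and delta = (Theta \ W) union U, following the definition in the text.
reparameterized = {"w3"}
R = {"w3": {"u1", "u2"}}
U = set().union(*(R[name] for name in reparameterized))
delta_reparameterization = (set(theta) - reparameterized) | U
```

The sketch only tracks which parameters belong to ΔΘ; it does not model how the values are learned.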

Addition-based methods

With the above definition in mind, addition-based methods introduce additional parameters to the neural network. In this section, we introduce two branches of representative addition-based methods, adapter-based tuning and prompt-based tuning.

Adapter-based tuning

As a seminal work in delta-tuning, adapter-based methods inject small-scale neural modules (adapters) into the transformer layers and only tune these adapters for model adaptation. Although such a strategy leaves an open choice of adapter structures, a simple instantiation13 achieves impressive performance and has become the most widely used baseline in recent research. Specifically, one adapter module contains a down-projection and an up-projection. For an input feature \({{{\bf{h}}}}\in {{\mathbb{R}}}^{d}\), a down-projection projects the input to an r-dimensional space with a parameter matrix \({{{{\it{W}}}}}_{d}\in {{\mathbb{R}}}^{d\times r}\), after which a nonlinear function f(⋅) is applied. Then the up-projection \({{{{\it{W}}}}}_{u}\in {{\mathbb{R}}}^{r\times d}\) maps the r-dimensional representation back to the d-dimensional space. With a residual connection added, the complete computation can be written as \({{{\bf{h}}}}\leftarrow f({{{\bf{h}}}}{{{{\it{W}}}}}_{d}){{{{\it{W}}}}}_{u}+{{{\bf{h}}}}\).

In each block, the adapter modules are separately inserted after the multi-head self-attention and the feed-forward network sublayers, which reduces the tunable parameters per layer to 2 × (2dr (projection matrices) + d (residual connection) + r (bias term)). In practice, about 0.5–8% of the parameters of the whole model13 are involved in the tuning process under such a strategy.
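The adapter computation and the per-layer parameter count can be sketched in a few lines of plain Python. This is a minimal sketch rather than the implementation used in the cited work: the tanh nonlinearity, the zero-initialized up-projection and the helper names are assumptions made for illustration.

```python
import math
import random

def matvec(h, W):
    """Row vector h (length m) times matrix W (m x k), returning length k."""
    return [sum(h[i] * W[i][j] for i in range(len(h))) for j in range(len(W[0]))]

def adapter(h, W_d, W_u, f=math.tanh):
    """h <- f(h W_d) W_u + h: down-project, nonlinearity, up-project, residual."""
    z = [f(v) for v in matvec(h, W_d)]
    up = matvec(z, W_u)
    return [u + x for u, x in zip(up, h)]

def tunable_params_per_layer(d, r):
    """Per-layer count as quoted in the text: 2 * (2dr + d + r)."""
    return 2 * (2 * d * r + d + r)

d, r = 8, 2
rng = random.Random(0)
h = [rng.uniform(-1, 1) for _ in range(d)]
W_d = [[rng.gauss(0, 0.1) for _ in range(r)] for _ in range(d)]
# Assumption: a zero up-projection makes the adapter an identity map at the start.
W_u = [[0.0] * d for _ in range(r)]
out = adapter(h, W_d, W_u)
```

With the zero up-projection assumed here, the residual connection passes the input through unchanged, so inserting the adapter does not perturb the frozen model before training begins.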

Although an adapter works with far fewer tunable parameters than vanilla fine-tuning, some work attempts a more rigorous saving strategy by introducing inductive biases into the structure of the adapter layer. For example, Compacter28 proposes to use a combination of hypercomplex multiplication and parameter sharing. The hypercomplex multiplication parameterizes the original linear layer as the sum of the Kronecker products of two small matrices. Taking the down-projection as an example, \({{{{\it{W}}}}}_{d}=\mathop{\sum }\nolimits_{i = 1}^{n}{{{{\it{A}}}}}_{i}\otimes {{{{\it{B}}}}}_{i}\), where \({{{{\it{A}}}}}_{i}\in {{\mathbb{R}}}^{n\times n}\) and \({{{{\it{B}}}}}_{i}\in {{\mathbb{R}}}^{\frac{d}{n}\times \frac{r}{n}}\).
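To make the shapes concrete, the sketch below assembles a d × r down-projection from n pairs of small matrices using a hand-written Kronecker product. The dimensions and random values are arbitrary, and the parameter sharing that Compacter also relies on is not modelled here.

```python
import random

def kron(A, B):
    """Kronecker product of two matrices given as lists of rows."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]

def mat_add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def hypercomplex_weight(As, Bs):
    """W_d = sum_i A_i (x) B_i, with A_i of shape n x n and B_i of shape d/n x r/n."""
    W = kron(As[0], Bs[0])
    for A, B in zip(As[1:], Bs[1:]):
        W = mat_add(W, kron(A, B))
    return W

d, r, n = 8, 4, 2  # illustrative sizes; n must divide both d and r
rng = random.Random(0)
As = [[[rng.gauss(0, 1) for _ in range(n)] for _ in range(n)] for _ in range(n)]
Bs = [[[rng.gauss(0, 1) for _ in range(r // n)] for _ in range(d // n)]
      for _ in range(n)]
W_d = hypercomplex_weight(As, Bs)

full_params = d * r
factor_params = n * (n * n) + n * (d // n) * (r // n)
```

Each Kronecker product of an n × n matrix with a d/n × r/n matrix has shape d × r, so the sum has the same shape as the dense down-projection while being defined by fewer free values.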

Their method reduces the parameter complexity of the normal adapter layer from \({{{\mathcal{O}}}}(dr)\) to \({{{\mathcal{O}}}}(d+r)\) without harming the performance. It also shows that a simple low-rank decomposition of the linear layer leads to comparable performance with the adapter layer, that is, \({{{{\it{W}}}}}_{d}={{{\it{A}}}}{{{{\it{B}}}}}^{T}\), where \({{{\it{A}}}}\in {{\mathbb{R}}}^{d\times n}\), \({{{\it{B}}}}\in {{\mathbb{R}}}^{r\times n}\) and \(n\ll \min (d,r)\), where the superscript T means matrix transposition.

As an addition-based approach, adapter-based tuning has the advantage of placing multiple adapter instances on a pre-trained model simultaneously, which can benefit many application scenarios. For example, multi-task learning29,30 is an advantageous setting for adapter-based methods: with adapter modules inserted in parallel with the self-attention module, PLMs can demonstrate impressive representational capacity in the multi-task setting. In contrast to directly conducting multi-task learning on adapters, AdapterFusion31 first pre-trains task-specific adapters and then combines the representations of the pre-trained adapters to leverage the cross-task knowledge and enhance the performance of transfer learning.

In terms of computational efficiency, the training of adapters could be 60% faster than vanilla fine-tuning while the inference is only 4–6% slower. In addition, the computational cost could be further reduced dynamically by removing adapters from lower transformer layers32. Research also shows that adapter-based fine-tuning demonstrates better robustness than vanilla fine-tuning. Specifically, adapter-based fine-tuning could perform better than vanilla fine-tuning in few-shot and cross-lingual scenarios33 and is more robust under adversarial attacks34. We provide a comparison of different adapters, as well as other delta-tuning methods, in Extended Data Table 4.

To sum up, adapters are lightweight additional neural modules that could be trained in a task-specific style, which could be regarded as ‘encapsulation’ of task information (in fact, this perspective can be applied to all the ‘deltas’). Although in an ideal world, adapters could be freely shared and reused by researchers, in practice, sharing and reusing such modules face substantial obstacles. Taking the first step, AdapterHub35 provides a feasible platform and toolkit to deploy adapters inside the transformer-based models.

Prompt-based tuning

Instead of injecting neural modules to the transformer model, prompt-based methods wrap the original input with additional context. As a strategy to stimulate PLMs by mimicking pre-trained objectives in the downstream tasks, prompt-based learning has achieved promising performance in various NLP tasks36,37, especially in low-data settings. The introduction of the technique and implementations of prompt-based learning have already been comprehensively presented in other literature38,39. In this paper, we primarily focus on the parameter-efficient attribute of prompt-based learning (only prefixes or prompts are optimized) and pay less attention to the settings where the models and prompts are simultaneously optimized.

An important seminal work of this branch of research is prefix-tuning40, which prepends trainable continuous tokens (prefixes) to the input and hidden states of each transformer layer. Each prefix is drawn from a newly initialized trainable parameter matrix P, whereas other parameters of the pre-trained model remain unchanged during training. During generation, if an activation hi is in a prefix position, it is the direct copy of the corresponding trainable parameter; otherwise, the activation is computed by the model as hi = LM(zi, h<i), where i is the position index, z is the input and LM stands for the language model. It is worth noting that the paradigm could be applied to both autoregressive and encoder–decoder models. Such a strategy could be effectively applied to natural language understanding with different scales of models41.

Compared with prefix-tuning, which adds tunable prefixes to every intermediate transformer layer, prompt-tuning19 proposes a simpler strategy that only adds soft prompts to the input layer. Similar to prefix-tuning, the newly introduced prompts are not parameterized by the pre-trained model but by an additional parameter matrix. During training, the parameters of the soft prompts are updated by gradient descent while the model parameters are kept frozen. As the model size increases, the performance gap between prompt-tuning and full parameter fine-tuning narrows. In particular, when the model scales to T5XXL with 11 billion parameters, prompt-tuning yields performance comparable to fine-tuning on SuperGLUE. This strategy also exhibits sensitivity to the length and initialization of the soft prompts. Prompts could also be injected in the pre-training stage to seek a satisfying initialization point42. Moreover, similar to other methods, prompt-tuning also demonstrates transferability across tasks24,26, which suggests that appropriate initialization could be substantially beneficial for downstream tasks.
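The mechanics of prompt-tuning (prepend trainable vectors to the input embeddings, then update only those vectors) can be sketched with a deliberately tiny frozen model. The mean-pooled linear scorer, the squared-error loss and all values below are assumptions chosen so that the gradient can be written by hand; they stand in for a real PLM and task loss.

```python
def forward(prompts, embeds, w):
    """Frozen toy model: mean over positions of the dot product with w."""
    seq = prompts + embeds  # soft prompts are prepended to the input embeddings
    return sum(sum(x_i * w_i for x_i, w_i in zip(x, w)) for x in seq) / len(seq)

def prompt_step(prompts, embeds, w, y, lr=0.1):
    """One gradient step on the soft prompts only; w and embeds are untouched."""
    T = len(prompts) + len(embeds)
    err = forward(prompts, embeds, w) - y
    # For loss = err**2, the gradient with respect to each prompt is 2 * err * w / T.
    return [[p_i - lr * 2 * err * w_i / T for p_i, w_i in zip(p, w)] for p in prompts]

w = [0.5, -1.0, 2.0]                          # frozen model parameters
embeds = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]   # frozen input embeddings
prompts = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]  # the only trainable parameters
y = 1.0                                       # hypothetical regression target

initial_loss = (forward(prompts, embeds, w) - y) ** 2
for _ in range(200):
    prompts = prompt_step(prompts, embeds, w, y)
final_loss = (forward(prompts, embeds, w) - y) ** 2
```

Only the two prompt vectors change during the loop, which mirrors the frozen-model setting described above; the toy objective is convex, so it does not reproduce the optimization difficulties of real prompt-tuning.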

The training curse of prompt-based methods

Although prompt-based methods exhibit a promising future for the adaptation of large pre-trained models, especially as prompt-tuning does not need to modify anything inside the neural network, there still exist unsolved challenges. In practice, prompt-tuning is difficult to optimize, and generally, this phenomenon becomes more apparent as the volume of data and the size of the model decrease. Even when soft prompts can be trained successfully, they converge more slowly than full parameter fine-tuning and other delta-tuning methods during training. In our experiments, we validate the phenomenon across different datasets (‘Performance, convergence and efficiency’ section), indicating that training soft prompts to converge stably in various situations remains an open and interesting problem.

Specification-based methods

Specification-based methods fine-tune a few inherent parameters while leaving the majority of parameters unchanged in model adaptation. This approach does not seek to change the internal structure of a model but to optimize a small number of internal parameters to solve particular tasks. In general, such specifications could be implemented based on heuristics or training supervision.

Heuristic specification

Specification-based methods do not introduce any new parameters to the model, but directly specify part of the parameters to be optimized. The idea is simple but surprisingly effective; an early study43 only fine-tunes one-fourth of the final layers of BERT and RoBERTa and could produce 90% of the performance of full parameter fine-tuning. BitFit14 empirically proves that by only optimizing the bias terms inside the model and freezing other parameters, the model could still reproduce over 95% performance on several benchmarks. Empirical results in BitFit also show that even if we use a small random set of parameters for delta-tuning (which obviously will degrade the performance), the model could still yield passable results on the GLUE benchmark. Unfortunately, the work only applies this trick to small-scale models, and there is no guarantee that randomly choosing some parameters to be tuned would remain competitive for larger models. Another valuable observation is that different bias terms may have different functionalities during model adaptation.
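A heuristic specification in the spirit of BitFit amounts to selecting parameters by role. The sketch below picks bias terms by name from a shape table and reports the trainable fraction; the parameter names and shapes are hypothetical and do not correspond to any particular checkpoint.

```python
import math

# Hypothetical parameter table: name -> shape.
param_shapes = {
    "layer0.attention.query.weight": (768, 768),
    "layer0.attention.query.bias": (768,),
    "layer0.ffn.dense.weight": (768, 3072),
    "layer0.ffn.dense.bias": (3072,),
}

def bias_only(shapes):
    """Heuristic specification: mark only bias terms as trainable."""
    return {name for name in shapes if name.endswith(".bias")}

def count(names, shapes):
    return sum(math.prod(shapes[name]) for name in names)

trainable = bias_only(param_shapes)
fraction = count(trainable, param_shapes) / count(param_shapes, param_shapes)
```

In this toy table the bias terms account for well under 1% of the parameters, which illustrates why such a specification is cheap to store per task.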

Learn the specification

Rather than manually or heuristically specifying which parameters to update, one alternative is to ‘learn’ such specifications. Following the definition in this section, diff pruning44 reparameterizes the fine-tuned model parameters Θ′ as the summation of the pre-trained parameters Θ and the difference vector ΔΘ, that is, Θ′ = Θ + ΔΘ, where |Θ| = |Θ′|. Hence, the key issue is to encourage the difference vector to be as sparse as possible; this work regularizes the vector by a differentiable approximation to the L0-norm penalty to achieve the goal of sparsity. Practically, because new parameters to be optimized are introduced in the learning phase, diff pruning takes up more GPU memory than full parameter fine-tuning, which may establish barriers in the application on large PLMs. The masking method45 learns selective masks for PLMs to only update the critical weights for particular tasks. To learn such a set of masks, a binary matrix associated with the model weights is introduced, where each value is generated by a thresholding function. During backpropagation, the matrix is updated by a noisy estimator.
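In the notation of this section, the sparsity-regularized objective behind diff pruning can be written schematically as follows. Here λ is a regularization weight, a symbol introduced only for this illustration, and the L0 term counts the non-zero entries of the difference vector; during training this term is replaced by the differentiable approximation mentioned above.

\(\mathop{\min }\limits_{{{\Delta }}{{\varTheta }}}\;{f}_{{{\varTheta }}+{{\Delta }}{{\varTheta }}}({{{\mathcal{D}}}})+\lambda \,\parallel {{\Delta }}{{\varTheta }}{\parallel }_{0}\)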

Reparameterization-based methods

Reparameterization-based methods transform the adaptive parameters during optimization into parameter-efficient forms. This branch of delta-tuning is typically motivated by the hypothesis that PLM adaptations towards most downstream tasks are inherently low rank, and could thus be equivalently completed in a parameter-efficient way.

Intrinsic dimensions of PLM adaptation

Previous work16 has empirically shown that the full parameter fine-tuning process of pre-trained models can be reparameterized into optimization within a low-dimensional subspace, that is, fine-tuning has a low intrinsic dimension46, which measures the minimum number of parameters needed to reach satisfactory performance. In experiments, they find that a relatively low-dimensional (for example, thousands) reparameterization could achieve over 85% fine-tuning performance. In this sense, PLMs may serve as general compression frameworks, which compress the optimization complexity from high dimensions to low dimensions. They also demonstrate that larger PLMs generally have smaller intrinsic dimensions, and the process of pre-training implicitly reduces the PLM’s intrinsic dimension. Taking inspiration from these observations, reparameterization-based delta-tuning methods are proposed, which reparameterize (a part of) original model parameters with low-dimensional proxy parameters and only optimize the proxy parameters and thus reduce the computation and memory cost.
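The idea of optimizing within a low-dimensional subspace can be sketched as θ = θ0 + Pz, where θ0 is the frozen starting point, P is a fixed random projection and only the d-dimensional vector z is trained. The dense Gaussian projection, the quadratic toy objective and the sizes below are assumptions made for illustration; the cited work uses pre-trained models and more structured projections.

```python
import random

def project(theta0, P, z):
    """theta = theta0 + P z, with P of shape D x d and z of length d."""
    return [t + sum(p * z_j for p, z_j in zip(row, z)) for t, row in zip(theta0, P)]

def loss(theta, target):
    return sum((t - g) ** 2 for t, g in zip(theta, target))

def grad_z(theta, target, P):
    """Chain rule: dL/dz = P^T (2 (theta - target))."""
    resid = [2 * (t - g) for t, g in zip(theta, target)]
    return [sum(row[j] * r for row, r in zip(P, resid)) for j in range(len(P[0]))]

D, d = 20, 3  # full dimension and subspace dimension (toy values)
rng = random.Random(0)
theta0 = [rng.gauss(0, 1) for _ in range(D)]   # frozen starting parameters
target = [rng.gauss(0, 1) for _ in range(D)]   # hypothetical optimum of the toy loss
P = [[rng.gauss(0, 1) / D ** 0.5 for _ in range(d)] for _ in range(D)]  # fixed

z = [0.0] * d  # the only trainable parameters
initial = loss(project(theta0, P, z), target)
for _ in range(100):
    g = grad_z(project(theta0, P, z), target, P)
    z = [z_j - 0.1 * g_j for z_j, g_j in zip(z, g)]
final = loss(project(theta0, P, z), target)
```

Because the toy target is random, a three-dimensional subspace recovers only part of the loss reduction; the empirical finding summarized above is that, for pre-trained models, a comparably small subspace already recovers most of the fine-tuning performance.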

Intrinsic rank of weight differences

LoRA15 hypothesizes that the change of weights during model tuning has a low intrinsic rank. On the basis of this hypothesis, it is proposed to optimize the low-rank decomposition for the change of original weight matrices in the self-attention modules. In deployment, the optimized low-rank decomposition matrices are multiplied to obtain the delta of self-attention weight matrices. In this way, LoRA could match the fine-tuning performance on the GLUE benchmark. They demonstrate the effectiveness of their methods on PLMs of various scales and architectures.
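A minimal sketch of the low-rank update, assuming a frozen weight matrix W of shape d × k and two trainable factors B (d × r) and A (r × k) whose product forms the delta. The zero initialization of B, the sizes and the helper names are illustrative assumptions.

```python
import random

def matmul(X, Y):
    """Plain-Python matrix product of X (m x p) and Y (p x q)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def merge(W, B, A):
    """Deployment-time merge: W' = W + B A."""
    delta = matmul(B, A)
    return [[w + dw for w, dw in zip(rw, rd)] for rw, rd in zip(W, delta)]

d, k, r = 6, 6, 2  # toy sizes; in practice r is much smaller than d and k
rng = random.Random(0)
W = [[rng.gauss(0, 1) for _ in range(k)] for _ in range(d)]     # frozen
B = [[0.0] * r for _ in range(d)]                               # trainable, zero init
A = [[rng.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # trainable

merged_at_init = merge(W, B, A)  # equals W because B is all zeros

B[0][0] = 1.0                    # stand-in for a training update to B
merged_after = merge(W, B, A)

full_params = d * k
lora_params = r * (d + k)
```

Multiplying the two factors once and adding the result to W means the adapted layer has the same shape as the original, so no extra computation is introduced at inference time.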

Intrinsic space of multiple adaptations

Furthermore, intrinsic prompt-tuning17 makes a stronger hypothesis that the adaptations to multiple tasks could be reparameterized into optimizations within the same low-dimensional intrinsic subspace. Instead of resorting to a random subspace16, they try to find a common subspace shared by various NLP tasks, which is implemented through decomposing the trained soft prompts of multiple NLP tasks into the same low-dimensional nonlinear subspace, and then learn to adapt the PLM to unseen tasks or data by only tuning parameters in the subspace. Experiments show that in a 250-dimensional subspace found with 100 random tasks, by only tuning 250 free parameters, 97% and 83% of the full prompt-tuning performance can be recovered for 100 seen tasks (using different training data) and 20 unseen tasks, respectively. This provides strong evidence for their universal reparameterization hypothesis and may inspire future work. Moreover, this work also shows that the low-dimensional reparameterization can substantially improve the stability of prompt-tuning. Their method could also be leveraged as a tool for analysing the similarity and differences between various NLP tasks.

Theoretical perspectives of delta-tuning

Are these methods essentially doing the same thing? We are interested in the theoretical principles behind delta-tuning. A PLM can usually be effectively adapted to various downstream tasks with a smaller cost compared with pre-training, which leads to theoretical issues that are worth exploring in depth. We adopt two frameworks to introduce theoretical insights into delta-tuning from the perspectives of optimization and optimal control.

Optimization perspective

As training neural networks is an optimization process, the mechanism of delta-tuning can be analysed from the perspective of optimization. In general, it is challenging and time-consuming to solve large-scale and high-dimensional optimization problems. However, in the fine-tuning of a large PLM, empirical study16 reveals that there exists a low intrinsic dimension; thus, some customized optimization schemes can benefit from this property and be quite efficient in practice. One promising scheme is the subspace optimization47 that seeks an acceptable solution in a low-dimensional subspace. It manipulates a small number of variables and is more economical than the optimization in the whole space. In fact, delta-tuning can be viewed as a subspace-optimization method.

There are two approaches to applying subspace optimization and thus the delta-tuning can roughly fall into two categories. One is tuning model parameters in the solution subspace. It exploits a low-dimensional manifold that can approximately represent the whole model parameters, and the optimization trajectory follows this manifold. Some delta-tuning methods can be categorized into this approach, for example, LoRA15, BitFit14 and diff pruning44. The other approach seeks a surrogate of the original objective function in a small functional subspace and uses the minimizer of the surrogate function as the approximate final solution. It can provide some explanations of the rationales of some popular delta-tuning methods such as prompt-tuning19 and prefix-tuning40. A complete discussion can be found in Supplementary Section 2.1.

Optimal control perspective

We draw inspiration from optimal control theories to better understand the functionality of delta-tuning. In addition to their parameter efficiency, the essence of delta-tuning lies in regularizing the layer-wise hidden-state transformation process along forward propagation. The forward propagation of hidden states h between layers j and j + 1 in the PLM, with the guidance of the delta parameters δ(j) at the jth layer, can be written as \({{{{\mathcal{G}}}}}_{\theta }^{(j)}\left({h}^{(j)},{\delta }^{(j)}\right)\). With the parameters θ in the PLM fixed, the transformation function \({{{{\mathcal{G}}}}}_{\theta }^{(j)}\) defines the altered forward propagation at the jth layer with the learnable δ(j). The detailed formulations and instantiations of \({{{{\mathcal{G}}}}}_{\theta }^{(j)}\) for different delta-tuning methods, including Prefix-tuning, Adapter, LoRA and BitFit, are listed in Supplementary Section 2.2. In this way, the tuned delta parameters are interpreted as the optimal controllers that steer the PLMs to work in different realistic settings.
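The control view can be illustrated with a toy forward pass in which each frozen layer receives a learnable control signal. The sketch below uses an additive bias shift as the control, loosely in the spirit of bias-term tuning; the layer form, weights and control values are hypothetical and are not the instantiations given in the Supplementary Information.

```python
import math

def layer(h, W, b, delta):
    """One frozen layer with weights (W, b), steered by a bias shift delta."""
    return [math.tanh(sum(w * x for w, x in zip(row, h)) + b_i + d_i)
            for row, b_i, d_i in zip(W, b, delta)]

def propagate(h, layers, deltas):
    """h^(j+1) = G^(j)(h^(j), delta^(j)) applied layer by layer."""
    for (W, b), delta in zip(layers, deltas):
        h = layer(h, W, b, delta)
    return h

# Frozen parameters theta of a two-layer toy network (hypothetical values).
layers = [
    ([[0.5, -0.2], [0.1, 0.3]], [0.0, 0.1]),
    ([[0.4, 0.4], [-0.3, 0.2]], [0.05, 0.0]),
]
h0 = [1.0, -1.0]

no_control = [[0.0, 0.0] for _ in layers]  # delta = 0: the unmodified model
control = [[0.2, 0.0], [0.0, -0.1]]        # stand-in for learned controllers

base = propagate(h0, layers, no_control)
steered = propagate(h0, layers, control)
```

The frozen weights are identical in both passes; only the per-layer control changes the trajectory of the hidden states, which is the sense in which the delta parameters act as controllers.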

The optimal control perspective instructs the novel design of delta-tuning. For example, robust prefix-tuning48 tunes additional layer-wise prefix parameters during inference. The layer-wise propagation of hidden states is thus guided towards correct outputs. Another work49 leveraged inference-time bias-term tuning to mitigate bias and toxicity in natural language generation. The number of bias terms to be tuned is determined by the extent of modification of the hidden-state transformation in an adaptive manner. Finally, by applying the theories of controller design50,51, we expect more delta-tuning methods proposed with theoretical guarantees and better exploitation of the power of PLMs52.

Data availability

Datasets used in this study are freely available at https://github.com/INK-USC/CrossFit and https://huggingface.co/datasets/glue.

Code availability

The source code of this study is publicly available on GitHub at https://github.com/thunlp/OpenDelta. It is also available at https://zenodo.org/record/7340282.

References

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).

Bengio, Y., Ducharme, R. & Vincent, P. A neural probabilistic language model. In Advances in Neural Information Processing Systems. 13 (2000).

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems. 30 (2017).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Proc. the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. 1, 4171–4186 (2019).

Radford, A., Narasimhan, K., Salimans, T. & Sutskever, I. Improving language understanding by generative pre-training. OpenAI Blog. https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (2018).

Radford, A. et al. Language models are unsupervised multitask learners. OpenAI Blog. https://d4mucfpksywv.cloudfront.net/better-language-models/language-models.pdf (2019).

Raffel, C. et al. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 21, 5485–5551 (2020).

Brown, T. et al. Language models are few-shot learners. In Advances in Neural Information Processing Systems. 33, 1877–1901 (2020).

Rae, J. W. et al. Scaling language models: methods, analysis & insights from training Gopher. Preprint at arXiv https://arxiv.org/abs/2112.11446 (2021).

Smith, S. et al. Using deepspeed and megatron to train Megatron-Turing NLG 530b, a large-scale generative language model. Preprint at arXiv https://arxiv.org/abs/2201.11990 (2022).

Chowdhery, A. et al. PaLM: scaling language modeling with pathways. Preprint at arXiv https://arxiv.org/abs/2204.02311 (2022).

Houlsby, N. et al. Parameter-efficient transfer learning for NLP. In International Conference on Machine Learning. (eds Chaudhuri, K. & Salakhutdinov, R.) 2790–2799 (2019).

Zaken, E. B., Ravfogel, S. & Goldberg, Y. Bitfit: simple parameter-efficient fine-tuning for transformer-based masked language-models. In Proc. the 60th Annual Meeting of the Association for Computational Linguistics. 2, 1–9 (2022).

Hu, E. J. et al. LoRA: low-rank adaptation of large language models. In International Conference on Learning Representations (2022).

Aghajanyan, A., Gupta, S. & Zettlemoyer, L. Intrinsic dimensionality explains the effectiveness of language model fine-tuning. In Proc. the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing. 1, 7319–7328 (2021).

Qin, Y. et al. Exploring low-dimensional intrinsic task subspace via prompt tuning. Preprint at arXiv https://arxiv.org/abs/2110.07867 (2021).

Lhoest, Q. et al. Datasets: a community library for natural language processing. In Proc. the 2021 Conference on Empirical Methods in Natural Language Processing: System Demonstrations. 175–184 (2021).

Lester, B., Al-Rfou, R. & Constant, N. The power of scale for parameter-efficient prompt tuning. In Proc. the 2021 Conference on Empirical Methods in Natural Language Processing. 3045–3059 (2021).

Liu, Y. et al. Roberta: a robustly optimized BERT pretraining approach. Preprint at arXiv https://arxiv.org/abs/1907.11692 (2019).

Wang, A. et al. GLUE: a multi-task benchmark and analysis platform for natural language understanding. In International Conference on Learning Representations (2019).

Schick, T. & Schütze, H. Exploiting cloze-questions for few-shot text classification and natural language inference. In Proc. the 16th Conference of the European Chapter of the Association for Computational Linguistics. 255–269 (2021).

Socher, R. et al. Recursive deep models for semantic compositionality over a sentiment treebank. In Proc. the 2013 Conference on Empirical Methods in Natural Language Processing. 1631–1642 (2013).

Su, Y. et al. On transferability of prompt tuning for natural language understanding. In Proc. the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. 3949–3969 (2022).

Williams, A., Nangia, N. & Bowman, S. A broad-coverage challenge corpus for sentence understanding through inference. In Proc. the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. 1, 1112–1122 (2018).

Vu, T., Lester, B., Constant, N., Al-Rfou, R. & Cer, D. Spot: better frozen model adaptation through soft prompt transfer. In Proc. the 60th Annual Meeting of the Association for Computational Linguistics. 1, 5039–5059 (2022).

Han, X. et al. Pre-trained models: Past, present and future. AI Open 2, 225-250. https://www.sciencedirect.com/science/article/pii/S2666651021000231 (2021).

Mahabadi, R. K., Henderson, J. & Ruder, S. Compacter: efficient low-rank hypercomplex adapter layers. In Advances in Neural Information Processing Systems. 34, 1022–1035 (2021).

Stickland, A. C. & Murray, I. BERT and pals: projected attention layers for efficient adaptation in multi-task learning. In International Conference on Machine Learning. 5986–5995 (2019).

Mahabadi, R. K., Ruder, S., Dehghani, M. & Henderson, J. Parameter-efficient multi-task fine-tuning for transformers via shared hypernetworks. In Proc. the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing. 1, 565–576 (2021).

Pfeiffer, J., Kamath, A., Rücklé, A., Cho, K. & Gurevych, I. AdapterFusion: non-destructive task composition for transfer learning. In Proc. the 16th Conference of the European Chapter of the Association for Computational Linguistics. 487–503 (2021).

Rücklé, A. et al. AdapterDrop: on the efficiency of adapters in transformers. In Proc. the 2021 Conference on Empirical Methods in Natural Language Processing. 7930–7946 (2021).

He, R. et al. On the effectiveness of adapter-based tuning for pretrained language model adaptation. In Proc. the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing. 1, 2208–2222 (2021).

Han, W., Pang, B. & Wu, Y. N. Robust transfer learning with pretrained language models through adapters. In Proc. the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing. 2, 854–861 (2021).

Pfeiffer, J. et al. AdapterHub: a framework for adapting transformers. In Proc. the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations. 46–54 (2020).

Gao, T., Fisch, A. & Chen, D. Making pre-trained language models better few-shot learners. In Proc. the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing. 1, 3816–3830 (2021).

Hu, S. et al. Knowledgeable prompt-tuning: incorporating knowledge into prompt verbalizer for text classification. In Proc. the 60th Annual Meeting of the Association for Computational Linguistics. 1, 2225–2240 (2021).

Liu, P. et al. Pre-train, prompt, and predict: a systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 55, 1–35 (2023).

Ding, N. et al. Openprompt: an open-source framework for prompt-learning. In Proc. the 60th Annual Meeting of the Association for Computational Linguistics: System Demonstrations. 105–113 (2022).

Li, X. L. & Liang, P. Prefix-tuning: optimizing continuous prompts for generation. In Proc. the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing. 1, 4582–4597 (2021).

Liu, X. et al. P-tuning: prompt tuning can be comparable to fine-tuning universally across scales and tasks. In Proc. the 60th Annual Meeting of the Association for Computational Linguistics. 2, 61–68 (2022).

Gu, Y., Han, X., Liu, S. & Huang, M. Ppt: pre-trained prompt tuning for few-shot learning. In Proc. the 60th Annual Meeting of the Association for Computational Linguistics. 1, 8410–8423 (2022).

Lee, J., Tang, R. & Lin, J. What would elsa do? Freezing layers during transformer fine-tuning. Preprint at arXiv https://arxiv.org/abs/1911.03090 (2019).

Guo, D., Rush, A. & Kim, Y. Parameter-efficient transfer learning with diff pruning. In Proc. the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing. 1, 4884–4896 (2021).

Zhao, M., Lin, T., Mi, F., Jaggi, M. & Schütze, H. Masking as an efficient alternative to finetuning for pretrained language models. In Proc. the 2020 Conference on Empirical Methods in Natural Language Processing. 2226–2241 (2020).

Li, C., Farkhoor, H., Liu, R. & Yosinski, J. Measuring the intrinsic dimension of objective landscapes. In International Conference on Learning Representations (2018).

Liu, X., Wen, Z. & Yuan, Y.-X. Subspace methods for nonlinear optimization. CSIAM Trans. Appl. Math. 2, 585–651 (2021).

Yang, Z. & Liu, Y. On robust prefix-tuning for text classification. In International Conference on Learning Representations (2022).

Yang, Z., Yi, X., Li, P., Liu, Y. & Xie, X. Unified detoxifying and debiasing in language generation via inference-time adaptive optimization. Preprint at arXiv https://arxiv.org/abs/2210.04492 (2022).

Boyd, S. P. & Barratt, C. H. Linear Controller Design: Limits of Performance Vol. 7 (Citeseer, 1991).

Ang, K. H., Chong, G. & Li, Y. PID control system analysis, design, and technology. IEEE Trans. Control Syst. Technol. 13, 559–576 (2005).

He, J., Zhou, C., Ma, X., Berg-Kirkpatrick, T. & Neubig, G. Towards a unified view of parameter-efficient transfer learning. In International Conference on Learning Representations (2022).

Acknowledgements

This work is supported by the National Key Research and Development Program of China (No. 2020AAA0106500), National Natural Science Foundation of China (No. 62276154 and No. 62011540405), Beijing Academy of Artificial Intelligence (BAAI) and the Institute for Guo Qiang at Tsinghua University. We thank J. He, P. Liu, T. Sun, C., L. Wang, C. Fang, X. Han and R. Shao for their suggestions and help with the paper.

Author information

Contributions

N.D., Y.Q. and Z.L. initiated and organized the research. N.D. drafted the abstract, the main text and Section Methods. S.H., X.W., W.Z. and Y.Q. added contents to Section Methods. F.W., Z.Y., N.D., Y.Q., S.H. and J.C. discussed the scope and content of the theoretical discussion. F.W. developed the optimization framework, and Z.Y. and Y.L. proposed the optimal control framework. N.D. verified the formula derivation. Y.Q. led the empirical study part. Y.Q., G.Y., Y.C., Y.S., W.C., J.Y., C.-M.C. and N.D. drafted Section Results. Y.Q., G.Y., W.C., J.Y. and S.H. conducted the experiments for overall performance and combination in Section Results. Y.S. and C.-M.C. conducted and wrote experiments for transferability and power of scale in Section Results. S.H. and Y.Q. drafted the application part. Z.L., H.-T.Z., Y.L., J.T., J.L. and M.S. advised the project, suggested the theoretical and empirical study and participated in the discussion. N.D. and Y.Q. participated in all the sections and proofread the whole paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Dieuwke Hupkes and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 The performance of T5BASE with different delta-tuning methods at different training steps.

The performance of T5BASE with different delta-tuning methods (LR, AP, PF) and fine-tuning (FT) at different training steps.

Extended Data Fig. 2 The performance of T5BASE with different delta-tuning methods at different training times.

The performance of T5BASE with different delta-tuning methods (LR, AP, PF) and fine-tuning (FT) at different training times (seconds).

Extended Data Fig. 3 Time consumption for fine-tuning (FT) and different delta-tuning methods.

Time consumption for fine-tuning (FT) and different delta-tuning methods, including BitFit (BF), adapter (AP) and prompt-tuning (PT). We report the results for different input lengths.

Extended Data Fig. 4 The performance of RoBERTaLARGE when sequentially applying different delta-tuning methods.

The performance of RoBERTaLARGE when different delta-tuning methods (adapter (AP), BitFit (BF) and prompt-tuning (PT)) are applied sequentially. The experiments are conducted on SST-2.

Extended Data Fig. 5 Zero-shot transferring performance of four delta-tuning methods using T5BASE.

Zero-shot transferring performance of four delta-tuning methods using T5BASE. We report relative performance (zero-shot transferring performance / original performance) (%) on the target tasks (columns) when delta parameters are transferred from the source tasks (rows). Colours of the task names indicate the task types. Blue: sentiment analysis. Green: natural language inference. Orange: paraphrase identification. Brown: question answering. Purple: summarization.

Supplementary information

Supplementary Information

Preliminaries, Supplementary Theoretical Discussion, Empirical Study, Supplementary Figs. 1–3 and Supplementary Tables 1–5.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ding, N., Qin, Y., Yang, G. et al. Parameter-efficient fine-tuning of large-scale pre-trained language models. Nat Mach Intell 5, 220–235 (2023). https://doi.org/10.1038/s42256-023-00626-4

DOI: https://doi.org/10.1038/s42256-023-00626-4
