Abstract

Modelling the distribution of extreme values, such as wave height time series in which high waves are much less frequent than low ones, has traditionally been tackled with Peak-Over-Threshold (POT) methodologies, where modelling is based only on the values exceeding a threshold. This threshold is usually predefined by the user, and the remaining values are ignored. In this paper, we propose a new method to estimate the distribution of the complete time series, including both extreme and regular values. The methodology assumes that extreme value time series can be modelled as a mixture of a normal distribution and a uniform one. The resulting theoretical distribution is then used to fix the threshold for the POT methodology. The methodology is tested on nine real-world time series collected in the Gulf of Alaska, Puerto Rico and Gibraltar (Spain), provided by the National Data Buoy Center (USA) and Puertos del Estado (Spain). The Kolmogorov-Smirnov test confirms that the time series can be modelled with this type of mixed distribution. Based on this, the return values and confidence intervals for wave height over different return periods are also calculated.

Introduction

Marine forecasting has become an essential task to ensure the safety of navigation, fishery and engineering construction, among others1. In particular, wave height prediction is key to the design of coastal and offshore structures2. Moreover, the incorporation of wave models into numerical weather prediction models can improve atmospheric forecasts3. The development of offshore installations for oil and gas extraction and for renewable energy exploitation requires knowledge of the wave fields and of any potential changes in them. One of the main problems is that the maximum peak-to-trough wave height is not usually available, although the largest waves have the greatest impact on ships and offshore structures4.

The importance of time series data mining has grown considerably in the last decade5,6. Time series arise in many fields of application, e.g. climate7, oceanography8 and biology9, and are used for different research objectives, such as classification10, tipping point detection11 and forecasting12.

A time series can be defined as a sequence of observations of a variable collected over time. Observing a random variable at regular intervals can introduce noise: if the interval between two consecutive observations is much shorter than the characteristic time scale of the phenomenon under investigation, many of the observed values will be very close to the average value of the characteristic studied.

In oceanography, and specifically in the determination of extreme wave heights, a buoy recording the wave height every four hours will register a high proportion of values close to the average wave height. As a result, the extreme wave heights, which are probably the most interesting ones, are outnumbered by a set of very similar values of no special interest. These non-informative observations distort the measures used to analyse the variable: they barely change the mean, but they reduce the standard deviation because they increase the sample size while adding little dispersion.
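
A small numerical illustration of this dilution effect, in Python and with hypothetical wave heights rather than buoy data. Appending readings equal to the mean leaves the mean unchanged. It shrinks the standard deviation by the factor √(6/66), so the largest wave looks far more atypical in standardised terms.

```python
import statistics

# Hypothetical wave heights (m): moderate values plus two large waves
informative = [1.0, 1.5, 2.0, 2.5, 6.0, 7.0]
mean_h = statistics.fmean(informative)
sd_before = statistics.pstdev(informative)

# Add 60 "non-informative" readings lying exactly at the mean
diluted = informative + [mean_h] * 60
sd_after = statistics.pstdev(diluted)

# Standardised distance of the largest wave from the mean, before and after
z_before = (max(informative) - mean_h) / sd_before
z_after = (max(diluted) - statistics.fmean(diluted)) / sd_after

print(f"mean {mean_h:.3f} -> {statistics.fmean(diluted):.3f}")
print(f"std  {sd_before:.3f} -> {sd_after:.3f}")
print(f"z of largest wave {z_before:.2f} -> {z_after:.2f}")
```

The sum of squared deviations is unchanged by the added readings, so only the divisor grows. This is exactly the "lower deviation through a larger sample" effect described above.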

Consequently, extreme wave heights change from being merely infrequent to appearing atypical, i.e. outliers, which complicates their analysis and prediction. The presence of these extreme values distorts the standard wave height probability distribution. For this reason, a wave height threshold must be defined above which the extreme wave distributions are considered. Long time series are needed for this, because the number of such events per year is very low and depends on the oceanic position of the buoy.

Statistical methods to determine extreme wave heights with the Peaks-Over-Threshold (POT) approach have improved significantly over the years. Mathiesen et al.13 use the POT method together with a Weibull distribution estimated by maximum likelihood. This is applied to the prediction of individual wave heights associated with high return periods of 100 years or more, considered adequate for the extensive use of ocean resources. In 2001, Coles14 introduced the GPD-Poisson model, based on fitting a Generalized Pareto Distribution (GPD), which was also used later on15.

In 2011, Mazas and Hamm16 proposed the determination of extreme wave heights using a POT approach with a double threshold (\(u_1,u_2\)). A low value \(u_1\) is set to select both weak and strong storms. Then, a second, higher threshold (\(u_2\)) is determined to decide which storms have a statistically extreme behaviour. Three probability distributions of extreme values are used to determine \(u_2\): the GPD-Poisson, Weibull and Gamma distributions. To select the best-fitting distribution, two objective criteria based on the likelihood are used: the Bayesian Information Criterion17 (BIC) and the Akaike Information Criterion18 (AIC).

More recently, Petrov et al.19 presented a maximum entropy (MaxEnt) method for the prediction of extreme significant wave heights, comparing it with the state-of-the-art methodologies of Extreme Value Theory (EVT): the GPD and the Generalized Extreme Value distribution (GEV). According to the MaxEnt principle, among all the distributions that satisfy the proposed constraints, the one with the highest entropy is selected.

As can be seen, all these methods are based on selecting a threshold and modelling the distribution of the wave heights above it. Thus, the main problem is how to select this threshold so as to avoid information loss. To that end, it could be useful to model the complete time series, with both regular and extreme values, and to use this theoretical distribution to fix the threshold for the POT approach. In this paper, we propose a new methodology to determine the distribution of wave heights, considering that normally distributed extreme wave heights are mixed with regular values drawn from a uniform distribution. The reason for choosing a uniform distribution is that, outside a range around the mean, all wave height observations should be treated as part of the problem and never as noise. A bounded uniform contamination satisfies this requirement, whereas a normal one, whose support is unbounded, does not; this is why we discard the normal distribution as a contamination distribution. After that, using the estimated theoretical mixed distribution, we set the threshold for the POT methodologies. In this way, we fit several distributions to the values above this threshold and select the best-fitting one according to the BIC and AIC criteria.

The novel contributions of this work to applied energy issues are:

- In geophysical time series, such as wave height20, wind power21 or fog formation in airports22,23, there are many values close to the average. As a result, the extreme values, which are the most interesting ones, are hidden among uninteresting ones, and these regular values distort the analysis of the extremes. In this paper, we show that regular values do not significantly change the mean value of the time series, but they reduce the deviation by increasing the sample size.

- We propose a new methodology which, to the best of the authors' knowledge, has not been applied before to wave height time series. This methodology determines the distribution of the complete time series, taking into account that the wave height distribution is a mixture of a normal distribution of extreme values and noise from a uniform distribution.

- To estimate the four parameters that define the mixed distribution, we use the method of moments24, since our methodology works on the raw time series.

- Once the mixed distribution has been estimated, it is used to determine the threshold needed for POT approaches. We assume that using the extreme values above a percentile of the theoretical mixed distribution is more reliable than using a predefined value adjusted by trial and error. In this way, our methodology is applied to obtain return values for 1, 2, 5, 10, 20, 50 and 100 years for nine real-world wave height time series, using three different percentiles of the mixed distribution.

The rest of the paper is organised as follows: section “Methodology” presents the details of the proposed method. Section “Dataset and experimental design” describes the data considered and the characteristics of the experiments, while section “Results and discussion” includes the results and the associated discussion. Finally, section “Conclusion” concludes the paper.

Methodology

This section introduces Extreme Value Theory and presents the methodology proposed in this work.

Extreme value theory

Extreme Value Theory (EVT) deals with the sample maximum \(M_n = \text {max} (X_1, \ldots , X_n)\), where \((X_1, \ldots , X_n)\) is a set of independent random variables with common distribution function F. In this case, the distribution of the maximum is given by \(Pr(M_n < x) = F^n(x)\). The independence hypothesis is quite acceptable when the X variables represent wave heights over a given threshold, because, for oceanographic data, it is common to adopt a POT scheme that selects approximately independent extreme wave height events25. Also, the authors of26 state that “The maximum wave heights in successive sea states can be considered independent, in the sense that the maximum height is dependent only on the sea state parameters and not in the maximum height in adjacent sea states”. After suitable normalisation, the limiting distribution of \(M_n\) is one of three types: Gumbel, Fréchet and Weibull.

One methodology in EVT is the annual maximum (AM) approach27, where X represents the wave heights recorded within each one-year period and \(M_n\) is formed by the maximum value of each year. The statistical behaviour of the AM can be described by the distribution of the maximum wave height in terms of the Generalized Extreme Value (GEV) distribution:
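
$$\begin{aligned} G(z) = \exp \left\{ -\left[ 1 + \xi \left( \frac{z-\mu }{\sigma }\right) \right] ^{-1/\xi }\right\} , \end{aligned}$$(1)
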
where:

$$\begin{aligned} 1 + \xi \left( \frac{z-\mu }{\sigma }\right) > 0, \end{aligned}$$(2)

where \(-\infty< \mu < \infty \), \(\sigma > 0\) and \(-\infty< \xi < \infty \). As can be seen, the model has three parameters: location (\(\mu \)), scale (\(\sigma \)), and shape (\(\xi \)).

The return values \(z_p\), corresponding to the return period \(T_p\), are obtained by inverting Eq. (1):
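
$$\begin{aligned} z_p = {\left\{ \begin{array}{ll} \mu - \frac{\sigma }{\xi }\left[ 1 - \left\{ -\log (1-p)\right\} ^{-\xi }\right] , & \xi \ne 0,\\ \mu - \sigma \log \left\{ -\log (1-p)\right\} , & \xi = 0, \end{array}\right. } \end{aligned}$$(3)
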
where \(G(z_p) = 1 - p\). Then, \(z_p\) is exceeded on average once every 1/p years, so that \(T_p = 1/p\).
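
As an illustration of Eq. (1) and its inversion, the sketch below evaluates the GEV distribution function and the return level \(z_p\), including the Gumbel limit \(\xi = 0\). The helper names and parameter values are hypothetical. They are chosen only to show the round trip \(G(z_p) = 1 - p\).

```python
import math


def gev_cdf(z, mu, sigma, xi):
    """GEV distribution function G(z); xi == 0 is the Gumbel limit."""
    if xi == 0.0:
        return math.exp(-math.exp(-(z - mu) / sigma))
    t = 1.0 + xi * (z - mu) / sigma
    if t <= 0.0:
        # outside the support: below the lower end point (xi > 0) or above the upper one (xi < 0)
        return 0.0 if xi > 0 else 1.0
    return math.exp(-t ** (-1.0 / xi))


def gev_return_level(p, mu, sigma, xi):
    """Level z_p with G(z_p) = 1 - p, exceeded on average once every 1/p years."""
    y = -math.log(1.0 - p)
    if xi == 0.0:
        return mu - sigma * math.log(y)
    return mu - sigma / xi * (1.0 - y ** (-xi))


# Hypothetical annual-maximum parameters (metres): 100-year level, p = 0.01
z100 = gev_return_level(0.01, mu=8.0, sigma=1.2, xi=-0.1)
```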

The alternative method in the EVT context is the Peak-Over-Threshold (POT) approach, where all values over a user-defined threshold are selected to be statistically described, instead of only the maximum values28,29. The POT method has become a standard approach for these predictions13,29,30, and several improvements over the basic approach have been proposed by various authors19,31,32.

The POT method is based on the fact that, if the AM approach is described by a GEV distribution (Eq. 1), the peaks over a sufficiently high threshold approximately follow a related distribution: the Generalized Pareto Distribution (GPD). The GPD fitted to the tail of the distribution gives the conditional non-exceedance probability \(P(X\le x | X > u)\), where u is the threshold level. The conditional distribution function can be calculated as:
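
$$\begin{aligned} P(X \le x \mid X > u) = 1 - \left[ 1 + \xi ^* \left( \frac{x-u}{\sigma ^*}\right) \right] ^{-1/\xi ^*}, \quad x > u, \end{aligned}$$(4)
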
The GEV and GPD models are consistent, meaning that their parameters are related by \(\xi ^* = \xi \) and \(\sigma ^* = \sigma + \xi (u - \mu )\), where \(\sigma ^*\) and \(\xi ^*\) are the scale and shape parameters of the GPD and \(\mu \), \(\sigma \) and \(\xi \) are those of the GEV. When \(\xi \ge 0\), the distribution is referred to as long tailed; when \(\xi < 0\), it is referred to as short tailed. The methods used to estimate the parameters of the GPD and the selection of the threshold are discussed next.

The use of the GPD for modelling the tail of the distribution is also justified by asymptotic arguments in14. In that work, the author argues that it is usually more convenient to interpret extreme value models in terms of return levels rather than individual parameters. To obtain these return levels, the threshold exceedance rate \(P(X>u)\) has to be determined. In this way, using Eq. (4) (\(P(X>x|X>u)=P(X>x)/P(X>u)\)) and considering that \(z_N\) is exceeded on average once every N observations, we have:
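
$$\begin{aligned} \zeta _u \left[ 1 + \xi ^* \left( \frac{z_N-u}{\sigma ^*}\right) \right] ^{-1/\xi ^*} = \frac{1}{N}, \quad \zeta _u = P(X>u), \end{aligned}$$(5)
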
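Then, the return level \(z_N\) is obtained as follows (for the N-year return level, N is replaced by \(N n_y\), where \(n_y\) is the number of observations per year):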
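
$$\begin{aligned} z_N = u + \frac{\sigma ^*}{\xi ^*}\left[ \left( N\zeta _u\right) ^{\xi ^*} - 1\right] , \quad \zeta _u = P(X>u). \end{aligned}$$(6)
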
Many techniques have been proposed for estimating the parameters of the GEV and GPD. In19, the authors applied the maximum likelihood (ML) methodology described in14. However, using this methodology with two-parameter distributions (e.g. Weibull or Gamma) has an important drawback: these distributions are very sensitive to the distance between the high threshold (\(u_2\)) and the first peak16. For this reason, ML can only be used with two-parameter distributions when \(u_2\) coincides with a peak: since this peak is excluded, the first exceedance is then as far from \(u_2\) as possible. A solution would be to use the three-parameter Weibull and Gamma distributions. However, ML estimation of such distributions is very difficult, and the algorithms usually fit two-parameter distributions over a discrete range of location parameters33.

Proposed methodology

As stated before, in this paper we present a new methodology to model this kind of time series considering not only the extreme values but also the rest of the observations. Instead of selecting the maximum values per period (usually a year) or defining thresholds in the distribution of the extreme wave heights, which has an appreciable subjective component, we model the distribution of all wave heights as a mixture of a normal distribution and a uniform distribution. The motivation is that the uniform distribution is associated with regular wave height values, which contaminate the normal distribution of extreme values. This theoretical mixed distribution is then used to fix the threshold for the estimation of the POT distributions. Thus, the threshold is determined in a much more objective and probabilistic way.

Let \((X_1, \ldots , X_n)\) be a sequence of independent random variables representing wave height data. These data follow an unknown continuous distribution, which we assume is a mixture of two distributions: \(Y_1 \sim N(\mu , \sigma )\) and \(Y_2 \sim U(\mu - \delta , \mu + \delta )\). Here \(N(\mu , \sigma )\) is a Gaussian distribution and \(U(\mu - \delta , \mu + \delta )\) is a uniform distribution. The parameter \(\mu > 0\) is the common mean of both distributions, \(\sigma \) is the standard deviation of \(Y_1\), and \(\delta \) is the radius of \(Y_2\), with \(\mu - \delta > 0\). Then \(f(x) = \gamma f_1(x) + (1-\gamma ) f_2(x)\), where \(\gamma \) is the probability that an observation comes from the normal distribution, and f(x), \(f_1(x)\) and \(f_2(x)\) are the probability density functions (pdf) of X, \(Y_1\) and \(Y_2\), respectively.
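
A minimal sketch of the assumed mixture, with the normal CDF written via `math.erf`. The function names are our own illustration, not code from this work. So is the sampling scheme, which draws from the normal component with probability \(\gamma \) and from the uniform one otherwise.

```python
import math
import random


def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def mixture_pdf(x, mu, sigma, delta, gamma):
    """f(x) = gamma * f1(x) + (1 - gamma) * f2(x): N(mu, sigma) mixed with U(mu - delta, mu + delta)."""
    f1 = normal_pdf((x - mu) / sigma) / sigma
    f2 = 1.0 / (2.0 * delta) if abs(x - mu) <= delta else 0.0
    return gamma * f1 + (1.0 - gamma) * f2


def mixture_cdf(x, mu, sigma, delta, gamma):
    """F(x) = gamma * F1(x) + (1 - gamma) * F2(x)."""
    F1 = normal_cdf((x - mu) / sigma)
    F2 = min(1.0, max(0.0, (x - mu + delta) / (2.0 * delta)))
    return gamma * F1 + (1.0 - gamma) * F2


def sample_mixture(n, mu, sigma, delta, gamma, rng=random):
    """Draw n values: from the normal with probability gamma, otherwise from the uniform."""
    return [
        rng.gauss(mu, sigma) if rng.random() < gamma else rng.uniform(mu - delta, mu + delta)
        for _ in range(n)
    ]
```

Because both components are symmetric about \(\mu \), the mixture CDF equals 0.5 at \(x = \mu \) for any \(\sigma \), \(\delta \) and \(\gamma \).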

To estimate the four above-mentioned parameters (\(\mu , \sigma , \delta , \gamma \)), standard statistical theory offers least squares methods, the method of moments and the maximum likelihood (ML) method. In this context, Mathiesen et al.13 found that least squares methods are sensitive to outliers, although Goda34 recommended this method with modified plotting position formulae.

Clauset et al.35 show that methods based on least-squares fitting for the estimation of probability-distribution parameters can have many problems and usually yield biased results. These authors propose ML for fitting parametrized models, such as power-law distributions, to observed data, given that ML provably gives accurate parameter estimates in the limit of large sample sizes36. The ML method is commonly used in many applications, e.g. in metocean applications25, due to its asymptotic properties of being unbiased and efficient. In this regard, White et al.37 conclude that ML estimation outperforms the other fitting methods, as it always yields the lowest variance and bias of the estimator. This is not unexpected, as the ML estimator is asymptotically efficient37,38. Clauset et al.35 also consider the scaling parameter \(\alpha \) of a continuous power law. They show, among other properties, that under mild regularity conditions the ML estimate \({\hat{\alpha }}\) converges almost surely to the true \(\alpha \) and is asymptotically Gaussian, with variance \((\alpha -1)^2/n\). However, ML estimators only achieve these asymptotic properties for large sample sizes. Hosking and Wallis39 showed that ML estimators are non-optimal for sample sizes up to 500, with higher bias and variance than other estimators, such as the moments and probability-weighted moments estimators.

Deluca and Corral40 also estimated a single parameter \(\alpha \) associated with a truncated continuous power-law distribution. To find the ML estimator of the exponent, they directly maximize the log-likelihood \(l(\alpha )\). The reason is practical: their procedure is part of a more general method, valid for arbitrary distributions f(x), for which the derivative of \(l(\alpha )\) can be challenging to evaluate. They note that care is needed when \(\alpha \) is very close to one during the maximization, in which case \(l(\alpha )\) is replaced by its limit at \(\alpha =1\).

Furthermore, ML estimation for two-parameter distributions such as the Weibull and Gamma distributions has the drawback discussed previously16. Besides, ML estimation is known to give poor results when the maximum lies at the boundary of the interval of validity of one of the parameters. On the other hand, the estimation of the GPD parameters is the subject of ongoing research; a quantitative comparison of recent estimation methods was presented by Kang and Song41. In our case, having to estimate four parameters, we have decided to use the method of moments for its analytical simplicity. This method estimates the parameters by equating sample moments with the corresponding population moments. Moreover, it provides adequate estimates in multi-parameter problems and with limited samples, as shown in this work.

Denoting by \(\phi \) the pdf of the standard normal distribution N(0, 1), the pdf of \(Y_1\) is:
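
$$\begin{aligned} f_1(x) = \frac{1}{\sigma }\phi \left( \frac{x-\mu }{\sigma }\right) . \end{aligned}$$(7)
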
The pdf of \(Y_2\) is:
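
$$\begin{aligned} f_2(x) = {\left\{ \begin{array}{ll} \frac{1}{2\delta }, & \mu - \delta \le x \le \mu + \delta ,\\ 0, & \text {otherwise}. \end{array}\right. } \end{aligned}$$(8)
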
Consequently, the pdf of X is:
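
$$\begin{aligned} f(x) = {\left\{ \begin{array}{ll} \frac{\gamma }{\sigma }\phi \left( \frac{x-\mu }{\sigma }\right) + \frac{1-\gamma }{2\delta }, & |x-\mu | \le \delta ,\\ \frac{\gamma }{\sigma }\phi \left( \frac{x-\mu }{\sigma }\right) , & |x-\mu | > \delta . \end{array}\right. } \end{aligned}$$(9)
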
To infer the values of the four parameters of the wave height time series (\(\mu \), \(\sigma \), \(\delta \), \(\gamma \)), we define, for any random variable symmetric about its mean \(\mu \), with pdf g and finite moments, the family of functions:
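
$$\begin{aligned} U_k(x) = \int _{|t-\mu | \ge x} |t-\mu |^k g(t)\,dt, \quad x \ge 0, \quad k = 0, 1, 2, \ldots \end{aligned}$$(10)
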
or, by symmetry:

$$\begin{aligned} U_k(x) = 2\int _{\mu + x}^{\infty } (t-\mu )^k g(t)\,dt. \end{aligned}$$(11)

These functions are finite whenever the corresponding absolute moment of the variable is finite, because:
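
$$\begin{aligned} 0 \le U_k(x) \le U_k(0) = \int _{-\infty }^{\infty } |t-\mu |^k g(t)\,dt = E\left[ |X-\mu |^k\right] . \end{aligned}$$(12)
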
In particular, for the normal and uniform distributions all the moments are finite, and so are all the \(U_k(x)\) functions. For each pair of values x and k, \(U_k(x)\) measures the two-sided tail, beyond a distance x from the mean, of the absolute central moment of order k. It is, therefore, a generalization of the two-sided tail probability \(P(|X-\mu | \ge x)\), which is obtained for \(k = 0\).

Now, if we denote the corresponding functions for the distributions \(Y_1\) and \(Y_2\) by \(U_{k,1}(x)\) and \(U_{k,2}(x)\), it holds that:

$$\begin{aligned} U_k(x) = \gamma U_{k,1}(x) + (1-\gamma ) U_{k,2}(x). \end{aligned}$$(13)

Then, to calculate the function \(U_k(x)\), we just need to calculate the functions \(U_{k,1}(x)\) and \(U_{k,2}(x)\).

Calculation of \(U_k\) for the uniform distribution (\(U_{k,2}\))

From the definitions of \(f_2(x)\) and \(U_k(x)\), if \(\mu > \delta \) and \(0 \le x \le \delta \):
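
$$\begin{aligned} U_{k,2}(x) = 2\int _{\mu + x}^{\mu + \delta } (t-\mu )^k \frac{1}{2\delta }\,dt = \frac{1}{\delta }\int _{x}^{\delta } s^k\,ds, \end{aligned}$$(14)
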
then,
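
$$\begin{aligned} U_{k,2}(x) = {\left\{ \begin{array}{ll} \frac{\delta ^{k+1} - x^{k+1}}{(k+1)\delta }, & 0 \le x \le \delta ,\\ 0, & x > \delta . \end{array}\right. } \end{aligned}$$(15)
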
Calculation of \(U_k\) for the normal distribution (\(U_{k,1}\))

From the definitions of \(f_1(x)\) and \(U_k(x)\), we have:
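
$$\begin{aligned} U_{k,1}(x) = 2\int _{\mu + x}^{\infty } (t-\mu )^k \frac{1}{\sigma }\phi \left( \frac{t-\mu }{\sigma }\right) dt. \end{aligned}$$(16)
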
With the change of variable \(u = \frac{t-\mu }{\sigma }\), we obtain:
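
$$\begin{aligned} U_{k,1}(x) = 2\sigma ^k \int _{x/\sigma }^{\infty } u^k \phi (u)\,du = \sigma ^k\, \Upsilon _k\left( \frac{x}{\sigma }\right) , \end{aligned}$$(17)
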
where \(\Upsilon _k(x) = 2 \int _{x}^\infty u^k\phi (u)du\) is the \(U_k\) function of the N(0, 1) distribution, whose closed forms for \(k=1,2,3\) are given next.

Proposition I

The following equations hold:
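
$$\begin{aligned} \Upsilon _1(x) = 2\phi (x), \end{aligned}$$(18)

$$\begin{aligned} \Upsilon _2(x) = 2\left[ x\phi (x) + 1 - \Phi (x)\right] , \end{aligned}$$(19)

$$\begin{aligned} \Upsilon _3(x) = 2\left( x^2+2\right) \phi (x), \end{aligned}$$(20)
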
where \(\Phi \) is the cumulative distribution function (CDF) of the N(0, 1) distribution. The proof is given below.

The three equations can be obtained by integration by parts, but it is easier to check them by differentiating the functions \(\Upsilon _k(x)\). From their definition, for each value of k, we have:

$$\begin{aligned} \frac{\partial \Upsilon _k(x)}{\partial x} = -2x^k\phi (x). \end{aligned}$$(21)

Taking into account that \(\frac{\partial \phi (x)}{\partial x}=-x\phi (x)\), and \(\frac{\partial \Phi (x)}{\partial x}=\phi (x)\):
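
$$\begin{aligned} \frac{\partial }{\partial x}\left[ 2\phi (x)\right] = -2x\phi (x), \end{aligned}$$(22)

$$\begin{aligned} \frac{\partial }{\partial x}\left\{ 2\left[ x\phi (x) + 1 - \Phi (x)\right] \right\} = 2\left[ \phi (x) - x^2\phi (x) - \phi (x)\right] = -2x^2\phi (x), \end{aligned}$$(23)

$$\begin{aligned} \frac{\partial }{\partial x}\left[ 2\left( x^2+2\right) \phi (x)\right] = 2\left[ 2x\phi (x) - \left( x^2+2\right) x\phi (x)\right] = -2x^3\phi (x). \end{aligned}$$(24)
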
Therefore, the left- and right-hand sides of the previous equations differ by at most a constant. To verify that they are equal, we evaluate them at \(x=0\):
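
$$\begin{aligned} \Upsilon _1(0) = 2\int _0^{\infty } u\phi (u)\,du = \sqrt{2/\pi }, \end{aligned}$$(25)

$$\begin{aligned} \Upsilon _2(0) = 2\int _0^{\infty } u^2\phi (u)\,du = \int _{-\infty }^{\infty } u^2\phi (u)\,du = 1, \end{aligned}$$(26)

$$\begin{aligned} \Upsilon _3(0) = 2\int _0^{\infty } u^3\phi (u)\,du = 2\sqrt{2/\pi }, \end{aligned}$$(27)
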
which match the right-hand sides of Eqs. (18)–(20) evaluated at \(x=0\):
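
$$\begin{aligned} 2\phi (0) = \frac{2}{\sqrt{2\pi }} = \sqrt{2/\pi }, \end{aligned}$$(28)

$$\begin{aligned} 2\left[ 0\cdot \phi (0) + 1 - \Phi (0)\right] = 2\left( 1 - \tfrac{1}{2}\right) = 1, \end{aligned}$$(29)

$$\begin{aligned} 2\left( 0+2\right) \phi (0) = \frac{4}{\sqrt{2\pi }} = 2\sqrt{2/\pi }. \end{aligned}$$(30)
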
Substituting these results in Eq. (17) we have:
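
$$\begin{aligned} U_{1,1}(x) = 2\sigma \phi \left( \frac{x}{\sigma }\right) , \end{aligned}$$(31)

$$\begin{aligned} U_{2,1}(x) = 2\sigma ^2\left[ \frac{x}{\sigma }\phi \left( \frac{x}{\sigma }\right) + 1 - \Phi \left( \frac{x}{\sigma }\right) \right] , \end{aligned}$$(32)

$$\begin{aligned} U_{3,1}(x) = 2\sigma ^3\left[ \left( \frac{x}{\sigma }\right) ^2 + 2\right] \phi \left( \frac{x}{\sigma }\right) . \end{aligned}$$(33)
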
These functions are the basis for estimating the parameters of the distribution of X, except for \(\mu \), as discussed later. The estimates are computed from the corresponding sample estimates of \(U_k\), defined in the following section.
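
The closed forms can be checked numerically. The sketch below combines Eqs. (13), (15), (17) and (31) to (33) and compares them with a midpoint-rule integral of the symmetric form of the definition. `upsilon`, `U_normal`, `U_uniform`, `U_mixture` and `U_numeric` are our own hypothetical names, and the truncation of the normal tail at 12σ is an assumption of the check.

```python
import math

SQRT_2PI = math.sqrt(2.0 * math.pi)


def phi(z):
    """Standard normal pdf."""
    return math.exp(-0.5 * z * z) / SQRT_2PI


def Phi(z):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def upsilon(k, x):
    """Proposition I: Upsilon_k(x) = 2 * integral_x^inf u**k phi(u) du for k = 1, 2, 3."""
    if k == 1:
        return 2.0 * phi(x)
    if k == 2:
        return 2.0 * (x * phi(x) + 1.0 - Phi(x))
    if k == 3:
        return 2.0 * (x * x + 2.0) * phi(x)
    raise ValueError("closed forms are only available for k = 1, 2, 3")


def U_normal(k, x, sigma):
    """U_{k,1}(x) = sigma**k * Upsilon_k(x / sigma)."""
    return sigma ** k * upsilon(k, x / sigma)


def U_uniform(k, x, delta):
    """U_{k,2}(x) for the uniform component."""
    if x > delta:
        return 0.0
    return (delta ** (k + 1) - x ** (k + 1)) / ((k + 1) * delta)


def U_mixture(k, x, sigma, delta, gamma):
    """U_k(x) = gamma * U_{k,1}(x) + (1 - gamma) * U_{k,2}(x)."""
    return gamma * U_normal(k, x, sigma) + (1.0 - gamma) * U_uniform(k, x, delta)


def U_numeric(k, x, sigma, delta, gamma, tail=12.0, steps=100_000):
    """Midpoint-rule value of 2 * integral_x^inf s**k f(mu + s) ds (mu cancels out)."""
    hi = max(tail * sigma, delta)          # the normal tail beyond 12 sigma is negligible
    h = (hi - x) / steps
    total = 0.0
    for i in range(steps):
        s = x + (i + 0.5) * h
        dens = gamma * phi(s / sigma) / sigma
        if s <= delta:
            dens += (1.0 - gamma) / (2.0 * delta)
        total += s ** k * dens
    return 2.0 * total * h
```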

Sample estimates of \(U_k\)

For each value of k and \(x \ge 0\), the sample estimator of \(U_k\) obtained by the method of moments is:
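
$$\begin{aligned} u_k(x) = \frac{1}{n}\sum _{i:\,|x_i-\mu | \ge x} |x_i-\mu |^k, \end{aligned}$$(34)
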
which has the properties described in the following propositions.
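
A direct transcription of the estimator, as a sketch. `sample_u` is a hypothetical helper, and plugging in the sample mean when \(\mu \) is unknown is our assumption, mirroring how \(\mu \) is estimated later. A closed tail (\(\ge x\)) is used so that \(u_0(0) = 1\); for continuous data the choice does not matter.

```python
def sample_u(data, k, x, mu=None):
    """Method-of-moments estimate u_k(x) = (1/n) * sum over |x_i - mu| >= x of |x_i - mu|**k.

    If mu is not given, the sample mean is plugged in (an assumption of this sketch).
    """
    n = len(data)
    if mu is None:
        mu = sum(data) / n
    return sum(abs(v - mu) ** k for v in data if abs(v - mu) >= x) / n


# The three statistics used later for the parameter estimates (hypothetical data, metres)
heights = [1.2, 1.8, 2.1, 2.4, 2.6, 3.0, 3.3, 4.1, 6.5]
u1, u2, u3 = (sample_u(heights, k, 0.0) for k in (1, 2, 3))
```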

Proposition II

The estimator \(u_k(x)\) is an unbiased estimator of \(U_k(x)\). To prove it, we first rewrite \(u_k\) in the form:
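
$$\begin{aligned} u_k(x) = \frac{1}{n}\sum _{i=1}^{n} |X_i-\mu |^k\, I\{|X_i-\mu | \ge x\}, \end{aligned}$$(35)
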
where I is the indicator function. From this expression, we check the condition for an unbiased estimator:
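
$$\begin{aligned} E\left[ u_k(x)\right] = \frac{1}{n}\sum _{i=1}^{n} E\left[ |X_i-\mu |^k I\{|X_i-\mu | \ge x\}\right] = \int _{|t-\mu | \ge x} |t-\mu |^k f(t)\,dt = U_k(x). \end{aligned}$$(36)
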
Proposition III

The estimator \(u_k(x)\) is a consistent estimator of \(U_k(x)\). Using Eq. (35) again, the variance of \(u_k(x)\) is:
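
$$\begin{aligned} Var\left[ u_k(x)\right] = \frac{1}{n} Var\left[ |X-\mu |^k I\{|X-\mu | \ge x\}\right] = \frac{1}{n}\left[ U_{2k}(x) - U_k(x)^2\right] \xrightarrow [n\rightarrow \infty ]{} 0, \end{aligned}$$(37)
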
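taking into account that \(I^2\{.\} = I\{.\}\). Since \(u_k(x)\) is unbiased and its variance tends to zero as \(n \rightarrow \infty \), it converges to \(U_k(x)\) in mean square and is therefore consistent.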
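
For a purely uniform sample, the variance expression of Proposition III can be evaluated exactly, which makes the 1/n decay concrete. The sketch below compares it with a seeded Monte Carlo estimate. It assumes \(\mu = 0\) is known, and the helper names are hypothetical.

```python
import random
import statistics


def uniform_U(k, x, delta):
    """U_{k,2}(x) for U(mu - delta, mu + delta), with x >= 0."""
    if x > delta:
        return 0.0
    return (delta ** (k + 1) - x ** (k + 1)) / ((k + 1) * delta)


def var_u_uniform(k, x, delta, n):
    """Exact Var[u_k(x)] = (U_{2k}(x) - U_k(x)**2) / n for n i.i.d. uniform draws."""
    return (uniform_U(2 * k, x, delta) - uniform_U(k, x, delta) ** 2) / n


def simulated_var(k, x, delta, n, reps=2000, seed=1):
    """Monte Carlo variance of u_k(x) over `reps` samples of size n (mu = 0 assumed known)."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(reps):
        sample = [rng.uniform(-delta, delta) for _ in range(n)]
        estimates.append(sum(abs(v) ** k for v in sample if abs(v) >= x) / n)
    return statistics.variance(estimates)


for n in (10, 100, 1000):
    print(n, var_u_uniform(1, 0.0, 1.0, n))
```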

Parameter estimation of the mixed distribution of X

The estimates are based on the \(u_k(0)\) values, for \(k=1,2,3\), which estimate the corresponding population parameters.

Estimation of \(\mu \)

Given that the mean value of both distributions (uniform and normal) is the same, this value is not affected by the mixture. Therefore, the natural estimator is the sample mean:

$$\begin{aligned} {\hat{\mu }} = {\bar{x}} = \frac{1}{n}\sum _{i=1}^{n} x_i. \end{aligned}$$(38)

Estimation of \(\sigma \), \(\delta \), and \(\gamma \) parameters

Applying the method of moments, we have the following three-equation system:

$$\begin{aligned} u_k(0) = U_k(0), \quad k = 1, 2, 3. \end{aligned}$$(39)

We choose the origin, x = 0, because it retains the maximum amount of information in the \(u_k(x)\) functions defined in Eq. (34): if a nonzero x value is chosen, the estimate discards all observations in the interval \((\mu - x, \mu + x)\). Substituting Eqs. (15), (31), (32) and (33) in Eq. (13), the resulting equation system is:
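
$$\begin{aligned} u_1(0) = {\hat{\gamma }}\,{\hat{\sigma }}\sqrt{2/\pi } + (1-{\hat{\gamma }})\frac{{\hat{\delta }}}{2}, \end{aligned}$$(40)

$$\begin{aligned} u_2(0) = {\hat{\gamma }}\,{\hat{\sigma }}^2 + (1-{\hat{\gamma }})\frac{{\hat{\delta }}^2}{3}, \end{aligned}$$(41)

$$\begin{aligned} u_3(0) = 2{\hat{\gamma }}\,{\hat{\sigma }}^3\sqrt{2/\pi } + (1-{\hat{\gamma }})\frac{{\hat{\delta }}^3}{4}, \end{aligned}$$(42)
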
where the solution must satisfy \({\hat{\sigma }},{\hat{\delta }} > 0\) and \({\hat{\gamma }} \in [0,1]\).
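
The system is nonlinear in \((\sigma , \delta , \gamma )\) and the text does not state how it is solved, so the following is only one possible numerical approach, written with the standard library. Each \(U_k(0)\) scales as \(\sigma ^k\), so the ratios \(u_2/u_1^2\) and \(u_3/u_1^3\) depend only on \(\gamma \) and \(r = \delta /\sigma \). These two scale-free equations are solved by a coarse grid search followed by Newton iterations, and \(\sigma \) is then recovered from \(u_1(0)\). The function names, grid ranges and tolerances are our own assumptions.

```python
import math

C = math.sqrt(2.0 / math.pi)  # E|Z| for Z ~ N(0, 1), so U_{1,1}(0) = C * sigma


def scaled_moments(gamma, r):
    """m_k = U_k(0) / sigma**k (k = 1, 2, 3) for the mixture with delta = r * sigma."""
    normal = (C, 1.0, 2.0 * C)                        # Eqs. (31)-(33) at x = 0
    uniform = (r / 2.0, r ** 2 / 3.0, r ** 3 / 4.0)   # Eq. (15) at x = 0
    return [gamma * a + (1.0 - gamma) * b for a, b in zip(normal, uniform)]


def ratio_residuals(gamma, r, R2, R3):
    """Scale-free conditions m2/m1**2 = u2/u1**2 and m3/m1**3 = u3/u1**3."""
    m1, m2, m3 = scaled_moments(gamma, r)
    return m2 / m1 ** 2 - R2, m3 / m1 ** 3 - R3


def fit_mixture_moments(u1, u2, u3, newton_steps=50):
    """Solve u_k(0) = U_k(0), k = 1, 2, 3, for (sigma, delta, gamma)."""
    R2, R3 = u2 / u1 ** 2, u3 / u1 ** 3
    # 1) coarse grid over gamma in [0, 1] and r = delta / sigma in (0, 10]
    best = (math.inf, 0.0, 1.0)
    for i in range(201):
        g = i / 200.0
        for j in range(1, 401):
            r = j / 40.0
            e2, e3 = ratio_residuals(g, r, R2, R3)
            if e2 * e2 + e3 * e3 < best[0]:
                best = (e2 * e2 + e3 * e3, g, r)
    _, g, r = best
    # 2) Newton refinement with a forward-difference Jacobian
    h = 1e-7
    for _ in range(newton_steps):
        f1, f2 = ratio_residuals(g, r, R2, R3)
        if abs(f1) + abs(f2) < 1e-14:
            break
        a1, a2 = ratio_residuals(g + h, r, R2, R3)
        b1, b2 = ratio_residuals(g, r + h, R2, R3)
        j11, j21 = (a1 - f1) / h, (a2 - f2) / h
        j12, j22 = (b1 - f1) / h, (b2 - f2) / h
        det = j11 * j22 - j12 * j21
        if det == 0.0:
            break
        g -= (f1 * j22 - f2 * j12) / det
        r -= (j11 * f2 - j21 * f1) / det
    sigma = u1 / scaled_moments(g, r)[0]   # recover the scale from u_1(0)
    return sigma, r * sigma, g
```

The Newton step is unconstrained. The conditions \({\hat{\sigma }},{\hat{\delta }} > 0\) and \({\hat{\gamma }} \in [0,1]\) should therefore still be checked on the returned values.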

Adjustment to the mixed distribution

To check whether the obtained estimates are valid, we test whether the set of observations \(\{x_1,\ldots ,x_n\}\) fits the final distribution, whose pdf is:
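
$$\begin{aligned} {\hat{f}}(x) = {\hat{\gamma }} {\hat{f}}_1(x) + (1-{\hat{\gamma }}) {\hat{f}}_2(x), \end{aligned}$$(43)
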
where:
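
$$\begin{aligned} {\hat{f}}_1(x) = \frac{1}{{\hat{\sigma }}}\phi \left( \frac{x-{\hat{\mu }}}{{\hat{\sigma }}}\right) , \end{aligned}$$(44)
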
and:
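
$$\begin{aligned} {\hat{f}}_2(x) = {\left\{ \begin{array}{ll} \frac{1}{2{\hat{\delta }}}, & |x-{\hat{\mu }}| \le {\hat{\delta }},\\ 0, & \text {otherwise}. \end{array}\right. } \end{aligned}$$(45)
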
For this purpose, we use the Kolmogorov-Smirnov test. The one-sample Kolmogorov-Smirnov test42 is commonly used to examine whether a sample comes from a specific distribution, by comparing the observed cumulative distribution function with an assumed theoretical one. The Kolmogorov-Smirnov statistic Z is the largest absolute difference between the observed and theoretical cumulative distributions, i.e. the greatest vertical distance between the empirical distribution function S(x) and the hypothesized distribution function \(F^*(x)\):

$$\begin{aligned} Z = \sup _{x} \left| S(x) - F^*(x)\right| , \end{aligned}$$(46)

where the null hypothesis is \(H_0: F(x) = F^*(x)\) for all \(-\infty< x < \infty \), and the alternative hypothesis is \(H_1: F(x) \ne F^*(x)\) for at least one value of x, F(x) being the true distribution. If Z exceeds the \(1\text {-}\alpha \) quantile value (\(Q(1-\alpha )\)), then we reject \(H_0\) at the level of significance of \(\alpha \). When the number of observations n is large, the \(Q(1-\alpha )\) value can be approximated as43:
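
$$\begin{aligned} Q(1-\alpha ) \approx \frac{\sqrt{-\frac{1}{2}\ln (\alpha /2)}}{\sqrt{n}}. \end{aligned}$$(47)
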
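A sketch of the one-sample statistic for the fitted mixture, with hypothetical helper names, under two assumptions. First, the statistic is evaluated at every sorted observation, whereas the text compares the CDFs at 50 intervals. Second, the critical value uses the standard large-sample approximation \(\sqrt{-\ln (\alpha /2)/2}/\sqrt{n}\), about \(1.36/\sqrt{n}\) for \(\alpha = 0.05\); the exact form of Eq. (47) may differ.

```python
import math


def mixture_cdf(x, mu, sigma, delta, gamma):
    """F*(x) for gamma * N(mu, sigma) + (1 - gamma) * U(mu - delta, mu + delta)."""
    normal = 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))
    uniform = min(1.0, max(0.0, (x - mu + delta) / (2.0 * delta)))
    return gamma * normal + (1.0 - gamma) * uniform


def ks_statistic(data, cdf):
    """Z = sup_x |S(x) - F*(x)|, checking both sides of each jump of the empirical CDF S."""
    xs = sorted(data)
    n = len(xs)
    z = 0.0
    for i, v in enumerate(xs, start=1):
        f = cdf(v)
        z = max(z, i / n - f, f - (i - 1) / n)
    return z


def ks_critical(n, alpha=0.05):
    """Large-sample approximation of Q(1 - alpha); about 1.36 / sqrt(n) for alpha = 0.05."""
    return math.sqrt(-0.5 * math.log(alpha / 2.0)) / math.sqrt(n)


def ks_accepts(data, mu, sigma, delta, gamma, alpha=0.05):
    """True when H0 (data follow the fitted mixture) is not rejected at level alpha."""
    z = ks_statistic(data, lambda v: mixture_cdf(v, mu, sigma, delta, gamma))
    return z <= ks_critical(len(data), alpha)
```

For n = 7303 this approximation gives about 0.0159, in line with the critical values of around 0.016 reported for the normality test.
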
Using the theoretical mixed distribution to fix the threshold of the POT approaches

In this paper, once the mixed distribution has been estimated, we use it to set the threshold for estimating the POT distributions. We assume that using the points above a percentile of the theoretical mixed distribution is more reliable than using a threshold predefined by a trial-and-error procedure. The identification of extreme values when studying a phenomenon relies on a limit value or a probability threshold. A value is considered extreme when it deviates unusually from the central values of the distribution of the phenomenon under investigation, so we consider the probabilistic approach preferable. In our work, the 95%, 97.5% and 99% percentiles are considered as possible thresholds.
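
The percentile thresholds can be obtained by inverting the mixture CDF numerically. The sketch below uses bisection, which is our choice since the text does not specify the inversion method. The helper names and the parameter values in the usage lines are hypothetical.

```python
import math


def mixture_cdf(x, mu, sigma, delta, gamma):
    """CDF of gamma * N(mu, sigma) + (1 - gamma) * U(mu - delta, mu + delta)."""
    normal = 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))
    uniform = min(1.0, max(0.0, (x - mu + delta) / (2.0 * delta)))
    return gamma * normal + (1.0 - gamma) * uniform


def mixture_quantile(p, mu, sigma, delta, gamma, tol=1e-10):
    """x with F(x) = p, found by bisection (F is continuous and non-decreasing)."""
    half_width = max(delta, 10.0 * sigma)   # F is ~0 and ~1 at these ends for moderate p
    lo, hi = mu - half_width, mu + half_width
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mixture_cdf(mid, mu, sigma, delta, gamma) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def exceedances(data, u):
    """Observations above the threshold u (the sample Z used for the POT fits)."""
    return [v for v in data if v > u]


# Usage with hypothetical parameter estimates (metres)
params = dict(mu=2.5, sigma=3.0, delta=1.5, gamma=0.1)
thresholds = {p: mixture_quantile(p, **params) for p in (0.95, 0.975, 0.99)}
```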

In this way, a new sample \(Z = (z_1, z_2, \ldots , z_M)\) of independent random variables is formed by the observations of X that exceed u, u being the threshold and M the number of exceedances. In this work, three distributions are fitted to the threshold exceedances:

- The first one is the GPD44, whose cumulative function is defined in Eq. (4).

- The second distribution is the Gamma distribution, with the following cumulative function:

$$\begin{aligned} F(z;\xi ,\sigma ) = \frac{\gamma (\xi ,\frac{z}{\sigma })}{\Gamma (\xi )}, \end{aligned}$$(48)

where \(\gamma \) is the lower incomplete gamma function (not to be confused with the mixing probability \(\gamma \) of the mixed distribution), and \(\Gamma \) is the Gamma function.

- Finally, the Weibull distribution is also considered:

$$\begin{aligned} F(z;\xi ,\sigma ) = 1 - \exp \left[ -\left( \frac{z}{\sigma } \right) ^\xi \right] . \end{aligned}$$(49)

These three distributions are fitted to the exceedances by Maximum Likelihood Estimation (MLE)13. We then select the best fit based on two objective criteria: BIC17 and AIC18. On the one hand, BIC minimizes the bias between the fitted model and the unknown true model:

$$\begin{aligned} BIC = -2\ln L + k_p \ln M, \end{aligned}$$(50)

where L is the likelihood of the fit, M is the sample size (in our case, the number of exceedances) and \(k_p\) the number of parameters of the distribution. On the other hand, AIC gives the model providing the best compromise between bias and variance:

$$\begin{aligned} AIC = -2\ln L + 2k_p. \end{aligned}$$(51)

Both criteria need to be minimized.
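
For reference, a sketch of the GPD log-likelihood of the excesses \(y = x - u\), using the same parameterisation as Eq. (4), and of the usual definitions of BIC and AIC, which we assume match the criteria used here. The helper names are hypothetical. So are the log-likelihood values in the selection example, chosen only to show that, with equal numbers of parameters, both criteria pick the largest likelihood.

```python
import math


def gpd_loglik(excesses, sigma, xi):
    """Log-likelihood of GPD excesses y = x - u > 0 (survival (1 + xi*y/sigma)**(-1/xi))."""
    m = len(excesses)
    if sigma <= 0.0:
        return -math.inf
    if abs(xi) < 1e-12:                      # exponential limit xi -> 0
        return -m * math.log(sigma) - sum(excesses) / sigma
    total = 0.0
    for y in excesses:
        t = 1.0 + xi * y / sigma
        if t <= 0.0:                         # y outside the support
            return -math.inf
        total += math.log(t)
    return -m * math.log(sigma) - (1.0 + 1.0 / xi) * total


def bic(loglik, k_p, m):
    """BIC = -2 ln L + k_p ln M (to be minimised)."""
    return -2.0 * loglik + k_p * math.log(m)


def aic(loglik, k_p):
    """AIC = -2 ln L + 2 k_p (to be minimised)."""
    return -2.0 * loglik + 2.0 * k_p


# Hypothetical maximised log-likelihoods and parameter counts for M = 120 exceedances
M = 120
fits = {"GPD": (-150.2, 2), "Gamma": (-153.8, 2), "Weibull": (-152.1, 2)}
best_bic = min(fits, key=lambda name: bic(fits[name][0], fits[name][1], M))
best_aic = min(fits, key=lambda name: aic(*fits[name]))
```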

Once the best-fitting distribution is obtained, the return value \(Hs_T\) for the return period T is calculated, and then the confidence intervals are computed. As shown in the experimental section, the GPD is the best distribution in all cases. The quantile of the GPD is:
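
$$\begin{aligned} Hs_T = u + \frac{\sigma }{\xi }\left[ (\lambda T)^{\xi } - 1\right] , \end{aligned}$$(52)
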
where \(\lambda \) is the number of exceedances per year.
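
A sketch of this quantile, with the \(\xi \rightarrow 0\) limit \(u + \sigma \ln (\lambda T)\) added for numerical safety. The helper name and parameter values are hypothetical. By construction, \(\lambda T\) times the GPD exceedance probability of \(Hs_T - u\) equals one, meaning \(Hs_T\) is exceeded on average once in T years.

```python
import math


def gpd_return_value(T, u, sigma, xi, lam):
    """Hs_T = u + sigma / xi * ((lam * T)**xi - 1), lam = exceedances per year, T in years."""
    if abs(xi) < 1e-12:
        return u + sigma * math.log(lam * T)   # limit as xi -> 0
    return u + sigma / xi * ((lam * T) ** xi - 1.0)


# Hypothetical fit: threshold 3 m, sigma = 1 m, xi = 0.1, 5 exceedances per year
levels = {T: gpd_return_value(T, 3.0, 1.0, 0.1, 5.0) for T in (1, 2, 5, 10, 20, 50, 100)}
```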

Finally, confidence intervals are computed. For that, many authors use the classical asymptotic method14. However, Mathiesen et al.13 advocate the use of Monte-Carlo (MC) simulation techniques, and Mackay and Johanning26 proposed a storm-based MC method for calculating return periods of individual wave and crest heights. In the MC method, a random realisation of the maximum wave height in each sea state is simulated from the metocean parameter time series, and the GPD is fitted to the storm peak wave heights exceeding some threshold. Mackay and Johanning26 showed that \(n=1000\) simulations are sufficient to obtain a stable estimate, although we use \(n=100000\), following16. As in our work, the authors of16 used the MC simulation method and, after 100000 iterations, obtained the \(90\%\) confidence interval from the percentiles [\(Hs_{T,5\%};Hs_{T,95\%}\)] of the 100000 simulated \(Hs_T\) values.
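
A simplified sketch of a simulation-based 90% interval. It is a plain parametric bootstrap, not the storm-based MC method of Mackay and Johanning26 nor the exact procedure of16. Excesses are simulated from a fitted GPD, the GPD is refitted and \(Hs_T\) is recomputed. The 5% and 95% percentiles of the simulated values are then taken. The refit uses the method of moments, swapped in for ML to keep the code dependency-free and valid only for \(\xi < 1/2\). Helper names are hypothetical, and the default number of simulations is far smaller than the 100000 used here.

```python
import math
import random
import statistics


def gpd_sample(m, sigma, xi, rng):
    """Inverse-transform draws of m GPD excesses."""
    out = []
    for _ in range(m):
        v = 1.0 - rng.random()                    # uniform on (0, 1]
        if abs(xi) < 1e-12:
            out.append(-sigma * math.log(v))
        else:
            out.append(sigma / xi * (v ** (-xi) - 1.0))
    return out


def gpd_fit_moments(excesses):
    """Method-of-moments GPD fit: xi = (1 - mean**2 / var) / 2, sigma = mean * (1 - xi)."""
    mean = statistics.fmean(excesses)
    var = statistics.variance(excesses)
    xi = 0.5 * (1.0 - mean * mean / var)
    return mean * (1.0 - xi), xi


def return_value(T, u, sigma, xi, lam):
    if abs(xi) < 1e-12:
        return u + sigma * math.log(lam * T)
    return u + sigma / xi * ((lam * T) ** xi - 1.0)


def bootstrap_ci(T, u, sigma, xi, lam, m, n_sim=2000, seed=0):
    """90% interval from the 5% and 95% percentiles of simulated Hs_T values."""
    rng = random.Random(seed)
    sims = []
    for _ in range(n_sim):
        s_hat, xi_hat = gpd_fit_moments(gpd_sample(m, sigma, xi, rng))
        sims.append(return_value(T, u, s_hat, xi_hat, lam))
    cuts = statistics.quantiles(sims, n=20)      # 19 cut points at 5%, 10%, ..., 95%
    return cuts[0], cuts[-1]
```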

Dataset and experimental design

Dataset

As stated before, the objective of this work is to model wave height time series in which extreme values are present. For this reason, we evaluate the proposed methodology on several real-world wave height time series from different locations:

- Gulf of Alaska: two wave height time series collected by the National Data Buoy Center (NDBC) of the USA45 in the Gulf of Alaska are used, from the buoys with registration numbers 46001 and 46075. For both buoys, one value every six hours is considered. Buoy 46001 is an offshore buoy located at 56.23N 147.95W, and data from 1st January 2008 to 31st December 2013 are considered, with a total of 8767 observations. Buoy 46075 is an offshore buoy located at 53.98N 160.82W, and data from 1st January 2011 to 31st December 2015 are used (7303 observations).

- Puerto Rico: six offshore buoys from Puerto Rico are used to evaluate the proposed methodology. These buoys also belong to the NDBC of the USA, with registration ids 41043, 41044, 41046, 41047, 41048 and 41049. One value every six hours is considered, and data from 1st January 2011 to 31st December 2015 are used (7303 observations for each buoy). The geographical coordinates of the buoys are 21.13N 64.86W, 21.58N 58.63W, 23.83N 68.42W, 27.52N 71.53W, 31.86N 69.59W and 27.54N 62.95W, respectively.

- Spain: this dataset comes from the SIMAR-44 hindcast database provided by Puertos del Estado (Spain). The point is located in the Strait of Gibraltar, at coordinates 36N 6W. One value every three hours is considered, from 1st January 1959 to 31st December 2000, giving a set of 122278 observations. This is the largest time series in our experiments and, since it covers 42 years, it allows long return periods of wave height to be estimated.

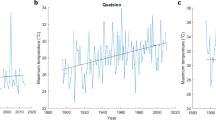

Table 1 summarizes the information for each time series: the type of buoy, the location, the geographical coordinates, the number of observations, and the mean (Hs) and maximum values of the time series. The locations are shown on the map in Fig. 1, and the time series are represented in Fig. 2.

Experimental design

The experimental design for the time series under study is presented in this subsection. We divide the experiments into three stages:

- First, a Kolmogorov-Smirnov test is applied to determine whether the wave height distributions follow a normal distribution, i.e. whether they fit a simple Gaussian. If they did, the proposed methodology would not make sense; otherwise, we proceed with the following stages.

- Second, the methodology is tested on the raw time series presented in the previous subsection. The algorithm estimates the parameters of the mixed distribution \((\mu , \sigma , \delta , \gamma )\) for each wave height time series. The Kolmogorov-Smirnov test is then applied to check whether the estimated distribution corresponds to the empirical distribution of the data. Note that the Kolmogorov-Smirnov test is applied with \(n=50\), which is an acceptable value for Eq. (47); that is, the CDFs of the estimated theoretical distribution and of the empirical one are compared at 50 intervals. The comparison between the estimated theoretical distribution and the empirical one is also shown graphically in Fig. 3.

- Finally, as stated in previous sections, we use the theoretical mixed distribution to establish the threshold: we discard the values below the threshold and fit the GPD, Gamma and Weibull distributions to the remaining values (those above the threshold). Based on two objective criteria, BIC and AIC, we select the best-fitting distribution and, finally, calculate its return values for the return periods \(T=(1,2,5,10,20,50,100)\) years.

Results and discussion

As mentioned above, the first phase of the experimentation checks that the wave height distributions do not follow a normal distribution. The Kolmogorov-Smirnov test yields Z values between 0.6 and 0.8, whereas the critical values are around 0.016. Moreover, the p-value is 0 in all cases and therefore lower than any \(\alpha \) value. Thus, the null hypothesis is rejected for all time series, and the wave height distributions do not fit a simple Gaussian. We therefore proceed to the second part of the experimentation.
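The order of magnitude of the critical value quoted above can be checked with a short sketch. For a one-sample Kolmogorov-Smirnov test, the large-sample critical value at \(\alpha = 0.05\) is approximately \(1.358/\sqrt{N}\), which for \(N \approx 7000\) observations gives about 0.016. The Python below computes the statistic against a normal distribution fitted with the sample mean and standard deviation; the function names are illustrative and are not taken from the authors' code. Strictly, estimating \(\mu\) and \(\sigma\) from the same sample makes these critical values conservative (the Lilliefors variant of the test corrects for this), but with statistics between 0.6 and 0.8 the rejection is unaffected.

```python
import math


def normal_cdf(x, mu, sigma):
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def ks_statistic_normal(sample):
    """Two-sided KS distance between the sample ECDF and a fitted normal CDF."""
    xs = sorted(sample)
    n = len(xs)
    mu = sum(xs) / n
    sigma = math.sqrt(sum((x - mu) ** 2 for x in xs) / (n - 1))
    d = 0.0
    for i, x in enumerate(xs):
        f = normal_cdf(x, mu, sigma)
        # The ECDF steps from i/n to (i+1)/n at x: check both sides of the step.
        d = max(d, (i + 1) / n - f, f - i / n)
    return d


def ks_critical_value(n, c_alpha=1.358):
    """Asymptotic critical value c(alpha)/sqrt(n); c(0.05) is about 1.358."""
    return c_alpha / math.sqrt(n)


print(round(ks_critical_value(7000), 4))  # 0.0162
# A strongly bimodal sample is clearly rejected as non-normal.
bimodal = [0.0] * 500 + [10.0] * 500
print(ks_statistic_normal(bimodal) > ks_critical_value(len(bimodal)))  # True
```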

The estimates for the mixed distribution proposed in this paper and the corresponding Kolmogorov-Smirnov test results are shown in Table 2. The estimate of \(\mu \) is identical to the mean value of each time series (see Table 1), because the sample mean is used as its estimator (see section “Proposed methodology”). The estimate of \(\sigma \) is very high relative to the mean. This makes sense given that the estimation is made with approximately 7000 points, which requires a high variance. \(\delta \) takes values in the interval (0.74, 1.80) because some wave heights, although not very small, contaminate the normal distribution (when three-month intervals are considered, the parameter value is lower). \(\gamma \), the probability that an observation comes from the normal distribution, is very low. Again, this makes sense because most of the data are not extreme values but regular waves (uniform distribution). The Kolmogorov-Smirnov test does not reject the null hypothesis in any case, \(Z < Q(1-\alpha )\), indicating that the mixed distribution with the estimated parameters is consistent with the empirical values. Therefore, the proposed approach can be accepted as a good method to estimate the theoretical distribution of wave height time series. Note that the Z values are lower for the time series with higher mean values, so the wave height time series from buoys 46001 and 46075 are better fitted by this distribution, while the Spanish time series shows a worse fit. The Kolmogorov-Smirnov results are complemented by the plots of the empirical and theoretical distributions in Fig. 3, which show how closely the estimated theoretical distributions follow the empirical distributions for each database.
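The comparison of CDFs at \(n=50\) points described in the experimental design can be sketched as follows. This is a hypothetical Python illustration, not the authors' implementation: it assumes the 50 evaluation points are equally spaced between the sample minimum and maximum (the exact discretisation is defined by Eq. (47)), and it uses the illustrative mixture parametrisation \(\gamma\,\mathcal{N}(\mu,\sigma^2) + (1-\gamma)\,\mathcal{U}(\mu-\delta,\mu+\delta)\), whose uniform support is itself an assumption. The usage lines build an evenly spaced sample on (-1, 1), which should lie close to the uniform component alone (\(\gamma = 0\), \(\delta = 1\)).

```python
import bisect
import math


def mixture_cdf(x, mu, sigma, delta, gamma):
    """Assumed mixed CDF: gamma * N(mu, sigma^2) + (1 - gamma) * U(mu - delta, mu + delta)."""
    normal = 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))
    uniform = min(max((x - (mu - delta)) / (2.0 * delta), 0.0), 1.0)
    return gamma * normal + (1.0 - gamma) * uniform


def grid_ks_distance(sample, mu, sigma, delta, gamma, n_points=50):
    """Largest gap between empirical and theoretical CDFs on an equally spaced grid."""
    xs = sorted(sample)
    lo, hi = xs[0], xs[-1]
    step = (hi - lo) / (n_points - 1)
    d = 0.0
    for k in range(n_points):
        x = lo + k * step
        empirical = bisect.bisect_right(xs, x) / len(xs)  # fraction of values <= x
        d = max(d, abs(empirical - mixture_cdf(x, mu, sigma, delta, gamma)))
    return d


sample = [-1.0 + 2.0 * (i + 0.5) / 1000 for i in range(1000)]
print(grid_ks_distance(sample, mu=0.0, sigma=1.0, delta=1.0, gamma=0.0) < 0.01)  # True
```

Evaluating on a fixed grid rather than at every observation keeps the cost at 50 CDF evaluations per series, at the price of possibly missing the largest gap between grid points.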

For the third experiment, Table 3 shows the BIC and AIC values obtained when the GPD, Gamma and Weibull distributions are fitted to the values over the thresholds given by the 95%, 97.5% and 99% percentiles of the theoretical mixed distribution. The number of POTs (M) and the number of peaks per year (\(\lambda \)) are also included. As expected, the higher the percentile, the fewer peaks per year, because fewer values exceed the threshold. The results confirm that the GPD is the best-fitting distribution for all databases and all percentiles.
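The quantities in Table 3 follow standard definitions: \(\mathrm{AIC} = 2k - 2\ln \hat{L}\) and \(\mathrm{BIC} = k\ln M - 2\ln \hat{L}\), where \(k\) is the number of fitted parameters, \(M\) the number of POTs and \(\hat{L}\) the maximised likelihood, while \(\lambda\) is \(M\) divided by the record length in years. The Python sketch below evaluates these for a three-parameter GPD (location, scale and shape). It only evaluates the log-likelihood at given parameter values; the maximisation itself (e.g. with a numerical optimiser) is omitted, and all function names are illustrative.

```python
import math


def gpd_loglik(data, loc, scale, shape):
    """Log-likelihood of the three-parameter GPD (location, scale, shape)."""
    if scale <= 0:
        return -math.inf
    ll = 0.0
    for x in data:
        z = (x - loc) / scale
        if z < 0:
            return -math.inf  # below the location: outside the support
        if abs(shape) < 1e-12:  # exponential limit as shape -> 0
            ll += -math.log(scale) - z
        else:
            t = 1.0 + shape * z
            if t <= 0:
                return -math.inf  # beyond the upper end point when shape < 0
            ll += -math.log(scale) - (1.0 / shape + 1.0) * math.log(t)
    return ll


def aic(loglik, k):
    return 2 * k - 2 * loglik


def bic(loglik, k, m):
    return k * math.log(m) - 2 * loglik


def peaks_per_year(m, years):
    """lambda: average number of threshold exceedances per year."""
    return m / years


def best_model(scores):
    """Name of the candidate distribution with the lowest criterion value."""
    return min(scores, key=scores.get)
```

Because BIC penalises each parameter by \(\ln M\) instead of 2, the two criteria rank candidates identically whenever the candidates share the same \(k\), which is one reason to expect strongly correlated AIC and BIC values.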

There is an almost perfect correlation between the BIC and AIC values for the three percentiles (0.977, 0.998 and 1.000, respectively), computed over the three distributions and the nine time series. Table 3 shows that the number of annual peaks is more reasonable for the 97.5% and 99% percentiles. This is because, the lower the threshold, the more waves from the uniform distribution (i.e. non-extreme waves) contaminate the distribution of extreme waves, and the more peaks of little relevance are counted. For instance, for buoy 46001, the BIC values for the GPD are 786.42 and 313.09 (97.5% and 99% percentiles, respectively), which are 21.57% and 19.61% lower than those of the Gamma distribution, and 31.39% and 29.05% lower than those of the Weibull distribution. These results differ from those obtained by Mazas and Hamm16 for the SIMAR-44 time series, where the GPD performs poorly under these criteria compared with the Gamma distribution; note, however, that we use a 3-parameter GPD instead of a 2-parameter one.

Finally, the return values and confidence intervals for each dataset and threshold are summarized in Table 4, for return periods \(T\in \{1,2,5,10,20,50,100\}\) years. Comparing the return values and confidence intervals obtained for the SIMAR-44 time series with those of Mazas and Hamm16, the results differ because of the different thresholds and because they consider 44 years instead of 42, including the first and last years although these are incomplete. We agree with Mazas and Hamm that choosing the right threshold is not always straightforward. For example, with the 97.5% percentile of the theoretical mixed distribution, the return values and confidence intervals are quite similar to those obtained by Mazas and Hamm (with the slight differences discussed above). For the remaining buoys, to our knowledge, no other reference values are available. These estimates are approximate, given the short length of the time series (six years for buoy 46001 and five for the other buoys). Comparing them with the maximum values in Table 1, for buoys 46075, 41043 and 41046 the confidence intervals for the 95% percentile tend to contain these values more often; for buoys 41047, 41048, 41049 and SIMAR-44 the confidence intervals are more closely adjusted to them; and for buoys 46001 and 41044 none of the confidence intervals contain them.
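For a GPD with location \(u\), scale \(\tilde{\sigma}\) and shape \(\xi\) fitted to exceedances that occur on average \(\lambda\) times per year, the \(T\)-year return value is the level exceeded once every \(T\) years on average, i.e. the solution of \(\lambda T\,[1+\xi (x_T-u)/\tilde{\sigma}]^{-1/\xi} = 1\), which gives \(x_T = u + \frac{\tilde{\sigma}}{\xi}\left[(\lambda T)^{\xi} - 1\right]\) for \(\xi \neq 0\) and \(x_T = u + \tilde{\sigma}\ln(\lambda T)\) for \(\xi = 0\). This is the standard POT return-level formula (see, e.g., Coles14). The Python sketch below may differ in detail from the computation behind Table 4; the parameter values are hypothetical and the confidence intervals are not reproduced.

```python
import math


def gpd_return_value(T, lam, loc, scale, shape):
    """T-year return value for GPD exceedances occurring lam times per year.

    Meaningful when lam * T >= 1, i.e. at least one exceedance is expected in T years.
    """
    n = lam * T  # expected number of exceedances in T years
    if abs(shape) < 1e-12:
        return loc + scale * math.log(n)
    return loc + scale / shape * (n ** shape - 1.0)


def gpd_survival(x, loc, scale, shape):
    """P(X > x) for a GPD exceedance (0 beyond the upper end point when shape < 0)."""
    z = (x - loc) / scale
    if abs(shape) < 1e-12:
        return math.exp(-z)
    t = 1.0 + shape * z
    if t <= 0:
        return 0.0
    return t ** (-1.0 / shape)


# Hypothetical parameters, for illustration only.
lam, loc, scale, shape = 20.0, 3.0, 0.8, -0.1
for T in (1, 2, 5, 10, 20, 50, 100):
    x_T = gpd_return_value(T, lam, loc, scale, shape)
    # Consistency check: on average one exceedance of x_T in T years.
    assert abs(lam * T * gpd_survival(x_T, loc, scale, shape) - 1.0) < 1e-9
```

With a negative shape, as in the hypothetical values above, the return values approach the upper end point \(u - \tilde{\sigma}/\xi\) as \(T\) grows, so long-period estimates are very sensitive to the fitted shape parameter.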

Conclusions

This paper proposes a novel methodology for wave height time series modelling based on the assumption that, when high waves are less common than lower ones, the distribution of the time series can be modelled as a mixture of a normal distribution and a uniform distribution. The methodology is based on the method of moments, and we use it to establish the threshold for the peak-over-threshold (POT) methodology. Determining this threshold automatically is important, since the alternative is a trial-and-error method which, as several authors agree, can be problematic and quite subjective. The whole approach is tested on nine real-world time series collected from the Gulf of Alaska (46001 and 46075), Puerto Rico (41043, 41044, 41046, 41047, 41048 and 41049) and Spain (SIMAR-44). For SIMAR-44, we compare our return values with those obtained by Mazas and Hamm. The return values obtained for the remaining buoys can be considered an initial approximation, given the short length of the time series.

The experimentation is divided into three stages. The first verifies that the time series do not follow a normal distribution and, therefore, that it makes sense to apply the proposed methodology. The second analyses the estimation of the distribution for the nine time series, showing that the estimated theoretical distribution fits the empirical one. These results are corroborated by a Kolmogorov-Smirnov test with \(Z < Q(1-\alpha )\) for all databases. The third uses the 95%, 97.5% and 99% percentiles of the estimated theoretical distribution as candidate thresholds for the POT distribution estimation. The results show that the Generalized Pareto Distribution is the best-fitting POT distribution in all cases, and its return values and confidence intervals are reported.

A future line of work could address the segmentation of the time series based on the percentiles of the obtained distribution, followed by the prediction of the resulting segments. We also plan to extend this work to time series from other fields and to more advanced forecasting methods, such as artificial neural networks. One line of work already underway is the elimination of the uniform noise, after which extreme values can be extracted from a normal distribution. Although the probability distributions of extreme values are independent of the starting distribution, we believe that knowing it would allow a better approximation.

Data availability

The datasets generated and/or analysed during the current study and the code generated in the experimental design are available at https://github.com/amduran/mixed_distributions.git, with the exception of SIMAR-44 which is available on request from Puertos del Estado.

References

Peng, S. et al. Improving the real-time marine forecasting of the northern South China Sea by assimilation of glider-observed T/S profiles. Sci. Rep. 9, 1–9 (2019).

Soares, C. G. & Scotto, M. Modelling uncertainty in long-term predictions of significant wave height. Ocean Eng. 28, 329–342 (2001).

Saetra, Ø. & Bidlot, J.-R. Assessment of the ECMWF Ensemble Prediction System for Waves and Marine Winds (European Centre for Medium-Range Weather Forecasts, 2002).

Feng, X., Tsimplis, M., Yelland, M. & Quartly, G. Changes in significant and maximum wave heights in the Norwegian Sea. Global Planet. Change 113, 68–76 (2014).

Esling, P. & Agon, C. Time-series data mining. ACM Comput. Surv. (CSUR) 45, 12 (2012).

Fontes, C. H. & Budman, H. A hybrid clustering approach for multivariate time series – a case study applied to failure analysis in a gas turbine. ISA Trans. 2017, 5 (2017).

Pérez-Ortiz, M. et al. On the use of evolutionary time series analysis for segmenting paleoclimate data. Neurocomputing 2017, 5 (2017).

Kim, J.-S., Seo, K.-W., Chen, J. & Wilson, C. Uncertainty in GRACE/GRACE Follow-On global ocean mass change estimates due to mis-modeled glacial isostatic adjustment and geocenter motion. Sci. Rep. 12, 1–7 (2022).

Omranian, N., Mueller-Roeber, B. & Nikoloski, Z. Segmentation of biological multivariate time-series data. Sci. Rep. 5, 1–6 (2015).

Bagnall, A., Lines, J., Hills, J. & Bostrom, A. Time-series classification with COTE: The collective of transformation-based ensembles. IEEE Trans. Knowl. Data Eng. 27, 2522–2535 (2015).

Nikolaou, A. et al. Detection of early warning signals in paleoclimate data using a genetic time series segmentation algorithm. Clim. Dyn. 44, 1919–1933 (2015).

Zhao, Y. et al. A novel bidirectional mechanism based on time series model for wind power forecasting. Appl. Energy 177, 793–803 (2016).

Mathiesen, M. et al. Recommended practice for extreme wave analysis. J. Hydraul. Res. 32, 803–814 (1994).

Coles, S. An Introduction to Statistical Modeling of Extreme Values, vol. 208 (Springer, 2001).

Méndez, F. J., Menéndez, M., Luceño, A. & Losada, I. J. Estimation of the long-term variability of extreme significant wave height using a time-dependent peak over threshold (POT) model. J. Geophys. Res.: Oceans 111, 5 (2006).

Mazas, F. & Hamm, L. A multi-distribution approach to POT methods for determining extreme wave heights. Coast. Eng. 58, 385–394 (2011).

Schwarz, G. Estimating the dimension of a model. Ann. Stat. 6, 461–464 (1978).

Akaike, H. Information theory and an extension of the maximum likelihood principle. In Selected Papers of Hirotugu Akaike 199–213 (Springer, 1998).

Petrov, V., Soares, C. G. & Gotovac, H. Prediction of extreme significant wave heights using maximum entropy. Coast. Eng. 74, 1–10 (2013).

Durán-Rosal, A., Fernández, J., Gutiérrez, P. & Hervás-Martínez, C. Detection and prediction of segments containing extreme significant wave heights. Ocean Eng. 142, 268–279 (2017).

Dorado-Moreno, M. et al. Robust estimation of wind power ramp events with reservoir computing. Renew. Energy 111, 428–437 (2017).

Guijo-Rubio, D. et al. Prediction of low-visibility events due to fog using ordinal classification. Atmos. Res. 214, 64–73 (2018).

Durán-Rosal, A. et al. Efficient fog prediction with multi-objective evolutionary neural networks. Appl. Soft Comput. 70, 347–358 (2018).

Bowman, K. & Shenton, L. Estimation: Method of moments. Encycl. Stat. Sci. 3, 5 (2004).

Jonathan, P. & Ewans, K. Statistical modelling of extreme ocean environments for marine design: A review. Ocean Eng. 62, 91–109 (2013).

Mackay, E. & Johanning, L. Long-term distributions of individual wave and crest heights. Ocean Eng. 165, 164–183 (2018).

DeLeo, F., Besio, G., Briganti, R. & Vanem, E. Non-stationary extreme value analysis of sea states based on linear trends analysis of annual maxima series of significant wave height and peak period in the Mediterranean Sea. Coast. Eng. 167, 103896 (2021).

Davison, A. C. & Smith, R. L. Models for exceedances over high thresholds. J. R. Stat. Soc. Ser. B (Methodol.) 1990, 393–442 (1990).

Ferreira, J. & Soares, C. G. An application of the peaks over threshold method to predict extremes of significant wave height. J. Offshore Mech. Arct. Eng. 120, 165–176 (1998).

Caires, S. & Sterl, A. 100-year return value estimates for ocean wind speed and significant wave height from the ERA-40 data. J. Clim. 18, 1032–1048 (2005).

Stefanakos, C. N. & Athanassoulis, G. A. Extreme value predictions based on nonstationary time series of wave data. Environmetrics 17, 25–46 (2006).

Jonathan, P., Randell, D., Wadsworth, J. & Tawn, J. Uncertainties in return values from extreme value analysis of peaks over threshold using the generalised Pareto distribution. Ocean Eng. 220, 107725 (2021).

Panchang, V. G. & Gupta, R. C. On the determination of three-parameter Weibull MLE’s. Commun. Stat.-Simul. Comput. 18, 1037–1057 (1989).

Goda, Y. Random Seas and Design of Maritime Structures, vol. 33 (World Scientific Publishing Company, 2010).

Clauset, A., Shalizi, C. R. & Newman, M. E. Power-law distributions in empirical data. SIAM Rev. 51, 661–703 (2009).

Wasserman, L. All of Statistics: A Concise Course in Statistical Inference, vol. 26 (Springer, 2004).

White, E. P., Enquist, B. J. & Green, J. L. On estimating the exponent of power-law frequency distributions. Ecology 89, 905–912 (2008).

Bauke, H. Parameter estimation for power-law distributions by maximum likelihood methods. Eur. Phys. J. B 58, 167–173 (2007).

Hosking, J. R. & Wallis, J. R. Parameter and quantile estimation for the generalized Pareto distribution. Technometrics 29, 339–349 (1987).

Deluca, A. & Corral, Á. Fitting and goodness-of-fit test of non-truncated and truncated power-law distributions. Acta Geophys. 61, 1351–1394 (2013).

Kang, S. & Song, J. Parameter and quantile estimation for the generalized Pareto distribution in peaks over threshold framework. J. Korean Stat. Soc. 46, 487–501 (2017).

Chakravarty, I. M., Roy, J. & Laha, R. G. Handbook of Methods of Applied Statistics (McGraw-Hill, 1967).

Pearson, E. S. & Hartley, H. O. Biometrika Tables for Statisticians (Cambridge University Press, 1966).

Pickands, J. Statistical inference using extreme order statistics. Ann. Stat. 1975, 119–131 (1975).

National Data Buoy Center. http://www.ndbc.noaa.gov/. (National Oceanic and Atmospheric Administration of the USA (NOAA), 2021).

Acknowledgements

This work was supported in part by the “Agencia Española de Investigación” under Grant PID2020-115454GB-C22, AEI/10.13039/501100011033, and in part by the “Consejería de Transformación Económica, Industria, Conocimiento y Universidades (Junta de Andalucía) y Programa Operativo FEDER 2014-2020” under Grant PY20 00074. We would like to thank Puertos del Estado (Spain) for providing the dataset from the SIMAR-44 hindcast database.

Author information

Contributions

A.M.D.R. and P.A.G. processed the experimental data; M.C., P.A. and C.H.M. were involved in planning and supervised the work; A.M.D.R. performed the analysis, wrote the manuscript and designed the figures. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Durán-Rosal, A.M., Carbonero, M., Gutiérrez, P.A. et al. A mixed distribution to fix the threshold for Peak-Over-Threshold wave height estimation. Sci Rep 12, 17327 (2022). https://doi.org/10.1038/s41598-022-22243-8

DOI: https://doi.org/10.1038/s41598-022-22243-8
