Abstract

Although complementary metal-oxide-semiconductor (CMOS) technology has made huge progress, constructing an artificial neural network in CMOS technology with functionality comparable to that of the human cerebral cortex, which contains 10¹⁰–10¹¹ neurons, remains a great challenge. Recently, the phase-change memristor neuron has been proposed for realizing a human-brain-level neural network that operates at high speed while consuming little power and offering a high integration density. Although a memristor neuron can be scaled down to the nanometer range, integrating 10¹⁰–10¹¹ neurons still faces many problems in circuit complexity, chip area, power consumption, etc. In this work, we propose a CMOS-compatible HfO2 memristor neuron that can be well integrated with silicon circuits. A hybrid Convolutional Neural Network (CNN) based on the HfO2 memristor neuron is proposed and constructed. In the hybrid CNN, one memristive neuron can behave as multiple physical neurons based on the Time Division Multiplexing Access (TDMA) technique. Handwritten digit recognition is demonstrated in the hybrid CNN with a memristive neuron acting as 784 physical neurons. This work paves the way towards substantially reducing the number of neurons required in hardware and towards realizing more complex, or even human-cerebral-cortex-level, memristive neural networks.

Introduction

The human brain, consisting of 10¹⁰–10¹¹ neurons and 10¹⁴–10¹⁵ synapses, has powerful cognitive capability while consuming little power (~15 watts)1. Inspired by the advanced information processing scheme of the brain, neuromorphic hardware using artificial neurons and synapses has attracted extensive attention in recent years2. Although CMOS device integration has improved through the periodic doubling of transistor density over the past half century3, it is still challenging to implement neuromorphic hardware in standard CMOS technology with functionality comparable to that of the human brain. For example, the TrueNorth neuromorphic chip, with 5.4 × 10⁹ transistors, realizes only 1 × 10⁶ spiking neurons and 2.56 × 10⁸ synapses4. To construct brain-level neuromorphic hardware, nanometer-scale neuromorphic devices need to be developed, and the Time Division Multiplexing Access (TDMA) technique may be a possible pathway for reducing the number of physical neurons.

Recently, great effort has been made to develop neuromorphic devices that can emulate the behaviors of neuronal systems. Among the emerging neuromorphic devices (e.g., neuron transistor5, phase-change device6, ferroelectric device7, magnetic device8), memristors, whose resistance can be quasi-continuously adjusted by electrical stimuli, have attracted the most attention9,10. Since Williams et al. announced in 2008 the discovery of the memristor11,12,13, memristors have been shown to effectively emulate synaptic plasticity, such as long-term potentiation/depression14,15 and spike-timing dependent plasticity16,17, as well as brain-like behaviors, such as learning and forgetting functions18,19. More recently, small-scale neuromorphic hardware using memristors as synapses has been demonstrated to realize brain-like behaviors. M. Ziegler et al. and Y. V. Pershin et al. each reported a simple neural network consisting of three electronic neurons connected by memristor-based synapses for implementing associative learning like that of Pavlov’s dog20,21. S. B. Eryilmaz et al. and S. G. Hu et al. each realized associative memory in Hopfield neural networks based on memristor synapses22,23. D. B. Strukov et al. realized 33-pixel pattern classification in memristive crossbar circuits via ex situ or in situ training24,25,26. A. Serb et al. demonstrated pattern classification through unsupervised learning in a winner-take-all neural network based on multi-state memristive synapses27. P. Yao et al. implemented 3-class face classification in a 1024-cell one-transistor-one-memristor (1T1R) synapse array28. W. D. Lu et al. demonstrated image reconstruction in 32 × 32 memristor crossbar hardware through sparse coding29.

In 2016, researchers from IBM reported a stochastic neuron with the neuronal state directly stored in a phase-change device30; subsequently, they presented neuromorphic architectures comprising neurons and synapses based on phase-change devices for the tasks of correlation detection31,32,33, pattern learning and feature extraction34. Although the phase-change spiking neuron shows good neuromorphic properties, a memristor using CMOS-compatible materials is more promising and competitive for constructing a spiking neuron because of its simple structure, excellent scalability, low cost, and good compatibility with standard CMOS processes35,36. On the other hand, implementing memristive neural networks requires building important types of artificial neural networks from memristive neurons. The convolutional neural network (CNN) is a variant of the multilayer perceptron (MLP) inspired by the organization of the animal visual cortex37, and it has been widely applied in many fields of artificial intelligence, such as handwritten numeral recognition38, object detection39, and video classification40. Demonstrating a CNN built on a memristive neuron may pave the way for more extensive use of memristive neural networks in artificial intelligence. Most recently, fully integrated memristive neural networks were realized, which provide a more efficient approach to implementing neural network algorithms than traditional hardware41. L. Ni et al. achieved high energy efficiency and throughput in a ReRAM-based binary CNN accelerator42 and proposed an area-saving matrix-vector multiplication accelerator based on a binary RRAM crossbar, which exhibits superior performance and low power consumption in machine learning43.

In this work, a CMOS-compatible HfO2 memristor neuron, which can mimic the integration of pre-synaptic current in the membrane of a biological neuron, is designed and implemented. A hybrid CNN based on the HfO2 memristor neuron has been constructed. In the network, one memristive neuron can behave as 784 physical neurons based on the Time Division Multiplexing Access (TDMA) technique. Handwritten digits can be recognized by the CNN. This study opens the possibility of both substantially reducing the number of physical neurons required in hardware and realizing more complex, or even human-brain-level, memristive neural networks.

Materials and Methods

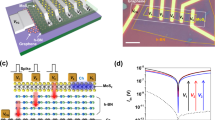

The memristor used as the neuron is based on a metal-insulator-metal (MIM) structure with a thin HfO2 layer as the insulator. The device fabrication details were described in our previous work23. Dies with different electrode areas were cut from the wafer and packaged in a standard 28-pin dual in-line package (DIP) for constructing the memristive neuron. The hybrid Convolutional Neural Network (CNN) based on the memristive neuron was realized on a printed circuit board (PCB) (see Supplementary Figure 1). The CNN consists of one memristive neuron, four transmission-gate chips (Maxim MAX313), seven operational amplifiers (Texas Instruments OPA4322AIPWR), and one comparator chip (Texas Instruments LM339). Electrical characteristics of the memristor were measured with a Keithley 4200 semiconductor characterization system at room temperature. In the measurement of the CNN, a “Raspberry PI” PAD minicomputer was used to generate the clock signals; the waveforms of the clock signals and outputs were recorded with a RIGOL oscilloscope (model No. DS4024) and analyzed on the “Raspberry PI” PAD minicomputer. Details of TDMA are provided in Supplementary Note 2.

Results

In a conventional artificial neuron, capacitors are normally used as the neural membrane. However, capacitors occupy a large area, which makes it impossible to integrate a large number of neurons on a chip. Memristors have a much higher integration density and consume less energy. Besides, they are compatible with CMOS technology and operate faster than capacitor-based neurons. In addition, although the phase-change memristive neuron shows good neuronal properties30, a neuron based on the fully CMOS-compatible material HfO2 is still preferred in terms of integration and process compatibility. HfO2 is a high-k material that has been widely used to replace SiO2 as the gate dielectric in CMOS technology (e.g., 28 nm CMOS technology44). Thus, a memristor based on an HfO2 thin film is completely compatible with standard CMOS technology. Besides, memristors based on HfO2 show superior performance45, such as excellent radiation resistance, endurance and retention characteristics. Last but not least, the neuron based on HfO2 exhibits uncertainty analogous to that of a biological neuron, which plays a key role in signal encoding and transmission46. The memristor, with hafnium oxide sandwiched between two metal electrodes, is illustrated in Figure 1. The conductive filament model can be used to explain the change of resistance/conductance in the HfO2 memristor. The detailed device structure and conductive switching mechanism were described in our previous work23. As shown in Figure 1, an artificial spike-based neuron based on the HfO2 memristor, containing dendrites (inputs), a soma, and an axon (output), has been designed and implemented in this work. The dendrites are connected to synapses for communication with other neurons. The soma comprises the neuronal membrane and the spike generation module. In a nonlinear integrate-and-fire neuron model, the membrane potential y can be expressed as

where L(y) is the leaky term, reflecting the imperfections in the membrane; g(y, t) is the conductance of the ion channel, representing the synaptic weight; and u denotes the voltage due to the arrival of the pre-synaptic spike. The artificial neuron follows equation (1) until the membrane potential increases beyond a threshold. Then the soma fires a spike and resets the neuron to the initial state. More information about the memristive neuron circuit design and typical waveforms is provided in Supplementary Note 3.
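The integrate, fire and reset cycle described above can be sketched numerically. The snippet below is a minimal illustration only: it assumes that equation (1) has the common form dy/dt = L(y) + g(y, t)·u with a linear leak L(y) = -y/tau and a constant conductance g. The function name, the forward-Euler step and all parameter values are hypothetical and are not taken from this work.

```python
def simulate_integrate_and_fire(inputs, dt=1e-3, tau=0.05, g=40.0, threshold=1.0):
    """Forward-Euler integration of dy/dt = -y/tau + g*u (assumed form).

    inputs: sequence of input voltages u, one value per time step.
    Returns the membrane trace and the step indices at which a spike fired.
    """
    y = 0.0
    trace, spikes = [], []
    for step, u in enumerate(inputs):
        y += dt * (-y / tau + g * u)   # leak term plus weighted input
        if y >= threshold:             # threshold crossed: fire, then reset
            spikes.append(step)
            y = 0.0
        trace.append(y)
    return trace, spikes


# Hypothetical stimulus: a constant input of 1.0 for 200 time steps.
trace, spikes = simulate_integrate_and_fire([1.0] * 200)
print(len(spikes), "spikes, first at step", spikes[0])
```

Raising `threshold` in this sketch lengthens the interval between output spikes, which is the qualitative trend the firing measurements are expected to show.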

Figure 1. An artificial neuron based on a HfO2 memristor. Schematic of an artificial neuron that consists of the dendrites (inputs), the soma (which comprises the neuronal membrane and the spike event generation) and the axon (output). The dendrites may be connected to synapses in a network. The input spikes all have the same amplitude and are modulated by the synapses in the frequency domain.

The integration functionality of the neuron can be realized by applying successive pulses to the memristor. The signals of the dendrites (inputs), axon (output) and soma (membrane potential) are shown in Figure 2. The electrical potential of the memristor increases step by step until a dendrite spike occurs, which reflects the integration functionality of the membrane. When no dendrite spike is applied to the memristor, a fixed current is applied to sense its resistance. After several pulses, the resistance of the memristor increases beyond the threshold, analogous to the threshold potential in a biological neuron. Then an axon spike is fired by the neuron. At the same time, a pulse is applied to the memristor to reset it to the low-resistance state.

Figure 2a, 2b and 2c show the neuron firing properties for thresholds of 0.5 V, 0.8 V and 1.0 V, respectively. As can be observed, a higher threshold leads to fewer axon spikes. The input spiking frequency also affects the integration in the memristive neuron. Figure 2d, 2e and 2f show the neuron firing properties for input spiking frequencies of 10 Hz, 100 Hz and 1 kHz, respectively. A higher input spike frequency causes more output spikes. On the other hand, real biological neural activity may show significant irregularities due to intrinsic and extrinsic neural noise that originates from the biochemical processes of the individual neurons in the network. The memristive neuron implemented in this work also exhibits uncertainty analogous to that of biological neurons. In some circumstances, the resistance of the memristor is unchanged for most spikes, while it suddenly approaches the high-resistance state for some spikes. The randomness of neuron firing is shown in Figure 2g. A Monte-Carlo simulation was carried out to fit the experimental result of the memristive neuron firing. As can be seen in Figure 2g, the Monte-Carlo fitting result is close to the actual histogram obtained from the memristive neuron firing experiments. The random integrate-and-fire behavior of the memristive neuron is caused by the random resetting events of the memristor rather than by real integration. Figure 2g indicates that the designed memristive neuron can emulate the uncertainty of real biological neurons well. More information about the neuron firing properties is presented in Supplementary Note 4.
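The idea of firing driven by random switching events can be illustrated with a small Monte-Carlo sketch. The model below is an assumption made for illustration, not the fitting procedure used for Figure 2g: each input pulse is taken to switch the memristor with a fixed, independent probability `p_switch`, so the number of pulses needed to fire follows a geometric distribution. The function names, the probability and the seed are hypothetical.

```python
import random
from collections import Counter


def pulses_until_fire(rng, p_switch):
    """Count input pulses until a random switching event triggers a spike."""
    n = 1
    while rng.random() >= p_switch:
        n += 1
    return n


def firing_histogram(trials, p_switch, seed=0):
    """Histogram of pulses-to-fire over many independent trials."""
    rng = random.Random(seed)
    return Counter(pulses_until_fire(rng, p_switch) for _ in range(trials))


hist = firing_histogram(trials=10_000, p_switch=0.2)
mean = sum(n * count for n, count in hist.items()) / sum(hist.values())
print(f"mean pulses to fire: {mean:.2f}")
```

Under this assumed model the mean number of pulses to fire is close to 1/`p_switch`; a measured histogram could be compared against such a simulated one.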

Using the memristive neuron, a hybrid convolutional neural network was constructed to demonstrate digit recognition. More CNN design information is provided in Supplementary Note 5. A “Raspberry PI” minicomputer was used to generate dendrite spikes and receive output axon spikes from the neurons. In the hybrid neural network, neurons can be either memristive or software-based. The two types of neurons are interchangeable, which makes it possible to verify how many neurons the memristive neuron can stand in for under TDMA. It should be noted that the TDMA technique reduces the speed of the CNN, because the network must spend time accessing the same memristive neuron in series. The trade-off between the CNN speed and the number of memristive neurons required should therefore be considered. In the hybrid network, the synapses, the maximum function and the activation function are implemented in software on the “Raspberry PI” minicomputer. The system photograph is presented in Supplementary Figure 1. As shown in Figure 3a, 5 × 5 synapses are connected to each neuron in the convolutional layer, and all of the neurons in a given layer share the same synaptic weights, which is an inherent characteristic of the CNN. Owing to the shared synaptic weights, a single memristive neuron can behave as many neurons based on the TDMA technique, as shown in Figure 3b. The neural network was trained on a Graphics Processing Unit (GPU) computing platform with TensorFlow47. After training, the convolutional kernels and weight matrices are transferred into the synaptic matrix of the hybrid CNN. Information about the training of the CNN is provided in Supplementary Note 6.
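The way shared weights allow one neuron to serve many positions in sequence can be sketched as follows. This is a software illustration under stated assumptions, not the circuit-level TDMA scheme of Supplementary Note 2: the class `SharedNeuron`, the function `tdma_conv`, the rectified response and the example kernel values are all hypothetical.

```python
class SharedNeuron:
    """Stand-in for the single physical neuron; counts the time slots it serves."""

    def __init__(self):
        self.time_slots_used = 0

    def respond(self, drive):
        self.time_slots_used += 1
        return max(0.0, drive)  # rectified response; no state is kept between slots


def tdma_conv(image, kernel, neuron):
    """Serve every output position of a zero-padded convolution with one neuron."""
    h, w, k = len(image), len(image[0]), len(kernel)
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):                # one time slot per output position
        for c in range(w):
            drive = 0.0
            for i in range(k):
                for j in range(k):
                    rr, cc = r + i - pad, c + j - pad
                    if 0 <= rr < h and 0 <= cc < w:  # zeros outside the border
                        drive += kernel[i][j] * image[rr][cc]
            out[r][c] = neuron.respond(drive)
    return out


image = [[1.0] * 28 for _ in range(28)]      # hypothetical uniform input
kernel = [[0.04] * 5 for _ in range(5)]      # hypothetical shared 5 x 5 weights
neuron = SharedNeuron()
out = tdma_conv(image, kernel, neuron)
print(neuron.time_slots_used, "time slots served by one neuron")
```

Because the positions are visited one after another, the run time of this loop grows with the number of positions assigned to the neuron, which mirrors the speed trade-off noted above.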

As shown in Figure 3a, the inference process is realized by a CNN with 6 layers: 2 convolutional layers, 2 maxpool layers and 2 fully connected layers. In the convolutional layers, the photographs of the handwritten digits are divided into several overlapping grids. In the first convolutional layer, the photograph is divided into 28 × 28 grids, and each grid contains 5 × 5 pixels. Zeros are filled in at the photograph borders so that the number of grids equals the number of pixels in the original photograph. The output of a grid is the weighted sum of its pixels, and the network passes the sum to the rectified linear unit (ReLU) activation function. The outputs of all grids together form a transformed photograph containing abstracted information. For the first convolutional layer, 32 convolutional kernels are used, and thus 32 different abstracted photographs (channels) of the handwritten digits are obtained. The convolutional layer is followed by a maxpool layer that reduces redundant information as well as the number of pixels. The maxpool layer receives all pixels of one channel generated by the convolutional layer and divides the pixels into several non-overlapping grids. In the first maxpool layer, the channel is divided into 14 × 14 grids, and each grid contains 2 × 2 pixels. The output of the first maxpool layer also contains 32 channels, each of which has 14 × 14 pixels. The second convolutional layer is the same as the first one except that the number of convolutional kernels is 64. Before the fully connected layers, the output of the maxpool layer is reshaped into a one-dimensional array with 3136 (7 × 7 × 64) elements. As shown in Figure 3a, the convolutional layers are followed by two fully connected perceptron layers, which contain 1024 neurons and 10 neurons, respectively. Each neuron in the perceptron receives the spikes, calculates the weighted sum of the spikes and passes the sum to the nonlinear activation function (ReLU) to obtain the output. The handwritten digit recognition inference process is carried out according to:

Equation (2) presents the convolution operation in a convolutional layer, where $w_{k_i k_j}$ is the convolutional kernel and $f_{in}(i, j)$ is the frequency of the input spike. Equation (3) presents the maxpool operation following the One-Hot rule (see Supplementary Note 5). The operation in a fully connected layer follows equation (4), where $w_i$ is the ith weight of the perceptron. Considering the characteristics of the memristive neuron, all signals received by the neurons are modulated in frequency. The gray scale of the handwritten photograph is converted to a spike train of fixed amplitude whose frequency is proportional to the gray-scale level. Considering the connection between synapses and neurons, the software-based synapse in the network is designed to be a frequency modulator. Synapses receive the spike sequence, modify the frequency of the pulse sequence according to the pre-defined weight, and send out the output spike sequence. The calculation in the network follows equations (2–4). Detailed information on the output of the channels in each layer when recognizing a handwritten “9” is presented in Supplementary Note 7.
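The frequency-coded operations described above can be sketched in a few lines. This is an illustration under assumptions rather than a transcription of equations (2–4): the maximum frequency `f_max`, the function names, and the use of a plain maximum for the maxpool step (the One-Hot rule of Supplementary Note 5 is not reproduced here) are all hypothetical.

```python
def relu(x):
    """Rectified linear unit applied to a spike frequency."""
    return x if x > 0.0 else 0.0


def pixel_to_frequency(gray, f_max=1000.0):
    """Map an 8-bit gray level to a spike frequency in Hz (f_max is hypothetical)."""
    return f_max * gray / 255.0


def synapse(f_in, weight):
    """Software synapse acting as a frequency modulator: scales the spike rate."""
    return weight * f_in


def neuron_output(freqs, weights):
    """Weighted sum of input frequencies followed by ReLU."""
    return relu(sum(synapse(f, w) for f, w in zip(freqs, weights)))


def maxpool_2x2(channel):
    """Keep the largest frequency in each non-overlapping 2 x 2 grid."""
    return [
        [max(channel[r][c], channel[r][c + 1],
             channel[r + 1][c], channel[r + 1][c + 1])
         for c in range(0, len(channel[0]), 2)]
        for r in range(0, len(channel), 2)
    ]


freqs = [pixel_to_frequency(g) for g in (0, 51, 255)]
print(freqs)
print(neuron_output(freqs, [0.5, 0.5, 0.25]))
print(maxpool_2x2([[1, 2, 5, 6], [3, 4, 7, 8], [9, 10, 13, 14], [11, 12, 15, 16]]))
```

Keeping every quantity as a frequency means that the weighted sum and the maximum can be computed without converting the spike trains back to amplitudes.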

In the inference process, considering the time-domain delay factor, the memristive neuron behaves as 784 neurons in the first convolutional layer based on the TDMA technique, as shown in Figure 3b. Neurons in the other layers are software-based and can be replaced by the memristive neuron. The photographs of handwritten digits in the test set of the MNIST database48 were used to examine the hybrid CNN. Ten thousand different photographs were examined with this CNN, and the accuracy rate is around 97.1%, which approaches that of a purely software-based network running on a GPU. More information about the CNN recognition of a handwritten “9” is provided in Supplementary Note 8.
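The layer dimensions quoted in the text, including the figure of 784 neurons per channel, can be checked with a short shape trace. The helper names below are hypothetical; the sizes come from the description of the network (28 × 28 input, zero-padded convolutions with 32 and 64 kernels, 2 × 2 maxpool).

```python
def conv_same(shape, kernels):
    """Zero-padded convolution: height and width are kept, channels = kernels."""
    h, w, _ = shape
    return (h, w, kernels)


def maxpool(shape, size=2):
    """Non-overlapping pooling: height and width shrink by the grid size."""
    h, w, c = shape
    return (h // size, w // size, c)


shape = (28, 28, 1)
trace = {"input": shape}
shape = conv_same(shape, 32)
trace["conv1"] = shape
shape = maxpool(shape)
trace["pool1"] = shape
shape = conv_same(shape, 64)
trace["conv2"] = shape
shape = maxpool(shape)
trace["pool2"] = shape

flat = shape[0] * shape[1] * shape[2]          # input size of the first dense layer
neurons_per_channel = trace["conv1"][0] * trace["conv1"][1]

for name, dims in trace.items():
    print(name, dims)
print("flattened:", flat, "| conv1 positions per channel:", neurons_per_channel)
```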

Figure 4a shows examples of noisy photographs for the digits “0” to “9”. Noise is added by randomly choosing pixels and setting them to zero. Figure 4b shows the accuracy rate on the ten thousand test images for a given proportion of noise. The average accuracy rate is more than 90% for images distorted by 40% noise. In this work, all weights in the convolutional layers and fully connected layers are positive for ease of implementation in hardware.
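The noise procedure described above (randomly chosen pixels set to zero) can be sketched as follows. The details are assumptions for illustration: the function name is hypothetical, pixels are chosen without replacement, and the noise proportion is applied exactly per image, none of which is specified in the text.

```python
import random


def add_zero_noise(image, fraction, seed=None):
    """Return a copy of a 2-D image with a given fraction of pixels set to zero."""
    rng = random.Random(seed)
    h, w = len(image), len(image[0])
    n_noisy = round(fraction * h * w)
    noisy = [row[:] for row in image]            # leave the input untouched
    for idx in rng.sample(range(h * w), n_noisy):
        noisy[idx // w][idx % w] = 0
    return noisy


image = [[255] * 28 for _ in range(28)]          # hypothetical all-white image
noisy = add_zero_noise(image, 0.40, seed=1)
zeros = sum(value == 0 for row in noisy for value in row)
print(zeros, "of", 28 * 28, "pixels set to zero")
```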

Discussion

In summary, a CMOS-compatible neuron has been realized based on a HfO2 memristor. We have demonstrated a hybrid CNN based on the HfO2 memristive neuron. One memristive neuron is used to behave as 784 neurons in the convolutional layer of the neural network based on the TDMA technique. Handwritten digits can be recognized by this memristive CNN. This study opens the possibility of reducing the number of neurons required by more than two orders of magnitude, as well as of realizing hardware implementations of brain-level artificial neuromorphic networks.

References

Maguire, Y. G. et al. Physical principles for scalable neural recording. Frontiers in computational neuroscience 7, 137 (2013).

Mead, C. Neuromorphic electronic systems. Proceedings of the IEEE 78, 1629–1636 (1990).

Markov, I. L. Limits on fundamental limits to computation. Nature 512, 147 (2014).

Merolla, P. A. et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 345, 668–673 (2014).

Zhu, L. Q., Wan, C. J., Guo, L. Q., Shi, Y. & Wan, Q. Artificial synapse network on inorganic proton conductor for neuromorphic systems. Nature communications 5, 3158 (2014).

Wang, L., Lu, S.-R. & Wen, J. Recent advances on neuromorphic systems using phase-change materials. Nanoscale research letters 12, 347 (2017).

Boyn, S. et al. Learning through ferroelectric domain dynamics in solid-state synapses. Nature communications 8, 14736 (2017).

Lequeux, S. et al. A magnetic synapse: multilevel spin-torque memristor with perpendicular anisotropy. Scientific reports 6, 31510 (2016).

Hu, S. et al. Review of nanostructured resistive switching memristor and its applications. Nanoscience and Nanotechnology Letters 6, 729–757 (2014).

Kuzum, D., Yu, S. & Wong, H. P. Synaptic electronics: materials, devices and applications. Nanotechnology 24, 382001 (2013).

Chua, L. Memristor-the missing circuit element. IEEE Transactions on circuit theory 18, 507–519 (1971).

Chua, L. O. & Kang, S. M. Memristive devices and systems. Proceedings of the IEEE 64, 209–223 (1976).

Strukov, D. B., Snider, G. S., Stewart, D. R. & Williams, R. S. The missing memristor found. Nature 453, 80 (2008).

van de Burgt, Y. et al. A non-volatile organic electrochemical device as a low-voltage artificial synapse for neuromorphic computing. Nature materials 16, 414 (2017).

Jo, S. H. et al. Nanoscale memristor device as synapse in neuromorphic systems. Nano letters 10, 1297–1301 (2010).

Yu, S., Wu, Y., Jeyasingh, R., Kuzum, D. & Wong, H.-S. P. An electronic synapse device based on metal oxide resistive switching memory for neuromorphic computation. IEEE Transactions on Electron Devices 58, 2729–2737 (2011).

Wang, Z. et al. Memristors with diffusive dynamics as synaptic emulators for neuromorphic computing. Nature materials 16, 101 (2017).

Hu, S. et al. Emulating the ebbinghaus forgetting curve of the human brain with a nio-based memristor. Applied Physics Letters 103, 133701 (2013).

Wang, Z. Q. et al. Synaptic learning and memory functions achieved using oxygen ion migration/diffusion in an amorphous ingazno memristor. Advanced Functional Materials 22, 2759–2765 (2012).

Ziegler, M. et al. An electronic version of pavlov’s dog. Advanced Functional Materials 22, 2744–2749 (2012).

Pershin, Y. V. & Di Ventra, M. Experimental demonstration of associative memory with memristive neural networks. Neural Networks 23, 881–886 (2010).

Eryilmaz, S. B. et al. Brain-like associative learning using a nanoscale non-volatile phase change synaptic device array. Frontiers in neuroscience 8, 205 (2014).

Hu, S. et al. Associative memory realized by a reconfigurable memristive hopfield neural network. Nature communications 6, 7522 (2015).

Alibart, F., Zamanidoost, E. & Strukov, D. B. Pattern classification by memristive crossbar circuits using ex situ and in situ training. Nature communications 4, 2072 (2013).

Prezioso, M. et al. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61 (2015).

Prezioso, M. et al. Modeling and implementation of firing-rate neuromorphic-network classifiers with bilayer Pt/Al2O3/TiO2−x/Pt memristors. In Electron Devices Meeting (IEDM), 2015 IEEE International, 17–4 (IEEE, 2015).

Serb, A. et al. Unsupervised learning in probabilistic neural networks with multi-state metal-oxide memristive synapses. Nature communications 7, 12611 (2016).

Yao, P. et al. Face classification using electronic synapses. Nature communications 8, 15199 (2017).

Sheridan, P. M. et al. Sparse coding with memristor networks. Nature nanotechnology 12, 784 (2017).

Tuma, T., Pantazi, A., Le Gallo, M., Sebastian, A. & Eleftheriou, E. Stochastic phase-change neurons. Nature nanotechnology 11, 693 (2016).

Pantazi, A., Woźniak, S., Tuma, T. & Eleftheriou, E. All-memristive neuromorphic computing with level-tuned neurons. Nanotechnology 27, 355205 (2016).

Woźniak, S. et al. Neuromorphic architecture with 1M memristive synapses for detection of weakly correlated inputs. IEEE Transactions on Circuits and Systems II: Express Briefs 64, 1342–1346 (2017).

Tuma, T., Le Gallo, M., Sebastian, A. & Eleftheriou, E. Detecting correlations using phase-change neurons and synapses. IEEE Electron Device Letters 37, 1238–1241 (2016).

Woźniak, S., Pantazi, A., Leblebici, Y. & Eleftheriou, E. Neuromorphic system with phase-change synapses for pattern learning and feature extraction. In Neural Networks (IJCNN), 2017 International Joint Conference on, 3724–3732 (IEEE, 2017).

Meena, J. S., Sze, S. M., Chand, U. & Tseng, T.-Y. Overview of emerging nonvolatile memory technologies. Nanoscale research letters 9, 526 (2014).

Yang, J. J., Strukov, D. B. & Stewart, D. R. Memristive devices for computing. Nature nanotechnology 8, 13 (2013).

Matsugu, M., Mori, K., Mitari, Y. & Kaneda, Y. Subject independent facial expression recognition with robust face detection using a convolutional neural network. Neural Networks 16, 555–559 (2003).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proceedings of the IEEE 86, 2278–2324 (1998).

Ren, S., He, K., Girshick, R. & Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Advances in neural information processing systems, 91–99 (2015).

Karpathy, A. et al. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, 1725–1732 (2014).

Wang, Z. et al. Fully memristive neural networks for pattern classification with unsupervised learning. Nature Electronics 1, 137 (2018).

Ni, L., Liu, Z., Yu, H. & Joshi, R. V. An energy-efficient digital ReRAM-crossbar-based CNN with bitwise parallelism. IEEE Journal on Exploratory Solid-State Computational Devices and Circuits 3, 37–46 (2017).

Ni, L., Huang, H., Liu, Z., Joshi, R. V. & Yu, H. Distributed in-memory computing on binary RRAM crossbar. ACM Journal on Emerging Technologies in Computing Systems (JETC) 13, 36 (2017).

Lee, H. et al. Evidence and solution of over-RESET problem for HfOx based resistive memory with sub-ns switching speed and high endurance. In Electron Devices Meeting (IEDM), 2010 IEEE International, 19–7 (IEEE, 2010).

Mei, C. Y. et al. 28-nm 2 T High-K Metal Gate Embedded RRAM With Fully Compatible CMOS Logic Processes. IEEE Electron Device Letters 34, 1253–1255 (2013).

Averbeck, B. B., Latham, P. E. & Pouget, A. Neural correlations, population coding and computation. Nature reviews neuroscience 7, 358 (2006).

Yuan, T. TensorFlow, https://www.tensorflow.org/.

Yann, L. MNIST, http://yann.lecun.com/exdb/mnist/.

Acknowledgements

This work is supported by NSFC under project Nos 61774028 and 61771097, and the Fundamental Research Funds for the China Central Universities under project No. ZYGX2016Z007.

Author information

Contributions

J.J.W., S.G.H., Y.L. and T.P.C. proposed the research; the experiments were conceived by J.J.W., S.G.H., X.T.Z. and Q.Y. and carried out by S.G.H., Z.L., J.J.W., Q.Y., Y.Y. and S.H.; data analysis was conducted by S.G.H., Q.Y., J.J.W. and Y.L.; and J.J.W., S.G.H., Y.L., T.P.C. and Q.Y. prepared the manuscript with contributions from all authors.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, J.J., Hu, S.G., Zhan, X.T. et al. Handwritten-Digit Recognition by Hybrid Convolutional Neural Network based on HfO2 Memristive Spiking-Neuron. Sci Rep 8, 12546 (2018). https://doi.org/10.1038/s41598-018-30768-0

DOI: https://doi.org/10.1038/s41598-018-30768-0
