Abstract

Predicting failure in solids has broad applications, including earthquake prediction, which remains an unattainable goal. However, recent machine learning work shows that laboratory earthquakes can be predicted using micro-failure events and the temporal evolution of fault zone elastic properties. Remarkably, these results come from purely data-driven models trained with large datasets. Such data are equivalent to centuries of fault motion, rendering application to tectonic faulting unclear. In addition, the underlying physics of such predictions is poorly understood. Here, we address scalability using a novel Physics-Informed Neural Network (PINN). Our model encodes fault physics in the deep learning loss function using time-lapse ultrasonic data. PINN models outperform data-driven models and significantly improve transfer learning for small training datasets and for conditions outside those used in training. Our work suggests that PINNs offer a promising path for machine learning-based failure prediction and, ultimately, for improving our understanding of earthquake physics and prediction.


Introduction

Prediction of catastrophic failure remains a critical albeit challenging endeavor across disciplines, from the nondestructive evaluation and structural health monitoring of industrial components1,2 and civil infrastructure3,4,5 to geophysics. In geophysics, decades of research have greatly improved our understanding of earthquake physics; however, it is not yet possible to predict with sufficient accuracy when and where destructive earthquakes will occur, and we currently rely on seismic hazard maps that give the likelihood of a magnitude-x earthquake striking a particular region in the next y years6,7. In recent decades, anthropogenic earthquakes near geothermal reservoirs or caused by wastewater injection have also threatened communities in regions of historically low seismicity8,9, sometimes leading to the early termination of innovative, costly projects10,11,12,13. Improving our ability to assess seismic risk, or in the long term to forecast earthquakes, would have a strong societal impact: saving lives, reducing economic losses, and strengthening our ability to produce geothermal energy and unconventional oil and gas by mitigating seismic risk near production sites.

Numerous laboratory studies have shown that the onset of failure is associated with bursts of acoustic emission (AE) events during crack initiation and growth, and that the number and amplitude of AE events generally increase as the sample approaches failure14,15,16,17,18,19,20,21,22,23,24. Recent friction studies on laboratory faults have shown that machine learning (ML) algorithms can predict the timing and magnitude of lab quakes using AE data15,16,25,26,27,28,29,30,31,32. It is remarkable that, using only the acoustic emission data radiating from the faults as input, the fault strength can be accurately predicted throughout the laboratory seismic cycle25,27. Past work33,34,35 has shown that the vast majority of events radiate from the fault plane and therefore carry information about the fault state. As the elastic waves radiate and scatter through the host granite blocks, they also provide information about the stress state of the host rock. It is also remarkable that predictions work in the early stage of the seismic cycle, when the acoustic signal often looks like noise, either because it lacks the clear P-wave expected for friction/fracture events, or because it represents something like tectonic tremor, i.e., the sum of many small or low-frequency events that overlap in time and cannot be distinguished as separate events36. These studies show that the variance and kurtosis of the AE data are the most predictive features among the ~100 features considered by the ML models15,27. Other studies using active-source ultrasonic measurements have shown that laboratory quakes are preceded by reliable precursory signals, such as systematic changes in elastic wave velocity and amplitude37,38,39,40,41. The most recent studies have shown that laboratory quakes can also be predicted from active-source measurements using machine learning approaches42,43, despite unfavorable conditions such as irregular seismic cycles. Again, it is remarkable that for some of the deep learning approaches used (such as Long Short-Term Memory, LSTM), the R2 values reach ~0.94 for shear stress prediction.

While striking, these laboratory quake predictions are enabled by the large amount of available training data. Scaled up to geological time, this would be equivalent to decades or centuries of data in nature. Moreover, such models might perform well on one particular dataset but fail to provide accurate predictions for another, slightly different dataset. This challenge can be addressed with various strategies such as meta-learning44,45 and continual lifelong learning46. An alternative, promising approach is Physics-Informed Neural Network (PINN) modeling47, which aims to improve predictions, model transferability, and generalizability while reducing the amount of training data required. Physics-informed learning combines data and physical laws to train the models. PINN models can be implemented by introducing observational, inductive, or learning biases47. In the case of observational bias, sufficient data covering the input and output domains of a learning model serve as a form of physics-based constraint embedded in the ML model48. The main challenge is the cost of acquiring a large volume of data, which may involve complex, large-scale experiments or computational models. The inductive bias approach focuses on developing specialized neural network architectures that implicitly incorporate physics49. The effectiveness of these models is currently limited to simple physics; extending them to complex laws remains challenging because such laws are difficult to encode in the network architecture. Finally, a PINN can be implemented using the learning bias approach, in which appropriate physics constraints are added to the cost function to penalize predictions inconsistent with the underlying physics50,51. This approach is widely used because the flexibility of adding penalty constraints allows many domain-specific physical principles to be included in the model.

Here, we present a learning bias-based PINN framework that is tasked with predicting shear stress and fault slip rate histories given information on fault zone elastic wave speed and transmitted amplitude. The PINN framework incorporates two physics constraints: one describing the elastic coupling of a fault with the surrounding host rock52, and another relating fault stiffness to the ultrasonic transmission coefficient53,54. We systematically vary the amount of training data and find that, as training data become scarce, the PINN models outperform the purely data-driven models by roughly 10–15%. The PINN models are also more effective than purely data-driven models when tasked with predicting laboratory quakes from a different dataset (transfer learning).

Results and discussion

A brief description of the experimental procedure and data is given below. The performance of data-driven, PINN and transfer learning models for different training data sizes is then presented and discussed.

Friction experiment & ultrasonic monitoring

The friction experiments are carried out in a double direct shear (DDS) loading configuration, in which three blocks of Westerly granite are loaded to create two faults, each with an area of 5 × 5 cm² (inset, Fig. 1a). Prior to loading, the block surfaces are dusted with fine quartz powder (layer thickness less than about 200 μm). The vertical and horizontal pistons apply the shear (τ) and normal (σ) stresses, respectively. Two direct current differential transformers (DCDT) measure the shear and normal displacements of the hydraulic pistons, while two strain-gauge load cells measure the shear and normal stresses. An additional on-board DCDT attached to the central granite block and referenced to the base of the sample assembly is used to measure the slip rate (V). To commence the experiment, a normal stress σ = 10 MPa is applied to the sample and held constant via servo-control. Next, the central block is pushed downward at a constant rate Vl = 8.9 μm/s. As the block is pushed down, the shear stress increases until the fault becomes unstable; the shearing block then rapidly displaces (slips) and a sharp drop in shear stress is recorded (a lab earthquake). After the drop, a new stick-slip cycle begins: the fault locks again (sticks) and the shear stress increases again. To develop robust machine learning models from these data, it is desirable to create a frictional regime that produces irregular stick-slips. To that end, an acrylic spring is placed in series between the vertical piston and the central block to reduce the overall stiffness of the loading apparatus (K), and the experiments are conducted close to the stability boundary, producing both regular and irregular stick-slip cycles55. In this study, we use data collected in two separate experiments41, namely p5270 and p5271. The only difference between the two is the size of the acrylic spring used (25 cm² for p5270 and 20.25 cm² for p5271), resulting in different recurrence intervals and magnitudes of the lab quakes, as shown in Supplementary Figs. S1 and S2. In p5270, the cycles become larger much earlier in the experiment; in p5271, they become larger later in the experiment. Throughout each experiment, all stresses and displacements (including shear stress and slip rate) are recorded at 10 kHz. Additional details about these experiments can be found in refs. 40,41.

a Temporal evolution of shear stress and slip rate in experiment p5270. The inset shows a schematic of the DDS setup with two ultrasonic transducers (transmitter T and receiver R) probing the fault. The thin vertical dashed line corresponds to the time at which the reference ultrasonic waveform is chosen (see text for more details). b Schematic representation of active-source ultrasonic monitoring during the experiment. The ultrasonic waveforms are recorded every millisecond throughout the stick-slip cycles. Only a small subset of the waveforms is shown for readability. c An example of a recorded ultrasonic signal. Input features to the machine learning models are extracted from the initial portion of the ultrasonic signals (highlighted in brown).

The friction experiment is coupled with active-source ultrasonic monitoring. A pair of P-wave ultrasonic transducers is used to probe the faults regularly throughout the experiment. The two identical piezoelectric disks, used as transmitter and receiver, are 12.7 mm in diameter and 4 mm thick (corresponding to a center frequency of 500 kHz), and are made of material 850 from American Piezo Ceramics (APC International). The transducers are epoxy-glued at the bottom of blind holes inside the steel platens that hold the DDS assembly. The transmitter T emits a series of half-sine pulses at 500 kHz every 1 ms throughout the experiment (Fig. 1b). The response is recorded by the receiving transducer R at a sampling rate of 25 MHz (Fig. 1c). For experiments p5270 and p5271, the recorded ultrasonic data consist of 132,399 and 75,999 signals recorded during 387 and 237 stick-slip cycles, respectively.

Ultrasonic feature extraction

Physics-based features, namely wave speed (vi) and spectral amplitude (Ai) at time ti, are extracted from each ultrasonic signal (waveform)42. Figure 2 illustrates the feature extraction process. To calculate the evolution of wave speed during frictional sliding, we first extract the time delay Δti by cross-correlating each waveform Si with a reference waveform S0. The reference waveform is chosen past peak friction, just before the fault starts its transition from stable sliding to unstable seismic cycles (thin vertical dashed line at time = 2065 s in Fig. 1a). The shape of the recorded waveforms Si changes little throughout the experiment, such that the cross-correlation coefficient always remains greater than 0.97. The cross-correlation is calculated within a finite window of size T extending from ti + w1 to ti + w2, as shown in Fig. 2a. The peak of the cross-correlation between the two waveforms is refined by fitting a parabola through the peak and its two adjacent points. For each signal Si, the total travel time, or time-of-flight TOFi, is obtained by adding the estimated time delay to the hand-picked arrival time of the reference waveform (TOF0), i.e., TOFi = TOF0 + Δti. Finally, the wave speed is calculated by dividing the sample thickness by the travel time (vi = hi/TOFi), where hi = h0 + δh, h0 is the thickness measured at the beginning of the experiment just after applying the normal stress, and δh is the thickness change measured continuously during the experiment. The second feature, spectral amplitude Ai, is calculated as the amplitude of the Fourier transform of each signal Si within the same window (from ti + w1 to ti + w2) at a frequency of 400 kHz, close to the center frequency of the transmitted waves, as shown in Fig. 2b. To reduce noise, both feature histories are low-pass filtered using a 10-point backward-looking moving average.
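The delay-and-speed computation described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the function names, the mean removal before correlating, and the synthetic Gaussian-windowed 500 kHz test pulse are assumptions; only the parabolic peak refinement, TOFi = TOF0 + Δti, and vi = hi/TOFi come from the text.

```python
import numpy as np

def time_delay(s_ref, s_i, fs, i0, i1):
    """Delay (s) of waveform s_i relative to s_ref, using samples [i0, i1).

    A positive value means s_i arrives later than the reference.
    """
    a = s_ref[i0:i1] - np.mean(s_ref[i0:i1])
    b = s_i[i0:i1] - np.mean(s_i[i0:i1])
    xc = np.correlate(b, a, mode="full")  # index p <-> integer lag p - (len(a) - 1)
    p = int(np.argmax(xc))
    frac = 0.0
    if 0 < p < len(xc) - 1:
        y0, y1, y2 = xc[p - 1], xc[p], xc[p + 1]
        denom = y0 - 2.0 * y1 + y2
        if denom != 0.0:
            frac = 0.5 * (y0 - y2) / denom  # vertex of the parabola through 3 points
    return (p - (len(a) - 1) + frac) / fs

def wave_speed(tof0, delay, h0, dh):
    """v_i = h_i / TOF_i, with h_i = h0 + dh and TOF_i = TOF0 + delay."""
    return (h0 + dh) / (tof0 + delay)

# Synthetic demo with toy values: 25 MHz sampling, 500 kHz Gaussian-windowed pulse.
fs = 25e6
t = np.arange(1000) / fs

def pulse(t0):
    return np.exp(-((t - t0) / 1e-6) ** 2) * np.sin(2 * np.pi * 500e3 * (t - t0))

i0, i1 = int(round(20.76e-6 * fs)), int(round(25.16e-6 * fs))  # w1, w2 from Fig. 2
est = time_delay(pulse(22.96e-6), pulse(22.96e-6 + 8e-9), fs, i0, i1)  # true delay: 8 ns
```

Here the waveform start is treated as the pulse time ti, and the 8 ns delay (0.2 samples) is recovered only thanks to the parabolic refinement, since the integer-lag peak alone would report zero.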

a Shows the reference waveform (S0) and a typical waveform during shearing (Si). The inset emphasizes the time delay between the two signals Δti calculated by cross-correlating the two signals. The box marks the extent of the cross-correlation window from ti + w1 to ti + w2 with w1 = 20.76 μs and w2 = 25.16 μs. The bottom plot shows a sample of wave speed and time shift evolution for several lab seismic cycles over a period of 30 s. b Illustrates the spectral amplitude calculation from the Fourier spectrum of the windowed signal. The plot at the bottom shows an exemplary evolution of spectral amplitude. Note that wave speed and amplitude vary systematically with shear stress, but have a complex nonlinear relationship with shear stress as given in ref. 42.
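A possible implementation of the spectral amplitude and the 10-point backward-looking moving average is sketched below. The window w2 − w1 = 4.4 μs spans only about 110 samples at 25 MHz, so no FFT bin falls exactly at 400 kHz; this sketch therefore evaluates a single-frequency DFT at 400 kHz directly, which is an assumption (the original processing may instead zero-pad or pick the nearest bin). Averaging over fewer than 10 samples at the start of the record is also an assumed edge treatment.

```python
import numpy as np

def spectral_amplitude(s, fs, i0, i1, f=400e3):
    """|DFT| of the windowed segment s[i0:i1] evaluated at frequency f (Hz)."""
    seg = np.asarray(s[i0:i1], dtype=float)
    n = np.arange(seg.size)
    return float(np.abs(np.sum(seg * np.exp(-2j * np.pi * f * n / fs))))

def backward_moving_average(x, n=10):
    """Mean of the current and up to n-1 previous samples (causal smoothing)."""
    x = np.asarray(x, dtype=float)
    c = np.concatenate(([0.0], np.cumsum(x)))  # c[j] = sum of x[:j]
    idx = np.arange(x.size)
    lo = np.maximum(0, idx - n + 1)
    return (c[idx + 1] - c[lo]) / (idx + 1 - lo)
```

Because the average only looks backward, the smoothed feature at time ti never uses future waveforms, which keeps the inputs causal for prediction.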

Performance of data-driven, PINN and transfer learning models

We compare the performance of PINN vs data-driven models, as well as transfer-learned vs standalone models, for different training-validation-test splits. The data-driven models are multilayer perceptron (MLP) neural networks trained with the backpropagation algorithm56 and the Adam optimizer57 to perform the regression task. The PINN models build upon the data-driven models, with the loss function modified to include physics-based constraints, as shown schematically in Fig. 3. Two different PINN models (PINN #1 and PINN #2) are considered. PINN #1 is constrained by the elastic coupling relation between a fault and the surrounding host rock (Eq. (1)). PINN #2 is additionally constrained by the relations linking fault stiffness to the ultrasonic transmission coefficient (Eqs. (2) and (3)), which are coupled to Eq. (1). The data-driven, PINN #1, and PINN #2 models share the same MLP framework (hidden layers, units, batch size, optimizer, and learning rate) across the different data splits to allow a one-to-one comparison. Transfer learning (TL) models for the p5271 experiment are initialized with the pre-trained p5270 model weights, as schematically illustrated in Fig. 4. The performance of the TL models is compared with that of standalone data-driven models for the p5271 experiment. A detailed description of the data-driven models, as well as of the PINN and transfer learning frameworks, including data selection and normalization, is provided in “Methods”.
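The idea of adding a physics penalty to the data loss can be illustrated with a NumPy sketch. It assumes the common spring-slider form dτ/dt = k(Vl − V) for the elastic coupling (equivalent to dμ/dt = (k/σ)(Vl − V) with μ = τ/σ at constant σ), approximates the time derivative by a forward difference between consecutive predictions, and weights the penalty with a hypothetical factor lam; none of these choices is taken from the paper. In actual training, the same expressions would be written with tensors in a deep learning framework so that gradients reach both the network weights and the learnable constants k and Vl.

```python
import numpy as np

def coupling_residual(tau_hat, V_hat, dt, k, V_l):
    """Residual of the assumed law d(tau)/dt = k (V_l - V) on consecutive samples."""
    dtau_dt = np.diff(tau_hat) / dt  # forward difference between predictions
    return dtau_dt - k * (V_l - V_hat[:-1])

def pinn_loss(tau_hat, V_hat, tau_obs, V_obs, dt, k, V_l, lam=1.0):
    """Data MSE on both outputs plus lam times the mean squared physics residual."""
    data_term = np.mean((tau_hat - tau_obs) ** 2) + np.mean((V_hat - V_obs) ** 2)
    phys_term = np.mean(coupling_residual(tau_hat, V_hat, dt, k, V_l) ** 2)
    return data_term + lam * phys_term
```

Predictions that fit the data but violate the coupling law are penalized, which is one way to read the reduced overfitting seen with small training sets.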

All reported models are developed in Google Colab using GPU acceleration with 16 GB of memory. Each model is re-run entirely with three random seeds to estimate the model variance. The average R2 score with its standard error, as well as the average root mean square error (RMSE) and training time, are reported for all models.
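The reported statistics can be computed as in the following sketch. The R2 (coefficient of determination) and RMSE definitions are standard; using the sample standard deviation (ddof=1) divided by the square root of the number of seeds for the standard error is an assumption about how the error bars were obtained.

```python
import numpy as np

def r2_score(y, y_hat):
    """Coefficient of determination 1 - SS_res / SS_tot."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def rmse(y, y_hat):
    """Root mean square error."""
    return float(np.sqrt(np.mean((np.asarray(y, float) - np.asarray(y_hat, float)) ** 2)))

def mean_and_standard_error(scores):
    """Mean over seeds and standard error of that mean."""
    s = np.asarray(scores, float)
    return s.mean(), s.std(ddof=1) / np.sqrt(s.size)
```

For example, test R2 scores of 0.90, 0.92, and 0.94 from three seeds give a mean of 0.92 with a standard error of about 0.012.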

Data-driven multi-output MLP models developed using the p5270 dataset serve as a reference for later comparison with the PINN models. The reference model is developed with training set sizes ranging from 70% down to 5%. The R2 scores of the reference model for predicting shear stress and slip rate in the training, validation, and testing sets are plotted against training set size in Fig. 5 (grey bars). Models trained with more than 20% of the data yield test R2 scores greater than 0.9 for shear stress prediction. For slip rate prediction, R2 scores are between 0.75 and 0.87. In all cases considered, shear stress is predicted more accurately than slip rate. Further reducing the amount of training data (10% and less) results in considerably lower test R2 scores, although the training R2 scores remain reasonably high, a possible indication of overfitting. In other words, although the models trained on reduced data fit the training data well, they poorly capture the nonlinear relationship between the ultrasonic features (input) and shear failure variables (target) in the validation and test sets. These results serve as a baseline for evaluating the PINN models.

a–c Shear stress (τ) prediction R2 scores in training, validation, and testing as a function of training set size. d–f Slip rate (V) prediction R2 scores in training, validation, and testing as a function of training set size. For both shear stress and slip rate, the PINN models outperform the reference data-driven models in testing, and the improvement grows as the training set size decreases. Error bars represent one standard error about the mean.

Comparisons of the PINN #1 and PINN #2 models with the reference data-driven model for varying training set sizes are shown in Fig. 5 (brown and yellow bars, respectively). Like the reference models, both PINN models achieve test R2 scores greater than 0.9 when 20% or more of the data are used for training. Importantly, for all the considered splits, the PINN models perform as well as or better than their data-driven counterparts. Furthermore, the improvement is most evident when training data are scarce (20% and less), especially for slip rate prediction. The PINN models trained on small sets (20% and less) also show lower R2 score variance, which suggests that they are stable: their predictions of the target data vary little with changes in the model initialization set by the random seeds. Table 1 compares the RMSE values of the reference data-driven, PINN #1, and PINN #2 models. Note that the RMSE values (calculated using normalized data) are consistently larger for the reference model across all training data sizes. Finally, the training times of all models are compared in Supplementary Table S1, showing that the PINN models converge faster than their data-driven counterparts. Figure 6 visually compares the predictions of the reference data-driven, PINN #1, and PINN #2 models trained with 70% and 5% of the data over one complete stick-slip cycle of shear stress and slip rate. The superior performance of the PINN models in predicting slip rate is visible in both panels and is more pronounced for the smaller training set. In sum, our findings suggest that adding the physics constraints enhances model performance and yields models with reasonable performance even when the training set is very small. Comparing PINN #1 and PINN #2 for shear stress prediction, both models show similar test scores except for the 50% and 5% cases, where PINN #2 outperforms PINN #1. The performance difference becomes more significant for slip rate prediction, with PINN #2 providing better predictions when ≤ 50% of the data are used for training. This highlights the importance of the constraint relating fault stiffness and ultrasonic wave transmission for improving prediction accuracy.

Finally, we examine how well the two PINN models have learned the experimental parameters and material properties in the physics constraints (Eqs. (1)–(3)), which we treat as learnable constants. These weights are extracted from the learning layers of the fully trained models (with early stopping enabled) and converted back to the original scale using the scaling applied during data normalization. The values learned by PINN #1 and PINN #2 are compared against the known values (when applicable) in Table 2. PINN #1 estimates the normal stress (σ), system stiffness (k), and shear loading velocity (vl) with percentage errors of 2 to 14% relative to the true experimental values across all training set sizes. Similarly, PINN #2 estimates the normal stress (σ), shear loading velocity (vl), loading stiffness (K), and density (ρ) with smaller errors of 1 to 8%. In both cases, the error generally increases as the training set size decreases. The percentage error for Aintact is not reported because its true value is not available. As expected from the performance comparison above, the constants estimated by PINN #2 are more accurate than those estimated by PINN #1.
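As an illustration of how a constant learned in normalized units maps back to physical units, consider an assumed spring-slider form dτ/dt = k(Vl − V) with τ and V min-max scaled and time left unscaled. Substituting τ = τn(τmax − τmin) + τmin and V = Vn(Vmax − Vmin) + Vmin gives dτn/dt = kn(Vl,n − Vn), with kn = k(Vmax − Vmin)/(τmax − τmin) and Vl,n = (Vl − Vmin)/(Vmax − Vmin). The hypothetical helper below inverts these relations; the actual conversion depends on the exact form of Eqs. (1)–(3) and on which variables were normalized.

```python
import numpy as np

def unscale_coupling_constants(k_n, Vl_n, tau_min, tau_max, V_min, V_max):
    """Map normalized k and V_l back to physical units (assumed law, see lead-in)."""
    k = k_n * (tau_max - tau_min) / (V_max - V_min)
    V_l = Vl_n * (V_max - V_min) + V_min
    return k, V_l

def percent_error(estimate, true_value):
    """Absolute percentage error relative to the known experimental value."""
    return 100.0 * np.abs(estimate - true_value) / np.abs(true_value)
```

Note that the stiffness rescales with the ratio of the stress and velocity ranges, while the loading velocity also picks up the velocity offset Vmin.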

Transfer learning models are developed by fine-tuning data-driven and PINN models pre-trained on the p5270 dataset (70%–10%–20% split) to make predictions for the p5271 experiment. In addition, standalone data-driven models for the p5271 experiment are trained, validated, and tested to serve as a baseline. The training set size for the standalone and TL p5271 models is varied from 70% of the total data down to 10%, while the validation and testing sets are kept fixed at 10% and 20%, respectively. Figure 7 compares the performance of the standalone and TL models for all the considered data splits. As for p5270, the R2 scores of the standalone p5271 models generally decrease as the training data are reduced from 70% to 10%. All the TL models outperform the standalone data-driven models. In most cases, the TL data-driven and TL PINN #2 models perform similarly, except for the 10% case, where TL PINN #2 significantly outperforms TL data-driven. By contrast, the TL PINN #1 models consistently outperform all other models irrespective of the data split. Further model tuning with a cosine decay learning-rate schedule and fine-tuning (freezing one or more layers) shows that the TL PINN #1 models consistently outperform the TL PINN #2 models in predicting shear stress and slip rate in all scenarios. One possible reason is that PINN #1 is constrained only by the simplified elastic coupling relation (Eq. (1)), whereas PINN #2 also incorporates the ultrasonic transmission relations (Eqs. (1)–(3)), which involve ultrasonic attributes that vary from experiment to experiment; this may explain why PINN #1 generalizes better than PINN #2. Unlike for p5270, we do not observe consistent trends in the model variances. In general, all TL and standalone models show higher variance in slip rate prediction than in shear stress prediction. In addition to the R2 score, the RMSEs of the standalone and TL models are compared in Supplementary Table S2. These corroborate the previous observations: all TL models have smaller RMSE values than the standalone models, with the PINN #1 models having the smallest errors for each split. Comparing the training times in Supplementary Table S3, the TL models converge faster, indicating that the initial weights from the p5270 model provide a good starting point for training the p5271 models. Finally, the experimental constants learned by the TL PINN #1 and TL PINN #2 frameworks are compared against their known values in Supplementary Table S4. In sum, TL improves model prediction. Moreover, when training data are scarce (represented by 10% of the data), the TL PINN models outperform the standalone and TL data-driven models by a large margin.
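The transfer-learning ingredients mentioned above (initializing from pre-trained weights, freezing layers, and a cosine decay learning-rate schedule) can be sketched framework-free as follows. The parameter layout (a list of (W, b) pairs), the plain gradient step standing in for Adam, and the helper names are hypothetical; the schedule follows the usual cosine decay formula lr0·[(1 − α)·½(1 + cos(π·step/decay_steps)) + α].

```python
import copy
import numpy as np

def cosine_decay(step, initial_lr, decay_steps, alpha=0.0):
    """Learning rate decaying from initial_lr to alpha * initial_lr over decay_steps."""
    step = min(step, decay_steps)
    cosine = 0.5 * (1.0 + np.cos(np.pi * step / decay_steps))
    return initial_lr * ((1.0 - alpha) * cosine + alpha)

def init_from_pretrained(pretrained_params, n_frozen):
    """Copy pre-trained (W, b) layers; the first n_frozen layers are marked frozen."""
    params = copy.deepcopy(pretrained_params)
    trainable = [i >= n_frozen for i in range(len(params))]
    return params, trainable

def sgd_step(params, grads, trainable, lr):
    """One plain gradient step that leaves frozen layers unchanged."""
    return [((W - lr * gW, b - lr * gb) if train else (W, b))
            for (W, b), (gW, gb), train in zip(params, grads, trainable)]
```

Copying rather than sharing the pre-trained arrays keeps the p5270 model intact, so several p5271 fine-tuning runs can start from the same weights.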

TL models are initialized with weights pre-trained on the p5270 dataset. a–c Shear stress (τ) prediction R2 scores in training, validation, and testing as a function of training set size. d–f Slip rate (V) prediction R2 scores in training, validation, and testing as a function of training set size. TL improves model prediction, and the TL PINN models significantly outperform the standalone and TL data-driven models for small training sets. Error bars represent one standard error about the mean.

Relevance to field studies

This study demonstrates that adding physics-based constraints to ML models is highly beneficial for failure prediction, especially when data are scarce. On the other hand, we recognize that the model developed here cannot be directly applied to field data, because shear stress and slip rate at depth are not accessible in the field. Moreover, very few active seismic surveys performed continuously over extended periods of time are available58. Nonetheless, we believe this work represents a step toward failure prediction in the field for the following reasons. First, an approach similar to the one presented here, still using lab data, could be used to better constrain the rate-and-state friction models and associated parameters used in geodetic studies to infer fault slip distribution at depth59,60,61,62,63,64,65,66,67. Second, stress and slip rate might be inferred in the field with ML models using earthquake recurrence as input data, possibly with pre-training on lab data.

We build a PINN framework to predict laboratory earthquakes from active-source ultrasonic monitoring data and demonstrate that it outperforms models based only on a data-driven loss, especially when training data are limited. This framework incorporates two physical constraints that describe the elastic coupling of faults with their surroundings and ultrasonic transmission across the frictional interface. We compare the PINN predictions of laboratory earthquakes (shear stress history) and slip rate evolution with those of purely data-driven models for varying amounts of training data. A key result is that incorporating the physics constraints improves model performance, and the improvement is most significant when training data are scarce. The modeling results for one dataset (p5270) suggest that the PINN #2 framework (constrained by both elastic coupling and ultrasonic transmission relations) outperforms the PINN #1 framework (incorporating only the elastic coupling of faults with their surroundings). Furthermore, a TL study carried out using a distinct second dataset (p5271) shows that TL models outperform standalone models and that, with a transfer-learned PINN, it is possible to develop reasonably well-performing prediction models from a small training dataset (10% of the data in this study). In sum, our findings suggest that incorporating simplified physical laws results in accurate and transferable predictions even when the training data size is small. This finding has important implications for seismicity monitoring and prediction at CO2 storage sites and in geothermal and unconventional reservoirs using time-lapse active-source monitoring with limited field data.

Methods

Our objective is to predict the shear stress (τi) history (model output) given the time evolution of the extracted ultrasonic features vi and Ai (input features). In addition to τi, we also predict the slip rate (Vi), which is needed to formulate one of the physics constraints. Dual-output data-driven DL and PINN models are developed to simultaneously predict the shear stress τi and slip rate Vi histories, using the time evolution of the wave speed vi and amplitude Ai as input. For both sets of models, we implement an MLP, i.e., a deep fully connected neural network, to perform the regression task. Note that time to failure (TTF) is not directly predicted here, as it is not independent of shear stress (τi). If desired, the TTF for each stick-slip cycle can be readily estimated from the predicted τi history.
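One simple way to derive TTF from a predicted shear stress history is to flag large sample-to-sample stress drops as failures and measure the time remaining until the next one. The sketch below assumes such a threshold rule (drop_threshold is a hypothetical tuning parameter); stress drops that unfold over several samples at 10 kHz may require a threshold on the cumulative drop instead.

```python
import numpy as np

def time_to_failure(t, tau, drop_threshold):
    """Time left until the next sample-to-sample stress drop larger than drop_threshold.

    A failure time is the sample just before the drop; samples after the last
    detected failure have no defined TTF and are set to NaN.
    """
    t = np.asarray(t, dtype=float)
    tau = np.asarray(tau, dtype=float)
    fail_idx = np.where(np.diff(tau) < -drop_threshold)[0]
    t_fail = t[fail_idx]
    ttf = np.full_like(t, np.nan)
    nxt = np.searchsorted(t_fail, t, side="left")  # index of the next failure
    valid = nxt < t_fail.size
    ttf[valid] = t_fail[nxt[valid]] - t[valid]
    return ttf
```

Applied to a toy history with a single drop between t = 2 and t = 3, the TTF counts down 2, 1, 0 and is undefined afterwards.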

Training-validation-testing splits

To build the models, the dataset from each experiment is divided into distinct training, validation, and testing sets. The models are trained on the training set, hyperparameters are tuned on the validation set, and the unseen testing set is used to evaluate the reported performance of the models. Because we use time series of continuous data, the data are not randomly sampled or shuffled during training, validation, or testing, thus preserving the sequence of stick-slip cycles. To investigate the prediction performance of the models with limited training data, the amount of training data is varied from 70% (about 273 seismic cycles) down to 5% (about 15 seismic cycles) of the entire dataset, while the same validation and testing sets are used across all models. The validation and testing sets constitute the final portion of the data and amount to 10% and 20% of the dataset, respectively. The training, validation, and testing sets are chosen in sequence, i.e., the training set immediately precedes the validation set, which is followed by the testing set, as shown in Fig. 8.
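The chronological split described above can be expressed as index ranges. In this hypothetical helper, validation and test always occupy the last 10% and 20% of the record, and the training block is taken immediately before them, so shrinking the training fraction discards the earliest data, as in Fig. 8.

```python
import numpy as np

def chronological_split(n_samples, train_frac, val_frac=0.10, test_frac=0.20):
    """Contiguous [unused | train | val | test] split without shuffling."""
    if train_frac + val_frac + test_frac > 1.0 + 1e-9:
        raise ValueError("fractions exceed 1")
    n_test = int(round(test_frac * n_samples))
    n_val = int(round(val_frac * n_samples))
    n_train = int(round(train_frac * n_samples))
    test_start = n_samples - n_test
    val_start = test_start - n_val
    train_start = val_start - n_train
    idx = np.arange(n_samples)
    return idx[train_start:val_start], idx[val_start:test_start], idx[test_start:]
```

Keeping validation and test fixed at the end of the record means every model, whatever its training fraction, is evaluated on the same later stick-slip cycles.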

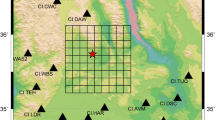

a 70% of the data (equivalent to 273 seismic cycles) are used for training. b 5% of the data (equivalent to 15 seismic cycles) are used for training. Note that in both cases, the validation and test sets do not vary and consist of 10% and 20% of the data equivalent to 36 and 78 cycles, respectively. Therefore, the total amount of data used for developing the model is varied from 100% (= 70 + 10 + 20) down to 35% (= 5 + 10 + 20) corresponding to the respective splits shown in (a, b).

Normalization

Prior to building the models, all data are scaled using min-max normalization. The minimum and maximum values are computed from the training data only and are then used to normalize the training, validation, and test sets.
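A minimal NumPy version of this train-only min-max scaling is shown below; scikit-learn's MinMaxScaler follows the same fit/transform/inverse_transform pattern. The class name is hypothetical. Validation and test values can fall outside [0, 1] because their extremes are not used in fitting.

```python
import numpy as np

class TrainMinMaxScaler:
    """Per-column min-max scaling fitted on the training data only."""

    def fit(self, x_train):
        self.lo = np.min(x_train, axis=0)
        self.hi = np.max(x_train, axis=0)
        return self

    def transform(self, x):
        return (x - self.lo) / (self.hi - self.lo)

    def inverse_transform(self, x_scaled):
        return x_scaled * (self.hi - self.lo) + self.lo
```

Fitting only on the training block avoids leaking information about the later validation and test cycles into the model.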

Data-driven models

Data-driven MLP models are developed as reference models for all training-validation-test splits. An MLP consists of an input layer, one or more hidden layers, and an output layer. The data are propagated forward from the input layer to the output layer, and the network is trained with the backpropagation algorithm56. Through a grid search, we explored a series of MLP models with different numbers of layers and nodes, batch sizes, and learning rates to find the best hyperparameters based on performance on the validation set. Our best-performing data-driven MLP model has five hidden layers with 128, 64, 32, 16, and 8 nodes, respectively. Following hyperparameter tuning, a batch size of 32 and a learning rate of 0.001 are used. Following ref. 42, the input features include 3 s of data history before the current time to predict the shear stress and slip rate at the current time. These 3 s correspond roughly to the average duration of a seismic cycle in our datasets. We use a mean squared error (MSE) loss, rectified linear unit (ReLU) activation for each hidden layer, and linear activation for the output layer. The number of epochs is set to 100, with early stopping (patience = 20) enabled to prevent overtraining. Finally, the models are trained with the Adam optimizer57, and model performance is evaluated with the R2 score.
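The input construction and network shape can be sketched in NumPy as below. The layer widths (128, 64, 32, 16, 8), ReLU hidden activations, and two linear outputs (τ̂, V̂) follow the text; n_hist, the number of samples spanning the 3 s history, depends on the feature sampling interval and is left as a parameter, and the He-normal initialization is an assumption. Training (MSE loss, Adam, early stopping) would normally be delegated to a deep learning framework and is omitted. sliding_window_view requires NumPy 1.20 or later.

```python
import numpy as np

HIDDEN = (128, 64, 32, 16, 8)  # hidden-layer widths reported in the text

def history_windows(features, n_hist):
    """Flatten the last n_hist samples (current one included) of every feature.

    features: array of shape (n_samples, n_features), e.g. columns [v, A].
    Row j of the result covers samples j .. j + n_hist - 1, grouped by feature.
    """
    features = np.asarray(features, dtype=float)
    n, f = features.shape
    win = np.lib.stride_tricks.sliding_window_view(features, n_hist, axis=0)
    return win.reshape(n - n_hist + 1, f * n_hist)  # win shape: (n - n_hist + 1, f, n_hist)

def init_mlp(n_in, n_out=2, hidden=HIDDEN, seed=0):
    """He-normal weights and zero biases for each (W, b) layer."""
    rng = np.random.default_rng(seed)
    sizes = (n_in, *hidden, n_out)
    return [(rng.normal(0.0, np.sqrt(2.0 / a), size=(a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]

def mlp_forward(params, x):
    """ReLU hidden layers and a linear output layer returning [tau_hat, V_hat]."""
    for W, b in params[:-1]:
        x = np.maximum(x @ W + b, 0.0)
    W, b = params[-1]
    return x @ W + b
```

Each output row corresponds to the last sample of its history window, so targets must be aligned with samples n_hist − 1 onward.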

PINN modeling framework

The proposed PINN framework builds upon the data-driven MLP model discussed above. We modify the loss function to include physics-based constraints as illustrated schematically in Fig. 3. We consider two constraints. The first describes elastic coupling between a fault and its surroundings. We model the lab setup as a single-degree-of-freedom spring slider system neglecting inertia52:

where μ (= τi/σ) is the coefficient of friction and k is the overall system stiffness. Shear stress τi and slip rate Vi are the model outputs, while the applied normal stress σ and shearing rate Vl are known experimental constants. The system stiffness k combines the fault stiffness kf and the stiffness K of the rest of the deformation machine and host rock loading the fault (a measurable experimental parameter), which act in parallel:

The fault stiffness kf is related to the ultrasonic transmission coefficient T across the fault interface through the displacement discontinuity model54:

where Ai is the transmitted wave amplitude history, Aintact is the transmitted wave amplitude through the intact granite blocks (i.e., in the absence of the faults), f0 is the center frequency of the received wave (400 kHz), ρ is the mass density of the material surrounding the fault (granite), and vi is the wave speed history. Among these, Ai and vi are input features of the model, while Aintact and ρ are experimental constants. The center frequency could have been extracted from the ultrasonic signals as a time history and used as an input, but here we treat it as a constant. Note that Eq. (2) couples Eqs. (1) and (3) through kf.
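The relation between fault stiffness and transmission can be made concrete with a hedged sketch. It assumes that Eq. (3) takes the standard displacement discontinuity form of ref. 54 for a fault between identical half-spaces: \(|T| = 2k_f/\sqrt{4k_f^2 + \omega^2 Z^2}\), with \(\omega = 2\pi f_0\), seismic impedance \(Z = \rho v_i\), and \(T = A_i/A_{intact}\). Solving for the stiffness gives \(k_f = \pi f_0 \rho v_i T/\sqrt{1 - T^2}\). The function names and the numerical values used for ρ and vi in the check below are illustrative placeholders.

```python
import math

F0_HZ = 400e3  # center frequency quoted in the text


def fault_stiffness(a_i: float, a_intact: float, v_i: float, rho: float,
                    f0: float = F0_HZ) -> float:
    """Specific fault stiffness k_f (Pa/m) from the transmission coefficient.

    Assumes |T| = 2 k_f / sqrt(4 k_f^2 + (omega * rho * v_i)^2), omega = 2*pi*f0,
    which inverts to k_f = pi * f0 * rho * v_i * T / sqrt(1 - T^2).
    """
    t = a_i / a_intact
    if not 0.0 < t < 1.0:
        raise ValueError("transmission coefficient must lie strictly between 0 and 1")
    return math.pi * f0 * rho * v_i * t / math.sqrt(1.0 - t * t)


def transmission(k_f: float, v_i: float, rho: float, f0: float = F0_HZ) -> float:
    """Forward model: transmission coefficient for a given fault stiffness."""
    omega_z = 2.0 * math.pi * f0 * rho * v_i
    return 2.0 * k_f / math.sqrt(4.0 * k_f ** 2 + omega_z ** 2)
```

Under this model, a stiffer (more tightly coupled) fault transmits more energy, so kf grows monotonically with Ai. Because vi and Ai both enter kf, the ultrasonic features can constrain the stiffness term in the physics loss.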

In this study, we consider two PINN models: PINN #1 and PINN #2. The first (PINN #1) is constrained only by the elastic coupling relation (Eq. (1)). This relation links the two output variables τi and Vi but involves no input features, and is rewritten as:

The second PINN model (PINN #2) is constrained by Eqs. (1) to (3). It therefore also includes the constraint involving the input features wave speed vi and wave amplitude Ai. To build PINN #2, the three equations are combined into the following constraint, which is added to the loss function:

Because shear stress τi and slip rate Vi are the model outputs, we denote the predicted shear stress and slip rate as \(\hat{\tau}_i\) and \(\hat{V}_i\), respectively. These predictions can be viewed as functions of the time-dependent input feature vector \(u_i = (A_i, v_i)\) and of θ, the collection of weight matrices and bias vectors used by the model. Substituting the neural network approximations into the governing equations, Eqs. (4) and (5), yields the constraint functions \(\hat{f}_1\) (Eq. (6)) and \(\hat{f}_2\) (Eq. (7)) below:
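The arithmetic of a PINN #1-style residual can be sketched in plain Python. This sketch assumes that Eq. (1) is the quasi-static spring-slider relation \(d\tau/dt = k(V_l - V)\), that is, \(d\mu/dt = (k/\sigma)(V_l - V)\). It also assumes that the time derivative is approximated by forward differences between consecutive predictions. Both are assumptions, and the published implementation may differ. In an actual PINN, the same operations would be written in the deep learning framework so that gradients flow through \(\hat{\tau}_i\) and \(\hat{V}_i\). There, k, σ, and Vl could be trainable weights.

```python
from typing import List, Sequence


def elastic_coupling_residual(tau_hat: Sequence[float], v_hat: Sequence[float],
                              dt: float, k: float, sigma: float,
                              v_l: float) -> List[float]:
    """Discrete residual of d(mu)/dt - (k / sigma) * (V_l - V), with mu = tau / sigma.

    Assumes Eq. (1) is the quasi-static spring-slider law d(tau)/dt = k (V_l - V)
    and uses forward differences between consecutive predictions.
    """
    residual = []
    for i in range(len(tau_hat) - 1):
        dmu_dt = (tau_hat[i + 1] - tau_hat[i]) / (sigma * dt)
        residual.append(dmu_dt - (k / sigma) * (v_l - v_hat[i]))
    return residual
```

During a stick phase (V = 0), stress that rises at the loading rate k·Vl gives a zero residual. Predictions that violate this balance are penalized in proportion to the squared residual.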

Finally, the composite cost functions for the PINN #1 and PINN #2 frameworks are given in Eqs. (8) and (9), respectively.

Each cost function combines the data-driven and physics costs with a regularization weight of 1. For both models, the first two terms represent the MSE in predicting the shear stress (τi) and slip rate (Vi) histories. The \(\hat{f}\) terms represent the penalty added to the cost function for violating the physics constraints defined by Eqs. (1)–(3). The other experimental parameters in the physics equations (i.e., σ, K, Vl, ρ, Aintact) are neither model inputs nor target outputs. Some of these parameters are known (σ, Vl, ρ, K). Aintact is measurable: it could be obtained by testing an intact granite block with the same thickness as the combined thickness of the blocks used in the friction experiment. Nevertheless, we treat all of them as trainable neural network weights in the PINN framework. This avoids errors caused by unit mismatch between the features, outputs, and these constants in the constraints. The learned weights are extracted from the layers of the fully trained models and converted back to the original scale to undo the effect of data normalization (see implementation details in https://github.com/prabhavborate92/PINN_Paper.git). Comparing the rescaled learned weights with the known parameter values lets us examine the PINN models by determining how well they learn the experimentally measured parameters.
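The structure of the composite cost can be sketched as follows. This sketch assumes that each \(\hat{f}\) penalty enters as a mean squared residual, and it uses the weight of 1 stated in the text as the default. The function names are illustrative. The residual values would come from the constraint functions \(\hat{f}_1\) or \(\hat{f}_2\).

```python
from typing import Sequence


def mse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true)


def composite_loss(tau_hat: Sequence[float], tau: Sequence[float],
                   v_hat: Sequence[float], v: Sequence[float],
                   residual: Sequence[float], weight: float = 1.0) -> float:
    """Data misfit for shear stress and slip rate plus a weighted physics penalty."""
    physics = sum(r * r for r in residual) / len(residual)
    return mse(tau_hat, tau) + mse(v_hat, v) + weight * physics
```

Setting `weight=0` recovers the purely data-driven MSE objective. This is one way to view the data-driven MLP as the special case of the PINN without the physics term.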

Transfer learning

To assess the models' generalizability, we use a transfer learning approach. Specifically, we apply each of the three models (purely data-driven, PINN #1, and PINN #2) trained on experiment p5270 (the reference models) to a new experiment, p5271. Figure 4 illustrates the proposed transfer learning approach. Transfer learning keeps the same neural network architecture. It fine-tunes (further trains) all the weights of the pre-trained reference model, which was trained and validated on the p5270 dataset, and retunes the learning rate and batch size. Only a small training set from experiment p5271 is used for this. In other words, instead of being randomly initialized, the weights of the new model are initialized with what was learned when training the reference model. These initial weights and hyperparameters provide a good starting point for building a model on the new dataset. This leads to faster convergence and requires smaller training sets. For all the transfer-learned (TL) models, hyperparameter tuning resulted in a learning rate of 1e-3 and a batch size of 32. As with the data-driven models, the TL models are trained with the number of epochs set to 100, using Adam as the optimization algorithm.
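The benefit of initializing from pre-trained weights can be illustrated with a deliberately tiny stand-in: a one-feature linear model fitted by per-sample gradient descent. The synthetic "source" and "target" datasets below are hypothetical and play the roles of p5270 and p5271 only schematically. A fixed zero initialization stands in for random initialization to keep the example deterministic. With the same small update budget on the small target set, starting from the pre-trained weights reaches a lower loss.

```python
from typing import Dict, Sequence

Params = Dict[str, float]


def sgd_fit(init: Params, xs: Sequence[float], ys: Sequence[float],
            lr: float = 0.05, epochs: int = 5) -> Params:
    """Fit y = w*x + b by per-sample gradient descent on the squared error.

    The initial parameters are copied, so pre-trained weights are left intact.
    """
    p = dict(init)
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            err = p["w"] * x + p["b"] - y
            p["w"] -= lr * 2.0 * err * x
            p["b"] -= lr * 2.0 * err
    return p


def loss(p: Params, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Mean squared error of the linear model on (xs, ys)."""
    return sum((p["w"] * x + p["b"] - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Source task (larger set) and a related target task (small set); both synthetic.
xs_src = [i / 20 for i in range(21)]
ys_src = [2.0 * x + 1.0 for x in xs_src]
xs_tgt = [0.0, 0.25, 0.5, 0.75, 1.0]
ys_tgt = [2.2 * x + 0.9 for x in xs_tgt]

pretrained = sgd_fit({"w": 0.0, "b": 0.0}, xs_src, ys_src, lr=0.1, epochs=500)
fine_tuned = sgd_fit(pretrained, xs_tgt, ys_tgt)                # transfer learning
from_scratch = sgd_fit({"w": 0.0, "b": 0.0}, xs_tgt, ys_tgt)    # same budget
print(loss(fine_tuned, xs_tgt, ys_tgt) < loss(from_scratch, xs_tgt, ys_tgt))  # True
```

As in the approach described above, all parameters are fine-tuned rather than frozen. Only the starting point differs between the two target-task fits.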

We compare the performance of the models after transfer learning with that of a standalone data-driven model trained solely on the p5271 dataset. For this model, the training set size is varied between 70% and 10% of the entire dataset, as for the standalone models trained on the p5270 dataset. Finally, the standalone and transfer-learned (data-driven, PINN #1, and PINN #2) models are compared in terms of R2 score (the performance metric), RMSE, and required training time.
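For reference, the two accuracy metrics can be written out explicitly. The sketch below uses the usual definitions: R2 = 1 − SS_res/SS_tot, which is the quantity computed by scikit-learn's `r2_score`, and RMSE as the square root of the mean squared error. It is an illustration rather than the evaluation code used for the reported scores.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root-mean-square error, in the units of the target."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination 1 - SS_res / SS_tot.

    Equals 1 for a perfect fit and 0 for always predicting the mean, and is
    negative for worse predictions; undefined if y_true is constant.
    """
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R2 is undefined for a constant target")
    return 1.0 - ss_res / ss_tot
```

R2 is dimensionless and therefore comparable across shear stress and slip rate. RMSE keeps physical units, so the two metrics complement each other.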

Data availability

The data from experiments p5270 and p5271 used for training, validation, and testing are available at: https://github.com/prabhavborate92/PINN_Paper.git.

Code availability

The source codes and models developed in this paper can be accessed at https://github.com/prabhavborate92/PINN_Paper.git. When using the codes and models available in the GitHub repository, please cite Borate et al.68.

References

Rocha, H., Semprimoschnig, C. & Nunes, J. P. Sensors for process and structural health monitoring of aerospace composites: a review. Eng. Struct. 237, 112231 (2021).

Aabid, A. et al. A review of piezoelectric material-based structural control and health monitoring techniques for engineering structures: challenges and opportunities. Actuators 10, 101 (2021).

Parke, G. & Hewson, N. ICE Manual of Bridge Engineering, Third edition (ICE Publishing, 2022).

Abbas, M. & Shafiee, M. Structural health monitoring (SHM) and determination of surface defects in large metallic structures using ultrasonic guided waves. Sensors 18, https://doi.org/10.3390/s18113958 (2018).

Herdovics, B. & Cegla, F. Structural health monitoring using torsional guided wave electromagnetic acoustic transducers. Struct. Health Monit. 17, 24–38 (2018).

Gerstenberger, M. C. et al. Probabilistic seismic hazard analysis at regional and national scales: state of the art and future challenges. Rev. Geophys. 58, e2019RG000653 (2020).

Shedlock, K. M., Giardini, D., Grunthal, G. & Zhang, P. The GSHAP global seismic hazard map. Seismol. Res. Lett. 71, 679–686 (2000).

Elsworth, D., Spiers, C. J. & Niemeijer, A. R. Understanding induced seismicity. Science 354, 1380–1381 (2016).

Rubinstein, J. L. & Mahani, A. B. Myths and facts on wastewater injection, hydraulic fracturing, enhanced oil recovery, and induced seismicity. Seismol. Res. Lett. 86, 1060–1067 (2015).

McGarr, A. et al. Coping with earthquakes induced by fluid injection. Science 347, 830–831 (2015).

Majer, E. L. et al. Induced seismicity associated with enhanced geothermal systems. Geothermics 36, 185–222 (2007).

Gaucher, E. et al. Induced seismicity in geothermal reservoirs: a review of forecasting approaches. Renew. Sustain. Energy Rev. 52, 1473–1490 (2015).

Giardini, D. Geothermal quake risks must be faced. Nature 462, 848–849 (2009).

Lockner, D. The role of acoustic emission in the study of rock fracture. Int. J. Rock Mech. Min. Sci. Geomech. Abstr. 30, 883–899 (1993).

Rouet-Leduc, B. et al. Machine learning predicts laboratory earthquakes. Geophys. Res. Lett. 44, 9276–9282 (2017).

Bolton, D. C. et al. Characterizing acoustic signals and searching for precursors during the laboratory seismic cycle using unsupervised machine learning. Seismol. Res. Lett. 90, 1088–1098 (2019).

Rivière, J., Lv, Z., Johnson, P. & Marone, C. Evolution of b-value during the seismic cycle: insights from laboratory experiments on simulated faults. Earth Planet. Sci. Lett. 482, 407–413 (2018).

Scholz, C. H. Microfracturing and the inelastic deformation of rock in compression. J. Geophys. Res. 73, 1417–1432 (1968).

Dunegan, H. & Harris, D. Acoustic emission—a new nondestructive testing tool. Ultrasonics 7, 160–166 (1969).

Dunegan, H., Harris, D. & Tatro, C. Fracture analysis by use of acoustic emission. Eng. Fract. Mech. 1, 105–122 (1968).

Meglis, I., Chow, T. & Young, R. Progressive microcrack development in tests in Lac du Bonnet granite-I. Acoustic emission source location and velocity measurements. Int. J. Rock Mech. Min. Sci. Geomech. Abstr. 32, 741–750 (1995).

Jansen, D. P., Carlson, S. R., Young, R. P. & Hutchins, D. A. Ultrasonic imaging and acoustic emission monitoring of thermally induced microcracks in Lac du Bonnet granite. J. Geophys. Res. Solid Earth 98, 22231–22243 (1993).

Savage, J. C. & Hasegawa, H. S. Some properties of tensile fractures inferred from elastic wave radiation. J. Geophys. Res. 69, 2091–2100 (1964).

Bu, F. et al. Evaluation of the characterization of acoustic emission of brittle rocks from the experiment to numerical simulation. Sci. Rep. 12, 498 (2022).

Rouet-Leduc, B. et al. Estimating fault friction from seismic signals in the laboratory. Geophys. Res. Lett. 45, 1321–1329 (2018).

Lubbers, N. et al. Earthquake catalog-based machine learning identification of laboratory fault states and the effects of magnitude of completeness. Geophys. Res. Lett. 45, 13,269–13,276 (2018).

Hulbert, C. et al. Similarity of fast and slow earthquakes illuminated by machine learning. Nat. Geosci. 12, 69–74 (2019).

Jasperson, H. et al. Attention network forecasts time-to-failure in laboratory shear experiments. J. Geophys. Res. Solid Earth 126, e2021JB022195 (2021).

Pu, Y., Chen, J. & Apel, D. B. Deep and confident prediction for a laboratory earthquake. Neural Comput. Appl. 33, 11691–11701 (2021).

Wang, K., Johnson, C. W., Bennett, K. C. & Johnson, P. A. Predicting fault slip via transfer learning. Nat. Commun. 12, 7319 (2021).

Wang, K., Johnson, C. W., Bennett, K. C. & Johnson, P. A. Predicting future laboratory fault friction through deep learning transformer models. Geophys. Res. Lett. 49, e2022GL098233 (2022).

Laurenti, L., Tinti, E., Galasso, F., Franco, L. & Marone, C. Deep learning for laboratory earthquake prediction and autoregressive forecasting of fault zone stress. Earth Planet. Sci. Lett. 598, 117825 (2022).

Blanke, A., Kwiatek, G., Goebel, T. H. W., Bohnhoff, M. & Dresen, G. Stress drop-magnitude dependence of acoustic emissions during laboratory stick-slip. Geophys. J. Int. 224, 1371–1380 (2020).

Goebel, T. H. W. et al. Identifying fault heterogeneity through mapping spatial anomalies in acoustic emission statistics. J. Geophys. Res. Solid Earth 117, https://doi.org/10.1029/2011JB008763 (2012).

Goebel, T. H. W., Schorlemmer, D., Becker, T. W., Dresen, G. & Sammis, C. G. Acoustic emissions document stress changes over many seismic cycles in stick-slip experiments. Geophys. Res. Lett. 40, 2049–2054 (2013).

Bolton, D. C., Shreedharan, S., Rivière, J. & Marone, C. Acoustic energy release during the laboratory seismic cycle: Insights on laboratory earthquake precursors and prediction. J. Geophys. Res. Solid Earth 125, e2019JB018975 (2020).

Hedayat, A., Pyrak-Nolte, L. J. & Bobet, A. Precursors to the shear failure of rock discontinuities. Geophys. Res. Lett. 41, 5467–5475 (2014).

Kaproth, B. M. & Marone, C. Slow earthquakes, preseismic velocity changes, and the origin of slow frictional stick-slip. Science 341, 1229–1232 (2013).

Scuderi, M. M., Marone, C., Tinti, E., Di Stefano, G. & Collettini, C. Precursory changes in seismic velocity for the spectrum of earthquake failure modes. Nat. Geosci. 9, 695–700 (2016).

Shreedharan, S., Bolton, D. C., Rivière, J. & Marone, C. Preseismic fault creep and elastic wave amplitude precursors scale with lab earthquake magnitude for the continuum of tectonic failure modes. Geophys. Res. Lett. 47, e2020GL086986 (2020).

Shreedharan, S., Bolton, D. C., Rivière, J. & Marone, C. Competition between preslip and deviatoric stress modulates precursors for laboratory earthquakes. Earth Planet. Sci. Lett. 553, 116623 (2021).

Shokouhi, P. et al. Deep learning can predict laboratory quakes from active source seismic data. Geophys. Res. Lett. 48, e2021GL093187 (2021).

Shreedharan, S., Bolton, D. C., Rivière, J. & Marone, C. Machine learning predicts the timing and shear stress evolution of lab earthquakes using active seismic monitoring of fault zone processes. J. Geophys. Res. Solid Earth 126, e2020JB021588 (2021).

Vilalta, R. & Drissi, Y. A perspective view and survey of meta-learning. Artif. Intell. Rev. 18, 77–95 (2002).

Hospedales, T., Antoniou, A., Micaelli, P. & Storkey, A. Meta-learning in neural networks: a survey. IEEE Trans. Pattern Anal. Mach. Intell. 44, 5149–5169 (2022).

Parisi, G. I., Kemker, R., Part, J. L., Kanan, C. & Wermter, S. Continual lifelong learning with neural networks: a review. Neural Netw. 113, 54–71 (2019).

Karniadakis, G. E. et al. Physics-informed machine learning. Nat. Rev. Phys. 3, 422–440 (2021).

Lu, L., Jin, P., Pang, G., Zhang, Z. & Karniadakis, G. E. Learning nonlinear operators via DeepONet based on the universal approximation theorem of operators. Nat. Machine Intell. 3, 218–229 (2021).

Darbon, J. & Meng, T. On some neural network architectures that can represent viscosity solutions of certain high dimensional Hamilton-Jacobi partial differential equations. J. Comput. Phys. 425, 109907 (2021).

Raissi, M., Perdikaris, P. & Karniadakis, G. Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378, 686–707 (2019).

Zhu, Y., Zabaras, N., Koutsourelakis, P.-S. & Perdikaris, P. Physics-constrained deep learning for high-dimensional surrogate modeling and uncertainty quantification without labeled data. J. Comput. Phys. 394, 56–81 (2019).

Marone, C. Laboratory-derived friction laws and their application to seismic faulting. Annu. Rev. Earth Planet. Sci. 26, 643–696 (1998).

Pyrak-Nolte, L. J., Myer, L. R. & Cook, N. G. W. Transmission of seismic waves across single natural fractures. J. Geophys. Res. Solid Earth 95, 8617–8638 (1990).

Tattersall, H. G. The ultrasonic pulse-echo technique as applied to adhesion testing. J. Phys. D Appl. Phys. 6, 819–832 (1973).

Leeman, J. R., Saffer, D. M., Scuderi, M. M. & Marone, C. Laboratory observations of slow earthquakes and the spectrum of tectonic fault slip modes. Nat. Commun. 7, 11104 (2016).

Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by back-propagating errors. Nature 323, 533–536 (1986).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at arXiv https://doi.org/10.48550/ARXIV.1412.6980 (2014).

Niu, F., Silver, P. G., Daley, T. M., Cheng, X. & Majer, E. L. Preseismic velocity changes observed from active source monitoring at the Parkfield SAFOD drill site. Nature 454, 204–208 (2008).

Avouac, J.-P. From geodetic imaging of seismic and aseismic fault slip to dynamic modeling of the seismic cycle. Annu. Rev. Earth Planet. Sci. 43, 233–271 (2015).

Barbot, S., Lapusta, N. & Avouac, J.-P. Under the hood of the earthquake machine: toward predictive modeling of the seismic cycle. Science 336, 707–710 (2012).

Bürgmann, R. et al. Deformation during the 12 November 1999 Duzce, Turkey, earthquake, from GPS and InSAR data. Bull. Seismol. Soc. Am. 92, 161–171 (2002).

Fielding, E. J. et al. Kinematic fault slip evolution source models of the 2008 M7.9 Wenchuan earthquake in China from SAR interferometry, GPS and teleseismic analysis and implications for Longmen Shan tectonics. Geophys. J. Int. 194, 1138–1166 (2013).

Gualandi, A., Perfettini, H., Radiguet, M., Cotte, N. & Kostoglodov, V. GPS deformation related to the Mw 7.3, 2014, Papanoa earthquake (Mexico) reveals the aseismic behavior of the Guerrero seismic gap. Geophys. Res. Lett. 44, 6039–6047 (2017).

Hearn, E. H. & Bürgmann, R. The effect of elastic layering on inversions of GPS data for coseismic slip and resulting stress changes: strike-slip earthquakes. Bull. Seismol. Soc. Am. 95, 1637–1653 (2005).

Kano, M., Fukuda, J., Miyazaki, S. & Nakamura, M. Spatiotemporal evolution of recurrent slow slip events along the southern Ryukyu subduction zone, Japan, from 2010 to 2013. J. Geophys. Res. Solid Earth 123, 7090–7107 (2018).

Michel, S., Gualandi, A. & Avouac, J.-P. Similar scaling laws for earthquakes and Cascadia slow-slip events. Nature 574, 522–526 (2019).

Wallace, L. M. et al. Slow slip near the trench at the Hikurangi subduction zone, New Zealand. Science 352, 701–704 (2016).

Borate, P. et al. Using a physics-informed neural network and fault zone acoustic monitoring to predict lab earthquakes. Zenodo https://zenodo.org/badge/latestdoi/569050567 (2023).

Acknowledgements

This study is funded by the US Department of Energy (DE-SC0017585) and NSF-MCA (#2121005) grants to P.S. C.M. acknowledges support from the European Research Council Advanced Grant 835012 (TECTONIC) and US Department of Energy grants (DE-SC0020512) and (DE-EE0008763). J.R. acknowledges support from the US Department of Energy grant (DE-SC0022842). The authors are thankful to Srisharan Shreedharan for providing his experiment datasets and Prabhakaran Manogharan for his help in extracting the ultrasonic features.

Author information

Contributions

P.B.: methodology and writing—original draft. J.R.: writing—review & editing, and supervision. C.M.: writing—review and editing. A.M.: methodology and writing—review. D.K.: methodology and writing—review. P.S.: conceptualization, methodology, writing—review & editing, supervision, project administration, and funding acquisition.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Borate, P., Rivière, J., Marone, C. et al. Using a physics-informed neural network and fault zone acoustic monitoring to predict lab earthquakes. Nat Commun 14, 3693 (2023). https://doi.org/10.1038/s41467-023-39377-6

DOI: https://doi.org/10.1038/s41467-023-39377-6
