Abstract

The prediction of temperature effects from first principles is computationally demanding and typically too approximate for the engineering of high-temperature processes. Here, we introduce a hybrid approach combining zero-Kelvin first-principles calculations with a Gaussian process regression model trained on temperature-dependent reaction free energies. We apply this physics-based machine-learning model to the prediction of metal oxide reduction temperatures in high-temperature smelting processes that are commonly used for the extraction of metals from their ores and from electronics waste and have a significant impact on the global energy economy and greenhouse gas emissions. The hybrid model predicts accurate reduction temperatures of unseen oxides, is computationally efficient, and surpasses in accuracy computationally much more demanding first-principles simulations that explicitly include temperature effects. The approach provides a general paradigm for capturing the temperature dependence of reaction free energies and derived thermodynamic properties when limited experimental reference data is available.

Introduction

The decarbonization of the chemical industry is a necessity for the transition to a sustainable future1,2,3, but developing alternatives for established industrial processes is cost intensive and time consuming. Bottom-up computational process design from first-principles theory, i.e., without requiring initial input from experiment, would be an attractive alternative but has so far not been realized. On the other hand, computational materials design and discovery based on atomic-scale first-principles calculations has already become commonplace and is a powerful complement to experimental materials engineering4,5. Here, we demonstrate how first-principles quantum-mechanics based theory can be supplemented with a machine-learning (ML) model describing temperature dependence to enable the prediction of chemical reactions at high temperatures.

Temperature effects are especially important for chemical and electrochemical reactions that involve reactants and products in different states of matter, such as corrosion reactions (i.e., the binding of oxygen in a solid oxide)6,7 or the reverse, the extraction of metals from their oxides. As one example, we focus here on the latter and consider the pyrometallurgical reduction of metal oxides. In industry, many base metals, such as cobalt, copper, and silver, are extracted from their ores via smelting, using carbon as the reducing agent8,9. Recycling of transition and rare earth metals, e.g., from spent batteries and electronics waste, also commonly involves pyrometallurgical processes10,11. However, our findings apply more generally also to other classes of reactions at high temperatures.

Given their relevance, an inexpensive computational method for predicting the temperature dependence of oxidation or oxide reduction reactions would be extremely attractive. Empirical models based on the parametrization of experimental thermodynamics data, such as the Calculation of Phase Diagrams (CALPHAD) approach12,13, have been used for the thermodynamic characterization of materials at different temperatures and for virtual process optimization14 but are limited by the amount of available data from experiments. First-principles (quantum-mechanics based) calculations provide efficient and reliable estimates of ground-state materials properties at zero Kelvin15,16. Introducing temperature effects increases the computational cost of the simulations by several orders of magnitude, which makes them impractical for screening the large numbers of compositions and thermodynamic conditions required for process optimization17. Hence, there is a need for computational methods that combine the computational efficiency of an interpolation-based method such as CALPHAD with the transferability of first-principles methods. We will demonstrate in the following that ML techniques can provide the missing link.

A growing body of literature evidences that first-principles modeling can be greatly accelerated by training ML models on the outcome of first-principles calculations18,19,20. However, in many cases, accurate data for high-temperature materials properties cannot be readily generated from first-principles calculations, and experimental thermochemical databases are much smaller in size. For example, we were only able to compile a set of 38 metal oxide reduction temperatures from public data sources that were extracted from experimentally measured free energies of reaction (see Supplementary Table 1). In the case of such data limitations, it is crucial for the construction of accurate models to make use of prior knowledge, for example, in the form of known laws of physics or thermodynamics.

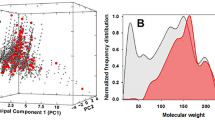

In the present work, we show that combining both information from first-principles calculations and data from experiment can enable the construction of quantitative models for the prediction of temperature-dependent materials properties such as metal-oxide reduction temperatures (Fig. 1a). The key novelty of our approach is that it makes use of known thermodynamic relationships (Fig. 1b). The predictions from an ML model based on Gaussian process regression (GPR)21 and results from first-principles calculations both enter the thermodynamic equations that govern metal oxide reduction, enabling the quantitative prediction of high-temperature materials properties of oxides that were not included in the reference data set (Fig. 1d). Through this thermodynamic underpinning, other temperature-dependent physical properties can be accessed at no extra cost and with a higher accuracy than when training ML models directly for specific observables. In particular, we demonstrate that the zero Kelvin first-principles calculations can be augmented with machine-learned temperature effects to yield a physics-based ML model for predicting high-temperature reaction free-energies with great accuracy but at a low computational cost.

a Zero Kelvin first-principles calculations and finite-temperature experimental data of metal oxides from the literature are compiled in a database. b Thermodynamic quantities are evaluated from first principles where feasible. c Features are extracted from the data set and used as inputs to build a quantitative machine learning (ML) model for those contributions that are not accessible from first principles. d The output from the ML model and from first-principles calculations together enter a physics-based framework for the prediction of the temperature-dependent stability of other metal oxides that were not included in the reference data set.

As a specific example, we consider the pyrometallurgical reduction of metal oxides to their base metals, though our general approach can be expected to be transferable to other high-temperature reactions. Specifically, we aim at predicting the reduction temperature of metal oxides (MxOy) using carbon coke as a reducing agent, which corresponds to the chemical reaction

\[ \mathrm{M}_x\mathrm{O}_y + y\,\mathrm{C} \rightarrow x\,\mathrm{M} + y\,\mathrm{CO} \tag{1} \]

where M is the base metal (or a mixture of multiple metal species) of a given metal oxide MxOy, CO is carbon monoxide, and x and y are the corresponding stoichiometric reaction coefficients. In general, the metal oxide and carbon are in their solid state, while the reduced metal can be liquid (smelting) or solid and carbon monoxide is in the gas phase.

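The Gibbs free energy of reaction corresponding to Eq. (1) can be expressed as

\[ \Delta_{\mathrm{r}}G^{\mathrm{red}}(\mathrm{M}_x\mathrm{O}_y) = x\,\Delta_{\mathrm{f}}G(\mathrm{M}) + y\,\Delta_{\mathrm{f}}G(\mathrm{CO}) - \Delta_{\mathrm{f}}G(\mathrm{M}_x\mathrm{O}_y) - y\,\Delta_{\mathrm{f}}G(\mathrm{C}) \tag{2} \]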

where ΔfG(M), ΔfG(CO), ΔfG(MxOy), and ΔfG(C) denote the Gibbs free energy of formation of M, CO, MxOy, and C, respectively. At room temperature, most metal oxides are highly stable and the equilibrium of reaction (1) is strongly tilted to the left-hand side, i.e., ΔrGred(MxOy) is positive. But at high-enough temperatures, the greater entropy of gas phases compared to solids shifts the equilibrium to the right-hand side of Eq. (1), making the reduction energetically favorable, i.e., ΔrGred(MxOy) becomes negative.

Our objective is the computational prediction of the reduction temperature Tred above which the sign of ΔrGred(MxOy) becomes negative and reduction of the metal oxide occurs.
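
The sign-change criterion can be illustrated numerically. The following minimal sketch locates the temperature at which a user-supplied reduction free energy crosses zero by bisection. The function name `reduction_temperature`, the temperature bracket, and the linear example with its enthalpy and entropy values are assumptions made for illustration only; they are not data or code from this work.

```python
from typing import Callable


def reduction_temperature(delta_g: Callable[[float], float],
                          t_low: float = 300.0,
                          t_high: float = 3000.0,
                          tol: float = 1e-6) -> float:
    """Bisect for the temperature (K) at which delta_g changes sign."""
    g_low, g_high = delta_g(t_low), delta_g(t_high)
    if g_low * g_high > 0:
        raise ValueError("delta_g does not change sign inside the bracket")
    while t_high - t_low > tol:
        t_mid = 0.5 * (t_low + t_high)
        g_mid = delta_g(t_mid)
        # Keep the half-interval that still brackets the sign change.
        if g_low * g_mid <= 0:
            t_high = t_mid
        else:
            t_low, g_low = t_mid, g_mid
    return 0.5 * (t_low + t_high)


def example_delta_g(temperature: float) -> float:
    """Hypothetical linear model dG(T) = dH - T * dS (illustrative values)."""
    d_h = 300.0  # kJ/mol, assumed
    d_s = 0.2    # kJ/(mol K), assumed
    return d_h - temperature * d_s


t_red = reduction_temperature(example_delta_g)
```

For a strictly linear free energy the root is simply ΔH/ΔS; the bisection form is shown because it also works for tabulated or nonlinear free-energy curves, provided the bracket contains exactly one sign change.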

In the following, we will compare three different computational approaches: (i) a fully non-empirical approximation of Tred based only on first-principles density-functional theory (DFT); (ii) a ML model obtained from a direct fit of experimental reduction temperatures; and (iii) a hybrid scheme that augments DFT zero-Kelvin predictions with an ML model of the temperature-dependent contributions.

A series of approximations is required to arrive at a purely first-principles estimate of the reduction temperature. The temperature dependence of the Gibbs free energy of formation of an oxide compound X, ΔfG(X) = ΔfH(X) − TS, is partly due to the temperature dependence of the enthalpy of formation ΔfH but mostly stems from the entropy term TS, where T is the temperature and S is the overall entropy. At zero Kelvin, the entropy term vanishes and the Gibbs free energy of formation is identical to the enthalpy of formation, which can be directly obtained from DFT calculations. For example, the zero-Kelvin formation enthalpy of the metal oxide can be calculated as

\[ \Delta_{\mathrm{f}}H(\mathrm{M}_x\mathrm{O}_y) \approx E^{\mathrm{DFT}}_{\mathrm{M}_x\mathrm{O}_y} - x\,E^{\mathrm{DFT}}_{\mathrm{M}} - \frac{y}{2}\,E^{\mathrm{DFT}}_{\mathrm{O}_2} \tag{3} \]

where \(E^{\mathrm{DFT}}_{\mathrm{M}_x\mathrm{O}_y}\), \(E^{\mathrm{DFT}}_{\mathrm{M}}\), and \(E^{\mathrm{DFT}}_{\mathrm{O}_2}\) are the DFT energies of the metal oxide, the base metal, and an oxygen molecule in the gas phase, respectively. The greatest contribution to the temperature-dependent terms of the reaction free energy, Eq. (2), arises from the entropy of the molecular gas species CO (SCO), which can be efficiently approximated in the ideal gas limit from first-principles calculations. The vibrational entropy of the solid phases at a given temperature can also be obtained from first principles by integration of the vibrational density of states, which can be approximately obtained from DFT phonon calculations22. Additional contributions to the free energy arise from the electronic, magnetic, and configurational entropies23,24, which can also be approximated from first principles but have not been considered in the present work. Further details of the DFT calculations and additional entropy contributions are given in the “Methods” section.
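
As a small worked example of the zero-Kelvin step, the sketch below evaluates a formation enthalpy from three total energies as the oxide energy minus x metal energies minus y/2 O2 energies, and then normalizes it to one O2 molecule. The function names and all numerical energies are placeholders chosen for illustration; they are not DFT results from this work.

```python
def formation_enthalpy_0k(e_oxide: float, e_metal: float, e_o2: float,
                          x: int, y: int) -> float:
    """Zero-Kelvin formation enthalpy of MxOy per formula unit.

    e_oxide: total energy per MxOy formula unit
    e_metal: total energy per atom of the bulk base metal
    e_o2:    total energy of one gas-phase O2 molecule
    """
    return e_oxide - x * e_metal - 0.5 * y * e_o2


def per_o2(delta_h: float, y: int) -> float:
    """Normalize a per-formula-unit quantity to one O2 molecule."""
    return 2.0 * delta_h / y


# Placeholder energies in eV for a hypothetical M2O3 (NOT real DFT values).
dh = formation_enthalpy_0k(e_oxide=-40.0, e_metal=-4.0, e_o2=-10.0, x=2, y=3)
dh_per_o2 = per_o2(dh, y=3)
```

The per-O2 normalization is the one that makes different oxides comparable when their free energies are plotted against the CO formation line.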

The experimental reduction temperatures along with the corresponding predictions obtained from DFT calculations are shown in Fig. 2. DFT reduction temperatures are shown for two approximations: one that accounts only for the entropy of CO, and one that additionally includes the vibrational entropy contributions from phonon theory. See Supplementary Table 2 and Supplementary Fig. 1 for the corresponding data and correlation plots of the predicted and reference reduction temperatures. As expected, the accuracy of the reduction temperatures increases when a higher level of theory is included in our model: the mean absolute error (MAE) and the root mean squared error (RMSE) of the DFT-based models decrease when including phonon corrections to the free energy from 235 K to 166 K and from 265 K to 202 K, respectively. However, including phonon corrections is computationally demanding and scales poorly with increasing number of atoms, making it expensive for crystal structures with large unit cells. Due to this high computational cost, we computed 38 samples (binary and ternary oxides) using DFT but limited phonon calculations to the 19 binary oxides only.

Green triangles indicate the oxide reduction temperatures as predicted by density-functional theory (DFT) calculations only considering the temperature-dependent entropy contributions of CO. The orange squares show the reduction temperatures after correcting the DFT calculations using phonon theory. The predictions obtained from our machine learning (ML) model trained on reduction temperatures are indicated by blue circles. These data points were obtained from leave-one-out cross-validation and are thus pure predictions and were not included in the model construction. The horizontal error bars indicate the uncertainty estimates from the Gaussian process regression model. The black crosses indicate the experimental reference values of Supplementary Table 1. See Supplementary Fig. 1 for correlation plots of the predicted temperatures with the reference values. All data can be found in Supplementary Table 2.

As seen in Fig. 2, even on the highest level of theory considered, the predicted first-principles reduction temperatures are on average still subject to large errors of around 200 K. Including phonon calculations improved the DFT predictions across the board, but the relative error reduction is barely significant except for the compounds MgO, CaO, SiO2, and TiO2. Considering the high computational cost of phonon calculations, this result is sobering and reflects both the approximations made in the form of the reaction free energy and the intrinsic error of DFT.

The limited accuracy of the first-principles models motivated us to explore whether ML models can predict oxide reduction temperatures with superior accuracy. Intuitively, the temperature-dependent vibrational entropy contributions are determined by the nature of the chemical bonds in the various oxides, i.e., we expect differences depending on the degree of ionic and covalent character25. As input for the ML model, we therefore chose features that affect the chemical bonding and can be easily obtained from the periodic table or by means of efficient DFT calculations. The following properties were used for the construction of compound fingerprints:

- (i) Atomic properties: atomic number (Z), atomic mass (m), electronegativity (χ);
- (ii) Bond properties: ionic character (IC);
- (iii) Composition properties: oxidation state (Ox), stoichiometry (ϕ);
- (iv) Structure properties: unit cell volume (V), density (ρ), center of mass (μ); and
- (v) Phase properties (from DFT): 0 K formation enthalpy (ΔHf), bulk modulus (B0).

Note that only two of the properties, the formation energies and the bulk moduli, are derived from DFT calculations, and both are straightforward and computationally efficient to compute. The construction of the compound fingerprint by combining the above properties is described in the Supplementary Methods section and in Supplementary Table 3. We employed recursive feature elimination to detect redundant features and avoid overfitting, as detailed in the Supplementary Methods section and Supplementary Fig. 2.

We trained a GPR-based ML model on the experimental reduction temperatures of Supplementary Table 1 and quantified its accuracy using leave-one-out cross-validation (LOOCV). LOOCV ensures that the model is evaluated only for samples that were not used for training and is a standard technique for assessing the transferability of a model. Further details of the model hyperparameters, construction, and validation are given in the “Methods” section. In addition, we also performed multiple rounds of cross-validation using different partitions to study the robustness of the predictive power with respect to the train/test fold size (see Supplementary Fig. 3). The predicted reduction temperatures from LOOCV for each sample are compared with their corresponding experimental reference in Fig. 2.
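
The LOOCV protocol and the two error metrics can be sketched in a few lines. In the example below, `nearest_neighbor` is a deliberately simple stand-in regressor used only to keep the example self-contained; it is not the GPR model, and the four feature/target pairs are made-up numbers rather than data from Supplementary Table 1.

```python
import math
from typing import Callable, List, Sequence, Tuple

Features = Sequence[float]
FitPredict = Callable[[List[Features], List[float], Features], float]


def loocv_predictions(xs: List[Features], ys: List[float],
                      fit_predict: FitPredict) -> List[float]:
    """Predict each sample from a model trained on all other samples."""
    preds = []
    for i in range(len(xs)):
        train_x = [x for j, x in enumerate(xs) if j != i]
        train_y = [y for j, y in enumerate(ys) if j != i]
        preds.append(fit_predict(train_x, train_y, xs[i]))
    return preds


def mae_rmse(preds: Sequence[float], refs: Sequence[float]) -> Tuple[float, float]:
    """Mean absolute error and root mean squared error."""
    errors = [p - r for p, r in zip(preds, refs)]
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mae, rmse


def nearest_neighbor(train_x: List[Features], train_y: List[float],
                     x: Features) -> float:
    """Stand-in regressor (NOT GPR): return the target of the closest sample."""
    distances = [math.dist(x, t) for t in train_x]
    return train_y[distances.index(min(distances))]


# Made-up one-dimensional features and reduction temperatures in K.
xs = [(0.0,), (1.0,), (2.0,), (3.0,)]
ys = [1000.0, 1100.0, 1200.0, 1300.0]
preds = loocv_predictions(xs, ys, nearest_neighbor)
mae, rmse = mae_rmse(preds, ys)
```

Because every prediction comes from a model that never saw the corresponding sample, the resulting MAE and RMSE estimate the error on unseen compounds rather than the quality of the fit.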

We observe that the predicted reduction temperatures of the GPR model surpass in accuracy the first-principles values obtained when using only DFT, even when computationally expensive phonon corrections were included. The MAE and RMSE from LOOCV are 105 K and 127 K, respectively, which is around 50% smaller than the errors of the pure DFT predictions. In addition to a greatly improved predictive power, another benefit of the Gaussian process regression model compared to DFT is the uncertainty estimate that it provides (shown as blue error bars in Fig. 2).

In the first instance we used only experimental data from binary metal oxides (19 samples) to train and validate the ML model to allow for a fair comparison with the DFT results including phonon theory. Since the first-principles calculations needed for building the compound fingerprint are computationally affordable, we expanded our reference data set from 19 to 38 samples by including ternary oxides as well. LOOCV on the larger data set shows that including extra reference data improves the predictive power of the GPR model further and reduces the MAE and RMSE by 20 K and 18 K to 85 K and 109 K, respectively. In contrast, the DFT-based model (without phonon correction) showed larger errors for the increased data set (MAE and RMSE increased by 21 K and 12 K, respectively), presumably because of the neglected entropy contributions that become more relevant with increasing number of constituents.

Metal extraction via carbothermal reduction becomes technically challenging if the oxide reduction temperature is significantly above 1500 K, in which case other processes such as hydrometallurgical routes are more commonly used26. Focusing on pyrometallurgy, a useful model would have the ability to predict reduction temperatures below 1500 K with an accuracy of at least around ±100 K. Unfortunately, the direct ML model predictions are the least accurate for the relevant temperature range. Additionally, there is no guarantee that the direct ML model correctly captures the underlying thermodynamic principles that govern metal reduction, since we have treated the GPR model akin to a black box. To further validate the model in this respect, we considered the competing chemical reactions that are at the core of pyrometallurgical processes.

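The overall reaction of Eq. (1) can be understood as a competition between the formation reactions of carbon monoxide and the metal oxide

\[ 2\,\mathrm{C} + \mathrm{O}_2 \rightarrow 2\,\mathrm{CO} \qquad \text{and} \qquad \frac{2x}{y}\,\mathrm{M} + \mathrm{O}_2 \rightarrow \frac{2}{y}\,\mathrm{M}_x\mathrm{O}_y \]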

where metal oxide formation is energetically more favorable at low temperatures, and CO formation is favored at high temperatures. The metal oxide reduction temperature is then determined by the intersection of the free energies of the CO and metal oxide formation reactions when normalized to the same oxygen content, i.e., the temperature for which \(2\,\Delta_{\mathrm{f}}G(\mathrm{CO}) = \frac{2}{y}\,\Delta_{\mathrm{f}}G(\mathrm{M}_x\mathrm{O}_y)\). This relationship of CO and oxide formation energies is visualized in Ellingham diagrams27, which are a common tool for the engineering of pyrometallurgical processes.
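
The intersection condition is easy to evaluate when both formation free energies are approximated as straight lines in temperature. The sketch below makes that linearity assumption explicitly; the class `FreeEnergyLine`, its field names, and the intercepts and slopes are hypothetical values chosen for illustration and are not experimental data.

```python
from dataclasses import dataclass


@dataclass
class FreeEnergyLine:
    """Linear formation free energy per O2 molecule: G(T) = h0 + slope * T."""
    h0: float     # intercept at 0 K (kJ per mol O2)
    slope: float  # dG/dT (kJ per mol O2 per K)

    def at(self, temperature: float) -> float:
        return self.h0 + self.slope * temperature


def intersection_temperature(oxide: FreeEnergyLine, co: FreeEnergyLine) -> float:
    """Temperature at which the oxide and CO formation lines cross."""
    if oxide.slope == co.slope:
        raise ValueError("parallel lines never intersect")
    return (co.h0 - oxide.h0) / (oxide.slope - co.slope)


# Illustrative numbers only: CO line falls with T, oxide line rises with T.
co_line = FreeEnergyLine(h0=-220.0, slope=-0.18)
oxide_line = FreeEnergyLine(h0=-700.0, slope=0.18)
t_red = intersection_temperature(oxide_line, co_line)
```

Above the returned temperature the CO line lies below the oxide line, so binding oxygen as CO is favored over binding it in the oxide.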

Figure 3a–c show Ellingham diagrams for a subset of the considered compounds as predicted by DFT (with and without phonon corrections) and the direct ML model compared to experimentally measured references from the NIST-JANAF28 and Cambridge DoITPoMs29,30 databases. Free-energy diagrams for the entire set of compounds are shown in Supplementary Fig. 4.

a–d Predicted Ellingham diagrams obtained from a density-functional theory (DFT), b DFT including phonon theory, c the machine-learning (ML) model trained on reduction temperatures, and d the hybrid ML model trained on metal oxide formation free-energy slopes (∂G/∂T). The labels for the different metal oxides are included in panel d. Predicted free-energy curves are represented by the solid colored lines whilst the dashed lines are the experimental reference values (Expt.). The free-energy curves for the formation of e MgO, f Cr2O3, g LiFe5O8, and h Fe2O3 obtained from DFT (green), DFT using phonon corrections (orange) and the ML models trained on reduction temperatures (blue) and free-energy slopes (pink) are compared with their corresponding experimental reference data (black dashed lines). The black solid lines indicate the experimental free-energy curve for CO formation.

Ellingham diagrams contain more information than the reduction temperature, as they represent the variation of reaction free energies (per O2 molecule) across different temperatures: (i) Reduction temperatures can be determined from the intersection of the free energy of formation of CO (black line with negative slope) and the formation energy of a given metal oxide (positive slope), (ii) the reaction enthalpies at zero Kelvin (ΔfH(T = 0K)) are given by the y-intercepts of the oxide formation free energies, and (iii) the change of the formation free-energies with respect to the temperature (∂ΔfG/∂T, in the following ∂G/∂T for conciseness) is the slope of a metal oxide formation free-energy curve in the Ellingham diagram.

As seen in Fig. 3a, b, the slope of the reaction free energy curves predicted purely from first principles deviates significantly from the experimental reference, indicating that the temperature-dependence of the oxides is subject to large errors. The direct ML model trained on the reduction temperatures Tred (Fig. 3c) is in excellent agreement with experiment for V2O3, TiO2, and LiAlO2, both in terms of the slope of the reaction free energies as well as for the intercept with CO formation. However, the ML model predicts a completely wrong temperature dependence for CuO and CoO.

The failure of the Tred ML model for some of the compounds is due to errors in the DFT zero-Kelvin formation enthalpies of CuO and CoO. Even though the ML model predicts reduction temperatures for the two oxides that are close to the reference, the temperature dependence is described incorrectly because of large errors in the y-intercept of the reaction free energy curves, i.e., the zero-Kelvin enthalpies. The Tred ML model thus does not capture the underlying thermodynamics correctly and would not predict useful free energies at temperatures other than the oxide reduction temperature. Hence, the Tred ML model does not provide reliable predictions of the temperature-dependent reduction free energy and would therefore not be useful for, e.g., the prediction of reduction potentials for high-temperature electrolysis31.

To further improve the predictive capabilities of our model we decomposed it into two parts: zero Kelvin formation energies that can be obtained from DFT according to Eq. (3) and the temperature variation of the oxide formation free energy (∂G/∂T). Training targets for this hybrid ML model are thus the experimental values for ∂ΔfG/∂T. Figure 3d shows the Ellingham diagram obtained from the combination of the DFT zero Kelvin formation energies and the ML-predicted free energy change with the temperature. This hybrid ML model predicts the temperature dependence of the formation free energies of CuO and CoO in excellent agreement with experiment and simultaneously improves the accuracy of the reduction temperature predictions. The remaining error is mostly due to the zero-Kelvin formation energy and no longer is an artifact of the ML model.

As previously for the Tred model, we validate the ∂G/∂T model by comparing the experimental reduction temperatures with the values obtained by our predictions using LOOCV. Training on the experimental ∂G/∂T values of the binary oxides decreases the cross-validation scores even further, yielding an MAE of 74 K and an RMSE of 87 K, which are 31 K (MAE) and 40 K (RMSE) smaller than the errors of the Tred model (Fig. 4a). The LOOCV scores of the larger data set including ternary oxides are yet smaller, giving an MAE and RMSE of 64 K and 78 K, respectively, showing that the predictive power of the ∂G/∂T model increases as samples are added to the data set.

a Mean absolute error (MAE, blue bars), root mean square error (RMSE, orange bars), and maximum error (green bars) of the theoretical models. b Comparison between the formation energy (ΔHf) and reduction temperature (Tred) errors committed by the theoretical methods: density-functional theory (DFT, empty bars), DFT including phonon corrections (empty dashed bars) and the physics-based machine learning (ML) model trained for ∂G/∂T (solid color bars).

Finally, we analyze the accuracy of the predicted free-energy curves for different metal oxides to determine the source of the errors of the various models. In the interest of space, we only include four representative examples here and refer the reader to Supplementary Fig. 4 for the free-energy curves of all 38 samples considered in this work. This holds for the majority of the considered oxides, especially for the ML model trained for ∂G/∂T, which produces the most accurate predictions for the free-energy curves. We note that the ML methods can be applied to large structures, e.g., ternary oxides (Fig. 3g), for which the phonon corrections are computationally too demanding.

One of the advantages of training the ML model on the free energy slopes ∂G/∂T is that it is possible to distinguish between errors in the zero Kelvin formation enthalpy and errors in the predicted temperature dependence committed by the ML model. For some metal oxides, such as Fe2O3, the zero Kelvin enthalpy of formation is not well described by DFT (Fig. 3h). In this example, the reduction temperatures obtained from DFT and from the Tred model are close to the experimental reference, but only because of a compensation of errors in the zero-Kelvin enthalpy and the free energy slope. The ∂G/∂T model predicts the temperature dependence of the free energy in much better agreement with experiment than the other models, and the remaining error in the reduction temperature of Fe2O3 is dominated by the DFT error in the zero-Kelvin enthalpy. Hence, the ∂G/∂T model correctly captures the underlying physics.

In fact, as seen in Fig. 4b, the errors in the DFT zero-Kelvin formation energies correlate with the errors in the reduction temperatures predicted by the ∂G/∂T model. This does not hold true for the other models. The ∂G/∂T model decouples the zero Kelvin energies from the DFT calculations and the ML predictions of the temperature-dependent terms. As a consequence, increasing the accuracy of the zero-Kelvin enthalpies, e.g., by means of a higher level of theory, would yield an immediate improvement of the model accuracy.
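
The link between enthalpy errors and reduction-temperature errors can be made explicit with a short derivation. As a sketch, assume that both formation free energies (per O2 molecule) are linear in temperature over the range of interest; the symbols \(H_{\mathrm{ox}}\), \(H_{\mathrm{CO}}\), \(s_{\mathrm{ox}}\), and \(s_{\mathrm{CO}}\) for the intercepts and slopes are introduced here for illustration only.

\[ \Delta_{\mathrm{f}}G_{\mathrm{ox}}(T) \approx H_{\mathrm{ox}} + s_{\mathrm{ox}}\,T, \qquad \Delta_{\mathrm{f}}G_{\mathrm{CO}}(T) \approx H_{\mathrm{CO}} + s_{\mathrm{CO}}\,T \]

Equating the two lines gives the reduction temperature,

\[ T_{\mathrm{red}} = \frac{H_{\mathrm{CO}} - H_{\mathrm{ox}}}{s_{\mathrm{ox}} - s_{\mathrm{CO}}} . \]

If the slopes are exact and only the oxide intercept carries an error \(\delta H_{\mathrm{ox}}\), the reduction temperature shifts by

\[ \delta T_{\mathrm{red}} = -\frac{\delta H_{\mathrm{ox}}}{s_{\mathrm{ox}} - s_{\mathrm{CO}}} . \]

Since the oxide line has a positive slope and the CO line a negative one, the denominator is positive and the temperature error is directly proportional to the enthalpy error. For a purely illustrative slope difference of 0.36 kJ mol⁻¹ K⁻¹, an enthalpy error of 10 kJ mol⁻¹ would shift the predicted reduction temperature by about 28 K.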

This is further corroborated by a baseline ML model combining the experimental zero-Kelvin formation enthalpies with the ML-predicted free-energy slopes. This baseline model, which by construction does not exhibit the DFT errors at zero Kelvin, yields indeed an improved accuracy reducing the MAE and RMSE of the oxide reduction temperatures from 64 K and 78 K to 52 K and 65 K, respectively. An overview of the LOOCV error estimates of all discussed models is compiled in Supplementary Table 4. Since the feature vector of the ML model contains information derived from DFT, we would expect even further improvements in the predictions when using more accurate first-principles methods. Together, this test indicates that the accuracy of the hybrid ML model can still substantially improve if a more accurate electronic-structure method becomes available.

A limitation of our model arises from the fact that phase transitions were only implicitly included in the reference data, i.e., the model is not aware of the melting points of the base metals even though some of the metals melt well below the reduction temperature of their oxides. This is not a deficiency of the model for the prediction of reduction free energies and reduction temperatures. However, we believe that models for other temperature-dependent processes can be built following the same principles that we put forward here but training with explicit phase information, i.e., by distinguishing between different solid, liquid, and gas phases. We further note that an extension of our model to compositions with a greater number of species is straightforward, since the feature vector entering the ML model is built on averaging species-specific quantities. The description of disordered and eutectic systems, however, might require the incorporation of additional terms in the model, such as the entropy of mixing. We also expect that the ML approach can be improved further by including terms that can capture other sources of temperature-dependent contributions to the free energy, e.g., configurational, electronic, or magnetic entropy.

In conclusion, we demonstrated that zero-Kelvin first-principles calculations can be augmented with ML models trained on experimental free energy slopes to facilitate the accurate yet computationally efficient prediction of high-temperature materials properties. As one example with relevance for the chemical industry, we applied this concept to the pyrometallurgical reduction of 38 binary and ternary metal oxides, showing that ML-augmented first-principles calculations can predict oxide reduction temperatures with a mean absolute error of 64 K and correctly describe the temperature dependence of the reaction free energies. We further highlighted the importance of encoding and targeting physical properties that are directly related to the fundamental equations of thermodynamics and serve as a sensible prior to build accurate ML models. The approach is not limited to oxides and could also be applied to other classes of compounds, such as sulfides or nitrides, if at least some experimental reference data is available, since all model features are derived either from first principles (formation energies and bulk moduli), from the crystal structure, or from the periodic table. The proposed ML methodology can serve as a blueprint for the modeling of temperature-dependent materials processes with a manageable computational cost in cases where limited experimental data is available and may ultimately guide the design of novel materials and processes.

Methods

Details of the machine learning models

All ML models were based on Gaussian Process Regression (GPR)21 as implemented in CatLearn32. We built a Gaussian process (GP)

\[ f(X) \sim \mathcal{GP}\left(0, K(X, X)\right) \]

where X defines the set of inputs (xi), f(X) denotes the latent functions, and K(X, X) is an n × n matrix with components k(xi, xj) for a number of n samples in the training or test set and d dimensions of the input space.

The kernel trick is used to translate the input space into feature space with the covariance function \(k(x, x^{\prime})\). The kernel is applied to determine relationships between the descriptor vectors for candidates x and \(x^{\prime}\). We used the radial basis function (RBF) kernel with the form

\[ k(x, x^{\prime}) = \sigma_{\mathrm{c}}^{2} + \sigma_{\mathrm{f}}^{2} \exp\left(-\frac{\lVert x - x^{\prime} \rVert^{2}}{2\ell^{2}}\right) \]

where \(\sigma_{\mathrm{f}}^{2}\) is a scaling factor, \(\ell^{2}\) defines the kernel length scale, and \(\sigma_{\mathrm{c}}^{2}\) is a constant shift. The hyperparameters \(\sigma_{\mathrm{f}}^{2}\) and \(\ell^{2}\) were optimized in each feature dimension of the fingerprint vector, allowing for an anisotropic form of the kernel. This adds automatic relevance determination (ARD) capability to our model.
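
The effect of an anisotropic kernel can be seen in a minimal sketch. The function below is an assumption-laden simplification, not the CatLearn implementation: it uses one length scale per feature dimension but a single amplitude `sigma_f`, and the name `rbf_ard_kernel` and all numbers are hypothetical.

```python
import math
from typing import Sequence


def rbf_ard_kernel(x: Sequence[float], x_prime: Sequence[float],
                   sigma_f: float, length_scales: Sequence[float],
                   sigma_c: float = 0.0) -> float:
    """RBF kernel with a separate length scale for each feature dimension."""
    if not (len(x) == len(x_prime) == len(length_scales)):
        raise ValueError("x, x_prime and length_scales must have equal length")
    # Each squared difference is scaled by its own length scale.
    scaled_sq_dist = sum(((a - b) / ell) ** 2
                         for a, b, ell in zip(x, x_prime, length_scales))
    return sigma_c ** 2 + sigma_f ** 2 * math.exp(-0.5 * scaled_sq_dist)


# Two points that differ only in the second feature.
x_a, x_b = (0.0, 0.0), (0.0, 5.0)

# Short length scale: the second feature matters, similarity is almost zero.
k_relevant = rbf_ard_kernel(x_a, x_b, sigma_f=1.0, length_scales=(1.0, 1.0))

# Very long length scale: the second feature is effectively switched off.
k_irrelevant = rbf_ard_kernel(x_a, x_b, sigma_f=1.0, length_scales=(1.0, 1.0e6))
```

A length scale that grows very large during optimization therefore signals a feature that contributes little to the prediction, which is the sense in which such a kernel performs relevance determination.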

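In the following, we refer to X and X* as the accumulation of the feature vectors of the candidates in the training and test sets, respectively. The conditional distribution of the GP is given by the mean

\[ \mu(X_{*}) = K(X_{*}, X)\, K_{y}^{-1}\, \mathbf{y} \]

and covariance

\[ \Sigma(X_{*}) = K(X_{*}, X_{*}) - K(X_{*}, X)\, K_{y}^{-1}\, K(X, X_{*}) \]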

where Ky = K(X, X) + ϵ²I is the n × n covariance matrix for the noisy target values y and noise level ϵ. The variance for a new data point (x*) obtained from the training data (X), which is used to quantify the uncertainty of the process, is given by

where the n × 1 covariance vectors between new data points and the training data xi ∈ X are given by \(\mathbf{k}(\mathbf{x}_{*}) = [K(\mathbf{x}_{*}, \mathbf{x}_{1}), \ldots, K(\mathbf{x}_{*}, \mathbf{x}_{n})]^{\mathrm{T}}\). The predicted uncertainty is then given by \(\sigma(\mathbf{x}_{*})\). The first term applies the predicted noise to the uncertainty with xλ being the optimized regularization strength for the training data. We chose as initial hyperparameters [σc, σf, ℓ] = (1.0, 1.0, 1.0), with bounds on the noise level ϵ ∈ [1 × 10⁻³, 1 × 10⁻¹], and optimized the hyperparameters by maximizing the log marginal likelihood

\[ \log p(\mathbf{y} \mid X, \theta) = -\frac{1}{2}\, \mathbf{y}^{\mathrm{T}} K_{y}^{-1} \mathbf{y} - \frac{1}{2} \log \lvert K_{y} \rvert - \frac{n}{2} \log 2\pi \]

where θ denotes the whole set of hyperparameters (σc, σf, ℓ, and ϵ). The hyperparameter optimization was performed using the Truncated Newton Constrained (TNC) method33 as implemented in SciPy34.
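The regression and hyperparameter optimization described above can be sketched with NumPy and SciPy. The sketch uses the textbook GP expressions (ref. 21): posterior mean \(K(X_{*},X)K_{y}^{-1}\mathbf{y}\), posterior covariance \(K(X_{*},X_{*}) - K(X_{*},X)K_{y}^{-1}K(X,X_{*})\), and log marginal likelihood \(-\tfrac{1}{2}\mathbf{y}^{\mathrm{T}}K_{y}^{-1}\mathbf{y} - \tfrac{1}{2}\log |K_{y}| - \tfrac{n}{2}\log 2\pi\). It is not the CatLearn implementation. The isotropic kernel, the initial noise level of 10⁻², and the bounds on σc, σf, and ℓ are assumptions made for illustration. Only the noise bounds and the initial values of σc, σf, and ℓ are taken from the text.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve
from scipy.optimize import minimize


def rbf(A, B, sigma_c, sigma_f, ell):
    """Isotropic RBF kernel plus constant shift (assumed form)."""
    d2 = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return sigma_c**2 + sigma_f**2 * np.exp(-0.5 * d2 / ell**2)


def _factorize(theta, X):
    """Cholesky factorization of K_y = K(X, X) + eps^2 I."""
    sigma_c, sigma_f, ell, eps = theta
    K_y = rbf(X, X, sigma_c, sigma_f, ell) + eps**2 * np.eye(len(X))
    return cho_factor(K_y, lower=True)


def neg_log_marginal_likelihood(theta, X, y):
    """Negative log marginal likelihood for theta = (sigma_c, sigma_f, ell, eps)."""
    chol = _factorize(theta, X)
    alpha = cho_solve(chol, y)
    log_det = 2.0 * np.sum(np.log(np.diag(chol[0])))
    return 0.5 * (y @ alpha) + 0.5 * log_det + 0.5 * len(y) * np.log(2.0 * np.pi)


def fit_hyperparameters(X, y, theta0=(1.0, 1.0, 1.0, 1e-2)):
    """Maximize the log marginal likelihood with SciPy's bounded TNC method."""
    # Noise bounds follow the text; the other bounds are illustrative assumptions.
    bounds = [(1e-2, 1e2)] * 3 + [(1e-3, 1e-1)]
    result = minimize(neg_log_marginal_likelihood, np.array(theta0),
                      args=(X, y), method="TNC", bounds=bounds)
    return result.x


def predict(theta, X, y, X_star):
    """Posterior mean and latent variance at the test inputs X_star."""
    sigma_c, sigma_f, ell, eps = theta
    chol = _factorize(theta, X)
    K_star = rbf(X_star, X, sigma_c, sigma_f, ell)
    mean = K_star @ cho_solve(chol, y)
    cov = rbf(X_star, X_star, sigma_c, sigma_f, ell) - K_star @ cho_solve(chol, K_star.T)
    # Clip tiny negative values caused by round-off before reporting variances.
    return mean, np.clip(np.diag(cov), 0.0, None)
```

The returned variance is that of the latent function. A noise contribution, such as the first term mentioned in the text, would be added on top of it to obtain the uncertainty of a noisy observation.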

Model validation

The predictive power of our ML models was validated using three different techniques to ensure that the models are transferable to unseen data and do not exhibit overfitting. First, all reported error estimates were obtained from leave-one-out cross-validation (LOOCV), which is a standard method for assessing the generalization ability of models. This means that, for each of the 38 oxides, a GPR model was trained on a data set containing only data from the other 37 oxides. The error estimate was then determined by evaluating the model for the oxide that was left out. Thus, LOOCV establishes the transferability of the models to unseen data. Second, k-fold cross-validation was employed to detect correlations of the reference samples with each other by using test sets of increasing size. The results from this analysis confirm the error estimates from LOOCV and do not indicate any issues with the data set. See Supplementary Figs. 1 and 3 for further details. Finally, we varied the noise parameter of the GPR models over four orders of magnitude to determine whether the models were overfitted and reproduced unwanted information (such as noise) from the reference data or whether they were able to robustly fit statistically noisy observations from the reference data. As discussed in more detail in the Supplementary Methods section and shown in Supplementary Figs. 5 and 6, the goodness-of-fit of the models does not change significantly with the magnitude of the noise parameter, further indicating that the models do not suffer from overfitting. Based on these three tests, the ML models are robust and exhibit good transferability.
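The leave-one-oxide-out procedure can be sketched as follows, assuming that every oxide contributes several samples (for example, free energies at different temperatures), all of which are held out together. The helper names `leave_one_group_out` and `loocv_errors` are hypothetical, and the `fit` and `predict` callables stand in for any regression model. This is an illustration of the splitting logic, not the validation code from Supplementary Software 1.

```python
import numpy as np


def leave_one_group_out(groups):
    """Yield (label, train_idx, test_idx), holding out one group at a time."""
    groups = np.asarray(groups)
    for label in np.unique(groups):
        test_idx = np.flatnonzero(groups == label)
        train_idx = np.flatnonzero(groups != label)
        yield label, train_idx, test_idx


def loocv_errors(X, y, groups, fit, predict):
    """Mean absolute error for each held-out group (e.g., each oxide)."""
    errors = {}
    for label, train_idx, test_idx in leave_one_group_out(groups):
        # Train only on samples from the other groups.
        model = fit(X[train_idx], y[train_idx])
        residual = predict(model, X[test_idx]) - y[test_idx]
        errors[str(label)] = float(np.mean(np.abs(residual)))
    return errors
```

Grouping by oxide rather than by individual sample prevents data from the held-out compound from entering the training set, which is what makes the resulting error an estimate for unseen oxides.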

Model selection

In addition to GPR, we confirmed that other regression models can also be successfully trained on the reference data. The accuracies (from LOOCV) obtained with a number of different regression models are discussed in the Supplementary Methods section, and the errors are compiled in Supplementary Table 5. As seen in the table, the models achieve accuracies that are overall similar to that of the GPR model, although the maximal errors are significantly larger, except for the three linear models (linear, ridge, and LASSO regression). Based on this test, we decided to proceed with Gaussian process regression, since it provides an uncertainty estimate for its predictions (shown as error bars in Fig. 2).

Density functional theory (DFT) calculations

DFT calculations were performed using the Vienna Ab initio Simulation Package (VASP)35,36 and the Strongly Constrained and Appropriately Normed (SCAN) exchange–correlation functional37 with the rVV10 dispersion correction scheme38. The energy cutoff was 600 eV, and the Brillouin zone was sampled using the automatic k-mesh generation method implemented in VASP with 30 subdivisions in each direction. We used the pseudopotentials Li (s1 p0), Na (p6 s1), Mg (s2 p0), Al (s2 p1), Si (s2 p2), K (3s 3p 4s), Ca (3s 3p 4s), Ti (d3 s1), V (p6 d4 s1), Cr (d5 s1), Mn (d6 s1), Fe (3p d7 s1), Co (d8 s1), Ni (d9 s1), Cu (d10 p1), and Zn (d10 p2) included in VASP version 5.4.

The convergence criterion for the electronic self-consistent cycle was fixed at 10⁻⁵ eV. Bulk structure optimizations were restarted until the optimizations required at most five ionic steps to ensure basis-set consistency with changing cell size and shape. For the d bands of V, Cr, Mn, Co, and Ni in their respective oxides, a Hubbard-U39,40 correction was used to counteract the self-interaction error and correct the oxide formation energies. The U values were fitted to the experimental formation energy values41 from the NIST-JANAF28 and Cambridge DoITPoMS29,30 thermodynamic tables as described in detail in the Supplementary Methods section and visualized in Supplementary Fig. 7. We applied the following U values: V (0.7 eV), Cr (1.5 eV), Mn (0.5 eV), Co (0.3 eV), and Ni (1.5 eV).

Phonon corrections were calculated using the Phonons module of the Atomic Simulation Environment (ASE)42 package. We used 2 × 2 × 2 supercells for calculating vibrational normal modes using the finite-displacement method22.
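For orientation, the way phonon energies enter a free-energy correction can be sketched with the textbook harmonic expression \(F_{\mathrm{vib}}(T) = \sum_{i}[\tfrac{1}{2}\hbar\omega_{i} + k_{\mathrm{B}}T\ln(1 - e^{-\hbar\omega_{i}/k_{\mathrm{B}}T})]\). This is a generic illustration under the harmonic approximation and is not taken from the ASE Phonons module or from our workflow. The function name and the example mode energies in the usage note are hypothetical.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def harmonic_vibrational_free_energy(mode_energies_ev, temperature):
    """Harmonic vibrational free energy (eV) from phonon energies hbar*omega (eV)."""
    if any(e <= 0.0 for e in mode_energies_ev):
        raise ValueError("mode energies must be positive (no zero or imaginary modes)")
    zero_point = 0.5 * sum(mode_energies_ev)
    if temperature <= 0.0:
        # At 0 K only the zero-point energy remains.
        return zero_point
    kt = K_B_EV * temperature
    # log1p(-exp(-x)) evaluates ln(1 - exp(-x)) accurately for large x.
    thermal = kt * sum(math.log1p(-math.exp(-e / kt)) for e in mode_energies_ev)
    return zero_point + thermal
```

Calling `harmonic_vibrational_free_energy([0.02, 0.05, 0.08], 1500.0)` returns a value below the zero-point energy of 0.075 eV, reflecting the increasingly negative vibrational entropy term −TS at high temperature.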

Entropy contributions

The discussion of entropy effects above is limited to vibrational entropy, which is intuitively the largest entropy contribution for the considered oxide compounds at high temperature. For solid oxide solutions, the configurational entropy contributions to the free energy, −TΔSmix, can also become significant and are the origin of the stability of high-entropy oxides43,44 and may determine the ground-state phase45. The entropy of mixing of an ideal solution is \(\Delta S_{\mathrm{mix}} = -k_{\mathrm{B}} \sum_{i=1}^{N} x_{i} \ln x_{i}\), where kB is Boltzmann’s constant, xi is the concentration of species i, and N is the number of species that share the same sublattice of the crystal structure. If it is known that a given oxide composition forms a solid solution, the contribution from the mixing entropy can be included analytically in the free energy of formation either on the level of first-principles theory or on top of the ML models. In case it is not known whether a composition is disordered or ordered, the tendency to disorder can be estimated based on first-principles calculations46 of special quasirandom structures47.
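The ideal mixing entropy and the resulting free-energy contribution −TΔSmix are simple to evaluate. The following minimal sketch gives values per site of the shared sublattice in eV. The function names are hypothetical and the code is not part of GibbsML.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def ideal_mixing_entropy(concentrations):
    """Ideal-solution mixing entropy per sublattice site, in eV/K."""
    if any(x < 0.0 for x in concentrations):
        raise ValueError("concentrations must be non-negative")
    if abs(sum(concentrations) - 1.0) > 1e-8:
        raise ValueError("concentrations must sum to 1")
    # Terms with x = 0 contribute nothing, since x * ln(x) -> 0 as x -> 0.
    return -K_B_EV * sum(x * math.log(x) for x in concentrations if x > 0.0)


def mixing_free_energy(concentrations, temperature):
    """Configurational free-energy contribution -T * dS_mix, in eV per site."""
    return -temperature * ideal_mixing_entropy(concentrations)
```

For an equimolar mixture of five cations on one sublattice, the entropy is kB ln 5 per site, so `mixing_free_energy([0.2] * 5, 1500.0)` is roughly −0.21 eV per cation site. This illustrates why the term can become significant at smelting temperatures.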

Data availability

All experimental reference data is contained in Supplementary Table 1. Data from first-principles (SCAN) calculations that were generated in this study and enter the compound fingerprints are provided as Supplementary Software 1. Reference trained models have been deposited on GitHub49 and are publicly available from https://github.com/atomisticnet/gibbsml.

Code availability

The ML methodology described in the present work was implemented in a Python package named GibbsML that provides the vectorized compound fingerprints and can be used to build regression models based on GPR as implemented in CatLearn32. GibbsML further makes use of the Atomic Simulation Environment (ASE)42 and the Python Materials Genomics (pymatgen) package48. The source code generated in this study has been deposited on GitHub49 at https://github.com/atomisticnet/gibbsml and is publicly available under the terms of the MIT license. Additional source code that implements the model validation is provided as Supplementary Software 1. A web application for predicting free-energy curves and Ellingham diagrams is hosted at http://ellingham.energy-materials.org. Note that the web application interfaces with the Materials Project database50 to extract first-principles data for the compound fingerprints, so that predictions are not limited to the scope of our own (SCAN-based) reference database.

References

Davis, S. J. et al. Net-zero emissions energy systems. Science 360, eaas9793 (2018).

Arbabzadeh, M., Sioshansi, R., Johnson, J. X. & Keoleian, G. A. The role of energy storage in deep decarbonization of electricity production. Nat. Commun. 10, 1–11 (2019).

Sonter, L. J., Dade, M. C., Watson, J. E. & Valenta, R. K. Renewable energy production will exacerbate mining threats to biodiversity. Nat. Commun. 11, 1–6 (2020).

Chen, L.-Q. et al. Design and discovery of materials guided by theory and computation. npj Comput. Mater. 1, 1–2 (2015).

Ludwig, A. Discovery of new materials using combinatorial synthesis and high-throughput characterization of thin-film materials libraries combined with computational methods. npj Comput. Mater. 5, 70 (2019).

Birks, N., Meier, G. H. & Pettit, F. S. Introduction to the High-Temperature Oxidation of Metals (Cambridge University Press, 2012).

Esmaily, M. et al. High-temperature oxidation behaviour of AlxFeCrCoNi and AlTiVCr compositionally complex alloys. npj Mater. Degrad. 4, 1–10 (2020).

Norgate, T. & Haque, N. Energy and greenhouse gas impacts of mining and mineral processing operations. J. Clean. Prod. 18, 266–274 (2010).

Forrest, D. & Szekely, J. Global warming and the primary metals industry. JOM 43, 23–30 (1991).

Zheng, X. et al. A mini-review on metal recycling from spent lithium ion batteries. Engineering 4, 361–370 (2018).

Yin, H. & Xing, P. Recycling of Spent Lithium-Ion Batteries 57–83 (Springer, 2019).

Lukas, H., Fries, S. G. & Sundman, B. Computational Thermodynamics: The Calphad Method (Cambridge University Press, 2007).

Bale, C. W. et al. FactSage thermochemical software and databases—recent developments. Calphad 33, 295–311 (2009).

Jung, I.-H. & Ende, M.-A. V. Computational thermodynamic calculations: FactSage from CALPHAD thermodynamic database to virtual process simulation. Metall. Mater. Trans. B 51, 1851–1874 (2020).

Jain, A., Shin, Y. & Persson, K. A. Computational predictions of energy materials using density functional theory. Nat. Rev. Mater. 1, 1–13 (2016).

Editorial, Boosting materials modelling. Nat. Mater. 15, 365 https://doi.org/10.1038/nmat4619 (2016).

Phillpot, S. Multiscale Phenomena in Plasticity: From Experiments to Phenomenology, Modelling and Materials Engineering 271–280 (Springer, 2000).

Butler, K. T., Davies, D. W., Cartwright, H., Isayev, O. & Walsh, A. Machine learning for molecular and materials science. Nature 559, 547–555 (2018).

Sanchez-Lengeling, B. & Aspuru-Guzik, A. Inverse molecular design using machine learning: Generative models for matter engineering. Science 361, 360–365 (2018).

Yao, Z. et al. Inverse design of nanoporous crystalline reticular materials with deep generative models. Nat. Mach. Intell. 3, 76–86 (2021).

Williams, C. K. & Rasmussen, C. E. Gaussian Processes for Machine Learning 2 (MIT Press, 2006).

Kresse, G., Furthmüller, J. & Hafner, J. Ab initio force constant approach to phonon dispersion relations of diamond and graphite. Europhys. Lett. 32, 729 (1995).

Ma, D., Grabowski, B., Körmann, F., Neugebauer, J. & Raabe, D. Ab initio thermodynamics of the CoCrFeMnNi high entropy alloy: Importance of entropy contributions beyond the configurational one. Acta Mater. 100, 90–97 (2015).

Körmann, F. et al. Free energy of bcc iron: integrated ab initio derivation of vibrational, electronic, and magnetic contributions. Phys. Rev. B 78, 033102 (2008).

Ozoliņš, V., Sadigh, B. & Asta, M. Effects of vibrational entropy on the Al-Si phase diagram. J. Phys.: Condens. Matter 17, 2197–2210 (2005).

Norgate, T. E., Jahanshahi, S. & Rankin, W. J. Assessing the environmental impact of metal production processes. J. Clean. Prod. 15, 838–848 (2007).

Ellingham, H. J. T. Reducibility of oxides and sulfides in metallurgical processes. J. Soc. Chem. Ind. 63, 125–133 (1944).

Chase Jr, M. W. NIST-JANAF thermochemical tables. J. Phys. Chem. Ref. Data Monogr. 9, 1951 (1998).

Turkdogan, E. Physical Chemistry of High Temperature Technology (Academic Press, 1980).

Dissemination of IT for the Promotion of Materials Science (DoITPoMS) (Cambridge University, 2020) https://www.doitpoms.ac.uk.

Allanore, A. Electrochemical engineering for commodity metals extraction. Electrochem. Soc. Interface 26, 63–68 (2017).

Hansen, M. H. et al. An atomistic machine learning package for surface science and catalysis. Preprint at arXiv https://arxiv.org/abs/1904.00904 (2019).

Grippo, L., Lampariello, F. & Lucidi, S. A truncated Newton method with nonmonotone line search for unconstrained optimization. J. Optim. Theory Appl. 60, 401–419 (1989).

Virtanen, P. et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169 (1996).

Kresse, G. & Joubert, D. From ultrasoft pseudopotentials to the projector augmented-wave method. Phys. Rev. B 59, 1758 (1999).

Sun, J., Ruzsinszky, A. & Perdew, J. P. Strongly constrained and appropriately normed semilocal density functional. Phys. Rev. Lett. 115, 036402 (2015).

Peng, H., Yang, Z.-H., Perdew, J. P. & Sun, J. Versatile van der Waals density functional based on a meta-generalized gradient approximation. Phys. Rev. X 6, 041005 (2016).

Liechtenstein, A., Anisimov, V. I. & Zaanen, J. Density-functional theory and strong interactions: orbital ordering in Mott-Hubbard insulators. Phys. Rev. B 52, R5467 (1995).

Dudarev, S., Botton, G., Savrasov, S., Humphreys, C. & Sutton, A. Electron-energy-loss spectra and the structural stability of nickel oxide: an LSDA+ U study. Phys. Rev. B 57, 1505 (1998).

Artrith, N., Torres, J. A. G., Urban, A. & Hybertsen, M. S. Data-driven approach to parameterize SCAN+U for an accurate description of 3d transition metal oxide thermochemistry. Preprint at arXiv https://arxiv.org/abs/2102.01131 (2021).

Larsen, A. H. et al. The atomic simulation environment—a Python library for working with atoms. J. Phys.: Condens. Matter 29, 273002 (2017).

Bérardan, D., Franger, S., Dragoe, D., Meena, A. K. & Dragoe, N. Colossal dielectric constant in high entropy oxides. Phys. Status Solidi RRL 10, 328–333 (2016).

Sarkar, A. et al. High entropy oxides for reversible energy storage. Nat. Commun. 9, 3400 (2018).

Kwon, D.-H. et al. The impact of surface structure transformations on the performance of Li-excess cation-disordered rocksalt cathodes. Cell Rep. Phys. Sci. 1, 100187 (2020).

Urban, A., Matts, I., Abdellahi, A. & Ceder, G. Computational design and preparation of cation-disordered oxides for high-energy-density Li-ion batteries. Adv. Energy Mater. 6, 1600488 (2016).

Zunger, A., Wei, S.-H., Ferreira, L. & Bernard, J. E. Special quasirandom structures. Phys. Rev. Lett. 65, 353 (1990).

Ong, S. P. et al. Python Materials Genomics (pymatgen): a robust, open-source python library for materials analysis. Comput. Mater. Sci. 68, 314–319 (2013).

Garrido Torres, J. A., Gharakhanyan, V., Artrith, N., Hoffmann Eegholm, T. & Urban, A. Augmenting zero-Kelvin quantum mechanics with machine learning for the prediction of chemical reactions at high temperatures (this paper), https://github.com/atomisticnet/gibbsml (2021).

Jain, A. et al. The Materials Project: a materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

Acknowledgements

This work was supported by the National Science Foundation under Grant No. DMR-1940290 (Harnessing the Data Revolution, HDR). We acknowledge computing resources from Columbia University’s Shared Research Computing Facility project, which is supported by NIH Research Facility Improvement Grant 1G20RR030893-01, and associated funds from the New York State Empire State Development, Division of Science Technology and Innovation (NYSTAR) Contract C090171, both awarded April 15, 2010. The authors thank Mark S. Hybertsen, Dallas R. Trinkle, and Snigdhansu Chatterjee for helpful discussions.

Author information

Contributions

A.U. conceived and planned the project and supervised all aspects of the research. J.A.G.T., V.G., and A.U. developed the concept of the machine-learning methodology. J.A.G.T. implemented the method, compiled the reference data, performed the DFT calculations, constructed and validated the models, and analyzed the resulting data. The Hubbard-U parameters were determined by N.A. and J.A.G.T. N.A. also contributed to the conceptual development of the reaction free-energy formalism and the discussion of entropy contributions. T.H.E. developed the web application together with J.A.G.T. The manuscript was written by J.A.G.T., N.A., and A.U. and was read and revised by all authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Garrido Torres, J.A., Gharakhanyan, V., Artrith, N. et al. Augmenting zero-Kelvin quantum mechanics with machine learning for the prediction of chemical reactions at high temperatures. Nat Commun 12, 7012 (2021). https://doi.org/10.1038/s41467-021-27154-2

