Abstract

Many complex systems operating far from the equilibrium exhibit stochastic dynamics that can be described by a Langevin equation. Inferring Langevin equations from data can reveal how transient dynamics of such systems give rise to their function. However, dynamics are often inaccessible directly and can be only gleaned through a stochastic observation process, which makes the inference challenging. Here we present a non-parametric framework for inferring the Langevin equation, which explicitly models the stochastic observation process and non-stationary latent dynamics. The framework accounts for the non-equilibrium initial and final states of the observed system and for the possibility that the system’s dynamics define the duration of observations. Omitting any of these non-stationary components results in incorrect inference, in which erroneous features arise in the dynamics due to non-stationary data distribution. We illustrate the framework using models of neural dynamics underlying decision making in the brain.

Similar content being viewed by others

Introduction

Many complex systems generate coherent macroscopic behavior that can be expressed as simple laws. Such systems are commonly described by Langevin dynamics, in which deterministic forces define persistent collective trends and noise captures fast microscopic interactions1. Langevin equations are used to model stochastic evolution of complex systems such as neural networks2,3,4,5, motile cells6, swarming animals7, carbon nanotubes8, financial markets9, or climate dynamics10. While such systems can be readily observed in experiments or microscopic simulations, the analytical form of the Langevin equation usually cannot be easily derived from microscopic models or physical principles. The inference of Langevin equations from data is therefore crucial to enable efficient analysis, prediction, and optimization of complex systems.

Numerous methods were proposed for inferring Langevin dynamics from stochastic trajectories11, e.g., by estimating moments of local trajectory increments1,12,13,14,15,16,17. However, in many complex systems, the trajectories cannot be observed directly, but are only gleaned from a stochastic observation process that depends on the latent Langevin dynamics18. For example, spikes recorded from neurons in the brain form stochastic point processes with statistics controlled by the collective dynamics of the surrounding network2,19,20. Similarly, the dynamics of a protein are observed through photons emitted by fluorescent dyes tagging the protein in single-molecule microscopy experiments21,22,23. The Poisson noise inherent in spike or photon observations makes the inference of the underlying Langevin dynamics challenging.

This challenge can be addressed by modeling data as a doubly stochastic processes, in which latent stochastic dynamics drive another stochastic process modeling the observations24. The inference with latent dynamical models is data efficient as it integrates information along the entire latent trajectory, but it may be sensitive to the data distribution. Previous work only considered the inference of latent Langevin dynamics for equilibrium systems with the steady-state data distribution2,23. Whether these methods extend to non-equilibrium systems has not been tested. Yet, all living systems and physical systems that perform computations operate far from equilibrium, where transient dynamics play a key role. The inference of non-stationary Langevin dynamics from stochastic observations remains an important open problem.

Here we present an inference framework for latent Langevin dynamics which accounts for non-equilibrium statistics of latent trajectories. We show that modeling non-stationary components is critical for accurate inference, and their omission leads to biases in the estimated Langevin forces. As a working example, we model non-stationary dynamics of neural spiking activity during perceptual decision making, a process of transforming a sensory stimulus into a categorical choice25. The inference of the underlying dynamics from spikes is notoriously hard26, and analyses with simple parametric models result in controversial conclusions27,28,29,30. Our framework accurately infers the Langevin dynamics from spike data generated by competing models of decision making proposed previously. Our framework can be extended to different stochastic observation processes and is broadly applicable for the inference of the Langevin dynamics in non-stationary complex systems.

Results

Inference framework

We consider the inference of Langevin dynamics

where F(x) is the deterministic force, and ξ(t) is a white Gaussian noise \(\langle \xi (t)\rangle =0,\langle \xi (t)\xi (t^{\prime} )\rangle =\delta (t-t^{\prime} )\). We focus on one-dimensional (1D) Langevin dynamics representing a decision-making process on the domain x ∈ [−1; 1]. In 1D, the force derives from the potential function F(x) = −dΦ(x)/dx. The Langevin trajectories x(t) are latent, i.e. only accessible through stochastic observations Y(t). We work with observations that follow an inhomogeneous Poisson process with time-varying intensity f(x(t)) that depends on the latent trajectory x(t) via a function f(x) (Fig. 1a). Poisson noise models the variability of spike generation in a neuron.

a Latent dynamics are governed by the Langevin equation Eq. (1) with a deterministic potential Φ(x) and a Gaussian white noise with magnitude D. On each trial, the latent trajectory starts at the initial state x(t0) (blue dot) sampled from the probability density p0(x). When the trajectory reaches the domain boundaries (green dashed lines) for the first time, the observations can either terminate (orange dot) or continue depending on the experiment design. The latent Langevin dynamics are only accessible through stochastic observations, e.g., spikes that follow an inhomogeneous Poisson process with time-varying intensity that depends on the latent trajectory x(t) via the firing-rate function f(x). b Graphical diagram of the inference framework. Stochastic observations \({y}_{{t}_{i}}\) (gray circles) depend on the latent states \({x}_{{t}_{i}}\) (white circles), the arrows represent statistical dependencies. The absorption event (orange circle) indicates that observations terminate when the latent trajectory hit a boundary. The framework includes three non-stationary components: the initial state distribution p0(x) (blue box), the boundary conditions (reflecting or absorbing) for the time-propagation of latent dynamics (green box), and the absorption operator (orange box).

The non-stationary data Y(t) arise in non-equilibrium systems that perform computations. Such systems start their operation in a specific initial state and finish in a terminal state representing the outcome of the computation. The initial and terminal states are fundamentally different from the equilibrium state of the system. An example of such non-equilibrium computation is neural dynamics underlying perceptual decision making in the brain. Each decision process begins when a sensory stimulus is presented to a subject and terminates when the subject commits to a choice. Neural activity transiently evolves from the initial state at the stimulus onset until a choice is made, and different choices correspond to different terminal states of neural activity25. In experiment, multiple realizations of the decision process can be recorded under the same conditions, called trials. The statistics of trajectories x(t) across trials differs from the steady-state distribution.

To model non-stationary dynamics, we introduce three components into our framework (Fig. 1a, b). First, p0(x) models the distribution of the initial latent state at the trial start. On each trial, the latent dynamics evolve according to Eq. (1) from the initial condition x(t0) = x0, where x0 is sampled from p0(x). The distribution p0(x) is latent and needs to be inferred from data. The two other components account for the mechanism terminating the observation on each trial, which can be controlled either by the experimenter or by the system itself. In decision-making experiments, these possibilities correspond to fixed-duration or reaction-time task designs25. In a fixed-duration task, the subject reports the choice after a fixed time period set by the experimenter. Even if the neural trajectory reaches a state representing a choice (i.e. a decision boundary) at an earlier time point, the deliberation process continues. Thus, the latent trajectory can terminate at any state at the trial end (Fig. 2a). In contrast, in a reaction-time task, the subject reports the choice as soon as the neural trajectory reaches a decision boundary for the first time. Thus trials have variable durations defined by the neural dynamics itself, and the latent trajectory always terminates at one of the decision boundaries at the trial end (Fig. 2b, c). To model these alternative scenarios, we impose appropriate boundary conditions for the Langevin dynamics Eq. (1): reflecting for the fixed-duration and absorbing for the reaction-time tasks. In addition, we derive an absorption operator enforcing the trajectory termination at a decision boundary in the reaction-time task (Fig. 1b).

Latent Langevin dynamics with: a a linear potential and reflecting boundaries; b a linear potential and absorbing boundaries; and c a non-linear potential and absorbing boundaries (first column). For each dynamics, nine example trajectories are displayed (color gradient, second column). In all cases, the initial latent state x(t0) (blue dots, second column) is sampled from the same density p0(x) (third column, t = 0). The time-propagation of the latent probability density \(\widetilde{p}(x,t)\) strongly depends on the potential shape and boundary conditions (third column). With reflecting boundaries (a), the latent trajectories terminate anywhere in the latent space at the trial end, whereas with absorbing boundaries (b, c), the latent trajectories always terminate at the boundaries (orange dots, second column). These qualitative differences in the Langevin dynamics are difficult to discern from stochastic spike data (fourth column, colors correspond to the trajectories in the second column).

The Poisson noise masks distinctions between different types of latent Langevin dynamics. The spike trains appear similar for dynamics with reflecting versus absorbing boundaries (Fig. 2a, b), and with a linear versus non-linear potential (Fig. 2b, c). A non-stationary initial state p0(x) is also not obvious in the spike trains. Distinguishing these qualitatively different dynamics based on spike data is difficult, despite the latent trajectories and the corresponding time-dependent latent probability densities \(\widetilde{p}(x,t)\) (Eq. (5) in Methods section) are different (Fig. 2).

We infer the force potential Φ(x), the noise magnitude D, and the initial distribution p0(x) from stochastic spike data Y(t). The data consists of multiple trials Y(t) = {Yi(t)} (i = 1, 2, . . . n), and for each trial \({Y}_{i}(t)=\{{t}_{0}^{i},{t}_{1}^{i},...,{t}_{{N}_{i}}^{i},{t}_{E}^{i}\}\), where \({t}_{1}^{i},{t}_{2}^{i},...,{t}_{{N}_{i}}^{i}\) are recorded spike times, and \({t}_{0}^{i}\) and \({t}_{{{{{{{{\rm{E}}}}}}}}}^{i}\) are the trial start and end times, respectively. We maximize the data likelihood \({{{{{{{\mathscr{L}}}}}}}}\left[Y(t)| \theta \right]\) with respect to θ = {Φ(x), p0(x), D}. We derive analytical expressions for the variational derivatives of the negative log-likelihood, which we use to update θ using a gradient-descent (GD) algorithm2 (Methods section). The variational derivatives of the potential Φ(x) and p0(x) are continuous functions, which we evaluate numerically on each GD step using a finite basis (Supplementary Note 1). Thus, our method is non-parametric in the sense that we do not specify a parametric form for the functions Φ(x) and p0(x), but use the analytical expressions for their continuous variational derivatives evaluated in a finite basis. The likelihood calculation involves time-propagation of the latent probability density with the operator \(\exp \left(-\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}({t}_{i}-{t}_{i-1})\right)\), where \(\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}\) is a modified Fokker-Planck operator (Eq. (6) in Methods section). The operator \({\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}}\) satisfies either reflecting (\({\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}}_{{{\rm{ref}}}}\), fixed-duration task), or absorbing boundary conditions (\({{\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}}}_{{{{{{{{\rm{abs}}}}}}}}}\), reaction-time task). The absorbing boundary conditions ensure that trajectories reaching a boundary before the trial end do not contribute to the likelihood. In addition, the absorption operator A enforces that the likelihood includes only trajectories terminating on the boundaries at the trial end time tE (Methods section).

Contributions of non-stationary components to the accurate inference

We found that accurate inference of non-stationary Langevin dynamics requires incorporating all three non-stationary components: the initial distribution p0(x), the boundary conditions, and the absorption operator. The necessity of all components for accurate inference is not obvious. Since spike trains generated from stationary versus non-stationary dynamics appear similar (Fig. 2), one could assume that omitting non-stationary components may affect the inference only insignificantly. To demonstrate how each component contributes to the accurate inference, we focus here on inferring the potential Φ(x) from synthetic data with known ground truth, assuming p0(x) and D are provided (we consider simultaneous inference of Φ(x), p0(x), D below). We use 200 trials of spike data generated from the model with a linear ground-truth potential and a narrow initial state distribution (full list of parameters in Supplementary Table 1). We simulated a reaction-time task, so that each trial terminates when the latent trajectory reaches one of the decision boundaries producing a non-stationary distribution of latent trajectories (Fig. 2b).

The inference accurately recovers the Langevin dynamics from these non-stationary spike data when all non-stationary components are taken into account (Fig. 3a). The GD algorithm iteratively increases the model likelihood (decreases the negative log-likelihood). Starting from an unspecific initial guess \({{\Phi }}(x)={{{{{{{\rm{const}}}}}}}}\), the potential shape changes gradually over the GD iterations. After some iterations, the fitted potential closely matches the ground-truth shape while the log-likelihood of the fitted model approaches the log-likelihood of the ground-truth model. The concurrent agreement of the inferred potential and its likelihood with the ground truth indicates the accurate recovery of the Langevin dynamics. At later iterations, the potential shape can deteriorate due to overfitting, and model selection is required for identifying the model that accurately approximates dynamics in the data when the ground truth is not known2 (we consider model selection and uncertainty quantification below).

The spike data are generated from the Langevin dynamics with a linear potential and absorbing boundaries (Fig. 2b). a The inference incorporates the non-equilibrium initial state distribution p0(x), absorbing boundary conditions, and the absorption operator (graphical diagram, inset in the left panel). When the likelihood of the fitted model approaches the likelihood of the ground-truth model (left panel, the relative log-likelihood is \(\left[{{{{{{\mathrm{log}}}}}}}\,{{{{{{{{\mathscr{L}}}}}}}}}_{{{{{{{{\rm{gt}}}}}}}}}-{{{{{{\mathrm{log}}}}}}}\,{{{{{{{\mathscr{L}}}}}}}}\right]/{{{{{{\mathrm{log}}}}}}}\,{{{{{{{{\mathscr{L}}}}}}}}}_{{{{{{{{\rm{gt}}}}}}}}}\)), the inferred potential shape closely matches the ground truth (right panel, colors correspond to the iterations marked with dots on the left panel). b Same as a, but omitting the absorption operator in the inference. The relative log-likelihood is with respect to the likelihood for the ground-truth potential, with the absorption operator omitted in the likelihood calculation for both the ground-truth and fitted potentials. c Same as b, but replacing the absorbing with reflecting boundary conditions in the inference and in the likelihood calculation for both the fitted and ground-truth potentials. d Same as c, but replacing p0(x) with the equilibrium density peq(x) in the inference and in the likelihood calculation for both the fitted and ground-truth potentials. Omitting any of the non-stationary components results in artifacts in the inferred potentials.

To reveal how each non-stationary component contributes to the inference, we replace all components one by one with their stationary counterparts and evaluate the inference quality under these modified conditions. First, we test the importance of the absorption operator by performing the inference with the initial distribution p0(x) and absorbing boundary conditions, but omitting the absorption operator (Fig. 3b). In this scenario, the likelihood includes all trajectories that terminate anywhere in the latent space and do not reach the domain boundaries before the trial end. The inferred potential shows the correct linear slope, but develops a large barrier near the right boundary, where the ground-truth potential is low. This behavior arises since the spurious potential barrier reduces the probability flux through the absorbing boundary and hence increases the model likelihood. Accordingly, the likelihood is lower for the ground-truth potential than for the potential with the spurious barrier when the absorption operator is omitted in the likelihood calculation Eq. (4). The absorption operator corrects for this mismatch by ensuring that only trajectories terminating at the boundaries contribute to the likelihood.

Next, we test the importance of the absorbing boundary conditions using the same non-stationary data. We take into account the initial distribution p0(x), but replace the absorbing with reflecting boundary conditions in the inference (Fig. 3c). In this scenario, all trajectories contribute to the likelihood independent of when and whether they reach the domain boundaries. The inferred potential exhibits a small barrier near the right boundary where the ground-truth potential is low. The probability density of latent trajectories in the data is vanishing at the absorbing boundaries (Fig. 2b), whereas stationary dynamics with reflecting boundaries predict high probability density in the regions where the potential is low (Fig. 2a). Hence, the spurious potential barrier arises to explain the low probability density at the right boundary. Accordingly, the likelihood is higher for the potential with the spurious barrier than for the ground-truth potential when reflecting instead of absorbing boundary conditions are used in the likelihood calculation.

Finally, we test the importance of the initial state distribution p0(x). Using the same non-stationary data, we perform the inference with p0(x) replaced by the equilibrium distribution \({p}_{{{{{{{{\rm{eq}}}}}}}}}(x)\propto \exp (-{{\Phi }}(x))\) under the reflecting boundary conditions (Fig. 3d). Instead of the linear slope, the inferred potential exhibits a flat shallow valley, which accounts for the high density of latent trajectories near the domain center in the data due to non-equilibrium p0(x). The equilibrium dynamics in the ground-truth potential predict lower probability density at the domain center than in the data, hence the likelihood is lower for the ground-truth potential than for the inferred shallow potential when incorrect initial distribution is used in the likelihood calculation. These results demonstrate that all three non-stationary components are critical for the accurate inference of non-stationary Langevin dynamics, and omitting any of them results in incorrect inference that accounts for the non-stationary data statistics by artifacts in the potential shape.

Discovering models of decision-making

To demonstrate that our framework can accurately infer qualitatively different non-stationary dynamics, we perform the inference on synthetic data generated by the alternative models of perceptual decision-making. We consider latent Langevin dynamics corresponding to the ramping and stepping models of decision making proposed previously27. The ramping model assumes that on single trials neural activity evolves gradually towards a decision boundary as a linear drift-diffusion process, which corresponds to a linear potential with a constant slope (Fig. 2b). The stepping model assumes that on single trials neural activity abruptly jumps from the initial to a final state representing a choice, which corresponds to a potential with two barriers where trajectories have to overcome one of the barriers to reach a decision boundary (Fig. 2c). Distinguishing between these alternative models of decision making is difficult with the traditional approach based on parametric model comparisons28.

We generated spike data with the ramping and stepping latent dynamics in a reaction time task (Fig. 2b, c). We choose the potential Φ(x), noise magnitude D, and the initial state distribution p0(x) so that the speed and accuracy of decisions in the model is similar to typical experimental values, and f(x) is chosen to produce realistic firing rates31 (parameters provided in Supplementary Table 1). First, we infer the potential shape Φ(x) with the correct D and p0(x) provided. For both ramping and stepping dynamics, our framework accurately infers the correct potential shape from 200 data trials (a realistic data amount in experiment, Fig. 4a, b). At the iteration when the likelihoods of the fitted and ground-truth model are equal, the inferred potentials are in good agreement with the ground truth, confirming the inference accuracy. The inference accuracy further improves with a larger data amount of 1600 trials.

a The spike data are generated from the Langevin dynamics with a linear potential and absorbing boundaries (Fig. 2b), which corresponds to the ramping model of decision-making dynamics. When the likelihoods of the fitted and ground-truth models are equal (upper panel, colored dots), the inferred potential closely matches the ground-truth potential (lower panel, colors correspond to dots in the upper panel). The inference accuracy improves with more data (teal - 200 trials, purple - 1600 trials). b Same as a, but for the spike data generated from the Langevin dynamics with a non-linear potential with two barriers and absorbing boundaries (Fig. 2c), which corresponds to the stepping model of decision-making dynamics. c Simultaneous inference of the potential Φ(x), the initial state distribution p0(x), and noise magnitude D from the same spike data as in a (400 trials). As the likelihood of the fitted model approaches the likelihood of the ground-truth model (upper left), all fitted components simultaneously approach the ground truth.

Finally, we demonstrate simultaneous inference of all functions governing the non-stationary dynamics Φ(x), p0(x), and D using synthetic data generated from the ramping model (Fig. 4c). We update each of Φ(x), p0(x), and D in turn on successive GD iterations. As the likelihood of the fitted model approaches the likelihood of the ground-truth model, the potential shape, noise magnitude, and the initial state distribution all closely match the ground truth, confirming the accurate inference of a full model of latent non-stationary Langevin dynamics. The inference of p0(x) can be less accurate when the data consist of a few long trials so that the dynamics equilibrate and trajectories contain little information about the initial state. In this quasi-stationary regime, p0(x) does not play an important role and the potential Φ(x) and noise magnitude D that define equilibrium dynamics can be inferred accurately even with an inaccurate inference of p0(x) (Supplementary Fig. 1).

Model selection and uncertainty quantification

So far we validated the inference accuracy by comparing the fitted model and its likelihood with the ground truth. However, in practical applications, the ground truth is unknown, and we need a procedure for selecting the optimal model among many models produced across iterations of the gradient descent. On early iterations, the fitted models miss some features of the correct dynamics (underfitting), whereas on late iterations, the fitted models develop spurious features (overfitting). The model that best captures the correct dynamics is discovered at some intermediate iterations. The standard approach for selecting the optimal model is based on optimizing model’s ability to predict new data (i.e. generalization accuracy), e.g., using cross-validation. However, methods optimizing generalization accuracy cannot reliably identify correct features and avoid spurious features when applied to flexible models2. An alternative approach for model selection is based on directly comparing features of the same complexity discovered from different data samples2. Since true features are the same, whereas noise is different across data samples, the consistency of features inferred from different data samples can separate the true features from noise. Model selection based on feature consistency can reliably identify the correct features for stationary dynamics2. Here we extended this method for the case of non-stationary dynamics (Methods section).

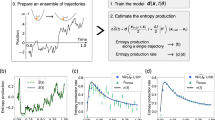

We illustrate the model selection method based on feature consistency using the same synthetic data as in Fig. 3a. We split the full dataset into two halves and optimize the model on each half independently. For each model produced by the gradient descent, we calculate the feature complexity defined as a negative entropy of latent trajectories \({{{{{{{\mathcal{M}}}}}}}}=-S\,[{{\Phi }}(x),D,{p}_{0}(x)]\) (Eq. (14)). For non-stationary dynamics, the entropy depends not only on the potential shape Φ(x) but also on the initial distribution p0(x) (Supplementary Note 6). The feature complexity grows over GD iterations as the model develops more and more structure (Fig. 5a). After the true features are discovered, \({{{{{{{\mathcal{M}}}}}}}}\) exceeds the ground-truth complexity, and further increases of \({{{{{{{\mathcal{M}}}}}}}}\) indicate fitting noise in the training data. The optimal feature complexity \({{{{{{{{\mathcal{M}}}}}}}}}^{* }\) separates the true features from noise. To determine the optimal \({{{{{{{{\mathcal{M}}}}}}}}}^{* }\) when the ground truth is unknown, we compare models of the same complexity discovered from two data halves. For \({{{{{{{\mathcal{M}}}}}}}} \, < \, {{{{{{{{\mathcal{M}}}}}}}}}^{* }\), the models of the same complexity tightly overlap between two data samples (Fig. 5c, left). For \({{{{{{{\mathcal{M}}}}}}}} \, > \, {{{{{{{{\mathcal{M}}}}}}}}}^{* }\), the models of the same complexity diverge, because overfitting patterns are unique for each data sample (Fig. 5c, right). We quantify the overlap of two models using the Jensen-Shannon divergence (JSD) between time-dependent probability densities of their latent trajectories (Eqs. (15) and (16)). For low feature complexities, JSD is small indicating that the true features of the dynamics are consistent between data samples (Fig. 5b, c, left). For higher feature complexities, JSD rises sharply indicating divergence of spurious features between data samples (Fig. 5b, c, right). We define the optimal \({{{{{{{{\mathcal{M}}}}}}}}}^{* }\) as the feature complexity for which JSD reaches a threshold. This procedure returns two overlapping potentials corresponding to \({{{{{{{{\mathcal{M}}}}}}}}}^{* }\), which agree with the ground-truth model on synthetic data (Fig. 5c, middle). We also performed model selection based on feature consistency for stepping and ramping dynamics (Fig. 4a, b) and found that the selected models agree well with the ground truth (Supplementary Fig. 2b, c).

a Feature complexity \({{{{{{{\mathcal{M}}}}}}}}\) increases over GD iterations at a rate that varies across data samples. The ground-truth feature complexity (gray line) is achieved on different iteration for different data samples. b JS divergence between the models discovered from data samples 1 and 2 for each level of the feature complexity. The optimal value \({{{{{{{{\mathcal{M}}}}}}}}}^{* }\) is defined as a maximum feature complexity for which JS divergence does not exceed a fixed threshold (dashed line). The dots correspond to the potential in c. c A pair of fitted potentials at \({{{{{{{\mathcal{M}}}}}}}}\ < \ {{{{{{{{\mathcal{M}}}}}}}}}^{* }\) (left, gray dot in b), \({{{{{{{\mathcal{M}}}}}}}}={{{{{{{{\mathcal{M}}}}}}}}}^{* }\) (middle, orange dot in b), and \({{{{{{{\mathcal{M}}}}}}}}\ > \ {{{{{{{{\mathcal{M}}}}}}}}}^{* }\) (right, teal dot in b) for data samples 1 and 2 (colors correspond to data in a). In all panels the ground-truth model is shown in gray. d JS divergences between models of the same complexity discovered from two data halves for each of 10 bootstrap samples (red line - same data as in b). The same threshold is used to select a pair of optimal models for each bootstrap sample. e Fitted potential (red) is the average of two potentials at optimal \({{{{{{{{\mathcal{M}}}}}}}}}^{* }\) produced by the model selection (middle panel in c). The confidence bounds (shaded area) are obtained as a pointwise 5% and 95% percentile across twenty potentials produced by the model selection on 10 bootstrap samples in d. The ground-truth model is shown in gray.

Practical applications often require quantifying uncertainty of the inferred model, which can be performed via bootstrapping, as we illustrate using the same data as in Fig. 3a. To obtain confidence bounds for the inferred model, we generate ten bootstrap samples by sampling trials randomly with replacement from the set of all trials. For each bootstrap sample, we refit the model and perform model selection using our feature consistency method (Fig. 5d). We then obtain the confidence bounds for the inferred potential by computing a pointwise 5% and 95% percentile across 20 potentials produced by the model selection on ten bootstrap samples (Fig. 5e). As expected, the uncertainty is largest in the regions where the potential is high, i.e. where the density of latent trajectories and hence the amount of spike data are low.

Discussion

Our framework accurately infers non-stationary Langevin dynamics from stochastic observations and accommodates non-additive Poisson observation noise. We demonstrate that accurate inference requires taking into account the non-equilibrium initial and final states that represent the start and outcome of computations performed by the system. Ignoring the non-equilibrium initial or final states results in incorrect inference, in which erroneous features in the dynamics arise due to non-stationary data distribution. We consider non-stationarity that arises from transient dynamics on a fast timescale within each trail, while the dynamical model components —potential, noise, and p0(x)—are the same across trials. An additional source of non-stationarity may arise from slow drifts in the dynamics across trials32,33. Such non-stationary drifts can be modeled as changes in the potential, noise, and p0(x) on a slow timescale across trials.

The inference accuracy depends on the amount of available data and the complexity of underlying dynamics. Here we considered cases where the inference accuracy was not limited by the data amount to isolate how it depends on non-stationary components. A larger data amount generally results in more accurate inference (Fig. 4a, b). With insufficient data, the inference can underfit, i.e. not discover all features of the system’s dynamics2. Inferring more complex dynamics requires larger amounts of data2.

We illustrate our inference framework using models of neural dynamics during decision making, an inherently non-stationary process of transforming sensory information into a categorical choice. Comparisons between simple parametric models, which instantiate a discrete set of alternative hypotheses, proved ineffective to reveal the underlying neural dynamics27,28,29,30. An obvious pitfall is that none of the a priori guessed alternative hypotheses may be correct28, and therefore model selection limited to a discrete set of hypotheses critically lacks flexibility. In contrast, our Langevin framework provides a flexible non-parametric description of dynamics, which covers a continuous space of hypotheses within a single model architecture2. Our framework can smoothly interpolate between many qualitatively different dynamics, which are all expressed with the same analytical equations, offering a powerful alternative to parametric model selection28. The inferred Langevin equation provides an interpretable description of dynamics, which opens access to many analytical tools available for the analysis, prediction, and control of stochastic systems1,34. Other approaches were also proposed to achieve the balance between flexibility and interpretability, for example, by approximating non-linear dynamics with a hierarchy of locally linear systems35. Flexible interpretable models can discover new hypotheses by fitting data, thus going beyond the classical model comparisons2,36,37,38. Our framework can be generalized to several latent dimensions and parallel data streams39 (e.g., multi-neuron recordings) and opens new avenues for analyzing dynamics of complex systems far from equilibrium.

Methods

Maximum-likelihood inference of latent non-stationary Langevin dynamics

We provide a brief summary of the analytical calculation of the model likelihood and its variational derivatives (see Supplementary Information for details). The likelihood \({{{{{{{\mathscr{L}}}}}}}}\left[Y(t)| \theta \right]\) is a conditional probability density of observing the data Y(t) given a model θ = {Φ(x), p0(x), D}. We only consider here a single trial Y(t) = {t0, t1, . . . , tN, tE}, since the total data likelihood is a product of likelihoods of all trials. The likelihood \({{{{{{{\mathscr{L}}}}}}}}\left[Y(t)| \theta \right]\) is a probability density of the observed spike data, since in continuous time the probability of any precise spike sequence {t1, t2, …tN} is infinitesimal. We can obtain the probability of observing a spike within dt of each {t1, t2, …tN} by multiplying the likelihood with dtN.

The likelihood is obtained by marginalizing the joint probability density \(P({{{{{{{\mathcal{X}}}}}}}}(t),Y(t)| \theta )\) over all possible latent trajectories \({{{{{{{\mathcal{X}}}}}}}}(t)\) that may underlie the data2,23:

Here \({{{{{{{\mathcal{X}}}}}}}}(t)\) is a continuous latent trajectory, and the path integral is performed over all possible trajectories. Note that if the trajectory \({{{{{{{\mathcal{X}}}}}}}}(t)\) was fixed and fully observed, Eq. (2) would reduce to the well-known expression for the likelihood of an inhomogeneous Poisson process with the instantaneous firing rate \(\lambda (t)=f({{{{{{{\mathcal{X}}}}}}}}(t))\) (Supplementary Note 4). Since the latent trajectory that produced the data is unknown, we need to consider all possible latent paths weighted according to how consistent they are with the spike data and with the Langevin dynamics.

To compute the path integral in Eq. (2), we consider a disrectized latent trajectory \(X(t)=\{{x}_{{t}_{0}},{x}_{{t}_{1}},\ldots ,{x}_{{t}_{N}},{x}_{{t}_{E}}\}\), which is a discrete set of points along a continuous path \({{{{{{{\mathcal{X}}}}}}}}(t)\) at each of the observation times {t0, t1, …, tN, tE}. Once we calculate the joint probability density P(X(t), Y(t)) of a discretized trajectory and data, then we can obtain the data likelihood by marginalization over all discretized latent trajectories:

Using the Markov property of the latent Langevin dynamics Eq. (1) and conditional independence of spike observations, the joint probability density P(X(t), Y(t)) can be factorized24 (Fig. 1b):

Here \(p({y}_{{t}_{i}}| {x}_{{t}_{i}}){{{{{\mathrm{d}}}}}}t\) is the probability of observing a spike within small dt of time ti given the latent state \({x}_{{t}_{i}}\), hence \(p({y}_{{t}_{i}}| {x}_{{t}_{i}})=f({x}_{{t}_{i}})\) by the definition of the instantaneous Poisson firing rate. \(p({x}_{{t}_{0}})\) is the probability density of the initial latent state. \(p({x}_{{t}_{i}}| {x}_{{t}_{i-1}})\) is the transition probability density from \({x}_{{t}_{i-1}}\) to \({x}_{{t}_{i}}\) during the time interval between the adjacent spike observations, which accounts for the absence of spikes during this time interval. Finally, the term \(p(A| {x}_{{t}_{E}})\) represents the absorption operator, which ensures that only trajectories terminating at one of the domain boundaries at time tE contribute to the likelihood. The absorption term \(p(A| {x}_{{t}_{E}})\) is only applied in the case of absorbing boundaries, and it is absent in the case of reflecting boundaries (Supplementary Note 1).

The discretized latent trajectory \(X(t)=\{{x}_{{t}_{0}},{x}_{{t}_{1}},...,{x}_{{t}_{N}},{x}_{{t}_{E}}\}\) is obtained by marginalizing the continuous trajectory \({{{{{{{\mathcal{X}}}}}}}}(t)\) over all latent paths connecting \({x}_{{t}_{i-1}}\) and \({x}_{{t}_{i}}\) during each interspike interval. These marginalizations are implicit in the transition probability densities. For the Langevin dynamics Eq. (1), the time-dependent probability density \(\widetilde{p}(x,t)\) evolves according to the Fokker-Planck equation34:

which accounts for the drift and diffusion in the latent space (Fig. 2, third column). In addition, the transition probability density \(p({x}_{{t}_{i}}| {x}_{{t}_{i-1}})\) in Eq. (4) should also account for the absence of spike observations during intervals between adjacent spikes in the data. Thus, \(p({x}_{{t}_{i}}| {x}_{{t}_{i-1}})\) satisfies a modified Fokker-Planck equation (Supplementary Note 5):

where the term −f(x) accounts for the probability decay due to spike emissions2,23. The solution of this equation \(p(x,{t}_{i})=p(x,{t}_{i-1})\exp (-{\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}}({t}_{i}-{t}_{i-1}))\) propagates the latent probability density forward in time during each interspike interval. Depending on the experiment design, we solve Eq. (6) with either absorbing or reflecting boundary conditions (Supplementary Note 1).

The term \(p(A| {x}_{{t}_{E}})\) in Eq. (4) represents the absorption operator A, which ensures that the likelihood only includes trajectories terminating at the boundaries. The instantaneous probability pA for a trajectory to be absorbed at the boundaries given the latent state \({x}_{{t}_{E}}\) is obtained by applying A to a delta-function initial condition \(\delta ({x}_{{t}_{e}})\) and then integrating over the latent space:

To derive the absorption operator, we consider the survival probability \({P}_{{{\Delta }}t}({S}_{{t}_{E}}| {x}_{{t}_{E}})\) for a trajectory to survive (i.e. not to be absorbed at the boundary) within a time interval Δt given the latent state \({x}_{{t}_{E}}\). The survival probability is obtained by propagating the initial condition \(\delta ({x}_{{t}_{E}})\) with the operator \(\exp (-{\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}}_{0}{{\Delta }}t)\) and integrating the result over the latent space:

Here we use the operator \({\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}}_{0}\) instead of the operator \({\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}}\), because the survival probability accounts only for the probability loss due to absorption at the boundaries and not for the probability decay due to spike emissions.

The probability for a trajectory to be absorbed during a time interval Δt given the state \({x}_{{t}_{E}}\) is given by \({P}_{{{\Delta }}t}({A}_{{t}_{E}}| {x}_{{t}_{E}})=1-{P}_{{{\Delta }}t}({S}_{{t}_{E}}| {x}_{{t}_{E}})\). Thus, the instantaneous probability of absorption is obtained as

where we use Eq. (8) to take the limit. Comparing this result with Eq. (7), we find that \({{{{{{{\boldsymbol{A}}}}}}}}={\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}}_{0}\). Note that \(-{\hat{{{\boldsymbol{{{\mathcal{H}}}}}}}}_{0}\) is the Fokker-Planck operator in Eq. (5) that describes the rate of change of the latent probability density at each location x. Integrating both sides of Eq. (5) over the latent space, we obtain

This equation describes the decay of the total probability \(\widetilde{p}(t)={\int}_{x}{{{{{\mathrm{d}}}}}}x\widetilde{p}(x,t)\) in the latent space due to probability flux through the absorbing boundaries. Thus, applying the absorption operator A and integrating over the latent space represents the instantaneous loss of the total probability at time t, which is the fraction of all survived trajectories that reach the absorbing boundaries at exactly time t.

To compute and optimize the likelihood numerically, we represent Eq. (3) in a discrete basis39 (Supplementary Note 1). In the discrete basis, all continuous functions, such as p0(x), are represented by vectors, and the transition, emission, and absorption operators are represented by matrices. Thus, Eq. (3) is evaluated as a chain of vector-matrix multiplications.

Gradient descent optimization

We minimize the negative log-likelihood with the gradient descent (GD) algorithm. Instead of directly updating the functions Φ(x) and p0(x), we, respectively, update the driving force \(F(x)=-{{\Phi }}^{\prime} (x)\) and an auxiliary function \({F}_{0}(x)\equiv p^{\prime} (x)/{p}_{0}(x)\). The potential Φ(x) and p0(x) are obtained from F(x) and F0(x) via

We fix the arbitrary additive constant C in the potential to satisfy \({\int}_{x}\exp [-{{\Phi }}(x)]{{{{{\mathrm{d}}}}}}x=1\). The change of variable from p0(x) to F0(x) allows us to perform an unconstrained optimization of F0(x), and Eq. (11) ensures that p0(x) satisfies the normalization condition for a probability density \(\int\nolimits_{-1}^{1}{p}_{0}(x){{{{{\mathrm{d}}}}}}x=1\), p0(x) ⩾ 0. We ensure the positiveness of the noise magnitude D by rectifying its value after each GD update \(D=\max (D,0)\).

We derive analytical expressions for the variational derivatives of the likelihood \(\delta {{{{{{{\mathscr{L}}}}}}}}/\delta F(x)\), \(\delta {{{{{{{\mathscr{L}}}}}}}}/\delta {F}_{0}(x)\) and the derivative \(\partial {{{{{{{\mathscr{L}}}}}}}}/\partial D\), which are then evaluated in the discrete basis (Supplementary Notes 2 and 3). On each GD iteration, we update the model by stepping in the direction of the log-likelihood gradient:

Here Θ is one of the functions F(x), F0(x), or the noise magnitude parameter D, with the corresponding learning rates γΘ > 0, and n is the iteration number. For simultaneous inference of Φ(x), p0(x), and D (Fig. 4c), we update each of F(x), F0(x), and D in turn on successive GD iterations. The list of optimization hyperparameters, including learning rates and initializations, is provided in Supplementary Table 1.

Synthetic data generation

To generate synthetic spike data from a model with given Φ(x), p0(x), and D, we numerically integrate Eq. (1) with the Euler-Maruyama method to produce latent trajectories x(t) on each trial. We then use time-rescaling method40 to generate spike times from an inhomogeneous Poisson process with the firing rate λ(t) = f(x(t)). We use 200 data trials in Fig. 3; 200 and 1600 trials in Fig. 4a, b; and 400 trials in Fig. 4c. These data amounts are typical for experiments in which neural activity is recorded during decision making25,31. A single experimental session usually contains a total of 1000−2000 trails under different behavioral conditions, with about 100−200 trials in each condition.

Feature complexity

We define feature complexity as the negative entropy of latent trajectories generated by the model2 \({{{{{{{\mathcal{M}}}}}}}}=-S[{{\Phi }}(x),D,{p}_{0}(x);{{{\Phi }}}^{R}(x),{D}^{R},{p}_{0}^{R}(x)]\). The trajectory entropy is defined as a negative Kullback-Leibler (KL) divergence between the distributions \(P[{{{{{{{\mathcal{X}}}}}}}}(t)]\) and \(Q[{{{{{{{\mathcal{X}}}}}}}}(t)]\)41:

\(P[{{{{{{{\mathcal{X}}}}}}}}(t)]\) is the distribution of trajectories in the model of interest with Langevin parameters {Φ(x), D, p0(x)}, and \(Q[{{{{{{{\mathcal{X}}}}}}}}(t)]\) is the distribution of trajectories in the reference model with Langevin parameters \(\{{{{\Phi }}}^{R}(x),{D}^{R},{p}_{0}^{R}(x)\}\). The path integral is performed over all possible trajectories \({{{{{{{\mathcal{X}}}}}}}}(t)\). The reference model is a free diffusion with zero driving force (i.e. constant potential \({{{\Phi }}}^{R}(x)={{{{{{{\rm{const}}}}}}}}\)) and the same diffusion coefficient D as in the model of interest. The analytical expression for the trajectory entropy was derived previously for equilibrium dynamics41. In the equilibrium case, the trajectory entropy is defined by the equilibrium distribution peq(x) and does not depend on the initial state distribution p0(x). We generalized this result and derived an expression for the trajectory entropy for non-stationary dynamics (Supplementary Note 6):

For non-stationary dynamics, the trajectory entropy depends on the time-dependent distribution of the latent trajectories p(x, t) and thus on p0(x). We choose the initial distribution \({p}_{0}^{R}(x)\) for the reference model to be uniform. We derived an expression for efficient numerical evaluation of Eq. (14), where we take the integral over time analytically in the eigenbasis of the operator \({{{{{{{{\boldsymbol{{{{{{{{\mathcal{H}}}}}}}}}}}}}}}}}_{0}\) (Supplementary Eq. (69) in Supplementary Note 6).

Model selection based on feature consistency

To select the optimal model, we compare models discovered from two non-intersecting halves of the data and evaluate the consistency of their features. We quantify the overlap between two models by evaluating Jensen-Shannon divergence (JSD) between their time-dependent probability densities over the latent space:

where

The probability density \(\hat{p}(x,t)\) is normalized to account for the probability loss through the absorbing boundaries: \(\hat{p}(x,t)=\widetilde{p}(x,t)+{I}_{p}\delta (x-{x}_{b})\). Here \(\widetilde{p}(x,t)\) is the time-dependent solution of Eq. (5), \({I}_{p}=1-\int \widetilde{p}(x,t){{{{{\mathrm{d}}}}}}x\) is the total probability loss through the absorbing boundaries up to time t, and xb denotes a boundary (we combine the probability loss through both boundaries into a single term). We approximate the time integral in Eq. (15) by the midpoint rule and calculate the time-dependent probability densities by numerically solving the Fokker-Planck Eq. (5) (Supplementary Note 6.3).

We compare models of roughly the same complexity between the two sets of models \(\{{{{\Phi }}}_{n}^{1}(x),{D}^{1},{p}_{0}^{1}(x)\}\) and \(\{{{{\Phi }}}_{n}^{2}(x),{D}^{2},{p}_{0}^{2}(x)\}\) produced by GD on each data half (n = 1, 2, …N is the iteration number). First, we calculate the feature complexities \({{{{{{{{\mathcal{M}}}}}}}}}_{n}^{1}\) and \({{{{{{{{\mathcal{M}}}}}}}}}_{n}^{2}\) for all models in the two sets. Since feature complexities do not match exactly between the two sets due to nuances in the data, we need to allow for some slack in feature complexity when comparing models2. Accordingly, for each level of feature complexity \({{{{{{{{\mathcal{M}}}}}}}}}_{i}^{1}\), we find the index j* that minimizes the absolute difference \(| {{{{{{{{\mathcal{M}}}}}}}}}_{i}^{1}-{{{{{{{{\mathcal{M}}}}}}}}}_{{j}^{* }}^{2}|\). Next, we calculate DJS(j) between the model with feature complexity \({{{{{{{{\mathcal{M}}}}}}}}}_{i}^{1}\) and each of the models in the second set with similar complexity \({{{{{{{{\mathcal{M}}}}}}}}}_{j}^{2}\), where j = j* − R, j* − R + 1, …j* + R and we set R = 5. We then set \({D}_{{{{{\rm{JS}}}}}}({{{{{\mathcal{M}}}}}}_{i}^{1})={\min }_{j}{D}_{{{{{\rm{JS}}}}}}(j)\). We repeat this procedure for different iterations i to obtain the dependence \({D}_{{{{{{{{\rm{JS}}}}}}}}}({{{{{{{\mathcal{M}}}}}}}})\) (Fig. 5b, d). To find the optimal feature complexity, we set the threshold \({D}_{{{{{{{{\rm{JS,thres}}}}}}}}}=0.001\) and select \({{{{{{{{\mathcal{M}}}}}}}}}^{* }\) as the maximum feature complexity for which \({D}_{{{{{{{{\rm{JS}}}}}}}}}\ \leqslant\ {D}_{{{{{{{{\rm{JS,thres}}}}}}}}}\). This procedure returns two overlapping potentials of roughly the same feature complexity which represent the consistent features of dynamics across data samples (Fig. 5c).

Data availability

The synthetic data used in this study can be reproduced using the source code.

Code availability

The source code to reproduce the results of this study is freely available on GitHub (https://github.com/engellab/neuralflow, https://doi.org/10.5281/zenodo.5512552).

References

Friedrich, R., Peinke, J., Sahimi, M. & Reza Rahimi Tabar, M. Approaching complexity by stochastic methods: From biological systems to turbulence. Phys. Rep. 506, 87–162 (2011).

Genkin, M. & Engel, T. A. Moving beyond generalization to accurate interpretation of flexible models. Nat. Mach. Intell. 2, 674–683 (2020).

Wimmer, K., Nykamp, D. Q., Constantinidis, C. & Compte, A. Bump attractor dynamics in prefrontal cortex explains behavioral precision in spatial working memory. Nat. Neurosci. 17, 431–439 (2014).

Laing, C. R. & Kevrekidis, I. G. Equation-free analysis of spike-timing-dependent plasticity. Biol. Cybern. 109, 701–714 (2015).

Millman, D., Mihalas, S., Kirkwood, A. & Niebur, E. Self-organized criticality occurs in non-conservative neuronal networks during ‘up’ states. Nat. Phys. 6, 801–805 (2010).

Brückner, D. B. et al. Stochastic nonlinear dynamics of confined cell migration in two-state systems. Nat. Phys. 15, 595–601 (2019).

Yates, C. A. et al. Inherent noise can facilitate coherence in collective swarm motion. Proc. Natl. Acad. Sci. USA 106, 5464–5469 (2009).

Sriraman, S., Kevrekidis, I. G. & Hummer, G. Coarse nonlinear dynamics and metastability of filling-emptying transitions: water in carbon nanotubes. Phys. Rev. Lett. 95, 130603 (2005).

Friedrich, R., Peinke, J. & Renner, C. How to quantify deterministic and random influences on the statistics of the foreign exchange market. Phys. Rev. Lett. 84, 5224–5227 (2000).

Hasselmann, K. Stochastic climate models Part I. Theory. Tellus 28, 473–485 (2016).

Wei, C. & Shu, H. Maximum likelihood estimation for the drift parameter in diffusion processes. Stochastics 88, 699–710 (2016).

Ragwitz, M. & Kantz, H. Indispensable finite time corrections for Fokker-Planck equations from time series data. Phys. Rev. Lett. 87, 254501 (2001).

Touya, C., Schwalger, T. & Lindner, B. Relation between cooperative molecular motors and active Brownian particles. Phys. Rev. E 83, 051913–10 (2011).

García, L. P., Pérez, J. D., Volpe, G., Arzola, A. V. & Volpe, G. High-performance reconstruction of microscopic force fields from Brownian trajectories. Nat. Commun. 9, 1–9 (2018).

Postnikov, E. B. & Sokolov, I. M. Reconstruction of substrate’s diffusion landscape by the wavelet analysis of single particle diffusion tracks. Physica A 533, 122102 (2019).

Brückner, D. B., Ronceray, P. & Broedersz, C. P. Inferring the dynamics of underdamped stochastic systems. Phys. Rev. Lett. 125, 058103 (2020).

Frishman, A. & Ronceray, P. Learning force fields from stochastic trajectories. Phys. Rev. X 10, 5–32 (2020).

Wei, C. Estimation for parameters in partially observed linear stochastic system. Int. J. Appl. Math. 48, 123–127 (2018).

Ladenbauer, J., McKenzie, S., English, D. F., Hagens, O. & Ostojic, S. Inferring and validating mechanistic models of neural microcircuits based on spike-train data. Nat. Commun. 10, 199–17 (2019).

Churchland, A. K. et al. Variance as a signature of neural computations during decision making. Neuron 69, 818–831 (2011).

Chung, H. S., McHale, K., Louis, J. M. & Eaton, W. A. Single-molecule fluorescence experiments determine protein folding transition path times. Science 335, 981–984 (2012).

Chung, H. S. & Eaton, W. A. Single-molecule fluorescence probes dynamics of barrier crossing. Nature 502, 685–688 (2013).

Haas, K. R., Yang, H. & Chu, J. W. Expectation-maximization of the potential of mean force and diffusion coefficient in langevin dynamics from single molecule fret data photon by photon. J. Phys. Chem. B 117, 15591–15605 (2013).

Bishop. Pattern Recognition and Machine Learning (Springer, 2006).

Gold, J. I. & Shadlen, M. N. The neural basis of decision making. Annu. Rev. Neurosci. 30, 535–574 (2007).

Amarasingham, A., Geman, S. & Harrison, M. T. Ambiguity and nonidentifiability in the statistical analysis of neural codes. Proc. Natl. Acad. Sci. USA 112, 6455–6460 (2015).

Latimer, K. W., Yates, J. L., Meister, M. L. R., Huk, A. C. & Pillow, J. W. Single-trial spike trains in parietal cortex reveal discrete steps during decision-making. Science 349, 184–187 (2015).

Chandrasekaran, C. et al. Brittleness in model selection analysis of single neuron firing rates. bioRxiv preprint at https://www.biorxiv.org/content/10.1101/430710v1 (2018).

Zylberberg, A. & Shadlen, M. N. Cause for pause before leaping to conclusions about stepping. bioRxiv preprint at https://www.biorxiv.org/content/10.1101/085886v1.full (2019).

Zoltowski, D. M., Latimer, K. W., Yates, J. L., Huk, A. C. & Pillow, J. W. Discrete stepping and nonlinear ramping dynamics underlie spiking responses of lip neurons during decision-making. Neuron 102, 1249–1258.e10 (2019).

Chandrasekaran, C., Peixoto, D., Newsome, W. T. & Shenoy, K. V. Laminar differences in decision-related neural activity in dorsal premotor cortex. Nat. Commun. 8, 614 (2017).

Cowley, B. R. et al. Slow drift of neural activity as a signature of impulsivity in macaque visual and prefrontal cortex. Neuron 108, 551–567.e8 (2020).

Meirhaeghe, N., Sohn, H. & Jazayeri, M. A precise and adaptive neural mechanism for predictive temporal processing in the frontal cortex. bioRxiv https://doi.org/10.1101/2021.03.10.434831 (2021).

Risken, H. The Fokker-Planck Equation (Springer, 1996).

Nassar, J., Linderman, S. W., Bugallo, M. & Park, I. M. Tree-structured recurrent switching linear dynamical systems for multi-scale modeling. In International Conference on Learning Representations https://openreview.net/forum?id=HkzRQhR9YX (2019).

Schmidt, M. & Lipson, H. Distilling free-form natural laws from experimental data. Science 324, 81–85 (2009).

Daniels, B. C. & Nemenman, I. Automated adaptive inference of phenomenological dynamical models. Nat. Commun. 6, 8133–8 (2015).

Brunton, S. L., Proctor, J. L. & Kutz, J. N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl. Acad. Sci. USA 113, 3932–3937 (2016).

Genkin, M., Hughes, O. & Engel, T. A. Engellab/neuralflow: learning non-stationary Langevin dynamics from stochastic observations of latent trajectories Zenodo https://doi.org/10.5281/zenodo.5512552 (2021).

Brown, E. N., Barbieri, R., Ventura, V., Kass, R. E. & Frank, L. M. The time-rescaling theorem and its application to neural spike train data analysis. Neural Comput. 14, 325–346 (2002).

Haas, K. R., Yang, H. & Chu, J.-W. Analysis of trajectory entropy for continuous stochastic processes at equilibrium. J. Phys. Chem. B 118, 8099–8107 (2014).

Acknowledgements

This work was supported by the NIH grant R01 EB026949 (T.A.E. and M.G.), the Swartz Foundation (M.G.), and Katya H. Davey Fellowship (O.H.). We thank C. Aghamohammadi for thoughtful comments on the manuscript.

Author information

Authors and Affiliations

Contributions

M.G. and T.A.E. designed the research and developed the framework. M.G. and O.H. developed the code and performed computer simulations. M.G. and T.A.E. wrote the paper with input from O.H.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Genkin, M., Hughes, O. & Engel, T.A. Learning non-stationary Langevin dynamics from stochastic observations of latent trajectories. Nat Commun 12, 5986 (2021). https://doi.org/10.1038/s41467-021-26202-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-021-26202-1

This article is cited by

-

A unifying perspective on neural manifolds and circuits for cognition

Nature Reviews Neuroscience (2023)

-

Autonomous inference of complex network dynamics from incomplete and noisy data

Nature Computational Science (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.