Abstract

The biological mechanisms that allow the brain to balance flexibility and integration remain poorly understood. A potential solution may lie in a unique aspect of neurobiology, which is that numerous brain systems contain diffuse synaptic connectivity. Here, we demonstrate that increasing diffuse cortical coupling within a validated biophysical corticothalamic model traverses the system through a quasi-critical regime in which spatial heterogeneities in input noise support transient critical dynamics in distributed subregions. The presence of quasi-critical states coincides with known signatures of complex, adaptive brain network dynamics. Finally, we demonstrate the presence of similar dynamic signatures in empirical whole-brain human neuroimaging data. Together, our results establish that modulating the balance between local and diffuse synaptic coupling in a thalamocortical model subtends the emergence of quasi-critical brain states that act to flexibly transition the brain between unique modes of information processing.

Similar content being viewed by others

Introduction

The brain is a complex, adaptive system that is organized across multiple spatial and temporal scales. Systems arranged in this way must solve a number of competing challenges. First, they must balance segregation—the need to retain precise, specialist functional capacities—and integration—in which information from segregated subregions is recombined at larger spatiotemporal scales1,2. Second, the brain must remain flexible enough to retain sufficient sensitivity to fluctuations in evolving fitness landscapes3. Finally, the systems must coordinate these capacities in ways that are energetically frugal4, which favors systems with relatively low-dimensional architectures5. How the brain is arranged to achieve these distinct constraints, and what physical mechanisms underpin them, remains poorly understood.

A solution to this challenge may be found in a somewhat over-looked principle of neuroanatomy. A number of circuits in the brain, such as the ascending neuromodulatory system6 and the non-specific, “matrix” cells of the thalamus7, project their axons in a relatively diffuse pattern that targets multiple distinct neural regions. These circuits are incompatible with the traditional notion of “message passing” between individual neurons that are typically ascribed to targeted, feed-forward projections between neurons8. So why might these highly conserved, diffuse connections exist as such a prominent feature of neuroanatomy?

A potential benefit of balancing targeted and diffuse coupling is that systems structured in this way may be able to support multiple distinct modes of processing. For instance, targeted connections between neural subregions will influence local neighbors in a relatively segregated mode, whereas diffuse connections may force distant regions into novel regimes that are impacted more strongly by the global brain state. Crucially, by modulating the amount of global, diffuse connectivity, the system could control its information processing capacity in an energy efficient manner9.

Systems that support multiple distinct modes often exhibit optimal functional properties at the transition point (or critical point), such as maximizing information transmission, the dynamic range, and the number of metastable states10,11,12. Rather than balancing precisely at a specific critical point, there is now robust evidence to suggest that complex systems such as the brain may display an enlarged/stretched critical point. This stretched critical regime (quasi-critical) allows the system to more readily utilize the optimal functional properties bestowed at criticality13,14,15,16. Near this quasi-critical region of state space, heterogeneity within the brain should allow subregions to experience transient excursions into the quasi-critical regime17,18. This would allow the system to harness the benefits of criticality (e.g., divergence of correlation length), without the associated risk of transitioning en masse into a pathological state of global synchronization19,20.

In this manuscript, we propose that this mechanism could be exploited in the brain by modulating the balance between local and diffuse synaptic coupling in the thalamocortical system. This in turn would imbue the system with the capacity to support the complex, adaptive system dynamics that support higher brain function.

Results

To test the hypothesis that diffuse coupling promotes a diversity of quasi-critical neural states, a network of biophysically plausible corticothalamic neural mass models was used to simulate large-scale human brain activity (Fig. 1). Neural mass models, which are a spatially discretized class of a neural field model, provide a tractable framework for the analysis of large-scale neuronal dynamics by averaging microscopic structure and activity21,22,23,24,25,26. These models are flexible, physiologically realistic, and inherently non-linear,23,24,25,26,27,28,29,30,31, and have successfully accounted for many characteristic states of brain activity20,21,25,27,30,32,33,34. Importantly, this work extends an existing and validated biophysical model, which itself has been extensively constrained by human electrophysiology data35. This feature ensures that we have oriented the system to a plausible region of state space, and further implies that our results will lead to testable empirical predictions related to the impact of diffuse inputs.

a Corticothalamic neural mass model implemented at each node of the network: each mass was comprised of four distinct cellular populations: an excitatory cortical pyramidal cell (“e“), an inhibitory cortical interneuron (“i”), an excitatory, specific thalamic relay nucleus (“s”), and an inhibitory thalamic reticular nucleus (“r”), with intranode corticothalamic neural mass coupling defined according to known anatomical connectivity. b Connectivity schematic—local and diffuse coupling with periodic boundary conditions (toroidal topology). c Distribution of nodal firing rates across the network—an increase in diffuse coupling subsequently increases the standard deviation of firing rates, with the tails of this distribution having greater above (and below) average values. The cartoons depict subsets of a thermal system with temperature T below (left) and above (right) the average \(\overline {\mathrm{T}}\). d Qualitative effect of increasing diffuse coupling in the presence of heterogeneity on the attractor landscape: increased diffuse coupling shifts all nodes towards their local saddle-node bifurcation point. In the middle of this continuum, the heterogeneous inputs allow a particular subset of nodes (shaded orange) to cross this point and the activity of these nodes begins to move towards the high firing attractor.

The specific neural mass model used in our study contained four distinct neural populations: an excitatory pyramidal cell, e, and an inhibitory interneuron, i, population in the cortex; and excitatory specific relay nuclei, s, and inhibitory thalamic reticular nuclei, r, population in the thalamus. The parameters from the model were fit to a region of state-space defined by the awake, human brain using field potentials from human scalp EEG data35,36. We simulated a 12 × 12 network of corticothalamic neural masses (Fig. 1a) using the neural field simulation software, nftSim37. The parameters for each neural mass were identically set to “eyes-closed” estimates35, which results in simulated activity with a characteristic 1/f spectrum and a peak in the alpha frequency band (8–13 Hz).

In addition to the identical intranode coupling, our model contained two classes of connectivity: local coupling, which was defined as a connection between an excitatory population in the cortex and its immediate neighbors (with diagonal nodes additionally scaled by a spatial decay factor of \(1/\sqrt 2\)); and diffuse coupling, which connected the pyramidal e populations’ activity to all other nodes in the network (Fig. 1b). The diffuse coupling term, which is defined as χ, was swept through a range and was the only parameter changed in this work. Periodic boundary conditions (i.e., a toroidal topological structure) were applied so that each node had an equal number of local afferent connections.

The presence of structural heterogeneities in neural network models, such as the human connectome and neural networks in the Caenorhabditis elegans have been shown to extend an idealized critical point into a region of state space that is known in statistical mechanics as a “Griffiths phase”17,18. This form of quasi-criticality is analogous to the inherent balance present between the liquid and gaseous phases of water at room temperature (Fig. 1c), during which time the vast majority of the water molecules are in their liquid phase. As the temperature rises towards water’s boiling point, a subset of these molecules may, for a short time, collide with other energetic molecules in their immediate surroundings. From the vantage point of this subset, it would appear as though the temperature of the entire fluid had risen. Those regions with slightly more energy than others would be able to cross their own locally defined bifurcation (or critical boundary)—i.e., “transition” into water vapor—while leaving the rest of the water molecules in their liquid phase. This phenomenon will occur more often as the temperature approaches the boiling point.

The brain may exploit a similar physical mechanism, whereby subregions cross locally defined critical boundaries while the bulk of the global brain state remains subcritical. We hypothesized that the prevalence of these critical regions should be modulated by diffuse inputs, in a manner analogous to increasing temperature in the fluid to gas transition. In other words, increasing diffuse coupling in the brain could drive the system such that a subset of nodes can cross their locally defined critical boundaries (e.g., orange nodes in Fig. 1d), while ensuring that the rest of the network remains in a subcritical state (e.g., blue nodes in Fig. 1d). It is important to note that this phenomenon only occurs when there are heterogeneities within the system. In the model used here, only the simplest form of spatial heterogeneity was included: namely, an independent white noise drive (uniquely sampled from an identical Gaussian distribution) to each neural mass in the network. Based on these factors, we hypothesized that the combination of heterogeneity and elevated diffuse coupling, χ, would be sufficient to transition a subset of nodes over their locally defined bifurcation, which in turn should alter the information processing dynamics of the brain. In order to test this hypothesis, we needed to identify a way to track transient, super-critical excursions at the nodal level in our model.

Quantifying regional dynamics through distance to local bifurcation

In dynamical systems, such as the brain, activity is often defined by the systems’ “attractors”, which are idealized states that a system evolves towards under a wide variety of starting conditions38. Multi-stable systems are those with more than one attractor present for a single set of parameters: each attractor has unique stability properties and can be explored by the system given appropriate inputs and/or initial conditions. The biophysical model utilized in this study describes a multi-stable system near a Hopf and a saddle-node bifurcation, both of which occur when a smooth incremental change in a control parameter (in our case, diffuse coupling) causes qualitatively abrupt changes in the system’s behavior.

Knowledge of a node’s attractors is important for understanding the node’s behavior; however, it can be challenging to extrapolate patterns from local nodes to the activity of the whole network. This makes it difficult to define the presence (or absence) of quasi-critical brain state dynamics in large-scale network models. To solve this problem, we note that the bifurcation point for each corticothalamic neural mass can be identified as a function of a constant postsynaptic potential induced by incident activity from other nodes. Time-independent solutions can then be produced by sweeping over this induced potential change in order to find the neural mass’ bifurcation point (i.e., the point where the two low-firing attractors meet and annihilate each other, leaving only a stable high firing attractor). Furthermore, the time-independent solutions can be used to determine the linear response gains between each population within the neural mass (Fig. S2).

As χ is increased, individual nodes become increasingly sensitive to their own inputs—that is, they have heightened “response gain”39,40,41. This effect is characterized by a sigmoidal function that maps population average membrane potential to firing rate25, as well as the slope (first derivative) of this function (Fig. S1). All of the simulated data in our experiment lies on the left-hand side of the peak in the gain curve (subpanel in Fig. S1), such that incremental increases in response gain have large effects on the nodes’ activity (i.e., the slope of the function is positive; orange in the right side of Fig. S1), and hence, cause the region to cross its locally defined bifurcation (Fig. 1d).

Increasing diffuse coupling promotes quasi-critical states

Armed with this approach, at each simulation time point, inputs to a given node can be translated into an instantaneous distance to the receiving nodes’ bifurcation point. In this way, the strength of each nodes’ attractor can be quantified, and the duration of excursions across the point where the attractor is no longer present during simulation can be accurately quantified. Here, the percentage of nodes that have crossed their local bifurcation is defined as Pc. As predicted by our hypothesis, increasing the amount of diffuse network coupling caused a non-linear increase in Pc (Fig. 2). Based on the network-level activity patterns across χ, we defined three “working zones”: a stable, subcritical zone (χ < 1.20 × 10−4 mV s; blue in Fig. 2), where Pc = 0; a quasi-critical zone (1.20 < χ < 1.27 × 10−4 mV s; green in Fig. 2), where 0 < Pc < 100; and a saturated zone (χ > 1.27 × 10−4 mV s; red in Fig. 2), where Pc = 100 in the second half of the simulation.

The time averaged percentage of nodes that have crossed their bifurcation, Pc, as a function of diffuse coupling, χ. We identified three qualitative zones: a low variability subcritical zone (blue), where no nodes crossed their bifurcation point for the full duration of the simulation, a highly variable, quasi-critical zone (green) where at least one node was below its bifurcation during the second half of the simulation, and a saturated, oscillatory zone (red). The insets show steady-state firing rates for each population within the neural mass model relatively represented via color intensity.

Another benefit of neural mass models over more abstract approaches (such as the Kuramoto or Fitzhugh-Nagumo model); Breakspear42 is their superior physical interpretability. Each parameter within the neural mass is, in principal, a measurable biophysical quantity. We leveraged this feature to identify the relative firing rate of each neural population in our model. The three zones in our model were associated with qualitatively distinct steady-state firing rate attractors for each population within the corticothalamic neural mass (inset of Fig. 2). Of note, the subcritical zone was associated with a higher firing rate in the r thalamic population relative to the s population (i.e., relative thalamic inhibition), whereas this relationship is inverted in the saturation zone (i.e., relative thalamic excitation). By construction, the quasi-critical zone necessarily supports a mix of these two states, with the balance dictated non-linearly by the value of Pc (Fig. 2). These results suggest that increasing diffuse coupling to the cortex had the effect of releasing a subset of excitatory thalamic s neurons from inhibition, which in turn was reflected by the crossing of their local bifurcation point (Fig. S3).

We also observed qualitatively distinct effects at the whole-network level. The average regional correlations within each zone are displayed as a force-directed graph in Fig. 3a. The subcritical zone is dominated by local coupling and the saturation zone by diffuse coupling. Notably, the quasi-critical zone shows a mix of both these integrated and segregated topological states, and their coincidence is predicated on heterogeneity within the network. Somewhat trivially, if this heterogeneity is removed and diffuse coupling is increased, the entire network will cross the bifurcation point together with Pc either 0% or 100, which is equivalent to an isolated neural mass receiving increasing drive. In other words, confirming our hypothesis, the presence of the quasi-critical regime was due entirely to the presence of spatial heterogeneity and increasing the diffuse coupling term, χ.

a Average regional correlations within each zone shown as a force-directed graph. b For a given χ, each node was stimulated with an excitatory rectangular pulse (amplitude = 1 mV; width = 10 ms) at t = 10 s. The target nodes activity was then compared to simulated activity in the absence of the pulse using the same noise sequence in order to quantify the perturbation induced. The pulse results are sorted based on their mean distance to bifurcation in the preceding 8 time points. For visualization purposes we then average the activity within the closest, middle, and farthest thirds based on this sorted distance, and low-pass filtered with a passband frequency of 0.001 Hz. (i) χ = 1.15 × 10−4 (ii) χ = 1.21 × 10−4 (iii) χ = 1.25 × 10−4 (iv) χ = 1.3 × 10−4 mV s; Note the vertical axis on (iv) differs from (i)–(iii). c Qualitative effect of increasing diffuse coupling on the attractor landscape: in the subcritical zone, the system was enslaved to the lower attractor; increasing χ into the quasi-critical zone had the effect of flattening the attractor landscape, allowing noise-driven excursions to transition nodes across their local bifurcation point; at high values of χ, the system became enslaved to the higher attractor.

Based on the previous literature15,17,43, we hypothesized that the quasi-critical regime should augment the network’s sensitivity to incoming stimuli. To test this hypothesis, a series of network simulations were run wherein an excitatory pulse stimulus was applied separately to each node across several diffuse coupling values (see Fig. 3b: panels i–iv). For visualization purposes, the nodes were grouped based on their average distance to bifurcation in a brief window (40 ms) preceding stimulus. In line with other critical phenomenon, the response duration and sensitivity of the network increases with diffuse coupling as the system as a whole becomes more critical. This is equivalent to the lower attractor “flattening”, which in turn allows a greater proportion of individual nodes to transition onto the higher attractor (Fig. 3c).

In addition to the increased network sensitivity, flexibility is increased within the quasi-critical zone, with a greater spread of stimulus-response durations observed (contrast Fig. 3b (i) and (ii)). This highlights the fact that within the quasi-critical zone, the dynamic repertoire is extended44 and could provide a mechanistic description of the hierarchy of timescales inferred empirically45,46. Together, these results demonstrate that increasing diffuse coupling transitions the network into a sensitive and complex state, which would likely be further enriched by the known spatial heterogeneity imbued by the white-matter of the structural connectome4.

Network signatures of quasi-criticality

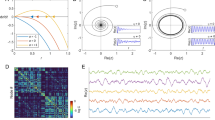

When analyzing empirical neuroimaging data, it is not possible to obtain direct evidence of a nodes’ gain, nor it is the distance from its own bifurcation. Instead, the putative signatures of complex, adaptive system dynamics must be estimated indirectly from empirical neuroimaging data47. Here, we demonstrate that a number of these analytic measures show qualitative changes as a function of χ, and thus together provide empirically accessible signatures of complex, adaptive dynamics (Fig. 4). For instance, the mean participation coefficient, which quantifies the extent of cross-community integration across the brain network48, was low, yet regionally variable, in the subcritical zone, rose sharply in the quasi-critical zone, and reached a ceiling in the saturated zone (Fig. 4a). This pattern is consistent with previous neuroimaging work that showed an increase in integration as a function of cognitive task performance48,49,50,51.

a Mean participation—which quantifies the extent to which a region functionally connects across multiple modules (these were calculated using a weighted version of the Louvain algorithm across all simulations). (b: left) Average time-series variability; (b: right) Regional diversity—defined as the variance in the upper triangle of the region-wise functional connectivity matrix. c Explained variance captured by PC1, σ1, and PC2, σ2. κ demarcates corresponding points in all panels.

Time-series variability (Fig. 4b; black) showed a similar monotonic increase with χ, though with a more protracted course than network integration. In contrast, regional diversity (Fig. 4b; orange) initially increased before dropping and wavering in the quasi-critical zone, and ultimately increasing to its highest value in the saturated zone. Interestingly, an increase of time-series variability within the quasi-critical zone was preceded by two peaks in regional diversity, which was defined as the variance in the upper triangle of the region-wise functional connectivity matrix. In other words, the promotion of unique functional architectures across the network occurs within the quasi-critical zone and these appear distinct from an increase in sustained local variability.

In previous work, we analyzed human fMRI data to show that the brain reconfigures into a low-dimensional brain state across a diverse array of cognitive tasks1,52. Similar patterns were observed here in the simulated data (Fig. 4c). Specifically, the percentage of variance explained by the first two principal components of the firing rate time series peaked in the quasi-critical zone, with the second principal component rising in explanatory power at a higher level of χ (Fig. 4c). Interestingly, the peak in the variance explained by the second principal component coincided with the peak in integration (corresponding to κ in Fig. 4), and the peak in variance explained by the first principal component coincided with the first peak in regional diversity. Together, these results suggest that the quasi-critical zone is associated with an integrated, flexible, and relatively low-dimensional network architecture, which is consistent with recent empirical whole-brain imaging results1 and has implications for the information processing capacities of both artificial and biological networks.

Orienting task and rest states from human fMRI data

We were next interested in whether the complex, adaptive network signatures identified in our neural mass model would translate into differences in empirical, whole-brain neuroimaging data. Based on previous work1,48 and the results of our biophysical model, we hypothesized that the network-wide effects of distinct cognitive states would be dissociable based on the measures that were found to be have unique signatures in the quasi-critical zone. Specifically, we predicted that task performance should be associated with increased diffuse network coupling, reflective of increased ascending arousal neuromodulation41 and increased thalamic engagement52, thus allowing information from functionally specialized regions, optimally formed in the segregated state, to be integrated across broad spatiotemporal scales.

To test this hypothesis, we analyzed whole-brain fMRI data from 100 unrelated subjects from the Human Connectome Project while they performed a cognitively challenging two-back task53. Regional BOLD fMRI data were analyzed using the same techniques that were applied to the simulated data (i.e., those in Fig. 4), and then paired t tests were used to contrast between cognitive task engagement and relatively quiescent rest periods. The results of our analysis demonstrated that, when compared to the resting state, task performance was associated with an increase in integration (t = 83.8; p = 1.02 × 10−93; Fig. 5a), a drop in regional diversity (t = 29.1; p = 2.37 × 10−50; Fig. 5b), increased time-series variability (t = −31.1; p = 6.83 × 10−53; Fig. 5c), and less variance explained by the first two principal components (PC1: t = 5.21; p = 1.04 × 10−6; PC2: t = 9.06; p = 1.23 × 10−14; Fig. 5d).

fMRI data from 100 unrelated subjects during a two-back task from the Human Connectome Project were analyzed to determine whether the task and rest states were associated with unique signatures of complex, adaptive brain dynamics. a Mean Participation was elevated during task performance (paired t test: t = 83.8; p = 1.02 × 10−93). b Regional diversity, defined as the variance in the upper triangle of the region-wise functional connectivity matrix, was lower during task performance than rest (paired t test: t = 29.1; p = 2.37 × 10−50); c fMRI time-series variability (paired t test: t = −31.1; p = 6.83 × 10−53). d Variance explained by first principal component (paired t test: t = 5.21; p = 1.04 × 10−6). d (inset) Variance explained by second principal component (paired t test: t = 9.06; p = 1.23 × 10−14). e Task and rest signatures were applied to a novel stochastic data-fitting algorithm to orient the brain states at different levels of χ: rest was associated with a lower diffuse coupling (χtest ~ 1.22 ± 0.1 mV s) than task states (χtask ~ 1.26 ± 0.1 mV s); (f) surface projection of Δχ for each region 73, generated by independently removing each region of the data, recalculating the signatures, and refitting to generate a Δχ.

To orient regional fMRI data onto the corticothalamic model outputs, we created a novel data-fitting approach. Briefly, a cost function was defined as the difference between the task and rest values for each of the complex network signatures used to analyze systems-wide time-series dynamics (Fig. 5a-d). The algorithm then searches for an interval of diffuse coupling, Δχ, that minimizes this cost function—that is it finds the [χ1, χ2] that best explains the change in all complex network signatures across task and rest states. Finally, a uniform random walk is performed on the weightings of each metrics gradient to scale its contribution to the overall cost function, effectively mitigating against bias for any one measure in the fitting algorithm. In this way, we were able to estimate the dynamical fingerprint of the underlying state in a manner that was robust to differences in the baseline statistics of each measure.

This approach confirmed that quasi-critical signatures orient rest states to lower levels of diffuse coupling (χrest~1.22 ± 0.1 × 10−4 V s) than those of cognitive task states (χtask~1.26 ± 0.1 × 10−4 V s; Fig. 5e). The χ fit results in a probability distribution (Fig. 5e) since an estimate is made for each new combination of weightings generated per iteration of the algorithm (104). The maximum likelihood of the task estimates was found to be coincident with the second peak in regional diversity and proximal to peak integration, suggesting that the brain is balancing flexibility, in the form of high functional diversity, with increased large-scale communication, in the form of network integration.

To aid neuroscientific interpretation, a variation of the group-level model-fitting approach was used to provide an estimate of Δχ at the regional level. To this end, we performed a virtual lesioning of the network (albeit without the benefit of the dimensionality measures, which are calculated across the whole system), in which each of the measures was recalculated following the removal of each node (in turn). The algorithm then fits the resultant Δχ which best captured their respective changes, with the notable difference that the upper bound of this range was set to the maximum likelihood of χtask, so as to ensure each nodes effect was compared to a common baseline (i.e., it finds [χ1, χtask]). The Δχ fits were diversely distributed across predominantly frontal and sensory cortex (Fig. 5f), suggesting that diffuse coupling allowed for integration across multiple distinct specialist sub-networks in order to complete the cognitive task. Together, these results confirm the hypothesis that brain activity during the task is associated with greater quasi-critical brain dynamics than during rest, and further extend this concept by suggesting a plausible biological mechanism—namely increased diffuse coupling—for these differences.

Discussion

Here, we used a network of biophysical corticothalamic neural masses, previously fit to human EEG data35,36, to demonstrate that quasi-critical brain states can be facilitated by the combination of spatially heterogeneous inputs and diffuse network coupling. Gradually increasing diffuse connectivity shifted each region closer to their individually defined bifurcation, which maximized flexibility (Fig. 2) while also increasing the sensitivity of the network to inputs (Fig. 3b) and system-wide topological integration (Fig. 4a). This constellation of complex network signatures dissociated different cognitive processing modes in empirical brain imaging data (Fig. 5). Together, these results establish a plausible neurobiological implementation of criticality in the brain that is driven by a known neuroanatomical principal. Crucially, the modulation of this physical mechanism (diffuse coupling) is demonstrated to augment flexibility in segregated and integrated operational modes, which in turn are reflected as changes in several key measures of complex adaptive network dynamics.

In previous work, it has been shown that cognitive task performance leads to a more integrated48 and low-dimensional1 brain state. Here, we demonstrate a simple neuroanatomical principle that may underpin these patterns. Specifically, we showed that, in the presence of the simplest form of spatial heterogeneity (independent noise to each region), increasing diffuse coupling across the network led to the exploitation of multi-stable system dynamics, broadened the systems dynamic repertoire, supported a hierarchy of input response sensitivity and timescales, and maximized temporal flexibility. Indeed, the quasi-critical states that we identified can facilitate functional integration across large spatial and temporal scales through a diverging correlational length, while also retaining the stability of the whole system. As such, these mechanisms provide a robust starting point for understanding the evolutionary mechanisms through which the brain learned to augment its functional repertoire across a wide range of scales.

The quasi-critical brain states represented here extend previous ideas on the critical brain hypothesis (14,15,16,54), but cast them in a novel, large-scale biophysical brain model. Conceptually, the quasi-critical zone identified in this work represents a state of the system where the dynamic repertoire and flexibility are both maximized. Here, we show that this state can be engaged and disengaged by modulating the impact of diffuse network connectivity. Importantly, this modeling work includes spatial heterogeneities in a minimal form (namely, noise inputs), which permit quasi-critical states while retaining physiologically plausible neural activity. It is also important to note that the quasi-critical state is not a single point but a well-defined region in state space, and thus numerous parameter combinations could be employed by the neurobiology in order to explore this physical niche, which agrees with the extended critical region observed in both human and Caenorhabditis elegans neural networks17.

A strength of the approach utilized here is that it relates directly to known characteristics of neuroanatomy. Indeed, there are at least two major systems in the human brain—the ascending arousal system55 and the diffuse thalamocortical “Matrix” projections56—that could readily instantiate the diffuse brain signal modeled in our study in a relatively flexible manner. Each of these highly inter-connected6,57 systems is characterized by relatively diffuse patterns of axonal connectivity that innervate the entire cortical mantle, along with a range of other subcortical, cerebellar, and brainstem structures55,56. These two systems are also characterized by highly dynamic expression58,59, suggesting that the relative amount of diffuse coupling may be controlled and shaped as a function of systemic requirements. Despite their relatively broad projection patterns, there is also evidence for more targeted connectivity60 and segregated processing modes61 within these two systems, which in turn might confer even more precise control over the highly dynamic, distributed neural coalitions that define our waking brain state62. In short, realistic heterogeneity within these systems, such as synaptic, receptor, and cell densities, will support the formation of quasi-critical states, and hence the brain may have evolved a way of using quasi-criticality to support distinct operational modes. This would allow low-dimensional control over the modes in an energy efficient manner63, i.e., functionally partition regions, allocate these to unique features of a task, and then reintegrate their outputs at a later time1.

The modeling methodology used in this work is distinct from inversion methods, wherein a generative model is fit to data and the resulting parameter estimates are used to elucidate a mechanistic understanding of a phenomenon. Whilst this approach is often informative, it can also result in the over-fitting of parameters. As such, insights from these approaches are difficult to generalize to broader brain states. A complimentary strategy often employed in statistical physics is to define a model according to first principles. While abstract, this strategy affords much greater control over the models’ degrees of freedom, and in turn makes any identified results more robust to parameter changes. These two modeling approaches compliment one and other and represent distinct modes of questioning a phenomenon: data-driven (why is the system changing in this way?) vs. hypothesis-driven (i.e., how will the system change if I modify it in this way?). In this work, we utilized a hybrid strategy: we exploited previous data-fitting results35,36 to orient the model in a plausible region of state-space (eyes-closed wakefulness) and then gradually introduced a new feature (diffuse cortical input) while keeping all other parameters constant. The model is thus a predictive framework, in which all of the signatures we identified (Figs. 3, 4) can be directly attributed to the modulation of diffuse coupling35.

By orienting our model in a previously defined state, our outputs can be directly compared with empirical data. However, the inclusion of a more realistic structural connectome will likely imbue a functional relevance to each region that was not present in our model64. Along these lines, previous modeling approaches have combined neural elements with a back-bone of structural connectivity10,65 to successfully account for the recurrent signatures of functional connectivity between regions (i.e., resting-state networks) which are caused by noise fluctuations sampling an underlying attractor manifold65. These models fall within a broad class that seeks to determine the role of large-scale coupling on macroscale brain dynamics. The present model distinguishes itself from this group by introducing three tiers of connectivity—intranode connectivity between corticothalamic populations within the neural mass, local nearest-neighbor cortical connectivity, and diffuse all-to-all uniform cortical connectivity. The latter is distinct from the “global coupling” seen in other models10,66, which is a parameter that scales the sparse between-node connectivity patterns inherent within the structural connectomes used in each model. Despite their differences, these approaches complement one another by demonstrating in different ways that increasing large-scale connectivity leads to the formation of a more complex attractor manifold. The present study enriches this body of work by describing quasi-critical brain states as a mechanism underpinning a broadened dynamic repertoire, and shows how a well-described class of neuroanatomical connectivity motifs could exploit quasi-criticality and shift the brain between operational modes unique to task and rest.

Methods

Corticothalamic neural mass

The corticothalamic neural mass model used in this work contains four distinct populations: an excitatory pyramidal cell, e, and an inhibitory interneuron, i, population in the cortex; and an excitatory specific relay nuclei, s, and inhibitory thalamic reticular nuclei, r, population in the thalamus. The dynamical processes that occur within and between populations in a neural field model are defined as follows:

For each population, the mean soma potential results from incoming postsynaptic potentials (PSPs):

where Vab(t) is the result of a postsynaptic potential of type b onto a neuron of type a and a, b ∈{e, i, r, s}. The postsynaptic potential response in the dendrite is given by

where the influence of incoming spikes to population a from population b is weighted by a connection strength parameter vab = Nabsab, with the mean number of connections between the two populations Nab and sab is the mean strength of response in neuron a to a single spike from neuron b. τab is the average axonal delay for the transmission of signals, and ϕab is the mean axonal pulse rate from b to a.

The operator Dab describes the time evolution of Vab in response to synaptic input,

where β and α are the overall rise and decay response rates to the synaptodendritic and soma dynamics.

The mean firing rate of a neural population Qa(t) can be approximately related to its mean membrane potential, Va(t), by

which define a sigmoidal mapping function Sa with a maximal firing rate \(Q_a^{\mathrm{max}}\), a mean firing threshold θa, and a standard deviation of this threshold \(\sigma \prime \pi /\sqrt 3\).

The mean axonal pulse rate is related to the mean firing rate by

Here, γa = va/ra represents the damping rate, where va is the propagation velocity in axons, and ra is the characteristic axonal length for the population.

Following the approach of previous neural field models, excitatory and inhibitory synapses in the cortex are assumed proportional to the number of neurons26,29. This random connectivity approximation results in vee = vie, and vei = vii which implies Ve = Vi and Qe = Qi. Inhibitory population variables can then be expressed in terms of excitatory quantities and are thus not neglected.

The fixed-point attractors, or steady states, of the corticothalamic neural mass are found by setting all time derivatives in the above equations to zero. The steady-state values \(\phi _e^{\left( 0 \right)}\) of ϕe is then given by solutions of

where \(\phi _n^{\left( 0 \right)}\) is the steady-state component of the input stimulus26,67. Roots of Eq. (7) are found using the fzero() function from MATLAB.

The connection gains between populations, which represent the additional activity generated in postsynaptic nuclei per additional unit input activity from presynaptic nuclei, can be calculated by linearizing Eq. (4) which gives

where

It is an important goal of this work to extend the ideas and phenomena already present in an existing biophysical model, which has been compared to human data, instead of a specific model of the phenomena with no bridge towards showing its implementation in biology.

Numerical simulations

A 12 × 12 network of corticothalamic neural masses were simulated using the neural field simulation software, nftSim37. The parameters for each neural mass were identically set to “eyes-closed” estimates given in Table 135, which results in simulated activity with a 1/f spectrum and a peak in the alpha frequency band (8–13 Hz) under moderate network coupling. Each simulation was run for a total of 32 s with 10 s of initial transients removed using an integration timestep of Δt = 2−13 s.

Network connectivity and heterogeneity

The noise terms are individually generated for a node from an identical white noise Gaussian distribution with a mean of 1 (s−1) and an amplitude spectral density of 10−5(arb. units). This serves as the only spatial heterogeneity in the network. The local coupling to each node is a nearest neighbor with diagonal nodes additionally scaled by \(1/\sqrt 2\). A sweep of the amplitude of these local connections was first performed to determine the location of the ensemble bifurcation (phase transition) point, and then a slightly smaller value was used to ensure the system was proximal to this point but far enough away as to be stable under perturbations from the noise terms. An additional level of network connectivity, called diffuse coupling and represented by the symbol χ, prescribes a given nodes connection to the entire network. This is the only coupling parameter that changes in this work. The corticothalamic neural mass equations are extended to include network inputs via the excitatory cortical population as follows:

where the first term on the right-hand side of Eq. (9) defines intranode connectivity, the second term defines local nearest-neighbor connectivity scaled by \(\nu _{ee}^{{\mathrm{local}}}\) (with the diagonal additional scaled by \(1/\sqrt 2\)), and the final term defines all-to-all uniform connectivity (excluding self-connection) scaled by the diffuse coupling parameter χ.

Distance to bifurcation

The network activity incident to each node at a given time point is purely excitatory and as such can be considered as a constant positive postsynaptic potential. In line with this, a constant potential is added to the cortical excitatory population and the steady states of the neural mass are solved numerically. A sweep of this potential change elucidates a saddle-node bifurcation which represents the necessary input, as a first-order approximation, required to drive a node to its locally defined critical boundary. The bifurcation point can then be used as a reference for interpreting simulation activity post hoc. That is, at each time point the incident network activity to each node is translated into a distance to bifurcation time series for that target node, which enables parallel analysis of local activity and network induced effects.

Using this information, we defined three “working zones”: a stable, subcritical zone (χ < 1.20 mV s; blue in Fig. 2), where Pc = 0; a quasi-critical zone (1.20 < χ < 1.27 mV s; green in Fig. 2), where 0 < Pc < 100; and a saturated zone (χ > 1.27 mV s; red in Fig. 2), where Pc = 100 in the second half of the simulation. The average population-level firing rate and gain within each zone were used to create an “ideal” corticothalamic population (Fig. 2). The Pearson’s correlation matrix within each zone was then thresholded (r > 0), binarized and used to create a force-directed embedding (Fig. 3).

Response to pulse stimulus

A simulation was first run with no applied pulse stimuli for comparison with stimulus results. Then, N = 144 trails were run where a pulse stimulus (amplitude = 1 mV; width = 10 ms) was applied to a single node at t = 10 s. The cortical activity from the no-stimuli simulation is subtracted from all pulse trails. Since the noise sequence generated is the same for each trial, this allows a clear mapping of stimuli-induced response. The trials are sorted based on the target nodes average distance to bifurcation within the 8 time points pre-stimulus. For visualization purposes, the stimulus-induced response of the targeted node in each trial is averaged across upper, middle, and lower thirds of the sorted distance to bifurcation vector, and the time series is low-pass filtered with a passband frequency of 0.001 Hz. As expected, nodes closest to their bifurcation had the strongest response, and the longest timescale for decaying back to pre-stimulus levels of activity.

Network signatures of criticality

The time series of the cortical “e” population was used to create a weighted, un-thresholded connectivity matrix. A weighted and signed version of the Louvain modularity algorithm from the Brain Connectivity Toolbox68 was used to iteratively maximizes the modularity statistic, Q, for different community assignments until the maximum possible score of Q has been obtained (Eqs. 10 and 11). The modularity estimate for a given network is, therefore, a quantification of the extent to which the network may be subdivided into communities with stronger within-module than between-module connections.

where \(\nu\) is the total weight of the network (sum of all negative and positive connections), wij is the weighted and signed connection between regions i and j, eij is the strength of a connection divided by the total weight of the network, and δMiMj is set to 1 when regions are in the same community and 0 otherwise. “+” and “–” superscripts denote all positive and negative connections, respectively. In our experiment, the γ parameter was set to 1.1 (tested within a range of 0.5–2.0 for consistency across 100 iterations). Given that the community structure of the system changed substantially as a function of χ, a consensus partition was created across the whole range using the ‘consensus_und.m’ script from the Brain Connectivity Toolbox.

The participation coefficient quantifies the extent to which a region connects across all modules. This measure has previously been used to characterize diversely connected hub regions within cortical brain networks (e.g., see 69). Here, the Participation Coefficient (B) was calculated for each of the 400 cortical parcels for each subject, where κisT is the strength of the positive connections of region i to regions in module s, and κiT is the sum of strengths of all positive connections of region i. The participation coefficient of a region is therefore close to 1 if its connections are uniformly distributed among all the modules and 0 if all of its links are within its own module:

Brain state variability was calculated by taking the standard deviation of the upper triangle of the correlation matrix at each level of χ. Time-series variability was estimated using the regional mean of the standard deviation of the cortical “e” population over time. The percentage of explained variance for the top two principal components was calculated by subjecting demeaned cortical “e” population time-series at each level of χ to separate principal component analyses.

Whole-brain fMRI analysis

Minimally pre-processed fMRI data were obtained from 100 unrelated participants (mean age 29.5 years, 55% female) from the HCP database. For each participant, BOLD data from the left-right encoding session from the N-back task were acquired using multiband gradient echo-planar imaging, amounting to 4 min 51 s of data (405 individual TRs) per subject. Pre-processed1,48 but temporally unfiltered data were extracted from 333 cortical parcels70. The time points associated with each cognitively challenging task-blocks and the interspersed rest blocks were convolved with a canonical haemodynamic response function (using the spm_hrf.m function from SPM12).

To estimate functional connectivity between the 333 cortical ROIs, we used the Multiplication of Temporal Derivatives (M) technique71. M is computed by calculating the point-wise product of temporal derivative of pairwise time series (Eq. 12). The resultant score is then averaged over a temporal window, w, in order to reduce the contamination of high-frequency noise in the time-resolved connectivity data. A window length of 20 TRs was used in this study, though results were consistent across a range of w values (10–50 TRs). To ensure relatively smooth transitions between each task, connectivity analyses were performed on each individual task separately, and were subsequently concatenated. In addition, all analyses involving connectivity (or the resultant topological estimates) incorporated the junction between each task as a nuisance regressor.

where for each time point, t, the M for the pairwise interaction between region i and j is defined according to Eq. 1, where t’ is the first temporal derivative (t + 1 – t) of the ith or jth time series at time t, σ is the standard deviation of the temporal derivative time series for region i or j and w is the window length of the simple moving average. This equation can then be calculated over the course of a time series to obtain an estimate of time-resolved connectivity between pairs of regions. Time-resolved values of BT are then calculated on each weighted, signed connectivity matrix. Values of each measure were compared statistically using a series of non-parametric permutation tests72 in which the group identity (i.e., rest vs. task) was randomly shuffled in order to populate a null distribution (5000 iterations).

Gradient fitting the model to whole-brain fMRI data

Firstly, participation, regional diversity, time-series variability, and variance explained by the first two principal components are calculated on the whole-brain imaging data and the model outputs for each value of diffuse coupling. Since the absolute values of these measures do not form a fair point of comparison with outputs from our simplified corticothalamic model, we focus on their relative differences across task and rest (i.e., what interval of diffuse coupling makes the most sense of the metric changes). Thus, for each measure the difference between rest and task is calculated to form 5 gradients that are fit to the corresponding gradients of the model outputs across levels of diffuse coupling. This is done by subsampling the model outputs (Fig. 4a–c)) at progressively coarser steps sizes, calculating the gradient numerically using diff() function from MATLAB, and then finding the x value (which is a subsample interval) that minimizes the cost function. The final estimate is the average across all subsampling scales. Finally, in order to mitigate against bias for any one metric in the fit, a uniform random walk is performed on the 0–1 weightings of each gradient metric to scale its contribution to the cost function.

Two distinct approaches are used for the whole-brain and regional estimates, respectively. For the whole-brain estimates, the algorithm is free to change the upper and lower bounds of the diffuse coupling interval χ1,χ2. For the regional estimates, we use the maximum likelihood from the task estimate of diffuse coupling χtask ~ 1.26 ± 0.1 × 10−4 mV s as the upper bound for the search, and thus only the lower bound is free to change [χ1, χtask]. A virtual lesioning approach is then used, where each node is removed from the data (only a single node is ever removed at a time) and the algorithm is run to estimate the new diffuse value (relative to the task estimate). The result is an estimate of the change in diffuse coupling facilitated by each node in the network.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

Data were provided by the Human Connectome Project (HCP); the Washington University, University of Minnesota, and Oxford University Consortium (Principal Investigators David Van Essen and Kamil Ugurbil; grant no. 1U54MH091657) funded by 16 NIH institutes and centers that support the NIH Blueprint for Neuroscience Research; and the McDonnell Center for Systems Neuroscience at Washington University. These data are freely available from the original study.

References

Shine, J. M. et al. Human cognition involves the dynamic integration of neural activity and neuromodulatory systems. Nat. Neurosci. 22, 289–296 (2019).

Sporns, O., Tononi, G. & Edelman, G. M. Connectivity and complexity: the relationship between neuroanatomy and brain dynamics. Neural Netw. 13, 909–922 (2000).

Kehagia, A. A., Murray, G. K. & Robbins, T. W. Learning and cognitive flexibility: frontostriatal function and monoaminergic modulation. Curr. Opin. Neurobiol. 20, 199–204 (2010).

Bullmore, E. & Sporns, O. The economy of brain network organization. Nat. Rev. Neurosci. 13, 336–349 (2012).

Cunningham, J. P. & Yu, B. M. Dimensionality reduction for large-scale neural recordings. Nat. Neurosci. 17, 1500–1509 (2014).

Edlow, B. L. et al. Neuroanatomic connectivity of the human ascending arousal system critical to consciousness and its disorders. J. Neuropathol. Exp. Neurol. 71, 531–546 (2012).

Jones, E. G. Thalamic circuitry and thalamocortical synchrony. Philos. Trans. R. Soc. B: Biol. Sci. 357, 1659–1673 (2002).

Aertsen, A., Diesmann, M. & Gewaltig, M. O. Propagation of synchronous spiking activity in feedforward neural networks. J. Physiol. Paris 90, 243–247 (1996).

Laughlin, S. B. & Sejnowski, T. J. Communication in neuronal networks. Science 301, 1870–1874 (2003).

Deco, G. & Jirsa, V. K. Ongoing cortical activity at rest: criticality, multistability, and ghost attractors. J. Neurosci. 32, 3366–3375 (2012).

Mora-Sánchez, A., Dreyfus, G. & Vialatte, F. B. Scale-free behaviour and metastable brain-state switching driven by human cognition, an empirical approach. Cogn. Neurodyn 13, 437–452 (2019).

Muñoz, M. A. Colloquium: criticality and dynamical scaling in living systems. Rev. Mod. Phys. 90, 31001 (2018).

Cocchi, L., Gollo, L. L., Zalesky, A. & Breakspear, M. Criticality in the brain: a synthesis of neurobiology, models and cognition. Prog. Neurobiol. 158, 132–152 (2017).

Tagliazucchi, E., Balenzuela, P., Fraiman, D. & Chialvo, D. R. Criticality in large-scale brain FMRI dynamics unveiled by a novel point process analysis. Front. Physiol. 3, 1–12 (2012).

Williams-García, R. V., Moore, M., Beggs, J. M. & Ortiz, G. Quasicritical brain dynamics on a nonequilibrium Widom line. Phys. Rev. E—Stat. Nonlinear, Soft Matter Phys. 90, 1–8 (2014).

Wilting, J. & Priesemann, V. Inferring collective dynamical states from widely unobserved systems. Nat. Commun. 9, 2325 (2018).

Moretti, P. & Muñoz, M. A. Griffiths phases and the stretching of criticality in brain networks. Nat. Commun. 4, 2521 (2013).

Vojta, T. Rare region effects at classical, quantum and nonequilibrium phase transitions. J. Phys. A: Math. Gen. 39, R143–R205 (2006).

Müller, E. J., van Albada, S. J., Kim, J. W. & Robinson, P. A. Unified neural field theory of brain dynamics underlying oscillations in Parkinson’s disease and generalized epilepsies. J. Theor. Biol. 428, 132–146 (2017).

Breakspear, M. et al. A unifying explanation of primary generalized seizures through nonlinear brain modeling and bifurcation analysis. Cereb. Cortex 16, 1296–1313 (2006).

Jirsa, V. K. & Haken, H. Field theory of electromagnetic brain activity. Phys. Rev. Lett. 77, 960–963 (1996).

Nunez, P. L. The brain wave equation: a model for the EEG. Math. Biosci. 21, 279–297 (1974).

Robinson, P. A., Rennie, C. J. & Wright, J. J. Propagation and stability of waves of electrical activity in the cerebral cortex. Phys. Rev. E: Stat. Phys. Plasmas Fluids Relat. Interdiscip. Top. 56, 826–840 (1997).

Robinson, P. A., Rennie, C. J., Wright, J. J. & Bourke, P. D. Steady states and global dynamics of electrical activity in the cerebral cortex. Phys. Rev. E: Stat. Phys. Plasmas Fluids Relat. Interdiscip. Top. 58, 3557–3571 (1998).

Wilson, H. R. & Cowan, J. D. A mathematical theory of the functional dynamics of cortical and thalamic nervous tissue. Kybernetik 13, 55–80 (1973).

Wright, J. J. & Liley, D. T. J. Dynamics of the brain at global and microscopic scales: neural networks and the EEG. Behav. Brain Sci. 19, 285–295 (1996).

Deco, G., Jirsa, V. K., Robinson, P. A., Breakspear, M. & Friston, K. The dynamic brain: From spiking neurons to neural masses and cortical fields. PLoS Comput. Biol. 4, e1000092 (2008)

Rennie, C. J., Robinson, P. A. & Wright, J. J. Effects of local feedback on dispersion of electrical waves in the cerebral cortex. Phys. Rev. E: Stat. Phys. Plasmas, Fluids Relat. Interdiscip. Top. 59, 3320–3329 (1999).

Robinson, P. A. et al. Prediction of electrocnccphulographic spectra from neurophysiology. Phys. Rev. E: Stat. Soft Matter Phys. 63, 0219031–02190318 (2001).

Robinson, P. A., Rennie, C. J. & Rowe, D. L. Dynamics of large-scale brain activity in normal arousal states and epileptic seizures. Phys. Rev. E: Stat. Phys. Plasmas Fluids Relat. Interdiscip. Top. 65, 9 (2002).

Robinson, P. A., Rennie, C. J., Rowe, D. L., O’Connor, S. C. & Gordon, E. Multiscale brain modelling. Philos. Trans. R. Soc. B: Biol. Sci. 360, 1043–1050 (2005).

Roberts, J. A. & Robinson, P. A. Modeling absence seizure dynamics: Implications for basic mechanisms and measurement of thalamocortical and corticothalamic latencies. J. Theor. Biol. 253, 189–201 (2008).

Bojak, I. & Liley, D. T. J. Modeling the effects of anesthesia on the electroencephalogram. Phys. Rev. E: Stat. Nonlinear, Soft Matter Phys. 71, 1–22 (2005).

Steyn-Ross, M. L., Steyn-Ross, D. A. & Sleigh, J. W. Modelling general anaesthesia as a first-order phase transition in the cortex. Prog. Biophys. Mol. Biol. 85, 369–385 (2004).

Abeysuriya, R. G., Rennie, C. J. & Robinson, P. A. Physiologically based arousal state estimation and dynamics. J. Neurosci. Methods 253, 55–69 (2015).

Robinson, P. A., Rennie, C. J., Rowe, D. L. & O’Connor, C. Estimation of multiscale neurophysiologic parameters by electroencephalographic means. Hum. Brain Mapp. 23, 53–72 (2004).

Sanz-Leon, P. et al. NFTsim: theory and simulation of multiscale neural field dynamics PLos Comput. Biol. 14, e1006387 (2018).

Miller, P. Dynamical systems, attractors, and neural circuits [version 1; referees: 3 approved]. F1000Research 5, 1–18 (2016).

David Servan-Schreiber, H. P. & J., D. C. A network model of catecholamine effects: gain, signal-to-noise ratio, and behavior. J. Clin. Endocrinol. Metab. 4971, 892–895 (1990).

Shine, J. M. Neuromodulatory Influences on Integration and Segregation in the Brain. Trends Cogn. Sci. 23, 572–583 (2019).

Shine, J. M., Aburn, M. J., Breakspear, M. & Poldrack, R. A. The modulation of neural gain facilitates a transition between functional segregation and integration in the brain. Elife 7, 1–16 (2018).

Breakspear, M. Dynamic models of large-scale brain activity. Nat. Neurosci. 20, 340–352 (2017).

Fontenele, A. et al. Criticality between cortical states. Phys. Rev. Lett. 122, 454934 (2018).

Kinouchi, O. & Copelli, M. Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2, 348–352. (2006).

Cocchi, L. et al. A hierarchy of timescales explains distinct effects of local inhibition of primary visual cortex and frontal eye fields. Elife 5, 1–17 (2016).

Honey, C. J. et al. Slow cortical dynamics and the accumulation of information over long timescales. Neuron 76, 423–434 (2012).

McIntosh, A. R. & Jirsa, V. K. The hidden repertoire of brain dynamics and dysfunction. Netw. Neurosci. 3, 994–1008 (2019).

Shine, J. M. et al. The dynamics of functional brain networks: integrated network states during cognitive task performance. Neuron 92, 544–554 (2016).

Cohen, J. R. & D’Esposito, M. The segregation and integration of distinct brain networks and their relationship to cognition. J. Neurosci. 36, 12083–12094 (2016).

Hearne, L. J., Cocchi, L., Zalesky, A. & Mattingley, J. B. Reconfiguration of brain network architectures between resting-state and complexity-dependent cognitive reasoning. J. Neurosci. 37, 8399–8411 (2017).

Parhizi, B., Daliri, M. R. & Behroozi, M. Decoding the different states of visual attention using functional and effective connectivity features in fMRI data. Cogn. Neurodyn. 12, 157–170 (2018).

Shine, J. M. et al. The low-dimensional neural architecture of cognitive complexity is related to activity in medial thalamic nuclei report the low-dimensional neural architecture of cognitive complexity is related to activity in medial thalamic nuclei. Neuron 104, 849–855 (2019).

Barch, D. M. et al. Function in the human connectome: Task-fMRI and individual differences in behavior. Neuroimage 80, 169–189 (2013).

Beggs, J. M. & Plenz, D. Neuronal avalanches in neocortical circuits. Journal of neuroscience 23.35, 11167–11177 (2003).

Samuels, E. & Szabadi, E. Functional neuroanatomy of the noradrenergic locus coeruleus: its roles in the regulation of arousal and autonomic function part II: physiological and pharmacological manipulations and pathological alterations of locus coeruleus activity in humans. Curr. Neuropharmacol. 6, 254–285 (2008).

Jones, E. G. The thalamic matrix and thalamocortical synchrony. Trends Neurosci. 24, 595–601 (2001).

Varela, C. Thalamic neuromodulation and its implications for executive networks. Front. Neural Circuits 8, 1–22 (2014).

Halassa, M. M. & Kastner, S. Thalamic functions in distributed cognitive control. Nat. Neurosci. 20, 1669–1679 (2017).

Aston-Jones, G. & Cohen, J. D. An integrative theory of locus coeruleus-norepinephrine function: adaptive gain and optimal performance. Annu. Rev. Neurosci. 28, 403–450 (2005).

Clascá, F., Rubio-Garrido, P. & Jabaudon, D. Unveiling the diversity of thalamocortical neuron subtypes. Eur. J. Neurosci. 35, 1524–1532 (2012).

Totah, N. K., Neves, R. M., Panzeri, S., Logothetis, N. K. & Eschenko, O. The locus coeruleus is a complex and differentiated neuromodulatory system. Neuron 99, 1055–1068.e6 (2018).

Varela, F., Lachaux, J. P., Rodriguez, E. & Martinerie, J. The brainweb: phase synchronization and large-scale integration. Nat. Rev. Neurosci. 2, 229–239 (2001).

Wang, R., Wang, Z. & Zhu, Z. The essence of neuronal activity from the consistency of two different neuron models. Nonlinear Dyn. 92, 973–982 (2018).

Moretti, P. & Muñoz, M. A. Griffiths phases and the stretching of criticality in brain networks. Nat. Commun. 4, 2521 (2013b).

Ghosh, A., Rho, Y., McIntosh, A. R., Kötter, R. & Jirsa, V. K. Noise during rest enables the exploration of the brain’s dynamic repertoire. PLoS Comput. Biol. 4, e1000196 (2008).

Kringelbach, M. L. et al. Dynamic coupling of whole-brain neuronal and neurotransmitter systems. Proc. Natl Acad. Sci. U. S. A. 117, 9566–9576 (2020).

Braitenberg, V. & Schuz, A. Cortex: Statistics and Geometry of Neuronal Connectivity (Springer, Berlin, 2013).

Rubinov, M. & Sporns, O. Complex network measures of brain connectivity: uses and interpretations. Neuroimage 52, 1059–1069 (2010).

Power, J. D. et al. Evidence for hubs in human functional brain networks. Neuron 79.4, 798–813 (2013).

Gordon, E. M. et al. Generation and evaluation of a cortical area parcellation from resting-state correlations. Cereb. Cortex 26, 288–303 (2016).

Shine, J. M. et al. Estimation of dynamic functional connectivity using multiplication of temporal derivatives. Neuroimage 122, 399–407 (2015).

Nichols, T. & Holmes, A. In Human Brain Function Vol. 25, 2nd edn (eds Frackowiak, R. S. J., et al.) 887–910 (Academic Press, 2003).

Schaefer, A. et al. Local-global parcellation of the human cerebral cortex from intrinsic functional connectivity MRI. Cereb. Cortex 28, 3095–3114 (2018).

Acknowledgements

The authors would like to thank Ben Fulcher for engaging discussions.

Author information

Authors and Affiliations

Contributions

E.J.M. and J.M.S. conceived of the idea. E.J.M. designed and built the model, and ran analysis. J.M.S. conducted imaging data analysis. B.R.M. provided critical methodological and conceptual input. E.J.M. and J.M.S. formulated the first draft of the manuscript. All authors provided critical feedback on the manuscript, including editing of the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Communications thanks Anthony McIntosh and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Müller, E.J., Munn, B.R. & Shine, J.M. Diffuse neural coupling mediates complex network dynamics through the formation of quasi-critical brain states. Nat Commun 11, 6337 (2020). https://doi.org/10.1038/s41467-020-19716-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-020-19716-7

This article is cited by

-

The impact of the human thalamus on brain-wide information processing

Nature Reviews Neuroscience (2023)

-

Canonical circuit computations for computer vision

Biological Cybernetics (2023)

-

A whole new world: embracing the systems-level to understand the indirect impact of pathology in neurodegenerative disorders

Journal of Neurology (2023)

-

The ascending arousal system shapes neural dynamics to mediate awareness of cognitive states

Nature Communications (2021)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.