Abstract

With the advances in smartphone and tablet screens, as well as their processing power and software, mobile apps have been developed purporting to assess visual function. This review assessed those mobile apps that have been evaluated in the scientific literature to measure visual acuity, reading metrics, contrast sensitivity, stereoacuity, colour vision and visual fields; these constitute just a small percentage of the total number of mobile apps purporting to measure these metrics available for tablets and smartphones. In general, research suggests that most of the mobile apps evaluated can accurately mimic most traditionally paper-based tests of visual function, benefitting from more even illumination from the backlit screen and from aspects such as multiple tests and versions (to minimise memorisation) being available on the same equipment. Some also utilise the in-built device sensors to monitor aspects such as working distance and screen tilt. As the consequences of incorrectly recording visual function and using this to inform clinical management are serious, clinicians must check the validity of a mobile app before adopting it as part of clinical practice.

Introduction

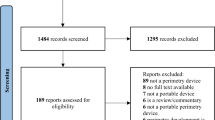

Traditional visual function tests were paper based. They were susceptible to degradation over time and with handling, even and appropriate illumination was hard to achieve, and multiple versions were needed to minimise recollection bias. Software alternatives have become more common with the advent of smartphones and tablets, allowing key visual metrics to be assessed in more remote environments, but how equivalent are they to conventional testing? Technical challenges that have largely been addressed are pixel size (for near acuity optotype display), grayscale levels (for contrast testing), display size (for visual field testing) and colour rendition (for colour vision testing). A search of the Apple App Store identified over 50 apps purporting to assess visual acuity, 5 apps contrast sensitivity, 5 apps colour vision and 2 apps visual field assessment. On Google Play (Android), a search identified 13 apps purporting to assess visual acuity, 7 apps contrast sensitivity, 10 apps colour vision and 3 apps visual field assessment (January 2024). This review assesses full papers published in journals that validate these apps, located through a search of PubMed and Web of Science for “visual function” AND “app*” from their inception to the end of 2023, along with relevant references identified from these papers.

Visual acuity mobile apps

Visual acuity (VA) is defined as the ‘spatial resolving capacity’ and represents the angular size of detail that is just resolvable by the observer. Minimum recognisable resolution is the most frequently used form of VA and involves the measurement of acuity using optotypes, which take the form of letters, numerals, symbols or pictures [1]. Based on VA, the World Health Organisation [2] has estimated that over two billion people suffer from near or distance vision impairment, at least half of which is preventable or not even diagnosed. Reduced VA can lead to difficulty in performing daily tasks such as driving or working, resulting in a lower quality of life (QoL) [3], and changes in VA can be indicative of pathology or an alteration in refractive error [4].

Measures of VA are important in refraction, and are usually the primary measure of visual function in the diagnosis and follow up of patients with ocular pathology, as well as providing an indication of the safety of ophthalmic products/procedures [5]. Assessment of VA may be carried out by a range of personnel and charts may be physical, projected, computerised or displayed on another screen [1, 6]. Despite several limitations, the traditional Snellen chart and Snellen notation (where the numerator represents the testing distance, e.g. 6 m in the UK, and the denominator is the distance in metres at which the optotype height subtends 5 min of arc and the stroke width subtends 1 min of arc) remain in widespread clinical use. Snellen charts have differing numbers of optotypes per line and variable progression between lines, and the notation is problematic for linear representation of visual function and subsequent statistical analysis. LogMAR charts (logMAR being the log [base 10] of the minimum angle of resolution [MAR] expressed in minutes of arc), introduced in the 1970s by Bailey and Lovie [7], overcome several of the disadvantages of Snellen, with 5 letters per line (each letter having a logMAR value of 0.02), uniform inter-line and inter-letter spacing, and the ability to move the chart and scale the results for non-standard testing distances. The ETDRS (Early Treatment Diabetic Retinopathy Study) logMAR chart is well-established for research purposes, and differs from the Bailey-Lovie logMAR chart in that the letters are wider and the chart was specifically designed for a 4 m testing distance, allowing for smaller examination rooms. For the assessment of near vision, logMAR, N-point, M-scale, equivalent Snellen, and Jaeger charts are available, often comprising words or paragraphs of text rather than individual optotypes. Near charts that follow a logMAR format offer similar advantages to logMAR distance charts, whereas non-logMAR near charts are often truncated, meaning that many patients will not be tested to threshold [6].
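The relationship between the Snellen and logMAR notations described above can be illustrated with a short sketch. The function names are hypothetical, and the letter-by-letter scoring rule assumes a chart with 5 letters per line and 0.1 logMAR steps between lines, read from the top line downwards; individual charts and apps may score differently.

```python
import math


def snellen_to_logmar(test_distance_m: float, denominator_m: float) -> float:
    """Convert a Snellen fraction (e.g. 6/12) to logMAR.

    The MAR in minutes of arc is the Snellen denominator divided by the
    testing distance, and logMAR is its base-10 logarithm.
    """
    mar_arcmin = denominator_m / test_distance_m
    return math.log10(mar_arcmin)


def logmar_to_snellen_denominator(logmar: float, test_distance_m: float = 6.0) -> float:
    """Return the Snellen denominator equivalent to a logMAR value."""
    return test_distance_m * 10 ** logmar


def letter_score(top_line_logmar: float, letters_correct: int) -> float:
    """Letter-by-letter logMAR score (assumed rule: 0.02 per letter).

    Assumes 5 letters per line and 0.1 logMAR between lines, so a chart
    starting at 1.0 logMAR scores 1.1 - 0.02 * letters_correct.
    """
    return top_line_logmar + 0.1 - 0.02 * letters_correct


print(round(snellen_to_logmar(6, 12), 2))             # 6/12 expressed as logMAR
print(round(logmar_to_snellen_denominator(0.3), 1))   # logMAR 0.3 as 6/x
print(round(letter_score(1.0, 48), 2))                # 48 letters read from a 1.0 logMAR top line
```

Because each letter contributes a fixed 0.02 logMAR, scores lie on a linear scale, which is what makes logMAR results easier to analyse statistically than Snellen fractions.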

Mobile apps that measure VA may confer benefits for clinicians, researchers and the wider population. Such apps may allow patients, potentially with the assistance of a carer or family member, to regularly self-monitor acuity away from the clinic, which is of value when access to services is difficult or impacted by events such as the COVID-19 pandemic. COVID-19 forced a shift towards more tele-ophthalmology services and highlighted the need for reliable and valid digital tools. In regions where healthcare services are less established, apps may be used by non-specialist healthcare workers with minimal equipment, for screening and follow-up purposes. In clinic or clinical trials, apps can provide a standardised approach to the measurement and recording of VA through their backlit screens, the ability to scale the letter size based on the entered working distance, options to randomise the optotypes displayed to prevent recall bias, and the potential to automate the measurement process. In non-ophthalmic healthcare settings, such as emergency rooms, mobile apps for the assessment of VA may also be useful [8].
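Scaling the letter size to the entered working distance follows from the 5 min of arc optotype height convention. The sketch below is illustrative only: the function names and the 326 pixels-per-inch screen are assumptions, not values taken from any of the apps reviewed.

```python
import math

ARCMIN_IN_RADIANS = math.radians(1 / 60)


def optotype_height_mm(logmar: float, distance_m: float) -> float:
    """Physical optotype height subtending 5 x MAR at the given distance."""
    mar_arcmin = 10 ** logmar
    angle_rad = 5 * mar_arcmin * ARCMIN_IN_RADIANS
    return 2 * distance_m * 1000 * math.tan(angle_rad / 2)


def optotype_height_px(logmar: float, distance_m: float, pixels_per_inch: float) -> float:
    """Optotype height in screen pixels for an assumed pixel density."""
    return optotype_height_mm(logmar, distance_m) * pixels_per_inch / 25.4


# A 0.0 logMAR (6/6) letter at 6 m and at a 40 cm near working distance.
print(round(optotype_height_mm(0.0, 6.0), 2))
print(round(optotype_height_px(0.0, 0.4, pixels_per_inch=326), 1))
```

Under these assumptions a 6/6 letter is about 8.7 mm tall at 6 m, but only around 7.5 pixels tall at 40 cm on the assumed screen, which illustrates why pixel size matters for near acuity optotype display.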

Peer-reviewed research in which the performance of commercially available mobile apps for measuring distance VA (Table 1) or near VA (Table 2) has been compared with a standard clinical approach and/or employed on a large scale is summarised in the tables. Peek Acuity (Peek Vision Ltd., https://peekvision.org/solutions/peek-acuity/), available on Android only, has been the most widely studied distance VA app to date; it uses tumbling-E optotypes, reducing the barriers of literacy/language and age that are associated with conventional letter-based charts. The test requires calibration to the chosen testing distance (2 or 3 m) and another individual to perform the test; studies have included diverse personnel, such as caregivers in the home, schoolteachers and healthcare workers in Africa [9,10,11,12]. The majority of users questioned have reported that the app is easy to use [10, 11]. For screening children’s vision, some earlier studies suggested that the sensitivity of the app in identifying reduced vision (e.g. <6/12) required improvement before more widespread use was feasible [12, 13]. A later study in Botswana [9] used Peek in a screening programme in which 16% of the 12,877 children examined with the app were referred for further clinical care based on vision <6/12 in the better eye, with around half of these children subsequently confirmed as needing spectacles, ocular medication, or further clinical care. The study highlighted the potential for mobile health technologies to be employed in nationwide vision screening programmes in countries similar to Botswana.

A 2022 meta-analysis [14] of the performance of mobile apps for VA assessment reported that when such apps were used by non-professionals, the accuracy was better than for professionals, a finding that was attributed to adults such as parents or schoolteachers having a better understanding of children’s responses, behaviour and moods than eye care professionals who are not known to the children being examined. The age of participants may also impact on the results obtained, with the sensitivity and diagnostic odds ratios of mobile VA apps being significantly better when adults are examined rather than young children. Overall, the body of literature to date indicates that mobile apps for the assessment of VA can be used successfully by professionals and non-professionals, in non-clinical settings, and that the apps generally perform well. Further research is needed, to include a wide range of participant ages and levels of vision, but the apps offer significant potential for the assessment and follow up of patients receiving ophthalmic care, and for children’s vision screening, especially in low-income countries.
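For readers less familiar with the screening statistics quoted throughout this review, the sketch below shows how sensitivity, specificity and the diagnostic odds ratio are derived from a 2 × 2 table of app result against reference test. The counts are invented purely for illustration and do not come from any cited study.

```python
import math


def diagnostic_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Summarise a 2 x 2 table of app result versus reference standard.

    tp/fn: reduced vision on the reference test, detected/missed by the app.
    tn/fp: normal on the reference test, passed/referred by the app.
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    # The diagnostic odds ratio is unbounded when there are no errors of one type.
    dor = math.inf if fp == 0 or fn == 0 else (tp * tn) / (fp * fn)
    return {"sensitivity": sensitivity, "specificity": specificity, "dor": dor}


# Hypothetical screening counts, for illustration only.
example = diagnostic_metrics(tp=45, fp=10, fn=5, tn=90)
print(example)
```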

Reading metrics

While high contrast, static VA is an important safety and disease detection metric [15], it is not a good predictor of functional vision [16]. Most near tasks involve an element of reading [17], which is perceived as being critical to communication and commerce in modern societies [18]. The speed at which an individual reads is fairly consistent until the critical print size is reached, after which it rapidly slows until the individual is no longer able to differentiate the optotypes (the near acuity threshold) [16, 19]. Paper-based reading speed charts were developed in the 1990s [20,21,22]; the time taken to read aloud paragraphs of text of sequentially decreasing size was timed manually, and the results then had to be plotted to determine the supra-threshold reading speed, critical print size and near acuity threshold, which was time consuming [23]. A study comparing a digitised version of the MNRead chart with the printed chart found that the results were similar, although the reading speed was slower on the tablet, which was attributed to the different method of timing the reading trials [24].

While the text and sizing can easily be digitised, using the onboard sensors also allows the working distance to be monitored through the camera, the start and end of the reading of each paragraph to be accurately timed through the microphone (with the recording allowing incorrectly read syllables to be detected), and the data to be analysed immediately. This approach was used when digitising the Radner chart, demonstrating a faster reading speed and lower critical print size when using the tablet app, with repeatability equivalent to or better than that of the paper-based version [25].
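The immediate data analysis that a digital reading chart makes possible can be sketched as follows. The critical print size rule used here (the smallest print size at which reading speed is still at least 90% of the fastest speed recorded) is an assumption made for illustration; published charts and apps define it in different ways, and the function names and data are hypothetical.

```python
from typing import Mapping


def reading_speed_wpm(words_in_passage: int, words_misread: int, seconds: float) -> float:
    """Correctly read words per minute for one timed paragraph."""
    return 60.0 * (words_in_passage - words_misread) / seconds


def critical_print_size(speed_by_logmar: Mapping[float, float], fraction: float = 0.9) -> float:
    """Smallest print size (logMAR) still read at >= fraction of the peak speed.

    Assumes reading speed only falls once print becomes smaller than the
    critical print size, as described in the text.
    """
    peak = max(speed_by_logmar.values())
    adequate = [size for size, wpm in speed_by_logmar.items() if wpm >= fraction * peak]
    return min(adequate)


# Hypothetical results: print size (logMAR) -> reading speed (words per minute).
speeds = {0.8: 180.0, 0.6: 178.0, 0.4: 175.0, 0.2: 120.0, 0.0: 40.0}
print(reading_speed_wpm(words_in_passage=14, words_misread=1, seconds=5.0))
print(critical_print_size(speeds))
```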

A novel Greek reading speed app (GDRS-test) on an Android device measures the time taken to read aloud (at a 40 cm distance) a series of 30 random two-syllable and then 30 three-syllable Greek words, without semantic connection, at the critical print size; it showed a moderate correlation between correct words per minute and the reading speed assessed with the MNRead chart [26]. However, the critical print size needed to be calculated in advance.

Contrast sensitivity

Conventional contrast sensitivity tests measure contrast detection at one spatial frequency [27], or spatial frequency at two to nine contrasts [28, 29]. A digital version of the Pelli-Robson chart, consisting of three letters for each contrast level (LogCS range of 0.15–2.25 with 0.15 LogCS steps) viewed at 80 cm, was found to be more accurate and to have a wider range of contrast stimuli than the paper chart, as well as being able to assess both positive and negative polarity [30]. However, the OdySight digital version of the Pelli-Robson chart underestimated contrast sensitivity by 0.16 logCS and the limits of agreement were large, showing it to be unreliable [31]. The Peek approach to contrast sensitivity presents a single tumbling ‘E’ at 1 m in one of four directions, starting at maximum contrast; the user points in the direction of the prongs and the examiner ‘swipes’ the letter in that direction. If the response is correct the contrast is reduced, with two incorrect direction swipes being the endpoint. This approach was highly correlated with a tumbling ‘E’ version of the Pelli-Robson chart, taking a faster but statistically similar time to complete [32]. Another study measured contrast sensitivity with sinusoidal gratings of 3, 6, 12 and 18 cycles per degree on a tablet compared to the similar Functional Acuity Contrast Test (FACT) included in the Optec 6500, finding similar results [33].
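The logCS scale used by Pelli-Robson style charts can be made concrete with a brief sketch. It lists the contrast levels for the 0.15–2.25 logCS range in 0.15 steps described above and converts each to a percentage contrast; the function names are hypothetical, and the conversion assumes logCS is the base-10 logarithm of the reciprocal of the contrast expressed as a fraction.

```python
def logcs_to_contrast_percent(logcs: float) -> float:
    """Contrast (%) corresponding to a log contrast sensitivity value."""
    return 100.0 * 10 ** (-logcs)


def contrast_levels(lowest: float = 0.15, highest: float = 2.25, step: float = 0.15) -> list:
    """LogCS levels from lowest to highest in equal steps (rounded to 2 dp)."""
    count = int(round((highest - lowest) / step)) + 1
    return [round(lowest + i * step, 2) for i in range(count)]


for level in contrast_levels():
    print(f"{level:.2f} logCS = {logcs_to_contrast_percent(level):.2f}% contrast")
```

The faintest level in this range is a contrast of well under 1%, which indicates why the number of grayscale levels a display can render is a limiting factor for digital contrast testing.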

Campbell and Robson originally portrayed the contrast sensitivity function as a sine wave grating with varying contrast (Y-axis) and spatial frequency (X-axis) [29]. Using a bit-stealing method, this can now be displayed on a tablet screen, with the user tracing with their finger where they can see the tops of the maxima [34]. This approach showed much greater repeatability than the CSV-1000 contrast test; the Pelli-Robson chart had the best repeatability, but only assessed one spatial frequency, whereas the app generated a complete contrast sensitivity function in under a minute. It has been suggested that generating a curve in this way is not accurate; however, rather than tracing the curve, the authors of that study fitted their own simulation of the sinusoidal function using only 4 points [35].
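Agreement between an app and a reference test, as discussed above, is often summarised as a mean difference (bias) with 95% limits of agreement. The following is a minimal sketch of that calculation; the paired logCS values are invented for illustration and are not data from the cited studies.

```python
import statistics


def limits_of_agreement(app_values, reference_values):
    """Return (bias, lower limit, upper limit) for paired measurements.

    Bias is the mean of the app-minus-reference differences; the 95% limits
    of agreement are bias +/- 1.96 standard deviations of those differences.
    """
    differences = [a - r for a, r in zip(app_values, reference_values)]
    bias = statistics.mean(differences)
    spread = 1.96 * statistics.stdev(differences)
    return bias, bias - spread, bias + spread


# Hypothetical paired logCS results from four participants.
app = [1.50, 1.65, 1.35, 1.80]
chart = [1.65, 1.80, 1.65, 1.80]
bias, lower, upper = limits_of_agreement(app, chart)
print(f"bias {bias:.2f} logCS, limits of agreement {lower:.2f} to {upper:.2f}")
```

A small bias with wide limits, as in this invented example, would still indicate that individual app results cannot be used interchangeably with the reference test.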

Stereoacuity

A small number of stereoacuity mobile apps are available and have been evaluated in the peer-reviewed literature. The Tablet Stereo Test (TST) is an iPad-based app for the assessment of stereoacuity, based on random dot stereograms, viewed through anaglyph glasses (with red and green/blue filters to separate the images between the eyes). The observer is required to indicate the direction of the missing segment of a circle (one of four directions) and the test can be performed at multiple distances. Compared with the TNO test for near stereoacuity used at 3 m and 50 cm, there was no significant difference in median values between the app and the clinical test in adult participants [36]. The Android application SAT, also based on anaglyphs, has options to display random dot image sets including TNO, LANG, LEA, LEA contours, letters, and Pacman, taking 45–60 s to determine a staircase algorithm threshold. Despite the longer testing time, a cohort of 497 children aged 6–11 years were all able to complete testing with the app. However, the thresholds obtained from the app were statistically dissimilar to those found with conventional TNO and Weiss EKW tests, but clinically similar, and the correlations between the tests were only moderate (r = 0.49–0.53) [37].
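Testing distance matters for screen-based stereotests because the smallest disparity that can be drawn is limited by the pixel size. The sketch below estimates the disparity produced by a one-pixel horizontal offset between the two eyes' images at a given viewing distance. The 264 pixels-per-inch value is an assumed screen density, and sub-pixel rendering techniques, which some apps may use to present finer disparities, are ignored.

```python
import math


def disparity_arcsec(offset_mm: float, distance_m: float) -> float:
    """Angular disparity (seconds of arc) for a horizontal on-screen offset."""
    angle_rad = 2 * math.atan(offset_mm / (2 * distance_m * 1000))
    return math.degrees(angle_rad) * 3600


def one_pixel_disparity_arcsec(pixels_per_inch: float, distance_m: float) -> float:
    """Disparity produced by shifting one image by a single whole pixel."""
    pixel_pitch_mm = 25.4 / pixels_per_inch
    return disparity_arcsec(pixel_pitch_mm, distance_m)


for distance in (0.5, 3.0):
    step = one_pixel_disparity_arcsec(pixels_per_inch=264, distance_m=distance)
    print(f"{distance} m: one pixel = {step:.1f} seconds of arc")
```

Under these assumptions a one-pixel offset corresponds to roughly 40 seconds of arc at 50 cm but under 7 seconds of arc at 3 m, so finer stereoacuity thresholds are easier to present at longer viewing distances.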

Colour vision

Colour rendering on iPhones is considered sufficient for clinical assessment, although five Ishihara apps (displaying varying numbers of Ishihara plates) were found to vary in their colour accuracy [38]. Although it has been suggested that the effect on colour vision might be underestimated under simulated vision loss [39], a comparison of the Eye Handbook colour vision test on an iPhone and a Samsung (Android) phone found no significant difference from a paper version [40], with a 92% sensitivity and 100% specificity [41]. However, while the Eye2Phone Ishihara test was found to have a high sensitivity (100%), specificity (95%) and coefficient of agreement (r = 0.95), the Colour Vision Test app was much poorer (100% sensitivity, 55% specificity and coefficient of agreement r = 0.535) [42]. Displaying the test on a Liquid Crystal Display (LCD) monitor also resulted in a 100% sensitivity and 99% specificity [43].

A comparison between paper and tablet-displayed Velhagen/Broschmann/Kuchenbecker colour plates found 83% coincidence in findings in those with colour vision deficiencies and 89% in those considered colour normal [44]. An app version of the Farnsworth-Munsell 100-Hue cap ordering test showed poor comparability of results with the original analogue version [45]. A web-based Colour Assessment and Diagnosis test from City University (which uses random luminance masking) showed a high sensitivity (93–100%), specificity (83–100%) and coefficient of agreement (0.83–0.96) compared to the Nagel anomaloscope “gold standard”, Ishihara and the FM-100 hue, although the HRR only had a specificity of 33% and a coefficient of repeatability of 0.33 [46].

A novel gamified tablet-based ColourSpot test requires children, as young as four, to tap the spot in the grey background ‘sky’ to reveal an animation. Each target type (protan, deutan, tritan) is presented at high saturation and if successfully identified, its saturation is multiplied by a factor of 0.5 for the next trial, thus decreasing the saturation of targets of that type; if a distractor is tapped, the saturations of all three target types are multiplied by a factor of 1.5 for the next trial, thus making the next trial easier. This approach achieved a 100% sensitivity and 97% specificity for classifying a colour vision defect compared to the Ishihara [47].
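The saturation update rule described above can be written as a short sketch. The multipliers of 0.5 and 1.5 are those given in the text; the function name, the use of a saturation scale from 0 to 1, and the capping of saturation at its starting maximum are assumptions made for illustration rather than details of the published app.

```python
TARGET_TYPES = ("protan", "deutan", "tritan")


def update_saturations(saturations: dict, tapped: str, max_saturation: float = 1.0) -> dict:
    """Return the target saturations for the next trial.

    A correctly tapped target halves the saturation of that target type only.
    Tapping a distractor multiplies all three by 1.5 (capped, by assumption,
    at max_saturation), making the next trial easier.
    """
    updated = dict(saturations)
    if tapped in TARGET_TYPES:
        updated[tapped] = saturations[tapped] * 0.5
    else:
        for target in TARGET_TYPES:
            updated[target] = min(max_saturation, saturations[target] * 1.5)
    return updated


state = {target: 1.0 for target in TARGET_TYPES}
state = update_saturations(state, "protan")      # protan target found
state = update_saturations(state, "distractor")  # distractor tapped
print(state)
```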

Visual field assessment

Smartphones can be mounted in head-mounted visors to allow targets to be presented across approximately a 30° visual field. While this coverage is insufficient to detect peripheral defects, it has been established that only 1–2% of defects that are not glaucomatous have abnormalities beyond 30° without an additional central defect [48]. Smartphone-based campimetry (Sb-C) [49] has been used to simulate a 59 test position threshold Octopus G1 programme, showing a high (r = 0.815) correlation and moderate (r = 0.591) re-test reliability. “Visual Fields Easy” (VFE) is a suprathreshold iPad application which, when compared against the Humphrey Frequency Doubling Technology N-30-5, took on average 2.4 min longer and only had a sensitivity of 67% and specificity of 77% [50]. Other studies compared the VFE with the Humphrey SITA Fast 24-2, finding that sensitivity (78/90/97%) and specificity (53/48/70%) increased with glaucoma severity from mild to moderate to severe (respectively) [51, 52]. Another approach has been the Melbourne Rapid Fields (MRF), with a radial test pattern comprising 66 test locations [53], which was similar in speed to the Humphrey 24-2 SITA Fast and faster than SITA Standard, was highly repeatable and had an intraclass correlation coefficient of 0.71–0.93 [54, 55].
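How much of the visual field a flat display can cover depends on its size and the viewing distance. The following sketch applies simple flat-screen (tangent) geometry with hypothetical dimensions; it ignores the lenses used in head-mounted visors and any use of off-centre fixation to extend coverage.

```python
import math


def field_extent_deg(display_width_mm: float, viewing_distance_mm: float) -> float:
    """Total horizontal visual angle of a flat display fixated at its centre."""
    return math.degrees(2 * math.atan(display_width_mm / (2 * viewing_distance_mm)))


def eccentricity_to_offset_mm(eccentricity_deg: float, viewing_distance_mm: float) -> float:
    """On-screen distance from fixation needed to place a target at an eccentricity."""
    return viewing_distance_mm * math.tan(math.radians(eccentricity_deg))


# Hypothetical example: a 200 mm wide tablet display viewed at 330 mm.
print(round(field_extent_deg(200, 330), 1))
# Offset from fixation needed for a 30 degree target at a 300 mm viewing distance.
print(round(eccentricity_to_offset_mm(30, 300), 1))
```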

As part of the Vision Impairment Screening Assessment (VISA) Stroke Vision app, the dynamic visual field test involves the user viewing a red fixation cross and tapping the screen when they detect a black target moving towards this fixation point [56]; this demonstrated a 79% sensitivity and 88% specificity compared to Goldmann or Octopus kinetic perimetry [57] and 0.70 Kappa agreement with confrontation fields [58].

As part of Read-Right post-stroke therapy, their app binocularly assesses visual fields with an adaptive algorithm testing of six points in each hemi-field, at 1, 2.5, 5 and 10° on the horizontal meridian and two additional points above and below the horizontal meridian at 2.5°; points are displayed for 100 ms at 5 dB suprathreshold, with the points closer to fixation having reduced contrast. Compared with Humphrey 10-2 and 24-2 perimetry in patients with unilateral homonymous visual field defects, the sensitivity (≥79%) and specificity (≥75%) for points along the horizontal meridian were best [59].

Conclusions

Mobile apps can mimic most traditionally paper-based tests of visual function. They can also benefit from more even illumination from the backlit screen, randomisation, and use of the in-built sensors to monitor aspects such as working distance and screen tilt. These features are particularly important when home assessment is advocated. However, this review only assessed those apps that have been evaluated in the scientific literature which constitute just a small percentage of the total number of apps available for tablets and smartphones. The consequences of incorrectly recorded visual function and using this to inform clinical management are serious, and therefore clinicians must check on the validity of a mobile app before adopting it as part of clinical practice.

References

Williams MA, Moutray TN, Jackson AJ. Uniformity of visual acuity measures in published studies. Invest Ophthalmol Vis Sci. 2008;49:4321–7.

World Health Organisation. Blindness and vision impairment. 2023. Available online at: https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment. Accessed 28th December 2023.

Benítez-Del-Castillo J, Labetoulle M, Baudouin C, Rolando M, Akova YA, Aragona P, et al. Visual acuity and quality of life in dry eye disease: proceedings of the OCEAN group meeting. Ocul Surf. 2017;15:169–78.

Durán TL, García-Ben A, Méndez VR, Alcázar LG, García-Ben E, García-Campos JM. Study of visual acuity and contrast sensitivity in diabetic patients with and without non-proliferative diabetic retinopathy. Int Ophthalmol. 2021;41:3587–92.

Koch DD, Kohnen T, Obstbaum SA, Rosen ES. Format for reporting refractive surgical data. J Cataract Refract Surg. 1998;24:285–7.

Caltrider D, Gupta A, Tripathy K. Evaluation of Visual Acuity. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2023 Jan-. Available from: https://www.ncbi.nlm.nih.gov/books/NBK564307/. Accessed 28th December 2023.

Bailey IL, Lovie JE. New design principles for visual acuity letter charts. Am J Optom Physiol Opt. 1976;53:740–5.

Pathipati AS, Wood EH, Lam CK, Sáles CS, Moshfeghi DM. Visual acuity measured with a smartphone app is more accurate than Snellen testing by emergency department providers. Graefe’s Arch Clin Exp Ophthalmol. 2016;254:1175–80.

Andersen T, Jeremiah M, Thamane K, Littman-Quinn R, Dikai Z, Kovarik C, et al. Implementing a school vision screening program in botswana using smartphone technology. Telemed E-Health. 2020;26:255–8.

Bastawrous A, Rono HK, Livingstone IA, Weiss HA, Jordan S, Kuper H, et al. Development and validation of a smartphone-based visual acuity test (peek acuity) for clinical practice and community-based fieldwork. JAMA Ophthalmol. 2015;133:930–7.

Davara ND, Chintoju R, Manchikanti N, Thinley C, Vaddavalli PK, Rani PK, et al. Feasibility study for measuring patients’ visual acuity at home by their caregivers. Indian J Ophthalmol. 2022;70:2125–30.

Rono HK, Bastawrous A, Macleod D, Wanjala E, Di Tanna GL, Weiss HA, et al. Smartphone-based screening for visual impairment in Kenyan school children: a cluster randomised controlled trial. Lancet Glob Health. 2018;6:e924–e932.

de Venecia B, Bradfield Y, Trane RM, Bareiro A, Scalamogna M. Validation of peek acuity application in pediatric screening programs in Paraguay. Int J Ophthalmol. 2018;11:1384–9.

Suo L, Ke X, Zhang D, Qin X, Chen X, Hong Y, et al. Use of mobile apps for visual acuity assessment: systematic review and meta-analysis. JMIR Mhealth Uhealth. 2022;10:e26275.

Rosin B, Banin E, Sahel JA. Current status of clinical trials design and outcomes in retinal gene therapy. Cold Spring Harb Perspect Med. 2023;a041301. https://doi.org/10.1101/cshperspect.a041301. [Epub ahead of print].

Gupta N, Wolffsohn JS, Naroo SA. Comparison of near visual acuity and reading metrics in presbyopia correction. J Cataract Refract Surg. 2009;35:1401–9.

Yeatman JD, White AL. Reading: the confluence of vision and language. Annu Rev Vis Sci. 2021;7:487–517.

Sacconaghi M. Why the American public supports twenty-first century learning. N. Dir Youth Dev. 2006;39-45:11–32.

Radner W. Reading charts in ophthalmology. Graefes Arch Clin Exp Ophthalmol. 2017;255:1465–82.

Radner W, Willinger U, Obermayer W, Mudrich C, Velikay-Parel M, Eisenwort B. A new reading chart for simultaneous determination of reading vision and reading speed. Klin Monbl Augenheilkd. 1998;213:174–81.

Stifter E, Konig F, Lang T, Bauer P, Richter-Muksch S, Velikay-Parel M, et al. Reliability of a standardized reading chart system: variance component analysis, test-retest and inter-chart reliability. Graefes Arch Clin Exp Ophthalmol. 2004;242:31–39.

Legge GE, Ross JA, Luebker A, LaMay JM. Psychophysics of reading. VIII. The Minnesota low-vision reading test. Optom Vis Sci. 1989;66:843–53.

Patel B, Elliott DB, Whitaker D. Optimal reading speed in simulated cataract: development of a potential vision test. Ophthalmic Physiol Opt. 2001;21:272–6.

Calabrese A, To L, He Y, Berkholtz E, Rafian P, Legge GE. Comparing performance on the MNREAD iPad application with the MNREAD acuity chart. J Vis. 2018;18:8.

Kingsnorth A, Wolffsohn JS. Mobile app reading speed test. Br J Ophthalmol. 2015;99:536–9.

Almaliotis D, Athanasopoulos GP, Almpanidou S, Papadopoulou EP, Karampatakis V. Design and validation of a new smartphone-based reading speed app (GDRS-Test) for the greek speaking population. Clin Optom. 2022;14:111–24.

Pelli D, Robson JG, Wilkins AJ. The design of a new letter chart for measuring contrast sensitivity. Clin Vis Sci. 1988;2:187–99.

Corwin TR, Richman JE. Three clinical tests of the spatial contrast sensitivity function: a comparison. Am J Optom Physiol Opt. 1986;63:413–8.

Campbell FW, Robson JG. Application of Fourier analysis to the visibility of gratings. J Physiol. 1968;197:551–66.

Hwang AD, Peli E. Positive and negative polarity contrast sensitivity measuring app. IS&T International Symposium on Electronic Imaging. 2016;2016.

Brucker J, Bhatia V, Sahel JA, Girmens JF, Mohand-Said S. Odysight: a mobile medical application designed for remote monitoring-a prospective study comparison with standard clinical eye tests. Ophthalmol Ther. 2019;8:461–76.

Habtamu E, Bastawrous A, Bolster NM, Tadesse Z, Callahan EK, Gashaw B, et al. Development and validation of a smartphone-based contrast sensitivity test. Transl Vis Sci Technol. 2019;8:13.

Rodríguez-Vallejo M, Remón L, Monsoriu JA, Furlan WD. Designing a new test for contrast sensitivity function measurement with iPad. J Optom. 2015;8:101–8.

Kingsnorth A, Drew T, Grewal B, Wolffsohn JS. Mobile app Aston contrast sensitivity test. Clin Exp Optom. 2016;99:350–5.

Tardif J, Watson MR, Giaschi D, Gosselin F. The curve visible on the campbell-robson chart is not the contrast sensitivity function. Front Neurosci. 2021;15:626466.

Rodríguez-Vallejo M, Ferrando V, Montagud D, Monsoriu JA, Furlan WD. Stereopsis assessment at multiple distances with an iPad application. Displays. 2017;50:35–40.

Bonfanti S, Gargantini A, Esposito G, Facchin A, Maffioletti M, Maffioletti S. Evaluation of stereoacuity with a digital mobile application. Graefes Arch Clin Exp Ophthalmol. 2021;259:2843–8.

Dain SJ, AlMerdef A. Colorimetric evaluation of iPhone apps for colour vision tests based on the Ishihara test. Clin Exp Optom. 2016;99:264–73.

Zhao J, Fliotsos MJ, Ighani M, Eghrari AO. Comparison of a smartphone application with ishihara pseudoisochromatic plate for testing colour vision. Neuro-Ophthalmol. 2019;43:235–9.

Khizer MA, Ijaz U, Khan TA, Khan S, Liaqat T, Jamal A, et al. Smartphone color vision testing as an alternative to the conventional Ishihara Booklet. Cureus. 2022;14:e30747.

Ozgur OK, Emborgo TS, Vieyra MB, Huselid RF, Banik R. Validity and acceptance of color vision testing on smartphones. J Neuro-Ophthalmol. 2018;38:13–16.

Sorkin N, Rosenblatt A, Cohen E, Ohana O, Stolovitch C, Dotan G. Comparison of ishihara booklet with color vision smartphone applications. Optom Vis Sci. 2016;93:667–72.

Marey HM, Semary NA, Mandour SS. Ishihara electronic color blindness test: an evaluation study. 2015;3:67–75.

Tsimpri P, Kuchenbecker J. Investigation of color vision using pigment color plates and a tablet PC. Klinische Monatsblatter Fur Augenheilkd. 2016;233:856–9.

Liebold F, Schmitz J, Hinkelbein J. Evaluation of an online colour vision assessment tool. Flugmed Tropenmedizin Reisemedizin. 2022;29:109–12.

Seshadri J, Christensen J, Lakshminarayanan V, Bassi C. Evaluation of the new web-based “Colour Assessment and Diagnosis” test. Optom Vis Sci. 2005;82:882–5.

Tang TR, Alvaro L, Alvarez J, Maule J, Skelton A, Franklin A, et al. ColourSpot, a novel gamified tablet-based test for accurate diagnosis of color vision deficiency in young children. Behav Res Methods. 2022;54:1148–60.

Wirtschafter JD, Hard-Boberg AL, Coffman SM. Evaluating the usefulness in neuro-ophthalmology of visual field examinations peripheral to 30 degrees. Trans Am Ophthalmol Soc. 1984;82:329–57.

Grau E, Andrae S, Horn F, Hohberger B, Ring M, Michelson G. Teleglaucoma using a new smartphone-based tool for visual field assessment. J Glaucoma. 2023;32:186–94.

Kitayama K, Young AG, Ochoa A, Yu F, Wong KYS, Coleman AL. The agreement between an iPad visual field app and Humphrey frequency doubling technology in visual field screening at health fairs. J Glaucoma. 2021;30:846–50.

Ichhpujani P, Thakur S, Sahi RK, Kumar S. Validating tablet perimetry against standard Humphrey Visual Field Analyzer for glaucoma screening in Indian population. Indian J Ophthalmol 2021;69:87–91.

Johnson CA, Thapa S, Kong YXG, Robin AL. Performance of an iPad application to detect moderate and advanced visual field loss in Nepal. Am J Ophthalmol. 2017;182:147–54.

Vingrys AJ, Healey JK, Liew S, Saharinen V, Tran M, Wu W, et al. Validation of a tablet as a tangent perimeter. Transl Vis Sci Technol. 2016;5:3.

Prea SM, Kong YXG, Mehta A, He MG, Crowston JG, Gupta V, et al. Six-month longitudinal comparison of a portable tablet perimeter with the Humphrey field analyzer. Am J Ophthalmol. 2018;190:9–16.

Kong YXG, He M, Crowston JG, Vingrys AJ. A comparison of perimetric results from a tablet perimeter and Humphrey field analyzer in glaucoma patients. Transl Vis Sci Technol. 2016;5:2–2.

Tarbert CM, Livingstone IA, Weir AJ. Assessment of visual impairment in stroke survivors. Annu Int Conf IEEE Eng Med Biol Soc. 2014;2014:2185–8.

Quinn TJ, Livingstone I, Weir A, Shaw R, Breckenridge A, McAlpine C et al. Accuracy and feasibility of an android-based digital assessment tool for post stroke visual disorders-The StrokeVision App. Front Neurol. 2018;9:146.

Fiona JR, Lauren H, Claire H, Alison B, Victoria S, Terry P, et al. Vision screening assessment (VISA) tool: diagnostic accuracy validation of a novel screening tool in detecting visual impairment among stroke survivors. BMJ Open. 2020;10:e033639.

Nato K, Yean-Hoon O, Maurice MB, James A, Plant GT, Alexander PL. A ‘web app’ for diagnosing hemianopia. J Neurol Neurosurg Psychiatry. 2012;83:1222.

Andersen T, Jeremiah M, Thamane K, Littman-Quinn R, Dikai Z, Kovarik C, et al. Implementing a School Vision Screening Program in Botswana Using Smartphone Technology. Telemed J E Health. 2020;26:255–8.

Satgunam P, Thakur M, Sachdeva V, Reddy S, Rani PK. Validation of visual acuity applications for teleophthalmology during COVID-19. Indian Journal of Ophthalmology. 2021;69:385–90.

Bhaskaran A, Babu M, Abhilash B, Sudhakar NA, Dixitha V. Comparison of smartphone application-based visual acuity with traditional visual acuity chart for use in tele-ophthalmology. Taiwan J Ophthalmol. 2022;12:155–63.

Davara N, Chintoju R, Manchikanti N, Thinley C, Vaddavalli P, Rani P, et al. Feasibility study for measuring patients' visual acuity at home by their caregivers. Indian Journal of Ophthalmology. 2022;70:2125–30.

Anitha J, Manasa M, Khanna NS, Apoorva N, Paul A. A Comparative Study on Peek (Smartphone Based) Visual Acuity Test and LogMAR Visual Acuity Test. TNOA Journal of Ophthalmic Science and Research. 2023;61:94–97.

Nik Azis NN, Chew FLM, Rosland SF, Ramlee A, Che-Hamzah J. Parents' performance using the AAPOS Vision Screening App to test visual acuity in Malaysian preschoolers. Journal of American Association for Pediatric Ophthalmology and Strabismus. 2019;23:268.e261–268.e266.

Han X, Scheetz J, Keel S, Liao C, Liu C, Jiang Y, et al. Development and Validation of a Smartphone-Based Visual Acuity Test (Vision at Home). Transl Vis Sci Technol. 2019;8:27.

Tiraset N, Poonyathalang A, Padungkiatsagul T, Deeyai M, Vichitkunakorn P, Vanikieti K. Comparison of visual acuity measurement using three methods: standard ETDRS chart, near chart and a smartphone-based eye chart application. Clinical Ophthalmology. 2021;15:859–69.

Hazari H, Curtis R, Eden K, Hopman WM, Irrcher I, Bona MD. Validation of the visual acuity iPad app Eye Chart Pro compared to the standard Early Treatment Diabetic Retinopathy Study chart in a low-vision population. Journal of Telemedicine and Telecare. 2022;28:680–6.

Raffa LH, Balbaid NT, Ageel MM. "Smart Optometry" phone-based application as a visual acuity testing tool among pediatric population. Saudi Medical Journal. 2022;43:946–53.

Racano E, Malfatti G, Pertile R, Delle Site R, Romanelli F, Nicolini A. A novel smartphone App to support the clinical practice of pediatric ophthalmology and strabismus: the validation of visual acuity tests. European Journal of Pediatrics. 2023;182:4007–13.

Karampatakis V, Almaliotis D, Talimtzi P, Almpanidou S. Design and Validation of a Novel Smartphone-Based Visual Acuity Test: The K-VA Test. Ophthalmology and Therapy. 2023;12:1657–70.

Katibeh M, Sanyam SD, Watts E, Bolster NM, Yadav R, Roshan A, et al. Development and Validation of a Digital (Peek) Near Visual Acuity Test for Clinical Practice, Community-Based Survey, and Research. Transl Vis Sci Technol. 2022;11.

Iskander M, Hu G, Sood S, Heilenbach N, Sanchez V, Ogunsola T, et al. Validation of the New York University Langone Eye Test Application, a Smartphone-Based Visual Acuity Test. Ophthalmology Science. 2022;2.

Kim DG, Webel AD, Blumenkranz MS, Kim Y, Yang JH, Yu SY, et al. A Smartphone-Based Near-Vision Testing System: Design, Accuracy, and Reproducibility Compared With Standard Clinical Measures. Ophthalmic Surgery Lasers and Imaging Retina. 2022;53:79–84.

Author information

Contributions

TB was responsible for designing the review protocol, conducting the search, screening potentially eligible studies, extracting and analysing data, interpreting results, updating reference lists and creating the ‘Summary of findings’ tables. JSW contributed to designing the review protocol and screening potentially eligible studies. He was responsible for writing elements of the report, extracting and analysing data and interpreting results. ALS contributed to designing the review protocol and screening potentially eligible studies. She was responsible for writing elements of the report, extracting and analysing data, interpreting results and creating the ‘Summary of findings’ tables.

Ethics declarations

Competing interests

JSW is a Director of Wolffsohn Research Ltd which created two of the mobile apps included in the review.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bano, T., Wolffsohn, J.S. & Sheppard, A.L. Assessment of visual function using mobile Apps. Eye (2024). https://doi.org/10.1038/s41433-024-03031-2

DOI: https://doi.org/10.1038/s41433-024-03031-2