Abstract

The resolution of conventional optical equipment is always restricted by the diffraction limit, and improving on this was previously considered improbable. Optical super-resolution imaging, which has recently experienced rapid growth and attracted increasing global interest, promises applications in many domains, benefiting fields such as biology, medicine and materials research. This review discusses the contributions of different researchers who identified the diffraction barrier and attempted to realize optical super-resolution. This is followed by a personal viewpoint on the development of optical nanoscopy in recent decades and the road towards the next generation of optical nanoscopy.


INTRODUCTION

Optical microscopy is regarded as one of the most significant tools in the development of science and technology. Since its invention in the late sixteenth century, the microscope has earned a reputation for enabling the visualization of objects (or fine structures) that are invisible to the naked eye, thus shaping disciplines such as biology, medicine and materials science. Its ability to delineate the boundaries of microstructures, measure surface morphology and localize specific molecular distributions in vivo has driven modern research. Without optical microscopy, our knowledge of the ‘microworld’ would be severely impaired.

Evidently, the image quality, in particular the resolution, is the core determinant of the performance of a given microscope. It was not until nearly 300 years after the microscope's invention, however, that the diffractive nature of light and its potential influence on the resolution were seriously considered. After G. Airy described his ‘Airy disc’ in 1835 (the typical diffraction pattern created by light focused by a lens with a circular aperture), Abbe1 established himself as the widely recognized pioneer who explicitly described and formalized the diffraction limit for the first time. Although his landmark 1873 paper1 did not contain even a simple formula, Abbe clearly stated that the resolution of a general microscope was limited to approximately half of the working wavelength divided by the numerical aperture (NA) of the objective lens. Inspired by Abbe, the famous equation that defines the diffraction limit was eventually derived by von Helmholtz2 and later confirmed experimentally by Stephenson3 in 1877. The diffraction limit is generally expressed as:

$$d = \frac{\lambda}{2n\sin\theta} \qquad (1)$$

where λ is the working wavelength, n is the refractive index of the medium and θ is the half angle over which the objective can gather light from the specimen. The product n sin θ is called the NA of the objective lens. In the axial direction, the resolution is even worse, and Equation (1) should be rewritten as:4

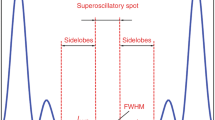
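As a quick numerical check, the Python sketch below evaluates Equation (1) for a typical oil-immersion objective (n = 1.518, θ = 67.3°, so NA ≈ 1.40). Because the axial expression is reproduced here only as an image, the sketch assumes the commonly quoted approximation d_z = 2λ/(n sin θ)², which may differ in detail from the form in ref. 4; the function names are illustrative.

```python
import math

def numerical_aperture(n: float, half_angle_deg: float) -> float:
    """NA = n * sin(theta), with theta the half-angle of the collected light cone."""
    return n * math.sin(math.radians(half_angle_deg))

def lateral_limit(wavelength_nm: float, n: float, half_angle_deg: float) -> float:
    """Abbe lateral limit, Equation (1): d = lambda / (2 n sin(theta))."""
    return wavelength_nm / (2.0 * numerical_aperture(n, half_angle_deg))

def axial_limit(wavelength_nm: float, n: float, half_angle_deg: float) -> float:
    """Assumed axial form d_z = 2 lambda / NA**2 (a common textbook approximation)."""
    return 2.0 * wavelength_nm / numerical_aperture(n, half_angle_deg) ** 2

# Green light (500 nm) through an oil-immersion objective with NA ~ 1.40
print(f"lateral: {lateral_limit(500, 1.518, 67.3):.0f} nm, "
      f"axial: {axial_limit(500, 1.518, 67.3):.0f} nm")
```

With these values the axial limit comes out nearly three times coarser than the lateral one, which is the gap the axial-resolution techniques discussed below try to close.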

Extensive discussion of the relationship between diffraction and resolution continued after Abbe’s diffraction limit was described. A pressing practical problem, however, was determining an appropriate benchmark for resolution, as it was particularly difficult to compare instruments with different point spread functions (PSFs). Of the many resolution criteria that were proposed, the Rayleigh criterion (1874)5 and the full width at half maximum (FWHM) method proposed by Houston (1927)6 gradually became the preferred choices. The Rayleigh criterion can be stated concisely as follows: two point sources are regarded as just resolved when the principal diffraction maximum of one image coincides with the first minimum of the other. In contrast, the FWHM method defines the resolution as the distance between the two points of the PSF at which the intensity equals half of the maximum PSF value. The FWHM method has an additional merit over the Rayleigh criterion: it remains robust, and therefore reliable, for microscopes in which the intensity distribution (of the focal spot) does not fall to zero, which is common in practice owing to background noise, an imperfect polarization state and/or lens aberrations.
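For an aberration-free circular pupil (a scalar, paraxial assumption), both criteria can be computed from the Airy intensity pattern (2J1(v)/v)², where v = 2π·NA·r/λ. The plain-Python sketch below evaluates J1 from its integral representation, then finds the first zero (the Rayleigh distance) and the half-maximum radius. It should reproduce the familiar 0.61 λ/NA and about 0.51 λ/NA, illustrating that the FWHM quotes a somewhat smaller number for the same PSF. The helper names are illustrative.

```python
import math

def bessel_j1(x: float, steps: int = 2000) -> float:
    """J1(x) from its integral form (1/pi) * integral_0^pi cos(t - x sin t) dt, via Simpson's rule."""
    h = math.pi / steps                      # steps must be even for Simpson's rule
    total = 0.0
    for i in range(steps + 1):
        t = i * h
        weight = 1 if i in (0, steps) else (4 if i % 2 else 2)
        total += weight * math.cos(t - x * math.sin(t))
    return total * h / (3.0 * math.pi)

def airy_intensity(v: float) -> float:
    """Normalized Airy pattern (2 J1(v) / v)^2 in the optical coordinate v = 2*pi*NA*r/lambda."""
    if abs(v) < 1e-12:
        return 1.0
    return (2.0 * bessel_j1(v) / v) ** 2

def find_root(f, lo: float, hi: float, tol: float = 1e-10) -> float:
    """Bisection: root of f in [lo, hi], assuming f changes sign exactly once there."""
    f_lo = f(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

v_zero = find_root(bessel_j1, 3.0, 4.5)                           # first dark ring
v_half = find_root(lambda v: airy_intensity(v) - 0.5, 1.0, 2.5)  # half-maximum radius

# Convert from v to r in units of lambda/NA: r = v / (2*pi)
rayleigh = v_zero / (2 * math.pi)   # Rayleigh distance
fwhm = 2 * v_half / (2 * math.pi)   # full width at half maximum
print(f"Rayleigh: {rayleigh:.3f} lambda/NA, FWHM: {fwhm:.3f} lambda/NA")
```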

Abbe’s work is enormously influential because it not only identifies and defines the diffraction limit (mathematically) but also supplies a fundamental guideline for enhancing the resolution and improving sample visualization. By shortening the working wavelength or increasing the NA of the system, the resolution of the microscope can be improved to some extent. Based on this principle, the prototype ultraviolet microscope was constructed by Köhler7 in 1904, while X-rays8,9 were later introduced into micro-imaging systems. The natural progression of this work was the realization of electron microscopy,10 in which electrons, with a much shorter de Broglie wavelength, are used for imaging. Improvements of the NA, on the other hand, began with the first oil immersion lens,11 which appeared even before Abbe’s time (although the original intention was to correct aberrations). By the 1990s, the technique of filling the object space with a solid material of high refractive index (for example, the solid immersion lens (SIL)12) yielded higher magnification and spatial resolution than other commercially available objective lenses. However, both strategies have drawbacks: (i) ultraviolet light is not always desirable owing to the high risk of irreversible damage to samples, especially biological cells (the enhanced scattering in tissue also results in a much smaller penetration depth); and (ii) a high refractive index is usually accompanied by absorption and chromatic dispersion, which significantly degrade the image quality.
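To put "much shorter" into numbers, the sketch below evaluates the relativistic de Broglie wavelength λ = h / sqrt(2meV(1 + eV/(2mc²))) for an electron accelerated through a voltage V. The 100 kV value is only an assumed, typical transmission-electron-microscope setting; the constants are CODATA values and the function name is illustrative.

```python
import math

PLANCK = 6.62607015e-34              # J s
ELECTRON_MASS = 9.1093837015e-31     # kg
ELEMENTARY_CHARGE = 1.602176634e-19  # C
SPEED_OF_LIGHT = 299792458.0         # m/s

def electron_wavelength(voltage: float) -> float:
    """Relativistic de Broglie wavelength (m) of an electron accelerated through `voltage` volts."""
    energy = ELEMENTARY_CHARGE * voltage                  # kinetic energy, J
    rest_energy = ELECTRON_MASS * SPEED_OF_LIGHT ** 2     # m c^2, J
    momentum = math.sqrt(2 * ELECTRON_MASS * energy * (1 + energy / (2 * rest_energy)))
    return PLANCK / momentum

lam_e = electron_wavelength(100e3)
print(f"100 kV electron: {lam_e * 1e12:.2f} pm, "
      f"about {500e-9 / lam_e:.1e} times shorter than 500 nm light")
```

A wavelength of a few picometres is roughly five orders of magnitude below visible light, which is why electron microscopes are limited by lens aberrations rather than by Equation (1).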

These dilemmas drove the search for alternatives to satisfy the increasing demand for improved resolution. One inspired idea was to reshape the PSF of the focal spot to decrease its FWHM: the apodization method.13 Proposed in 1952 by Di Francia,14 this method sharpens the central maximum of the focal spot at the expense of larger side lobes. This defect made it unsuitable for wide-field optical microscopes, as it creates artifacts and blurs the image. Nevertheless, it indirectly led to the advent of confocal scanning laser microscopy,15 regarded as one of the most important developments of the mid-twentieth century. The introduction of a pinhole into the optical path fundamentally changed the imaging mode of conventional microscopy: the entire image of the sample is now obtained either by rastering the sample or by scanning a laser beam or a pinhole disk. The PSF of the confocal scanning laser microscopy system is given by:16

$$H_{\text{conf}}(t,w) = H_{\text{conv1}}(t,w)\left[H_{\text{conv2}}(t,w) \otimes P(t,w)\right] \qquad (3)$$

where Hconv1 and Hconv2 are the PSFs of illumination and detection, respectively; P is the aperture function of the pinhole; ⊗ denotes convolution; and t and w are the unit vectors in terms of optical coordinates. If the pinhole is infinitely small, Equation (3) can be further simplified as:16

$$H_{\text{conf}}(t,w) = H_{\text{conv1}}(t,w)\,H_{\text{conv2}}(t,w) \qquad (4)$$

In this way, the resolution of the confocal microscope can be approximately 1.4 times better than that of conventional microscopy in both the lateral and axial directions.16 In addition, light emanating from out-of-focus planes is blocked by the pinhole, so that only in-focus light is detected. Hence, the resolution and contrast that would otherwise be degraded by out-of-focus light can be recovered with a confocal microscope. Confocal scanning laser microscopy, combined with fluorescence microscopy developed by Heimstädt7 and others years before, remains indispensable to this day as a powerful tool to image, localize and identify target molecules labeled by fluorophores, and is now a fixture of modern biological laboratories.
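The factor of about 1.4 can be rationalized with the small-pinhole product form of the confocal PSF: if the illumination and detection PSFs are approximated as identical Gaussians (this sketch ignores the Stokes shift and the exact Airy shape), their product is a Gaussian narrower by √2. The NumPy sketch below checks this numerically; the 100 nm width is an arbitrary choice.

```python
import numpy as np

def fwhm(x: np.ndarray, y: np.ndarray) -> float:
    """FWHM of a single-peaked profile, linearly interpolating the two half-maximum crossings."""
    half = y.max() / 2.0
    above = np.nonzero(y >= half)[0]
    i0, i1 = above[0], above[-1]
    left = np.interp(half, [y[i0 - 1], y[i0]], [x[i0 - 1], x[i0]])
    right = np.interp(half, [y[i1 + 1], y[i1]], [x[i1 + 1], x[i1]])
    return float(right - left)

x = np.linspace(-1000.0, 1000.0, 4001)      # lateral coordinate, nm (0.5 nm steps)
sigma = 100.0                               # assumed Gaussian width of each PSF, nm
h_conv = np.exp(-x**2 / (2 * sigma**2))     # conventional PSF (illumination = detection)
h_conf = h_conv * h_conv                    # point-pinhole confocal PSF: product of the two

ratio = fwhm(x, h_conv) / fwhm(x, h_conf)
print(f"confocal PSF is {ratio:.3f} times narrower")
```

With real Airy-shaped PSFs and a finite pinhole the gain is somewhat smaller, which is why the text says "approximately" 1.4.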

To improve the axial resolution and enable optical sectioning and three-dimensional (3D) reconstruction, new techniques have been developed since the 1980s. For example, in 1983, Axelrod17 set up the first total internal reflection fluorescence (TIRF) microscope. He exploited properties of total internal reflection, namely the small penetration depth and exponential decay of the evanescent wave intensity, to produce exquisite z-axis sensitivity. Using two opposing objective lenses to expand the total aperture, and thus enhance the axial resolution, was another popular approach. Examples include 4Pi microscopy (1991),18,19 standing wave microscopy (1993),20 incoherent interference illumination image interference microscopy (I5M, 1995)21 and image inverting interferometry (2009).22 Another technique is based on setting the illumination path perpendicular to the detection path: light sheet microscopy (1993),23 which has the advantage of visualizing large specimens. Besides the advent of new optical instruments, mathematical algorithms also contributed to resolution enhancement. Among the numerous algorithms developed, the key advance was made in 1983 by Agard and Sedat,24 who published the deconvolution process: a mathematical method for deblurring an image.
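The "small penetration depth" exploited in TIRF can be estimated from the standard expression for the 1/e depth of the evanescent intensity, d = λ / (4π·sqrt(n1² sin²θ − n2²)), which holds only above the critical angle. The values in the sketch below (488 nm excitation, a glass coverslip with n1 = 1.518, an aqueous sample with n2 = 1.33, 70° incidence) are illustrative assumptions.

```python
import math

def penetration_depth(wavelength_nm: float, n1: float, n2: float, theta_deg: float) -> float:
    """1/e depth of the evanescent intensity for total internal reflection at angle theta."""
    s = (n1 * math.sin(math.radians(theta_deg))) ** 2 - n2 ** 2
    if s <= 0:
        raise ValueError("incidence angle is at or below the critical angle")
    return wavelength_nm / (4 * math.pi * math.sqrt(s))

critical_angle = math.degrees(math.asin(1.33 / 1.518))
print(f"critical angle {critical_angle:.1f} deg, "
      f"depth at 70 deg: {penetration_depth(488, 1.518, 1.33, 70):.0f} nm")
```

A depth of well under 100 nm, set by the incidence angle rather than by the objective's NA, is what gives TIRF its thin excitation layer.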

Abbe deserves respect for his remarkable foresight and innovation, both of which fundamentally changed the course of microscopy research. On the other hand, Abbe’s enormous influence also restricted the imagination of scientists. In the century that followed, few attempts were made to overcome the diffraction limit despite the increasing need for better spatial resolution. Although technical progress continued, some of it inspiring (as mentioned above), the resolution was still diffraction-limited; it was still considered improbable to visualize fine structures below 100 nm using visible light. Only recently have scientists revisited this question and developed innovative strategies that can break Abbe’s diffraction limit.

EARLY ENDEAVORS

After the maximum theoretical resolution had been achieved, researchers faced a bottleneck in further enhancing the spatial resolution of microscopes that use visible light. Yet many objects and dynamic processes, such as viruses or biological and chemical reactions, and static properties such as the surface roughness of a material, require accurate imaging on the sub-100-nm scale. Substantially breaking the diffraction limit to obtain optical super-resolution had therefore become a central goal of instrumentation research.

In fact, researchers had long been aware of the theoretical basis for breaking the diffraction limit. The presence of evanescent waves was first postulated by Di Francia14 in 1942 and revealed experimentally in 1949. Unlike ordinary propagating waves, an evanescent wave is a near-field wave whose intensity decays exponentially with distance from the boundary. The wave vector of an evanescent wave satisfies:

$$k_\parallel^2 + k_\perp^2 = n^2k_0^2, \qquad k_\perp = i\sqrt{k_\parallel^2 - n^2k_0^2} \quad (k_\parallel > nk_0)$$

where k0 = 2π/λ, and k∥ and k⊥ are the wave vectors parallel and perpendicular to the boundary, respectively. It follows that the parallel component of an evanescent wave is larger than the wave number nk0 of any propagating wave in the medium and therefore carries information about finer details. In other words, subdiffraction-limited detail can be visualized once the evanescent wave is captured and projected to the far field. Nevertheless, owing to technical limits, another quarter century passed before far-field optical super-resolution was realized.
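The relation above also shows why the information in evanescent components is so hard to reach: the field decays as exp(−|k⊥|z). The sketch below computes the 1/e amplitude decay length for a lateral feature period p (taking k∥ = 2π/p) at 500 nm in air; both values are chosen only for illustration.

```python
import math

def evanescent_decay_length(period_nm: float, wavelength_nm: float, n: float = 1.0) -> float:
    """1/e amplitude decay length of a field component with lateral period `period_nm`.

    k_par = 2*pi/period and n*k0 = 2*pi*n/lambda. If k_par > n*k0, k_perp is imaginary and
    the field decays as exp(-sqrt(k_par**2 - (n*k0)**2) * z); otherwise it propagates (inf).
    """
    k_par = 2 * math.pi / period_nm
    k = 2 * math.pi * n / wavelength_nm
    if k_par <= k:
        return math.inf
    return 1.0 / math.sqrt(k_par ** 2 - k ** 2)

for period in (1000, 400, 100):
    print(period, "nm period ->", evanescent_decay_length(period, 500), "nm")
```

A 100 nm feature's evanescent field decays over roughly 16 nm, so any probe or lens that wants to use it must sit within a few tens of nanometres of the sample.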

The first application that exploited evanescent waves in microscopy was near-field scanning optical microscopy (NSOM)25 in 1972. By using a probe to scatter and collect the evanescent wave in the near field and recover high spatial frequencies (Figure 1), NSOM could resolve details with dimensions below the diffraction limit. This demonstrated the potential for molecular-scale spatial localization and imaging, and inspired the development of other probe-based techniques such as scanning tunneling microscopy (1982)26 and atomic force microscopy (1986).27 Although the resolution of images captured by NSOM is superior to that of most other microscopes, the probe-detection method makes the whole system complex and slows down imaging. The resolution of early NSOM was also limited by the size of the aperture (a result of a tradeoff between resolution and signal-to-noise ratio), but this problem was subsequently addressed by the introduction of metal tip probes.28,29,30

Schematic of the NSOM. The resolution of the NSOM relies on two key factors: the aperture size of the probe and the distance between the tip and the sample. The probe can act as the source (for near-field illumination), as the detector (for collection of the evanescent light scattered by the sample), or both. NSOM, near-field scanning optical microscopy.

Evanescent waves were then used as the illumination light in the TIRF microscope. Although the TIRF microscope does not exploit the full potential of the evanescent wave for super-resolution, its chief advantages are thin optical sectioning and lower background noise, both of which (in theory) rely on the decay of the excitation intensity along the direction perpendicular to the interface.

The three decades following the development of NSOM and TIRF have witnessed explosive advances in optical super-resolution with visible light. Irrespective of the technical diversity, illumination and detection are two basic modules that all nanoscopes should have, thereby providing a simple and clear way to catalog the available systems (Figure 2). The following sections will center on the discussion of these solutions and the way in which they overcame the unfavorable aspects of diffraction.

DETECTION OF THE EVANESCENT WAVE

Amplifying evanescent waves before they decay to an undetectable level is the core challenge for evanescent wave detection. In 2000, Pendry31 published his epochal paper predicting a ‘perfect lens’ made of a slab of material with a negative refractive index. Pendry explicitly derived formulae showing that such a perfect lens could focus all Fourier components of a two-dimensional (2D) image (i.e., both propagating and evanescent waves contribute to image formation). The transmission coefficients of the perfect lens for s- and p-polarized fields can be written as:
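
$$T_s = \frac{t\,t'\,e^{ik'_z d}}{1 - r'^2 e^{2ik'_z d}}, \qquad t = \frac{2\mu k_z}{\mu k_z + k'_z}, \quad t' = \frac{2k'_z}{k'_z + \mu k_z}, \quad r' = \frac{k'_z - \mu k_z}{k'_z + \mu k_z}$$

$$T_p = T_s\big|_{\mu\to\varepsilon}, \qquad \lim_{\varepsilon\to-1,\,\mu\to-1} T_s = \lim_{\varepsilon\to-1,\,\mu\to-1} T_p = e^{-ik_z d}$$
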
where d is the thickness of the slab; ε and μ are the dielectric function and the magnetic permeability, respectively; and kz and k′z are the wave vectors of the beam in vacuum and in the negative refractive index material, respectively. A practical obstacle to realizing the perfect lens is the lack of natural negative refractive index materials at visible wavelengths. A number of scientists have attempted to create artificial negative refractive index materials (metamaterials), while others have turned to alternatives. As Pendry himself indicated, if the light is purely p-polarized, the dependence of the transmission coefficient on μ can be eliminated. Hence, it is possible to partially realize (the function of) the perfect lens with a p-polarized incident beam and a thin metallic film. This conjecture was experimentally confirmed in 2005 by Zhang and his colleagues,32,33 who used a silver superlens (Figure 3a) to achieve subdiffraction-limited optical imaging. The evanescent wave was amplified in the thin metal film, thereby generating a subdiffraction image on the other side (Figure 3e), while the distance between the original object and the image was much larger than the typical penetration depth of the evanescent field. Two years later, Zhang’s group further optimized their original implementation and projected the image to the far field (Figure 3b). This far-field superlens (FSL)34 combined the original physical model with the theory of frequency shifting35 and enabled frequency conversion in a controllable fashion (Figure 3f). The high-frequency components, which carry the subdiffraction information corresponding to the evanescent waves, can thus be shifted back to the propagating mode. The conversion relationship can be expressed mathematically as:

$$k_{\text{out}} = k_{\text{in}} + m\,k_\Lambda$$

Schematic of (a) a superlens, (b) an FSL, (c) a 2D FSL and (d) the hyperlens proposed by Zhang’s group. (e–g) Theoretical comparison of the various optical lenses. Although all of these techniques originate from a similar principle, the technical details are actually quite different. In e, the superlens can only magnify the amplitude of evanescent waves. In f, FSL and 2D FSL extend beyond the conventional function by converting the frequencies to the propagation mode, so that super-resolution signals can readily be captured in the far-field. In g, the hyperlens is a piece of artificial meta-material, in which the wave vectors of evanescent waves reduce until they can propagate in free space. Figure reprinted with permission: a, Ref. 32, ©2005 AAAS; b, Ref. 34, ©2007 ACS; c, Ref. 36, ©2007 ACS; d, Ref. 37, ©2007 AAAS. 2D, two-dimensional; FSL, far-field superlens.

where kin is the wave vector of the object; kΛ=2π/Λ; m is the diffraction order; and Λ is the grating period of the FSL. The geometry of the FSL suppresses transmission into diffraction orders other than −1, so that ‘one-to-one’ conversion, essential for the unambiguous projection of subdiffraction details to the far field, can be realized. In the same year, Xiong et al.36 extended the super-resolution capability of the FSL to two dimensions using a modified, multilayer grating (Figure 3c). Yet magnification of subdiffraction features into the far field remained impossible until the hyperlens was demonstrated37 (Figure 3d). The hyperlens is a half-cylindrical cavity made of a sandwich-like (multilayer) artificial metamaterial, which magnifies the object while converting the evanescent waves into propagating waves. In this strongly anisotropic metamaterial, the wave vectors of the waves carrying the subdiffraction information gradually decrease as they travel outward, so that this information can be detected in air (Figure 3g).
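The one-to-one conversion can be illustrated in one dimension with kout = kin + m·kΛ and m = −1, working in units of k0 = 2π/λ. The sketch below assumes a hypothetical grating with kΛ = 2k0 (period λ/2) and a band of evanescent input frequencies from 1.1k0 to 2.9k0; it ignores transmission amplitudes and the other diffraction orders that the real FSL geometry suppresses.

```python
def shift_to_far_field(k_in: float, k_grating: float, m: int = -1) -> float:
    """k_out = k_in + m * k_Lambda; all wave vectors in units of k0 = 2*pi/lambda."""
    return k_in + m * k_grating

def propagates(k: float, n_out: float = 1.0) -> bool:
    """A lateral wave vector can reach the far field only if |k| < n_out * k0."""
    return abs(k) < n_out

K_GRATING = 2.0                                       # hypothetical grating: Lambda = lambda / 2
evanescent_band = [1.1 + 0.1 * i for i in range(19)]  # k_in from 1.1 k0 to 2.9 k0

shifted = [shift_to_far_field(k, K_GRATING) for k in evanescent_band]

# Without the grating (m = 0) none of these components would propagate ...
print(any(propagates(k) for k in evanescent_band))
# ... while the -1 order maps every one of them into the propagating band, monotonically
print(all(propagates(k) for k in shifted), shifted[0], shifted[-1])
```

Because the map is a simple monotonic shift, each far-field angle corresponds to exactly one object frequency, which is what lets the image be unscrambled afterwards.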

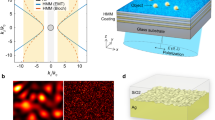

Another option originated from the SIL, in which the core device is a transparent dielectric sphere or hemisphere. The nanoscale SIL (nSIL)38,39 improves the resolution by 25% compared with a regular (macroscopic) SIL. Adding an annular aperture40 to the nSIL optimizes the resolution further, as the diffraction lobes are narrowed and subcritical rays are blocked, avoiding aberrations. To enhance the resolution further, in 2011, Wang et al.41 replaced the nSIL with a whole microsphere and illuminated it with white light (Figure 4a). The microsphere forms a magnified virtual image, and this imaging mode achieved 50-nm resolution (Figure 4b and 4c). To further optimize the image contrast and expand the field of view, the microsphere can be immersed in liquid42 or its refractive index can be increased.43 To avoid problems caused by evaporation of the liquid, it may be necessary to modify the surface wettability of the microsphere.44

Microsphere-based nanoscopy. (a) The configuration of the system with reflection-illumination mode. The image quality, especially the contrast and viewing field, can evidently be optimized when the microsphere is immersed in the liquid. The images of the sample are expected to be virtual and magnified, while the magnification factors are diverse when the patterns of the samples change (b, c). The ultimate resolution confirmed by the experiment in c is approximately 50 nm. Figure reprinted with permission: a and b, Ref. 42, ©2011 AIP; c, Ref. 41, ©2011 NPG.

ILLUMINATION WITH AN EVANESCENT WAVE

Apart from collecting the evanescent portion of light scattered or emitted by fine details, illuminating the sample with an evanescent wave can also lead to optical super-resolution. In contrast to the methods described in the previous section, the evanescent illumination here is converted into propagating waves by scattering (or other physical mechanisms), so that super-resolution details can be detected directly in the far field. For metallic samples, one promising route involves surface plasmon polaritons (SPPs).45 SPPs have a shorter wavelength than the incident light and produce local field enhancement along the metal/dielectric interface. The wave vector of SPPs can be written as:

$$k_{\text{SPP}} = k_0\sqrt{\frac{\varepsilon_d\,\varepsilon_m}{\varepsilon_d + \varepsilon_m}}$$

where k0 is the wave vector of the excitation light in vacuum, and εd and εm are the dielectric functions of the dielectric and the metal, respectively. The use of SPPs on gold films for optical super-resolution imaging was demonstrated by Smolyaninov et al.46,47 in 2005. In their studies, the authors deposited a glycerine microdroplet onto a gold film and used its surface boundary to obtain total internal reflection, thereby creating a magnifying parabolic mirror. The sample to be imaged was etched into the gold film under the droplet, and a magnified image was formed and observed through an ordinary microscope in the far field. Another SPP-based super-resolution method was proposed by Yuan et al.48 They used an optical vortex beam to excite SPPs and thus generate a structured illumination pattern for structured illumination microscopy (SIM). As the wavelength of SPPs is much shorter than that of the excitation light, a super-resolution image can be generated by measuring the resulting magnified Moiré patterns.
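The SPP dispersion relation can be evaluated directly. The sketch below assumes a gold/glycerine interface (the combination used in the droplet experiment) at 633 nm, with an approximate gold permittivity of −11.6 + 1.2i; this value is an assumption chosen for illustration, not a number taken from refs. 46 and 47. The result shows that the SPP wavelength is shorter than the wavelength of light even inside the dielectric.

```python
import cmath
import math

def spp_wavevector(k0: float, eps_d: complex, eps_m: complex) -> complex:
    """SPP dispersion at a flat metal/dielectric interface: k0 * sqrt(eps_d*eps_m / (eps_d+eps_m))."""
    return k0 * cmath.sqrt(eps_d * eps_m / (eps_d + eps_m))

wavelength = 633.0                 # nm, assumed He-Ne excitation wavelength
k0 = 2 * math.pi / wavelength
eps_gold = -11.6 + 1.2j            # assumed, approximate permittivity of gold near 633 nm
eps_glycerine = 1.47 ** 2          # assumed dielectric: glycerine with n ~ 1.47

k_spp = spp_wavevector(k0, eps_glycerine, eps_gold)
spp_wavelength = 2 * math.pi / k_spp.real    # wavelength of the surface wave, nm
decay_length = 1 / (2 * k_spp.imag)          # 1/e intensity propagation length, nm

print(f"SPP wavelength {spp_wavelength:.0f} nm vs {wavelength / 1.47:.0f} nm in glycerine; "
      f"propagation length {decay_length / 1000:.1f} um")
```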

SPP-based methods offer a conspicuous capability to image details beyond the diffraction limit, but their limitation is also obvious: the sample must be metallic or be coated with a thin metallic film. To escape this dilemma and expand the application scope, researchers have also attempted to use other kinds of evanescent (surface) waves. A recent approach to optical super-resolution uses near-field illumination by microfibers49 (Figure 5a) and offers a promising route to surface tomography imaging without localized field enhancement. In 2013, Hao et al.49 noticed that when a sample with subdiffraction details is illuminated by an external evanescent field, the spatial frequencies are passively shifted to the propagating mode, thereby projecting super-resolution information to the far field (Figure 5b). This method supplies an effective way to discern simple structures such as gratings or line/dot pairs, which contain a limited number of frequency components, and can achieve a resolution of approximately 75 nm (Figure 5c). However, for complex 2D patterns, this passive frequency conversion produces scrambled images that require a series of recovery algorithms (in addition to the optical means).

Microfiber-based nanoscopy. (a) The configuration of the system. (b) The principle of frequency shift. The spatial frequency of fine structures can be passively shifted back to the propagation mode using the formula kout=ke−kΛ, where ke is the wave vector of the evanescent wave and kΛ is the spatial frequency of the structure. (c) Images of 75-nm-gap line pairs taken by SEM (left), wide-field optical microscopy (middle) and microfiber nanoscopy (right). The length of the bar is 1 μm. Figure reprinted with permission: Ref. 49, ©2013 AIP. SEM, scanning electron microscope.
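Using the frequency-shift relation kout = ke − kΛ from the caption, one can estimate which grating periods are moved into the objective's passband |kout| ≤ NA·k0. The sketch below assumes that the fiber's evanescent field has a lateral wave vector ke = n_eff·k0, where n_eff is a hypothetical effective index; it treats a single periodic component and ignores conversion efficiency.

```python
import math

def is_detectable(period_nm: float, wavelength_nm: float, n_eff: float, na: float) -> bool:
    """True if k_out = k_e - k_Lambda falls inside the objective passband |k_out| <= NA * k0."""
    k0 = 2 * math.pi / wavelength_nm
    k_e = n_eff * k0                      # lateral wave vector of the fiber's evanescent field
    k_grating = 2 * math.pi / period_nm   # spatial frequency of the structure
    return abs(k_e - k_grating) <= na * k0

def detectable_periods(wavelength_nm: float, n_eff: float, na: float):
    """Closed-form band from solving |n_eff*k0 - 2*pi/period| <= NA*k0 for the period."""
    shortest = wavelength_nm / (n_eff + na)
    longest = wavelength_nm / (n_eff - na) if n_eff > na else math.inf
    return shortest, longest

# Hypothetical example: 532 nm light, n_eff = 1.4, NA = 0.9
print(detectable_periods(532, 1.4, 0.9))
```

The shortest detectable period, λ/(n_eff + NA), shows why a high-index evanescent source combined with a high-NA objective pushes the accessible frequencies beyond the conventional cutoff.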

ABSOLUTE FAR-FIELD STRATEGIES

Evanescent waves are so powerful that they fundamentally break the diffraction limit and can, in theory, supply arbitrarily fine resolution. However, the short propagation distance of evanescent waves makes them impractical for non-invasive detection or illumination of internal details of the sample from the far field. This challenge is especially important for biological specimens, which are strongly scattering and electrically insulating. Because such cell samples are usually stained with fluorescent chromophores to highlight the area of interest before imaging, it would be useful if the natural properties of fluorophores could be exploited. In this respect, the discovery since the mid-twentieth century of multiple nonlinear processes of fluorescence, such as fluorescence recovery after photobleaching50 and fluorescence resonance energy transfer,51 has gradually paved the way towards optical super-resolution.

The story began with the invention of two-photon microscopy. Although the concept was described by Göppert-Mayer52 in 1931 and observed by Kaiser53 in 1961, Denk et al.54 were the first to use it for imaging in 1990. In two-photon microscopy, excitation and emission are confined to the focal volume, and the fluorescence intensity is proportional to I², so that the effective PSF shrinks. Note, however, that this narrowing comes at the expense of doubling the excitation wavelength; typical excitation wavelengths therefore lie in the near-infrared range (700–1000 nm), and the net resolution enhancement is very limited. In practice, the chief contribution of two-photon microscopy is that it allows imaging deep in tissue, because the long excitation wavelength is less sensitive to scattering and excitation is confined to the focus. The two-photon microscope typically provides clear images at depths about 10 times greater than other microscopes (Figure 6). A higher order of excitation was experimentally realized by Hell et al.55 in 1996, which led to the invention of the three-photon microscope.
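The trade-off described above can be made concrete under a Gaussian approximation, assuming the excitation PSF width scales linearly with wavelength (the proportionality constant below is hypothetical). Squaring a Gaussian narrows it by √2, but doubling the wavelength widens it by 2. The two-photon spot at 900 nm therefore ends up about √2 wider than a one-photon spot at 450 nm, while being √2 narrower than one-photon excitation at 900 nm.

```python
import numpy as np

def gaussian_fwhm(x: np.ndarray, profile: np.ndarray) -> float:
    """FWHM of a Gaussian-shaped profile from its second moment: 2*sqrt(2 ln 2)*sigma."""
    p = profile / profile.sum()
    mean = np.sum(p * x)
    sigma = np.sqrt(np.sum(p * (x - mean) ** 2))
    return float(2.0 * np.sqrt(2.0 * np.log(2.0)) * sigma)

x = np.linspace(-3000.0, 3000.0, 12001)   # lateral coordinate, nm
SIGMA_PER_WAVELENGTH = 0.2                # hypothetical: Gaussian PSF width sigma = 0.2 * lambda

def excitation_psf(wavelength_nm: float) -> np.ndarray:
    sigma = SIGMA_PER_WAVELENGTH * wavelength_nm
    return np.exp(-x**2 / (2 * sigma**2))

w1_450 = gaussian_fwhm(x, excitation_psf(450))         # one-photon: fluorescence ~ I
w1_900 = gaussian_fwhm(x, excitation_psf(900))
w2_900 = gaussian_fwhm(x, excitation_psf(900) ** 2)    # two-photon: fluorescence ~ I^2

print(f"2P@900 / 1P@900 = {w2_900 / w1_900:.3f}, 2P@900 / 1P@450 = {w2_900 / w1_450:.3f}")
```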

Although the experimental confirmation of a three-photon absorption process was a remarkable achievement, Hell is best remembered for another contribution to microscopy that had begun two years earlier. After its theoretical description in 1994,56 the demonstration of stimulated emission depletion (STED) microscopy in 199957 shocked the world. STED restricts fluorescence to a subdiffraction region at a known position by depleting the surrounding fluorophores (returning them to the ground state). A peak-centered excitation focal spot, overlapped with a doughnut-shaped depletion beam, is scanned over the sample, and an image is generated by reading out the signal from a point detector. STED can resolve much finer details (Figure 7b) than the confocal microscope (Figure 7a); for example, a published resolution record of 2.4 nm was achieved in 2012.58 Dynamic imaging with frame rates as high as 200 fps has been reported59 for this method, and multi-color imaging and 3D nanoscale reconstruction are also possible.60

Images of the sample taken with (a) a confocal microscope, (b) STED and (c) g-STED. (d) The intensity profiles of the same region for each method. Specifically, g-STED leads to one of the following two predictable results: (i) the same resolution is obtained using a considerably smaller incident intensity than for STED; or (ii) the same incident intensity is maintained and an improvement in the resolution is expected. STED, stimulated emission depletion microscopy.

The theoretical resolution of a STED microscope can be expressed as:61

$$d = \frac{\lambda}{2n\sin\theta\,\sqrt{1 + I_{\text{STED}}/I_0}}$$

where I0 and ISTED are the saturation intensity of the transition and the intensity of the depletion beam, respectively. It is reasonable to expect improved resolution by increasing the intensity of the depletion beam (usually some hundreds of mW); however, this simplified model assumes excitation and depletion beams with appropriate polarizations and temporal alignment,62,63 in particular a perfect doughnut focal spot with a close-to-zero-intensity center. At present, the most popular way to obtain a doughnut focal spot is phase modulation under high-NA conditions. The strict requirement on focal spot shape for STED has boosted research on the focusing properties of vectorial beams, and the corresponding knowledge of focal spot manipulation has, in return, extended STED principles to other domains such as lithography64,65,66,67 and optical tweezers.68,69 Focal spot manipulation70,71 can also partially help to reduce the intensity of the depletion beam,69 although more straightforward and effective solutions include ground state depletion, time-gated detection (g-STED)72,73 (Figure 7c and 7d) and frequency-dependent detection using modulated excitation light.74
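Because the STED expression reduces to the Abbe limit when ISTED = 0, its square-root dependence is easy to explore numerically. The sketch below uses an assumed wavelength of 650 nm and NA of 1.4 (the function names are illustrative) and shows, for instance, that a tenfold resolution gain requires ISTED/I0 ≈ 99. This is why depletion powers are high and why the quality of the doughnut zero matters.

```python
import math

def sted_resolution(wavelength_nm: float, na: float, saturation_factor: float) -> float:
    """d = lambda / (2 NA sqrt(1 + I_STED / I_0)); saturation_factor = I_STED / I_0."""
    return wavelength_nm / (2.0 * na * math.sqrt(1.0 + saturation_factor))

def required_saturation(target_nm: float, wavelength_nm: float, na: float) -> float:
    """Invert the formula: I_STED / I_0 needed to reach a target resolution."""
    d0 = wavelength_nm / (2.0 * na)          # diffraction-limited value (I_STED = 0)
    return (d0 / target_nm) ** 2 - 1.0

for factor in (0, 3, 15, 99):
    print(factor, round(sted_resolution(650, 1.4, factor), 1))
```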

Although originating from traditional confocal microscopy75 (Figure 8a and 8b), STED, together with ground state depletion microscopy,76,77 saturated pattern excitation microscopy78 and dynamic saturation optical microscopy,79,80 is generally classified as reversible saturable optical transition (RESOLFT) microscopy81 (Figure 8c). The technical details of these methods may differ, but they share a common foundation: a saturable (or switchable) transition confines one of the states of a fluorophore to a subdiffraction region. In an ideal situation, arbitrarily fine resolution is attainable, whereas the practical performance is significantly influenced by noise,82 the size of the fluorescent molecules, photobleaching and phototoxicity.

Principles of confocal microscopy, RESOLFT microscopy (e.g. STED) and stochastic readout microscopy (e.g. STORM). A and B denote the bright and dark states of the fluorophores, respectively. When (a) the sample is imaged using (b) a confocal microscope, adjacent fluorophores within the diffraction zone are excited simultaneously. The situation changes when a super-resolution method is applied. (c) In the RESOLFT mode, the effective PSF of light emission is limited by a doughnut focal spot of the depletion beam, and the whole image is generated by bidirectional scanning. (d) In the stochastic readout mode, a single switchable fluorophore at a random position within the diffraction zone is switched to the stable bright state (A), while the others remain in the dark state (B). The final image is obtained by repeatedly imaging the same area and combining the frames. Figure reprinted with permission: c and d, Ref. 75, ©2007 AAAS. PSF, point spread function; RESOLFT, reversible saturable optical transition; STED, stimulated emission depletion microscopy; STORM, stochastic optical reconstruction microscopy.

While RESOLFT microscopy and its most prominent form (STED) were being demonstrated, Gustafsson had committed himself to developing another approach: SIM. Gustafsson revisited the idea of Lukosz (1963)83 and built a prototype SIM in 2000.84 SIM illuminates the sample with patterned light and enhances the resolution by measuring the resulting Moiré fringes. Although it is still diffraction limited, SIM doubles the lateral resolution to approximately λ/4. The diffraction limit was fundamentally broken for this approach in 2005 with a nonlinear variant called saturated structured illumination microscopy.85 SIM and saturated structured illumination microscopy have also enabled 3D subdiffraction imaging using 3D structured light.86
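The Moiré principle behind SIM can be illustrated in one dimension: multiplying an object frequency f_obj by an illumination frequency f_ill creates a difference frequency f_obj − f_ill that may fall inside the passband even when f_obj does not. The NumPy sketch below models the objective as an ideal low-pass filter, and all frequencies are hypothetical. It shows only the frequency-mixing step; an actual SIM reconstruction also records several pattern phases and orientations to separate the overlapping bands and move them back to their true positions.

```python
import numpy as np

N = 1024
x = np.arange(N) / N                        # one unit of length, N samples
F_OBJ, F_ILL, CUTOFF = 300, 200, 150        # cycles per unit length (hypothetical)

def detect(signal: np.ndarray, cutoff: float) -> np.ndarray:
    """Model the objective as an ideal low-pass filter that removes frequencies above `cutoff`."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / signal.size)
    spectrum[freqs > cutoff] = 0
    return np.fft.irfft(spectrum, n=signal.size)

def peak_frequency(signal: np.ndarray) -> float:
    """Frequency of the strongest non-constant component."""
    spectrum = np.abs(np.fft.rfft(signal - signal.mean()))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / signal.size)
    return float(freqs[np.argmax(spectrum)])

obj = 1 + np.cos(2 * np.pi * F_OBJ * x)     # fine structure outside the passband
ill = 1 + np.cos(2 * np.pi * F_ILL * x)     # sinusoidal illumination pattern

widefield = detect(obj, CUTOFF)             # uniform illumination: the structure is lost
sim = detect(obj * ill, CUTOFF)             # patterned illumination: a Moire fringe survives
print(widefield.max() - widefield.min(), peak_frequency(sim))
```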

In 2006, the demonstrations of photoactivated localization microscopy (PALM) by Betzig,87 stochastic optical reconstruction microscopy (STORM) by Zhuang88 and fluorescence photoactivation localization microscopy by Hess89 marked the birth of an entirely new principle of optical super-resolution. In each of these methods, individual fluorophores are switched stochastically in space and time (Figure 8d), so that only a small proportion of them are in the bright state at any moment, at random positions that do not overlap with each other. This switching is usually realized by photobleaching or fluorescence resonance energy transfer. Because the bright emitters are isolated, each can be precisely localized using an appropriate algorithm. After numerous iterations, the final image is generated by combining all frames (Figure 9a and 9b). Better resolution can be expected by increasing the number of iterations, but at the expense of a longer processing time. To obtain sparsely distributed spots in the bright state, these microscopes usually work in a ‘bleaching–recovery–bleaching’ mode. An alternative method records the bleaching process of the fluorophores and makes them sparse by a subtraction calculation (bleaching/blinking-assisted localization microscopy; Figure 9c and 9d).90,91 At first sight, these stochastic switching and readout techniques seem poorly suited to dynamic applications. Hence, recent efforts have mainly focused on fast algorithms that reconstruct the image from fewer frames, such as fluoroBancroft,92,93 the Maliang method94 and wedged template matching.95 Another challenge for this kind of microscopy is 3D reconstruction, a hurdle that has also been overcome in recent years.
Although aberrations usually degrade image quality, in 2008 Huang et al.96 deliberately inserted a weak cylindrical lens into the optical path to introduce astigmatism. As a result, the image of each fluorophore is elongated along one direction above the ideal focal plane and along the perpendicular direction below it. Its ellipticity therefore reveals the axial position with nanometer-scale accuracy. A related approach, proposed by Pavani et al.97 in 2009, encodes the axial position in the rotation of a double-helix PSF. Other 3D techniques developed during the same period include biplane detection98 and dual-objective PALM/STORM.99,100 The best resolution reported to date is better than 20 nm in both the lateral and axial directions.99
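
The astigmatic depth encoding can be sketched in Python with a simple defocus model for the x and y widths of the spot. The model form and the parameters S0, C and D are assumptions for illustration, not the calibration used by Huang et al. The depth is read out by finding the closest point on a calibration curve, such as one recorded by scanning a bead through focus.

```python
import numpy as np

# Hypothetical calibration parameters (nm); real values come from bead scans.
S0 = 150.0  # PSF width at each line focus
C = 200.0   # the cylindrical lens moves the x and y foci to z = +C and z = -C
D = 400.0   # depth-of-focus parameter


def widths(z):
    """Simple defocus model of the astigmatic PSF widths along x and y."""
    sx = S0 * np.sqrt(1.0 + ((z - C) / D) ** 2)
    sy = S0 * np.sqrt(1.0 + ((z + C) / D) ** 2)
    return sx, sy


# Calibration curve sampled on a 0.1 nm grid.
z_cal = np.linspace(-500.0, 500.0, 10001)
sx_cal, sy_cal = widths(z_cal)


def z_from_widths(sx, sy):
    """Return the calibration depth whose (sx, sy) pair is closest to the measurement."""
    dist = (sx - sx_cal) ** 2 + (sy - sy_cal) ** 2
    return z_cal[np.argmin(dist)]


for z_true in (-300.0, -50.0, 0.0, 120.0, 350.0):
    sx, sy = widths(z_true)
    print(f"true z = {z_true:7.1f} nm -> estimated z = {z_from_widths(sx, sy):7.1f} nm")
```

Under this model, σy² − σx² = 4·C·S0²·z/D², which increases monotonically with z. This is why the ellipticity of the spot determines the depth uniquely within the calibrated range.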

Images of microtubules in cells. (a) A conventional indirect immunofluorescence image. (b) The 3D STORM image of the same area, with depth information color-coded. (c) Confocal image. (d) The BaLM image of the same area. The comparison shows that stochastic readout microscopy can significantly enhance the resolution in both the lateral and axial directions. Figure reprinted with permission: a and b, Ref. 96, ©2008 AAAS. BaLM, bleaching/blinking-assisted localization microscopy; 3D, three-dimensional; STORM, stochastic optical reconstruction microscopy.

Last but not least, super-resolution optical fluctuation imaging (SOFI, 2009)101 relies on higher-order statistical analysis of temporal fluctuations recorded in a movie. Computing the Nth-order correlation function (cumulant) of these fluctuations increases the resolution in all three dimensions by a factor of √N. By mathematically reweighting the PSF or introducing cross-correlation algorithms,102 a resolution enhancement proportional to N can be achieved. Multi-color imaging can also be realized using this method.103 The main limitation of SOFI is the nonlinear brightness scaling of the image: the Nth-order signal scales with the Nth power of the molecular brightness, so weak emitters are strongly suppressed relative to bright ones. Compensating for this usually requires a higher excitation intensity. The fluorophores then photobleach within a very short time, which may in turn degrade the resolution.
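
The √N narrowing can be checked numerically for N = 2, where the auto-cumulant at zero time lag is simply the temporal variance of each pixel. The sketch below is a minimal, noise-free Python model of one blinking emitter with a Gaussian PSF. The blinking statistics and PSF width are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

SIGMA = 2.0    # PSF standard deviation in pixels (hypothetical)
FRAMES = 2000  # length of the fluctuation movie
P_ON = 0.3     # probability that the emitter is bright in a frame (hypothetical)

x = np.linspace(-20.0, 20.0, 801)  # 1D coordinate centred on the emitter
psf = np.exp(-x**2 / (2 * SIGMA**2))

# Independent on/off blinking of a single emitter, noise-free for clarity.
brightness = (rng.random(FRAMES) < P_ON).astype(float)
movie = brightness[:, None] * psf[None, :]  # shape (frames, pixels)

mean_image = movie.mean(axis=0)  # time-averaged image, proportional to psf
sofi2 = movie.var(axis=0)        # 2nd-order auto-cumulant, proportional to psf**2


def rms_width(profile):
    """Root-mean-square width of a non-negative 1D profile about x = 0."""
    weights = profile / profile.sum()
    return np.sqrt(np.sum(weights * x**2))


ratio = rms_width(mean_image) / rms_width(sofi2)
print("conventional width:", rms_width(mean_image))
print("2nd-order SOFI width:", rms_width(sofi2))
print("narrowing factor:", ratio)  # close to sqrt(2) for N = 2
```

With several emitters that blink independently, the cross terms between different emitters vanish from the cumulant. Each emitter then contributes its own PSF raised to the Nth power, and this independence is what the method relies on.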

The nonlinear processes of fluorescence supply the basis for truly far-field nanoscopy, in which both the illumination and detection waves are propagating waves. However, it is especially important to find a more universal approach for imaging non-fluorescent samples. One possible solution is based on purpose-designed microstructures, such as the concentric periodic groove aperture (bull's eye).104 The operation of such structures involves the principle of superoscillation,105,106 a phenomenon first noted by Aharonov and popularized through Berry's work (2006–2009). Superoscillation occurs when a globally band-limited signal contains local segments that oscillate faster than its fastest Fourier component. This suggests that subdiffraction information could be transferred to the far field, provided the superoscillation persists over a long enough distance. Since 2007, the superoscillation principle has been successfully applied to shrink the focal spot using a bull's eye or similar microstructures.107,108,109 It is difficult to exploit in imaging, however, because the subdiffraction focal spot is always surrounded by a high-intensity halo. In 2012, Rogers et al.110 overcame this barrier by operating in a scanning imaging mode. Their superoscillatory lens contained 25 rings, was designed using the binary particle swarm optimization method,111 and achieved a lateral resolution better than λ/6.
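
A standard example from the superoscillation literature is the function (cos x + i a sin x)^N with a > 1. Its Fourier components are limited to wavenumbers |k| ≤ N, yet near x = 0 it oscillates locally like exp(i N a x). The Python sketch below checks both properties numerically. It also shows the price: the intensity in the superoscillating region is tiny compared with the maximum elsewhere, a 1D analogue of the bright halo. The parameter values are arbitrary, and this is not a model of the bull's-eye or superoscillatory lens designs cited above.

```python
import numpy as np

N = 10   # band limit: the highest Fourier components are exp(+/- i N x)
A = 4.0  # a > 1 produces superoscillation near x = 0


def f(x):
    """Superoscillatory test function (cos x + i a sin x)^N."""
    return (np.cos(x) + 1j * A * np.sin(x)) ** N


# 1) Band limit: sample one period and find the highest non-negligible wavenumber.
M = 64
xs = 2 * np.pi * np.arange(M) / M
coeffs = np.fft.fft(f(xs)) / M
k = np.fft.fftfreq(M, d=1.0 / M)  # integer wavenumbers
significant = np.abs(coeffs) > 1e-9 * np.abs(coeffs).max()
highest_k = np.max(np.abs(k[significant]))

# 2) Local wavenumber at x = 0 from a central difference of the phase.
h = 1e-5
local_k = (np.angle(f(h)) - np.angle(f(-h))) / (2 * h)

# 3) Intensity in the superoscillating region relative to the global maximum.
dense = np.linspace(0.0, 2 * np.pi, 100001)
contrast = np.abs(f(0.0)) ** 2 / np.max(np.abs(f(dense)) ** 2)

print("highest Fourier wavenumber:", highest_k)  # N
print("local wavenumber near x = 0:", local_k)   # close to N * A, i.e. faster than N
print("|f(0)|^2 / max |f|^2:", contrast)         # tiny: the superoscillation is dim
```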

SOME NEW POTENTIAL METHODS

There are several other methods that have been shown to break the diffraction limit. For example, time-reversal imaging (2007)112 is based on the following idea. After light has been detected, it can be sent back, either physically or computationally, so that it propagates in reverse and refocuses at the source point, as if time had been reversed. To achieve this, the back-propagated light must interfere with itself so that it converges precisely on the source. In addition, the evanescent waves must be converted into propagating waves, by means of a metamaterial, before they decay. A related idea is Maxwell's fisheye,113 a medium whose spatially varying refractive index causes all light rays emitted from one point to meet at the diametrically opposite point. If all light propagates in this way, a mirror image of the object can be produced with unprecedented resolution. Ma et al.114 built a practical device working at microwave frequencies in 2011; however, realizing either idea with visible light remains an arduous task. Whether a 'perfect lens' can be fabricated from materials with a positive refractive index is also debated.115
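
The focusing property of Maxwell's fisheye can be checked by ray tracing. The Python sketch below assumes the standard fisheye profile n(r) = 2/(1 + r²/R²) with a hypothetical normalized radius R = 1. It places a point source on the rim at (R, 0) and integrates the ray equations with a fixed-step fourth-order Runge-Kutta scheme, then reports how close each ray passes to the diametrically opposite point (−R, 0). Ray optics cannot capture the wave-optical, subwavelength aspects debated above; it only illustrates the geometric imaging.

```python
import numpy as np

R = 1.0  # fisheye radius (normalized, hypothetical)


def n_fisheye(r):
    """Maxwell's fisheye index profile n(r) = 2 / (1 + |r|^2 / R^2)."""
    return 2.0 / (1.0 + (r @ r) / R**2)


def half_grad_n2(r):
    """(1/2) grad(n^2) for the fisheye profile."""
    rho2 = (r @ r) / R**2
    return -8.0 * r / (R**2 * (1.0 + rho2) ** 3)


def closest_approach(theta, tau_max=5.0, dt=1e-3):
    """Trace one ray (dr/dtau = p, dp/dtau = grad(n^2)/2) and track its distance to (-R, 0)."""
    r = np.array([R, 0.0])  # point source on the rim
    p = n_fisheye(r) * np.array([np.cos(theta), np.sin(theta)])  # |p| = n
    target = np.array([-R, 0.0])
    best = np.inf
    for _ in range(int(tau_max / dt)):
        k1r, k1p = p, half_grad_n2(r)
        k2r, k2p = p + 0.5 * dt * k1p, half_grad_n2(r + 0.5 * dt * k1r)
        k3r, k3p = p + 0.5 * dt * k2p, half_grad_n2(r + 0.5 * dt * k2r)
        k4r, k4p = p + dt * k3p, half_grad_n2(r + dt * k3r)
        r = r + dt / 6.0 * (k1r + 2 * k2r + 2 * k3r + k4r)
        p = p + dt / 6.0 * (k1p + 2 * k2p + 2 * k3p + k4p)
        best = min(best, np.linalg.norm(r - target))
    return best


# Launch angles (degrees) pointing into the disk from the source at (R, 0).
misses = {deg: closest_approach(np.radians(deg)) for deg in (120, 150, 180, 210, 240)}
for deg, miss in misses.items():
    print(f"launch angle {deg:3d} deg: closest approach to (-R, 0) = {miss:.2e}")
```

Every ray, whatever its launch angle, passes within the step size of (−R, 0). This is the perfect geometric imaging that makes the fisheye attractive.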

Meanwhile, van Putten and his colleagues116 proposed an impressive and innovative idea for optical super-resolution. Previous approaches had all sought to control the propagation of light precisely in order to enhance the resolution of the image. Van Putten et al. did the opposite: rather than guiding light cleanly to the target, they scattered it in all directions (Figure 10a). This high-index resolution enhancement by scattering (HIRES) uses scattering in a high-index material to expand the maximum cone of light, so that the NA of the system becomes larger and the resolution improves. The phase modulation introduced by the HIRES lens (Figure 10b) must first be calibrated. This calibration enables subdiffraction details to be recovered from the apparently random light captured by the system. Notably, this method does not actually break the diffraction limit; rather, the associated increase of the NA enhances the resolution by a factor of approximately three (Figure 10c and 10d). The search for a perfect imaging approach is far from over, but van Putten's work shows that clever use of computation, rather than a new physical mechanism, is also a valid route to improved resolution.
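
Why calibrating the phase response matters can be illustrated with a generic phase-only wavefront-shaping model. This is a stand-in, not the procedure of van Putten et al. Light from many independently controlled segments (for example, of a spatial light modulator) reaches a target point through an unknown random complex transmission. Once those phases are known, conjugating them makes all contributions add in phase and turns a speckle into a bright focus. The number of segments is hypothetical, and the model says nothing about the NA gain itself.

```python
import numpy as np

rng = np.random.default_rng(seed=7)

N_SEGMENTS = 2000  # independently controlled input segments (hypothetical)

# Unknown complex transmission from each segment to one target spot,
# modeled as circular complex Gaussian (fully developed speckle).
t = (rng.normal(size=N_SEGMENTS) + 1j * rng.normal(size=N_SEGMENTS)) / np.sqrt(2)


def target_intensity(phases):
    """Intensity at the target for a given set of input phases."""
    field = np.sum(t * np.exp(1j * phases)) / np.sqrt(N_SEGMENTS)
    return np.abs(field) ** 2


# Uncalibrated: expected intensity averaged over random input phases.
baseline = np.sum(np.abs(t) ** 2) / N_SEGMENTS

# Calibrated: phase-conjugate each segment so all contributions arrive in phase.
focus = target_intensity(-np.angle(t))

enhancement = focus / baseline
print("intensity enhancement:", enhancement)
print("(pi / 4) * N:", np.pi / 4 * N_SEGMENTS)
```

For circular Gaussian transmission coefficients, the intensity enhancement of phase-only shaping is close to (π/4)·N, which the sketch reproduces.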

Super-resolution scattering lens. (a) Principle of light coupling to high transverse k vectors in a high-index material. (b) Schematic of a HIRES lens that uses light scattering to achieve a high optical resolution. (c) Reference image taken with an oil immersion objective lens (NA=1.49). (d) Image of the same area taken with the scattering lens. Figure reprinted with permission: Ref. 116, ©2011 APS. HIRES, high-index resolution enhancement by scattering; NA, numerical aperture.

CONCLUSION AND OUTLOOK

The driving force behind the development of optical super-resolution microscopy is the expectation that visualizing samples at unprecedented resolution will yield remarkable discoveries. The applications of these methods have already begun, but the initial dream has yet to be fully realized. Biologists are still waiting for in vivo 3D super-resolution reconstruction techniques, while materials researchers have long been eager to image fine details below the surface. As this review has shown, some pioneers have gained a foothold, and numerous state-of-the-art works have been proposed. These works originate from diverse physical mechanisms and have different applications. Although the diffraction barrier has fundamentally been broken, the drawbacks of present techniques are as evident as their merits. Apart from specific exceptions such as microsphere nanoscopy, most techniques based on the evanescent field cannot capture color information and are limited to imaging surfaces. Fluorescence-based techniques depend on nonlinear effects of fluorescence, which in turn confines their applications. Activating these effects requires such high laser power that it may cause irreversible damage to biological samples. Although infrared light has a milder impact on cells, the resolution enhancement of two- and three-photon microscopy is counteracted by the comparatively long excitation wavelength. The fields of view of nearly all super-resolution imaging methods are not sufficiently large. In this respect, PALM/STORM and SOFI seem to have some natural advantages. However, both approaches require a long time to process the data, so in their present form they are not reliable for live imaging. We are still waiting for a universal and economical method to obtain sub-10-nm resolution.
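
The trade-off for two- and three-photon excitation noted above can be checked with a simple Gaussian model. An n-photon signal scales with the nth power of the excitation intensity, which narrows the spot by √n. The excitation wavelength, however, is assumed here to be n times the one-photon wavelength, which widens the spot by a factor of n. The Gaussian width constant (about 0.21 λ/NA) is an approximation and cancels out of the ratios. Detection optics such as a confocal pinhole are ignored.

```python
import numpy as np

x = np.linspace(-5.0, 5.0, 200001)  # lateral coordinate in units of lambda_1 / NA


def fwhm(profile):
    """Full width at half maximum measured on the sampling grid."""
    above = x[profile >= profile.max() / 2]
    return above[-1] - above[0]


def effective_psf(n_photons, sigma_per_wavelength=0.21):
    """n-photon excitation PSF in a Gaussian approximation.

    Assumes the excitation wavelength is n times the one-photon wavelength, so
    the focal intensity spot is n times wider; the signal scales as intensity**n.
    """
    sigma = sigma_per_wavelength * n_photons
    return np.exp(-x**2 / (2 * sigma**2)) ** n_photons


w1 = fwhm(effective_psf(1))
ratios = {n: fwhm(effective_psf(n)) / w1 for n in (1, 2, 3)}
for n, ratio in ratios.items():
    print(f"{n}-photon FWHM relative to one-photon: {ratio:.4f}")
```

In this model, the effective width grows as √n relative to one-photon excitation. The nonlinear narrowing therefore does not compensate for the longer wavelength.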

Furthermore, combining present ideas to provide multiple functions will also be a critical part of this progress. Intensity information alone is insufficient for a complete and rigorous analysis, and additional functional information is always desirable. As this work proceeds over the coming decades, new concepts such as quantum imaging117,118,119 and non-diffracting beams120,121 may inspire the next breakthrough.

References

Abbe E . Beiträge zur Theorie des Mikroskops und der mikroskopischen Wahrnehmung. Arch Mikroskop Anat 1873; 9: 413–418.

von Helmholtz H . Die theoretische Grenze für die Leistungsfähigkeit der Mikroskope. Ann Phys Chem 1874; 557–584.

Stephenson JW . Observations on professor Abbe’s experiments illustrating his theory of microscopic vision. Monthly Microsc J 1877; 17: 82–88.

Silfies JS, Schwartz SA, Davidson MW . The diffraction barrier in optical microscopy. Available at: http://www.microscopyu.com/articles/superresolution/diffractionbarrier.html.

Rayleigh L . On the manufacture and theory of diffraction-gratings. Philos Mag Ser 1874; 47: 81–93.

Houston WV . A compound interferometer for fine structure work. Phys Rev 1927; 29: 478–484.

Heimstädt O . Das fluoreszenzmikroskop. Z Wiss Mikrosk 1911; 28: 330–337.

Kirz J, Jacobsen C, Howells M . Soft-X-ray microscopes and their biological applications. Q Rev Biophys 1995; 28: 33–130.

Miao JW, Ishikawa T, Shen Q, Earnest T . Extending X-ray crystallography to allow the imaging of noncrystalline materials, cells, and single protein complexes. Annu Rev Phys Chem 2008; 59: 387–410.

Ruska E . Über Fortschritte im Bau und in der Leistung des magnetischen Elektronenmikroskops. Z Phys 1934; 87: 580–602.

Solliday J . History of oil immersion lenses. Available at: http://www.smecc.org/history_of_oil_immersion_lenses.htm.

Wu Q, Feke GD, Grober RD, Ghislain LP . Realization of numerical aperture 2.0 using a gallium phosphide solid immersion lens. Appl Phys Lett 1999; 75: 4064–4066.

Wang H, Sheppard CJ, Ravi K, Ho ST, Vienne G . Fighting against diffraction: apodization and near field diffraction structures. Laser Photon Rev 2012; 6: 354–392.

Di Francia G . Super-gain antennas and optical resolving power. Suppl Nuovo Cim 1952; 9: 426–438.

Minsky M . Microscopy apparatus. US Patent 3013467, 1961.

Wilson T . Resolution and optical sectioning in the confocal microscope. J Microsc 2011; 244: 113–121.

Axelrod D . Cell–substrate contacts illuminated by total internal reflection fluorescence. J Cell Biol 1981; 89: 141–145.

Hell S . Doppelkonfokales Rastermikroskop. EU Patent 91121368.4, 1991.

Hell S, Stelzer EH . Properties of a 4pi confocal fluorescence microscope. J Opt Soc Am A 1992; 9: 2159–2166.

Bailey B, Farkas DL, Taylor DL, Lanni F . Enhancement of axial resolution in fluorescence microscopy by standing-wave excitation. Nature 1993; 366: 44–48.

Gustafsson MG, Agard DA, Sedat JW . Sevenfold improvement of axial resolution in 3D wide-field microscopy using two objective lenses. Proc SPIE 1995; 2412: 147–156.

Wicker K, Sindbert S, Heintzmann R . In: Proceedings of Novel Techniques in Microscopy; 27–29 April 2009; Vancouver, BC, Canada. Optical Society of America: Washington, DC, USA, 2009, Paper NWB1.

Voie AH, Burns DH, Spelman FA . Orthogonal-plane fluorescence optical sectioning: three-dimensional imaging of macroscopic biological specimens. J Microsc 1993; 170: 229–236.

Agard DA, Sedat JW . Three-dimensional architecture of a polytene nucleus. Nature 1983; 302: 676–681.

Ash EA, Nicholls G . Super-resolution aperture scanning microscope. Nature 1972; 237: 510–512.

Binnig G, Rohrer H, Gerber C, Weibel E . Surface studies by scanning tunneling microscopy. Phys Rev Lett 1982; 49: 57–61.

Binnig G, Quate CF, Gerber C . Atomic force microscope. Phys Rev Lett 1986; 56: 930–933.

Novotny L, Sanchez EJ, Xie XS . Near-field optical imaging using metal tips illuminated by higher-order Hermite-Gaussian beams. Ultramicroscopy 1998; 71: 21–29.

Chon JW, Gu M, Bullen C, Mulvaney P . Two-photon fluorescence scanning near-field microscopy based on a focused evanescent field under total internal reflection. Opt Lett 2003; 28: 1930–1932.

Sanchez EJ, Novotny L, Xie XS . Near-field fluorescence microscopy based on two-photon excitation with metal tips. Phys Rev Lett 1999; 82: 4014–4017.

Pendry JB . Negative refraction makes a perfect lens. Phys Rev Lett 2000; 85: 3966–3969.

Fang N, Lee H, Sun C, Zhang X . Sub-diffraction-limited optical imaging with a silver superlens. Science 2005; 308: 534–537.

Zhang X, Liu ZW . Superlenses to overcome the diffraction limit. Nat Mater 2008; 7: 435–441.

Liu ZW, Durant S, Lee H, Pikus Y, Fang N et al. Far-field optical superlens. Nano Lett 2007; 7: 403–408.

Novotny L, Hecht B . Principles of Nano-optics. Cambridge: Cambridge University Press, 2006.

Xiong Y, Liu Z, Sun C, Zhang X . Two-dimensional Imaging by far-field superlens at visible wavelengths. Nano Lett 2007; 7: 3360–3365.

Liu ZW, Lee H, Xiong Y, Sun C, Zhang X . Far-field optical hyperlens magnifying sub-diffraction-limited objects. Science 2007; 315: 1686.

Lee JY, Hong BH, Kim WY, Min SK, Kim Y et al. Near-field focusing and magnification through self-assembled nanoscale spherical lenses. Nature 2009; 460: 498–501.

Mason DR, Jouravlev MV, Kim KS . Enhanced resolution beyond the Abbe diffraction limit with wavelength-scale solid immersion lenses. Opt Lett 2010; 35: 2007–2009.

Liau ZL . Annular solid-immersion lenslet array super-resolution optical microscopy. J Appl Phys 2012; 112: 083110–083117.

Wang ZB, Guo W, Li L, Luk'yanchuk B, Khan A et al. Optical virtual imaging at 50 nm lateral resolution with a white-light nanoscope. Nat Commun 2011; 2: 218.

Hao X, Kuang C, Liu X, Zhang H, Li Y . Microsphere based microscope with optical super-resolution capability. Appl Phys Lett 2011; 99: 203102–203103.

Darafsheh A, Walsh GF, Dal Negro L, Astratov VN . Optical super-resolution by high-index liquid-immersed microspheres. Appl Phys Lett 2012; 101: 141128.

Hao X, Kuang C, Li Y, Liu X, Ku Y et al. Hydrophilic microsphere based mesoscopic-lens microscope (MMM). Opt Commun 2012; 285: 4130–4133.

Sambles JR, Bradbery GW, Yang FZ . Optical-excitation of surface-plasmons—an introduction. Contemp Phys 1991; 32: 173–183.

Smolyaninov II, Davis CC, Zayats AV . Image formation in surface plasmon polariton mirrors: applications in high-resolution optical microscopy. New J Phys 2005; 7: 175.

Smolyaninov II, Davis CC, Elliott J, Zayats AV . Resolution enhancement of a surface immersion microscope near the plasmon resonance. Opt Lett 2005; 30: 382–384.

Yuan G, Wang Q, Yuan X . Dynamic generation of plasmonic Moiré fringes using phase-engineered optical vortex beam. Opt Lett 2012; 37: 2715–2717.

Hao X, Liu X, Kuang CF, Li YH, Ku YL et al. Far-field super-resolution imaging using near-field illumination by micro-fiber. Appl Phys Lett 2013; 102: 013104.

Axelrod D, Koppel DE, Schlessinger J, Elson E, Webb WW . Mobility measurement by analysis of fluorescence photobleaching recovery kinetics. Biophys J 1976; 16: 1055–1069.

Fernandez SM, Berlin RD . Cell surface distribution of lectin receptors determined by resonance energy transfer. Nature 1976; 264: 411–415.

Göppert-Mayer M . Über Elementarakte mit zwei Quantensprüngen. Ann Phys 1931; 401: 273–294.

Kaiser W, Garrett CG . Two-photon excitation in CaF2:Eu2+. Phys Rev Lett 1961; 7: 229–231.

Denk W, Strickler J, Webb W . Two-photon laser scanning fluorescence microscopy. Science 1990; 248: 73–76.

Hell SW, Bahlmann K, Schrader M, Soini A, Malak HM et al. Three-photon excitation in fluorescence microscopy. J Biomed Opt 1996; 1: 71–74.

Hell SW, Wichmann J . Breaking the diffraction resolution limit by stimulated emission: stimulated-emission-depletion fluorescence microscopy. Opt Lett 1994; 19: 780–782.

Klar TA, Hell SW . Subdiffraction resolution in far-field fluorescence microscopy. Opt Lett 1999; 24: 954–956.

Wildanger D, Patton BR, Schill H, Marseglia L, Hadden JP et al. Solid immersion facilitates fluorescence microscopy with nanometer resolution and sub-Ångström emitter localization. Adv Mater 2012; 24: OP309–OP313.

Lauterbach MA, Ullal CK, Westphal V, Hell SW . Dynamic imaging of colloidal-crystal nanostructures at 200 frames per second. Langmuir 2010; 26: 14400–14404.

Wildanger D, Medda R, Kastrup L, Hell SW . A compact STED microscope providing 3D nanoscale resolution. J Microsc 2009; 236: 35–43.

Reuss M, Engelhardt J, Hell SW . Birefringent device converts a standard scanning microscope into a STED microscope that also maps molecular orientation. Opt Express 2010; 18: 1049–1058.

Galiani S, Harke B, Vicidomini G, Lignani G, Benfenati F et al. Strategies to maximize the performance of a STED microscope. Opt Express 2012; 20: 7362–7374.

Hao X, Kuang CF, Wang TT, Liu X . Effects of polarization on the de-excitation dark focal spot in STED microscopy. J Opt 2010; 12: 115707.

Hao X, Kuang C, Li Y, Liu X . A method for extending depth of focus in STED nanolithography. J Opt 2012; 14: 045702.

Kuang CF, Hao XA, Liu X, Wang TT, Ku YL . Formation of sub-half-wavelength focal spot with ultra long depth of focus. Opt Commun 2011; 284: 1766–1769.

Li LJ, Gattass RR, Gershgoren E, Hwang H, Fourkas JT . Achieving λ/20 resolution by one-color initiation and deactivation of polymerization. Science 2009; 324: 910–913.

Andrew TL, Tsai HY, Menon R . Confining light to deep subwavelength dimensions to enable optical nanopatterning. Science 2009; 324: 917–921.

Hao X, Kuang C, Li Y, Liu X . Continuous manipulation of doughnut focal spot in a large scale. Opt Express 2012; 20: 12692–12698.

Xue Y, Kuang C, Li S, Gu Z, Liu X . Sharper fluorescent super-resolution spot generated by azimuthally polarized beam in STED microscopy. Opt Express 2012; 20: 17653–17666.

Wang H, Groen FH, Pereira SF, Braat JJ . Optical waveguide focusing system with short free-working distance. Appl Phys Lett 2003; 83: 4486–4487.

Wang H, Yuan G, Tan W, Shi L, Chong T . Spot size and depth of focus in optical data storage system. Opt Eng 2007; 46: 065201–065201.

Vicidomini G, Schönle A, Ta H, Han KY, Moneron G et al. Sharper low-power STED nanoscopy by time gating. Nat Methods 2011; 8: 571–573.

Ge J, Kuang C, Lee SS, Kao FJ . Fluorescence lifetime imaging with pulsed diode laser enabled stimulated emission. Opt Express 2012; 20: 28216–28221.

Ronzitti E, Harke B, Diaspro A . Frequency dependent detection in a STED microscope using modulated excitation light. Opt Express 2013; 21: 210–219.

Hell SW . Far-field optical nanoscopy. Science 2007; 316: 1153–1158.

Hell SW, Kroug M . Ground-state-depletion fluorescence microscopy—a concept for breaking the diffraction resolution limit. Appl Phys B 1995; 60: 495–497.

Donnert G, Keller J, Medda R, Andrei MA, Rizzoli SO et al. Macromolecular-scale resolution in biological fluorescence microscopy. Proc Natl Acad Sci USA 2006; 103: 11440–11445.

Heintzmann R, Jovin TM, Cremer C . Saturated patterned excitation microscopy: a concept for optical resolution improvement. J Opt Soc Am A 2002; 19: 1599–1609.

Enderlein J . Breaking the diffraction limit with dynamic saturation optical microscopy. Appl Phys Lett 2005; 87: 094105-1–094105-3.

Humpolickova J, Benda A, Machan R, Enderlein J, Hof M . Dynamic saturation optical microscopy: employing dark-state formation kinetics for resolution enhancement. Phys Chem Chem Phys 2010; 12: 12457–12465.

Hell SW . Strategy for far-field optical imaging and writing without diffraction limit. Phys Lett A 2004; 326: 140–145.

Lee CJ, Boller KJ . The noise-limited-resolution for stimulated emission depletion microscopy of diffusing particles. Opt Express 2012; 20: 12793–12798.

Lukosz W, Marchand M . Optische Abbildung unter Überschreitung der beugungsbedingten Auflösungsgrenze. Opt Acta 1963; 10: 241–255.

Gustafsson MG . Surpassing the lateral resolution limit by a factor of two using structured illumination microscopy. J Microsc 2000; 198: 82–87.

Gustafsson MG . Nonlinear structured-illumination microscopy: wide-field fluorescence imaging with theoretically unlimited resolution. Proc Natl Acad Sci USA 2005; 102: 13081–13086.

Schermelleh L, Carlton PM, Haase S, Shao L, Winoto L et al. Subdiffraction multicolor imaging of the nuclear periphery with 3D structured illumination microscopy. Science 2008; 320: 1332–1336.

Betzig E, Patterson GH, Sougrat R, Lindwasser OW, Olenych S et al. Imaging intracellular fluorescent proteins at nanometer resolution. Science 2006; 313: 1642–1645.

Rust MJ, Bates M, Zhuang X . Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM). Nat Methods 2006; 3: 793–795.

Hess ST, Girirajan TP, Mason MD . Ultra-high resolution imaging by fluorescence photoactivation localization microscopy. Biophys J 2006; 91: 4258–4272.

Munck S, Miskiewicz K, Sannerud R, Menchon SA, Jose L et al. Sub-diffraction imaging on standard microscopes through photobleaching microscopy with non-linear processing. J Cell Sci 2012; 125: 2257–2266.

Burnette DT, Sengupta P, Dai Y, Lippincott-Schwartz J, Kachar B . Bleaching/blinking assisted localization microscopy for superresolution imaging using standard fluorescent molecules. Proc Natl Acad Sci USA 2011; 108: 21081–21086.

Andersson S . Localization of a fluorescent source without numerical fitting. Opt Express 2008; 16: 18714–18724.

Hedde PN, Fuchs J, Oswald F, Wiedenmann J, Nienhaus GU . Online image analysis software for photoactivation localization microscopy. Nat Methods 2009; 6: 689–690.

Quan T, Li P, Long F, Zeng S, Luo Q et al. Ultra-fast, high-precision image analysis for localization-based super resolution microscopy. Opt Express 2010; 18: 11867–11876.

Watanabe S, Takahashi T, Takeshima T . Localization of high-density fluorophores using wedged template matching. In: Proceedings of Focus on Microscopy Conference; 1–4 April 2012; Singapore; 2012.

Huang B, Wang W, Bates M, Zhuang X . Three-dimensional super-resolution imaging by stochastic optical reconstruction microscopy. Science 2008; 319: 810–813.

Pavani SR, Thompson MA, Biteen JS, Lord SJ, Liu N et al. Three-dimensional, single-molecule fluorescence imaging beyond the diffraction limit by using a double-helix point spread function. Proc Natl Acad Sci USA 2009; 106: 2995.

Juette MF, Gould TJ, Lessard MD, Mlodzianoski MJ, Nagpure BS et al. Three-dimensional sub-100 nm resolution fluorescence microscopy of thick samples. Nat Methods 2008; 5: 527–529.

Shtengel G, Galbraith JA, Galbraith CG, Lippincott-Schwartz J, Gillette JM et al. Interferometric fluorescent super-resolution microscopy resolves 3D cellular ultrastructure. Proc Natl Acad Sci USA 2009; 106: 3125–3130.

Xu K, Babcock HP, Zhuang X . Dual-objective STORM reveals three-dimensional filament organization in the actin cytoskeleton. Nat Methods 2012; 9: 185–188.

Dertinger T, Colyer R, Iyer G, Weiss S, Enderlein J . Fast, background-free, 3D super-resolution optical fluctuation imaging (SOFI). Proc Natl Acad Sci USA 2009; 106: 22287–22292.

Dertinger T, Colyer R, Vogel R, Enderlein J, Weiss S . Achieving increased resolution and more pixels with superresolution optical fluctuation imaging (SOFI). Opt Express 2010; 18: 18875–18885.

Gallina ME, Xu J, Dertinger T, Aizer A, Shav-Tal Y et al. Resolving the spatial relationship between intracellular components by dual color super resolution optical fluctuations imaging (SOFI). Opt Nanosc 2013; 2: 2.

Lezec HJ, Degiron A, Devaux E, Linke RA, Martin-Moreno L et al. Beaming light from a subwavelength aperture. Science 2002; 297: 820–822.

Berry MV, Popescu S . Evolution of quantum superoscillations and optical superresolution without evanescent waves. J Phys A Math Gen 2006; 39: 6965–6977.

Berry MV, Dennis MR . Natural superoscillations in monochromatic waves in D dimensions. J Phys A Math Theor 2009; 42: 022003.

Huang FM, Zheludev N, Chen YF, de Abajo FJ . Focusing of light by a nanohole array. Appl Phys Lett 2007; 90: 091119.

Wang TT, Wang X, Kuang CF, Hao XA, Liu X . Experimental verification of the far-field subwavelength focusing with multiple concentric nanorings. Appl Phys Lett 2010; 97: 231105.

Wang X, Fu J, Liu X, Tong LM . Subwavelength focusing by a micro/nanofiber array. J Opt Soc Am A 2009; 26: 1827–1833.

Rogers ET, Lindberg J, Roy T, Savo S, Chad JE et al. A super-oscillatory lens optical microscope for subwavelength imaging. Nat Mater 2012; 11: 432–435.

Jin NB, Rahmat-Samii Y . Advances in particle swarm optimization for antenna designs: real-number, binary, single-objective and multiobjective implementations. IEEE Trans Antenn Propag 2007; 55: 556–567.

Lerosey G, de Rosny J, Tourin A, Fink M . Focusing beyond the diffraction limit with far-field time reversal. Science 2007; 315: 1120–1122.

Leonhardt U . Perfect imaging without negative refraction. New J Phys 2009; 11: 093040.

Ma YG, Sahebdivan S, Ong CK, Tyc T, Leonhardt U . Evidence for subwavelength imaging with positive refraction. New J Phys 2011; 13: 033016.

Tyc T, Zhang X . Forum optics: perfect lenses in focus. Nature 2011; 480: 42–43.

van Putten EG, Akbulut D, Bertolotti J, Vos WL, Lagendijk A et al. Scattering lens resolves sub-100 nm structures with visible light. Phys Rev Lett 2011; 106: 193905.

Shih Y . Quantum imaging. IEEE J Sel Top Quantum Electron 2007; 13: 1016–1030.

Wang H, Han S, Kolobov MI . Quantum limits of super-resolution of optical sparse objects via sparsity constraint. Opt Express 2012; 20: 23235–23252.

Kolobov MI, Fabre C . Quantum limits on optical resolution. Phys Rev Lett 2000; 85: 3789–3792.

Durnin J, Miceli JJ Jr, Eberly JH . Diffraction-free beams. Phys Rev Lett 1987; 58: 1499–1501.

Fahrbach FO, Simon P, Rohrbach A . Microscopy with self-reconstructing beams. Nat Photon 2010; 4: 780–785.

Acknowledgements

The authors wish to express sincere appreciation and gratitude to Professor S Hell, Professor X Zhang, Professor X Zhuang, Professor Z Liu, Professor N Fang, Dr E G van Putten and Dr Z Wang for valuable discussions and images. This work was financially supported by grants from the National Natural Science Foundation of China (Grant No. 61205160), the Doctoral Fund of the Ministry of Education of China (Grant Nos. 20110101120061 and 20120101130006) and the Scholarship Award for Excellent Doctoral Students granted by the Ministry of Education.

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-Share Alike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/

About this article

Cite this article

Hao, X., Kuang, C., Gu, Z. et al. From microscopy to nanoscopy via visible light. Light Sci Appl 2, e108 (2013). https://doi.org/10.1038/lsa.2013.64
