Abstract

Purpose

44.5% of abstracts presented at biomedical conferences are published. 26.5% of abstracts presented are basic science. The 2005 Walport Report reformed clinical academic training in the United Kingdom (UK) to promote trainee research. This study aims to analyse UK Ophthalmology research output following the reconstruction of clinical academic training.

Patients and Methods

1862 abstracts presented at The Royal College of Ophthalmologists' (RCOphth) Annual Congress from May 2005-May 2012 were examined using PubMed. Publication trends were analysed using SPSS v22 (IBM), using Spearman's rank coefficient and Mann-Whitney U test.

Results

44 (2.4%) abstracts were randomised controlled trials (RCTs), 88 (4.7%) basic science, and 231 (12.4%) oral presentations. 496 (26.6%) abstracts were published, in journals with a mean impact factor (IF) of 2.39 (95% CI 2.21–2.57). Mean time to publication from presentation was 15.17 (13.88–16.46) months, negatively correlating with IF (r=−0.149, P=0.003). Oral presentation (P<0.0001), RCTs (P=0.002), and basic science (P<0.0001) abstracts all made publication significantly more likely, with hazard ratios of 2.63 (2.13–3.24), 2.07 (1.3–3.2), and 1.92 (1.41–2.59), respectively. Higher IF was associated with oral presentation (3.4 vs 2.16, P<0.0001), basic science (3.57 vs 2.35, P<0.0001), and RCTs (4.78 vs 2.38, P=0.002). No significant change in publication rate was seen across the 8 years (P=0.61).

Conclusion

Both the proportion of abstracts presented at the RCOphth Annual Congress that are basic science and the proportion that go on to be published are lower than at other biomedical conferences. RCTs, basic science abstracts, and oral presentations are more likely to be published. There was no improvement in publication rates following the 2005 Walport Report.

Introduction

Peer-reviewed publication is one of the primary means by which research findings are checked for an adequate level of academic rigour before dissemination in the modern scientific community. Conference presentation often precedes publication by several months, though it is not subject to the same level of scrutiny as is applied to journal publications.1 However, these presentations facilitate rapid transmission of ideas through a community of researchers. The process of converting conference presentation to journal publication has been studied and comprehensively reviewed across many fields.1 Reports of UK ophthalmic presentation-to-publication rates are absent from the literature at the time of writing, and there is a paucity of such ophthalmic studies internationally.2, 3, 4 In biomedical research, publication has often been suggested to be influenced by the quality of research, a positive outcome, and the time available to researchers. Lack of time has been reported as the most common reason for non-publication, alongside difficulties between co-authors.5, 6, 7, 8 As such, the rate of conversion from conference presentation to full publication is likely to reflect the amount of resources scientists in a given field can direct towards research output, as well as the quality of research.

In 2005, Dr Mark Walport produced the Walport report, which highlighted the lack of a transparent career structure, lack of flexibility, and lack of structured posts as barriers to the pursuit of a clinical academic career within the UK National Health Service.9, 10 As a result, the National Institute for Health Research (NIHR) Integrated Clinical Academic (ICA) Programme was developed (Figure 1); it sought to promote the role of the clinical scientist by protecting time for clinical academics to focus on research activities while in clinical training, and by protecting clinical training posts when individuals pursue research fellowships.9, 10 The ultimate impact of the Walport report was the development of a transparent, flexible, and structured training pathway (from medical school to independent academic clinician); this left aspirant academic clinicians free to pursue research interests without detriment to their clinical training. The years directly after the introduction of the ICA represent a particularly interesting period in which to study conversion from presentation at scientific meetings to publication in peer-reviewed journals in the UK.

Figure 1. Graphical representation of the National Institute for Health Research (NIHR) Integrated Clinical Academic Programme.9 Academic foundation years offer 33% protected time for research activity; academic clinical fellowships 25%; and clinical lectureships 50%. In Ophthalmology, specialty training is 7 years, rather than 5 years. MB, Bachelor of Medicine; Intercalated BSc, Bachelor of Science degree carried out during undergraduate medical degree; MB/PhD, Doctor of Philosophy carried out during undergraduate medical degree.

The main aim of this study is to evaluate whether there has been an increase in the proportion of abstracts presented at the largest annual UK Ophthalmology conference that are later published in a journal, and to examine the factors that predict publication. As well as offering a snapshot of UK ophthalmic research, we examine trends over time by assessing each annual congress from 2005–2012 and compare outcomes with those in previous literature.

Material and methods

One-thousand eight-hundred and sixty-two abstracts in congress proceedings for each annual meeting of the Royal College of Ophthalmologists from May 2005–May 2012 were reviewed in July 2014. The format of presentation, whether the abstract was a basic science study, and whether it was a randomised controlled trial (RCT) were all recorded. Each abstract was searched for first on PubMed using the abstract title and the first and last names of the authors; if no result was found, a second search on Google Scholar using the full title was performed. The abstracts from the conference proceedings and the published article were then reviewed; if the aims and the methods of the published article contained those stated in the presented abstract (allowing for increases in data size or presented abstracts making up a portion of a larger body of work), the presentation was recorded as being published. For those abstracts achieving peer-reviewed publication, 2012 impact factor (IF) and delay between presentation and publication were recorded; work already published before presentation was given a lag time of 0 months.

These data were then analysed using SPSS v22 (IBM, Armonk, NY, USA). A Kaplan–Meier analysis, using publication as the event of interest, was performed for each univariate variable with a Mantel–Cox test being used to demonstrate statistical significance (P<0.05). Each variable found to be individually significant then underwent multivariate analysis by Cox regression to identify independent predictors of publication and ascertain hazard ratios. Trends over time and between IF and lag time were analysed using the Spearman’s rank correlation coefficient, and differences between the IF of sub-groups were analysed using a Mann–Whitney U test. Results are expressed using 95% confidence intervals in brackets. These non-parametric tests were selected on the basis of the Shapiro–Wilk test of normality. Before analysing differences in IF and the lag time between presentation and publication those abstracts published >24 months after presentation were excluded to reduce bias. This was done given that data collection occurred 24 months after the latest congress studied and so abstracts presented in this congress would not have had the opportunity to demonstrate lag times >24 months. As IF was subsequently found to be negatively correlated with lag time it was felt that abstracts published >24 months after the presentation should also be excluded from IF analysis.
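The Kaplan–Meier step described above can be illustrated with a minimal sketch. It is not the SPSS procedure the authors ran; the function name `kaplan_meier` and the toy data are assumptions made for illustration only. Publication is treated as the event, and abstracts still unpublished at the end of follow-up are treated as censored.

```python
from collections import Counter

def kaplan_meier(times, events):
    """Return [(t, S(t))] at each distinct event time.

    times  -- months from presentation to publication or to end of follow-up
    events -- 1 if the abstract was published at that time, 0 if censored
    """
    n_at_risk = len(times)
    published = Counter(t for t, e in zip(times, events) if e)
    leaving = Counter(times)  # everyone who leaves the risk set at time t
    survival, curve = 1.0, []
    for t in sorted(leaving):
        d = published.get(t, 0)
        if d:
            # Product-limit step: multiply by the fraction not published at t
            survival *= 1 - d / n_at_risk
            curve.append((t, survival))
        n_at_risk -= leaving[t]
    return curve

# Hypothetical toy data, not taken from the study
months = [3, 6, 6, 12, 24, 24, 30, 30]
published_flag = [1, 1, 0, 1, 0, 1, 0, 0]
curve = kaplan_meier(months, published_flag)
for t, s in curve:
    print(f"{t:>2} months: {1 - s:.1%} published")
```

Here S(t) is the estimated proportion still unpublished at time t, so a cumulative publication rate at a given month is 1 − S(t).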

Results

Four-hundred and ninety-six (26.6%) of the 1862 abstracts interrogated were published in journals with a mean IF of 2.39 (2.21–2.57) and with a mean lag time from conference presentation of 15.17 (13.88–16.46) months. Following the exclusion of the 93 abstracts published >24 months after presentation, the mean IF of publishing journal was 2.47 (2.26–2.68) and the mean lag time was 9.83 (9.11–10.55) months. Over time, there was no significant trend in proportion or frequency of publication (P=0.44), IF of publishing journals (P=0.063), or time to publication once those with a lag time >24 months were excluded (P=0.20). No significant correlation was seen across the 8 years in any of the further numerical descriptors of the abstracts found in Table 1 using Spearman’s rank correlation analysis.
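The headline publication rate follows directly from the counts above. The sketch below recomputes it and, purely as an illustration, attaches a normal-approximation (Wald) 95% interval; that interval is not reported in the paper, and the function name `wald_interval` is an assumption.

```python
import math

def wald_interval(successes, total, z=1.96):
    """Proportion with a normal-approximation confidence interval."""
    p = successes / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    return p, p - half_width, p + half_width

# Counts from the text: 496 published out of 1862 presented abstracts
rate, low, high = wald_interval(496, 1862)
print(f"published: {rate:.1%} (95% CI {low:.1%} to {high:.1%})")
```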

Forty-four (2.4%) abstracts were distinguished as RCTs, 88 (4.7%) as basic science, and 231 (12.4%) as oral presentations. On Kaplan–Meier analysis, abstracts that described RCTs (P=0.03), basic science work (P<0.0001), or were orally presented (P<0.0001) were statistically more likely to be published (Figure 2). Twenty-four-month publication rates for RCT and non-RCT abstracts were 36.6% (21.6–51.6%) and 22.3% (20.3–24.3%); for basic science and non-basic science abstracts were 41.3% (31.2–51.4%) and 20.6% (18.6–22.6%); and for oral and poster presented abstracts were 43.3% (36.7–49.9%) and 18.6% (16.6–20.6%). Where the research was performed had no significant effect. Following multivariate analysis of the three significant predictors of publication, these variables maintained their significance and so were all independent predictive factors in our model. Oral presentation (P<0.0001), RCT (P=0.002), and basic science (P<0.0001) content all made publication significantly more likely, with hazard ratios of 2.63 (2.13–3.24), 2.07 (1.3–3.2), and 1.92 (1.41–2.59), respectively.

Figure 2. (a) Kaplan–Meier plot showing time to publication for abstracts presented orally (full line) and by poster (dashed line); the P-value derived from a Mantel–Cox test is displayed. (b) Kaplan–Meier plot showing time to publication for abstracts of randomised controlled trial (RCT) data (full line) and non-randomised controlled trial data (dashed line); the P-value derived from a Mantel–Cox test is displayed. (c) Kaplan–Meier plot showing time to publication for abstracts containing basic science work (full line) and non-basic science work (dashed line); the P-value derived from a Mantel–Cox test is displayed.

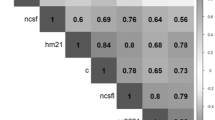

When excluding those abstracts not published from analysis, the IF of the publishing journal was found to correlate negatively with publication lag time with a Spearman’s rank correlation coefficient of −0.149 (P=0.003). The IF of publishing journals was also found to be significantly greater if abstracts were constructed from basic science or RCT data or were presented orally (Figure 3).
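For readers unfamiliar with the statistic, Spearman's rank correlation is the Pearson correlation of the ranks, with tied values sharing their average rank. The sketch below is a plain-Python illustration under that definition; the function names and the toy data are assumptions, and the toy correlation is deliberately much stronger than the −0.149 reported in the study.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Pearson correlation computed on the average ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5

# Hypothetical toy data: publication lag (months) and journal impact factor
lag_months = [2, 5, 9, 14, 20, 24]
impact_factor = [4.1, 3.0, 3.3, 2.2, 2.5, 1.4]
rho = spearman_rho(lag_months, impact_factor)
print(f"rho = {rho:.3f}")
```

A negative value indicates that longer lag times tend to accompany lower impact factors, which is the direction of the association reported above.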

Discussion

The mean publication rate of 26.6% we have described is substantially lower than the mean of 44.5% from the 79 biomedical reports shown in the latest Cochrane review by Scherer et al.1 This disparity is even greater when compared with the 63.0% shown in American ophthalmic conference abstracts by Juzych et al.2, 3, 4

As in the review by Scherer et al,1 abstract follow-up in our study was a minimum of 2 years. Scherer et al also demonstrated that basic science, RCTs, and oral presentation were three significant predictors of publication, with hazard ratios of 1.27 (CI 1.12–1.42), 1.24 (CI 1.14–1.36), and 1.28 (CI 1.09–1.49), respectively. In all, 46.7% of the abstracts they analysed were oral presentations, 26.5% basic science, and 19.2% RCTs; these percentages are roughly four to eight times higher than in our study population and may explain the large disparity in publication rates we observed.1
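The size of the difference in case mix can be checked by dividing the percentages quoted for the Cochrane review by those of the present study (12.4% oral, 4.7% basic science, 2.4% RCT). The sketch below uses only those published figures; the variable names are illustrative.

```python
# Percentages quoted in the text
scherer = {"oral": 46.7, "basic science": 26.5, "RCT": 19.2}
this_study = {"oral": 12.4, "basic science": 4.7, "RCT": 2.4}

# How many times larger each category is in the Cochrane review
ratios = {k: scherer[k] / this_study[k] for k in scherer}
for category, ratio in ratios.items():
    print(f"{category}: {ratio:.1f} times higher")
```

The ratio for oral presentations comes out slightly under four, while those for basic science and RCT abstracts are larger.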

Juzych et al looked at 327 randomly selected abstracts from the 1693 abstracts presented at the 1985 Annual Meeting of the Association for Research in Vision and Ophthalmology (ARVO), an international meeting in the USA. Their follow-up period was 87 months; ours ranged from 26 to 110 months. Juzych et al used MEDLINE, a broadly similar database to PubMed, to search for abstracts using their title and first author. Again, basic science abstracts were published at a significantly higher rate than clinical abstracts (hazard ratio 1.20); however, oral presentation was not a predictor of publication and RCTs were not analysed separately. Sixty-seven percent of abstracts analysed were basic science, nearly 15 times higher than the proportion in our study population. It is reasonable to conclude that the large proportion of basic science abstracts at ARVO may contribute to the difference in publication rates seen.
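The 26 to 110 month follow-up range can be reproduced from the dates given in the methods, assuming each congress is dated to May of its year and the review to July 2014. The helper `months_between` is a hypothetical name used only for this sketch.

```python
def months_between(start, end):
    """Whole months between two (year, month) pairs."""
    (y0, m0), (y1, m1) = start, end
    return (y1 - y0) * 12 + (m1 - m0)

review = (2014, 7)                             # abstracts reviewed in July 2014
shortest = months_between((2012, 5), review)   # latest congress, May 2012
longest = months_between((2005, 5), review)    # earliest congress, May 2005
print(shortest, longest)
```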

Our study highlights the large proportion of biomedical research findings that are never made publicly available. While conference presentation is a step towards dissemination in itself, it leaves little record of its contribution to a field, thereby not maximising the contribution of research subjects and depriving future researchers of a complete picture of what is already known. Discrepancy between submitted and presented abstracts in a UK ophthalmic conference has already been demonstrated; however, the discrepancy between presented and published content is likely much greater.11 The lower rate of engagement with publication that we have observed in UK ophthalmic research forgoes the assurance of scientific rigour that could be provided by peer-reviewed publication.

Trainee-led collaborative research has become a growing area in student research and in surgical specialties; it is yet to gain such popularity in ophthalmic research.12 Adoption of such a research strategy in the future may help improve the efficacy of ophthalmic research, enabling high-powered multi-centre studies to be carried out successfully. Furthermore, the regional nature of training, in which juniors rotate through local centres, lends itself to this strategy and may help improve research quality.

Limitations

As reported by MacKinney and others,5, 6, 7 three key factors contributing to this lower publication rate are the prioritisation of research, the time available to clinicians, and the quality of research produced. With this in mind, it is interesting that no significant change in publication rate was observed from 2005–2012, given the augmentation of the role of the clinical scientist overseen by the newly created NIHR as a result of the Walport report of 2005.9 It should be noted that many of the changes targeted junior trainees and even medical students, who would take 9–16 years to complete an academic training programme with a research fellowship. Therefore, a more accurate assessment of reform may be possible after current trainees have progressed to consultant level.

The surrogate used is likely to allow comment on one result of Walport reforms, protected research time within clinical training, as lack of time is a contributor to low publication rate. However, it does not adequately measure another result of Walport reform, full-time research pursuit while protecting clinical training. Therefore, another outcome of interest might be trends in trainee numbers pursuing training fellowships and clinical scientist fellowships.

A limitation of using this study to compare with existing literature is that we considered one conference with a relatively small proportion of basic science abstracts. International Ophthalmology conferences such as ARVO and the American Academy of Ophthalmology attract presentations of some of the highest quality UK research, a large proportion of which are basic science.2, 3 However, the Royal College of Ophthalmologists Annual Congress was chosen for comparison as it is a conference that traditionally sees a high proportion of trainee-led presentations; authors are therefore likely to have had their training affected by the Walport report.

Furthermore, assignment of abstracts as basic science was arbitrary; those that were interpreted as being a study based “on the bench” were allocated to this category. In reality, many projects are translational research and may fall into both the basic science and clinical research categories.

Conclusion

The proportion of abstracts presented at the Royal College of Ophthalmologists’ annual congress that are published in peer-reviewed journals is substantially lower than that reported in other biomedical conferences; a likely contributor is the clinical nature of the conference. Abstracts containing RCTs or basic science data, or those which are presented orally, are 2–3 times more likely to be published and to be published at a higher IF. There has been no significant change in the proportion of abstracts published or in the IF of the journals in which abstracts were published from 2005–2012. The authors cannot demonstrate any improvement in the rate of publication in UK ophthalmology research following the restructuring of UK clinical research in 2005 after the Walport report.

Re-analysis of the above work and analysis of trends in time out of training for research after a larger time interval from the Walport report is recommended.

References

1. Scherer RW, Langenberg P, von Elm E. Full publication of results initially presented in abstracts. Cochrane Database Syst Rev 2007; 2: MR000005.

2. Juzych MS, Shin DH, Coffey J, Juzych L, Shin D. Whatever happened to abstracts from different sections of the association for research in vision and ophthalmology? Invest Ophthalmol Vis Sci 1993; 34 (5): 1879–1882.

3. Juzych MS, Shin DH, Coffey JB, Parrow KA, Tsai CS, Briggs KS. Pattern of publication of ophthalmic abstracts in peer-reviewed journals. Ophthalmology 1991; 98 (4): 553–556.

4. Scherer RW, Dickersin K, Langenberg P. Full publication of results initially presented in abstracts. A meta-analysis. JAMA 1994; 272 (2): 158–162.

5. MacKinney EC, Chun RH, Cassidy LD, Link TR, Sulman CG, Kerschner JE. Factors influencing successful peer-reviewed publication of original research presentations from the American Society of Pediatric Otolaryngology (ASPO). Int J Pediatr Otorhinolaryngol 2015; 79: 392–397.

6. Hartling L, Craig WR, Russell K, Stevens K, Klassen TP. Factors influencing the publication of randomized controlled trials in child health research. Arch Pediatr Adolesc Med 2004; 158 (10): 983–987.

7. Krzyzanowska MK, Pintilie M, Tannock IF. Factors associated with failure to publish large randomized trials presented at an oncology meeting. JAMA 2003; 290 (4): 495–501.

8. Reysen S. Publication of nonsignificant results: a survey of psychologists' opinions. Psychol Rep 2006; 98 (1): 169–175.

9. UK Clinical Research Collaboration. Medically- and dentally-qualified academic staff: Recommendations for training the researchers and educators of the future. In: Department of Health (ed) 2005. Available from http://www.ukcrc.org/wp-content/uploads/2014/03/Medically_and_Dentally-qualified_Academic_Staff_Report.pdf.

10. Pusey C, Thakker R. Clinical academic medicine: the way forward. Clin Med (Lond) 2004; 4 (6): 483–488.

11. Buchan JC, Spokes DM. Do recorded abstracts from scientific meetings concur with the research presented? Eye (Lond) 2010; 24 (4): 695–698.

12. Bartlett D, Pinkney TD, Futaba K, Whisker L, Dowswell G. Trainee led research collaboratives: pioneers in the new research landscape. BMJ Careers, 2 August 2012. Available from http://careers.bmj.com/careers/advice/Trainee_led_research_collaboratives%3A_pioneers_in_the_new_research_landscape.

Acknowledgements

We thank Chiedu Ufodiama, Rishi Dhand, and James Muggleton for their help with data collection.

Ethics declarations

Competing interests

The authors declare no conflict of interest.

Additional information

This work was presented at the RCOphth Annual Congress 2015.

Cite this article

Okonkwo, A., Hogg, H. & Figueiredo, F. An 8-year longitudinal analysis of UK ophthalmic publication rates. Eye 30, 1433–1438 (2016). https://doi.org/10.1038/eye.2016.138

DOI: https://doi.org/10.1038/eye.2016.138