Abstract

Mindfulness-based programs (MBPs) are widely used to prevent mental ill health. Evidence suggests beneficial average effects but wide variability. We aimed to confirm the effect of MBPs and to understand whether and how baseline distress, gender, age, education, and dispositional mindfulness modify the effect of MBPs on distress among adults in non-clinical settings. We conducted a systematic review and individual participant data (IPD) meta-analysis (PROSPERO CRD42020200117). Databases were searched in December 2020 for randomized controlled trials satisfying a quality threshold and comparing in-person, expert-defined MBPs with passive-control groups. Two researchers independently selected, extracted and appraised trials using the revised Cochrane Risk-of-Bias tool. IPD of eligible trials were sought from authors. The primary outcome was psychological distress (unpleasant mental or emotional experiences including anxiety and depression) at 1 to 6 months after program completion. Data were checked and imputed if missing. Pairwise, random-effects, two-stage IPD meta-analyses were conducted. Effect modification analyses followed a within-studies approach. Stakeholders were involved throughout this study. Fifteen trials were eligible; 13 trialists shared IPD (2,371 participants representing 8 countries). In comparison with passive-control groups, MBPs reduced average distress between 1 and 6 months post-intervention with a small to moderate effect size (standardized mean difference, −0.32; 95% confidence interval, −0.41 to −0.24; P < 0.001; no heterogeneity). Results were robust to sensitivity analyses and similar for the other timepoint ranges. Confidence in the primary outcome result is high. We found no clear indication that this effect is modified by the pre-specified candidates. Group-based teacher-led MBPs generally reduce psychological distress among volunteering community adults. More research is needed to identify sources of variability in outcomes at an individual level.

Main

Depression and other common mental health disorders are among the leading global causes of morbidity, generating a substantial societal burden1. In 2015, 4.4% of the global population was estimated to be suffering from depression and 3.6% was estimated to have anxiety disorders2. The prevalence of anxiety and depression had increased by 14.9% and 18.4%, respectively, from 2005 to 2015 (ref. 3), despite the increase in the provision of treatment for common mental health disorders4. The COVID-19 pandemic has introduced new challenges to global mental health, particularly amongst at-risk individuals such as healthcare workers5, and there are concerns that these will persist beyond the pandemic period, without the right prevention and management approaches6. In general, there is widespread agreement that not enough emphasis is placed on prevention compared with the treatment of disorders7, and shifting to the implementation of preventive interventions must be done with care as they should be evidence based and delivered to a high standard of quality4.

The past decade has seen an expansion of mental health prevention and promotion programs in workplaces, educational establishments and other community settings8. Typically, they target psychological distress, a concept encompassing a range of disturbing or unpleasant mental or emotional experiences that usually include depression and anxiety9. Psychological distress is often an internal response to external stressors when coping mechanisms are overwhelmed. It is also frequently referred to as mental distress, emotional distress or simply stress. If unaddressed, psychological distress can result in mental and physical health disorders10.

MBPs are among the most popular preventive interventions11. It is estimated that 15% of British adults and 20% of Australians have practiced mindfulness meditation at some point in their lives; 5% of adults in the United States did so in 2017 (refs. 12,13). Mindfulness training is offered in over 600 companies globally14 and in 79% of US medical schools15. National health guidelines in England encourage workplaces to help employees access mindfulness, yoga or meditation for their mental well-being16. During the COVID-19 pandemic, international mental health guidelines have been advocating for mindfulness training exercises6,17.

In these contexts, mindfulness is typically defined as “the awareness that emerges through paying attention on purpose, in the present moment, and nonjudgmentally to the unfolding of experience moment by moment”18. Core MBP elements are mindfulness meditation training, doing things mindfully such as eating or brushing one’s teeth, and collective and individual inquiry with a qualified teacher, using participatory learning processes19. MBPs emphasize scientific approaches to health and aim to be suitable for delivery in public institutions in various settings and across cultures.

We recently published a systematic review and aggregate-data (AD) meta-analysis of randomized controlled trials (RCTs) evaluating MBPs in non-clinical settings20. We found that MBPs reduce adults’ average psychological distress compared with no intervention. In our AD meta-analysis, we also assessed whether effects vary as a function of study-level differences, such as MBP type and intensity. However, preliminary evidence suggests that the effectiveness of MBPs also varies as a function of individual, participant-level differences. Thus, there is a need for studies with sample sizes large enough to study such differences properly21,22,23,24,25,26,27. With the current surge of MBP use, it is crucial to move beyond simply studying group-level intervention effects and assess individual variability in MBP training responsiveness28. This could allow MBPs to be targeted at subgroups that will benefit most, maximizing effectiveness and cost-effectiveness and minimizing harm29.

Individual-level factors

One individual-level factor with promising preliminary evidence for modifying MBP effects is pre-intervention psychological distress. Those who start with worse mental health may be the most likely to benefit from MBPs possibly because they tend to have more room for improvement and more motivation. There is evidence that MBPs targeted at stressed, anxious or symptomatic groups have larger effects20,30,31,32,33. In clinical settings, our IPD meta-analysis of mindfulness-based cognitive therapy (MBCT) to prevent recurrent depression relapse has found that those with worse baseline mental health would benefit more34. Another analysis combining three clinical trials has found a similar interaction effect35. However, some studies have found no evidence of such an interaction36.

Gender-specific effects may also be present in psychosocial interventions to promote health37. Some evidence from RCTs and AD meta-analyses suggests that MBPs’ effects on men are smaller than those on women, and multiple explanations for this have been proposed26,38,39,40. However, other studies, including our MBCT IPD meta-analysis, have found no significant influence of gender30,34.

A comparison of meta-analyses of MBPs for children and students with those of MBPs for adults suggests that effects may be larger among younger people20,41,42,43. Although some studies support this suggestion44, age was not a significant effect modifier in the clinical MBCT IPD meta-analysis nor in other studies34,45. Older people may be more engaged in training and therefore more likely to benefit.

The effects of some psychological interventions are known to be moderated by education levels37,46. There are concerns that those with lower education levels may not benefit equally from MBPs because of their language and cultural references47. A meta-analysis has recently shown that highly educated participants benefited more from a workplace MBP than others38. However, education levels did not significantly modify the effect of MBCT in the IPD meta-analysis with clinical populations34.

Another candidate effect modifier is dispositional mindfulness, a construct reflecting an individual’s focus and quality of attention48. Dispositional mindfulness, although very frequently measured, is an inconsistent concept, and it is unclear to what extent changes in dispositional mindfulness are specific to MBPs49,50,51,52. A higher level of dispositional mindfulness may be needed to engage with MBPs, but this may also limit the amount that is gained28. Some studies found that those with greater baseline dispositional mindfulness experienced greater mental health and well-being improvements after having participated in MBPs27,45,53.

Rationale and aims of the study

So far, RCTs assessing MBPs in non-clinical settings have lacked the sample sizes needed to have adequate statistical power to assess individual differences in the effects of MBPs. Combining trials in meta-analyses has solved the sample size problem, but standard meta-regressions and subgroup meta-analyses are unable to avoid aggregation bias. This bias, sometimes referred to as ecological bias or fallacy, occurs when associations between average participant-level characteristics such as gender and the pooled intervention effect do not necessarily reflect the true associations between the participant-level characteristics and the intervention effect54. For example, a standard meta-regression may show that trials with a smaller mean age have a larger effect, but if these trials also happen to deliver longer MBPs, the larger effect may in fact be attributable to the delivery of longer MBPs rather than to having younger participants. Aggregation bias is avoided when interactions are examined at the participant level (that is, based on within-trial information).
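The age and program-length example can be made concrete with a small, noise-free toy simulation. This is only an illustrative sketch: the four trials, their mean ages, program lengths and effect sizes are all hypothetical and are not taken from the included studies. The effect is constructed to depend on program length alone, so any association with age across trials is spurious.

```python
# Toy illustration of aggregation (ecological) bias. All numbers are hypothetical.

def slope(xs, ys):
    """Ordinary least-squares slope of ys on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

# Hypothetical trials: (mean age in years, program length in weeks).
# Trials with younger samples happen to deliver longer programs.
trials = [(25, 12), (35, 10), (45, 8), (55, 6)]

def simulate_trial(mean_age, weeks):
    """Return ages and noise-free outcomes for matched control and MBP participants."""
    ages = [mean_age - 5, mean_age, mean_age + 5]
    effect = -0.05 * weeks                           # benefit depends on length only
    control = [0.01 * age for age in ages]           # age is prognostic in both arms
    treated = [0.01 * age + effect for age in ages]
    return ages, control, treated

trial_mean_ages, trial_effects, within_trial_slopes = [], [], []
for mean_age, weeks in trials:
    ages, control, treated = simulate_trial(mean_age, weeks)
    differences = [t - c for t, c in zip(treated, control)]
    trial_mean_ages.append(mean_age)
    trial_effects.append(sum(differences) / len(differences))
    # Participant-level (within-trial) association between age and effect.
    within_trial_slopes.append(slope(ages, differences))

# Study-level (across-trial) association between mean age and trial effect.
across_trial_slope = slope(trial_mean_ages, trial_effects)
print("across-trial slope:", round(across_trial_slope, 4))
print("within-trial slopes:", [round(s, 4) for s in within_trial_slopes])
```

In this constructed example the across-trial slope is non-zero even though age has no influence on the effect within any trial, which is the pattern that a within-trial analysis is designed to expose.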

As it allows for effect modification testing at the participant level, IPD meta-analysis is regarded as the ideal approach for estimating the modification effects of individual differences22,23,54,55,56,57. This approach is a specific type of systematic review that involves the collection, checking and re-analysis of the original data for each participant in each study58. This supports better-quality data and analysis, allowing for in-depth explorations and robust meta-analytic results, which may differ from those based on AD59.

In summary, IPD allow researchers to explore how intervention effects vary as a function of individual differences without aggregation bias, and the combination of data from multiple studies increases the power to detect such variations. IPD meta-analysis is also ideal for estimating intervention effects because the data can be checked and re-analyzed consistently across all the included samples, RCT data unused in previous analyses can be included, and missing data can be accounted for at the individual level60,61.

We therefore conducted the first, to our knowledge, systematic review and IPD meta-analysis of MBPs for adults in non-clinical settings. We aimed to estimate the effect of MBPs on psychological distress and to compare this with the results of our AD meta-analysis. In addition, crucially, we wanted to answer the following research question: Do our prespecified participant-level characteristics modify the effect of MBPs on psychological distress and, if so, how? Based on previous evidence, existing theories, the likelihood of availability of RCT data, and international comparability, our prespecified candidate effect modifiers were baseline psychological distress, gender, age, education and dispositional mindfulness.

Results

Study selection and characteristics

Figure 1 presents the IPD-specific Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) study selection flow diagram. Combining our database searches from our previous review20 with the updated search, we obtained 21,843 records. We identified 11 additional records from other sources. Selection led to 51 records belonging to 15 trials deemed eligible sources of IPD.

Reproduced with permission from the PRISMA IPD group, which encourages sharing and reuse for non-commercial purposes. *This section was built using a heuristic for quick study selection, whereby the easiest-to-assess criterion, study design, was assessed first and only the studies satisfying this criterion would be assessed for the subsequent criterion, and so on.

Data were considered unavailable from 2 of the 15 eligible trials from which IPD were sought, owing to non-response to multiple contact attempts in one case62 and the authors’ confirmation that data were inaccessible in the other63. Therefore, only the IPD from 13 studies were included, amounting to 2,371 participants. This means we were able to obtain the IPD of 96% of the eligible participants and 87% of the eligible trials. The obtained IPD included two doctoral theses whose results of interest to this review had not yet been published64,65. Five of the trials required a data transfer agreement to be in place before the authors could share the data. Throughout the process of obtaining and analyzing the data and completing the risk-of-bias assessments, the authors were contacted for any clarifications; nine authors were contacted about the data files and formats, and eight were contacted with queries regarding risk of bias.

The main study characteristics are summarized in Table 1. Of the 13 trials included, 4 were cluster RCTs. Publication dates ranged from 2012 to 2022; for two trials66,67, the main papers were published after our search, but their protocols had been identified in the search. Studies were conducted across eight countries: Australia, Canada, Chile, Taiwan, Thailand, the Netherlands, the United Kingdom and the United States.

Sample sizes for individual trials ranged from 44 to 670 participants. Participant types were diverse, ranging from general university and medical and nursing students to teachers, law enforcement officers and healthcare professionals. In keeping with the inclusion of non-clinical participants, only partner data were used from a cluster RCT of lung cancer patients and their partners30, only parent data were used from a trial of parents of children with ADHD67, and only data from non-asthmatic participants were used from an RCT that also recruited asthmatic participants65.

Mean ages reported across studies varied between 19 and 59 years, and females accounted for 11% to 100% of participants across trials. Pooling the IPD observed from all included studies revealed that the median age was 34 (range, 17 to 76; non-normal distribution) and 71% of participants were women. The median number of years of education was 15 (range, 8 to 21; non-normal distribution).

Mean levels of baseline distress and dispositional mindfulness cannot be meaningfully estimated across all studies because different instruments were used to measure them. Considering the most-used psychological distress measure, the 10-item Perceived Stress Scale (6 trials; 1,069 participants, most from the United States), the mean score is 15.48 (s.d., 6.57). This is significantly higher distress (P value (P) < 0.001) than that of a US probability sample of 2,387 adults with a mean of 13.02 (s.d., 6.35) (ref. 68). Scores from 0 to 13 are considered indicative of low stress, whereas those between 14 and 26 are considered indicative of moderate stress69. The higher distress level in our sample could be driven by the fact that half of these MBPs are targeted at groups at risk of being distressed. However, reducing the sample to universal (not targeted) MBPs does not substantially change the mean score (15.10; s.d., 6.45). Considering the most-used dispositional mindfulness measure, the 15-item Mindful Attention Awareness Scale (3 trials; 694 participants, most from the United States), the mean score of 4.29 (s.d., 0.75) is not significantly different (P = 0.23) from that of a comparable, non-clinical sample of 200 US adults (mean, 4.22; s.d., 0.63) (ref. 70). Item-level data for re-calculating total scores were provided for all but two trials, which provided total scores only.
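The two comparisons with external reference samples can be approximated from the summary statistics alone. The text does not state which test was used, so the sketch below assumes a pooled-variance two-sample t-test and, because both comparisons involve several hundred degrees of freedom, approximates the two-sided P value with the standard normal distribution. The function name is hypothetical.

```python
import math

def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample pooled-variance t statistic and a normal-approximation P value."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (mean1 - mean2) / se
    # Assumption: with this many degrees of freedom the t distribution is
    # close to the standard normal, so erfc gives the two-sided P value.
    p = math.erfc(abs(t) / math.sqrt(2))
    return t, p

# 10-item Perceived Stress Scale: pooled IPD versus the US probability sample.
t_pss, p_pss = pooled_t_from_summary(15.48, 6.57, 1069, 13.02, 6.35, 2387)
# 15-item Mindful Attention Awareness Scale: pooled IPD versus 200 US adults.
t_maas, p_maas = pooled_t_from_summary(4.29, 0.75, 694, 4.22, 0.63, 200)

print(f"PSS-10: t = {t_pss:.2f}, P = {p_pss:.3g}")
print(f"MAAS:   t = {t_maas:.2f}, P = {p_maas:.2f}")
```

Under these assumptions the stress comparison is far below the 0.001 threshold and the mindfulness comparison is clearly non-significant, in line with the reported results.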

The most-offered MBP was mindfulness-based stress reduction (MBSR). All studies had to include a passive control, but four had additional active-control groups and these included exercise and health enhancement programs and a stress management course.

All the trials measured psychological distress between 1 and 6 months following intervention completion because this was an eligibility criterion. In addition, all the trials measured post-intervention psychological distress (that is, less than 1 month after program completion). Three trials measured psychological distress beyond 6 months after completion of the intervention; the longest follow-up was 17 months post-intervention71. Effect modifiers gender and age were available for all trials, education level was available for all but one and dispositional mindfulness was measured in 11 of the 13 included studies.

Trials had on average 20% missing data on the primary outcome, ranging from 1% to 54% (Supplementary Table 1). Half of these missing data (that is, 10% of the total data) came from participants with missing data on all the review outcomes. By arm, 19% of MBP primary outcome data were missing, compared with 22% of passive controls and 16% of active controls.

Communications with collaborating trial authors when risk of bias was unclear led to all trials being classified as low risk for domain 1 (risk of bias arising from the randomization process); before the queries, four trials had been assessed as having some concerns. For the second domain (risk of bias due to deviations from the intended interventions), for the comparisons with passive-control groups, conservatively, all trials remained assessed as having a high risk. For active-controlled comparisons, risk was low. For domain 3 (risk of bias due to missing outcome data), 6 of the 13 trials had initially been assessed as having some concerns, whereas the remainder had been assessed as having a low risk. However, subsequently obtaining IPD for all randomized participants and using prespecified multiple missing data imputation and sensitivity analyses of departures from the missing-at-random assumption resulted in all the trials being reappraised as having a low risk of bias because of missing data. Domain 4 (risk of bias in the measurement of the outcome) remained classified as high risk across all trials as the outcomes were self-reported by nature. For the fifth and last domain (risk of bias in the selection of the reported result), 9 of the 13 trials were originally rated as having some concerns, but as the IPD meta-analysis had prespecified analyses, all trials were then rated as low risk. This resulted in uniform ratings across trials: all trials were low risk for domains 1, 3, and 5 and high risk for domain 4. Domain 2 was high risk for all passive-controlled comparisons and low risk for all active-controlled comparisons (Supplementary Table 2).

Intervention effects

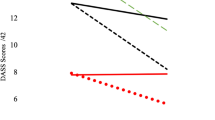

Table 2 shows the results of the IPD meta-analysis assessing the overall effects of MBPs on psychological distress (Fig. 2). In comparison with passive-control groups, on average, MBPs reduce distress between 1 and 6 months post-intervention, our primary outcome (standardized mean difference (SMD), −0.32; 95% confidence interval (CI), −0.41 to −0.24; P < 0.001; 95% prediction interval (PI), −0.41 to −0.24 (no heterogeneity)). The effect size, according to Cohen’s conventional criteria72, is small to moderate, but the confidence intervals are narrow, and there is no evidence of statistical heterogeneity. Results are similar for the other psychological distress timepoint ranges in comparison with passive-control groups. However, there is no evidence that MBPs decrease psychological distress in comparison with active-control groups.
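For readers unfamiliar with the two-stage approach, the following is a minimal sketch under simplifying assumptions, not the analysis code used for this review. Stage one computes a standardized mean difference (Cohen's d with its usual large-sample variance) within each trial; stage two pools the trial estimates with a DerSimonian-Laird random-effects model. The three tiny trials and their scores are hypothetical, and the sketch omits the covariate adjustment, clustering and multiple imputation that a full analysis would need.

```python
import math
from statistics import mean, stdev

def smd_from_ipd(treated, control):
    """Stage 1: Cohen's d and its approximate variance for one trial."""
    n1, n2 = len(treated), len(control)
    pooled_sd = math.sqrt(((n1 - 1) * stdev(treated) ** 2 +
                           (n2 - 1) * stdev(control) ** 2) / (n1 + n2 - 2))
    d = (mean(treated) - mean(control)) / pooled_sd
    variance = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    return d, variance

def dersimonian_laird(effects, variances):
    """Stage 2: random-effects pooled estimate, standard error and tau^2."""
    w = [1 / v for v in variances]
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sum(w)
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(effects) - 1)) / c)   # truncated at zero
    w_star = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * y for wi, y in zip(w_star, effects)) / sum(w_star)
    return pooled, math.sqrt(1 / sum(w_star)), tau2

# Hypothetical distress scores (lower is better): (MBP arm, control arm).
ipd = {
    "trial_a": ([1, 2, 3], [2, 3, 4]),
    "trial_b": ([2, 3, 4], [3, 4, 5]),
    "trial_c": ([0, 1, 2], [1, 2, 3]),
}

stage_one = [smd_from_ipd(mbp, ctrl) for mbp, ctrl in ipd.values()]
effects = [d for d, _ in stage_one]
variances = [v for _, v in stage_one]

pooled, se, tau2 = dersimonian_laird(effects, variances)
ci_low, ci_high = pooled - 1.96 * se, pooled + 1.96 * se
print(f"SMD {pooled:.2f} (95% CI {ci_low:.2f} to {ci_high:.2f}), tau^2 = {tau2:.2f}")
```

Because the hypothetical trials are constructed to have identical effects, the between-trial variance estimate is zero and the random-effects result coincides with a common-effect one.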

Incorporating published data from the two trials for which IPD were not available did not modify the primary outcome’s effect estimate size or significance (15 trials; 2,477 participants; SMD, −0.31; 95% CI, −0.40 to −0.23; P < 0.001; I², 0%). Similar results were obtained by analyzing observed data only (13 trials; SMD, −0.32; 95% CI, −0.41 to −0.23; P < 0.001; I², 0%) and by modeling missing data as 10% or 20% worse than the observed data (for both scenarios: 13 trials; SMD, −0.32; 95% CI, −0.40 to −0.23; P < 0.001; I², 0%). Figure 3 shows that missing outcome scores in the MBP arm would need to be over 50% worse on average than the observed scores to impact the statistical significance of reported effects, and over 70% worse for the direction of the intervention effect to change.
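The logic of this kind of tipping-point analysis can be sketched at the level of arm means. The example below is a deliberately simplified illustration with hypothetical inputs (arm means, a single missingness fraction, fully observed controls); it tracks only the direction of the effect, not its statistical significance, and it is not the model behind Fig. 3.

```python
def adjusted_difference(mbp_observed_mean, control_mean, missing_fraction, delta):
    """Mean difference (MBP minus control) when missing MBP scores are assumed
    to be `delta` (a proportion) worse, that is higher, than observed scores."""
    imputed_mean = mbp_observed_mean * (1 + delta)
    mbp_mean = ((1 - missing_fraction) * mbp_observed_mean
                + missing_fraction * imputed_mean)
    return mbp_mean - control_mean

# Hypothetical inputs: observed distress means of 14 (MBP) and 16 (control),
# with 20% of MBP outcomes missing and controls assumed fully observed.
deltas = [i / 100 for i in range(0, 101)]
differences = [adjusted_difference(14.0, 16.0, 0.20, d) for d in deltas]

# Smallest delta on the grid at which the MBP arm no longer shows lower distress.
tipping_delta = next(d for d, diff in zip(deltas, differences) if diff >= 0)

print("difference at delta = 0.10:", round(differences[10], 2))
print("direction changes at delta =", tipping_delta)
```

The further the assumed departure has to go before the conclusion changes, the more robust the result is to violations of the missing-at-random assumption.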

As a further check, we compared the primary outcome AD and IPD meta-analysis results of the nine trials that overlap in this publication and in our previous review20. Our previous review included 27 trials for this IPD meta-analysis’s primary outcome, and found results similar to those of the IPD meta-analysis, but with much greater heterogeneity. By comparing the same set of nine trials, we aimed to explore whether the heterogeneity could be explained by the assumptions and transformations needed when extracting summary data from publications in aggregate-data meta-analyses as opposed to using IPD. Effect sizes were similar, but the AD meta-analysis was more heterogeneous (SMD, −0.28; 95% CI, −0.44 to −0.12; P = 0.004; I², 40%; 95% PI, −0.62 to 0.07) than the IPD meta-analysis (SMD, −0.32; 95% CI, −0.42 to −0.22; P < 0.001; I², 0%).
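As a reminder of what the I² statistic measures, the sketch below computes Cochran's Q and I² for two hypothetical sets of trial estimates that share the same pooled effect and the same precision but differ in how far the individual estimates spread. The inputs are invented for illustration and do not correspond to the nine overlapping trials.

```python
def heterogeneity(effects, variances):
    """Cochran's Q and the I^2 statistic (as a percentage) for trial estimates."""
    weights = [1 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2 is the share of total variation beyond what chance alone would
    # produce; it is truncated at zero when Q falls below its degrees of freedom.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, i2

variances = [0.01, 0.01, 0.01]   # hypothetical trials with equal precision

q_tight, i2_tight = heterogeneity([-0.4, -0.3, -0.2], variances)
q_spread, i2_spread = heterogeneity([-0.6, -0.3, 0.0], variances)

print(f"tight estimates:  Q = {q_tight:.1f}, I2 = {i2_tight:.0f}%")
print(f"spread estimates: Q = {q_spread:.1f}, I2 = {i2_spread:.0f}%")
```

Both sets pool to the same average effect, which mirrors the pattern described above: similar effect sizes can coexist with very different amounts of heterogeneity.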

According to the Grading of Recommendations Assessment, Development and Evaluation (GRADE) assessment (Table 2 and more details in Supplementary Table 3), confidence in the results emerging from 1 to 6 month follow-up comparisons with passive-control groups (primary outcome) is high. Confidence in the post-intervention results is moderate because of non-reporting bias potentially arising from the exclusion of trials that only reported secondary outcomes. Confidence in the 6+ month follow-up results is low because of non-reporting bias and imprecision (small number of studies), and confidence in the results arising from comparisons with active controls is very low because of non-reporting bias, imprecision, and inconsistency (unexplained heterogeneity).

Effect modifiers

Table 3 summarizes the interaction effects for all outcomes and comparisons. We found no evidence that any prespecified variables modified the effects of MBPs on our primary outcome, psychological distress at 1–6 months post-intervention (Supplementary Figs. 1–5), or any of our secondary outcomes. Because none of the prespecified candidate variables showed evidence of interaction effects, we were unable to build a predictive model to show which profiles could benefit the most.
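A within-studies approach to effect modification can be sketched for a binary modifier such as gender. The difference in effect between subgroups is first estimated inside each trial, and only these within-trial differences are pooled, so between-trial differences cannot drive the result. The subgroup estimates below are hypothetical, the subgroups are assumed independent so that their variances add, and common-effect inverse-variance pooling is used for brevity in place of a random-effects model.

```python
import math

# Hypothetical subgroup estimates per trial: (SMD, variance) for men and women.
subgroup_estimates = {
    "trial_a": {"men": (-0.40, 0.02), "women": (-0.30, 0.02)},
    "trial_b": {"men": (-0.30, 0.02), "women": (-0.40, 0.02)},
    "trial_c": {"men": (-0.35, 0.02), "women": (-0.35, 0.02)},
}

def within_trial_interaction(estimates):
    """Difference in effect (women minus men) within one trial, with its variance."""
    men_smd, men_var = estimates["men"]
    women_smd, women_var = estimates["women"]
    # Independent subgroups: the variance of a difference is the sum of variances.
    return women_smd - men_smd, men_var + women_var

interactions = [within_trial_interaction(e) for e in subgroup_estimates.values()]

# Inverse-variance (common-effect) pooling of the within-trial interactions.
weights = [1 / var for _, var in interactions]
pooled_interaction = (sum(w * diff for w, (diff, _) in zip(weights, interactions))
                      / sum(weights))
pooled_se = math.sqrt(1 / sum(weights))

print(f"pooled interaction: {pooled_interaction:.2f} (s.e. {pooled_se:.2f})")
```

In this constructed example the within-trial differences cancel out, so the pooled interaction is zero even though individual trials point in opposite directions.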

Gender-specific meta-analyses suggest that MBPs reduce distress among both men and women compared with passive controls (for men: SMD, −0.40; 95% CI, −0.55 to −0.25; P < 0.001; for women: SMD, −0.28; 95% CI, −0.38 to −0.18; P < 0.001). Two trials in the primary outcome meta-analysis used student samples. Whereas students are projected to gain more years of education as they progress in their studies, participants selected from the community with the same number of years of education are less likely to gain additional years of education in the future. Thus, although all the interaction models were adjusted by age, we ran a post hoc sensitivity analysis excluding student trials to explore the possibility that students confounded education–MBP interactions. We found no interaction effect difference between this restricted analysis (SMD, 0.02; 95% CI, −0.03 to 0.08; P < 0.40) and that which includes all studies.

Discussion

Our IPD meta-analysis found evidence that MBPs generate, on average, a small to moderate reduction in adults’ psychological distress, lasting for at least 6 months in each of the represented settings, in comparison with no intervention. Based on the GRADE assessment, our confidence in these results is high. There was no clear indication that this effect is modified by baseline psychological distress, age, gender, education level or dispositional mindfulness.

Intervention effects

Our intervention effect results encourage the implementation of teacher-led MBPs for adults in non-clinical settings, and we have not found subgroups or settings in which they may be less efficacious. However, the average effect is small to moderate, and it is difficult to ascertain clinical significance because we have combined different instruments, although the effect size is within the range that has been proposed for defining minimally important difference based on effect size73. Also, our intervention effect results only estimate average effects across participants. There is evidence that some people may not benefit or may even experience harm74.

Despite our primary outcome results being positive, without active comparisons or blinded controls, we cannot confidently say that this is due to mindfulness training. Furthermore, in our exploratory analysis, we found no clear evidence that MBPs are superior to other interventions in mental health promotion. These results are aligned with those of our AD meta-analysis, which included up to 51 active-controlled trials20, and a meta-review of MBP meta-analyses22. Therefore, the specificity of MBP effects is still unclear.

Our findings do not extend to automated or self-guided MBPs such as those delivered through smartphone applications, books, CDs, etc. The lack of human interaction and teacher guidance may substantially modify their effectiveness and safety75. Despite the popularity of app-based mindfulness courses, potentially driven by cost and accessibility advantages14,76, the evidence base is still developing77. Our findings may partially extend to teleconference-based MBPs, but implementation research should better define this.

Similarly, our findings are limited to voluntary MBPs and should not be automatically extended to other types of offerings. The evidence so far on compulsory MBPs for adults, for example, an MBP required as part of medical school courses, is scarce and does not show benefit78. Furthermore, a recent large and well-conducted trial assessing a non-clinical MBP for adolescents, delivered as part of the school curriculum, showed no added benefit to well-being compared with normal school provision79.

Effect modifiers

Our findings on effect modification are remarkably similar to those of our IPD meta-analysis of MBCT to prevent recurrent depression relapse: no evidence was found of the effect-modifying role of age, sex, education or dispositional mindfulness on MBP effects34. However, the depression relapse IPD meta-analysis found some evidence suggesting a relative increment in effect with worse baseline mental health status, which was not replicated in the current non-clinical study.

Other study designs, mainly individual trials and AD meta-analyses, have found different results for all of these potential effect modifiers (for example, refs. 26,27,36,38,39,40). However, study designs other than the IPD meta-analysis suffer from methodological shortcomings that make interaction analyses of potential effect modifiers at the individual participant level less reliable80,81.

Our results may help in the interpretation of evidence that MBPs targeted at at-risk or stressed groups are more effective in reducing depression and anxiety than universal MBPs. Our AD meta-analysis found that indicated MBPs (for individuals with subclinical symptoms of mental health conditions) and selective MBPs (for those at higher risk of developing mental health problems, such as carers) were more beneficial in preventing anxiety and depression than universal MBPs, although no such differences were found for psychological distress20. Another AD meta-analysis found similar results for depressive symptoms among university students82. Our current IPD meta-analysis suggests that this greater effect of targeted MBPs is not related to those more distressed at baseline obtaining more benefits but could instead be due to differences in the types of MBPs or their teachers (for example, therapists teaching selective or indicated MBPs). Another explanation could be that depression and anxiety questionnaires may suffer from floor effects among those less distressed, of which there are more in universal interventions, whereas psychological distress questionnaires may retain sensitivity along this mental health spectrum. Alternatively, those with higher baseline distress may be more responsive to MBP effects, but they may also have less time to devote to mindfulness practice after the course, so the extra effects may not materialize after the course ends.

Individuals with fewer than 12 years of education were underrepresented in our dataset, so we cannot exclude the possibility that they benefit differently from MBPs. Our sample reflects the wider situation: the average rates of educational attainment for the individuals in MBSR and MBCT trials tend to be greater than the average for the US population83,84. Individuals with low educational attainment may not have equal access to MBPs. This could happen at least in part because of the aforementioned offering of MBPs by universities and workplaces, granting those enrolled in higher-degree programs and working for well-resourced employers access to MBPs while leaving the wider public with limited access. MBPs available to the wider public may be less common than MBPs behind the paywall of being enrolled in higher-degree programs or employed by well-resourced employers. Our results warrant efforts to adapt and thoroughly evaluate MBPs for wider audiences.

Our IPD has a good age range among adults, although those above 70 years old were underrepresented, so our findings cannot reliably extend to them. Men were underrepresented, making up 29% of the participants, which reflects other MBP trials very precisely83,84. Given that we found MBPs to be effective among men, more research is needed to identify barriers to access or engagement among men. Factors such as the gender of teachers and peers may contribute to engagement; in the case of MBPs within trials, the attractiveness of the control condition (for example, physical exercise) or researchers’ characteristics may play roles.

A key limitation of IPD meta-analyses is that the effect modifiers that can be assessed are limited to those that the existing trials measured. Other effect modifiers may be at play, such as participant expectations and beliefs, group and setting dynamics, and personality and cognitive factors28. Socio-economic and cultural factors may also be key. Although our country spread was good, low- and middle-income countries are underrepresented, and low- and middle-income populations within countries may be underrepresented too. Future qualitative research could identify and prioritize the most promising potential effect modifiers85.

In order to reduce the likelihood of spurious effect modification results, we had to limit our analyses to a handful of potential effect modifiers, and we could not assess any nuances within these (for example, different dimensions of baseline psychological distress). Also, our assessed effect modifiers may have shown significant results with more trials included. We hope that these limitations are addressed in future IPD meta-analyses as trials in the field continue to accumulate.

Confidence in the results

This IPD meta-analysis was preceded by a comprehensive systematic review, the methods were prespecified, and various risks of bias were mitigated. These aspects increased the robustness of our results86. We were able to obtain IPD for 96% of the eligible participants, over the 90% mark, which is seen as a reliable indicator of low risk of selection bias86,87. In line with recent work88, results were robust to sensitivity analyses including AD for the two eligible trials from which we could not obtain IPD.

Confidence in the primary outcome result for the intervention effect in the IPD meta-analysis is high according to the GRADE assessment, meaning that further research is unlikely to change this result. The assessment is markedly higher than that of our AD meta-analysis20. Several aspects explain this difference. In contrast to the AD meta-analysis, the trials included in the IPD meta-analysis passed an a priori quality threshold. Limiting inclusion to higher-quality trials can make a meta-analysis more robust87. Many of our AD meta-analysis results were sensitive to trial quality, which encouraged us to limit our IPD meta-analysis in this way. We acknowledge, however, that the revised Cochrane Risk-of-Bias tool (RoB 2) has not been validated as a scale, so we could not use validated cut-off points for selecting trials, and risk-of-bias domains may not be interchangeable89. The risk of bias of all trials was reduced further by using IPD.

The consistency of the results also contributed to the GRADE rating. AD meta-analyses rely on published data, which limits the ability to check it and forces analysts to make transformations and strong assumptions of a varied, complex and hard-to-prespecify nature. These AD meta-analysis limitations increase the chance of biases and errors, decreasing the reliability of results and sometimes inflating heterogeneity. The differences between our AD and IPD meta-analyses illustrate this. The narrow prediction intervals of our primary outcome contrast with those of our previous AD meta-analysis. In the latter, we found similar results, but given the heterogeneity between studies, the findings did not support the generalization of MBP effects across every represented setting20. The study-level moderators that we investigated in the AD meta-analysis, such as program characteristics or type of population targeted, were not able to fully explain the observed heterogeneity. Similarly, we have not found strong evidence of individual-level effect modifiers in the IPD meta-analysis reported here. Instead, methodological factors may have contributed to the increased precision of both confidence and prediction intervals in the IPD meta-analysis compared with the AD meta-analysis, significantly reducing heterogeneity.

The methodological aspects of our study described above illustrate many of the advantages of IPD meta-analyses over AD meta-analyses. Specifically, they show the strengths of using IPD to re-calculate summary measures and of applying the same summary measures, sample types, and imputation methods to all the trials. The gains in consistency and reliability tend to compensate for the extra time and resource involved in collecting IPD, which usually limits the number of studies that can be included in IPD meta-analyses in comparison with their AD counterparts. Contemporary open-data initiatives mean that increasing amounts of IPD will be readily available from public data repositories, which will undoubtedly make IPD meta-analyses more feasible and faster over time.

However, despite the use of IPD, there are remaining risk-of-bias concerns regarding the lack of blinding and self-reported outcomes. These are inherent to the nature of the research field: it is extremely difficult to effectively blind participants to real-life psychosocial interventions, and psychological distress is an inherently subjective outcome. Future research could consider alternative assessment approaches, such as clinician-rated or partner-rated measures of psychological distress. In addition, downstream effects of psychological distress, such as work absenteeism or health problems, could be considered. Future trials should also take into account RoB 2 domains and trial reporting checklists when planning their trials in order to increase quality and thereby confidence in their results. Publicly registering a trial protocol ahead of data collection that pre-specifies a primary outcome measure and a primary timepoint, with a primary outcome data analysis plan, could go a long way in this sense. Missing data problems would be greatly mitigated if trialists could encourage participants to complete outcome surveys even when they abandon the MBP.

Confidence in results arising from actively controlled comparisons is very low. These comparisons included very few studies, so results are unreliable. Also, our search excluded studies in which the only comparisons tested were those with active-control groups, potentially biasing the results for this comparison. In any case, it is hard to interpret the effects of MBPs using comparisons with a mix of active-control groups because different control interventions may have different specific effects that may overlap differently with those of the MBPs. Similarly, the effect modification results for this comparison are hard to interpret because the effects of each active-control intervention may be modified in different ways. Confidence will increase as new trials in the field accumulate and we are able to synthesize evidence comparing MBPs with specific interventions, rather than with a mix of interventions.

Time and resource constraints meant that we had to exclude several trials that reported only secondary outcome results. We report these secondary outcomes anyway for completeness and for exploratory purposes, but we acknowledge that the limited inclusion could have biased these results, hence the reduced GRADE confidence in them. Relatedly, we defined the primary outcome timepoint range as 1 to 6 months post-intervention and chose the longest follow-up within this window in order to focus on effects that are widely reported yet likely to be more stable than immediate effects. These decisions were reasoned and predefined, but ultimately arbitrary.

Conclusion

In contrast to those who take no action, community adults who choose to take part in group-based, teacher-led MBPs will generally experience a reduction in their psychological distress. Based on the trials accrued so far, we found no clear indication that baseline distress, gender, age, education level or dispositional mindfulness will modify this effect, but further research on MBP effect modification factors is needed.

Methods

The protocol for this work was prospectively registered (PROSPERO registration number CRD42020200117) and published90. Methodological details can be found in the protocol publication and are briefly described below. This study is reported in accordance with the relevant PRISMA guidelines91,92. This publication has considered the Global Code of Conduct, a code of ethics for equitable research partnerships. Because this project only involved the use of secondary anonymized data from other research studies, it did not require ethics committee approval.

A public stakeholder group has provided input throughout the life of this project, initially by providing feedback on the study protocol and screening and extracting records as research partners. Then, they contributed to the interpretation, dissemination, and output of the study findings, co-creating a film summarizing the study methodology and key findings and co-authoring a paper (in preparation) detailing their experience in acting as stakeholders on an IPD meta-analysis project. We have also involved a group of professional stakeholders to form an advisory group.

Study search and selection

The search for eligible studies followed the same protocol as our previous review20, in which 13 databases (AMED, ASSIA, CENTRAL, CINAHL, ERIC, EThOS, EMBASE, MEDLINE, ProQuest, PsycINFO, Scopus, Web of Science and the World Health Organization International Clinical Trials Registry Platform) were electronically searched with no publication date limits. We updated this search in December 2020 using the search strategies prespecified in our IPD meta-analysis protocol90. In addition to studies obtained through database searches, the 136 trials included in our previous review were screened for eligibility against the IPD meta-analysis inclusion criteria. Moreover, authors invited to share IPD made us aware of further publications linked to their main trial publications.

The review inclusion criteria, applied at the study level, were (1) parallel-arm RCTs, including cluster RCTs; (2) group-based first-generation MBPs19, with a minimum intensity of four 1 h in-person teacher-led sessions or equivalent; (3) passive-control groups, such as no intervention or waitlists, or treatment as usual, which the MBP arm also had to have access to; (4) adult (18+ year old) participants living in the community who were not specifically selected for having any particular clinical condition; (5) self-reported psychological distress measured between 1 and 6 months after MBP completion; (6) at least one of the following candidate effect modifiers being reported: baseline psychological distress, gender, age, education and dispositional mindfulness; and (7) a maximum of two risk-of-bias sources rated as ‘high’, before having obtained IPD, according to RoB 2 (ref.93).

Our inclusion criteria were deliberately narrower in scope than our previous AD meta-analysis review criteria, rendering our IPD meta-analysis feasible and allowing for a more focused and high-quality analysis. For feasibility purposes, we had to limit our IPD meta-analysis inclusion criteria to those trials reporting the primary outcome, excluding those trials only reporting secondary outcomes. We have also excluded trials which only compared MBPs with active-control groups, but we did include trials that had active-control groups if they also compared MBPs with a passive-control group.

Retrieved records (first abstracts, then full texts) were screened independently by two reviewers (J.G. and C.F.) using Covidence94 against all criteria except criterion (7). Then, multiple records from the same trial were combined and appraised using RoB 2 to apply criterion (7). Any discrepancies in screening or rating decisions were discussed and resolved within the research team.

Data collection and processing

Two independent reviewers (J.G. and C.F.) extracted study-level characteristics from publications. We contacted the authors of eligible trials and invited them to collaborate, offering co-authorship on any publications resulting from their shared trial data. Initial contact by email included the review protocol and instructions on which data were being requested and how to transfer the data. We requested final and baseline scores for the outcome measure and the available prespecified effect modifiers. Where trials used more than one measure for the same outcome, we requested the more psychometrically robust outcome. Where trials measured the same outcome multiple times within our timepoint range of interest (for example, the 2 and 4 month follow-up), we requested the longest follow-up to reduce heterogeneity within the range, as well as to focus on the effects that are likely to be more stable.
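The timepoint rule described above can be illustrated with a minimal Python sketch that picks, for one trial, the longest follow-up falling within the 1 to 6 month window. The function name, the representation of follow-ups as months after program completion and the inclusive window boundaries are assumptions made for illustration; they are not taken from the study code.

```python
def primary_timepoint(followup_months, window=(1.0, 6.0)):
    """Return the longest follow-up (in months) inside the window, or None."""
    low, high = window  # inclusive boundaries are an assumption of this sketch
    eligible = [m for m in followup_months if low <= m <= high]
    return max(eligible) if eligible else None


# Hypothetical trial measured at post-intervention (0), 2, 4 and 12 months.
print(primary_timepoint([0, 2, 4, 12]))  # prints 4
```

A trial with no measurement inside the window returns None here, which corresponds to a trial contributing only to secondary outcome timepoints.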

We asked authors to share anonymized IPD for all randomized participants, including any data which may have been omitted from trial publications or analyses. We requested data for individual scale items rather than calculated total scores, without imputation of missing data. Data transfers were completed using the University of Cambridge Transfer Server of the Secure Data Hosting Service, an ISO 27001-certified safe haven for sensitive data. When transfers were finished, one reviewer (C.F.) checked all trial data files with guidance from a second reviewer (J.G.).

We initially checked files in the original format they were sent in (SPSS, Excel) and again after importing into Stata. First, we confirmed that all randomized participants were present by checking trial publications and registries. Second, we checked all files for any missing variables. Third, we checked that scores were for individual scale items, not total scores, and whether these were reversed or imputed, whether data were missing and how this was indicated in the original file, and whether there were any extreme values or inconsistent items (for example, unusually old or young participants). Where authors also transferred a data dictionary, we compared it with the data files and standardized it across studies. Where we had questions or needed clarification, we contacted the authors. Any changes to the original data files, such as the removal of ineligible participants, were discussed between two reviewers (J.G. and C.F.) and recorded. Once we completed checking all trial data files, we standardized them into a prespecified format using Stata.

Once the data files were standardized, we calculated the demographics and descriptive statistics of each trial and timepoint and compared them with those of trial publications; we discussed any discrepancies, and if they remained unresolved, we contacted the trial authors for further information. Then, we conducted analyses of individual trials and compared them with published analyses, discussing any discrepancies with the trial authors. For studies in which IPD were not available, two independent reviewers extracted AD from trial publications. Trials used different categories for collecting education-level data, so we estimated years of education based on the education system of the country in which each trial was conducted.

Outcomes and risk-of-bias assessment

The primary outcome of this meta-analysis is self-reported psychological distress measured between 1 and 6 months after program completion using psychometrically valid questionnaires (for example, Perceived Stress Scale, General Health Questionnaire, Depression, Anxiety and Stress Scale). Psychological distress was the most measured and robust outcome of our AD meta-analysis20 and is normally distributed in the general population. Rather than focusing on any particular set of mental health symptoms, psychological distress transcends the diagnostic categories traditionally used in psychiatry and is a general indicator of mental health deterioration9.

Secondary outcomes are psychological distress measures taken less than 1 month after program completion (post-intervention) or beyond 6 months after program completion. Post-intervention timepoints were considered to be secondary outcomes because they do not inform stable changes; therefore, they are less useful for understanding the real-life impact of MBPs and the factors modifying these effects in a stable fashion. Secondary outcome analyses were considered exploratory.

The reviewers selecting the studies independently assessed whether the trial outcomes included measures of psychological distress. Disagreements were resolved via consensus between two senior team members (T.D. and P.B.J.) blinded to the trial results and to which trial used each measure, before IPD were requested. Measure validity was ascertained by cross-referencing the validity and reliability studies cited in the trial publications. Where measures had been translated from the original language, validity studies of the translated measures were additionally checked. As trials used different instruments to measure psychological distress, we standardized them using z scores.
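A minimal Python sketch of z-score standardization is given here for illustration. The text does not specify which mean and standard deviation served as the reference, so this sketch assumes standardization within each trial using the sample mean and sample SD of the scores supplied; the trial labels and score values are hypothetical.

```python
from statistics import mean, stdev


def z_scores(scores):
    """Standardize raw questionnaire scores to mean 0 and SD 1."""
    m = mean(scores)
    s = stdev(scores)  # sample SD (n - 1 denominator); an assumption of this sketch
    return [(x - m) / s for x in scores]


# Hypothetical raw totals from two trials that used different instruments.
trial_scores = {
    "trial_A_PSS": [12, 18, 25, 30, 15],
    "trial_B_GHQ": [2, 5, 9, 11, 3],
}
standardized = {trial: z_scores(vals) for trial, vals in trial_scores.items()}
```

After this step, scores from different instruments are expressed in SD units, which is what allows intervention effects to be combined as standardized mean differences.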

The main analysis compared MBPs with a combination of all the passive-control groups. We chose this as the main comparison because it makes the interpretation of potential modification effects more straightforward. Including active controls would make results hard to interpret because the effects of each control intervention may be modified in different ways. A comparison with passive-control groups allows for a better understanding of MBP effect modification per se. Notwithstanding, if the included trials also compared MBPs with other interventions, these were grouped under the comparator ‘active control’ for exploratory analyses.

Relying initially on trial publications, two reviewers (J.G. and C.F.) independently assessed trials’ risk of bias using RoB 2 for RCTs applied to the review outcomes93,95. This tool considers bias due to (1) randomization, (2) deviations from intended interventions, (3) missing outcome data, (4) measurement of the outcome and (5) selection of the reported result. We assessed cluster RCTs with their specific sub-set of questions on RoB 2. Once IPD had been obtained, we updated the risk-of-bias assessments for individual studies (for example, the risk was lowered if IPD included participants missing in published trial reports) and discussed any unclear information with study authors. Finally, we used the GRADE approach to assess the confidence in the accumulated evidence96. It categorizes the quality of evidence into four levels of certainty: high, moderate, low, and very low. For each outcome, we considered trials’ risk of bias, meta-analysis non-reporting bias, imprecision (CIs), inconsistency (prediction intervals) and indirectness of evidence.

Analytic approach

To calculate the overall MBP effect, we performed two-stage IPD meta-analyses54. Meta-analyses were univariate for the timepoint ranges for which data from all the trials were available; otherwise, they were multivariate, including all available timepoint ranges to make the most efficient use of available data97.

For the first stage of each IPD meta-analysis, we conducted linear regressions separately by trial to estimate the intervention effects following the intention-to-treat principle. We used the analysis of covariance estimate as an effect measure (final score adjusted for baseline score and the available prespecified effect modifiers)54,98. We treated questionnaire scores as continuous variables.
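The first-stage model can be sketched in Python as an ordinary least-squares regression of the final score on treatment allocation and baseline score, fitted separately in each trial. This is a simplified illustration: it adjusts for baseline score only (the analyses described above also adjusted for the available prespecified effect modifiers), it ignores clustering and imputation, and the function name and example values are hypothetical.

```python
import math


def ancova_effect(final, baseline, treat):
    """OLS fit of final ~ intercept + treat + baseline for one trial.

    Returns the adjusted treatment effect and its standard error.
    """
    n = len(final)
    my, mx, mt = (sum(v) / n for v in (final, baseline, treat))
    # Centered sums of squares and cross-products.
    sxx = sum((x - mx) ** 2 for x in baseline)
    stt = sum((t - mt) ** 2 for t in treat)
    sxt = sum((x - mx) * (t - mt) for x, t in zip(baseline, treat))
    sxy = sum((x - mx) * (y - my) for x, y in zip(baseline, final))
    sty = sum((t - mt) * (y - my) for t, y in zip(treat, final))
    det = stt * sxx - sxt ** 2
    b_treat = (sty * sxx - sxy * sxt) / det
    b_base = (sxy * stt - sty * sxt) / det
    intercept = my - b_treat * mt - b_base * mx
    rss = sum(
        (y - intercept - b_treat * t - b_base * x) ** 2
        for y, x, t in zip(final, baseline, treat)
    )
    # Residual variance uses n - 3 degrees of freedom (three fitted parameters).
    se_treat = math.sqrt(rss / (n - 3) * sxx / det)
    return b_treat, se_treat


# Hypothetical standardized scores for eight participants (1 = MBP, 0 = control).
baseline = [1.2, 0.4, -0.3, 0.8, -1.0, 0.1, 0.9, -0.6]
treat = [1, 0, 1, 0, 1, 0, 1, 0]
final = [0.6, 0.5, -0.7, 0.9, -1.2, 0.2, 0.3, -0.4]
effect, se = ancova_effect(final, baseline, treat)
```

Each trial then contributes one pair (effect, se) to the second stage.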

For the second stage of the IPD meta-analyses, we combined the intervention effects from each trial using pairwise random-effects meta-analyses within comparator categories. We used restricted maximum likelihood to estimate heterogeneity in the intervention effect and quantified heterogeneity using approximate prediction intervals99.
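The second stage can be sketched as follows. This Python example estimates the between-trial variance by restricted maximum likelihood using a standard fixed-point iteration, pools the trial effects with inverse-variance weights, and computes an approximate 95% prediction interval as the pooled effect plus or minus a t critical value (k − 2 degrees of freedom, for k trials) times the square root of the sum of the between-trial variance and the squared standard error of the pooled effect. The function name, the Wald-type confidence interval, the requirement that the caller supplies the t critical value and the example inputs are assumptions of this sketch, not details taken from the study code.

```python
import math
from statistics import NormalDist


def reml_meta(effects, ses, t_crit, max_iter=200, tol=1e-10):
    """Random-effects pooling with a REML estimate of tau^2.

    t_crit is the 97.5th percentile of the t distribution with
    len(effects) - 2 degrees of freedom, supplied by the caller.
    """
    v = [s ** 2 for s in ses]
    tau2 = 0.0
    for _ in range(max_iter):
        w = [1 / (vi + tau2) for vi in v]
        mu = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
        num = sum(wi ** 2 * ((yi - mu) ** 2 - vi) for wi, yi, vi in zip(w, effects, v))
        # REML fixed-point update, truncated at zero.
        new_tau2 = max(0.0, num / sum(wi ** 2 for wi in w) + 1 / sum(w))
        converged = abs(new_tau2 - tau2) < tol
        tau2 = new_tau2
        if converged:
            break
    w = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    se_mu = math.sqrt(1 / sum(w))
    z = NormalDist().inv_cdf(0.975)
    half_pi = t_crit * math.sqrt(tau2 + se_mu ** 2)
    return {
        "pooled": mu,
        "se": se_mu,
        "tau2": tau2,
        "ci": (mu - z * se_mu, mu + z * se_mu),
        "pi": (mu - half_pi, mu + half_pi),
    }


# Hypothetical first-stage estimates from five trials; 3.182 is the
# 97.5th percentile of the t distribution with 5 - 2 = 3 degrees of freedom.
result = reml_meta(
    effects=[-0.45, -0.20, -0.35, -0.28, -0.10],
    ses=[0.12, 0.10, 0.15, 0.09, 0.20],
    t_crit=3.182,
)
```

When the estimated between-trial variance is zero, the prediction interval is wider than the confidence interval only because of the larger t critical value.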

We imputed missing data following a prespecified plan54,100,101. Multiple imputation (multivariate imputation by chained equations) was performed separately by trial and by randomized group within each trial. We only imputed data for participants for whom data on other review outcomes were available, and we used these other outcomes as auxiliary variables (except in the sensitivity analyses assessing departures from the missing-at-random assumption, in which we imputed all missing outcome data; see below). In order not to increase between-study heterogeneity, we used the same set of covariates in the imputation models across studies54: the psychological distress outcomes and prespecified effect modifiers measured by that study. We performed 50 imputations per study, which was at least equal to the percentage of incomplete cases. More details are found in our protocol90.

We performed a series of sensitivity analyses exploring missing data for the primary outcome. One analysis incorporated published AD from trials for which IPD were unavailable into the second stage of the two-stage IPD meta-analysis60. Another sensitivity analysis was performed using no imputed data. We assessed departures from the missing-at-random assumption in sensitivity analyses by increasing all imputed psychological distress scores by 10% and 20% and by increasing them by 10–90% in the intervention arm only, given that MBP participants experiencing deterioration may have been less willing to complete outcome measures than passive-control-group participants, who may have expected to feel worse.
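The missing-not-at-random scenarios described above can be sketched in Python as a multiplicative shift applied to imputed scores only. The record layout, the arm labels, the function name and the 10-percentage-point step between 10% and 90% are assumptions made for illustration. The sketch also assumes non-negative raw questionnaire scores, for which a percentage increase always means higher distress.

```python
def shift_imputed(records, pct, arm=None):
    """Return a copy of records with imputed distress scores raised by pct percent.

    If arm is given, only imputed scores in that arm are shifted.
    Observed (non-imputed) scores are never changed.
    """
    shifted = []
    for rec in records:
        rec = dict(rec)  # copy so the input records are left untouched
        if rec["imputed"] and (arm is None or rec["arm"] == arm):
            rec["distress"] = rec["distress"] * (1 + pct / 100)
        shifted.append(rec)
    return shifted


# Hypothetical records from one imputed dataset.
records = [
    {"arm": "MBP", "distress": 20.0, "imputed": True},
    {"arm": "control", "distress": 20.0, "imputed": True},
    {"arm": "MBP", "distress": 15.0, "imputed": False},
]

# Scenarios shifting imputed scores in both arms.
both_arms = {pct: shift_imputed(records, pct) for pct in (10, 20)}
# Scenarios shifting imputed scores in the intervention arm only.
mbp_only = {pct: shift_imputed(records, pct, arm="MBP") for pct in range(10, 100, 10)}
```

Each shifted dataset would then be re-analysed with the same two-stage procedure to see whether the pooled effect changes materially.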

The effect modification analyses assessed the potential modifiers of interest one by one following a within-studies approach80,102. For each effect modifier, a treatment-by-participant covariate interaction term was incorporated in the intervention effect trial regression models. Then, the estimated interactions were combined in a random-effects meta-analysis. We estimated subgroup-specific intervention effects by repeating the analysis procedure using the interaction parameters derived from the within-studies approach.
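The within-studies logic can be illustrated with a minimal Python sketch: the treatment-by-covariate interaction is estimated inside each trial, and only these interaction estimates are combined across trials, so that between-trial differences in covariate distributions cannot drive the pooled result. For brevity, the sketch pools with a common-effect inverse-variance average, whereas the analyses described above used a random-effects model. All function names and example values are hypothetical.

```python
import math


def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular design matrix")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [value / p for value in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def ols(X, y):
    """Ordinary least squares; returns coefficients and their standard errors."""
    n, p = len(X), len(X[0])
    xtx = [[sum(row[a] * row[b] for row in X) for b in range(p)] for a in range(p)]
    xty = [sum(row[a] * yi for row, yi in zip(X, y)) for a in range(p)]
    inv = invert(xtx)
    beta = [sum(inv[a][b] * xty[b] for b in range(p)) for a in range(p)]
    resid = [yi - sum(b * x for b, x in zip(beta, row)) for row, yi in zip(X, y)]
    s2 = sum(r * r for r in resid) / (n - p)
    se = [math.sqrt(s2 * inv[a][a]) for a in range(p)]
    return beta, se


def trial_interaction(rows):
    """rows: (final, baseline, treat, modifier) tuples from ONE trial.

    Returns the treatment-by-modifier interaction estimate and its SE.
    """
    X = [[1.0, t, b, z, t * z] for _, b, t, z in rows]
    y = [row[0] for row in rows]
    beta, se = ols(X, y)
    return beta[4], se[4]


def pool_interactions(estimates):
    """Common-effect inverse-variance pooling of (estimate, se) pairs."""
    weights = [1 / se ** 2 for _, se in estimates]
    pooled = sum(w * est for w, (est, _) in zip(weights, estimates)) / sum(weights)
    return pooled, math.sqrt(1 / sum(weights))


# Hypothetical within-trial interaction estimates from three trials.
pooled, pooled_se = pool_interactions([(-0.05, 0.08), (0.02, 0.10), (-0.01, 0.06)])
```

In practice, trial_interaction would be applied to each trial's data in turn and its outputs passed to the pooling step.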

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The aggregate data are publicly available103. For individual trial data availability, please refer to the relevant trial publication.

Code availability

The statistical code is publicly available103.

References

Vos, T. et al. Global, regional, and national incidence, prevalence, and years lived with disability for 301 acute and chronic diseases and injuries in 188 countries, 1990-2013: a systematic analysis for the Global Burden of Disease Study 2013. Lancet 386, 743–800 (2015).

World Health Organization. Depression and Other Common Mental Disorders: Global Health Estimates https://apps.who.int/iris/handle/10665/254610 (World Health Organization, 2017).

Vos, T. et al. Global, regional, and national incidence, prevalence, and years lived with disability for 310 diseases and injuries, 1990–2015: a systematic analysis for the Global Burden of Disease Study 2015. Lancet 388, 1545–1602 (2016).

Jorm, A. F., Patten, S. B., Brugha, T. S. & Mojtabai, R. Has increased provision of treatment reduced the prevalence of common mental disorders? Review of the evidence from four countries. World Psychiatry 16, 90–99 (2017).

Cenat, J. M. et al. Prevalence of symptoms of depression, anxiety, insomnia, posttraumatic stress disorder, and psychological distress among populations affected by the COVID-19 pandemic: a systematic review and meta-analysis. Psychiatry Res. 295, 113599 (2021).

Vadivel, R. et al. Mental health in the post-COVID-19 era: challenges and the way forward. Gen. Psychiatry 34, e100424 (2021).

World Health Organization. Mental Health Action Plan 2013-2020 https://www.who.int/publications/i/item/9789241506021 (World Health Organization, 2013).

Samele, C. Increasing momentum in prevention of mental illness and mental health promotion across Europe. BJPsych Int. 13, 22–23 (2016).

Lo Moro, G., Soneson, E., Jones, P. B. & Galante, J. Establishing a theory-based multi-level approach for primary prevention of mental disorders in young people. Int. J. Environ. Res. Public Health 17, 9445 (2020).

Russ, T. C. et al. Association between psychological distress and mortality: individual participant pooled analysis of 10 prospective cohort studies. BMJ 345, e4933 (2012).

Burke, A., Lam, C. N., Stussman, B. & Yang, H. Prevalence and patterns of use of mantra, mindfulness and spiritual meditation among adults in the United States. BMC Complement. Altern. Med. 17, 316 (2017).

Simonsson, O., Fisher, S. & Martin, M. Awareness and experience of mindfulness in Britain. Sociol. Res. Online 26, 833–852 (2020).

Dib, J., Comer, J., Wootten, A. & Buhagiar, K. State of Mind 2021 Report (Smiling Mind, 2021).

Jacobs, E. Are free meditation apps the answer for stressed staff? Financial Times (2020).

Barnes, N., Hattan, P., Black, D. S. & Schuman-Olivier, Z. An examination of mindfulness-based programs in US medical schools. Mindfulness 8, 489–494 (2017).

National Institute for Health and Care Excellence. Mental Wellbeing at Work (NG212): NICE guideline https://www.nice.org.uk/guidance/ng212 (National Institute for Health and Care Excellence, 2022).

World Health Organization. Doing What Matters in Times of Stress https://www.who.int/publications/i/item/9789240003927 (World Health Organization, 2020).

Kabat-Zinn, J. Full Catastrophe Living, Revised Edition: How to Cope with Stress, Pain and Illness Using Mindfulness Meditation 2 edn (Piatkus, 2013).

Crane, R. S. et al. What defines mindfulness-based programs? The warp and the weft. Psychol. Med. 47, 990–999 (2017).

Galante, J. et al. Mindfulness-based programmes for mental health promotion in adults in non-clinical settings: a systematic review and meta-analysis of randomised controlled trials. PLoS Med. 18, e1003481 (2021).

Keng, S. L., Smoski, M. J. & Robins, C. J. Effects of mindfulness on psychological health: a review of empirical studies. Clin. Psychol. Rev. 31, 1041–1056 (2011).

Goldberg, S. B., Sun, S. & Davidson, R. J. The empirical status of mindfulness based interventions: a systematic review of 44 meta-analyses of randomized controlled trials. Perspect. Psychol. Sci. 17, 108–130 (2020).

De Vibe, M., Bjørndal, A., Tipton, E., Hammerstrøm, K. & Kowalski, K. Mindfulness based stress reduction (MBSR) for improving health, quality of life, and social functioning in adults. Campbell Syst. Rev. 8, 1–127 (2012).

Davidson, R. J. Mindfulness-based cognitive therapy and the prevention of depressive relapse: measures, mechanisms, and mediators. JAMA Psychiatry 73, 547–548 (2016).

Ospina, M. B. et al. Meditation practices for health: state of the research. Evid. Rep. Technol. Assess. 155, 1–263 (2007).

Rojiani, R., Santoyo, J. F., Rahrig, H., Roth, H. D. & Britton, W. B. Women benefit more than men in response to college-based meditation training. Front. Psychol. 8, 551 (2017).

Shapiro, S. L., Brown, K. W., Thoresen, C. & Plante, T. G. The moderation of mindfulness-based stress reduction effects by trait mindfulness: results from a randomized controlled trial. J. Clin. Psychol. 67, 267–277 (2011).

Tang, R. & Braver, T. S. Towards an individual differences perspective in mindfulness training research: theoretical and empirical considerations. Front. Psychol. 11, 818 (2020).

Burton, H., Sagoo, G. S., Pharoah, P. D. P. & Zimmern, R. L. Time to revisit Geoffrey Rose: strategies for prevention in the genomic era? Ital. J. Public Health 9, e8665–8661 (2012).

Schellekens, M. P. J. et al. Mindfulness-based stress reduction added to care as usual for lung cancer patients and/or their partners: a multicentre randomized controlled trial. Psychooncology 26, 2118–2126 (2017).

Hofmann, S. G., Sawyer, A. T., Witt, A. A. & Oh, D. The effect of mindfulness-based therapy on anxiety and depression: a meta-analytic review. J. Consult. Clin. Psychol. 78, 169–183 (2010).

Roos, C. R., Bowen, S. & Witkiewitz, K. Baseline patterns of substance use disorder severity and depression and anxiety symptoms moderate the efficacy of mindfulness-based relapse prevention. J. Consult. Clin. Psychol. 85, 1041–1051 (2017).

Khoury, B. et al. Mindfulness-based therapy: a comprehensive meta-analysis. Clin. Psychol. Rev. 33, 763–771 (2013).

Kuyken, W. et al. Efficacy of mindfulness-based cognitive therapy in prevention of depressive relapse: an individual patient data meta-analysis from randomized trials. JAMA Psychiatry 73, 565–574 (2016).

Ter Avest, M. J. et al. Added value of mindfulness-based cognitive therapy for depression: a tree-based qualitative interaction analysis. Behav. Res. Ther. 122, 103467 (2019).

Fiocco, A. J., Mallya, S., Farzaneh, M. & Koszycki, D. Exploring the benefits of mindfulness training in healthy community-dwelling older adults: a randomized controlled study using a mixed methods approach. Mindfulness 10, 737–748 (2018).

Whitehead, M. A typology of actions to tackle social inequalities in health. J. Epidemiol. Community Health 61, 473–478 (2007).

Vonderlin, R., Biermann, M., Bohus, M. & Lyssenko, L. Mindfulness-based programs in the workplace: a meta-analysis of randomized controlled trials. Mindfulness 11, 1579–1598 (2020).

Galante, J. et al. A mindfulness-based intervention to increase resilience to stress in university students (the Mindful Student Study): a pragmatic randomised controlled trial. Lancet Public Health 3, e72–e81 (2018).

De Vibe, M. et al. Mindfulness training for stress management: a randomised controlled study of medical and psychology students. BMC Med. Educ. 13, 107 (2013).

Dawson, A. F. et al. Mindfulness-based interventions for university students: a systematic review and meta-analysis of randomized controlled trials. Appl. Psychol. Health Well Being 12, 384–410 (2020).

Dunning, D. et al. The effects of mindfulness-based interventions on cognition and mental health in children and adolescents: a meta-analysis of randomised controlled trials. J. Child Psychol. Psychiatry 60, 244–258 (2019).

Dunning, D. et al. Do mindfulness-based programmes improve the cognitive skills, behaviour and mental health of children and adolescents? An updated meta-analysis of randomised controlled trials. Evid. Based Ment. Health 25, 135–142 (2022).

Nyklicek, I. & Irrmischer, M. For whom does mindfulness-based stress reduction work? Moderating effects of personality. Mindfulness 8, 1106–1116 (2017).

Greeson, J. M. et al. Decreased symptoms of depression after mindfulness-based stress reduction: potential moderating effects of religiosity, spirituality, trait mindfulness, sex, and age. J. Altern. Complement. Med. 21, 166–174 (2015).

Ebert, D. D. et al. Does Internet-based guided-self-help for depression cause harm? An individual participant data meta-analysis on deterioration rates and its moderators in randomized controlled trials. Psychol. Med. 46, 2679–2693 (2016).

Russell, L. et al. Relevance of mindfulness practices for culturally and linguistically diverse cancer populations. Psychooncology 28, 2250–2252 (2019).

Rau, H. K. & Williams, P. G. Dispositional mindfulness: a critical review of construct validation research. Person. Individ. Differ. 93, 32–43 (2016).

Goldberg, S. B. et al. What can we learn from randomized clinical trials about the construct validity of self-report measures of mindfulness? A meta-analysis. Mindfulness 10, 775–785 (2019).

Grossman, P. & Van Dam, N. T. Mindfulness, by any other name…: trials and tribulations of sati in western psychology and science. Contemp. Buddhism 12, 219–239 (2011).

Quaglia, J. T., Braun, S. E., Freeman, S. P., McDaniel, M. A. & Brown, K. W. Meta-analytic evidence for effects of mindfulness training on dimensions of self-reported dispositional mindfulness. Psychol. Assess. 28, 803–818 (2016).

Baer, R., Gu, J., Cavanagh, K. & Strauss, C. Differential sensitivity of mindfulness questionnaires to change with treatment: a systematic review and meta-analysis. Psychol. Assess. 31, 1247–1263 (2019).

Gawrysiak, M. J. The many facets of mindfulness and the prediction of change following mindfulness-based stress reduction (MBSR). J. Clin. Psychol. 74, 523–535 (2018).

Riley, R. D., Tierney, J. F. & Stewart, L. A. Individual Participant Data Meta-Analysis: a Handbook for Healthcare Research (Wiley, 2021).

Hingorani, A. D. et al. Prognosis research strategy (PROGRESS) 4: stratified medicine research. BMJ 346, e5793 (2013).

Ioannidis, J. Next-generation systematic reviews: prospective meta-analysis, individual-level data, networks and umbrella reviews. Br. J. Sports Med. 51, 1456–1458 (2017).

Tierney, J. F., Fisher, D. J., Burdett, S., Stewart, L. A. & Parmar, M. K. B. Comparison of aggregate and individual participant data approaches to meta-analysis of randomised trials: an observational study. PLoS Med. 17, e1003019 (2020).

Higgins, J. P. T. et al. Cochrane Handbook for Systematic Reviews of Interventions Version 6.1. www.training.cochrane.org/handbook (Cochrane, 2020).

Tudur Smith, C. et al. Individual participant data meta-analyses compared with meta-analyses based on aggregate data. Cochrane Database Syst. Rev. 9, MR000007 (2016).

Riley, R. D., Lambert, P. C. & Abo-Zaid, G. Meta-analysis of individual participant data: rationale, conduct, and reporting. BMJ 340, c221 (2010).

Oxman, A. D., Clarke, M. J. & Stewart, L. A. From science to practice: meta-analyses using individual patient data are needed. JAMA 274, 845–846 (1995).

Vieten, C. & Astin, J. Effects of a mindfulness-based intervention during pregnancy on prenatal stress and mood: results of a pilot study. Arch. Womens Ment. Health 11, 67–74 (2008).

Phang, C. K., Mukhtar, F., Ibrahim, N., Keng, S. L. & Mohd Sidik, S. Effects of a brief mindfulness-based intervention program for stress management among medical students: the Mindful-Gym randomized controlled study. Adv. Health Sci. Educ. Theory Pract. 20, 1115–1134 (2015).

Aeamla-Or, N. The Effect of Mindfulness-Based Stress Reduction on Stress, Depression, Self-Esteem and Mindfulness in Thai Nursing Students: a Randomised Controlled Trial. PhD thesis, Univ. Newcastle (2015).

Kral, T. R. A. et al. Impact of short- and long-term mindfulness meditation training on amygdala reactivity to emotional stimuli. Neuroimage 181, 301–313 (2018).

MacKinnon, A. L. et al. Effects of mindfulness-based cognitive therapy in pregnancy on psychological distress and gestational age: outcomes of a randomized controlled trial. Mindfulness 12, 1173–1184 (2021).

Siebelink, N. M. et al. A randomised controlled trial (MindChamp) of a mindfulness-based intervention for children with ADHD and their parents. J. Child Psychol. Psychiatry 63, 165–177 (2022).

Cohen, S. & Williamson, G. M. in The Social Psychology of Health (eds Spacapan S. & Oskamp S.) (Sage, 1988).

New Hampshire Department of Administrative Services Perceived Stress Scale https://www.das.nh.gov/wellness/docs/percieved%20stress%20scale.pdf (2016).

Brown, K. W. & Kasser, T. Are psychological and ecological well-being compatible? The role of values, mindfulness, and lifestyle. Soc. Indic. Res. 74, 349–368 (2005).

van Dijk, I. et al. Effects of mindfulness-based stress reduction on the mental health of clinical clerkship students: a cluster-randomized controlled trial. Acad. Med. 92, 1012–1021 (2017).

Cohen, J. Statistical Power Analysis for the Behavioral Sciences 2nd edn (Lawrence Erlbaum Associates, 1988).

Kazis, L. E., Anderson, J. J. & Meenan, R. F. Effect sizes for interpreting changes in health status. Med. Care 27, S178–S189 (1989).

Britton, W. B., Lindahl, J. R., Cooper, D. J., Canby, N. K. & Palitsky, R. Defining and measuring meditation-related adverse effects in mindfulness-based programs. Clin. Psychol. Sci. 9, 1185–1204 (2021).

Montero-Marin, J. et al. Teachers ‘finding peace in a frantic world’: an experimental study of self-taught and instructor-led mindfulness program formats on acceptability, effectiveness, and mechanisms. J. Educ. Psychol. 113, 1689–1708 (2021).

Perez, S. Meditation and mindfulness apps continue their surge amid pandemic TechCrunch https://techcrunch.com/2020/05/28/meditation-and-mindfulness-apps-continue-their-surge-amid-pandemic/ (2020).

Gal, E., Stefan, S. & Cristea, I. A. The efficacy of mindfulness meditation apps in enhancing users’ well-being and mental health related outcomes: a meta-analysis of randomized controlled trials. J. Affect. Disord. 279, 131–142 (2021).

Damião Neto, A., Lucchetti, A. L. G., da Silva Ezequiel, O. & Lucchetti, G. Effects of a required large-group mindfulness meditation course on first-year medical students’ mental health and quality of life: a randomized controlled trial. J. Gen. Intern. Med. 35, 672–678 (2019).

Kuyken, W. et al. Effectiveness and cost-effectiveness of universal school-based mindfulness training compared with normal school provision in reducing risk of mental health problems and promoting well-being in adolescence: the MYRIAD cluster randomised controlled trial. Evid. Based Ment. Health 25, 99–109 (2022).

Fisher, D. J., Carpenter, J. R., Morris, T. P., Freeman, S. C. & Tierney, J. F. Meta-analytical methods to identify who benefits most from treatments: daft, deluded, or deft approach? BMJ 356, j573 (2017).

Button, K. S. et al. Power failure: why small sample size undermines the reliability of neuroscience. Nat. Rev. Neurosci. 14, 365–376 (2013).

Ma, L., Zhang, Y. & Cui, Z. Mindfulness-based interventions for prevention of depressive symptoms in university students: a meta-analytic review. Mindfulness 10, 2209–2224 (2019).

Waldron, E. M., Hong, S., Moskowitz, J. T. & Burnett-Zeigler, I. A systematic review of the demographic characteristics of participants in US-based randomized controlled trials of mindfulness-based interventions. Mindfulness 9, 1671–1692 (2018).

Eichel, K. et al. A retrospective systematic review of diversity variables in mindfulness research, 2000–2016. Mindfulness 12, 2573–2592 (2021).

Frank, P. & Marken, M. Developments in qualitative mindfulness practice research: a pilot scoping review. Mindfulness 13, 17–36 (2021).

Wang, H. et al. The methodological quality of individual participant data meta-analysis on intervention effects: systematic review. BMJ 373, n736 (2021).

Tierney, J. F. et al. Individual participant data (IPD) meta-analyses of randomised controlled trials: guidance on their use. PLoS Med. 12, e1001855 (2015).

Tsujimoto, Y. et al. No consistent evidence of data availability bias existed in recent individual participant data meta-analyses: a meta-epidemiological study. J. Clin. Epidemiol. 118, 107–114.e105 (2020).

Savović, J. et al. Association between risk-of-bias assessments and results of randomized trials in Cochrane Reviews: the ROBES Meta-Epidemiologic Study. Am. J. Epidemiol. 187, 1113–1122 (2018).

Galante, J., Friedrich, C., Dalgleish, T., White, I. R. & Jones, P. B. Mindfulness-based programmes for mental health promotion in adults in non-clinical settings: protocol of an individual participant data meta-analysis of randomised controlled trials. BMJ Open 12, e058976 (2022).

Shamseer, L. et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: elaboration and explanation. BMJ 350, g7647 (2015).

Stewart, L. A. et al. Preferred reporting items for systematic review and meta-analyses of individual participant data: the PRISMA-IPD Statement. JAMA 313, 1657–1665 (2015).

Sterne, J. A. C. et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ 366, l4898 (2019).

Covidence systematic review software (Veritas Health Innovation, 2019).

RoB2 Development Group Revised Cochrane Risk-of-Bias Tool for Randomized Trials (RoB 2) https://sites.google.com/site/riskofbiastool/welcome/rob-2-0-tool/current-version-of-rob-2?authuser=0 (2018).

Guyatt, G. et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ 336, 924–926 (2008).

Mavridis, D. & Salanti, G. A practical introduction to multivariate meta-analysis. Stat. Methods Med. Res. 22, 133–158 (2013).

McKenzie, J. E., Herbison, G. P. & Deeks, J. J. Impact of analysing continuous outcomes using final values, change scores and analysis of covariance on the performance of meta-analytic methods: a simulation study. Res. Synth. Methods 7, 371–386 (2016).

Riley, R. D., Higgins, J. P. T. & Deeks, J. J. Interpretation of random effects meta-analyses. BMJ 342, d549 (2011).

Debray, T. P. et al. Get real in individual participant data (IPD) meta-analysis: a review of the methodology. Res. Synth. Methods 6, 293–309 (2015).

White, I. R., Royston, P. & Wood, A. M. Multiple imputation using chained equations: issues and guidance for practice. Stat. Med. 30, 377–399 (2011).

Riley, R. D. et al. Individual participant data meta-analysis to examine interactions between treatment effect and participant-level covariates: statistical recommendations for conduct and planning. Stat. Med. 39, 2115–2137 (2020).

Galante, J. et al. Individual Participant Data Systematic Review and Meta-analysis of Randomised Controlled Trials Assessing Adult Mindfulness-Based Programmes for Mental Health Promotion in Non-clinical Settings - Electronic Dataset and Code https://doi.org/10.17605/OSF.IO/F9UPX (Center for Open Science, 2023).

Barrett, B. et al. Meditation or exercise for preventing acute respiratory infection: a randomized controlled trial. Ann. Fam. Med. 10, 337–346 (2012).

Barrett, B. et al. P02.36. Meditation or exercise for preventing acute respiratory infection: a randomized controlled trial. BMC Complement. Altern. Med. https://doi.org/10.1186/1472-6882-12-S1-P92 (2012).

Obasi, C. N. et al. Advantage of meditation over exercise in reducing cold and flu illness is related to improved function and quality of life. Influenza Other Respir. Viruses 7, 938–944 (2013).

Zgierska, A. et al. P02.57. Mindfulness meditation versus exercise in the prevention of acute respiratory infection, possible mechanisms of action: a randomized controlled trial. BMC Complement. Altern. Med. https://doi.org/10.1186/1472-6882-12-S1-P113 (2012).

Zgierska, A. et al. Randomized controlled trial of mindfulness meditation and exercise for the prevention of acute respiratory infection: possible mechanisms of action. Evid. Based Complement. Altern. Med. 2013, 1–14 (2013).

Hayney, M. S. et al. Age and psychological influences on immune responses to trivalent inactivated influenza vaccine in the meditation or exercise for preventing acute respiratory infection (MEPARI) trial. Hum. Vaccines Immunother. 10, 2759–2767 (2014).

Rakel, D. et al. Value associated with mindfulness meditation and moderate exercise intervention in acute respiratory infection: the MEPARI Study. Fam. Pract. 30, 390–397 (2013).

Barrett, B. et al. Meditation or exercise for preventing acute respiratory infection (MEPARI-2): a randomized controlled trial. PLoS ONE 13, e0197778 (2018).

Maxwell, L., Barrett, B., Chase, J., Brown, R. & Ewers, T. Self-reported mental health predicts acute respiratory infection. WMJ 114, 100–104 (2015).

Hayney, M. S. et al. Serum IFN-γ-induced protein 10 (IP-10) as a biomarker for severity of acute respiratory infection in healthy adults. J. Clin. Virol. 90, 32–37 (2017).

Meyer, J. D. et al. Benefits of 8-wk mindfulness-based stress reduction or aerobic training on seasonal declines in physical activity. Med. Sci. Sports Exerc. 50, 1850–1858 (2018).

Barrett, B. Predictors of mindfulness meditation and exercise practice, from MEPARI-2, a randomized controlled trial. Mindfulness 10, 1842–1854 (2019).

Meyer, J. D., Hayney, M. S., Coe, C. L., Ninos, C. L. & Barrett, B. P. Differential reduction of IP-10 and C-reactive protein via aerobic exercise or mindfulness-based stress-reduction training in a large randomized controlled trial. J. Sport Exerc. Psychol. 41, 96–106 (2019).

Christopher, M. S. et al. Mindfulness-based resilience training to reduce health risk, stress reactivity, and aggression among law enforcement officers: a feasibility and preliminary efficacy trial. Psychiatry Res. 264, 104–115 (2018).

Hunsinger, M., Christopher, M. & Schmidt, A. M. Mindfulness training, implicit bias, and force response decision-making. Mindfulness 10, 2555–2566 (2019).

Ribeiro, L. et al. Differential impact of mindfulness practices on aggression among law enforcement officers. Mindfulness 11, 734–745 (2020).

Errazuriz, A. et al. Effects of mindfulness-based stress reduction on psychological distress in health workers: a three-arm parallel randomized controlled trial. J. Psychiatr. Res. 145, 284–293 (2022).

Turner, L. et al. Immune dysregulation among students exposed to exam stress and its mitigation by mindfulness training: findings from an exploratory randomised trial. Sci. Rep. 10, 1–11 (2020).

Bóo, S. J. M. et al. A follow-up study to a randomised control trial to investigate the perceived impact of mindfulness on academic performance in university students. Couns. Psychother. Res. 20, 286–301 (2019).

Galante, J. et al. Effectiveness of providing university students with a mindfulness-based intervention to increase resilience to stress: one-year follow-up of a pragmatic randomised controlled trial. J. Epidemiol. Community Health 75, 151–160 (2020).

Huang, S. L., Li, R. H., Huang, F. Y. & Tang, F. C. The potential for mindfulness-based intervention in workplace mental health promotion: results of a randomized controlled trial. PLoS ONE 10, e0138089 (2015).

Hwang, Y.-S. et al. Mindfulness-based intervention for educators: effects of a school-based cluster randomized controlled study. Mindfulness 10, 1417–1436 (2019).

Kral, T. R. A. et al. Mindfulness-based stress reduction-related changes in posterior cingulate resting brain connectivity. Soc. Cogn. Affect. Neurosci. 14, 777–787 (2019).

Hirshberg, M. J., Goldberg, S. B., Rosenkranz, M. & Davidson, R. J. Prevalence of harm in mindfulness-based stress reduction. Psychol. Med. 52, 1–9 (2020).

Baird, B., Riedner, B. A., Boly, M., Davidson, R. J. & Tononi, G. Increased lucid dream frequency in long-term meditators but not following MBSR training. Psychol. Conscious. (Wash. D.C.) 6, 40–54 (2019).

Tomfohr-Madsen, L. M. et al. Mindfulness-based cognitive therapy for psychological distress in pregnancy: study protocol for a randomized controlled trial. Trials 17, 498 (2016).

Schellekens, M. P. J. et al. Mindfulness-based stress reduction added to care as usual for lung cancer patients and their partners: a randomized controlled trial. J. Thorac. Oncol. 12, S1416–S1417 (2017).

Schellekens, M. P. J. et al. Mindfulness-based stress reduction in addition to treatment as usual for patients with lung cancer and their partners: results of a multi-centre randomized controlled trial. Int. J. Behav. Med. 23, S231 (2016).

Siebelink, N. M. et al. Mindfulness for Children with ADHD and Mindful Parenting (MindChamp): a qualitative study on feasibility and effects. J. Atten. Disord. 25, 1931–1942 (2021).

van Dijk, I. et al. Effect of mindfulness training on the course of psychological distress and positive mental health of medical students during their clinical clerkships. A cluster-randomized controlled trial. Int. J. Behav. Med. 23, S86 (2016).

Acknowledgements