Abstract

Digital twins, a nascent yet potent computer technology, can substantially advance sustainable ocean management by mitigating overfishing and habitat degradation, modeling and preventing marine pollution, and supporting climate adaptation by safely assessing marine geoengineering alternatives. Concomitantly, digital twins may facilitate multi-party marine spatial planning. However, the potential of this emerging technology for such purposes is underexplored and yet to be realized, with just one notable project, entitled European Digital Twins of the Ocean. Here, we consider the promise of digital twins for ocean sustainability across four thematic areas. We further emphasize implementation barriers, namely data availability and quality, compatibility, and cost. Regarding oceanic data availability, we note the issues of spatial coverage, depth coverage, temporal resolution, and limited data sharing, underpinned, among other factors, by insufficient knowledge of marine processes. Inspired by the prospects of digital twins, and informed by impending difficulties, we propose to improve the availability and quality of data about the oceans, to take measures to ensure data standardization, and to prioritize implementation in areas of high conservation value by following the ‘nested enterprises’ approach.

Introduction

Oceans, and the ecosystem services they yield, are fundamental to human life. They provide 10–12% of the world’s population with livelihoods1 and support three billion people with protein from seafood2,3. They regulate the Earth’s climate by absorbing ~30% of carbon dioxide produced by human activities4, and they serve as home to a diverse array of flora and fauna5, with an estimated 230,000 marine species described to date6.

Yet humans are persistently degrading, destabilizing, and debilitating oceanic ecosystems7,8. Marine environments are polluted with waste, chemicals, oil spills, invasive organisms, and particulates. There are currently 5.25 trillion pieces of plastic in the world’s oceans, growing at 8 million tons per year9. The ensuing destruction of marine habitats, such as coral reefs and mangroves, has grave consequences for the plants and animals they support10,11. Indeed, 10% of global coral reefs, which house 25% of marine species, have been destroyed and another 60% are at risk. Overfishing, accounting for ~23% of global seafood production12, and climate change, which exacerbates ocean acidification and circulation pattern anomalies, are threatening marine life further13,14.

As a response, emerging computer technologies have been proposed to improve ocean sustainability. Sensors and monitoring systems are already collecting copious amounts of data on oceanic properties. For instance, the Ocean Observatories Initiative uses Acoustic Doppler Current Profilers, Conductivity-Temperature-Depth sensors, fluorometers and turbidity sensors to provide continuous, high-resolution measurements of physical, biochemical, and geological properties of the Northeastern Pacific Ocean, Central and Southern California Current Systems and Juan de Fuca Plate15. These data inform ocean planning toward better governance. The European Space Agency’s Sentinel satellite mission provides data on a range of parameters, including sea surface temperature, ocean color, and sea ice cover16. Argo robotic floats (Array for Real-time Geostrophic Oceanography), drifting at depths of 1 to 2 km, register dissolved oxygen and nitrate, and incoming solar radiation levels to improve our understanding of ocean CO2 uptake and climate change impacts17. Together with Geographic Information Systems (GIS) software, such technologies help identify areas of conservation value. For instance, the Ocean Health Index18, a GIS-based tool, is used to assess the health of oceans, from global to local scales, and recognize areas that need protection.

Nonetheless, the trends of overfishing and marine pollution endure, at the risk of driving more than half of the world’s marine species to the brink of extinction by the end of the century. These risks have urged the UN to declare a state of oceanic emergency and prompted a call to scale-up ocean action founded on science, technology, and innovation19.

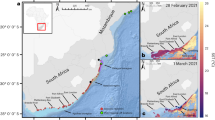

Against this backdrop, in this Perspective we examine whether Digital Twins (DTs), an innovative and advanced computer technology, built upon previously deployed hardware and software platforms, may provide a stepping stone to achieving ocean sustainability. Weighing DTs’ promise and presumed potency in advancing ocean sustainability across four thematic areas (see Fig. 1), we proceed to emphasize barriers that may hinder their implementation. We pay particular attention to data availability and quality constraints, underpinned by scientific gaps in physical and biochemical oceanography. Finally, we highlight several measures to alleviate these barriers.

Benefits of the virtual ocean

DTs are virtual representations of living and non-living entities, and the systems within which such entities are embedded. Enabled by advances in computing capabilities, DTs exist as computer-simulated models. Deployment of sensors that detect biochemical and physical properties of entities in real time ensures that the digital counterparts of these measured entities are accurate and ‘live’20. In such coupled cyber-physical systems, changes that occur in ‘physical’ real-world objects (e.g., the biotic and abiotic components of estuaries, coral reefs or the deep sea) modify their virtual replicas, or ‘twins’, simultaneously and continuously21.

Initially implemented in product and process engineering22,23,24,25, in recent years DTs have been used outside their origin domains to model and simulate multi-component, highly dynamic systems, including ecosystems and the atmosphere26,27, and have been proposed for promoting sustainability writ large28.

If integrated with artificial intelligence (AI) and advanced modeling techniques, namely autonomous agents, DTs may be continuously interrogated for optimal system behaviors to support decision-makers. Autonomous agents embedded in virtual replica systems would use the current state of a system as input, simulate numerous control sequences to determine which aligns best with the control objective (e.g., prevent overfishing), predict future action sequences that optimize system behavior, and advise stakeholders overseeing and intervening in the ‘real-life’ system, for instance, a fishery29. Combining DTs with autonomous agents will have profound implications for marine management, offering possible remedies to overfishing and pollution concerns.
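The control loop described above (take the current state, simulate candidate control sequences, pick the one that best meets the objective, advise, repeat) can be illustrated with a deliberately small sketch. Everything in it is an assumption made for illustration rather than a method drawn from the cited work: the stock follows a textbook logistic growth model, the growth rate `r`, carrying capacity `K`, candidate quotas, planning horizon, and biomass floor are hypothetical values, and candidate sequences are enumerated exhaustively rather than searched by a learning agent.

```python
import itertools


def step(biomass, quota, r=0.4, K=1000.0):
    """Advance the stock by one season: harvest first, then logistic regrowth.

    r (growth rate) and K (carrying capacity) are illustrative values only.
    Returns the new biomass and the catch actually taken.
    """
    catch = min(quota, biomass)  # cannot harvest more than exists
    remaining = biomass - catch
    regrowth = r * remaining * (1.0 - remaining / K)
    return remaining + regrowth, catch


def best_first_action(biomass, quotas=(0.0, 50.0, 100.0, 150.0),
                      horizon=4, floor=400.0):
    """Enumerate every quota sequence over the horizon and return the first
    quota of the sequence with the largest total catch, subject to the stock
    never ending a season below `floor`. Returns 0.0 if no sequence is safe.
    """
    best_seq, best_total = None, float("-inf")
    for seq in itertools.product(quotas, repeat=horizon):
        b, total, feasible = biomass, 0.0, True
        for quota in seq:
            b, catch = step(b, quota)
            total += catch
            if b < floor:  # control objective: prevent overfishing
                feasible = False
                break
        if feasible and total > best_total:
            best_seq, best_total = seq, total
    return best_seq[0] if best_seq is not None else 0.0


if __name__ == "__main__":
    # Each season the twin would refresh `biomass` from observations and re-plan.
    print(best_first_action(800.0))
    print(best_first_action(300.0))
```

Only the first quota of the best sequence is acted on; in a ‘live’ twin the plan would be recomputed each season from newly observed stock estimates, which is what distinguishes this receding-horizon pattern from a one-off forecast.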

However, despite the increasing and transdisciplinary potential of DTs, they have received little attention in scientific and technological discussions for ocean sustainability, and accordingly, their potential has remained underutilized. In our opinion, one notable and prospective project, entitled the European Digital Twin of the Ocean (DTO), stands out as a fully-fledged and operational DT. Insufficiently funded, the European DTO is also, naturally, a Euro-centric endeavor, with scientific and technical development budgets secured in Horizon Europe funding mechanisms limiting its transformative potential (see Box 1).

In this respect, a comprehensive and balanced account of DTs is warranted, including an account anticipating and assessing design and deployment limitations of DTs, to ensure the technology receives the appropriate treatment and realizes its transformative potential for sustainable ocean management. To appreciate these prospects, we acknowledge potential applications of DTs across four thematic areas: (a) reducing and preventing overfishing, (b) modeling and predicting marine pollution, (c) adapting to climate change, and (d) marine spatial planning (see Fig. 1).

Reduce and prevent overfishing

Decades of overfishing have resulted in the decline of fish stocks, such as the Grand Banks cod, and the degradation of marine food webs. Global warming exacerbates this concern by reducing fisheries productivity30. In this context, ‘virtual fisheries’ could be developed in silico to enable more effective management of fish stocks and to monitor fish populations and fishing operations in near-real time. Autonomous agents that are integrated into these ‘virtual fisheries’ could predict species abundance over time, and advise on optimal catch size and timing, thereby maintaining sustainable yield and protecting crucial marine habitats, such as spawning and nursery hotspots.

In the same vein, the computational environment of a DT could help different parties increase the real-time transparency of fishing operations, ensuring that fisheries are harvested at a sustainable rate or with responsible fishing methods. Earlier applications of AI, outside of a DT, have proven successful to this end. For example, Sainsbury’s collaborated with OceanMind to track fishing vessels in order to verify that tuna are caught without the use of fish aggregating devices31. Such tracking may happen autonomously and continuously, and for a greater number of species, once a DT is implemented for fisheries. Provided with reliable catch statistics, or near-enough approximations, obtained for instance through public-private partnerships, DTs could simulate different catch regimes and help stewards and stakeholders allocate fishing quotas.

Furthermore, DTs can be used to actively monitor, and combat, illegal, unreported, and unregulated (IUU) fishing practices, which are responsible for up to 20% of fish catch worldwide32. Different data sources and analyses could be used to map the locations of ships and detect IUU activity. These data can be displayed in real time to local stakeholders and enable precise enforcement in marine protected areas (MPAs) or exclusive economic zones (EEZs). For example, Global Fishing Watch (GFW) uses multiple data streams to track vessels, including the automatic identification system (AIS), a tracking platform that uses transceivers on ships, and vessel monitoring system (VMS) data, which vessels broadcast, as well as remote sensing technology, including the Visible Infrared Imaging Radiometer Suite, synthetic aperture radar, and optical imagery33. Integrating these data sources in a ‘live’ virtual replica system, with autonomous agents, could enable the tracking of vessels and the identification of IUU fishing activity34. Moreover, autonomous agents may be able to analyze Global Fishing Watch data, detect patterns, and provide spatio-temporal predictions of IUU activity to improve local enforcement35, in the same way AI has been touted to predict crime in cities36. Indeed, AI algorithms have been trained on AIS data and ocean condition data, such as sea surface temperature (SST) and chlorophyll, to predict illegal activity of Chinese fishing vessels in Argentina’s EEZ32.
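One simple signal that such a system might compute from position reports is a prolonged transmission silence that begins inside a protected zone. The sketch below is a hypothetical illustration only: the `Ping` record, the rectangular bounding box standing in for an MPA or EEZ boundary, and the six-hour threshold are all assumptions, and it does not reproduce Global Fishing Watch’s actual data formats or detection methods. A silence is also not proof of wrongdoing, so flagged vessels would be candidates for review rather than confirmed offenders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ping:
    """One hypothetical position report from a vessel transceiver."""
    vessel_id: str
    t_hours: float  # hours since the start of the record
    lat: float
    lon: float


def in_box(ping, box):
    """True if the ping lies inside box = (lat_min, lat_max, lon_min, lon_max)."""
    lat_min, lat_max, lon_min, lon_max = box
    return lat_min <= ping.lat <= lat_max and lon_min <= ping.lon <= lon_max


def flag_dark_gaps(pings, box, max_gap_hours=6.0):
    """Return sorted ids of vessels whose reports fall silent for longer than
    max_gap_hours, where the last report before the silence was inside the box."""
    tracks = {}
    for p in sorted(pings, key=lambda p: (p.vessel_id, p.t_hours)):
        tracks.setdefault(p.vessel_id, []).append(p)

    flagged = set()
    for vessel_id, track in tracks.items():
        # Compare each report with the next one from the same vessel.
        for prev, nxt in zip(track, track[1:]):
            if nxt.t_hours - prev.t_hours > max_gap_hours and in_box(prev, box):
                flagged.add(vessel_id)
    return sorted(flagged)


if __name__ == "__main__":
    zone = (-46.0, -44.0, -61.0, -59.0)  # illustrative bounding box
    reports = [
        Ping("A", 0.0, -45.0, -60.0), Ping("A", 10.0, -45.1, -60.2),  # 10 h gap in zone
        Ping("B", 0.0, -45.0, -60.0), Ping("B", 2.0, -45.0, -60.1),   # regular reports
        Ping("C", 0.0, -30.0, -60.0), Ping("C", 12.0, -30.1, -60.0),  # gap outside zone
    ]
    print(flag_dark_gaps(reports, zone))
```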

Modeling and predicting marine pollution

Alongside overfishing, marine pollution has emerged as a global emergency in recent decades, and a hallmark of the “Anthropocene”. A plethora of toxic substances generated by human activities are increasingly introduced into marine environments at the risk of their permanent impairment37. Plastic debris in oceans, coasts, and estuaries has gained somewhat of a prominence in this regard38, but plastics are merely one pollutant in a long list of chemical elements and compounds, including hundreds of pesticides, anti-foulants, pharmaceuticals and heavy metals39.

This risk compounds as contaminants arise from various sources such as land-based industrial activities, vessels, mineral exploration and extraction at sea, and riverine inputs39. Approximately 80% of contaminants originate inland and are referred to as nonpoint source pollution (NSP), which includes numerous independent sources, such as septic tanks, automobiles, farms, ranches, and forest areas40. These make coastal pollution a monitoring priority together with ocean-based industries, namely oil and gas operations, which have been responsible for some of the most well-known pollution events, including the Exxon Valdez Oil Spill in 198941 and the Deepwater Horizon Oil Spill in 201042.

Here, DTs may prove particularly useful in preventing coastal pollution. DTs can integrate multi-modal data inputs, including from close-range and remote sensing, to monitor NSP runoff from coastal communities and cities, as well as from industrial and sewage treatment plants and drainage systems. Autonomous agents could use DT data for training, and then recommend policies for improved management across waste streams to minimize debris or sewage discharge into oceans43. If paired with state-of-the-art machine learning (ML) models that recognize brands of pieces of debris based on image analysis, DTs may further improve polluter-pays policies and support such frameworks as the EU’s Extended Producer Responsibility. In this regard, IBM’s PlasticNet project promises to implement ML for identification of trash types44, and could in the future be integrated into a larger virtual replica. Based on such data, DTs may issue alerts on toxic effluents and plastics, and their impending proximity to shallow water coral reefs and species45.

In preventing pollution from oil and gas operations, DTs can support predictive maintenance of large engineering systems, in the same way they have been used in other domains, including water and electricity infrastructure46. For example, ‘virtual jack-up rigs’ or ‘virtual semi-submersible rigs’ could synchronize with underwater Internet of Things (IoT) sensors47 connected to rig parts, and issue an alert when a component is about to malfunction. Songa Offshore, a drilling company, has already connected hundreds of IoT sensors to rigs in the North Atlantic48 for such a purpose. In the same vein, DTs could be used to simulate the effects of extreme weather events on offshore oil and gas infrastructure, a risk of increasing probability49.

In the event of an oil spill, and coupled with oil transport models (which account for tidal currents, baroclinic circulation, and local wind forecasts, for instance50), DTs may provide a near-real-time platform to interrogate possible oil slick movements and spreading, and to run ‘what-if’ simulations that test and identify optimal treatment and containment options51. Already proven as a platform that facilitates multi-party collaborations28, DTs could further assist in coordinating between different field response units.
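To make the idea of a ‘what-if’ run concrete, the following toy sketch moves a slick centre under a surface current plus a fraction of the wind velocity. It is an illustration under strong assumptions, not an operational oil transport model: the current and wind are constant in space and time, positions are on a flat plane in kilometres, spreading and weathering are ignored, and the 3% wind factor is a rule-of-thumb value adopted here as an assumption. The function name `drift` and its parameters are hypothetical.

```python
def drift(position_km, current_kmh, wind_kmh, hours, wind_factor=0.03, dt=1.0):
    """Advect a slick centre with constant current and wind (forward Euler).

    position_km, current_kmh, wind_kmh are (east, north) tuples.
    wind_factor is the assumed fraction of wind velocity transferred to the slick.
    Returns the list of positions, starting with the initial one.
    """
    x, y = position_km
    track = [(x, y)]
    for _ in range(int(round(hours / dt))):
        x += (current_kmh[0] + wind_factor * wind_kmh[0]) * dt
        y += (current_kmh[1] + wind_factor * wind_kmh[1]) * dt
        track.append((x, y))
    return track


if __name__ == "__main__":
    # Two hypothetical wind scenarios compared over the same 12-hour window.
    calm = drift((0.0, 0.0), (1.0, 0.0), (0.0, 0.0), hours=12)
    onshore = drift((0.0, 0.0), (1.0, 0.0), (0.0, 40.0), hours=12)
    print(calm[-1], onshore[-1])
```

Running several such scenarios side by side is the essence of the ‘what-if’ comparison; a real twin would replace the constant vectors with forecast fields and update them as new observations arrive.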

Moreover, DTs may promote the research and regulation of underwater noise pollution found to be detrimental for marine species relying on acoustic senses for orientation and communication52.

In this increasingly recognized pollution domain53, DTs would harmonize and visualize sensor data on noises emitted by ships and seismic surveys with data on species distribution, such as those of SPACEWHALE for whales54. Drawing on these data, autonomous agents could then advise on optimal course-plotting to minimize noise disturbances, thereby supporting new regulatory frameworks such as the EU’s new limits on noise pollution.

Adapting to climate change

The implications of climate change for marine ecosystem integrity are heavily researched55, spanning studies of shifting ocean temperature, circulation, stratification, nutrient input, oxygen content, acidification, and oceanic species abundance and distributions, undertaken by various agencies and institutions.

One contested area of investigation where DTs might prove particularly useful is marine geoengineering. Involving manipulations of natural processes and habitats to counteract anthropogenic climate change and its impacts, marine geoengineering also has the potential to result in harmful effects56,57. Iron fertilization to aid in primary producer growth, artificial upwelling to reduce sea surface temperature, and seaweed cultivation and alkalinization to absorb carbon are some of the ideas in the field. Such methods court controversy, as not enough is known about their consequences, and the techniques would have to be carried out on an extremely large scale for effectiveness58. Here, ‘virtual estuaries’, ‘virtual coral reefs’ and ‘virtual mangrove forests’ could enable digital safe spaces where potential geoengineering interventions which promise to promote climate change adaptation but may result in unintended harm, can be tested at a speed and scale that may otherwise be inhibited by the precautionary principle.

Promoting marine spatial planning

DTs are uniquely suitable to support marine spatial planning (MSP). DTs already employed for improved socio-technical and socio-ecological systems design28 could be re-purposed to this end. Real-time virtual replicas of marine environments would allow public and private stakeholders to simulate various planning scenarios and, with the aid of autonomous agents, determine which human activity aligns best with biodiversity conservation, all in silico, before interfering with the physical system. This way, ‘marine multi-use’, a cornerstone principle of MSP59 (see Box 2), may be realized with minimal compromises between public and private parties.

DTs could usher in a new era of nature-inclusive marine construction60, for instance by promoting coupled offshore wind farms and fish farms, while accommodating artificial reef structures59. Autonomous agents would analyze all potential areas for such infrastructure, factoring in climatic conditions, noise pollution, eutrophication (caused by aquaculture’s nutrient discharge), and invasive species proliferation potential, among other variables, before recommending optimal locations.

Barriers

Ideally, DTs would offer powerful virtual environments in which computational resources (sensors, processors, autonomous agents, and actuators) are able to simulate aquatic ecosystems across coastal habitats, from the littoral to the neritic zone, at the ocean surface and in the open ocean, alongside simulations of ocean-based industries, including maritime and coastal equipment and ports (shipping and fishing included), offshore oil and gas, offshore wind, and marine biotechnology.

However, setting up this computational environment is a gargantuan task. On many accounts, it is impractical. In some respects, it may not be necessary. If, for instance, managing coastal marine biodiversity is an institutional priority, then computational resources could be allocated to model and simulate the 66 large marine ecosystems (LMEs) defined as comparatively large near coastal areas (spanning 200,000 km2 or more) where productivity is considered higher than in open ocean, and where most (approximately 90%) of the world’s fish catch is taken61,62 (although open-ocean processes, including migration routes, cannot be entirely ignored). Devising DTs of limited extent to support conservation, restoration, and sustainable management of high-priority aquatic zones, such as LMEs, is a more realistic effort. Such efforts too, nevertheless, will face at least three technical and economic limitations.

First, robust live virtual representations rely on appropriate data. Yet, data pertaining to fundamental oceanic processes and phenomena are partial, underpinned by gaps in scientific knowledge spanning the physical, biological, and chemical oceanography sub-domains.

For instance, the study of currents and coastal dynamics, how they interact with the atmosphere and drive ENSO events—all at the crux of physical oceanography—is essential to support accurate DTs. However, present-day satellites are only capable of measuring geostrophic currents of 100 kilometers or more63. The Surface Water and Ocean Topography (SWOT) satellite, launched by NASA and the French space agency CNES in December 2022, promises to measure (mesoscale) currents of 20 kilometers and more in the future64. Yet even with this improved resolution, data regarding sub-mesoscale currents, or small-scale currents of up to 1 kilometer63, will remain unavailable. The Ocean Surface Current Multiscale Observation Mission (OSCOM), shortlisted in China’s Strategic Priority Program on Space Science, offers to observe ocean surface currents at 5–10 kilometers, with a launch date in 2025. It remains unclear if this mission will be eventually chosen, as there are 13 candidate missions and just six will be launched65. While it is possible to infer ocean surface currents from drifting buoys or Argo floats data, there are only 1500 buoys worldwide, spaced 400–500 kilometers apart, and just 4000 Argo floats with a similar low resolution of 200–300 kilometers63, suggesting these machines will fail to fill in gaps in knowledge and data essential for DTs, rendering the latter imprecise.

Representing the deep sea in virtual replicas is an additional issue. The deep sea is generally considered to encompass waters under 200 m, where light begins to dwindle. A variety of oceanic processes occur at these depths, including biological carbon pumping and nutrient cycling66. It also serves as a habitat for a host of organisms66. Concomitantly, it faces similar threats as the shallower layers of the ocean, such as temperature changes, acidification, and pollution67. An accurate and live representation of the deep sea is imperative for simulating various oceanic processes, such as nutrient availability and the oceanic carbon cycle, as well as for predicting the effects of anthropogenic stressors. Nonetheless, regions below the Epipelagic zone are widely recognized to be under-observed, and under-studied66. Baseline measurements of essential properties in the Arctic deep sea, for instance, are missing, and scientific knowledge of biogeochemical processes in the deep ocean is similarly partial68.

Scientific knowledge gaps pervade biochemical oceanography as well. A survey of long-term biological observation programs revealed that only 7% of the global ocean surface is monitored, with a marked lack of monitoring across South American, Eastern European, Asian, Oceanian and African coasts69. Moreover, up to two-thirds of marine species have yet to be discovered70. Such gaps in data would result in modeling inaccuracies and algorithmic errors71,72. An analysis of the spatial distribution of some 35,000 marine species indicated that species are absent near the equator, which the authors attributed to a lower frequency of sampling in tropical zones73. Such sampling bias would affect the precision of ‘virtual fisheries’ and other in silico models, and their ability to inform decisions, for instance in MSP processes.

A second technical barrier pertains to data compatibility and interoperability. For some time now, multiple initiatives have been attempting to assimilate data into shared platforms, such as GOOS (Global Ocean Observing System)74 and EMODnet, which brings together over 150 organizations providing marine-related information75. However, these data originate from different sources and do not necessarily follow standardized formats, which makes data harmonization and interoperability a challenge. Marine image data, for example, are gathered by different camera systems mounted on varied platforms such as Argo floats, AUVs, and moored camera platforms. Images differ in resolution, illumination, and viewing angles, and image metadata, which may include water depth, positioning, and different water properties, are typically too sparse to adhere to interoperability principles76. Complicating matters further, certain data curators may be discouraged from complying with standards if compliance entails modifying organizational formatting74. Taken together, such factors make it difficult to compare datasets76, and eventually limit the efficacy of DTs as a decision support system.

Cost is a third persistent obstacle. Implementing a digital twin of a multicomponent, dynamic system is a resource-intensive endeavor. For comparison, developing a virtual replica of Singapore was estimated to cost $73 million77. The core European DTO is budgeted at €10 million (see Box 1). While Singapore, an island state at the southern end of the Malay Peninsula, covers a total area of 719 km2, the world’s oceans cover an area of approximately 361 million km2 and contain a volume of about 1.37 billion km3 of water. The ocean is over 500,000 times larger in surface area than Singapore (and the disparity grows further once volume is considered), yet receives a direct budget roughly seven times smaller for developing a virtual replica. If anything, this anecdote calls for further funding of ocean DTs.
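The comparison can be checked with a few lines of arithmetic using only the figures quoted above. One simplification is an assumption of this sketch: the dollar and euro amounts are compared at parity, so the budget ratio is indicative only.

```python
# Figures as quoted in the text.
ocean_area_km2 = 361e6          # world's oceans
singapore_area_km2 = 719        # Singapore
singapore_twin_cost = 73e6      # USD, estimated cost of the Singapore replica
european_dto_budget = 10e6      # EUR, core European DTO budget

# Assumption: USD and EUR are treated at parity for a rough comparison.
area_ratio = ocean_area_km2 / singapore_area_km2
budget_ratio = singapore_twin_cost / european_dto_budget

# Funding per unit of area covered, under the same parity assumption.
singapore_per_km2 = singapore_twin_cost / singapore_area_km2
ocean_per_km2 = european_dto_budget / ocean_area_km2

print(f"Ocean area / Singapore area: {area_ratio:,.0f}")
print(f"Singapore budget / DTO budget: {budget_ratio:.1f}")
print(f"Funding per km2: Singapore {singapore_per_km2:,.0f}, ocean {ocean_per_km2:.3f}")
```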

The underlying data acquisition layer is likewise underfunded. For instance, an Argo float costs $20,000–$150,000, with an additional $20,000 for deployment. At a low resolution of 300 km, the current annual cost of the Argo project is estimated at $40 million78. Adding floats for higher-resolution coverage to improve prospective DTs would multiply these costs considerably.
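How quickly these costs multiply can be sketched with a back-of-the-envelope calculation. It assumes, purely for illustration, one float per square grid cell with a side equal to the target spacing, spread evenly over the 361 million km2 of ocean quoted earlier; it ignores float lifetime, replacement cycles, and operating costs, so it indicates scaling rather than a budget. The helper names are hypothetical.

```python
import math


def floats_needed(spacing_km, area_km2=361e6):
    """Floats required for one float per (spacing_km x spacing_km) cell."""
    return math.ceil(area_km2 / spacing_km ** 2)


def fleet_cost_usd(n_floats, unit_cost_usd, deployment_usd=20_000):
    """Hardware plus deployment cost for n_floats (no operating costs)."""
    return n_floats * (unit_cost_usd + deployment_usd)


if __name__ == "__main__":
    for spacing in (300, 100, 50):
        n = floats_needed(spacing)
        low = fleet_cost_usd(n, 20_000)
        high = fleet_cost_usd(n, 150_000)
        print(f"{spacing} km spacing: {n:,} floats, "
              f"${low / 1e9:.2f}-{high / 1e9:.2f} billion")
```

Under these assumptions, a 300 km spacing yields roughly the 4,000 floats of the present array, and because the count grows with the inverse square of the spacing, tightening the grid to 100 km requires about nine times as many floats.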

Countries that could assist in implementing and maintaining essential gear for monitoring and scientific inquiry may shift their priorities. For example, the Tropical Atmosphere Ocean (TAO) array, a grid of buoys that has provided data on the El Niño–Southern Oscillation since the 1980s and is crucial for weather forecasting, has been undermined by inadequate maintenance due to budgetary constraints in the US79 and in Japan80. We similarly expect many countries to show little interest in subsidizing projects for studying and simulating common-pool resources, such as the oceans. In the context of oceanography, NOAA’s Ocean Exploration program, the only US federal program focused on deep ocean research, was allocated $42 million in 2021, less than 1% of NOAA’s total annual budget81. Countries may further limit scientific access to Exclusive Economic Zones, preventing data collection in crucial marine regions82.

For their part, private parties may lift several of these barriers (ocean-based industries spend some $3 billion annually on marine data), yet commercial competition and conflicting interests stand to overshadow common-good intentions. Fishing fleet location and catch data, essential for ‘live virtual fisheries’, will not be easily shared. Oil and gas infrastructure operators will fear their data may be used against them as proof of negligence83, preventing the development of ‘live virtual MSPs’ and the realization of ‘marine multi-use’ that does not risk oceanic biomes and biodiversity.

Beyond these immediate techno-economic limitations, we expect various ethical concerns and uncertainties to arise in the development of oceanic DTs, and the digital representation of marine ecosystem services. Indeed, ethical issues, including safety, privacy, data security, and inclusivity, have been acknowledged and analyzed in the deployment of advanced computer technologies—including DTs, AI and ubiquitous computing—in comparable, complex and multi-stakeholder socio-ecological systems (e.g., agro-ecologies, river basins)28,84,85,86. Efforts to develop DTs in a risk-aware, reflexive, and responsive manner, should draw on lessons learned in these domains as well as in the broader responsible research and innovation literature and practice87.

The way forward: digital twins as a piece of the ocean sustainability puzzle

The need to conserve and restore ocean ecosystems, given their fundamental role in providing ecosystem services and in maintaining healthy atmospheric and terrestrial environments on which humans rely, is now firmly recognized in international agendas. We have recently embarked on the mission of the UN Decade of Ocean Science for Sustainable Development (2021–2030)5, with UNESCO pledging to map 80% of the seabed by 2030.

DTs are one instrument in a toolbox that could be used to provide the underpinning infrastructure, facilitate partnerships, and generate the data required to inform policies for this mission. However, while DTs for the governance of our oceans are at least as important as DTs of, say, the atmosphere, the latter has garnered greater attention in literature and practice27. This must be rectified, and the impediments highlighted above must be overcome.

So, what needs to be done to realize DTs for ocean sustainability? First, costs should be covered and transparency increased. Targeted funding from governments and nongovernmental organizations is essential for developing and maintaining digital twins and should be earmarked for this purpose. In addition, incentivizing data sharing and the use of open-source tools and platforms can help to reduce both the financial and time-related costs of creating and using digital twins, as well as support the democratization of oceanic data.

Second, data quality and quantity must be improved. Spatial and temporal coverage, and resolution, could be augmented by deploying innovative observation platforms, such as Marine Autonomous Robotic Systems that can capture measurements in locations inaccessible by ships88. In a similar vein, new sensor technologies, such as the deep ocean profiling float already deployed off Luzon Island, can enhance the water column depth of measurements captured89.

Third, data interoperability should be emphasized. Industry associations and professional societies can promote the development of technical standards, compatibility protocols, and best practices for creating, managing, and using digital twins, including integration with existing systems. These efforts should extend to data collection and curation, including building standardized repositories and portals such as the Global Ocean Data Analysis Project (GLODAP), the Ocean Biogeographic Information System (OBIS), and the Integrated Marine Observing System (IMOS). Considering the myriad of organizations involved in the study of the ocean, a global collaborative effort to collect and curate data may be facilitated by federated Marine Spatial Data Infrastructures (MSDIs). Federated MSDIs are distributed systems that enable different institutions to share spatial data seamlessly in a single platform while keeping their data sovereignty and control, and adhering to FAIR (Findable, Accessible, Interoperable, Reusable) data principles.

Fourth, the development of DTs should be strategically prioritized. Here, a ‘nested enterprises’ approach to inform the rollout of complex computational environments could prove useful. Adopted from the common-pool resource literature90, and particularly pertinent for ocean governance91, the ‘nested enterprises’ principle maintains that governance systems of shared resources should be scaled by the urgency of the problems they are aiming to solve, while enabling a degree of flexibility and sensitivity to context and local circumstances. By adopting a bounded bottom-up approach, regulators, oceanographers, and computer scientists could scale gradually, starting with DTs of marine biomes and ecosystems of the highest value.

Fifth, cross-disciplinary collaborations must be improved. The ocean science, technology, and policy communities are yet to be sufficiently well organized to advance the use of DTs to tangibly inform governance for sustainable marine ecosystems. Initiatives to strengthen scientific, commercial, governmental, and not-for-profit collaboration are essential to achieve the UN Decade of Ocean Science for Sustainable Development. To facilitate such collaborations, a multifaceted approach—much in line with the ‘nested enterprises’ approach discussed above—can be employed. One course of action may see the establishment of interdisciplinary research initiatives around large marine ecosystems (LMEs) at risk (e.g., LMEs exposed to severe pollution) that will bring together oceanographers and marine biologists alongside computer scientists and policy analysts to serve as a hub for knowledge exchange and problem-solving (e.g., data harmonization). Such initiatives can offer shared workspaces, interdisciplinary seminars, and joint research projects to encourage the blending of expertise and perspectives. They may take the form of intensive summer research programs common in academia for the STEM disciplines (science, technology, engineering, and mathematics). Another course of action could create funding mechanisms that require collaboration between different fields to incentivize researchers to partner across disciplines. For instance, grant programs could mandate partnerships between oceanographers and computer scientists to tackle specific marine ecosystem challenges in a DT virtual environment. Indeed, DTs can in themselves facilitate collaborations, and should be clearly stated, prioritized, and integrated in frameworks for ocean management such as the UN High Seas Treaty.

Finally, developing a comprehensive, cross-sector stakeholder engagement strategy is crucial for all these measures. We emphasize at least four pillars for successful stakeholder engagement. First, it is imperative to identify and categorize stakeholders based on their interests, levels of influence, and domain expertise, for each MSP effort and in each LME governance framework. This entails recognizing governmental entities responsible for shaping ocean policies, marine researchers at the forefront of scientific exploration, industry stakeholders representing sectors such as shipping, fisheries, and renewable energy, as well as local communities directly dependent on oceanic resources. Second, to establish a foundation of informed engagement, a thorough comprehension of the unique needs, concerns, and expectations of each stakeholder group is indispensable. Employing methods such as surveys, interviews, and focus groups can provide insights into these aspects, enabling a tailored approach to engagement before a DT is developed and used in decision-making processes. Such understanding aids in addressing potential conflicts, such as issues pertaining to data privacy, intellectual property rights, and socio-economic impacts. Third, central to the engagement strategy is the creation of platforms that facilitate collaboration and knowledge exchange among stakeholders. Such platforms are emphasized above (e.g., GLODAP, OBIS, IMOS, and MSDIs). Fourth, when stakeholders are engaged it is necessary to underscore the alignment between their participation and their respective goals. Lastly, the viability of the engagement strategy is underpinned by demonstrable outcomes. This can be achieved through the execution of pilot projects, consistent with the ‘nested enterprises’ approach, that showcase the tangible impact of DT utilization on ocean sustainability. Such demonstration projects may encompass real-time monitoring of marine ecosystems in the DT interface, preventing IUU activities and pollution from oil and gas operations, and improving transparency of fishing operations to ensure fisheries are harvested at a sustainable rate. Sharing the results of pilot initiatives would foster a deeper understanding of the benefits of DTs for ocean sustainability, thereby catalyzing broader adoption.

Data availability

The data used in this article are fully available in the main text and referenced sources.

Change history

15 December 2023

A Correction to this paper has been published: https://doi.org/10.1038/s44183-023-00037-3

References

Teh, L. C. & Sumaila, U. R. Contribution of marine fisheries to worldwide employment. Fish Fish. 14, 77–88 (2013).

FAO (2020). The state of world fisheries and aquaculture 2020. Rome, Italy: Food and Agriculture Organization of the United Nations.

Tigchelaar, M. et al. The vital roles of blue foods in the global food system. Glob. Food Secur. 33, 100637 (2022).

IPCC (2019). Climate change and oceans. In: IPCC Special Report on the Ocean and Cryosphere in a Changing Climate. Geneva, Switzerland: Intergovernmental Panel on Climate Change.

Frazão Santos, C. et al. A sustainable ocean for all. npj Ocean Sustain. 1, 2 (2022).

UNESCO (2021). Marine biodiversity. Paris, France: United Nations Educational, Scientific and Cultural Organization.

Halpern, B. S. et al. A global map of human impact on marine ecosystems. Science 319, 948–952 (2008).

Jackson, J. B. et al. Historical overfishing and the recent collapse of coastal ecosystems. Science 293, 629–637 (2001).

Ritchie, H. & Roser, M. Plastic pollution. Our World in Data (2018).

Pandolfi, J. M. et al. Global trajectories of the long-term decline of coral reef ecosystems. Science 301, 955–958 (2003).

Mellin, C. et al. Humans and seasonal climate variability threaten large-bodied coral reef fish with small ranges. Nat. Commun. 7, 10491 (2016).

FAO (2020). The State of World Fisheries and Aquaculture 2020. Sustainability in action.

IPCC, 2013: Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change [Stocker, T.F. et al. (eds.)]. Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA, 1535 pp.

Barange, M. et al. Impacts of climate change on fisheries and aquaculture. United Nations’ Food and Agriculture Organization 12, 628–635 (2018).

Ocean Observatories Initiative (OOI) (2023). Sustained data for a changing ocean. Available at: https://oceanobservatories.org/ (accessed 16 March 2023).

European Space Agency (2023). Sentinel-3: ESA’s Global Land and Ocean Mission for GMES Operational Services. Available at: https://sentinel.esa.int/documents/247904/351187/S3_SP-1322_3.pdf (accessed 16 March 2023).

Chai, F. et al. Monitoring ocean biogeochemistry with autonomous platforms. Nat. Rev. Earth Environ. 1, 315–326 (2020).

Gewin, V. Ocean health index unveiled. Nature (2012).

UN (2022). UN Ocean Conference opens with call for urgent action to tackle ocean emergency. Available at: https://www.un.org/en/desa/un-ocean-conference-opens-call-urgent-action-tackle-ocean-emergency (accessed 16 March 2023).

Wright, L. & Davidson, S. How to tell the difference between a model and a digital twin. Adv. Model. Simul. Eng. Sci. 7, 1–13 (2020).

Grieves, M. & Vickers, J. In Transdisciplinary Perspectives on Complex Systems (eds Kahlen, J. et al.) 85–113 (Springer, 2017).

Boschert, S. & Rosen, R. In Mechatronic Futures (eds Hehenberger, P. & Bradley, D.) 59–74 (Springer, 2016).

Bauer, P. et al. The digital revolution of Earth-system science. Nat. Comput. Sci. 1, 104–113 (2021).

Rosen, R., Von Wichert, G., Lo, G. & Bettenhausen, K. D. About the importance of autonomy and digital twins for the future of manufacturing. IFAC PapersOnLine 48, 567–572 (2015).

Tao, F., Zhang, H., Liu, A. & Nee, A. Y. Digital twin in industry: state-of-the-art. IEEE Trans. Industr. Inform. 15, 2405–2415 (2018).

Voosen, P. Europe is building a ‘digital twin’ of Earth to revolutionize climate forecasts. Science (2020).

Bauer, P., Stevens, B. & Hazeleger, W. A digital twin of Earth for the green transition. Nat. Clim. Change 11, 80–83 (2021).

Tzachor, A., Sabri, S., Richards, C. E., Rajabifard, A. & Acuto, M. Potential and limitations of digital twins to achieve the sustainable development goals. Nat. Sustain. 5, 822–829 (2022).

Tzachor, A., Richards, C. E. & Jeen, S. Transforming agrifood production systems and supply chains with digital twins. npj Sci. Food 6, 47 (2022).

Free, C. M. et al. Impacts of historical warming on marine fisheries production. Science 363, 979–983 (2019).

Sainsbury’s (2018). Sainsbury’s expands responsible fishing methods. Available at: https://www.about.sainsburys.co.uk/news/latest-news/2018/28-03-2018-tuna (accessed 23 March 2023).

Woodill, A. J., Kavanaugh, M., Harte, M. & Watson, J. R. Predicting illegal fishing on the Patagonia Shelf from oceanographic seascapes. Preprint at https://arxiv.org/abs/2007.05470 (2020).

de Souza, E. N., Boerder, K., Matwin, S. & Worm, B. Improving fishing pattern detection from satellite AIS using data mining and machine learning. PLoS ONE 11, e0158248 (2016).

Global Fishing Watch (2018). IUU – Illegal, Unreported, Unregulated Fishing. Available at: https://globalfishingwatch.org/fisheries/iuu-illegal-unreported-unregulated-fishing/ (accessed 23 March 2023).

Global Fishing Watch (2020). Predictive Analytics to Forecast Illegal Fishing Risk in Mexico. Available at: https://globalfishingwatch.org/fisheries/illegal-fishing-risk-in-mexico/ (accessed 23 March 2023).

Kouziokas, G. N. The application of artificial intelligence in public administration for forecasting high crime risk transportation areas in urban environment. Transport. Res. Procedia 24, 467–473 (2017).

Beiras, R. Marine Pollution: Sources, Fate and Effects of Pollutants in Coastal Ecosystems (Elsevier, 2018).

Borrelle, S. B. et al. Why we need an international agreement on marine plastic pollution. Proc. Natl Acad. Sci. USA 114, 9994–9997 (2017).

European Commission (2023). Our Oceans, Seas and Coasts. Descriptor 8: Contaminants. Available at: https://ec.europa.eu/environment/marine/good-environmental-status/descriptor-8/index_en.htm (accessed 23 March 2023).

Landrigan, P. J. et al. Human health and ocean pollution. Ann. Glob. Health 86, 151 (2020).

Peterson, C. H. et al. Long-term ecosystem response to the Exxon Valdez oil spill. Science 302, 2082–2086 (2003).

Beyer, J., Trannum, H. C., Bakke, T., Hodson, P. V. & Collier, T. K. Environmental effects of the Deepwater Horizon oil spill: a review. Mar. Pollut. Bull. 110, 28–51 (2016).

Meijer, L. J. et al. More than 1000 rivers account for 80% of global riverine plastic emissions into the ocean. Sci. Adv. 7, eaaz5803 (2021).

IBM (2021). PlasticNet: Saving the Ocean with Machine Learning (IBM Space Tech). Available at: https://www.ibm.com/cloud/blog/plasticnet-saving-the-ocean-with-machine-learning-ibm-space-tech (accessed 23 March 2023).

Sweet, M., Stelfox, M., & Lamb, J. (2019). Plastics and shallow water coral reefs: synthesis of the science for policy-makers.

Götz, C. S., Karlsson, P. & Yitmen, I. Exploring applicability, interoperability and integrability of Blockchain-based digital twins for asset life cycle management. Smart Sustain. Built Environ. 11, 532–558 (2020).

Kesari Mary, D. R., Ko, E., Yoon, D. J., Shin, S. Y. & Park, S. H. Energy optimization techniques in underwater internet of things: issues, state-of-the-art, and future directions. Water 14, 3240 (2022).

ZDNET (2017). How IoT is helping this offshore driller gain efficiencies. Available at: https://www.zdnet.com/article/iot-helping-offshore-driller-gain-efficiencies/ (accessed 23 March 2023).

Dong, J., Asif, Z., Shi, Y., Zhu, Y. & Chen, Z. Climate change impacts on coastal and offshore petroleum infrastructure and the associated oil spill risk: a review. J. Mar. Sci. Eng. 10, 849 (2022).

Periáñez, R. A Lagrangian oil spill transport model for the Red Sea. Ocean Eng. 217, 107953 (2020).

Mohammadiun, S. et al. Evaluation of machine learning techniques to select marine oil spill response methods under small-sized dataset conditions. J. Hazard. Mater. 436, 129282 (2022).

Nowacek, D. P. et al. Marine seismic surveys and ocean noise: time for coordinated and prudent planning. Front. Ecol. Environ. 13, 378–386 (2015).

Chahouri, A., Elouahmani, N. & Ouchene, H. Recent progress in marine noise pollution: a thorough review. Chemosphere 291, 132983 (2022).

Space Whale (2023). Available at: https://www.spacewhales.de/ (accessed 23 March 2023).

García-Soto, C. et al. An overview of ocean climate change indicators: sea surface temperature, ocean heat content, ocean pH, dissolved oxygen concentration, Arctic sea ice extent, thickness and volume, sea level and strength of the AMOC (Atlantic Meridional Overturning Circulation). Front. Mar. Sci. 8 (2021).

McGee, J., Brent, K. & Burns, W. Geoengineering the oceans: an emerging frontier in international climate change governance. Aust. J. Maritime Ocean Affairs 10, 67–80 (2018).

Crabbe, M. J. C. Modelling effects of geoengineering options in response to climate change and global warming: Implications for coral reefs. Comput. Biol. Chem. 33, 415–420 (2009).

Boyd, P. & Vivian, C. Should we fertilize oceans or seed clouds? No one knows. Nature 570, 155–157 (2019).

Steins, N. A., Veraart, J. A., Klostermann, J. E. & Poelman, M. Combining offshore wind farms, nature conservation and seafood: Lessons from a Dutch community of practice. Mar. Policy 126, 104371 (2021).

Kamermans, P. et al. Offshore wind farms as potential locations for flat oyster (Ostrea edulis) restoration in the Dutch North Sea. Sustainability (Switzerland) 10, 308 (2018).

Large Marine Ecosystems Hub (2023). Available at: https://www.lmehub.net/ (accessed 24 August 2023).

Global Environment Facility (2023). Large Marine Ecosystems. Available at: https://www.thegef.org/what-we-do/topics/international-waters/marine/large-marine-ecosystems (accessed 29 March 2023).

Du, Y. et al. Ocean surface current multiscale observation mission (OSCOM): simultaneous measurement of ocean surface current, vector wind, and temperature. Prog. Oceanogr. 193, 102531 (2021).

NASA (2022). Latest International Water Satellite Packs an Engineering Punch. Available at: https://www.nasa.gov/feature/jpl/latest-international-water-satellite-packs-an-engineering-punch (accessed 29 March 2023).

INVERSE (2022). Dark matter, Earth 2.0, and more: These 13 missions could be China’s next big space mission. Available at: https://www.inverse.com/science/china-space-missions-selection-process (accessed 29 March 2023).

Levin, L. A. et al. Global observing needs in the deep ocean. Front. Mar. Sci. 6, 241 (2019).

Paulus, E. Shedding light on deep-sea biodiversity—a highly vulnerable habitat in the face of anthropogenic change. Front. Mar. Sci. 8, 667048 (2021).

Nguyen, A. T. et al. On the benefit of current and future ALPS data for improving Arctic coupled ocean-sea ice state estimation. Oceanography 30, 69–73 (2017).

UNESCO, GOOS BioEco Panel (2021). Alarming knowledge gaps in the global status of marine life. Available at: https://www.unesco.org/en/articles/alarming-knowledge-gaps-global-status-marine-life (accessed 29 March 2023).

Appeltans, W. et al. The magnitude of global marine species diversity. Curr. Biol. 22, 2189–2202 (2012).

Jobin, A. et al. AI reflections in 2020. Nat. Mach. Intellig. 3, 2–8 (2021).

Tzachor, A., Whittlestone, J., Sundaram, L. & Ó hÉigeartaigh, S. Artificial intelligence in a crisis needs ethics with urgency. Nat. Mach. Intellig. 2, 365–366 (2020).

Menegotto, A. & Rangel, T. F. Mapping knowledge gaps in marine diversity reveals a latitudinal gradient of missing species richness. Nat. Commun. 9, 4713 (2018).

Snowden, D. et al. Data interoperability between elements of the global ocean observing system. Front. Mar. Sci. 6, 442 (2019).

Martín Míguez, B. et al. The European Marine Observation and Data Network (EMODnet): visions and roles of the gateway to marine data in Europe. Front. Mar. Sci. 6, 313 (2019).

Schoening, T. et al. Making marine image data FAIR. Sci. Data 9, 414 (2022).

Tomorrows World Today (2022). Singapore’s Digital Twin of Entire Country. Available at: https://www.tomorrowsworldtoday.com/2022/09/12/singapores-digital-twin-of-entire-country/ (accessed 29 March 2023).

Scripps Institution of Oceanography (2023). Argo Program. Available at: https://argo.ucsd.edu/about/ (accessed 29 March 2023).

Tollefson, J. El Niño monitoring system in failure mode. Nature (2014).

Voosen, P. Fleet of sailboat drones could monitor climate change’s effect on oceans. Sci. Mag. (2018).

Government Executive (2021). Why America Must Lead—and Fund—the Ocean Data Revolution. Available at: https://www.govexec.com/management/2021/06/why-america-must-leadand-fund-ocean-data-revolution/175010/ (accessed 29 March 2023).

Global Ocean Observing System (2021). Experts warn limits on ocean observations in national waters likely to jeopardize climate change mitigation efforts. Available at: https://www.goosocean.org/index.php?option=com_content&view=article&id=403:experts-warn-limits-on-ocean-observations-in-national-waters-likely-to-jeopardize-climate-change-mitigation-efforts&catid=13&Itemid=125 (accessed 29 March 2023).

Murray, F. et al. Data challenges and opportunities for environmental management of North Sea oil and gas decommissioning in an era of blue growth. Mar. Policy 97, 130–138 (2018).

Richards, C. E., Tzachor, A., Avin, S. & Fenner, R. Rewards, risks and responsible deployment of artificial intelligence in water systems. Nat. Water 1, 422–432 (2023).

Tzachor, A., Devare, M., King, B., Avin, S. & Ó hÉigeartaigh, S. Responsible artificial intelligence in agriculture requires systemic understanding of risks and externalities. Nat. Mach. Intellig. 4, 104–109 (2022).

Galaz, V. et al. Artificial intelligence, systemic risks, and sustainability. Technol. Soc. 67, 101741 (2021).

Stilgoe, J., Owen, R. & Macnaghten, P. In The Ethics of Nanotechnology, Geoengineering and Clean Energy 347–359 (Routledge, 2020).

National Oceanography Centre (2023). Marine Autonomous Robotic Systems. Available at: https://noc.ac.uk/technology/technology-development/marine-autonomous-robotic-systems (accessed 29 March 2023).

Wang, Q., Qiu, Z., Yang, S., Li, H. & Li, X. Design and experimental research of a novel deep-sea self-sustaining profiling float for observing the northeast off the Luzon Island. Sci. Rep. 12, 18885 (2022).

Ostrom, E. Reformulating the commons. Swiss Political Sci. Rev. 6, 29–52 (2000).

Brodie Rudolph, T. et al. A transition to sustainable ocean governance. Nat. Commun. 11, 3600 (2020).

European Commission (2023). European Digital Twin of the Ocean (European DTO). Available at: https://research-and-innovation.ec.europa.eu/funding/funding-opportunities/funding-programmes-and-open-calls/horizon-europe/eu-missions-horizon-europe/restore-our-ocean-and-waters/european-digital-twin-ocean-european-dto_en (accessed 16 March 2023).

European Commission, CORDIS (2023). EU Public Infrastructure for the European Digital Twin Ocean. Available at: https://cordis.europa.eu/project/id/101101473 (accessed 16 March 2023).

European Commission (2021). Horizon Europe. Available at: https://research-and-innovation.ec.europa.eu/system/files/2022-06/ec_rtd_he-investing-to-shape-our-future_0.pdf (accessed 23 March 2023).

UN Chronicle (2022). Collaboration and Capacity-Building to End Illegal, Unreported and Unregulated Fishing. Available at: https://www.un.org/en/un-chronicle/joint-analytical-cell-closing-net-illegal-unreported-and-unregulated-fishing (accessed 23 March 2023).

Foley, M. M. et al. Guiding ecological principles for marine spatial planning. Mar. Policy 34, 955–966 (2010).

Santos, C. F. et al. Marine spatial planning. In World Seas: An environmental evaluation (pp. 571–592). Academic Press (2019).

Ehler, C. N. Two decades of progress in marine spatial planning. Mar. Policy 132, 104134 (2021).

Acknowledgements

This paper was made possible through the support of a grant from Templeton World Charity Foundation, Inc. The opinions expressed in this publication are those of the author(s) and do not necessarily reflect the views of Templeton World Charity Foundation, Inc. We are grateful to Professor Alex Hearn of Universidad San Francisco de Quito (USFQ) and the Galapagos Science Centre for hosting O.H. at both institutions and for his comments, which helped to improve and sharpen the manuscript. The authors thank Ms Kristina Atanasova for the graphical development and design of Fig. 1.

Author information

Contributions

A.T., O.H. and C.E.R. developed the paper jointly, and all contributed equally to the writing of the text.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tzachor, A., Hendel, O. & Richards, C.E. Digital twins: a stepping stone to achieve ocean sustainability?. npj Ocean Sustain 2, 16 (2023). https://doi.org/10.1038/s44183-023-00023-9

DOI: https://doi.org/10.1038/s44183-023-00023-9
