Abstract

Quantum technology provides a ground-breaking methodology to tackle challenging computational issues in power systems. It is especially promising for Distributed Energy Resources (DERs) dominant systems that have been widely developed to promote energy sustainability. In those systems, knowing the maximum sections of power and data delivery is essential for monitoring, operation, and control. However, high computational effort is required. By leveraging quantum resources, Quantum Approximate Optimization Algorithm (QAOA) provides a means to search for these sections efficiently. However, QAOA performance relies heavily on critical parameters, especially for weighted graphs. Here we present a data-driven QAOA, which transfers quasi-optimal parameters between weighted graphs based on the normalized graph density. We verify the strategy with 39,774 expectation value calculations. Without parameter optimization, our data-driven QAOA is comparable with the Goemans-Williamson algorithm. This work advances QAOA and pilots its practical application to power systems in noisy intermediate-scale quantum devices.

Introduction

Quantum technology is emerging as a new hope to address challenging computational tasks in power systems, including quantum chemistry simulation for new types of batteries1,2,3, efficient power system analysis by solving linear systems of equations4,5,6,7,8, forecasting highly chaotic systems9, scheduling and dispatching power grids10, unit commitment11, and optimal reconfiguration of distribution grids12. However, the existing algorithms require substantial quantum resources, limiting their near-term utilization on noisy intermediate-scale quantum (NISQ) devices13. Even though specific instances of quantum algorithms have been demonstrated on various quantum processors with tens of qubits14,15,16, practical applications to power system problems will still require further advances in algorithmic design.

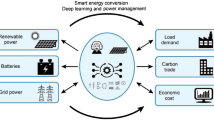

In power systems, one emerging quantum application is the analysis of power systems dominated by Distributed Energy Resources (DERs), which offer a potent route toward energy sustainability. Fig. 1 illustrates a typical DER-dominant cyber-physical power system, which includes a physical layer and a cyber layer. The physical layer is energized by DERs, and the cyber layer enables communication among DERs17 through the advanced metering infrastructure and the Internet of Things system18 for system coordination and control19.

To improve the resiliency of the system, it is critical to efficiently obtain the maximum sections of power energy in the physical layer and data traffic in the cyber layer20,21,22. Mathematically, finding the maximum section of power energy or data traffic amounts to solving the Max-Cut problem, which is NP-hard23. Therefore, people implement classical approximation algorithms24,25,26,27 to address the Max-Cut problem in practical applications. However, for specific instances, classical algorithms24,28 can only guarantee an approximation ratio of 0.878.

The Quantum Approximate Optimization Algorithm (QAOA), a hybrid quantum-classical algorithm, is expected to obtain better approximate solutions than any existing classical algorithm29,30. QAOA uses a classical computer to train the parameters of a quantum circuit29. The parameterized quantum circuit approximates the adiabatic evolution from an initial Hamiltonian, whose ground state is easy to prepare, to a final Hamiltonian, whose ground state encodes the solution of the Max-Cut problem. With an ideal approximation, one expects to obtain the exact solution of the Max-Cut problem with high probability31. Consequently, the parameters involved in the quantum circuit play an essential role in obtaining high-quality approximations32,33,34. However, how to efficiently obtain appropriate parameters is still an open question.

This work presents a data-driven QAOA with a parameter transfer strategy to tackle the challenging issue of efficiently obtaining appropriate parameters for QAOA. The data-driven QAOA thereby enables an efficient search for the maximum sections of power delivery and data traffic in cyber-physical power systems. The contributions of this work are summarized below. First, a parameter transfer strategy based on the normalized graph density is developed for QAOA on generic weighted graphs. Through the transfer strategy, quasi-optimal parameters can be obtained for the target (new) graphs from the seed (existing) graphs. Those parameters can then be either directly applied or used as an initial guess for further optimization. The transfer strategy is designed to be extendable, allowing new verified graph-parameter pairs to be added to the transfer database, which enables efficient QAOA computation. Second, based on the transfer strategy, the data-driven QAOA framework is established. We numerically justify the effectiveness of the strategy by evaluating QAOA’s performance on 1710 random instances, both with the transferred parameters and with the parameters obtained after further optimization. Third, we apply the data-driven QAOA to practical power systems to obtain their maximum sections. Simulations on 996 case studies validate that the data-driven QAOA can efficiently obtain results comparable to those of the Goemans-Williamson (GW) algorithm, which sheds light on online computation and analysis. Additionally, a study of the near-term achievable noise of quantum processors shows that its impact on our data-driven QAOA method is negligible. Overall, this work reduces the computational effort required for training QAOA parameters, advances the development of QAOA, and promotes its application to engineering problems. As a practical quantum application, it is feasible in the near term, within the NISQ era, to address problems in power systems.
Recently, we became aware of a similar work by Shaydulin et al. about the transferability between weighted graphs35, which was carried out independently.

Results

Maximum sections problem formulation

In the power system, the maximum power (data) section is defined as an edge collection \(\mathcal{C}^{*}\) of the graph G = (V, E), which is modeled from the physical (cyber) layer. The collection \(\mathcal{C}^{*}\) has the following three properties. First, \(\mathcal{C}^{*}\) is a subset of the edge set E, namely, \(\mathcal{C}^{*}\subseteq E\). Second, the reduced graph \(\bar{\mathrm{G}}=(V,\mathcal{C}^{*})\) is a bipartite graph. Third, as shown in (1), the summation of the edge weights in \(\mathcal{C}^{*}\) is the supremum of the edge-weight summation over all possible collections \(\mathcal{C}\) that satisfy the first two properties:

\[\sum_{\langle i,j\rangle \in \mathcal{C}^{*}}{w}_{ij}=\sup_{\mathcal{C}}\sum_{\langle i,j\rangle \in \mathcal{C}}{w}_{ij}. \tag{1}\]

It is critical to efficiently obtain the maximum sections for three reasons. First, the maximum power section offers a cost-effective way to monitor the dynamics and power delivery capability of the physical system, especially when the system’s operation changes frequently because of intentional or unintentional disturbances, such as the fluctuations of DERs and the changes of system topology due to the connection or removal of subsystems (e.g., microgrids)20. Second, the maximum power sections cast light on the control and operation of the dynamic system. Dispatchable DERs can be coordinated to control the electric power over the maximum section and thereby improve the whole system’s operation21. Third, the maximum data traffic sections provide insight into enhancing the overall power system’s resilience through strategically designing and managing the communication network22,36, e.g., packet routing and traffic control.

Mathematically, finding the maximum section amounts to solving a Max-Cut problem on a weighted graph G = (V, E), where ∣V∣ = n is the number of vertices, ∣E∣ = m is the number of edges, and wij represents the normalized weight of the edge \(\langle i,j\rangle \in E\), with \({\rm{Max}}({w}_{ij})=1\). The Max-Cut solutions are identical before and after normalization, because dividing every weight by the same positive constant rescales the cost of every cut by that constant. The edge weight is obtained via power flow calculation for the physical layer and represents the data traffic in the cyber layer. Modeling details are provided in the Methods section “Modeling the Power System”.

The objective is to find a subset S ⊂ V that maximizes \(\sum_{i\in S,j\notin S}{w}_{ij}\) for the cyber layer or the physical layer. Suppose an n-bit string \(Z={z}_{1}\cdots {z}_{i}\cdots {z}_{j}\cdots {z}_{n}\in {\{-1,1\}}^{n}\) denotes the status of the vertices V, where each bit zi equals 1 if the ith vertex is in the subset S and −1 otherwise. The string Z then exhibits the partition of vertices for obtaining the maximum section. Thus, the classical cost function of the Max-Cut problem can be defined as

\[C(Z)=\sum_{\langle i,j\rangle \in E}{C}_{ij}(Z),\quad {C}_{ij}(Z)=\frac{1}{2}{w}_{ij}(1-{z}_{i}{z}_{j}), \tag{2}\]

where Cij(Z) represents the contribution of wij to the cost function. The Max-Cut problem translates into finding the n-bit string Z to maximize the cost function C(Z). Given the n-bit string Z, we define the approximation ratio to be C(Z)/C(ZMax-Cut), where ZMax-Cut is the exact Max-Cut solution. The goal of approximate algorithms is to find the solution with a high approximation-ratio.
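As a minimal sketch of these definitions, the Python snippet below evaluates the cut value of a ±1 assignment and computes the approximation ratio against a brute-force optimum. It assumes the standard Max-Cut form in which an edge contributes wij(1 − zizj)/2; the function names and the 4-vertex example graph are hypothetical, and the exhaustive search is only practical for small n.

```python
from itertools import product

def cut_value(z, edges):
    """C(Z): sum of w_ij * (1 - z_i * z_j) / 2 over edges, with z_i in {-1, +1}."""
    return sum(0.5 * w * (1 - z[i] * z[j]) for i, j, w in edges)

def brute_force_max_cut(n, edges):
    """Exhaustively search all 2^n assignments (feasible only for small n)."""
    best = max(product((1, -1), repeat=n), key=lambda z: cut_value(z, edges))
    return best, cut_value(best, edges)

def approximation_ratio(z, n, edges):
    """C(Z) / C(Z_Max-Cut) for a candidate assignment z."""
    _, optimum = brute_force_max_cut(n, edges)
    return cut_value(z, edges) / optimum

# Hypothetical 4-vertex graph with normalized weights (largest weight is 1).
edges = [(0, 1, 1.0), (1, 2, 0.5), (2, 3, 1.0), (3, 0, 0.5), (0, 2, 0.2)]
z_best, c_best = brute_force_max_cut(4, edges)
ratio = approximation_ratio((1, 1, -1, -1), 4, edges)
```

In this example the best partition separates vertices {0, 2} from {1, 3}, and the candidate assignment (1, 1, −1, −1) cuts only part of that weight, so its approximation ratio is below 1.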

QAOA for the Max-Cut problem

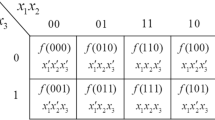

On quantum computers, we use n quantum bits (qubits) \(\left\vert Z\right\rangle =\vert {z}_{1}\cdots {z}_{i}\cdots {z}_{j}\cdots {z}_{n}\rangle \) to represent the status of the n vertices. Each qubit \(\vert {z}_{i}\rangle \) can be a superposition of the quantum states \(\left\vert 0\right\rangle \) and \(\left\vert 1\right\rangle \), denoted as \(\vert {z}_{i}\rangle ={a}_{i}\vert 0\rangle +{b}_{i}\vert 1\rangle \), where \(\left\vert 0\right\rangle \) and \(\left\vert 1\right\rangle \) are the eigenstates of the Pauli-Z operator σz with eigenvalues 1 and −1, respectively. When we measure the qubit in the computational basis, i.e., the z basis, quantum mechanics dictates that the qubit collapses to the state \(\left\vert 0\right\rangle \) with probability \({\vert {a}_{i}\vert }^{2}\) and to the state \(\left\vert 1\right\rangle \) with probability \({\vert {b}_{i}\vert }^{2}\). Therefore, unlike on classical computers, the measurement results can vary even though the qubit is prepared identically at each execution. If we let measuring \(\left\vert 0\right\rangle \) represent zi = 1 and measuring \(\left\vert 1\right\rangle \) represent zi = −1, we can obtain various n-bit strings \(Z={z}_{1}\cdots {z}_{i}\cdots {z}_{j}\cdots {z}_{n}\in {\{-1,1\}}^{n}\) and calculate C(Z) in (2) after every single quantum computer execution.

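On the other hand, measuring any of the \(2^{n}\) n-qubit eigenstates of the computational basis yields a deterministic n-bit string Zk. We denote these eigenstates as \(\vert {Z}_{k}\rangle \) with \(\vert {z}_{k,i}\rangle =\left\vert 0\right\rangle \) or \(\left\vert 1\right\rangle \) and \({z}_{k,i}=\langle {z}_{k,i}\vert {\sigma }_{i}^{z}\vert {z}_{k,i}\rangle \). Therefore, we can have

\[C({Z}_{k})=\langle {Z}_{k}\vert {H}_{\mathrm{C}}\vert {Z}_{k}\rangle , \tag{3}\]

where

\[{H}_{\mathrm{C}}=\sum_{\langle i,j\rangle \in E}\frac{1}{2}{w}_{ij}(1-{\sigma }_{i}^{z}{\sigma }_{j}^{z}). \tag{4}\]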

We consider \(C(\left\vert Z\right\rangle )=\left\langle Z\right\vert {H}_{\mathrm{C}}\left\vert Z\right\rangle \) as the quantum analog of C(Z). Then the \(2^{n}\) classical cost functions C(Zk) are mapped one-to-one to the \(2^{n}\) quantum cost functions \(C(\vert {Z}_{k}\rangle )\). The Max-Cut problem translates into finding the quantum state \(\vert {Z}_{k}\rangle \) that maximizes the cost function \(C(\vert {Z}_{k}\rangle )\).

The \(2^{n}\) states \(\vert {Z}_{k}\rangle \) form a complete basis of the \(2^{n}\)-dimensional Hilbert space of n-qubit states. Therefore we can decompose an arbitrary state \(\left\vert Z\right\rangle \) into a linear combination of \(\vert {Z}_{k}\rangle \), denoted as \(\left\vert Z\right\rangle =\sum_{k=1}^{{2}^{n}}{\alpha }_{k}\left\vert {Z}_{k}\right\rangle \) with \(\sum_{k=1}^{{2}^{n}}{\left\vert {\alpha }_{k}\right\vert }^{2}=1\). The quantum cost function of an arbitrary state \(\left\vert Z\right\rangle \) can be written as

\[C(\left\vert Z\right\rangle )=\left\langle Z\right\vert {H}_{\mathrm{C}}\left\vert Z\right\rangle =\sum_{k=1}^{{2}^{n}}{\left\vert {\alpha }_{k}\right\vert }^{2}C(\vert {Z}_{k}\rangle ). \tag{5}\]

Since \(C(\left\vert Z\right\rangle )=\left\langle Z\right\vert {H}_{{{{{{\rm{C}}}}}}}\left\vert Z\right\rangle \ge 0\), we have \(\max C(\left\vert Z\right\rangle )=\underset{k=1}{\overset{{2}^{n}}{\max }}C(\vert {Z}_{k}\rangle )\). Notably, in quantum mechanics, \(C(\left\vert Z\right\rangle )=\left\langle Z\right\vert {H}_{{{{{{\rm{C}}}}}}}\left\vert Z\right\rangle \) is the expectation value of system energy for a quantum system described by Hamiltonian HC. The Max-Cut problem translates into finding the maximum energy state for the quantum system described by Hamiltonian HC.
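The weighted-average form of the quantum cost function suggests a simple estimator from repeated measurements, sketched below in Python. The sketch assumes that measurement results arrive as a dictionary mapping bit strings to shot counts and that character i of each string belongs to vertex i; both conventions, the function names, and the example counts are hypothetical (real quantum SDKs may order bits differently).

```python
def cut_value_from_bits(bits, edges):
    """Cut value of one measured bit string; '0' maps to z = +1, '1' to z = -1."""
    z = [1 if b == "0" else -1 for b in bits]
    return sum(0.5 * w * (1 - z[i] * z[j]) for i, j, w in edges)

def estimate_cost(counts, edges):
    """Estimate C(|Z>) as the shot-weighted average of the sampled cut values."""
    shots = sum(counts.values())
    total = sum(c * cut_value_from_bits(bits, edges) for bits, c in counts.items())
    return total / shots

def best_sample(counts, edges):
    """Return the sampled bit string with the largest cut value."""
    return max(counts, key=lambda bits: cut_value_from_bits(bits, edges))

# Hypothetical weighted triangle and hypothetical measurement counts.
edges = [(0, 1, 1.0), (1, 2, 0.5), (0, 2, 0.25)]
counts = {"010": 600, "000": 300, "011": 100}
estimate = estimate_cost(counts, edges)
winner = best_sample(counts, edges)
```

Because the estimate is an average over sampled cut values, it can never exceed the cut value of the best sampled string, which mirrors the statement that the maximum of the quantum cost function is attained on a basis state.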

QAOA utilizes a quantum circuit running on the quantum computer to approximate an adiabatic evolution from the maximum energy state of an initial Hamiltonian, HB, to the maximum energy state of the final Hamiltonian, HC. For the Max-Cut problem, we specifically define HB as

\[{H}_{\mathrm{B}}=\sum_{i=1}^{n}{\sigma }_{i}^{x}, \tag{6}\]

where \({\sigma }_{i}^{x}\) is the Pauli-X operator acting on the ith qubit.

According to the adiabatic theorem37, with an ideal approximation, we expect to obtain the maximum energy state of HC, which leads to the exact Max-Cut solution, with a high probability.

To implement QAOA on quantum computers, we first prepare the maximum energy state of HB, \({\left\vert +\right\rangle }^{\otimes n}\), as the initial state for the quantum circuit. Then we run the quantum circuit with 2p trainable parameters \(\gamma =({\gamma }_{1},{\gamma }_{2},\ldots ,{\gamma }_{p})\) and \(\beta =({\beta }_{1},{\beta }_{2},\ldots ,{\beta }_{p})\) to approximate the p-step Trotter expansion of the adiabatic evolution. We measure the output state, obtain the classical n-bit string, and estimate the quantum cost function using (5) with multiple executions. After that, a classical optimizer in the quantum-classical hybrid loop iteratively updates the 2p parameters to maximize the quantum cost function. Ideally, when p tends to infinity, the probability of obtaining the exact Max-Cut solution tends to 1. Even with a finite p, measuring the final state \(\left\vert Z\right\rangle \) of the optimized circuit can generate high approximation-ratio solutions. More details are discussed in the Methods section “Adiabatic Approximation with QAOA”.
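The circuit described above can be illustrated with a small state-vector simulation in pure Python. This is a sketch under stated assumptions rather than the implementation used in this work: each layer is taken to be exp(−iγ HC) followed by exp(−iβ HB), with HC the Max-Cut cost operator and HB the sum of Pauli-X operators, and the sign conventions, function names, and the single-edge example are assumptions chosen for illustration. The simulation scales as 2^n and is only meant for small graphs.

```python
import cmath
import math

def cut_values(n, edges):
    """Return C(Z_k) for every basis state k; bit i of k set means z_i = -1."""
    values = []
    for k in range(2 ** n):
        z = [1 - 2 * ((k >> i) & 1) for i in range(n)]
        values.append(sum(0.5 * w * (1 - z[i] * z[j]) for i, j, w in edges))
    return values

def qaoa_probabilities(n, edges, gammas, betas):
    """Simulate a p-layer QAOA circuit; return (probabilities, cut values)."""
    costs = cut_values(n, edges)
    dim = 2 ** n
    # Initial state |+>^n: uniform superposition over all basis states.
    state = [complex(dim ** -0.5)] * dim
    for gamma, beta in zip(gammas, betas):
        # Cost layer exp(-i * gamma * H_C): a diagonal phase per basis state.
        state = [amp * cmath.exp(-1j * gamma * cost)
                 for amp, cost in zip(state, costs)]
        # Mixer layer exp(-i * beta * sigma_x), applied to each qubit in turn.
        cos_b, sin_b = math.cos(beta), math.sin(beta)
        for q in range(n):
            mask = 1 << q
            for k in range(dim):
                if not k & mask:
                    a, b = state[k], state[k | mask]
                    state[k] = cos_b * a - 1j * sin_b * b
                    state[k | mask] = cos_b * b - 1j * sin_b * a
    return [abs(amp) ** 2 for amp in state], costs

def qaoa_expectation(n, edges, gammas, betas):
    """Quantum cost function: probability-weighted average of the cut values."""
    probs, costs = qaoa_probabilities(n, edges, gammas, betas)
    return sum(p * c for p, c in zip(probs, costs))

# Single unit-weight edge with p = 1: under the conventions assumed here the
# expectation equals (1 + sin(4 * beta) * sin(gamma)) / 2.
single_edge = [(0, 1, 1.0)]
value = qaoa_expectation(2, single_edge, [math.pi / 2], [math.pi / 8])
```

For this two-vertex example, γ = π/2 and β = π/8 drive the expectation to the exact Max-Cut value of 1, whereas γ = β = 0 leaves the uniform superposition and yields half the edge weight.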

Data-driven QAOA

People have studied QAOA’s efficiency and accuracy on regular graphs with constant circuit depth29,38,39,40,41. On the other hand, the performance of QAOA on generic weighted graphs is an open question and is challenging to estimate rigorously42. Heuristic strategies show potential for finding quasi-optimal parameters that yield high approximation-ratio solutions, a claim backed by numerical evidence32,38,40. However, researchers have not exhaustively explored these heuristic strategies on generic weighted graphs42.

Our data-driven QAOA for generic weighted graphs can provide a high approximation-ratio solution without parameter optimization, which avoids expensive computational effort. The data-driven QAOA is based on the normalized weighted graph density D43, which is defined as

\[D=\frac{2}{n(n-1)}\sum_{\langle i,j\rangle \in E}{w}_{ij}. \tag{7}\]
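A short Python sketch of the density computation follows. It assumes that D is the sum of the normalized edge weights divided by the number of vertex pairs, n(n − 1)/2, so that a complete unweighted graph has D = 1; this form, the function name, and the example graphs are assumptions made for illustration.

```python
from itertools import combinations

def normalized_graph_density(n, edges):
    """Assumed form: D = 2 * (sum of normalized weights) / (n * (n - 1))."""
    w_max = max(w for _, _, w in edges)
    # Normalize so that the largest edge weight equals 1.
    total = sum(w / w_max for _, _, w in edges)
    return 2.0 * total / (n * (n - 1))

# Complete unweighted graph on 4 vertices: every vertex pair is an edge.
complete_4 = [(i, j, 1.0) for i, j in combinations(range(4), 2)]
d_complete = normalized_graph_density(4, complete_4)

# Hypothetical sparse weighted path graph with raw (unnormalized) weights.
sparse = [(0, 1, 4.0), (1, 2, 2.0), (2, 3, 1.0)]
d_sparse = normalized_graph_density(4, sparse)
```

Under this assumed form, sparse graphs with a few heavy edges and many light ones receive a low density, which is the regime the text associates with power systems.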

The data-driven QAOA includes five steps as shown in Fig. 2 and introduced as follows, where an innovative parameter transfer strategy is the key idea.

a Modeling the cyber-physical system into normalized weighted graphs. b The parameter transfer module that obtains the quasi-optimal parameters (γ, β). c The expandable quasi-optimal parameter database that stores and updates the mapping tables for (b). d The quantum circuit with multiple layers by using the transferred parameters (γ, β). e The detailed example for one-layer circuit in (d). f The probability distribution by measurement for computing the cost function and selecting a high approximation-ratio solution. g Optimize the parameters for better performance and extend the database in (c) if needed.

Step 1: Formulate the search of maximum section to the Max-Cut problem of the normalized weighted graph and calculate its D.

Step 2: Obtain the quasi-optimal parameters (γ, β) based on D via the parameter transfer strategy, and then pass these parameters to the quantum processors. The transfer strategy works for generic weighted graphs.

Step 3: Construct the quantum circuit with the adjacency matrix Wadj and parameters (γ, β), and run it in a quantum processor. Then, measure the output state of the quantum circuit to get the probability distribution and calculate the cost function value.

Step 4: Optimize the parameters by the classical optimizer for a better result when necessary. Step 4 is optional.

Step 5: Expand the database by adding more pairs denoted by (n, D, γ, β) from verified cases, to provide more quasi-optimal parameters. Step 5 is optional.

Decent initial guesses obtained in Step 2 can also help to handle noise-free barren plateaus, which are linked to random parameter initialization44. These initial guesses also reduce the iterations between the classical optimizer and the quantum processor, saving the running time of the algorithm. For the proposed data-driven QAOA, we also provide the pseudocode Algorithm 1 in “Supplementary Methods”.

Parameter transfer strategy

The essential idea of the data-driven QAOA in Fig. 2 is the parameter transfer strategy, as summarized in Step 2. It includes the following three substeps, which greatly improve the effectiveness of the transfer.

Substep 1: Establish the initial database. Several seed graphs are randomly generated, with their normalized graph densities spreading over [0, 1]. Considering the small size of these graphs, we can calculate the quasi-optimal parameters (γ, β)40,41 as shown in Methods section “Optimization of Parameters”. These parameters provide potentially quasi-optimal parameters for new graphs. This feature is particularly appealing to relatively larger target graphs. The database can then be established, based on the pairs (n, D, γ, β).

Substep 2: Develop the mapping table. The mapping table is designed for transferring quasi-optimal parameters from seed graphs to target graphs with the same circuit layer number p. To create the mapping table, several target graphs are also randomly generated, with normalized graph densities spread over [0, 1]. With the parameters obtained from the seed graphs, the QAOA calculation is performed for each target graph to get the cost function value \(C(\left\vert Z\right\rangle )\). Assuming there are \(\mathcal{N}\) seed graphs with ns vertices and \(\mathcal{M}\) target graphs with nt vertices, the values of \(C(\left\vert Z\right\rangle )\) are organized into an \(\mathcal{N}\times \mathcal{M}\) matrix Mp(ns ↦ nt), i.e., the mapping table. In the table, each column corresponds to one target graph and each row corresponds to one seed graph. Note that a mapping table only needs to be prepared once in advance for the same p, ns, and nt.

Substep 3: Transfer parameters to new graphs. For a new graph with normalized graph density \({D}^{\prime }\) and size \({n}_{\mathrm{t}}^{\prime }\), several appropriate seed graphs will be selected from the mapping table \({M}_{p}({n}_{\mathrm{s}}\mapsto {n}_{\mathrm{t}}^{\prime })\), whose size ns needs to be equal to or close to \({n}_{\mathrm{t}}^{\prime }\). Then, in the mapping table, one (or more) column whose corresponding D is equal to or close to the new graph’s \({D}^{\prime }\) will be selected. Since each entry of this column is associated with a pair \(({n}_{\mathrm{s}},\tilde{D},\gamma ,\beta )\) of the seed graph, we can choose the entries that are larger than a threshold to get quasi-optimal parameters for the new graph. Specifically, the parameters in the pair corresponding to each chosen entry are identified and then transferred to the new graph. In this sense, we can use an interval \([{\tilde{D}}_{\mathrm{l}},{\tilde{D}}_{\mathrm{r}}]\) to summarize the identified pairs and denote the mapping as \({D}^{\prime }\mapsto [{\tilde{D}}_{\mathrm{l}},{\tilde{D}}_{\mathrm{r}}]\), as shown in Fig. 2. To improve the accuracy of the result, the layer number p can be increased accordingly, with parameters obtained by the above transfer strategy.
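The three substeps can be sketched in Python as follows. This is an illustrative sketch, not the pseudocode of the Supplementary Methods: the dictionary layout of the seed and target records, the function names, the numeric values, and the fallback to the single best seed when no entry passes the threshold are all assumptions. The `evaluate` callable stands in for a QAOA expectation value calculation on a target graph.

```python
def build_mapping_table(seeds, targets, evaluate):
    """Rows follow seed graphs, columns follow target graphs (Substep 2)."""
    return [[evaluate(t, s["gamma"], s["beta"]) for t in targets] for s in seeds]

def transfer_parameters(table, seeds, targets, d_new, threshold):
    """Select seed parameters for a new graph of density d_new (Substep 3)."""
    # Pick the column whose target density is closest to the new density.
    col = min(range(len(targets)), key=lambda j: abs(targets[j]["D"] - d_new))
    # Keep the seeds whose entry in that column exceeds the threshold.
    rows = [i for i, row in enumerate(table) if row[col] > threshold]
    if not rows:
        # Assumption: fall back to the best-scoring seed in this column.
        rows = [max(range(len(seeds)), key=lambda i: table[i][col])]
    chosen = [seeds[i] for i in rows]
    densities = [s["D"] for s in chosen]
    return chosen, (min(densities), max(densities))

# Hypothetical database of seed records (n, D, gamma, beta) for p = 1.
seeds = [
    {"n": 10, "D": 0.1, "gamma": (0.9,), "beta": (0.35,)},
    {"n": 10, "D": 0.5, "gamma": (0.6,), "beta": (0.30,)},
    {"n": 10, "D": 0.9, "gamma": (0.4,), "beta": (0.25,)},
]
targets = [{"n": 24, "D": 0.2}, {"n": 24, "D": 0.8}]
# Hypothetical mapping-table entries; in practice these come from evaluate().
table = [[0.90, 0.60], [0.80, 0.70], [0.50, 0.85]]

chosen, interval = transfer_parameters(table, seeds, targets, 0.25, 0.75)
```

Here a new graph with density 0.25 is matched to the first column, the first two seeds pass the threshold, and their densities span the interval (0.1, 0.5) from which the transferred (γ, β) pairs are taken.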

The presented quasi-optimal parameter database is expandable, as mentioned in Step 5 of the data-driven QAOA. In Substep 3, we obtain the identified quasi-optimal parameters. On the one hand, these parameters can be directly applied to the QAOA calculation of the new graph. The new result can then be added to the current mapping table \({M}_{p}({n}_{\mathrm{s}}\mapsto {n}_{\mathrm{t}}^{\prime })\) as a new entry, which is associated with the pair \(({n}_{\mathrm{s}},\tilde{D},\gamma ,\beta )\). On the other hand, these parameters can also serve as decent initial guesses for further parameter optimization. Based on the optimized result, a new pair \(({n}_{\mathrm{t}}^{\prime },{D}^{\prime },{\gamma }^{\prime },{\beta }^{\prime })\) can then be added to the database to provide potential parameters for new graphs, which is equivalent to Substep 1. For the proposed parameter transfer strategy, we also provide the pseudocode Algorithm 2 in Supplementary Methods.

In summary, the data-driven QAOA consists of the following five steps, referring to Fig. 2. First, by modeling the cyber-physical system into two normalized weighted graphs, we compute the normalized graph density from the adjacency matrix Wadj. Second, in the parameter transfer module, we first determine the seed graph size ns and layer number p. Then, according to the mapping table Mp(ns ↦ nt) in Supplementary Fig. 1, we obtain the quasi-optimal parameters (γ, β) from seed graphs, whose normalized densities can be expressed by an interval \([{\tilde{D}}_{\mathrm{l}},{\tilde{D}}_{\mathrm{r}}]\). Third, the transferred parameters (γ, β) are directly passed to the multi-layer quantum circuit for QAOA. Measurement generates the probability distribution \({\left\vert {\alpha }_{k}\right\vert }^{2}\) in (5), from which we can obtain \(C(\left\vert Z\right\rangle )\) and select a high approximation-ratio solution. Fourth, if better performance is desired, we further optimize (γ, β) for \(C{\left(\left\vert Z\right\rangle \right)}_{\max }\). This step is optional. Fifth, the obtained pair (n, D, γ, β) can be used to develop an expandable quasi-optimal parameter database that provides quasi-optimal parameters for new target graphs. If the fourth step is performed, we can store a new pair (nt, D, γnew, βnew), built from the optimized parameters, in a new mapping table for target graphs; otherwise, we can add one new entry \(C(\left\vert Z\right\rangle )\) to the original mapping table, as exemplified in Supplementary Fig. 1. This step is also optional.

Numerical justification of the parameter transfer strategy

Since rigorously estimating the QAOA performance on generic weighted graphs is still an open question42, we provide numerical examples to justify the effectiveness of the parameter transfer strategy. We verify the efficacy of the transferred parameters from three aspects, comparing their approximation ratios with those obtained by QAOA using random parameters, by QAOA using optimized parameters, and by the GW algorithm.

For developing the mapping tables, we randomly generate 9 unweighted graphs with ns1 = 10 and 9 weighted graphs with ns2 = 24 as seed graphs, as given in Supplementary Table I. The 1710 non-planar target graphs with nt = 24 are also randomly generated, including 714 unweighted graphs and 996 weighted ones. The justifications involve 39,744 QAOA expectation value calculations and at least 16,146,548,640 shots. We apply two classical optimizers based on the Newton and the Constrained Optimization BY Linear Approximation (COBYLA) methods45 to get the mean approximation-ratio of the seed graphs and their corresponding quasi-optimal parameters. The values for p = 1, 2, 3 are summarized in Supplementary Table I. These parameters (γ, β) provide the initial data for the expandable database as introduced in Fig. 2.

According to Substep 2, we develop 12 mapping tables as examples. The 6 mapping tables in Supplementary Fig. 1 are for the unweighted seed graphs with both weighted and unweighted target graphs for p = 1, 2, 3; the other 6 mapping tables in Supplementary Fig. 2 are for the weighted seed graphs.

Numerical justification—comparison with using random parameters

We compare the QAOA results from the transferred parameters and from random parameters to verify the parameter transfer strategy. We apply each seed graph’s quasi-optimal parameters to the QAOA calculation for each of the 1,710 target graphs. Figure 3 summarizes the mean approximation-ratios of QAOA on the unweighted and weighted graphs for p = 1, 2, 3. In Fig. 3, each black circle represents the mean approximation-ratio for a target graph with the parameters identified from the 9 groups of parameters in the mapping tables developed from the unweighted ns1 = 10 seed graphs. These black circles show high approximation-ratios, which are 0.8501, 0.8911, and 0.9125 on average for p = 1, 2, 3, respectively. Comparing these black circles with the pink circles, which show the approximation ratios obtained with random parameters, we can see that the parameter transfer strategy significantly improves the approximation ratio, especially for low-density graphs, which verifies the effectiveness of the transfer strategy. In addition, in Fig. 4, we also observe high approximation-ratios with parameters transferred from the weighted ns2 = 24 seed graphs.

a p = 1, unweighted target graphs. (inset) The detailed approximation-ratio distribution of one data point. The solid red curve is the normal distribution fitted to the original distribution. The dotted green line is the mean value of the original distribution. b p = 2, unweighted target graphs. c p = 3, unweighted target graphs. d p = 1, weighted target graphs. (inset) The detailed approximation-ratio distribution of one data point. e p = 2, weighted target graphs. f p = 3, weighted target graphs. The mean approximation-ratios are computed from the probability distribution, with details given in (5) and the Methods section “Measurement Outcomes for the Cost Function Value”. The probability with respect to the approximation-ratio is fitted by a normal distribution. The σwindow is the standard deviation of the scatters with the same color in the 0.1 scan window with respect to D.

Meanwhile, we gain two insights from both Figs. 3 and 4. First, the significant improvement for low-density graphs is particularly appealing to power systems, which usually have low densities. Second, it is challenging for the random parameters method to efficiently handle barren plateaus, while our method can address this issue by providing quasi-optimal initial guesses46. Further explanations for the effectiveness of the transfer strategy are given in the Methods section “Findings for Transfer Principles”.

Numerical justification—comparison with using optimized parameters

We verify that the transferred parameters can provide a warm start for QAOA. Figure 5 compares the mean approximation-ratios of QAOA using the transferred parameters and the unfavorable parameters in the mapping table, with and without further optimization.

The blue scatters denote the approximation-ratio under the transferred parameters without optimization. The orange scatters denote the approximation-ratio under the transferred parameters with optimization. The yellow scatters denote the worst parameters in mapping table without optimization. The purple scatters denote the worst parameters in mapping table with optimization. a p = 1, weighted target graphs. b p = 2, weighted target graphs. c p = 3, weighted target graphs.

On the one hand, the transferred parameters can be decent initial guesses. Figure 5 shows that after further optimizing the transferred parameters (blue scatters), the result (orange scatters) shows no significant improvement. On the other hand, those transferred parameters can also be quasi-optimal parameters. Most of the blue scatters, obtained without optimization, are better than the purple scatters, which are optimized from the yellow scatters with considerable computational effort. There is also a significant gain when comparing the transferred parameters with the worst parameters in the mapping table, as shown by the blue and yellow scatters in Fig. 5. These comparisons validate the effectiveness of the parameter transfer strategy.

In addition, the optimized parameters associated with the orange scatters in Fig. 5 can be adopted to expand the database.

Numerical justification—comparison with the GW algorithm

To further verify that our strategy can provide promising results, we compare the results of the GW algorithm with those of the data-driven QAOA. We adopt p = 1, 2, 3, 10 as examples. In Fig. 6, as p increases, the overall performance of the transferred parameters increases. When p = 10, the transferred parameters without any optimization have a better mean approximation-ratio than the GW algorithm in 113 of the 996 graphs. It is encouraging that, without any parameter optimization, the data-driven QAOA is competitive with the GW algorithm. We expect that proper optimization and a larger p could improve the approximation ratio further.

The downward trend of the approximation ratio with p = 10 in the large graph density regime, as shown in Fig. 6, is due to overfitting on the seed graphs with D = 0.9111 and D = 1. The 20 parameters in the 10-layer QAOA circuits could be too many to be justified for some 10-vertex seed graphs. Notably, \(p=\mathcal{O}(\log (n))\) could be sufficient to obtain high approximation-ratio solutions42. The overfitting issue could be resolved with large seed graphs with n vertices and \(p=\mathcal{O}(\log (n))\).

Numerical examples of data-driven QAOA on power systems

We test and verify the data-driven QAOA on a typical power system. The physical system is a modified IEEE 24-bus system47, as given in Supplementary Fig. 3. Eleven DERs are integrated into the system. Because the outputs of the DERs fluctuate, the normalized graph density changes over time. Therefore, two operational scenarios with normalized graph densities D = 0.0525 and D = 0.1053 are given as examples for the test. The communication network also has 24 vertices. Considering the dynamic data traffic in the network, two scenarios with D = 0.1143 and D = 0.3280 are considered as examples. We provide the results from the following two aspects.

Numerical example—test without the depolarizing noise

We carry out the test according to the steps given in Fig. 2. Based on the power flow calculation of the physical system or the data traffic measurement of the cyber layer, four normalized weighted graphs are obtained. With the normalized graph densities, quasi-optimal parameters are identified through the mapping table in Supplementary Fig. 1 for the QAOA calculation. For comparison, Table 1 summarizes the mean approximation-ratios with all the parameters in the mapping table when p = 1, 2, 3 for the four graphs. The highlighted results show that the best results based on the mapping table are obtained with the transferred parameters, which justifies the effectiveness of the parameter transfer strategy.

We also compare the data-driven QAOA’s results with the GW algorithm. Figure 7a–c shows the approximation-ratio distributions for different normalized graph densities when p = 10, with the following findings. First, the results show that the mean approximation-ratios are very close to those of the GW algorithm. More importantly, Fig. 7b, c shows that the data-driven QAOA’s results are better than those of the GW algorithm, as the data-driven QAOA has at least ten times higher probability than the GW algorithm of obtaining the highest approximation ratio, as shown in the zoomed-in details. In practice, we usually use the highest cut value as the approximate solution instead of the mean approximation-ratio, so the data-driven QAOA can be better than the GW algorithm. Note that these parameters are transferred from the mapping tables without any further optimization. Hence, following the earlier comparison with optimized parameters, when these parameters are used as initial guesses for further optimization, the improved mean approximation-ratios are 0.9569, 0.9499, and 0.9751 for the cases in Fig. 7a–c, respectively. Second, Table 1 shows that the mean approximation-ratio increases as p increases; thus, a relatively larger p is recommended for practical applications.

Noise model I is 0.1% depolarizing error on single-qubit gates and 1% depolarizing error on two-qubit gates; Noise model II is 0.01% depolarizing error on single-qubit gates and 0.1% depolarizing error on two-qubit gates. a D = 0.1053, p = 10, without noise. b D = 0.1143, p = 10, without noise. c D = 0.3280, p = 10, without noise. d D = 0.1053, p = 3, noise model I. e D = 0.1143, p = 3, noise model I. f D = 0.3280, p = 3, noise model I. g D = 0.1053, p = 3, noise model II. h D = 0.1143, p = 3, noise model II. i D = 0.3280, p = 3, noise model II.

Numerical example—test with the depolarizing noise

To verify the practicability of the data-driven QAOA, we introduce depolarizing noise on the quantum gates to emulate realistic hardware noise in the quantum simulator48. Two noise models are considered. Noise model I applies a 0.1% depolarizing error to single-qubit gates and a 1% depolarizing error to two-qubit gates, which is presently achievable. Noise model II applies a 0.01% depolarizing error to single-qubit gates and a 0.1% depolarizing error to two-qubit gates, which is expected to be achievable in the near term.
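To make the effect of these error rates concrete, the sketch below applies a single-qubit depolarizing channel to a density matrix using NumPy. It assumes one common parameterization, E(ρ) = (1 − λ)ρ + λ Tr(ρ) I/d, and assumes that the quoted percentages play the role of λ; the function names `depolarize` and `purity` are illustrative and do not come from the study's code.

```python
import numpy as np

def depolarize(rho, lam):
    """Apply E(rho) = (1 - lam) * rho + lam * Tr(rho) * I / d."""
    d = rho.shape[0]
    return (1.0 - lam) * rho + lam * np.trace(rho) * np.eye(d) / d

def purity(rho):
    """Tr(rho^2): 1 for a pure state, 1/d for the maximally mixed state."""
    return float(np.real(np.trace(rho @ rho)))

# |+> as a density matrix.
plus = np.array([1.0, 1.0]) / np.sqrt(2.0)
rho = np.outer(plus, plus.conj())

rho_model_1 = depolarize(rho, 1e-3)   # 0.1% single-qubit error (noise model I)
rho_model_2 = depolarize(rho, 1e-4)   # 0.01% single-qubit error (noise model II)
```

For a pure qubit state the purity after one application is 1 − λ + λ²/2, so each noisy gate removes a small amount of coherence that accumulates with circuit depth.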

We carry out the numerical noise experiments on the test graphs. Figure 7d–i shows the results for three graphs with D = 0.1053, D = 0.1143, and D = 0.3280 under the transferred parameters when p = 3. Comparing the approximation-ratio distributions and means with and without noise, we see that the mean approximation-ratios drop under noise model I, as shown in Fig. 7d–f, whereas under the smaller noise of noise model II the reduction of the mean approximation-ratio is negligible, as shown in Fig. 7g–i. Therefore, it is feasible to run the data-driven QAOA on a NISQ quantum processor and address the Max-Cut problem in practical power systems in the near term.

Conclusions

We present a data-driven QAOA to efficiently search for the maximum power or data sections in DER-dominant power systems by leveraging quantum resources. The parameter transfer strategy provides quasi-optimal parameters from seed graphs to target graphs. It addresses the challenge of obtaining the critical parameters in QAOA and thus substantially improves the efficacy and efficiency of QAOA. In the transfer strategy, the normalized graph density is used to bridge the seed and target graphs and to build an extendable mapping table. We have verified the transfer strategy by comparing our approximation ratios with those obtained by QAOA using random parameters, by QAOA using optimized parameters, and by the GW algorithm. The improvements show the advantage of the proposed strategy and indicate that the data-driven QAOA is competitive with the GW algorithm. The parameter transferability has also been verified from two perspectives: between unweighted and weighted graphs, and between small-scale and large-scale graphs as well as graphs of the same size. We simulated the presented method on a modified IEEE 24-bus system and demonstrated its effectiveness in finding the maximum sections with and without depolarizing noise. The presented method showcases a new way of computing for power systems enabled by quantum technology.

The potential of this work includes the following aspects. First, the proposed data-driven QAOA with the parameter transfer strategy has strong potential to be extended from the Max-Cut problem to general binary combinatorial optimization problems. Second, from the power engineering angle, our work has many potential applications, including optimization for a smooth and quick black start, unit commitment in large-scale systems, and the design and management of communication networks, among others. Therefore, as a promising early candidate for achieving quantum advantage on NISQ systems, our method can also be extended to address challenging issues in other complex engineered systems and eventually evolve into a formal quantum methodology.

Methods

Adiabatic approximation with QAOA

According to the adiabatic evolution theorem31, during the time interval [0, T], we can slowly change the system’s Hamiltonian from HB to HC and obtain the maximum energy state of HC with high probability29. The interpolation between the two Hamiltonians is given by

\[H\left(t\right)=\left(1-s\left(t\right)\right){H}_{{\rm{B}}}+s\left(t\right){H}_{{\rm{C}}}\qquad (8)\]

where \(s\left(t\right)\) is a smooth function, \(s\left(0\right)=0\) and \(s\left(T\right)=1\). We then use the Trotterization technique to emulate the evolution process49.

We discretize the total time interval [0, T] into intervals [jΔt, (j + 1)Δt] with small enough Δt. Over the jth interval, the Hamiltonian is approximately constant, i.e., H(t) ≈ H((j + 1)Δt). Therefore, the total time evolution operator U(T, 0) can be approximately discretized into 2p implementable operators, each with a constant Hamiltonian49, as written in (9). The approximation improves as Δt gets smaller or, equivalently, as p gets larger.

where U((j + 1)Δt, jΔt) represents the time evolution from jΔt to (j + 1)Δt. Inserting (8) to (9) and using \({e}^{i({A}_{1}+{A}_{2})x}={e}^{i{A}_{1}x}{e}^{i{A}_{2}x}+{{{{{\mathcal{O}}}}}}({x}^{2})\), the time evolution operator can be expressed as,

where \({U}_{{{{{{\rm{B}}}}}}}^{\left(j\right)}\) and \({U}_{{{{{{\rm{C}}}}}}}^{\left(j\right)}\) are the time evolution operators, evolving the system under the Hamiltonian HB for the time period of βj = (1 − s(jΔt))Δt and the Hamiltonian HC for the time period of γj = s(jΔt)Δt, respectively, as defined in (11).
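The second-order error of the operator splitting used above can be checked numerically. The sketch below is only an illustration on a single qubit, with the Pauli matrices X and Z standing in for two non-commuting Hamiltonians; it is not the Hamiltonian pair of the study. Matrix exponentials are computed by eigendecomposition, and the helper names `expi` and `split_error` are hypothetical.

```python
import numpy as np

def expi(h, x):
    """Return exp(i * x * h) for a Hermitian matrix h via eigendecomposition."""
    vals, vecs = np.linalg.eigh(h)
    return (vecs * np.exp(1j * x * vals)) @ vecs.conj().T

X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def split_error(x):
    """Spectral norm of exp(i(X+Z)x) - exp(iXx) exp(iZx)."""
    exact = expi(X + Z, x)
    split = expi(X, x) @ expi(Z, x)
    return float(np.linalg.norm(exact - split, ord=2))

# Halving x should reduce the error by roughly a factor of four.
errors = [split_error(x) for x in (0.1, 0.05, 0.025)]
ratios = [errors[i] / errors[i + 1] for i in range(len(errors) - 1)]
```

The roughly fourfold drop per halving of x is the numerical signature of the \({{\mathcal{O}}}({x}^{2})\) term, and it is why a smaller Δt (larger p) tightens the approximation.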

In the evolution, \(\left\vert \varphi \right\rangle \) represents the quantum state \(\left\vert Z\right\rangle \) in the “QAOA for Max-cut Problem” section. By applying \({U}_{{{{{{\rm{B}}}}}}}^{\left(j\right)}\) and \({U}_{{{{{{\rm{C}}}}}}}^{\left(j\right)}\) alternately to the initial state \(\left\vert \varphi (0)\right\rangle ={\left\vert +\right\rangle }^{\otimes n}\), we can compute the final state \(\left\vert \varphi (T)\right\rangle \) in (12), which is expected to yield a high \(C(\left\vert \varphi (T)\right\rangle )\) and to collapse to the maximum energy state after measurement.

where \(\gamma =({\gamma }_{1},{\gamma }_{2},\ldots ,{\gamma }_{p})\) and \(\beta =({\beta }_{1},{\beta }_{2},\ldots ,{\beta }_{p})\) need to be optimized, which requires expensive computational effort. For the original QAOA for the Max-Cut problem, we also provide the pseudocode as Algorithm 3 in Supplementary Methods.
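The alternating application of the two operators can be illustrated with a small state-vector simulation. The sketch below is written in plain NumPy rather than the Qiskit circuits used in the study, and it assumes the convention exp(−iγHC) followed by exp(−iβHB) with HB the sum of Pauli-X operators; the sign and scaling conventions of (11) to (14) may differ. The function names are illustrative.

```python
import numpy as np

def cut_values(n, edges):
    """Cut value of every n-bit assignment; bit q of index k labels vertex q."""
    k = np.arange(2 ** n)
    bits = (k[:, None] >> np.arange(n)) & 1
    c = np.zeros(2 ** n)
    for i, j, w in edges:
        c += w * (bits[:, i] != bits[:, j])
    return c

def apply_mixer(state, n, beta):
    """Apply exp(-i * beta * X) to every qubit of the state vector."""
    rx = np.array([[np.cos(beta), -1j * np.sin(beta)],
                   [-1j * np.sin(beta), np.cos(beta)]])
    psi = state.reshape([2] * n)
    for q in range(n):
        psi = np.moveaxis(np.tensordot(rx, psi, axes=([1], [q])), 0, q)
    return psi.reshape(-1)

def qaoa_expectation(n, edges, gammas, betas):
    """Expected cut value of the p-layer QAOA state for a weighted graph."""
    c = cut_values(n, edges)
    state = np.full(2 ** n, 2 ** (-n / 2), dtype=complex)   # |+>^n
    for gamma, beta in zip(gammas, betas):
        state = np.exp(-1j * gamma * c) * state              # cost layer (diagonal)
        state = apply_mixer(state, n, beta)                  # mixer layer
    return float(np.real(np.vdot(state, c * state)))

# Small weighted triangle as a usage example.
triangle = [(0, 1, 1.0), (1, 2, 0.5), (0, 2, 0.25)]
baseline = qaoa_expectation(3, triangle, [0.0], [0.0])       # uniform superposition
```

With all angles set to zero the state stays in the uniform superposition, so every edge is cut with probability 1/2; any useful choice of (γ, β) has to improve on that baseline.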

Measurement outcomes for the cost function value

Quantum computers perform calculations based on the probability distribution of quantum states. In QAOA, we obtain the cost function value in (5) by sampling the quantum states, where \({\vert {\alpha }_{k}\vert }^{2}\) is the probability that the final state \(\vert \varphi \rangle \) collapses on the computational basis \(\vert {Z}_{k}\rangle \), as explained below.

First, we construct the quantum circuit of QAOA for the target graphs. In our study, the circuit is built in the Qiskit simulator50. The quantum circuit prepares the initial maximum energy state and computes the final state in (12) by using the quantum operators \({U}_{{{{{{\rm{B}}}}}}}^{\left(j\right)}\) and \({U}_{{{{{{\rm{C}}}}}}}^{\left(j\right)}\), whose implementations are illustrated in Fig. 2 and also shown in (13) and (14), respectively.

where \({R}_{{{{{{\rm{X}}}}}}}^{(j)}\) means only applying RX gate to the jth qubit without changing other qubits; and \({R}_{{{{{{\rm{ZZ}}}}}}}^{\left\langle i,j\right\rangle }\) means only applying RZZ gate to the ith and jth qubits.

Second, we run the quantum circuit Nshot times and measure the final state to estimate the probability distribution. If the final state collapses onto \(\vert {Z}_{k}\rangle \) Nk times, the approximation of \({\vert {\alpha }_{k}\vert }^{2}\) is \({\vert {\tilde{\alpha }}_{k}\vert }^{2}={N}_{k}/{N}_{{{{{{\rm{shot}}}}}}}\). The approximation improves as Nshot increases. In this study, to get an accurate probability distribution and mean approximation-ratio, we use Nshot = \({2}^{19}\) shots to approximate the distribution coefficients \({\vert {\alpha }_{k}\vert }^{2}\). In practice, 2,048 shots work well and are recommended.

Third, we calculate the cost function \(C(\vert \varphi \rangle )\) as shown in (5), which is the weighted sum of \(C(\vert {Z}_{k}\rangle )\) with the non-zero coefficients \({\vert {\tilde{\alpha }}_{k}\vert }^{2}\). Since the standard deviation of \(C(\vert \varphi \rangle )\) is on the order of \(\sqrt{m}\)29, the required Nshot is of polynomial order. It is therefore efficient to compute \(C(\vert {Z}_{k}\rangle )\) for the non-zero coefficients \({\left\vert {\tilde{\alpha }}_{k}\right\vert }^{2}\). Among the sampled states, we select the \(\vert {Z}_{{{{{{\rm{opt}}}}}}}\rangle \) with the maximal cut value as the final solution Zopt.
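The three steps above can be sketched in a few lines of standard-library Python. The example samples from a known distribution instead of a quantum circuit, so the probabilities, the small weighted triangle, and the function names `cut_value` and `estimate_from_shots` are all hypothetical stand-ins.

```python
import random
from collections import Counter

def cut_value(bits, edges):
    """Weighted cut of a bitstring such as '101'; bits[i] is the side of vertex i."""
    return sum(w for i, j, w in edges if bits[i] != bits[j])

def estimate_from_shots(probs, edges, n_shots, seed=0):
    """Sample n_shots outcomes, then estimate the mean cost and pick Z_opt."""
    rng = random.Random(seed)
    outcomes = list(probs)
    samples = rng.choices(outcomes, weights=[probs[z] for z in outcomes], k=n_shots)
    counts = Counter(samples)                                   # N_k for each Z_k
    mean_cost = sum(n_k / n_shots * cut_value(z, edges)         # sum of |alpha_k|^2 C(Z_k)
                    for z, n_k in counts.items())
    z_opt = max(counts, key=lambda z: cut_value(z, edges))      # best sampled cut
    return mean_cost, z_opt, counts

edges = [(0, 1, 1.0), (1, 2, 0.5), (0, 2, 0.25)]
probs = {"000": 0.1, "011": 0.5, "101": 0.3, "110": 0.1}        # illustrative only
mean_cost, z_opt, counts = estimate_from_shots(probs, edges, n_shots=2048)
```

Only bitstrings that were actually observed enter the sum, so the classical post-processing cost scales with the number of distinct outcomes rather than with the \({2}^{n}\) possible states.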

Optimization of parameters

This subsection explains the parameter optimization involved in three perspectives of the “Numerical Justification of the Parameter Transfer Strategy” section and the “Numerical Examples of Data-Driven QAOA on Power Systems” section, where we need to optimize the parameters for high cost function values in seed graphs with ns = 10 and in target graphs with nt = 24, when p = 1, 2, 3, 10. The three perspectives are introduced below.

First, we get the optimal parameters for the seed graphs with ns = 10 when p = 1, 2, 3 by a classical optimization method. In our study, the Newton method is used to get the exact cost function values. Considering the non-convex landscape of the cost function, we adopt multiple initial guesses for (γ, β) within [0, 2π]p × [0, π]p. The number of initial guesses is of polynomial order in n and m, which is proved to be sufficient for obtaining the optimal parameters29.
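The multi-start idea can be sketched as follows for p = 1. This is not the Newton method used in the study: the local step is a simple derivative-free pattern search swapped in for brevity, and `toy_cost` is a stand-in non-convex landscape rather than the QAOA cost function. All names are hypothetical.

```python
import math
import random

def toy_cost(gamma, beta):
    """Stand-in non-convex landscape on [0, 2*pi] x [0, pi] (not the paper's cost)."""
    return 0.5 + 0.5 * math.sin(gamma) * math.sin(4.0 * beta)

def local_ascent(cost, gamma, beta, step=0.2, tol=1e-6):
    """Greedy pattern search: try axis moves, halve the step when none helps."""
    best = cost(gamma, beta)
    while step > tol:
        improved = False
        for dg, db in ((step, 0.0), (-step, 0.0), (0.0, step), (0.0, -step)):
            g = min(max(gamma + dg, 0.0), 2.0 * math.pi)   # stay inside the box
            b = min(max(beta + db, 0.0), math.pi)
            value = cost(g, b)
            if value > best:
                gamma, beta, best, improved = g, b, value, True
        if not improved:
            step /= 2.0
    return best, gamma, beta

def multi_start(cost, n_starts=20, seed=1):
    """Run the local search from several random initial guesses; keep the best."""
    rng = random.Random(seed)
    runs = [local_ascent(cost, rng.uniform(0.0, 2.0 * math.pi), rng.uniform(0.0, math.pi))
            for _ in range(n_starts)]
    return max(runs)

best_value, best_gamma, best_beta = multi_start(toy_cost)
```

A single start can stall on a saddle or on the boundary of the box, which is why several initial guesses are drawn and only the best local result is kept.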

Second, we get the quasi-optimal parameters for the seed graphs with ns = 10 when p = 10 by the Fourier heuristic strategy32. In the Fourier strategy, the time complexity of obtaining quasi-optimal parameters is reduced to \({{{{{\mathcal{O}}}}}}({{{{{\rm{poly}}}}}}(p))\) to avoid the computational burden32, so the parameters for high p can be obtained efficiently.

Third, after getting the transferred parameters, we further optimize them to verify their efficacy. Specifically, we use the COBYLA method45 for this step because the cost function values fluctuate. As mentioned in Methods section “Measurement Outcomes for the Cost Function Value”, the quantum computer estimates the cost function values by sampling copies of the output quantum state, which causes these fluctuations. COBYLA is a derivative-free method and is therefore suited to optimization under this issue. The optimized results under the COBYLA method are given in Fig. 5 and Supplementary Table I. The COBYLA method is also used to carry out the optimization of transferred parameters for the test cases without the depolarizing noise.

Note that certain gradient-based methods might also be able to find the quasi-optimal parameters with fluctuating cost function values, where the inaccurate gradient estimation may cause an escape from a local maximum and allow convergence towards a better one41.

Test graphs preparation

Without loss of generality, the test graphs are randomly generated, as introduced below. First, we randomly generate adjacency matrices. Second, we set the entries to zero with different probabilities to ensure that the densities of the test graphs spread over [0, 1]. Third, since there exists a polynomial-time algorithm for the Max-Cut problem on planar graphs51, we check the planarity of the generated graphs by Kuratowski’s Theorem to ensure that none of the test graphs is planar. Finally, we generate 11,840 graphs, sort them by normalized graph density, and then uniformly pick out 1710 graphs as test graphs.
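The generation steps can be sketched with NumPy as below. Two simplifications are assumptions of this sketch: `edge_density` is the ordinary unweighted edge density, used as a stand-in for the normalized graph density defined earlier in the paper, and `certainly_nonplanar` uses the edge-count bound m > 3n − 6, which is sufficient but not necessary for non-planarity, instead of a full Kuratowski-based test.

```python
import numpy as np

def random_weighted_graph(n, keep_prob, rng):
    """Symmetric weighted adjacency matrix; each edge is kept with keep_prob."""
    weights = np.triu(rng.random((n, n)), k=1)
    keep = np.triu(rng.random((n, n)) < keep_prob, k=1)
    upper = weights * keep
    return upper + upper.T

def edge_count(adj):
    return int(np.count_nonzero(np.triu(adj, k=1)))

def edge_density(adj):
    """Unweighted density 2m / (n(n-1)), always within [0, 1]."""
    n = adj.shape[0]
    return 2.0 * edge_count(adj) / (n * (n - 1))

def certainly_nonplanar(adj):
    """True when m > 3n - 6, which rules out planarity for simple graphs."""
    n = adj.shape[0]
    return n >= 3 and edge_count(adj) > 3 * n - 6

rng = np.random.default_rng(0)
graphs = [random_weighted_graph(10, p, rng) for p in np.linspace(0.1, 1.0, 10)]
densities = sorted(edge_density(g) for g in graphs)
```

Sweeping the keep probability is what spreads the densities over the whole interval; graphs that fail the quick bound would still need a proper planarity test before being accepted or rejected.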

Findings for transfer principles

Here we show two important findings in Fig. 3 to further explain the transfer principles.

First, the mean approximation-ratios of QAOA for the target graphs follow a Lipschitz continuous curve with respect to their normalized graph densities, although these target graphs are randomly generated. This is justified by a scan window of size 0.1, as given in Fig. 3. The window shows that the upper limit of the standard deviations of the scatter points with the same parameters is 0.057, which further indicates that the points approximately follow a curve. It also indicates that the normalized graph density is an effective metric. According to these curves, we can directly estimate the mean approximation-ratio of new graphs with parameters in the database. The parameters with outstanding performance can then be quickly identified for the QAOA circuit, avoiding the high computing effort.
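A scan-window check of this kind can be sketched as follows. The window width of 0.1 matches the text; the scan step, the synthetic data, and the function name `max_window_std` are assumptions made for illustration.

```python
import numpy as np

def max_window_std(density, ratio, width=0.1, step=0.01):
    """Largest standard deviation of `ratio` over all windows of the given
    width slid along the density axis from 0 to 1."""
    density = np.asarray(density, dtype=float)
    ratio = np.asarray(ratio, dtype=float)
    worst = 0.0
    for lo in np.arange(0.0, 1.0 - width + 1e-12, step):
        in_window = (density >= lo) & (density <= lo + width)
        if in_window.sum() >= 2:                     # need at least two points
            worst = max(worst, float(np.std(ratio[in_window])))
    return worst

# Synthetic, noise-free example: points lying exactly on a straight line.
d = np.linspace(0.0, 1.0, 201)
on_curve = 0.9 - 0.2 * d
spread = max_window_std(d, on_curve)
```

Even points lying exactly on a curve show a small in-window spread caused by the slope of the curve, so the reported bound reflects both that slope and the genuine scatter around it.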

Second, the parameters of seed graphs with low density perform better in target graphs with low density than in the ones with high density, and vice versa. This property is also uncovered in the mapping tables, where the yellow area denoting the high approximation-ratio will increase as D increases. Specifically, when the sizes of the seed and target graphs are very close, the yellow area will be around the diagonal line as shown in Supplementary Fig. 2; while, when the size of the target graph is much bigger than that of the seed graphs, the area will be above the diagonal line as shown in Supplementary Fig. 1. With this property, the quasi-optimal parameters can be effectively identified.

Modeling the power system

We model the physical layer and then get multiple normalized weighted graphs through the power flow calculation when disturbances from DERs are considered. Power flow calculates the bus voltages for a given load, generation, and network condition, based on which the line powers (weights) can be obtained. The power flow equations are given in (15).

where * denotes conjugate, \({\dot{V}}_{i}\in {\mathbb{C}}\) is the ith bus (vertex) voltage in the physical grid, \({P}_{i},{Q}_{i}\in {\mathbb{R}}\) are the injected active and reactive power of the ith bus, and \({\dot{y}}_{ik}\in {\mathbb{C}}\) is the admittance of the line between the ith bus and kth bus.

After solving the power flow equations, we can obtain the complex power over each line. In our study, the apparent power is used as the edge weight. Due to the complex landscape of parameters21, the edge weight is then normalized. Thus, the modeling graph for the physical system is a normalized weighted graph.
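Turning a solved power flow into edge weights can be sketched as below. The example assumes that bus voltages are already known, models each line by its series admittance only, takes the sending-end apparent power as the weight, and normalizes by the largest weight; the normalization rule, the three-bus data, and the function name `normalized_line_weights` are assumptions of this sketch rather than the study's exact procedure.

```python
import cmath

def normalized_line_weights(voltages, lines):
    """voltages: complex bus voltages (p.u.); lines: (i, k, y_ik) tuples.
    Returns {(i, k): apparent power / largest apparent power}."""
    apparent = {}
    for i, k, y_ik in lines:
        current = (voltages[i] - voltages[k]) * y_ik     # current from bus i to bus k
        s_ik = voltages[i] * current.conjugate()         # complex power sent from bus i
        apparent[(i, k)] = abs(s_ik)                     # apparent power |S_ik|
    w_max = max(apparent.values())
    return {edge: s / w_max for edge, s in apparent.items()}

# Hypothetical three-bus example with identical lines.
voltages = [cmath.rect(1.00, 0.00), cmath.rect(0.98, -0.05), cmath.rect(0.97, -0.08)]
y = 1 / complex(0.01, 0.1)
lines = [(0, 1, y), (1, 2, y), (0, 2, y)]
weights = normalized_line_weights(voltages, lines)
```

After normalization all weights lie in (0, 1], so graphs from different operating conditions share the same scale.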

Equation (15) describes the steady state of the physical power system. The DERs can be grid-forming or grid-following, and the corresponding bus types can be PV-bus, Vδ-bus, or PQ-bus. Equation (15) does not involve the dynamic modeling of DERs but represents them as PQ-bus, PV-bus, or Vδ-bus, depending on the operational mode of the microgrid system. When the dynamics of the power system are considered, the DERs can be modeled in grid-following or grid-forming mode, and differential-algebraic equations can be developed to describe the dynamics of the system. At each time step, the transient state describes the power delivery among buses, so our method can also be used to search for the maximum power section. Thus, the current application to the steady state can be extended to the general case.

The modeling graph of the cyber layer is based on the communication network data traffic, which is flexible and random. In our study, we randomly generate graphs with nt = 24 vertices to represent the communication network. In practical applications, we can monitor the data traffic to set up the edge weights for the cyber graphs.

Depolarizing noise model

To demonstrate the potential of our method as a promising candidate for achieving quantum advantage on NISQ systems, we introduce depolarizing noise on the quantum gates in the QAOA circuits. In our study, Qiskit50 is used to simulate the depolarizing noise and investigate its influence. To include the noise model, the simulator needs to calculate the density matrix after each quantum gate, which costs exponentially more computational resources than the noiseless state-vector simulation.
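The size of this gap can be estimated with simple arithmetic. The sketch below assumes dense storage with one 16-byte complex number per entry, which is an assumption about the representation and not a statement about how any particular simulator stores its state.

```python
BYTES_PER_AMPLITUDE = 16  # one double-precision complex number

def statevector_bytes(n_qubits):
    """Dense state vector: 2^n complex amplitudes."""
    return BYTES_PER_AMPLITUDE * 2 ** n_qubits

def density_matrix_bytes(n_qubits):
    """Dense density matrix: 2^n x 2^n complex entries."""
    return BYTES_PER_AMPLITUDE * 4 ** n_qubits

n = 24
overhead = density_matrix_bytes(n) // statevector_bytes(n)   # factor of 2^n
```

For n = 24 this gives 256 MiB for the state vector against 4 PiB for a dense density matrix, a factor of \({2}^{24}\).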

The approximation-ratio distribution of GW algorithm

In Fig. 7a–c, we obtain the approximation-ratio distributions of the GW algorithm, as summarized below.

The GW algorithm relaxes the constraint of the Max-Cut problem from discrete variables to vectors on a unit sphere. The relaxed problem then becomes a semidefinite programming (SDP) problem. By solving the SDP problem, we obtain the optimal vector distribution. By randomly cutting the unit sphere into two parts, we correspondingly separate the vectors into two groups and obtain an approximate solution. With this randomized cutting, the expected approximation ratio is guaranteed to be at least 0.878.
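The random-cut step can be sketched as below. Solving the SDP is omitted; the example starts from three unit vectors at 120° to each other, which is the known SDP optimum for the unweighted triangle, and the function name `hyperplane_round` is illustrative.

```python
import numpy as np

def hyperplane_round(vectors, edges, rng):
    """Cut the unit sphere with a random hyperplane through the origin and
    return the cut value together with the side assigned to each vertex."""
    normal = rng.standard_normal(vectors.shape[1])    # random hyperplane normal
    side = vectors @ normal >= 0                      # which half-space each vector is in
    cut = sum(w for i, j, w in edges if side[i] != side[j])
    return cut, side

# Unit vectors for the unweighted triangle.
angles = np.array([0.0, 2.0 * np.pi / 3.0, 4.0 * np.pi / 3.0])
vectors = np.column_stack([np.cos(angles), np.sin(angles)])
edges = [(0, 1, 1.0), (1, 2, 1.0), (0, 2, 1.0)]

rng = np.random.default_rng(0)
cuts = [hyperplane_round(vectors, edges, rng)[0] for _ in range(100)]
```

For this instance every random hyperplane separates one vector from the other two, so each rounding returns the maximum cut of 2; on general graphs the cut value varies from draw to draw, which produces the distribution compared against QAOA.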

Similar to the shots in QAOA, for a given number of cuts the GW algorithm outputs a probability distribution with respect to the approximation ratio, i.e., the approximation-ratio distribution. In theoretical research, we usually compare the expectation of the approximation-ratio distribution to evaluate algorithm performance, while in practical applications to power systems, we can take the maximum approximation-ratio as the final approximate solution.

Data availability

The data that support the plots within this article and other findings of this study are available from the corresponding author upon reasonable request.

Code availability

Code for this article is available from the corresponding author upon reasonable request.

References

Aspuru-Guzik, A., Dutoi, A. D., Love, P. J. & Head-Gordon, M. Simulated quantum computation of molecular energies. Science 309, 1704–1707 (2005).

Hastings, M. B., Wecker, D., Bauer, B. & Troyer, M. Improving quantum algorithms for quantum chemistry. arXiv https://doi.org/10.48550/ARXIV.1403.1539 (2014).

O’Malley, P. J. et al. Scalable quantum simulation of molecular energies. Physical Review X 6, 031007 (2016).

Harrow, A. W., Hassidim, A. & Lloyd, S. Quantum algorithm for linear systems of equations. Physical Review Letters 103, 150502 (2009).

Eskandarpour, R., Ghosh, K., Khodaei, A. & Paaso, A. Experimental quantum computing to solve network dc power flow problem. arXiv https://doi.org/10.48550/ARXIV.2106.12032 (2021).

Zhou, Y., Feng, F. & Zhang, P. Quantum electromagnetic transients program. IEEE Trans. Power Syst. 36, 3813–3816 (2021).

Eskandarpour, R. et al. Quantum computing for enhancing grid security. IEEE Trans. Power Syst. 35, 4135–4137 (2020).

Feng, F., Zhou, Y. & Zhang, P. Quantum power flow. IEEE Trans. Power Syst. 36, 3810–3812 (2021).

Lubasch, M., Joo, J., Moinier, P., Kiffner, M. & Jaksch, D. Variational quantum algorithms for nonlinear problems. Phys. Rev. A 101, 010301 (2020).

Giani, A. & Eldredge, Z. Quantum computing opportunities in renewable energy. SN Comput. Sci. 2, 1–15 (2021).

Koretsky, S. et al. Adapting quantum approximation optimization algorithm (qaoa) for unit commitment. In Proc. 2021 IEEE International Conference on Quantum Computing and Engineering (QCE) 181–187 (IEEE, 2021).

Silva, F. F., Carvalho, P. M., Ferreira, L. A. & Omar, Y. A QUBO formulation for minimum loss network reconfiguration. IEEE Transactions on Power Systems 1–13 https://doi.org/10.1109/TPWRS.2022.3214477 (2022).

Preskill, J. Quantum computing in the nisq era and beyond. Quantum 2, 79 (2018).

Harrigan, M. P. et al. Quantum approximate optimization of non-planar graph problems on a planar superconducting processor. Nat. Phys. 17, 332–336 (2021).

Pagano, G. et al. Quantum approximate optimization of the long-range ising model with a trapped-ion quantum simulator. Proc. Natl. Acad. Sci. 117, 25396–25401 (2020).

Graham, T. M. et al. Multi-qubit entanglement and algorithms on a neutral-atom quantum computer. Nature 604, 457–462 (2022).

Rafique, Z., Khalid, H. M. & Muyeen, S. Communication systems in distributed generation: A bibliographical review and frameworks. IEEE Access 8, 207226–207239 (2020).

Inayat, U., Zia, M. F., Mahmood, S., Khalid, H. M. & Benbouzid, M. Learning-based methods for cyber attacks detection in iot systems: A survey on methods, analysis, and future prospects. Electronics 11, 1502 (2022).

Mahmoud, M. S., Khalid, H. M. & Hamdan, M. M. Cyberphysical infrastructures in power systems: architectures and vulnerabilities (Academic Press, 2021).

Shariatzadeh, F., Vellaithurai, C. B., Biswas, S. S., Zamora, R. & Srivastava, A. K. Real-time implementation of intelligent reconfiguration algorithm for microgrid. IEEE Transactions on sustainable energy 5, 598–607 (2014).

Jing, H., Wang, Y. & Li, Y. Interoperation analysis of reconfigurable networked microgrids through quantum approximate optimization algorithm. In Proc. 2022 IEEE Power & Energy Society General Meeting (PESGM) 1-5 (IEEE, 2022).

Newman, M. E. Modularity and community structure in networks. Proc. Natl. Acad. Sci. 103, 8577–8582 (2006).

Garey, M., Johnson, D. & Stockmeyer, L. Some simplified np-complete graph problems. Theor. Comput. Sci. 1, 237–267 (1976).

Goemans, M. X. & Williamson, D. P. Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J. ACM (JACM) 42, 1115–1145 (1995).

Mei, S., Misiakiewicz, T., Montanari, A. & Oliveira, R. I. Solving sdps for synchronization and maxcut problems via the grothendieck inequality. In Proc. 2017 Conference on Learning Theory 1476–1515 (PMLR, 2017).

Shao, S., Zhang, D. & Zhang, W. A simple iterative algorithm for maxcut. arXiv https://doi.org/10.48550/ARXIV.1803.06496 (2018).

Yao, W., Bandeira, A. S. & Villar, S. Experimental performance of graph neural networks on random instances of max-cut. In Proc. Wavelets and Sparsity XVIII 242–251 (SPIE, 2019).

Khot, S., Kindler, G., Mossel, E. & O’Donnell, R. Optimal inapproximability results for max-cut and other 2-variable csps? SIAM Journal on Computing 37, 319–357 (2007).

Farhi, E., Goldstone, J. & Gutmann, S. A quantum approximate optimization algorithm. arXiv https://doi.org/10.48550/ARXIV.1411.4028 (2014).

Basso, J., Farhi, E., Marwaha, K., Villalonga, B. & Zhou, L. The quantum approximate optimization algorithm at high depth for maxcut on large-girth regular graphs and the sherrington-kirkpatrick model. https://doi.org/10.4230/LIPICS.TQC.2022.7 (2022).

Farhi, E., Goldstone, J., Gutmann, S. & Sipser, M. Quantum computation by adiabatic evolution. arXiv https://doi.org/10.48550/ARXIV.QUANT-PH/0001106 (2000).

Zhou, L., Wang, S.-T., Choi, S., Pichler, H. & Lukin, M. D. Quantum approximate optimization algorithm: Performance, mechanism, and implementation on near-term devices. Phys. Rev. X 10, 021067 (2020).

Wang, H., Zhao, J., Wang, B. & Tong, L. A quantum approximate optimization algorithm with metalearning for maxcut problem and its simulation via tensorflow quantum. Mathematical Problems in Engineering 2021 (2021).

Yao, J., Bukov, M. & Lin, L. Policy gradient based quantum approximate optimization algorithm. In Proc. Mathematical and Scientific Machine Learning 605–634 (PMLR, 2020).

Shaydulin, R., Lotshaw, P. C., Larson, J., Ostrowski, J. & Humble, T. S. Parameter transfer for quantum approximate optimization of weighted maxcut. arXiv https://doi.org/10.48550/ARXIV.2201.11785 (2022).

Khalid, H. M. & Peng, J. C.-H. A bayesian algorithm to enhance the resilience of wams applications against cyber attacks. IEEE Trans. Smart Grid 7, 2026–2037 (2016).

Born, M. & Fock, V. Beweis des adiabatensatzes. Zeitschrift für Physik 51, 165–180 (1928).

Galda, A., Liu, X., Lykov, D., Alexeev, Y. & Safro, I. Transferability of optimal qaoa parameters between random graphs. In Proc. 2021 IEEE International Conference on Quantum Computing and Engineering (QCE) 171–180 (IEEE, 2021).

Wurtz, J. & Love, P. Maxcut quantum approximate optimization algorithm performance guarantees for p> 1. Phys. Rev. A 103, 042612 (2021).

Brandao, F. G., Broughton, M., Farhi, E., Gutmann, S. & Neven, H. For fixed control parameters the quantum approximate optimization algorithm’s objective function value concentrates for typical instances. arXiv https://doi.org/10.48550/ARXIV.1812.04170 (2018).

Guerreschi, G. G. & Smelyanskiy, M. Practical optimization for hybrid quantum-classical algorithms. arXiv https://doi.org/10.48550/ARXIV.1701.01450 (2017).

Barak, B. & Marwaha, K. Classical algorithms and quantum limitations for maximum cut on high-girth graphs. https://doi.org/10.4230/LIPICS.ITCS.2022.14 (2021).

Tokuyama, T. Algorithms and computation (2022).

Cerezo, M., Sone, A., Volkoff, T., Cincio, L. & Coles, P. J. Cost function dependent barren plateaus in shallow parametrized quantum circuits. Nat. Commun. 12, 1–12 (2021).

Powell, M. J. A view of algorithms for optimization without derivatives. Math. Today-Bull. Inst. Math. Appl. 43, 170–174 (2007).

Wang, S. et al. Noise-induced barren plateaus in variational quantum algorithms. Nat. Commun. 12, 1–11 (2021).

Ordoudis, C., Pinson, P., Morales, J. M. & Zugno, M. An updated version of the IEEE rts 24-bus system for electricity market and power system operation studies. Technical University of Denmark 13 (2016).

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information (Cambridge University Press, 2011).

Wu, L.-A., Byrd, M. & Lidar, D. Polynomial-time simulation of pairing models on a quantum computer. Phys. Rev. Lett. 89, 057904 (2002).

Anis, M. S. et al. Qiskit: An open-source framework for quantum computing. https://doi.org/10.5281/zenodo.2573505 (2021).

Shih, W.-K., Wu, S. & Kuo, Y.-S. Unifying maximum cut and minimum cut of a planar graph. IEEE Trans. Comput. 39, 694–697 (1990).

Acknowledgements

H.J. and Y.L. are supported by the Office of Naval Research under the award N00014-22-1-2504. Y.W. is primarily supported by the Office of the Director of National Intelligence—Intelligence Advanced Research Projects Activity through ARO Contract No. W911NF-16-1-0082 and DOE BES award de-sc0019449 (quantum algorithm analysis). The author would like to thank Dr. Rui Chao at Duke University for the detailed discussion on the QAOA algorithm.

Author information

Contributions

H.J., Y.W., and Y.L. equally contributed to this work in conceiving the idea and designing the experiments. H.J. conducted experiments. Y.W. and Y.L. fabricated samples, participated in figure design, and revised the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Engineering thanks the anonymous reviewers for their contribution to the peer review of this work. Primary Handling Editors: Miranda Vinay and Rosamund Daw.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jing, H., Wang, Y. & Li, Y. Data-driven quantum approximate optimization algorithm for power systems. Commun Eng 2, 12 (2023). https://doi.org/10.1038/s44172-023-00061-8

DOI: https://doi.org/10.1038/s44172-023-00061-8