Abstract

Background

Patients with cancer often have unmet psychosocial needs. Early detection of who requires referral to a counsellor or psychiatrist may improve their care. This work used natural language processing to predict which patients will see a counsellor or psychiatrist from a patient’s initial oncology consultation document. We believe this is the first use of artificial intelligence to predict psychiatric outcomes from non-psychiatric medical documents.

Methods

This retrospective prognostic study used data from 47,625 patients at BC Cancer. We analyzed initial oncology consultation documents using traditional and neural language models to predict whether patients would see a counsellor or psychiatrist in the 12 months following their initial oncology consultation.

Results

Here, we show our best models achieved a balanced accuracy (receiver-operating-characteristic area-under-curve) of 73.1% (0.824) for predicting seeing a psychiatrist, and 71.0% (0.784) for seeing a counsellor. Different words and phrases are important for predicting each outcome.

Conclusion

These results suggest natural language processing can be used to predict psychosocial needs of patients with cancer from their initial oncology consultation document. Future research could extend this work to predict the psychosocial needs of medical patients in other settings.

Plain language summary

Patients with cancer often need support for their mental health. Early detection of who requires referral to a counsellor or psychiatrist may improve their care. This study trained a type of artificial intelligence (AI) called natural language processing to read the consultation report an oncologist writes after they first see a patient to predict which patients will see a counsellor or psychiatrist. The AI predicted this with performance similar to other uses of AI in mental health, and used different words and phrases to predict who would see a psychiatrist compared to seeing a counsellor. We believe this is the first use of AI to predict mental health outcomes from medical documents written by clinicians outside of mental health. This study suggests this type of AI can predict the mental health needs of patients with cancer from this widely-available document.

Similar content being viewed by others

Introduction

Cancer is not only a leading cause of death, but a disease that substantially impacts physical, mental, and social health1. Patients with cancer have an increased risk of developing mental illnesses following diagnosis2. Approximately one-third of patients with a mental health condition before cancer diagnosis are at particular risk for worsened distress2. Cancer can impact employment and relationships3,4,5, adding more strain to a patient’s financial, interpersonal, and support systems. Conditions such as depression and anxiety not only degrade quality-of-life, they are associated with decreased rates of survival, possibly by impacting a patient’s ability to follow through with treatment6,7,8. To help address psychosocial needs, cancer centres employ clinicians such as psychiatrists and counsellors specializing in psychosocial care for people with cancer9.

Despite the development of psychosocial oncology as part of cancer care, patients with cancer continue to have unmet psychosocial needs10,11,12. Achieving equity-oriented healthcare in cancer will require better support for patients with psychosocial needs including comorbid mental illness13.

While lack of resources often contributes to unmet needs, there is also evidence failure to detect psychosocial needs plays a role, especially in high-resourced settings14. Prior work has found treating oncologists could only identify around one-third of severely distressed patients, and did not refer patients to psychosocial resources effectively15,16. This may be due to treating oncologists being focused on cancer control, having time constraints, using close-ended questions, and/or having cultural and socioeconomic differences from their patients. In addition, patients may not know resources are available, or be reluctant to share their difficulties17.

Machine learning (ML) can train models to predict outcomes such as which patients could benefit from a referral to a psychiatrist or counsellor. Such models could then be incorporated into an EMR and flag certain patients. ML models can incorporate structured data, which has been processed into specific features such as genetic markers, demographic features, or comorbidities. However, the availability of structured data can vary between cancer centres, which may limit their widespread use18,19,20,21. Using structured data can also limit the types of data that can be used, as not all data can be easily extracted or structured; a centre may record the marital status of their patients, but not whether they are currently having relationship difficulties22.

Using unstructured data, such as the initial oncology consultation document, can address some of these drawbacks. Unstructured data may possess information relevant for predicting whether a patient will see a psychiatrist or counsellor that may not be routinely stored as structured data. As most patients being treated for cancer would have an initial oncology document, a model using this data could be widely used, no matter what other data a cancer centre records.

Using ML to predict outcomes from documents falls under the branch of artificial intelligence called Natural Language Processing (NLP). Recent advances in NLP have incorporated neural networks like transformers23, such as those used by the recently released question-answering system ChatGPT24. Neural NLP models are more complex than the traditional linear methods, and are better able to understand how words in a document relate to each other, even if not directly adjacent.

Traditionally, physicians have sought to understand the psychosocial needs of patients with cancer through clinical interviews or questionnaires25,26,27,28,29,30. We were unable to find relevant prior work seeking to use computational methods to predict the psychosocial needs of patients with cancer. A recent study used a statistical model and structured data to forecast the number of patients with cancer and high symptom complexity a clinic would see31. However, this study did not make predictions for individuals.

NLP has been used in psychiatry with a variety of documents, including patient transcripts32 and social media posts33,34. Prior work using medical documents has often sought to extract data such as patient diagnoses35,36,37,38,39. Some studies have used non-neural NLP to predict readmission from discharge summaries40,41. Much of the recent application of NLP in mental health has used a set of 816 discharge summaries to identify the lifetime severity of a patient’s mental illness42,43,44,45,46,47,48,49,50. We did not find NLP literature predicting psychosocial outcomes from non-psychiatric medical documents, or find prior work using neural NLP to predict future psychiatric outcomes. There has been more NLP work in oncology51,52,53,54, including our recent work predicting survival from oncologist consultations55.

In this work, we investigate using NLP with initial oncology consultation documents to predict which patients with cancer will see a psychiatrist or counsellor within one year. To the best of our knowledge, predicting psychosocial needs from non-psychiatric medical documents is a novel application of NLP. Our relatively large dataset, drawn from over 50,000 patients with cancer, allows us to investigate more advanced NLP tools, including those using large language models and other neural networks, which have rarely been used in medical applications. The initial oncology consultation document is readily available, and may have relevant information for predicting psychosocial needs. We hypothesized NLP models could predict these outcomes with balanced accuracy (BAC) and receiver-operating-characteristic area-under-curve (AUC) above 0.65, a threshold exceeded in predictive work using ML elsewhere in psychiatry, such as research in depression56,57, suicide58, and bipolar disorder59. In this study, we train and evaluate traditional and neural models to predict which patients will see a psychiatrist or counsellor based on their initial oncology consultation document. Despite these documents not focusing specifically on psychosocial health, our best models achieve BAC above 70%, and AUC above 0.75, for both tasks when evaluated on an internal holdout test set.

Methods

The University of British Columbia BC Cancer Research Ethics Board provided approval for this prognostic study (H17-03309), and exempted this work from requiring informed consent from participants as it was not feasible to obtain. We report this study following the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) guidelines60.

Data source and study population

We selected our study cohort from the 59,800 patients at BC Cancer starting cancer care between April 1, 2011 and December 30, 2016. Patients were seen for malignant disease or for non-malignant or precancerous disease requiring specialist cancer care. BC Cancer provides most cancer care in British Columbia, and is affiliated with all radiation oncologists and over 85% of medical oncologists in the province. BC Cancer provides care at six geographically diverse settings, and oversees systemic therapy at the majority of the smaller Community Oncology Network locations. BC Cancer provided our data. Clinicians generated the documents by a combination of dictation and free text processing, without explicit document structure requirements. Documents generally followed typical formatting conventions for medical consultation documents, such as including sections on identifying information, history of presentation, medical and other histories, physical examination, impression/assessment, and recommendation/plan.

Data selection and preparation

As in our recent study54, we excluded participants with more than one cancer diagnosis and required patients to have at least one valid medical or radiation oncologist consultation document within 180 days of diagnosis. For this work, we used the oncologist document closest to a patient’s diagnosis.

We preprocessed documents before they were used by our models, as outlined in Note SN1. This included text tokenization for our Bag-of-Words (BoW) models, where words have their endings removed. We generated labels based on patients having a document generated by psychiatry or counselling after seeing the patient, within the 12 months following creation of their initial oncology consultation document.

Natural language models

NLP models understand language based on the probabilities of which words follow each other61. We compared four language models: the traditional non-neural method BoW62,63, and three models using neural networks: convolutional neural networks (CNN)46,47,64, long-short term memory (LSTM)65, and a more recent large language model, Bidirectional Encoder Representations from Transformers (BERT)66,67,68. Figure 1 shows simplified diagrams of some of the differences in how these models understand text. Full diagrams of the model architectures can be found in their original work and elsewhere46,61,62,64,65,66. We describe further details including libraries used, class-imbalance handling, and code availability in Note SN1. To investigate whether the models were performing trivial predictions, we compared the performance of these models with a rule-based method that predicts a patient will see a psychiatrist if the consult contains the token “psychiatrist” and will see a counsellor if it contains the token “counsel”. For this rule-based method, we used the same data processing and vectorizer as for BoW. To investigate the impact of BERT having a limited number of tokens it can intake, we also investigated a variation of BERT called Longformer69, evaluating this model alongside CNN and BERT with different numbers of tokens. Longformer can use documents up to 4096 tokens in length, more than BERT’s limit of 512, due to having a less densely-connected self-attention. We trained Longformer using undersampling due to technical constraints, and compared it to BERT and CNN also trained with this method.

a The bag-of-words model counts word occurrences in a document, which is then used by a traditional machine learning algorithm. b The convolutional neural network model understands a document in small adjacent clusters of words called convolutions (one is shown with black lines). The model can then learn to predict from combinations of these convolutions. c The long short-term memory model updates the prediction by reading the document one word at a time. It has a memory cell that allows it to remember some prior context (dotted lines). In this work, we used a bidirectional implementation, which combines the forward long short-term memory layer shown with another layer reading words in reverse order. d The bidirectional encoder representations from transformers model can understand how each word is connected to all other words in the document but can only read small portions of text. One word’s possible connections are indicated by a black line.

Statistical analysis

The primary outcome was model performance when predicting whether patients would see a psychiatrist or counsellor within 12 months. We sought to avoid overfitting, when a model performs well on training data but not new data70, and so first randomly separated our data into training (70%), development (10%) and testing (20%) sets, a standard practice in NLP71. We then tuned and developed our models using only the training and development sets. For the neural models, training a model requires multiple passes through the training data to optimally train, called epochs. As the models will eventually overfit the training data, we continued this training until there were no further improvements in balanced accuracy for five epochs (patience) when the models were evaluated on the development set. We then compared hyperparameters based on these best performances to choose the best set of hyperparameters. To generate the final results, we continued to use standard practice, and used these tuned hyperparameters as above, stopping training of the neural models based on development set performance, and evaluating these best models on the holdout testset. To be able to provide an estimate of performance variance, we repeated this process for a total of ten times per model and target, keeping the hyperparameters unchanged, but shuffling the training data. To compare mean model performances, we conducted two-tailed dependent t-tests, with Bonferroni correction for multiple-comparison at 95% confidence, and calculated effect size using Cohen’s d. We used one-tailed t-tests to compare the rule-based method’s results with the model performances. We conducted the t-tests with sample size of 10, as described above; their results could be impacted by increasing the sample size, which could be done without limit. We also used a simple regression model to investigate the impact of maximum tokens on Longformer performance. We describe metrics in Table ST1.

Interpreting our models

We measured what words were important for our BoW models based on the models’ coefficient weights, which result from training on all documents in the training set. We used the Captum Interpretability Library for Pytorch72 implementation of integrated gradients (IG)73 for an initial understanding of our neural models. This attribution method visualizes which words in a document influence a model’s prediction. The resulting visualization is easy to understand, but can only show us how the model works one document at a time. For the interpretation shown, to preserve privacy, we use a synthesized demonstration document crafted to have similar word importance to a document from a patient not included in our dataset, generated by a gynecologic oncologist.

As the above method can only interpret a model one document at a time, we developed a new method to understand a neural model over many documents using both IG and the new topic modelling technique, BERTopic74. BERTopic has been recently applied to medical tasks75,76, and is well described elsewhere. In brief, it allows topic modelling, which summarizes the main topics in a large collection of documents. BERTopic does so using modern transformer-based large-language models (LLM) to form embeddings of the documents separate from the topic representations, and allows customization of its modular steps. We used the “Best Practice” values, as of August 17, 2023, for these steps. This included the default sentence transformer, UMAP77, HDBScan78, scikit-learn CountVectorizer79 and the default class-based TF-IDF61. We created topic representations using KeyBERT80 and OpenAI’s ChatGPT 3.5 Turbo model81, alongside the default MMR representation82. We provide further details on our implementation in Note SN1.

We used this new model interpretation method with one CNN model for predicting seeing a psychiatrist, and one model for predicting seeing a counsellor, using the same models as for the standard IG interpretation described above. Documents longer than 1500 tokens were trimmed off the end to this amount due to technical constraints. For this new technique, we first extracted sentences from all documents in our test set that had an average IG attribution value above 0.01, setting this value empirically based on what sentences it would extract from the gynecologic oncology document used above, again using this document as it was not in any of our datasets. We then fed this collection of documents to BERTopic, using the nr_topics parameter to find 20 topics to represent these sentences. We again set this parameter empirically, as a trade-off between topic specificity and interpretability. For example, if this parameter was set lower, distinct topics would start to merge into one topic, such as family cancer history and personal cancer history becoming a general history topic. To focus these results, we used sentences with positive attribution values for this analysis, given that the models default to predicting patients will not see the clinicians, and we are more interested in positive predictors. This technique could also be applied to observe topic modelling for sentences with mean low attribution values, e.g., less than 0.01.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Results

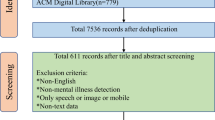

Patient and document selection

Our patient selection was the same as in prior work55. Of the 59,800 BC Cancer patients, we excluded 2784 due to starting cancer care multiple times, and 9391 due to not having a medical or radiation oncology consultation within 180 days of their cancer diagnosis. This left 47,625 patients, of which 25,428 were women (53.4%) and 22,197 were men (46.6%), with a mean age (SD) of 64.9 (13.7) years (Table 1). For our prediction targets, 662 (1.4%) of patients saw a psychiatrist, while 10,034 (21.1%) saw a counsellor, within 12 months of the initial document being generated.

We show some characteristics of the documents used for our predictions in Table 2. The documents are evenly split between medical oncology (51.5%) and radiation oncology (48.5%). 271 clinicians generated the radiation oncology documents, while 459 clinicians generated those from medical oncology. After preprocessing, the documents had a mean number of tokens between 972 and1022, depending on the model. 95.2% of documents had more tokens than the 512 limit of BERT.

Predicting seeing a psychiatrist

Table 3 shows the performance of our different NLP models when predicting whether a patient will see a psychiatrist in the 12 months following their initial oncologist consultation. We evaluated the models on a holdout testset. The CNN and LSTM models achieved significantly better performance than both BoW and BERT, with BAC above 70%, AUC near or above 0.80, and large effect sizes (Tables ST2 and ST3). All models significantly outperformed the rule-based method (p < 0.002, Cohen’s ds > 1), which predicts based on the token “psychiatrist”, achieving balanced accuracies 6.8–18.9% higher, and AUC 0.165–0.282 higher.

Predicting seeing a counsellor

In Table 4, we show the performance of our different NLP models when they predict if a patient will see a counsellor. This prediction is again for the 12 months following their initial oncologist consultation, using a holdout testset. CNN and LSTM models are again significantly better than BoW and BERT, and have large effect sizes (Tables ST4 and ST5). The performance is significantly lower when predicting seeing a counsellor versus seeing a psychiatrist for all models except BERT (Table ST6). All models again significantly outperformed the rule-based method (p < 0.003, Cohen’s ds > 1), which predicts based on the token “counsel”, achieving balanced accuracies 6.8–15.7% higher, and AUC 0.126–0.231 higher.

Impact of token limits on transformer models

In Table 5, ST7 and ST8 we show a comparison of BERT and Longformer performance when predicting which patients will see a psychiatrist, with different maximum numbers of tokens used by a model for each document. We also show the performance of CNN for comparison. All models in this table used undersampling for class-imbalance due to technical constraints and for consistency. We see a numerical trend that more tokens leads to increases in both BAC and AUC, at least to 2048 tokens. These differences are not statistically significant on head-to-head comparison, while effect sizes were above one when comparing Longformer with 512 tokens to Longformer using 2048 or 4096 tokens. Fitting a simple regression model with number of tokens as the independent variable leads to p-value of 0.241, R2 of 0.011 for balanced accuracy, and a p-value of 0.062, R2 of 0.089 for AUC. The CNN model still has a numerically and statistically superior performance even compared to the Longformer models, except comparing the BAC between CNN and Longformer with 2048 and 4096 tokens after multiple-comparison correction.

Interpreting our models

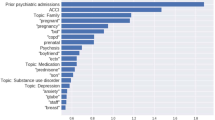

We find similarities and differences in the top ten most important tokens for our models predicting seeing a psychiatrist versus seeing a counsellor (Table 6). All tokens were used by both models, but differed in importance depending on the predictive target. We found tokens related to mental health were important in both models, including “depress” (depression, depressed) and “anxieti” (anxieties), though “anxieti” was only in the top ten for seeing a counsellor. Tokens directly related to a patient’s cancer are among the top ten most important token when predicting seeing a psychiatrist, but not a counsellor (“myeloma”, “radiat”, likely “1”). Demographic factors also seem important, such as “retir” (retiree, retired) in both, or “princ” and “georg”, corresponding to Prince George, the BC Cancer site located in northern BC, which serves a more rural population.

In Notes SN2 and SN2, we show the importance of words in one synthesized document which we crafted to demonstrate similar word importance to a real patient’s document for our CNN models. This patient saw both psychiatry and counselling, which the models correctly predict. For the model predicting seeing a counsellor, a recent history of pain, and a family history of cancer in both maternal and paternal grandparents were predictive. For the model predicting seeing a psychiatrist, the maternal grandmother’s history is again important, but pain is not. Instead, we see that the oncologist writing “also noticed”, followed by additional medical symptoms, is predictive of seeing a psychiatrist.

In Tables 7 and 8, we show the results of our newly-developed technique to understand a neural model’s predictions over multiple documents, providing additional details including representative sentences in Tables ST9 and ST10. The topics cover a majority of the extracted sentence, 30,956/49760 (61.5%) for seeing a psychiatrist, and 40,424/57935 (69.8%) for seeing a counsellor. The remaining sentences are classified by BERTopic as outliers. For both targets, we find a range of topics, including those pertaining to symptoms, personal cancer history, family cancer history, substance use, and social history. As was found in the BoW interpretation, features of a patient’s cancer or treatment seem more relevant to predicting seeing a psychiatrist (topics 0, 1, 2, 3, 8, 15, 18) than for seeing a counsellor (topics 1, 3, 14, 16). Conversely, symptoms or medications used for symptom management seem more common for predicting seeing a counsellor (topics 1, 2, 8, 10, 13, 17, 19) than for psychiatry (topics 0, 5, 9).

Discussion

In this work, we investigated the use of NLP with patients’ initial oncology consultation documents to predict whether they will see a psychiatrist or counsellor in the year following the date of the consultation document. Our best models achieved BAC over 70% and AUC over 0.80, for predicting whether they would see a psychiatrist. Performance was worse for predicting which patients will see a counsellor, though best models still achieved BAC and AUC above 70%. Two types of neural models, CNN and LSTM, outperformed the simpler BoW models. This suggests these predictions may benefit from a more complex understanding of language made possible by neural networks, in contrast to related work using similar data and techniques to predict the survival of patients with cancer survival55. While we could not find similar work to which we could compare these results, these metrics are comparable to or better than other applications of ML for predicting future events in psychiatry, such as predicting whether a patient’s depression will respond to an antidepressant56,57, whether someone will complete or attempt suicide58, or if a child will later develop a bipolar disorder59. This supports the validity of this technique for our task, and more generally, the potential use of NLP for predicting psychiatric outcomes from non-psychiatric medical documents.

Our models’ ability to better predict whether patients would see a psychiatrist versus a counsellor is somewhat surprising. The difference may not be clinically significant, but we expected seeing a counsellor to be easier to predict. Seeing a psychiatrist is more class-imbalanced; the ratio between those seeing a psychiatrist and not seeing one is quite extreme. Generally, ML models will perform better on tasks with less class-imbalance83. Our result may be due to patients seeing counsellors at BC Cancer for a variety of reasons, including both psychological assistance and social needs such as housing or transportation. It may be difficult for our models to account for these different reasons. Our results also suggest seeing a psychiatrist is more related to the medical information within the text. The BoW model had top ten features related to a patient’s cancer, while in the CNN model, normal heart rate was a negative predictor. We did not see these relationships in our model predicting which patients will see a counsellor.

Model interpretations supported they were using relevant and appropriate data to make their predictions. Important words for our BoW models included words related to mental illness, aspects of the patient’s cancer illness, and demographic factors. Interpreting a neural model from an initial oncology document not included in our dataset showed an example of how the models make their predictions. In this document, shown here by an analogous synthesized document, the CNN model used current pain and a family history of cancer to predict seeing a counsellor. The bidirectional relationship between pain and psychological health is well established84, while the family history may attest to intergenerational trauma associated with cancer. Similarly, for seeing a psychiatrist, the model again uses family history. It also found that “also noticed” followed by somatic symptoms supported a referral to psychiatry. This may imply the model is learning a patient endorsing many somatic symptoms may increase their chance of seeing a psychiatrist, consistent with known relationships85.

We furthered this initial neural model interpretation by developing a new technique to interpret neural models over multiple documents. By using BERTopic to model the topics of sentences with high mean positive attribution from IG, we see further evidence that the models are using a variety of text, including those pertaining to a family history of cancer history, and symptoms. Given this is a new technique, these results should be interpreted cautiously. However, they do seem to also suggest possible differences between the factors predictive of seeing a counsellor versus psychiatrist as suggested by our BoW prediction, such as symptoms being used more to predict seeing a counsellor, and disease characteristics more used for predicting seeing a psychiatrist. These topics may also suggest directions to explore to further our understanding of the psychosocial needs of cancer patients. For example, two topics for seeing a psychiatrist involve peripheral edema. This could be related to corticosteroid use, which can directly lead to both peripheral edema and psychiatric symptoms86. It also could be related to the presence of central nervous system tumours that often need these medications, and can also lead to psychiatric symptoms. We plan to further develop and validate this technique in future work, including investigating different parameters and choices for the modular steps.

This application of NLP could be used to help oncologists identify which patients may benefit from referral to counsellors or psychiatrists. It is unclear what performance we would need for such models to be used clinically; the sensitivity versus specificity of models could be adjusted depending on the application. Given that our models are trained on the status quo, where a degree of undetected and missed opportunities for referral exists, setting the models to have a higher sensitivity, at the expense of specificity, may be reasonable. Future work could seek to train or evaluate our methodology on a dataset where experts assess patients and label whether a patient should be referred to psychiatry or counselling. However, it could be difficult to manually label the thousands of patients required to effectively train neural models.

Comparing the results of the different models may provide direction to build upon our results and further improve performance. The better performance of our CNN and LSTM models compared to BoW may suggest that the more complex understanding of language that neural models are capable of may be useful for this task, as supported by our interpretations where this seems to be taking place. However, the numerical advantage of these models over BoW is relatively modest, especially when predicting which patients will see a counsellor. The use of neural language models over traditional NLP methods comes with disadvantages including increased computational cost, more difficult interpretability, and possibly privacy concerns61,72,83,87,88 so neural methods generally should be used when their advantage in performance outweighs these drawbacks. It may be possible to improve the performance of our models with further exploration of hyperparameter and architectural changes.

Given the recent advancement and success of transformer-based LLMs24, further investigation of these models may also help improve the performance of our tasks. The poor performance of BERT, which utilizes transformed-trained LLMs, was somewhat surprising, but may be due to our documents often exceeding the maximum number of tokens that can be used with this model. While the first portion of the consultation documents often document data that seems potentially relevant to our prediction, such as identifying data and symptoms, BERT may have often not had access to information usually featured towards the end of medical consultations, including past histories, assessment, and future planning. This limitation was supported by our investigation of the Longformer model, which can use up to 4096 tokens. We saw a numerical trend of increasing performance as the model could use up to 2048 tokens, enough for most documents (Table 2). However, even when able to use this larger number of tokens, this transformer-based model still did not outperform CNN. This may be due to Longformer’s sparse attention. Future work may want to investigate LLMs that have denser attention and can still utilize longer documents, such as BigBird89, and may want to further investigate LLMs trained specifically on clinical data90.

Future work could also seek to improve our models by adding other types of data, such as the responses from psychosocial questionnaires designed for patients with cancer29, to our training data. Alternatively, one could train separate models with rating scale data, and compare their performance to our models. However, while use of rating scales is becoming more common, such data is certainly not as ubiquitous, and possibly not as informative, as the initial oncology consultation document.

Future work will be needed to investigate the external validity of our models by evaluating them using initial oncology consultation documents from other cancer organizations. We have some evidence our models are using geographic features specific to British Columbia. This could lead to a drop in performance when used elsewhere, as could other differences such as language use, referral patterns, and treatment availability. If this is the case, our models could be further fine-tuned61 on data from a different source, which generally requires smaller amounts of data. Alternatively, our methodology could be used to train new models based on data from other sources, an advantage of us using the common and widely available data within initial oncology consultation documents. Given the possibility of LLM to improve with very large amounts of data, the best performance may be possible by training neural models on large numbers of these documents from multiple healthcare settings. We facilitated this by using a widely available document.”

Further investigation may not only help guide improvement of the models, but may also generate new hypotheses to investigate the relationship between course of illness and the need for psychosocial supports. To this end, future work may also want to investigate the performance of our models on subsets of patients, given possible differences related to age, gender, rural vs. urban setting, cancer stage, and cancer type91. Similarly, future work could investigate both false positives and false negatives from our models. False positives could show examples of those who would have benefited from referral to psychosocial supports, but faced barriers. False negatives could be investigated to determine whether our models are missing potential signs of impending psychosocial needs, or if patients only developed these needs at a later date. If the latter, future work could also explore predictive models that update with subsequent clinical documents generated from oncologists, such as progress notes or re-referrals.

Even if performance was perfect and external validity established, future work will also be needed to investigate possible barriers to using such techniques in clinical practice. This could include examining logistical barriers such as difficulties around incorporating predictive systems within electronic medical record system workflows. Better understanding of patient comfort around artificial intelligence being used is also needed, especially when pertaining to a sensitive topic such as need for psychosocial supports92. Another area of future investigation could be applying our methodology to predict psychosocial interventions in other medical settings, such as which patients on a medical ward will be referred to consultation-liaison psychiatry based on their internal medicine admission consultation.

As described above, we will need to evaluate our models on documents from other cancer care organizations to establish external validity. However, our documents do come from many providers in six geographically-distinct centres. We also acknowledge training our models on referral patterns that likely include missed referrals, making our models themselves imperfect. Additionally, while a comparison against a rule-based method solely using the tokens “psychiatrist” and “counsel” supports that models are not solely making trivial predictions based on whether oncologists are writing that they will make a referral, some of the predictions being made may be relatively simple, such as those based on whether consults include words such as “depression” or “anxiety”. We do, however, see that the top ten BoW tokens are varied, while the CNN interpretation shows an example of how the model can correctly predict a patient seeing the disciplines without obvious language, and that predictive sentences have a variety of topics. Another limitation is that some words used by our models are specific to our province, such as city names. This helps our models learn about geography-based differences, but such data would not be generalizable in other regions. As described above, we also note that our work did not explore our models’ performance on different subsets of the population such as those based on gender or cancer type; as an initial investigation, we defer this to future work. It is possible these NLP techniques may be a stronger or weaker tool, depending on the specific population.

We believe this is a novel application of NLP, as we were unable to find similar research attempting this task, or attempting to predict psychiatric outcomes from non-psychiatric medical documents generally. We believe further development will allow these techniques to improve and extend the lives of patients with cancer by helping to identify psychosocial needs that cause distress and sometimes interfere with cancer treatment.

Data availability

We are unable to share the initial oncology consultation documents used in this work due to their number and our inability to anonymize the confidential information within them. These data are stored securely at BC Cancer. Readers can contact the corresponding author for additional information and data.

Code availability

The computer code will be available upon publication on a public Github repository93. The trained BoW models will be available upon publication in this repository. Due to the possibility of neural models storing extractable private data87,88, we are unable to share our trained neural models publicly, but may be able to share them with interested parties pending medical ethics and institutional approval, and will be interested in exploring federated learning approaches.

References

Singer, S. Psychosocial impact of cancer. in Psycho-Oncology (eds Goerling, U. & Mehnert, A.) 1–11 (Springer International Publishing, 2018) https://doi.org/10.1007/978-3-319-64310-6_1.

Lu, D. et al. Clinical diagnosis of mental disorders immediately before and after cancer diagnosis: A Nationwide Matched Cohort Study in Sweden. JAMA Oncol. 2, 1188–1196 (2016).

Schover, L. R. The impact of breast cancer on sexuality, body image, and intimate relationships. CA Cancer J. Clin. 41, 112–120 (1991).

Erker, C. et al. Impact of pediatric cancer on family relationships. Cancer Med. 7, 1680–1688 (2018).

Blanchard, C. G., Albrecht, T. L. & Ruckdeschel, J. C. The crisis of cancer: psychological impact on family caregivers. Oncology (Williston Park) 11, 189–194 (1997).

Pillay, B., Lee, S. J., Katona, L., Burney, S. & Avery, S. Psychosocial factors predicting survival after allogeneic stem cell transplant. Supportive Care Cancer 22, 2547–2555 (2014).

Pinquart, M. & Duberstein, P. R. Depression and cancer mortality: a meta-analysis. Psychol. Med. 40, 1797–1810 (2010).

Nayak, M. G. et al. Quality of life among cancer patients. Indian J. Palliat Care 23, 445–450 (2017).

Butow, P., Girgis, A. & Schofield, P. Psychosocial aspects of delivering cancer care: an update. Cancer Forum 37, 20–22 (2013).

John, D. A., Kawachi, I., Lathan, C. S. & Ayanian, J. Z. Disparities in perceived unmet need for supportive services among patients with lung cancer in the cancer care outcomes research and surveillance consortium. Cancer 120, 3178–3191 (2014).

So, W. K. W. et al. A mixed-methods study of unmet supportive care needs among head and neck cancer survivors. Cancer Nurs. 42, 67–78 (2019).

Alananzeh, I. M., Levesque, J. V., Kwok, C., Salamonson, Y. & Everett, B. The unmet supportive care needs of Arab Australian and Arab Jordanian cancer survivors: an international comparative survey. Cancer Nurs. 42, E51 (2019).

Horrill, T. C., Browne, A. J. & Stajduhar, K. I. Equity-oriented healthcare: what it is and why we need it in oncology. Curr. Oncol. 29, 186–192 (2022).

Ripamonti, C. I., Santini, D., Maranzano, E., Berti, M. & Roila, F. Management of cancer pain: ESMO clinical practice guidelines†. Ann. Oncol. 23, vii139–vii154 (2012).

Söllner, W. et al. How successful are oncologists in identifying patient distress, perceived social support, and need for psychosocial counselling? Br. J. Cancer 84, 179–185 (2001).

Newell, S., Sanson-Fisher, R. W., Girgis, A. & Bonaventura, A. How well do Medical oncologists perceptions’ reflect their patients’ reported physical and psychosocial problems? Cancer 83, 1640–1651 (1998).

Steele, R. & Fitch, M. I. Why patients with lung cancer do not want help with some needs. Support Care Cancer 16, 251–259 (2008).

Akcay, M., Etiz, D. & Celik, O. Prediction of survival and recurrence patterns by machine learning in gastric cancer cases undergoing radiation therapy and chemotherapy. Adv. Radiat. Oncol. 5, 1179–1187 (2020).

Deng, F. et al. Predict multicategory causes of death in lung cancer patients using clinicopathologic factors. Comput. Biol. Med. 129, 104161 (2021).

Ferroni, P. et al. Breast cancer prognosis using a machine learning approach. Cancers 11, 328 (2019).

Kaur, I. et al. An integrated approach for cancer survival prediction using data mining techniques. Comput. Intell Neurosci. 2021, 6342226 (2021).

Krauze, A. & Camphausen, K. Natural language processing – finding the missing link for oncologic data, 2022. Int. J. Bioinform. Intell. Comput. 1, 22–42 (2022).

Vaswani, A. et al. Attention is All you Need. in Advances in Neural Information Processing Systems 30 (Curran Associates, Inc., 2017).

ChatGPT: Optimizing Language Models for Dialogue. OpenAI https://openai.com/blog/chatgpt/ (2022).

Ashbury, F. D., Findlay, H., Reynolds, B. & McKerracher, K. A Canadian survey of cancer patients’ experiences: are their needs being met? J. Pain Symptom Manag. 16, 298–306 (1998).

Savard, J., Ivers, H. & Savard, M.-H. Capacity of the Edmonton Symptom Assessment System and the Canadian Problem Checklist to screen clinical insomnia in cancer patients. Support Care Cancer 24, 4339–4344 (2016).

Cuthbert, C. A., Boyne, D. J., Yuan, X., Hemmelgarn, B. R. & Cheung, W. Y. Patient-reported symptom burden and supportive care needs at cancer diagnosis: a retrospective cohort study. Support Care Cancer 28, 5889–5899 (2020).

Smrke, A. et al. Distinct features of psychosocial distress of adolescents and young adults with cancer compared to adults at diagnosis: patient-reported domains of concern. J. Adolesc. Young Adult Oncol. 9, 540–545 (2020).

Linden, W., Yi, D., Barroetavena, M. C., MacKenzie, R. & Doll, R. Development and validation of a psychosocial screening instrument for cancer. Health Qual. Life Outcomes 3, 54 (2005).

Linden, W. et al. The Psychosocial Screen for Cancer (PSSCAN): further validation and normative data. Health Qual. Life Outcomes 7, 16 (2009).

Watson, L. et al. Using autoregressive integrated moving average (ARIMA) modelling to forecast symptom complexity in an ambulatory oncology clinic: harnessing predictive analytics and patient-reported outcomes. Int. J. Environ. Res. Public Health 18, 8365 (2021).

Gara, M. A. et al. The role of complex emotions in inconsistent diagnoses of schizophrenia. J. Nerv. Mental Dis. 198, 609–613 (2010).

Zeberga, K. et al. A novel text mining approach for mental health prediction using Bi-LSTM and BERT model. Comput. Intell. Neurosci. 2022, e7893775 (2022).

Benítez-Andrades, J. A., Alija-Pérez, J.-M., Vidal, M.-E., Pastor-Vargas, R. & García-Ordás, M. T. Traditional machine learning models and bidirectional encoder representations from transformer (BERT)–based automatic classification of tweets about eating disorders: algorithm development and validation study. JMIR Med. Inform. 10, e34492 (2022).

Abbe, A., Grouin, C., Zweigenbaum, P. & Falissard, B. Text mining applications in psychiatry: a systematic literature review. Int. J. Methods Psychiatr. Res. 25, 86–100 (2016).

Wu, C.-S., Kuo, C.-J., Su, C.-H., Wang, S. & Dai, H.-J. Using text mining to extract depressive symptoms and to validate the diagnosis of major depressive disorder from electronic health records. J. Affect. Disord. 260, 617–623 (2020).

Fernandes, A. C. et al. Identifying suicide ideation and suicidal attempts in a psychiatric clinical research database using natural language processing. Sci. Rep. 8, 7426 (2018).

Dai, H.-J. et al. Deep learning-based natural language processing for screening psychiatric patients. Front. Psychiatry 11, 533949 (2021).

Ford, E., Carroll, J. A., Smith, H. E., Scott, D. & Cassell, J. A. Extracting information from the text of electronic medical records to improve case detection: a systematic review. J. Am. Med. Inform. Assoc. 23, 1007–1015 (2016).

Rumshisky, A. et al. Predicting early psychiatric readmission with natural language processing of narrative discharge summaries. Transl. Psychiatry 6, e921 (2016).

Boag, W. et al. Hard for humans, hard for machines: predicting readmission after psychiatric hospitalization using narrative notes. Transl. Psychiatry 11, 1–6 (2021).

Filannino, M., Stubbs, A. & Uzuner, O. Symptom severity prediction from neuropsychiatric clinical records: Overview of 2016 CEGS N-GRID Shared Tasks Track 2. J. Biomed. Inform. 75 Suppl, S62–S70 (2017).

Karystianis, G. et al. Automatic mining of symptom severity from psychiatric evaluation notes. Int. J. Methods Psychiatr. Res. 27, e1602 (2018).

Clark, C., Wellner, B., Davis, R., Aberdeen, J. & Hirschman, L. Automatic classification of RDoC positive valence severity with a neural network. J. Biomed. Inform. 75 Suppl, S120–S128 (2017).

Tran, T. & Kavuluru, R. Predicting mental conditions based on “history of present illness” in psychiatric notes with deep neural networks. J. Biomed. Inform. 75, S138–S148 (2017).

Rios, A. & Kavuluru, R. Convolutional neural networks for biomedical text classification: application in indexing biomedical articles. in Proceedings of the 6th ACM Conference on Bioinformatics, Computational Biology and Health Informatics 258–267 https://doi.org/10.1145/2808719.2808746 (ACM, 2015).

Rios, A. & Kavuluru, R. Ordinal convolutional neural networks for predicting RDoC positive valence psychiatric symptom severity scores. J. Biomed. Inform. 75 Suppl, S85–S93 (2017).

Dai, H.-J. & Jonnagaddala, J. Assessing the severity of positive valence symptoms in initial psychiatric evaluation records: Should we use convolutional neural networks? PLoS One 13, e0204493 (2018).

Posada, J. D. et al. Predictive modeling for classification of positive valence system symptom severity from initial psychiatric evaluation records. J. Biomed. Inform. 75, S94–S104 (2017).

Eglowski, S. CREATE: Clinical Record Analysis Technology Ensemble (California Polytechnic State University, 2017). https://doi.org/10.15368/theses.2017.60.

Banerjee, I., Bozkurt, S., Caswell-Jin, J. L., Kurian, A. W. & Rubin, D. L. Natural language processing approaches to detect the timeline of metastatic recurrence of breast cancer. JCO Clin. Cancer Inform. 1–12 https://doi.org/10.1200/CCI.19.00034 (2019).

Rajput, K., Chetty, G. & Davey, R. Performance analysis of deep neural models for automatic identification of disease status. in 2018 International Conference on Machine Learning and Data Engineering (iCMLDE) 136–141. https://doi.org/10.1109/iCMLDE.2018.00033 (2018).

Liang, H. et al. Evaluation and accurate diagnoses of pediatric diseases using artificial intelligence. Nat. Med. 25, 433–438 (2019).

Wang, H., Li, Y., Khan, S. A. & Luo, Y. Prediction of breast cancer distant recurrence using natural language processing and knowledge-guided convolutional neural network. Artif. Intell. Med. 110, 101977 (2020).

Nunez, J.-J., Leung, B., Ho, C., Bates, A. T. & Ng, R. T. Predicting the survival of patients with cancer from their initial oncology consultation document using natural language processing. JAMA Netw. Open 6, e230813 (2023).

Lee, Y. et al. Applications of machine learning algorithms to predict therapeutic outcomes in depression: a meta-analysis and systematic review. J. Affect. Disord. 241, 519–532 (2018).

Nunez, J.-J. et al. Replication of machine learning methods to predict treatment outcome with antidepressant medications in patients with major depressive disorder from STAR*D and CAN-BIND-1. PLoS One 16, e0253023 (2021).

McHugh, C. M. & Large, M. M. Can machine-learning methods really help predict suicide? Curr. Opin. Psychiatry 33, 369–374 (2020).

Uchida, M. et al. Can machine learning identify childhood characteristics that predict future development of bipolar disorder a decade later? J. Psychiatr. Res. 156, 261–267 (2022).

Patzer, R. E., Kaji, A. H. & Fong, Y. TRIPOD reporting guidelines for diagnostic and prognostic studies. JAMA Surg. 156, 675–676 (2021).

Jurafsky, D. & Martin, J. H. Speech and Language Processing (Draft)802–811 (Prentice Hall, 2015).

Zhang, A., Lipton, Z. C., Li, M. & Smola, A. J. Dive into Deep Learning. arXiv:2106.11342 [cs] (2021).

Manning, C., Raghavan, P. & Schuetze, H. Introduction to Information Retrieval (Cambridge University Press, 2009).

Kim, Y. Convolutional Neural Networks for Sentence Classification. in Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) 1746–1751 (Association for Computational Linguistics, 2014). https://doi.org/10.3115/v1/D14-1181.

Adhikari, A., Ram, A., Tang, R. & Lin, J. Rethinking complex neural network architectures for document classification. in Proceedings of the 2019 Conference of the North 4046–4051 (Association for Computational Linguistics, 2019). https://doi.org/10.18653/v1/N19-1408.

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv:1810.04805 [cs] (2019).

Huang, K., Altosaar, J. & Ranganath, R. ClinicalBERT: Modeling Clinical Notes and Predicting Hospital Readmission. arXiv:1904.05342 [cs] (2019).

Lee, J. et al. BioBERT: a pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 36, 1234–1240 (2020).

Beltagy, I., Peters, M. E. & Cohan, A. Longformer: The Long-Document Transformer. arXiv:2004.05150 [cs], (2020).

Shalev-Shwartz, S. & Ben-David, S. Understanding Machine Learning: From Theory to Algorithms (Cambridge University Press, 2014). https://doi.org/10.1017/CBO9781107298019.

van der Goot, R. We Need to Talk About train-dev-test Splits. in Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing 4485–4494 (Association for Computational Linguistics, Online and Punta Cana, 2021). https://doi.org/10.18653/v1/2021.emnlp-main.368.

Kokhlikyan, N. et al. Captum: a unified and generic model interpretability library for PyTorch. arXiv:2009.07896 [cs, stat] (2020).

Sundararajan, M., Taly, A. & Yan, Q. Axiomatic Attribution for Deep Networks. arXiv:1703.01365 [cs] (2017).

Grootendorst, M. BERTopic: Neural topic modeling with a class-based TF-IDF procedure. Preprint at https://doi.org/10.48550/arXiv.2203.05794 (2022).

Jeon, E., Yoon, N. & Sohn, S. Y. Exploring new digital therapeutics technologies for psychiatric disorders using BERTopic and PatentSBERTa. Technol. Forecast. Soc. Change 186, 122130 (2023).

Ng, Q. X., Yau, C. E., Lim, Y. L., Wong, L. K. T. & Liew, T. M. Public sentiment on the global outbreak of monkeypox: an unsupervised machine learning analysis of 352,182 Twitter posts. Public Health 213, 1–4 (2022).

McInnes, L., Healy, J. & Melville, J. UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction. Preprint at https://doi.org/10.48550/arXiv.1802.03426 (2020).

McInnes, L., Healy, J. & Astels, S. hdbscan: hierarchical density based clustering. J. Open Sour. Softw. 2, 205 (2017).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Grootendorst, M. KeyBERT: Minimal keyword extraction with BERT. https://doi.org/10.5281/zenodo.4461265 (2020).

OpenAI. ChatGPT (Version 3.5 Turbo). (2023).

Carbonell, J. & Goldstein, J. The use of MMR, diversity-based reranking for reordering documents and producing summaries. in Proceedings of the 21st Annual International ACM SIGIR Conference On Research And Development in Information Retrieval 335–336 (1998).

Hastie, T., Tibshirani, R. & Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction 2nd edn (Springer Science & Business Media, 2009).

Gatchel, R. J. & Turk, D. C. Psychosocial Factors in Pain: Critical Perspectives (Guilford Press, 1999).

Mostafaei, S. et al. Explanation of somatic symptoms by mental health and personality traits: application of Bayesian regularized quantile regression in a large population study. BMC Psychiatry 19, 207 (2019).

Roth, P., Wick, W. & Weller, M. Steroids in neurooncology: actions, indications, side-effects. Curr. Opin. Neurol. 23, 597 (2010).

Ponomareva, N., Bastings, J. & Vassilvitskii, S. Training Text-to-Text Transformers with Privacy Guarantees. in Findings of the Association for Computational Linguistics: ACL 2022 2182–2193 (Association for Computational Linguistics, 2022). https://doi.org/10.18653/v1/2022.findings-acl.171.

Carlini, N. et al Extracting training data from large language models. in 30th USENIX Security Symposium (USENIX Security 21), 2633–2650 (USENIX, 2021).

Zaheer, M. et al. Big Bird: Transformers for Longer Sequences. arXiv:2007.14062 [cs, stat] (2021).

Li, Y., Wehbe, R. M., Ahmad, F. S., Wang, H. & Luo, Y. A comparative study of pretrained language models for long clinical text. J. Am. Med. Inform. Assoc. 30, 340–347 (2023).

Mitchell, A. J. et al. Prevalence of depression, anxiety, and adjustment disorder in oncological, haematological, and palliative-care settings: a meta-analysis of 94 interview-based studies. Lancet Oncol 12, 160–174 (2011).

Robertson, C. et al. Diverse patients’ attitudes towards Artificial Intelligence (AI) in diagnosis. PLoS Dig. Health 2, e0000237 (2023).

Nunez, J.-J. jjnunez11/scar_nlp_psych: v1.0.0. Zenodo https://doi.org/10.5281/zenodo.10864482 (2024).

Acknowledgements

This work was funded in part by an unrestricted research grant from the BC Cancer Foundation with the funds originating from the Pfizer Innovation Fund and in part by a UBC Institute of Mental Health Marshall Fellowship received by J.J.N.

Author information

Authors and Affiliations

Contributions

J.J.N., A.T.B., and R.T.N. conceived and designed this work. All authors assisted with acquiring, analysis, and interpreting data, J.J.N. and A.T.B. drafted the initial manuscript, with all authors assisting with review and revision. J.J.N. programmed and ran the computer code. A.T.B. obtained funding. B.L., C.H., and A.T.B. provided administrative and technical support. A.T.B. and R.T.N. provided supervision.

Corresponding author

Ethics declarations

Competing interests

The authors declare the following competing interests: J.J.N. reported receiving unrestricted grant funding from Pfizer Canada through the Pfizer Innovation Fund during the conduct of the study. B.L. reported receiving personal fees from AstraZeneca outside the submitted work. C.H. reported personal fees from AbbVie, Amgen Inc, Bayer AG, Bristol-Myers Squibb Company, Eisai Co, Ltd, Janssen Pharmaceuticals, Jazz Pharmaceuticals PLC, Merck & Co, Inc, Novartis AG, and Takeda Pharmaceutical Company Limited and grant funding from AstraZeneca, EMD Serono, and F. Hoffmann–La Roche AG outside the submitted work. A.T.B. reported receiving unrestricted grant funding from Pfizer Inc to BC Cancer allocated to the Psychiatry Department during the conduct of the study and participating on an advisory panel in 2019 for Eisai Co, Ltd, outside the submitted work. R.T.N. declares no competing interests.

Peer review

Peer review information

Communications Medicine thanks Julia Ive and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nunez, JJ., Leung, B., Ho, C. et al. Predicting which patients with cancer will see a psychiatrist or counsellor from their initial oncology consultation document using natural language processing. Commun Med 4, 69 (2024). https://doi.org/10.1038/s43856-024-00495-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s43856-024-00495-x