Abstract

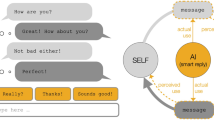

As conversational agents powered by large language models become more human-like, users are starting to view them as companions rather than mere assistants. Our study explores how changes to a person’s mental model of an AI system affect their interaction with the system. Participants interacted with the same conversational AI but were influenced by different priming statements regarding the AI’s inner motives: caring, manipulative or no motives. Here we show that those who perceived a caring motive for the AI also perceived it as more trustworthy, empathetic and better-performing, and that the effects of priming and initial mental models were stronger for a more sophisticated AI model. Our work also indicates a feedback loop in which the user and AI reinforce the user’s mental model over a short time; further work should investigate long-term effects. The research highlights that how AI systems are introduced can notably affect the interaction and how the AI is experienced.

Data availability

The raw data, including all survey results and conversation transcripts, are available in a GitHub repository. Source Data are provided with this paper.

Code availability

The code is available in the same GitHub repository as the data. The DOI for the code is https://doi.org/10.5281/zenodo.8136979. The repository includes data-processing and visualization code as well as the HTML/CSS/JavaScript code for the chatbot interface. The API credentials used to access GPT-3 and Google Sheets have been redacted and must be replaced with your own to run the code.

Acknowledgements

Our paper benefited greatly from the valuable feedback provided by the reviewers, and we extend our gratitude for their contribution. We thank J. Liu, data science specialist at the Institute for Quantitative Social Science, Harvard University, for reviewing our statistical analysis. We thank M. Groh, Z. Epstein, N. Whitmore, S. Chan, Z. Yan and the MIT Media Lab Fluid Interfaces group members for reviewing and giving constructive feedback on our paper. We thank MIT Media Lab and KBTG for supporting P.P., and the Harvard-MIT Health Sciences and Technology program and Accenture for supporting R.L.

Author information

Contributions

P.P. and R.L. contributed equally to this work. They conceived the research idea, designed and conducted experiments, analysed and interpreted data, and participated in writing and editing the paper. P.M. and E.F. provided supervision and guidance throughout the project, and contributed to the writing and reviewing of the paper. All authors approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Sangsu Lee and the other, anonymous reviewer(s), for their contribution to the peer review of this work. Primary Handling Editor: Jacob Huth, in collaboration with the Nature Machine Intelligence team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Sections 1–3 and Figs. 1–5.

Source data

Source Data Fig. 2

The assigned group and perceived motives for each participant in the GPT-3 experiment.

Source Data Fig. 3

Processed conversation data, GPT-3 and ELIZA combined.

Source Data Fig. 4

Processed survey data, GPT-3 and ELIZA combined.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Pataranutaporn, P., Liu, R., Finn, E. et al. Influencing human–AI interaction by priming beliefs about AI can increase perceived trustworthiness, empathy and effectiveness. Nat Mach Intell 5, 1076–1086 (2023). https://doi.org/10.1038/s42256-023-00720-7

DOI: https://doi.org/10.1038/s42256-023-00720-7