Abstract

Over the last few decades, stochastic thermodynamics has emerged as a framework to study the thermodynamics of small-scaled systems. The relation between entropy production and precision is one of the most prominent research topics in this field. In this paper, I answer the question how much dissipation is needed to follow a pre-determined trajectory. This will be done by deriving a trade-off relation between how precisely a mesoscopic system can follow a pre-defined trajectory and how much the system dissipates. In the high-precision limit, the minimal amount of dissipation is inversely proportional to the expected deviation from the pre-defined trajectory. Furthermore, I will derive the protocol that maximizes the precision for a given amount of dissipation. The optimal time-dependent force field is a conservative energy landscape which combines a shifted version of the initial energy landscape and a quadratic energy landscape. The associated time-dependent probability distribution conserves its shape throughout the optimal protocol. Potential applications are discussed in the context of bit erasure and electronic circuits.

Similar content being viewed by others

Introduction

The dynamics of mesoscopic systems are heavily influenced by thermal fluctuations. Controlling those systems generally incurs a thermodynamic cost. Over the last decade, several general bounds on this cost have been derived within the framework of stochastic thermodynamics1,2. For example, the thermodynamic uncertainty relation states that the signal-to-noise ratio of any thermodynamic flux is bounded by the dissipation rate (i.e., entropy production rate) of the system3,4,5,6,7,8,9,10,11; the thermodynamic speed limit states that the speed at which a system can be transferred from an initial to a specific final finite state is bounded by the dissipation rate12,13,14,15,16,17,18,19,20,21; the dissipation-time uncertainty relation bounds the total amount of dissipation for any first-passage time of far-from-equilibrium systems22,23,24; and an entropic bound based on a communication channel interpretation25. These bounds have led to several applications, such as assessments of the efficiency of cellular processes and methods to infer the dissipation rate from measurements of thermodynamic fluxes26,27,28,29,30.

Most of the aforementioned entropic bounds focus either on the precision associated with thermodynamic fluxes, or on limits on the speed at which one can complete a process. Thermodynamic limits on the precision at which one can follow an entire trajectory have, to the best of my knowledge, not been thoroughly addressed. The central goal of this paper will be to close this gap by answering the question: ‘How precisely can a system follow a pre-defined trajectory while keeping the total expected amount of dissipation associated with the process below a fixed limit?’, for continuous-state Markov systems. This will be done by using methods from optimal transport theory12,31,32,33 to derive a closed expression for the minimal expected deviation between the desired trajectory and the actual trajectory, given a fixed amount of entropy production and provided that one has full control over the system. The collection of optimal solutions for different values of total expected dissipation, also known as a Pareto front34,35, determines the minimal average deviation from the desired trajectory as a function of dissipation, effectively rendering a trade-off relation between precision and dissipation, cf. Eqs. (5)-(7) below. This trade-off relation generally has a rather complicated form but simplifies in the small-deviation limit. In this limit, the minimal expected deviation from the desired trajectory is inversely proportional to the amount of dissipation in the process.

The next section of this paper introduces the basic notation and reviews some results of stochastic thermodynamics that will then be used to derive the precision-dissipation trade-off relation and the associated driving protocols. Subsequently, I will show applications of the framework in information processing and in electric circuits. The paper ends with a discussion on potential applications and future research directions.

Results

Stochastic thermodynamics

Throughout this paper, I will focus on n-dimensional continuous Markov systems whose state can be described by a variable x = (x1, x2, . . , xn). The probability, p(x, t), for the system to be in state x at time t satisfies an overdamped Fokker-Planck equation:

where v(x, t) is the probability flux, given by,

Here F(x, t) is the force field that the system experiences, D is the diffusion coefficient, kB is the Boltzmann constant, and T is the temperature of the environment. Throughout this paper, T and D are assumed to be constant. The Fokker–Planck equation, Eq. (2), can describe a broad class of systems including the position of a colloidal particle in a potential energy landscape36, the distribution of electrical charges across the conductors of a linear electrical circuit37, the state of a spin system38, or the concentrations of molecular species in a chemical reaction network39. Throughout this paper, I will assume that one has full control over the force field at all times, unless specified otherwise. This means that one can construct any time-evolution for the probability distribution p(x, t) (cf. Supplementary note 1).

The goal of this paper is to determine the optimal time-dependent force field, such that the state of the system, x, follows a given pre-defined trajectory X(t) as closely as possible between an initial and a final time, t = 0 and t = tf. The expected deviation from X(t) can be quantified by a function ϵ, defined as

i.e., the expected squared distance from the pre-defined trajectory integrated over the duration of the protocol. Meanwhile, one also wants to minimize the amount of dissipation. Stochastic thermodynamics dictates that the average amount of entropy dissipated throughout the process is given by1

Note that this expected amount of dissipation is always positive, in accordance with the second law of thermodynamics.

Precision-dissipation trade-off

The central goal of this paper will be to minimize the expected deviation, ϵ, while also minimizing the expected amount of dissipation, ΔiS, by choosing an optimal protocol for the force field, F(x, t). ϵ is generally minimized by immediately forcing the probability distribution to be peaked around X(t), which leads to a diverging amount of dissipation. Meanwhile, the total dissipation can be set to zero, by setting \({{{{{{{\bf{F}}}}}}}}({{{{{{{\bf{x}}}}}}}},t)={k}_{B}T\nabla \ln (p({{{{{{{\bf{x}}}}}}}},0))\) at all times. One can use Eqs. (1) and (4) to show that this force fields leads to a stationary state with zero dissipation, but in this case ϵ will generally be large. In other words, the minimization of ϵ and ΔiS, are mutually incompatible and there is no unique optimal trajectory. Therefore, the focus of this paper will be on minimizing the expected deviation ϵ for a fixed amount of dissipation ΔiS, or vice versa. Such a minimum is known as a Pareto-optimal solution. Although Pareto-optimality is a often-used technique in the fields of economics and engineering34, it has only recently been introduced in the context of stochastic thermodynamics35. The collection of all Pareto-optimal solutions is known as the Pareto front. For any point on the Pareto front, one can only lower the expected deviation, ϵ, by increasing the amount of dissipation and vice versa. In supplementary note 1 I show that the Pareto front can generally be written as a parametric set of equations:

with

and 〈.〉0 stands for the average taken over the initial probability distribution p(x, 0), \(\left\langle g({{{{{{{\bf{x}}}}}}}})\right\rangle =\int\nolimits_{-\infty }^{\infty }d{{{{{{{\bf{x}}}}}}}}p({{{{{{{\bf{x}}}}}}}},0)g({{{{{{{\bf{x}}}}}}}})\), for any test function g(x). The full Pareto front can be found by varying λ between zero and infinity, which corresponds to changing the value of ΔiS or ϵ, under which one minimizes the other quantity. This Pareto front serves as a trade-off relation between the amount of dissipation, ΔiS and the precision, ϵ: once one reaches the Pareto front one can only further decrease ϵ by increasing ΔiS and vice versa. Although the Pareto front can only be reached under the correct level of control, Eqs. (5)–(7) still serve as a lower bound for systems with limited levels of control: for each value of ϵ, Eq. (6) serves as a lower bound on the amount of dissipation. The explicit bound is written in Supplementary note 1, c.f., Eqs. (A.25)-(A.26). This Pareto front is the central result of this paper.

It should be stressed that this bounds the precision of any continuous-state Markov system following a time-dependent trajectory. Furthermore, the Pareto front only depends on the initial state of the system through its first two moments, \({\langle {{{{{{{{\bf{x}}}}}}}}}^{2}\rangle }_{0}=\int\nolimits_{-\infty }^{\infty }d{{{{{{{\bf{x}}}}}}}}\,p({{{{{{{\bf{x}}}}}}}},0){{{{{{{{\bf{x}}}}}}}}}^{2}\) and \({\langle {{{{{{{\bf{x}}}}}}}}\rangle }_{0}=\int\nolimits_{-\infty }^{\infty }d{{{{{{{\bf{x}}}}}}}}\,p({{{{{{{\bf{x}}}}}}}},0){{{{{{{\bf{x}}}}}}}}\). This shows that the Pareto front for very distinctive problems can have exactly the same shape. In particular, one can map any Pareto front on that of a Gaussian system with the same initial average and variance.

In most relevant applications, one is primarily interested in reaching a very high level of precision, i.e., very small ϵ. In this limit, the expression for the Pareto front simplifies to (c.f., Supplementary note 3)

where {jumps} stands for the collection of discontinuities in the protocol, \({\lim }_{t\to {t}_{i}^{+}}{{{{{{{\bf{X}}}}}}}}(t)-{\lim }_{t\to {t}_{i}^{-}}{{{{{{{\bf{X}}}}}}}}(t)\equiv \Delta {{{{{{{{\bf{X}}}}}}}}}_{i}\ne 0\). Therefore, one can conclude that,- in the high-precision limit, the minimal expected amount of dissipation is inversely proportional to the expected deviation from the desired trajectory. The bound Eq. (8) is only strictly true in the limit ϵ → 0, but it can serve as a good approximation to the exact Pareto front, Eqs. (5)–(7), for sufficiently low values of ϵ, as will be shown in the examples below.

So far, I have focused on the expression of the Pareto front, but it is also possible to obtain the associated optimal protocols for the force field and the probability distribution. Firstly, the optimal time-dependent force field is given by (c.f., Supplementary Note 2)

This force field is of a gradient form, i.e., the optimal protocol only involves a conservative energy landscape and non-conservative forces will generally not improve the precision of the driving without inducing extra dissipation. This is in stark contrast to discrete-state systems, where non-conservative forces are generally necessary to minimize dissipation40. Equation (9) also reveals the level of control needed to reach the Pareto front: the optimal energy landscape is the sum of a time-dependent harmonic oscillator and an energy landscape that has the same shape as the equilibrium energy landscape at t = 0, \(-{k}_{B}T\ln p({{{{{{{\bf{x}}}}}}}},0)\). This means that if the system is initially in a Gaussian state, the optimal energy landscape, Eq. (9), corresponds to an harmonic oscillator at all times. This expression also gives a clear interpretation to the functions a(λ, t) and b(λ, t): a(λ, t) is a decreasing function, independent of the target trajectory X(t), which leads to a tightening of the energy landscape throughout the protocol, while b(λ, t) determines the positional shift of the energy landscape.

It is also possible to calculate the probability distribution associated with the state of the system at all times (c.f., Supplementary note 2):

In other words, the protocol that minimizes ϵ for a given value of ΔiS conserves the shape of the probability distribution associated with the state of the system at all times. This result is in agreement with the aforementioned interpretation that a(λ, t) is responsible for the narrowing of the distribution while b(λ, t) leads to a positional shift.

Applications

The general bound and optimal protocols derived above can be applied to a broad class of systems. To illustrate this, I will look at two examples (cf. Fig. 1): information processing, where this framework allows to optimize arbitrary complicated bit operations, and electronic circuits, which serve as an ideal setting to check how well systems under limited control can approach the Pareto front.

Computational bits can be modeled using a one-dimensional Fokker-Planck equation with a double-well potential41,42,

where E0, x0, and c are free parameters. The bit is then said to be in state 0 if x < 0 and in state 1 if x > 0. In equilibrium, the bit is equally likely to be in state 0 and to be in state 1, p(x < 0) = p(x > 0) = 1/2. If one wants to erase the bit to state 0, p(x < 0) = 1, one needs to perform an amount of work, W, to the system by modulating U(x, t). The expectation value of W is bounded by Landauer’s limit, \(\left\langle W\right\rangle \ge {k}_{B}T\ln 2\). Over the last decade, several methods have been derived to extend Landauer’s principle to finite-time processes12,16,17,43,44,45,46, where one can show that one needs to put in an extra amount of work, corresponding to the dissipation. With the framework derived in this paper it is possible to extend these results to more complicated bit operations. In particular, I will focus on the precision-dissipation trade-off associated with erasing a bit to state 0 and subsequently flipping the bit to state 1, c.f. Fig. 1a. This corresponds to

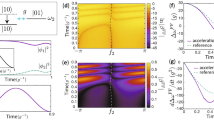

and p(x, 0) corresponds to the equilibrium distribution associated with U0(x). The resulting Pareto front is shown in Fig. 2a (c.f., Supplementary note 4 for detailed calculations). One can see that the high-precision limit, Eq. (8) is in very good agreement with the exact Pareto front for \(\epsilon \, \lesssim \, 0.5{t}_{f}{x}_{0}^{2}\), but no longer serves as a lower bound for larger values of ϵ. The explicit protocols in the low-deviation (\(\epsilon /({t}_{f}{x}_{0}^{2})=0.5\)) and the high-deviation (\(\epsilon /({t}_{f}{x}_{0}^{2})=2\)) limit are shown in the supplementary videos 1–2. Furthermore, Supplementary figure 2 shows a(λ, t) and b(λ, t) for different levels of precision. One can verify that the positional shift, b(λ, t) follows X(t) closer at higher precision and mainly deviates around the jump in X(t). Meanwhile the probability distribution tightens exponentially fast, as illustrated by the decay of a(λ, t).

a Pareto front for erasure plus bit-flip, with \(c=\sqrt{2}\), E0 = 2kBT and \(c=\sqrt{2}\). The dashed green line corresponds to Eq. (8). b the electrical circuit, as depicted in Fig. 1. b Pareto front for an electrical circuit with time-dependent voltage source Vs(t), resistor R and capacitor C, with tf = RC/3 and \(C{V}_{0}^{2}=2{k}_{B}T\). The green region corresponds to the values of ϵ and ΔiS that can be reached by only controlling the voltage source, whereas the red region corresponds to the theoretical Pareto front under full control. The dashed red line corresponds to Eq. (8).

There are many systems, where one does not have full control over the driving protocol. This can make it impossible to implement the optimal protocol, Eq. (8), and saturate the bound, Eqs. (5)–(7). It is not a priori clear how close the Pareto front under limited control is to the one under full control. To test this, I will now turn to the electronic circuit shown in Fig. 1b, where an observer controls a time-dependent voltage source Vs(t) connected to a resistor, with resistance R, and a capacitor, with capacitance C. One can then use the time-dependent voltage source to make sure that voltage over the capacitor, vC, follows a pre-defined trajectory. For small-scaled systems, this voltage will generally fluctuate due to thermal noise. Control over Vs(t) does not allow for any arbitrary force field F(vc, t), as will be shown below. Therefore, the minimal deviation for a given amount of dissipation will not saturate the Pareto front.

The voltage fluctuations associated with thermal noise are given by the Johnson-Nyquist formula37. In this case, one can show that the voltage over the capacitor satisfies:

This corresponds to a Fokker-Planck equation, similar to Eq. (1), with F(vc, t) = C(Vs(t) − vc) and D = kBT/(RC2). The voltage source can now be used to apply a time-dependent voltage over the capacitor. Here, I will focus on a protocol where one tries to charge the capacitor

Initially, the capacitor is assumed to be in equilibrium with the voltage source,

With this boundary conditions, one can write a general expression for the probability distribution at all times:

with

This expression can be verified by plugging it in into Eq. (13). One can use the general framework for thermodynamics of electronic circuits to calculate the total amount of dissipation during the process37. This gives an expression that corresponds exactly to Eq. (4). Furthermore, the precision is defined in the same way as in Eq. (3), with X(t) = VT(t).

Both the theoretical precision-dissipation Pareto front under full control, Eq. (5)–(7) and the Pareto front when one only has control over the voltage source are calculated explicitly in supplementary notes 4 and 5 respectively. The Pareto fronts are shown in Fig. 2b, together with the high-precision bound, Eq. (8). The green region corresponds to the values of ϵ and ΔiS that can be reached by controlling the voltage source, whereas the red region corresponds to values of ϵ and ΔiS that can only be reached under full control. One can verify from Fig. 2b that the high-precision region cannot be reached by only controlling the voltage source.

The supplemental videos 3–6 show optimal protocols both under full control (for ϵ = 0.05 and ϵ = 0.5) and under limited control (for ϵ = 0.5 and ϵ = 0.6). One can see that the full-control protocol primarily focuses on avoiding big fluctuations while the limited control protocol focuses more on optimizing the average value. Furthermore, one can see that the optimal protocol mainly deviates from X(t) at the end of the protocol. This can also be seen in Supplementary fig. 2.

Discussion

In conclusion, this paper derives a general expression for the minimal thermodynamic cost associated with following a pre-defined trajectory at a given precision, cf. Eqs. (5)–(6). This bound holds for all systems that can be described by an overdamped Langevin system and becomes particularly useful in the high-precision limit where ϵ is at least inversely proportional to the amount of dissipation. This means that the results can be applied to a broad class of biological and chemical systems, alongside the examples in information processing and electronic systems discussed above.

This work opens up several potential directions for future research. One particularly interesting application would be to use the Pareto front to infer a lower bound on the amount of entropy production from experimental measurements of the precision. Indeed, by calculating ϵ with respect to any choice of X(t), one can use the Pareto front derived in this paper to infer a lower bound on the dissipation rate of the experimental system.

Although there exists a broad range of methods to infer dissipation for steady-state systems29,47,48,49, extensions to time-dependent systems often involve response functions8,50, time-inverted dynamics9, or other quantities that might not be experimentally accessible12,15,51. Therefore, the number of methods to estimate the entropy production in time-dependent systems from a single set of measurements is rather limited33,52,53 and it would be interesting to quantitatively compare our bound with these methods.

To do this, one can look at a broad range of experimental systems, including micro-electronic systems similar to the example discussed in this paper54, or colloidal particles trapped by optical tweezers55,56. It might also be possible to use the Pareto front to improve model discovery techniques, such as Stochastic Force Inference25 and Sparse Identification of Nonlinear Dynamics57, where measurements of ϵ and ΔiS might put additional constraints on the choice of models. Another interesting application would be to use the bound as a quantitative test to study how efficient a choice of control parameters is.

There are also several ways in which the results from this paper can be extended. For example, one can use the same methodology to derive lower bounds for the entropy production associated with minimizing other observables. It might also be possible to extend the results of this paper to discrete state systems and systems with strong quantum effects, using similar ideas as in the known extensions of the thermodynamic speed limit15. It would also be interesting to compare the results of this paper with similar Pareto fronts under limited control. One particularly interesting type of control is counterdiabatic driving, in which the system is forced to follow an adiabatic trajectory58. This has important applications in several fields such as quantum control59, information processing46, and population genetics60. It would be interesting to see whether one can derive a similar precision-dissipation trade-off within such a limited-control framework. This might be possible by redoing the derivation in Supplementary note 1 where the control constraints lead to extra terms in the Lagrangian, Eq. (A.1). Furthermore, it has been shown that Pareto fronts under limited control can have interesting phenomenology such as phase-transitions35.

Data availability

No datasets were generated or analyzed in this study.

References

Seifert, U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 75, 126001 (2012).

Peliti, L. and Pigolotti, S. Stochastic Thermodynamics: An Introduction (Princeton University Press, 2021).

Barato, A. C. & Seifert, U. Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 114, 158101 (2015).

Gingrich, T. R., Horowitz, J. M., Perunov, N. & England, J. L. Dissipation bounds all steady-state current fluctuations. Phys. Rev. Lett. 116, 120601 (2016).

Proesmans, K. & Van den Broeck, C. Discrete-time thermodynamic uncertainty relation. EPL (Europhys. Lett.) 119, 20001 (2017).

Hasegawa, Y. & Van Vu, T. Fluctuation theorem uncertainty relation. Phys. Rev. Lett. 123, 110602 (2019).

Timpanaro, A. M., Guarnieri, G., Goold, J. & Landi, G. T. Thermodynamic uncertainty relations from exchange fluctuation theorems. Phys. Rev. Lett. 123, 090604 (2019).

Koyuk, T. & Seifert, U. Operationally accessible bounds on fluctuations and entropy production in periodically driven systems. Phys. Rev. Lett. 122, 230601 (2019).

Proesmans, K. & Horowitz, J. M. Hysteretic thermodynamic uncertainty relation for systems with broken time-reversal symmetry. J. Stat. Mech.: Theory Exp. 2019, 054005 (2019).

Harunari, P. E., Fiore, C. E. & Proesmans, K. Exact statistics and thermodynamic uncertainty relations for a periodically driven electron pump. J. Phys. A: Math. Theor. 53, 374001 (2020).

Pal, S., Saryal, S., Segal, D., Mahesh, T. S. & Agarwalla, B. K. Experimental study of the thermodynamic uncertainty relation. Phys. Rev. Res. 2, 022044 (2020).

Aurell, E., Mejía-Monasterio, C. & Muratore-Ginanneschi, P. Optimal protocols and optimal transport in stochastic thermodynamics. Phys. Rev. Lett. 106, 250601 (2011).

Aurell, E., Gawedzki, K., Mejia-Monasterio, C., Mohayaee, R. & Muratore-Ginanneschi, P. Refined second law of thermodynamics for fast random processes. J. Stat. Phys. 147, 487–505 (2012).

Sivak, D. A. & Crooks, G. E. Thermodynamic metrics and optimal paths. Phys. Rev. Lett. 108, 190602 (2012).

Shiraishi, N., Funo, K. & Saito, K. Speed limit for classical stochastic processes. Phys. Rev. Lett. 121, 070601 (2018).

Proesmans, K., Ehrich, J. & Bechhoefer, J. Finite-time landauer principle. Phys. Rev. Lett. 125, 100602 (2020).

Proesmans, K., Ehrich, J. & Bechhoefer, J. Optimal finite-time bit erasure under full control. Phys. Rev. E 102, 032105 (2020).

Ito, S. & Dechant, A. Stochastic time evolution, information geometry, and the cramér-rao bound. Phys. Rev. X 10, 021056 (2020).

Zhen, Y.-Z., Egloff, D., Modi, K. & Dahlsten, O. Universal bound on energy cost of bit reset in finite time. Phys. Rev. Lett. 127, 190602 (2021).

Van Vu, T. & Saito, K. Finite-time quantum landauer principle and quantum coherence. Phys. Rev. Lett. 128, 010602 (2022).

Dechant, A. Minimum entropy production, detailed balance and wasserstein distance for continuous-time markov processes, J. Phys. A: Math.Theor. 55, 094001 (2022).

Falasco, G. & Esposito, M. Dissipation-time uncertainty relation. Phys. Rev. Lett. 125, 120604 (2020).

Kuznets-Speck, B. and Limmer, D. T. Dissipation bounds the amplification of transition rates far from equilibrium, Proc. Natl Acad. Sci. USA 118, e2020863118 (2021).

Yan, L.-L. et al. Experimental verification of dissipation-time uncertainty relation. Phys. Rev. Lett. 128, 050603 (2022).

Frishman, A. & Ronceray, P. Learning force fields from stochastic trajectories. Phys. Rev. X 10, 021009 (2020).

Pietzonka, P., Barato, A. C. & Seifert, U. Universal bound on the efficiency of molecular motors. J. Stat. Mech.: Theory Exp. 2016, 124004 (2016).

Gingrich, T. R., Rotskoff, G. M. & Horowitz, J. M. Inferring dissipation from current fluctuations. J. Phys. A: Math. Theor. 50, 184004 (2017).

Pietzonka, P. & Seifert, U. Universal trade-off between power, efficiency, and constancy in steady-state heat engines. Phys. Rev. Lett. 120, 190602 (2018).

Li, J., Horowitz, J. M., Gingrich, T. R. & Fakhri, N. Quantifying dissipation using fluctuating currents. Nat. Commun. 10, 1–9 (2019).

Manikandan, S. K. et al. Quantitative analysis of non-equilibrium systems from short-time experimental data. Commun. Phys. 4, 1–10 (2021).

Benamou, J.-D. & Brenier, Y. A computational fluid mechanics solution to the monge-kantorovich mass transfer problem. Numer. Math. 84, 375–393 (2000).

Villani, C., Topics in Optimal Rransportation, Vol. 58 (American Mathematical Soc., 2003).

Dechant, A. and Sakurai, Y. Thermodynamic interpretation of Wasserstein distance, arXiv https://arxiv.org/abs/1912.08405 (2019).

Ishizaka, A. and Nemery, P. Multi-cRiteria Decision Analysis: Methods And Software (John Wiley & Sons, 2013).

Solon, A. P. & Horowitz, J. M. Phase transition in protocols minimizing work fluctuations. Phys. Rev. Lett. 120, 180605 (2018).

Blickle, V., Speck, T., Helden, L., Seifert, U. & Bechinger, C. Thermodynamics of a colloidal particle in a time-dependent nonharmonic potential. Phys. Rev. Lett. 96, 070603 (2006).

Freitas, N., Delvenne, J.-C. & Esposito, M. Stochastic and quantum thermodynamics of driven rlc networks. Phys. Rev. X 10, 031005 (2020).

Garanin, D. A. Fokker-planck and landau-lifshitz-bloch equations for classical ferromagnets. Phys. Rev. B 55, 3050 (1997).

Gillespie, D. T. The chemical langevin equation. J. Chem. Phys. 113, 297–306 (2000).

Remlein, B. & Seifert, U. Optimality of nonconservative driving for finite-time processes with discrete states. Phys. Rev. E 103, L050105 (2021).

Bérut, A. et al. Experimental verification of landauer’s principle linking information and thermodynamics. Nature 483, 187–189 (2012).

Jun, Y., Gavrilov, M. & Bechhoefer, J. High-precision test of landauer’s principle in a feedback trap. Phys. Rev. Lett. 113, 190601 (2014).

Proesmans, K. & Bechhoefer, J. Erasing a majority-logic bit. Europhys. Lett. 133, 30002 (2021).

Zulkowski, P. R. & DeWeese, M. R. Optimal finite-time erasure of a classical bit. Phys. Rev. E 89, 052140 (2014).

Zulkowski, P. R. & DeWeese, M. R. Optimal control of overdamped systems. Phys. Rev. E 92, 032117 (2015).

Boyd, A. B., Patra, A., Jarzynski, C. & Crutchfield, J. P. Shortcuts to thermodynamic computing: the cost of fast and faithful information processing. J. Stat. Phys. 187, 1–34 (2022).

Roldán, É. & Parrondo, J. M. R. Estimating dissipation from single stationary trajectories. Phys. Rev. Lett. 105, 150607 (2010).

Martínez, I. A., Bisker, G., Horowitz, J. M. & Parrondo, J. M. R. Inferring broken detailed balance in the absence of observable currents. Nat. Commun. 10, 1–10 (2019).

Ehrich, J. Tightest bound on hidden entropy production from partially observed dynamics. J. Stat. Mech.: Theory Exp. 2021, 083214 (2021).

Koyuk, T. & Seifert, U. Thermodynamic uncertainty relation for time-dependent driving. Phys. Rev. Lett. 125, 260604 (2020).

Barato, A. C., Chetrite, R., Faggionato, A. & Gabrielli, D. A unifying picture of generalized thermodynamic uncertainty relations. J. Stat. Mech.: Theory Exp. 2019, 084017 (2019).

Otsubo, S., Manikandan, S. K., Sagawa, T. & Krishnamurthy, S. Estimating time-dependent entropy production from non-equilibrium trajectories. Commun. Phys. 5, 11 (2022).

Lee, S. et al. Multidimensional entropic bound: estimator of entropy production for langevin dynamics with an arbitrary time-dependent protocol. Phys. Rev. Res. 5, 013194 (2023).

Garnier, N. & Ciliberto, S. Nonequilibrium fluctuations in a resistor. Phys. Rev. E 71, 060101 (2005).

Ciliberto, S. Experiments in stochastic thermodynamics: short history and perspectives. Phys. Rev. X 7, 021051 (2017).

Kumar, A., Chétrite, R., & Bechhoefer, J. Anomalous heating in a colloidal system, Proc. Natl Acad. Sci. USA 119, e2118484119 (2022).

Brunton, S. L., Proctor, J. L. & Kutz, J. N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl Acad. Sci. USA 113, 3932–3937 (2016).

del Campo, A. Shortcuts to adiabaticity by counterdiabatic driving. Phys. Rev. Lett. 111, 100502 (2013).

Sels, D. & Polkovnikov, A. Minimizing irreversible losses in quantum systems by local counterdiabatic driving. Proc. Natl Acad. Sci. USA 114, E3909–E3916 (2017).

Ilker, E. et al. Shortcuts in stochastic systems and control of biophysical processes. Phys. Rev. X 12, 021048 (2022).

Acknowledgements

I thank John Bechhoefer for interesting discussions on the manuscript. This project has received funding from the European Union’s Horizon 2020 research and innovation program under the Marie Sklodowska-Curie grant agreement No. 847523 ‘INTERACTIONS’ and from the Novo Nordisk Foundation (grant No. NNF18SA0035142 and NNF21OC0071284).

Author information

Authors and Affiliations

Contributions

K.P. did everything.

Corresponding author

Ethics declarations

Competing interests

The author declares no competing interests.

Peer review

Peer review information

Communications Physics thanks the anonymous reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Proesmans, K. Precision-dissipation trade-off for driven stochastic systems. Commun Phys 6, 226 (2023). https://doi.org/10.1038/s42005-023-01343-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s42005-023-01343-5

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.