Abstract

Coercivity is expressed as a complex correlation between magnetisation and microstructures. However, owing to multiple intrinsic origins, coercivity has not been fully understood in the framework of the conventional Ginzburg–Landau theory. Here, we use machine learning to draw a realistic energy landscape of magnetisation reversal to consider missing parameters in the Ginzburg–Landau theory. The energy landscape in the magnetisation reversal process is visualised as a function of features extracted via machine learning; the correlation between the reduced feature space and hysteresis loop is assigned. Features in the lower dimension dataset strongly correlate with magnetisation and are embedded with morphological information. We analyse the energy landscape for simulated and experimental magnetic domain structures; a similar trend is observed. The landscape map enables visualisation of the energy of the system and coercivity as a function of feature space components.

Introduction

Soft magnetic materials have important applications in the cores of transformers, generators, and motors, among others1,2,3. With the advantage of reducing global warming, electric cars have become a popular topic in the automotive industry. The desired properties of soft magnetic materials for achieving high energy efficiency are high saturation magnetisation, low coercivity, and low loss. A crucial aspect that limits the efficiency of motors is energy loss. The loss at each cycle corresponds to the work performed by the external field4. Therefore, to develop better motors, maximum suppression of this loss is necessary. Among the losses, hysteresis loss is primarily caused by the pinning of the domain wall, which limits its free motion under an external field4. Domain walls have been extensively researched5, and the correlation between hysteresis and microstructures has been addressed for hard magnetic materials6 and, in a few works, for soft magnetic materials7,8. The microstructure in soft magnetic materials has an important influence on the loss owing to the Barkhausen effect9.

Visualising the energy for magnetic materials has practical and fundamental applications. Balakrishna and James recently described a theoretical framework to elucidate the coercivity in Permalloy (Py) using magnetoelastic and anisotropic energies10. Toga et al. have described the energy landscape for the neodymium (Nd) magnet to reveal the thermodynamic activation energy of the system11. The calculation of the energy in real materials is arduous owing to defects, roughness, crystal sizes, etc. A description of the physics of inhomogeneous polycrystalline systems that considers the metallographic structure is necessary for advanced material applications, particularly in non-equilibrium conditions such as the magnetisation reversal process. Thus, understanding the function of real materials in a heterogeneous system, such as the magnetic domain and metallographic structure, has been an outstanding issue in materials science.

Currently, however, this problem may be solved using material informatics12,13. In the last decade, with the evolution of hardware and the creation of material databases14,15, machine-learning techniques have broken into materials science with outstanding results16,17,18. Magnetic properties have recently been investigated using machine learning. Kwon et al. developed a technique for extracting the parameters of the magnetic Hamiltonian from spin-polarised low-energy electron microscope (SPLEEM) image data using a combination of neural networks19. Park et al. used an artificial neural network (ANN) and other machine learning algorithms to predict the coercivity in NdFeB magnets and obtained an accuracy as high as R² = 0.9 (ref. 20). Additionally, the extraction of system parameters based on the analysis of the Ginzburg–Landau (GL) domain wall equation has been recently reported21. Recent discussions by our group about the GL theory, its applications, and its limitations have been published elsewhere22,23.

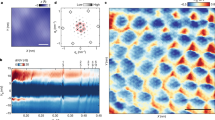

In this work, we attempt to describe coercivity using the machine learning energy landscape and show how system properties can be visualised in feature space using an extension of the standard GL theory. We focus on understanding the coercivity mechanism of real materials. We used micromagnetics simulations and magneto-optical Kerr effect microscope images combined with principal component analysis (PCA) to develop the energy landscape in the magnetisation reversal process for Py thin films. The landscape could be drawn for both simulated and experimental magnetic domain structure sets. We discuss how coercivity is represented in a feature space and analyse the relationship between physical parameters, the meaning of the output features, and energy cost. Our explainable machine learning method is applied to understand the mechanism of coercivity in both simulated and experimental datasets of magnetisation reversal processes. Here, we compare the effect of an increase in the density of defects in Py thin films, using an analysis that directly connects the image information of the magnetic domain structure to the coercivity mechanism through the GL theory. To elucidate the general idea of this manuscript, Fig. 1 displays the workflow for extracting the physical information and drawing the energy landscape from the magnetic domain images. (I) Magnetisation images are obtained by simulation, experiment, or both. (II) The images are pre-processed and converted from real space to reciprocal space using fast Fourier transformation (FFT). Fourier transformation has been used in image processing to extract periodic components, and it has been extensively used to study magnetic domains in diverse imaging and analysis techniques24,25,26,27,28. The reciprocal space maps the magnetic order; thus, we can reduce the dataset by considering only the higher-ordered elements with minimum information loss, which also avoids overfitting. (III) The reciprocal information is vectorised and used as the input for PCA, which reduces it to two dimensions. PCA is a simple and efficient algorithm that can help interpret the trend in the dataset, and it also features explainability. At the end of this step, the energy landscape is drawn, which can be used to extract the hidden factors. (IV) Finally, the GL model is extended to the information space by using variable transformations. The correlation between the principal components and the physical parameters is used to interpret the magnetisation reversal phenomenon.

The classic Ginzburg–Landau (GL) model has difficulty analysing the coercivity of inhomogeneous real materials, which contain features such as grain boundaries and defects. Our extended GL model exploits the information of inhomogeneities in the magnetic domain structure and uses a physical descriptor to draw the energy landscape in a feature space. The data acquisition starts with obtaining magnetic domain images, either by solving the Landau–Lifshitz–Gilbert (LLG) equation from an initial image set or from an experimental setup. Next, the features are extracted using fast Fourier transformation (FFT) and then vectorised. The FFT vectors of the magnetisation image dataset are reduced to a two-dimensional space using principal component analysis (PCA). The dimensionally reduced datasets can finally be stacked together. The coercivity can be interpreted by observing the distance and gradient of the landscape. Finally, the energy can be predicted using supervised machine learning.

Results

Development of energy landscape model

Machine learning methods

Machine learning algorithms are commonly separated into two main groups: unsupervised and supervised learning. The former aims to detect patterns and correlations in the data29, whereas the latter is trained using labelled data to perform predictions. In this work, we used PCA (unsupervised learning) to reduce the dimension of the datasets, and ANN and polynomial regression (supervised learning) to perform the predictions.

Principal component analysis

PCA is a traditional technique applied for dimension reduction, feature extraction, and data visualisation29,30. Due to the versatility of the method, PCA and other PCA-based algorithms have been successfully employed in pattern recognition and signal processing31,32,33,34.

PCA is formally defined as the orthogonal projection of data points onto a lower-dimensional linear space such that the variance of the data in the lower-dimensional space is maximised. The goal is to extract important information from the dataset and to order the principal components (PCs) by how much of the dataset's variance they explain (the explained variance). In a two-dimensional decomposition, the first principal component (PC1) has the maximum variance. Orthogonal to PC1, the second principal component (PC2) captures the largest share of the remaining variance.
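As an illustration of this definition, the following is a minimal sketch of PCA computed through the singular value decomposition, assuming NumPy is available. The function name `pca` and the toy data are hypothetical and are not taken from this work; the sketch only shows that the components are orthonormal and ordered by explained variance.

```python
import numpy as np

def pca(X, n_components=2):
    """Project the rows of X onto the leading principal components (via SVD)."""
    mean = X.mean(axis=0)
    Xc = X - mean                                   # centre every feature
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained_variance = s**2 / (X.shape[0] - 1)    # variance along each PC
    ratio = explained_variance / explained_variance.sum()
    scores = Xc @ Vt[:n_components].T               # coordinates in PC space
    return scores, ratio[:n_components], Vt[:n_components]

# Toy data (hypothetical): 200 samples in 5 dimensions, dominated by one direction.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 1))
direction = np.array([[3.0, 2.0, 1.0, 0.5, 0.1]])
X = latent @ direction + 0.1 * rng.normal(size=(200, 5))

scores, ratio, components = pca(X)
print("explained variance ratio of PC1, PC2:", ratio)
```

Because the data are centred before the decomposition, the scores are distributed around the origin of the reduced space.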

Polynomial regression

Polynomial regression is a regression method that represents the relationship between two variables using an nth-degree polynomial29:

\[y=\beta_{0}+\beta_{1}x+\beta_{2}x^{2}+\cdots +\beta_{n}x^{n}+\epsilon \tag{1}\]

where ϵ is the error and β0, …, βn are the unknown parameters. The fitting is often done using the least-squares method.
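A least-squares polynomial fit of this kind can be sketched as follows, assuming NumPy. The coefficients in `true_beta` and the noise level are hypothetical values chosen only to generate toy data; they do not come from this work.

```python
import numpy as np

rng = np.random.default_rng(1)

# Toy data from a known second-degree polynomial plus small noise (hypothetical).
true_beta = [0.5, -1.0, 2.0]            # beta_0, beta_1, beta_2
x = np.linspace(-1.0, 1.0, 50)
y = (true_beta[0] + true_beta[1] * x + true_beta[2] * x**2
     + 0.01 * rng.normal(size=x.size))

# np.polyfit solves the least-squares problem; it returns the coefficients
# with the highest degree first, so reverse them to get beta_0 ... beta_n.
coeffs = np.polyfit(x, y, deg=2)
beta_hat = coeffs[::-1]

residuals = y - np.polyval(coeffs, x)
print("fitted parameters:", beta_hat)
print("root-mean-square residual:", np.sqrt(np.mean(residuals**2)))
```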

Artificial neural network

ANN is inspired by the biological neural network to perform predictions35,36. Based on the biological concept, an ANN algorithm can be composed of one or more layers and each layer has one or more nodes, also called neurons. The connection between the nodes in an ANN is weighted based on their prediction ability35.

Each node is trained based on the previous layers and so on. The layers between the input and output layers are called “hidden layers”. The training is based on minimisation of the error function by gradient descent35.

Extended GL model

GL is a simple model to describe the magnetic reversal process based on statistical mechanics. Due to the simplistic formulation of the system energy, taking account of defects is rather complicated37. In the GL theory framework, the free energy of the system is given as a function of the order parameter. In the case of magnetic materials, the projection of the total magnetic moment in the direction of the external magnetic field, here written as m, plays the role of an order parameter. Therefore, the magnetically stable state of the magnetic material is obtained using the following free energy density38:

\[F(m)=U_{i}(m)+U_{z}(m)-\frac{TS(m)}{V} \tag{2}\]

where the first and second terms on the right-hand side are the internal energy densities, Uz is the Zeeman energy density owing to the external magnetic field hz, and Ui is the internal energy density term owing to the exchange, magnetic anisotropy energy densities, among others, excluding Uz. In Eq. (2), Uz is a linear function of m, which is given by Uz = −hzm. In the third term, T, S, and V are the temperature, entropy, and volume respectively.

In this model, the magnetisation reversal process is explained by the stability of the system. For example, the reversal of the magnetisation state from positive (m > 0) to negative (m < 0), generated by hz becoming more negative, corresponds to a monotonic increase of the functional F(m), as observed in Eq. (2). The magnetisation reversal process occurs when hz is strong enough to overcome the internal energy barrier. The non-equilibrium condition is obtained using the following:

\[\frac{\partial F}{\partial m}\approx \frac{\partial U_{i}}{\partial m}-h_{z} > 0 \tag{3}\]

where the derivative of the third term in Eq. (2) can be neglected. This is because, when the temperature T is sufficiently lower than the Curie temperature, the change in the total magnetic moment m is caused by a change in the global magnetic domain structure rather than spin fluctuation. Thus, the influence of the change in m on S is negligible. Regarding the signs in Eq. (3), in our example both ∂Ui/∂m and hz are negative. As a result, the magnetisation reversal owing to the external magnetic field is expressed as follows:

\[h_{z} < \min_{m}\left(\frac{\partial U_{i}}{\partial m}\right)\equiv -H_{c} \tag{4}\]

where Hc denotes the coercivity of the magnetic material. Note that hz is negative here and the definition of Hc is a positive number, thus it requires the negative sign. The magnetisation reversal condition is that the external magnetic field is less than the minimum value of the differential term. Because the differentiation of the internal energy by the magnetic moment provides an effective field, it is possible to directly compare the magnetic properties of a material with an external magnetic field.
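The reversal condition described above (the external field dropping below the minimum of ∂Ui/∂m) can be checked numerically on a toy landscape. The sketch below assumes NumPy and a hypothetical double-well internal energy Ui(m) = −(a/2)m² + (b/4)m⁴ with a = b = 1 in arbitrary units; this functional form is chosen only for illustration and is not the internal energy of the materials studied here.

```python
import numpy as np

# Hypothetical double-well internal energy density on a normalised m axis.
a, b = 1.0, 1.0
m = np.linspace(-1.0, 1.0, 2001)
U_i = -0.5 * a * m**2 + 0.25 * b * m**4

# Effective field: derivative of the internal energy with respect to m.
dU_dm = np.gradient(U_i, m)

# Coercivity is minus the minimum of the effective field (H_c is positive).
H_c = -dU_dm.min()

# Analytic value for this toy landscape, for comparison.
H_c_exact = (2.0 * a / 3.0) * np.sqrt(a / (3.0 * b))

def reverses(h_z):
    """True when the (negative) external field satisfies the reversal condition."""
    return h_z < dU_dm.min()

print("numerical H_c:", H_c, "analytic H_c:", H_c_exact)
print("h_z = -0.5 reverses:", reverses(-0.5))
print("h_z = -0.2 reverses:", reverses(-0.2))
```

For a real material the curve Ui(m) is not known in closed form, which is the motivation for reading the gradient off the landscape in feature space instead.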

The calculation of a realistic internal energy density Ui is extremely complicated; thus, in this work, we overcome the difficulty in obtaining the internal energy by investigating the features in the reduced space of the order parameter. To extract the features from the magnetisation dataset, FFT was selected because it can capture the periodicity of the magnetic domains39. The magnetisation in the reciprocal space can be written as follows:

\[m(\mathbf{k})=\frac{1}{V}\int_{V}m(\mathbf{r})\,e^{-i\mathbf{k}\cdot \mathbf{r}}\,d\mathbf{r} \tag{5}\]

where m(r) is the total magnetic moment inside the local region of the magnetic material, which is given by the position vector r, and V is the volume of the magnetic material.

To connect the image features to the physical property m, we derive a function D based on the principal components (PCs) of the FFT features. D is given as a function of the extracted features as follows:

\[m=D(\mathrm{PC}_{1},\mathrm{PC}_{2},\ldots ,\mathrm{PC}_{n}) \tag{6}\]

The physical descriptor D describes the magnetisation as a function of the output of the machine learning algorithm. This transformation can be used to assign meaning to the PCs. Moreover, to investigate the more complex relationship between PCs and other physical properties, the visualisation of the energy and PCA space components can be useful. Note here that in Eq. (6) the magnetisation is described as a projection of the total magnetic moment, in comparison to the vector description displayed in Eq. (9) (Methods section). One approximation of our model is that we are projecting the magnetisation in the same direction as the external magnetic field, which is the direction that accounts for the coercivity. In this study, we refer to this relationship as an extended energy landscape, i.e., extended in the feature space.

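Therefore, the effective field, ∂Ui/∂m, using machine learning information is transformed to the following:

\[\frac{\partial U_{i}}{\partial m}=\sum_{j}\frac{\partial U_{i}}{\partial \mathrm{PC}_{j}}\frac{\partial \mathrm{PC}_{j}}{\partial D} \tag{7}\]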

Equation (7) describes the effective field as a function of the gradient of the energy landscape in the feature space and the physical descriptor D. The first derivative contains only elements from the extended energy landscape and the second describes the relationship between the real and feature space. In this work, we are interested in the description of the transformation landscape in the feature space; our group has attempted to analyse the shape of the effective field elsewhere40.

Application of energy landscape in feature space

Simulated Permalloy thin film

Data acquisition and pre-processing

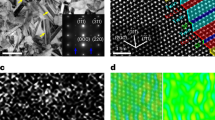

Py is a Ni–Fe ferromagnetic alloy that has been used in industry for decades owing to its high permeability, low coercivity, and insignificant magnetostriction4,41,42. The concentrations of Ni and Fe are approximately 80% and 20%, respectively. The impurities and defects must be mitigated to achieve the desired properties. Figure 2 shows the conventional magnetisation reversal curves for the simulated Py thin film for various non-magnetic grain defect densities (Supplementary Fig. 1), which are in accordance with the literature43,44. Note that coercivity increases with increasing density of defects42. This relation is valid for micro-size defects because increasing the number of defects generates more pinning points, thereby increasing coercivity45. It is well reported that nanosize defects can reduce the coercivity; however, this is outside the scope of our study. To avoid thermal-related complications, we consider T = 0 K in the simulations, and the experimental sample was measured as-grown. The problem with relying only on the magnetisation curve is that it is a physical quantity calculated by averaging the magnetic domain contrast and does not provide sufficient information on the microstructure of the magnetic domain. Thus, we use FFT and a dimension-reduction method to increase the physical information obtained from the magnetisation curve.

Magnetisation as a function of the external field for simulated Permalloy films with different defect densities, shown in the legend. The density of defects varied from 1 to 23.6%. The inset images show the normalised x-component of the magnetisation (mx/m) domain images for specific locations on the hysteresis curve. The calculation generates appropriate magnetic structures and magnetic hysteresis loops that will be used hereafter as an example of the application of the extended Ginzburg–Landau method.

To transform data into the feature space, we first arrange the magnetisation in an image set and process it using FFT. Next, the images are cropped to 1/4 of the original size to avoid overflow. The transformed images are then vectorised, and the real and imaginary parts of the Fourier-transformed vectors are stacked together. Finally, an unsupervised machine-learning algorithm, PCA, is used to reduce the dimensions of the dataset. PCA is effective in extracting linear features of the dataset and projecting them into a low-dimensional space30. The main idea of PCA is to identify a new set of variables (principal components) that are linear functions of the original dataset and linearly independent of one another. The new set of variables is ordered according to the variance46.
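The steps above can be sketched as a short pipeline, assuming NumPy. Several details are assumptions made for illustration and are not confirmed by the text: the crop is taken as the central quarter of each side of the shifted spectrum (`keep_fraction`), the function names `fft_features` and `pca_scores` are hypothetical, and the toy images (a single domain wall sweeping across the frame) merely stand in for simulated or measured domain images.

```python
import numpy as np

def fft_features(images, keep_fraction=0.25):
    """FFT each image, keep a central crop, stack real and imaginary parts.

    images: array of shape (n_images, height, width).
    keep_fraction: fraction of each side kept around zero frequency (assumed).
    """
    n, H, W = images.shape
    spectra = np.fft.fftshift(np.fft.fft2(images), axes=(-2, -1))
    h, w = max(1, int(H * keep_fraction)), max(1, int(W * keep_fraction))
    top, left = (H - h) // 2, (W - w) // 2
    cropped = spectra[:, top:top + h, left:left + w]
    flat = cropped.reshape(n, -1)                    # vectorise each spectrum
    return np.hstack([flat.real, flat.imag])         # real-valued feature matrix

def pca_scores(features, n_components=2):
    """Reduce the feature matrix to n_components using SVD-based PCA."""
    centred = features - features.mean(axis=0)
    _, s, Vt = np.linalg.svd(centred, full_matrices=False)
    ratio = s**2 / np.sum(s**2)
    return centred @ Vt[:n_components].T, ratio[:n_components]

# Toy reversal sequence (hypothetical): a domain wall moving across the image.
size, n_images = 32, 21
images = -np.ones((n_images, size, size))
for i, wall in enumerate(np.linspace(0, size, n_images).astype(int)):
    images[i, :, :wall] = 1.0

features = fft_features(images)
scores, ratio = pca_scores(features)
print("feature matrix shape:", features.shape)
print("explained variance ratio of PC1, PC2:", ratio)
```

Each row of `scores` is one image placed in the two-dimensional feature space, which is the representation the landscape is drawn on.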

Drawing the energy landscape

Figure 3a shows the result of drawing the magnetisation curve in the information space using FFT and PCA. We took the FFT of the magnetisation component mx, extracted the features, and decomposed them in a two-dimensional space using PCA. Each point corresponds to an image from the dataset. The distribution of the PCA decomposition is centred at the origin, owing to a feature implemented by default in the PCA algorithm. The cumulative contributions of the first and second principal components (PC1 and PC2) are approximately 80–90% (for more details, see Supplementary Table 1), which means that most of the information from the images can be reduced to two dimensions. Note that the points representing images around the coercivity region (m = 0) are commonly distributed around zero of feature 1 (PC1) and at the maximum/minimum of feature 2 (PC2). In contrast, the points representing the saturation texture images (B = 0.6 T) are commonly distributed at the minimum and maximum of PC1 and near zero of PC2. The symmetric disposition of coercivity and saturation shows that PCA can capture information about the hysteresis loop from the magnetisation domain dataset. By overlapping different defect density datasets, we can see a clear increase in point dispersion as the defect density increases, implying that the overall distance between the points increases.

a Principal component analysis (PCA) decomposition magnetisation dataset considering various defect densities. b Magnetisation as a function of the first principal component (PC1), c total energy (Etotal) as a function of the second principal component (PC2). Red and black symbols correspond to positive and negative coercivity, respectively. PC1 is a good explanatory variable for the magnetisation, whereas PC2 can be a good explanatory variable for energy. The density of defects varied from 1 to 23.6%.

To investigate the meaning of the feature space components, the correlations between PCA components (Fig. 3a) and physical parameters were analysed. Figure 3b shows the magnetisation as a function of PC1. Note that the relationship between PC1 and the magnetisation can be approximated as linear.

For PC2, however, the physical interpretation is difficult, since no linear correlation was observed. Thus, it is important to analyse the correlations between various physical parameters. Near-coercivity images in the magnetisation space are mapped to the local maximum/minimum of PC2 (Fig. 3a), but unlike PC1, PC2 correlates with the magnetic domains. Thus, we can infer that PC2 might be related to the system energy. The total energy as a function of PC2 is displayed in Fig. 3c. The relationship between total energy and PC2 resembles a higher-order polynomial, with the coercivity data images near the maximum. Moreover, the landscape is flattened near the coercivity, which accurately represents the properties of soft magnetic materials.

One simple result that can be obtained from PCA decomposition is the relationship between feature space components and coercivity. Figure 4 shows the relationship between the weighted Euclidean distance from saturation to coercivity in the feature space (d) and coercivity. The weighted distance from the saturation to the coercivity is defined as follows:

\[d(\mathrm{PC}^{\mathrm{sat}},\mathrm{PC}^{\mathrm{coer}})=\sqrt{\sum_{i}\alpha_{i}\left(\mathrm{PC}_{i}^{\mathrm{sat}}-\mathrm{PC}_{i}^{\mathrm{coer}}\right)^{2}} \tag{8}\]

where the index i runs over the PCA components, and αi is the explained variance of component i of the PCA decomposition. Here, we truncate the sum at the second element owing to the high explanation rates of PC1 and PC2. Note that d is proportional to coercivity. The second-order polynomial regression of the weighted distance associates a physical property with an element from the feature space. This correlation is explained by understanding how the magnetisation textures are arranged in the feature space. Similar textures lie closer together in the feature space; depinning, however, changes the morphology of the magnetic texture, which in turn increases the distance between points in the feature space. In other words, increasing the density of defects increases the occurrence of depinning, dispersing the data points in the reduced space. Although the distance between adjacent points is reduced for the highly defective films owing to the smaller depinning events, the overall distance is increased by their occurrence frequency.
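A weighted distance of this kind can be computed as in the sketch below, which uses only the Python standard library. It assumes that each weight αi multiplies the squared difference of the corresponding component before the square root is taken; the function name and the numerical values are hypothetical and serve only as a worked example.

```python
import math

def weighted_distance(pc_sat, pc_coer, explained_variance):
    """Weighted Euclidean distance between two points in PCA space.

    pc_sat, pc_coer: coordinates (PC1, PC2, ...) of the saturation and
    coercivity images; explained_variance: one weight per component.
    """
    return math.sqrt(sum(
        alpha * (s - c) ** 2
        for alpha, s, c in zip(explained_variance, pc_sat, pc_coer)
    ))

# Hypothetical coordinates: saturation at the extreme of PC1 and zero of PC2,
# coercivity at zero of PC1 and the extreme of PC2; truncated at two components.
d = weighted_distance(pc_sat=(3.0, 0.0), pc_coer=(0.0, 4.0),
                      explained_variance=(0.5, 0.25))
print("weighted distance d =", d)   # sqrt(0.5 * 9 + 0.25 * 16) = sqrt(8.5)
```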

Coercivity as a function of principal components weighted Euclidean distance from saturation to coercivity (d(PCsat, PCcoer)) defined in Eq. (8). Each point corresponds to a different dataset with a distinct defect density, thereby varying coercivity. The dotted red line corresponds to second-order polynomial regression.

The distance in the extended landscape might determine the coercivity mechanism. Thus, the energy of the system can be visualised for an unknown system based on the disposition of points in the feature space. High explanation ratios of PC1 and PC2 demonstrate that both components hold sufficient information on the magnetic ordering to describe coercivity. In the case of a lower explanation ratio, other PCA components can be easily added, which makes this approach versatile and useful. The similarities in the feature space show that the components are correlated with energy and coercivity, and can be used to reveal other properties in more complex systems.

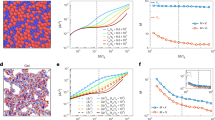

The final step is to predict the energy based on the reduced space variables (PC1 and PC2). Figure 5 displays the estimated energy as a function of the actual value of the energy. The model was trained using a deep neural network, and we achieved a coefficient of determination of R² = 0.82. It is possible that increasing the amount of data would improve the prediction. This result implies that the energy can be predicted based on the image location in the feature space. The ease with which inhomogeneities can be added is a significant advantage over the GL theory, because the GL theory considers the average of the magnetisation.

Experimental Permalloy thin film

Experimental data acquisition and pre-processing

We demonstrate that the energy landscape for experimental images can be drawn similarly. Here, the in-plane magnetisation image data were obtained using a Kerr microscope, as described in the Methods section. Before drawing the energy landscape, the image set was pre-processed using SciPy, a Python library for scientific analysis, to correct for the brightness of the Kerr microscope’s light source47. We treated the magnetisation texture images obtained from the Kerr microscope as matrices, as they are in a 16-bit file format (\(2^{16}=65536\) values). First, the background was subtracted from the images to increase the contrast; a Gaussian filter (σ = 7) and a blur filter were used to reduce the noise. The filters had a negligible influence on the final result. The average intensity variation of the images was then used to normalise and correct the intensity of the whole dataset. In contrast to the simulated images, the experimental images are susceptible to noise and drift, which shift the brightness intensity and require additional correction.

After image correction, the estimated magnetisation curve for Py (Ni78.5Fe21.5) is displayed in Fig. 6. Here, magnetisation is calculated by obtaining the ratio of bright to dark pixels in the images. Note that, in contrast to the simulated magnetisation curve (Fig. 2), the hysteresis curve for the experimental images is almost completely square owing to the approximation method (superficial pixel counting) and the large steps in the external field. Since we estimated the magnetisation from the in-plane superficial Kerr images, the pixel contrast might not be enough to obtain the detailed magnetisation curve. The usual value for coercivity in Py has been reported as two orders of magnitude lower than that of our sample42; the coercivity of our sample is of the same order as that of a high-defect film48.
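A pixel-counting estimate of this kind could look like the sketch below, assuming NumPy. The text only states that the ratio of bright to dark pixels is used; the particular normalisation (bright minus dark, divided by the total number of pixels), the fixed `threshold`, and the function name are assumptions made for illustration.

```python
import numpy as np

def magnetisation_from_image(image, threshold):
    """Estimate the normalised magnetisation from a two-contrast domain image.

    Pixels brighter than `threshold` are counted as one magnetisation
    direction and the remaining pixels as the opposite direction, so the
    result ranges from -1 (all dark) to +1 (all bright).
    """
    bright = np.count_nonzero(image > threshold)
    dark = image.size - bright
    return (bright - dark) / image.size

# Toy 4 x 4 image (hypothetical intensities): 12 bright and 4 dark pixels.
image = np.array([
    [10, 200, 200, 200],
    [200, 200, 200, 10],
    [200, 10, 200, 200],
    [200, 200, 10, 200],
])
m_estimate = magnetisation_from_image(image, threshold=100)
print("estimated m_x/m:", m_estimate)   # (12 - 4) / 16 = 0.5
```

Because every pixel is forced into one of two states, an estimate of this type cannot resolve gradual contrast changes, which is consistent with the nearly square loop described above.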

Experimental magnetisation curve (mx/m) as a function of the external field (B). Each inset Kerr magnetic domain image points to the corresponding position in the magnetisation process. The positive and negative coercivity are denoted by white dots. The clear magnetisation reversal in the experimental dataset shows that it is suitable for the analysis.

Drawing experimental energy landscape

Similar to the discussion in the previous section, the domain wall information was extracted from the experimental images using FFT. Before applying PCA, the real and imaginary parts were vectorised and stacked together. Figure 7 displays magnetisation as a function of PC1. Note that the relationship between PC1 and magnetisation is similar to that observed in the simulated data, demonstrating the reproducibility of the extended GL model in the experimental data. Coercivity is located near zero in PC1, which is consistent with our previous discussion. Py has a very low coercivity, which imposed certain experimental limitations. In contrast to the simulation, the external conditions could not be controlled precisely, and the field steps were not comparably fine. A small difference is observed in Fig. 7 compared to the simulated case near the saturation region (Fig. 3b). The distinct curvature of the data points in the saturation region in these two images might be because of the different boundary conditions of the magnetic thin film. Nevertheless, the dimension reduction could capture similar information regarding the data condition.

Magnetisation (mx/m) as a function of first principal component (PC1). Positive and negative coercivity are denoted by white dots. This image can be used for direct comparison with the simulated result (Fig. 3b).

We used the model shown in Fig. 4 to estimate the coercivity as 0.09 T, which is reasonably close to the measured value of approximately 0.07 T. This result supports the robustness of the developed method. Another advantage of using the energy landscape in the reduced space is its sensitivity compared to the usual methods, which can be strongly affected by the steps of the external field, as observed in the magnetisation curve (Fig. 6). In our experiment, the coarse external field steps obscure the mechanism of the reversal process. The energy barrier to align the spins in the material with the magnetic field is very low owing to the near absence of large pinning regions.

Discussion

In real materials, morphological and structural defects play an important role in coercivity, which makes the origin of the material function difficult to predict. Here, we attempt to interpret the meaning of the features and deepen our understanding of the analysis results. In our model, the FFT extracts features of the periodic structures, and by extracting multiple principal components in a data-driven manner, it might be possible to extract hidden features that still contain information about heterogeneity. Such information could lie at the edge of the usual FFT peak, which may contain magnetic ordering information but has largely been overlooked by conventional analysis. It is noteworthy that we are using most of the information in the FFT pattern by considering the PCA components with high explanation ratios. Moreover, by using PCA to reduce the dimensions, we can focus only on the components with high explanation ratios, which reduces the amount of data for analysis and study.

In simulations, coercivity can be calculated with high accuracy owing to the access to all variables; in real materials, by contrast, there are many unknown parameters correlated with the microstructures. Our approach for describing the energy landscape using machine learning showed good results for both experimental and simulated data. Both shared similar shapes for the energy landscapes, as well as the explanation of variables and correlations between them. Because both systems consist of the same material, the magnetisation process should be similar; thus, the magnetic domain information in the reciprocal space is comparable, and the same considerations hold. Another advantage of using reciprocal space is that the same method to obtain the energy landscape can be applied to other techniques, not only spatial imaging. Neutron diffraction, for example, is a powerful technique for investigating the magnetic structure of a material that outputs data in reciprocal space49, and it could therefore be used to study magnetic properties through the energy landscape. We recently developed an analysis method for magnetic domains using topological data analysis (TDA)40,50. In the future, we intend to combine the method described herein with TDA to improve the extraction of inhomogeneities in magnetic domain structures.

In conclusion, to obtain the physical parameters from magnetisation images, we draw the energy landscape using material informatics tools. The coercivity of real materials cannot be described using only the usual GL method because morphological defects cannot be added without tuning the theory using ad hoc parameters. Here, we tried to overcome this problem by using the landscape that emerges from the principal component analysis of the magnetisation images. Based on the landscape, we observed the correlation between the distance and the coercivity in the reduced space. Mediated by the landscape, the energy and order parameters could be obtained from the magnetisation texture. Moreover, we demonstrated the importance of the pinning process in the total energy of the system and showed its relationship to the feature space and physical properties. Although we applied it to simple systems, the method can be extended to other systems and to other properties such as temperature and strain/stress, as well as the dynamics in a high-speed magnetisation reversal process.

Methods

Micromagnetics simulation

Micromagnetics is a technique that simulates the magnetic behaviour of ferro- or ferrimagnetic materials at sub-micrometre scales. It can reproduce the magnetisation reversal process in inhomogeneous systems of arbitrary shape45,51. The Landau–Lifshitz–Gilbert (LLG) equation models the effect of the electromagnetic field on ferromagnetic materials by considering the response of the magnetisation M to torques52:

\[\frac{d\mathbf{M}}{dt}=-\gamma_{0}\,\mathbf{M}\times \mathbf{H}_{\mathrm{eff}}+\frac{\alpha }{M_{s}}\,\mathbf{M}\times \frac{d\mathbf{M}}{dt} \tag{9}\]

where γ0 is the electron gyromagnetic ratio and α is the dimensionless damping factor. The effective field Heff usually contains the contribution from the exchange, anisotropy, Zeeman, and demagnetisation energy densities.

Micromagnetic modelling was performed using MuMax3 (ref. 44). The cell size in all the simulations was set as 10 nm × 10 nm × 50 nm, and the LLG equation was solved using a closed boundary condition. In this model, we selected the following material parameters, which are suitable for Py: saturation magnetisation \({M}_{s}=8.5\times {10}^{5}\) A m\(^{-1}\), exchange stiffness \(A=1.3\times {10}^{-11}\) J m\(^{-1}\), damping constant α = 0.01, and perpendicular uniaxial anisotropy \({K}_{u}=0.5\times {10}^{3}\) J m\(^{-3}\) (refs. 53,54). This results in an exchange length \({l}_{s}=\sqrt{2A/{\mu }_{0}{M}_{s}^{2}}=5.3\) nm, which is in accordance with the values reported in the literature54.
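As a quick check of the quoted exchange length, the expression \({l}_{s}=\sqrt{2A/{\mu }_{0}{M}_{s}^{2}}\) can be evaluated directly from the material parameters listed above. The function name `exchange_length` is hypothetical and chosen only for this sketch.

```python
import math

MU0 = 4e-7 * math.pi  # vacuum permeability in T m A^-1


def exchange_length(A, Ms):
    """Exchange length sqrt(2A / (mu0 * Ms^2)) in metres.

    A  : exchange stiffness in J m^-1
    Ms : saturation magnetisation in A m^-1
    """
    return math.sqrt(2.0 * A / (MU0 * Ms**2))


# Py parameters quoted in the text.
l_s = exchange_length(A=1.3e-11, Ms=8.5e5)
print(f"exchange length: {l_s * 1e9:.2f} nm")
```

The result is approximately 5.3 nm, consistent with the value stated in the text.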

To simulate the defects, an algorithm was used to draw defects of random size in the texture of the input images. The area of the dot defects varies from 1 to 50 μm², and the total defect coverage ranges from 1 to 23.6% of the film surface. The external magnetic field B was swept from −0.6 T to 0.6 T in the x direction, yielding 4400 magnetisation images for each texture.
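The defect-drawing algorithm is not specified in detail, so the following is only one possible sketch of such a procedure. It assumes circular dots, areas drawn uniformly between 1 and 50 μm², uniformly random positions, allowed overlaps, and a hypothetical image size and pixel pitch; the function name `draw_dot_defects` and all its parameters are illustrative and not taken from the original code.

```python
import numpy as np


def draw_dot_defects(shape=(512, 512), pixel_um=0.5, target_fraction=0.10,
                     area_range_um2=(1.0, 50.0), seed=0, max_dots=10000):
    """Return a boolean mask in which True marks a defect pixel.

    Dots are added until the covered fraction of the image reaches
    target_fraction (or max_dots is hit). All defaults are assumptions.
    """
    rng = np.random.default_rng(seed)
    mask = np.zeros(shape, dtype=bool)
    yy, xx = np.mgrid[:shape[0], :shape[1]]
    for _ in range(max_dots):
        if mask.mean() >= target_fraction:
            break
        area_um2 = rng.uniform(*area_range_um2)           # random dot area
        radius_px = np.sqrt(area_um2 / np.pi) / pixel_um  # area -> radius in pixels
        cy = rng.uniform(0, shape[0])                     # random dot centre
        cx = rng.uniform(0, shape[1])
        mask |= (yy - cy) ** 2 + (xx - cx) ** 2 <= radius_px ** 2
    return mask


defect_mask = draw_dot_defects(target_fraction=0.10)
print(f"defect coverage: {defect_mask.mean():.3f}")
```

Such a mask could then be used as the geometry input of a micromagnetic simulation, with `target_fraction` varied between 0.01 and 0.236 to span the coverage range described above.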

Magneto-optic Kerr effect observation

The sample used in this study was a 100 nm thick Py (Ni78.5Fe21.5) film grown by sputtering on a clean Si surface without removing the oxide layer. The magnetic texture of the sample was observed using a magneto-optic Kerr effect (MOKE) microscope from Evico Magnetics in the longitudinal mode. The exposure time was set to 42 ms, and the field of view was 100 μm. The external magnetic field B was swept in-plane from −0.07 to 0.07 ± 0.005 T at room temperature.

ANN model construction

The ANN model was built with three layers: the first and second layers have 64 nodes each, and the last layer has 1 node. We set the dropout in the first layer to 5% and used the rectified linear unit (ReLU) as the activation function. To avoid overtraining with the ReLU function, we normalised the energy between 0 and 1. The input data are the reduced-space landscape, divided into 150 × 100 bins. For all data sets, we used the position of each bin as the input and the corresponding energy as the training value. The input data were also shuffled and divided into batches of 10 samples.
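The data preparation and the network shape described above can be sketched in plain NumPy as follows. This is a hedged illustration, not the original implementation: the scaling of bin positions to [0, 1], the He-style weight initialisation, the linear output node, and all function names are assumptions, and the optimiser and loss function are omitted because they are not stated in the text.

```python
import numpy as np


def bin_positions(nx=150, ny=100):
    """Positions of the nx x ny bins, scaled to [0, 1] (scaling is an assumption)."""
    ix, iy = np.meshgrid(np.arange(nx), np.arange(ny), indexing="ij")
    return np.column_stack([ix.ravel() / (nx - 1), iy.ravel() / (ny - 1)])


def minmax_normalise(energy):
    """Normalise the energy between 0 and 1."""
    energy = np.asarray(energy, dtype=float)
    return (energy - energy.min()) / (energy.max() - energy.min())


def make_batches(x, y, batch_size=10, seed=0):
    """Shuffle the samples and yield batches of batch_size samples."""
    order = np.random.default_rng(seed).permutation(len(x))
    for start in range(0, len(x), batch_size):
        idx = order[start:start + batch_size]
        yield x[idx], y[idx]


def init_params(n_in=2, hidden=64, seed=0):
    """Weights and biases for a 64-64-1 network (He-style init, assumed)."""
    rng = np.random.default_rng(seed)
    sizes = [n_in, hidden, hidden, 1]
    return [(rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def forward(params, x, dropout=0.05, training=False, rng=None):
    """Forward pass: ReLU hidden layers, 5% dropout after the first layer."""
    (w1, b1), (w2, b2), (w3, b3) = params
    h = np.maximum(x @ w1 + b1, 0.0)
    if training:
        if rng is None:
            rng = np.random.default_rng()
        keep = rng.random(h.shape) >= dropout
        h = h * keep / (1.0 - dropout)  # inverted dropout keeps the expected scale
    h = np.maximum(h @ w2 + b2, 0.0)
    return h @ w3 + b3  # linear output node (assumed; not specified in the text)
```

With `x = bin_positions()` and a normalised energy array of matching length, `make_batches(x, energy)` yields 1500 shuffled batches of 10 samples, and `forward(init_params(), x_batch)` returns one predicted energy per bin position.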

Data availability

All datasets are available upon reasonable request.

Code availability

The codes are available upon reasonable request.

References

Herzer, G. Modern soft magnets: Amorphous and nanocrystalline materials. Acta Mater. 61, 718–734 (2013).

McHenry, M. E., Willard, M. A. & Laughlin, D. E. Amorphous and nanocrystalline materials for applications as soft magnets. Prog. Mater. Sci. 44, 291–433 (1999).

Gutfleisch, O. et al. Magnetic materials and devices for the 21st century: Stronger, lighter, and more energy efficient. Adv. Mater. 23, 821–842 (2010).

Fish, G. Soft magnetic materials. Proc. IEEE 78, 947–972 (1990).

Barthelmess, M., Pels, C., Thieme, A. & Meier, G. Stray fields of domains in permalloy microstructures—measurements and simulations. J. Appl. Phys. 95, 5641–5645 (2004).

Kronmüller, H., Durst, K.-D. & Sagawa, M. Analysis of the magnetic hardening mechanism in RE-FeB permanent magnets. J. Magn. Magn. Mater. 74, 291–302 (1988).

Yu, R. H., Basu, S., Zhang, Y., Parvizi-Majidi, A. & Xiao, J. Q. Pinning effect of the grain boundaries on magnetic domain wall in FeCo-based magnetic alloys. J. Appl. Phys. 85, 6655–6659 (1999).

Platt, C. L., Berkowitz, A. E., Smith, D. J. & McCartney, M. R. Correlation of coercivity and microstructure of thin CoFe films. J. Appl. Phys. 88, 2058–2062 (2000).

Nistor, C., Faraggi, E. & Erskine, J. L. Magnetic energy loss in permalloy thin films and microstructures. Phys. Rev. B 72, 014404 (2005).

Balakrishna, A. R. & James, R. D. A tool to predict coercivity in magnetic materials. Acta Mater. 208, 116697 (2021).

Toga, Y., Miyashita, S., Sakuma, A. & Miyake, T. Role of atomic-scale thermal fluctuations in the coercivity. npj Comput. Mater. 6, 67 (2020).

Agrawal, A. & Choudhary, A. Perspective: Materials informatics and big data: Realization of the “fourth paradigm” of science in materials science. APL Mater. 4, 053208 (2016).

Ramprasad, R., Batra, R., Pilania, G., Mannodi-Kanakkithodi, A. & Kim, C. Machine learning in materials informatics: recent applications and prospects. npj Comput. Mater. 3, 54 (2017).

Himanen, L., Geurts, A., Foster, A. S. & Rinke, P. Data-driven materials science: Status, challenges, and perspectives. Adv. Sci. 6, 1900808 (2019).

Butler, K. T., Davies, D. W., Cartwright, H., Isayev, O. & Walsh, A. Machine learning for molecular and materials science. Nature 559, 547–555 (2018).

Schmidt, J., Marques, M. R. G., Botti, S. & Marques, M. A. L. Recent advances and applications of machine learning in solid-state materials science. npj Comput. Mater. 5, 83 (2019).

Carleo, G. et al. Machine learning and the physical sciences. Rev. Mod. Phys. 91, 045002 (2019).

Schleder, G. R., Padilha, A. C. M., Acosta, C. M., Costa, M. & Fazzio, A. From DFT to machine learning: Recent approaches to materials science—a review. J. Phys.: Mater. 2, 032001 (2019).

Kwon, H. Y. et al. Magnetic Hamiltonian parameter estimation using deep learning techniques. Sci. Adv. 6, eabb0872 (2020).

Park, H.-K., Lee, J.-H., Lee, J. & Kim, S.-K. Optimizing machine learning models for granular NdFeB magnets by very fast simulated annealing. Sci. Rep. 11, 1–11 (2021).

Mamada, N., Mizumaki, M., Akai, I. & Aonishi, T. Obtaining underlying parameters from magnetic domain patterns with machine learning. J. Phys. Soc. Japan 90, 014705 (2021).

Nishio, T. et al. High-throughput analysis of magnetic phase transition by combining table-top sputtering, photoemission electron microscopy, and Landau theory. Sci. Technol. Adv. Mater.: Methods 2, 345–354 (2022).

Mitsumata, C. & Kotsugi, M. Interpretation of Kronmüller formula using Ginzburg–Landau theory. J. Magn. Soc. Japan 46, 90–96 (2022).

Park, H. S., Murakami, Y., Shindo, D., Chernenko, V. A. & Kanomata, T. Behavior of magnetic domains during structural transformations in Ni2MnGa ferromagnetic shape memory alloy. Appl. Phys. Lett. 83, 3752–3754 (2003).

Schmitte, T., Westphalen, A., Theis-Bröhl, K. & Zabel, H. The Bragg-MOKE: Magnetic domains in Fourier space. Superlattices Microstruct. 34, 127–136 (2003).

Baltz, V., Marty, A., Rodmacq, B. & Dieny, B. Magnetic domain replication in interacting bilayers with out-of-plane anisotropy: Application to Co/Pt multilayers. Phys. Rev. B 75, 014406 (2007).

Tokii, M. et al. Reconstruction of magnetic domain structure using the reverse Monte Carlo method with an extended Fourier image. J. Appl. Phys. 117, 17D149 (2015).

Willems, F. et al. Multi-color imaging of magnetic Co/Pt heterostructures. Struct. Dyn. 4, 014301 (2017).

Hastie, T., Tibshirani, R. & Friedman, J. H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction Vol. 2 (Springer, 2009).

Wold, S., Esbensen, K. & Geladi, P. Principal component analysis. Chemometrics Intell. Laboratory Syst. 2, 37–52 (1987).

Heyer, M. H. & Schloerb, F. P. Application of principal component analysis to large-scale spectral line imaging studies of the interstellar medium. Astrophys. J. 475, 173 (1997).

Pinkowski, B. Principal component analysis of speech spectrogram images. Pattern Recogn. 30, 777–787 (1997).

Rajan, K., Suh, C. & Mendez, P. F. Principal component analysis and dimensional analysis as materials informatics tools to reduce dimensionality in materials science and engineering. Stat. Anal. Data Mining: ASA Data Sci. J. 1, 361–371 (2009).

Modak, S., Chattopadhyay, T. & Chattopadhyay, A. K. Clustering of eclipsing binary light curves through functional principal component analysis. Astrophys. Space Sci. 367, 1–10 (2022).

Ghaboussi, J., Garrett Jr, J. & Wu, X. Knowledge-based modeling of material behavior with neural networks. J. Eng. Mech. 117, 132–153 (1991).

Choi, R. Y., Coyner, A. S., Kalpathy-Cramer, J., Chiang, M. F. & Campbell, J. P. Introduction to machine learning, neural networks, and deep learning. Transl. Vision Sci. Technol. 9, 14–14 (2020).

Landau, L. D. & Lifshitz, E. M. Statistical Physics (Pergamon, 1980).

Coey, J. M. Magnetism and Magnetic Materials (Cambridge University Press, 2010).

Marshall, W. & Lowde, R. Magnetic correlations and neutron scattering. Rep. Prog. Phys. 31, 705 (1968).

Masuzawa, K., Sotaro, K., Foggiatto, A. L., Chiharu, M. & Kotsugi, M. Analysis of the coercivity mechanism of YIG based on the extended Landau free energy model. Trans. Magn. Soc. Jpn. (Special Issues) 6, 22TR507 (2022).

Schäfer, R. Domains in ‘extremely’ soft magnetic materials. J. Magn. Magn. Mater. 215–216, 652–663 (2000).

Akhter, M., Mapps, D., Ma Tan, Y., Petford-Long, A. & Doole, R. Thickness and grain-size dependence of the coercivity in permalloy thin films. J. Appl. Phys. 81, 4122–4124 (1997).

Manzin, A., Barrera, G., Celegato, F., Coïsson, M. & Tiberto, P. Influence of lattice defects on the ferromagnetic resonance behaviour of 2D magnonic crystals. Sci. Rep. 6, 1–11 (2016).

Vansteenkiste, A. & de Wiele, B. V. MuMax: A new high-performance micromagnetic simulation tool. J. Magn. Magn. Mater. 323, 2585–2591 (2011).

Hubert, A. & Schäfer, R. Magnetic Domains: The Analysis of Magnetic Microstructures (Springer Science & Business Media, Berlin, 2008).

Jolliffe, I. T. & Cadima, J. Principal component analysis: A review and recent developments. Phil. Trans. R. Soc. A: Math., Phys. Eng. Sci. 374, 20150202 (2016).

Virtanen, P. et al. SciPy 1.0: Fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

Bryan, M., Atkinson, D. & Cowburn, R. Experimental study of the influence of edge roughness on magnetization switching in permalloy nanostructures. Appl. Phys. Lett. 85, 3510–3512 (2004).

Río-López, N. A. et al. Neutron scattering as a powerful tool to investigate magnetic shape memory alloys: A review. Metals 11, 829 (2021).

Kunii, S., Foggiatto, A. L., Mitsumata, C. & Kotsugi, M. Causal analysis and visualization of magnetization reversal using feature extended Landau free energy. Sci. Rep. https://doi.org/10.1038/s41598-022-21971-1 (2022).

Aharoni, A. et al. Introduction to the Theory of Ferromagnetism Vol. 109 (Clarendon Press, 2000).

Gilbert, T. Classics in magnetics: A phenomenological theory of damping in ferromagnetic materials. IEEE Trans. Magn. 40, 3443–3449 (2004).

Bisig, A. et al. Correlation between spin structure oscillations and domain wall velocities. Nat. Commun. 4, 1–8 (2013).

Nakatani, Y., Thiaville, A. & Miltat, J. Faster magnetic walls in rough wires. Nat. Mater. 2, 521–523 (2003).

Acknowledgements

This work was partly supported by JSPS Grant-in-Aid for Scientific Research (A) number 21H04656. We would like to thank Tadakatsu Ohkubo and Hossein Sepehri-Amin for their support and guidance in the observation of magnetic domains using the magneto-optic Kerr effect microscope.

Author information

Contributions

A.L.F. and S.K. performed the computations and experiments. A.L.F. and C.M. developed the analysis method. C.M. provided advice on computations and machine learning. M.K. designed and directed the present work. A.L.F., S.K., and M.K. equally contributed to writing the manuscript. All authors have discussed the results and contributed to the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks Gianfranco Durin and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Foggiatto, A.L., Kunii, S., Mitsumata, C. et al. Feature extended energy landscape model for interpreting coercivity mechanism. Commun Phys 5, 277 (2022). https://doi.org/10.1038/s42005-022-01054-3
