Abstract

Computing in memory (CIM) could be used to overcome the von Neumann bottleneck and to provide sustainable improvements in computing throughput and energy efficiency. Underlying the different CIM schemes is the implementation of two kinds of computing primitive: logic gates and multiply–accumulate operations. CIM technologies differ in how memory cells participate in either operation, that is, whether the cells supply the inputs, hold the output or both. This complexity makes it difficult to build a comprehensive understanding of CIM technologies. Here, we provide a full-spectrum classification of all CIM technologies based on the degree to which memory cells participate in the computation as inputs and/or output. We elucidate the detailed principles of standard CIM technologies across this spectrum and provide a platform for comparing the advantages and disadvantages of the different technologies. Our taxonomy could also be used to develop other CIM schemes by applying the spectrum to different memory devices and computing primitives.
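The two computing primitives named above can be illustrated with a minimal behavioural sketch in Python. It assumes ideal devices (linear conductances, no wire resistance, noise or device variation), and the function names `crossbar_mac` and `stateful_nor` are hypothetical. It is not the classification scheme of this article, only an illustration of how the role of memory cells can differ. In an analog crossbar multiply–accumulate, the stored conductances G_ij supply one operand, the applied row voltages V_i supply the other, and each column current I_j = Σ_i V_i·G_ij leaves the array to be sensed by peripheral circuitry. In a MAGIC-style stateful NOR gate, by contrast, both inputs and the output are resistance states of memory cells.

```python
from typing import List, Sequence


def crossbar_mac(conductances: Sequence[Sequence[float]],
                 voltages: Sequence[float]) -> List[float]:
    """Ideal crossbar multiply-accumulate (hypothetical helper).

    Each cell obeys Ohm's law (I = V * G) and each column wire sums its
    cell currents (Kirchhoff's current law), so I_j = sum_i V_i * G_ij.
    Memory cells hold one operand (G); the result leaves the array as a
    current to be digitized by peripheral circuits.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need exactly one input voltage per row")
    n_cols = len(conductances[0]) if conductances else 0
    currents = [0.0] * n_cols
    for v_i, row in zip(voltages, conductances):
        for j, g_ij in enumerate(row):
            currents[j] += v_i * g_ij  # accumulate cell current on column j
    return currents


def stateful_nor(in_a: int, in_b: int) -> int:
    """Behavioural MAGIC-style NOR (hypothetical helper).

    Logic 1 = low-resistance state, logic 0 = high-resistance state.
    The output cell is first initialised to logic 1; if any input cell is
    in the low-resistance state, the voltage across the output cell exceeds
    its switching threshold and resets it to logic 0. Inputs and output are
    all memory cell states, so the result stays in the array.
    """
    out = 1  # initialisation step: output cell set to low resistance
    if in_a == 1 or in_b == 1:
        out = 0  # conditional reset of the output cell
    return out


if __name__ == "__main__":
    G = [[1.0, 2.0],
         [3.0, 4.0]]   # conductances, arbitrary units (assumed values)
    V = [1.0, 0.5]     # row input voltages, arbitrary units (assumed values)
    print(crossbar_mac(G, V))  # [2.5, 4.0]
    print([stateful_nor(a, b) for a in (0, 1) for b in (0, 1)])  # [1, 0, 0, 0]
```

The contrast is the point of the sketch: the MAC result is an analog current that must be converted outside the array, whereas the NOR result is already stored in a cell and could serve as an input to a subsequent in-array gate without a read-out.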

References

Horowitz, M. Computing’s energy problem (and what we can do about it). In 2014 IEEE International Solid-State Circuits Conference Digest of Technical Papers (ISSCC) 10–14 (IEEE, 2014).

Tsividis, Y. Not your father’s analog computer. IEEE Spectr. 55, 38–43 (2017).

Sebastian, A. et al. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 15, 529–544 (2020).

Ankit, A. et al. Circuits and architectures for in-memory computing-based machine learning accelerators. IEEE Micro 40, 8–22 (2020).

Mannocci, P. et al. In-memory computing with emerging memory devices: status and outlook. APL Mach. Learn. 1, 010902 (2023).

Mutlu, O. et al. In Emerging Computing: from Devices to Systems: Looking Beyond Moore and Von Neumann (eds Sabry Aly, M. M. & Chattopadhyay, A.) 171–243 (Springer, 2023).

Kang, W., Deng, E., Wang, Z. & Zhao, W. in Applications of Emerging Memory Technology (ed. Suri, M.) 215–229 (Springer, 2020).

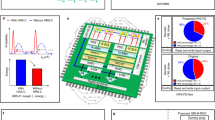

Wan, W. et al. A compute-in-memory chip based on resistive random-access memory. Nature 608, 504–512 (2022).

Verma, N. et al. In-memory computing: advances and prospects. IEEE J. Solid-State Circuits 11, 43–55 (2019).

Jhang, C. J. et al. Challenges and trends of SRAM-based computing-in-memory for AI edge devices. IEEE Trans. Circuits Syst. I 68, 1773–1786 (2021).

Oliveira, G. F. et al. Accelerating neural network inference with processing-in-DRAM: from the edge to the cloud. IEEE Micro 42, 25–38 (2022).

Wang, Z. et al. Resistive switching materials for information processing. Nat. Rev. Mater. 5, 173–195 (2020).

Xiao, T. P. et al. Analog architectures for neural network acceleration based on non-volatile memory. Appl. Phys. Rev. 7, 031301 (2020).

Khan, A. I., Keshavarzi, A. & Datta, S. The future of ferroelectric field-effect transistor technology. Nat. Electron. 3, 588–597 (2020).

Hu, M. et al. Hardware realization of BSB recall function using memristor crossbar arrays. In Proc. 49th Annual Design Automation Conference (DAC) 498–503 (Association for Computing Machinery, 2012).

Chen, W.-H. et al. CMOS-integrated memristive non-volatile computing-in-memory for AI edge processors. Nat. Electron. 2, 420–428 (2019).

Burr, G. W. et al. Experimental demonstration and tolerancing of a large-scale neural network (165 000 synapses) using phase-change memory as the synaptic weight element. IEEE Trans. Electron Devices 62, 3498–3507 (2015).

Patil, A. D. Hua, H., Gonugondla, S., Kang, M. & Shanbhag, N. R. An MRAM-based deep in-memory architecture for deep neural networks. In IEEE International Symposium on Circuits and Systems (ISCAS) 1–5 (IEEE, 2019).

Berdan, R. et al. Low-power linear computation using nonlinear ferroelectric tunnel junction memristors. Nat. Electron. 3, 259–266 (2020).

Jerry, M. et al. Ferroelectric FET analog synapse for acceleration of deep neural network training. In Technical Digest of the International Electron Devices Meeting (IEDM) 6.2.1–6.1.4 (IEEE, 2017).

Guo, X. et al. Fast, energy-efficient, robust, and reproducible mixed-signal neuromorphic classifier based on embedded NOR flash memory technology. In Technical Digest of the International Electron Devices Meeting (IEDM) 6.5.1–6.5.4 (IEEE, 2017).

Xie, S. et al. eDRAM-CIM: compute-in-memory design with reconfigurable embedded-dynamic-memory array realizing adaptive data converters and charge-domain computing. In 2021 IEEE International Solid-State Circuits Conference (ISSCC) 248–249 (IEEE, 2021).

Zhang, J., Wang, Z. & Verma, N. In-memory computation of a machine-learning classifier in a standard 6T SRAM array. IEEE J. Solid-State Circuits 52, 915–924 (2017).

Su, Y. et al. CIM-Spin: a scalable CMOS annealing processor with digital in-memory spin operators and register spins for combinatorial optimization problems. IEEE J. Solid State Circuits 57, 2263–2273 (2022).

Xie, S. et al. Ising-CIM: a reconfigurable and scalable compute within memory analog Ising accelerator for solving combinatorial optimization problems. IEEE J. Solid State Circuits 57, 3453–3465 (2022).

Pagiamtzis, K. & Sheikholeslami, A. Content-addressable memory (CAM) circuits and architectures: a tutorial and survey. IEEE J. Solid State Circuits 41, 712–727 (2006).

Yavits, L. et al. Resistive associative processor. IEEE Comput. Archit. Lett. 14, 148–151 (2015).

Ni, K. et al. Ferroelectric ternary content-addressable memory for one-shot learning. Nat. Electron. 2, 521–529 (2019).

Li, J. et al. 1 Mb 0.41 μm2 2T–2R cell nonvolatile TCAM with two-bit encoding and clocked self-referenced sensing. IEEE J. Solid-State Circuits 49, 896–907 (2014).

Li, C. et al. Analog content-addressable memories with memristors. Nat. Commun. 11, 1638 (2020).

Lin, C. C. et al. A 256b-wordlength ReRAM-based TCAM with 1ns search-time and 14× improvement in word length–energy efficiency–density product using 2.5T1R cell. In 2016 IEEE International Solid-State Circuits Conference (ISSCC) 136–137 (IEEE, 2016).

Vahdat, S. et al. Interstice: inverter-based memristive neural networks discretization for function approximation applications. IEEE Trans. Very Large Scale Integr. VLSI Syst. 28, 1578–1588 (2020).

Sun, Z. et al. Logic computing with stateful neural networks of resistive switches. Adv. Mater. 30, 1802554 (2018).

Imani, M., Gupta, S. & Rosing, T. Ultra-efficient processing in-memory for data intensive applications. In 2017 54th ACM/EDAC/IEEE Design Automation Conference (DAC) 1–6 (IEEE, 2017).

Guckert, L. & Swartzlander, E. E. Optimized memristor-based multipliers. IEEE Trans. Circuits Syst. I 64, 373–385 (2017).

Radakovits, D. et al. A memristive multiplier using semi-serial IMPLY-based adder. IEEE Trans. Circuits Syst. I 67, 1495–1506 (2020).

Haj-Ali, A. et al. IMAGING: In-Memory AlGorithms for Image processiNG. IEEE Trans. Circuits Syst. I 65, 4258–4271 (2018).

Imani, M., Gupta, S., Kim, Y. & Rosing, T. FloatPIM: in-memory acceleration of deep neural network training with high precision. In 2019 ACM/IEEE Annual International Symposium on Computer Architecture (ISCA) 802–815 (IEEE, 2019).

Leitersdorf, O. et al. AritPIM: high-throughput in-memory arithmetic. IEEE Trans. Emerg. Topics Comput. https://doi.org/10.1109/TETC.2023.3268137 (2023).

Borghetti, J. et al. ‘Memristive’ switches enable ‘stateful’ logic operations via material implication. Nature 464, 873–876 (2010).

Kvatinsky, S. et al. MAGIC—memristor-aided logic. IEEE Trans. Circuits Syst. II 61, 895–899 (2014).

Kim, Y. S. et al. Ternary logic with stateful neural networks using a bi-layered TaOx-based memristor exhibiting ternary states. Adv. Sci. 9, 2104107 (2022).

Hoffer, B. et al. Stateful logic using phase change memory. IEEE J. Explor. Solid-State Comput. Devices Circuits 8, 77–83 (2022).

Zabihi, M. et al. In-memory processing on the spintronic CRAM: from hardware design to application mapping. IEEE Trans. Comput. 68, 1159–1173 (2019).

Hoffer, B. & Kvatinsky, S. Performing stateful logic using spin–orbit torque (SOT) MRAM. In 2022 IEEE 22nd International Conference on Nanotechnology (NANO) 571–574 (IEEE, 2022).

Seshadri, V. et al. Ambit: in-memory accelerator for bulk bitwise operations using commodity DRAM technology. In 2017 50th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO) 273–287 (IEEE, 2017).

Gao, F., Tziantzioulis, G. & Wentzlaff, D. ComputeDRAM: in-memory compute using off-the-shelf DRAMs. In MICRO '52: Proc. 52nd Annual IEEE/ACM International Symposium on Microarchitecture 100–113 (Association for Computing Machinery, 2019).

Ali, M. F., Jaiswal, A. & Roy, K. In-memory low-cost bit-serial addition using commodity DRAM technology. IEEE Trans. Circuits Syst. I 67, 155–165 (2020).

Song, Y. et al. Reconfigurable and efficient implementation of 16 Boolean logics and full-adder functions. Adv. Sci. 2022, 2200036 (2022).

Kim, D., Jang, Y., Kim, T. & Park, J. BiMDiM: area efficient bi-directional MRAM digital in-memory computing. In 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS) 74–77 (IEEE, 2022).

Linn, E. et al. Beyond von Neumann—logic operations in passive crossbar arrays alongside memory operations. Nanotechnology 23, 305205 (2012).

Gao, S. et al. Implementation of complete Boolean logic functions in single complementary resistive switch. Sci. Rep. 5, 15467 (2015).

Gaillardon, P. E. et al. The Programmable Logic-in-Memory (PLiM) computer. In 2016 Design, Automation & Test in Europe Conference & Exhibition (DATE) 427–432 (IEEE, 2016).

Zhang, H. et al. Stateful reconfigurable logic via a single-voltage-gated spin Hall-effect driven magnetic tunnel junction in a spintronic memory. IEEE Trans. Electron Devices 64, 4295–4301 (2017).

Cassinerio, M., Ciocchini, N. & Ielmini, D. Logic computation in phase change materials by threshold and memory switching. Adv. Mater. 25, 5975–5980 (2013).

Chen, W.-H. et al. A 16 Mb dual-mode ReRAM macro with sub-14 ns computing-in-memory and memory functions enabled by self-write termination scheme. In 2017 IEEE International Electron Devices Meeting (IEDM) 28.2.1–28.2.4 (IEEE, 2017).

Jain, S., Ranjan, A., Roy, K. & Raghunathan, A. Computing in memory with spin-transfer torque magnetic RAM. IEEE Trans. Very Large Scale Integr. VLSI Syst. 26, 470–483 (2018).

Jeloka, S. et al. A 28 nm configurable memory (TCAM/BCAM/SRAM) using push-rule 6T bit cell enabling logic-in-memory. IEEE J. Solid-State Circuits 51, 1009–1021 (2016).

Eckert, C. et al. Neural cache: bit-serial in-cache acceleration of deep neural networks. In 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA) 383–396 (IEEE, 2018).

Kim, H., Chen, Q., Yoo, T., Kim, T. T.-H. & Kim, B. A 1–16b precision reconfigurable digital in-memory computing macro featuring column-MAC architecture and bit-serial computation. In ESSCIRC 2019—IEEE 45th European Solid State Circuits Conference (ESSCIRC) 345–348 (IEEE, 2019).

Chih, Y.-D. et al. An 89 TOPS/W and 16.3 TOPS/mm2 all-digital SRAM-based full-precision compute-in memory macro in 22 nm for machine-learning edge applications. In 2021 IEEE International Solid-State Circuits Conference (ISSCC) 252–253 (IEEE, 2021).

Kwon, Y.-C. et al. A 20nm 6GB function-in-memory DRAM based on HBM2 with a 1.2TFLOPS programmable computing unit using bank-level parallelism for machine learning applications. In 2021 IEEE International Solid-State Circuits Conference (ISSCC) 350–351 (IEEE, 2021).

Seshadri, V. et al. RowClone: fast and energy-efficient in-DRAM bulk data copy and initialization. In 2013 46th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO) 185–197 (IEEE, 2013).

Wang, Z. et al. Functionally complete Boolean logic in 1T1R resistive random-access memory. IEEE Electron Device Lett. 38, 179–182 (2017).

Breyer, E. T. et al. Perspective on ferroelectric, hafnium oxide based transistors for digital beyond von-Neumann computing. Appl. Phys. Lett. 118, 050501 (2021).

Dong, Q. et al. A 4 + 2T SRAM for searching and in-memory computing with 0.3-V VDDmin. IEEE J. Solid-State Circuits 53, 1006–1015 (2018).

Zhang, Y. et al. Recryptor: a reconfigurable cryptographic cortex-M0 processor with in-memory and near-memory computing for IoT security. IEEE J. Solid-State Circuits 53, 995–1005 (2018).

Agrawal, A. et al. X-SRAM: enabling in-memory Boolean computations in CMOS static random-access memories. IEEE Trans. Circuits Syst. I 65, 4219–4232 (2018).

Lee, K., Jeong, J., Cheon, S., Choi, W. & Park, J. Bit parallel 6T SRAM in-memory computing with reconfigurable bit-precision. In 2020 57th ACM/IEEE Design Automation Conference (DAC) 1–6 (IEEE, 2020).

Hung, J. M. et al. A four-megabit compute-in-memory macro with eight-bit precision based on CMOS and resistive random-access memory for AI edge devices. Nat. Electron. 4, 921–930 (2021).

Huo, Q. et al. A computing-in-memory macro based on three-dimensional resistive random-access memory. Nat. Electron. 5, 469–477 (2022).

Wang, W. et al. A memristive deep belief neural network based on silicon synapses. Nat. Electron. 5, 870–880 (2022).

Cui, J. et al. CMOS-compatible electrochemical synaptic transistor arrays for deep learning accelerators. Nat. Electron. 6, 292–300 (2023).

Huang, X. et al. An ultrafast bipolar flash memory for self-activated in-memory computing. Nat. Nanotechnol. 18, 486–492 (2023).

Hsieh, S.-E. et al. A 70.85-86.27TOPS/W PVT-insensitive 8b word-wise ACIM with post-processing relaxation. In 2023 IEEE International Solid-State Circuits Conference (ISSCC) 136–137 (IEEE, 2023).

Chen, P. et al. A 22nm delta–sigma computing-in-memory (Δ∑CIM) SRAM macro with near-zero-mean outputs and LSB-first ADCs achieving 21.38TOPS/W for 8b-MAC edge AI processing. In 2023 IEEE International Solid-State Circuits Conference (ISSCC) 140–141 (IEEE, 2023).

Wu, P.-C. et al. A 22nm 832Kb hybrid-domain floating-point SRAM in-memory-compute macro with 16.2-70.2 TFLOPS/W for high-accuracy AI-edge devices. In 2023 IEEE International Solid-State Circuits Conference (ISSCC) 126–127 (IEEE, 2023).

Yue, J. et al. A 28nm 16.9-300TOPS/W computing-in-memory processor supporting floating-point NN inference/training with intensive-CIM sparse-digital architecture. In 2023 IEEE International Solid-State Circuits Conference (ISSCC) 252–253 (IEEE, 2023).

Ikeda, S. et al. Magnetic tunnel junctions for spintronic memories and beyond. IEEE Trans. Electron Devices 54, 991–1002 (2006).

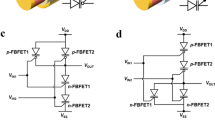

Yin, X. et al. Ferroelectric FETs-based nonvolatile logic-in-memory circuits. IEEE Trans. Very Large Scale Integr. VLSI Syst. 27, 159–172 (2019).

Li, C. et al. Analogue signal and image processing with large memristor crossbars. Nat. Electron. 1, 52–59 (2018).

Danial, L. et al. Two-terminal floating-gate transistors with a low-power memristive operation mode for analogue neuromorphic computing. Nat. Electron. 2, 596–605 (2019).

Agarwal, S. et al. Using floating-gate memory to train ideal accuracy neural networks. IEEE J. Explor. Solid-State Comput. Devices Circuits 5, 52–57 (2019).

Lin, Y.-Y. et al. A novel voltage-accumulation vector–matrix multiplication architecture using resistor-shunted floating gate flash memory device for low-power and high-density neural network applications. In 2018 IEEE International Electron Devices Meeting (IEDM) 2.4.1–2.4.4 (IEEE, 2018).

Jung, S. et al. A crossbar array of magnetoresistive memory devices for in-memory computing. Nature 601, 211–216 (2022).

Jaiswal, A. et al. 8T SRAM cell as a multibit dot-product engine for beyond von Neumann computing. IEEE Trans. Very Large Scale Integr. VLSI Syst. 27, 2556–2567 (2019).

Ali, M., Agrawal, A. & Roy, K. RAMANN: in-SRAM differentiable memory computations for memory-augmented neural networks. In ISLPED '20: Proc. ACM/IEEE International Symposium on Low Power Electronics and Design 61–66 (Association for Computing Machinery, 2020).

Biswas, A. & Chandrakasan, A. P. CONV-SRAM: an energy-efficient SRAM with in-memory dot-product computation for low-power convolutional neural networks. IEEE J. Solid-State Circuits 54, 217–230 (2019).

Jiang, Z. et al. XNOR-SRAM: in-memory computing SRAM macro for binary/ternary deep neural networks. In 2018 IEEE Symposium on VLSI Technology 173–174 (IEEE, 2018).

Si, X. et al. A 28nm 64Kb 6T SRAM computing-in-memory macro with 8b MAC operation for AI edge chips. In 2020 IEEE International Solid-State Circuits Conference (ISSCC) 246–247 (IEEE, 2020).

Chung, W., Si, M. & Ye, P. D. First demonstration of Ge ferroelectric nanowire FET as synaptic device for online learning in neural network with high number of conductance state and Gmax/Gmin. In 2018 IEEE International Electron Devices Meeting (IEDM) 15.2.1–15.2.4 (IEEE, 2018).

Giannopoulos, I. et al. 8-bit precision in-memory multiplication with projected phase-change memory. In 2018 IEEE International Electron Devices Meeting (IEDM) 27.7.1–27.7.4 (IEEE, 2018).

Lequeux, S. et al. A magnetic synapse: multilevel spin-torque memristor with perpendicular anisotropy. Sci. Rep. 6, 31510 (2016).

Gonugondla, S. K., Kang, M. & Shanbhag, N. A 42pJ/decision 3.12TOPS/W robust in-memory machine learning classifier with on-chip training. In 2018 IEEE International Solid-State Circuits Conference (ISSCC) 490–491 (IEEE, 2018).

Dong, Q. et al. A 351TOPS/W and 372.4GOPS compute-in-memory SRAM macro in 7nm FinFET CMOS for machine-learning applications. In 2020 IEEE International Solid-State Circuits Conference (ISSCC) 242–243 (IEEE, 2020).

Yao, P. et al. Fully hardware-implemented memristor convolutional neural network. Nature 577, 641–646 (2020).

Yu, S. et al. Computing-in-memory chips for deep learning: recent trends and prospects. IEEE Circuits Syst. Mag. 21, 31–56 (2021).

Hu, M. et al. Dot-product engine for neuromorphic computing: programming 1T1M crossbar to accelerate matrix–vector multiplication. In 2016 53rd ACM/EDAC/IEEE Design Automation Conference (DAC) 1–6 (IEEE, 2016).

Sun, Z. et al. Solving matrix equations in one step with cross-point resistive arrays. Proc. Natl Acad. Sci. USA 116, 4123–4128 (2019).

Sun, Z. et al. One-step regression and classification with cross-point resistive memory arrays. Sci. Adv. 6, eaay2378 (2020).

Devaux, F. The true Processing in Memory accelerator. In 2019 IEEE Hot Chips 31 Symposium (HCS) 1–24 (IEEE, 2019).

Reuben, J. & Pechmann, S. Accelerated addition in resistive RAM array using parallel-friendly majority gates. IEEE Trans. Very Large Scale Integr. VLSI Syst. 29, 1108–1121 (2021).

Haj-Ali, A. et al. Not in name alone: a memristive memory processing unit for real in-memory processing. IEEE Micro 38, 13–21 (2018).

Seo, J.-S. et al. Digital versus analog artificial intelligence accelerators: advances, trends, and emerging designs. IEEE Solid-State Circuits Mag. 14, 65–79 (2022).

Kim, D. et al. An overview of processing-in-memory circuits for artificial intelligence and machine learning. IEEE J. Emerg. Sel. Top. Circuits Syst. 12, 338–353 (2022).

Kim, Y. S. et al. Stateful in-memory logic system and its practical implementation in a TaOx-based bipolar-type memristive crossbar array. Adv. Intell. Syst. 2, 1900156 (2020).

Yu, S. Semiconductor Memory Devices and Circuits (CRC Press, 2022).

Banerjee, W. Challenges and applications of emerging nonvolatile memory devices. Electron 9, 1029 (2020).

Wolf, S. A. et al. The promise of nanomagnetics and spintronics for future logic and universal memory. Proc. IEEE 98, 2155–2168 (2010).

Endoh, T. et al. An overview of nonvolatile emerging memories—spintronics for working memories. IEEE J. Emerg. Sel. Top. Circuits Syst. 6, 109–119 (2016).

Kim, K.-H. et al. Wurtzite and fluorite ferroelectric materials for electronic memory. Nat. Nanotechnol. 18, 422–441 (2023).

Goda, A. Recent progress on 3D NAND flash technologies. Electron 10, 3156 (2021).

Chen, P.-Y. et al. Technology-design co-optimization of resistive cross-point array for accelerating learning algorithms on chip. In 2015 Design, Automation & Test in Europe Conference & Exhibition (DATE) 854–859 (IEEE, 2015).

Feinberg, B., Wang, S. & Ipek, E. Making memristive neural network accelerators reliable. In 2018 IEEE International Symposium on High Performance Computer Architecture (HPCA) 52–65 (IEEE, 2018).

Jain, S. & Raghunathan, A. CxDNN: hardware–software compensation methods for deep neural networks on resistive crossbar systems. ACM Trans. Embedded Comput. Syst. 18, 113 (2019).

Choi, S. et al. SiGe epitaxial memory for neuromorphic computing with reproducible high performance based on engineered dislocations. Nat. Mater. 17, 335–340 (2018).

Nukala, P. et al. Reversible oxygen migration and phase transitions in hafnia-based ferroelectric devices. Science 372, 630–635 (2021).

Boybat, I. et al. Temperature sensitivity of analog in-memory computing using phase-change memory. In 2021 IEEE International Electron Devices Meeting (IEDM) 28.3.1–28.3.4 (IEEE, 2021).

Shanbhag, N. R. & Roy, S. K. Comprehending in-memory computing trends via proper benchmarking. In 2022 IEEE Custom Integrated Circuits Conference (CICC) 01–07 (IEEE, 2022).

Houshmand, P., Sun, J. & Verhelst, M. Benchmarking and modeling of analog and digital SRAM in-memory computing architectures. Preprint at https://arxiv.org/abs/2305.18335 (2023).

Acknowledgements

This work has received funding from the National Key R&D Program of China (2020YFB2206001, 2022ZD0118901), the National Natural Science Foundation of China (62004002, 92064004, 61927901, 62025401, 61834001, 92264203), the 111 Project (B18001) and the NSF-BSF (2020-613). A.M. acknowledges financial support from the Royal Academy of Engineering in the form of a Senior Research Fellowship.

Author information

Contributions

All authors contributed to the preparation of the manuscript.

Ethics declarations

Competing interests

A.M. is a founder and director of Intrinsic Semiconductor Technologies Ltd (www.intrinsicst.com), a spin-out company commercializing silicon oxide RRAM.

Peer review

Nature Electronics thanks Kai Ni and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sun, Z., Kvatinsky, S., Si, X. et al. A full spectrum of computing-in-memory technologies. Nat Electron 6, 823–835 (2023). https://doi.org/10.1038/s41928-023-01053-4

DOI: https://doi.org/10.1038/s41928-023-01053-4