Abstract

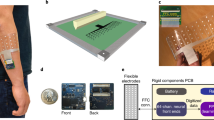

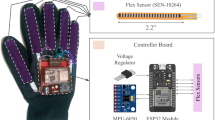

Signed languages are not as pervasive a conversational medium as spoken languages due to the history of institutional suppression of the former and the linguistic hegemony of the latter. This has led to a communication barrier between signers and non-signers that could be mitigated by technology-mediated approaches. Here, we show that a wearable sign-to-speech translation system, assisted by machine learning, can accurately translate the hand gestures of American Sign Language into speech. The system is composed of yarn-based stretchable sensor arrays and a wireless printed circuit board, and offers high sensitivity and a fast response time, enabling signs to be translated into spoken words in real time. By analysing 660 acquired sign language hand-gesture recognition patterns, we demonstrate a recognition rate of up to 98.63% and a recognition time of less than 1 s.
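The abstract does not say which machine-learning algorithm maps sensor signals to signs. Purely as an illustration of the general idea, the sketch below assumes each gesture is recorded as a multichannel voltage time series from the sensor array (the channel layout is hypothetical), reduces each recording to per-channel mean and peak-to-peak features, and classifies it with a nearest-centroid rule. The recognition rate is then the fraction of test gestures classified correctly. The feature set, the classifier, and every function name here are assumptions for illustration, not the authors' method.

```python
from math import dist
from statistics import mean

# Hypothetical layout: recording[channel][sample] = sensor voltage.
Recording = list[list[float]]


def features(rec: Recording) -> list[float]:
    """Concatenate the mean and peak-to-peak amplitude of every channel."""
    feats: list[float] = []
    for channel in rec:
        feats.append(mean(channel))
        feats.append(max(channel) - min(channel))
    return feats


def train_centroids(samples: list[tuple[Recording, str]]) -> dict[str, list[float]]:
    """Average the feature vectors of each gesture label into one centroid."""
    grouped: dict[str, list[list[float]]] = {}
    for rec, label in samples:
        grouped.setdefault(label, []).append(features(rec))
    return {label: [mean(col) for col in zip(*vecs)] for label, vecs in grouped.items()}


def classify(rec: Recording, centroids: dict[str, list[float]]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    f = features(rec)
    return min(centroids, key=lambda label: dist(f, centroids[label]))


def recognition_rate(test: list[tuple[Recording, str]], centroids: dict[str, list[float]]) -> float:
    """Fraction of test recordings whose predicted label matches the true label."""
    correct = sum(classify(rec, centroids) == label for rec, label in test)
    return correct / len(test)


if __name__ == "__main__":
    # Synthetic two-channel recordings for two made-up gesture labels.
    train = [
        ([[0.1, 0.2, 0.1], [0.9, 1.0, 0.9]], "A"),
        ([[0.2, 0.1, 0.2], [1.0, 0.9, 1.0]], "A"),
        ([[0.9, 1.0, 0.9], [0.1, 0.2, 0.1]], "B"),
    ]
    test = [
        ([[0.15, 0.15, 0.15], [0.95, 0.95, 0.95]], "A"),
        ([[0.95, 0.95, 0.95], [0.1, 0.1, 0.1]], "B"),
    ]
    centroids = train_centroids(train)
    print(f"recognition rate: {recognition_rate(test, centroids):.2%}")  # prints "recognition rate: 100.00%"
```

A nearest-centroid rule is used only because it is short and easy to follow; a real system would likely use richer features and a stronger classifier, evaluated on held-out recordings.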

Data availability

The data that support the plots within this paper and other findings of the study are available from the corresponding authors upon reasonable request.

Code availability

The code is available from the corresponding authors upon reasonable request.

Acknowledgements

J.Y. acknowledges support from the National Natural Science Foundation of China (no. 51675069), the Fundamental Research Funds for the Central Universities (nos. 2018CDQYGD0020 and cqu2018CDHB1A05), the Scientific and Technological Research Program of Chongqing Municipal Education Commission (KJ1703047) and the Natural Science Foundation Projects of Chongqing (cstc2017shmsA40018 and cstc2018jcyjAX0076). J.C. also acknowledges the Henry Samueli School of Engineering & Applied Science and the Department of Bioengineering at the University of California, Los Angeles for start-up support. We also thank B. Lewis in the Department of Linguistics at the University of California, Los Angeles for an inspiring discussion on avoiding a deficit-based framework when describing deaf people and sign language in our Article.

Author information

Contributions

J.C. and J.Y. planned the study and supervised the whole project. J.Y., J.C. and Z.Z. conceived the idea, designed the experiment, analysed the data and composed the manuscript. Z.Z., K.C., X.L., S.Z., Y.W., Y.Z., K.M., C.S., Q.H., W.F., E.F., Z.L., X.T. and W.D. performed all of the experiments and made technical comments on the manuscript. J.C. submitted the manuscript and was the lead contact.

Ethics declarations

Competing interests

J.C., J.Y. and Z.Z. have filed a patent based on this work under the US provisional patent application no. 62/967,509.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figs. 1–21, Note 1 and Tables 1–3.

Supplementary Video 1

Wearable yarn-based stretchable sensor array (YSSA) for sign language gesture acquisition.

Supplementary Video 2

Wearable YSSA for sign language translation.

About this article

Cite this article

Zhou, Z., Chen, K., Li, X. et al. Sign-to-speech translation using machine-learning-assisted stretchable sensor arrays. Nat Electron 3, 571–578 (2020). https://doi.org/10.1038/s41928-020-0428-6

DOI: https://doi.org/10.1038/s41928-020-0428-6
