Abstract

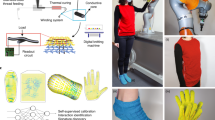

Accurate real-time tracking of dexterous hand movements has numerous applications in human–computer interaction, the metaverse, robotics and tele-health. Capturing realistic hand movements is challenging because of the large number of articulations and degrees of freedom. Here we report accurate and dynamic tracking of articulated hand and finger movements using stretchable, washable smart gloves with embedded helical sensor yarns and inertial measurement units. The sensor yarns have a high dynamic range, responding to strains as low as 0.005% and as high as 155%, and show stability during extensive use and washing cycles. Using multi-stage machine learning, we achieve average joint-angle estimation root mean square errors of 1.21° and 1.45° for intra- and inter-participant cross-validation, respectively, matching the accuracy of costly motion-capture cameras without occlusion or field-of-view limitations. We report a data augmentation technique that enhances robustness to noise and variations of sensors. We demonstrate accurate tracking of dexterous hand movements during object interactions, opening new avenues of applications, including accurate typing on a mock paper keyboard, recognition of complex dynamic and static gestures adapted from American Sign Language, and object identification.
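As a rough illustration of two quantities named in the abstract, the sketch below computes a root mean square error over joint angles and applies a simple noise-and-gain perturbation to a sensor trace. It is a minimal sketch under stated assumptions: the function names, the toy angle values and the perturbation scheme (a random gain plus additive Gaussian noise) are hypothetical and are not taken from the paper, whose augmentation technique is not described in this section.

```python
import math
import random


def joint_angle_rmse(predicted_deg, reference_deg):
    """Root mean square error between two equal-length angle sequences (degrees)."""
    if len(predicted_deg) != len(reference_deg) or not predicted_deg:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - r) ** 2 for p, r in zip(predicted_deg, reference_deg)]
    return math.sqrt(sum(squared) / len(squared))


def augment_with_noise(samples, noise_std, gain_spread, rng):
    """Return a perturbed copy of a sensor trace.

    Hypothetical scheme for illustration only: one random gain per call
    (mimicking sensor-to-sensor variation) plus additive Gaussian noise.
    """
    gain = 1.0 + rng.uniform(-gain_spread, gain_spread)
    return [gain * s + rng.gauss(0.0, noise_std) for s in samples]


# Toy data: every prediction is off by exactly one degree.
reference = [10.0, 20.0, 30.0, 40.0]
predicted = [11.0, 19.0, 31.0, 39.0]
rmse_value = joint_angle_rmse(predicted, reference)

# Seeded generator so the perturbation is reproducible.
rng = random.Random(0)
augmented = augment_with_noise(reference, noise_std=0.5, gain_spread=0.05, rng=rng)
```

In this toy case each squared error is 1, so the RMSE is 1.0°. Reported figures such as 1.21° and 1.45° would be averages of this kind of quantity over joints and cross-validation folds.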

Data availability

The data supporting this study’s findings are available from the project page (https://feel.ece.ubc.ca/SmartTextileGlove/), which contains detailed explanations of all the datasets as well as a direct link to a Google Drive repository (https://drive.google.com/drive/folders/1HWjG_6Y2G7XNEeI19Aids0g-dcufncGJ?usp=share_link) from which the datasets can be downloaded. Source data are provided with this paper.

Code availability

The code supporting this study’s findings is available from https://github.com/arvintashakori/SmartTextileGlove (ref. 53).

References

Luo, Y. et al. Learning human–environment interactions using conformal tactile textiles. Nat. Electron. 4, 193–201 (2021).

Moin, A. et al. A wearable biosensing system with in-sensor adaptive machine learning for hand gesture recognition. Nat. Electron. 4, 54–63 (2021).

Zhou, Z. et al. Sign-to-speech translation using machine-learning-assisted stretchable sensor arrays. Nat. Electron. 3, 571–578 (2020).

Chen, W. et al. A survey on hand pose estimation with wearable sensors and computer-vision-based methods. Sensors 20, 1074 (2020).

Glauser, O. et al. Interactive hand pose estimation using a stretch-sensing soft glove. ACM Trans. Graph. 38, 41 (2019).

Wang, M. et al. Gesture recognition using a bioinspired learning architecture that integrates visual data with somatosensory data from stretchable sensors. Nat. Electron. 3, 563–570 (2020).

Zhu, M., Sun, Z. & Lee, C. Soft modular glove with multimodal sensing and augmented haptic feedback enabled by materials’ multifunctionalities. ACS Nano 16, 14097–14110 (2022).

Hughes, J. et al. A simple, inexpensive, wearable glove with hybrid resistive-pressure sensors for computational sensing, proprioception, and task identification. Adv. Intell. Syst. 2, 2000002 (2020).

Sun, Z., Zhu, M., Shan, X. & Lee, C. Augmented tactile-perception and haptic-feedback rings as human–machine interfaces aiming for immersive interactions. Nat. Commun. 13, 5224 (2022).

Sundaram, S. et al. Learning the signatures of the human grasp using a scalable tactile glove. Nature 569, 698–702 (2019).

Lei, Q. et al. A survey of vision-based human action evaluation methods. Sensors 19, 4129 (2019).

Wen, F., Zhang, Z., He, T. & Lee, C. AI enabled sign language recognition and VR space bidirectional communication using triboelectric smart glove. Nat. Commun. 12, 5378 (2021).

Henderson, J. et al. Review of wearable sensor-based health monitoring glove devices for rheumatoid arthritis. Sensors 21, 1576 (2021).

Hughes, J. & Iida, F. Multi-functional soft strain sensors for wearable physiological monitoring. Sensors 18, 3822 (2018).

Atiqur, M.A.R., Antar, A. & Shahid, O. Vision-based action understanding for assistive healthcare: a short review. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 1–11 (2019).

Yang, C. et al. Perspectives of users for a future interactive wearable system for upper extremity rehabilitation following stroke: a qualitative study. J. Neuroeng. Rehabil. 20, 1–10 (2023).

Moin, A. et al. An EMG gesture recognition system with flexible high-density sensors and brain-inspired high-dimensional classifier. In IEEE International Symposium on Circuits and Systems (ISCAS), 1–5 (2018).

Karunratanakul, K. et al. Grasping field: learning implicit representations for human grasps. International Conference on 3D Vision (3DV), 333–344 (2020).

Smith, B. et al. Constraining dense hand surface tracking with elasticity. ACM Trans. Graph. 39, 219 (2020).

Xie, K. et al. Physics-based human motion estimation and synthesis from videos. In Proc. IEEE/CVF International Conference on Computer Vision 11532–11541 (IEEE, 2021).

Jiang, L., Xia, H. & Guo, C. A model-based system for real-time articulated hand tracking using a simple data glove and a depth camera. Sensors 19, 4680 (2019).

Guzov, V. et al. Human POSEitioning System (HPS): 3D human pose estimation and self-localization in large scenes from body-mounted sensors. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 4316–4327 (2021).

Ehsani, K. et al. Use the force, Luke! Learning to predict physical forces by simulating effects. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 13–19 (2020).

Ge, L. et al. 3D hand shape and pose estimation from a single RGB image. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 10833–10842 (IEEE, 2019).

Wu, E. et al. Back-hand-pose: 3D hand pose estimation for a wrist-worn camera via dorsum deformation network. In UIST 2020—Proc. 33rd Annual ACM Symposium on User Interface Software and Technology 1147–1160 (ACM, 2020).

Kocabas, M., Athanasiou, N. & Black, M. VIBE: video inference for human body pose and shape estimation. In Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition 5252–5262 (IEEE, 2020).

Hu, F. et al. FingerTrak: continuous 3D hand pose tracking by deep learning hand silhouettes captured by miniature thermal cameras on wrist. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 4, 1–24 (2020).

Cao, Z., Hidalgo, G., Simon, T., Wei, S. & Sheikh, Y. OpenPose: realtime multi-person 2D pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 43, 172–186 (2021).

Si, Y. et al. Flexible strain sensors for wearable hand gesture recognition: from devices to systems. Adv. Intell. Syst. 4, 2100046 (2022).

Zhang, Q. et al. Dynamic modeling of hand-object interactions via tactile sensing. In IEEE International Conference on Intelligent Robots and Systems 2874–2881 (IEEE, 2021).

Wang, S. et al. Hand gesture recognition framework using a lie group based spatio-temporal recurrent network with multiple hand-worn motion sensors. Inf. Sci. 606, 722–741 (2022).

Deng, L. et al. Sen-Glove: a lightweight wearable glove for hand assistance with soft joint sensing. In International Conference on Robotics and Automation (ICRA), 5170–5175 (IEEE, 2022).

Pan, J. et al. Hybrid-flexible bimodal sensing wearable glove system for complex hand gesture recognition. ACS Sens. 6, 4156–4166 (2021).

Liu, Y., Zhang, S. & Gowda, M. NeuroPose: 3D hand pose tracking using EMG wearables. In Proc. of the Web Conference (WWW 2021), 1471–1482 (2021).

Denz, R. et al. A high-accuracy, low-budget sensor glove for trajectory model learning. In 2021 20th International Conference on Advanced Robotics, ICAR 2021 1109–1115 (2021).

Luo, Y. Discovering the Patterns of Human–Environment Interactions Using Scalable Functional Textiles (Massachusetts Institute of Technology, 2020).

Côté-Allard, U. et al. Deep learning for electromyographic hand gesture signal classification using transfer learning. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 760–771 (2019).

Zhang, W. et al. Intelligent knee sleeves: a real-time multimodal dataset for 3d lower body motion estimation using smart textile. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2023).

Luo, Y. et al. Intelligent carpet: inferring 3D human pose from tactile signals. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 11255–11265 (IEEE, 2021).

Zhou, L., Shen, W., Liu, Y. & Zhang, Y. A scalable durable and seamlessly integrated knitted fabric strain sensor for human motion tracking. Adv. Mater. Technol. 7, 2200082 (2022).

Brahmbhatt, S. et al. ContactPose: a dataset of grasps with object contact and hand pose. In Computer Vision—ECCV 2020 12358, 361–378 (Springer, 2020).

Lei, X., Sun, L. & Xia, Y. Lost data reconstruction for structural health monitoring using deep convolutional generative adversarial networks. Struct. Health Monit. 20, 2069–2087 (2021).

Soltanian, S. et al. Highly piezoresistive compliant nanofibrous sensors for tactile and epidermal electronic applications. J. Mater. Res. 30, 121–129 (2015).

Soltanian, S. et al. Highly stretchable, sparse, metallized nanofiber webs as thin, transferrable transparent conductors. Adv. Energy Mater. 3, 1332–1337 (2013).

Konku-Asase, Y., Yaya, A. & Kan-Dapaah, K. Curing temperature effects on the tensile properties and hardness of γ-Fe2O3 reinforced PDMS nanocomposites. Adv. Mater. Sci. Eng. 2020, 6562373 (2020).

Liu, Z. et al. Functionalized fiber-based strain sensors: pathway to next-generation wearable electronics. Nanomicro Lett. 14, 61 (2022).

Ho, D. et al. Multifunctional smart textronics with blow-spun nonwoven fabrics. Adv. Funct. Mater. 29, 1900025 (2019).

Vu, C. & Kim, J. Highly sensitive e-textile strain sensors enhanced by geometrical treatment for human monitoring. Sensors 20, 2383 (2020).

Tang, Z. et al. Highly stretchable core-sheath fibers via wet-spinning for wearable strain sensors. ACS Appl. Mater. Interfaces 10, 6624–6635 (2018).

Gao, Y. et al. 3D-printed coaxial fibers for integrated wearable sensor skin. Adv. Mater. Technol. 4, 1900504 (2019).

Cai, G. et al. Highly stretchable sheath-core yarns for multifunctional wearable electronics. ACS Appl. Mater. Interfaces 12, 29717–29727 (2020).

Xu, L. et al. Moisture-resilient graphene-dyed wool fabric for strain sensing. ACS Appl. Mater. Interfaces 12, 13265–13274 (2020).

Tashakori, A. arvintashakori/SmartTextileGlove: v1.0.0. Zenodo https://doi.org/10.5281/zenodo.10128938 (2023).

Acknowledgements

We acknowledge the support of NSERC-CIHR (CHRP549589-20, CPG-170611) awarded to J.J.E. and P.S., NSERC Discovery (NSERC: RGPIN-2017-04666 and RGPAS-2017-507964) awarded to P.S., NSERC Alliance (ALLRP 549207-19) awarded to P.S., Mitacs (IT14342 and IT11535) awarded to P.S., and CFI, as well as the financial and technical support of Texavie Technologies Inc. and their staff.

Author information

Contributions

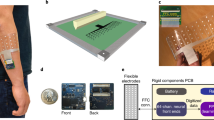

A.T. and P.S. developed the system model and implemented the learning algorithm, iOS mobile application, data pipeline, PC-based data acquisition software, Unity application and firmware parts. P.S., Z.J. and S.S. designed the yarn-based strain sensors. Z.J., A.S., S.S., H.N., K.L. and P.S. developed the hardware and fabricated the gloves and sensors. A.T. performed the experiments and analysis with help and input from others. C.N. helped with the PCB box design and fabrication. P.S., A.S., J.J.E., C.-l.Y. and Z.J.W. oversaw the project. All authors contributed to the writing of the paper and the analysis of results.

Ethics declarations

Competing interests

P.S., A.T., Z.J., C.N., A.S., S.S. and H.N. have filed a patent based on this work under the US provisional patent application no. 63/422,867. The other authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks the anonymous reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Fabrication and characteristics of HSYs.

a, Fabrication process of HSYs and gloves. b, SEM images of the yarn sensors before PDMS coating. c, Sensitivity of HSY resistance to various compressive pressure values applied at a frequency of 1 Hz. A metal indenter measuring 5 mm × 5 mm was used to apply pressure normal to the fabric. (The data points present the mean values ± standard deviation of 20 samples.) d, The strain sensitivity of our insulated yarn sensors made from optimized composites of carbon particles with highly stretchable elastomers, demonstrating high stretchability up to 1,000%, but showing more hysteresis for >500% stretch and slower responsiveness due to the softer nature of these sensors in comparison to HSYs. This highlights the superior performance of HSYs for the proposed smart glove real-time applications, in which the maximum stretch of the fabric is less than 120%.

Extended Data Fig. 2 Power consumption.

Custom-made wireless board power consumption breakdown for different components including BLE chip, IMU chips, and all HSYs.

Extended Data Fig. 3 Keyboard typing detection.

Inter-session cross-validation accuracy results for the keyboard typing detection algorithm.

Extended Data Fig. 4 Dynamic gesture recognition.

Confusion matrix for a, intra-subject (accuracy: 97.31%), and b, inter-subject cross-validation (accuracy: 94.05%).

Extended Data Fig. 5 Static gesture recognition.

Confusion matrix for a, intra-subject (accuracy: 97.81%), and b, inter-subject cross-validation (accuracy: 94.60%).

Extended Data Fig. 6 Object detection.

Confusion matrix for a, intra-subject (accuracy: 95.02%), and b, inter-subject cross-validation (accuracy: 90.20%).
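The accuracies quoted with the confusion matrices in Extended Data Figs. 4–6 are of the kind sketched below: overall accuracy is the sum of the diagonal divided by the sum of all entries. The sketch also shows one common way to build inter-subject folds, holding out one participant at a time. Both helpers and the toy matrix are hypothetical, and leave-one-subject-out is an assumption; the exact fold construction used in the paper is not stated in this section.

```python
def confusion_accuracy(matrix):
    """Overall accuracy of a square confusion matrix (rows: true, columns: predicted)."""
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix has no samples")
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject.

    Assumed protocol for illustration: every sample of one participant is
    used for testing, and all remaining samples are used for training.
    """
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


# Toy three-class matrix: 28 of 30 samples lie on the diagonal.
toy_matrix = [
    [9, 1, 0],
    [0, 10, 0],
    [1, 0, 9],
]
accuracy = confusion_accuracy(toy_matrix)

# Toy participant labels, one per sample.
folds = list(leave_one_subject_out(["A", "A", "B", "C", "B"]))
```

An intra-subject evaluation would instead split each participant's own samples between training and testing, which is one plausible reason the intra-subject accuracies above are higher than the inter-subject ones.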

Supplementary information

Supplementary Information

Supplementary Algorithms 1 and 2, Tables 1–3, and Figs. 1–12.

Supplementary Video 1

Dynamic articulated tracking of finger movements.

Supplementary Video 2

Typing on a mock keyboard.

Supplementary Video 3

Three-dimensional drawing in air.

Supplementary Video 4

Static hand-gesture recognition.

Supplementary Video 5

Dynamic hand-gesture recognition.

Supplementary Video 6

Object detection based on the participants’ grasp pattern.

Source data

Source Data Fig. 2

Sensor source data.

Source Data Fig. 3

Hand-pose-estimation results and data augmentation method.

Source Data Fig. 4

Click-detection and wrist-angle-detection results.

Source Data Fig. 5

Gesture- and object-detection results.

Source Data Extended Data Fig. 2

Source data for custom-made wireless board power consumption breakdown for different components, including BLE chip, IMU chips and all HSYs.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tashakori, A., Jiang, Z., Servati, A. et al. Capturing complex hand movements and object interactions using machine learning-powered stretchable smart textile gloves. Nat Mach Intell 6, 106–118 (2024). https://doi.org/10.1038/s42256-023-00780-9

DOI: https://doi.org/10.1038/s42256-023-00780-9