Abstract

Efforts to diagnose the risks of a changing climate often rely on downscaled and bias-corrected climate information, making it important to understand the uncertainties and potential biases of this approach. Here, we perform a variance decomposition to partition uncertainty in global climate projections and quantify the relative importance of downscaling and bias-correction. We analyze simple climate metrics such as annual temperature and precipitation averages, as well as several indices of climate extremes. We find that downscaling and bias-correction often contribute substantial uncertainty to local decision-relevant climate outcomes, though our results are strongly heterogeneous across space, time, and climate metrics. Our results can provide guidance to impact modelers and decision-makers regarding the uncertainties associated with downscaling and bias-correction when performing local-scale analyses, as neglecting to account for these uncertainties may risk overconfidence relative to the full range of possible climate futures.


Introduction

Climate change is a global phenomenon that manifests on regional to local scales1. Managing the risks of a changing climate thus requires accurate, high-resolution climate projections as well as an understanding of the associated uncertainties. One of our primary sources of information about future climate change is ensembles of coupled general circulation models (GCMs) run under various greenhouse gas emissions scenarios2. However, GCM projections of future climate are highly uncertain, owing to three primary factors: model uncertainty, arising from differences in the structures and parameters of GCMs and thus their responses to the same radiative forcing input; scenario uncertainty, arising from the range of possible future greenhouse gas emissions trajectories; and internal variability, arising from the chaotic nature of the Earth system.

Understanding the relative importance of each of these sources of uncertainty can help guide research agendas and inform the modeling choices of end-users. Several previous studies have made important progress towards this goal for a variety of both climate and socioeconomic outcomes3,4,5,6,7,8. Hawkins and Sutton3 (hereafter, HS09) use model outputs from the Coupled Model Intercomparison Project Phase 3 (CMIP3) to partition uncertainty in global and regional temperature projections, later extending their analysis to precipitation5. More recently, Lehner et al.6 (hereafter, L20) leverage single model initial condition large ensembles (SMILEs) alongside CMIP6 outputs to better characterize internal variability, particularly at regional to local scales where its influence can be dominant. Using a similar SMILE-based approach, Blanusa et al.7 (hereafter, B23) highlight the importance of internal variability in driving daily temperature and precipitation extremes.

While these works have led to many useful insights, they primarily rely on GCM outputs that are typically viewed as unsuitable for downstream analyses owing to their coarse spatial resolutions and systematic biases9. GCM outputs often need to be downscaled (to increase the spatial resolution) and bias-corrected (to remove systematic biases) before being considered suitable for the wide variety of end-uses in which they might be employed, including impact assessments10,11, adaptation planning12, infrastructure design13, and financial risk disclosures14. However, constructing a downscaled and bias-corrected ensemble requires making several methodological choices15,16 that can combine to produce considerable differences in the representation of temperature and precipitation, in particular for extremes17,18,19,20. Such differences can persist in impact assessments, for example, related to hydrology21,22,23 or ecosystem dynamics24. Due in part to these insights, a separate body of work has emerged that aims to quantify the importance of downscaling and bias-correction relative to other sources of uncertainty25,26,27,28,29,30,31. However, these studies often report mixed conclusions: for example, Chegwidden et al.27 analyze hydrologic variables in the Pacific Northwest region of North America and find that the choice of downscaling algorithm does not contribute meaningfully to projection spread; in contrast, Wootten et al.29 focus on meteorological variables in the southeastern United States and conclude that impact assessments using only a single set of downscaled and bias-corrected GCMs may suffer from overconfidence. Many of the conflicting results in this literature can be explained by different studies focusing on distinct and often small geographic regions, or on varying sets of meteorological or hydrological variables. Each study also relies on a unique sampling of GCMs, scenarios, and downscaling and bias-correction algorithms, which can lead to different uncertainty decompositions.

In this work, we aim to address the above literature gaps by quantifying the contribution of downscaling and bias-correction to projection uncertainty for a variety of climate metrics at a global scale. Following the simple variance decomposition approach of previous works29, we account for scenario uncertainty, model uncertainty, downscaling and bias-correction uncertainty, and interannual variability. Our approach involves calculating the time-evolving relative contribution of each source to the total projection spread (see “Methods”). We focus on statistically downscaled and bias-corrected ensembles and include, to our knowledge, all global, publicly available datasets with parent GCMs taken from the CMIP6 repository32. This leads to a super-ensemble comprising ~200 downscaled and bias-corrected model outputs across 4 emissions scenarios, 22 parent CMIP6 models, and 5 downscaling and bias-correction algorithms (Supplementary Table 1). Owing to data availability, we are restricted to analyzing metrics of climate change derived from daily maximum or minimum temperature and daily precipitation. Our selection of indicators includes annual temperature and precipitation averages as well as several indices of climate extremes due to their potential for large impacts on a broad variety of human–environment systems33.
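The decomposition can be illustrated with a minimal Python sketch in the spirit of HS09. It assumes a hypothetical array `x` holding one climate metric at one grid point, with shape (scenario, gcm, method, year) and NaN for unavailable combinations. It also uses a 4th-order polynomial fit to separate each forced signal from year-to-year noise. These choices and the function names are illustrative assumptions, not the exact procedure described in Methods.

```python
import numpy as np

def smooth_trend(x, years, order=4):
    """Fit a polynomial trend along the last (time) axis of every series.

    Assumption: a 4th-order polynomial, following HS09; the paper's own
    smoothing choice may differ.
    """
    t = (years - years.mean()) / years.std()  # rescale time for a well-conditioned fit
    flat = x.reshape(-1, x.shape[-1])
    fitted = np.full_like(flat, np.nan)
    for i, series in enumerate(flat):
        if np.all(np.isfinite(series)):  # skip unavailable scenario/GCM/method combos
            coeffs = np.polynomial.polynomial.polyfit(t, series, order)
            fitted[i] = np.polynomial.polynomial.polyval(t, coeffs)
    return fitted.reshape(x.shape)

def decompose(x, years):
    """Time-evolving variance fractions; x has shape (scenario, gcm, method, year)."""
    fit = smooth_trend(x, years)
    # Interannual variability: residual variance about each smooth trend,
    # pooled over all series and held constant in time.
    iv = np.nanvar(x - fit)
    # Downscaling: spread across methods, averaged over scenarios and GCMs.
    ds = np.nanmean(np.nanvar(fit, axis=2), axis=(0, 1))
    # Model: spread across GCMs of the method-mean, averaged over scenarios.
    model = np.nanmean(np.nanvar(np.nanmean(fit, axis=2), axis=1), axis=0)
    # Scenario: spread across scenarios of the GCM- and method-mean.
    scen = np.nanvar(np.nanmean(fit, axis=(1, 2)), axis=0)
    total = scen + model + ds + iv
    return {"scenario": scen / total, "model": model / total,
            "downscaling": ds / total, "interannual": iv / total}
```

Because the four terms are divided by their sum, the returned fractions add to one in every year. This is what allows each source to be expressed as a percentage of the total variance.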

Our uncertainty partitioning results are strongly heterogeneous across space, time, and climate metrics. However, in general, we find that downscaling and bias-correction contribute a non-negligible fraction of the total projection variance (typically no less than 25%, globally averaged). In many cases they represent the primary source of uncertainty. Downscaling and bias-correction are particularly important over the near term (early-to-mid 21st century), in projections of precipitation, in projections of extremes, in regions of complex terrain, and in regions where historical observations disagree. Our results corroborate previous works showing that in many instances, relying on a single set of downscaled and bias-corrected outputs can risk overconfidence29,34. For stakeholders or impact modelers who lack the computational capacity to extensively sample across all four sources of uncertainty, our results may also assist in deciding which factors to prioritize.

Results

Hereafter, to improve readability, we use the terms “downscaled” or “downscaling” to encompass the outputs or methods of downscaled and bias-corrected ensembles, unless the distinction between downscaling and bias-correction is important.

Variance decomposition of climate averages

We begin by analyzing indicators of long-term climatic change, namely annual average temperature and annual total precipitation. Before moving to the global picture, we focus on three example locations: New Delhi, India; Seattle, USA; and Lagos, Nigeria. In addition to being populous and economically important cities with distinct climates, these locations allow a comparison to previous works (L20, B23). The variance decomposition results for each city, as well as each individual downscaled projection, are shown in Fig. 1 (projections conditioned on each emissions scenario are shown in Supplementary Figs. 1–4). There is broad agreement on the sign of change for both temperature and precipitation, with average temperatures generally increasing in all locations (Fig. 1a–c) and total precipitation slightly increasing in New Delhi and Seattle (Fig. 1g, h) while remaining approximately constant in Lagos (Fig. 1i). However, there is considerable projection spread for all metrics and locations, and the resulting variance decompositions lead to different interpretations as to the driving factors. For temperature projections (Fig. 1d–f), the contribution of scenario uncertainty is similar in all three locations, starting small and only becoming non-negligible after around 2050. The reverse is true for interannual variability, which is more important in the first half of the century and declines over time. Similarly, the relative contribution of downscaling is largest over the near term and declines over time. However, there are considerable differences in magnitude across the three cities: temperature projections in New Delhi show little dependence on the choice of downscaled ensemble (Fig. 1d), whereas downscaling is the dominant uncertainty in Lagos long into the 21st century (Fig. 1f). For precipitation projections, a qualitatively different uncertainty decomposition emerges (Fig. 1j–l). Interannual variability is much more important in all locations, while the contribution of scenario uncertainty virtually disappears. In Seattle, downscaling is responsible for a substantial fraction of the variance of precipitation projections (Fig. 1k), model uncertainty contributes a small but perceptible fraction, and the overall decomposition changes little over time. This contrasts with New Delhi (Fig. 1j) and Lagos (Fig. 1l), where model uncertainty is relatively more important and grows over time.

Fig. 1: a–c Timeseries of annual average temperature from each downscaled model output. Gray lines show individual downscaled outputs and colored lines of different styles show associated ensemble-scenario means. Outputs for each city are taken from the single grid point encompassing their respective locations. d–f Variance decomposition of annual average temperatures corresponding to the timeseries plots in (a–c). The contribution of each uncertainty source is expressed as a percentage of the total variance. g–i Timeseries of annual total precipitation, similar to (a–c). j–l Variance decomposition of annual total precipitation, similar to (d–f).

Each variance decomposition shown in Fig. 1 arises from a combination of factors unique to each location. For example, the importance of downscaling uncertainty for Seattle precipitation may be related to its positioning in a mountainous region35, whereas the dominance of downscaling uncertainty in Lagos temperature projections may be driven by disagreements among the underlying observational datasets used to perform the downscaling (Supplementary Figs. 11 and 12). Fully explaining each uncertainty decomposition would require expertise regarding the many physical processes affecting each location’s climate, an understanding of their representations in the CMIP6 GCMs, and knowledge of how the resulting temperature and precipitation outputs are affected by each downscaling methodology. Although beyond the scope of the current work, these considerations are critical in determining which ensemble(s) and models therein to rely on for decision or risk analyses.

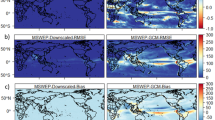

We now apply our variance decomposition globally, continuing to focus on climate averages. These results are shown in Fig. 2, where uncertainty sources are sorted along each column, and each row shows a 20-year averaging period representing the early, mid, or late 21st century. The global results are largely in keeping with those of the three example cities. For annual average temperature, across almost all regions of the globe, there is a marked increase in the contribution of scenario uncertainty over time and a corresponding decrease in downscaling uncertainty and interannual variability. This matches the behavior of each of the locations shown in Fig. 1, even if the magnitudes differ. For example, Lagos can be seen as an outlier in terms of the importance of downscaling uncertainty—by the late 21st century, downscaling still contributes around 25% of the total variance of Lagos temperature projections (Fig. 1f), almost double the global average. Figure 2a also shows that in many locations, model uncertainty grows to become the most important driver of variance by mid-century and continues to contribute a substantial fraction by late-century, though scenario uncertainty typically becomes larger. For annual total precipitation (Fig. 2b), interannual variability remains the dominant contributor, usually followed by downscaling uncertainty and model uncertainty, while scenario uncertainty is almost always negligible. As in Fig. 1, the precipitation decomposition changes little over time.

Fig. 2: a Variance decomposition for annual average temperature. Each column shows the contribution from a different source of uncertainty, measured as the fraction of total variance. Each row depicts a 20-year averaging period, where the variance decomposition is performed annually, and the results are averaged over time. The purple dots in the upper left subplot show the locations of New Delhi, Seattle, and Lagos. b Variance decomposition for annual total precipitation in the same layout as (a). The gray boxes in the lower left of each subplot give the area-weighted global average of each decomposition. A version of this plot with a more granular colormap is available in the Supplementary Information (Supplementary Fig. 28).
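The area-weighted global averages reported in the gray boxes can be approximated as follows. This sketch assumes a regular latitude-longitude grid on which cell area is proportional to the cosine of latitude. It skips NaN cells, for example ocean points. The function name is hypothetical, and the paper's exact weighting may differ.

```python
import numpy as np

def area_weighted_mean(field, lats):
    """Area-weighted mean of a (lat, lon) field on a regular grid.

    Assumes cell area is proportional to cos(latitude); NaN cells are excluded
    from both the numerator and the normalizing weight sum.
    """
    weights = np.cos(np.deg2rad(lats))[:, np.newaxis] * np.ones_like(field)
    valid = np.isfinite(field)
    return np.sum(field[valid] * weights[valid]) / np.sum(weights[valid])
```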

The global results shown in Fig. 2 also reveal some important spatial patterns. For both temperature and precipitation projections, major mountain ranges including the Rocky Mountains, the Andes, and the Himalayas exhibit comparatively large downscaling uncertainties with correspondingly lower contributions from other sources. This could be due to topographic influences on atmospheric dynamics that are not well represented in coarse-resolution GCMs, leading to methodological differences in the downscaling algorithms being amplified into a larger spread in outcomes36. However, the same regions also tend to show larger disagreements in the historical record (Supplementary Fig. 15), which can drive differences in the projections37,38. Indeed, we find that at the grid point level, downscaling uncertainty is more strongly correlated with observational disagreement than are the other sources (Supplementary Figs. 5 and 6).
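A grid-point correlation of the kind described above could be computed as shown below. The sketch rests on two assumptions. First, observational disagreement is taken to be the standard deviation across observational datasets at each grid cell. Second, the correlation is a plain Pearson coefficient over cells that are valid in both maps. The text does not confirm either choice, and both function names are hypothetical.

```python
import numpy as np

def observational_disagreement(obs):
    """Spread across observational datasets; obs has shape (dataset, lat, lon)."""
    return np.std(obs, axis=0)

def gridpoint_correlation(map_a, map_b):
    """Pearson correlation between two (lat, lon) maps over cells valid in both."""
    valid = np.isfinite(map_a) & np.isfinite(map_b)
    return np.corrcoef(map_a[valid], map_b[valid])[0, 1]
```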

Our global results broadly agree with HS09 and L20: for temperature projections, we find that interannual variability is largest over the mid- and high-latitudes; for precipitation projections, we find that model uncertainty is larger in the tropics than in other regions. Compared with those studies, however, interannual variability in our results remains considerably more important beyond the early 21st century. This difference arises because previous works apply decadal averages to each climate metric before performing the variance decomposition. Here we do not average any climate indices over time, in order to ensure that our results remain sensitive to the entire distribution of possible outcomes in any given year. Applying long-term averaging before performing the variance partitioning would reduce the importance of interannual variability, similar to what is observed in the Supplementary Information of B23.
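The effect of temporal averaging on the interannual term can be checked with a small synthetic experiment. For illustration only, assume annual anomalies are uncorrelated white noise. Averaging over non-overlapping 10-year blocks then divides their variance by roughly 10. Real interannual variability is autocorrelated, so the reduction in practice would be smaller. The helper name `decadal_means` is hypothetical.

```python
import numpy as np

def decadal_means(series, window=10):
    """Non-overlapping `window`-year means; any trailing partial window is dropped."""
    n = series.size // window * window
    return series[:n].reshape(-1, window).mean(axis=1)

rng = np.random.default_rng(42)
annual = rng.normal(0.0, 1.0, size=100_000)  # hypothetical white-noise annual anomalies
ratio = decadal_means(annual).var() / annual.var()
print(f"variance ratio after decadal averaging: {ratio:.3f}")  # expected to be near 1/10
```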

Variance decomposition of climate extremes

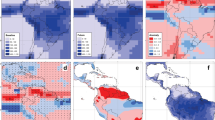

While long-term averages are important indicators of climatic change, climate and weather extremes play an outsized role in driving environmental and socioeconomic impacts39. In this section, we therefore apply our variance decomposition approach to a suite of indices measuring climate extremes, focusing first on annual 1-day maxima of daily maximum temperature and daily precipitation, shown in Fig. 3. The spatial patterns of these results are somewhat similar to those of annual averages (Pearson correlation coefficients calculated at the grid cell level typically range from 0.4 to 0.6, shown in Supplementary Figs. 9 and 10); regions of complex terrain and areas of relatively large observational disagreement are again typically associated with larger downscaling uncertainties (Supplementary Figs. 13, 14, 16). The temporal evolutions are also broadly similar: for both average metrics and 1-day maxima, the precipitation decomposition remains approximately constant over time, and the temperature decomposition shows a pattern of increasing relative contributions from model and scenario uncertainty at the expense of downscaling uncertainty and interannual variability. In terms of the magnitude of the relative contribution from each source, the decomposition for 1-day precipitation maxima (Fig. 3b) is very similar to that for annual totals (Fig. 2b). One of the few differences is that interannual variability becomes slightly more important at the expense of model uncertainty, particularly in the tropics. For temperature projections, there are notable differences. Downscaling and interannual variability play a more important role at longer time horizons for annual maximum temperatures (Fig. 3a) compared to annual average temperatures (Fig. 2a). Recall that for annual average temperatures, scenario and model uncertainty account for most of the variance by the late 21st century (77%, globally averaged; Fig. 2a). The corresponding late-century breakdown for maximum temperatures is qualitatively different as each source contributes approximately equally (Fig. 3a).

Fig. 3: a Variance decomposition for the annual maximum of daily maximum temperature. As in Fig. 2, columns delineate the contribution from each uncertainty source, and rows demonstrate the temporal evolution. b Variance decomposition for annual maximum 1-day precipitation, in the same layout as (a). The gray boxes in the lower left of each subplot give the area-weighted global average of each decomposition. A version of this plot with a more granular colormap is available in the Supplementary Information (Supplementary Fig. 29).

We find qualitatively similar results for the annual maxima of daily average temperature and daily minimum temperature (Supplementary Fig. 31), although downscaling is slightly less important in both cases. We also consider how the uncertainty partitioning changes for temporally compounding extremes by repeating the calculation for 5-day maxima (Supplementary Fig. 32). This makes very little difference for temperature projections; for precipitation, it leads to a small decrease in the contribution from downscaling uncertainty and a corresponding increase in the importance of interannual variability.
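The 1-day and 5-day maxima can be computed from one year of daily data with a sliding window. This minimal sketch assumes that multi-day precipitation maxima use running totals and multi-day temperature maxima use running means. These conventions and the function name are illustrative assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def annual_block_max(daily, window=1, how="sum"):
    """Annual maximum of `window`-day aggregates for one year of daily values.

    how="sum" suits precipitation (e.g. 5-day totals); how="mean" suits
    temperature. window=1 gives the plain annual 1-day maximum.
    """
    windows = sliding_window_view(np.asarray(daily, dtype=float), window)
    if how == "sum":
        agg = windows.sum(axis=1)
    elif how == "mean":
        agg = windows.mean(axis=1)
    else:
        raise ValueError("how must be 'sum' or 'mean'")
    return agg.max()
```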

There are several possible measures of climate extremes beyond annual 1-day maxima. Different end-users may care about distinct characteristics of a given hazard40, including its magnitude and timing in relation to relevant human or environmental thresholds, its correlation structure across space and time, and whether it co-occurs with another hazard41. Although mindful that any set of indices will neglect many aspects of climate extremes that are important for specific sectors, we now define and analyze a suite of metrics that aim to be as broad as possible. We analyze three threshold indices: the annual number of extremely hot days (defined as daily maximum temperature exceeding the local historical 99th percentile), the annual number of dry days (daily precipitation less than 1 mm), and the annual number of extremely wet days (daily precipitation exceeding the local historical 99th percentile). The resulting uncertainty decompositions are shown in Fig. 4.

Fig. 4: Variance decomposition for: a annual number of extremely hot days, b annual number of dry days, and c annual number of extremely wet days. As in Figs. 2 and 3, columns delineate the contribution from each uncertainty source, and rows demonstrate the temporal evolution. Extremely hot days and extremely wet days are defined to occur when daily maximum temperature and daily precipitation exceed their local 99th percentiles, respectively, where percentiles are calculated over 1980–2014 from the GMFD observational dataset (see “Methods”). Dry days are defined to occur when daily precipitation is less than 1 mm. The gray boxes in the lower left of each subplot give the area-weighted global average of each decomposition. A version of this plot with a more granular colormap is available in the Supplementary Information (Supplementary Fig. 30).
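The three threshold indices defined above can be sketched for a single grid point as follows. The thresholds are held fixed at their historical values (1980–2014 in the paper, per the Fig. 4 caption). Fixing them is what makes the hot-day count sensitive to how quickly each GCM warms. Computing the precipitation percentile over all days, including dry ones, is an assumption here, as are the function and argument names.

```python
import numpy as np

def threshold_indices(tmax, pr, tmax_hist, pr_hist, q=99.0, dry_mm=1.0):
    """Annual counts of extremely hot, dry, and extremely wet days at one grid point.

    tmax, pr: one year of daily maximum temperature and precipitation (mm/day).
    tmax_hist, pr_hist: historical daily observations used to set fixed local
    percentile thresholds (assumed here to include all days, wet or dry).
    """
    hot_thresh = np.percentile(tmax_hist, q)
    wet_thresh = np.percentile(pr_hist, q)
    return {
        "hot_days": int(np.sum(tmax > hot_thresh)),
        "dry_days": int(np.sum(pr < dry_mm)),
        "wet_days": int(np.sum(pr > wet_thresh)),
    }
```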

Several insights emerge from Fig. 4. First, there continues to be a clear qualitative difference between the precipitation- and temperature-based indices. The decompositions for dry days (Fig. 4b) and extremely wet days (Fig. 4c) are roughly constant over time and largely dominated by downscaling uncertainty and interannual variability, while scenario uncertainty again contributes negligibly. In contrast, the results for extremely hot days (Fig. 4a) show a similar temporal pattern to previous temperature-derived metrics, where model and scenario uncertainty play an increasingly important role at longer time horizons. Second, note that in many regions, model uncertainty is the most important factor by the late 21st century in projecting extremely hot days, which contrasts with our results for the non-threshold metric of temperature extremes, annual maxima (Fig. 3a). This is likely related to the large spread in CMIP6 climate sensitivities42. Since we define an extremely hot day in reference to a constant (local) temperature threshold, higher-sensitivity GCMs will tend to cross that threshold earlier than lower-sensitivity GCMs, leading to a relative increase in model uncertainty. Third, of all metrics analyzed thus far, the annual number of dry days is by far the most sensitive to the choice of downscaled ensemble. This may be related to observational disagreements regarding the historical frequency of dry days (Supplementary Fig. 17) but could also be driven in part by methodological differences in whether and how the bias-correction algorithms adjust their outputs based on minimum precipitation thresholds43. Finally, our results for extremely hot days and extremely wet days are in reasonable qualitative agreement with those of B23, notwithstanding some differences in the magnitudes that arise due to our inclusion of downscaling uncertainty and our decision not to apply decadal averaging.

In the Supplementary Information, we test the sensitivity of these results to several different threshold definitions (Supplementary Figs. 33–45). Broadly, we find that downscaling becomes less important if daily average or minimum temperatures are considered instead of the daily maximum, and interannual variability becomes more important if more extreme thresholds are used. Calculating the historical quantiles from a separate observational dataset can lead to some differences in the contribution from downscaling uncertainty, but this does not change the qualitative results. We also include extensions to account for temporally compounding extremes by calculating the longest consecutive run of days crossing each threshold, the main effect of which is to increase the importance of interannual variability (Supplementary Figs. 33–45). Lastly, we also investigate a simple multivariate metric, extremely hot and dry days (Supplementary Figs. 46–47), which shows a very similar decomposition to that for extremely hot days. This indicates that conditioning the occurrence of daily temperature extremes on concurrent low precipitation does little to alter the uncertainty decomposition, although it is unclear whether this result would hold over longer timescales.
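The consecutive-run extension and the hot-and-dry metric can both be expressed with boolean daily masks. In this sketch, `longest_run` returns the longest spell of consecutive `True` days in one year. The `hot_and_dry` helper flags days that exceed a temperature threshold while receiving less than 1 mm of precipitation. Both helpers are hypothetical, and the threshold is passed in rather than derived from observations.

```python
import numpy as np

def longest_run(mask):
    """Length of the longest run of consecutive True values in a 1-D boolean array."""
    longest = current = 0
    for flag in mask:
        current = current + 1 if flag else 0  # reset the counter on any non-event day
        longest = max(longest, current)
    return longest

def hot_and_dry(tmax, pr, hot_thresh, dry_mm=1.0):
    """Boolean mask of days that are both extremely hot and dry (hypothetical helper)."""
    return (np.asarray(tmax) > hot_thresh) & (np.asarray(pr) < dry_mm)
```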

Implications for risk assessment

Our results so far have been presented in terms of the relative contribution of each uncertainty source. However, the magnitude of these contributions in physical units is also important, particularly for end-users who require decision-relevant information. In Fig. 5, we show the absolute uncertainty attributed to downscaling in the middle of the century for four previously defined indices of extremes. The absolute uncertainty is measured in physical units by computing the standard deviation across downscaled ensembles rather than the variance. Although the uncertainty decomposition only holds in variance space, Fig. 5 can provide a heuristic estimate of the extent to which the overall projection spread may be underestimated by relying on a single set of downscaled projections. Note that in contrast to the percentage shares, the absolute uncertainty contributed by each source tends only to grow over time (Supplementary Figs. 69–75).
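The two quantities discussed above can be contrasted in a short sketch. Assume smoothed projections stored in a hypothetical array of shape (scenario, gcm, method, year). The absolute downscaling uncertainty is then taken as the square root of the across-method variance, averaged over scenarios and GCMs. The second part shows why the partition itself is done in variance space: independent contributions add as variances, so their standard deviations do not sum to the total spread.

```python
import numpy as np

def downscaling_sd(fit):
    """Absolute downscaling uncertainty in the metric's physical units.

    fit: smoothed projections, shape (scenario, gcm, method, year). Variances
    across methods are averaged over scenarios and GCMs before taking the
    square root, so the result stays consistent with the variance partition.
    """
    return np.sqrt(np.nanmean(np.nanvar(fit, axis=2), axis=(0, 1)))

# Independent components add in variance, not in standard deviation.
sd_a, sd_b = 3.0, 4.0                  # hypothetical component spreads
total_sd = np.sqrt(sd_a**2 + sd_b**2)  # 5.0, not sd_a + sd_b = 7.0
```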

Fig. 5: Absolute uncertainty attributed to downscaling, averaged over 2050–2069, for: a annual number of extremely hot days, b annual maximum of daily maximum temperature, c annual number of extremely wet days, and d annual maximum 1-day precipitation. The absolute uncertainty is expressed via the standard deviation across ensembles at each grid point and is measured in physically meaningful units. The gray boxes in the lower left of each subplot give the area-weighted global average of each contribution.

The heterogeneity across locations and metrics demonstrated in Fig. 5 suggests that the relevance of downscaling uncertainty for local decision and risk analyses is highly contextual. To demonstrate this further, we provide a stylized example around characterizing mid-century hot and wet extremes in Seattle, shown in Fig. 6, which illustrates the effects of only sampling from one downscaled ensemble relative to the entire super-ensemble. Across most metrics and emissions scenarios shown in Fig. 6, key distributional statistics such as the upper percentiles and inter-percentile ranges can vary considerably among downscaled ensembles as well as in relation to the full ensemble. For the precipitation-based metrics (Fig. 6c, d), the differences among downscaled ensembles are larger than those induced by switching from the lowest to the highest emissions scenario. Even for the temperature-based metrics that show strong sensitivities to emissions scenario (Fig. 6a, b), relying on different downscaled ensembles can lead to qualitatively different risk perceptions in relation to local thresholds. Consider, for example, the extraordinary 2021 Pacific Northwest heatwave, which has been extensively studied after breaking several temperature records throughout the region44,45,46,47, leading to widespread impacts across many sectors48. During this event, Seattle-Tacoma airport recorded a temperature of 42.2 °C49 (denoted by the dashed vertical line in Fig. 6b). Figure 6b shows that estimates of the likelihood of surpassing this record by mid-century depend on the choice of downscaled ensemble, as one ensemble projects that this record is unlikely to be broken by mid-century even under an extreme emissions scenario.
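The comparison in Fig. 6b amounts to estimating an exceedance probability separately for each downscaled ensemble and for the pooled super-ensemble. The sketch below assumes annual maxima have already been pooled over GCMs and the 2050–2069 window for one scenario and grid point. Ensemble names and helpers are hypothetical. Simple pooling weights each ensemble by its number of samples, which is only one of several defensible choices.

```python
import numpy as np

RECORD_C = 42.2  # Seattle-Tacoma airport maximum during the 2021 heatwave

def exceedance_probability(annual_max, threshold=RECORD_C):
    """Empirical probability that an annual maximum exceeds `threshold`."""
    values = np.asarray(annual_max, dtype=float)
    values = values[np.isfinite(values)]
    return float(np.mean(values > threshold))

def by_ensemble(samples):
    """Exceedance probability per downscaled ensemble and for all samples pooled.

    samples: mapping from a (hypothetical) ensemble name to an array of annual
    maxima pooled over GCMs and years for one scenario and grid point.
    """
    probs = {name: exceedance_probability(v) for name, v in samples.items()}
    probs["full ensemble"] = exceedance_probability(np.concatenate(list(samples.values())))
    return probs
```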

Fig. 6: Comparison of the probability distribution generated by relying on the full ensemble (including all downscaled ensembles) versus any one downscaled ensemble, conditioned on the highest (SSP5-8.5) and lowest (SSP1-2.6) emissions scenarios. Distributions are constructed for the grid point containing Seattle over 2050–2069 for different metrics: a annual number of extremely hot days, b annual maximum of daily maximum temperature, c annual number of extremely wet days, and d annual maximum 1-day precipitation. Boxplot whiskers span the 99% range. The dashed vertical line in (b) denotes the highest temperature recorded at Seattle-Tacoma airport during the 2021 Pacific Northwest heatwave. Details on each downscaled ensemble and the SSP scenarios can be found in the Methods section and Supplementary Information. We neglect the carbonplan ensembles here since they contain a limited number of models.

Although we present here a highly simplified example that neglects many of the challenges of implementing risk assessments in a nonstationary climate50,51, it nonetheless serves to illustrate how modeling choices surrounding downscaled data sources can induce substantively different hazard characterizations. These results suggest that careful consideration should be given to the role of downscaling uncertainty within any broader risk assessment framework, as failure to do so may lead to decisions that are not robust to the full set of plausible climate futures.

Discussion

Our main finding, that downscaling and bias-correction often contribute considerable uncertainty in local climate projections, is robust to a number of methodological checks that we outline in the Methods section and Supplementary Information. There are nevertheless several possible avenues of future research. First, note that despite our simplified treatment of internal variability (see associated discussion in “Methods”), we find that interannual variability is an important driver of uncertainty for many metrics. For several precipitation-based metrics and indices of extremes, the combined contribution of interannual variability and downscaling drives a large share of the variance. This suggests that future work characterizing the role of internal variability at local scales would be valuable. The framework presented here could be extended to include downscaled initial condition ensembles52, but to our knowledge such an ensemble does not yet exist at global scale. Independent estimates of internal variability at local scales, potentially derived from hybrid statistical techniques53, could also be used to test for potential biases in the model-derived representation used here.

Second, one important limitation of this work is the necessarily unbalanced sample design. Our constraint of global spatial coverage led to the omission of many downscaled ensembles that are only available at continental or national scales. As such, many GCMs in our super-ensemble are only downscaled via two different methods, and our estimate of the downscaling uncertainty (the variance across downscaling methods) likely suffers from biases associated with this small sample size. We partially mitigate this bias by averaging each individual estimate across GCMs, but expanding the super-ensemble to include a greater variety of downscaling methods should lead to more robust estimates. Adding more ensembles to the uncertainty decomposition could increase or decrease the relative importance of downscaling29.
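The small-sample issue can be illustrated with a synthetic simulation. Assume, purely for illustration, that each of 22 GCMs is downscaled by two methods whose outputs are independent draws with unit variance. The per-GCM variance estimate is then either biased low (with `ddof=0`, by a factor of one half) or unbiased but very noisy (with `ddof=1`). Averaging the per-GCM estimates across GCMs shrinks the noise but does not remove any bias introduced by the estimator choice. Which estimator the paper uses is not stated in this section.

```python
import numpy as np

rng = np.random.default_rng(1)
true_var = 1.0                    # hypothetical "true" downscaling variance
n_trials, n_gcm, n_method = 10_000, 22, 2

# Hypothetical setup: every GCM is downscaled by only two methods whose
# outputs are independent draws with variance `true_var`.
draws = rng.normal(0.0, np.sqrt(true_var), size=(n_trials, n_gcm, n_method))

per_gcm_ddof0 = draws.var(axis=2, ddof=0)  # expected value true_var / 2 when n_method = 2
per_gcm_ddof1 = draws.var(axis=2, ddof=1)  # unbiased, but very noisy with two samples
averaged = per_gcm_ddof1.mean(axis=1)      # average the per-GCM estimates across GCMs

print(per_gcm_ddof0.mean(), per_gcm_ddof1.mean())
print(per_gcm_ddof1[:, 0].std(), averaged.std())  # averaging shrinks the spread
```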

It is also important to highlight that our definition of downscaling uncertainty encompasses more factors than just the selection of each downscaling and bias-correction algorithm. In general, many decisions related to the development of a downscaled ensemble can contribute to observed differences in outputs. Such choices include the underlying observational dataset, the temporal extent of the training period, and any re-gridding applied to the observations or native GCM outputs. Alternate configurations of the same downscaling or bias-correction algorithm, for example, related to the preservation of GCM-simulated trends, can also lead to considerable differences54. In the Supplementary Information, we provide qualitative evidence that in many cases considered here, downscaling uncertainty is related to disagreements in the historical record. However, additional research is needed to more precisely separate the effects of each component in the downscaling and bias-correction process.

Third, we make an implicit assumption that the outputs from each scenario, GCM, and downscaling method represent equally plausible realizations of future climate. Methods are emerging that aim to constrain climate projections by downweighting55,56 or sub-selecting57,58 GCMs based on their agreement with historical observations, potentially combined with probabilistic emissions constraints59,60,61. However, the extension of such techniques to downscaled outputs remains an area of active research62,63 and may be complicated by the presence of observational disagreements at local scales if downscaling algorithms rely on conflicting datasets64. The application of any such framework would decrease absolute uncertainty, but may not reduce the relative importance of downscaling uncertainty if some GCMs or scenarios are down-weighted or removed from the ensemble. Future work investigating these questions would be valuable.
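The last point can be made concrete with a toy weighted variance. In this hypothetical example, down-weighting a high-sensitivity outlier GCM reduces the model variance. Suppose the downscaling variance is held fixed. That is a simplifying assumption, since in practice it would also be averaged with the new weights. Under it, the relative share of downscaling uncertainty rises even though total uncertainty falls. All values and names are illustrative.

```python
import numpy as np

def weighted_var(values, weights):
    """Weighted variance across GCMs; weights are normalized to sum to one."""
    values = np.asarray(values, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    mean = np.sum(w * values)
    return float(np.sum(w * (values - mean) ** 2))

# Hypothetical example: three GCMs, the third a high-sensitivity outlier.
warming = [2.0, 3.0, 5.0]
ds_var = 0.5  # downscaling variance, assumed unaffected by the GCM weights
model_equal = weighted_var(warming, [1.0, 1.0, 1.0])
model_weighted = weighted_var(warming, [1.0, 1.0, 0.2])
share_equal = ds_var / (ds_var + model_equal)
share_weighted = ds_var / (ds_var + model_weighted)
```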

Fourth, we again highlight that our selection of climate metrics is necessarily limited. Since all of the indices we analyze are calculated annually, we are unable to probe extremes that manifest on longer timescales (for example, the magnitude of a 10-year return period event), and we aggregate over seasonal information that is important for many sectors. A useful extension of this work could test how these aspects of climate hazards alter the variance decompositions. In addition, moving beyond standardized meteorological indices to targeted metrics that are relevant for specific sectors may lead to qualitatively different results65.

Finally, note that variance decomposition is only one of many possible approaches to characterize uncertainty. More formal sensitivity analysis techniques can be applied to understand specific aspects of the outcome space66 and ensure that inferences are relevant for downstream decision analyses67. In addition, climate projections are often used to drive sectoral models that contain their own structural and parametric uncertainties68,69,70,71. Socioeconomic outcomes of interest may well be more sensitive to the representation of these environmental and/or human system dynamics, and sound risk management strategies should account for the uncertainty in each relevant system as well as their interactions72.

Our results have important implications for many users of downscaled climate products. Across almost all locations, time horizons, and indices of climatic change that we analyze, downscaling is rarely a negligible source of uncertainty. This suggests that sampling from more than one downscaled ensemble is advisable for risk or impact analyses that are sensitive to low-probability climate hazards, as has been suggested elsewhere29,34. Such sampling may substantially increase data and computational requirements, so we emphasize that it may not be necessary in all cases. Our results can provide some initial heuristic guidance in this regard: they suggest that downscaling uncertainty is particularly important over the near term, in projections involving precipitation or climate extremes, and in regions of observational disagreement. We have also developed an interactive JupyterLab-based dashboard, deployed on the MSD-LIVE platform, that facilitates further exploration of our results: https://lafferty-sriver-2023-downscaling-uncertainty.msdlive.org. In general, we urge end-users to follow existing recommendations regarding the use of downscaled climate products16,73, including taking a process-informed approach and relying on expert knowledge of local weather and climate phenomena74. End-users may also consider whether downscaled projections are the most appropriate method of generating future climate information; complementary approaches might include applying GCM-simulated changes to gridded historical data75 or developing a statistical model based on pointwise observations76.

This work also adds to a growing body of literature applying an increasingly diverse set of tools to characterize the uncertainties of a changing climate and the resulting environmental and socioeconomic impacts. Deliberate efforts to coordinate methodological comparisons would help build confidence in the insights derived from this line of research, which in turn will be necessary to guide best practices for the increasing number of both public and private actors who are incorporating climate projections into their decision-making processes.

Methods

Data sources

We leverage five ensembles of statistically downscaled and bias-corrected GCM outputs: NASA NEX-GDDP-CMIP677 (which we refer to as NEX-GDDP), CIL-GDPCIR78, ISIMIP3BASD79,80 (which we refer to as ISIMIP3b), and two ensembles from carbonplan81: GARD-SV82 and DeepSD-BC83. Further details of each approach's configuration are given in Supplementary Table 2. Each ensemble is filtered to ensure that: (1) parent GCMs are available in at least two ensembles, (2) downscaled outputs for each GCM are available for at least three Shared Socioeconomic Pathways (SSPs)84, (3) downscaled outputs are missing no more than one variable (of tasmax, tasmin, and pr), and (4) downscaling is performed on the same simulation member of the parent GCM. Applying these requirements drops 13 of 35 NEX-GDDP parent models and 8 of 25 CIL-GDPCIR parent models. All ISIMIP3b outputs are used. Additional outputs from other downscaling techniques are available in the carbonplan dataset but do not satisfy the above requirements. After each metric is calculated in each ensemble, all outputs are conservatively re-gridded to a common 0.25° grid.
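The four filtering criteria can be sketched in Python as below. This is a minimal sketch rather than the authors' code: the record layout (dictionaries with hypothetical keys `ensemble`, `gcm`, `member`, `ssps`, `variables`), the order in which the criteria are applied, and the reading of criterion (4) as "the same parent GCM and simulation member must appear in at least two ensembles" are all assumptions, and the example catalogue entries are invented.

```python
from collections import defaultdict

REQUIRED_VARS = {"tasmax", "tasmin", "pr"}


def filter_outputs(records, min_ensembles=2, min_ssps=3, max_missing_vars=1):
    """Return the records that pass the four criteria (as interpreted in this sketch)."""
    # Criteria (2) and (3): per-output checks on SSP coverage and missing variables.
    kept = [
        r for r in records
        if len(r["ssps"]) >= min_ssps
        and len(REQUIRED_VARS - set(r["variables"])) <= max_missing_vars
    ]
    # Criteria (1) and (4): count ensembles per (parent GCM, member) so that outputs
    # downscaled from different members of the same GCM are not pooled together.
    ensembles_per_parent = defaultdict(set)
    for r in kept:
        ensembles_per_parent[(r["gcm"], r["member"])].add(r["ensemble"])
    return [
        r for r in kept
        if len(ensembles_per_parent[(r["gcm"], r["member"])]) >= min_ensembles
    ]


# Hypothetical catalogue entries (GCM-W, GCM-Y, GCM-Z and all members are made up).
ALL_SSPS = {"ssp126", "ssp245", "ssp370", "ssp585"}
records = [
    {"ensemble": "NEX-GDDP", "gcm": "CanESM5", "member": "r1i1p1f1",
     "ssps": ALL_SSPS, "variables": REQUIRED_VARS},
    {"ensemble": "CIL-GDPCIR", "gcm": "CanESM5", "member": "r1i1p1f1",
     "ssps": ALL_SSPS, "variables": {"tasmax", "tasmin"}},   # one variable missing: allowed
    {"ensemble": "ISIMIP3b", "gcm": "GCM-Y", "member": "r1i1p1f1",
     "ssps": ALL_SSPS, "variables": REQUIRED_VARS},           # only one ensemble
    {"ensemble": "NEX-GDDP", "gcm": "GCM-Z", "member": "r1i1p1f1",
     "ssps": {"ssp245", "ssp585"}, "variables": REQUIRED_VARS},  # only two SSPs
    {"ensemble": "NEX-GDDP", "gcm": "GCM-W", "member": "r1i1p1f1",
     "ssps": ALL_SSPS, "variables": REQUIRED_VARS},
    {"ensemble": "CIL-GDPCIR", "gcm": "GCM-W", "member": "r2i1p1f1",
     "ssps": ALL_SSPS, "variables": REQUIRED_VARS},           # different member
]
kept = filter_outputs(records)
print([(r["ensemble"], r["gcm"]) for r in kept])
# [('NEX-GDDP', 'CanESM5'), ('CIL-GDPCIR', 'CanESM5')]
```

Applying the per-output checks first means a GCM is only counted toward criterion (1) in ensembles where its outputs are themselves usable; the paper does not state the order, so this is one defensible choice among several.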

For the threshold metrics, which require comparing projection outputs to historical quantiles, we rely on two observational datasets: the Global Meteorological Forcing Dataset (GMFD) for Land Surface Modeling85 and the ERA5 reanalysis from the European Centre for Medium-Range Weather Forecasts86. These products are chosen because they are available globally at 0.25° spatial resolution. GMFD is the training dataset for the NEX-GDDP ensemble, and ERA5 is the training dataset for the CIL-GDPCIR ensemble and both carbonplan ensembles, although with different temporal extents. The ISIMIP3b ensemble is trained on W5E5 v2.087,88, which is only available at 0.5° spatial resolution. The quantiles are calculated from daily data over 1980–2014. We conservatively re-grid both observational datasets to the native grid of each downscaled ensemble before calculating the threshold metrics. In the main results, extremely hot days and extremely wet days are defined as days on which daily maximum temperature and daily total precipitation, respectively, exceed the local 99th percentile from GMFD. In the Supplementary Information, we compare the GMFD-calculated quantiles to those obtained from ERA5 (Supplementary Figs. 18–21).
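A threshold metric of this kind, such as the annual number of extremely hot days, can be sketched for a single grid cell as below. The function name and arguments are hypothetical, the re-gridding step is omitted, and the sketch assumes the percentile is taken over all days of the reference period (whether precipitation percentiles are computed over all days or over wet days only is not stated above).

```python
import numpy as np


def exceedance_days_per_year(daily, years, reference, q=99.0):
    """Count, for each year, the days on which `daily` exceeds the q-th
    percentile of `reference` (e.g. observed daily values for 1980-2014)."""
    threshold = np.percentile(reference, q)
    daily, years = np.asarray(daily), np.asarray(years)
    out_years = np.unique(years)
    counts = np.array([int((daily[years == y] > threshold).sum()) for y in out_years])
    return out_years, counts


# Hypothetical single-grid-cell data; the 99th percentile of 0, 1, ..., 99 is about 98.01.
reference = np.arange(100.0)
daily = np.array([97.0, 99.0, 100.0, 50.0, 98.5, 10.0])
years = np.array([2020, 2020, 2020, 2020, 2021, 2021])
out_years, counts = exceedance_days_per_year(daily, years, reference)
print(dict(zip(out_years.tolist(), counts.tolist())))  # {2020: 2, 2021: 1}
```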

Uncertainty partitioning

Following previous work, we employ a simple variance decomposition approach to calculate the relative uncertainty arising from four sources: scenario uncertainty, model (GCM) uncertainty, downscaling uncertainty, and interannual variability. Additionally, similar to Wootten et al.29, we employ a weighting strategy that accounts for data coverage. Our method is as follows. Let \(x(t,s,m,d)\) represent a given climate metric at some location in year \(t\) from scenario \(s\), parent GCM \(m\), and downscaling method \(d\). We first estimate the forced response \(\hat{x}(t,s,m,d)\) by fitting a 4th-order polynomial in time over 2015–2100. Interannual variability is then estimated as the centered rolling 11-year variance of the difference between the raw outputs and the extracted forced response, averaged over all outputs. The assumption of constant interannual variability was highlighted as one shortcoming of HS09, so in this work we allow the magnitude of interannual variability to evolve over time. The contributions of the remaining uncertainty sources are calculated from the forced response. Scenario uncertainty is estimated as the variance over scenarios of the multimodel, multi-method mean,

\[ S(t) = \operatorname{Var}_{s}\left[\frac{1}{N(s)}\sum_{m}\sum_{d}\hat{x}(t,s,m,d)\right], \]

where \(N(s)\) is the total number of downscaled outputs available for scenario \(s\). This definition may underestimate the true scenario uncertainty when the multimodel, multi-method response is weak. Brekke and Barsugli89 propose taking the variance over scenarios before averaging to circumvent this issue:

\[ S_{\mathrm{BB}}(t) = \frac{1}{N_m N_d}\sum_{m}\sum_{d}\operatorname{Var}_{s}\left[\hat{x}(t,s,m,d)\right]. \]

Here, \(N_m\) and \(N_d\) are the numbers of distinct GCMs and downscaling methods in our super-ensemble, respectively. Our main results are based on the former definition of scenario uncertainty, following much of the existing literature. In the Supplementary Information, we show that scenario uncertainty is indeed larger under the Brekke and Barsugli definition, although this does not change the qualitative results (Supplementary Figs. 48–54). Model uncertainty is estimated as the weighted mean of the variance across models,

\[ M(t) = \sum_{s}\sum_{d} w_{s,d}\,\operatorname{Var}_{m}\left[\hat{x}(t,s,m,d)\right]. \]

The weights \(w_{s,d}\) are chosen such that if more parent GCMs are available for a given downscaling method and scenario (i.e., if the variance is calculated across more GCMs), those methods and scenarios are weighted higher:

\[ w_{s,d} = \frac{m(s,d)}{\sum_{s'}\sum_{d'} m(s',d')}. \]

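Here, \(m(s,d)\) indicates the number of parent models that have been downscaled using method \(d\) for scenario \(s\). Downscaling uncertainty is estimated as the weighted mean of the variance across methods:

\[ D(t) = \sum_{s}\sum_{m} w_{s,m}\,\operatorname{Var}_{d}\left[\hat{x}(t,s,m,d)\right], \]
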
where the weights \(w_{s,m}\) are chosen such that if more downscaled outputs are available for a given GCM and scenario, those GCMs and scenarios are weighted higher:

\[ w_{s,m} = \frac{d(s,m)}{\sum_{s'}\sum_{m'} d(s',m')}. \]

Here, \(d(s,m)\) indicates the number of downscaled outputs available from parent GCM \(m\) and scenario \(s\). An example makes the weighting strategy more intuitive: from Supplementary Table 1, five different downscaled outputs are available from the CanESM5 parent GCM, whereas only two are available from CMCC-ESM2 (neglecting SSP availability). The weighting strategy assumes that the estimated downscaling uncertainty from CanESM5 provides more information about the true uncertainty than the estimate from CMCC-ESM2. In this illustrative example, our estimate of the true downscaling uncertainty would be a weighted average of the two individual estimates, with the CanESM5 estimate weighted higher by a factor of 5/2. In the Supplementary Information, we recalculate our main results without any weighting and show that the qualitative interpretations are unchanged (Supplementary Figs. 55–61).
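The arithmetic of the CanESM5/CMCC-ESM2 example can be checked with a short Python sketch. The forced-response values are invented for illustration, the population variance (`ddof=0`) is an assumption, and `size_weighted_variance` is a hypothetical helper; applied to groups that vary over GCMs rather than methods, the same function gives the weighted model-uncertainty term.

```python
import numpy as np


def size_weighted_variance(groups):
    """Weighted mean of within-group variances (ddof=0 assumed), with each
    group's weight proportional to its number of members."""
    sizes = {key: len(values) for key, values in groups.items()}
    total = sum(sizes.values())
    weights = {key: n / total for key, n in sizes.items()}
    estimate = sum(weights[key] * np.var(values) for key, values in groups.items())
    return float(estimate), weights


# Invented forced-response values for one year, location, and scenario:
# five downscaled outputs of CanESM5 and two of CMCC-ESM2.
groups = {
    "CanESM5": [1.0, 2.0, 3.0, 4.0, 5.0],  # variance 2.0
    "CMCC-ESM2": [2.0, 4.0],               # variance 1.0
}
estimate, weights = size_weighted_variance(groups)
print(weights["CanESM5"] / weights["CMCC-ESM2"])  # 5/2, up to floating-point rounding
print(estimate)  # (5/7) * 2.0 + (2/7) * 1.0 = 12/7, up to rounding
```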

We assume that the total variance in each year is given by the sum of the individual variance estimates (scenario, model, downscaling, and interannual variability). Our main results show the relative contribution of each uncertainty source as a fraction of this total variance.
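Putting the steps of this subsection together, a minimal end-to-end sketch for one location might look as follows. It is not the authors' implementation (their code is linked under Code availability): the dictionary layout keyed by (scenario, GCM, method), the population variance, and leaving the first and last five years of the rolling window as NaN are assumptions, and the synthetic inputs are constructed so that the expected variances are known in advance.

```python
import numpy as np


def forced_response(years, series, degree=4):
    """Least-squares polynomial in time; Polynomial.fit rescales years for conditioning."""
    return np.polynomial.Polynomial.fit(years, series, degree)(years)


def rolling_variance(residual, window=11):
    """Centered rolling variance; the edges (window // 2 years at each end) stay NaN."""
    half = window // 2
    out = np.full(len(residual), np.nan)
    for i in range(half, len(residual) - half):
        out[i] = np.var(residual[i - half:i + half + 1])
    return out


def weighted_group_variance(fits, group_by):
    """Group outputs by the key entries at positions `group_by`, take the variance
    within each group, and average with weights proportional to group size."""
    groups = {}
    for key, series in fits.items():
        groups.setdefault(tuple(key[i] for i in group_by), []).append(series)
    sizes = np.array([len(g) for g in groups.values()], dtype=float)
    variances = np.array([np.var(g, axis=0) for g in groups.values()])
    return np.sum((sizes / sizes.sum())[:, None] * variances, axis=0)


def decompose(outputs, years):
    """outputs: {(scenario, gcm, method): annual series at one location}."""
    fits = {k: forced_response(years, v) for k, v in outputs.items()}
    interannual = np.mean([rolling_variance(outputs[k] - fits[k]) for k in outputs], axis=0)
    scenarios = sorted({k[0] for k in fits})
    scenario_means = [np.mean([f for k, f in fits.items() if k[0] == s], axis=0)
                      for s in scenarios]
    variances = {
        "scenario": np.var(scenario_means, axis=0),
        "model": weighted_group_variance(fits, group_by=(0, 2)),        # (s, d) groups, vary GCM
        "downscaling": weighted_group_variance(fits, group_by=(0, 1)),  # (s, m) groups, vary method
        "interannual": interannual,
    }
    total = sum(variances.values())
    return variances, {name: v / total for name, v in variances.items()}


# Synthetic, hypothetical inputs: scenario-dependent trends, constant GCM and
# method offsets, and an identical +/-0.1 year-to-year wiggle in every output.
years = np.arange(2015, 2101)
u = (years - 2015) / 85.0
wiggle = 0.1 * (-1.0) ** np.arange(len(years))
slopes = {"ssp126": 1.0, "ssp370": 3.0}
gcm_offsets = {"gcmA": 0.0, "gcmB": 1.0}
method_offsets = {"dsA": 0.0, "dsB": 0.5}
outputs = {(s, m, d): slopes[s] * u + gcm_offsets[m] + method_offsets[d] + wiggle
           for s in slopes for m in gcm_offsets for d in method_offsets}

variances, fractions = decompose(outputs, years)
```

Because the synthetic GCM offsets differ by 1 and the method offsets by 0.5, the model and downscaling terms come out at 0.25 and 0.0625 in every year (up to rounding), while the scenario term grows from 0 in 2015 to 1 in 2100.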

Methodological caveats

Here we outline two additional methodological caveats associated with our main results. First, the 4th-order polynomial fit used to separate the forced response from interannual variability likely leads to an underestimate of the true extent of internal variability since the fit will interpret unforced fluctuations as being part of the forced response. L20 show that for coarse-resolution GCM outputs, this bias can be particularly acute at regional scales and for noisy output variables such as precipitation, reaching 50% of the total uncertainty in some cases. One approach to mitigate this bias is to average over large spatial scales, but this would considerably reduce the influence of downscaling, which is our primary focus in this work. Alternatively, using a large number of model outputs may achieve a more robust averaged estimate. Our inclusion of over 200 downscaled model outputs across 22 GCMs may be sufficient in many cases, but this is difficult to verify within the current framework. As noted in L20, more sophisticated methods of extracting the forced response could also be used (e.g., ref. 90).
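The first caveat can be illustrated with a toy series: a linear forced trend plus a purely unforced oscillation whose period equals the 2015–2100 window. Both components are hypothetical. A 4th-order polynomial fitted over this window absorbs most of the oscillation, so only a small share of it is left in the residual that would be counted as interannual variability.

```python
import numpy as np

years = np.arange(2015, 2101)
trend = 0.03 * (years - 2015)  # hypothetical forced warming
# Hypothetical unforced oscillation with one full cycle over the 86-year window.
oscillation = 0.5 * np.sin(2 * np.pi * (years - 2015) / len(years))
series = trend + oscillation

fit = np.polynomial.Polynomial.fit(years, series, 4)(years)
residual = series - fit

# Share of the oscillation's variance left in the residual, i.e. the part that
# would be attributed to variability rather than to the "forced response".
retained = np.var(residual) / np.var(oscillation)
print(f"{retained:.1%} of the oscillation's variance remains in the residual")
```

Shorter-period fluctuations would survive the fit much better; the underestimate is concentrated in low-frequency, multidecadal variability.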

Second, our main results neglect interactions among uncertainty sources, which previous studies have shown to be significant in some instances91. To estimate the importance of interaction effects, we implement two checks. We first perform an ANOVA-based variance decomposition (described in the Supplementary Information) for all metrics across our three example cities. We find that interactions are small for projections of climate averages (Supplementary Fig. 64) but can sometimes be important for extremes (Supplementary Fig. 65). B23 note that accounting for the interaction between model and scenario uncertainty may alter their results, but we find this effect to be small; the interaction between model and downscaling uncertainty is typically larger. Our ANOVA results do not qualitatively alter the relative importance of each uncertainty source but rather reassign part of the variance attributed to each source to additional interaction terms. For example, when neglecting interactions, we find that downscaling is the largest driver of variance for the annual number of extremely hot days in Lagos by the end of the century (Supplementary Fig. 63). The corresponding ANOVA results reveal large model-downscaling and scenario-downscaling interaction effects (Supplementary Fig. 65), but the overall (combined) influence of downscaling uncertainty remains important.

We also test whether our assumed total uncertainty (the sum of the individual terms) differs from the true total uncertainty, given by the variance across all outputs:

\[ T(t) = \operatorname{Var}_{s,m,d}\left[x(t,s,m,d)\right]. \]

Regions and metrics for which the true total uncertainty is considerably different from our assumed total uncertainty indicate that interaction effects may be important. In the Supplementary Information, we show the ratio of these two quantities for each metric globally (Supplementary Fig. 68) and for our example cities (Supplementary Figs. 66 and 67). Our independence assumption generally leads to small errors (≤10%) for annual averages and annual 1-day maxima. The Sahara Desert stands out as a region of potentially large interaction effects for the precipitation-based metrics. For the threshold metrics, our assumption is less defensible as the discrepancy can reach 20% over many regions. Interaction effects may be particularly important for temperature-based metrics over the Amazon rainforest, and the Sahara again shows comparatively large discrepancies for the precipitation-based metrics. Although these discrepancies do not necessarily indicate the presence of large interaction effects, future research could investigate them in more detail.
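The ratio check, and why interaction effects show up in it, can be illustrated on a balanced 2×2×2 toy design for a single year. The effect sizes are hypothetical and the interannual term is omitted for simplicity. With purely additive effects, the summed terms reproduce the variance across all outputs exactly; adding a GCM-by-method interaction makes the two differ because, in this balanced example, the interaction enters both the across-GCM and the across-method terms.

```python
import numpy as np


def variance_terms(arr):
    """arr[s, m, d] holds one year's values on a balanced scenario x GCM x method design."""
    scenario = np.var(arr.mean(axis=(1, 2)))   # variance of the scenario means
    model = np.var(arr, axis=1).mean()         # across GCMs; equal weights when balanced
    downscaling = np.var(arr, axis=2).mean()   # across methods; equal weights when balanced
    return scenario, model, downscaling


def total_ratio(arr):
    """Variance across all outputs divided by the sum of the individual terms."""
    return np.var(arr) / sum(variance_terms(arr))


a = np.array([0.0, 2.0])[:, None, None]   # hypothetical scenario effects
b = np.array([0.0, 1.0])[None, :, None]   # hypothetical GCM effects
c = np.array([0.0, 0.5])[None, None, :]   # hypothetical method effects
interaction = np.array([[0.0, 0.0], [0.0, 1.0]])[None, :, :]  # GCM x method

additive = a + b + c
with_interaction = additive + interaction
print(total_ratio(additive))          # 1.0
print(total_ratio(with_interaction))  # 30/31, about 0.968
```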

Data availability

The NEX-GDDP ensemble is available from the NASA Center for Climate Simulation: https://ds.nccs.nasa.gov/thredds/catalog/AMES/NEX/GDDP-CMIP6/catalog.html. The CIL-GDPCIR ensemble is available on Microsoft Planetary Computer: https://planetarycomputer.microsoft.com/dataset/group/cil-gdpcir. The ISIMIP3b ensemble is available from the ISIMIP repository: https://data.isimip.org/. Both carbonplan ensembles are hosted on Microsoft Azure. Example code showing how to access the data can be found at the following GitHub repository: https://github.com/carbonplan/cmip6-downscaling/. The GMFD observational dataset is available from the National Center for Atmospheric Research: https://rda.ucar.edu/datasets/ds314.0/. The ERA5 reanalysis product is available from the Copernicus Climate Change Service: https://www.ecmwf.int/en/forecasts/datasets/reanalysis-datasets/era5. NetCDF files of our main decomposition results are available on the MSD-LIVE repository93.

Code availability

Code to reproduce this analysis is available at the following GitHub repository and archived on Zenodo94: https://github.com/david0811/lafferty-sriver_2023_npjCliAtm.

Change history

10 January 2024

A Correction to this paper has been published: https://doi.org/10.1038/s41612-023-00551-8

References

Doblas-Reyes, F. et al. Linking global to regional climate change. in Climate Change 2021: The Physical Science Basis. Contribution of Working Group I to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change (Cambridge University Press, 2021).

Lee, J.-Y. et al. Future global climate: Scenario-based projections and near-term information. in Climate Change 2021: The Physical Science Basis. Contribution of Working Group I to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change (Cambridge University Press, 2021).

Hawkins, E. & Sutton, R. The potential to narrow uncertainty in regional climate predictions. Bull. Am. Meteorol. Soc. 90, 1095–1107 (2009).

Bonan, D. B., Lehner, F. & Holland, M. M. Partitioning uncertainty in projections of Arctic sea ice. Environ. Res. Lett. 16, 044002 (2021).

Hawkins, E. & Sutton, R. The potential to narrow uncertainty in projections of regional precipitation change. Clim. Dyn. 37, 407–418 (2011).

Lehner, F. et al. Partitioning climate projection uncertainty with multiple large ensembles and CMIP5/6. Earth Syst. Dynam. 11, 491–508 (2020).

Blanusa, M. L., López-Zurita, C. J. & Rasp, S. Internal variability plays a dominant role in global climate projections of temperature and precipitation extremes. Clim. Dyn. 61, 1931–1945 (2023).

Schwarzwald, K. & Lenssen, N. The importance of internal climate variability in climate impact projections. Proc. Natl. Acad. Sci. USA 119, e2208095119 (2022).

Rössler, O. et al. Challenges to link climate change data provision and user needs: perspective from the COST-action VALUE. Int. J. Climatol. 39, 3704–3716 (2019).

Carleton, T. et al. Valuing the global mortality consequences of climate change accounting for adaptation costs and benefits. Q. J. Econ. 137, 2037–2105 (2022).

Rode, A. et al. Estimating a social cost of carbon for global energy consumption. Nature 598, 308–314 (2021).

Martinich, J. & Crimmins, A. Climate damages and adaptation potential across diverse sectors of the United States. Nat. Clim. Change 9, 397–404 (2019).

Cook, L. M., Anderson, C. J. & Samaras, C. Framework for incorporating downscaled climate output into existing engineering methods: application to precipitation frequency curves. J. Infrastruct. Syst. 23, 04017027 (2017).

Fiedler, T. et al. Business risk and the emergence of climate analytics. Nat. Clim. Change 11, 87–94 (2021).

Hewitson, B. C., Daron, J., Crane, R. G., Zermoglio, M. F. & Jack, C. Interrogating empirical-statistical downscaling. Clim. Change 122, 539–554 (2014).

Maraun, D. Bias correcting climate change simulations—a critical review. Curr. Clim. Change Rep. 2, 211–220 (2016).

Bürger, G., Murdock, T. Q., Werner, A. T., Sobie, S. R. & Cannon, A. J. Downscaling extremes-an intercomparison of multiple statistical methods for present climate. J. Clim. 25, 4366–4388 (2012).

Gutmann, E. et al. An intercomparison of statistical downscaling methods used for water resource assessments in the United States. Water Resour. Res. 50, 7167–7186 (2014).

Lopez-Cantu, T., Prein, A. F. & Samaras, C. Uncertainties in future U.S. extreme precipitation from downscaled climate projections. Geophys. Res. Lett. 47, e2019GL086797 (2020).

Tabari, H., Paz, S. M., Buekenhout, D. & Willems, P. Comparison of statistical downscaling methods for climate change impact analysis on precipitation-driven drought. Hydrol. Earth Syst. Sci. 25, 3493–3517 (2021).

Chen, J., Brissette, F. P., Chaumont, D. & Braun, M. Performance and uncertainty evaluation of empirical downscaling methods in quantifying the climate change impacts on hydrology over two North American river basins. J. Hydrol. 479, 200–214 (2013).

Malek, K. et al. Bias correction of hydrologic projections strongly impacts inferred climate vulnerabilities in institutionally complex water systems. J. Water Res. Plan. Man. 148, 04021095 (2022).

Wootten, A. M., Martin, E., Randklev, C. R. & Smith, R. Projected changes to streamflow and stream temperature in central Texas: how much will the river flow? Earth Interact. 27, 220021 (2023).

Pourmokhtarian, A., Driscoll, C. T., Campbell, J. L., Hayhoe, K. & Stoner, A. M. K. The effects of climate downscaling technique and observational data set on modeled ecological responses. Ecol. Appl. 26, 1321–1337 (2016).

Alder, J. R. & Hostetler, S. W. The dependence of hydroclimate projections in snow-dominated regions of the western United States on the choice of statistically downscaled climate data. Water Resour. Res. 55, 2279–2300 (2019).

Bürger, G., Sobie, S. R., Cannon, A. J., Werner, A. T. & Murdock, T. Q. Downscaling extremes: an intercomparison of multiple methods for future climate. J. Clim. 26, 3429–3449 (2013).

Chegwidden, O. S. et al. How do modeling decisions affect the spread among hydrologic climate change projections? Exploring a large ensemble of simulations across a diversity of hydroclimates. Earth’s Future 7, 623–637 (2019).

Wang, H.-M., Chen, J., Xu, C.-Y., Zhang, J. & Chen, H. A framework to quantify the uncertainty contribution of GCMs over multiple sources in hydrological impacts of climate change. Earth’s Future 8, e2020EF001602 (2020).

Wootten, A., Terando, A., Reich, B., Boyles, R. & Semazzi, F. Characterizing sources of uncertainty from global climate models and downscaling techniques. J. Appl. Meteorol. Clim. 56, 3245–3262 (2017).

Wu, Y. et al. Quantifying the uncertainty sources of future climate projections and narrowing uncertainties with bias correction techniques. Earth’s Future 10, e2022EF002963 (2022).

Yang, Y. & Tang, J. Downscaling and uncertainty analysis of future concurrent long-duration dry and hot events in China. Clim. Change 176, 11 (2023).

Eyring, V. et al. Overview of the Coupled Model Intercomparison Project Phase 6 (CMIP6) experimental design and organization. Geosci. Model Dev. 9, 1937–1958 (2016).

Pörtner, H. O. et al. Summary for policymakers. in Climate Change 2022: Impacts, Adaptation and Vulnerability. Contribution of Working Group II to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change (Cambridge University Press, 2022).

Lopez-Cantu, T., Webber, M. K. & Samaras, C. Incorporating uncertainty from downscaled rainfall projections into climate resilience planning in U.S. cities. Environ. Res. Infrastruct. Sustain. 2, 045006 (2022).

Jiang, Y. et al. Inter-comparison of multiple statistically downscaled climate datasets for the Pacific Northwest, USA. Sci. Data 5, 180016 (2018).

Lanzante, J. R., Dixon, K. W., Nath, M. J., Whitlock, C. E. & Adams-Smith, D. Some pitfalls in statistical downscaling of future climate. Bull. Am. Meteorol. Soc. 99, 791–803 (2017).

Wootten, A. M., Dixon, K. W., Adams-Smith, D. J. & McPherson, R. A. Statistically downscaled precipitation sensitivity to gridded observation data and downscaling technique. Int. J. Climatol. 41, 980–1001 (2021).

Rastogi, D., Kao, S.-C. & Ashfaq, M. How may the choice of downscaling techniques and meteorological reference observations affect future hydroclimate projections? Earth’s Future 10, e2022EF002734 (2022).

AghaKouchak, A. et al. Climate extremes and compound hazards in a warming world. Annu. Rev. Earth Pl. Sc. 48, 1–30 (2020).

McPhillips, L. E. et al. Defining extreme events: a cross-disciplinary review. Earth’s Future 6, 441–455 (2018).

Zscheischler, J. et al. A typology of compound weather and climate events. Nat. Rev. Earth Environ. 1, 333–347 (2020).

Zelinka, M. D. et al. Causes of higher climate sensitivity in CMIP6 models. Geophys. Res. Lett. 47, e2019GL085782 (2020).

Lanzante, J. R., Dixon, K. W., Adams-Smith, D., Nath, M. J. & Whitlock, C. E. Evaluation of some distributional downscaling methods as applied to daily precipitation with an eye towards extremes. Int. J. Climatol. 41, 3186–3202 (2021).

Bartusek, S., Kornhuber, K. & Ting, M. 2021 North American heatwave amplified by climate change-driven nonlinear interactions. Nat. Clim. Change 12, 1143–1150 (2022).

Heeter, K. J. et al. Unprecedented 21st century heat across the Pacific Northwest of North America. npj Clim. Atmos. Sci. 6, 5 (2023).

McKinnon, K. A. & Simpson, I. R. How unexpected was the 2021 Pacific Northwest heatwave? Geophys. Res. Lett. 49, e2022GL100380 (2022).

Thompson, V. et al. The 2021 western North America heat wave among the most extreme events ever recorded globally. Sci. Adv. 8, eabm6860 (2022).

White, R. H. et al. The unprecedented Pacific Northwest heatwave of June 2021. Nat. Commun. 14, 727 (2023).

NOWData - NOAA Online Weather Data. https://www.weather.gov/wrh/climate (2023).

Doss-Gollin, J. & Keller, K. A subjective Bayesian framework for synthesizing deep uncertainties in climate risk management. Earth’s Future 11, e2022EF003044 (2023).

Keller, K., Helgeson, C. & Srikrishnan, V. Climate risk management. Annu. Rev. Earth Pl. Sc. 49, 95–116 (2021).

Maher, N., Milinski, S. & Ludwig, R. Large ensemble climate model simulations: introduction, overview, and future prospects for utilising multiple types of large ensemble. Earth Syst. Dynam. 12, 401–418 (2021).

Gupta, R. S., Steinschneider, S. & Reed, P. M. Understanding contributions of paleo-informed natural variability and climate changes on hydroclimate extremes in the central valley region of California. Authorea https://doi.org/10.22541/essoar.167870424.46495295/v1 (2023).

Lanzante, J. R., Adams-Smith, D., Dixon, K. W., Nath, M. & Whitlock, C. E. Evaluation of some distributional downscaling methods as applied to daily maximum temperature with emphasis on extremes. Int. J. Climatol. 40, 1571–1585 (2020).

Brunner, L. et al. Reduced global warming from CMIP6 projections when weighting models by performance and independence. Earth Syst. Dynam. 11, 995–1012 (2020).

Qasmi, S. & Ribes, A. Reducing uncertainty in local temperature projections. Sci. Adv. 8, eabo6872 (2022).

Herger, N. et al. Ensemble optimisation, multiple constraints and overconfidence: a case study with future Australian precipitation change. Clim. Dyn. 53, 1581–1596 (2019).

Palmer, T. E. et al. Performance-based sub-selection of CMIP6 models for impact assessments in Europe. Earth Syst. Dynam. 14, 457–483 (2023).

Lehner, F., Hawkins, E., Sutton, R., Pendergrass, A. G. & Moore, F. C. New potential to reduce uncertainty in regional climate projections by combining physical and socio-economic constraints. AGU Adv. 4, e2023AV000887 (2023).

Srikrishnan, V., Guan, Y., Tol, R. S. J. & Keller, K. Probabilistic projections of baseline twenty-first century CO2 emissions using a simple calibrated integrated assessment model. Clim. Change 170, 37 (2022).

Moore, F. C. et al. Determinants of emissions pathways in the coupled climate-social system. Nature 603, 103–111 (2022).

Wootten, A. M., Massoud, E. C., Sengupta, A., Waliser, D. E. & Lee, H. The effect of statistical downscaling on the weighting of multi-model ensembles of precipitation. Climate 8, 138 (2020).

Wootten, A. M., Massoud, E. C., Waliser, D. E. & Lee, H. Assessing sensitivities of climate model weighting to multiple methods, variables, and domains in the south-central United States. Earth Syst. Dynam. 14, 121–145 (2023).

Lorenz, R. et al. Prospects and caveats of weighting climate models for summer maximum temperature projections over North America. J. Geophys. Res. Atmospheres 123, 4509–4526 (2018).

Lafferty, D. C. et al. Statistically bias-corrected and downscaled climate models underestimate the adverse effects of extreme heat on U.S. maize yields. Commun. Earth Environ. 2, 196 (2021).

Pianosi, F. et al. Sensitivity analysis of environmental models: a systematic review with practical workflow. Environ. Modell. Softw. 79, 214–232 (2016).

Razavi, S. et al. The future of sensitivity analysis: an essential discipline for systems modeling and policy support. Environ. Modell. Softw. 137, 104954 (2021).

Karimi, T., Reed, P., Malek, K. & Adam, J. Diagnostic framework for evaluating how parametric uncertainty influences agro-hydrologic model projections of crop yields under climate change. Water Resour. Res. 58, e2021WR031249 (2022).

Mendoza, P. A. et al. Effects of hydrologic model choice and calibration on the portrayal of climate change impacts. J. Hydrometeorol. 16, 762–780 (2015).

Müller, C. et al. Exploring uncertainties in global crop yield projections in a large ensemble of crop models and CMIP5 and CMIP6 climate scenarios. Environ. Res. Lett. 16, 034040 (2021).

Rising, J., Tedesco, M., Piontek, F. & Stainforth, D. A. The missing risks of climate change. Nature 610, 643–651 (2022).

Srikrishnan, V. et al. Uncertainty analysis in multi-sector systems: considerations for risk analysis, projection, and planning for complex systems. Earth’s Future 10, e2021EF002644 (2022).

Kotamarthi, R. et al. Downscaling Techniques for High-Resolution Climate Projections: From Global Change to Local Impacts (Cambridge University Press, 2021).

Maraun, D. et al. Towards process-informed bias correction of climate change simulations. Nat. Clim. Change 7, 764–773 (2017).

Schlenker, W. & Lobell, D. B. Robust negative impacts of climate change on African agriculture. Environ. Res. Lett. 5, 014010 (2010).

Sriver, R. L., Lempert, R. J., Wikman-Svahn, P. & Keller, K. Characterizing uncertain sea-level rise projections to support investment decisions. PLoS ONE 13, 1–35 (2018).

Thrasher, B. et al. NASA global daily downscaled projections, CMIP6. Sci. Data 9, 262 (2022).

Gergel, D. R. et al. Global downscaled projections for climate impacts research (GDPCIR): preserving extremes for modeling future climate impacts. EGUsphere https://egusphere.copernicus.org/preprints/2023/egusphere-2022-1513/ (2023).

Lange, S. Trend-preserving bias adjustment and statistical downscaling with ISIMIP3BASD (v1.0). Geosci. Model Dev. 12, 3055–3070 (2019).

Lange, S. ISIMIP3BASD https://doi.org/10.5281/zenodo.4686991 (2022).

Chegwidden, O. et al. Open data and tools for multiple methods of global climate downscaling https://carbonplan.org/research/cmip6-downscaling-explainer (2022).

Gutmann, E. D. et al. En-GARD: a statistical downscaling framework to produce and test large ensembles of climate projections. J. Hydrometeorol. 23, 1545–1561 (2022).

Vandal, T. et al. DeepSD: generating high resolution climate change projections through single image super-resolution. in Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’17, 1663–1672 (Association for Computing Machinery, 2017).

Riahi, K. et al. The shared socioeconomic pathways and their energy, land use, and greenhouse gas emissions implications: an overview. Global Environ. Change 42, 153–168 (2017).

Sheffield, J., Goteti, G. & Wood, E. F. Development of a 50-year high-resolution global dataset of meteorological forcings for land surface modeling. J. Clim. 19, 3088–3111 (2006).

Hersbach, H. et al. The ERA5 global reanalysis. Q. J. R. Meteorol. Soc. 146, 1999–2049 (2020).

Cucchi, M. et al. WFDE5: bias-adjusted ERA5 reanalysis data for impact studies. Earth Syst. Sci. Data 12, 2097–2120 (2020).

Lange, S. et al. WFDE5 over land merged with ERA5 over the ocean (W5E5 v2.0). https://doi.org/10.48364/ISIMIP.342217 (2021).

Brekke, L. & Barsugli, J. Uncertainties in projections of future changes in extremes. In Extremes in a Changing Climate, Vol. 65 (eds AghaKouchak, A., Easterling, D., Hsu, K., Schubert, S. & Sorooshian, S.) 309–346 (Springer, 2013).

Sippel, S. et al. Uncovering the forced climate response from a single ensemble member using statistical learning. J. Clim. 32, 5677–5699 (2019).

Yip, S., Ferro, C. A. T., Stephenson, D. B. & Hawkins, E. A simple, coherent framework for partitioning uncertainty in climate predictions. J. Clim. 24, 4634–4643 (2011).

Microsoft Open Source, McFarland, M., Emanuele, R., Morris, D. & Augspurger, T. microsoft/planetarycomputer: October 2022. https://doi.org/10.5281/zenodo.7261897 (2022).

Lafferty, D. & Sriver, R. Global variance decomposition of downscaled and bias-corrected CMIP6 climate projections. https://doi.org/10.57931/1994827 (2023).

Lafferty, D. david0811/lafferty-sriver_2023_npjCliAtm: v1.0. https://doi.org/10.5281/zenodo.8244794 (2023).

Acknowledgements

This work was supported by the U.S. Department of Energy, Office of Science, Biological and Environmental Research Program, Earth and Environmental Systems Modeling, MultiSector Dynamics under Cooperative Agreement DE-SC0022141. Computations for this research were performed on the Pennsylvania State University’s Institute for Computational and Data Sciences’ Roar supercomputer. Computations for this research were performed on Microsoft Planetary Computer92. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the funding agencies. The authors are grateful to the participants of the Climate Discussion Group at the University of Illinois for useful feedback. The authors thank Carina Lansing and Casey Burleyson for assistance in developing and deploying the interactive dashboard associated with this paper. The dashboard leverages the capabilities of the MultiSector Dynamics—Living, Intuitive, Value-adding, Environment (MSD-LIVE) platform funded by the U.S. Department of Energy, Office of Science. MSD-LIVE is developed and maintained by Pacific Northwest National Laboratory. The authors are grateful to three anonymous reviewers for their helpful comments and to the editor for handling this manuscript.

Author information

Contributions

D.C.L.: conceptualization, methodology, data curation, formal analysis, visualization, writing—original draft, and writing—review and editing. R.L.S.: conceptualization, methodology, data curation, writing—review & editing, supervision, and funding acquisition.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lafferty, D.C., Sriver, R.L. Downscaling and bias-correction contribute considerable uncertainty to local climate projections in CMIP6. npj Clim Atmos Sci 6, 158 (2023). https://doi.org/10.1038/s41612-023-00486-0

DOI: https://doi.org/10.1038/s41612-023-00486-0