Abstract

This article analyzes the current impact of Artificial Intelligence (AI) on Spanish healthcare, as well as the ethical and legal challenges that its application poses. Technological advances already being applied in healthcare make it necessary to adapt the legal system to emerging social trends and force healthcare professionals to review their codes of ethics in the face of new ethical dilemmas. With the exception of the code of ethics for Medicine, which has recently incorporated them, these codes lack clear guidelines for action on AI, so relevant ethical problems remain unanswered. After a review of the last 5 years of Spanish and European regulations in medicine, it can be concluded that the regulation and legislation of the application of AI is very deficient, both in the EU and in Spain. In order to identify different ways of resolving conflicts related to AI in medicine, court rulings related to the rights and obligations of health professionals, patients and users have been analyzed and reviewed. The paper concludes by proposing a series of aspects to be taken into account in the future regulation of the application of AI in medicine.

Introduction

Systems that involve AI, either by means of virtual assistants, or voice or image recognition programs, search engines, drones or robots, play an essential role in many jobs or help many professionals. This is mainly because AI and robotics have the capacity to learn in an autonomous way, through experience and trial-error techniques, thus improving their processing systems and their deduction skills. In Medicine, the use of Narrow Artificial Intelligence is already a reality, and some initiatives have arisen to claim it must help and support healthcare professionals, instead of substituting them with AI systems designed to reproduce the actions developed by human beings (Rome Call for AI Ethics, 2020).

The implementation of robotics in Medicine is becoming more and more common. The automation of certain tasks can provide some advantages, such as greater effectiveness, resource optimization and economic efficiency. Technologies such as 5G and augmented reality have improved the quality of care for many patients, offering more autonomy to dependent patients. One example is robots capable of monitoring patients' vital signs in real time and calling the emergency services if necessary (Moreno Olivares, 2020). In addition, the implementation of AI and robotics in systems for the prediction and detection of illnesses can be very effective and help improve health care, since their use can allow healthcare professionals to devote more time to more personalized care.

Besides its advantages, AI can also have a negative impact on some human rights and freedoms, which is forcing countries to modify their legal and normative systems to guarantee that fundamental rights and freedoms are respected. Aware of the risks of robots (or AI) acting autonomously, the science fiction writer Isaac Asimov proposed, in “Runaround” (Asimov, 1942), three fundamental laws for robotics to be acceptable. The first one states that a robot must not injure a human being or, through inaction, allow a human being to come to harm. The second law states that a robot must obey humans, unless their orders contravene the first law, so that humans cannot use robots to cause damage to other humans. The third one states that a robot must protect its own integrity and existence, unless doing so means contravening the first two laws.

The rules governing AI applied to Medicine should not be far from those suggested by Isaac Asimov 80 years ago. Legislation on the application of AI to Medicine must ensure, among other issues, the healthcare provision follows the criteria for best practices, guaranteeing that the objective of AI is to help and not to harm humans, as well as that the citizens involved are informed and that users’ data are protected. This means, for instance, ensuring the quality of systems, establishing the different liabilities in healthcare provision assisted by AI, ensuring an adequate protection of data and regulating informed consent in procedures where AI is involved.

Besides having its own legislation, Spain, as a member of the European Union (EU), must transpose binding European regulations into its own legal framework. Other normative reference frameworks for Spanish Medicine are the professional codes of ethics. They must guide healthcare professionals, so that AI is used according to professional norms and objectives. To date, there are no in-depth studies on how AI is regulated in Spanish Medicine.

Considering all of the above, the following research question arises: what is current legislation on AI applied to Medicine like in Spain? The aim of this research is to analyze the Spanish current legislation on AI applied to Medicine, both in terms of laws and professional codes of ethics. In addition, this study aims at suggesting some guidelines for future norms, suggestions that could be useful for healthcare professionals, for law professionals and for the academy, in general.

Methodology

This study consists of a literature review on current legislation in Spain regarding AI applied to Medicine.

- A search was made in Spanish and international law and academic databases (Scopus, Dialnet and VLex) looking for Spanish and European legislation.

- Communications, recommendations and reports by the European Commission and the European Parliament from the past 6 years were reviewed, after a search on the official website of each institution, using as keywords the year and the term “Artificial Intelligence”. The documents reviewed included the Charter of Fundamental Rights of the European Union and the WMA Declaration of Helsinki on the ethical principles for medical research in humans (Helsinki, D. & World Medical Association (1975)), as well as the Treaty on the European Union. In addition, the communications of the European Commission from the last five years related to data protection and artificial intelligence were reviewed, together with the EU Regulation 2016/679 of the European Parliament and of the Council of 27 April 2016, on the protection of natural persons with regard to the processing of personal data and on the free movement of such data. Finally, the communication of the Commission to the European Parliament on Artificial Intelligence for Europe, of 25 May 2018 (Brussels), the 169 articles contained in the Spanish Constitution, the National Strategy of the Ministry of Economic Affairs and Digital Transformation 2020, the Organic Law 3/2018 for the Protection of Personal Data and Guarantee of Digital Rights (Ley Orgánica 3/2018) and the Decree Law 2/2023 on urgent measures to foster Artificial Intelligence in Extremadura (Decreto-Ley 2/2023) were analyzed.

- A search was made in case law to identify rulings establishing the legal foundations for the application of AI in Medicine. Those rulings were retrieved from the Spanish Official Gazette (Boletín Oficial del Estado, BOE).

- The criteria of the International Organization for Standardization (ISO 2015) in the robotics field were also considered.

- The codes of ethics for Spanish healthcare professions (Medicine, Nursing, Psychology and Physiotherapy) were analyzed.

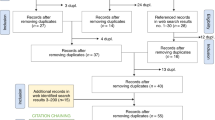

To complete the analysis, a search was made in PubMed with the MeSH term “Artificial Intelligence” in Spanish, filtering the results to the last five years. Of the 261 articles retrieved, 5 were excluded due to duplication and 16 due to their focus on healthcare in countries outside the European Union, leaving a final set of 240 articles. Those articles were analyzed to identify the use of AI in Medicine, as well as to consider critically what legal norms on AI in Medicine should look like.

In the following sections, the results found will be presented and discussed and, afterwards, a proposal will be made regarding the principles that should be followed to regulate the application of AI to Medicine.

Results

Norms on the use of AI in Medicine at the European Union level

At the European Union (EU) level, there are not any specific norms on AI applied to Medicine. For this reason, the analysis was based on those European norms on AI that could be applied to the field of Medicine.

According to the European Commission (EC), AI is a system based on computer programs that have been integrated into physical devices and that show an intelligent behavior, being able to collect, analyze and interpret data from their environment to achieve specific objectives that could have an impact on its environment (European Commission, 2018). Norm ISO 8373 (ISO 8373:2012, 2012) defines robotics as those multifunctional machines that are automatically controlled and that can be reprogrammed to develop activities that were usually developed by human beings. This norm, in its article 6, establishes that all impact caused in the AI and robotics environment that could harm or damage society or that undermines fundamental rights and the norms of security established by the EU, must be considered as a high risk derived from the use of AI and robotics.

Directive 85/374/EEC (Council Directive 85/374/EEC (1985)) regulates liability for defective products and the damage they might cause, including products using AI or robotics. According to this Directive, if we consider robots as products, liability issues could be resolved in two different ways. In the first, the producer assumes responsibility for having made a defective product, provided that the injured party can prove the actual damage, the defect in the product and the causal relation between the defect and the damage caused. In the second, the user is responsible for having used the product in an inadequate way.

Regarding privacy and data access and management, a fundamental issue in the AI field, it is necessary to mention Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data (Regulation EU, 2016/679). This regulation establishes the norms for the protection of natural persons with regard to their personal data and the free circulation of such data. Article 22 establishes the right of individuals not to be subject to decisions based solely on the automated processing of data, when these decisions can have negative consequences for them.

An essential norm in the EU regarding AI is the European Parliament resolution of 20 October 2020 with recommendations to the Commission on a civil liability regime for artificial intelligence (European Parliament, 2018), which includes recommendations devoted to the Commission on the ethical aspects of AI, robotics and related technologies. This norm includes computer programs, algorithms and data used or produced by technologies, either inside or outside the EU. If any part of those technologies has been developed or used in the EU, even if it is physically located anywhere else, the norm can be applied. The regulation intends to establish a regulatory framework and some ethical principles to develop AI, robotics and related technologies in the EU. The proposal is based on article 114 of the Treaty on the Functioning of the European Union (Consolidated version, 2008), where the adoption of measures that ensure the functioning of the internal market is fostered. A central part of it is the establishment of a single EU digital market. The regulation seeks to avoid the fragmentation of the market and the creation of national norms that could make the free circulation of products and services with AI difficult. It also fosters the imposition of regulatory limits when high risk AI systems for security and fundamental rights are involved.

Article 5 of the regulation establishes that AI, robotics and related technologies must be used according to EU law and with respect for human dignity, autonomy and security, as well as the fundamental rights established in the EU Charter of Fundamental Rights (Charter of Fundamental Rights of the European Union (2007)). The EU and its member states must foster research projects based on AI and robotics aimed at promoting social inclusion, democracy, equity, cooperation and equality. Data (both personal and non-personal) must be managed according to the regulation EU 2016/679 (Regulation EU, 2016/679) and the Directive 2002/58/EC (European Parliament, 2002), which point out that data can only be used by public authorities and member states if they pursue an essential public interest goal. Article 7 deals with anthropocentric and anthropogenic AI. High-risk AI technologies will only be developed and used when proper and comprehensive human supervision can be guaranteed and when human control can be restored at any time.

Article 8 states that AI and related technologies can only be used when a certain level of security can be achieved, meeting minimum cybersecurity requirements, and when that level is proportional to the risk identified. In case of a security risk, a plan and alternative measures must be guaranteed. Article 9 establishes that technologies cannot discriminate on grounds of race, sex, sexual orientation, disability, language, religion, nationality, social status, economic status, political opinions or criminal record. Article 10 specifies that AI, robotics and related technologies must not contribute to the dissemination of misinformation, and must respect the rights of workers and promote quality education and digital literacy, so as neither to widen the gender gap nor to prevent equal opportunities.

Article 13 highlights the right to compensation of every person, natural or legal, who suffers damage because of the development of AI, robotics and related technologies. According to article 16, the corresponding national authority will issue the European certificate of ethical conformity, upon evaluation of the technology. Article 17 indicates that developers and users will control data used or produced by AI, according to the rules and norms of the EU and of other European and international organizations. Those responsible for the development of data must establish quality controls on the external sources of data and on the supervision mechanisms. Article 18 establishes that member states will designate an independent public authority in charge of monitoring the application of the regulation. This entity will evaluate risks, without prejudice to the legislation of each state, and will be the first point of contact if any suspicion arises that the ethical principles of the regulation are not being fulfilled. This national authority will also supervise the application of national, European and international governing rules on AI and robotics. It will provide guidance and support on EU legislation and on the best way to apply AI and the ethical principles of the regulation.

In 2018, a plan on AI was published by the European Commission (European Commission, 2018). That plan establishes that the AI Watch will be in charge of supervising the implementation of AI. In 2020, a White paper was presented on the excellence in AI (White paper, 2020). In 2021, the EC suggested actions to foster excellence in AI and to guarantee the reliability in the use of technology, stated in the proposal for the development of the Artificial Intelligence Act (Proposal, 2021) and the coordinated plan on AI (European Commission, 2018). In this plan, the EC proposes the harmonization of norms on AI (discouraging their regulation at an exclusively national level) and the regulation of its use by means of the before mentioned Artificial Intelligence Act. It proposes prohibiting AI systems that involve unacceptable risks, such as the manipulation of citizens by means of subliminal techniques or the exploitation and increase of wealth based on the identification of people’s vulnerabilities. Some other prohibitions would correspond to ranking of people based on AI by public authorities and to biometric identification in real time and remotely.

AI watch national strategies on artificial intelligence: a European perspective (Ricart, J. et al., 2022) is a coordinated plan on AI for the EU in 2022. The plan details the guidelines for the strategies on AI in the EU, already published by 23 member states and Norway. The first strategic line is to offer adequate conditions for the development of AI. To foster computing capacity, 20 countries are organizing policies and infrastructures for processing and managing data. The second one is to foster excellence, from research to the market. Many countries are establishing public-private collaborations and creating excellence and research centers on AI, and most of them have committed to creating facilities for experimentation in AI. The third line consists of ensuring that AI works at the service of citizens and that it is a positive force for society. The fourth one is based on building strategic leadership in high-impact sectors: climate and environment, health and the public sector, to which, depending on the country, education, the manufacturing industry, migration, asylum and law enforcement can be added. The fifth line concerns the plans for recovery and resilience.

Spanish legislation on the application of AI in Medicine

In Spain, there are not any specific norms on AI applied to Medicine, so those norms on AI that could be applied to Medicine will be analyzed.

The first norm analyzed is the Spanish Constitution, the highest-ranking norm (Constitución Española. Boletín Oficial del Estado, 29 de diciembre de, 1978, núm. 311). Article 18 of the Spanish Constitution protects the right to honor, the right to personal and family privacy, and the right to one's own image. The Constitutional Court (Tribunal Constitucional, TC), in its ruling 14/2003, of 28 January 2003, indicated that, although these three rights have similar characteristics and are to some extent related, they are clearly distinct and autonomous. They are derived from human dignity and are focused on the protection of the moral assets of individuals (STC, 14/2003). Another important aspect that must be taken into consideration in this study is the one contained in article 18.4, since it governs and limits the use of computer technologies to guarantee personal privacy, family privacy and honor.

The Spanish Constitutional Court understands that the right to use computer technologies, although related to the right for privacy, is an independent right. It is so reflected in sentences such as SSTC, 254/1993 (SSTC 254/1993), of 20 July, and SSTC, 290/2000 (SSTC 290/2000), of 30 November. Besides, the Constitutional Court considers that it is a fundamental right for data protection and that citizens must have the capacity to refuse that certain personal data can be used without their consent or for a purpose other than the previously consented one.

Data protection in the use of computing is linked to ideological freedom, since the storage and use of these data can involve a risk for what we call “sensitive data”, such as political or religious ideology, or data related to health, which are regulated by article 16 of the Spanish Constitution. The Council of Europe Convention of 28 January 1981 developed the right to the protection of personal data, and the Organic Law 5/1992 (Ley Orgánica 5/1992), for the regulation of the automated processing of personal data, provided its specific regulation.

Another article of the Spanish Constitution we must bear in mind is 20.4, where the rights to transmit and receive truthful information by any means of dissemination are acknowledged and protected. The Law, as specified, must regulate professional confidentiality and the conscience clause in the exercise of the freedom of expression, always protecting the right to honor, the right to one's own image, and the right to personal and family privacy.

In 2019, the EU asked the member states to present and publish their coordinated plans on AI. Following the steps of the Community agenda and of the United Nations, which promote Agenda 2030, Spain made its National Strategy on AI (ENIA) official in 2020. The effects of the said strategy were not adopted until 2021 (Ministerio de asuntos económicos y transición digital, 2020). The Spanish Digital Agenda 2025, which involves AI, intends to promote the transformation of all fields, including Medicine, by means of cooperation between the public and private sectors. According to ENIA and Agenda 2025, Spain seeks to achieve scientific and innovation excellence in AI and to make it inclusive and sustainable. To achieve these goals, Spain has developed several measures: the creation of a Spanish Network of Excellence in AI; the establishment of centers for multidisciplinary technological development fostering AI; the development of a National Plan for Digital Competences, promoting an attractive offer for university students and professionals; the reinforcement of supercomputing capabilities and the development of the Data for Social Welfare Project; the launch of the public-private NextTech Fund, which fosters digital entrepreneurship; the development of the National Program on Green Algorithms; the incorporation of AI into the public administration by means of applications that improve the efficiency of administrative services; and the development of an AI quality seal by means of an AI Assessing Council. By means of all these measures, Spain seeks to adapt to the new context of global competitiveness in terms of AI.

The Real Decreto Legislativo 1/2007, por el que se aprueba el texto refundido de la Ley General para la Defensa de Consumidores y Usuarios (Real Decreto, 1/2007) (Real Decreto, 1/2007, that approves the revised text for the General Act on the Protection of Consumers and Users), makes reference to innovative products. Its articles can be applied to designers of systems and programs based on AI and robotics. In its article 135, it establishes that people in charge of making products are liable for those damages and harms caused by those products if they are defective. Article 138 defines as a producer the maker or importer in the EU who participates in a finished product or a raw material or who integrates any extra elements in a finished product. In those cases where it is not possible to identify the producer, the liable person will be the provider. Article 139 points out that the harmed party must prove a causal nexus between the product and the damage, as well as the fact that a defect exists in that product, or at least in some products from the same series. In article 140, it is said that exoneration can be applied if the product has not been put into circulation or if, at the moment of its commercialization, no defects existed. Also, people will be exempt if the product does not have any economic goal or if it was not created to be sold.

Another norm that could affect the use of AI is the Ley Orgánica 3/2018 de Protección de Datos Personales y garantía de los derechos digitales (Ley Orgánica, 3/2018) (Organic Law, 3/2018 for the Protection of Personal Data and Guarantee of Digital Rights). In its article 1, it defines the adaptation of Spanish legal norms to the European Regulation on the protection of data as one of its main objectives. Article 81 could also be applied to the use of AI, since it refers to the right of universal access to the internet, which can include, for instance, the use of medical websites on diagnosis or treatments involving AI systems. Article 87, in turn, mentions the right to privacy of professionals using digital systems and products. The employer can access the devices used by the professional for the purpose of monitoring the fulfilment of their legal obligations. In any case, the laws and norms that protect privacy must be respected.

The Ley 15/2022 para la igualdad de trato y no discriminación (Ley 15/2022) (Law 15/2022 for equal treatment and non-discrimination) regulates, in its article 23, for the first time in Spain, the implementation of AI by public administrations and private companies. Both sectors must promote a trustworthy use of AI that respects ethics and fundamental rights, as established in the Spanish Constitution. This Act mentions the minimum criteria to be taken into account: minimizing bias, transparency and accountability. It also points out that mechanisms must be included to explain the origin of the data used to train the algorithms in these systems, their design and the possible discriminatory impact they may have.

Codes of ethics for Spanish healthcare professionals

In the codes of ethics for Physiotherapy (Código de deontología de la fisioterapia española, 2021), Nursing (Código deontológico de la Enfermería española, 1989) and Psychology (Código deontológico del Psicólogo, 2015) professionals, there are no references to AI. In the latest proposal for updating the Nursing code, three articles on social networks and virtual communication were added, but the updated version is not in force yet (Propuesta actualización código deontológico de la enfermería española, 2022).

In December 2022, a new Code of ethics for Medicine was approved (Código deontológico de Medicina, 2022), and it has been applied as of 2023. In this code, there is a chapter (XXIII) on telemedicine and the information and communication technologies (ICT). In article 80, it is confirmed that, whenever telematics media or any other means of communication are used, to favor decision making, each and every person participating will be identified unequivocally. Confidentiality of patients must be preserved and the priority will be to use the means of communication that can guarantee the maximum security level possible and maximize the measures devoted to this duty. Those uses must be regulated according to the code of ethics for Medicine (Lorenzo Aparici, M. O. D. 2022). Also, in article 81, it is stated that doctors must be liable for the direct or indirect damage caused to the patient by using different communication systems. Besides, during the use of telemedicine, the doctor must preserve the same scientific, professional, true and prudent basis that is expected in traditional assistance. In article 82, it is highlighted that any research or activity related to the health data and artificial intelligence must always be for the benefit of society and to achieve objectives that foster public health. Any doctor using AI must commit to reinforcing the protection of confidentiality, control and data property of the patient. Also, models must be developed where the patient consent and procedures for the management of data are included.

Chapter XXIV of the Code discusses AI and Healthcare Databases. In articles 85 and 86, it is stated that the doctor must demand ethical control regarding research with AI. This ethical control must fulfil the criteria for transparency, reversibility and traceability for each and every process in which it intervenes, in order to guarantee patient security. Also, it is highlighted that, even if data related to healthcare that have been extracted from big healthcare databases or any robotic system can be helpful in healthcare or clinical decision making, they will not substitute the duty of the doctor with regard to their use in a proper professional way.

Discussion

As the literature review has shown, the application of AI to Medicine is not explicitly addressed in specific norms in the EU or Spain. For that reason, the analysis has dealt with those European and Spanish norms on AI that could be applied to Medicine. Regarding the codes of ethics for healthcare professionals, only the code of ethics for Medicine regulates the professional use of AI.

General EU norms on AI are in accordance with its Charter of Fundamental Rights. The EU documents and its code of ethics on AI highlight the following aspects:

(1) the need to establish a general regulatory framework and some ethical principles for its use in accordance with EU law and principles, aimed at their application to a single digital market for the EU; (2) the importance of national supervision, in such a way that member states control the functioning of AI by means of an independent public authority in charge of supervising the application of European principles and norms on AI; (3) the need to identify possible risks, so that security and cybersecurity are ensured and high-risk AI technologies can only be used if comprehensive human supervision can be guaranteed and if human control can be restored at any time; (4) the requirement to establish liability for damage caused by products such as AI technologies.

In Spain, as in Europe, some norms have been developed on liability derived from the use of defective products, including those that integrate AI. There is a norm on the protection of personal data and another one on digital rights, but without any specific reference to the application of AI to Medicine. The Law for equal treatment regulates the implementation of AI by public administrations and private companies (Rull, A. A. (2011)). Both must promote its use with trust and respecting the ethical values and fundamental rights of the Spanish Constitution. This law mentions minimum criteria to take into account: bias minimization, accountability and transparency. Besides, it points out that they must explain how data are used for the design and training of algorithms, as well as the possible discriminatory impact they might cause.

We must have norms that allow us to preserve transparency, dignity and equality, and that make it possible to hold liable the natural and legal persons that have designed and created these devices and machines. On 23 February 2022, the Administrative Litigation Chamber of the Supreme Court, in its ruling 818/2022 (STS 818/2022), already ruled on who may be held financially liable. The Supreme Court highlights that the fact that the faults of the product used in the surgical intervention can be attributed to the manufacturer (in this case, a laboratory) does not mean that no liability can be attributed to the Healthcare Administration, since the product is an essential part of the healthcare service provided at the moment of the surgical intervention.

AI has started to have a very direct impact on clinical practice and it affects important aspects, such as liability in medical activity, protection of personal data or informed consent. Some European initiatives foster its application to medicine. The European Union, for instance, is financing databases with anonymized images and AI systems, with the purpose of making diagnosis easier and more agile, as well as detecting illnesses at earlier stages (Tsang et al., 2017). Europe, nonetheless, is investing less in AI than some other international actors, such as the USA or China. A clear example is that, until 2017, only 25% of the biggest European companies had introduced digital standards (Miailhe et al., 2020). Although AI in Medicine is developing progressively and investment in AI has increased in Europe, there is still a lack of specific regulation. For this reason, some member states have adopted their own norms, which represents a risk for the single normative framework that the EU intends to establish, given that the EU highlights the necessity of creating a global governance for AI.

To develop legislation on AI applied to Medicine, the ethical principles that support it must be established first. Those ethical principles must inform both European and national norms. Ethics must guide AI and cybersecurity, to avoid the potential adverse effects of AI (Mostenau, 2020). The code of ethics of AI in the EU establishes the following ethical principles: respect for dignity, autonomy and human security, social inclusion, democracy, equity, cooperation and equality. Data, for instance, must only be used by public authorities and member states when there is an essential public interest, and they must establish quality controls on external data sources and on supervision mechanisms. In this vein, the signatories of the “Rome Call for AI Ethics” (Rome Call for AI Ethics, 2020) consider that the development of algorithms requires an ethical approach, from design to application. Thus, AI technologies must take into account the principles of transparency, inclusion, liability, impartiality, reliability, security and privacy. The coordinated plan the EU intends to implement must combine the requirements of predictability, liability and verifiability with fundamental rights and ethical principles (Vakkuri et al., 2020).

After analyzing the Spanish and European norms, it can be concluded that the regulation of the application of AI to medicine is still very deficient, both in the EU and in Spain. The EU intends to establish a general regulatory framework and a set of ethical principles in accordance with the rights and operating principles of the EU, with supervision by independent national authorities. It is urgent to develop and detail those norms, so that Spanish and European citizens can benefit from the application of AI to medicine while the risks it can involve are avoided.

Proposal to regulate the application of AI to medicine

The EU and its member states, such as Spain, must establish a realistic and adequate plan for the application of AI to Medicine. This plan must seek to improve the quality of healthcare and, at the same time, to respect the ethical principles of Medicine and the fundamental rights and freedoms established by the European framework. Following the example of the three laws proposed by Isaac Asimov in 1942, we suggest that the following aspects be taken into account in future Spanish and European norms on AI applied to Medicine:

1. Ethics as a guiding principle: Every norm must respect the ethical values and principles that underpin the law in the EU and in Spain.

2. Respect for the general objective of healthcare practices: For the application of AI to medicine to be in accordance with the Spanish and European ethical framework, its systems and algorithms must have a clear objective, which is essentially the objective of Medicine itself: to improve users' quality of life and the care they receive.

3. Continuous assessment: Decision making derived from AI must be comprehensible, transparent and open to evaluation, to ensure that it is in accordance with the aforementioned general objective regarding healthcare quality. The use of AI must be reliable for every party involved.

4. Clarification of liabilities: All people involved in the application of AI to Medicine (design, distribution, professional use) must know their specific liabilities, especially if an adverse event occurs.

5. Risk identification: The actors involved in the application of AI must cooperate to identify and avoid the possible risks and negative impacts derived from it. These include deficits in security or in data protection, misinformation of users, threats to the autonomy of the patient (undermining informed consent or decision-making capacity), and confusion about the liabilities derived from healthcare practice mediated by AI. It is necessary to establish mechanisms that allow these and other collateral risks to be identified, so that AI can be consolidated as a reliable technology.

6. Cybersecurity: Besides identifying risks, security must be ensured through the establishment of specific mechanisms. High-risk AI must only be used when comprehensive human supervision can be guaranteed and when human control can be reinstated at any time.

7. Continuous training: The application of AI in Medicine must be part of the training plans of future healthcare professionals. The EU and its member states must ensure that their healthcare professionals receive appropriate training on AI, so that they can make adequate and responsible use of it.

Main contribution of this study

This research has found that the regulation of the application of AI in Medicine is weak, both in the European Union and in Spain, so it is advisable that these norms be detailed as soon as possible. The authors suggest that future regulations on AI applied to Medicine be based upon the following aspects: (1) ethical principles must guide them; (2) AI must respect the general purpose of Medicine (improving health care); (3) AI must be subject to continuous assessment; (4) responsibility for all actions must be clarified; (5) potential risks must be identified; (6) there must be cybersecurity mechanisms; (7) professionals must receive continuous training.

References

Asimov I (1942) Runaround. Street & Smith, New York, p 40. Asimov also added a “Zeroth Law” (so named to continue the pattern where lower-numbered laws supersede the higher-numbered laws), stating that a robot must not harm humanity

Charter of Fundamental Rights of the European Union (2007/C 303/01). EU Official Gazette, 18 December 2000. Retrieved: https://www.europarl.europa.eu/charter/pdf/text_es.pdf

Consejo General de Colegios de Fisioterapeutas de España (2021) Código de deontología de fisioterapia española. https://www.consejo-fisioterapia.org/descargas/codigo-deontologico-cgcfe.pdf

Consejo General de Colegios Oficiales de Médicos (2022) Código de Deontología Médica. https://www.cgcom.es/sites/main/files/minisite/static/828cd1f8-2109-4fe3-acba-1a778abd89b7/codigo_deontologia/

Colegio Oficial de Enfermería (1989) Código Deontológico de Enfermería Española. https://www.codem.es/codigo-deontologico

Consejo General de la Psicología de España (2015) Código Deontológico del Psicólogo. https://www.cop.es/pdf/CodigoDeontologicodelPsicologo-vigente.pdf

Consolidated Version of the Treaty on European Union (2008) OJ C115/13

Constitución Española. [Internet]. Boletín Oficial del Estado, 29 de diciembre de 1978, núm. 311. [Consultado 10 de febrero de 2022]. Disponible en: https://www.boe.es/buscar/pdf/1978/BOE-A-1978-31229-consolidado.pdf

Council Directive 85/374/EEC of 25 July 1985 on the approximation of the laws, regulations and administrative provisions of the Member States concerning liability for defective products (1985) DOCE 210 § 80678

Gobierno de España. Decreto-ley 2/2023, de 8 de marzo, de medidas urgentes de impulso a la inteligencia artificial en Extremadura. [Internet]. Boletín Oficial del Estado, 10 de marzo de 2023, núm. 48. Disponible en: https://www.boe.es/diario_boe/txt.php?id=BOE-A-2023-8795

Gobierno de España. Ley Orgánica 5/1992, de 29 de octubre, de Regulación del tratamiento automatizado de los datos de carácter personal. [Internet]. Boletín Oficial del Estado, 31 de octubre de 1992, núm. 262. [Consultado 15 de febrero de 2023]. Disponible en: https://www.boe.es/eli/es/lo/1992/10/29/5

World Medical Association (1975) Declaración de Helsinki. Principios éticos para las investigaciones médicas en seres humanos. Tokio-Japón: World Medical Association

European Commission (2018) Communication from the Commission to the European Parliament, the European Council, the Council, the European Economic and Social Committee and the Committee of the Regions. Coordinated Plan on Artificial Intelligence. COM/2018/795

Directive 2002/58/EC of the European Parliament and of the Council of 12 July 2002 concerning the processing of personal data and the protection of privacy in the electronic communications sector (Directive on privacy and electronic communications)

European Commission (2018) Communication from the Commission. Artificial Intelligence for Europe (COM/2018/237)

European Parliament legislative resolution of 16 September 2020 on the draft Council decision on the system of own resources of the European Union (10025/2020 – C9- 0215/2020 – 2018/0135(CNS))

ISO 8373:2012 (2012) Robots and robotic devices — Vocabulary

Ricart, J, Van Roy, V, Rossetti, F, Tangi, L (2022) AI Watch. National strategies on Artificial Intelligence: A European perspective. Publications Office of the European Union, https://doi.org/10.2760/385851

Ley 15/2022, de 12 de julio, integral para la igualdad de trato y la no discriminación, Spanish Official Gazette 167 § 11589

Ley Orgánica 3/2018, de 5 de diciembre, de Protección de Datos Personales y garantía de los derechos digitales, Spanish Official Gazette 294 § 16673

Lorenzo Aparici MOD (2022) La telemedicina y el futuro Código Deontológico Médico

Miailhe N, Hodes C, Jeanmaire C, Buse Çetin R, Lannquist Y (2020) Geopolítica de la inteligencia artificial. Política Exter 34:56–69

Ministerio de asuntos económicos y transición digital (2020) Estrategia Nacional de Inteligencia Artificial

Moreno Olivares, S (2020) Control descentralizado para la resolución de conflictos en la navegación con múltiples robots móviles

Mostenau, NR (2020) Artificial Intelligence and Cyber Security–A Shield against Cyberattack as a Risk Business Management Tool–Case of European Countries, Quality-Access to Success, 21 (175)

Proposal for a regulation of the European Parliament and of the council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain union legislative acts (2021) COM/2021/206

Propuesta de actualización del Código deontológico de la enfermería española (2022)

Real Decreto Legislativo 1/2007, de 16 de noviembre, por el que se aprueba el texto refundido de la Ley General para la Defensa de los Consumidores y Usuarios y otras leyes complementarias (2007) BOE 287 § 20555

Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016, on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation) DOUE 119 § 1

Rome Call for AI Ethics (2020) Available at http://www.academyforlife.va/content/pav/en/events/intelligenza-artificiale.html

Rull, AA (2011) El Proyecto de Ley integral para la igualdad de trato y la no discriminación. InDret

STC Roj: STC 254/1993-ECLI:ES:TC:1993:254, (Tribunal Constitucional, de 20 de julio de 1993)

STC Roj: STC 290/2000-ECLI:ES:TC:2000:290, (Tribunal Constitucional, de 30 de noviembre de 2000)

STC Roj: STC 14/2003-ECLI:ES:TC:2003:14, (Tribunal Constitucional, de 28 de enero de 2003)

STS Roj: STS 818/2022 – ECLI:ES:TS:2022:818 (Tribunal Supremo, 23 de febrero de 2022)

Tsang L, Kracov DA, Mulryne J, Strom L, Perkins N, Dickinson R, Wallace VM, Jones B (2017) The impact of artificial intelligence on medical innovation in the European Union and United States. Intellect Prop Technol Law J 29(8):3–11

Vakkuri V, Kemell KK, Kultanen J, Abrahamsson P (2020) The current state of industrial practice in artificial intelligence ethics. IEEE Softw 37(4):50–57

White paper On Artificial Intelligence - A European approach to excellence and trust (2020) European Commission

Author information

Contributions

ÓAM, MJB, DLW, and BH contributed to the study conception. Data collection and analysis were performed by ÓAM, MJB, DLW, and BH. The first draft of the manuscript was written by ÓAM and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Informed consent

This article does not contain any studies with human participants performed by any of the authors.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Molina, Ó.A., Bernal, M.J., Wolf, D.L. et al. What is Spanish regulation on the application of artificial intelligence to medicine like?. Humanit Soc Sci Commun 11, 94 (2024). https://doi.org/10.1057/s41599-023-02565-2

DOI: https://doi.org/10.1057/s41599-023-02565-2