Abstract

The purpose of this research is to identify and evaluate the technical, ethical and regulatory challenges related to the use of Artificial Intelligence (AI) in healthcare. The potential applications of AI in healthcare seem limitless and vary in nature and scope. They range from AI-based diagnostic algorithms to care management through the automation of specific manual activities to reduce paperwork and human error, and they raise issues of privacy, research, informed consent, patient autonomy, accountability, health equity and fairness. The main challenges faced by states in regulating the use of AI in healthcare were identified, especially legal voids and the complexities that hinder adequate regulation and greater transparency. A few recommendations were made to protect health data, mitigate risks and regulate the use of AI in healthcare more efficiently through international cooperation and the adoption of harmonized standards under the World Health Organization (WHO), in line with its constitutional mandate to regulate digital and public health. European Union (EU) law can serve as a model and guidance for the WHO in reforming the International Health Regulations (IHR).


Introduction

Regulating the use of AI in healthcare (Bouderhem 2022, 2023) and addressing its challenges at the national, regional and international levels is a complex and crucial topic. AI systems have the potential (Davenport and Kalakota 2019) to improve health outcomes, enhance research and clinical trials, facilitate early detection and diagnosis for better treatment, and empower both health workers and patients, who can rely on health monitoring in remote areas or developing countries. However, AI also poses ethical, legal and social risks (Jiang et al. 2021) relating to data privacy, algorithmic bias, patient safety and environmental impact (Stahl et al. 2023). The WHO published two reports on the use of AI systems in healthcare, in 2021 and 2023 respectively (WHO 2021, 2023). These reports outline key considerations and principles for the ethical and responsible use of AI systems. AI for health should indeed be designed and used in a way that respects human dignity, fundamental rights and values, and AI systems should promote equity, fairness, inclusiveness and accountability. The WHO's reports also highlight the challenges and the legal and ethical gaps that exist today regarding AI for health. There is currently a lack of harmonization and coordination between states and key stakeholders, with few harmonized standards in areas such as data privacy. Regulatory authorities find it extremely difficult to keep up with the rapid pace of innovation; AI models and generative AI such as ChatGPT are a clear illustration, as their impact on healthcare systems is uncertain and difficult to predict. The WHO considers that capacity building and collaboration among different sectors and regions are needed, and it is trying to address these challenges by developing a global framework for the governance of AI systems for health.
The WHO also provides technical assistance and support for the implementation of its principles and recommendations at the national and regional levels. It encourages the development of innovative and inclusive approaches to the regulation of AI for health, such as co-regulation, self-regulation (Schultz and Seele 2023) and adaptive regulation, that can balance the benefits and risks of AI and foster trust and confidence among the public and the health sector. However, these rules are only guidance for WHO Members and do not create any legal obligations. Therefore, the WHO, as the appropriate authority to monitor global health and, specifically, digital health, should adopt legally binding rules on AI in healthcare. The International Health Regulations (IHR), adopted by the 58th World Health Assembly in 2005 through World Health Assembly (WHA) Resolution 58.3 (WHO, IHR 2005), should be amended to reflect the current state of AI systems in healthcare. The importance of regional regulations such as EU regulations should not be minimized either (Bourassa Forcier et al. 2019). The EU General Data Protection Regulation (GDPR) (EU Official Journal 2016), the Data Act (EU Commission 2022a) and the Artificial Intelligence (AI) Act (EU Commission 2021) could serve as legal models for WHO Members in adopting new legally binding rules for ethical and responsible AI systems in healthcare. The objective of the EU Data Act is to harmonize rules on fair access to data and its use by public and private actors. Like the GDPR before it, the EU Data Act will help patients keep control over their health data more efficiently. EU authorities have proposed a comprehensive legal framework for the regulation and promotion of ethical and responsible AI systems in healthcare and other fields. Such AI systems should be based on the principles of human-centricity, trustworthiness and sustainability (Sigfrids et al. 2023).
The AI Act aims to ensure that AI systems are safe and reliable and respect fundamental rights such as the right to privacy; fostering innovation and competitiveness is another fundamental objective for EU authorities. The AI Act also aims to enhance cooperation and coordination among EU Member States and stakeholders, and its legal framework takes into account the global nature of AI. The AI Act is expected to promote the EU's leadership and influence in international data protection regulation, as was the case with the GDPR, which inspired several regions and countries (Bentotahewa et al. 2022). Expectations for the AI Act are very high, as observers believe that the new regulation will provide legal certainty and trust for all AI stakeholders. A provisional agreement was reached on 8 December 2023 (European Parliament 2023), suggesting that the AI Act could enter into force in 2024. On 24 January 2024, the European Commission adopted a decision establishing the European Artificial Intelligence Office, which is intended to become a key body responsible for overseeing ‘the advancements in artificial intelligence models, including as regards general-purpose AI models, the interaction with the scientific community, and [which] should play a key role in investigations and testing, enforcement and have a global vocation’ (EU Commission 2024). On 2 February 2024, the AI Act was unanimously approved by the Council of EU Ministers (EU Council 2024). On 13 February 2024, the AI Act passed a further legislative stage when the compromise text agreed between the European Commission, the Council of EU Ministers and the European Parliament was approved by the Parliament's Committee on Internal Market and Consumer Protection and Committee on Civil Liberties, Justice and Home Affairs, sitting jointly (European Parliament 2024).
The AI Act must now receive final approval from the European Parliament in a plenary session scheduled for 10 and 11 April 2024 (Gibney 2024). EU regulations usually provide for a transitional period of around two years between their entry into force and their application, which means that the AI Act should be fully applicable by 2026. Stakeholders should nevertheless start preparing for compliance now, as the regulation sets out different levels of risk with corresponding obligations. Regarding the need to adopt new, harmonized and legally binding rules for AI in healthcare, it can be argued that states have a general obligation to cooperate under the United Nations (UN) Charter, including in health matters. Therefore, the WHO should be granted coercive powers to ensure compliance with the IHR, which states parties need to amend to take into account the implementation of AI in healthcare.

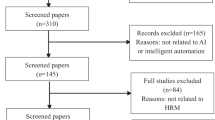

Methodology

This research focuses on publicly available data up to February 2024. The data and articles collected were first screened by title and abstract, and the full texts of eligible articles were then evaluated. Using the same search query, a gray literature search was performed in English on the Google Scholar search engine, retrieving articles on the use of AI in healthcare with particular attention to regulations, policies and guidelines implemented by the EU or the WHO. I also searched for articles on concrete applications of AI in healthcare to determine the technical, legal and ethical challenges posed by AI. Finally, I searched the WHO's institutional repositories for additional information. I combined the results from these sources to show that the current legal framework applicable to the use of AI in healthcare, which consists mostly of soft-law rules, is insufficient, emphasizing the need to adopt new legally binding rules under the WHO. AI-generated medical advice, such as that produced by GPT-based chatbots, illustrates a concrete threat to patients' safety (Haupt and Marks 2023). Therefore, the international community should prioritize a coordinated, global response under the auspices of the WHO. This would ensure that all WHO Members are legally bound by the same international standards and best practices, as there is currently no universal agreement on the use of AI in healthcare.

Concrete applications of AI in healthcare

As discussed previously, AI in healthcare encompasses a broad range of opportunities and applications. AI systems and generative AI can improve health outcomes, efficiency and quality of care. The main purpose of such innovative applications and digital health tools is to enhance patients' experience and democratize access to healthcare worldwide, in line with the UN Sustainable Development Goals (SDGs). If used correctly, AI systems could help reduce human bias (Abbey 2023). Some concrete examples of AI in healthcare are given in a non-exhaustive list (see Table 1) in an effort to delineate the scope of the study and inform the elaboration of a comprehensive legal framework for AI regulation.

The use of AI in healthcare will offer better patient care and reduce costs (Sunarti et al. 2021). AI can also reduce errors caused by human negligence, for instance, and the innovation provided by AI models is expected to improve care management (Klumpp et al. 2021).

Regarding medical imaging analysis, it has been demonstrated that AI systems can help radiologists and other medical professionals interpret images from computed tomography (CT), magnetic resonance imaging (MRI), ultrasound, and other modalities (Hosny et al. 2018). AI can detect anomalies, measure features, and provide diagnoses based on the images. For example, AI can help diagnose lung cancer from chest X-rays or brain tumors from MRI scans (Chiu et al. 2022).

Researchers and pharmaceutical companies are relying on AI for the discovery and development of new drugs, and AI systems can also be used for drug repurposing (Paul et al. 2021). AI systems can indeed analyze considerable amounts of data (Quazi 2022) from genomic (Chafai et al. 2023), molecular and clinical sources, which allows them to generate novel hypotheses and predictions. AI has already been used for drug repurposing or repositioning in precision medicine. Researchers have discussed how AI systems could help repurpose or reposition existing drugs, design new drug molecules or identify potential treatments, for instance for COVID-19 (Zhou et al. 2020; Mohanty et al. 2020; Floresta et al. 2022).

AI can help predict the risk of chronic kidney disease and its progression in patients (Zhu et al. 2023; Schena et al. 2022). By processing large amounts of health data from electronic health records, lab tests, and other sources, AI systems can identify risk factors and provide personalized recommendations. Researchers have been exploring the use of AI in the detection of chronic kidney disease as AI systems can help forecast kidney function decline or prevent acute kidney injury (Tomašev et al. 2019).

Another concrete application of AI is cancer research and treatment (Chen et al. 2021). AI can help both researchers and cancer patients in different areas of cancer care, such as diagnosis, prognosis, medical treatment and follow-up. Under Horizon Europe, the EU's research and innovation programme for the period 2021–2027, the European Health and Digital Executive Agency (HaDEA) launched several research projects using AI to improve cancer treatment and patients' quality of life (HaDEA 2023). Researchers have used AI to enhance cancer treatment and predict lung cancer prognosis (Johnson et al. 2022). AI can also analyze genomic, histopathological and clinical data to provide insights and guidance, and AI systems can be used as new diagnostic tools in digital oncology (Bera et al. 2019). Other illustrations in cancer care are the use of AI for breast cancer analysis (Shah et al. 2022) and in radiation oncology (Huynh et al. 2020).

Precision medicine is another field where researchers use AI extensively, as it can help deliver personalized care and advice to each patient. As researchers have shown, ‘[P]recision medicine methods identify phenotypes of patients with less-common responses to treatment or unique healthcare needs’ (Johnson et al. 2021). In a recent study, researchers described their ‘vision for the transformation of the current health care from disease-oriented to data-driven, wellness-oriented and personalized population health’ (Yurkovich et al. 2023). Another important illustration is the use of AI to predict drug response or optimize drug dosing in epilepsy (de Jong et al. 2021).

As discussed, the use of AI in healthcare seems limitless. AI can make a positive impact in telemedicine, mental health, public health and health education. However, numerous challenges need to be tackled through coordinated action and effective regional and international cooperation between states.

Challenges posed by the use of AI in healthcare

There are several challenges (see Table 2 above) posed by the use of AI in healthcare, ranging from health equity, fairness and access to healthcare, through technical issues (Devine et al. 2022) such as the development of AI-based diagnostic algorithms, to ethical and regulatory gaps.

It is necessary to look beyond the hype and assess the pros and cons of AI in healthcare today. AI in healthcare poses new challenges such as bias (Parikh et al. 2019) or accountability in situations where patients' medical reports have been shared or stolen (Naik et al. 2022). The technical concept of AI is also a source of controversy, and a clear and precise definition remains difficult to establish, although researchers believe that the potential of AI to boost the economy is considerable (Kundu 2021). The AI Act proposal is the first comprehensive attempt to legally regulate AI, but European authorities and the stakeholders involved in consultations struggled to agree on a definition of AI, as many different disciplines are affected (Ruschemeier 2023). Research (Lau et al. 2023) and recent regulatory challenges, such as the surge of ChatGPT (GPT-4) as a chatbot (Meskó and Topol, 2023) potentially used in medicine (Lee et al. 2023) in ways that may threaten public health (De Angelis et al. 2023), support my hypothesis that the use of AI systems in healthcare is not adequately regulated (Loh 2023). Data accuracy (Azodo et al. 2020) is another concern acknowledged by all stakeholders, as physicians and lay people need precise data to be able to rely on it and monitor their health (Smith et al. 2023). Data security (Dinh-Le et al. 2019) and privacy (Banerjee et al. 2018) are other crucial challenges to be addressed, and inaccurate data (Xue 2019) is an important obstacle to health monitoring. From a scientific perspective, the use of AI (Sui et al. 2023) in health research may be limited, as inaccurate data can lead to errors and misdiagnosis. Developers need to design algorithms that take into account a wide range of situations and all groups of a given population, since biased AI algorithms can cause discrimination and misleading predictions.
Some authors have proposed an Ethics Framework for Big Data in Health and Research to ensure that best practices and international standards are implemented efficiently when developing AI models (Xafis et al. 2019; Lysaght et al. 2019). Another crucial point for developers is to implement performance indicators to measure AI success (Chen and Decary, 2020), as such indicators allow healthcare providers to detect errors or potential biases in AI models and algorithms that could lead to medical malpractice liability (Banja et al. 2022) or raise issues related to the ethical design of pathology AI studies (Chauhan and Gullapalli 2021). The same applies to AI as a medical device: here, the scope of performance transparency and accountability has to be clearly defined by a set of rules (Kiseleva 2020). As noted by some authors, ‘personalization of care, reduction of hospitalization, and effectiveness and cost containment of services and waiting lists are benefits unquestionably linked to digitalization and technological innovation but that require a review of the systems of traceability and control with a revolution of traditional ICT systems’ (Dicuonzo et al. 2022). Traditional ICT systems need to evolve drastically to allow healthcare systems to rely extensively on AI and robotics. Data analysis has become a fundamental skill, as consolidated data from existing fragmented sources has to be transformed into valuable information for business decision-makers. However, the use of computational models and AI algorithms in healthcare poses new technical risks and problems, such as fairness (Chen et al. 2023), which need to be carefully addressed by sufficient and adequate regulation. Some authors recommend applying quality improvement methodologies before developing AI models and algorithms: ‘[A]ligning the project around a problem ensures that technology and workflows are developed to address a genuine need’ (Smith et al. 2021).
Such an approach helps ensure that AI is efficient and that potential errors have been addressed by all stakeholders, such as developers and physicians; AI algorithms in healthcare should be constantly updated and monitored (Feng et al. 2022). The methodologies and protocols elaborated by developers should give priority to patient safety, as ‘the technological concerns (i.e., performance and communication feature) are found to be the most significant predictors of risk beliefs’ (Esmaeilzadeh 2020). Issues related to patients' behaviors and perceptions also need to be addressed, as patients may be reluctant to rely solely on AI devices, for instance for diabetes detection. As pointed out by some authors, AI is today in a renaissance phase thanks to the successful application of deep learning (Yu et al. 2018). Another challenge that researchers have to tackle is the affordability of AI-enabled systems (Ciecierski-Holmes et al. 2022): the financial cost of AI has to remain limited and reasonable. Some authors have also argued that AI ethics should be part of the medical school curriculum offered to future practitioners (Katznelson and Gerke 2021). States need to adopt specific regulations, and all stakeholders involved in this new healthcare system based on computational models have to elaborate a new ‘AI delivery science’ with dedicated protocols, process improvement, machine learning models, design thinking and adequate tools (Li et al. 2020).
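As an illustration of the kind of performance indicator discussed above, the following Python sketch computes a model's sensitivity (true-positive rate) separately for each patient group and flags the model when the gap between the best- and worst-served groups exceeds a threshold. It is a minimal sketch under stated assumptions, not the method of the cited authors: the record format, the group labels and the 0.1 threshold are hypothetical choices for illustration.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Sensitivity (true-positive rate) for each group.

    `records` is an iterable of (group, y_true, y_pred) tuples with binary
    labels (1 = condition present). This tuple layout is a hypothetical
    format chosen for the sketch. Groups without positive cases are omitted
    because their sensitivity is undefined.
    """
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}


def flags_disparity(rates, max_gap=0.1):
    """True if best and worst group sensitivities differ by more than max_gap.

    The default threshold of 0.1 is an illustrative assumption, not a standard.
    """
    if len(rates) < 2:
        return False
    return max(rates.values()) - min(rates.values()) > max_gap


if __name__ == "__main__":
    # Toy records: (group, true label, model prediction)
    records = [
        ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
        ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
    ]
    rates = sensitivity_by_group(records)
    print(rates)                   # group A about 0.67, group B about 0.33
    print(flags_disparity(rates))  # True
```

Which metric matters (sensitivity, specificity, calibration) and which groups should be compared are clinical and regulatory choices; the sketch only shows the mechanics of a per-group check.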

Explainability (Holzinger et al. 2019) is also an important challenge. AI systems are criticized for their opacity (Durán and Jongsma 2021), as observers and researchers do not know how these ‘black boxes’ reach their results or decisions. Public confidence in digital health could be threatened, as individuals may be reluctant to rely on such services. This situation raises trustworthiness, reliability and ethical issues in a field as sensitive as healthcare. It is fundamental for healthcare providers and patients to clearly understand how AI systems work. The limitations and uncertainties of AI systems have to be determined to allow patients to make informed decisions and give informed consent, including in emergency medicine (Iserson 2024). Explainable AI methods (Nauta et al. 2023) can provide greater transparency (Hassija et al. 2023) and accountability (Arrieta et al. 2020) for responsible AI systems.

Data quality and availability are also key issues (Sambasivan et al. 2021). As mentioned, AI systems rely on large amounts of data to learn and perform different tasks. Collecting, processing and sharing health data can be extremely difficult, not only because of its sensitive and confidential nature but also because of ethical challenges and privacy regulations such as the GDPR or the Health Insurance Portability and Accountability Act (HIPAA) 1996 (Edemekong et al. 2023). In addition, health data can be biased (Grzybowski et al. 2024), which affects the performance and fairness (Wu 2024) of AI systems. Healthcare providers need to ensure that all data used by AI systems is representative (Suárez et al. 2024) of a given population, accurate and secure.
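A representativeness check of the kind described above can be sketched by comparing each group's share of a training dataset with its share of the reference population. The following Python sketch assumes that a group label is available for every record and that reference population shares are known; the group names, the shares and the 5-percentage-point tolerance are hypothetical values chosen for illustration.

```python
from collections import Counter


def representation_gaps(dataset_groups, population_shares):
    """Dataset share minus reference population share, for each group.

    Negative values mean the group is under-represented in the dataset.
    Groups present in the dataset but absent from `population_shares`
    are ignored.
    """
    counts = Counter(dataset_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    return {
        group: counts[group] / total - share
        for group, share in population_shares.items()
    }


def underrepresented(gaps, tolerance=0.05):
    """Groups whose dataset share falls short of the population share by more than `tolerance`."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


if __name__ == "__main__":
    # Hypothetical training set: 80 urban and 20 rural patients,
    # drawn from a population assumed to be 60% urban and 40% rural.
    dataset = ["urban"] * 80 + ["rural"] * 20
    gaps = representation_gaps(dataset, {"urban": 0.6, "rural": 0.4})
    print(underrepresented(gaps))  # ['rural']
```

A check like this only addresses one dimension of representativeness; accuracy and security of the data, also mentioned above, need separate controls.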

Implementation and adoption (Mouloudj et al. 2024) constitute a challenge for public authorities. AI systems can be important additions to healthcare systems worldwide, but they need to be integrated wisely and consistently into existing healthcare systems. This integration can face technical and organizational barriers in developing countries where healthcare is little or not at all digitalized. As demonstrated, some countries may need a digital revolution (Nithesh et al. 2022) to achieve noticeable results in the implementation of AI systems in healthcare, since AI systems require appropriate technical resources and trained professionals. Awareness campaigns and educational programs are needed to address fears (Scott et al. 2021) and potential resistance from both healthcare employees (Abdullah and Fakieh 2020), who may believe that AI will replace them (Loong et al. 2021), and patients, who may believe that AI systems could harm them. This is part of building public confidence and trust in AI systems (Kumar et al. 2023a).

AI regulation and governance are of paramount importance (Zhang and Zhang 2023). AI systems in healthcare need to comply with various laws and policies that regulate their design, development, deployment and evaluation. However, the current regulatory frameworks may not be sufficient or appropriate for the fast-paced and complex nature of AI. More collaboration and dialogue are needed among stakeholders, such as governments, regulators, developers, healthcare providers and patients, to establish clear and consistent standards and guidelines for AI in healthcare, for instance regarding the use of ChatGPT, which lacks regulation (Wang et al. 2023). Data privacy (Kapoor et al. 2020) illustrates the need for regulation, as health data is sensitive and confidential by nature (da Silva 2023). All stakeholders have to coordinate their efforts to find a consensus and an acceptable balance between regulation and innovation (Thierer 2015). Technical, ethical and regulatory challenges such as data collection (Huarng et al. 2022), data quality, security (Barua et al. 2022), interoperability between different information systems (Lehne et al. 2019), health equity and fairness (Canali et al. 2022) need to be addressed. Concrete regulations should be developed, covering for instance quality standards, conditions of access to health data, interoperability and representativeness. Most importantly, compliance with key regulations such as the GDPR, HIPAA, the AI Act or the Data Act is a requirement. Self-regulation should also be encouraged, as it will help build public confidence in AI-based applications that process large volumes of personal data. Companies operating in this field are making efforts (Chikwetu et al. 2023) and want to be seen as actors that care about personal health data and how it is processed, stored and shared. Guidelines and voluntary codes of conduct developed by the private sector are concrete illustrations (Paul et al. 2023).
Despite these challenges, AI is an opportunity, as it could become a substantial addition to everyday healthcare practice (Powell and Godfrey 2023). AI could save lives by allowing healthcare providers to adjust to patients' needs and situations; it can also be an important tool for people living in remote areas or far from hospitals or physicians (Canali et al. 2022). There is today a global interest in the development of AI-based solutions in a wide range of fields, from education to healthcare; this trend suggests that individuals are now ready to embrace AI, which could help monitor people's health (Loucks et al. 2021). However, a balance between the use of AI and data privacy is a necessity from a regulatory and ethical perspective (Boumpa et al. 2022). Different measures can be adopted to ensure privacy and global public health security.

Ensuring the privacy of personal health data

Different measures can be taken to ensure the privacy and security of personal health data (Pirbhulal et al. 2019). All stakeholders – regulatory authorities, companies, healthcare providers – have to ensure patient privacy and data confidentiality (see Table 3 below).

It has been demonstrated (Hughes-Lartey et al. 2021) that most data breaches are attributable to human error. Healthcare institutions should therefore provide adequate training and education to their personnel, and employees have to be well aware of all the risks and security issues associated with the processing of personal health data. Regular risk assessments are a requirement (Khan et al. 2021), as they can help identify the intrinsic weaknesses of a healthcare institution, such as exposure to data security breaches, and help resolve them. Personal health data can also be protected with a virtual private network (VPN) (Prabakaran and Ramachandran 2022), which encrypts network traffic and masks users' digital footprint; this could help healthcare institutions protect themselves from data breaches and cyber-attacks such as ransomware. Access to patients' health records has to be restricted to certified personnel (Javaid et al. 2023) for better data security and confidentiality, and healthcare institutions could strengthen authentication, for instance with two-factor authentication. Given the confidential and sensitive nature of health data, healthcare providers should implement role-based access control systems (Saha et al. 2023), under which employees only have access to the specific system level assigned to them.
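The role-based access control principle described above can be sketched as a mapping from roles to permissions, combined with a check that a second authentication factor has been completed. This Python sketch is hypothetical: the roles, the permission names and the `authorize` function are illustrative assumptions and do not reflect any particular hospital system or product. Access is denied by default, so an unknown role receives no permissions.

```python
from enum import Enum


class Permission(Enum):
    READ_CLINICAL_RECORD = "read_clinical_record"
    WRITE_CLINICAL_RECORD = "write_clinical_record"
    READ_BILLING = "read_billing"


# Hypothetical role-to-permission mapping: each role gets only what it needs.
ROLE_PERMISSIONS = {
    "physician": frozenset({Permission.READ_CLINICAL_RECORD,
                            Permission.WRITE_CLINICAL_RECORD}),
    "nurse": frozenset({Permission.READ_CLINICAL_RECORD}),
    "billing_clerk": frozenset({Permission.READ_BILLING}),
}


def authorize(role, permission, second_factor_verified):
    """Grant access only if the user completed two-factor authentication
    and the user's role includes the requested permission."""
    if not second_factor_verified:
        return False
    # Unknown roles fall back to an empty set, i.e. deny by default.
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


if __name__ == "__main__":
    print(authorize("nurse", Permission.READ_CLINICAL_RECORD, True))          # True
    print(authorize("nurse", Permission.WRITE_CLINICAL_RECORD, True))         # False
    print(authorize("billing_clerk", Permission.READ_CLINICAL_RECORD, True))  # False
```

Keeping the mapping in a single place makes it easier to audit which roles can reach patients' records, which supports the regular risk assessments mentioned above.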

In the US, the Health Insurance Portability and Accountability Act (HIPAA) 1996 regulates health data and ensures its security and confidentiality. For instance, when physicians provide their patients with health devices relying on AI, the data collected is considered protected health information (PHI). Under US federal regulations, all data collected, processed and shared must be protected and secured at all times (Jayanthilladevi et al. 2020). Companies commercializing AI-based solutions for healthcare should address data privacy and security issues first if they are to be reliable partners for healthcare providers. This could be achieved through the adoption of international standards, for instance for AI-based devices used in sport that process large amounts of personal data (Ash et al. 2021). Health data privacy requires not only built-in security features but also guarantees that the network, as well as the third-party applications available on the App Store or Google Play, are safe. Transparency (Kapoor et al. 2020) is a key aspect of data privacy, as users should know who can access their data, whether a third party or the healthcare provider itself. Here, some gaps exist in the US legal framework applicable to health data and its handling. Indeed, HIPAA applies only to health data held by covered entities, such as healthcare providers and health plans, and their business associates, and not to all services or solutions available on the market today, such as OpenAI's ChatGPT, which can also collect health data. However, US authorities could provide a regulatory answer if such companies start dealing with health data and promoting their products as health devices or solutions.

The complexity to regulate AI systems

The regulation (Iqbal and Biller-Andorno 2022) of AI in healthcare is a complex issue but potential solutions exist (see Table 4 below).

As stated, there is a need for clear guidelines and standards (Espinoza et al. 2023) to ensure that AI is used to build better healthcare systems worldwide based on the principles of fairness and health equity. National, regional and international guidelines and recommendations should be as detailed as possible, taking into account important challenges such as accuracy, transparency, security, informed consent and data privacy, as well as ethics (Leese et al. 2022) in the use of collected health data (Taka 2023). Unauthorized access by third parties is also an ethical issue and a violation of data privacy and informed consent (Segura Anaya et al. 2018). Cybersecurity threats need to be tackled as well, and self-adaptive AI systems could be a solution (Radanliev and De Roure 2022). Public authorities will need to create new regulatory bodies or give new powers and attributions to existing watchdogs (Korjian and Gibson 2022). Through audits and inspections, regulatory bodies such as the Food and Drug Administration (FDA) in the US and the Medicines and Healthcare products Regulatory Agency (MHRA 2022) in the UK play a crucial role by monitoring all stakeholders and ensuring that they comply with their obligations in terms of privacy, efficiency, safety and quality. Promoting transparency and accountability (Tahri Sqalli et al. 2023) is fundamental, as tech companies know that they might face severe consequences, such as financial sanctions, because of their data-sharing (Banerjee et al. 2018) practices. They should also be held accountable for any breach of data privacy or security. Self-regulation should be encouraged, as codes of conduct can help promote international standards in areas such as data protection (Winter and Davidson 2022). As mentioned, states and international organizations need to cooperate, harmonize their national regulations and promote the safe and ethical use of AI systems (Colloud et al. 2023).

EU law today offers detailed rules and guidelines on privacy and the handling of personal data. The GDPR is indeed a key regulation and a legal model that offers a comprehensive framework with stringent obligations and duties for service providers and manufacturers (Mulder and Tudorica 2019). More recently, the European Commission made a proposal (EU Commission 2022b) for a European Data Act to adequately regulate data that is processed, stored or shared, including health data. In June 2023, the Council presidency and the European Parliament reached a provisional agreement on the European Data Act (EU Council 2023a). On 9 November 2023, the European Parliament adopted the text of the European Data Act (European Parliament 2023), and on 27 November 2023, the Council formally adopted it (EU Council 2023b). The last step in the process took place on 22 December 2023, when Regulation (EU) 2023/2854 of the European Parliament and of the Council of 13 December 2023 on harmonised rules on fair access to and use of data and amending Regulation (EU) 2017/2394 and Directive (EU) 2020/1828 (Data Act) was published in the Official Journal of the European Union (Regulation (EU) 2023). The Data Act entered into force on 11 January 2024; however, its main provisions will only apply from 12 September 2025. As noted above, the Data Act aims to harmonize rules on fair access to data and its use by public and private actors and, like the GDPR, will help patients keep control over their health data more efficiently. It could also serve as a guideline or legal model for the rest of the world and enshrine key international standards relating to health data privacy and security. The AI Act is another fundamental addition to the EU legal framework, as it deals with AI explicitly.
This EU Regulation is the world's first comprehensive AI law, reflecting the wish of European authorities to establish clear rules and guidelines for the development and implementation of AI. The European Commission proposed the first EU regulatory framework for AI in April 2021 and, after years of debate, the regulation was expected to enter into force in April 2024, as explained. The proposal acknowledges the potential of AI in our daily lives and notes that AI systems can be used in applications as varied as healthcare, education and transportation. The main advantages of AI are affordability and better services through democratized access to vital sectors. However, the European Commission also noted that AI is not free of threats and risks to users. To this effect, the Commission classified AI systems by level of risk, with riskier systems subject to stricter regulation. The AI Act thus establishes different rules for service providers depending on the level of risk posed by the AI system. The most stringent rules apply to AI-based solutions posing the greatest threats, for instance to privacy or confidentiality; depending on the level of risk, these rules range from an outright ban to compliance with key standards and obligations imposed on service providers (see Table 5 above for a summary).

At the multilateral level, in October 2023 the WHO released a new publication listing key regulatory considerations on AI for health (see Table 6 above). The WHO emphasizes the importance of establishing the safety and effectiveness of AI systems, rapidly making appropriate systems available to those who need them, and fostering dialogue among stakeholders, including developers, regulators, manufacturers, health workers and patients.

The first principle – autonomy – ensures that human values and rights are respected and that people can make informed decisions about their health and well-being. The second principle – safety – ensures that AI systems are reliable and robust and do not cause harm or errors. The third principle – transparency – ensures that AI systems are understandable, explainable and accountable, and that their limitations and uncertainties are communicated. Responsibility, the fourth principle proposed by the WHO, ensures that AI systems are designed, developed and deployed in a responsible and ethical manner, and that mechanisms for oversight and redress exist where needed, for instance through the establishment of dedicated watchdogs. The fifth principle – equity – ensures that AI systems are inclusive and accessible and do not discriminate or exacerbate existing inequalities. The last principle – sustainable AI – ensures that AI systems are environmentally and socially sustainable and aligned with the health needs and priorities of the population.

The WHO developed these principles to help states and regulatory authorities develop new guidance and regulations, or adapt existing ones, on AI at national or regional level. The WHO's main purpose is to provide a framework for determining and assessing the benefits and risks of AI for healthcare. The WHO has also drawn up a checklist for evaluating the quality and performance of AI systems. The WHO acknowledges the potential of AI in healthcare but notes that AI systems face many challenges, such as unethical data collection, cybersecurity threats, biases and misinformation. The WHO therefore calls for better coordination and cooperation between states and all stakeholders to ensure that AI increases clinical and medical benefits for patients. These principles can help all stakeholders develop ethical and responsible AI systems based on five different themes (see Table 7 below).

Complying with core principles – human autonomy, promoting safety, ensuring transparency, fostering responsibility (Trocin et al. 2023), ensuring equity (Gurevich et al. 2023), and promoting sustainability (Vishwakarma et al. 2023) – can help align AI systems with human values and human rights (Kumar and Choudhury 2023b), such as the right to privacy or the right to health. When developing AI systems, stakeholders need to assess trade-offs and impacts across various aspects, such as data quality and availability, regulation and governance, implementation and adoption, and accountability (Andersen et al. 2023). These aspects can affect the performance (Farah et al. 2023), reliability (Yazdanpanah et al. 2023) and trustworthiness (Albahri et al. 2023) of AI systems, and they require careful evaluation and management. AI ethics and responsibility can also be developed through collaboration and dialogue (Tang et al. 2023) among all stakeholders, fostering inclusiveness, participation and responsiveness to the needs and preferences of different groups. A true 'AI culture' can be built through awareness campaigns, training and education of all stakeholders involved in the development of AI systems (Jarrahi et al. 2023). By educating people (Alowais et al. 2023) about the risks associated with AI systems, we can achieve a stronger commitment to ethical and responsible AI systems. As mentioned, appropriate tools and methods such as risk mitigation (Harrer 2023), risk assessment (Schuett 2023), data privacy regulations (Dhirani et al. 2023) and monitoring tools (Prem 2023) can help implement ethical and responsible AI systems.

AI ethics and governance under the WHO

The WHO has a constitutional mandate to regulate global public health. Bump et al. note that: ‘WHO is a manifestation of the advantages of cooperation and collaboration, and it consistently leads member states in ways that uphold its mission to advance the highest standard of health for all. In the pandemic, WHO has shown leadership in sharing information and in co-launching the Access to COVID-19 Tools (ACT) Accelerator, a global collaboration to accelerate development and equitable access to diagnostic tests, treatments, and vaccines’ (Bump et al. 2021). The efforts made by the WHO during the COVID-19 pandemic could be replicated in digital health and in the use of AI systems. How can the international community address the risks associated with the use of AI in healthcare? What can the WHO do to guarantee equal access to new technologies and protect fundamental rights such as privacy (Murdoch 2021) and data protection? According to the WHO, “[e]quitable access to health products is a global priority, and the availability, accessibility, acceptability, and affordability of health products of assured quality need to be addressed in order to achieve the Sustainable Development Goals, in particular target 3.8” (WHO 2019). AI creates a situation unprecedented in interstate relations since the end of World War II in 1945. AI has become a crucial issue for the international community (Pesapane et al. 2021) and for developing countries (Wahl et al. 2018). However, the current legal framework applicable to global public health does not sufficiently protect personal data and privacy (Duff et al. 2021). A new paradigm is absolutely necessary to reshape global health and move towards a dedicated legal framework for AI in healthcare. This new paradigm would take the form of a global response through the implementation of legally binding rules on AI by WHO Members.
States Parties could use the IHR (2005) to improve their response to potential threats, such as threats to privacy. WHO Members could rely on the IHR and on EU regulations – GDPR, AI Act, Data Act – when negotiating new legally binding rules. One current limitation of the IHR is that bilateral and multilateral cooperation is merely encouraged. Health is traditionally recognized as a global public good (Chen et al. 2003), and it can therefore also be argued that AI systems constitute global public goods (Haugen 2020). As acknowledged by the WHO, all stakeholders should ensure that AI systems are rapidly made available to those who need them. Here again, a parallel can be drawn with the COVID-19 pandemic and the need to regulate global public health more effectively (Phelan et al. 2020). Considering AI systems (Banifatemi 2018) as global public goods would be in line with Goal 3 of the UN SDGs: ‘Ensure healthy lives and promote well-being for all at all ages’ (UN SDGs 2016).

Under the UN Charter, States Parties have a legally binding obligation to cooperate in all matters representing a threat to international peace and security, including economic and social matters (United Nations Charter 1945). This duty of cooperation is at the core of international law. Some authors argue that this obligation amounts to a hard law principle of international law (Delbrück 2012). The UN International Law Commission has listed the obligation to cooperate among states' obligations (Dire 2018). In support of this position, research and traditional concepts show that the obligation to cooperate is hard law in international water law (Oranye and Aremu 2021). We posit that this duty to cooperate should be transposed to the field of global public health and applied in situations where the use of AI in healthcare may pose new risks or threats to patients. AI should not create further inequalities. The UN General Assembly and the WHO could coordinate their actions to deliver a global response grounded in the duty to cooperate, which is a hard law principle. The WHO would then guide its Members and ensure that they comply with their obligations, such as implementing a defined set of international standards, in order to guarantee respect for fundamental rights and ensure the development of accessible, affordable and responsible AI systems. To this effect, the UN General Assembly may adopt a new Resolution that refers to the general duty to cooperate for an effective implementation of AI in healthcare and calls for a coordinated response led by the WHO. Efforts should also be made to amend the IHR, as amendments would create new obligations for states and a truly global response to mitigate the risks associated with the use of AI in healthcare.
The existing international legal framework suggests that the WHO can monitor the implementation of this general obligation to cooperate.

The WHO has no enforcement powers, and its inability to enforce its guidelines or to act on its own initiative reflects the shortcomings of international law. Legal tools do exist: written rules akin to codes of conduct allow states with gaps in their health regulations to adopt a number of international standards. However, this is only a mitigating measure that depends on the goodwill of states. The limitations of the WHO have been addressed by researchers (Youfa et al. 2006). The WHO has also been criticized by commentators for its ‘rather restrained role in creating new norms under its Constitution’ (von Bogdandy and Villarreal 2020). Although the WHO has recognized expertise and a constitutional mandate to regulate global health, and by extension AI systems for health, it only provides soft rules such as guidelines and recommendations to its Members. The WHO's reports released in 2021 and 2023 are examples of such non-legally binding rules. As noted by Gostin et al., ‘[t]he WHO’s most salient normative activity has been to create ‘soft’ standards underpinned by science, ethics, and human rights. Although not binding, soft norms are influential, particularly at the national level where they can be incorporated into legislation, regulation, or guidelines’ (Gostin et al. 2015). In international law, soft rules are necessary because they allow the international community to reach a consensus on certain matters and facilitate the adoption of a formal treaty. However, stronger responses are essential in situations where states decide not to cooperate actively with one another. Consequently, the WHO should be granted real normative power. The IHR have been considered a fundamental development in international law (Fidler and Gostin 2006). These regulations are an existing legal tool that could give the WHO significant powers to regulate AI systems in healthcare.
Article 2 of the IHR, one of its most important provisions, sets out the purpose and scope of the Regulations: ‘the purpose and scope of these Regulations are to prevent, protect against, control and provide a public health response to the international spread of disease in ways that are commensurate with and restricted to public health risks, and which avoid unnecessary interference with international traffic and trade’ (WHO IHR 2005). It is also worth referring to Part II of the IHR, ‘Information and Public Health Response’, in particular Articles 12 and 13 (WHO IHR 2005). These provisions give the WHO important powers, but they provide neither enforcement powers for situations where WHO Members refuse to cooperate nor coercive measures that can be taken against such states. The international community has acknowledged for decades that there is a link between international economic law and development (UN Conference on the Human Development 1972). The same should apply to global public health: economic and sustainable development cannot be achieved without fair access to healthcare and adequate regulation of AI systems. The duty to cooperate in health matters is a fundamental component of the new international economic order.

Conclusion

The current legal framework reveals the limitations of global public health governance and the somewhat limited role played by the WHO. This international organization was designed as the key player in the regulation of global public health, with legal tools negotiated over decades. However, it is time to acknowledge that a new paradigm is necessary given the emergence of tech companies expanding globally. There is indeed a shift in how we access healthcare and in how data can be processed, stored or shared. The WHO should be granted new coercive and normative powers to address issues (Council of Europe 2020) related to the use of AI in healthcare. AI should ultimately facilitate access to healthcare and provide better health systems (Santosh and Gaur 2021) in line with the UN SDGs, especially in the least developed countries (Wakunuma et al. 2020). States have to address the ethical and regulatory challenges posed by the novelty of AI systems in healthcare, such as bias, data protection and explainability. European regulations – GDPR, Data Act and AI Act – can provide reliable legal frameworks and established standards to be implemented by all stakeholders for ethical and responsible AI systems. WHO Members need to cooperate actively and elaborate new guidelines and legally binding rules under the IHR.

Data availability

All data analyzed during this study are included in this published article.

References

Abbey O (2023) Artificial Intelligence, Bias, and the Sustainable Development Goals, UN Science Policy Brief, May 2023. https://sdgs.un.org/sites/default/files/2023-05/A14%20-%20Abbey%20-%20Artificial%20Intelligence%20Bias.pdf

Abdullah R, Fakieh B (2020) Health Care Employees’ Perceptions of the Use of Artificial Intelligence Applications: Survey Study. J Med Internet Res 22(5):e17620, https://www.jmir.org/2020/5/e17620

Albahri AS, Duhaim AM, Fadhel MA, Alnoor A, Baqer NS, Alzubaidi L, Albahri OS, Alamoodi AH, Bai J, Salhi A, Santamaría J, Ouyang C, Gupta A, Gu Y, Deveci M (2023) A systematic review of trustworthy and explainable artificial intelligence in healthcare: Assessment of quality, bias risk, and data fusion. Inf Fusion 96:156–191. https://doi.org/10.1016/j.inffus.2023.03.008

Alowais SA, Alghamdi SS, Alsuhebany N et al. (2023) Revolutionizing healthcare: the role of artificial intelligence in clinical practice. BMC Med Educ 23:689. https://doi.org/10.1186/s12909-023-04698-z

Andersen T, Nunes F, Wilcox L, Coiera E, Rogers Y (2023) Introduction to the Special Issue on Human-Centred AI in Healthcare: Challenges Appearing in the Wild. ACM Transactions on Computer-Human Interaction 30:2. https://doi.org/10.1145/3589961

Arrieta AB, Díaz-Rodríguez N, Del Ser J, Bennetot A, Tabik S, Barbado A, Garcia S, Gil-Lopez S, Molina D, Benjamins R, Chatila R, Herrera F (2020) Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf Fusion 58:82–115. https://doi.org/10.1016/j.inffus.2019.12.012

EU Council, Artificial Intelligence Act (2024) Text of the Provisional Agreement, 2 February 2024, https://data.consilium.europa.eu/doc/document/ST-5662-2024-INIT/en/pdf

Ash GI, Stults-Kolehmainen M, Busa MA, Gaffey AE, Angeloudis K, Muniz-Pardos B, Gerstein MB (2021) Establishing a global standard for wearable devices in sport and exercise medicine: perspectives from academic and industry stakeholders. Sports Med 51(11):2237–2250

Azodo I, Williams R, Sheikh A, Cresswell K (2020) Opportunities and challenges surrounding the use of data from wearable sensor devices in health care: qualitative interview study. J Med Internet Res 22(10):e19542

Banerjee S, Hemphill T, Longstreet P (2018) Wearable devices and healthcare: Data sharing and privacy. Inf Soc 34(1):49–57

Banifatemi A (2018) Can we use AI for global good? Commun ACM 61:8–9. https://doi.org/10.1145/3264623

Banja JD, Hollstein RD, Bruno MA (2022) When Artificial Intelligence Models Surpass Physician Performance: Medical Malpractice Liability in an Era of Advanced Artificial Intelligence. J Am Coll Radiol 19(7):816–820. https://doi.org/10.1016/j.jacr.2021.11.014

Barua A et al. (2022) Security and privacy threats for Bluetooth low energy in IoT and wearable devices: A comprehensive survey. IEEE Open J Commun Soc 3:251–281

Bentotahewa V, Hewage C, Williams J (2022) The Normative Power of the GDPR: A Case Study of Data Protection Laws of South Asian Countries. SN Comput Sci 3:183. https://doi.org/10.1007/s42979-022-01079-z

Bera K, Schalper KA, Rimm DL et al. (2019) Artificial intelligence in digital pathology — new tools for diagnosis and precision oncology. Nat Rev Clin Oncol 16:703–715. https://doi.org/10.1038/s41571-019-0252-y

Bouderhem R (2023) Privacy and Regulatory Issues in Wearable Health Technology. Eng Proc 58(1):87. https://doi.org/10.3390/ecsa-10-16206

Bouderhem R (2022) AI Regulation in Healthcare: New Paradigms for A Legally Binding Treaty Under the World Health Organization. In: 2022 14th International Conference on Computational Intelligence and Communication Networks (CICN). IEEE, pp. 277–281

Boumpa E, Tsoukas V, Gkogkidis A, Spathoulas G, Kakarountas A (2022) Security and Privacy Concerns for Healthcare Wearable Devices and Emerging Alternative Approaches. In: Gao X, Jamalipour A, Guo L (eds) Wireless Mobile Communication and Healthcare. MobiHealth 2021. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, vol 440. Springer, Cham. https://doi.org/10.1007/978-3-031-06368-8_2

Bourassa Forcier M, Gallois H, Mullan S, Joly Y (2019) Integrating artificial intelligence into health care through data access: can the GDPR act as a beacon for policymakers? J Law Biosci 6(1):317–335. https://doi.org/10.1093/jlb/lsz013

Bump JB et al. (2021) International Collaboration and Covid-19: What Are We Doing and Where Are We Going? BMJ 372:n180. https://www.bmj.com/content/372/bmj.n180

Canali S, Schiaffonati V, Aliverti A (2022) Challenges and recommendations for wearable devices in digital health: Data quality, interoperability, health equity, fairness. PLOS Digital Health 1(10):e0000104. https://doi.org/10.1371/journal.pdig.0000104

Chafai N, Bonizzi L, Botti S, Badaoui B (2023) Emerging applications of machine learning in genomic medicine and healthcare. Crit Rev Clin Lab Sci. https://doi.org/10.1080/10408363.2023.2259466

Chauhan C, Gullapalli RR (2021) Ethics of AI in Pathology: Current Paradigms and Emerging Issues. Am J Pathol 191(10):1673–1683. https://doi.org/10.1016/j.ajpath.2021.06.011

Chen M, Decary M (2020) Artificial intelligence in healthcare: An essential guide for health leaders. Healthc Manag Forum 33(1):10–18. https://doi.org/10.1177/0840470419873123

Chen RJ, Wang JJ, Williamson DFK et al. (2023) Algorithmic fairness in artificial intelligence for medicine and healthcare. Nat Biomed Eng 7:719–742. https://doi.org/10.1038/s41551-023-01056-8

Chen Z-H, Lin L, Wu C-F, Li C-F, Xu R-H, Sun Y (2021) Artificial intelligence for assisting cancer diagnosis and treatment in the era of precision medicine. Cancer Commun 41:1100–1115. https://doi.org/10.1002/cac2.12215

Chen LC, Evans TG, Cash RA (2003) Health as a Global Public Good. In: Kaul I, Grunberg I, Stern M (eds) Global Public Goods: International Cooperation in the 21st Century. New York, 1999; online edn, Oxford Academic. https://doi.org/10.1093/0195130529.003.0014

Chikwetu L et al. (2023) Does deidentification of data from wearable devices give us a false sense of security? A systematic review. Lancet Digit Health 5:e239–47

Chiu H-Y, Chao H-S, Chen Y-M (2022) Application of Artificial Intelligence in Lung Cancer. Cancers 14(6):1370. https://doi.org/10.3390/cancers14061370

Ciecierski-Holmes T, Singh R, Axt M et al. (2022) Artificial intelligence for strengthening healthcare systems in low- and middle-income countries: a systematic scoping review. npj Digit Med 5:162. https://doi.org/10.1038/s41746-022-00700-y

Colloud S, Metcalfe T, Askin S, Belachew S, Ammann J, Bos E, Cerreta F (2023) Evolving regulatory perspectives on digital health technologies for medicinal product development. npj Digital Med 6(1):56

Council of Europe 2020, Committee on Social Affairs, Health and Sustainable Development, Report, Artificial intelligence in health care: medical, legal and ethical challenges ahead, Rapporteur: Ms Selin Sayek Böke, Turkey, SOC, 22 September 2020, Provisional Version, available at: http://www.assembly.coe.int/LifeRay/SOC/Pdf/TextesProvisoires/2020/20200922-HealthCareAI-EN.pdf

EU Council (2023a) Press release, 27 June 2023, Data act: Council and Parliament strike a deal on fair access to and use of data. https://www.consilium.europa.eu/en/press/press-releases/2023/06/27/data-act-council-and-parliament-strike-a-deal-on-fair-access-to-and-use-of-data/#:~:text=The%20data%20act%20will%20give,objects%2C%20machines%2C%20and%20devices

EU Council (2023b) Press release, 27 November 2023, Data Act: Council adopts new law on fair access to and use of data. https://www.consilium.europa.eu/en/press/press-releases/2023/11/27/data-act-council-adopts-new-law-on-fair-access-to-and-use-of-data/

da Silva JP (2023) Privacy Data Ethics of Wearable Digital Health Technology, Center for Digital Health. Available at: https://digitalhealth.med.brown.edu/news/2023-05-04/ethics-wearables

Davenport T, Kalakota R (2019) The potential for artificial intelligence in healthcare. Future Healthc J 6(2):94–98. https://doi.org/10.7861/futurehosp.6-2-94

De Angelis L, Baglivo F, Arzilli G, Privitera GP, Ferragina P, Tozzi AE, Rizzo C (2023) ChatGPT and the rise of large language models: the new AI-driven infodemic threat in public health. Front Public Health 11:1166120. https://doi.org/10.3389/fpubh.2023.1166120

de Jong J, Cutcutache I, Page M, Elmoufti S, Dilley C, Fröhlich H, Armstrong M (2021) Towards realizing the vision of precision medicine: AI based prediction of clinical drug response. Brain 144(6):1738–1750. https://doi.org/10.1093/brain/awab108

Delbrück J (2012) The International Obligation to Cooperate – An Empty Shell or a Hard Law Principle of International Law? – A Critical Look at a Much Debated Paradigm of Modern International Law. In: Coexistence, Cooperation and Solidarity. Brill | Nijhoff, Leiden, The Netherlands. https://doi.org/10.1163/9789004214828_002

Devine JK, Schwartz LP, Hursh SR (2022) Technical, regulatory, economic, and trust issues preventing successful integration of sensors into the mainstream consumer wearables market. Sensors 22(7):2731

Dhirani LL, Mukhtiar N, Chowdhry BS, Newe T (2023) Ethical Dilemmas and Privacy Issues in Emerging Technologies: A Review. Sensors 23(3):1151. https://doi.org/10.3390/s23031151

Dicuonzo G, Galeone G, Shini M, Massari A (2022) Towards the Use of Big Data in Healthcare: A Literature Review. Healthcare 10(7):1232. https://doi.org/10.3390/healthcare10071232

Dinh-Le C, Chuang R, Chokshi S, Mann D (2019) Wearable health technology and electronic health record integration: scoping review and future directions. JMIR mHealth uHealth 7(9):e12861

Dire, T (Special Rapporteur) (2018) Third Rep. on Peremptory Norms of General International Law (jus cogens), U.N. Doc. A/CN.4/714. https://legal.un.org/ilc/documentation/english/a_cn4_714.pdf

Duff JH et al. (2021) A Global Public Health Convention for the 21st Century. Lancet Public Health 6:e428

Durán JM, Jongsma KR (2021) Who is afraid of black box algorithms? On the epistemological and ethical basis of trust in medical AI. J Med Ethics 47(5):329–335. https://doi.org/10.1136/medethics-2020-106820

Edemekong PF, Annamaraju P, Haydel MJ (2023) Health Insurance Portability and Accountability Act. [Updated 2022 Feb 3]. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing. Available from: https://www.ncbi.nlm.nih.gov/books/NBK500019/

EU Commission, Decision of 24 January 2024 establishing the European Artificial Intelligence Office, C/2024/390, OJ C, C/2024/1459, 14.02.2024, ELI: http://data.europa.eu/eli/C/2024/1459/oj

Esmaeilzadeh P (2020) Use of AI-based tools for healthcare purposes: a survey study from consumers’ perspectives. BMC Med Inf Decis Mak 20:170. https://doi.org/10.1186/s12911-020-01191-1

Espinoza J, Xu NY, Nguyen KT, Klonoff DC (2023) The need for data standards and implementation policies to integrate CGM data into the electronic health record. J Diabetes Sci Technol 17(2):495–502

EU Commission (2022a) Brussels, Data Act: Commission proposes measures for a fair and innovative data economy. Available at: https://ec.europa.eu/commission/presscorner/detail/en/ip_22_1113

EU Commission (2022b) Brussels, Data Act: Commission proposes measures for a fair and innovative data economy. https://ec.europa.eu/commission/presscorner/detail/en/ip_22_1113

EU Official Journal, Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation), OJ 2016 L 119/1. Available at: https://eur-lex.europa.eu/eli/reg/2016/679/oj

EU Commission (2021) Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on Artificial Intelligence (Artificial Intelligence Act) and amending certain Union legislative Acts, Brussels, 21 April 2021, COM/2021/206 final. Available at: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A52021PC0206

European Parliament (2022) European Parliament legislative resolution of 9 November 2023 on the proposal for a regulation of the European Parliament and of the Council on harmonised rules on fair access to and use of data (Data Act) (COM(2022)0068 – C9-0051/2022 – 2022/0047(COD)), https://www.europarl.europa.eu/doceo/document/TA-9-2023-0385_EN.html

European Parliament (2023) Artificial Intelligence Act: deal on comprehensive rules for trustworthy AI, Press Releases. https://www.europarl.europa.eu/news/en/press-room/20231206IPR15699/artificial-intelligence-act-deal-on-comprehensive-rules-for-trustworthy-ai

European Parliament (2024) Multimedia Center, Recorded Session, https://multimedia.europarl.europa.eu/en/webstreaming/joint-committee-on-internal-market-and-consumer-protection-and-committee-on-civil-liberties-justice_20240213-0930-COMMITTEE-IMCO-LIBE

Farah L, Murris JM, Borget I, Guilloux A, Martelli NM, Katsahian SIM (2023) Assessment of Performance, Interpretability, and Explainability in Artificial Intelligence–Based Health Technologies: What Healthcare Stakeholders Need to Know. Mayo Clin Proc: Digital Health 1(2):120–138. https://doi.org/10.1016/j.mcpdig.2023.02.004

Feng J, Phillips RV, Malenica I et al. (2022) Clinical artificial intelligence quality improvement: towards continual monitoring and updating of AI algorithms in healthcare. npj Digit Med 5:66. https://doi.org/10.1038/s41746-022-00611-y

Fidler DP, Gostin LO (2006) The New International Health Regulations: An Historic Development for International Law and Public Health. J Law Med Ethics 34(1):85–94

Floresta G, Zagni C, Gentile D, Patamia V, Rescifina A (2022) Artificial Intelligence Technologies for COVID-19 De Novo Drug Design. Int J Mol Sci 23(6):3261. https://doi.org/10.3390/ijms23063261

Gibney E (2024) What the EU’s tough AI law means for research and ChatGPT. Nature, https://doi.org/10.1038/d41586-024-00497-8

Gostin LO et al. (2015) The Normative Authority of the World Health Organization. Public Health 129(7):854

Grzybowski A, Jin K, Wu H (2024) Challenges of Artificial Intelligence in Medicine and Dermatology. Clin Dermatol, https://doi.org/10.1016/j.clindermatol.2023.12.013

Gurevich E, El Hassan B, El Morr C (2023) Equity within AI systems: What can health leaders expect? Healthc Manag Forum 36(2):119–124. https://doi.org/10.1177/08404704221125368

HaDEA (2023) EU health research projects using AI to improve cancer treatment and patients’ quality of life. https://hadea.ec.europa.eu/news/eu-health-research-projects-using-ai-improve-cancer-treatment-and-patients-quality-life-2023-05-27_en

Harrer S (2023) Attention is not all you need: the complicated case of ethically using large language models in healthcare and medicine. EBioMedicine 90:104512. https://doi.org/10.1016/j.ebiom.2023.104512

Hassija V, Chamola V, Mahapatra A et al. (2023) Interpreting Black-Box Models: A Review on Explainable Artificial Intelligence. Cogn Comput, https://doi.org/10.1007/s12559-023-10179-8

Haugen HM (2020) The Crucial and Contested Global Public Good: Principles and Goals in Global Internet Governance. Internet Policy Rev 9:1–22. Available at SSRN: https://ssrn.com/abstract=3531536

Haupt CE, Marks M (2023) AI-Generated Medical Advice—GPT and Beyond. JAMA 329(16):1349–1350. https://doi.org/10.1001/jama.2023.5321

Holzinger A, Langs G, Denk H, Zatloukal K, Müller H (2019) Causability and explainability of artificial intelligence in medicine. WIREs Data Min Knowl Discov 9:e1312. https://doi.org/10.1002/widm.1312

Hosny A, Parmar C, Quackenbush J et al. (2018) Artificial intelligence in radiology. Nat Rev Cancer 18:500–510. https://doi.org/10.1038/s41568-018-0016-5

Huarng K-H, Hui-Kuang Yu T, Lee CF (2022) Adoption model of healthcare wearable devices. Technol Forecast Soc Change 174:121286

Hughes-Lartey K, Li M, Botchey FE, Qin Z (2021) Human factor, a critical weak point in the information security of an organization’s Internet of things. Heliyon 7:e06522

Huynh E, Hosny A, Guthier C et al. (2020) Artificial intelligence in radiation oncology. Nat Rev Clin Oncol 17:771–781. https://doi.org/10.1038/s41571-020-0417-8

Iqbal JD, Biller-Andorno N (2022) The regulatory gap in digital health and alternative pathways to bridge it. Health Policy Technol 11(3):100663

Iserson KV (2024) Informed consent for artificial intelligence in emergency medicine: A practical guide. Am J Emerg Med 76:225–230. https://doi.org/10.1016/j.ajem.2023.11.022

Jarrahi MH, Askay D, Eshraghi A, Smith P (2023) Artificial intelligence and knowledge management: A partnership between human and AI. Bus Horiz 66(1):87–99. https://doi.org/10.1016/j.bushor.2022.03.002

Javaid M, Haleem A, Singh RP, Suman R (2023) Towards insighting cybersecurity for healthcare domains: A comprehensive review of recent practices and trends. Cyber Sec Appl 1:100016

Jayanthilladevi A, Sangeetha K, Balamurugan E (2020) Healthcare Biometrics Security and Regulations: Biometrics Data Security and Regulations Governing PHI and HIPAA Act for Patient Privacy. In: 2020 International Conference on Emerging Smart Computing and Informatics (ESCI), Pune, India, pp 244–247. https://doi.org/10.1109/ESCI48226.2020.9167635

Jiang L, Wu Z, Xu X, et al. (2021) Opportunities and challenges of artificial intelligence in the medical field: current application, emerging problems, and problem-solving strategies. J Int Med Res, Available at: https://journals.sagepub.com/doi/full/10.1177/03000605211000157

Johnson KB, Wei W-Q, Weeraratne D, Frisse ME, Misulis K, Rhee K, Zhao J, Snowdon JL (2021) Precision Medicine, AI, and the Future of Personalized Health Care. Clin Transl Sci 14:86–93. https://doi.org/10.1111/cts.12884

Johnson M, Albizri A, Simsek S (2022) Artificial intelligence in healthcare operations to enhance treatment outcomes: a framework to predict lung cancer prognosis. Ann Oper Res 308:275–305. https://doi.org/10.1007/s10479-020-03872-6

Kapoor V, Singh R, Reddy R, Churi P (2020) Privacy issues in wearable technology: An intrinsic review. In Proceedings of the International Conference on Innovative Computing & Communications (ICICC). https://doi.org/10.2139/ssrn.3566918

Katznelson G, Gerke S (2021) The need for health AI ethics in medical school education. Adv Health Sci Educ 26:1447–1458. https://doi.org/10.1007/s10459-021-10040-3

Khan F, Kim JH, Mathiassen L, Moore R (2021) Data breach management: an integrated risk model. Inf Manag 58(1):103392

Kiseleva A (2020) AI as a Medical Device: Is it Enough to Ensure Performance Transparency and Accountability? Eur Pharm Law Rev 4(1):5–16. https://doi.org/10.21552/eplr/2020/1/4

Klumpp M, Hintze M, Immonen M, Ródenas-Rigla F, Pilati F, Aparicio-Martínez F, Çelebi D, Liebig T, Jirstrand M, Urbann O, Hedman M, Lipponen JA, Bicciato S, Radan AP, Valdivieso B, Thronicke W, Gunopulos D, Delgado-Gonzalo R (2021) Artificial Intelligence for Hospital Health Care: Application Cases and Answers to Challenges in European Hospitals. Healthcare 9(8):961. https://doi.org/10.3390/healthcare9080961

Korjian S, Gibson CM (2022) Digital technologies and the democratization of clinical research: Social media, wearables, and artificial intelligence. Contemp Clin Trials 117:106767

Kumar P, Chauhan S, Awasthi LK (2023a) Artificial intelligence in healthcare: review, ethics, trust challenges & future research directions. Eng Appl Artif Intell 120:105894. https://doi.org/10.1016/j.engappai.2023.105894

Kumar S, Choudhury S (2023b) Normative ethics, human rights, and artificial intelligence. AI Ethics 3:441–450. https://doi.org/10.1007/s43681-022-00170-8

Kundu S (2021) AI in medicine must be explainable. Nat Med 27:1328. https://doi.org/10.1038/s41591-021-01461-z

Lau PL, Nandy M, Chakraborty S (2023) Accelerating UN Sustainable Development Goals with AI-Driven Technologies: A Systematic Literature Review of Women’s Healthcare. Healthcare 11(3):401. https://doi.org/10.3390/healthcare11030401

Lee P, Bubeck S, Petro J (2023) Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N Engl J Med 388:1233–1239. https://www.nejm.org/doi/full/10.1056/NEJMsr2214184

Leese J, Zhu S, Townsend AF, Backman CL, Nimmon L, Li LC (2022) Ethical issues experienced by persons with rheumatoid arthritis in a wearable‐enabled physical activity intervention study. Health Expect 25(4):1418–1431

Lehne M, Sass J, Essenwanger A et al. (2019) Why digital medicine depends on interoperability. npj Digit Med 2:79. https://doi.org/10.1038/s41746-019-0158-1

Li RC, Asch SM, Shah NH (2020) Developing a delivery science for artificial intelligence in healthcare. npj Digit Med 3:107. https://doi.org/10.1038/s41746-020-00318-y

Loh E (2023) ChatGPT and generative AI chatbots: challenges and opportunities for science, medicine and medical leaders. BMJ Leader, https://bmjleader.bmj.com/content/early/2023/05/02/leader-2023-000797

Loong Z, Ai X, Huang T, Xiang S (2021) The impact of AI on employment and the corresponding response of PRC labor law. J Chin Hum Resour Manag 12(2):69–94. https://doi.org/10.47297/wspchrmWSP2040-800506.20211202

Loucks J et al. (2021) Wearable technology in health care: Getting better all the time. Deloitte Insights. https://www2.deloitte.com/content/dam/insights/articles/GLOB164601_Wearable-healthcare/DI_Wearable-healthcare.pdf

Lysaght T, Lim HY, Xafis V et al. (2019) AI-Assisted Decision-making in Healthcare. Asian Bioeth Rev 11:299–314. https://doi.org/10.1007/s41649-019-00096-0

Meskó B, Topol EJ (2023) The imperative for regulatory oversight of large language models (or generative AI) in healthcare. npj Digit Med 6:120. https://doi.org/10.1038/s41746-023-00873-0

MHRA (2022) Regulatory Horizons Council Report on the Regulation of AI as a Medical Device. https://assets.publishing.service.gov.uk/media/6384bf98e90e0778a46ce99f/RHC_regulation_of_AI_as_a_Medical_Device_report.pdf

Mohanty S, Rashid MHA, Mridul M, Mohanty C, Swayamsiddha S (2020) Application of Artificial Intelligence in COVID-19 drug repurposing. Diabetes Metab Syndrome Clin Res Rev 14(5):1027–1031

Mouloudj K, Le VL, Bouarar A, Bouarar AC, Asanza DM, Srivastava M (2024) Adopting Artificial Intelligence in Healthcare: A Narrative Review. In: Teixeira S, Remondes J (eds) The Use of Artificial Intelligence in Digital Marketing: Competitive Strategies and Tactics. IGI Global, pp 1–20. https://doi.org/10.4018/978-1-6684-9324-3.ch001

Mulder T, Tudorica M (2019) Privacy policies, cross-border health data and the GDPR. Inf Commun Technol Law 28(3):261–274. https://doi.org/10.1080/13600834.2019.1644068

Murdoch B (2021) Privacy and artificial intelligence: challenges for protecting health information in a new era. BMC Med Ethics 22:122. https://doi.org/10.1186/s12910-021-00687-3

Naik N, Hameed BMZ, Shetty DK, Swain D, Shah M, Paul R, Aggarwal K, Ibrahim S, Patil V, Smriti K, Shetty S, Rai BP, Chlosta P, Somani BK (2022) Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility? Front Surg 9:862322. https://doi.org/10.3389/fsurg.2022.862322

Nauta M, Trienes J, Pathak S, Nguyen E, Peters M, Schmitt Y, Schlötterer J, van Keulen M, Seifert C (2023) From Anecdotal Evidence to Quantitative Evaluation Methods: A Systematic Review on Evaluating Explainable AI. ACM Comput Surv 55:42

Nithesh N, Zeeshan HBM, Nilakshman S, Shankeeth V, Vathsala P, Komal S, Janhavi S, Milap S, Sufyan I, Anshuman S, Hadis K, Karthickeyan N, Shetty Dasharathraj K, Prasad RB, Piotr C, Somani Bhaskar K (2022) Transforming healthcare through a digital revolution: A review of digital healthcare technologies and solutions. Front Digital Health 4, https://www.frontiersin.org/articles/10.3389/fdgth.2022.919985

Oranye NP, Aremu AW (2021) The Duty to Cooperate in State Interactions for the Sustainable Use of International Watercourses. https://doi.org/10.1007/s43621-021-00055-6

Parikh RB, Teeple S, Navathe AS (2019) Addressing Bias in Artificial Intelligence in Health Care. JAMA 322(24):2377–2378. https://doi.org/10.1001/jama.2019.18058

Paul D, Sanap G, Shenoy S, Kalyane D, Kalia K, Tekade RK (2021) Artificial intelligence in drug discovery and development. Drug Discov Today 26(1):80–93. https://doi.org/10.1016/j.drudis.2020.10.010

Paul M et al. (2023) Digitization of healthcare sector: A study on privacy and security concerns. ICT Express 9(4):571–588. https://doi.org/10.1016/j.icte.2023.02.007

Pesapane F, Bracchi DA, Mulligan JF, Linnikov A, Maslennikov O, Lanzavecchia MB, Tantrige P, Stasolla A, Biondetti P, Giuggioli PF, Cassano E, Carrafiello G (2021) Legal and Regulatory Framework for AI Solutions in Healthcare in EU, US, China, and Russia: New Scenarios after a Pandemic. Radiation 1(4):261–276. https://doi.org/10.3390/radiation1040022

Phelan AL et al. (2020) Legal Agreements: Barriers and Enablers to Global Equitable COVID-19 Vaccine Access. Lancet 396:800–802

Pirbhulal S, Samuel OW, Wu W, Sangaiah AK, Li G (2019) A joint resource-aware and medical data security framework for wearable healthcare systems. Future Gener Comput Syst 95:382–391

Powell D, Godfrey A (2023) Considerations for integrating wearables into the everyday healthcare practice. npj Digit Med 6:70. https://doi.org/10.1038/s41746-023-00820-z

Prabakaran D, Ramachandran S (2022) Multi-factor authentication for secured financial transactions in cloud environment. CMC Comput Mater Contin 70(1):1781–1798

Prem E (2023) From ethical AI frameworks to tools: a review of approaches. AI Ethics 3:699–716. https://doi.org/10.1007/s43681-023-00258-9

Quazi S (2022) Artificial intelligence and machine learning in precision and genomic medicine. Med Oncol 39(8):120. https://doi.org/10.1007/s12032-022-01711-1

Radanliev P, De Roure D (2022) Advancing the cybersecurity of the healthcare system with self-optimising and self-adaptative artificial intelligence (part 2). Health Technol 12:923–929. https://doi.org/10.1007/s12553-022-00691-6

Regulation (EU) (2023) 2023/2854 of the European Parliament and of the Council of 13 December 2023 on harmonised rules on fair access to and use of data and amending Regulation (EU) 2017/2394 and Directive (EU) 2020/1828 (Data Act), PE/49/2023/REV/1, OJ L, 2023/2854, 22.12.2023, ELI: http://data.europa.eu/eli/reg/2023/2854/oj

Ruschemeier H (2023) AI as a challenge for legal regulation – the scope of application of the artificial intelligence act proposal. ERA Forum 23(3):361–376, https://doi.org/10.1007/s12027-022-00725-6

Saha S, Chowdhury C, Neogy S (2024) A novel two phase data sensitivity based access control framework for healthcare data. Multimed Tools Appl 83:8867–8892. https://doi.org/10.1007/s11042-023-15427-5

Sambasivan N, Kapania S, Highfill H, Akrong D, Paritosh P, Aroyo LM (2021) “Everyone wants to do the model work, not the data work”: Data Cascades in High-Stakes AI. In: Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI ’21). Association for Computing Machinery, New York, NY, USA, Article 39, pp 1–15. https://doi.org/10.1145/3411764.3445518

Santosh K, Gaur L (2021) AI in Sustainable Public Healthcare. In: Artificial Intelligence and Machine Learning in Public Healthcare. SpringerBriefs in Applied Sciences and Technology. Springer, Singapore. https://doi.org/10.1007/978-981-16-6768-8_4

Schena FP, Anelli VW, Abbrescia DI et al. (2022) Prediction of chronic kidney disease and its progression by artificial intelligence algorithms. J Nephrol 35:1953–1971. https://doi.org/10.1007/s40620-022-01302-3

Schuett J (2023) Risk Management in the Artificial Intelligence Act. Eur J Risk Regul 1–19. https://doi.org/10.1017/err.2023.1

Schultz MD, Seele P (2023) Towards AI ethics’ institutionalization: knowledge bridges from business ethics to advance organizational AI ethics. AI Ethics 3:99–111. https://doi.org/10.1007/s43681-022-00150-y

Scott IA, Carter SM, Coiera E (2021) Exploring stakeholder attitudes towards AI in clinical practice. BMJ Health Care Inf 28(1):e100450. https://doi.org/10.1136/bmjhci-2021-100450

Segura Anaya LH, Alsadoon A, Costadopoulos N et al. (2018) Ethical Implications of User Perceptions of Wearable Devices. Sci Eng Ethics 24:1–28. https://doi.org/10.1007/s11948-017-9872-8

Shah SM, Khan RA, Arif S, Sajid U (2022) Artificial intelligence for breast cancer analysis: Trends & directions. Comput Biol Med 142:105221. https://doi.org/10.1016/j.compbiomed.2022.105221

Sigfrids A, Leikas J, Salo-Pöntinen H, Koskimies E (2023) Human-centricity in AI governance: A systemic approach. Front Artif Intell 6:976887. https://doi.org/10.3389/frai.2023.976887

Smith AA, Li R, Tse ZTH (2023) Reshaping healthcare with wearable biosensors. Sci Rep 13(1):4998

Smith M, Sattler A, Hong G et al. (2021) From Code to Bedside: Implementing Artificial Intelligence Using Quality Improvement Methods. J Gen Intern Med 36:1061–1066. https://doi.org/10.1007/s11606-020-06394-w

Stahl BC, Antoniou J, Bhalla N et al. (2023) A systematic review of artificial intelligence impact assessments. Artif Intell Rev 56:12799–12831. https://doi.org/10.1007/s10462-023-10420-8

Suárez A, Díaz-Flores García V, Algar J, Gómez Sánchez M, Llorente de Pedro M, Freire Y (2024) Unveiling the ChatGPT phenomenon: Evaluating the consistency and accuracy of endodontic question answers. Int Endod J 57:108–113. https://doi.org/10.1111/iej.13985

Sui A, Sui W, Liu S, Rhodes R (2023) Ethical considerations for the use of consumer wearables in health research. Digital Health 9:20552076231153740

Sunarti S, Rahman FF, Naufal M, Risky M, Febriyanto K, Masnina R (2021) Artificial intelligence in healthcare: opportunities and risk for future. Gac Sanit 35(Supplement 1):S67–S70. https://doi.org/10.1016/j.gaceta.2020.12.019

Tahri Sqalli M, Aslonov B, Gafurov M, Nurmatov S (2023) Humanizing AI in medical training: ethical framework for responsible design. Front Artif Intell 6:1189914

Taka AM (2023) A deep dive into dynamic data flows, wearable devices, and the concept of health data. Int Data Priv Law 13(2):124–140. https://doi.org/10.1093/idpl/ipad007

Tang L, Li J, Fantus S (2023) Medical artificial intelligence ethics: A systematic review of empirical studies. Digital Health 9, https://doi.org/10.1177/20552076231186064

Thierer AD (2015) The Internet of Things and Wearable Technology: Addressing Privacy and Security Concerns without Derailing Innovation. Rich J L Tech 21(2):6. Available at: https://scholarship.richmond.edu/jolt/vol21/iss2/4

Tomašev N, Glorot X, Rae JW et al. (2019) A clinically applicable approach to continuous prediction of future acute kidney injury. Nature 572:116–119. https://doi.org/10.1038/s41586-019-1390-1

Trocin C, Mikalef P, Papamitsiou Z et al. (2023) Responsible AI for Digital Health: a Synthesis and a Research Agenda. Inf Syst Front 25:2139–2157. https://doi.org/10.1007/s10796-021-10146-4