Abstract

This paper introduces two three-term trust region conjugate gradient algorithms, TT-TR-WP and TT-TR-CG, which are capable of converging under non-Lipschitz continuous gradient functions without any additional conditions. These algorithms possess the sufficient descent and trust region properties and are globally convergent. To assess their numerical performance, we compare them with two classical algorithms in restoring noisy gray-scale and color images and in solving large-scale unconstrained problems. In restoring noisy gray-scale images, if the performance of TT-TR-WP is taken as the standard, TT-TR-CG takes around 2.33 times longer, and the other algorithms take around 2.46 and 2.41 times longer, respectively. In restoring the same color images, the proposed algorithms perform relatively well compared with the other algorithms. Additionally, TT-TR-WP and TT-TR-CG are competitive on unconstrained problems: the former has wide applicability while the latter has strong robustness. Moreover, both proposed algorithms outperform the baseline algorithms in terms of applicability and robustness.

Introduction

This paper considers the following model:

$$\begin{aligned} \min _{x\in R^n} h(x), \end{aligned}$$(1)

where the objective function \(h:R^n\longrightarrow R\) is continuously differentiable. The conjugate gradient (CG) algorithm is widely used to solve (1), in which the iteration formula is written as:

$$\begin{aligned} x_{k+1}=x_k+\alpha _kd_k, \end{aligned}$$(2)

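where \(x_{k+1}\), \(\alpha _k\) and \(d_k\) are the next iteration point, the step size and the search direction, respectively, and \(d_k\) is generally defined by

$$\begin{aligned} d_k=\left\{ \begin{array}{ll} -g_k+\beta _kd_{k-1}, &{} \text {if } k\ge 1,\\ -g_k, &{} \text {if } k=0, \end{array}\right. \end{aligned}$$(3)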

where \(g_k\) is the gradient of the objective function h(x) at the iteration point \(x_k\), and \(\beta _k\in R\) is a scalar. Many CG algorithms have been proposed to solve large-scale optimization problems and engineering problems. In Ref.4, a general conjugate gradient method using the Wolfe line search is proposed, with a condition on the scalar \(\beta _k\) that is sufficient for global convergence. In Ref.16, a projection-based method is proposed to solve large-scale nonlinear pseudo-monotone equations without Lipschitz continuity. In Refs.19,20,21, Sheng et al. proposed trust region algorithms for nonsmooth minimization, large-residual nonsmooth least squares problems and optimization problems. Yuan et al. proposed nonlinear conjugate gradient methods for solving nonlinear equations and image restoration problems in Refs.24,25. In Ref.5, Dai summarized some analysis of conjugate gradient methods. In Ref.9, the authors enhanced the performance of conjugate gradient solvers on graphics processing units. In Ref.12, the authors proposed a new conjugate gradient method with guaranteed descent and an efficient line search for optimization. In Ref.18, the authors proposed a hybrid conjugate gradient algorithm combining the PRP and FR algorithms. In Ref.23, Wei et al. proposed a conjugate gradient algorithm that uses a negative coefficient in the formula of the search direction. In fact, an important task is the design of \(\beta _k\), and some classical expressions are widely used, including the Hestenes-Stiefel (HS)8,14,27, Liu-Storey (LS)22, Polak-Ribière-Polyak (PRP)11,25,26,28, Dai-Yuan (DY)6,29, conjugate descent (CD)10,13 and Fletcher-Reeves (FR)15 methods, where the first three have relatively good numerical performance but fewer theoretical results, while the opposite holds for the others. The definitions are listed in Table 1, where \(\Vert \cdot \Vert \) is the Euclidean norm.

The primary components of conjugate gradient algorithms encompass the search direction, step size (when applicable), and global convergence. The ultimate objective is to achieve a satisfactory balance between numerical efficiency and theoretical scrutiny.

In fact, the sufficient descent property is a prerequisite for theoretical analysis and is given by the following inequality:

$$\begin{aligned} g_k^Td_k\le -t\Vert g_k\Vert ^2, \end{aligned}$$(4)

where \(t>0.\) Moreover, the trust region technique illustrates that the search radius plays a crucial role in determining the numerical efficacy. The search direction is obtained by solving the subsequent quadratic function, where \(\Delta _{k}\) denotes the trust region radius.

The search direction in CG algorithms is said to satisfy the trust region property if the following inequality holds:

$$\begin{aligned} \Vert d_k\Vert \le t_1\Vert g_k\Vert , \end{aligned}$$(5)

where \(t_1 > 0\). Inequalities (4) and (5) are closely connected with global convergence. Furthermore, an inexact line search is frequently used to determine a suitable step size \(\alpha _k\). This paper adopts the weak Wolfe-Powell (WWP) inexact line search, which is formulated as follows:

$$\begin{aligned} h(x_k+\alpha _kd_k)\le h(x_k)+\delta \alpha _kg_k^Td_k \end{aligned}$$(6)

and

$$\begin{aligned} g(x_k+\alpha _kd_k)^Td_k\ge \tau g_k^Td_k, \end{aligned}$$(7)

where \(\delta \in (0,\frac{1}{2})\) and \(\tau \in (\delta ,1)\).
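The two WWP conditions can be checked numerically. The following is a minimal Python sketch, assuming the standard form of the weak Wolfe-Powell conditions and a simple bisection/expansion bracketing strategy; the paper does not state how \(\alpha _k\) is actually computed, so the function name `weak_wolfe_powell` and the test function `quad` are hypothetical.

```python
import numpy as np

def weak_wolfe_powell(h, grad, x, d, delta=0.2, tau=0.9, max_iter=60):
    """Search for a step size satisfying the weak Wolfe-Powell conditions.

    Assumed bracketing scheme (not taken from the paper): shrink the step
    when the sufficient decrease test fails, grow it when the curvature
    test fails. d must be a descent direction, i.e. grad(x) @ d < 0.
    """
    lo, hi, alpha = 0.0, np.inf, 1.0
    h0 = h(x)
    slope0 = float(grad(x) @ d)
    for _ in range(max_iter):
        if h(x + alpha * d) > h0 + delta * alpha * slope0:
            # Sufficient decrease condition violated: step too long.
            hi = alpha
            alpha = 0.5 * (lo + hi)
        elif float(grad(x + alpha * d) @ d) < tau * slope0:
            # Curvature condition violated: step too short.
            lo = alpha
            alpha = 2.0 * lo if np.isinf(hi) else 0.5 * (lo + hi)
        else:
            return alpha
    return alpha  # best effort if the iteration budget is exhausted

# Hypothetical smooth test problem: h(x) = 0.5 * x^T A x with A = diag(1, 10).
A = np.diag([1.0, 10.0])

def quad(x):
    return 0.5 * float(x @ A @ x)

def quad_grad(x):
    return A @ x

x0 = np.array([1.0, 1.0])
alpha0 = weak_wolfe_powell(quad, quad_grad, x0, -quad_grad(x0))
```

The parameters `delta` and `tau` play the roles of \(\delta \) and \(\tau \); the default values here are illustrative only.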

The aforementioned discussions are intricately linked to global convergence, which necessitates certain fundamental assumptions. These include: (i) the objective function must be continuously differentiable; (ii) the level set \(S=\{x\in R^n: h(x)\le h(x_0)\}\) must be bounded; and (iii) the gradient function g(x) must be Lipschitz continuous, where \(x_0\) denotes an initial point. The FR method1, modified HS method7, modified LS method17, and modified DY method29 achieve global convergence through the formula

In other words, the Lipschitz continuity of the gradient function is a prerequisite for existing works, prompting us to consider whether global convergence can be attained in the absence of Lipschitz continuity. This paper proposes some three-term trust region conjugate gradient methods that converge under non-Lipschitz continuity condition, with the main properties summarized as follows:

- The proposed algorithms possess both the sufficient descent and trust region properties without any additional conditions. The trust region property is derived from the trust region algorithm, while the algorithm design is based on classical approaches such as Hestenes-Stiefel (HS) and Polak-Ribière-Polyak (PRP).

- These algorithms achieve global convergence even when the gradient function is not Lipschitz continuous and the weak Wolfe-Powell line search is used.

- The applications of these algorithms include the restoration of noisy gray-scale and color images, as well as solving large-scale unconstrained problems. The case studies illustrate that TT-TR-WP and TT-TR-CG possess superior numerical performance.

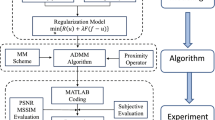

The remainder of the paper is organized as follows: “Motivation and TT-TR-WP” provides an overview of the motivation behind TT-TR-WP; “The global convergence of TT-TR-WP” presents the convergence analysis; “TT-TR-CG and theoretical analysis” describes the TT-TR-CG algorithm and its convergence analysis; “Case studies” presents the case studies, including image restoration and large-scale unconstrained problem-solving; and finally, the last section offers concluding remarks.

Motivation and TT-TR-WP

The first three-term conjugate gradient formula was proposed by Zhang et al.30, in which the search direction is defined by

Formula (8) satisfies the sufficient descent property without any additional conditions, while the trust region property is closely related to the objective function, Lipschitz continuity, and level set.

Formula (9) was introduced by Yuan et al.28 under the weak Wolfe-Powell line search technique, where the search direction is given by the following expression:

The step size \(\alpha _{k-1}\) is included in the search direction (9). This formula not only satisfies the sufficient descent property without other conditions, but also guarantees global convergence under non-Lipschitz continuity conditions, while the trust region property is closely linked to the formula \(\alpha _{k-1}d_{k-1} = x_{k} - x_{k-1}\), objective function, and level set.

To summarize, while formulas (8) and (9) do possess the sufficient descent property without additional conditions, there are several limitations. The trust region property, vital for both theoretical analysis and numerical performance, unfortunately depends on the objective function, basic assumptions, and complex analysis. Additionally, there exist simpler and more cost-effective algorithms that simultaneously achieve better numerical performance and theoretical results.

The aforementioned discussion inspires us to propose the following formula.

Remark 1

(i) Formula (10) possesses the sufficient descent and trust region properties that are independent of any additional conditions.

(ii) Global convergence is guaranteed even under conditions of non-Lipschitz continuity of the gradient function.

(iii) The classical HS algorithm’s excellent numerical performance is incorporated into TT-TR-WP through a specified denominator.

This section presents Algorithm 1, while the subsequent section provides the theoretical analysis.

TT-TR-WP: A convergent three-term trust region algorithm with the weak Wolfe-Powell line search

- Step 0: Initialize \(x_0\in R^n\), \(d_0=-g_0\), constants \(\epsilon \in (0,1)\), \(\delta \in (0,\frac{1}{2})\), \(\tau \in (\delta ,1)\), \(\sigma >0\), and set \(k=0\).

- Step 1: If \(\Vert g_k\Vert \le \epsilon \), stop.

- Step 2: Choose the step size \(\alpha _k\) satisfying formulas (6) and (7).

- Step 3: Update the iteration point \(x_{k+1} = x_k+\alpha _kd_k\).

- Step 4: If \(\Vert g_{k+1}\Vert \le \epsilon \), stop.

- Step 5: Update the search direction by formula (10).

- Step 6: Set \(k=k+1\), and go to Step 2.
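The control flow of Steps 0 to 6 can be sketched in Python as follows. This is only an illustration of the loop structure: formula (10) is not reproduced here, so the direction update is a pluggable callback, and the default `mprp_direction` is a stand-in, namely the well-known three-term PRP direction of Zhang et al. as stated in the general literature. It is not the TT-TR-WP direction. The names `wwp_step`, `three_term_cg`, `h_quad` and `grad_quad`, and the bisection line search, are all assumptions made for this sketch.

```python
import numpy as np

def wwp_step(h, grad, x, d, delta=0.2, tau=0.9, max_iter=60):
    """Assumed bisection search for a weak Wolfe-Powell step size."""
    lo, hi, alpha = 0.0, np.inf, 1.0
    h0, slope0 = h(x), float(grad(x) @ d)
    for _ in range(max_iter):
        if h(x + alpha * d) > h0 + delta * alpha * slope0:
            hi = alpha                       # step too long
            alpha = 0.5 * (lo + hi)
        elif float(grad(x + alpha * d) @ d) < tau * slope0:
            lo = alpha                       # step too short
            alpha = 2.0 * lo if np.isinf(hi) else 0.5 * (lo + hi)
        else:
            break
    return alpha

def mprp_direction(g_new, g_old, d_old):
    """Stand-in three-term PRP direction; NOT formula (10) of the paper.

    By construction it satisfies g_new @ d = -||g_new||^2.
    """
    y = g_new - g_old
    denom = float(g_old @ g_old)
    beta = float(g_new @ y) / denom
    theta = float(g_new @ d_old) / denom
    return -g_new + beta * d_old - theta * y

def three_term_cg(h, grad, x0, direction=mprp_direction,
                  eps=1e-6, max_iter=8000):
    x = np.asarray(x0, dtype=float)
    g = grad(x)
    d = -g                                   # Step 0: d_0 = -g_0
    if np.linalg.norm(g) <= eps:             # Step 1: stop test at x_k
        return x, 0
    for k in range(max_iter):
        alpha = wwp_step(h, grad, x, d)      # Step 2: WWP step size
        x = x + alpha * d                    # Step 3: new iterate
        g_new = grad(x)
        if np.linalg.norm(g_new) <= eps:     # Step 4: stop test at x_{k+1}
            return x, k + 1
        d = direction(g_new, g, d)           # Step 5: new search direction
        g = g_new                            # Step 6: k = k + 1
    return x, max_iter

# Hypothetical test problem: h(x) = 0.5 x^T A x - b^T x, minimiser (1, 0.1).
A = np.diag([1.0, 10.0])
b = np.array([1.0, 1.0])

def h_quad(x):
    return 0.5 * float(x @ A @ x) - float(b @ x)

def grad_quad(x):
    return A @ x - b

x_star, n_iter = three_term_cg(h_quad, grad_quad, np.zeros(2))
```

Passing a different `direction` callback is the intended way to plug in another three-term formula while keeping the same loop.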

The global convergence of TT-TR-WP

This section analyzes the global convergence of TT-TR-WP. The sufficient descent and trust region properties are given first.

Lemma 3.1

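The search direction (10) simultaneously has the sufficient descent (4) and trust region (5) properties, i.e.,

$$\begin{aligned} g_k^Td_k=-\Vert g_k\Vert ^2 \end{aligned}$$(11)

and

$$\begin{aligned} \Vert d_k\Vert \le (1+\frac{2}{\sigma })\Vert g_k\Vert . \end{aligned}$$(12)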

Proof

If \(k=0\), then \(d_0=-g_0\), so \(g_0^Td_0=-\Vert g_0\Vert ^2\) and \(\Vert d_0\Vert = \Vert g_0\Vert \le (1+\frac{2}{\sigma })\Vert g_0\Vert .\)

If \(k\ge 1\), the following formulas can be obtained from formula (10):

and

which completes the proof. \(\square \)

Remark 2

(i) Lemma 3.1 proves the sufficient descent and trust region properties of the search direction (10), which are independent of any assumptions and line search techniques.

(ii) From formula (11) and the Cauchy-Schwarz inequality, we obtain

$$\begin{aligned} -\Vert d_k\Vert \Vert g_k\Vert \le g_k^Td_k = -\Vert g_k\Vert ^2, \end{aligned}$$

which means that

$$\begin{aligned} \Vert g_k\Vert \le \Vert d_k\Vert , \end{aligned}$$

and thus the following formula holds from formula (12):

$$\begin{aligned} \Vert g_k\Vert \le \Vert d_k\Vert \le (1+\frac{2}{\sigma })\Vert g_k\Vert ,\forall \, k. \end{aligned}$$(13)

To achieve global convergence, certain basic assumptions are proposed.

Assumption

(i) The level set \(S=\{x| h(x)\le h(x_0)\}\) is well-defined and bounded, where \(x_0\) is the initial point.

(ii) The function h(x) is continuously differentiable and bounded below.

Under these assumptions, the following significant properties hold:

Property 1: The iteration sequence \(\{x_k\}\) is bounded.

Property 2: The gradient function g(x) is continuous on the level set.

We now turn to the global convergence of TT-TR-WP.

Theorem 3.1

If the sequences \(\{x_k,d_k,\alpha _k,g_k\}\) are generated by TT-TR-WP, then the following formula holds:

Proof

We argue by contradiction and first assume that

where \(\varepsilon _C\) is a positive constant.

Additionally, since the iteration sequence \(\{x_k\}\) is bounded, there exists a convergent subsequence \(\{x_{k_i}\}\), that is,

Similarly, since the gradient function is continuous, there exist \(\epsilon _1>0\) and an integer \(N_1>0\) such that

From formula (13), there exist \(\epsilon _2>0\) and an integer \(N_2>0\) satisfying

From (16), (17) and (11), the following formula holds:

On the other hand, the following formula is obtained from (7):

thus

Then, setting \(N=\max \{N_1,N_2\}\) and taking the limit on both sides along the subsequence \(\{x_{k_i}\},\) we can deduce that

This means that there exists a subsequence \(\{x_{k_i}\}\) such that

which contradicts relation (11). Hence the original formula holds and the proof is complete. \(\square \)

Remark 3

(i) Non-Lipschitz continuous gradient functions are prevalent. For instance, \(g(x) = \sin (\frac{1}{x})\) and \(g(x)=x^{\frac{3}{2}}\sin (\frac{1}{x})\) for \(x\in (0, 1].\)

(ii) The global convergence of TT-TR-WP is established under the weak Wolfe-Powell line search technique and non-Lipschitz continuity of the gradient function.

(iii) The sufficient descent and trust region properties, (11) and (12), simplify the convergence analysis.
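The first example in Remark 3(i) can be illustrated numerically. The short Python sketch below evaluates the difference quotient \(|g(x)-g(y)|/|x-y|\) of \(g(x)=\sin (\frac{1}{x})\) at pairs of points approaching 0, chosen (as an assumption of this illustration) so that \(g(x)=1\) and \(g(y)=-1\). The quotient grows without bound, so no single Lipschitz constant can hold on \((0,1]\). The helper name `difference_quotient` is hypothetical.

```python
import math

def g(x):
    """Example function from Remark 3(i): g(x) = sin(1/x) on (0, 1]."""
    return math.sin(1.0 / x)

def difference_quotient(n):
    """|g(x) - g(y)| / |x - y| for a pair of points with g(x) = 1, g(y) = -1."""
    x = 1.0 / (2 * n * math.pi + math.pi / 2)       # sin(1/x) = 1
    y = 1.0 / (2 * n * math.pi + 3 * math.pi / 2)   # sin(1/y) = -1
    return abs(g(x) - g(y)) / abs(x - y)

# The points move towards 0 as n grows, and the quotient keeps increasing.
quotients = [difference_quotient(n) for n in (1, 10, 100, 1000)]
for n, q in zip((1, 10, 100, 1000), quotients):
    print(n, q)
```

Analytically, the quotient for this pair of points equals \(2\pi (2n+\frac{1}{2})(2n+\frac{3}{2})\), which grows like \(8\pi n^2\).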

TT-TR-CG and theoretical analysis

This section proposes another modified three-term trust region CG algorithm, TT-TR-CG, and proves some of its properties.

In TT-TR-CG, the search direction has the following form:

where \(\mu > 0.\)

We first describe the proposed algorithm.

TT-TR-CG: A convergent three-term trust region CG algorithm with the weak Wolfe-Powell line search

- Step 0: Initialize \(x_0\in R^n\), \(d_0=-g_0\), constants \(\epsilon \in (0,1)\), \(\delta \in (0,\frac{1}{2})\), \(\tau \in (\delta ,1)\), \(\mu >0\), and set \(k=0\).

- Step 1: If \(\Vert g_k\Vert \le \epsilon \), stop.

- Step 2: Choose the step size \(\alpha _k\) satisfying formulas (6) and (7).

- Step 3: Update the iteration point \(x_{k+1} = x_k+\alpha _kd_k\).

- Step 4: If \(\Vert g_{k+1}\Vert \le \epsilon \), stop.

- Step 5: Update the search direction by formula (19).

- Step 6: Set \(k=k+1\), and go to Step 2.

Remark 4

(i) The search direction (19) satisfies both the sufficient descent and trust region properties simultaneously.

(ii) Global convergence analysis is established under non-Lipschitz continuity of the gradient function and the weak Wolfe-Powell line search technique.

(iii) The good numerical performance of the classical PRP algorithm is partly incorporated into TT-TR-CG through the specified denominator.

Lemma 4.1

The search direction (19) has the sufficient descent (4) and trust region (5) properties simultaneously without any conditions, i.e.,

and

Proof

The proof is similar to that of Lemma 3.1 for TT-TR-WP and is therefore omitted. \(\square \)

To obtain the global convergence, some basic assumptions are proposed.

Assumption

(i) The level set \(S=\{x| h(x)\le h(x_0)\}\) is defined and bounded, where \(x_0\) is an initial point.

(ii) The objective function h(x) is continuously differentiable and bounded below.

Theorem 4.1

If the sequences \(\{x_k,d_k,\alpha _k,g_k\}\) are generated by TT-TR-CG, then the following formula holds:

Proof

The proof is similar to that of Theorem 3.1 in “The global convergence of TT-TR-WP” and is therefore omitted. \(\square \)

Case studies

This section applies the proposed algorithms to restore noisy images and to solve large-scale unconstrained optimisation problems in order to test their numerical performance.

To further test the numerical performance, this paper introduces two baseline algorithms from Refs.26,28, namely MPRP and A-T-PRP-A, whose search directions are given by formulas (8) and (9), respectively. The former is the first three-term conjugate gradient algorithm and is widely cited. The latter is a recent algorithm that includes the step size in the search direction and is globally convergent without Lipschitz continuity. Both baseline algorithms have good numerical performance and theoretical properties in existing works.

The experimental environment consists of an Intel(R) Core(TM) i5-8250U CPU @ 1.60GHz 1.80 GHz with 16 GB RAM running on the Windows 11 operating system.

Image restoration

The restoration of noisy images is of great practical importance and is widely used. This subsection uses TT-TR-WP, TT-TR-CG and the baseline algorithms to restore noisy images in order to test their numerical performance. Three images are chosen because they are classical and widely used test images, see Refs.24,25.

The objective function and experimental settings are described as follows: The candidate noise index set is denoted as N, the objective function as \(\omega (u)\), and the edge-preserving function as \(\chi \). The true image containing \(K\times L\) pixels is denoted as x. For a more detailed explanation of image restoration, please refer to Refs.3,24,25,28.

where \(I = \{1, 2, \ldots , K\} \times \{1,2,\ldots ,L\},\) \(\zeta _{i, j}\) is the observed noisy image and \({\bar{\zeta }}_{i, j}\) is the verified image, and \(s_{min}\) and \(s_{max}\) are the minimum and maximum noisy pixel values. Consider the following optimization function

and

\(\phi _{i, j} = \{(i,j-1), (i,j+1),(i-1,j),(i+1,j)\}.\)

where \(\nu > 0.\)

where MSE is the mean square error between the original image and processed image and num is the number of bits.
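As an illustration of how MSE and the bit depth num enter the image quality measure, the following Python sketch computes PSNR under the assumption that the commonly used definition \(\text {PSNR}=10\log _{10}\frac{(2^{num}-1)^2}{\text {MSE}}\) is meant; the exact expression used in the experiments is not reproduced here, and the function name `psnr` and the sample arrays are hypothetical.

```python
import numpy as np

def psnr(original, restored, num_bits=8):
    """Peak signal-to-noise ratio in dB (assumed standard definition).

    MSE is the mean squared error between the two images and
    2**num_bits - 1 is the largest representable pixel value.
    """
    original = np.asarray(original, dtype=float)
    restored = np.asarray(restored, dtype=float)
    mse = np.mean((original - restored) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    peak = 2 ** num_bits - 1
    return float(10.0 * np.log10(peak ** 2 / mse))

# Hypothetical 4 x 4 images: every restored pixel is off by one gray level.
clean = np.full((4, 4), 100.0)
approx = clean + 1.0
value = psnr(clean, approx)
```

A larger PSNR indicates a restored image closer to the original, which is how the values in the tables are read.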

The stopping rule of the algorithms is \(\frac{\Vert h_{k+1}-h_k\Vert }{\Vert h_k\Vert }<\varepsilon \), and the parameters are \(\delta =0.2, \tau = 0.895, \sigma =0.1, \mu = 0.1, \varepsilon =10^{-6}.\)

In restoring noisy gray-scale images, we can conclude from Table 2 that TT-TR-WP exhibits the best numerical performance in terms of running time, TT-TR-CG is the second best, MPRP is third, and A-T-PRP-A is the slowest. Furthermore, if the performance of TT-TR-WP is taken as the standard, TT-TR-CG takes around 2.34 times longer, and the other algorithms take around 2.46 and 2.42 times longer, respectively. Table 3 reports the time proportions among all algorithms for each figure and for all figures, in which the biggest gap is 1.68, TT-TR-WP is far ahead of the others, and TT-TR-CG performs well in most situations. Additionally, the results in Table 4 further demonstrate that all algorithms obtain highly similar SSIM and PSNR values. Combining the above discussion, we conclude that, for highly similar restoration quality, TT-TR-WP and TT-TR-CG perform relatively well and the proposed algorithms are competitive.

In summary, TT-TR-WP exhibits impressive numerical performance, and TT-TR-CG is highly competitive with the others. To save space, this paper records only the numerical results and omits the restored images for noise ratios of 70% and 90%; see Fig. 1. In each row, the first column is obtained by TT-TR-WP, the second column by TT-TR-CG, the third column by A-T-PRP-A, and the last column by MPRP.

Color image restoration

To further evaluate the performance of the proposed algorithms, this section applies the algorithms to restore color images with different levels of noise. Peak signal-to-noise ratio (PSNR), structural similarity index measure (SSIM) and mean squared error (MSE) are widely used measures for image quality assessment and are used in this section. To save space, this paper records only the numerical results and omits the restored images for noise ratios of 20%, 60% and 80%. The stopping rule of the algorithms is \(\frac{\Vert h_{k+1}-h_k\Vert }{\Vert h_k\Vert }<\varepsilon \), and the parameters are \(\delta =0.0885, \tau = 0.885, \sigma =0.0015, \mu = 1.1555, \varepsilon =10^{-4}.\)

In Table 5, the total running times of the four algorithms are 73.83, 74.88, 80.02 and 74.52 s, respectively. Additionally, from Tables 6, 7, 8, the PSNR, MSE and SSIM values of the algorithms are highly similar, but the proposed algorithms are relatively competitive. The images restored by the various algorithms at a noise ratio of 40% are presented in Fig. 2. In each row, the first column is obtained by TT-TR-WP, the second column by TT-TR-CG, the third column by A-T-PRP-A, and the last column by MPRP.

General unconstrained optimization

To further test the numerical performance, this subsection applies the algorithms to solve large-scale unconstrained optimization problems. Sixty-five classical functions are randomly selected from Ref.2, as shown in Table 9, with dimensions of 3000, 6000, and 12,000. The stopping criterion is \(\Vert g(x_k)\Vert <\varepsilon \) or \(NI > 8000\), where NI is the iteration number, and \(g(x_k)\) is the gradient value at the point \(x_k\). The parameters used are \(\delta =0.2, \tau = 0.9, \sigma =0.001, \mu = 0.1, \varepsilon =10^{-6}\).

The running time in seconds is used as the measure of numerical performance, as shown in Table 10. The relative numerical performance on large-scale problems is illustrated in Fig. 3, in which the red line denotes TT-TR-WP, the black line denotes TT-TR-CG, the blue line denotes A-T-PRP-A, and the remaining line denotes MPRP. TT-TR-WP has a high initial value, which means that it possesses relatively good robustness. TT-TR-CG exhibits a gradually increasing trend throughout, which means that it possesses relatively good applicability. Both TT-TR-WP and TT-TR-CG possess better robustness and applicability than the others.

In summary, TT-TR-WP and TT-TR-CG have better numerical performance than the baseline algorithms in terms of applicability and robustness: TT-TR-WP has the best robustness and relatively good applicability, while TT-TR-CG is the opposite.

Conclusion

This paper introduces two three-term trust region conjugate gradient algorithms, TT-TR-WP and TT-TR-CG, which are capable of converging under non-Lipschitz continuous gradient functions without any additional conditions. These algorithms possess the sufficient descent and trust region properties and are globally convergent. To assess their numerical performance, we compare them with two classical algorithms in restoring noisy gray-scale and color images and in solving large-scale unconstrained problems. For highly similar SSIM and PSNR values on noisy gray-scale images, TT-TR-WP exhibits the best numerical performance in terms of running time, TT-TR-CG is the second best, MPRP is third, and A-T-PRP-A is the slowest. Furthermore, if the performance of TT-TR-WP is taken as the standard, TT-TR-CG takes around 2.34 times longer, and the other algorithms take around 2.46 and 2.42 times longer, respectively. In restoring the same color images, the proposed algorithms perform relatively well compared with the other algorithms. Additionally, in the comparative experiments on algorithm performance, the curve of TT-TR-CG has the maximum initial value, while the curve of TT-TR-WP is the second best, indicating that TT-TR-CG and TT-TR-WP are relatively more robust and remain stable across diverse situations. In summary, TT-TR-WP and TT-TR-CG exhibit relatively better performance in terms of applicability and robustness.

Data availability

All images are sourced from published papers or the internet, and there are no copyright disputes. All data generated or analysed during this study are included in this published article [and its supplementary information files].

References

Al-Baali, M. Descent property and global convergence of the Fletcher–Reeves method with inexact line search. IMA J. Numer. Anal. 5(1), 121–124 (1985).

Andrei, N. An unconstrained optimization test functions collection. Environ. Sci. Technol. 10(1), 6552–6558 (2008).

Cao, J. & Wu, J. A conjugate gradient algorithm and its applications in image restoration. Appl. Numer. Math. 152, 243–252 (2020).

Cheng, W. & Dai, Y. Gradient-based method with active set strategy for optimization. Math. Comput. 87(311), 1283–1305 (2017).

Dai, Y. Analysis of conjugate gradient methods, Ph.D. Thesis, Institute of Computational Mathematics and Scientific Engineering Computing, Chinese Academy of Sciences (1997).

Dai, Y. & Yuan, Y. A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10(1), 177–182 (1999).

Dai, Z. Two modified HS type conjugate gradient methods for unconstrained optimization problems. Nonlinear Anal. 74(3), 927–936 (2011).

Dai, Z. & Zhu, H. A modified Hestenes–Stiefel-type derivative-free method for large-scale nonlinear monotone equations. 8(2) (2020).

Dehnavi, M. et al. Enhancing the performance of conjugate gradient solvers on graphic processing units. IEEE Trans. Magn. 47(5), 1162–1165 (2017).

Fletcher, R. Practical Methods of Optimization 2nd edn. (Wiley, 1987).

Grippo, L. & Lucidi, S. A globally convergent version of the Polak-Ribière conjugate gradient method. Math. Program. 78(3), 375–391 (1997).

Hager, W. & Zhang, H. A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16(1), 170–192 (2005).

Hager, W. & Zhang, H. Algorithm 851: CG-DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans. Math. Softw. (TOMS) 32(1), 113–137 (2006).

Hu, W., Wu, J. & Yuan, G. Some modified Hestenes–Stiefel conjugate gradient algorithms with application in image restoration. Appl. Numer. Math. (2020).

Li, Z., Zhou, W. & Li, D. Global convergence of a modified Fletcher–Reeves conjugate gradient method with Armijo-type line search. Numerische Mathematik 104(4), 561–572 (2006).

Liu, J. et al. An efficient projection-based algorithm without Lipschitz continuity for large-scale nonlinear pseudo-monotone equations. J. Comput. Appl. Math. https://doi.org/10.1016/j.cam.2021.113822 (2021).

Min, L. & Feng, H. A sufficient descent LS conjugate gradient method for unconstrained optimization problems. Appl. Math. Comput. 218(5), 1577–1586 (2011).

Mtagulwa, P. & Kaelo, P. An efficient modified PRP-FR hybrid conjugate gradient method for solving unconstrained optimization problems. Appl. Numer. Math. 145(Nov.), 111–120 (2019).

Sheng, Z. & Yuan, G. An effective adaptive trust region algorithm for nonsmooth minimization. Comput. Optim. Appl. 71(1), 251–271 (2018).

Sheng, Z. et al. An adaptive trust region algorithm for large-residual nonsmooth least squares problems. J. Ind. Manag. Optim. 14(2), 707 (2018).

Sheng, Z., Yuan, G. & Cui, Z. A new adaptive trust region algorithm for optimization problems. ACTA Math. Sci. 38(2), 479–496 (2018).

Tang, C., Wei, Z. & Li, G. A new version of the Liu–Storey conjugate gradient method. Appl. Math. Comput. 189(1), 302–313 (2007).

Wei, Z., Yao, S. & Liu, L. The convergence properties of some new conjugate gradient methods. Appl. Math. Comput. 183(2), 1341–1350 (2006).

Yuan, G., Li, T. & Hu, W. A conjugate gradient algorithm for large-scale nonlinear equations and image restoration problems. Appl. Numer. Math. 147, 129–141 (2020).

Yuan, G., Lu, J. & Wang, Z. The modified PRP conjugate gradient algorithm under a non-descent line search and its application in the Muskingum model and image restoration problems. Soft Comput. 25(8), 5867–5879 (2021).

Yuan, G. Modified nonlinear conjugate gradient methods with sufficient descent property for large-scale optimization problems. Optim. Lett. 3, 11–21 (2009).

Yuan, G., Meng, Z. & Li, Y. A modified Hestenes and Stiefel conjugate gradient algorithm for large-scale nonsmooth minimizations and nonlinear equations. J. Optim. Theory Appl. 168(1), 129–152 (2016).

Yuan, G., Yang, H. & Zhang, M. Adaptive three-term PRP algorithms without gradient Lipschitz continuity condition for nonconvex functions. Numer. Algorithms 91, 145–160 (2022).

Zhu, Z., Zhang, D. & Wang, S. Two modified DY conjugate gradient methods for unconstrained optimization problems. Appl. Math. Comput. 373, 125004 (2020).

Zhang, L., Zhou, W. & Li, D. A descent modified Polak–Ribière–Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 26(4), 629–640 (2006).

Acknowledgements

We first thank the editor and the referee for their useful suggestions and comments, which greatly improved this manuscript. This work is supported by Guangxi science and technology base and talent project Grant AD22080047, Innovation Project of Guangxi Graduate Education Grant YCBZ2021027, National Natural Science Foundation of China under Grant No. 12261027, and the special foundation for Guangxi Ba Gui Scholars.

Author information

Contributions

W.H. wrote the main manuscript text, and J.W. and G.Y. were responsible for the layout and revision of the article.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hu, W., Wu, J. & Yuan, G. Some convergently three-term trust region conjugate gradient algorithms under gradient function non-Lipschitz continuity. Sci Rep 14, 10851 (2024). https://doi.org/10.1038/s41598-024-60969-9

DOI: https://doi.org/10.1038/s41598-024-60969-9
