Abstract

The Normalized Mutual Information (NMI) metric is widely used to evaluate clustering and community detection algorithms. This study examines how NMI behaves as the number of detected communities changes and uncovers a significant drawback: NMI exhibits a pronounced bias as the number of communities increases. While previous studies have noted this biased behavior, they have neither provided a formal proof nor identified its cause, leaving a gap in the existing literature. In this study, we fill this gap by using a mathematical approach to formally demonstrate why NMI exhibits biased behavior, thereby establishing its unsuitability as a metric for evaluating clustering and community detection algorithms. Crucially, our study shows that entropy-based metrics that employ logarithmic functions are vulnerable to a similar bias.


Introduction

Background

Community detection (CD) within social networks has emerged as a pivotal area of research, given its potential to unravel intricate patterns of interaction and group dynamics. It is used in many disciplines, including biology1, criminology2, economics3, and urban planning4, to mention just a few. In particular, this topic has become a critical field in the battle against disinformation. Social networks are often the primary conduits for the spread of disinformation, and communities within these networks play a significant role in disseminating and amplifying misleading content. By identifying and understanding these communities, we can gain valuable insights into the dynamics of disinformation spread, enabling more effective interventions5,6. This is essential, as the rapid spread of medical7,8, political9,10, social11,12,13, and scientific14,15,16 misinformation and disinformation is among the greatest civilizational challenges of our time17,18,19.

Research has shown that disinformation tends to spread rapidly within tight-knit communities, which are often characterized by homogeneous beliefs and high levels of trust among members20. These communities can act as echo chambers, reinforcing and amplifying disinformation and using increasingly sophisticated strategies to mask their agendas21. By employing CD algorithms, we can identify these communities and understand their structure and behavior, providing a basis for targeted, community-specific strategies to combat disinformation. As such, CD in social networks is not only a theoretical exercise but also a practical tool in the fight against disinformation. However, even though many new approaches have been tested22,23,24,25, we are still far from an optimal one.

A plethora of algorithms have been developed to enhance the accuracy of CD, yet comprehensive and diverse sets of metrics for evaluating these algorithms are lacking. Predominantly, metrics such as modularity, conductance, pairwise F-measure (PWF), NMI, variation of information (VI), purity, and adjusted rand index (ARI) have been employed to assess the performance of CD algorithms. These metrics, which were originally designed for evaluating clustering techniques, have been adapted for CD due to the conceptual similarities between clustering and community detection26.

Certain metrics, such as modularity, operate based on the internal structure of communities identified by a specific algorithm, independent of the availability of ground truth27. Conversely, metrics such as the NMI, ARI, VI, purity, and F-measure necessitate the availability of ground truth for deployment28. Regardless of the accessibility of ground truth, each metric is subject to a resolution limit29, which is a factor that has been highlighted and well established in existing research30,31,32,33,34.

Motivation

Predominantly, external metrics (those reliant on ground truth) construct a contingency matrix (table), where each cell represents the intersection of members between actual classes and detected communities. In some instances, the accuracy of CD algorithms is evaluated solely based on the number of true positive members. However, an effective CD algorithm should take into account two primary aspects of communities: distribution and joint membership. Therefore, the evaluation metric for CD algorithms must be capable of discerning the distribution of communities and joint members in relation to the ground truth. This implies that the critical factor is not merely identifying joint members but also accurately determining the number of communities relative to the ground truth35.

Despite the identification of biased behavior in these measures by several researchers32,33,34, a mathematical explanation for this issue has not been provided. Most reports attribute this bias to the finite size effect. In this study, we aim to formally demonstrate why the NMI metric exhibits bias. To this end, we first present NMI results across 40 scenarios (each representing a different number of communities assumed to be detected by a specific algorithm) and compare them with five other well-established measures. We then dissect the NMI formula and prove that it inherently leads to biased behavior36.

Contribution

Community detection is a critical concern spanning diverse scientific disciplines, including biology, health, social networks, politics, targeted marketing, recommender systems, link prediction, and criminology37. As such, the accuracy of community detection algorithms is of paramount importance, and the evaluation metric for these algorithms assumes even greater significance. Given the widespread use of the NMI in evaluating community detection algorithms, illuminating its biased behavior contributes significantly to fields that employ community detection studies. Moreover, substantiating this bias establishes the foundation for analyzing evaluation metrics of community detection algorithms that incorporate a logarithmic function38. It also opens the door to crafting new metrics while considering issues rooted in logarithmic functions.

The remainder of this paper is structured as follows: The subsequent section reviews the relevant literature in this domain. Then “Preliminaries and notations” are introduced. “Problem statement” delves into a detailed description of the NMI drawback. The proof of the NMI problem is presented in “Proof of biased behavior of NMI”. In “Case study”, we present a case study based on a real-world dataset and “Conclusion” concludes the paper with key findings and implications.

Related works

CD algorithms aim to identify groups of nodes characterized by dense interconnections compared to the rest of the network39,40. Girvan and Newman39 introduced the modularity metric to evaluate the accuracy of communities detected by their algorithm, sparking the development of numerous algorithms based on this metric. However, Fortunato26 highlighted a resolution limit in the modularity metric, indicating its inability to detect small-sized communities. Cai et al.31 further demonstrated that maximizing modularity is an NP-hard problem and that a random network without any communities can achieve a high Q value. Chen, Nguyen, and Szymanski41 underscored the inconsistencies of the modularity metric, noting its tendency to favor either small or large communities in different scenarios. They proposed a new measure, modularity density, which combines modularity with split penalty and community density to circumvent the dual problems inherent in modularity.

The NMI was first considered a precise metric by Danon et al.36, who reported its sensitivity to errors in the community detection procedure. They defined \({Z}_{out}\) as the average number of links a node has to members of other communities and showed that, as \({Z}_{out}\) increases, the NMI tends to zero. Subsequent research has addressed the limitations of the NMI measure, with Romano et al.42 emphasizing the role of the number of clusters in the evaluation metrics. Amelio and Pizzuti30 argued that the NMI is not fair, as solutions with a high number of clusters receive disproportionately high NMI. Zhang34 demonstrated that the NMI is significantly affected by systematic errors due to finite network sizes and proposed the relative normalized mutual information (rNMI). Lai and Nardini32 introduced the corrected normalized mutual information (cNMI) to address the reverse finite size problem of the rNMI. Liu, Cheng, and Zhang33 highlighted the drawbacks of NMI and its improved versions, such as rNMI and cNMI, noting that these measures often overlook the importance of small communities. Rossetti, Pappalardo, and Rinzivillo43 introduced community precision and community recall to evaluate CD algorithms, addressing the high computational complexity of the NMI. Arab and Hasheminezhad44 also reported scalability problems with the NMI in large-scale data.

Other researchers have proposed alternative measures for evaluating community detection and clustering algorithms. Meilă45 introduced the variation of information (VI) metric, an entropy-based measure that operates based on mutual information. Wagner and Wagner46 categorized measures into those based on counting pairs, set overlaps, and mutual information and concluded that information-theoretic measures outperform pair-counting and set-overlap measures. Santos and Embrechts47 utilized the ARI for cluster validation and feature selection. Yang and Leskovec48 compared 13 measures for evaluating community detection algorithms, categorizing them into four groups and concluding that conductance and triad-participation-ratio have the best performance in identifying communities. Saltz, Prat-Pérez, and Dominguez-Sal49 introduced a new metric for the CD problem, weighted community clustering (WCC), which operates based on the distribution of triangles in the graph.

Preliminaries and notations

Normalized Mutual Information (NMI)

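The NMI serves as a metric for assessing the performance of community detection algorithms. It compares two partitions (clusterings or community structures) and yields a value between 0 and 1, where a higher value indicates greater similarity between the two partitions. As an external metric, the NMI requires class labels, which means that the ground truth must be available when employing this metric. The NMI is calculated according to Eq. (1):

\[NMI(A,B)=\frac{2\,I(A,B)}{H(A)+H(B)} \tag{1}\]

where \(I(A,B)\) is the mutual information and \(H\) is the entropy, as shown in Eqs. (2) and (3):

\[I(A,B)=\sum_{i}\sum_{j}\frac{{C}_{ij}}{N}\log\frac{{C}_{ij}N}{{C}_{i.}{C}_{.j}} \tag{2}\]

\[H(A)=-\sum_{i}\frac{{C}_{i.}}{N}\log\frac{{C}_{i.}}{N},\qquad H(B)=-\sum_{j}\frac{{C}_{.j}}{N}\log\frac{{C}_{.j}}{N} \tag{3}\]

Expanding Eq. (1) with Eqs. (2) and (3) gives Eq. (4):

\[NMI(A,B)=\frac{-2\sum_{i}\sum_{j}{C}_{ij}\log\frac{{C}_{ij}N}{{C}_{i.}{C}_{.j}}}{\sum_{i}{C}_{i.}\log\frac{{C}_{i.}}{N}+\sum_{j}{C}_{.j}\log\frac{{C}_{.j}}{N}} \tag{4}\]

Here N is the number of nodes, \({C}_{i.}=\sum_{j}{C}_{ij}\), \({C}_{.j}=\sum_{i}{C}_{ij}\), and cells with \({C}_{ij}=0\) contribute nothing to the sums.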

Suppose there are two networks, \({Net}_{1}\) and \({Net}_{2}\), each consisting of a set of vertices (V) and a set of edges (E). \({Net}_{1}\) consists of S communities, denoted as \(A=\{{A}_{1},{A}_{2},\dots ,{A}_{S}\}\), while \({Net}_{2}\) consists of R communities, denoted as \(B=\{{B}_{1},{B}_{2},\dots ,{B}_{R}\}\). \({C}_{ij}\) denotes the number of nodes shared by communities \({A}_{i}\) and \({B}_{j}\). If \(A=B\), then \(NMI(A,B)=1\); if A and B are statistically independent, then \(NMI(A,B)=0\).
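As an illustration, Eq. (4) can be evaluated directly from the counts \({C}_{ij}\). The Python sketch below is our own minimal implementation, not code from the paper; the function name `nmi_from_table` is hypothetical. It uses natural logarithms (the NMI value does not depend on the base) and skips empty cells, following the convention \(0\log 0=0\).

```python
import math

def nmi_from_table(C):
    """Evaluate Eq. (4) for a contingency table C, where C[i][j] = C_ij."""
    n = sum(sum(row) for row in C)                 # N, total number of nodes
    row_sums = [sum(row) for row in C]             # C_i.
    col_sums = [sum(col) for col in zip(*C)]       # C_.j
    # Numerator sum: only non-zero cells contribute (0 log 0 = 0).
    k = sum(
        c * math.log(c * n / (row_sums[i] * col_sums[j]))
        for i, row in enumerate(C)
        for j, c in enumerate(row)
        if c > 0
    )
    # Denominator: one entropy-like term per partition.
    l = sum(s * math.log(s / n) for s in row_sums if s > 0)
    m = sum(s * math.log(s / n) for s in col_sums if s > 0)
    # Undefined (division by zero) when both partitions have a single community.
    return -2 * k / (l + m)

print(nmi_from_table([[5, 0], [0, 3]]))   # identical partitions: approximately 1.0
print(nmi_from_table([[5], [3]]))         # one community vs. two: 0.0
```

The single-column table in the second call corresponds to an algorithm that places all nodes in one community, which yields an NMI of zero.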

In addition to the NMI, other measures can be used to evaluate the accuracy of CD and clustering algorithms. Table 1 lists well-known measures in this domain; we list them here to highlight how the NMI differs from other measures in practice.

Essentially, to compute the measures listed in Table 1, a contingency table (CT) is employed. This table is created based on the joint members between communities detected by a certain algorithm and the ground truth. The contingency table used for computing the NMI is presented in Table 2.
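A minimal sketch of building the contingency table from two label lists follows, assuming non-overlapping communities given as one label per node. The helper name `contingency_table` and the toy labels for eight nodes are our own illustration.

```python
from collections import Counter

def contingency_table(truth, detected):
    """Rows follow ground-truth communities, columns follow detected ones.

    truth[v] and detected[v] are the community labels of node v
    (non-overlapping communities, one label per node).
    """
    rows = sorted(set(truth))
    cols = sorted(set(detected))
    shared = Counter(zip(truth, detected))        # (i, j) -> C_ij
    return [[shared[(i, j)] for j in cols] for i in rows]

truth = [0, 0, 1, 1, 2, 2, 3, 3]       # four ground-truth communities
detected = [0, 0, 0, 0, 1, 1, 1, 1]    # two detected communities
table = contingency_table(truth, detected)
print(table)                            # [[2, 0], [2, 0], [0, 2], [0, 2]]
# Every node is counted exactly once, so the cells add up to N.
print(sum(map(sum, table)) == len(truth))   # True
```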

Table 3 presents important notations.

Problem statement

In this section, we present the main drawback of the NMI. We illustrate this problem through an example. Example 1. Suppose we have 40 members and eight gold standard communities (ground truth) as follows:

\({\mathrm{\varnothing }}_{1}^{S}=\{{a}_{1},...,{a}_{5}\}\), \({\mathrm{\varnothing }}_{2}^{S}=\{{a}_{6},...,{a}_{10}\}\), \({\mathrm{\varnothing }}_{3}^{S}=\{{a}_{11},...,{a}_{15}\}\), \({\mathrm{\varnothing }}_{4}^{S}=\{{a}_{16},...,{a}_{20}\}\), \({\mathrm{\varnothing }}_{5}^{S}=\{{a}_{21},...,{a}_{25}\}\), \({\mathrm{\varnothing }}_{6}^{S}=\{{a}_{26},...,{a}_{30}\}\), \({\mathrm{\varnothing }}_{7}^{S}=\{{a}_{31},...,{a}_{35}\}\), \({\mathrm{\varnothing }}_{8}^{S}=\{{a}_{36},...,{a}_{40}\}\)

We analyze all possible states based on the number of communities. Table 4 and Fig. 1 present the NMI values for different states compared with those of the ARI, PWF, Fowlkes-Mallows index, Hubert statistic, and Jaccard index.

The number of communities in the ground truth is 8, and it should remain constant across all the experiments. The second column shows the number of communities detected by a specific algorithm, while columns 3–8 display the values for each measure. The last column represents the number of ground truth communities that share common members with the detected communities. For example, if a certain algorithm detects two communities as follows:

The \({\varvec{\omega}}\) value is 8 since all members of the 8 ground truth communities share common members with the detected communities.

In this study, we assume the best-case scenario in which the detected communities of a certain algorithm lead to the highest NMI value. Thus, we propose:

Axiom 1: The highest NMI value is obtained if and only if the detected communities have the highest possible number of common members with the ground truth and if the highest value of \({\varvec{\omega}}\) is maintained.

Now, as shown in Table 4 and Fig. 1, everything appears to be fine when R is less than S. However, the situation changes when R increases and surpasses S. At first glance, the fact that the metrics decline as the number of members per community decreases may not seem problematic. However, upon closer examination, it becomes evident that the NMI value decreases less steeply than the other metrics do. This raises the question of what goes wrong when all algorithms are compared using a single metric such as the NMI.

The problem is most evident when R equals N, meaning that each community includes only one member, which is the worst-case scenario. Surprisingly, even in this unfavorable situation, the NMI value still indicates high accuracy. On the other hand, when R equals one or two, the NMI value is very low. For instance, Table 4 shows that when R is 2, the NMI is 0.5, whereas in the worst-case scenario, with R equal to 40, the NMI is 0.72. Thus, if Alg1 and Alg2 return 2 and 40 communities, respectively, the NMI rates their accuracies as 0.5 and 0.72, respectively. Similarly, the NMI is 0.8 both when R is 4 and when R is 27. In both examples, the NMI suggests that the algorithm that detects more communities is better. In reality, however, with respect to the 8 ground-truth communities, an algorithm that detects 4 communities may be better than one that detects 27.
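The NMI values quoted above for R = 2, 4, and 40 can be reproduced with a short Python sketch. We assume best-case partitions consistent with Axiom 1: for R = 2 and R = 4, whole ground-truth communities are merged in equal groups, and for R = 40 every member is a singleton. Table 4's exact partitions are not shown here, so these are our assumptions; the R = 27 case is omitted because its partition is not specified.

```python
import math
from collections import Counter

def nmi(truth, detected):
    """NMI of Eq. (4) computed directly from two label lists."""
    n = len(truth)
    cells = Counter(zip(truth, detected))   # non-zero C_ij
    rows = Counter(truth)                   # C_i.
    cols = Counter(detected)                # C_.j
    k = sum(c * math.log(c * n / (rows[i] * cols[j])) for (i, j), c in cells.items())
    l = sum(s * math.log(s / n) for s in rows.values())
    m = sum(s * math.log(s / n) for s in cols.values())
    return -2 * k / (l + m)

# Example 1: 40 members, 8 ground-truth communities of 5 members each.
truth = [v // 5 for v in range(40)]
scenarios = {
    2: [v // 20 for v in range(40)],   # merge ground-truth communities 4 at a time
    4: [v // 10 for v in range(40)],   # merge them 2 at a time
    40: list(range(40)),               # every member in its own community
}
for r, detected in scenarios.items():
    print(r, round(nmi(truth, detected), 2))   # 0.5, 0.8, 0.72
```

The worst-case partition (R = 40) scores higher than the two-community partition, which is the anomaly described above.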

Proof of biased behavior of NMI

As discussed in the previous section, when the number of detected communities (R) increases and exceeds the number of ground-truth communities (S), the NMI exhibits biased behavior. Therefore, in this section, we analyze the effect of the number of communities on this measure. First, we decompose the NMI formula, which allows us to examine how NMI values change from the minimum to the maximum. Subsequently, we explain why NMI changes are more pronounced when \(R<S\) than when \(R>S\). Note that Axiom 1 is assumed to hold for all lemmas and their proofs.

Decomposition

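For convenience, we restate Eq. (4):

\[NMI(A,B)=\frac{-2\sum_{i}\sum_{j}{C}_{ij}\log\frac{{C}_{ij}N}{{C}_{i.}{C}_{.j}}}{\sum_{i}{C}_{i.}\log\frac{{C}_{i.}}{N}+\sum_{j}{C}_{.j}\log\frac{{C}_{.j}}{N}}\]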

We decompose the above equation to examine the behavior of each component. The NMI consists of three main components: the common members (\({C}_{ij}\)), the sum of common members for each community detected by a particular algorithm (\({C}_{.j}\)), and the sum of common members for each community in the ground truth (\({C}_{i.}\)). Here, S denotes the number of communities in the ground truth, and R denotes the number of communities detected by a specific algorithm. We denote the set of common members (the cells of the contingency table) as Z. With a slight abuse of notation, S and R also denote the sets of community sizes in the ground truth and in the detected partition, respectively, as defined next.

\(S=\{{s}_{1},{s}_{2},\dots ,{s}_{S}\}\) is the set of community sizes in the ground truth, where \({s}_{i}\) denotes the number of members in community i. Because these sizes always sum to N, the elements of S are inversely related: for a fixed N, enlarging one community necessarily shrinks at least one other.

\(R=\{{r}_{1},{r}_{2},\dots ,{r}_{R}\}\) is the set of community sizes detected by a certain algorithm, where \({r}_{j}\) denotes the number of members in community j. Again, the elements of R are inversely related in the same sense.

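Suppose that:

\[K=\sum_{i=1}^{S}\sum_{j=1}^{R}{C}_{ij}\log\frac{{C}_{ij}N}{{C}_{i.}{C}_{.j}},\qquad L=\sum_{i=1}^{S}{C}_{i.}\log\frac{{C}_{i.}}{N},\qquad M=\sum_{j=1}^{R}{C}_{.j}\log\frac{{C}_{.j}}{N}\]

so that Eq. (4) can be written as \(NMI=\frac{-2K}{L+M}\).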

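L and M are negative whenever the corresponding partition has more than one community: if \(S>1\), then \(0<{C}_{i.}<N\), so \(\log\frac{{C}_{i.}}{N}<0\) and \({C}_{i.}\log\frac{{C}_{i.}}{N}<0\) for every i; the same argument applies to \({C}_{.j}\) in M when \(R>1\).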

In addition, K is non-negative, since it equals N times the mutual information \(I(A,B)\); therefore, \(-2K\) is non-positive, and because the denominator \(L+M\) is negative, the NMI value is non-negative.

and:

Lemma 1

\(N={\sum }_{j=1}^{R}{r}_{j}={\sum }_{i=1}^{S}{s}_{i}\)

Proof

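In simple terms, an algorithm assigns all N members to communities, distributing them among R communities; the same holds for the ground truth, which distributes the N members among S communities. Formally, since every member belongs to exactly one detected community and exactly one ground-truth community, summing the contingency table by columns and by rows gives \(N=\sum_{i=1}^{S}\sum_{j=1}^{R}{C}_{ij}=\sum_{j=1}^{R}{C}_{.j}=\sum_{i=1}^{S}{C}_{i.}\), where \({C}_{.j}={r}_{j}\) and \({C}_{i.}={s}_{i}\).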

From minimum to maximum

Lemma 2

The minimum value of NMI is obtained if \(R=1\) and \(S>1\), or if \(S=1\) and \(R>1\).

Proof

If \(R=1\), the algorithm places all members in one community, whereas \(S>1\) means that the ground truth contains more than one community. Consequently, \({C}_{ij}={C}_{i.}\) and \({C}_{.j}=N\), so \(K=0\). Similarly, when \(S=1\) and \(R>1\), the NMI is zero. Formally, this can be shown as:

In both cases K is zero: because \({C}_{ij}N={C}_{i.}{C}_{.j}\) for every non-zero cell, each logarithm equals \(\log 1=0\); therefore, \(NMI=0\).
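A quick numerical check of Lemma 2 on the Example 1 ground truth follows. This is our own sketch; `k_term` is a hypothetical helper that computes K as defined above, with natural logarithms.

```python
import math
from collections import Counter

def k_term(truth, detected):
    """K: the sum over non-zero cells of C_ij * log(C_ij * N / (C_i. * C_.j))."""
    n = len(truth)
    rows, cols = Counter(truth), Counter(detected)
    return sum(
        c * math.log(c * n / (rows[i] * cols[j]))
        for (i, j), c in Counter(zip(truth, detected)).items()
    )

truth = [v // 5 for v in range(40)]   # S = 8 (Example 1)
one_block = [0] * 40                  # R = 1: all members in one community
print(k_term(truth, one_block))       # 0.0, hence NMI = -2K / (L + M) = 0
print(k_term(one_block, truth))       # 0.0 for the symmetric case S = 1, R = 8
```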

\({\varvec{R}}={\varvec{S}}\)

Lemma 3

The maximum value of NMI is 1, attained if \(R=S\) and, for all i and j, \({C}_{ij}\ne 0\) when \(i=j\) and \({C}_{ij}=0\) when \(i\ne j\); that is, the CT is a square diagonal matrix.

Proof

When \(R=S\) and only the diagonal elements of the CT are non-zero, the NMI reaches its maximum value of 1. For this, each ground-truth community must match a detected community both in size and in membership. This does not imply that all communities must have the same number of members. Thus, when R equals S, the CT is square, and because each ground-truth community has the same members as its detected counterpart, no community's members are split across two communities, so the CT is diagonal. Having only one non-zero cell in each row and column leads to:

Since the CT is diagonal, each row and each column contains exactly one non-zero cell; therefore:

In addition:

∴

Furthermore, this can be demonstrated through contradiction by considering the case when L and M are not equal, therefore \(R\ne S\). If \(R>S\), it implies that some members are distributed across different communities. This distribution decreases the number of common members, consequently increasing the absolute value of the denominator in the fraction. Consequently, the NMI decreases, as shown in Eqs. (10) and (11):

Now, if \(S>R\), the absolute value of the numerator decreases. This occurs because the logarithmic reduction has a smaller slope compared to the effect of the coefficient.
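A small numerical illustration of Lemma 3 follows, using made-up community sizes of 3, 2, and 5 (our own sketch, natural logarithms). Relabeling does not matter, unequal sizes still give an NMI of 1, and both splitting and merging a community push the value below 1.

```python
import math
from collections import Counter

def nmi(truth, detected):
    """Eq. (4) computed from two label lists (natural logarithms)."""
    n = len(truth)
    rows, cols = Counter(truth), Counter(detected)        # C_i. and C_.j
    k = sum(c * math.log(c * n / (rows[i] * cols[j]))
            for (i, j), c in Counter(zip(truth, detected)).items())
    l = sum(s * math.log(s / n) for s in rows.values())
    m = sum(s * math.log(s / n) for s in cols.values())
    return -2 * k / (l + m)

truth = [0] * 3 + [1] * 2 + [2] * 5          # unequal sizes: 3, 2, 5
relabeled = [7] * 3 + [4] * 2 + [9] * 5      # same partition, other label names
split = [7] * 3 + [4] * 2 + [9] * 4 + [8]    # R = S + 1: one member split off
merged = [7] * 5 + [9] * 5                   # R = S - 1: two communities merged

for name, detected in [("relabeled", relabeled), ("split", split), ("merged", merged)]:
    print(name, round(nmi(truth, detected), 3))
```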

Because the ground truth is fixed, L is constant, so K and M drive the NMI value. The following example illustrates this with two algorithms, Alg 1 and Alg 2, and their respective communities.

Example 2

Suppose the ground truth consists of 4 communities: \(\{{a}_{1},{a}_{2}\},\{{a}_{3},{a}_{4}\},\{{a}_{5},{a}_{6}\},\{{a}_{7},{a}_{8}\}\)

Algorithm Alg 1 detects two communities: \(\{{a}_{1},{a}_{2},{a}_{3},{a}_{4}\},\{{a}_{5},{a}_{6},{a}_{7},{a}_{8}\}\). In this case, the values of K, L, M, and NMI for Alg 1 are as follows:

Now, consider Algorithm Alg 2, which detects six communities: \(\{{a}_{1},{a}_{2}\},\{{a}_{3},{a}_{4}\},\{{a}_{5}\},\{{a}_{6}\},\{{a}_{7}\},\{{a}_{8}\}\). In this case, the values of K, L, M, and NMI for Alg 2 are as follows:

These values demonstrate the roles of K and M in the NMI formula, and how they contribute to the calculation of the NMI value for Alg 1 and Alg 2, respectively.
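Because the numerical values of K, L, M, and NMI for Example 2 are not reproduced above, the following sketch recomputes them. It uses base-2 logarithms, which is our assumption since the paper does not state the base; the NMI itself is base-independent, while K, L, and M scale with the base.

```python
import math
from collections import Counter

def klm(truth, detected):
    """Return (K, L, M, NMI) of Eq. (4) using base-2 logarithms."""
    n = len(truth)
    rows, cols = Counter(truth), Counter(detected)
    k = sum(c * math.log2(c * n / (rows[i] * cols[j]))
            for (i, j), c in Counter(zip(truth, detected)).items())
    l = sum(s * math.log2(s / n) for s in rows.values())
    m = sum(s * math.log2(s / n) for s in cols.values())
    return k, l, m, -2 * k / (l + m)

truth = ["g1", "g1", "g2", "g2", "g3", "g3", "g4", "g4"]   # a1..a8
alg1 = ["c1"] * 4 + ["c2"] * 4                             # two communities
alg2 = ["c1", "c1", "c2", "c2", "c3", "c4", "c5", "c6"]   # six communities
for name, detected in (("Alg 1", alg1), ("Alg 2", alg2)):
    k, l, m, score = klm(truth, detected)
    print(name, round(k, 3), round(l, 3), round(m, 3), round(score, 3))
```

With base-2 logarithms this gives K = 8, L = -16, M = -8, and NMI ≈ 0.667 for Alg 1, and K = 16, L = -16, M = -20, and NMI ≈ 0.889 for Alg 2. L is identical for both algorithms, and Alg 2, which detects more communities than the ground truth contains, receives the higher score.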

\({\varvec{R}}\ne {\varvec{S}}\)

Now, let us discuss the scenarios where the number of communities is greater or less than the number of communities in the ground truth.

\({\varvec{R}}>{\varvec{S}}\)

It is clear that \(|{\varvec{Z}}|={\varvec{R}}\times {\varvec{S}}\)

For this state:

Lemma 4

If \(R>S\), then the maximum value of NMI is obtained if and only if the cardinality of the set of common members (Z) is equal to \({\varvec{R}}\times ({\varvec{R}}-1)\), and if the number of pairwise common members (\({C}_{RS}\)) that are not equal to zero is equal to or greater than the cardinality of \({\varvec{R}}\).

First, note that fewer than |R| non-zero cells in the contingency table are not possible, because a detected community without any common members would be empty. This argument is related to the pigeonhole principle: if n items are placed into m containers, where \(n>m\), then at least one container must contain more than one item.

In this scenario, every detected community must have at least one common member with the ground truth, so Z contains at least |R| non-zero elements. This can be illustrated with an example.

Example 3

Let us suppose that the ground truth has 2 communities, and a certain algorithm detects 3 communities as follows:

Table 5 displays the number of common members (Z). The number of nonzero elements in Z is 3, which is equal to or greater than |R|.
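Table 5 is not reproduced here, so the sketch below uses hypothetical labels for six nodes (S = 2, R = 3) to check the bound: the number of non-zero cells is never smaller than R.

```python
from collections import Counter

def nonzero_cells(truth, detected):
    """Number of non-zero cells of the contingency table (non-zero elements of Z)."""
    return len(Counter(zip(truth, detected)))

# Hypothetical labels for six nodes: Table 5's partition is not reproduced here.
truth = [1, 1, 1, 2, 2, 2]                       # S = 2
aligned = ["x", "x", "x", "y", "y", "z"]         # R = 3, each community inside one class
mixed = ["x", "x", "y", "y", "z", "z"]           # R = 3, community "y" straddles both classes

for detected in (aligned, mixed):
    r = len(set(detected))
    print(r, nonzero_cells(truth, detected))      # never fewer cells than R
```

The aligned partition reaches the lower bound of three non-zero cells, while the mixed partition needs four.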

Proof (lemma 4)

The greater the difference between R and S, the more the detected communities fragment the ground-truth communities. This results in fewer common members between the communities in R and S, which in turn decreases the NMI. Additionally, if the number of non-zero elements in Z is greater than R, there are fewer common members per pair of communities in S and R, which further reduces the NMI.

\({\varvec{R}}<{\varvec{S}}\)

For this state:

Lemma 5

If \({\varvec{R}}<{\varvec{S}}\), then the maximum value of NMI is obtained if and only if the cardinality of the set of common members (Z) is equal to \({\varvec{S}}\times ({\varvec{S}}-1)\), and if the number of pairwise common members (\({C}_{RS}\)) that are not equal to zero is equal to the cardinality of S.

Lemma 5 can be proven in the same way as Lemma 4.

Slope of change in the NMI

Lemma 6

When the difference between R and S increases, the NMI values change with a steeper slope when \(R<S\) than when \(R>S\).

Proof

By applying Lemma 4 and Lemma 5, we can demonstrate that if there is a difference between R and S, the optimal NMI value is obtained when the difference between R and S is 1. In other words, as the difference between R and S increases, the NMI decreases.

Equation (6) can be written as the function in Eq. (12), where we regard NMI as \(f(x,y,z)\), \(K=g(x,y,z)\), and \(M=h(z)\):

s.t. \(x={C}_{ij} , y={C}_{i.} , z={C}_{.j}\)

where \(O=({C}_{1.}{\text{log}}\left({C}_{1.}\right)+{C}_{2.}{\text{log}}\left({C}_{2.}\right)+\dots +{C}_{S.}{\text{log}}({C}_{S.}))\)

In all cases, \(N\log N\) is a constant; therefore, \(h(z)\) depends entirely on \(P=\sum_{j=1}^{R}{C}_{.j}\log {C}_{.j}\), the detected-community counterpart of O, where \(P<N\log N\) whenever \(R>1\).

When \(S>R\), increasing R results in a decrease in P, causing \(|h(z)|\) to increase. This might suggest that NMI decreases. However, \(g(x,y,z)\) increases, leading to an increase in NMI. Let us examine \(g(x,y,z)\) to further understand this.

Suppose \(\sum_{i=1}^{S}\sum_{j=1}^{R}{C}_{ij}\log{C}_{ij}=T\); then

O and \(N{\text{log}}N\) are constant for all scenarios. Let us suppose O = \({C}_{1}\) and \(2 N{\text{log}}N\)= \({C}_{2}\):
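For readers reconstructing the intermediate steps, one way to express Eq. (4) through O, T, \(N\log N\), and \(P=\sum_{j}{C}_{.j}\log{C}_{.j}\) is shown below, taking K, L, and M as the numerator sum, the ground-truth term, and the detected-community term of Eq. (4). This is our own rearrangement, assuming P is the detected-community counterpart of O; it is not necessarily identical in form to Eq. (21).

```latex
\begin{aligned}
K &= \sum_{i=1}^{S}\sum_{j=1}^{R} C_{ij}\log C_{ij} + N\log N
     - \sum_{i=1}^{S} C_{i.}\log C_{i.} - \sum_{j=1}^{R} C_{.j}\log C_{.j}
   = T + N\log N - O - P,\\
L &= \sum_{i=1}^{S} C_{i.}\log\frac{C_{i.}}{N} = O - N\log N,\qquad
M  = \sum_{j=1}^{R} C_{.j}\log\frac{C_{.j}}{N} = P - N\log N,\\
NMI &= \frac{-2K}{L+M}
     = \frac{C_{2} - 2C_{1} - 2P + 2T}{C_{2} - C_{1} - P},
\qquad C_{1} = O,\quad C_{2} = 2N\log N.
\end{aligned}
```

Under Axiom 1, substituting \(T=C_1\) (the case \(R<S\)) gives \(NMI=(C_2-2P)/(C_2-C_1-P)\), so only P varies, while substituting \(T=P\) (the case \(R>S\)) gives \(NMI=(C_2-2C_1)/(C_2-C_1-P)\), whose numerator is constant.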

Now, let us consider Eq. (21) for two possible scenarios:

1. \(R<S\) (the number of detected communities is lower than that of the ground truth):

In this scenario, let us suppose that Algorithm 1 returns \({N}_{1}\) communities in one run and \({N}_{2}\) communities in another run, where \({N}_{1}<{N}_{2}\). According to Axiom 1, as the number of communities increases, members are distributed across more communities, which reduces the value of P according to Eqs. (13), (14), and (22). A decrease in P results in a larger numerator in Eq. (21), because it increases the difference from \({C}_{2}\), which is constant across all scenarios.

∵

For Alg 1 \({R}_{1}=\{{r}_{{1}_{.1}},{r}_{{1}_{.2}},...,{r}_{{1}_{.{N}_{1}}}\}\)

For Alg 2, \({R}_{2}=\{{r}_{{2}_{.1}},{r}_{{2}_{.2}},...,{r}_{{2}_{.{N}_{2}}}\}\)

\({N}_{2}>{N}_{1}\Rightarrow\frac{N}{{N}_{2}}<\frac{N}{{N}_{1}}\); therefore, \({r}_{{2}_{.i}}\le {r}_{{1}_{.i}}\) for all i, with \({r}_{{2}_{.k}}<{r}_{{1}_{.k}}\) for at least one k.

∴ According to Eqs. (14) and (22), \({P}_{1}>{P}_{2}\).

To further illustrate this, let us consider the following example:

Example 4

Suppose the ground truth consists of 4 communities, Algorithm 1 returns 2 communities, Algorithm 2 returns 3 communities (in both cases, \(R<S\)), and N = 8.

Table 6 displays the contingency table of Alg1.

Table 7 presents the contingency table of Alg 2.

Therefore, the NMI values of different algorithms depend on P and T. Let us consider Alg1 and Alg2 with \({P}_{1}\), \({T}_{1}\) and \({P}_{2}\), \({T}_{2}\), respectively. Suppose Alg1 detects fewer communities than Alg2. In this case, it can be proven that Eq. (23) holds.
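Tables 6 and 7 are not reproduced above, so the sketch below assumes one best-case partition per algorithm that satisfies Axiom 1 (Alg1: \(\{a_1,\dots,a_4\},\{a_5,\dots,a_8\}\); Alg2: \(\{a_1,\dots,a_4\},\{a_5,a_6\},\{a_7,a_8\}\)) and computes P and T with base-2 logarithms. The partitions and the helper `p_and_t` are our assumptions.

```python
import math
from collections import Counter

def p_and_t(truth, detected):
    """P = sum_j C_.j log2 C_.j and T = sum_ij C_ij log2 C_ij (non-zero cells only)."""
    p = sum(s * math.log2(s) for s in Counter(detected).values())
    t = sum(c * math.log2(c) for c in Counter(zip(truth, detected)).values())
    return p, t

truth = [1, 1, 2, 2, 3, 3, 4, 4]          # S = 4, N = 8
alg1 = [1, 1, 1, 1, 2, 2, 2, 2]           # R = 2 (assumed best case)
alg2 = [1, 1, 1, 1, 2, 2, 3, 3]           # R = 3 (assumed best case)
p1, t1 = p_and_t(truth, alg1)
p2, t2 = p_and_t(truth, alg2)
print(p1, t1)   # 16.0 8.0
print(p2, t2)   # 12.0 8.0
```

P drops from 16 to 12 as R grows from 2 to 3, while T stays at 8, which equals O for this ground truth.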

2. \(R>S\) (the number of detected communities is greater than that of the ground truth)

To analyze the scenario where \(R>S\), we start by incrementing R by one. That is, after the case where \(R=S\), the algorithm returns \(R+1\) communities in the next state. Recall that S is constant. In this case, the members of one community are split across two communities so that Axiom 1 is maintained. This can be expressed as:

By further increasing R, this process is repeated until the new community consists of only one member. Distributing shared members from one community into new communities yields a lower value of P than in previous states, according to Eqs. (14) and (22). Additionally, since \(P=T\) in this case (\(R>S\)), the numerator remains constant across all these scenarios.

The key issue here is that increasing R results in a non-linear (approximately logarithmic) decrease in P. The maximum value of P with respect to R is achieved if:

….

…..

First, according to (22)

and

According to Eq. (28), we can conclude that when \(R<S\), the slope of the change curve is greater than when \(R>S\). This results in a larger P value when \(R<S\) compared to states where \(R>S\). Additionally, it can be proven that the T value when \(R<S\) is constant. Therefore, NMI when \(R<S\) changes with respect to P, and a greater change in P when \(R<S\) leads to a greater change in NMI.

Figure 2 illustrates a nonlinear (approximately logarithmic) decrease in the P value as the number of communities (R) increases.
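The trend in Fig. 2 can be reproduced qualitatively on Example 1. The sketch below starts at R = S and repeatedly detaches one member from the largest community until every member is a singleton. This splitting order is our assumption, since the paper does not fix one; the sketch records P and T (base-2 logarithms) at each step.

```python
import math
from collections import Counter

def p_value(detected):
    """P = sum over detected communities of C_.j * log2(C_.j)."""
    return sum(s * math.log2(s) for s in Counter(detected).values())

def t_value(truth, detected):
    """T = sum over non-zero cells of C_ij * log2(C_ij)."""
    return sum(c * math.log2(c) for c in Counter(zip(truth, detected)).values())

truth = [v // 5 for v in range(40)]   # Example 1: S = 8, N = 40
detected = list(truth)                # start at R = S (perfect match)
next_label = 8
history = []                          # (R, P, T) for R = 8, 9, ..., 40
while True:
    sizes = Counter(detected)
    history.append((len(sizes), p_value(detected), t_value(truth, detected)))
    label, size = sizes.most_common(1)[0]
    if size == 1:                     # every community is a singleton (R = N)
        break
    # Assumed splitting order: detach one member of the largest community.
    detected[detected.index(label)] = next_label
    next_label += 1

for r, p, t in history[::8]:
    print(r, round(p, 2), round(t, 2))
```

P falls steadily to zero as R approaches N, and T tracks P at every step, in line with Lemma 8.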

Lemma 7

When \(R<S\) and Axiom 1 holds, T is a constant.

Proof

When \(R<S\) and Axiom 1 holds, each ground-truth community lies entirely within a single detected community, so every non-zero cell satisfies \({C}_{ij}={C}_{i.}\). Hence, \(T=\sum_{i=1}^{S}{C}_{i.}\log{C}_{i.}=O\), which is constant across all such scenarios. The NMI therefore changes only through P, and because P changes more steeply when \(R<S\), the magnitude of change in NMI is larger when \(R<S\) than when \(R>S\), where T equals P and the numerator stays constant.

Lemma 8

When \(R>S\) and Axiom 1 holds, then T equals P.

Proof

When \(R=S\), the contingency table becomes a diagonal matrix (a scalar matrix when all communities have the same size, as in Example 1).

Increasing R by one unit at a time when \(R>S\) gives:

From Eqs. (24) to (27), when \(R>S\), T equals P, resulting in a constant numerator in this scenario.

Overall, when we summarize the NMI formula in Eq. (21), the logarithm function remains the key factor. A main characteristic of the logarithm is that its slope decreases as its argument grows: its derivative, \(1/x\), is a decreasing function. This observation forms the main intuition behind Eq. (28), which explains the biased behavior of NMI. Furthermore, this phenomenon can be generalized to other entropy-based metrics that use the logarithm function in their formulas.
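One rough way to see Lemma 6 numerically on Example 1 is to compare the average change in NMI per additional detected community on each side of R = S. The sketch below is our own: it uses best-case merged partitions for R ≤ S and the all-singleton partition for R = N, and it averages between endpoints rather than computing a derivative.

```python
import math
from collections import Counter

def nmi(truth, detected):
    """Eq. (4) from two label lists (natural logarithms)."""
    n = len(truth)
    rows, cols = Counter(truth), Counter(detected)
    k = sum(c * math.log(c * n / (rows[i] * cols[j]))
            for (i, j), c in Counter(zip(truth, detected)).items())
    l = sum(s * math.log(s / n) for s in rows.values())
    m = sum(s * math.log(s / n) for s in cols.values())
    return -2 * k / (l + m)

truth = [v // 5 for v in range(40)]   # Example 1: S = 8, N = 40
# R <= S: best-case partitions that merge whole ground-truth communities.
below = {r: nmi(truth, [v // (40 // r) for v in range(40)]) for r in (1, 2, 4, 8)}
# R = N: every member in its own community.
nmi_40 = nmi(truth, list(range(40)))

# Average change in NMI per additional detected community on each side of R = S.
slope_below = (below[8] - below[1]) / (8 - 1)
slope_above = (below[8] - nmi_40) / (40 - 8)
print(round(slope_below, 3), round(slope_above, 4))
```

Going from one community to eight raises the NMI by about 0.14 per added community, whereas going from eight to forty lowers it by less than 0.01 per added community.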

Case study

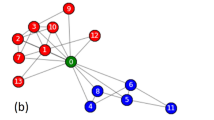

In this section, we present a case study to demonstrate why and how NMI returns biased results in practice. To do this, we used the email-Eu-core network dataset50, which was generated from the email data of a large European research institution. This dataset is provided by Jure Leskovec of Stanford University51, and, according to the source50 (p. 12), "we have anonymized information about all incoming and outgoing email of the research institution". It consists of 1005 nodes (N = 1005) and 25,571 edges. To compare NMI values and reveal their biased behavior for different values of R, we deployed seven well-known community detection algorithms: multi-level, Louvain, leading eigenvector, infomap, walktrap, edge betweenness (GN), and Leiden. These algorithms are implemented as community detection functions in the 'igraph' package of the R programming language, namely multilevel.community, cluster_louvain, cluster_leading_eigen, cluster_infomap, cluster_walktrap, cluster_edge_betweenness, and cluster_leiden, respectively. These functions take a network of nodes and edges as input and return each node's name along with its community number. Interestingly, the diverse range of R values produced by these algorithms helps us clearly show the limitations of NMI.

Additionally, Fig. 3 shows, for each community detection algorithm, a plot of the cumulative distribution function (CDF) of the percentage of CT cells by their number of common members. Each point in this plot is labeled with a number of common members. For instance, if a point is labeled 1 and the corresponding value on the y-axis is 97, then 97% of the cells in the CT have 1 or fewer common members. The label '0' and its CDF value on the y-axis give the percentage of cells in the CT with a zero value.
This indicates a lack of common members between the communities detected by a certain algorithm and the communities in the ground truth. The x-axis shows the numbers of common members observed in the cells of the CT. For example, in the top plot of Fig. 3, the CDF for the GN algorithm shows that the CT cells take the values 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 12, 14, 16, 17, 19, and 53. These values are the numbers of common members between the communities detected by GN and the ground-truth communities, and the CDF shows how frequently each occurs. Lower values are clearly more common in the CT cells, except for the Leiden algorithm. As we discuss later, the Leiden algorithm returns a CT in which every non-zero cell has the value 1.
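The CDF construction described for Fig. 3 can be sketched as follows, counting zero cells as the text describes. The data here are the synthetic Example 1 partitions, not the email-Eu-core network, and `cell_cdf` is a hypothetical helper. With every member as a singleton, each non-zero cell equals 1, yet most cells are zero.

```python
from collections import Counter

def cell_cdf(truth, detected):
    """Cumulative percentage of contingency-table cells with value <= x.

    Zero cells are included, following the description of Fig. 3.
    """
    shared = Counter(zip(truth, detected))
    cells = [shared[(i, j)] for i in set(truth) for j in set(detected)]
    freq = Counter(cells)
    cdf, running = {}, 0
    for x in sorted(freq):
        running += freq[x]
        cdf[x] = 100 * running / len(cells)
    return cdf

truth = [v // 5 for v in range(40)]   # Example 1 ground truth (S = 8)
singletons = list(range(40))          # R = 40, a Leiden-like worst case
print(cell_cdf(truth, singletons))    # {0: 87.5, 1: 100.0}
```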

Table 8 shows the NMI values obtained by applying the above-mentioned algorithms to the email-Eu-core network. The most anomalous NMI value, which on its own exposes the drawback of the NMI measure, is obtained by the Leiden algorithm: 0.65. Leiden returns 1005 communities, meaning that each community consists of a single node. In practice, this is a worst-case scenario, since treating every node as a separate community when the ground truth contains 42 communities is clearly a failure.
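A short calculation shows why an all-singleton partition can still score well. If every node forms its own community, the detected labels X determine the ground-truth labels Y, so H(Y|X) = 0 and I(X;Y) = H(Y), while H(X) = ln N. Under the arithmetic-mean normalization NMI = 2I(X;Y)/(H(X) + H(Y)) of Danon et al.36 (we assume this variant here; the normalization behind Table 8 is the one defined earlier in the paper), the score becomes 2H(Y)/(ln N + H(Y)), which is far from zero. The sketch below checks this on a hypothetical 12-node toy example with three equal ground-truth communities; the function names are our own.

```python
from collections import Counter
from math import log

def entropy(labels):
    """Shannon entropy (natural log) of a labeling."""
    n = len(labels)
    return -sum((c / n) * log(c / n) for c in Counter(labels).values())

def mutual_information(x, y):
    """Plug-in mutual information I(X;Y) computed from the nonzero CT cells."""
    n = len(x)
    joint = Counter(zip(x, y))       # nonzero CT cells
    px, py = Counter(x), Counter(y)  # row and column sums of the CT
    return sum((nij / n) * log(nij * n / (px[a] * py[b]))
               for (a, b), nij in joint.items())

def nmi(x, y):
    """NMI with the arithmetic-mean normalization 2I/(H(X)+H(Y)) (assumed variant)."""
    return 2 * mutual_information(x, y) / (entropy(x) + entropy(y))

# Hypothetical toy data: 12 nodes, 3 ground-truth communities of 4 nodes each.
truth = [i // 4 for i in range(12)]
singletons = list(range(12))  # every node in its own community

print(nmi(truth, truth))                # perfect recovery: 1 (up to rounding)
print(nmi(singletons, truth))           # about 0.613
print(2 * log(3) / (log(12) + log(3)))  # closed form 2H(Y)/(ln N + H(Y))
```

The singleton partition recovers nothing about how nodes group together, yet its NMI is about 0.61 in this toy case, and the closed form shows that the value depends only on N and H(Y).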

Furthermore, as shown in Fig. 3, 100% of the cells in the CT for the Leiden algorithm have a value of 1, indicating that each community detected by Leiden shares only one member with the ground-truth communities. The NMI value of 0.65 is therefore strongly biased, whereas the ARI for this algorithm is zero, which better reflects how few members its communities share with the ground truth. The problem arises when an analyst relies on NMI alone, without considering R, S, or the number of common members, which is common practice in experimental community detection studies. In such cases, the analyst may judge Leiden or Infomap to be the best algorithm, although Leiden is actually the worst.
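The contrast with ARI can be checked in a toy setting. In the sketch below (our own implementation of the standard pair-counting adjusted Rand index, not code from the study, using hypothetical toy labels), every CT cell of the singleton partition contains at most one node, so no pair of nodes is grouped together by both partitions. The pair index and its expected value are then both zero, and ARI is exactly 0, matching the behavior reported for Leiden.

```python
from collections import Counter
from math import comb

def adjusted_rand_index(x, y):
    """Pair-counting adjusted Rand index between two labelings of the same nodes."""
    n = len(x)
    index = sum(comb(c, 2) for c in Counter(zip(x, y)).values())  # pairs together in both
    sum_rows = sum(comb(c, 2) for c in Counter(x).values())       # pairs together in x
    sum_cols = sum(comb(c, 2) for c in Counter(y).values())       # pairs together in y
    expected = sum_rows * sum_cols / comb(n, 2)
    max_index = (sum_rows + sum_cols) / 2
    if max_index == expected:
        # Only happens when both partitions are all-singletons or both are a
        # single block, i.e. they are identical; by convention ARI = 1.
        return 1.0
    return (index - expected) / (max_index - expected)

truth = [i // 4 for i in range(12)]  # hypothetical: 3 communities of 4 nodes
singletons = list(range(12))         # every node in its own community

print(adjusted_rand_index(singletons, truth))  # 0.0
print(adjusted_rand_index(truth, truth))       # 1.0
```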

Now consider the second-highest value of R in Table 8, obtained by the edge betweenness (GN) algorithm. Its R value is 731, far greater than S, so the nodes are spread over many small communities. Consequently, the communities detected by GN are unlikely to align with the ground truth. Nevertheless, the NMI for this algorithm is 0.6, which is high compared with the other algorithms in this case study and misrepresents the actual agreement. According to Fig. 3, approximately 97.5% of the CT cells are zero, more than 99% are 0 or 1, and only 0.5% are greater than 1.
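A simple counting argument shows why so many CT cells must be zero when R is large. Each of the N nodes falls into exactly one cell, so at most N of the R × S cells can be nonzero, and the fraction of zero cells is at least 1 - N/(RS). The sketch below evaluates this bound for GN, assuming S = 42 ground-truth communities as stated for the email-Eu-core network; `min_zero_fraction` is a hypothetical helper name.

```python
def min_zero_fraction(n_nodes, r, s):
    """Lower bound on the fraction of zero cells in an r x s contingency table.

    Each node lies in exactly one cell, so at most n_nodes cells are nonzero.
    """
    return max(0.0, 1 - n_nodes / (r * s))

# Case-study values: N = 1005 nodes, GN returns R = 731, ground truth S = 42.
print(round(min_zero_fraction(1005, 731, 42), 3))  # 0.967
```

The bound of about 96.7% is consistent with the approximately 97.5% of zero cells read from Fig. 3: with R this large, most cells are empty regardless of how good the partition is.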

Comparing Leiden and GN with Louvain highlights the inadequacy of NMI. Louvain returns 27 communities, a number closer to S, which makes it more likely that its communities share many members with the ground-truth communities. This is not guaranteed, however, because Axiom 1 is not assured to hold in a real-world scenario. The CDF of common members in Fig. 3 shows that the Louvain algorithm produces communities that are more similar to the ground-truth communities. Despite this, NMI returned higher values for GN and Leiden than for Louvain. We conclude that NMI behaves in a biased way when R > S. A comparison with the other algorithms also highlights this problem.
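The same tendency can be reproduced without any network at all. The sketch below is an illustrative null model of our own, not the paper's proof: it assigns nodes to R communities uniformly at random, so the detected partition carries no information about the ground truth, and computes NMI with the arithmetic-mean normalization 2I(X;Y)/(H(X) + H(Y)) (an assumed variant). Because the plug-in estimate of mutual information grows with the number of occupied CT cells, NMI for these random partitions tends to rise with R, and the all-singleton partition scores 0.5 here. N = 1000 and S = 10 are arbitrary toy values, and all function names are hypothetical.

```python
import random
from collections import Counter
from math import log

def entropy(labels):
    n = len(labels)
    return -sum((c / n) * log(c / n) for c in Counter(labels).values())

def mutual_information(x, y):
    n = len(x)
    joint = Counter(zip(x, y))
    px, py = Counter(x), Counter(y)
    return sum((nij / n) * log(nij * n / (px[a] * py[b]))
               for (a, b), nij in joint.items())

def nmi(x, y):
    # Arithmetic-mean normalization 2I/(H(X)+H(Y)); an assumed variant.
    return 2 * mutual_information(x, y) / (entropy(x) + entropy(y))

def random_nmi_by_r(n, s, r_values, seed=0):
    """NMI of uniformly random R-community partitions vs. S equal ground-truth communities."""
    rng = random.Random(seed)
    truth = [i % s for i in range(n)]
    results = {}
    for r in r_values:
        detected = [rng.randrange(r) for _ in range(n)]  # no information about truth
        results[r] = nmi(detected, truth)
    return results

N, S = 1000, 10  # arbitrary toy sizes
for r, score in random_nmi_by_r(N, S, [2, 10, 50, 200]).items():
    print(r, round(score, 3))

# All-singleton partition: 2 ln S / (ln N + ln S) = 2 ln 10 / (4 ln 10) = 0.5 here.
print(nmi(list(range(N)), [i % S for i in range(N)]))
```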

Another interesting observation from this case study concerns how Eq. (28) behaves when R > S and when R < S. As explained for Eq. (28), the change in P between pairs of R values is larger when R < S than when R > S. For instance, P is 4847 when R is 27 and 4718 when R is 41, a difference of 129. By contrast, the difference in P between R = 43 and R = 145 is 183. Per unit of R, the change when R < S is therefore 129/(41–27) = 9.21, which is greater than the change when R > S, 183/(145–43) = 1.79. This leads to a sharper per-unit change in the NMI value when R < S, (0.58–0.52)/(41–27) × 100 = 0.43, than when R > S, (0.65–0.58)/102 × 100 = 0.07. The same computation can be extended to other pairs of R values (e.g., 145, 731, and 1005) and their P values. A fully meaningful comparison would require all possible values of R, which is difficult to obtain in practice given the limited number of community detection algorithms and the large number of nodes. We therefore estimate the effect by expressing these changes per unit of R (as a percentage, for NMI).
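The per-unit rates quoted above can be reproduced directly from the reported values. The snippet below simply restates that arithmetic; the P differences and NMI values are taken from the text, and `per_unit_change` is a hypothetical helper name.

```python
def per_unit_change(delta, r_low, r_high):
    """Change per unit of R between two values of R."""
    return delta / (r_high - r_low)

# P and NMI values as reported in the case study.
p_rate_below = per_unit_change(4847 - 4718, 27, 41)  # R < S
p_rate_above = per_unit_change(183, 43, 145)         # R > S
nmi_rate_below = 100 * per_unit_change(0.58 - 0.52, 27, 41)
nmi_rate_above = 100 * per_unit_change(0.65 - 0.58, 43, 145)

print(round(p_rate_below, 2), round(p_rate_above, 2))      # 9.21 1.79
print(round(nmi_rate_below, 2), round(nmi_rate_above, 2))  # 0.43 0.07
```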

Ethical approval

The authors declare that no humans or live human cells were involved in this study.

Conclusion

In this study, we conducted an in-depth analysis of one of the most widely used measures for evaluating clustering and community detection algorithms. Using a mathematical approach, we demonstrated the inherent bias of this measure (NMI), a bias that becomes particularly pronounced when the number of communities detected by an algorithm exceeds the number of communities in the ground truth. Our findings underscore the significant impact that the number of detected communities can have on evaluation metrics, an effect that is especially notable in logarithmic entropy-based metrics such as NMI. This observation is critical because it highlights the potential for skewed results and misinterpretation when NMI is used in different applications.

The findings of this study can be generalized to the many contexts that rely on community detection evaluation metrics, ranging from friendship networks to critical applications such as identifying certain cancers in protein–protein interaction (PPI) networks. We have formally proven that NMI is a biased metric for evaluating the results of community detection algorithms, regardless of the application. For instance, an algorithm that fails to recover the community structure of proteins in a PPI network may be judged successful on the basis of NMI, potentially contributing to a misdiagnosis of cancer. Our findings therefore underscore the need to consider the characteristics and limitations of NMI carefully, particularly when the number of detected communities is high. More broadly, any field that intends to base decisions on community detection algorithms should exercise caution in selecting the metric used to evaluate them. This applies across many domains, such as marketing, where accurately targeting communities is crucial, and information diffusion, where identifying dense communities is vital for activating more nodes.

The primary contribution of this study, however, lies in revealing the root cause of this biased behavior: the logarithmic function and its derivative. This insight is valuable for future studies that aim to design new, fair metrics in this domain, since understanding the mathematical behavior of this logarithmic metric can substantially aid in creating more precise evaluation metrics.

Data availability

The data and code used and/or analyzed during the current study are available from the corresponding author on request.

References

Manipur, I., Giordano, M., Piccirillo, M., Parashuraman, S. & Maddalena, L. Community detection in protein–protein interaction networks and applications. IEEE/ACM Trans. Comput. Biol. Bioinform. 20, 217–237 (2023).

Roy, S., Kundu, S., Sarkar, D., Giri, C. & Jana, P. Community detection and design of recommendation system based on criminal incidents. In Proceedings of International Conference on Frontiers in Computing and Systems 71–80 (Springer Singapore, 2021).

Ferretti, S. On the modeling and simulation of portfolio allocation schemes: An approach based on network community detection. Comput. Econ. https://doi.org/10.1007/s10614-022-10288-w (2022).

Wei, S. & Wang, L. Community detection, road importance assessment, and urban function pattern recognition: A big data approach. J. Spat. Sci. 68, 23–43 (2023).

Vicario, M. D. et al. The spreading of misinformation online. Proc. Natl. Acad. Sci. 113, 554–559 (2016).

Mukerjee, S. A systematic comparison of community detection algorithms for measuring selective exposure in co-exposure networks. Sci. Rep. 11, 15218 (2021).

Neff, T. et al. Vaccine hesitancy in online spaces: A scoping review of the research literature, 2000–2020. Harvard Kennedy School Misinf. Rev. https://doi.org/10.37016/mr-2020-82 (2021).

Jemielniak, D. & Krempovych, Y. An analysis of AstraZeneca COVID-19 vaccine misinformation and fear mongering on Twitter. Public Health 200, 4–6 (2021).

Benkler, Y., Faris, R. & Roberts, H. Network Propaganda: Manipulation, Disinformation, and Radicalization in American Politics. (Oxford University Press, 2018).

Mosleh, M. & Rand, D. G. Measuring exposure to misinformation from political elites on Twitter. Nat. Commun. 13, 7144 (2022).

Górska, A., Kulicka, K. & Jemielniak, D. Men NOT Going Their Own Way: A Thick Big Data Analysis of #MGTOW and #Feminism Tweets. Feminist Media Studies (second round of revisions) (2022).

Ophir, Y. et al. Weaponizing reproductive rights: a mixed-method analysis of White nationalists’ discussion of abortions online. Inf. Commun. Soc. 26, 1–26 (2022).

Panizo-LLedot, A., Torregrosa, J., Bello-Orgaz, G., Thorburn, J. & Camacho, D. Describing alt-right communities and their discourse on twitter during the 2018 US Mid-term elections. In Complex Networks and Their Applications VIII 427–439 (Springer International Publishing, 2020).

Okruszek, Ł., Piejka, A., Banasik-Jemielniak, N. & Jemielniak, D. Climate change, vaccines, GMO: The N400 effect as a marker of attitudes toward scientific issues. PLoS One 17, e0273346 (2022).

Grusauskaite, K., Carbone, L., Harambam, J. & Aupers, S. Debating (in) echo chambers: How culture shapes communication in conspiracy theory networks on YouTube. New Media Soc. 14614448231162585 (2023).

Kaiser, J., Rauchfleisch, A. & Córdova, Y. Comparative approaches to mis/disinformation | Fighting Zika with honey: An analysis of YouTube’s video recommendations on Brazilian YouTube. Int. J. Commun. 15, 19 (2021).

Humprecht, E., Esser, F. & Van Aelst, P. Resilience to online disinformation: A framework for cross-national comparative research. Int. J. Press/Polit. 25, 493–516 (2020).

Ahmad, N., Milic, N. & Ibahrine, M. Data and disinformation. Computer 54, 105–110 (2021).

Lewandowsky, S., Ecker, U. K. H. & Cook, J. Beyond misinformation: Understanding and coping with the ‘Post-Truth’ era. J. Appl. Res. Mem. Cogn. 6, 353–369 (2017).

Shu, K., Sliva, A., Wang, S., Tang, J. & Liu, H. Fake news detection on social media: A data mining perspective. SIGKDD Explor. Newsl. 19, 22–36 (2017).

Darius, P. & Stephany, F. How the far-right polarises twitter: ‘Hashjacking’ as a disinformation strategy in times of COVID-19. In Complex Networks & Their Applications X 100–111 (Springer International Publishing, 2022).

De Clerck, B. et al. Maximum entropy networks applied on twitter disinformation datasets. In Complex Networks & Their Applications X 132–143 (Springer International Publishing, 2022).

Hasan Ahmed Abdulla, H. H. & Abdulla, H. H. Fake news detection: A graph mining approach. In 2023 International Conference on IT Innovation and Knowledge Discovery (ITIKD) 1–5 (2023).

Kaur, K. & Gupta, S. Towards dissemination, detection and combating misinformation on social media: a literature review. J. Bus. Ind. Market. (2022) (ahead-of-print).

Ali, M. et al. Social media content classification and community detection using deep learning and graph analytics. Technol. Forecast. Soc. Change 188, 122252 (2023).

Fortunato, S. Community detection in graphs. Phys. Rep. 486, 75–174 (2010).

Newman, M. E. J. Modularity and community structure in networks. Proc. Natl. Acad. Sci. U. S. A. 103, 8577–8582 (2006).

Meilă, M. Comparing clusterings—An information based distance. J. Multivar. Anal. 98, 873–895 (2007).

Lancichinetti, A. & Fortunato, S. Limits of modularity maximization in community detection. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 84, 066122 (2011).

Amelio, A. & Pizzuti, C. Is normalized mutual information a fair measure for comparing community detection methods? In Proceedings of the 2015 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining 2015 1584–1585 (Association for Computing Machinery, 2015).

Cai, Q., Ma, L., Gong, M. & Tian, D. A survey on network community detection based on evolutionary computation. Int. J. Bio-Inspir. Comput. 8, 84 (2016).

Lai, D. & Nardini, C. A corrected normalized mutual information for performance evaluation of community detection. J. Stat. Mech. 2016, 093403 (2016).

Liu, X., Cheng, H.-M. & Zhang, Z.-Y. Evaluation of community detection methods. IEEE Trans. Knowl. Data Eng. 32, 1736–1746 (2020).

Zhang, P. Evaluating accuracy of community detection using the relative normalized mutual information. J. Stat. Mech. 2015, P11006 (2015).

Yang, Z., Algesheimer, R. & Tessone, C. J. A comparative analysis of community detection algorithms on artificial networks. Sci. Rep. 6, 30750 (2016).

Danon, L., Díaz-Guilera, A., Duch, J. & Arenas, A. Comparing community structure identification. J. Stat. Mech. 2005, P09008 (2005).

Karataş, A. & Şahin, S. Application areas of community detection: A review. In 2018 International Congress on Big Data, Deep Learning and Fighting Cyber Terrorism (IBIGDELFT) 65–70 (2018).

Vinh, N. X., Epps, J. & Bailey, J. Information theoretic measures for clusterings comparison: Variants, properties, normalization and correction for chance. J. Mach. Learn. Res. 11, 2837–2854 (2010).

Girvan, M. & Newman, M. E. J. Community structure in social and biological networks. Proc. Natl. Acad. Sci. U. S. A. 99, 7821–7826 (2002).

Mahmoudi, A., Bakar, A. A., Sookhak, M. & Yaakub, M. R. A temporal user attribute-based algorithm to detect communities in online social networks. IEEE Access 8, 154363–154381 (2020).

Chen, M., Nguyen, T. & Szymanski, B. K. A New Metric for Quality of Network Community Structure. arXiv [cs.SI] (2015).

Romano, S., Bailey, J., Nguyen, V. & Verspoor, K. Standardized mutual information for clustering comparisons: One step further in adjustment for chance. In Proceedings of the 31st International Conference on Machine Learning (eds. Xing, E. P. & Jebara, T.) vol. 32 1143–1151 (PMLR, 2014).

Rossetti, G., Pappalardo, L. & Rinzivillo, S. A novel approach to evaluate community detection algorithms on ground truth. In Complex Networks VII: Proceedings of the 7th Workshop on Complex Networks CompleNet 2016 (eds. Cherifi, H., Gonçalves, B., Menezes, R. & Sinatra, R.) 133–144 (Springer International Publishing, 2016).

Arab, M. & Hasheminezhad, M. Limitations of quality metrics for community detection and evaluation. In 2017 3rd International Conference on Web Research (ICWR) 7–14 (2017).

Meilă, M. Comparing clusterings by the variation of information. In Learning Theory and Kernel Machines 173–187 (Springer Berlin Heidelberg, 2003).

Wagner, S. & Wagner, D. Comparing clusterings—An overview. https://doi.org/10.5445/IR/1000011477 (2007).

Santos, J. M. & Embrechts, M. On the use of the adjusted rand index as a metric for evaluating supervised classification. 175–184 (2009).

Yang, J. & Leskovec, J. Defining and evaluating network communities based on ground-truth. In Proceedings of the ACM SIGKDD Workshop on Mining Data Semantics 1–8 (Association for Computing Machinery, 2012).

Saltz, M., Prat-Pérez, A. & Dominguez-Sal, D. Distributed community detection with the WCC metric. In Proceedings of the 24th International Conference on World Wide Web 1095–1100 (Association for Computing Machinery, 2015).

Leskovec, J., Kleinberg, J. & Faloutsos, C. Graph evolution: Densification and shrinking diameters. ACM Trans. Knowl. Discov. Data 1, 2-es (2007).

email-Eu-core network. https://snap.stanford.edu/data/email-Eu-core.html.

Funding

This work was supported by Narodowe Centrum Nauki (National Science Centre, Poland) under Grant 2020/38/A/HS6/00066.

Author information

Contributions

A.M. developed the theory and the theoretical formalism, performed the analytic calculations, computations, and numerical simulations, and provided the mathematical proof of the manuscript's hypothesis. A.M. also prepared the figures and tables, authored and reviewed drafts of the article, and approved the final draft. D.J. investigated and supervised the findings of this work, edited and revised the whole text, contributed to the literature review, supervised the project, authored and reviewed drafts of the article, and approved the final draft.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mahmoudi, A., Jemielniak, D. Proof of biased behavior of Normalized Mutual Information. Sci Rep 14, 9021 (2024). https://doi.org/10.1038/s41598-024-59073-9

