Abstract

Bentonite plastic concrete (BPC) demonstrated promising potential for remedial cut-off wall construction to mitigate dam seepage, as it fulfills essential criteria for strength, stiffness, and permeability. High workability and consistency are essential attributes for BPC because it is poured into trenches using a tremie pipe, emphasizing the importance of accurately predicting the slump of BPC. In addition, prediction models offer valuable tools to estimate various strength parameters, enabling adjustments to BPC mixing designs to optimize project construction, leading to cost and time savings. Therefore, this study explores the multi-expression programming (MEP) technique to predict the key characteristics of BPC, such as slump, compressive strength (fc), and elastic modulus (Ec). In the present study, 158, 169, and 111 data points were collected from the experimental studies for the slump, fc, and Ec, respectively. The dataset was divided into three sets: 70% for training, 15% for testing, and another 15% for model validation. The MEP models exhibited excellent accuracy with a correlation coefficient (R) of 0.9999 for slump, 0.9831 for fc, and 0.9300 for Ec. Furthermore, the comparative analysis between MEP models and conventional linear and non-linear regression models revealed remarkable precision in the predictions of the proposed MEP models, surpassing the accuracy of traditional regression methods. SHapley Additive exPlanation analysis indicated that water, cement, and bentonite exert significant influence on slump, with water having the greatest impact on compressive strength, while curing time and cement exhibit a higher influence on elastic modulus. In summary, the application of machine learning algorithms offers the capability to deliver prompt and precise early estimates of BPC properties, thus optimizing the efficiency of construction and design processes.

Similar content being viewed by others

Introduction

The ageing infrastructure worldwide poses a significant concern for many nations. Unfortunately, public awareness regarding this issue tends to escalate only following a catastrophic failure in some aspect of the infrastructure1. For instance, during the Katrina and Rita Hurricanes in the Gulf Coast, embankment dams and levees experienced severe and widespread failure in 20052. Earthen dams can fail in different ways, including insufficient maintenance, over-topping, foundation issues, and slope instability. The latter often happens when water seepage beneath the dam weakens internal friction, leading to the dam sliding or slipping3. As a result, significant attention has been directed towards ensuring the safety of dams, leading to the implementation of various global programs focused on dam repair and remediation1. A widely used approach to address dam seepage involves the construction of cut-off walls. There are numerous options for backfill materials in cut-off walls, but there is a growing interest in plastic concrete4,5. This is because of its favorable qualities, such as its elastic–plastic properties, low permeability, and homogeneity6.

Due to its excellent low permeability characteristics, bentonite is utilized to prepare plastic concrete to construct cut-off walls beneath dams to block water penetration7. Plastic concrete must possess robust strength, impermeability, and stiffness similar to the surrounding soil. Ensuring compatibility of strain between adjacent soil and the wall helps mitigate the risk of wall over-stressing and allows for deformation without separation8. This type of concrete holds significant potential in meeting the criteria for strength, stiffness, and permeability in the construction of remedial cut-off walls7. Although it offers enhanced formability, its strength is comparatively lower due to the incorporation of clay slurry9. Typically, plastic concrete includes typical concrete constituents and bentonite clay, and a greater water-binder ratio to yield a more workable and elastic material9. It is noteworthy that bentonite has long been employed for sealing purposes in hydraulic and civil structures10,11,12,13.

Bentonite plastic concrete (BPC) must have excellent workability and consistency because fresh concrete deposited into a trench by pipe must be capable of moving in the ditch and forcing the already poured concrete with high pressure14. This emphasizes the significance of forecasting the slump of BPC. Moreover, regulating seepage content and, ensuring the stability of dams is significantly influenced by the compressive strength (fc) of the employed plastic concrete (PC). Therefore, obtaining comprehensive details about factors impacting the fc of PC, including the mixing ratio and curing duration, is essential14. Numerous factors can impact the strength of BPC, including the attributes of concrete constituents, curing time, and mixing ratio. In dam construction sites and during the manufacturing of BPC, it is customary to subject samples from different mixers to testing using specialized equipment and expert personnel. This process is essential for ensuring quality control and reliability.15,16. However, challenges arise in the workplace, such as construction issues, storage, and curing processes for a large number of concrete samples17,18,19,20,21. The need for a prompt assessment of sample resistance to adjust ratios adds complexity and incurs significant time and costs. Therefore, having a reasonably accurate and comprehensive estimate of compressive strength (within the desired confidence level) is essential for making informed decisions22,23. Researchers have employed empirical regression methods to estimate the strength of BPC24,25,26.

In the past few decades, machine learning (ML) has garnered significant interest in its application to construction materials18,27,28. ML techniques, like neural network (NN) prediction models, were chosen from the beginning of the application of data mining2930,31. However, over time, alternative techniques such as adaptive probabilistic neural networks (APNN)32, fuzzy polynomial neural networks (FPNN)33,34, and GMDH-type neural networks35,36, were developed to improve the reliability, pace, and enhancing the performance of NN, but the artificial neural network (ANN) technique still holds the majority of literature37,38,39,40,41,42. In addition to ANN, several authors have employed other ML techniques in their studies, such as SVM and ANFIS43,44,4546. Nevertheless, the use of the ANN approach has certain drawbacks and limitations in prediction modeling47,48,49,50. To begin with, the ANN is categorized as a black-box approach, offering limited interpretation in terms of how the model generates its estimations51,52,53. The absence of clarity of interpretation may hinder understanding and confidence in the model, particularly in vital applications where interpretability holds significant importance. For example, Ekanayake et al.54 highlighted the difficulty faced by individuals lacking familiarity with ML methods in understanding them, often perceiving them as an enigmatic “black-box” approach55,56,57. The absence of vital information like the relationship between outputs and inputs, and the logic behind estimations, erodes end-users' trust in ML estimations58. In addition, ANN is susceptible to overfitting or underfitting the data. Overfitting occurs when the model becomes excessively complex, memorizing the training data and subsequently exhibiting poor generalization performance when applied to new, unseen data59. Moreover, fine-tuning hyperparameters in ANN models is frequently necessary to improve model performance. Identifying the ideal setup can pose challenges and may necessitate extensive experimentation through trial and error60,61,62,63. To address these issues, evolutionary algorithms (EAs) and genetic algorithms (GA), which include gene expression programming (GEP) and multi-expression programming (MEP), are being utilized to forecast concrete properties49,50,53,57,64. The superiority of such algorithms is the generation of useful mathematical expressions, as well as their great reliability and predictive potential.

Recently, few studies have been conducted to forecast the characteristics of BPC. For instance, Ghanizadehe et al.14 utilized ANN and SVM approaches to estimate the fc of BPC. Similarly, another study by Amlashi et al.65 employed four techniques (SVM, RSM, GMDH, MGGP) to forecast the fc of BPC. It was reported that the SVM model outperformed the remaining three models. Amlashi et al.66 also used SVM and adaptive Neuro-fuzzy inference system (ANFIS) methods optimized with particle swarm optimization (PSO) to estimate the fc of BPC. The majority of these studies focused on neural network methods, which lack transparency and interpretability aspects of ML modeling. Moreover, ANN methods are vulnerable to the issue of overfitting67,68,69.

To address the shortcomings of other neural algorithms, a novel approach known as MEP has been developed51,70. Due to the linear nature of chromosomes and their potential for coding several solutions on just one chromosome. The finest of the chosen chromosomes is selected as the final replica. In comparison to EA, MEP is an improved version of GP that can compute an accurate output even when the complexity of the objective is unknown. Contrary to ML techniques, MEP does not need the final equation's formulation to be determined. The mathematical discrepancies are determined and removed from the final formulation throughout the MEP development process. Furthermore, in comparison to other soft computing systems, the decoding procedure in MEP is significantly simpler. Despite the numerous advantages of MEP over other evolutionary algorithms, its utilization till now is limited in construction materials research. MEP was used by Alavi et al.71 to forecast soil classification established on the liquid limit (LL), plastic limit (PL), and soil color. Similarly, MEP is used for Marshall mix design, flow, and stability72,73,74.

In the present study, fc, elastic modulus (Ec), and slump of BPC have been modeled using the MEP technique while taking into consideration the most influential input variables. An extensive database has been collected and categorized into different sets (training, validation, testing) to guarantee that the model is effectively prepared. To ensure model applicability and accuracy, extensive statistical and performance checks are performed to measure model efficiency. In addition, SHAP analysis was used for the interpretability of the suggested models.

Research methodology

Multi-expression programming (MEP)

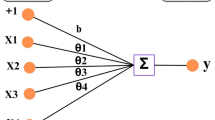

The objective of this modeling technique is to offer precise and useful mathematical formulations to predict output using pre-defined parameters. Koza75 introduced an extension of GA called GP, which is relying on Darwinian principles14. The fundamental distinction between both methods is that in GA, binary strings are used, but in GP, parse trees are used. Recently, multiple kinds of EAs have been suggested, with one of their main differences being linearity75. One method for describing the output of an MEP modeling is a linear string of commands with variables or operations. Figure 1 depicts the processes that occur in MEP development. The MEP algorithm forms through several stages: initially, it creates a diverse population of chromosomes. Then, it employs a binary tournament operation to select parents. With a constant crossover probability, it merges selected parents to produce offspring. Mutation introduces variation, and finally, the algorithm replaces inferior members of the population with the best-performing ones51. The process is iterative and continues until it reaches convergence71. Figure 2 depicts the MEP architecture.

MEP offers various advantages over other types of genetic techniques like genetic programming. GP uses a tree crossover evolutionary process, which produces several parse trees, increasing computational time and the need for storage76. In addition, since GP is both a phenotype and a genotype, it is difficult to provide a simple formulation for the required task. MEP maintains a large variety of expressions, including certain implicit structures, which is referred to as implicit parallelism. MEP also has the capacity to maintain many solutions to a problem on a single chromosome51,70. MEP can distinguish between phenotype and genotype due to the linear variations74. MEP is thought to be more effective than other ML methods due to its capacity to encode several answers inside a single chromosome. This unique feature enables MEP to look over for a better feasible response. Unlike other GP algorithms, MEP provides simple decoding operations and pays particular attention to cases where the specifics of the desired expression are unclears51. MEP can manage issues such as division by zero, improper expressions, and many more77. Furthermore, multi-gene genetic programming (MGGP) and MEP are both extensions of traditional GP designed to address complex optimization problems. While they share similarities in their approach, there are distinct differences in how they represent and evolve solutions. In MGGP, an individual is represented as a set of multiple genes, each of which may encode a distinct subcomponent or module of the solution78,79. These genes can be trees or other structures suitable for the problem domain. In MEP, an individual is represented as a set of multiple expressions, typically in the form of linear or matrix-based representations80. Each expression contributes to the overall solution and can be evaluated independently. Moreover, MGGP typically uses genetic operators such as crossover and mutation at the gene level81. It means that crossover and mutation operations can occur within individual genes, allowing for the exchange or modification of entire subcomponents of the solution. In contrast, MEP often employs mutation operators that act at the expression level, modifying individual expressions or parts of expressions to create new candidate solutions. Crossover operations in MEP may involve combining entire expressions from different individuals82.

Experimental database

An extensive database of BPC has been collected from the existing literature for GEP modeling (provided in supplementary as Tables S1–S3)83. The database contains 158, 169, and 111 datasets for the slump, fc, and Ec, respectively. It must be noted that the samples used in experimental studies were of two distinct dimensions (150 × 150 × 150 mm and 100 × 100 100 mm). To estimate the characteristics of BPC, an ML model considered a wide range of input features. To build up a predictive model for the slump, six input variables, which include gravel, sand, silty clay, cement, bentonite, and water, were retrieved from the literature. In addition, for modeling compressive and elastic modulus, curing time was added to these six influential input variables.

The distribution of input variables influences the generated model's generalization capabilities. Frequency histograms are provided in Fig. 3 to visualize variable distribution. Tables 1, 2 and 3 summarize the various statistics for the collected datasets of slump, compressive strength, and elastic modulus. The dataset is split into three categories: testing (15%), training (70%), and validation (15%). This data partitioning approach facilitates evaluating the model's performance on new, unseen data, offering a more precise gauge of its real-world applicability. By doing so, it mitigates the risk of overfitting, preventing the model from depending excessively on particular training data patterns. Additionally, it supports model refinement and hyperparameter optimization by furnishing a distinct validation set for comparing and selecting the most effective model configurations47,52,84. Each subset of the dataset has comparable statistical characteristics such as standard deviation, variance, mean, and range. These statistical analyses prove that the proposed ML models are usable for a diverse set of data, which broadens their generalization. It is noticeable that only a few research have determined slump, fc, and Ec for a specific mix proportion. Due to this reason, separate databases have been collected for these three characteristics and are considered for their respective model development.

MEP model development

The methodology used in this research is outlined in Fig. 4. Several MEP setting variables must be defined prior to building a valid and adaptive model. The setting variables are chosen by prior recommendations and a trial-and-error procedure85. The number of developed programs is determined by the population size. A large-scale population model can be more complex, but it is more exact and reliable, and it takes longer to reach convergence. However, if the size increases above a certain range, the model may overfit. Table 4 shows the setup variables that were used for the model constructed in this work. The function just comprises the simple mathematical operators (ln, exp, -, × , ÷ , +) for simplicity in the final formulations. The number of generations indicates the accuracy of the method before it is discontinued. The model for simulation with the fewest errors will be produced by a multi-generation run. Various variable combinations were used to optimize the model, and the optimum combination was chosen to offer an outcome model with the lowest errors, as shown in Table 4. The main challenge with ML prediction simulation is the over-fitting of the prediction model. Whenever utilized with original data, the model performs well; however, when given unknown data, the model performs significantly worse. To avoid overfitting, it has been suggested that the model be evaluated using previously unknown data85,86. As a result, the data is proportionately divided into three groups. Following validation, the model is evaluated on the dataset that was not used in the training of the model. The database was divided into three subsets, i.e., 15% for testing, 15% for validation, and 70% for training. The generated models perform excellently across all datasets. In the current study, the MEPX tool (version. 2023.3.5) was used to carry out MEP modeling.

Initially, the modeling process generates optimal solutions for the population. The procedure is repeated, with every iteration getting closer to a solution. The fitness of each successive generation is determined. The MEP modeling process carries on until the fitness value does not change. If the outcomes are not precise, the operation is iterated by progressively increasing the size of the population and tuning other hyperparameters. After evaluating the fitness function of every model, the model with the lowest fitness is chosen. It should be noted that the evolution time and the number of generations have a considerable impact on the accuracy of the suggested model. Due to the addition of new features to the framework, a model will be iterated indefinitely using these approaches. However, in the current study, the model has completed either the change in function was less than 0.1% or after 1000 generations. The hyperparameters setup of the suggested MEP model is provided in Table 4.

Model performance assessment

The models' effectiveness is assessed by calculating numerous statistical error metrics. Multiple performance metrics such as R, RMSE, MAE, RRMSE, RSE, and performance index (ρ) are used to check the accuracy of the MEP model, as given in Eqs. (1–6). Another approach to prevent model overfitting is to choose the optimum model by reducing the objective function (OF)88,89.

where ei shows actual data and mi shows model data of actual while n denotes the number of collected values. Whereas \({\bar{\text{{ei}}}}\) and \({\bar{\text{{mi}}}}\) represent the mean of experimental and predicted values, respectively. The training and validation sets are represented by the subscripts T and V, respectively. R measures the correlation between estimated and actual values87, and a value greater than 0.8 shows a strong connection between anticipated and actual results88,89. However, because R is insensitive to the division or multiplication of data by a constant number, it is insufficient as a check of the overall model efficacy. The RMSE and MAE calculate the mean magnitude of the errors. Each variable, though, has its own significance. A larger RMSE value indicates that the frequency of estimations with substantial errors is significantly greater than expected and should be decreased. On the other hand, MAE provides minimum weight to higher error and is always lower than RMSE.

The MEP model used in this study is also assessed via the OF to determine the overall efficiency because OF takes into account the influence of RMSE, R, and the total number of collected values. The values OF range from 0 to infinity. A model is considered best if \(\uprho\) and OF are both 0.288. The OF considers three parameters, namely R, RRMSE, and the proportion of data in validation and training sets. Consequently, the least value signifies a model's greater performance. Furthermore, the MEP model was externally validated using criteria suggested in the literature, as shown in Table 5.

Results and discussion

The MEP algorithm was employed to construct predictive models for various properties of bentonite plastic concrete. These models were meticulously developed with a hyperparameter configuration comprising a sub-population size of 250, generations of 1000, a mutation probability of 0.9, and sub-populations of 50. Additionally, mathematical operators including + , −, /, Inv, and exp were utilized in the model construction process. The optimized MEP code for future prediction of slump, fc, and Ec has been compiled and is conveniently accessible in the supplementary materials under Tables S4–S6. These codes provide a comprehensive overview of the generated code, facilitating accurate and efficient forecasting of slump, fc, and Ec.

Outcomes of MEP modeling

Figure 5 displays the comparison model forecasted and experimental values of the slump. The plot also includes the expressions for regression lines. In perfect condition, the regression slope should be approached close to 1. Figure 5 illustrates a significant correlation between original and modeled values, as evidenced by slopes of 0.987, 0.991, and 0.976 for the training, validation, and testing phases, respectively. Moreover, the values are relatively similar and near to perfect matching, showing that the MEP model is trained effectively and has a better prediction performance, i.e., it works similarly very well with new data.

The fc findings have also been compared to experimental values of fc, as shown in Fig. 6. The resulting model appears to have undergone effective training on the input data, as evidenced by its ability to generate precise predictions for the actual fc. All three sets of data have almost optimal regression line slopes (0.988, 0.834, and 0.984). This model, like the one for the slump, does very well on test data. This demonstrates that the concern of the model being overfitted has been much reduced. The greater the number of data points, the more accurate and generalizable the outcomes will be90. The largest number of points possible (169) were chosen for fc in the compiled database, resulting in a high level of precision with the least statistical errors.

Similarly, Fig. 7 provides a comparison of the model and experimental results of Ec. In contrast, to slump and fc models, the MEP model for Ec exhibited a comparatively lower regression slope as shown in Fig. 7. According to Gholampour et al.90, the precision and efficacy of the model are heavily influenced by the number of dataset points. In the current work, a greater number of datasets (111) were obtained from the available published work and used for the suggested model, resulting in improved accuracy.

Performance evaluation of MEP models

The number of data points required to construct a model is crucial because it affects the model's validity. The data set proportion to the number of inputs should be 3 for a satisfactory model, and a ratio of 5 is preferred90,91. In this study, the ratios are 26.3, 24.1, and 15.9 for the slump, fc, and Ec, respectively. As discussed previously, the performance of all three models is assessed by using various statistical measures (R, MAE, RMSE, RSE, RRMSE, ρ, and OF). The values of all these error measurements for the three models are provided in Table 6 and illustrated in Fig. 8. The table provides a good correlation between the model estimated and actual values, as R-values are closer to 1 (ideal condition) for the three suggested models. The MAE, RSE, and RMSE values for the three datasets are notably lower, which indicates the good precision and generalization ability of MEP models.

It is shown that the values of RRMSE in the three sets of slump models are lower than 0.2, indicating that the slump model is in the excellent range. The values of \(\uprho\) are less than 0.20 for all sets of slump and compressive strength models, demonstrating that the MEP models are accurate and suitable for predicting the output. However, these values of \(\uprho\) are a little high for the elastic modulus model. OF for the slump, fc, and Ec models are 0.0453, 0.0471, and 0.1662, respectively. These values are quite close to 0, substantiating the accuracy and indicating that the issue of overfitting for the models has been adequately handled.

Figure 9 shows the absolute error in each MEP model to explain the statistics of absolute errors. The mean absolute error values for the slump, fc, and Ec are 1.095 mm, 0.226 MPa, and 296.79 MPa, respectively, with a maximum error of 12.64 mm, 1.08 MPa, and 2560.2 MPa. It is worth noting that the occurrence of maximum error is very low. In addition, the predicted values of MEP models closely followed the trend of the experimental values.

External validation of MEP model

Table 7 represents the numbers of the additional criteria used for model validations. It has been proposed that the slopes of regression lines should be close to 192. Roy and Roy93 proposed another criterion of Rm to measure the external reliability of the model. When the value of Rm is higher than 0.5, this criterion is satisfied. Table 7 illustrates that the MEP model meets the additional validation criteria, showing that the MEP algorithm is accurate and has better predictive potential. Thus, the formulated MEP models have the potential to accurately and precisely predict the workability and strength properties of BPC.

Comparing the MEP model with statistical regression models

In this study, non-linear (NLR) and linear regression (LR) models were constructed using similar databases to predict the characteristics of BPC. The outcomes were compared with MEP models. The RMSE and ρ values are lower for the MEP model compared to the regression models for all three datasets.

The formulations to estimate the slump of BPC using LR and NLR analysis are provided in Eqs. (7–8). The results of NLR and LR regression analysis are compared with the MEP model for the slump and shown in Fig. 10. The RMSEtraining of the MEP model for the slump is 95.2% lower than that of the linear regression, which shows the accuracy and reliability of the MEP model. It is worth noticing from Fig. 10 that the regression model failed to capture the lower value of the slump.

Similarly, based on the same dataset, LR and NLR analyses are conducted for the compressive strength of BPC. LR and NLR formulations for fc of BPC are shown as Eqs. (9–10). MEP-predicted values for compressive strength are compared with LR and NLR, as provided in Fig. 11. The statistical errors for the MEP model of fc are considerably lower than those of regression models. The RMSEtraning of the MEP model is 52% less than that of the regression model, which indicates the inaccuracy of the conventional regression model. It is worth noticing that the non-linear regression model for fc produced similar values of outcomes throughout all the dataset data, as represented by the nearly straight line. This provides the inaccuracy of the NLR model to forecast the fc of BPC.

The formulations of LR and NLR for the elastic modulus of BPC are given as Eqs. (11–12). The outcomes of elastic modulus regression analysis are compared with the MEP model and experimental data, as depicted in Fig. 12. The RMSEtraining of the MEP model for Ec is 60% lower than that of linear regression, which shows the excellent capability of MEP model to precisely forecast the elastic modulus of BPC.

These findings imply that MEP-based models outperform both LR and NLR models. The reason for this is that these statistical regression procedures have limits, such as the actual issue being linked to a forecast model by certain pre-defined functions. In contrast, the outcomes of MEP-based modeling, demonstrate that the models have a great generalization capability and, most importantly, less error than the regression models. Hence, these limitations impede the utilization of statistical regression models for predictive tasks.

Comparison of the developed models with literature models

To date, several soft-computing models have been developed to predict the properties of bentonite plastic concrete. The majority of developed models have primarily focused on predicting the fc of BPC. However, it is noteworthy that despite the significance of slump, most studies have not delved into developing prediction models for this parameter, with Amlashi et al.83 being an exception. To facilitate a precise comparison between existing models from the literature and the established models in this study, two statistical metrics (R, RMSE) were selected, as given in Table 8.

As given in Table 8, a comparative analysis between the highest-performing model for predicting slump models in this study (i.e., MEP model) and the top-performing model from the literature (i.e., ANN model by Amlashi et al.83). The RMSE value of the MEP model was reduced by 95.70% compared to the top-performing model (ANN model developed by Amlashi et al.83) in the literature for slump prediction of BPC. Similarly, the RMSE value of the MEP model for compressive strength is 46.26% lower than that of the best prediction model in the literature (ANN-PSO developed by Amlashi et al.94). Furthermore, the reduction in RMSE for Ec is 45.16% in the MEP model developed in the present study compared to the most accurate model found in the literature (ANN model developed by Amlashi et al.83). The developed MEP model exhibited superior accuracy in predicting both the workability and strength properties of bentonite plastic concrete. The MEP model's performance surpassed that of all models reported in the literature, demonstrating its efficacy in optimizing predictions for bentonite plastic concrete. This accuracy signifies a significant advancement in predictive modeling of BPC, promising enhanced reliability for engineering applications.

Moreover, while the bulk of the research concentrated on constructing ML models for predicting BPC properties, it overlooked the crucial aspect of model interpretation. The transparency of ML models is pivotal for engendering trust among end-users. Although several literature studies conducted sensitivity analyses to gauge the importance of individual features in predicting BPC properties, these analyses primarily provide feature significance and do not delve into the internal mechanisms of the models or the complex interrelationships among these features. Hence, this study employed SHAP analysis to interpret the forecasts of the developed models, thereby augmenting their transparency. Overall, this study not only provides models with superior accuracy compared to existing literature models but also enhances model interpretability.

SHAP interpretability of the models

Lundberg and Lee95 developed an approach for analyzing ML models that utilize the concept of Shapely Additive explanations (SHAP). The SHAP-based approach was established to determine each feature's proportionate relevance to the output and to determine if the feature enhances the output favorably or unfavorably96,97. References96,97 give a thorough explanation of the SHAP method. The SHAP value shows how much each input feature contributed to the results. This approach is equivalent to parametric analysis, in which a particular parameter is changed while others are kept constant to assess how modifications to one input variable are impacting the result.

The mean SHAP values provided in Fig. 13 show the importance of the input parameter. As illustrated in Fig. 13a, bentonite has a relatively greater contribution in output (slump) followed by the rest of the input variables. Similarly, water has relatively more contribution than other input variables in compressive strength, as illustrated in Fig. 13b. Cement exhibits the greatest influence, while silty clay has the least impact on the elastic modulus of BPC, as depicted in Fig. 13c. Furthermore, Fig. 14 shows the summary plot which demonstrates the influence the input features on output parameter. It shows the order of SHAP value for a specific feature in addition to the trend of the related variable. The vertical axis of the SHAP plot displays the variables used as inputs and their importance in decreasing order, while the x-axis displays each individual SHAP result. The dots are data instances, and the size of the dots is represented by their color, which goes from blue to red. The x-axis shows the value of the estimate for each feature's SHAP values as the input parameter's intensity changes (from blue to red). Each variable's high feature value indicates that it has a favorable impact on the output result, as given in Fig. 14. Nevertheless, the smaller the attribute value is, the greater the unfavorable influence of the input parameter on the output. As shown in Fig. 14a, a higher amount of water has favorable effects on the slump, while a higher amount of cement has negative impacts on the slump. It is noticeable from Fig. 14b that the high feature value of water has significantly unfavorable effects on the fc of BPC, while, on the other hand, gravel, cement, and curing have positive impacts on compressive strength. Similarly, higher amounts of cement and gravel have favorable effects on elastic modulus, as given in Fig. 14c.

Conclusion

In the present study, the slump, fc, and Ec of BPC have been modeled using multi-expression programming. An extensive database of 158 datasets for the slump, 169 for compressive strength, and 111 for elastic modulus have been collected from the experimental studies available on BPC. The most influential input parameters are considered for MEP modeling. The large database has been divided into three distinct categories of training, testing, and validation with the purpose of well-training the model on unseen data. Various statistical parameters (R, MAE, RMSE, RSE, and RRMSE) have been utilized to check the predictive capability and performance of the MEP models. Furthermore, all three models have been validated by using various external validation criteria. SHAP analysis was conducted for all models to discover the impact of input parameters on the output property.

The MEP models exhibited excellent accuracy with a correlation coefficient (R) of 0.9999 for slump, 0.9831 for fc, and 0.9300 for Ec. In addition, the developed models predicted the slump with MAE values of 1.4175 for training, 0.4272 for testing, and 0.2262 for validation. Similarly, the MEP model for compressive strength exhibited MAE values of 0.2245, 0.1574, and 0.3146 for training, testing, and validation, respectively. Overall, the suggested MEP models demonstrated higher accuracy and lower errors, indicating their robust prediction performance in estimating the properties of bentonite plastic concrete. Moreover, the comparative analysis between MEP models and conventional linear and non-linear regression models revealed remarkable precision in the predictions of the proposed MEP models, surpassing the accuracy of traditional regression methods. SHAP analysis was conducted to investigate the influence of various influential input parameters such as bentonite content, curing time, gravel, sand, cement, water, and silty clay on the properties of BPC. Water, cement, and bentonite have a significant influence on slump, but silty clay and sand have less effect. Furthermore, the water parameter has the maximum influence on compressive strength while curing time and cement has the higher impact on elastic modulus. In summary, this study provides crucial insights for builders and designers, elucidating the significance of each constituent in the mix design of bentonite plastic concrete. The application of ML algorithms offers the capability to deliver prompt and precise early estimates of BPC properties, thus optimizing the efficiency of construction and design processes.

The robustness of the developed models is contingent upon adherence to the prescribed ranges of input parameters used in this study. Any deviation beyond these limits warrants comprehensive validation to ensure the reliability of model predictions. Moreover, it is highly recommended to augment the dataset with a wider range of samples to enrich the model's predictive capacity. The inclusion of diverse data points spanning various scenarios and conditions will significantly enhance the model's robustness and generalizability, ensuring more accurate predictions across different contexts. In addition, exploring the integration of advanced global optimization bio-inspired algorithms, such as the human felicity algorithm, artificial bee colony algorithm, and tunicate swarm algorithm, for fine-tuning model hyperparameters is recommended. This approach has the potential to enhance the hybrid model's accuracy and robustness significantly, leading to a more reliable prediction model. Finally, it is recommended to utilize additional post-hoc model interpretability techniques, such as Individual conditional expectation plots and partial dependence plots, to gain deeper insights into the influence of input parameters on the properties of bentonite plastic concrete.

Data availability

Data and codes are provided in supplementary information files.

References

Alós Shepherd, D., Kotan, E. & Dehn, F. Plastic concrete for cut-off walls: A review. Constr. Build. Mater. 255, 119248. https://doi.org/10.1016/j.conbuildmat.2020.119248 (2020).

Bruce, D. Specialty construction techniques for dam and levee remediation, (2012).

Athani, S. S., Shivamanth, C. H. & Solanki, G. R. Dodagoudar, seepage and stability analyses of earth dam using finite element method. Aquat. Procedia. 4, 876–883. https://doi.org/10.1016/j.aqpro.2015.02.110 (2015).

Bai, B., Zhou, R., Cai, G., Hu, W. & Yang, G. Coupled thermo-hydro-mechanical mechanism in view of the soil particle rearrangement of granular thermodynamics. Comput. Geotech. 137, 104272. https://doi.org/10.1016/j.compgeo.2021.104272 (2021).

Huang, H., Yuan, Y., Zhang, W. & Li, M. Seismic behavior of a replaceable artificial controllable plastic hinge for precast concrete beam-column joint. Eng. Struct. 245, 112848. https://doi.org/10.1016/j.engstruct.2021.112848 (2021).

Peng, M. X. & Chen, J. Slip-line solution to active earth pressure on retaining walls. Géotechnique 63, 1008–1019. https://doi.org/10.1680/geot.11.P.135 (2013).

Yu, Y., Pu, J. & Ugai, K. Study of mechanical properties of soil-cement mixture for a cutoff wall. Soils Found. 37, 93–103. https://doi.org/10.3208/sandf.37.4_93 (1997).

Zhang, P., Guan, Q. & Li, Q. Mechanical properties of plastic concrete containing bentonite. Res. J. Appl. Sci. Eng. Technol. 5, 1317–1322 (2013).

ICOLD, Filling materials for watertight cut off walls. Bulletin, International Committee of Large Dams, Paris, Fr. (1995).

Garvin, S. L. & Hayles, C. S. The chemical compatibility of cement–bentonite cut-off wall material. Constr. Build. Mater. 13, 329–341. https://doi.org/10.1016/S0950-0618(99)00024-0 (1999).

Koch, D. Bentonites as a basic material for technical base liners and site encapsulation cut-off walls. Appl. Clay Sci. 21, 1–11. https://doi.org/10.1016/S0169-1317(01)00087-4 (2002).

Ata, A. A., Salem, T. N. & Elkhawas, N. M. Properties of soil–bentonite–cement bypass mixture for cutoff walls. Constr. Build. Mater. 93, 950–956. https://doi.org/10.1016/j.conbuildmat.2015.05.064 (2015).

García-Siñeriz, J. L., Villar, M. V., Rey, M. & Palacios, B. Engineered barrier of bentonite pellets and compacted blocks: State after reaching saturation. Eng. Geol. 192, 33–45. https://doi.org/10.1016/j.enggeo.2015.04.002 (2015).

Ghanizadeh, A. R., Abbaslou, H., Amlashi, A. T. & Alidoust, P. Modeling of bentonite/sepiolite plastic concrete compressive strength using artificial neural network and support vector machine. Front. Struct. Civ. Eng. 13, 215–239. https://doi.org/10.1007/s11709-018-0489-z (2019).

Khan, M., Shakeel, M., Khan, K., Akbar, S. & Khan, A. A review on fiber-reinforced foam concrete. In: ICEC 2022 (MDPI, Basel Switzerland, 2022) p. 13. https://doi.org/10.3390/engproc2022022013.

Anas, M., Khan, M., Bilal, H., Jadoon, S. & Khan, M.N. Fiber reinforced concrete: A Review. In: ICEC 2022 (MDPI, Basel Switzerland, 2022) p. 3. https://doi.org/10.3390/engproc2022022003.

Onyelowe, K. C. et al. Optimization of green concrete containing fly ash and rice husk ash based on hydro-mechanical properties and life cycle assessment considerations. Civ. Eng. J. 8, 3912–3938 (2022).

Onyelowe, K. C., Gnananandarao, T., Jagan, J., Ahmad, J. & Ebid, A. M. Innovative predictive model for flexural strength of recycled aggregate concrete from multiple datasets. Asian J. Civ. Eng. 24, 1143–1152. https://doi.org/10.1007/s42107-022-00558-1 (2023).

Ebid, A. M., Onyelowe, K. C., Kontoni, D.-P.N., Gallardo, A. Q. & Hanandeh, S. Heat and mass transfer in different concrete structures: A study of self-compacting concrete and geopolymer concrete. Int. J. Low-Carbon Technol. 18, 404–411. https://doi.org/10.1093/ijlct/ctad022 (2023).

Onyelowe, K. C., Ebid, A. M. & Ghadikolaee, M. R. GRG-optimized response surface powered prediction of concrete mix design chart for the optimization of concrete compressive strength based on industrial waste precursor effect. Asian J. Civ. Eng. 25, 997–1006. https://doi.org/10.1007/s42107-023-00827-7 (2024).

Onyelowe, K. C. & Ebid, A. M. The influence of fly ash and blast furnace slag on the compressive strength of high-performance concrete (HPC) for sustainable structures. Asian J. Civ. Eng. 25, 861–882. https://doi.org/10.1007/s42107-023-00817-9 (2024).

Huang, H., Yuan, Y., Zhang, W. & Zhu, L. Property assessment of high-performance concrete containing three types of fibers. Int. J. Concr. Struct. Mater. 15, 39. https://doi.org/10.1186/s40069-021-00476-7 (2021).

Li, Z. et al. Ternary cementless composite based on red mud, ultra-fine fly ash, and GGBS: Synergistic utilization and geopolymerization mechanism. Case Stud. Constr. Mater. 19, e02410. https://doi.org/10.1016/j.cscm.2023.e02410 (2023).

Tahershamsi, A., Bakhtiary, A. & Binazadeh, N. Effects of clay mineral type and content on compressive strength of plastic concrete. J. Min. Eng. 4(7), 35–42 (2009).

Singer, A., Kirsten, W. & Bühmann, C. Fibrous clay minerals in the soils of Namaqualand, South Africa: Characteristics and formation. Geoderma 66, 43–70. https://doi.org/10.1016/0016-7061(94)00052-C (1995).

Yalçin, H. Sepiolite-palygorskite from the Hekimhan region (Turkey). Clays Clay Miner. 43, 705–717. https://doi.org/10.1346/CCMN.1995.0430607 (1995).

Onyelowe, K. C. et al. AI mix design of fly ash admixed concrete based on mechanical and environmental impact considerations. Civ. Eng. J. 9, 27–45. https://doi.org/10.28991/CEJ-SP2023-09-03 (2023).

Onyelowe, K. C. et al. Evaluating the compressive strength of recycled aggregate concrete using novel artificial neural network. Civ. Eng. J. 8(8), 1679–1693 (2022).

Eldin, N. N. & Senouci, A. B. Measurement and prediction of the strength of rubberized concrete. Cem. Concr. Compos. 16, 287–298. https://doi.org/10.1016/0958-9465(94)90041-8 (1994).

He, H. et al. Exploiting machine learning for controlled synthesis of carbon dots-based corrosion inhibitors. J. Clean. Prod. 419, 138210. https://doi.org/10.1016/j.jclepro.2023.138210 (2023).

Long, X., Mao, M., Su, T., Su, Y. & Tian, M. Machine learning method to predict dynamic compressive response of concrete-like material at high strain rates. Def. Technol. 23, 100–111. https://doi.org/10.1016/j.dt.2022.02.003 (2023).

Lee, J. J., Kim, D., Chang, S. K. & Nocete, C. F. M. An improved application technique of the adaptive probabilistic neural network for predicting concrete strength. Comput. Mater. Sci. 44, 988–998. https://doi.org/10.1016/j.commatsci.2008.07.012 (2009).

Fazel Zarandi, M. H., Türksen, I. B., Sobhani, J. & Ramezanianpour, A. A. Fuzzy polynomial neural networks for approximation of the compressive strength of concrete. Appl. Soft Comput. 8, 488–498. https://doi.org/10.1016/j.asoc.2007.02.010 (2008).

Kim, D. K., Lee, J. J., Lee, J. H. & Chang, S. K. Application of probabilistic neural networks for prediction of concrete strength. J. Mater. Civ. Eng. 17, 353–362. https://doi.org/10.1061/(ASCE)0899-1561(2005)17:3(353) (2005).

Madandoust, R., Ghavidel, R. & Nariman-zadeh, N. Evolutionary design of generalized GMDH-type neural network for prediction of concrete compressive strength using UPV. Comput. Mater. Sci. 49, 556–567. https://doi.org/10.1016/j.commatsci.2010.05.050 (2010).

Madandoust, R., Bungey, J. H. & Ghavidel, R. Prediction of the concrete compressive strength by means of core testing using GMDH-type neural network and ANFIS models. Comput. Mater. Sci. 51, 261–272. https://doi.org/10.1016/j.commatsci.2011.07.053 (2012).

Bilim, C., Atiş, C. D., Tanyildizi, H. & Karahan, O. Predicting the compressive strength of ground granulated blast furnace slag concrete using artificial neural network. Adv. Eng. Softw. 40, 334–340. https://doi.org/10.1016/j.advengsoft.2008.05.005 (2009).

Chopra, P., Sharma, R. K. & Kumar, M. Prediction of compressive strength of concrete using artificial neural network and genetic programming. Adv. Mater. Sci. Eng. 2016, 1–10. https://doi.org/10.1155/2016/7648467 (2016).

Dantas, A. T. A., Batista Leite, M. & de Jesus Nagahama, K. Prediction of compressive strength of concrete containing construction and demolition waste using artificial neural networks. Constr. Build. Mater. 38, 717–722. https://doi.org/10.1016/j.conbuildmat.2012.09.026 (2013).

Duan, Z. H., Kou, S. C. & Poon, C. S. Prediction of compressive strength of recycled aggregate concrete using artificial neural networks. Constr. Build. Mater. 40, 1200–1206. https://doi.org/10.1016/j.conbuildmat.2012.04.063 (2013).

Naderpour, H., Kheyroddin, A. & Amiri, G. G. Prediction of FRP-confined compressive strength of concrete using artificial neural networks. Compos. Struct. 92, 2817–2829. https://doi.org/10.1016/j.compstruct.2010.04.008 (2010).

Sarıdemir, M. Prediction of compressive strength of concretes containing metakaolin and silica fume by artificial neural networks. Adv. Eng. Softw. 40, 350–355. https://doi.org/10.1016/j.advengsoft.2008.05.002 (2009).

Gilan, S.S. Ali, A.M., Ramezanianpour, A.A. Evolutionary fuzzy function with support vector regression for the prediction of concrete compressive strength. In: 2011 UKSim 5th Eur. Symp. Comput. Model. Simul., IEEE, 2011: pp. 263–268https://doi.org/10.1109/EMS.2011.28.

Uysal, M. & Tanyildizi, H. Predicting the core compressive strength of self-compacting concrete (SCC) mixtures with mineral additives using artificial neural network. Constr. Build. Mater. 25, 4105–4111. https://doi.org/10.1016/j.conbuildmat.2010.11.108 (2011).

Siddique, R., Aggarwal, P. & Aggarwal, Y. Prediction of compressive strength of self-compacting concrete containing bottom ash using artificial neural networks. Adv. Eng. Softw. 42, 780–786. https://doi.org/10.1016/j.advengsoft.2011.05.016 (2011).

Hamdia, K. M., Lahmer, T., Nguyen-Thoi, T. & Rabczuk, T. Predicting the fracture toughness of PNCs: A stochastic approach based on ANN and ANFIS. Comput. Mater. Sci. 102, 304–313. https://doi.org/10.1016/j.commatsci.2015.02.045 (2015).

Khan, M. et al. Optimizing durability assessment: Machine learning models for depth of wear of environmentally-friendly concrete. Results Eng. 2023, 20. https://doi.org/10.1016/j.rineng.2023.101625 (2023).

Alyami, M. et al. Predictive modeling for compressive strength of 3D printed fiber-reinforced concrete using machine learning algorithms. Case Stud. Constr. Mater 20, e02728. https://doi.org/10.1016/j.cscm.2023.e02728 (2024).

Khan, M. & Javed, M. F. Towards sustainable construction: Machine learning based predictive models for strength and durability characteristics of blended cement concrete. Mater. Today Commun. 37, 107428. https://doi.org/10.1016/j.mtcomm.2023.107428 (2023).

Alabduljabbar, H. et al. Predicting ultra-high-performance concrete compressive strength using gene expression programming method. Case Stud. Constr. Mater. 18, e02074. https://doi.org/10.1016/j.cscm.2023.e02074 (2023).

Oltean, M. & Groşan, C. Evolving evolutionary algorithms using multi expression programming. In European conference on artificial life 651–658 (Springer Berlin Heidelberg, Berlin, 2003). https://doi.org/10.1007/978-3-540-39432-7_70.

Khan, A. et al. Predictive modeling for depth of wear of concrete modified with fly ash: A comparative analysis of genetic programming-based algorithms. Case Stud. Constr. Mater. https://doi.org/10.1016/j.cscm.2023.e02744 (2023).

Chen, L. et al. Development of predictive models for sustainable concrete via genetic programming-based algorithms. J. Mater. Res. Technol. 24, 6391–6410. https://doi.org/10.1016/j.jmrt.2023.04.180 (2023).

Ekanayake, I. U., Meddage, D. P. P. & Rathnayake, U. A novel approach to explain the black-box nature of machine learning in compressive strength predictions of concrete using Shapley additive explanations (SHAP). Case Stud. Constr. Mater. 16, e01059. https://doi.org/10.1016/j.cscm.2022.e01059 (2022).

Khan, M. et al. Intelligent prediction modeling for flexural capacity of FRP-strengthened reinforced concrete beams using machine learning algorithms. Heliyon https://doi.org/10.1016/j.heliyon.2023.e23375 (2023).

Alyousef, R. et al. Forecasting the strength characteristics of concrete incorporating waste foundry sand using advance machine algorithms including deep learning. Case Stud. Constr. Mater. 19, e02459. https://doi.org/10.1016/j.cscm.2023.e02459 (2023).

Alyousef, R. et al. Machine learning-driven predictive models for compressive strength of steel fiber reinforced concrete subjected to high temperatures. Case Stud. Constr. Mater. 19, e02418. https://doi.org/10.1016/j.cscm.2023.e02418 (2023).

Chen, V. C. P. & Rollins, D. K. Issues regarding artificial neural network modeling for reactors and fermenters. Bioprocess Eng. 22, 85–93. https://doi.org/10.1007/PL00009107 (2000).

Zhang, G., Eddy Patuwo, B. & Hu, M. Y. Forecasting with artificial neural networks. Int. J. Forecast. 14, 35–62. https://doi.org/10.1016/S0169-2070(97)00044-7 (1998).

Yin, C., Rosendahl, L. & Luo, Z. Methods to improve prediction performance of ANN models. Simul. Model. Pract. Theory. 11, 211–222. https://doi.org/10.1016/S1569-190X(03)00044-3 (2003).

Nasir Amin, M. et al. Prediction model for rice husk ash concrete using AI approach: Boosting and bagging algorithms. Structures 50, 745–757. https://doi.org/10.1016/j.istruc.2023.02.080 (2023).

Aslam, F. et al. Compressive strength prediction of rice husk ash using multiphysics genetic expression programming. Ain Shams Eng. J. 13, 101593. https://doi.org/10.1016/j.asej.2021.09.020 (2022).

Imtiaz, L. et al. Life cycle impact assessment of recycled aggregate concrete, geopolymer concrete, and recycled aggregate-based geopolymer concrete. Sustainability 13, 13515. https://doi.org/10.3390/su132413515 (2021).

Alyousef, R. et al. Forecasting the strength characteristics of concrete incorporating waste foundry sand using advance machine algorithms including deep learning. Case Stud. Const. Mater. 19, e02459. https://doi.org/10.1016/j.cscm.2023.e02459 (2023).

Amlashi, A. T. et al. Application of computational intelligence and statistical approaches for auto-estimating the compressive strength of plastic concrete. Eur. J. Environ. Civ. Eng. 26, 3459–3490. https://doi.org/10.1080/19648189.2020.1803144 (2022).

Tavana Amlashi, P. A. A., Ghanizadeh, A. R. & Abbaslou, H. Developing three hybrid machine learning algorithms for predicting the mechanical properties of plastic concrete samples with different geometries. AUT J. Civ. Eng. https://doi.org/10.22060/ajce.2019.15026.5517 (2019).

Chu, J., Liu, X., Zhang, Z., Zhang, Y. & He, M. A novel method overcomeing overfitting of artificial neural network for accurate prediction: Application on thermophysical property of natural gas. Case Stud. Therm. Eng. 28, 101406. https://doi.org/10.1016/j.csite.2021.101406 (2021).

Ayub, S. et al. Preparation methods for graphene metal and polymer based composites for EMI shielding materials: State of the art review of the conventional and machine learning methods. Metals 11, 1164. https://doi.org/10.3390/met11081164 (2021).

Iqtidar, A. et al. Prediction of compressive strength of rice husk ash concrete through different machine learning processes. Crystals 11, 352. https://doi.org/10.3390/cryst11040352 (2021).

M.O. and C. Grosan, A Comparison of Several Linear Genetic Programming Techniques, Complex Syst. Publ. Inc. (2003).

Alavi, A. H., Gandomi, A. H., Sahab, M. G. & Gandomi, M. Multi expression programming: A new approach to formulation of soil classification. Eng. Comput. 26, 111–118. https://doi.org/10.1007/s00366-009-0140-7 (2010).

Althoey, F. et al. Prediction models for marshall mix parameters using bio-inspired genetic programming and deep machine learning approaches: A comparative study. Case Stud. Constr. Mater. 18, e01774. https://doi.org/10.1016/j.cscm.2022.e01774 (2023).

Gul, M. A. et al. Prediction of Marshall stability and marshall flow of asphalt pavements using supervised machine learning algorithms. Symmetry. 14, 2324. https://doi.org/10.3390/sym14112324 (2022).

Gandomi, A. H., Faramarzifar, A., Rezaee, P. G., Asghari, A. & Talatahari, S. New design equations for elastic modulus of concrete using multi expression programming. J. Civ. Eng. Manag. 21, 761–774. https://doi.org/10.3846/13923730.2014.893910 (2015).

Oltean, M. & Dumitrescu, D. Multi expression programming. J. Genet. Program. Evol. Mach. (2002).

Koza, J. R. & Poli, R. Genetic programming. In Search Methodology 127–164 (Springer US, 1994). https://doi.org/10.1007/0-387-28356-0_5.

Sharifi, S., Abrishami, S. & Gandomi, A. H. Consolidation assessment using multi expression programming. Appl. Soft Comput. 86, 105842. https://doi.org/10.1016/j.asoc.2019.105842 (2020).

Nuo, L.I., Hao, C.H.E.N. & Han, J.Q. Application of multigene genetic programming for estimating elastic modulus of reservoir rocks. In: 2019 13th Symp. Piezoelectrcity, Acoust. Waves Device Appl., IEEE, 2019: pp. 1–4. https://doi.org/10.1109/SPAWDA.2019.8681879.

Mohammadi Bayazidi, A., Wang, G.-G., Bolandi, H., Alavi, A. H. & Gandomi, A. H. Multigene genetic programming for estimation of elastic modulus of concrete. Math. Probl. Eng. 2014, 1–10 (2014).

Khan, M. et al. Forecasting the strength of graphene nanoparticles-reinforced cementitious composites using ensemble learning algorithms. Results Eng. 21, 101837. https://doi.org/10.1016/j.rineng.2024.101837 (2024).

Mohammadzadeh, S., Danial, Bolouri Bazaz, J., Vafaee Jani Yazd, S. H. & Alavi, A. H. Deriving an intelligent model for soil compression index utilizing multi-gene genetic programming. Environ. Earth Sci. 75, 1–11 (2016).

Mihai Oltean, C. G. A comparison of several linear genetic programming techniques. Complex Syst. 12, 285–313 (2003).

Amlashi, A. T., Abdollahi, S. M., Goodarzi, S. & Ghanizadeh, A. R. Soft computing based formulations for slump, compressive strength, and elastic modulus of bentonite plastic concrete. J. Clean. Prod. 230, 1197–1216. https://doi.org/10.1016/j.jclepro.2019.05.168 (2019).

Alyami, M. et al. Estimating compressive strength of concrete containing rice husk ash using interpretable machine learning-based models. Case Stud. Constr. Mater. 20, e02901. https://doi.org/10.1016/j.cscm.2024.e02901 (2024).

Pyo, J., Hong, S. M., Kwon, Y. S., Kim, M. S. & Cho, K. H. Estimation of heavy metals using deep neural network with visible and infrared spectroscopy of soil. Sci. Total Environ. 741, 140162. https://doi.org/10.1016/j.scitotenv.2020.140162 (2020).

Azim, I. et al. Prediction model for compressive arch action capacity of RC frame structures under column removal scenario using gene expression programming. Structures 25, 212–228. https://doi.org/10.1016/j.istruc.2020.02.028 (2020).

Nguyen, T., Kashani, A., Ngo, T. & Bordas, S. Deep neural network with high-order neuron for the prediction of foamed concrete strength. Comput. Civ. Infrastruct. Eng. 34, 316–332. https://doi.org/10.1111/mice.12422 (2019).

Gandomi, A. H. & Roke, D. A. Assessment of artificial neural network and genetic programming as predictive tools. Adv. Eng. Softw. 88, 63–72. https://doi.org/10.1016/j.advengsoft.2015.05.007 (2015).

Gandomi, A. H., Alavi, A. H., Mirzahosseini, M. R. & Nejad, F. M. Nonlinear genetic-based models for prediction of flow number of asphalt mixtures. J. Mater. Civ. Eng. 23, 248–263. https://doi.org/10.1061/(ASCE)MT.1943-5533.0000154 (2011).

Gholampour, A., Gandomi, A. H. & Ozbakkaloglu, T. New formulations for mechanical properties of recycled aggregate concrete using gene expression programming. Constr. Build. Mater. 130, 122–145. https://doi.org/10.1016/j.conbuildmat.2016.10.114 (2017).

Frank, I. E. & Todeschini, R. The Data Analysis Handbook (Elsevier, 1994).

Golbraikh, A. & Tropsha, A. Beware of q2!. J. Mol. Graph. Model. 20, 269–276. https://doi.org/10.1016/S1093-3263(01)00123-1 (2002).

Roy, P. P. & Roy, K. On some aspects of variable selection for partial least squares regression models. QSAR Comb. Sci. 27, 302–313. https://doi.org/10.1002/qsar.200710043 (2008).

Tavana Amlashi, A., Ghanizadeh, A. R., Abbaslou, H. & Alidoust, P. Developing three hybrid machine learning algorithms for predicting the mechanical properties of plastic concrete samples with different geometries. AUT J. Civ. Eng. 4(1), 37–54 (2020).

Lundberg, S. M. & Lee, S. I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 30, 1 (2017).

Bakouregui, A. S., Mohamed, H. M., Yahia, A. & Benmokrane, B. Explainable extreme gradient boosting tree-based prediction of load-carrying capacity of FRP-RC columns. Eng. Struct. 245, 112836. https://doi.org/10.1016/j.engstruct.2021.112836 (2021).

Mangalathu, S., Hwang, S.-H. & Jeon, J.-S. Failure mode and effects analysis of RC members based on machine-learning-based SHapley Additive exPlanations (SHAP) approach. Eng. Struct. 219, 110927. https://doi.org/10.1016/j.engstruct.2020.110927 (2020).

Funding

Open access funding provided by Lulea University of Technology.

Author information

Authors and Affiliations

Contributions

M.K: Conceptualization, data curation, formal analysis, methodology, project administration, resources, software, supervision, validation, writing—review and editing. M.A: Formal analysis, visualization, writing—original draft. T.N: Validation, visualization, writing—original draft, Y.G: Data curation, Formal analysis, writing—review and editing, funding acquisition.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Khan, M., Ali, M., Najeh, T. et al. Computational prediction of workability and mechanical properties of bentonite plastic concrete using multi-expression programming. Sci Rep 14, 6105 (2024). https://doi.org/10.1038/s41598-024-56088-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-56088-0

Keywords

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.