Abstract

Increased water intake is recommended for kidney transplant recipients; however, its efficacy remains controversial. We hypothesized that pre-existing histological findings of the allograft might modulate the impact of water intake. We retrospectively analyzed 167 adults with living-donor kidney transplants (April 2011–May 2020; median observation period, 77 months) whose baseline biopsy data were available. We compared the chronic-change group (n = 38) with the control group (n = 129) to assess the impact of self-reported daily water intake on the estimated glomerular filtration rate (eGFR) slope (ΔeGFR/year). The distribution of water intake was as follows: < 1000 ml (n = 4), 1000–1500 ml (n = 23), 1500–2000 ml (n = 64), 2000–2500 ml (n = 57), 2500–3000 ml (n = 16), and ≥ 3000 ml (n = 3). Donor age was significantly higher in the chronic-change group. In the control group, ΔeGFR/year increased with water intake. In the chronic-change group, however, ΔeGFR/year decreased significantly as water intake increased (1000–1500 ml: +1.95 ml/min/1.73 m²/year and > 2000 ml: −1.92 ml/min/1.73 m²/year, p = 0.014). This study suggests a potential influence of increased water intake on recipients with marginal grafts in living donor kidney transplantation.

Introduction

Active water intake of at least eight glasses per day to improve health is a widely accepted belief that is encouraged in guidelines and social campaigns1,2. Increased water intake prevents kidney stones and acute tubular necrosis caused by reduced water intake and heat stress, which would eventually lead to the progression of chronic kidney disease (CKD)3,4. Increased water intake theoretically protects against kidney disease5 by reducing vasopressin secretion. Vasopressin secretion is related to hyperfiltration-mediated kidney injury3, resulting in renal sclerosis6. Notably, the total water intake per unit of body weight (BW) is known to decrease with age7. To reap survival benefits8, increased water intake remains the standard of care following kidney transplantation5,9.

Evidence supporting the encouragement of increased water intake is scarce in the literature. Several community-based studies have suggested that increasing water intake positively influences the progression of CKD and the prevention of cardiovascular disease10,11. In contrast, studies in CKD populations have reported both positive12,13 and negative14,15,16 results regarding the effect of increased water intake (mostly estimated from urine osmolality) on slowing kidney function decline. A recent CKD-REIN cohort study showed a U-shaped relationship between water intake and both the risk of kidney failure and the decline of kidney function; neither low (< 1 L) nor high (> 2 L) water intake was beneficial, suggesting that the optimal range of daily water intake for CKD patients is 1–2 L/day17. Because of the urinary concentrating defect caused by tubular damage and fibrosis, patients with advanced CKD produce low-osmolality urine, which requires an increased water intake and is associated with a rapid decline in the estimated glomerular filtration rate (eGFR). In kidney transplantation, studies have neither supported nor discouraged increased water intake with respect to graft function9,18,19,20. One possible explanation for these conflicting results is the difference in baseline CKD stages15. Furthermore, without specific reasons, CKD patients usually do not undergo kidney biopsies in the clinical setting. CKD staging does not correlate with kidney histological damage, and histological findings can differ even among patients with the same eGFR value21.

The intensity of tubulointerstitial fibrosis negatively correlates with kidney function in native kidneys22. Interstitial fibrosis and tubular atrophy (IFTA) found in baseline biopsies predict long-term outcomes in living and deceased donor kidney transplantation23,24,25. We have also reported that even healthy living donors who meet the donation criteria26 may have pre-existing histopathological damage. This included chronicity change, defined as a combination of the Banff scores27 for interstitial fibrosis (ci), tubular atrophy (ct), and arteriolar hyalinosis (ah) (ci + ct ≥ 1 ∩ ah ≥ 1), which was related to suboptimal compensatory hypertrophy irrespective of donor age28. This chronicity change may also have a long-term impact on the recipient29.

To our knowledge, no study to date has investigated the combined impact of water intake and renal biopsy findings on renal function, even in the general or CKD populations. We hypothesized that the impact of water intake might be affected by the baseline biopsy findings of the allograft in living donor kidney transplantation.

Results

Baseline characteristics

The baseline characteristics of the living donors and recipients are presented in Table 1. The median observation period was 77 months (range 46–99 months). There were no significant differences in recipient characteristics, including age, sex, body mass index (BMI), dialysis vintage, preemptive kidney transplantation, and diabetes. Among the donor characteristics, age [chronic change (CC) vs. control: 60.0 (54.5–70.0) years vs. 57.0 (49.0–63.0) years, p = 0.01], BMI [24.4 (21.6–26.3) kg/m² vs. 22.3 (20.7–24.8) kg/m², p = 0.02], and HbA1c [5.7 (5.5–6.1)% vs. 5.6 (5.4–5.8)%, p = 0.04] were significantly higher in the CC group. Pre-donation eGFR [81.8 (74.1–92.5) ml/min/1.73 m² vs. 80.9 (72.6–91.1) ml/min/1.73 m², p = 0.48] and urine protein (UP) [59 (35–94) mg vs. 50 (34–72) mg, p = 0.20] did not differ between the groups. The distribution of daily water intake peaked at 1500–2000 ml and did not differ between the groups.

Graft and patient outcomes

The graft and patient outcomes are shown in Table 2. Five-year graft and patient survival rates did not differ between the groups. The rates of acute rejection and de novo donor-specific antibody (DSA) did not differ between the groups. Although serum creatinine (S-Cr) and eGFR at 1 year and the latest year were significantly lower in the CC group, ΔeGFR/year was not significantly different between the groups [−0.59 (−1.77 to 0.59) ml/min/1.73 m²/year vs. −0.70 (−1.93 to +0.74) ml/min/1.73 m²/year, p = 0.74], and the decline in eGFR did not depend on eGFR at 1 year.

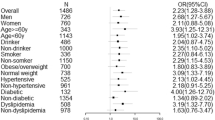

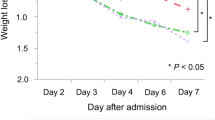

The impact of water intake on graft function and survival

Overall, increased water intake tended to correlate positively with the eGFR slope, although this did not reach statistical significance (Fig. 1A, p = 0.078). However, in the CC group (Fig. 1B), ΔeGFR/year declined significantly as water intake increased (p = 0.014): it peaked at 1000–1500 ml [+1.95 (+0.46 to +5.36) ml/min/1.73 m²/year] and declined thereafter [> 2000 ml, −1.92 (−4.66 to +0.49) ml/min/1.73 m²/year]. One-year UP ≥ 1 seemed to have an impact similar to that of CC, whereas 1-year eGFR < 45 did not (Fig. 1C,D). In the sensitivity analysis, water intake was associated with a decline in graft function (ΔeGFR/year < −1 ml/min/1.73 m²/year) in the CC group but not in the UP ≥ 1 or eGFR < 45 subgroups (Fig. 2). In the survival analysis, CC and water intake did not affect overall survival, whereas UP ≥ 1 and eGFR < 45 did (Fig. 3).

Figure 1. The relationship between the ΔeGFR/year and the amount of daily water intake. (A) Overall, (B) control vs. chronic change group, (C) 1-year UP < 1 vs. 1-year UP ≥ 1, and (D) 1-year eGFR < 45 vs. 1-year eGFR ≥ 45. ※p < 0.05. eGFR estimated glomerular filtration rate, ΔeGFR/year eGFR slope, UP urine protein.

Figure 2. Interaction analyses for the impact of daily water intake on the ΔeGFR/year. Odds ratios are for ΔeGFR/year < −1 versus ≥ −1, adjusted for recipient gender and age. P values are for interactions. aOR adjusted odds ratio, CI confidence interval, eGFR estimated glomerular filtration rate, ΔeGFR/year eGFR slope.

Post-hoc sample size calculation

A post-hoc sample size calculation was performed to determine the minimum sample size needed to achieve reliable results for this cohort. We set the margin of error, confidence level, population proportion, and population size to 5%, 95%, 22.8%, and n = 167, respectively. The cohort size in this study exceeded the calculated minimum sample size of n = 104.
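The arithmetic behind this figure can be sketched as follows. This is a minimal illustration that assumes the online calculator applies the standard proportion-based formula with a finite population correction; the exact formula used by the calculator is not stated in the source, and the function name and the z-score of 1.96 for a 95% confidence level are choices made here for illustration.

```python
import math


def minimum_sample_size(margin_of_error, z_score, proportion, population_size):
    """Proportion-based sample size with a finite population correction.

    Assumed formula (not confirmed by the source):
      n0 = z^2 * p * (1 - p) / e^2
      n  = n0 / (1 + (n0 - 1) / N)
    """
    # Sample size for an effectively unlimited population
    n_unlimited = (z_score ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    # Shrink the requirement because the population itself is small
    n_finite = n_unlimited / (1 + (n_unlimited - 1) / population_size)
    # Round up: a fractional participant cannot be recruited
    return math.ceil(n_finite)


# Inputs reported in the text: 5% margin, 95% confidence, 22.8% proportion, N = 167
required = minimum_sample_size(0.05, 1.96, 0.228, 167)
print(required)  # 104
```

Under these assumptions the sketch reproduces the reported minimum of 104, which is below the cohort size of 167.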

Discussion

In this study, we propose a potential association between increased water intake and the slope of kidney function that depends on the pre-existing pathological status of the graft in living donor kidney transplantation. The strength of the present study is that we could characterize the baseline status by comparing baseline biopsy findings with UP ≥ 1 (ref. 30) and eGFR < 45 (ref. 31). Furthermore, our study confirmed that pre-donation eGFR was not correlated with baseline biopsy findings in the otherwise healthy living donor population.

Notably, the impact of increased water intake differed according to the baseline histological changes; in the CC group, graft function was best preserved or improved with a daily water intake of 1000–1500 ml and declined thereafter. The relationship between water intake in the CC group and the eGFR slope was U-shaped in our study, which was in line with the CKD-REIN cohort study17. Based on the present study, a water intake of 1000–2000 ml per day could be recommended, irrespective of the baseline biopsy status. However, a further increase in water intake improved the eGFR slope in the control group. The positive impact of increased water intake was also observed in the UP < 1 and eGFR ≥ 45 groups. As expected, donors in the CC group were older and had higher BMI and HbA1c levels at baseline than those in the control group. However, this did not directly affect the distribution of daily water intake categories, which remained normally distributed in both groups.

The impact of increased water intake on graft function might differ between healthy single kidneys and pre-diseased single kidneys. In healthy 5/6 nephrectomized rats, increased water intake effectively slowed the progression of early chronic renal failure [increased creatinine clearance (CCr) and reduced proteinuria, hypertrophy, sclerosis, and fibrosis] by reducing vasopressin32,33. In contrast, it failed to increase CCr in a streptozotocin-induced diabetic rat model34, possibly because of the arginine vasopressin (AVP)-resistant downregulation of aquaporins35. In a pre-diseased single kidney, the increased plasma flow caused by increased water intake might induce glomerular hyperfiltration, resulting in hypertrophy of the allograft and finally leading to sclerosis36. Furthermore, increased water intake might induce "renal tamponade", or compression of the kidney, in fibrotic kidneys37. With reduced elasticity, the transplanted, ectopic kidney would be unable to withstand interstitial edema because there is limited space for pressure to escape, resulting in tubular compression and ischemia37. However, the concept of renal tamponade has not been established in transplanted kidneys; it has mainly been applied to patients with fluid overload and cardiac failure37,38.

In the clinical setting, general population studies consistently report the benefit of increased water intake in lowering CKD prevalence and slowing the decline in kidney function. However, discrepancies exist in the CKD population regarding increased water intake12,13,14,16,17,18,19,20, which might arise from the different baseline stages15, since stage evaluations were solely dependent on eGFR. The varied effects of water intake depending on the baseline pathological status of the kidney in this study may point to a new target for future CKD studies and management. Notably, adherence to NaCl restriction was slightly better in the CC group. Considering that there was no difference in the recipient characteristics between the CC and control groups, informing recipients of the baseline biopsy result might have encouraged a healthier lifestyle and improved graft survival39.

Several limitations should be considered alongside the results of this study. Because of the single-center retrospective design and the limited sample size, potential confounders could not be excluded, and a detailed analysis within the CC group could not be undertaken. Because donor and recipient characteristics differed substantially, statistically robust adjustment or matching to mitigate potential confounding was challenging. Apart from the baseline biopsy, we did not regularly perform protocol biopsies; it would therefore be of great interest to analyze patient data with respect to the progression of fibrosis, atrophy, and sclerosis after kidney transplantation. We did not assess the possible impact of tacrolimus (TAC) or cyclosporine on chronic damage and the deterioration of kidney function. We acknowledge that the reliability of self-reported water intake is limited. Prospective studies employing alternative methods, such as "smart" water bottles, are needed to improve the precision of intake estimates40. In addition, because our water intake categories were arbitrary, future research should consider measuring fluid requirements with objective parameters, such as bioimpedance, which could provide a more accurate assessment41. Care should be taken in interpreting the implications of increased water intake and eGFR decline in the CC group, given the potential influence of confounding factors such as healthcare providers' instructions to increase water intake. Furthermore, the disparity between eGFR decline and graft survival could stem from the different time points of measurement: the baseline biopsy was performed at the time of implantation, whereas urine protein and eGFR were assessed 1 year after transplantation.
Further prospective studies are essential for a more comprehensive understanding of the relationship between chronic pathological changes and water intake in graft function after living donor kidney transplantation.

In conclusion, this study suggested a potential influence of increased water intake on recipients with marginal grafts in living donor kidney transplantation. Further studies are needed to confirm the effects of increased water intake and pathological findings in CKD and kidney transplant patients.

Methods

Our institution performed 189 consecutive living donor kidney transplantations from April 2011 to May 2020. Of these, we excluded 5 cases with graft failure within 2 years, 2 with unavailable baseline biopsy data, 6 involving pediatric patients (aged < 18 years at the time of transplantation), and 9 with loss to follow-up or incomplete data. The remaining 167 cases were included in this retrospective analysis. To examine the effect of self-reported daily water intake on ΔeGFR/year according to baseline biopsy findings, the CC group (IFTA or chronicity change defined as ct + ci ≥ 1 ∩ ah ≥ 1, n = 38) was compared with the control group (n = 129). A detailed definition of the CC group is given in the Pathological diagnosis section below.

The corresponding data were collected from electronic medical records. The recipient characteristics were as follows: age, gender, BW (kg), height, BMI (kg/m²), dialysis vintage, presence of diabetic nephropathy, observation period, pre-emptive kidney transplant, S-Cr, eGFR, UP, ABO blood type incompatibility, and pre-existing DSA. The preoperative donor characteristics were as follows: age, gender, BW, BMI, height, S-Cr, eGFR, CCr, measured GFR, UP, low-density lipoprotein cholesterol, and HbA1c. All donors were selected according to the Japanese donor selection criteria26. The standard immunosuppressive regimen included TAC, mycophenolate mofetil (MMF), and methylprednisolone. The desensitization therapy consisted of rituximab, plasmapheresis, and MMF. The eGFR slope (ΔeGFR/year) was calculated as (latest eGFR − 1-year post-transplant eGFR)/(postoperative years of the latest eGFR − 1). Nonadherence was defined as a prior history of skipping scheduled outpatient visits. All acute rejections (including T cell-mediated and antibody-mediated rejection) were biopsy-proven. De novo DSA (A, B, Cw, DR, DQ) was defined as a mean fluorescence intensity above 1000 measured by single human leukocyte antigen (HLA)-coated synthetic flow bead analysis (LABScreen® Single Antigen HLA Class I and II, One Lambda, CA, USA).
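The eGFR slope definition above can be written out as a short sketch. The function and parameter names are hypothetical, the input values are invented for illustration and are not patient data, and the −1 ml/min/1.73 m²/year cutoff is the one used in the sensitivity analysis reported in the Results.

```python
def egfr_slope(egfr_at_1_year, latest_egfr, years_at_latest_egfr):
    """ΔeGFR/year = (latest eGFR - 1-year eGFR) / (postoperative years at latest eGFR - 1)."""
    if years_at_latest_egfr <= 1:
        # The slope is measured from the 1-year value, so later follow-up is required
        raise ValueError("latest eGFR must be measured more than 1 year after transplantation")
    return (latest_egfr - egfr_at_1_year) / (years_at_latest_egfr - 1)


def declines_faster_than(slope, threshold=-1.0):
    """True when the slope is below the threshold (ΔeGFR/year < -1 by default)."""
    return slope < threshold


# Illustrative values only: eGFR 50 at 1 year and 38 at 7 years after transplantation
slope = egfr_slope(50.0, 38.0, 7.0)
print(slope)                        # -2.0
print(declines_faster_than(slope))  # True
```

Because the slope starts from the 1-year value, early post-transplant changes in graft function do not enter the estimate.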

This study was approved by the institutional review board of the Japanese Red Cross Kumamoto Hospital (Approval Number 513). The review board waived the requirement for informed consent based on the nature of this research. None of the transplant donors were from a vulnerable population, and all donors or their next of kin provided written informed consent. The clinical and research activities being reported are consistent with the Principles of the Declaration of Istanbul as outlined in the 'Declaration of Istanbul on Organ Trafficking and Transplant Tourism' and in accordance with the principles of the Declaration of Helsinki.

Water intake data collection

Data on water intake were collected from the medical notes in which all patients were required to record their water intake, urine output, and physical activity, and which were reviewed at every outpatient visit to our institution. The recipients were instructed to note only plain water intake. Considering the seasons and work environments, water intake was categorized into the following ranges: < 1000, 1000–1500, 1500–2000, 2000–2500, 2500–3000, and ≥ 3000 ml.
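The categorization can be sketched as a simple binning step. The source does not state how values falling exactly on a boundary (for example, 1500 ml) were assigned; the sketch below assumes each range includes its lower bound and excludes its upper bound, and the names used are hypothetical.

```python
from bisect import bisect_right

# Category boundaries in ml, taken from the ranges listed in the text
BOUNDARIES_ML = [1000, 1500, 2000, 2500, 3000]
CATEGORY_LABELS = ["<1000", "1000-1500", "1500-2000", "2000-2500", "2500-3000", ">=3000"]


def water_intake_category(daily_intake_ml):
    """Map a self-reported daily intake (ml) to one of the six categories.

    Assumption: boundary values belong to the higher category (lower bound inclusive).
    """
    if daily_intake_ml < 0:
        raise ValueError("daily intake cannot be negative")
    # bisect_right counts how many boundaries are <= the intake value
    return CATEGORY_LABELS[bisect_right(BOUNDARIES_ML, daily_intake_ml)]


for intake in (800, 1500, 2499, 3000):
    print(intake, water_intake_category(intake))
```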

Pathological diagnosis

The baseline biopsy was performed 1 h after reperfusion during the transplantation. Data were collected from the pathological reports. No protocol biopsies taken at other time points were included in this study. Chronic histopathological findings were classified according to the Banff classification as ci, ct, and ah27. Other than IFTA, chronicity change was defined as the combination of the above scores (ci + ct ≥ 1 ∩ ah ≥ 1), as previously reported28,29.
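The chronicity change rule can be expressed as a small predicate. This is an illustrative sketch only: the function name is hypothetical, and it encodes just the stated rule (ci + ct ≥ 1 ∩ ah ≥ 1) on Banff lesion scores graded 0 to 3, not the full grouping procedure used in the study.

```python
def has_chronicity_change(ci, ct, ah):
    """Return True when ci + ct >= 1 AND ah >= 1 (Banff lesion scores, each 0-3)."""
    for name, score in (("ci", ci), ("ct", ct), ("ah", ah)):
        if score not in (0, 1, 2, 3):
            raise ValueError(f"{name} must be an integer Banff score from 0 to 3")
    # Both conditions must hold: tubulointerstitial scarring and arteriolar hyalinosis
    return (ci + ct >= 1) and (ah >= 1)


print(has_chronicity_change(ci=1, ct=0, ah=1))  # True
print(has_chronicity_change(ci=1, ct=1, ah=0))  # False
print(has_chronicity_change(ci=0, ct=0, ah=2))  # False
```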

Statistical analysis

Variables were analyzed using Chi-square and Fisher's exact tests for categorical data and Mann–Whitney U and Kruskal–Wallis H tests for continuous data. Unless otherwise specified, all continuous data are expressed as medians (interquartile range), considering the non-parametric distribution. Multivariate analysis was used to adjust for the effect of water intake on graft function. Survival analyses were performed using the Kaplan–Meier method, and statistical differences between the curves were assessed using the log-rank test. A post-hoc sample size calculation was conducted to determine the minimum sample size for this cohort using the Sample Size Calculator (https://www.calculator.net/sample-size-calculator.html). Statistical significance was set at p < 0.05. For multiple comparisons, Bonferroni correction was applied to control for false-positive results. IBM SPSS Statistics for Windows, version 28 (IBM Corp., Armonk, NY, USA) was used for statistical analysis.
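As a brief illustration of the Bonferroni step, the sketch below multiplies each p value by the number of comparisons and caps the result at 1. The p values are invented for illustration, the function name is hypothetical, and this is not a reproduction of the SPSS procedure used in the study.

```python
def bonferroni_adjust(p_values):
    """Multiply each p value by the number of comparisons, capping the result at 1."""
    number_of_comparisons = len(p_values)
    return [min(1.0, p * number_of_comparisons) for p in p_values]


# Hypothetical raw p values from three pairwise comparisons
raw_p_values = [0.01, 0.04, 0.50]
adjusted = bonferroni_adjust(raw_p_values)

for raw, adj in zip(raw_p_values, adjusted):
    # Significance is judged on the adjusted value against the 0.05 threshold
    print(f"raw p = {raw:.2f}, adjusted p = {adj:.2f}, significant: {adj < 0.05}")
```

In this hypothetical case, only the first comparison remains significant after adjustment, which shows how the correction guards against false positives when several groups are compared.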

Data availability

Data are available upon request from the corresponding author, S.Y.

References

EFSA Panel on Dietetic Products, Nutrition, and Allergies. Scientific opinion on dietary reference values for water. EFSA J. 8, 1459. https://doi.org/10.2903/j.efsa.2010.1459 (2010).

Al-Awqati, Q. Thirst, and (bottled) water everywhere. Kidney Int. 71, 1191–1192. https://doi.org/10.1038/sj.ki.5002351 (2007).

Clark, W. F. et al. Hydration and chronic kidney disease progression: A critical review of the evidence. Am. J. Nephrol. 43, 281–292. https://doi.org/10.1159/000445959 (2016).

Maremonti, F., Meyer, C. & Linkermann, A. Mechanisms and models of kidney tubular necrosis and nephron loss. J. Am. Soc. Nephrol. 33, 25 (2022).

Ibrahim, H. N., Murad, D. N. & Knoll, G. A. Thinking outside the box: Novel kidney protective strategies in kidney transplantation. Clin. J. Am. Soc. Nephrol. 16, 1890–1897. https://doi.org/10.2215/CJN.15070920 (2021).

Visconti, L. et al. The myth of water and salt: From aquaretics to tenapanor. J. Ren. Nutr. 28, 73–82. https://doi.org/10.1053/j.jrn.2017.06.005 (2018).

Mallett, L. J. et al. Total water intake by kilogram of body weight: Analysis of the Australian 2011 to 2013 National Nutrition and Physical Activity Survey. Nutr. Dietet. J. Diet. Assoc. Aust. 78, 496–505. https://doi.org/10.1111/1747-0080.12697 (2021).

Hariharan, S., Israni, A. K. & Danovitch, G. Long-term survival after kidney transplantation. N. Engl. J. Med. 385, 729–743. https://doi.org/10.1056/NEJMra2014530 (2021).

Kullgren, K. A., Scholl, P., Kidwell, K. M. & Hmiel, S. P. Using an interactive water bottle to target fluid adherence in pediatric kidney transplant recipients: A pilot study. Pediatr. Transplant. 19, 35–41. https://doi.org/10.1111/petr.12385 (2015).

Clark, W. F. et al. Urine volume and change in estimated GFR in a community-based cohort study. Clin. J. Am. Soc. Nephrol. 6, 2634–2641. https://doi.org/10.2215/CJN.01990211 (2011).

Sontrop, J. M. et al. Association between water intake, chronic kidney disease, and cardiovascular disease: A cross-sectional analysis of NHANES data. Am. J. Nephrol. 37, 434–442. https://doi.org/10.1159/000350377 (2013).

Torres, V. E. et al. Potentially modifiable factors affecting the progression of autosomal dominant polycystic kidney disease. Clin. J. Am. Soc. Nephrol. 6, 640–647. https://doi.org/10.2215/CJN.03250410 (2011).

Plischke, M. et al. Urine osmolarity and risk of dialysis initiation in a chronic kidney disease cohort—a possible titration target?. PLoS One 9, e93226. https://doi.org/10.1371/journal.pone.0093226 (2014).

Hebert, L. A., Greene, T., Levey, A., Falkenhain, M. E. & Klahr, S. High urine volume and low urine osmolality are risk factors for faster progression of renal disease. Am. J. Kidney Dis. 41, 962–971. https://doi.org/10.1016/s0272-6386(03)00193-8 (2003).

Lee, M. J. et al. Urine osmolality and renal outcome in patients with chronic kidney disease: Results from the KNOW-CKD. Kidney Blood Press. Res. 44, 1089–1100. https://doi.org/10.1159/000502291 (2019).

Tabibzadeh, N. et al. Fasting urinary osmolality, CKD progression, and mortality: A prospective observational study. Am. J. Kidney Dis. 73, 596–604. https://doi.org/10.1053/j.ajkd.2018.12.024 (2019).

Wagner, S. et al. Water intake and progression of chronic kidney disease: The CKD-REIN cohort study. Nephrol. Dial. Transplant. 37, 730–739. https://doi.org/10.1093/ndt/gfab036 (2022).

Weber, M. et al. Daily fluid intake and outcomes in kidney recipients: Post hoc analysis from the randomized ABCAN trial. Clin. Transplant. 29, 261–267. https://doi.org/10.1111/ctr.12514 (2015).

Magpantay, L., Ziai, F., Oberbauer, R. & Haas, M. The effect of fluid intake on chronic kidney transplant failure: A pilot study. J. Ren. Nutr. 21, 499–505. https://doi.org/10.1053/j.jrn.2011.01.020 (2011).

Gordon, E. J. et al. Longitudinal analysis of physical activity, fluid intake, and graft function among kidney transplant recipients. Transplant Int. 22, 990–998. https://doi.org/10.1111/j.1432-2277.2009.00917.x (2009).

Trevisani, F. et al. Renal histology across the stages of chronic kidney disease. J. Nephrol. 34, 699–707. https://doi.org/10.1007/s40620-020-00905-y (2021).

Saritas, T. & Kramann, R. Kidney allograft fibrosis: Diagnostic and therapeutic strategies. Transplantation 105, e114–e130. https://doi.org/10.1097/TP.0000000000003678 (2021).

Raza, S. S. et al. Abnormal time-zero histology is predictive of kidney transplant outcomes. Clin. Transplant. 36, e14676. https://doi.org/10.1111/ctr.14676 (2022).

Hofer, J. et al. Pre-implant biopsy predicts outcome of single-kidney transplantation independent of clinical donor variables. Transplantation 97, 426–432. https://doi.org/10.1097/01.tp.0000437428.12356.4a (2014).

El-Husseini, A., Sabry, A., Zahran, A. & Shoker, A. Can donor implantation renal biopsy predict long-term renal allograft outcome?. Am. J. Nephrol. 27, 144–151. https://doi.org/10.1159/000099944 (2007).

Morozumi, K. Clinical practice guidelines for renal transplant donors and recipients in Japan. Jpn. J. Transplant. 49, 410–416. https://doi.org/10.11386/jst.49.410 (2014).

Roufosse, C. et al. A 2018 reference guide to the Banff classification of renal allograft pathology. Transplantation 102, 1795–1814. https://doi.org/10.1097/TP.0000000000002366 (2018).

Nishida, S. et al. Factors related to suboptimal recovery of renal function after living donor nephrectomy: A retrospective study. BMC Nephrol. 20, 403. https://doi.org/10.1186/s12882-019-1588-3 (2019).

Tanaka, K. et al. HbA1c and aortic calcification index as noninvasive predictors of pre-existing histopathological damages in living donor kidney transplantation. J. Clin. Med. 9, 25. https://doi.org/10.3390/jcm9103266 (2020).

Tsampalieros, A. & Knoll, G. A. Evaluation and management of proteinuria after kidney transplantation. Transplantation 99, 2049–2060. https://doi.org/10.1097/TP.0000000000000894 (2015).

Baek, C. H., Kim, H., Yang, W. S., Han, D. J. & Park, S. K. A postoperative 1-year eGFR of more than 45 ml/min may be the cutoff level for a favorable long-term prognosis in renal transplant patients. Ann. Transplant. Q. Pol. Transplant. Soc. 21, 439–447. https://doi.org/10.12659/aot.897938 (2016).

Bouby, N., Bachmann, S., Bichet, D. & Bankir, L. Effect of water intake on the progression of chronic renal failure in the 5/6 nephrectomized rat. Am. J. Physiol. 258, F973-979. https://doi.org/10.1152/ajprenal.1990.258.4.F973 (1990).

Sugiura, T. et al. High water intake ameliorates tubulointerstitial injury in rats with subtotal nephrectomy: Possible role of TGF-beta. Kidney Int. 55, 1800–1810. https://doi.org/10.1046/j.1523-1755.1999.00443.x (1999).

Perico, N. et al. V1/V2 Vasopressin receptor antagonism potentiates the renoprotection of renin-angiotensin system inhibition in rats with renal mass reduction. Kidney Int. 76, 960–967. https://doi.org/10.1038/ki.2009.267 (2009).

Kwon, T. H., Frokiaer, J., Knepper, M. A. & Nielsen, S. Reduced AQP1, -2, and -3 levels in kidneys of rats with CRF induced by surgical reduction in renal mass. Am. J. Physiol. 275, F724-741. https://doi.org/10.1152/ajprenal.1998.275.5.F724 (1998).

Okumura, K., Grace, H., Sogawa, H. & Yamanaga, S. Acute kidney injury and the compensation of kidney function after nephrectomy in living donation. World J. Transplant. 12, 223–230. https://doi.org/10.5500/wjt.v12.i8.223 (2022).

Boorsma, E. M., Ter Maaten, J. M., Voors, A. A. & van Veldhuisen, D. J. Renal compression in heart failure: The renal tamponade hypothesis. JACC. Heart Failure 10, 175–183. https://doi.org/10.1016/j.jchf.2021.12.005 (2022).

Rubio-Gracia, J., Giménez-López, I., Sánchez-Marteles, M., Josa-Laorden, C. & Pérez-Calvo, J. I. Intra-abdominal pressure and its relationship with markers of congestion in patients admitted for acute decompensated heart failure. Heart Vessels 35, 1545–1556. https://doi.org/10.1007/s00380-020-01634-9 (2020).

Gordon, E. J., Prohaska, T. R., Gallant, M. & Siminoff, L. A. Self-care strategies and barriers among kidney transplant recipients: A qualitative study. Chron. Illness 5, 75–91. https://doi.org/10.1177/1742395309103558 (2009).

Borofsky, M. S., Dauw, C. A., York, N., Terry, C. & Lingeman, J. E. Accuracy of daily fluid intake measurements using a “smart” water bottle. Urolithiasis 46, 343–348. https://doi.org/10.1007/s00240-017-1006-x (2018).

Jaffrin, M. Y. & Morel, H. Body fluid volumes measurements by impedance: A review of bioimpedance spectroscopy (BIS) and bioimpedance analysis (BIA) methods. Med. Eng. Phys. 30, 1257–1269. https://doi.org/10.1016/j.medengphy.2008.06.009 (2008).

Acknowledgements

The authors thank Dr. Masatomo Ogata for providing thoughtful insights.

Author information

Contributions

S.Y., M.T., and Y.H. designed the research; S.Y. and Y.H. analyzed the data and wrote the article. S.Y., Y.H., C.K., M.T., K.T., Y.Y., A.I., A.T., and H.Y. collected the data and reviewed the article.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yamanaga, S., Hidaka, Y., Kawabata, C. et al. Water intake, baseline biopsy, and graft function after living donor kidney transplantation. Sci Rep 14, 3715 (2024). https://doi.org/10.1038/s41598-024-54163-0

DOI: https://doi.org/10.1038/s41598-024-54163-0
