Abstract

Improving the accuracy of long-term multivariate time series forecasting is important for practical applications. Various Transformer-based solutions have emerged for time series forecasting. Recently, however, some studies have verified that most Transformer-based methods are outperformed by simple linear models in long-term multivariate time series forecasting. Both families of methods remain limited in exploring the complex interdependencies among the subseries of a multivariate time series. They also fall short in effectively leveraging the temporal features of the data sequences, such as seasonality and trends. In this study, we propose a novel seasonal-trend decomposition-based 2-dimensional temporal convolution dense network (STL-2DTCDN) to deal with these issues. We incorporate the seasonal-trend decomposition based on loess (STL) to explore the trend and seasonal features of the original data. In particular, a 2-dimensional temporal convolution dense network (2DTCDN) is designed to capture complex interdependencies among the individual series of a multivariate time series. To evaluate our approach, we conduct experiments on six datasets. The results demonstrate that STL-2DTCDN outperforms existing methods in long-term multivariate time series forecasting.

Introduction

Long-term forecasting of multivariate time series plays a significant role in numerous practical fields, such as transportation1, meteorology2, energy management3, finance4, and environment5. In these application scenarios, large amounts of historical data can be used to forecast future values, supporting decision making and planning in advance. Time series prediction tasks are divided into multivariate and univariate tasks according to the number of temporal variables involved. Multivariate time series forecasting is extremely challenging in the long-term setting, yet it holds crucial practical significance. Hence, we are dedicated to developing appropriate methods to improve forecasting performance on long-term multivariate time series.

Many scholars have proposed various methods for time series forecasting. Traditional statistical methods are mainly applied to univariate time series forecasting tasks, for example, Autoregressive (AR)6, Autoregressive Integrated Moving Average (ARIMA)7, and Exponential Smoothing (ES)8. However, these traditional methods encounter challenges in capturing intricate nonlinear dependencies within long-term multivariate time series. Over the past few years, deep learning has made great progress in the field of time series forecasting. The Recurrent Neural Network (RNN) is an important model in the area of sequence modeling and is widely used in natural language processing9. Given the sequential nature of time series data, numerous RNN-based models and their variants are employed for time series forecasting10,11,12,13. Furthermore, the Convolutional Neural Network (CNN) and its variants, such as the Temporal Convolutional Network (TCN), are combined with RNNs to enhance the model's capability in capturing local temporal features14,15. Additionally, to enhance the robustness and reliability of forecasting results, some works propose ensemble models for prediction16,17,18.

In the past few years, Transformer19 has demonstrated remarkable effectiveness in the domains of image processing20 and language processing21. Transformer-based models exhibit superior performance in exploring long-term dependencies compared to RNN models. Therefore, numerous works have designed prediction methods based on the Transformer architecture. Nevertheless, the quadratic complexity of self-attention in both memory and time limits the application of Transformer to long-term time series forecasting. Some studies meticulously design effective modules to address this challenge. By combining causal convolution with the Transformer architecture, LogTrans22 introduces a novel attention mechanism called LogSparse self-attention, in which the queries and keys for self-attention are generated through causal convolution. Reformer23 replaces self-attention with a novel locality-sensitive hashing attention and uses reversible residual layers in place of standard residuals. Informer24 introduces the ProbSparse self-attention mechanism, focusing on extracting important queries. Autoformer25 designs an auto-correlation mechanism and combines sequence decomposition with the Transformer architecture. FEDformer26 applies the Fourier transform to enhance the performance of Transformer. PatchTST27 uses patches of time series data as tokens for the Transformer. CNformer28 proposes a CNN-based encoder-decoder attention mechanism to replace the vanilla attention mechanism. Triformer29 presents a triangular structure with Patch Attention. While Transformer-based solutions have achieved great success in long-term time series forecasting, recent studies have indicated that most Transformer-based methods can be outperformed by simple linear models. For instance, Zeng et al.30 introduce a simple linear model and achieve remarkable performance on forecasting benchmarks. Das et al.31 design a novel Time-series Dense Encoder (TiDE) model for long-term time series prediction, which can explore non-linear dependencies while enjoying the speed of linear models.

Although various Transformer-based models and linear models have made valuable contributions to time series forecasting, they often fall short in capturing complex interdependencies among components over long horizons. Additionally, they have not effectively leveraged the temporal characteristics of the data sequences, such as seasonality and trends. To address these gaps, we propose the STL-2DTCDN for long-term multivariate time series forecasting tasks. STL-2DTCDN uses STL32 to decompose the original series into three different subseries. The primary contributions of this paper can be summarized as follows:

1. We present the STL-2DTCDN for long-term multivariate time series forecasting. It follows a hybrid structure similar to most recent studies but incorporates enhanced component methods. The STL-2DTCDN achieves state-of-the-art performance on six practical multivariate time series datasets, with a significant improvement in prediction accuracy.

2. Different from the canonical TCN designed for a single time series, we present a 2-dimensional temporal convolution dense network (2DTCDN) for multivariate time series forecasting. The 2DTCDN employs 2D convolutional kernels, causal convolution, dilated convolution, and a dense layer, making it highly effective at capturing complex interdependencies among the individual series of a multivariate time series.

3. To enhance the model's ability to capture seasonal and trend features, we integrate STL as the preprocessing method. The original time sequence is decomposed with STL into three subseries: seasonal, trend, and residual. These derived subseries effectively expose the seasonal and trend characteristics inherent in the original data.

Related works

Methods for time series forecasting

Given that time series prediction tasks hold paramount significance in real-world applications, numerous methods have been meticulously developed. Many traditional time series prediction models are rooted in statistical methods33,34. ARIMA7 adopts the Markov process to construct an autoregressive model for iterative sequential forecasting. Nevertheless, an autoregressive process struggles to handle complex sequences with nonlinearity and non-stationarity. With the evolution of neural networks over the past decades, the RNN9 has been specially designed for processing sequential data. To address the challenge of vanishing gradients, many studies propose modifications of the RNN such as LSTM12 and GRU13. TCN14 is a neural network that employs dilated causal 1D convolution layers tailored for 1D data. However, because its convolution kernel has a limited receptive field, the vanilla TCN is unable to explore interdependencies among the individual series of multivariate time series data.

As a single model may fall short in learning complex features, some works focus on integrating various methods into one framework and present ensemble models for time series forecasting. Ensemble models have been used successfully in many practical applications, such as traffic forecasting17, financial forecasting18, and energy management35,36. Moreover, ensemble learning methods offer robustness and reliability, which makes them advantageous in medical applications37,38.

With the Transformer's impact on natural language processing and computer vision in recent years, there has been a surge in discussions, adaptations, and applications of Transformer-based solutions in time series forecasting. As for network modifications, various adaptations of Transformer for time series can be summarized into two levels: architectural and modular39. Some approaches, including Informer24, Autoformer25, and FEDformer26, modify the vanilla positional encoding of Transformer to leverage timestamps of time series and redesign the attention calculation methods to reduce complexity. Besides adapting individual modules within Transformer for time series modeling, approaches like Informer24 and Pyraformer40 aim to reconfigure Transformer at the architectural level. Notably, recent studies by Zeng et al.30 and Das et al.31 have demonstrated that linear models possess a strong capability for temporal relation extraction. In many cases, these linear models outperform most Transformer-based models in the area of time series forecasting.

Decomposition of time series

Entangled temporal features in multivariate time series forecasting present significant challenges when it comes to effectively exploring local and long-range dependencies among time points and variables41. Many methods identify temporal dependencies from entangled temporal patterns, but they often fail to fully leverage the inherent complex features of time series data, such as seasonality and trends. Therefore, various studies adopt time series decomposition to analyze time series. These decomposition methods can be divided into three categories: frequency domain decomposition, time domain decomposition, and time–frequency domain decomposition. The Fourier transform (FT)25,26 is a widely recognized frequency domain decomposition technique in time series analysis. FT and its modifications can transform an original sequence from the time domain to the frequency domain, but they ignore trend shifts in the time series. STL is an important time domain decomposition method, which can effectively decompose a time series into three distinct subseries. These three components represent different underlying categories of patterns that exhibit higher predictability. Wavelet transform (WT)42,43 and empirical wavelet transformation (EWT)44,45 are time–frequency domain decomposition methods that are particularly well-suited for the analysis of non-stationary series, as they can provide enhanced local time–frequency information.

Methodology

We begin by introducing the formal definition of multivariate time series forecasting. Subsequently, all involved components and the architecture of the proposed STL-2DTCDN model are presented. Finally, we detail the objective function employed for model training and the evaluation metrics.

Problem statement

The problem is formulated as follows. Given a historical multivariate time series \({Y}_{1:L}={\left\{{y}_{1}^{t},{y}_{2}^{t},...,{y}_{c}^{t}\right\}}_{t=1}^{L}\), where L is the fixed look-back window, c (c > 1) is the number of variates, and \({y}_{i}^{t}\) denotes the value of the \(i\)-th variate at time step \(t\), the multivariate time series prediction task aims to produce the predicted series \({\widehat{Y}}_{L+1:L+H}={\left\{{\widehat{y}}_{1}^{t},{\widehat{y}}_{2}^{t},...,{\widehat{y}}_{c}^{t}\right\}}_{t=L+1}^{L+H}\), where \({\widehat{y}}_{i}^{t}\) is the predicted value of the \(i\)-th variate at time step \(t\) and H (H > 1) denotes the number of forecasting time steps. The ground truth for the time period from L + 1 to L + H is denoted as \({Y}_{L+1:L+H}={\left\{{y}_{1}^{t},{y}_{2}^{t},...,{y}_{c}^{t}\right\}}_{t=L+1}^{L+H}\). Long-term multivariate time series forecasting aims to forecast \(\widehat{Y}\) with a larger value of H (\(H\gg 1\)).

Multi-step forecasting can be categorized into two types: iterated multi-step (IMS)46 forecasting and direct multi-step (DMS)47 forecasting. IMS forecasting iteratively predicts each time step, but it suffers from error accumulation effects. Compared to IMS forecasting, DMS forecasting can directly learn all prediction results at once. Consequently, DMS forecasting can outperform IMS forecasting in long-term forecasting tasks.
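The difference between the two strategies can be illustrated with a minimal Python sketch. The toy models `mean_one_step` and `make_mean_direct` are hypothetical placeholders introduced only for illustration (they are not forecasting models from this paper); the point is the control flow, in which IMS feeds each prediction back into the input window while DMS emits the whole horizon in one call.

```python
from typing import Callable, List


def ims_forecast(history: List[float],
                 one_step: Callable[[List[float]], float],
                 horizon: int) -> List[float]:
    """Iterated multi-step: predict one step, then feed it back as input."""
    window = list(history)
    preds = []
    for _ in range(horizon):
        y_next = one_step(window)
        preds.append(y_next)
        # The prediction re-enters the window, so its error can propagate.
        window = window[1:] + [y_next]
    return preds


def dms_forecast(history: List[float],
                 direct: Callable[[List[float]], List[float]]) -> List[float]:
    """Direct multi-step: a single call returns all horizon steps at once."""
    return direct(list(history))


# Hypothetical toy models, used only to make the sketch runnable.
def mean_one_step(window: List[float]) -> float:
    return sum(window) / len(window)


def make_mean_direct(horizon: int) -> Callable[[List[float]], List[float]]:
    return lambda window: [sum(window) / len(window)] * horizon
```

In the IMS loop every later step depends on earlier predictions, which is where error accumulation arises; the DMS call never consumes its own outputs.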

Seasonal-trend decomposition based on loess (STL)

STL is an effective approach that decomposes an original time sequence into three different subseries, which can be formulated as:

\[{Y}_{t}={T}_{t}+{S}_{t}+{R}_{t}\]

where \(t=1,2,...,n\) represents the time steps, \({Y}_{t}\) denotes the original time series data, and \({T}_{t}\), \({S}_{t}\), and \({R}_{t}\) represent the trend, seasonal, and residual components, respectively. In contrast to traditional decomposition methods, STL provides much more robust components, especially in the presence of outliers. The STL methodology consists of two nested iterative processes, known as the inner loop and the outer loop. Seasonal smoothing and trend smoothing are conducted in each iteration of the inner loop, updating both the seasonal and trend components. Suppose \({{T}_{t}}^{k}\) and \({{S}_{t}}^{k}\) are the trend and seasonal components at the end of the \(k\)-th iteration of the inner loop, respectively. The steps for computing \({{T}_{t}}^{k+1}\) and \({{S}_{t}}^{k+1}\) in the \((k+1)\)-th iteration of the inner loop are detailed as follows:

Step 1: Detrending. Compute the detrended series \({Y}_{t}^{detrend}={Y}_{t}-{T}_{t}^{k}\). If \({Y}_{t}\) is missing at a time step, then \({Y}_{t}^{detrend}\) at that time step is also missing;

Step 2: Seasonal smoothing. Smooth \({Y}_{t}^{detrend}\) with a Loess smoother to obtain the preliminary seasonal component \({\widehat{S}}_{t}^{k+1}\);

Step 3: Low-pass filtering. Process \({\widehat{S}}_{t}^{k+1}\) with a low-pass filter followed by a Loess smoother to extract any remaining trend component \({{\widehat{T}}_{t}}^{k+1}\);

Step 4: Detrending the smoothed seasonal series. The seasonal component \({S}_{t}^{k+1}\) of the \((k+1)\)-th iteration is calculated as \({\widehat{S}}_{t}^{k+1}-{{\widehat{T}}_{t}}^{k+1}\);

Step 5: Deseasonalizing. Subtract the seasonal component from the original sequence \({Y}_{t}\) to obtain the deseasonalized time series \({Y}_{t}^{deseason}={Y}_{t}-{S}_{t}^{k+1}\);

Step 6: Trend smoothing. The trend component \({{T}_{t}}^{k+1}\) is obtained by smoothing \({Y}_{t}^{deseason}\) with a Loess smoother.

After the inner loop finishes, the original sequence has been decomposed into the trend and seasonal components, and the residual component is calculated in the outer loop: \({R}_{t}^{k+1}={Y}_{t}-{T}_{t}^{k+1}-{S}_{t}^{k+1}\).
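The six inner-loop steps and the residual computation can be sketched in plain Python. This is a simplified illustration, not STL itself: every Loess smoother is replaced by a centered moving average or a per-phase mean (an assumption made to keep the sketch short), robustness weights are omitted, and the helper names `moving_average`, `cycle_subseries_mean`, and `stl_like` are hypothetical.

```python
from typing import List, Tuple


def moving_average(x: List[float], window: int) -> List[float]:
    """Centered moving average, truncated at the edges (stand-in for Loess)."""
    half = window // 2
    out = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out


def cycle_subseries_mean(x: List[float], period: int) -> List[float]:
    """Replace each value by the mean of all values sharing its seasonal phase."""
    means = []
    for phase in range(period):
        values = x[phase::period]
        means.append(sum(values) / len(values))
    return [means[i % period] for i in range(len(x))]


def stl_like(y: List[float], period: int,
             n_inner: int = 2) -> Tuple[List[float], List[float], List[float]]:
    n = len(y)
    trend = [0.0] * n
    seasonal = [0.0] * n
    for _ in range(n_inner):
        detrended = [y[t] - trend[t] for t in range(n)]          # Step 1
        s_hat = cycle_subseries_mean(detrended, period)          # Step 2
        t_hat = moving_average(s_hat, period)                    # Step 3
        seasonal = [s_hat[t] - t_hat[t] for t in range(n)]       # Step 4
        deseasonalized = [y[t] - seasonal[t] for t in range(n)]  # Step 5
        trend = moving_average(deseasonalized, period)           # Step 6
    # Residual: whatever trend and seasonal components do not explain.
    residual = [y[t] - trend[t] - seasonal[t] for t in range(n)]
    return trend, seasonal, residual
```

By construction the three returned components sum back to the input series, which mirrors the additive decomposition; the quality of each component depends on the smoother, which is where the real Loess-based procedure differs from this sketch.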

The parameters of STL were explored in previous experiments41. In this study, we set the relevant parameters to the recommended defaults. Figure 1 presents the decomposition results of STL with the default parameter values, using data from the Centers for Disease Control and Prevention of the United States.

2-Dimensional temporal convolution dense network (2DTCDN)

TCN is an effective approach proposed for modeling long sequences. Different from traditional RNNs, TCN leverages the concept of CNN to explore complex dependencies in time sequences. The TCN architecture, presented in Fig. 2, consists of several layers and an optional 1 × 1 convolution. Notably, dilated causal convolutions are used in TCN to increase the receptive field, enabling the capture of features at different time scales in time series data. For a 1-D sequence \(X\in {R}^{M}\) and a filter \({K}_{d}\) with dilation rate \(d\), the dilated causal convolution is defined as

\[\widehat{X}\left(t\right)=\sum_{\tau =0}^{l-1}{K}_{d}\left(\tau \right)\cdot X\left(t-d\cdot \tau \right)\]

where \(\widehat{X}\left(t\right)\) is the \(t\)-th element of the output of the dilated causal convolution, \(X(t-(d\cdot \tau ))\) represents the \((t-(d\cdot \tau ))\)-th element of the input sequence \(X\), \({K}_{d}(\tau )\) denotes the \(\tau\)-th element of the filter, and \(l\) is the length of the filter.
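As a small illustration of the operation just described, the following pure-Python sketch computes a 1D dilated causal convolution with implicit left zero padding, plus the receptive field of a stack of such layers. The function names are hypothetical, and the receptive-field formula assumes one convolution per dilation level with a shared filter length.

```python
from typing import List


def dilated_causal_conv1d(x: List[float], kernel: List[float],
                          dilation: int) -> List[float]:
    """Output at t combines x[t], x[t - d], x[t - 2d], ... and never looks ahead."""
    out = []
    for t in range(len(x)):
        acc = 0.0
        for tau, weight in enumerate(kernel):
            idx = t - dilation * tau
            if idx >= 0:  # indices before the sequence start act as zero padding
                acc += weight * x[idx]
        out.append(acc)
    return out


def receptive_field(filter_len: int, dilations: List[int]) -> int:
    """Number of input steps visible to one output after stacking the layers."""
    return 1 + (filter_len - 1) * sum(dilations)
```

For instance, stacking three layers with filter length 3 and dilation rates 1, 2, and 4 lets each output see 15 input steps, which is why dilation grows the receptive field much faster than adding undilated layers.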

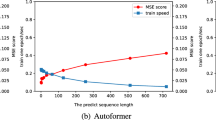

Figure 3 illustrates a dilated causal convolution with a filter size of l = 3 and dilation rates d = 1, 2, 4. However, it is important to note that TCN's filter is one-dimensional (1D) and can only convolve along the time dimension of the time series. Consequently, TCN has limitations in capturing interdependencies among the individual series of multivariate time series data. To better adapt TCN to multivariate time series forecasting tasks, we make some adjustments to the vanilla TCN and introduce the 2DTCDN. Figure 4 shows the architecture of 2DTCDN.

Causal convolution is an important concept: it constrains the output at time t to depend only on elements no later than t, ensuring that the future cannot influence the past. This constraint prevents information leakage, which is crucial in time series forecasting; the idea was initially proposed more than 30 years ago by Waibel et al.48. To maintain consistent dimensionality with the input layer and enable convolutions, zero padding is applied in the hidden layers. However, since we use 2D convolutional kernels in the proposed 2DTCDN, the padding method and convolution process differ from those of the 1D dilated causal convolution. For a 2D sequence \(X\in {R}^{M\times N}\) and a 2D filter \({K}_{d}\) with dilation rate d, the 2D dilated causal convolution is formulated as

\[\widehat{X}\left(i,j\right)=\sum_{m=1}^{h}\sum_{n=1}^{w}{K}_{d}\left(m,n\right)\cdot X\left(i-(m-1)\cdot d,j-(n-1)\cdot d\right)\]

where \(\widehat{X}\left(i,j\right)\) is the \((i,j)\)-th element of the output of the 2D dilated causal convolution, \(X(i-(m-1)\cdot d,j-(n-1)\cdot d)\) represents the \((i-(m-1)\cdot d,j-(n-1)\cdot d)\)-th element of the input 2D matrix \(X\), \({K}_{d}(m,n)\) denotes the \((m,n)\)-th element of the 2D filter, and \(h\) and \(w\) denote the height and width of the 2D filter, respectively.

Figure 5 presents the padding of 2DTCDN, with the kernel size set to 3 × 3 and the dilation set to 1. The padding in the time dimension is similar to that of the 1D causal convolution, with the padding length calculated as \(\left(filter\_size-1\right)\times dilation\). In the feature dimension, the first 'padding length' features are duplicated and placed after the last feature to serve as padding data.
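The padding scheme can be made concrete with a short pure-Python sketch. Several choices here are assumptions rather than details confirmed by the text: rows are taken to be time steps and columns to be features, the time padding is placed before the first time step, and the kernel walks backwards in time but forwards (with wrap-around) across features, following the padding description. The names `pad_2d` and `dilated_causal_conv2d` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def pad_2d(x: Matrix, k_time: int, k_feat: int, dilation: int) -> Matrix:
    """Zero-pad before the first time step; wrap the first features to the end."""
    pad_t = (k_time - 1) * dilation
    pad_f = (k_feat - 1) * dilation
    n_feat = len(x[0])
    zero_rows = [[0.0] * (n_feat + pad_f) for _ in range(pad_t)]
    # Copy the leading features after the last one (cyclic if pad_f > n_feat).
    wrapped = [row + [row[j % n_feat] for j in range(pad_f)] for row in x]
    return zero_rows + wrapped


def dilated_causal_conv2d(x: Matrix, kernel: Matrix, dilation: int) -> Matrix:
    """Valid convolution over the padded input; output has the shape of x."""
    k_time, k_feat = len(kernel), len(kernel[0])
    pad_t = (k_time - 1) * dilation
    padded = pad_2d(x, k_time, k_feat, dilation)
    out = []
    for i in range(len(x)):
        row = []
        for j in range(len(x[0])):
            acc = 0.0
            for m in range(k_time):
                for n in range(k_feat):
                    # m steps back in time, n steps ahead (cyclically) in features.
                    acc += kernel[m][n] * padded[i + pad_t - m * dilation][j + n * dilation]
            row.append(acc)
        out.append(row)
    return out
```

Because only the time axis is zero-padded on the past side, each output row depends on the current and earlier time steps only, while every feature column receives a full kernel window thanks to the duplicated features.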

Dilated convolution is a variant of the traditional convolutional operation used in deep learning49. In a standard convolution, a filter slides over the input data with a fixed stride, and each weight in the filter interacts with a neighboring input pixel. Different from the standard convolution, dilated convolution introduces gaps between the weights of the filter, allowing it to capture information from a broader receptive field while retaining the original resolution. For example, Fig. 6 illustrates a 2D dilated causal convolution process in which the filter size is set to 3 × 3 and the dilation and stride are both set to 1.

Residual connections

Residual connections are a fundamental architectural component in deep neural networks proposed by He et al.50. These connections are employed to mitigate the vanishing gradient problem. The main idea behind residual connections is to add a skip connection between different layers, creating a shortcut path for the gradient during backpropagation. We adopt the dense layer as the residual connection in the proposed 2DTCDN.

Dense layer

Recent studies by Zeng et al.30 and Das et al.31 have demonstrated the remarkable capabilities of simple linear models in time series prediction tasks. Essentially, even a simple one-layer linear model can effectively explore complex interdependencies within the sequence data, allowing the neural network to learn intricate features that are important for the task. In our proposed 2DTCDN, we integrate the residual block of TiDE with 2D dilated causal convolution.
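For orientation, a TiDE-style residual block can be sketched as a one-hidden-layer MLP with a ReLU activation, a linear skip connection from input to output, and layer normalization. The sketch below is a plain-Python illustration under stated assumptions: dropout is omitted, layer normalization has no learnable scale or shift, all weights are passed in explicitly, and the way the block is wired to the 2D dilated causal convolution inside 2DTCDN is not shown because the text does not specify it.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Dense layer: one output per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def residual_block(x: Vector,
                   w_hidden: Matrix, b_hidden: Vector,
                   w_out: Matrix, b_out: Vector,
                   w_skip: Matrix, b_skip: Vector) -> Vector:
    """Dense + ReLU, dense, plus a linear skip path, then layer normalization."""
    hidden = relu(linear(x, w_hidden, b_hidden))
    main = linear(hidden, w_out, b_out)
    skip = linear(x, w_skip, b_skip)  # shortcut path for the gradient
    return layer_norm([a + b for a, b in zip(main, skip)])
```

The skip path is itself linear, so the block can fall back to a purely linear mapping when the nonlinear branch contributes little.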

Overview of the STL-2DTCDN framework

Figure 7 illustrates the entire framework of the STL-2DTCDN. We use \({F}_{t}\) to denote the time features at time step t. These time features include holidays, the day of the week, and other information specific to a particular time step. The time series are first decomposed into three sub-series using STL: trend (\({T}_{t}\)), seasonal (\({S}_{t}\)), and residual (\({R}_{t}\)). Subsequently, these three sub-series, each concatenated with the time features, are processed separately by an encoder built from the 2DTCDN block. Next, the processed sub-series are concatenated to form the input of the subsequent decoder. Finally, the outputs of the decoder layer, concatenated with the time features, are passed to a dense layer to generate the predictions.

Objective function

The squared error is a loss function frequently employed in time series prediction tasks. It evaluates the squared differences between the real values and the predicted values. The optimization objective is formulated as:

\[\mathcal{L}=\sum_{t\in Train}{\parallel {Y}_{t+1:t+H}-{\widehat{Y}}_{t+1:t+H}\parallel }_{F}^{2}\]

where \(Train\) denotes the set of training time steps, \(t\) represents the time step, \(H\) represents the prediction horizon, \(c\) is the number of subseries (so that \({Y}_{t+1:t+H}\) is a \(c\times H\) matrix), and \({\parallel \cdot \parallel }_{F}\) is the Frobenius norm.

Evaluation metrics

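Mean absolute error (MAE) and mean square error (MSE) are adopted as the evaluation metrics to assess the performance of the models. They are defined as:

\[MAE=\frac{1}{c\cdot H}\sum_{t=L+1}^{L+H}\sum_{i=1}^{c}\left|{y}_{i}^{t}-{\widehat{y}}_{i}^{t}\right|\]

\[MSE=\frac{1}{c\cdot H}\sum_{t=L+1}^{L+H}\sum_{i=1}^{c}{\left({y}_{i}^{t}-{\widehat{y}}_{i}^{t}\right)}^{2}\]

where \(t\) represents the time step, \(H\) indicates the prediction horizon, and \(c\) is the number of subseries.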
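A minimal Python sketch of these quantities follows, assuming the usual definitions in which MAE and MSE average over all \(H\) time steps and \(c\) subseries, and the training loss is the unnormalized squared Frobenius norm of the error matrix. Forecasts are represented as lists of \(H\) rows with \(c\) values each; the function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def _errors(y_true: Matrix, y_pred: Matrix) -> List[float]:
    """Flatten the element-wise differences of two H x c matrices."""
    return [t - p
            for row_t, row_p in zip(y_true, y_pred)
            for t, p in zip(row_t, row_p)]


def mae(y_true: Matrix, y_pred: Matrix) -> float:
    errs = _errors(y_true, y_pred)
    return sum(abs(e) for e in errs) / len(errs)


def mse(y_true: Matrix, y_pred: Matrix) -> float:
    errs = _errors(y_true, y_pred)
    return sum(e * e for e in errs) / len(errs)


def squared_frobenius(y_true: Matrix, y_pred: Matrix) -> float:
    """Sum of squared errors, i.e. the squared Frobenius norm of the difference."""
    return sum(e * e for e in _errors(y_true, y_pred))
```

MSE is the squared Frobenius norm divided by \(c\cdot H\), so minimizing one minimizes the other for a fixed horizon and number of subseries.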

Experiments

Datasets

The proposed STL-2DTCDN is tested on six datasets. All datasets are split chronologically into training, validation, and test sets. Traffic and Electricity use a split ratio of 7:1:2, while the ETT datasets use a ratio of 6:2:2, as recommended by Informer24 and Autoformer25. Table 1 presents statistical information of the datasets.

• ETT (Electricity Transformer Temperature)24 includes two datasets at the 1-h level (ETTh1, ETTh2) and two datasets at the 15-min level (ETTm1, ETTm2). Each dataset consists of seven electricity transformer attributes.

• Traffic30 collects road occupancy rates measured by sensors of the California Department of Transportation.

• Electricity (ECL)30 describes the electricity consumption (kWh) of 321 clients.
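A chronological split by ratio can be sketched as below. The helper `chronological_split` is hypothetical, and the floor-based rounding of the boundaries is an assumption; benchmark loaders may compute the exact boundaries differently.

```python
from typing import List, Sequence, Tuple


def chronological_split(series: Sequence, ratios: Tuple[int, int, int]
                        ) -> Tuple[List, List, List]:
    """Split without shuffling so that validation and test follow training in time."""
    total = sum(ratios)
    n = len(series)
    n_train = n * ratios[0] // total
    n_val = n * ratios[1] // total
    train = list(series[:n_train])
    val = list(series[n_train:n_train + n_val])
    test = list(series[n_train + n_val:])  # remainder goes to the test set
    return train, val, test
```

For example, `chronological_split(data, (7, 1, 2))` corresponds to the Traffic and Electricity setting and `chronological_split(data, (6, 2, 2))` to the ETT setting. Keeping the order intact matters because shuffling would let the model train on observations that come after the test period.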

Methods for comparison

At present, deep learning-based methods are the predominant approach in time series forecasting. We select seven baseline methods for comparison with the STL-2DTCDN. These baseline methods fall into three categories: the TCN14 model, the Transformer-based methods (Informer24, Autoformer25, PatchTST/6427, and FEDformer26), and the linear models (DLinear30 and TiDE31). TCN is designed for processing sequence data, and its dilated causal convolutions enhance its capacity to explore long-term dependencies. Transformer-based methods have recently achieved great success in time series forecasting tasks. In addition, linear models have been shown to achieve promising results in various forecasting tasks.

TiDE conducts experiments with a fixed look-back window of 720 for all prediction lengths. The other compared models set their look-back windows as recommended by their authors. The results of the baseline methods are taken from TiDE31 and PatchTST27.

Experimental settings

The proposed STL-2DTCDN is trained using the L2 loss function and optimized with Adam51, with an initial learning rate of \({10}^{-4}\). The look-back window is set to 720 for all prediction lengths {96, 192, 336, 720}, following TiDE. The batch size is set to 32 for training, and experiments are repeated five times. Experiments are conducted using two NVIDIA GeForce RTX 2080 Ti GPUs, with the implementation in PyTorch. Table 2 presents the range of the involved hyper-parameters. We tune these hyper-parameters using the rolling validation error on the validation dataset.
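To make the look-back and horizon setting concrete, the following sketch builds (input, target) pairs with a sliding window. The helper `make_windows` and the stride of one time step are assumptions for illustration; the actual data loader may differ.

```python
from typing import List, Sequence, Tuple


def make_windows(series: Sequence, look_back: int, horizon: int
                 ) -> List[Tuple[list, list]]:
    """Pair each look-back window with the horizon that immediately follows it."""
    samples = []
    for start in range(len(series) - look_back - horizon + 1):
        inputs = list(series[start:start + look_back])
        targets = list(series[start + look_back:start + look_back + horizon])
        samples.append((inputs, targets))
    return samples
```

With a look-back window of 720 and a horizon of 96, a series of n time steps yields n - 720 - 96 + 1 samples, so longer horizons leave fewer training samples from the same data.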

Since the size of the look-back window is significantly different from the number of subseries for all datasets, the two dimensions of the convolution kernel are designed separately for the time and feature dimension. Specifically, considering the varying number of subseries in different datasets, such as ECL and Traffic with a larger number of time series, we utilize larger parameters [4, 8, 12, 16] in the feature dimension, while for datasets with fewer time series like ETT datasets, smaller parameters [1, 2, 3, 4] are employed in the feature dimension. Table 3 reports the chosen hyper-parameters for six datasets.

Experimental results

The MAE and MSE of the proposed STL-2DTCDN and the compared methods on six practical datasets are shown in Table 4. Each row in the table corresponds to a comparison of results for a specific prediction horizon, and each column represents the results of a particular model in all cases. The values highlighted in bold are the best results.

Since all models are trained with the squared error, we concentrate on the MSE column for comparisons. From Table 4, we can observe that: (1) The STL-2DTCDN achieves the best results in most cases (as indicated by the count of best results in the last row). (2) The STL-2DTCDN shows better performance than TiDE, with the MSE decreasing on average by 3.2% (at 96), 5.7% (at 192), 5.8% (at 336), and 6.9% (at 720). The longer the prediction horizon, the larger the improvement of STL-2DTCDN over TiDE. This suggests that STL-2DTCDN is more suitable for long-term forecasting. (3) Our proposed model achieves significantly better results than TiDE on large datasets for long-term forecasting. At a prediction length of 720, the MSE decreases by 10.1% for the Traffic dataset and by 13.8% for the Electricity dataset. However, for the four ETT datasets with a prediction length of 720, the STL-2DTCDN achieves only a 4.4% decrease in MSE on average compared to TiDE. We believe that this is because the Traffic and Electricity datasets contain a significantly larger number of time series than the four ETT datasets. Consequently, the 2DTCDN can utilize a larger kernel size in the feature dimension to better explore interdependencies among different time series.

Figures 8 and 9 present the comparison of forecasting results calculated by STL-2DTCDN and TiDE with the ground truth for the Traffic and Electricity datasets. From these figures, we observe that the STL-2DTCDN performs better in capturing the temporal repeating patterns and fitting the trend of the curve. This indicates that the STL-2DTCDN effectively captures the temporal characteristics of the data sequences, including seasonality and trends.

Ablation studies

The contribution of each component of STL-2DTCDN is assessed through ablation studies. Specifically, each component is removed in turn from the STL-2DTCDN, and we evaluate the performance of each sub-framework consisting of the remaining components. The sub-frameworks are detailed as follows:

• Re/STL: Remove the STL from the originally proposed STL-2DTCDN.

• Re/2DTCDN: Remove the 2DTCDN from the originally proposed STL-2DTCDN.

• Re/Time features: Remove the Time features from the originally proposed STL-2DTCDN.

• STL-2DTCDN → STL-TCN: 2DTCDN is replaced with a vanilla TCN.

Table 5 presents the performance of the original STL-2DTCDN and of the sub-frameworks obtained by removing each component. It can be observed from Table 5 that the combination of STL, 2DTCDN, and Time features delivers the most precise forecasts across the datasets, and the removal of any single component leads to a decline in performance. Furthermore, when the 2DTCDN is replaced with a standard TCN, the forecasting results demonstrate that 2DTCDN achieves better performance in long-term multivariate prediction tasks.

Conclusions

In this paper, we design an STL-2DTCDN model for long-term multivariate time series forecasting. STL-2DTCDN utilizes STL to decompose the original time sequence into three subseries. Time features are used to add additional covariates to the model. Furthermore, we adapt the vanilla TCN and introduce the 2DTCDN for long-term multivariate time series forecasting. Compared to various Transformer-based methods and linear models, the STL-2DTCDN exhibits strong capabilities in capturing various temporal patterns and exploring complex interdependencies between related subseries for long-term multivariate time series forecasting. In the next stage, we will concentrate on: (1) interpreting the model outputs and understanding how a deep neural network achieves its forecasting results; (2) exploring alternative approaches to enhance the capability of capturing temporal patterns and exploring the complex interdependencies inherent in multivariate time series.

Data availability

All datasets used can be accessed from the corresponding author on request.

References

Reza, S., Ferreira, M. C., Machado, J. J. M. & Tavares, J. M. R. A multi-head attention-based transformer model for traffic flow forecasting with a comparative analysis to recurrent neural networks. Expert. Syst. Appl. 202, 117275 (2022).

Han, Y. et al. A short-term wind speed prediction method utilizing novel hybrid deep learning algorithms to correct numerical weather forecasting. Appl. Energy 312, 118777 (2022).

Khan, Z. A. et al. Efficient short-term electricity load forecasting for effective energy management. Sustain. Energy Technol. 53, 102337 (2022).

Liang, Y., Lin, Y. & Lu, Q. Forecasting gold price using a novel hybrid model with ICEEMDAN and LSTM-CNN-CBAM. Expert. Syst. Appl. 206, 117847 (2022).

Johansson, C., Zhang, Z., Engardt, M., Stafoggia, M. & Ma, X. Improving 3-day deterministic air pollution forecasts using machine learning algorithms. Atmos. Chem. Phys. 38, 1–52 (2023).

Box, G. E., Jenkins, G. M., Reinsel, G. C. & Ljung, G. M. Time Series Analysis: Forecasting and Control (John Wiley & Sons, Hoboken, 2015).

Alizadeh, M., Rahimi, S. & Ma, J. A hybrid ARIMA–WNN approach to model vehicle operating behavior and detect unhealthy states. Expert. Syst. Appl. 194, 116515 (2022).

Smyl, S. A hybrid method of exponential smoothing and recurrent neural networks for time series forecasting. Int. J. Forecast. 36(1), 75–85 (2020).

Xiao, J. & Zhou, Z. Research progress of RNN language model. ICAICA. IEEE, 1285–1288 (2020).

Liu, Y., Gong, C., Yang, L. & Chen, Y. DSTP-RNN: A dual-stage two-phase attention-based recurrent neural network for long-term and multivariate time series prediction. Expert. Syst. Appl. 143, 113082 (2020).

Hajiabotorabi, Z., Kazemi, A., Samavati, F. F. & Ghaini, F. M. M. Improving DWT-RNN model via B-spline wavelet multiresolution to forecast a high-frequency time series. Expert. Syst. Appl. 138, 112842 (2019).

Khan, M., Wang, H., Riaz, A., Elfatyany, A. & Karim, S. Bidirectional LSTM-RNN-based hybrid deep learning frameworks for univariate time series classification. J. Supercomput. 77, 7021–7045 (2021).

Zheng, W. & Chen, G. An accurate GRU-based power time-series prediction approach with selective state updating and stochastic optimization. IEEE Trans. Cybern. 52(12), 13902–13914 (2021).

Bai, S., Kolter, J. Z. & Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv preprint arXiv:1803.01271 (2018).

Livieris, I. E., Pintelas, E. & Pintelas, P. A CNN–LSTM model for gold price time-series forecasting. Neural Comput. Appl. 32, 17351–17360 (2020).

Du, L. et al. Bayesian optimization based dynamic ensemble for time series forecasting. Inf. Sci. 591, 155–175 (2022).

Du, L. et al. Graph ensemble deep random vector functional link network for traffic forecasting. Appl. Soft Comput. 131, 109809 (2022).

Albuquerque, P. H. M., Peng, Y. & Silva, J. P. F. Making the whole greater than the sum of its parts: A literature review of ensemble methods for financial time series forecasting. J. Forecast. 41(8), 1701–1724 (2022).

Vaswani, A., Shazeer, N., Parmar, N. et al. Attention is all you need. In NIPS, vol. 30 (2017).

Khan, S. et al. Transformers in vision: A survey. ACM. Comput. Surv. 54(10s), 1–41 (2022).

Wu, Y., Zhao, Y., Hu, B., Minervini, P., Stenetorp, P. & Riedel, S. An efficient memory-augmented transformer for knowledge-intensive NLP tasks. arXiv preprint arXiv:2210.16773 (2022).

Li, S., Jin, X., Xuan, Y., Zhou, X., Chen, W., Wang, Y. X. & Yan, X. Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting. In Advances in Neural Information Processing Systems, Vol. 32 (2019).

Kitaev, N., Kaiser, Ł. & Levskaya, A. Reformer: The efficient transformer. arXiv preprint arXiv:2001.04451 (2020).

Zhou, H., Zhang, S., Peng, J., Zhang, S., Li, J., Xiong, H. & Zhang, W. Informer: Beyond efficient transformer for long sequence time-series forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 35(12) 11106–11115 (2021).

Wu, H., Xu, J., Wang, J. & Long, M. Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. Adv. Neural Inf. Process. Syst. 34, 22419–22430 (2021).

Zhou, T., Ma, Z., Wen, Q., Wang, X., Sun, L. & Jin, R. Fedformer: Frequency enhanced decomposed transformer for long-term series forecasting. In International Conference on Machine Learning, 27268–27286 (2022).

Nie, Y., Nguyen, N. H., Sinthong, P. & Kalagnanam, J. A time series is worth 64 words: Long-term forecasting with transformers. arXiv preprint arXiv:2211.14730 (2022).

Wang, X., Liu, H., Yang, Z., Du, J. & Dong, X. CNformer: a convolutional transformer with decomposition for long-term multivariate time series forecasting. Appl. Intell. 53, 1–15 (2023).

Cirstea, R. G., Guo, C., Yang, B., Kieu, T., Dong, X. & Pan, S. Triformer: Triangular, variable-specific attentions for long sequence multivariate time series forecasting—full version. arXiv preprint arXiv:2204.13767 (2022).

Zeng, A., Chen, M., Zhang, L. & Xu, Q. Are transformers effective for time series forecasting? In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 37(9) 11121–11128 (2023).

Das, A., Kong, W., Leach, A. et al. Long-term Forecasting with TiDE: Time-series Dense Encoder. arXiv preprint arXiv:2304.08424 (2023).

Cleveland, R. B. et al. STL: A seasonal-trend decomposition procedure based on loess. J. Off. Stat. 6(1), 3–73 (1990).

Sorjamaa, A., Hao, J., Reyhani, N., Ji, Y. & Lendasse, A. Methodology for long-term prediction of time series. Neurocomputing 70(16–18), 2861–2869 (2007).

Chen, R. & Tao, M. Data-driven prediction of general Hamiltonian dynamics via learning exactly-symplectic maps. In International Conference on Machine Learning, 1717–1727 (2021).

Stefenon, S. F. et al. Time series forecasting using ensemble learning methods for emergency prevention in hydroelectric power plants with dam. Electric Power Syst. Res. 202, 107584 (2022).

Gao, R. et al. Dynamic ensemble deep echo state network for significant wave height forecasting. Appl. Energy 329, 120261 (2023).

Abdar, M. et al. Uncertainty quantification in skin cancer classification using three-way decision-based Bayesian deep learning. Comput. Biol. Med. 135, 104418 (2021).

Gao, R. et al. Inpatient discharges forecasting for Singapore hospitals by machine learning. IEEE J. Biomed. Health Inf. 26(10), 4966–4975 (2022).

Wen, Q., Zhou, T., Zhang, C. et al. Transformers in time series: A survey. arXiv preprint arXiv:2202.07125 (2022).

Liu, S., Yu, H., Liao, C. et al. Pyraformer: Low-complexity pyramidal attention for long-range time series modeling and forecasting. In International Conference on Learning Representations (2021).

He, H. et al. A seasonal-trend decomposition-based dendritic neuron model for financial time series prediction. Appl. Soft Comput. 108, 107488 (2021).

Lin, Y. et al. Forecasting crude oil futures prices using BiLSTM-Attention-CNN model with Wavelet transform. Appl. Soft Comput. 130, 109723 (2022).

Iwabuchi, K. et al. Flexible electricity price forecasting by switching mother wavelets based on wavelet transform and Long Short-Term Memory. Energy and AI 10, 100192 (2022).

Gao, R. et al. Random vector functional link neural network based ensemble deep learning for short-term load forecasting. Expert. Syst. Appl. 206, 117784 (2022).

Gao, R. et al. Time series forecasting based on echo state network and empirical wavelet transformation. Appl. Soft Comput. 102, 107111 (2021).

Taieb, S. B. & Hyndman, R. J. Recursive and direct multi-step forecasting: the best of both worlds. Vol. 19 (Department of Econometrics and Business Statistics, Monash Univ., 2012).

Chevillon, G. Direct multi-step estimation and forecasting. J. Econ. Surv. 21(4), 746–785 (2007).

Waibel, A., Hanazawa, T., Hinton, G., Shikano, K. & Lang, K. J. Phoneme recognition using time-delay neural networks. IEEE Trans. Acoust. Speech Signal Process. 37(3), 328–339 (1989).

Yu, F. & Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv preprint arXiv:1511.07122 (2015).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In CVPR, 770–778 (2016).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (61772321) and the Shandong Natural Science Foundation (ZR202011020044).

Author information

Contributions

J.H. and F.L.: Literature Review and Proposed Algorithm; J.H.: Implementation; J.H. and F.L.: Results and Discussion.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hao, J., Liu, F. Improving long-term multivariate time series forecasting with a seasonal-trend decomposition-based 2-dimensional temporal convolution dense network. Sci Rep 14, 1689 (2024). https://doi.org/10.1038/s41598-024-52240-y
