Abstract

This paper presents a technique to model the residual dynamics between a high-level planner and a low-level controller by considering reference trajectory tracking in a cluttered environment as an example scenario. We focus on minimising residual dynamics that arise due to only the kinematical modelling of high-level planning. The kinematical modelling is sufficient for such scenarios due to safety constraints and aggressive manoeuvres that are difficult to perform when the environment is cluttered and dynamic. We used a simplified motion model to represent the motion of the quadrotor when formulating the high-level planner. The Sparse Gaussian Process Regression-based technique is proposed to model the residual dynamics. Recently proposed Data-Driven MPC is targeting aggressive manoeuvres without considering obstacle constraints. The proposed technique is compared with Data-Driven MPC to estimate the residual dynamics error without considering obstacle constraints. The comparison results yield that the proposed technique helps to reduce the nominal model error by a factor of 2 on average. Further, the proposed complete framework was compared with four other trajectory-tracking approaches in terms of tracking the reference trajectory without colliding with obstacles. The proposed approach outperformed the others with less flight time without losing computational efficiency.

Similar content being viewed by others

Introduction

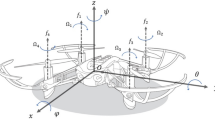

Accurate reference trajectory tracking in cluttered environments1 is still a challenging research problem. Sensing capabilities, e.g., how far the sensor can observe the environment, and computational and power capabilities are the main constraints that face when solving such research problems. Therefore, the quadrotor’s full maneuverability can not be exploited due to those constraints. On the contrary, when the problem formulation uses a simplified motion model instead of a complex dynamical model, the residual dynamical error arises between the actual controller and desired control commands.

The high-level control command generation is based on Model Predictive Control (MPC). Residual dynamics (y) that arise between high-level control generated by MPC and low-level control generated by flight controller can not be estimated analytically. Hence, the Sparse Gaussian Process (SGP)-based learning technique is proposed to estimate y, where \(\textbf{x}_k\), \(\bar{\textbf{x}}\), and \(\hat{\textbf{x}}\) are denoted current state, next desired state, and actual state after applying the current control to the system, respectively.

For controlling a quadrotor through high-speed agile maneuvers, it is necessary to consider aerodynamic drag effects, which act as the main component of residual dynamics and external aerodynamic effects, e.g., wind, that is applied on the quadrotor in addition to other constraints: dynamics and obstacle constraints. However, several studies related to agile maneuvers2,3,4,5,6 do not consider such effects, which are very difficult to incorporate when modelling system dynamics, except approximating the quadrotor dynamics with simplified motion model7. Even if those effects are incorporated, the necessary external aerodynamic effects are difficult to obtain due to high-computational demands that leverage real-time performance. In other words, model complexity is constrained by the computational capabilities of the onboard controller. Nonetheless, such aerodynamic effects produce negligible impact for the low-speed maneuvers since dynamic effects can be neglected, considering only the kinematic modelling, especially in the plan-based control paradigm. Such a paradigm consists of two stages: planning and controlling. In the planning stage, a simplified motion model is utilized for generating near-optimal control policy, whilst in the controlling stage, the controller depends on the input from the planner and generates sufficient control commands based on the simplified motion model. However, utilizing an approximated motion model in the planning stage produces a dynamical error (residual dynamics) between the planner and the low-level controller that is hard to estimate analytically (Fig. 1).

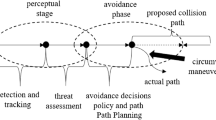

In this research, we used a planner that consists of two sub-planners: a local planner that consists of a 4 degree of freedom model (simplified motion model)8 and a replanner9 that can push the reference trajectory out of obstacle zones. The local planner is formulated as a hard constraint optimization problem, whereas the replanner is formulated as a box-constrained function minimization problem, which iteratively refines the reference trajectory by pushing away from the obstacle zones. To compensate for the limitations of the simplified motion model, a learning mechanism based on the Sparse Gaussian Process (SGP) was introduced. This learning mechanism modeled the residual dynamics (y) that arose from the discrepancy between the simplified motion model and the actual system dynamics. As depicted in Fig. 1, the residual dynamics is determined by \(y = g(\textbf{z}), \; \textbf{z} = [\textbf{x}, \textbf{u}]\), where \(\textbf{g}\) is the model that estimates residual dynamics, given current state and current control (\(\textbf{x}, \textbf{u}\)). The proposed approach employs velocity residual as the residual dynamics to integrate with the local planner’s nominal dynamics, effectively reducing the discrepancy between the estimated control output from the local planner and the desired control that the simplified motion model is incapable of estimating.

Related works

Most recent trajectory generation and tracking methods are proposed either without considering obstacles or considering partially known or simplified obstacles, e.g., gates. For example, in10 they have achieved 60km/h on a defined racing track. This approach uses Model Predictive Contouring Control (MPCC)11 for local replanning at such a speed. However, such methods are not capable of flying through cluttered environments due to generating a time-optimal trajectory, ensuring safety and feasibility that are limited by onboard computational capacity. Graph-based planning techniques often rely on geometric methods like Jump Point Search (JPS)12, RRT13, and A14 to generate an initial trajectory by identifying a path or a set of waypoints for a limited horizon. This initial trajectory serves as a guide for subsequent path-planning stages. This trajectory generation can be achieved by different methods, including minimum snap15, B-spline9. These generated trajectories, in general, do not guarantee dynamic feasibility. Kinodynamic-based trajectory planning16,17 addressed these issues through kinodynamic search, whilst ensuing dynamic feasibility. Despite addressing such issues, kinodynamic-based trajectory planning remains a highly computational footprint. In18, the authors developed a kinodynamic-based framework, yet the framework failed to guarantee consistent smoothness.

Hierarchical trajectory planning9, e.g., global and local planning, is one of the ways that can address all the aforementioned issues when planning in a cluttered environment. In such planning, a global planner19 tries to refine the desired trajectory considering safety constraints. Along with that, a local planner8 ensures the dynamic feasibility and imposes safety constraints. In the DARPA subterranean challenge20, Tranzatto et al.21 and De Petris et al.22 used a modified version of DJI M100 with position level control23 relying on a highly accurate localisation module. Chung et al.24, and Arm et al.25 used multiple depth sensors to build highly accurate elevation maps and groundbreaking local motion planning techniques, enabling robots to navigate effectively in complex and cluttered underground environments ensuring planning outputs are feasible for low-level controllers targeting exploration tasks. On the other hand, with fewer sensing capabilities, incorporating fully-fledged system dynamics makes it harder to solve trajectory planning whilst avoiding obstacles in real-time. Hence, the approaches that employ approximated system dynamics use external and internal disturbances alongside approximated dynamics19,26,27,28. Also, recent advancements in adaptive robust nonlinear control-based techniques29,30 have demonstrated promising results for controlling aerial vehicles. Most of these techniques can be categorized under different variations of receding horizon-based control. A special variant of such control, GP-MPC (Gaussian Process MPC) excels in estimating unknown quantities, such as residual dynamics, internal disturbances, and external disturbances. A recent line of work7,31,32,33 has been investigating how to incorporate Gaussian Process (GP) to learn system dynamics entirely or partially considering external disturbances, for example, wind28, from data, targeting applications such as reference trajectory tracking and reaching to a specified goal. The choice of GPs is preferred for system identification-related tasks, e.g., residual dynamics modelling, compared to more expressive modelling techniques such as neural networks in the recent past. GPs are preferred due to the modelling constraints for receding horizon planning, e.g., MPC and NMPC. It is sophisticated to develop the back-propagation algorithm34 to estimate gradients of the cost function when formulating receding horizon planning problems within the optimization solver. However, for GPs, it is relatively easy to implement gradient estimation. Hence, variants of GPs are proposed for system identification-related tasks more often than the other techniques.

Instead of learning a complete dynamical model, the authors of35 proposed to learn only time-varies state uncertainty using Gaussian belief propagation. Consequently, learning only the sub-portion of time-varies state or/and control uncertainty as the residual dynamics yields quite promising results7. The latter training technique requires less data to learn the residual dynamics. Yet it is advisable to have more training data to improve the robustness to learn the residual dynamics. However, a large amount of training data is problematic when GP is applied for real-time applications. There are several ways to reduce the overhead that arises when utilizing a high volume of training data36: select a set of points, i.e, inducing points37,38, that approximate the original training data distribution; exploit the structure of the formation of GP39,40,41, e.g., Kronecker Structure42; and use variational methods. Sparse GP43 is such a variant that can be used in different ways to obtain inducing points: Subset of Regressors (SoR), Deterministic Training Conditional (DTC), Fully Independent Training Conditional (FITC)38, and Structured Kernel Interpolation (SKI)39. The complexity reduces from \(O(n^3)\) to \(O(nm^3)\), where n and m are the numbers of points (\(n >m\)) that are used to model GP and Sparse GP, respectively. Inducing point methods are quite appropriate for the real-time setting. However, model accuracy degrades as m decreases, which can be seen as a trade-off between model expressiveness reduction versus computational complexity.

In this work, we present how to reduce residual dynamics that arise between high-level planning and low-level controlling by considering reference trajectory tracking as an example in the plan-based control paradigm (Table 1). The proposed approach is not limited to the considered example. It is valid for any motion planner which depends on a low-level controller. Depending on the representation of the motion model, the residual dynamics are defined by selecting a set of states and controlling input appropriately. The uniqueness of the proposed approach is that it is invariant to the geometry representation of the trajectory. Hence, once the residual dynamics model is trained, it can be deployed without retraining. The following sections explain the training process of such residual dynamics model and deploy it in hardware, which shows the effectiveness of incorporating residual dynamics modelling in high-level planning.

Our contributions are as follows:

-

1.

To address the limitations of traditional motion models in capturing the actual system dynamics of aerial vehicles, we propose a hybrid approach that combines a simplified motion model for high-level planning with a learning-based technique, Sparse Gaussian Process, to estimate residual dynamics. This approach enables real-time performance by running the entire algorithm on an onboard computer.

-

2.

To augment the replanner’s9 capability to circumvent local minima, we introduce a mechanism that dynamically regenerates the reference trajectory whenever the quadrotor’s actual position deviates substantially from the initial reference trajectory. This enhancement effectively precludes the quadrotor from becoming ensnared in local minima during the receding horizon control process.

Methodology

This paper tackles the challenge of using a less accurate model (kinematic or dynamic) with Model Predictive Control (NMPC) by learning the residual errors between that approximated model and the actual model. Instead of a full 6-degree-of-freedom dynamic model, we employ a 4-degree-of-freedom model and propose a Sparse Gaussian Process technique to reduce the impact of dynamic factors that depend on the operating conditions and can hardly be modelled analytically in advance. Crucially, we aim to maintain trajectory planner performance, so we focus on learning the rate of velocity changes as residual dynamics. This choice is invariant to the trajectory’s geometric representation, allowing offline learning of the residual dynamics distribution. By generating diverse trajectories, we capture the latent distribution of residual dynamics. We then augment the nominal model with this learned residual dynamics model and demonstrate it in online NMPC as a feedback controller, especially for the quadrotor in the plan-based robot control paradigm.

The plan-based robot control paradigm comprises two stages: planning and controlling. The planning stage can be a combination of several planners that run simultaneously or cascade planners45 that run sequentially. In this work, the planning stage is formed by two planners: a replanner and a local planner. We have used the approach proposed in9 as our replanner. It executes continuous refinement of the provided reference trajectory out of obstacle regions. The proposed learning-based residual dynamics are augmented into a local planner8. Further, the subsections are organized as follows: formulating the local planner with residual dynamics in the Residual Augmented Quadrotor Motion Model; laying down training strategies of residual dynamics in the Nonlinear Model Predictive Control local planner, and estimating approximated distribution to represent the residual dynamics in Sparse GP Regression.

Residual augmented quadrotor motion model

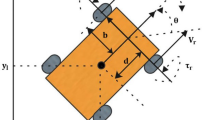

The proposed residual dynamics learning framework can be integrated with various quadrotor kinematical and dynamical models when formulating NMPC. While the choice of model can significantly impact the effectiveness of the approach, it also introduces various challenges, including high computational demands, being stuck in local minima, low convergence rate, and real-time applicability. In NMPC, finding a delicate balance between avoiding local minima and managing computational costs is crucial. A longer prediction horizon helps evade local minima, but it also increases the number of optimization variables, demanding more computational resources. Several strategies exist to reduce these variables, but each has its own drawbacks. Increasing the discretization interval (\(\delta t\)): This relies heavily on the accuracy of the motion model. If the model is approximated, it can lead to unrealistic control commands. Increasing maximum control inputs (velocity, acceleration): this raises the risk of collisions with obstacles. Using high-degree-of-freedom models: if some degrees of freedom aren’t fully utilized, unnecessary computational overhead is introduced. In this work, we chose a 4-degree-of-freedom model (\(\textbf{f}_{norm}(\textbf{x}, \textbf{u})\))8, aligning with our focus on low-speed maneuvering. To compensate for potential inaccuracies, we employed Sparse Gaussian Regression to model residual dynamics. This approach strikes a balance between avoiding local minima and maintaining computational efficiency, demonstrating the intricate link between these two factors in NMPC. Let \(\textbf{f}_{est}(\textbf{x}, \textbf{u})\) be the augmented quadrotor motion model:

where the state vector and the control inputs, defined \(\textbf{x}\) and \(\textbf{u}\), respectively. Depending on which states and controls are learnt, \(B_z\) gives the appropriate transformation, defined in the following sections. The Gaussian process was used to model the residual dynamics \(g(\textbf{z}) \sim N(\mu , \Sigma _p), \textbf{z} = [\textbf{x};\textbf{u}]\), where \(\Sigma _p\) is a i.i.d (independent and identically distributed) process noise and \(\mu\) estimated mean value. The training data were centered, i.e., zero mean, before stating the learning process, which consists of only the diagonal covariance (or assuming no correlation between the two states of residual dynamics). The explicit Runge-Kutta 4th order algorithm was used to incorporate \(\textbf{f}_{est}(\textbf{x}, \textbf{u})\) motion model in the discrete-time \(\textbf{f}_d(\textbf{x}_k, \textbf{u}_k, \delta t)\) with integration step size \(\delta t\) as \(\textbf{x}_{k+1} = \textbf{f}_d(\textbf{x}_k, \textbf{u}_k, \delta t),\) where k depicts the time index. Since we rely on a less accurate model (or an approximate model) using the Runge-Kutta 4th order method46 compared to the Eular, reduce the integration error of the discrete system dynamics.

The high-level overview of the proposed framework for learning the residual dynamics. Design matrix \(B_{\textbf{z}}\) defines which states and control inputs should be trained. Learned residual dynamics \(g(\textbf{z}_{k+i-1})\) is added to nominal dynamics \(f_{norm}(\textbf{x}_{k+i-1}, \textbf{u}_{k+i-1})\) when formulating NMPC by using the multiple shooting technique. In the training stage, collect the data \(D = \{X, \textbf{y}\} = \{(\textbf{x}_i, \bar{\textbf{u}}_i, \bar{\textbf{x}}_i, \hat{\textbf{x}}_i), y_i\}, i=0, \ldots ,n\) for n number of times, where \((\textbf{x}_i, \bar{\textbf{u}}_i, \bar{\textbf{x}}_i\), and \(\hat{\textbf{x}}_i)\) denote current state, estimated near-optimal control inputs and state after applying NMPC, and actual system state after applying estimated control inputs, respectively. We used the same PD regulator that was proposed in8. This work focuses on the DJI M100 quadrotor as the representative real system..

Nonlinear model predictive control based local planner

To obtain augmented residual motion model \(\textbf{f}_{d}(\textbf{x}, \textbf{u}, \delta t)\), the local planner calculates near-optimal control policy. However, the local planner does not guarantee optimality because NMPC-based planners calculate approximated control policy heuristically. In complex and cluttered environments, generating a convex representation of free space is generally challenging. However, the incremental Euclidean Distance Transformation Map (EDTM)8, when applied along the reference trajectory, can provide accurate obstacle poses. These poses can be incorporated as hard constraints in the NMPC formulation, effectively addressing the non-linearity of obstacle constraints. NMPC is preferred over linear or convex MPC due to its ability to generate feasible solutions even when dealing with non-linear constraints. However, NMPC is susceptible to getting stuck in local minima, unlike convex MPC which guarantees global optimality. The following section outlines a proposed method to mitigate this issue.

For a specified time index k, a corresponding slice of the reference trajectory \([\textbf{x}_{k}^{ref}, \ldots , \textbf{x}_{k+N}^{ref}, \textbf{u}_{k}^{ref}, \ldots , \textbf{u}_{k+N}^{ref}]\) and the current state \({\textbf {x}}_{k}\), and close-in obstacles \(g_2(\textbf{w})\) information are available. The local planner, which was designed using multiple shooting technique, generates near-optimal control policy \(\textbf{w} = [\bar{{\textbf {u}}}_{k}, \ldots , \bar{{\textbf {u}}}_{k+N-1}, \bar{{\textbf {x}}}_{k}, \ldots , \bar{{\textbf {x}}}_{k+N}]\) as follows:

where the performance index (or objective function) \(J(\textbf{w})\) aims to minimize the proportional error \(\bar{\textbf{x}}_{k+i} -\textbf{x}^{ref}_{k+i}\) and the control effect \(\bar{\textbf{u}}_{k+i} -\textbf{u}^{ref}_{k+i}\) by employing a quadratic cost function. The continuous and differentiable nature of quadratic functions facilitates smooth optimization, enabling efficient solution via sequential quadratic programming (SQP)47. The prediction horizon length of NMPC, denoted N. Both maximum and minimum pairs of velocities and positions, denoted \(\textbf{v}_{max}, \textbf{x}_{max}\) and \(\textbf{v}_{min}, \textbf{x}_{min}\), respectively. Both the Q and R matrices are positive semi-definite and positive definite, respectively. These matrices govern the importance of state variables and control inputs in the cost function, \(J(\textbf{w})\). To obtain the discrete dynamical model, the Runge-Kutta 4th order algorithm is applied to the quadrotor’s motion dynamics, resulting in the discrete dynamical model \(\textbf{x}_{k+1} = \textbf{f}_d(\textbf{x}_k, \textbf{u}_k, \delta t)\), where \(\delta t\) is the time step. The system dynamic constraints are formulated based on the given discrete dynamical model \(\textbf{f}_d\)(Eq. 2)8.

At each time step k, the desired reference pose and control are denoted by \(\textbf{x}^{ref}_{k}\) and \(\textbf{u}^{ref}_{k}\), respectively. The reference trajectory, which provides the desired poses and control inputs, can be represented as a dth-degree polynomial function of time. Consequently, desired derivatives up to order \(d-1\) can be obtained at each time step k. Cubic uniform B-spline was employed to define the initial reference trajectory, which was subsequently refined using the global planner9. The obstacle constraints were formulated as a set of nonlinear hard constraints, \(g_2(\textbf{w})\), as follows:

where \(N_{o}\) is the number of obstacles at time k, and \(dis({\textbf {x}}_j^{o}, \bar{{\textbf {x}}}_{k+h})\) is calculated as \(-\sqrt{(x_j^{o} - x_{k+h})^2 + (y_j^{o}-y_{k+h})^2 + (z_j^{o}-z_{k+h})^2} + d^{o},\) where \(d^{o}\) is the safe zone distance between a quadrotor and close-in obstacles, denoted by \({\textbf {x}}_j^{o} \in \mathbb {R}^3, j=1,\ldots ,N_o\). For the descriptive formulations of \(g_1(\textbf{w})\), \(g_2(\textbf{w})\), \(\textbf{x}^{ref}_{k}\), and \(\textbf{u}^{ref}_{k}\), refers to8,9.

Augmented residual dynamics learning with Gaussian process (GP)

To learn residual dynamics \(g(\textbf{z})\) Eq. (1), the first step is to collect a training dataset. As shown in Fig. 2, generate a set of random reference trajectories and formulate the local planner Eq. (2) considering only \(\textbf{f}_{norm}(\textbf{x}, \textbf{u})\). Let training dataset D be \(\{X, \textbf{y}\}\), where \(X = \{(\textbf{x}_i, \bar{\textbf{u}}_i, \bar{\textbf{x}}_i, \hat{\textbf{x}}_i)\}_{i=0}^n\) and \(\textbf{y} = \{y_i\}_{i=0}^n, i=0, \ldots ,n\). Terms \(\textbf{x}_i, \bar{\textbf{u}}_i, \bar{\textbf{x}}_i\) and \(\hat{\textbf{x}}_i\) are current state, (predicted) control input and state using the local planner, and actual states after applying \(\bar{\textbf{u}}_i\) to the system, respectively, at time \(t_i\). Residual dynamics \(y_i\) at \(t_i\) is determined by

Thus, given a training dataset D, Gaussian Process is employed to learn \(g(\textbf{z}_i)\). Gaussian Process, in general, can be used to infer a distribution (prior) over function directly. Hence, residual dynamics can be determined as follows:

where \(\epsilon _i\) is the corresponding n white noise realization \(\sigma _n\). Given an unseen \(\textbf{x}_*\) data points (or test dataset), GP, which is a way to estimate the posterior predictive distribution, estimates corresponding residual dynamics \(\textbf{y}_*\), that can be formulated as follows:

where \(A = \sigma _n^{-2}XX^{\top } +\Sigma _p^{-1}\) and term \(\textbf{s}\), denoted the prior distribution of the residual dynamics. Nonetheless, linear models, e.g., Eq. (6), suffer severely from limited expressiveness. Various methods can be used to transform input space (\(\textbf{x}_i\)) into different feature spaces using basis functions; however, the model (\(\textbf{s}\)) is still linear in parameters, which is analytically tractable. Let \(\Phi (*)\) be such a basic function, where \(*\) denotes input space. Along with that, posterior predictive distribution Eq. (7) becomes: \(p(\textbf{y}_*|\textbf{x}_*,X,\textbf{y}) \sim N\Bigl (\frac{1}{\sigma _n^2}\Phi (\mathbf {x_*})^{\top }A^{-1}\Phi \textbf{y}, \Phi (\mathbf {x_*})^{\top }A^{-1}\Phi (\textbf{x}_*)\Bigl ),\) where \(\Phi = \Phi (X), A = \sigma _n^{-2}\Phi \Phi ^{\top } + \Sigma _p^{-1}\). This posterior predictive distribution can be reformulated as \(p(\textbf{y}_*|\textbf{x}_*, X,\textbf{y}) \sim N\Bigl (\sigma _*^{\top }\Sigma _p\Phi (K+\sigma _n^2I)^{-1}\textbf{y}, \Phi _*^{\top }\Sigma _p\Phi _* -\Phi _*^{\top }\Sigma _p\Phi (K+\sigma _n^2I)^{-1}\Phi ^{\top }\Sigma _p\Phi _{*}\Bigl ),\) where \(\Phi (\textbf{x}_*) = \Phi _*\) and \(K = \Phi ^{\top }\Sigma _p\Phi\). Feature space (\(\Phi (*)\)) always enters in the form of \(\Phi ^{\top }\Sigma _p\Phi , \Phi _*^{\top }\Sigma _p\Phi ,\) or \(\Phi _*^{\top }\Sigma _p\Phi _*\). Thus, the entries of these matrices have invariable form \(\Phi (\textbf{x}_{\mu 1})^{\top }\Sigma _p\Phi (\textbf{x}_{\mu 2})\), where \(\textbf{x}_{\mu 1}\) and \(\textbf{x}_{\mu 2}\) come either from training or testing sets. Let \(k(\textbf{x}_{\mu 1}, \textbf{x}_{\mu 2}) = \Phi (\textbf{x}_{\mu 1})^{\top }\Sigma _p\Phi (\textbf{x}_{\mu 2})\). Since \(\Sigma _p\) is positive definite, we can define \(\Sigma _p^{1/2}\). Moreover, \(\Sigma _{p}^{1/2} = UD^{1/2}U^{\top }\) (using Singular Value Decomposition (SVD)). Thus, \(k(\textbf{x}_{\mu 1}, \textbf{x}_{\mu 2}) = \psi (\textbf{x}_{\mu 1})\cdot \psi (\textbf{x}_{\mu 2})\), where \(\psi (\textbf{x}_{\mu 1}) = \Sigma _p^{1/2}\Phi (\textbf{x}_{\mu 1})\). If \(\textbf{x}_{\mu 1}\) and \(\textbf{x}_{\mu 2}\) are similar, kernel \(k(\textbf{x}_{\mu 1}, \textbf{x}_{\mu 2})\) at these points, i.e., \(f(\textbf{x}_{\mu 1})\) and \(f(\textbf{x}_{\mu 2})\), must have similar values.

Since data centring is, in general, applied before applying regression, a GP is completely specified by its co-variance function \(k(\textbf{x}_{\mu 1}, \textbf{x}_{\mu 2})\) for a real process \(g(\textbf{x})\) (or \(g(\textbf{z})\), where \(\textbf{z} = [\textbf{x};\textbf{u}]\)). We have used only \(\textbf{x}\) as the input for GP Eq. (5). Hence, we neglected \(\textbf{u}\) from the formulation). The \(g(\textbf{x}_*) \sim GP( m(\textbf{x}), cov(\textbf{x}, \textbf{x}_*))\), where terms \(m(\textbf{x}) = \mathbb {E}[g(\textbf{x})], m(\mathbf {x_*})\), \(cov(\textbf{x}, \textbf{x}_*) = \mathbb {E}[(g(\textbf{x})- m(\textbf{x}))(g(\textbf{x}_*)- m(\textbf{x}_*))]\), denoted mean for training data, mean for testing data and covariance between training and testing data, respectively. Since it is impossible to estimate \(k(\textbf{x}_{\mu 1}, \textbf{x}_{\mu 2})\) analytically, an approximated kernel function k, e.g., square exponential (SE)48, is required to estimate \(\Sigma _p\) is defined as \(k(\textbf{x}_{\mu 1}, \textbf{x}_{\mu 2}) = \sigma _f^2 e^{(-\frac{1}{2l^2}\left| \textbf{x}_{\mu 1} - \textbf{x}_{\mu 2} \right| ^2)} + \sigma _n^2I\), where \(\sigma _f\) and l are hyper-parameters that have to learn; they are unique for the selected kernel function. Along with that, GP is formed to estimate \(\textbf{y}_*\) analytically given \(\mathbf {x_*}\) as follows:

Thus, using Eq. (8), the mean and covariance of the testing dataset can be fully determined as \(m(\mathbf {x_*}) = k(\textbf{x}_*, \textbf{x})[k(\textbf{x}, \textbf{x})+\sigma _n^2I]^{-1}\textbf{y}\) and \(cov(\textbf{x}_*, \textbf{x}_*) = k(\textbf{x}_*, \textbf{x}_*)-k(\textbf{x}_*, \textbf{x})[\textbf{x}, \textbf{x}+\sigma _n^2I]^{-1}k(\textbf{x}, \textbf{x}_*)\), respectively.

Sparse GP regression

Let \(\textbf{y}_i = \textbf{g}_i + \epsilon _i, \textbf{g}_i = \textbf{g}(\textbf{x}_i)\), where \(\textbf{g}= \{\textbf{g}_i\}_{i=1}^n\) are the latent function values. Once GP is estimated, the prior \(p(\textbf{g})\) can be determined. Therefore, the joint probability model \(p(\textbf{y}, \textbf{g}) = p(\textbf{y}|\textbf{g})p(\textbf{g})\) can be estimated. Sparse GP Regression aims to minimize the distance between the exact Gaussian distribution and a proposed posterior Gaussian distribution using variational approximation in which the number of training samples (m) is less than the number of training samples (n) for exact GP . Let \(p(\textbf{h}|\textbf{y})= \int p(\textbf{h}|\textbf{g})p(\textbf{g}|\textbf{y})d\textbf{g}\) be such a posterior distribution, where \(p(\textbf{h}|\textbf{g})\) denotes the prior conditional probability on the selected a set of auxiliary inducing points \(\textbf{x}_m\) and \(\textbf{h} = \textbf{g}_j +\epsilon _j, \epsilon _j \sim N(0, \sigma _m), j= \{0, \ldots ,m\}\). \(\epsilon _j\) is the corresponding m white noise realization \(\sigma _m\). After selecting such points, \(\textbf{g}_m\) be the approximated GP model. Therefore, \(p(\textbf{h}|\textbf{y})\) can be reformulated as: \(p(\textbf{h}|\textbf{y}) = \int p(\textbf{h}|\textbf{g}_m,\textbf{g})p(\textbf{g}|\textbf{g}_m, \textbf{y})p(\textbf{g}_m|\textbf{y})d\textbf{g}d\textbf{g}_m\). Suppose \(\textbf{h}\) and \(\textbf{g}\) are conditionally independent given \(\textbf{g}_m\), i.e., \(p(\textbf{h}|\textbf{g}_m,\textbf{g}) = p(\textbf{h}|\textbf{g}_m)\) or \(\textbf{g}_m\) is sufficient to describe the distribution \(\textbf{g}\):

where \(\Phi (\textbf{g}_m) = p(\textbf{g}_m|\textbf{y}) \sim N({\varvec{\mu }}, A)\). With that, mean and covariance of the approximated posterior GP are determined as

respectively. Inducing points \(x_m\) are estimated to reduce the distance between approximated and actual Gaussian distributions. The Kullback-Leibler (\(\mathbb{K}\mathbb{L}\)) divergence can be used to minimize the distance between the approximated posterior \(q(\textbf{h})\) and the exact posterior \(p(\textbf{g}|\textbf{y})\). The optimal estimation \(q^*(\textbf{h}) = \mathop {\text{argmin}}\nolimits _{{\varvec{\Phi }}(\textbf{g}_{{m}})} \mathbb{K}\mathbb{L}[q\Bigl (\textbf{h}; \Phi (\textbf{g}_m)\Bigl )||p(\textbf{h}|\textbf{y})]\). Using Bayesian inference, i.e., \(p(h|y)=p(h,y)/p(y)\), \(\mathbb{K}\mathbb{L}\) divergence can be expanded in the following way:

However, \(p(\textbf{y})\) is an intractable integral, yet \(p(\textbf{y})\) is independent of \(q(\textbf{h}; \Phi (\textbf{g}_m))\). Thus, by maximizing the first two terms (evidence lower bound \(ELBO(q) = L(\mu , A, \textbf{x}_m)\)):

true log marginal likelihood \(log(p(\textbf{y}))\) can be calculated. Thus, relationship between ELBO(q) and \(\mathbb{K}\mathbb{L}[q(\textbf{h}; \Phi (\textbf{g}_m))||p(\textbf{h}|\textbf{y})]\) can be determined considering Eqs. (10) and (11) as \(ELBO(q) = \mathbb {E}[log\Bigl (p(\textbf{y}|\textbf{h})\Bigl )] - \mathbb{K}\mathbb{L}[q\Bigl (\textbf{h}; \Phi (\textbf{g}_m)\Bigl )||p(\textbf{h})]\). Similarly, log marginal likelihood \(log(p(\textbf{y}))\) can also be determined by \(log(p(\textbf{y})) = ELBO(q) + \mathbb{K}\mathbb{L}[q\Bigl (\textbf{h}; \Phi (\textbf{g}_m)\Bigl )||p(\textbf{h}|\textbf{y})]\). Since \(\mathbb{K}\mathbb{L}[q(\textbf{h}; \Phi (\textbf{g}_m))||p(\textbf{h}|\textbf{y})] \ge 0\) for any true distribution, i.e. \(\mathbb{K}\mathbb{L}\) is a distance, \(log (p(\textbf{y})) \ge ELBO(q)\) should hold. Thus, this inequality maximizes the ELBO on \(\textbf{h}\), which eventually approximates the actual log-marginal likelihood as follows, which gives the optimal inducing points to represent the approximated Gaussian distribution.

Once we obtained the optimal values for \(\textbf{x}_m\), \(\mu\) and A, which are the parameters of \(\Phi (\textbf{g}_m)\), can be determined as

respectively.

Epistemic uncertainty propagation of residual dynamics \(\textbf{y}_y\) for changing of input velocity \(\textbf{v}_y\), where subscript \(_y\) indicates the y axis. Variable \(\textbf{y}_y\), defined the rate of the different between predicted velocity \(\bar{\textbf{v}}_y\) and actual velocity \(\hat{\textbf{v}}_y\). Inducing points provide an approximation for \(\textbf{y}_y\). A Gaussian process is formed using such inducing points, i.e., sparse Gaussian process, which can be used to predict residual dynamics for corresponding input velocity \(\textbf{v}_y\).

After selecting the inducing points \(\textbf{x}_m\), only those points are considered to formulate the \(\textbf{g}(\textbf{z})\) (Eq. 1). Afterwards, NMPC is formulated (Eq. 2) with an augmented residual dynamics model.

Experimental procedure and results

To assess the improvement of incorporating the proposed GP-based residual dynamics modelling into the local planner, we conducted several simulated and real-world experiments. For real-world experiments, the DJI M100 quadrotor was employed. The developed software stack is built on the ROS1 framework and implemented in C++. Since the local planner generates velocity and angular velocity commands, we employed the velocity control interface provided by the DJI Onboard-SDK-ROS23. The DJI control interface can handle control commands at a frequency of 20 Hz or higher. To bridge the gap between the DJI quadrotor and the local planner, we implemented a PD regulator8 that ensures smooth command transmission at 30 Hz.

Collecting testing dataset

Initially, twenty distinct trajectories were generated, as shown in Fig. 4. The objective was to collect a testing dataset in two forms: with and without obstacles. The testing dataset is structured as follows, which is similar to the training dataset: \(D = \{X, \textbf{y}\} = \{(\bar{\textbf{v}}_i, \hat{\textbf{v}}_i, {\varvec{\delta }}_i), \textbf{y}_i\}, i=0, \ldots ,n,\) where n represents the number of data points. In this structure: \(\bar{\textbf{v}}_i, \hat{\textbf{v}}_i, \textbf{y}_i \in \mathbb {R}^3\) and \(\delta _i \in \mathbb {R}\) represent the estimated velocity by NMPC, the actual velocity after applying the estimated velocity, the velocity residual, and the time difference between two consecutive data points, respectively. The terms \(\bar{\textbf{v}}_i, \hat{\textbf{v}}_i\), and \(\textbf{y}_i\) are expressed as: \({\bar{v}}_{\mu }, \hat{v}_{\mu }, y_{\mu }, \; \mu \in \{x,y,z\},\) where \(y_{\mu }\) and \(v_\mu\) denote the expected residual dynamics and velocity component in the \(\mu\) direction, respectively. These values are calculated at time \({\varvec{\delta }}_i\) when using only the nominal model (\(\textbf{f}_{norm}\)). For simplicity, \(\varvec{\delta }_i\) is defined as \(\varvec{\delta }_v\). Accordingly, the residual dynamics of the actual system are given by: \(\textbf{y}_i = (\hat{\textbf{x}}_i - \bar{\textbf{x}}_i)/\delta _i \rightarrow (\hat{\textbf{v}}_i - \bar{\textbf{v}}_i)/\delta _v\). The data were acquired using both a PX4-enabled quadrotor in a Gazebo-based simulated environment and a DJI M100 quadrotor (Fig. 4) in real-world conditions.

The right sub-figure: A trajectory of motion along all the axes was used to collect the data for learning and testing the latent representation of residual dynamics (Fig. 3). The left sub-figure: DJI M100 quadrotor is used for real-world experiments and PX4-enabled quadrotor is used for simulated environments.

Since residual dynamics is invariant to the geometric representation of trajectory but variant to velocity changes, we focused on collecting the dataset such that the dataset represents the whole distribution of the velocity changes. Additionally, since we used two different quadrotors for simulated and real-world experiments, residual dynamics learning was carried out separately for both cases. However, for simplicity, we explained the results and finding considering the real-world setup.

Modeling residual dynamics distribution

In cluttered environments, the desired velocity is automatically reduced due to obstacle constraints and computational limitations, both of which are addressed by the local planner. Consequently, in such environments, accurate residual dynamics estimation is more crucial for lower velocities than for higher velocities. This is because the velocity profile typically falls within a lower range, such as 0–2 m per second, particularly in cluttered environments with limited sensing capabilities. Hence, \(g(\textbf{z})\)(Eq. 5) was modelled such that it could generate residual dynamics with low uncertainty for such velocities, i.e., by selecting more inducing points closer to lower velocity regions.

The relationship between residual dynamics \(\textbf{y}_y\) and input velocity \(\textbf{v}_y\). In left sub-figure: the first plot displays how \(\textbf{y}_y\) and \(\textbf{v}_y\) vary over time, while the second plot shows the approximation of the \(\textbf{y}_y\) using two different approaches: Sparse Gaussian Process-based (proposed) and cluster selection approach7, in right sub-figure: residual dynamics before and after incorporating the learnt Sparse Gaussian process. Root Mean Square Error drops from 0.4012 to 0.0751 after introducing the residual dynamics into the nominal motion model.

We have compared two inducing points selection approaches, i.e., the proposed approach and the cluster selection approach7, for capturing residual dynamics along each axis: \(\textbf{y}_x, \textbf{y}_y,\) and \(\textbf{y}_z\)). The results of our experiments are given concerning the y axis, i.e., \(\textbf{y}_y\). However, we applied the same procedure to other axes as well. The latter approach targets high-speed maneuvering provided that inducing points should capture the whole distribution, which is shown in Fig. 5 in green dots. In contrast, the proposed approach selects more inducing points (pink triangles) towards lower velocity ranges.

Assessing the effect of residual dynamics produces on the nominal dynamics

To assess which effect residual dynamics produces on the nominal dynamics Eq. (1), we used the testing dataset that was acquired without considering obstacles (\(\{\textbf{y}_{\mu },\textbf{v}_{\mu }, \varvec{\delta }_v\}\)). Let \(\bar{\textbf{y}}_\mu = \textbf{g}(\textbf{v}_{\mu })\) be the expected residual dynamics. Then, the nominal error and the augmented residual error are fully determined by \(\textbf{y}_{\mu } \cdot \varvec{\delta }_v\) and \((\textbf{y}_{\mu } -\bar{\textbf{y}}_\mu ) \cdot \varvec{\delta }_v\). We observed, that the accuracy of the augmented residual dynamics model has increased drastically compared to the standalone nominal model (Fig. 5). Such an improvement helps to significantly reduce the trajectory tracking error since the control policy that is generated by NMPC after incorporating augmented residual dynamics can cope with the mean of epistemic uncertainty, residual dynamics, robustly.

Selecting an optimal number of inducing points

The execution time that is taken to estimate control policy is generated based on NMPC for maneuvering the quadrotor. This time is crucial for a smooth flight experience. We have experimented to find the correlation among the selected number of inducing points, NMPC execution time on two different embedded devices, and model accuracy (Fig. 6). As a result, we have selected only 30 inducing points to represent the residual dynamics. Such many inducing points were selected mainly due to two reasons.

Correlation between the number of inducing points and computational power. The number of inducing points is inversely proportional to augmented dynamics residual RMSE, yet proportional to computational power for two embedded computers: Nvidia Xavier and Nvidia Xavier NX. Since RMSE shows no significant change compared to computation time, we selected 30 as the number of inducing points for formulating the Sparse Gaussian Process.

First, the accuracy improvement rate is almost negligible compared to NMPC execution time which grows exponentially when the number of inducing points is increased. Second, the Nvidia Xavier NX embedded computer was used for onboard computation. Moreover, there is no considerable advantage to using Nvidia Xavier over Nvidia Xavier NX since the optimization problem, i.e., local planner, is solved sequentially. The residual dynamics model learning is performed as an offline process, ensuring it does not impact real-time performance considerably. Therefore, the Graphics Processing Unit (GPU) is only utilized during the learning stage. Once the residual dynamics are estimated as a latent distribution, the model parameters are incorporated into the nominal model (Eq. 2). We employed Casadi49 with IPOPT50 as the primary solver for the local planner. Therefore, employing a large training dataset that accurately captures the residual dynamics distribution and carefully selecting optimal inducing points to represent the residual dynamics distribution effectively minimizes the residual dynamics of the model while having a minimum burden on computational cost.

Estimating root mean square error considering with and without obstacles

Furthermore, we have calculated root mean square error (RMSE) with and without considering obstacles (Table 2) in a simulated environment. The control policy generation can differ when considering obstacle constraints because NMPC can generate different control policies over time for different obstacle constraints. Thus, we kept the same initial reference trajectory running the planner with and without considering obstacles and then estimated the RMSE of residual dynamics.

RMSE is less when the augmented residual dynamics model is applied in obstacle-free environments, compared to obstacle-cluttered environments. Such behaviour is mainly due to the lack of stability of the global map, which is built incrementally as a result of updates within a small range, e.g., 5m from the quadrotor pose. Hence, the replanner iteratively refines the trajectory when the global map is changed. Thus, the local planner has to incorporate more obstacles and constraints from time to time. Such behaviour can lead to the trap of the quadrotor in local minima. None of such problems exists in obstacle-free environments.

Avoiding trapping the quadrotor in local minima

The initial reference trajectory can be within the obstacle zones since the quadrotor has no prior knowledge about the environment beyond the sensing range. Hence, the quadrotor can be far from the reference trajectory in the presence of a huge obstacle through the reference trajectory (Fig. 7). In such a situation, the replanner9 fails to refine the trajectory due to time constraints.

To overcome this issue, we proposed to regenerate a reference trajectory provided that the replanner can push the reference trajectory further away from the obstacles. In Fig. 7, one such experiment shows how the proposed approach worked in real-world conditions.

Experimenting in real-world conditions

To evaluate the proposed approach, we conducted two real-world experiments. In the first experiment,a set of twenty initial reference trajectories (Fig. 8) was generated for traversing an obstacle-free environment with a length range of 40–60 m. The trajectories varied in their geometrical representations, while the maximum allowable velocity was set to 1.5 m/s. We first executed the experiment without considering residual dynamic estimation in the nominal model. Then, we repeated the experiment after incorporating residual dynamics. Both experiments were repeated three times for each trajectory to calculate the average RMSE of the augmented residual dynamics. Compared to the provided initial reference trajectory, the average RMSE for trajectories with and without augmented residual dynamics were 0.0851 and 0.2681, respectively. This indicates that incorporating residual dynamics reduced the residual dynamics error by almost half. The simulated experiment results (Table 2) and real-world results show similar RMSE in obstacle-free environments.

In the second experiment, we only compared the RMSE of the trajectories after incorporating residual dynamics due to the dynamic nature of an environment with obstacles. The initial reference trajectory passes through a cluttered environment (Fig. 9, which is approximately 60m long.

Experimental results for the proposed approach in a real-world setting. The initial reference trajectory within the obstacles is pushed iteratively. The distance between the refined trajectory and its closest obstacle poses is 1.5 m. We set a 1.5 m distance between the refined trajectory (traversed trajectory) and the quadrotor pose. Due to computational constraints, the map of the environment is built 5 m from the quadrotor pose incrementally, though LiDAR can measure up to 100 m. Sub-figure (b) depicts the velocity profile for the experiment. Due to our reliance on GPS for quadrotor height estimation, the velocity along the z-axis exhibited significant instability compared to the other two axes..

The traversed trajectory distance is approximately 80 m. The local planner we selected was specifically designed for low-speed maneuvering, as the primary objective was to navigate in cluttered environments. Consequently, we set the maximum velocity to 1.5 m/s. However, this restriction is not inherent to the proposed residual dynamics modeling approach. The initial reference trajectory was regenerated within the said traversed trajectory distance due to the local minima. Such an occasion can be seen in the provided video51. The RMSE of the augmented residual dynamics is 0.1018 (m/s). Even though it is hard to estimate the trajectory smoothness quantitatively, i.e., to assess control policy generation after introducing augmented residual dynamics, the smoothness has improved after incorporating augmented residual dynamics into the nominal model (Table 2).

Comparing with other similar recent works

We compared the proposed approach with four different trajectory planners: sampling-based grid search followed by trajectory generation, NMPC-based replanner, hybrid planner (local and global), and KinoJGM. All the selected planners have their advantages and limitations with respect to low-speed maneuvers. The matrices that we considered are as follows: (1) mean computation time (MCT) (execution time for one iteration of the planner), (2) success rate (SR) (the number of times quadrotor reaches from start to goal without any collision), and (3) traversed distance (TD) (total distance of quadrotor that is traversed).

In this experiment, we use a random forest with a density of (60 m \(\times\) 60 m \(\times\) 10 m). After that, we selected 10 different start and target poses and executed each planner. The experiment results are given in Table 3. For the sampling-based planner, MCT is high because it generates nondeterministic trajectories due to the nature of the planner. Replanner generates locally near-optimal control policies, yet execution time is high due to design constraints, i.e., the local planner is formulated as a constraint nonlinear optimization problem. Moreover, the quadrotor can trap in local minima since the planner optimizes locally. Compared to the listed two planners, the hybrid approach has high MCT and SR.

The latter planner executes two sub-planners, local and global, simultaneously. Hence, the planner observes the environment in the distance and generates a near-optimal control policy to reach the goal. However, since a simplified motion model was used to generate near-optimal control policy, dynamics uncertainty (or residual dynamics) between desired control that the planner generated and the actual control that the quadrotor obtained is considerably high. Such behaviour leads to trapping the quadrotor in local minima. The proposed approach is a modification of this planner by addressing two issues. Traps in local minima and residual dynamics are the two issues that the proposed planner addressed. Resolving those issues helps to obtain a low TD compared to the hybrid planner and a slightly higher SR. In addition, the proposed planner was compared with the KinoJGM planner and its variants: GP-MPC and KinoJSS. Those results were provided by the authors of KinoJGM. GP-MPC planner is conceptually similar to the proposed one. However, it has no recovery mechanism and ways to avoid local minima unlike ours. These are the reasons the proposed planner achieved higher SR compared to GP-MPC. MCT of the proposed is slightly lower compared to GP-MPC. However, the obtained MCT is acceptable for low-speed maneuvers.

Discussion

The trajectory planning problems in the plan-based control paradigm, in general, are solved adhering to these steps: path search52, initial trajectory generation and trajectory refinement53, high-level control command generation that can be achieved by several approaches, including differential flatness mapping54, receding horizon planning, and finally low-level control commands generation using a flight controller, for example, PX4, DJI. These flight controllers operate independently irrespective of high-level planners. Moreover, due to their independence, such controllers reduce the overhead and complexity of developing high-level planning algorithms. In other words, the same planner can be deployed on different firmware by implementing an interface between a high-level planner and a low-level controller. Therefore, residual dynamics arise between the high-level planner and the low-level controller. Gaussian Process-based techniques are used to model these residual dynamics in the recent past not only for MAVs but also other types of vehicles55,56.

In recent years, MAVs-related manifestations, e.g., trajectory tracking, exploration, expanding the range for venturing out computationally expensive techniques in various disciplines, including agriculture, aerial photography, and crop monitoring57,58. Low-speed maneuvering, in general, is preferred over high-speed maneuvering in executing such demanding tasks due to task complexity. An example of such low-speed maneuver need is trajectory planning, where the environment is obstacle-rich. Therefore, the kinematic modelling of a quadrotor was considered since the scope of this work is for low-speed maneuvers without consideration of dynamic effects (external or/and internal). Similar assumptions were used in59 for weed detection in crops, which showed the necessity of low-speed maneuvering. On the other hand, several studies have been carried out for high-speed maneuvers4,5,6 with consideration of residual dynamics estimation, while most of them struggle with high-computational demands for on-board processing and difficulty in maneuvering in cluttered environments. The proposed framework can be adapted for high-speed maneuvers as well. However, it requires adding a behavioural planner that switches between planners according to the requirements, for example, in the cluttering environment we can switch to the low maneuvers while keeping the same control command generation. That will give us several benefits compared to existing solutions, e.g., residual dynamics models can be trained separately for low-speed maneuvers and high-speed maneuvers. Hence, the proposed solution can be adapted to general control with high and low-speed maneuvers introducing two or more operation modes and switching between them based on the external conditions.

Since Sparse Gaussian Processing is a non-parametric model, it does not require any parameter tuning. However, it is necessary to provide a proper training set that can capture the whole distribution of the latent space, i.e., residual dynamics. The simulated experiments of the proposed approach were carried out with an IRIS quadrotor with a PX4 flight controller. The real-world experiments were carried out with a DJI M100 with an A3 flight controller. In both cases, the initial step was to fine-tune the hyper-parameters of the PD regulator. This process is required before running the proposed motion planner. Suppose the proposed approach is deployed in another MAV. In that case, it is required to fine-turn the listed parameters since the high-level planner does not know any information about the low-level controller. However, this is a one-time process. Recently, auto PID tuning approaches were proposed60. Hence, it is required to investigate these aspects to improve the parameter tuning of the proposed approach.

The source code and complete experiments are available at Github51.

Data availibility

The datasets generated and/or analysed during the current study are available in the trajectory-tracker repository, https://github.com/GPrathap/trajectory-tracker.

Abbreviations

- \(B_z\) :

-

A mapping matrix that projects the full state vector \(\textbf{z}\) onto the subspace of states relevant to the residual dynamics

- \(\textbf{f}_{norm}\) :

-

Nominal system dynamics, it can be either kinematic model or dynamic model

- \(\textbf{f}_{est}\) :

-

Augmented quadrotor motion model that comprises nominal dynamics and estimated residual dynamics by Sparse Gaussian Process (SGP)

- \(\textbf{f}_{d}\) :

-

Discrete augmented system dynamics

- \(\textbf{g}_1(\textbf{w}), \textbf{g}_2(\textbf{w})\) :

-

The constraints enforced by system dynamics and obstacle for Nonlinear Model Predictive Control (NMPC)

- \(\textbf{g}_m\) :

-

Residual dynamics model that is learnt using Sparse Gaussian Process

- \(g(\textbf{z})\) :

-

Residual dynamics model that is learnt using Gaussian Process

- \(\textbf{p}_k\) :

-

The robot’s current position \(\textbf{p}^{\mu }_k, \mu \in \{x,y,z\}\)

- \(\textbf{p}_k\) :

-

The robot’s current velocity \(\textbf{v}^{\mu }_k, \mu \in \{x,y,z\}\)

- \(\textbf{u}^{ref}\) :

-

Desired reference robot control input at time step k

- \(\textbf{u}_k\) :

-

The robot’s current control inputs \({\textbf {u}}_k \in \mathbb {R}^{n_u}\), influencing its linear velocity \(\textbf{v}_k \in \mathbb {R}^3\) in meters per second and angular velocity \(\omega\)

- \(\bar{\textbf{u}}_k\) :

-

The estimated control input at time step k by NMPC

- \(\hat{\textbf{u}}_k\) :

-

Actual control input at time step k after applying the current control (\(\bar{\textbf{u}}_k\)) to the robot

- \(\textbf{w}\) :

-

The estimated state trajectories and corresponding control inputs (\([\bar{{\textbf {u}}}_{k}, \ldots , \bar{{\textbf {u}}}_{k+N-1}, \bar{{\textbf {x}}}_{k}, \ldots , \bar{{\textbf {x}}}_{k+N}]\)) estimated from the NMPC

- \(\bar{\textbf{x}}_k\) :

-

The estimated robot state vector at time step k by using NMPC

- \(\textbf{x}_k\) :

-

The robot’s current state \({\textbf {x}}_k \in \mathbb {R}^{n_x}\), given by its position \(\textbf{p}_k \in \mathbb {R}^3\) in meters and orientation \(\alpha\) in radians at time step k

- \(\hat{\textbf{x}}_k\) :

-

Actual robot state vector at time step k after applying the current control (\(\bar{\textbf{u}}_k\)) to the robot

- \(\textbf{x}_m\) :

-

The optimal inducing points employed to construct an approximate multivariate Gaussian distribution by using SGP

- \(\textbf{x}^{ref}\) :

-

Desired reference robot state vector at time step k

- \((\textbf{x}_*)_i\) :

-

A testing data input point that comprises \((\textbf{x}_i, \bar{\textbf{u}}_i, \bar{\textbf{x}}_i, \hat{\textbf{x}}_i)\)

- \(y_i\) :

-

A testing data expected output point

- \(\textbf{y}_*\) :

-

The estimated residual dynamics for given \(\textbf{x}_*\)

- \(\textbf{z} = [\textbf{x};\textbf{u}]\) :

-

The state vector for the Gaussian Process

- \(\delta t\) :

-

The integration step for Runge–Kutta 4th order method

References

Han, R. et al. RDA: An accelerated collision free motion planner for autonomous navigation in cluttered environments. IEEE Robot. Autom. Lett. 8, 1715–1722 (2023).

Neunert, M. et al. Fast nonlinear model predictive control for unified trajectory optimization and tracking. In 2016 IEEE International Conference on Robotics and Automation (ICRA) 1398–1404 (IEEE, 2016).

Zhou, B., Pan, J., Gao, F. & Shen, S. Raptor: Robust and perception-aware trajectory replanning for quadrotor fast flight. IEEE Trans. Robot. 37, 1992–2009. https://doi.org/10.1109/TRO.2021.3071527 (2021).

Rojas-Perez, L. O. & Martinez-Carranza, J. On-board processing for autonomous drone racing: An overview. Integration 80, 46–59 (2021).

Song, Y. & Scaramuzza, D. Learning high-level policies for model predictive control. In 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 7629–7636 (IEEE, 2020).

Hönig, W., Preiss, J. A., Kumar, T. K. S., Sukhatme, G. S. & Ayanian, N. Trajectory planning for quadrotor swarms. IEEE Trans. Robot. 34, 856–869. https://doi.org/10.1109/TRO.2018.2853613 (2018).

Torrente, G., Kaufmann, E., Föhn, P. & Scaramuzza, D. Data-driven MPC for quadrotors. IEEE Robot. Autom. Lett. 6, 3769–3776 (2021).

Kulathunga, G., Devitt, D. & Klimchik, A. Trajectory tracking for quadrotors: An optimization-based planning followed by controlling approach. J. Field Robot. 39, 1003–1013. https://doi.org/10.1002/rob.22084 (2022).

Kulathunga, G., Hamed, H., Devitt, D. & Klimchik, A. Optimization-based trajectory tracking approach for multi-rotor aerial vehicles in unknown environments. IEEE Robot. Autom. Lett. 7, 4598–4605 (2022).

Romero, A., Penicka, R. & Scaramuzza, D. Time-optimal online replanning for agile quadrotor flight. arXiv preprint arXiv:2203.09839 (2022).

Romero, A., Sun, S., Foehn, P. & Scaramuzza, D. Model predictive contouring control for near-time-optimal quadrotor flight. arXiv preprint arXiv:2108.13205 (2021).

Liu, S. et al. Planning dynamically feasible trajectories for quadrotors using safe flight corridors in 3-d complex environments. IEEE Robot. Autom. Lett. 2, 1688–1695 (2017).

Kulathunga, G., Fedorenko, R., Kopylov, S. & Klimehik, A. Real-time long range trajectory replanning for MAVs in the presence of dynamic obstacles. In 2020 5th Asia-Pacific Conference on Intelligent Robot Systems (ACIRS) 145–153 (IEEE, 2020).

Chen, Y., Huang, S. & Fitch, R. Active slam for mobile robots with area coverage and obstacle avoidance. IEEE/ASME Trans. Mechatron. 25, 1182–1192 (2020).

Mellinger, D. & Kumar, V. Minimum snap trajectory generation and control for quadrotors. In 2011 IEEE International Conference on Robotics and Automation 2520–2525 (IEEE, 2011).

Ding, W., Gao, W., Wang, K. & Shen, S. An efficient b-spline-based kinodynamic replanning framework for quadrotors. IEEE Trans. Robot. 35, 1287–1306 (2019).

Kulathunga, G., Devitt, D., Fedorenko, R. & Klimchik, A. Path planning followed by kinodynamic smoothing for multirotor aerial vehicles (MAVs). Russ. J. Nonlinear Dyn. 17, 491–505 (2021).

Liu, S., Atanasov, N., Mohta, K. & Kumar, V. Search-based motion planning for quadrotors using linear quadratic minimum time control. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2872–2879 (IEEE, 2017).

Singh, S., Majumdar, A., Slotine, J.-J. & Pavone, M. Robust online motion planning via contraction theory and convex optimization. In 2017 IEEE International Conference on Robotics and Automation (ICRA) 5883–5890 (IEEE, 2017).

The DARPA subterranean challenge. A synopsis of the circuits stage, accessed 27 December 2023; https://www.darpa.mil/program/darpa-subterranean-challenge (2023).

Tranzatto, M. et al. Cerberus in the DARPA subterranean challenge. Sci. Robot. 7, eabp9742 (2022).

De Petris, P. et al. RMF-Owl: A collision-tolerant flying robot for autonomous subterranean exploration. In 2022 International Conference on Unmanned Aircraft Systems (ICUAS) 536–543 (IEEE, 2022).

Dji. Online, accessed 29 January 2023; https://github.com/dji-sdk/Onboard-SDK-ROS (2023).

Chung, T. H., Orekhov, V. & Maio, A. Into the robotic depths: Analysis and insights from the DARPA subterranean challenge. Ann. Rev. Control Robot. Auton. Syst. 6, 477–502 (2023).

Arm, P. et al. Scientific exploration of challenging planetary analog environments with a team of legged robots. Sci. Robot. 8, eade9548 (2023).

Wang, Y., O’Keeffe, J., Qian, Q. & Boyle, D. E. KinoJGM: A framework for efficient and accurate quadrotor trajectory generation and tracking in dynamic environments. arXiv preprint arXiv:2202.12419 (2022).

Guerrero, J. A., Escareño, J.-A. & Bestaoui, Y. Quad-rotor MAV trajectory planning in wind fields. In 2013 IEEE International Conference on Robotics and Automation 778–783 (IEEE, 2013).

Mehndiratta, M. & Kayacan, E. Gaussian process-based learning control of aerial robots for precise visualization of geological outcrops. In 2020 European Control Conference (ECC) 10–16 (IEEE, 2020).

Flores, G., de Oca, A. M. & Flores, A. Robust nonlinear control for the fully actuated hexa-rotor: Theory and experiments. IEEE Control Syst. Lett. 7, 277–282 (2022).

Nian, X.-H., Zhou, W.-X., Li, S.-L. & Wu, H.-Y. 2-d path following for fixed wing UAV using global fast terminal sliding mode control. ISA Trans. 136, 162–172 (2023).

Hewing, L., Kabzan, J. & Zeilinger, M. N. Cautious model predictive control using Gaussian process regression. IEEE Trans. Control Syst. Technol. 28, 2736–2743 (2019).

Kabzan, J., Hewing, L., Liniger, A. & Zeilinger, M. N. Learning-based model predictive control for autonomous racing. IEEE Robot. Autom. Lett. 4, 3363–3370 (2019).

Cao, G., Lai, E.M.-K. & Alam, F. Gaussian process model predictive control of an unmanned quadrotor. J. Intell. Robot. Syst. 88, 147–162 (2017).

Cilimkovic, M. Neural networks and back propagation algorithm. Inst. Technol. Blanchardstown Blanchardstown Road North Dublin 15 (2015).

Desaraju, V. R., Spitzer, A. E., O’Meadhra, C., Lieu, L. & Michael, N. Leveraging experience for robust, adaptive nonlinear MPC on computationally constrained systems with time-varying state uncertainty. Int. J. Robot. Res. 37, 1690–1712 (2018).

Quinonero-Candela, J. & Rasmussen, C. E. A unifying view of sparse approximate gaussian process regression. J. Mach. Learn. Res. 6, 1939–1959 (2005).

Wilson, A. G., Dann, C. & Nickisch, H. Thoughts on massively scalable gaussian processes. arXiv preprint arXiv:1511.01870 (2015).

Rasmussen, C. E. & Nickisch, H. Gaussian processes for machine learning (GPML) toolbox. J. Mach. Learn. Res. 11, 3011–3015 (2010).

Wilson, A. & Nickisch, H. Kernel interpolation for scalable structured Gaussian processes (KISS-GP). In International Conference on Machine Learning 1775–1784 (PMLR, 2015).

Cunningham, J. P., Shenoy, K. V. & Sahani, M. Fast Gaussian process methods for point process intensity estimation. In Proceedings of the 25th International Conference on Machine Learning 192–199 (2008).

Flaxman, S. et al. Fast hierarchical Gaussian processes. Manuscript in preparation (2015).

Werner, K., Jansson, M. & Stoica, P. On estimation of covariance matrices with Kronecker product structure. IEEE Trans. Signal Process. 56, 478–491 (2008).

Titsias, M. Variational learning of inducing variables in sparse Gaussian processes. In Artificial Intelligence and Statistics 567–574 (PMLR, 2009).

Chee, K. Y., Jiahao, T. Z. & Hsieh, M. A. KNODE-MPC: A knowledge-based data-driven predictive control framework for aerial robots. IEEE Robot. Autom. Lett. 7, 2819–2826 (2022).

Xu, L.-X., Ma, H.-J., Guo, D., Xie, A.-H. & Song, D.-L. Backstepping sliding-mode and cascade active disturbance rejection control for a quadrotor UAV. IEEE/ASME Trans. Mechatron. 25, 2743–2753 (2020).

Westermann, H. & Mahnken, R. On the accuracy, stability and computational efficiency of explicit last-stage diagonally implicit Runge–Kutta methods (ELDIRK) for the adaptive solution of phase-field problems. Comput. Methods Appl. Mech. Eng. 418, 116545 (2024).

Boggs, P. T. & Tolle, J. W. Sequential quadratic programming. Acta Numer. 4, 1–51 (1995).

Seeger, M. Gaussian processes for machine learning. Int. J. Neural Syst. 14, 69–106 (2004).

Andersson, J. A., Gillis, J., Horn, G., Rawlings, J. B. & Diehl, M. CasADi: A software framework for nonlinear optimization and optimal control. Math. Program. Comput. 11, 1–36 (2019).

Biegler, L. T. & Zavala, V. M. Large-scale nonlinear programming using IPOPT: An integrating framework for enterprise-wide dynamic optimization. Comput. Chem. Eng. 33, 575–582 (2009).

Geesara, K. Reference trajectory tracking, accessed 29 March 2023; https://github.com/GPrathap/trajectory-tracker.git (2023).

Wang, J., Fader, M. T. & Marshall, J. A. Learning-based model predictive control for improved mobile robot path following using Gaussian processes and feedback linearization. J. Field Robot.https://doi.org/10.1002/rob.22165 (2023).

Liu, Y., Zhao, X., Xu, J., Zhu, S. & Su, D. Rapid location technology of odor sources by multi-UAV. J. Field Robot. 39, 600–616 (2022).

Talke, K., Birchmore, F. & Bewley, T. Autonomous hanging tether management and experimentation for an unmanned air-surface vehicle team. J. Field Robot. 39, 869–887 (2022).

Narayanan, S. R. et al. Physics-integrated segmented Gaussian process (SegGP) learning for cost-efficient training of diesel engine control system with low cetane numbers. In AIAA SCITECH 2023 Forum 1283 (2023).

Xu, P., Qin, H., Ma, J., Deng, Z. & Xue, Y. Data-driven model predictive control for ships with Gaussian process. Ocean Eng. 268, 113420 (2023).

Fikri, M. R., Candra, T., Saptaji, K., Noviarini, A. N. & Wardani, D. A. A review of implementation and challenges of unmanned aerial vehicles for spraying applications and crop monitoring in indonesia. arXiv preprint arXiv:2301.00379 (2023).

Nduku, L. et al. Global research trends for unmanned aerial vehicle remote sensing application in wheat crop monitoring. Geomatics 3, 115–136 (2023).

Anderegg, J. et al. On-farm evaluation of UAV-based aerial imagery for season-long weed monitoring under contrasting management and pedoclimatic conditions in wheat. Comput. Electron. Agric. 204, 107558 (2023).

Stamate, M.-A., Pupăză, C., Nicolescu, F.-A. & Moldoveanu, C.-E. Improvement of hexacopter UAVs attitude parameters employing control and decision support systems. Sensors 23, 1446 (2023).

Acknowledgements

This work was supported by the Innovate UK-funded project Agri-OpenCore [grant number 10041179].

Author information

Authors and Affiliations

Contributions

G.K. Proposed and implemented the algorithm. H.H. and G.K. were in charge of real-world flight tests and creating a quadrotor for the tests. G.K. wrote the manuscript in consultation with A.K. A.K. made a critical reviewing the manuscript and final approval for publication.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary Video 1.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kulathunga, G., Hamed, H. & Klimchik, A. Residual dynamics learning for trajectory tracking for multi-rotor aerial vehicles. Sci Rep 14, 1858 (2024). https://doi.org/10.1038/s41598-024-51822-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-51822-0

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.