Abstract

Respiratory syncytial virus (RSV) causes infections of the lungs, nose, and throat and associated breathing problems. We consider its transmission in a constant human population that includes super-spreading infected individuals. This research examines a fractional derivative-based approach to the dynamics of this infectious disease. We establish a set of fractional differential equations (FDEs) for the time-fractional order RSV model. The existence and uniqueness of the solution of the proposed model are confirmed alongside an equilibrium analysis. Both sensitivity and qualitative analyses are employed to study the fractional-order model. We explore the Ulam–Hyers stability of the model through functional analysis theory. To study the influence of the fractional operator and illustrate the societal implications of RSV, we employ a two-step Lagrange polynomial scheme for the generalized form of the power-law kernel. The fractional order RSV model is also shown to exhibit chaotic behavior, with trajectories that remain in a stable region of the compartments. Such a study will aid in the understanding of RSV behavior and the development of prevention strategies for those who are affected. Our numerical simulations show that fractional order dynamic modeling is a suitable mathematical modeling technique for building and studying infectious disease models.

Introduction

Respiratory syncytial virus (RSV) is a common single-stranded RNA respiratory virus. RSV causes illnesses of the respiratory system, including infections of the middle ear, lungs, and airways. The common cold, bronchitis, croup, and similar illnesses are frequently brought on by it. Certain people are typically severely affected by this virus, particularly preterm infants, infants, elderly people, adults with heart and lung conditions, and people with a severely weakened immune system1,2. The infection may be transferred through direct contact with contagious secretions or through droplets released when a person coughs. It can also be spread unintentionally by contact with hands that have touched dining utensils or other items recently soiled by a sick person's nasal or throat discharge. In other words, the infection spreads quickly. In the United States, about \(60\%\) of newborns contract HRSV during their first season, and the virus will largely attack children between the ages of two and three3.

It is well known that mathematical models can be used to predict the emergence of infectious diseases. Estimating the anticipated outcome of an epidemic in this way supports the objectives of public health initiatives. As a fundamental mathematical framework, compartmental models can be used to investigate the intricate dynamics of epidemiological systems4. Recently, integer-order compartmental models have been applied to several diseases, including cholera5, hepatitis B6, zika virus7, malaria transmission8, dengue9, and influenza10. A detailed model of HRSV transmission was presented in2, in which individuals gradually develop immunity to the infection after being exposed to it repeatedly. That study also compared this detailed model with a typical SIRS framework. In11, HRSV was modeled using an age-structured mathematical formalism that specifically took into account the youngest patients, children under the age of one, who are most affected by the disease. A numerical method for solving the HRSV seasonal model was presented in12. Sungchasit et al.13 described the global stability (GS) analysis of the super-spreading RSV disease.

In the literature, fractional differential equations (FDEs), also known as extraordinary differential equations, are an extension of differential equations that use fractional calculus, a branch of mathematical analysis that studies ways of defining differentiation operators of non-integer order14,15. Due to their natural connection to memory-based systems, FDEs are advantageous in the majority of biological systems16,17,18. Using fractional derivatives, Jan et al.19 built an epidemic model for Rift Valley fever with vaccination. In20, the authors examined a dengue infection model with partial immunity and asymptomatic subjects. A compartmental model for dengue fever transmission was presented by Jan et al.21,22 and included nonlinear forces of infection via a fractional derivative. In23, the authors examined tumor-immune interactions using a fractional derivative framework. A mathematical model for the transmission of HFMD with reinfection was developed in24. In25, the authors developed a mathematical model using the Atangana–Baleanu operator within fractal fractional-order principles to describe the transmission of pneumonia in a population. Several epidemiological models have included FDEs. For more applications see26,27,28,29,30.

In many different systems and processes, fractional derivatives are an effective tool for describing memory and hereditary characteristics. Fractional-order differential equations retain the history of the function in accumulated form. In fractional-order modeling, the order of the derivative is an additional parameter that is helpful in numerical work. The dynamics of disease transmission have been investigated using fractional-order modeling. Additionally, fractional differentiation is non-local, while integer-order differentiation is local, and this behavior aids the simulation of epidemic scenarios. The fractional derivative can also enlarge the stability region of the system. The Caputo derivative is highly useful for real-world problems because, unlike the fractional Riemann–Liouville derivative, it admits the usual initial and boundary conditions in the formulation and gives zero for the derivative of a constant. The aforementioned investigations and the advantages of Caputo FDEs motivated this study. A fractional order \(SEI_rI_sR\) model was developed using Caputo FDEs to characterize the dynamics of RSV.

The goals of this work are:

- Examine the stability and dynamical behavior of the \(SEI_rI_sR\) model.
- Determine the basic reproduction number and the equilibrium points.
- Apply the Lagrange polynomial technique to obtain a numerical solution.
- Demonstrate the chaotic behavior of the fractional order RSV model.

This paper is structured as follows: section one serves as an introduction, while section two presents fundamental fractional order derivatives applicable to solving the epidemic model. The third section delves into the positivity of the fractional order model, discussing endemic equilibrium, DFE, and sensitivity analysis. In “Qualitative analysis of model”, the existence and uniqueness of a system of model solutions are affirmed using the fixed point concept. “Ulam–Hyers stability” focuses on investigating the Ulam–Hyers stability of the RSV model. “Numerical scheme” explores the impact of fractional parameters by employing numerical techniques to solve the fractional-order system. Finally, “Simulation and discussion” and “Conclusion” discuss the results and conclusion.

Basic concepts

Before creating the model, we must first study the fundamental definitions that are essential to comprehending fractional operators.

Definition 2.1

As in14, assume that \(w(t) \in {H^1}(0,T), \ T > 0,\) and \(0 < \beta \le 1\). The following is the definition of a power-law kernel fractional derivative:
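For reference, the standard fractional derivative with a power-law kernel (the Caputo derivative) is written out here. The label (1) follows the cross-reference in Definition 2.2, and the notation \({}^{C}D_t^{\beta}\) is an assumption chosen to match the operator used later in the model.

\[
{}^{C}D_t^{\beta} w(t) = \frac{1}{\Gamma(1-\beta)} \int_0^t (t-\tau)^{-\beta}\, w'(\tau)\, d\tau, \qquad 0 < \beta < 1, \tag{1}
\]

with \({}^{C}D_t^{\beta} w(t) = w'(t)\) in the limiting case \(\beta = 1\).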

Definition 2.2

As in14, for (1), the corresponding fractional integral operator is given by
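The integral operator usually paired with the power-law kernel is the Riemann–Liouville fractional integral, written out here for reference. The label (2) and the symbol \(I_t^{\beta}\) are assumptions, not taken from the original display.

\[
I_t^{\beta} w(t) = \frac{1}{\Gamma(\beta)} \int_0^t (t-\tau)^{\beta-1}\, w(\tau)\, d\tau, \qquad 0 < \beta \le 1. \tag{2}
\]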

Fractional order RSV model

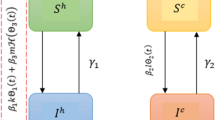

Here, we propose an RSV model of fractional order that accounts for memory effects. It builds on the classical integer-order RSV epidemic model presented in13. The entire population is divided into five classes: susceptible S(t), exposed E(t), normally infectious \(I_r(t)\), super-spreading infectious \(I_s(t)\), and recovered R(t). The birth rate is denoted by b and the natural death rate by \(\mu\). The total size of the human population at a given time is denoted by N. The rate of virus transmission between people, which governs the spread of the disease, is denoted by \(\beta\). The incubation time of the virus within an infected human, that is, the interval from infection to the development of symptoms, is denoted by \(\eta\). The probability that a new case becomes a normally infected human, with typical transmission patterns, is p. The complementary probability that a new case becomes a super-spreading infected person, who can transmit the virus to a larger number of individuals, is \((1-p)\). Individuals with normal infections recover at rate \(r_1\), and individuals with super-spreading infections recover at rate \(r_2\). The set of non-linear fractional differential equations is

the initial conditions are
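A form of system (3) that is consistent with the Lipschitz constants \(m_1,\dots,m_5\) reported in the qualitative analysis and with the bound \(N \le b/\mu\) of Theorem 3.1 is sketched below. It is an inference from those later statements, not a verbatim copy of the original display, and should be checked against13 before use.

\[
\begin{aligned}
{}^CD_t^\alpha S &= b - \beta S I_r - \beta S I_s - \mu S,\\
{}^CD_t^\alpha E &= \beta S I_r + \beta S I_s - \tfrac{1}{\eta}\, p E - \tfrac{1}{\eta}(1-p) E - \mu E,\\
{}^CD_t^\alpha I_r &= \tfrac{1}{\eta}\, p E - (r_1 + \mu) I_r,\\
{}^CD_t^\alpha I_s &= \tfrac{1}{\eta}(1-p) E - (r_2 + \mu) I_s,\\
{}^CD_t^\alpha R &= r_1 I_r + r_2 I_s - \mu R,
\end{aligned}
\tag{3}
\]

with non-negative initial data \(S(0) \ge 0,\) \(E(0) \ge 0,\) \(I_r(0) \ge 0,\) \(I_s(0) \ge 0,\) \(R(0) \ge 0.\) Adding the five equations gives \({}^CD_t^\alpha N = b - \mu N\), which is the relation used in the proof of Theorem 3.1.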

Steady state analysis

Here, we will look into the RSV fractional-order system for both endemic and disease-free steady states. Setting the fractional derivative \({}^CD_t^\alpha S\), \({}^CD_t^\alpha E\), \({}^CD_t^\alpha {I_r}\), \({}^CD_t^\alpha {I_s}\) and \({}^CD_t^\alpha R\) of the fractional system (3) without infection to zero allows us to reach the steady-state with no infection. Disease free equilibrium points are
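As a quick check of the disease-free state, the sketch below encodes a right-hand side for the five compartments and evaluates it at \(D^0 = (b/\mu, 0, 0, 0, 0)\). The right-hand side is an assumed form inferred from the Lipschitz constants \(m_i\) and the bound \(N \le b/\mu\) stated elsewhere in the paper. The function names and the parameter values are hypothetical and chosen only for illustration; they are not the values of Table 1.

```python
def rsv_rhs(state, b, mu, beta, eta, p, r1, r2):
    """Right-hand side of an assumed SEIrIsR system (inferred, not quoted)."""
    S, E, Ir, Is, R = state
    new_infections = beta * S * (Ir + Is)
    return [
        b - new_infections - mu * S,          # susceptible
        new_infections - E / eta - mu * E,    # exposed
        p * E / eta - (r1 + mu) * Ir,         # normally infectious
        (1 - p) * E / eta - (r2 + mu) * Is,   # super-spreading infectious
        r1 * Ir + r2 * Is - mu * R,           # recovered
    ]


def disease_free_equilibrium(b, mu):
    """State with no infection: everyone is susceptible at level b / mu."""
    return [b / mu, 0.0, 0.0, 0.0, 0.0]


# Hypothetical parameter values, for illustration only.
params = dict(b=0.5, mu=0.25, beta=0.1, eta=2.0, p=0.8, r1=0.3, r2=0.2)
d0 = disease_free_equilibrium(params["b"], params["mu"])
residual = rsv_rhs(d0, **params)
print("D0 =", d0)
print("right-hand side at D0 =", residual)
```

Every component of the right-hand side vanishes at \(D^0\), so all Caputo derivatives are zero there, which is the defining property of a steady state.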

The endemic equilibrium can be expressed as follows by setting the right-hand side of the system (3) to zero and assuming that none of the disease states is zero,

where

Using the next-generation technique, we determined the basic reproduction number of the system, denoted by \(R_0\). It is given in13 as:

where \({\Psi _1} = {r_1} + p{r_1} + p{r_2} + \mu + 2p\mu ,\) and \({\Psi _2} = \mu \left( {\mu + {r_1}} \right) \left( {\mu + {r_2}} \right) \left( {\eta \mu + 1} \right) .\) The aforementioned \(R_0\) serves as a threshold parameter: if \(R_0\) is less than 1, the disease dies out, and if \(R_0\) is greater than 1, the infection persists in the community.

Sensitivity analysis

We can check the sensitivity of \(R_0\) by computing its partial derivatives with respect to the significant parameters, that is:

\(R_0\) is highly responsive to changes in the parameters. In this work, \(R_0\) increases with b, \(\beta\), and p, while it decreases with \(r_1\), \(r_2\), \(\mu\), and \(\eta\). To avoid an illness, prevention is preferable to treatment.
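The signs of such derivatives can be explored numerically with a normalized sensitivity index \(\frac{\partial R_0}{\partial q}\cdot\frac{q}{R_0}\), approximated by a central difference. In the sketch below, `r0_stand_in` is a hypothetical stand-in obtained by applying the next-generation approach to an assumed form of the system; it need not coincide with the expression built from \(\Psi_1\) and \(\Psi_2\) above. The parameter values are illustrative only.

```python
def r0_stand_in(b, mu, beta, eta, p, r1, r2):
    """Hypothetical threshold quantity for an assumed SEIrIsR system.

    Not the paper's Psi_1 / Psi_2 expression; used only to exercise
    the sensitivity routine below.
    """
    return b * beta / (mu * (1 + eta * mu)) * (p / (r1 + mu) + (1 - p) / (r2 + mu))


def sensitivity_index(func, params, name, rel_step=1e-6):
    """Normalized index (d func / d q) * (q / func), central difference."""
    base = func(**params)
    h = params[name] * rel_step
    up = dict(params, **{name: params[name] + h})
    down = dict(params, **{name: params[name] - h})
    derivative = (func(**up) - func(**down)) / (2 * h)
    return derivative * params[name] / base


# Hypothetical parameter values, for illustration only.
params = dict(b=0.5, mu=0.25, beta=0.1, eta=2.0, p=0.8, r1=0.2, r2=0.3)
indices = {name: sensitivity_index(r0_stand_in, params, name) for name in params}
for name, value in indices.items():
    print(f"{name:>4}: {value:+.4f}")
```

A positive index means the quantity grows with the parameter and a negative index means it shrinks. In this stand-in the index of p is positive only when \(r_1 < r_2\), since the derivative with respect to p is proportional to \(\frac{1}{r_1+\mu} - \frac{1}{r_2+\mu}\).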

Theorem 3.1

The closed set \(\Phi = \left\{ {\left( {S, E,{I_r},{I_s}, R} \right) \in R_ + ^5:N \le \frac{b}{\mu }} \right\}\) is a positive invariant set for the proposed fractional-order system (3).

Proof

To prove that the system of Eqs. (3) has a non-negative solution, we have

Thus, the fractional system (3) has non-negative solutions. Lastly, by adding all the relations of the system (3), the total population with the fractional derivative is given as

Taking the Laplace transform of both sides, we get

Taking the inverse Laplace transform of both sides and applying Theorem 7.2 in31, we obtain

Consequently, if \(N(0) \le \frac{b}{\mu }\), then \(N(t) \le \frac{b}{\mu }\) for \(t > 0\). Therefore, in the context of the fractional derivative, the closed set \(\Phi\) is positively invariant. \(\square\)
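The steps of the boundedness argument can be written out as follows, assuming that the five equations of system (3) add up to \({}^CD_t^\alpha N = b - \mu N\), as the bound \(b/\mu\) suggests. Here \(E_{\alpha}\) and \(E_{\alpha,\alpha+1}\) denote the one- and two-parameter Mittag-Leffler functions; this is a sketch of the standard computation, not a copy of the original displays.

\[
\begin{aligned}
{}^CD_t^\alpha N(t) &= b - \mu N(t),\\
s^{\alpha}\hat{N}(s) - s^{\alpha-1}N(0) &= \frac{b}{s} - \mu \hat{N}(s)
\;\;\Longrightarrow\;\;
\hat{N}(s) = \frac{s^{\alpha-1}N(0) + b\,s^{-1}}{s^{\alpha} + \mu},\\
N(t) &= N(0)\,E_{\alpha}\!\left(-\mu t^{\alpha}\right) + b\,t^{\alpha}E_{\alpha,\alpha+1}\!\left(-\mu t^{\alpha}\right)\\
&= \frac{b}{\mu} + \left(N(0) - \frac{b}{\mu}\right)E_{\alpha}\!\left(-\mu t^{\alpha}\right).
\end{aligned}
\]

The last line uses the identity \(z\,E_{\alpha,\alpha+1}(z) = E_{\alpha}(z) - 1\). Since \(0 < E_{\alpha}(-\mu t^{\alpha}) \le 1\) for \(t \ge 0\) and \(0 < \alpha \le 1\), the condition \(N(0) \le b/\mu\) gives \(N(t) \le b/\mu\) for all \(t > 0\).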

Remarks 3.1

The closed set \(\Phi\) represents a set of conditions that are biologically meaningful in the context of RSV transmission modeling. It emphasizes the relationship between population dynamics, birth and death rates, and the vulnerability of specific age groups. These insights inform strategies for managing and controlling RSV transmission within a population.

Theorem 3.2

If \(R_0 < 1,\) the disease-free equilibrium (DFE) of the system (3) is locally asymptotically stable (LAS); otherwise it is unstable.

Proof

The Jacobian matrix of system (3) at \(D^0\) is

and

where

It should be emphasized that the suggested model assumes positive values for the parameters. Therefore, the eigenvalue \(\lambda _1 < 0\), since the quantity \(\mu\) is strictly positive. By Descartes' rule of signs, Eq. (9) has no positive roots, because its coefficients show no sign change if \(R_0<1\). In addition, if \(\lambda\) is replaced by \(- \lambda\) in Eq. (9), then the coefficients of Eq. (9) change sign three times if \(R_0 < 1\); therefore Eq. (9) has precisely three negative roots. Thus, by the condition \(\left| {\arg {\lambda _i}} \right| > \frac{{\alpha \pi }}{2},i = 1,2,3,4,5,\alpha \in \left( {0,1} \right]\), \(D^0\) is locally asymptotically stable. \(\square\)
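The argument condition at the end of the proof is easy to test once the eigenvalues of a Jacobian are known. The helper below is a minimal sketch; the function name `matignon_stable` and the sample eigenvalues are hypothetical and are not taken from Eq. (9).

```python
import cmath
import math


def matignon_stable(eigenvalues, alpha):
    """Return True when every eigenvalue satisfies |arg(lambda)| > alpha * pi / 2."""
    threshold = alpha * math.pi / 2
    return all(abs(cmath.phase(complex(lam))) > threshold for lam in eigenvalues)


# Negative real parts are stable for every alpha in (0, 1].
print(matignon_stable([-1.0, -0.5, -2 + 3j, -2 - 3j], alpha=0.9))

# A complex pair with a small positive real part: unstable for alpha = 1,
# but inside the enlarged stability sector for alpha = 0.9.
pair = [0.1 + 1j, 0.1 - 1j]
print(matignon_stable(pair, alpha=1.0), matignon_stable(pair, alpha=0.9))
```

The second example illustrates why a fractional order below one can enlarge the stability region: the sector \(\left| \arg \lambda \right| > \alpha\pi/2\) widens as \(\alpha\) decreases.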

Remarks 3.2

The value of \(R_0\) is a critical determinant of RSV transmission dynamics. A value below 1 suggests limited transmission, while a value above 1 indicates the potential for significant and sustained spread. This insight is essential for understanding the epidemiology of RSV and for designing effective control and prevention strategies.

Qualitative analysis of model

Here, we demonstrate that the system has a unique solution. System (3) is first written as follows:

Applying the fractional integral to both sides of the aforementioned equations gives

We demonstrate that the Lipschitz condition and contraction are satisfied by the kernels \(P_i\), \(i = 1, 2, 3, 4, 5\).

Theorem 4.1

If the following inequality holds, then the kernel \(P_1\) fulfills both the Lipschitz condition and contraction:

Proof

We have

Assume that \({m_1} = \beta {k_1} + \beta {k_2} + \mu ,\) where \(I_r\) and \(I_s\) are bounded functions with \(\left\| {{I_r}} \right\| \le {k_1}\) and \(\left\| {{I_s}} \right\| \le {k_2}\).

Therefore

As a result, \(P_1\) meets the Lipschitz condition, and \(P_1\) is a contraction if \(0 \le \beta {k_1} + \beta {k_2 } + \mu < 1\). Similarly, we may demonstrate that \(P_i, i = 2, 3, 4, 5\) fulfil the Lipschitz condition and that \(P_i\) are contractions for \(i = 2, 3, 4, 5,\) if \(0 \le m_i < 1,\) where \({m_2} = \left( {\frac{1}{\eta }} \right) p + \left( {\frac{1}{\eta }} \right) \left( {1 - p} \right) + \mu ,\) \({m_3} = {r_1} + \mu ,\) \({m_4} = {r_2} + \mu ,\) and \({m_5} = \mu\). Consider the following recursive forms of system (11):

with the initial conditions \({S_0}(t) = S(0),\) \({E_0}(t) = E(0),\) \({I_{r_0}}(t) = {I_r}(0),\) \({I_{s_0}}(t) = {I_s}(0),\) \({R_0}(t) = R(0).\) Taking the norm of the first equation of the above system gives

Lipschitz condition (12) gives us

Similar to this, we get

Hence, we can state that

We demonstrate that a solution exists in the following theorem. \(\square\)

Theorem 4.2

A system of solutions of the model (3) exists if there is \(\xi _1\) such that

Proof

From Eqs. (13) and (14), we have

This means that the system is continuous and has a solution. We now demonstrate that the aforementioned functions form a solution of model (11). Considering that

So

The procedure is repeated to produce

At \(\xi _1,\) we get

Taking the limit of this equation as n tends to \(\infty\), we obtain \(\left\| {{\Upsilon _{1n}}(t)} \right\| \rightarrow 0.\) Similarly, we may demonstrate that \(\left\| {{\Upsilon _{in}}(t)} \right\| \rightarrow 0,i = 2,3,4,5.\) The proof is now complete. \(\square\)

We assume that the system has an alternative solution, such as \(S_1(t),\) \(E_1(t),\) \(I_{r1}(t),\) \(I_{s1}(t),\) and \(R_1(t),\) then we have

We use the norm of the aforementioned equation

Lipschitz condition (12) implies that

Thus

Theorem 4.3

If the following condition holds, the solution of model (3) is unique:

Proof

Assuming condition (15) is satisfied

Then \(\left\| {S(t) - {S_1}(t)} \right\| = 0.\) So, we obtain \(S(t) = S_1(t)\). Similar equalities may be demonstrated for E, \(I_r,\) \(I_s,\) and R. \(\square\)
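The contraction conditions \(0 \le m_i < 1\) used in Theorem 4.1 can be checked directly for a given parameter set. The sketch below evaluates the constants \(m_1,\dots,m_5\) exactly as they are stated in the text; the function names, the bounds \(k_1, k_2\), and the parameter values are hypothetical.

```python
def contraction_constants(beta, mu, eta, p, r1, r2, k1, k2):
    """Lipschitz constants m_1..m_5 as stated in the qualitative analysis."""
    return {
        "m1": beta * k1 + beta * k2 + mu,
        "m2": p / eta + (1 - p) / eta + mu,   # simplifies to 1/eta + mu
        "m3": r1 + mu,
        "m4": r2 + mu,
        "m5": mu,
    }


def all_contractions(constants):
    """True when every constant lies in [0, 1)."""
    return all(0 <= m < 1 for m in constants.values())


# Hypothetical values, for illustration only.
consts = contraction_constants(beta=0.1, mu=0.25, eta=2.0, p=0.8,
                               r1=0.2, r2=0.3, k1=2.0, k2=2.0)
print(consts)
print("all kernels are contractions:", all_contractions(consts))
```

Note that \(m_2\) does not depend on p, because \(\frac{p}{\eta} + \frac{1-p}{\eta} = \frac{1}{\eta}\), and that a large transmission rate \(\beta\) or large bounds \(k_1, k_2\) can violate the condition on \(m_1\).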

Ulam–Hyers stability

In this section, we examine the stability of the system (3) in the Ulam–Hyers (UH) sense. The stability analysis of approximate solutions is of significant importance.

Definition 5.1

The considered system is said to be UH stable if there exist constants \({\Upsilon _i} > 0,\) \(i \in {N^5}\), such that for each \({\Xi _i} > 0\), \(i \in {N^5}\), and for

there exist \(\left\{ {{\tilde{S}},\,{\tilde{E}},\,{{{\tilde{I}}}_r},\,{{{\tilde{I}}}_s},\,{\tilde{R}}} \right\}\) satisfying the following

such that

Assumption 5.1

Let \(\textrm{B}(u)\) be a Banach space of real-valued functions on \(u=[0, b]\), and equip the product space \(\textrm{B}(u) \times \textrm{B}(u) \times \textrm{B}(u) \times \textrm{B}(u) \times \textrm{B}(u)\) with the norm \(\left\| {{\tilde{S}},\,{\tilde{E}},\,{{{\tilde{I}}}_r},\,{{{\tilde{I}}}_s},\,{\tilde{R}}} \right\| = {\sup }_{t \in u} \left| {{\tilde{S}}} \right| + {\sup }_{t \in u} \left| {{\tilde{E}}} \right| + {\sup }_{t \in u} \left| {{{{\tilde{I}}}_r}} \right| + {\sup }_{t \in u} \left| {{{{\tilde{I}}}_s}} \right| + {\sup }_{t \in u} \left| {{\tilde{R}}} \right| .\)

Theorem 5.1

The considered system is UH stable with the above assumption.

Proof

Since the system has a unique solution, we have

Using \({\Delta _i} = {\Upsilon _i}\) and \(\frac{1}{{\Gamma (\alpha )}} = {\Xi _i}\), we have

Similarly, for the rest of the classes, we have the following

Thus, we have completed the proof. \(\square\)

Numerical scheme

The goal of this section is to construct a numerical scheme for system (3), which is then used to obtain the computational results. To illustrate this, consider the following:

By employing Lagrange’s interpolation polynomial (LIP), we get

where

\({\zeta _1} = r - \nu + 1,\) \({\zeta _2} = r - \nu ,\) \({\zeta _3} = r - \nu + 3 + 2\alpha ,\) and \({\zeta _4} = r - \nu + 3 + 3\alpha .\) We used data from the literature13. The following algorithm summarizes the methodology discussed above, together with the output variables, initial conditions, set of parameters, and resulting equations.
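A two-step Lagrange scheme of this kind can be sketched in a few lines. The version below is an assumption-laden illustration rather than the paper's exact algorithm: it starts from the Volterra form \(y(t) = y(0) + \frac{1}{\Gamma(\alpha)}\int_0^t (t-\tau)^{\alpha-1} f(\tau, y(\tau))\,d\tau\), replaces f on each subinterval by the line through its two most recent values, and integrates that line exactly against the power-law kernel. The resulting weights may differ in detail from the \(\zeta\)-weights quoted above, and the name `caputo_two_step` is hypothetical.

```python
import math


def caputo_two_step(f, y0, alpha, h, steps):
    """Explicit two-step Lagrange scheme for D^alpha y = f(t, y), y(0) = y0.

    f takes (t, y) with y a list and returns a list of the same length.
    Returns the list of states at t = 0, h, 2h, ..., steps * h.
    """
    coeff = h ** alpha / math.gamma(alpha + 2)
    ys = [list(y0)]
    fs = [list(f(0.0, ys[0]))]
    for n in range(steps):
        acc = [0.0] * len(y0)
        for k in range(n + 1):
            m = n + 1 - k
            # Weights from integrating the linear interpolant exactly.
            a = m ** alpha * (m + 1 + alpha) - (m - 1) ** alpha * (m + 1 + 2 * alpha)
            b = m ** (alpha + 1) - (m - 1) ** alpha * (m + alpha)
            f_now = fs[k]
            # Assumed start-up convention: f at t_{-1} is taken equal to f at t_0.
            f_prev = fs[k - 1] if k > 0 else fs[0]
            for i in range(len(acc)):
                acc[i] += a * f_now[i] - b * f_prev[i]
        y_next = [y0[i] + coeff * acc[i] for i in range(len(acc))]
        ys.append(y_next)
        fs.append(list(f((n + 1) * h, y_next)))
    return ys


# Usage on a scalar test problem: y' = -y, y(0) = 1, alpha = 1.
decay = caputo_two_step(lambda t, y: [-y[0]], [1.0], alpha=1.0, h=0.01, steps=100)
print(decay[-1][0], math.exp(-1.0))
```

For \(\alpha = 1\) the weights reduce to 3 and 1 with prefactor \(h/2\), so the scheme becomes the classical two-step Adams–Bashforth method. The cost grows quadratically with the number of steps because every step revisits the full history, which is the price of the memory effect.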

Simulation and discussion

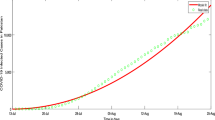

Here, we show how the transmission of RSV is impacted by fractional order. For a better understanding of the RSV infection phenomenon, we conducted several simulations. For numerical simulation, Table 1 contains the initial values of the compartments and the values of the model parameters.

To demonstrate how memory affects the dynamics of RSV, we consider various values of the memory index \((\alpha = 0.85, 0.90, 0.95, 1.00)\) in Figs. 1, 2, 3, 4 and 5. Figure 1 indicates that the susceptible population S(t) grows uniformly as the non-integer order \(\alpha\) decreases. This reflects how variations in the memory index \(\alpha\) influence immunity duration: a lower \(\alpha\) value indicates stronger memory effects, causing individuals to retain immunity for an extended time after infection or vaccination. Figure 3 shows a sharp jump in the population of super-spreading infected people in the early days when the fractional order \(\alpha\) decreases. This finding underscores the role of highly infectious individuals in initiating and driving early outbreaks. A decrease in the memory index \(\alpha\) could intensify the impact of memory-driven interactions, making highly infectious individuals more potent transmitters. This insight is crucial for anticipating and managing the initial surge of infection. Furthermore, Fig. 5 shows that the recovered population R(t) decreases as the memory index \(\alpha\) decreases. A lower memory index \(\alpha\) implies a shorter duration of immunity post-recovery. This aligns with the idea that reduced \(\alpha\) values emphasize stronger memory effects, resulting in faster waning of immunity. The observation emphasizes the potential for reinfections and the importance of immunity maintenance strategies.

It is clear that the memory index has a significant impact on the dynamics of RSV and lowers the number of infected people. These observations reinforce the importance of memory effects, immunity, and initial transmission dynamics in the context of RSV infection. They offer insights that can influence public health strategies, vaccination programs, and the understanding of population-level immunity. The findings contribute to a more comprehensive understanding of the biological mechanisms underlying RSV behavior and its interactions with the human immune system, ultimately aiding in more effective disease management and control.

Figs. 1, 2, 3, 4 and 5 depict the effects of the input parameter p on the dynamics of RSV transmission, where the impact of the infection progression rate has been established. Figure 6 shows the chaotic behavior of our system (3) for different settings of the memory index \(\alpha\); the trajectories of the system converge to the equilibrium point. In Fig. 6a, we observe that the more susceptible individuals there are, the more of them transition to the exposed class, whereas the fewer susceptible individuals there are, the fewer transition to the exposed class. In Fig. 6b, we observe that the more susceptible individuals there are, the more become infected with RSV, whereas the fewer susceptible individuals there are, the fewer become infected. Figure 6c shows that the number of recovered individuals decreases as the number of normally infected individuals increases. We noted that treatment effectiveness, immune response variations, and the overall progression of the infection collectively influence this chaotic transition. We observed that the memory index \(\alpha\) can also be used as a chaotic control parameter. Chaotic behavior of systems is relied upon in numerous scientific and engineering applications, and there is considerable interest in visualizing and representing the behavior of chaotic systems. The chaotic modeling makes the proposed mathematical model feasible and scalable, so that it can be used to study novel chaotic systems. We demonstrated that \(\alpha\) can make a significant contribution and could be used as a useful parameter for preventative measures.

Conclusion

The most frequent cause of lower respiratory tract infections in newborns and children globally is respiratory syncytial virus (RSV). In this work, we have presented a fractional-order mathematical model for RSV transmission in the presence of super-spreaders. The model is formulated with the Caputo derivative. The results demonstrate that the model has bounded and positive solutions. The sensitivity analysis indicates that \(R_0\) increases with the birth rate of susceptible individuals (b), the virus transmission rate between humans \((\beta )\), and the likelihood of a new case being a normally infected human (p). These factors are adjustable through the efficient implementation of vaccination campaigns. Using the fixed-point theorem, we investigated the existence and uniqueness of the system's solutions. Furthermore, we established Ulam–Hyers stability results for our RSV infection system. We presented a two-step Lagrange polynomial numerical technique for the Caputo fractional derivative to understand the dynamics of RSV. The chaotic graphs have been displayed for varying input parameters. It has been demonstrated that the chaotic behavior of the proposed model is affected by the fractional order \(\alpha\). Adding the memory index \(\alpha\) is expected to improve the system, and \(\alpha\) can be employed as a controlling parameter. Every fractional order model, in our opinion, carries more information than its integer-order counterpart. For example, the integer order model has only one solution, but the fractional order varying over an interval yields an infinite number of solutions. The beauty of these operators is that they can reveal new information for orders other than one while still approaching the integer order solution for orders that are close to one.
In conclusion, the utilization of data-driven approaches in Respiratory Syncytial Virus (RSV) modeling proves pivotal in understanding the complexities of disease transmission and management.

In our future work, we plan to implement optimal control analysis on this model to reduce infection rates and increase the number of healthy individuals. The biological phenomenon can be described using real data by extending our model to a new generalized fractional derivative. Additionally, we will also apply the fractional operators to stochastic models.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Change history

13 February 2024

A Correction to this paper has been published: https://doi.org/10.1038/s41598-024-54130-9

References

Arenas, A. J., González, G. & Jódar, L. Existence of periodic solutions in a model of respiratory syncytial virus RSV. J. Math. Anal. Appl. 344(2), 969–980 (2008).

Weber, A., Weber, M. & Milligan, P. Modeling epidemics caused by respiratory syncytial virus (RSV). Math. Biosci. 172(2), 95–113 (2001).

Glezen, W. P., Taber, L. H., Frank, A. L. & Kasel, J. A. Risk of primary infection and reinfection with respiratory syncytial virus. Am. J. Dis. Child. 140(6), 543–546 (1986).

Rao, F., Mandal, P. S. & Kang, Y. Complicated endemics of an SIRS model with a generalized incidence under preventive vaccination and treatment controls. Appl. Math. Model. 67, 38–61 (2019).

Lin, J., Xu, R. & Tian, X. Global dynamics of an age-structured cholera model with both human-to-human and environment-to-human transmissions and saturation incidence. Appl. Math. Model. 63, 688–708 (2018).

Khan, T., Rihan, F. A. & Ahmad, H. Modelling the dynamics of acute and chronic hepatitis B with optimal control. Sci. Rep. 13(1), 14980 (2023).

Miyaoka, T. Y., Lenhart, S. & Meyer, J. F. Optimal control of vaccination in a vector-borne reaction–diffusion model applied to Zika virus. J. Math. Biol. 79(3), 1077–1104 (2019).

Eikenberry, S. E. & Gumel, A. B. Mathematical modeling of climate change and malaria transmission dynamics: A historical review. J. Math. Biol. 77, 857–933 (2018).

Ghosh, I., Tiwari, P. K. & Chattopadhyay, J. Effect of active case finding on dengue control: Implications from a mathematical model. J. Theor. Biol. 464, 50–62 (2019).

Li, L., Sun, C. & Jia, J. Optimal control of a delayed SIRC epidemic model with saturated incidence rate. Opt. Control Appl. Methods 40(2), 367–374 (2019).

Acedo, L., Morano, J. A. & Díez-Domingo, J. Cost analysis of a vaccination strategy for respiratory syncytial virus (RSV) in a network model. Math. Comput. Model. 52(7–8), 1016–1022 (2010).

Arenas, A. J., Moraño, J. A. & Cortés, J. C. Non-standard numerical method for a mathematical model of RSV epidemiological transmission. Comput. Math. Appl. 56(3), 670–678 (2008).

Sungchasit, R., Tang, I. M. & Pongsumpun, P. Mathematical modeling: Global stability analysis of super spreading transmission of respiratory syncytial virus (RSV) disease. Computation 10(7), 120 (2022).

Caputo, M. & Fabrizio, M. A new definition of fractional derivative without singular kernel. Progr. Fract. Differ. Appl 1(2), 73–85 (2015).

Atangana, A. & Baleanu, D. New fractional derivatives with nonlocal and non-singular kernel: Theory and application to heat transfer model. arXiv:1602.03408 (arXiv preprint) (2016).

Nisar, K. S. et al. Analysis of dengue transmission using fractional order scheme. AIMS Math. 7(5), 8408–8429 (2022).

Haq, I. U. et al. Mathematical analysis of a Corona virus model with Caputo, Caputo-Fabrizio-Caputo fractional and Atangana-Baleanu-Caputo differential operators. Int. J. Biomath. 20, 10 (2023).

Rihan, F. A., Al-Mdallal, Q. M., AlSakaji, H. J. & Hashish, A. A fractional-order epidemic model with time-delay and nonlinear incidence rate. Chaos Solitons Fractals 126, 97–105 (2019).

Jan, A., Jan, R., Khan, H., Zobaer, M. S. & Shah, R. Fractional-order dynamics of Rift Valley fever in ruminant host with vaccination. Commun. Math. Biol. Neurosci. 20, 20 (2020).

Asamoah, J. K. K. et al. Optimal control and cost-effectiveness analysis for dengue fever model with asymptomatic and partial immune individuals. Results Phys. 31, 104919 (2021).

Jan, R. & Boulaaras, S. Analysis of fractional-order dynamics of dengue infection with non-linear incidence functions. Trans. Inst. Meas. Control. 44(13), 2630–2641 (2022).

Jan, R., Shah, Z., Deebani, W. & Alzahrani, E. Analysis and dynamical behavior of a novel dengue model via fractional calculus. Int. J. Biomath. 15(06), 2250036 (2022).

Tang, T. Q., Shah, Z., Jan, R. & Alzahrani, E. Modeling the dynamics of tumor-immune cells interactions via fractional calculus. Eur. Phys. J. Plus 137(3), 367 (2022).

Jan, R., Boulaaras, S., Alyobi, S. & Jawad, M. Transmission dynamics of Hand-Foot-Mouth Disease with partial immunity through non-integer derivative. Int. J. Biomath. 16(06), 2250115 (2023).

Almutairi, N., Saber, S. & Ahmad, H. The fractal-fractional Atangana–Baleanu operator for pneumonia disease: Stability, statistical and numerical analyses. AIMS Math. 8(12), 29382–29410 (2023).

Nemati, S. & Torres, D. F. A new spectral method based on two classes of hat functions for solving systems of fractional differential equations and an application to respiratory syncytial virus infection. Soft. Comput. 25(9), 6745–6757 (2021).

Ullah, A., Abdeljawad, T., Ahmad, S. & Shah, K. Study of a fractional-order epidemic model of childhood diseases. J. Funct. Sp. 20, 20 (2020).

Sajjad, A., Farman, M., Hasan, A. & Nisar, K. S. Transmission dynamics of fractional order yellow virus in red chili plants with the Caputo-Fabrizio operator. Math. Comput. Simul. 20, 10 (2023).

Abu-Zinadah, H., Alsulami, M. D. & Ahmad, H. Application of efficient hybrid local meshless method for the numerical simulation of time-fractional PDEs arising in mathematical physics and finance. Eur. Phys. J. Spec. Top. 20, 1–11 (2023).

Adel, M., Khader, M. M., Ahmad, H. & Assiri, T. A. Approximate analytical solutions for the blood ethanol concentration system and predator-prey equations by using variational iteration method. AIMS Math. 8(8), 19083–19096 (2023).

Diethelm, K. & Ford, N. J. Analysis of fractional differential equations. J. Math. Anal. Appl. 265(2), 229–248 (2002).

Acknowledgements

This study is supported via funding from Prince Sattam bin Abdulaziz University project number (PSAU/2023/R/1444).

Funding

This Project is funded by King Saud University, Riyadh, Saudi Arabia.

Author information

Contributions

All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The original version of this Article contained an error in the Acknowledgements section.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jamil, S., Bariq, A., Farman, M. et al. Qualitative analysis and chaotic behavior of respiratory syncytial virus infection in human with fractional operator. Sci Rep 14, 2175 (2024). https://doi.org/10.1038/s41598-023-51121-0

DOI: https://doi.org/10.1038/s41598-023-51121-0
