Abstract

Influenza epidemic data are seasonal in nature. Zero-inflation, zero-deflation, overdispersion, and underdispersion are frequently seen in such number of cases of disease (count) data. To explain these counts’ features, this paper introduces a flexible model for nonnegative integer-valued time series with a seasonal autoregressive structure. Some probabilistic properties of the model are discussed for general seasonal INAR(p) model and three estimation methods are used to estimate the model parameters for its special case seasonal INAR(1) model. The performance of the estimation procedures has been studied using simulation. The proposed model is applied to analyze weekly influenza data from the Breisgau- Hochschwarzwald county of Baden–Württemberg state, Germany. The empirical findings show that the suggested model performs better than existing models.

Similar content being viewed by others

Introduction

Many epidemic diseases follow a seasonal pattern and so is the Influenza. Influenza shows a seasonal pattern1 in temperate regions. The weekly/monthly/yearly epidemic data form a time series of counts. Analyzing and forecasting time series of counts remain a useful technique of getting information needed for successful policy making and management of epidemics. Before modeling a count time series, an analyst should be familiar with specific characteristics of the series in order to select a good model for the data. Dispersion characteristic, stationarity, autocorrelation structure and seasonality remain factors that help a researcher to determine the model that should be fitted to a count time series. Seasonality refers to the tendency of a time series (including low count time series) to exhibit patterns or movements that are completed within a year and repeat themselves every year. Seasonality deals with regular and predictable patterns in a time series. For example, a weekly time series of low counts is said to be seasonal with seasonal period s = 52 if its associated autocorrelation function has spikes at multiples of lag 52.

New INAR processes have been constructed and used to model stationary nonseasonal count time series from a variety of fields since the independent works of Al-Osh and Alzaid2, and McKenzie3, which paved the way for research on the applications and construction of nonnegative integer-valued autoregressive (INAR) processes. Several models were constructed for stationary, overdispersed nonseasonal series based on the binomial thinning operator and first-order autoregressive correlation structure4,5. Authors who used other thinning operators to build models for the count time series include Liu and Zhu6, and Ristić et al.7. There is also evidence of stationary, first-order integer-valued autoregressive processes, which were constructed using innovation distributions, that can exhibit any of the underdispersion, equidispersion and overdispersion phenomena8,9,10.

An INAR(1) process with an innovation distribution that is basically a standard discrete or compound Poisson distribution has been described as inappropriate for modeling any of underdispersed and overdispersed series with either inflation or a deflation of zeros11. Zero-modified distributions are useful in modeling count data with an inflation or deflation of zeros. The zero-modified versions of some discrete distributions have been introduced and used as innovation distributions to construct INAR(1) models by some authors. For example, Barreto-Souza11 constructed a zero-modified geometric INAR(1) process using the negative binomial thinning operator and illustrated empirically the applicability of the model using two practical data sets. In particular, the model may be used to describe an underdispersed or overdispersed series with deflationary or inflationary zeros. Sharafi et al.12 suggested an INAR(1) process using the zero-modified Poisson–Lindley (ZMPL) distributed innovations. They fitted the model to both zero-deflated and zero-inflated series and compared the model’s fit to each data set to that of competing models. Empirically speaking, their model outperformed the other models fitted to each of the data sets.

Sometimes, it is necessary to analyze time series that exhibit seasonal variations. Such time series are called seasonal time series. Seasonal time series of counts are seen in several domains, including epidemiology13. Though authors have analyzed several seasonal epidemiological data using the Guassian multiplicative seasonal autoregressive moving average (SARIMA) model14,15,16 and Holt–Winter’s Method16, these models may not be suitable for certain nonnegative count time series, especially those involving small counts17,18,19. In the case of seasonal low count time series, a seasonal nonnegative integer-valued autoregressive (INAR) model is needed. Unlike the SARIMA model, the seasonal INAR model takes into consideration the discrete nature of the data through an appropriate discrete innovation distribution. It can also be used to generate integer-valued forecasts. It can also be constructed to account for properties of count time series, such as overdispersion, underdispersion, zero-inflation and zero-deflation. Despite the extensive literature on INAR models, INAR models for stationary seasonal count time series have received little attention20,21,22. Remarkably, none of the seasonal models was developed for the purpose of analyzing an underdispersed or overdispersed seasonal count time series that is either zero-inflated or zero-deflated. This study intends to achieve two principal objectives. First, to propose a seasonal INAR process of order one that is suitable for underdispersed, overdispersed, zero-inflated and zero-deflated count time series data. Second, application of the model to influenza data. In view of the absence of the general seasonal INAR model in the literature, the theoretical framework is developed for the general seasonal INAR model in this paper.

The remainder of this article is organized as follows: “Introduction” discusses the general seasonal INAR model. In “The general seasonal INAR model”, we deal with the construction and properties of the general seasonal INAR(p) model. Methods of estimating the parameters of the model are investigated, namely the Yule–Walker and conditional least squares. The theoretical aspect of forecasting based on the proposed model, simulation and real-world data application for a special case of the general seasonal model, namely the INAR\((1)_{s}\)ZMPL process are considered in “Construction of the proposed seasonal INAR(1) process and its properties”. The conclusions of this research are summarized in “Conclusion”.

The general seasonal INAR model

In response to the comment made by one of the anonymous reviewers, and considering that the literature lacks a general seasonal INAR model, we introduce the general seasonal INAR model in this section. Let “\(*\)” be the negative binomial thinning (NBT) operator and \(\lambda *W= \sum _{i=1}^W Z_i,\) where \({Z_i}\) refers to the sequence of independent and identically distributed (i.i.d.) geometric variables having the probability mass function (PMF)

An elaborate discussion of the properties of the NBT operator is available in Ristić et al.7. The general seasonal INAR model of order P and seasonal period s (INAR\((P)_s\) model) is defined by

In (1), the counting series are mutually independent, \(\lambda _l \in \left[ 0,1\right] ,~l=1, 2, \ldots , P\) and \(\left\{ \nu _t \right\}\) are independent of all the counting series.

Theorem 2.1 contains the condition for the existence of a unique stationary INAR\((P)_s\) model.

Theorem 2.1

Let \(\left\{ \nu _t \right\}\) be i.i.d., nonnegative integer-valued random variables whose mean and variance are E\((\nu _t)= \mu _v\) and Var\((\nu _t)= \sigma _\nu ^2\) respectively. Suppose that \(\lambda _l \in \left[ 0,1\right] ,~l=1, 2, \ldots , P\). If the roots of the equation

lie inside the unit circle, then there is a unique stationary nonnegative integer-valued series \(\left\{ W_t \right\}\) that satisfies the equation

Proof

The proof of Theorem 2.1 is based on some properties of the NBT operator. These properties, which were established by Ristić et al.7 are as follows

-

(i)

E \(\left[ \prod _{m=1}^r\left( \lambda _m *W_m\right) \right] =\prod _{m=1}^r \lambda _m E\left( W_m\right) , r \ge 1\)

-

(ii)

\(E \left( \lambda *W\right) ^2 =\lambda ^2E \left( W\right) ^2+\lambda (1+\lambda )E \left( W\right)\)

-

(iii)

\(E \left( \lambda *W-\lambda *Y \right) ^2=\lambda (1+\lambda )E \left( \vert W-Y \vert \right) +\lambda ^2 E \left( \vert W-Y \vert \right) ^2,\) if each of the counting series of \(\lambda *W\) and \(\lambda *Y\) has the geometric \(\left( \frac{\lambda }{1+\lambda }\right)\) distribution. The remaining part of the proof can be deduced from the proof of Theorem 2.1 in Jin-Guan and Yuan23.

\(\square\)

Taking expectation of both sides of (1) and assuming stationarity, the mean of the INAR\((P)_s\) model becomes

Next, we study the autocorrelation structure of the INAR\((P)_s\) model. Let \({\textbf {W}}_t= \left( W_t, W_{t-s}, W_{t-2s}, \ldots , W_{t-(P+1)s} \right)' .\)

Then (1) can be written as

where

The vector of the autocovariances is defined by

Since \(E\left( {\textbf {W}}_t\right) ={\textbf {C}}E\left( {\textbf {W}}_{t-s}\right) + E\left( \varvec{\nu }_t\right)\), it is easy to verify that \(\left( {\textbf {C}}-{\textbf {I}}\right) E\left( {\textbf {W}}_{t-s} \right) E\left( {\textbf {W}}_{t-k}^\top \right) +E\left( \varvec{\nu }_t\right) E\left( {\textbf {W}}_{t-k}^\top \right)\) is a null matrix. Thus

It follows that the autocovariance function at lag k is

The associated autocorrelation coefficient has the form:

In view of (6), it is obvious that the model under consideration and the Gaussian AR model of order P and seasonal period ‘s' have similar autocorrelation structures. Hence, the identification of the model can be done using the autocorrelation function (ACF) and partial autocorrelation function (PACF). Theoretically speaking, the ACF of the INAR\((P)_s\) model has nonzero values at the seasonal lags. The nonzero values at the seasonal lags tail off while the related partial ACF cuts off after lag P. When we have a stationary seasonal time series with sample ACF and sample partial ACF that have patterns that are akin to the theoretical ACF and sample partial ACF, we are expected to fit the INAR\((P)_s\) model to the data. In situations where, the sample ACF and sample partial ACF do not look exactly like their theoretical counterparts, a variety of models can be fitted to the given data and the best model is determined using model selection criteria24.

The parameters \(\lambda _l,~l=1, 2, \ldots , P\) of the model can be estimated using any of the Yule–Walker and conditional least squares approaches. For \(k=s, 2s, \ldots , Ps\), we derive P equations from (6). These equations are written in matrix form as

where

The Yule–Walker estimator \(\hat{\varvec{\lambda }}\) of \(\varvec{\lambda }\) is given as

For example, for a second order monthly count time series, \(s=12\) and the estimates \(\hat{\lambda }_{1}\) and \(\hat{\lambda }_{1}\) based on sample autocorrelation coefficients at lags 12 and 24 are given as.

In (7),

Furthermore, the Yule–Walker estimate of \(\mu _\nu\) is

The corresponding estimate of \(\sigma _\nu ^2\) is

Notably,

and

Let \(\varvec{\Theta }=\left( \mu _\nu , \lambda _1, \lambda _2, \ldots , \lambda _P\right) ^\top\), \(g(\varvec{\Theta },F_t) =\lambda _1W_{t-s}+\lambda _2W_{t-2s}+\cdots +\lambda _PW_{t-Ps}+\mu _\nu\) and \(Q(\varvec{\Theta })= \sum _{Ps+1}^n \left( W_t-g(\varvec{\Theta },F_t)\right) ^2.\)

The CLS estimator \(\hat{\varvec{\Theta }}\) of \(\varvec{\Theta }\) is obtained by minimizing \(Q(\varvec{\Theta })\). In this case, we solve the following equations simultaneously:

Certain properties of CLS estimators are well-known (see, Klimko and Nelson25).

The minimum mean squared error (MMSE) predictor of \(W_{n+1}\) is

In general, the MMSE of \(W_{n+m}\) becomes

We have carried out a simulation study to assess the performance of the Yule–Walker (YW) and conditional least squares(CLS) estimates. For the sake of brevity we consider INAR\((2)_{S}\) model and its parameter estimation. We simulated 1000 series from the proposed model for various parameter combinations and for various sample sizes as given in Table 1. We have computed the mean estimates and their related MSEs based on 1000 simulations. In this study, we estimated two autoregressive parameters (\(\lambda _{1}\) and \(\lambda _{2}\)) and the mean of the innovation distribution (\(\mu _{\nu }\)). For simulation from an innovation distribution, we use the parameter combinations: \(\alpha =(4,1,2,0.5)\) and \(\delta =(-2,0.5,-1,0.7)\), which give the mean of the innovation distribution as \(\mu _{\nu }=(0.9,0.75,1.3333,1.00)\). The estimation of AR coefficient and all the parameters of innovation distribution have been studied in detail for INAR\((1)_{S}\) model in “Construction of the proposed seasonal INAR(1) process and its properties”. From Table 1, it can be seen that the estimates perform well, and their mean squared errors (MSEs) decrease as the sample size increases. It also can be seen that the CLS estimation performs much better than the YW estimation in terms of the MSE. As the joint distribution of \(\lambda _{1} *W_{t-s}\) and \(\lambda _{2} *W_{t-2s}\) is not tractable, we can not find the conditional distribution and hence maximum likelihood estimation cannot be attempted, as it has been done for INAR\((1)_{S}\) model in “Construction of the proposed seasonal INAR(1) process and its properties”.

Construction of the proposed seasonal INAR(1) process and its properties

In this section, the definition of the first-order seasonal INAR process based on zero-modified Poisson–Lindley (ZMPL) innovations12 and NBT operation is given, with an extensive study. Using the NBT operator, we define the proposed seasonal INAR(1) process as follows.

Definition 3.1

Let \(\lbrace W_t\rbrace\) be a discrete-time nonnegative integer-valued process. Then, the process is a seasonal INAR(1) process with zero-modified Poisson–Lindley innovations if

where ‘s’ represents the seasonal period such that \(s \in \mathbb {N}^+\), \(\mathbb {N}^+\) is the set of positive integers, \(\lbrace W_t\rbrace\) stands for a sequence of identically distributed nonnegative random variables, \(\lbrace \nu _t\rbrace\) is an innovation sequence of i.i.d. ZMPL random variables not depending on the past values of \(\lbrace W_t\rbrace\) and the thinnings of \(\lbrace W_t\rbrace\) and the random variables \(\lbrace W_{t-s}\rbrace\), \(\lbrace W_{t-2s}\rbrace\), \(\ldots ,\) are independent.

In the sequel, the notation INAR(1)\(_s\)ZMPL process is used to represent the model defined in Eq. (12). A random variable X follows the ZMPL distribution with parameters \(\alpha\) and \(\delta\) if its PMF is

where \(\alpha >0\) and \(-\frac{\alpha ^2(\alpha +2)}{\alpha ^2+3\alpha +1} \le \delta \le 1.\) Thus, the unconditional mean and variance of the random variable \(\lbrace \nu _t\rbrace\) are, respectively, given by

and

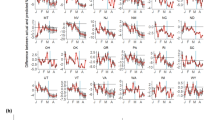

Following26, the distribution of the random variable (RV) \(\nu _t\) is overdispersed if \(\delta \in [0, 1)\), while it is underdispersed when \(\alpha > \sqrt{2}\) and \(\delta \in \left( \frac{-\alpha ^2(\alpha +2)}{\alpha ^2+3\alpha +1}, 0 \right) .\) The INAR(1)\(_s\)ZMPL process comprises ‘s’ mutually independent INAR(1) processes with ZMPL innovations, which are constructed using the NBT operator such that they have the autoregressive parameter \(\lambda\) and an innovation distribution. The simulated sample paths, sample ACFs and sample PACFs of the process for various parameter combinations are given in the Fig. 1. The seasonality in the simulated data can be seen from the time series plots as well as ACF plots in the figure. Let \(W_t^{(r)}=W_{ts+r}, \quad t \in \mathbb {N}_0\), where \(\mathbb {N}_0\) is a set of nonnegative integers. For \(r=0,1,2, \ldots , s-1\), the process \(\lbrace W_t^{(r)} \rbrace\) satisfies the model:

Here, the innovation sequences \(\lbrace \nu _t^{(r)} \rbrace\) satisfy the equation \(\nu _t^{(r)}=\nu _{ts+r}\). The independence of \(\lbrace W_t^{(r)} \rbrace\) is the direct implication of the independence of \(\lbrace \nu _t^{(r)} \rbrace\) and the counting processes that define the requisite thinning operators. Additionally, the process defined in Eq. (12) is an s-step Markov chain, implying that

\(P\left( W_t=w_t\vert W_{t-1}=w_{t-1}, \ldots , W_0=w_0\right) =P\left( W_t=w_t\vert W_{t-s}=w_{t-s}\right), \quad w_0, w_1, \ldots , w_{s-1} \in \mathbb {N}_0.\)

The conditional mean of the process is

Hence, the unconditional expectation of the process is

Assuming stationarity and using Eq. (17), the unconditional mean is found to be

The conditional variance satisfies the equation:

The unconditional variance is obtained as

Hence, it follows that

Suppose that \(W_{(k+h)s+j}\) and \(W_{ks+i}\) satisfy the process in Eq. (12), \(h \in \mathbb {N}^+\) and \(k \in \mathbb {N}_0,~ i, j=1,2, \ldots , s\). In order to gain insight into conditional moments based on the seasonal period as well as the autocorrelation function of the process, we proceed to give the following propositions:

Proposition 3.1

The conditional expectation of \(W_{(k+h)s+j}\) given \(W_{ks+i}\) is

and \(\lim \nolimits _{h \rightarrow \infty } \text {E}\left( W_{(k+h)s+j} \vert W_{ks+i} \right) =\mu _W.\)

Proof

Let \(W_{(k+h)s+j}=W_{k+h}^{(j)}\) and \(W_{ks+i}=W_{k}^{(i)}\). If \(i\ne j\), the conditional expectation of \(W_{k+h}^{(j)}\) given \(W_{k}^{(i)}\) is equal to \(\frac{\mu _\nu }{1-\lambda }\), which is the unconditional expectation of \(W_t\). If \(i=j\), we have

Consequently,

The proof comes to an end here. \(\square\)

Proposition 3.2

The conditional variance of \(W_{(k+h)s+j}\) given \(W_{ks+i}\) is

and \(\lim \nolimits _{h \rightarrow \infty } \text {Var}\left( W_{(k+h)s+j} \vert W_{ks+i} \right) =\sigma _W^2.\)

Proof

If \(i \ne j\), \(W_{k+h}^{(j)}\) and \(W_{k}^{(i)}\) are independent. Thus, the conditional variance of \(W_{(k+h)s+j}\) given \(W_{ks+i}\) is \(\frac{\lambda (\lambda +1)\mu _\nu +(1-\lambda )\sigma _\nu ^2}{(1-\lambda )(1-\lambda ^2)}\), the unconditional variance of \(W_t\).

If \(i=j\), then

where

Notably, \(\text {Var}\left( W_k^{(j)}\big \vert W_k^{(j)} \right) =0\). Also, after some algebra with the earlier discussions, we can find that,

In the light of the above, we have

Hence,

\(\square\)

Proposition 3.3

The covariance of \(W_{(k+h)s+j}\) and \(W_{ks+i}\) has the form

Proof

Once \(i \ne j\), the variables \(W_{(k+h)s+j}\) and \(W_{ks+i}\) are uncorrelated. In this case, \(\text {Covar}\left( W_{(k+h)s+j}, W_{ks+i} \right) =0.\)

Dividing the autocovariance function by \(\text {Var}\left( {W_t}\right)\), the autocorrelation function (ACF) is obtained as

Here, we have an exponentially decaying autocorrelation function. \(\square\)

Techniques for estimating model parameters

Three popular and widely used methods of point estimation for the parameters of the INAR\((1)_{S}\)ZMPL are adopted in this section. These are, the Yule–Walker, conditional least squares and conditional maximum likelihood methods. In each of the methods, we assume a realization of the seasonal count time series.

Yule–Walker approach

To estimate the parameters \(\lambda\), \(\delta\) and \(\alpha\) of the INAR(1)\(_s\)ZMPL process, we form three equations by equating \(\hat{\rho }(s)\), \(\text {E}\left( W_t\right)\) and \(\text {Var}\left( W_t\right)\) to \(\frac{\hat{\gamma }(s)}{\hat{\gamma }(0)}\), \(\overline{W}\) and \(\hat{\gamma }(0)\), respectively.

Notably, \(\overline{W}=\frac{ \sum _{t=1}^{n} W_t}{n}\), \(\hat{\gamma }(s)=\frac{1}{n} \sum _{t=s+1}^{n}\left( W_t-\overline{W}\right) \left( W_{t-s}-\overline{W}\right)\), and n are, respectively, the sample mean, sample autocorrelation function and length of the time series. If \(\hat{\lambda }_{\text {YW}}\), \(\hat{\delta }_{\text {YW}}\) and \(\hat{\alpha }_{\text {YW}}\) are the Yule–Walker (YW) estimators of \(\lambda\), \(\delta\) and \(\alpha\) in that order, then the following equations are needed:

where

From Eq. (20), we obtain

Using Eqs. (21) and (22), the following cubic equation is obtained:

where

R packages for solving polynomial equations, particularly the cubic equation, abound. In “Yule–Walker approach” of this study, polyroot R function is used to obtain the roots of Eq. (23) after the coefficients have been calculated from the given time series. Though the equation has three roots, only the root that gives an acceptable value of \(\hat{\delta }_{YW}\) will be used for further computations.

Approach to conditional least squares estimation

Consider the process in Eq. (12). Let \(\hat{\varvec{\xi }}_{\text {CLS}}=\left( \hat{\lambda }_{CLS}, \hat{\delta }_{CLS} , \hat{\alpha }_{CLS}\right) ^T\) be the vector of the conditional least squares (CLS) estimators of \({\varvec{\xi }_{\text {CLS}}}=\left( {\lambda }_{CLS}, {\delta }_{CLS} , {\alpha }_{CLS}\right) ^T\). Then

where \(C_n(\varvec{\xi })=\sum _{t=s+1}^n\left( W_t-\lambda W_{t-s}-(1-\delta ) \frac{\alpha +2}{\alpha (\alpha +1)}\right) ^2.\)

Here, it is necessary to obtain and equate each of \(\frac{\partial C_n( \varvec{\xi })}{\partial \lambda } \big \vert _{\lambda =\hat{\lambda }_{CLS},~ \delta =\hat{\delta }_{CLS},~ \alpha =\hat{\alpha }_{CLS}},\),

\(\frac{\partial C_n( \varvec{\xi })}{\partial \delta } \big \vert _{\lambda =\hat{\lambda }_{CLS},~ \delta =\hat{\delta }_{CLS},~ \alpha =\hat{\alpha }_{CLS}}\) and \(\frac{\partial C_n(\varvec{\xi })}{\partial \alpha }\big \vert _{\lambda =\hat{\lambda }_{CLS},~ \delta =\hat{\delta }_{CLS},~ \alpha =\hat{\alpha }_{CLS}}\) to zero, leading to the following equations, respectively:

Equations (26) and (27) are identical. Hence, we solve for \(\hat{\lambda }_{\text {CLS}}\) using Eqs. (25) and (26). The concerned estimator has the form:

Suppose \(C_n(\delta , \alpha )\) is a function obtained when we substitute \(\hat{\lambda }_{\text {CLS}}\) for \({\lambda }\) in \(C_n(\varvec{\xi })\). We minimize \(C_n(\delta , \alpha )\) in order to find \(\hat{\delta }_{\text {CLS}}\) and \(\hat{\alpha }_{\text {CLS}}\). The minimization process is done using a suitable numerical method. To summarize, no closed form expression can be found for any of \(\hat{\delta }_{\text {CLS}}\) and \(\hat{\alpha }_{\text {CLS}}\).

Conditional maximum likelihood estimation

In view of the s-step Markovian property of the seasonal INAR(1)\(_s\)ZMPL process, we define the transition probabilities as

Using this we can write the detailed transition probabilities as

Let \(\hat{\varvec{\xi }}_{\text {CML}}\) be the conditional maximum likelihood(CML) estimator of \({\varvec{\xi }}\). To find \(\hat{\varvec{\xi }}_{\text {CML}}\), maximize the conditional log-likelihood function below:

Obviously, \(\hat{\varvec{\xi }}_{\text {CML}}\) has no closed form. Furthermore, the required estimators can only be found through a numerical technique.

Simulation study for the INAR\((1)_{s}\)ZMPL

In this section, we have carried out a simulation study to assess the performance of the proposed estimation methods for INAR\((1)_{s}\)ZMPL process, paying attention to the cases of zero-inflation, overdispersion, zero-deflation and underdispersion baesd on the INAR\((1)_{s}\)ZMPL process. We have simulated 1000 series of the sample sizes 100, 300, 500 and 1000 for various parameter combinations and for seasonal period \(s=52\). We have computed the mean and the mean squared errors (MSEs) of the estimates over all the 1000 simulations. All the quantities are given in the Table 2, the first row for each parameter and sample size (n) combination represents the mean estimate and second row represents the MSE. From the table, it can be seen that the MSE is decreasing with the increase in sample size, indicating the consistency of the estimates. The numerical optimization of the likelihood function to obtain CML estimates is done using the function ‘constrOptim’ in R software. In our setup, constraints are imposed on all the parameters. In particular, the upper limit of \(\delta\) is 1 while its lower limit is a negative quantity that is a function of \(\alpha\). These constraints are considered while numerical optimization using ‘constrOptim’. In all, CML estimates correspond to minimum MSEs compared to the YW and CLS estimates.

Model-based forecasting

Time series models are usually constructed with a view to forecasting future values of time series data. In the case of INAR\((1)_{s}ZMPL\) process, we handle the problem of forecasting a future observation \(W_{n+h}, h \in \mathbb {N}\) using the information \(\mathscr {F}_n\) available upto time n. From Eq. (12), we deduce using induction and properties of the NBT that

where \(\overset{\text {d}}{=}\) implies equality in distribution, \(q=\lceil \frac{h}{s}\rceil\) is the integer part of \(\frac{h}{s}\). That is \(\lceil y \rceil =\text {min}\left[ n \in \mathbb {N}^+ \big \vert y \le n \right]\). We round off this section with the following proposition.

Proposition 3.4

Consider the INAR(1)\(_s\) ZMPL process in Eq. (12). Then

-

(a)

The h-step conditional expectation is

$$\begin{aligned} \text {E}\left( W_{n+h} \big \vert \mathscr {F}_n\right) = \lambda ^q \left( W_{n+h-qs}-\text {E}\left( W_t\right) \right) +\mu _\nu . \end{aligned}$$ -

(b)

The h-step conditional variance is

$$\begin{aligned} \text {Var}\left( W_{n+h} \big \vert \mathscr {F}_n\right)&= (1+\lambda )\frac{\lambda ^{q}\left( 1-\lambda ^{q}\right) }{1-\lambda }W_{n+h-qs} \\ {}&\quad +\frac{\lambda (1+\lambda )}{1-\lambda } \left( \frac{1-\lambda ^{2(q-1)}}{1-\lambda ^{2}}-\frac{\lambda ^{(q-1)}\left( 1-\lambda ^{(q-1)}\right) }{1-\lambda }\right) \mu _\nu \\ {}&\quad +\left( \frac{1-\lambda ^{2q}}{1-\lambda ^2}\right) \sigma _\nu ^2. \end{aligned}$$ -

(c)

\(\lim \nolimits _{q \rightarrow \infty } \text {E}\left( W_{n+h} \big \vert \mathscr {F}_n\right) = \text {E}\left( W_t \right) .\)

-

(d)

\(\lim \nolimits _{q \rightarrow \infty } \text {Var}\left( W_{n+h} \big \vert \mathscr {F}_n\right) = \text {Var}\left( W_t \right) .\)

It is not difficult to prove Proposition 3.4 using knowledge of the proofs of Propositions 3.1 to 3.2 and Eq. (30). Therefore, we omit the proof of Proposition 3.4. The h-step ahead forecast can be written as

Model comparison with seasonal ARIMA

In this section, we have compared the forecast performance of the proposed model and its counterpart \(SARIMA(0,0,0)(1,0,0)_{s}\) model. Here, we have simulated 1000 series each of size 500 from the INAR(1)\(_s\) ZMPL model with various parameter combinations given in Table 3 and the seasonal period \(s=52\). For each series, the first 495 observations are used for the estimation of the parameters and last five observations are used to check the forecast accuracy. We have used three forecast accuracy measures to assess the forecast performance of the model. These measures include prediction root mean squared error (PRMSE), prediction mean absolute error (PMAE) and percentage of true prediction (PTP). Notably,

where \(\hat{X}_{(t+i)}^{(k)}=\widehat{mean}(X_{t+i}|X_{t-k+i})\) is the k-step ahead conditional mean of the fitted process,

where \(\hat{X}_{(t+i)}^{(k)}=\widehat{median}(X_{t+i}|X_{t-k+i})\) is the k-step ahead conditional median of the fitted process, and

where \(I(\cdot )\) denotes the indicator function. Here, \(X_{(t+i)}\) is the actual ith observation at time point \((t + i)\) and \(\hat{X}_{(t+i)}^{(k)}\) is the k-step ahead forecast value at the same time point.

We have obtained the conditional mean of the process as traditional forecast while the median and mode from one-step ahead forecast distribution serve as coherent forecasts. For the computation of PMAE in the SARIMA model, we have used mean forecast while in the INAR(1)\(_s\) ZMPL model, we have used the median of the one-step ahead forecast distribution. In the SARIMA model, we can have only mean as the forecast, for computation of percentage of true prediction (PTP) based on mean we have considered rounded mean for both models. We have computed median and mode from the forecast distribution for the computation of PTP-Med and PTP-Mode. In INAR literature, the median and mode of the forecast distribution are called the coherent forecasts.

From Table 3, it can be seen that in terms of PRMSE both models perform equally well, as their forecasts are the same. However, the PMAE for the SARIMA model is higher than that of the PMAE for the proposed model, showing the better performance of the proposed model than the SARIMA model. This may be because median is used in the proposed model and mean is used in the SARIMA model for the computation of the PMAE. But when it comes to the coherent forecast performance, the proposed model outperforms the SARIMA model, which can be seen from the PTP for median and mode. When data are overdispersed, as it is the case in the top panel in the table, the coherent forecast performs five times better than the traditional forecast. Both models perform equally well when the data are underdispersed.

Application of INAR(1)\(_s\)ZMPL model

We have analyzed weekly Influenza data from the Breisgau- Hochschwarzwald county of Baden–Württemberg state, Germany for the years 2001 to 2008 (https://survstat.rki.de). The data have mean 0.4735 and variance 2.5969. Clearly the data are overdispersed. From the relative frequency plot in Fig. 2, we can see that the number of zeros in the data is excessively high. Hence, this series can be modeled using the proposed zero-modified model. The seasonality of the data can be seen from the ACF and PACF plot in Fig. 3, as it is characterized by the sinusoidal autocorrelation pattern. Also, the data are seasonal because the corresponding ACF plot has significant peaks at multiples of 54. From Fig. 4, it can be seen that the AIC and BIC values are small for the period \(s=54\). Hence the seasonal period of the series (\(s=54\)) is confirmed.

From Table 4, it can be seen that the proposed model is the best model for the data. We have obtained point forecast using the INAR(1)\(_s\)ZMPL model for the given data. The data has 416 observations. We have used 411 observations (training data) for estimating the parameters and last five observations are predicted using the fitted model. We have used the conditional ‘h’ step ahead mean as the forecast function. The parameter estimates (CML) based on training data are \(\hat{\lambda }=0.2497\), \(\hat{\alpha }=0.5289\), \(\hat{\delta }=0.8619\). The mean forecast \(\hat{X}_{t+h}\) and the rounded mean forecast [\(\hat{X}_{t+h}\)] are given in the Table 5. From this table it can be seen that the model predicts the future observations with good accuracy.

Conclusion

In this paper, we have introduced a seasonal, nonnegative, integer-valued autoregressive model, with multiple features, using the negative binomial thinning operator and zero-modified Poisson-Lindley distributed innovations. General seasonal INAR model of order P has been discussed in the paper and it has been illustrated in detail for order one. This model, denoted by INAR(1)\(_s\)ZMPL model, is the first of its kind for modeling zero-deflated or zero-inflated seasonal time series of counts. Some theoretical results are determined for the model. Specifically, means, variances and autocorrelation function are obtained. In estimating the parameters of the model, the Yule-Walker, conditional least squares and conditional maximum likelihood approaches are given due consideration. The simulation results obtained in this study demonstrate the superiority of the conditional maximum likelihood method over the other two point estimation methods of estimating the model parameters. A real-life application of the model was investigated by analyzing a zero-inflated overdispersed seasonal count time series. The model fit was compared to the fits of three competing models. In the final analysis, the proposed model outperforms the other models.

Data availability

The datasets used and/or analysed during the current study can be provided by the corresponding author upon reasonable request.

References

Tamerius, J. et al. Global influenza seasonality: Reconciling patterns across temperate and tropical regions. Environ. Health Perspect. 119, 439–445 (2011).

Al-Osh, M. A. & Alzaid, A. A. First-order integer-valued autoregressive (INAR (1)) process. J. Time Ser. Anal. 8, 261–275 (1987).

McKenzie, E. Some simple models for discrete variate time series 1. J. Am. Water Resour. Assoc. 21, 645–650 (1985).

Altun, E. A new one-parameter discrete distribution with associated regression and integer-valued autoregressive models. Math. Slov. 70, 979–994 (2020).

Mohammadpour, M., Bakouch, H. S. & Shirozhan, M. Poisson–Lindley INAR(1) model with applications. Braz. J. Probab. Stat. 32, 262–280 (2018).

Liu, Z. & Zhu, F. A new extension of thinning-based integer-valued autoregressive models for count data. Entropy 23, 62 (2020).

Ristić, M. M., Bakouch, H. S. & Nastić, A. S. A new geometric first-order integer-valued autoregressive (NGINAR (1)) process. J. Stat. Plan. Inference 139, 2218–2226 (2009).

Bourguignon, M., Rodrigues, J. & Santos-Neto, M. Extended Poisson INAR (1) processes with equidispersion, underdispersion and overdispersion. J. Appl. Stat. 46, 101–118 (2019).

Bourguignon, M. & Weiß, C. H. An INAR (1) process for modeling count time series with equidispersion, underdispersion and overdispersion. TEST 26, 847–868 (2017).

Eliwa, M. S., Altun, E., El-Dawoody, M. & El-Morshedy, M. A new three-parameter discrete distribution with associated INAR (1) process and applications. IEEE Access 8, 91150–91162 (2020).

Barreto-Souza, W. Zero-modified geometric INAR (1) process for modelling count time series with deflation or inflation of zeros. J. Time Ser. Anal. 36, 839–852 (2015).

Sharafi, M., Sajjadnia, Z. & Zamani, A. A first-order integer-valued autoregressive process with zero-modified Poisson–Lindley distributed innovations. Commun. Stat. Simul. Comput. 20, 1–18 (2020).

Awale, M., Kashikar, A. & Ramanathan, T. Modeling seasonal epidemic data using integer autoregressive model based on binomial thinning. Model. Assist. Stat. Appl. 15, 1–17 (2020).

Arwaekaji, M., Sillabutra, J., Viwatwongkasem, C. & Soontornpipit, P. Forecasting influenza incidence in public health region 8 Udonthani, Thailand by SARIMA model. Curr. Appl. Sci. Technol. 20, 10–55003 (2022).

Zhao, Z. et al. Study on the prediction effect of a combined model of SARIMA and LSTM based on SSA for influenza in Shanxi province, China. BMC Infect. Dis. 23, 71 (2023).

Riaz, M. et al. Epidemiological forecasting models using ARIMA, SARIMA, and Holt-Winter multiplicative approach for Pakistan. J. Environ. Public Health 20, 23 (2023).

Quddus, M. A. Time series count data models: An empirical application to traffic accidents. Accid. Anal. Prev. 40, 1732–1741 (2008).

Homburg, A., Weiß, C. H., Alwan, L. C., Frahm, G. & Göb, R. A performance analysis of prediction intervals for count time series. J. Forecast. 40, 603–625 (2021).

Kong, J. & Lund, R. Seasonal count time series. J. Time Ser. Anal. 44, 93–124 (2023).

Bourguignon, M., LP Vasconcellos, K., Reisen, V. A. & Ispány, M. A Poisson INAR (1) process with a seasonal structure. J. Stat. Comput. Simul. 86, 373–387 (2016).

Okereke, E. W., Gideon, S. N. & Omekara, C. O. A seasonal INAR (1) process with geometric innovation for over dispersed count time series. Int. J. Stat. Reliab. Eng. 6, 82–100 (2020).

Tian, S., Wang, D. & Cui, S. A seasonal geometric INAR process based on negative binomial thinning operator. Stat. Pap. 61, 2561–2581 (2020).

Jin-Guan, D. & Yuan, L. The integer-valued autoregressive (INAR (p)) model. J. Time Ser. Anal. 12, 129–142 (1991).

Merabet, F. & Zeghdoud, H. On modelling seasonal ARIMA series: Comparison, application and forecast (number of injured in road accidents in northeast algeria). Wseas Trans. Syst. Control 15, 235–246 (2020).

Klimko, L. A. & Nelson, P. I. On conditional least squares estimation for stochastic processes. Ann. Stat. 20, 629–642 (1978).

Xavier, D., Santos-Neto, M., Bourguignon, M. & Tomazella, V. Zero-modified Poisson-Lindley distribution with applications in zero-inflated and zero-deflated count data. arXiv:1712.04088 (arXiv preprint) (2017).

Acknowledgements

The work was supported by the Deanship of Scientific Research, Vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Saudi Arabia [Grant No. 3290].

Keywords: Seasonal INAR time series, Forecasting, Statistical model, Estimation, Simulation.

Author information

Authors and Affiliations

Contributions

Conceptualization, H.S.B. and E.W.O; methodology, E.W.O., F.E.A. and H.S.B.; software, M.A.; formal analysis, E.W.O., M.A., F.E.A. and H.S.B.; investigation, E.W.O., M.A., F.E.A. and H.A.; writing—original draft preparation, E.W.O. and M.A.; writing—review and editing, F.E.A. and H.A.; funding acquisition, F.E.A. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Almuhayfith, F.E., Okereke, E.W., Awale, M. et al. Some developments on seasonal INAR processes with application to influenza data. Sci Rep 13, 22037 (2023). https://doi.org/10.1038/s41598-023-48805-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-48805-y

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.