Abstract

A medical check-up during driving enables the early detection of diseases. Heartbeat irregularities indicate possible cardiovascular diseases, which can be detected with continuous health monitoring. Therefore, we develop a redundant sensor system based on electrocardiography (ECG) and photoplethysmography (PPG) sensors attached to the steering wheel and a red, green, and blue (RGB) camera behind the steering wheel. For the video, we integrate the face recognition engine SeetaFace to detect landmarks of face segments continuously. Based on the green channel, we derive colour changes and, subsequently, the heartbeat. We record the ECG, PPG, video, and reference ECG with body electrodes of 19 volunteers during different driving scenarios, each lasting 15 min: city, highway, and countryside. We combine early, signal-based late, and sensor-based late fusion with a hybrid convolutional neural network (CNN) and integrated majority voting to deliver the final heartbeats that we compare to the reference ECG. Based on the measured and the reference heartbeat positions, the usable time was 51.75%, 58.62%, and 55.96% for the driving scenarios city, highway, and countryside, respectively, with the hybrid algorithm and combination of ECG and PPG. In conclusion, the findings suggest that approximately half the driving time can be utilised for in-vehicle heartbeat monitoring.

Introduction

According to the World Health Organization, cardiovascular diseases cause 32% of global deaths, which are 17.9 million deaths per year1. Stroke and heart attacks are responsible for 85% of these deaths1. Atrial fibrillation is a risk factor for both stroke2 and heart failure3, and often yields an abnormally fast and irregular heartbeat4. Continuous monitoring of heartbeats enables early detection, improves therapeutic outcomes, and decreases the mortality rate5. In Western countries, people spend about 35 min per day driving a vehicle6. This time could be used for a medical check-up – without any additional burden on behaviour change or time – and continuous monitoring can be integrated into our daily life7. So far, commercial in-vehicle systems for health-related monitoring focus on the tiredness tracking of the driver. For instance, they track the eyes and movements of the steering wheel or the pedals, as well as car-to-lane distances8,9,10.

However, it is also possible to monitor individual health in a medical sense7. Several publications have focused on heartbeat detection during driving11,12,13,14. In our previous work, we conducted a review to identify relevant sensors for in-vehicle health monitoring15. In 2010, Vavrinsky et al. integrated one-lead electrocardiogram (ECG), galvanic skin response, and temperature sensors into each side of the steering wheel12. In the same year, Lazaro et al. applied radar from the seat backrest for heart and respiratory rate detection13. In 2011, Walter et al. integrated capacitive ECG and ballistocardiography (BCG) into the seat belt14. In 2012, Gomez-Clapers & Casanella attached electrodes for ECG to the steering wheel to derive the heart rate16. In 2015, Kuo et al. introduced image-based photoplethysmography (iPPG) for drivers’ heart rate detection17. More recently, we developed ECG electrodes integrated into the steering wheel18. We replaced the previous copper electrodes19 with printed and flexible electrodes to improve the SNR. To prevent any impact on driving behavior, our flexible and thin polyurethane electrodes exactly fit the three-dimensional (3D) shape of the steering wheel18. However, the quality of health monitoring based on a single sensor is insufficient11. Redundant sensor systems have been used in aerospace20 and autonomous driving21. They can guard against the fact that one defective sensor may yield wrong assumptions and could cause severe adverse events.

In a redundant sensor system, a fusion of the sensor data is needed. Münzner et al.22 compared three convolutional neural network (CNN)-based approaches: early, signal-based late, and sensor-based late fusion. The early fusion merges data in the convolutional layer. This layer extracts important signal features. Sensor-based late fusion merges the input data in the dense layer. The dense layer classifies the signal into binary classes. The signal-based late fusion includes two CNNs per signal, which increases the number of features and the computing time. Tejedor et al. reviewed signal fusion in the biomedical domain, in particular, for reliable heart rate detection23. They highlight the CNN-based information fusion from Chandra et al.24. This algorithm can be applied to noisy data. Furthermore, we focused on the development of a redundant sensor system in a driving simulator, which was composed of ECG, PPG, BCG, and iPPG sensors19.

In this work, we use this sensor system together with the data fusion algorithm. Based on our previous results, we select the sensors with the best performance, which are ECG, PPG, and iPPG. We record the data with 19 subjects under real driving conditions. Furthermore, we develop fusion approaches and determine the performance gain on the reliability of heartbeat detection. Altogether, we want to answer the following research questions: (1) Which share of the driving time is usable to detect the heartbeat robustly and accurately in the vehicle? (2) What is the most reliable combination of sensors? (3) How does the heartbeat detection performance vary between different driving scenarios?

Methods

Ground truth

We obtain the ground truth with an ECG sensor (BiosignalPlux Explorer, Plux Wireless Biosignals, Lisbon, Portugal) connected to three adhesive electrodes, which we attach to the usual positions on the chest25. We make a test recording to ensure the electrodes are in the correct position. The R-waves are detected by the simultaneous truth and performance level estimation (STAPLE) algorithm from Kashif et al.26. The STAPLE algorithm includes nine state-of-the-art algorithms: Pan and Tompkins27, Chernenko28, Arzeno et al.29, Manikandan et al.30, Lentini et al.31, Sartor et al.32, Liu et al.33, Arteaga-Falconi et al.34, and Khamis et al.35. STAPLE determines the positions of the R-waves based on a majority vote. We implement and execute our algorithms using a script-based math package (MATLAB version R2021a, The MathWorks, Natick, United States).

Experimental design

We record data from \(N=19\) volunteering subjects driving a vehicle with an automatic gear shift (VW Tiguan 2.0 4M RL, Volkswagen AG, Wolfsburg, Germany). The volunteers from diverse ethnicities differ in gender (female: \(n=6\) and male: \(n=13\)), age (20-67 years), height (164-195 cm), weight (63-120 kg), having a beard (\(n=4\)), and wearing glasses (\(n=8\)). For volunteers with long hair (\(n=7\)), the outer segment of the forehead is covered. We record 15 min for each of the scenarios city, highway, and countryside in Braunschweig (Lower Saxony, Germany).

To ensure comparable recordings, all volunteers drove the same route. The city requires many start and stop maneuvers. The first part of the highway is bumpy. In addition, a construction zone causes traffic jams, and a long tunnel degrades the light for camera recording. The countryside route leads along rural roads and smaller villages and has a railroad crossing.

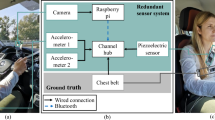

Sensor system

We use steering wheel-based contact ECG and PPG sensors and an RGB camera for iPPG, placed in front of the driver behind the steering wheel, as redundant recording systems. We select an ECG sensor (BiosignalPlux Explorer, Plux Wireless Biosignals, Lisbon, Portugal) and a PPG sensor with two integrated LEDs for the red and infrared spectrum (BiosignalPlux Explorer, Plux Wireless Biosignals, Lisbon, Portugal). We position the PPG sensor on the steering wheel’s right side at the level of the index finger. A channel hub (BiosignalPlux Explorer, Plux Wireless Biosignals, Lisbon, Portugal) connects the ECG and PPG sensors and sends the recorded data via Bluetooth to a single-board computer (Raspberry Pi, Raspberry Pi Foundation, Cambridge, United Kingdom). The sampling rate for ECG and PPG is 500 Hz. The RGB camera (Raspberry Pi Foundation, Cambridge, United Kingdom) has a wired connection to the single-board computer (Fig. 1). It records 10 frames per second (FPS) with 720 by 1280 pixels. For time synchronization, we develop a Python script on the single-board computer with an integrated counter that assigns the same ascending number to each sample at the same point in time. The subjects in Figs. 1 and 2 signed an informed consent and agreed to the publication of the image in an online open-access journal.

Ethics approval

We record all data based on the Helsinki Declaration. The study design was approved by the ethics committee (Internal process number: D_2022-13) of the TU Braunschweig (Lower Saxony, Germany)36. Informed consent was obtained from participants.

Signal pre-processing

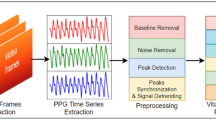

Face recognition and face segmentation

We use the face recognition engine SeetaFace (version SeetaFace237) to detect landmarks on the driver’s face. As a first step, it applies a funnel-structured cascade schema for face detection. Second, it cascades several stacked auto-encoder networks for landmark detection and inherits a modified AlexNet for face composition38. We extract the cheeks as a region of interest (ROI) based on the landmarks. According to Kamshilin et al.39, we extract the green channel and detect color changes caused by the systolic and asystolic blood flow (Fig. 2). We select the right and left cheeks as ROI because they contain more capillaries than the forehead, leading to better results than other regions40,41. However, hair and beard, as well as glasses, may cover the skin in the ROI (Fig. 2). Varying illumination further impacts the signal quality17.

Recording during the driving scenario highway (top) and city (bottom). Left: Landmark detection with SeetaFace. Middle: Applied face segments. Right: Face segments for cheek. Figure 2 is generated with MATLAB (MATLAB version R2021a, The MathWorks, Natick, United States).
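The green-channel extraction can be illustrated with a minimal sketch. It assumes that the video frames are available as an array in RGB channel order and that a boolean cheek mask per frame has already been derived from the landmarks. The function name `green_channel_trace` and the mask representation are hypothetical and not part of SeetaFace.

```python
import numpy as np

def green_channel_trace(frames, roi_masks):
    """Average the green channel inside the ROI of every video frame.

    frames:    array of shape (n_frames, height, width, 3), RGB order (assumed).
    roi_masks: boolean array of shape (n_frames, height, width); True marks
               cheek pixels. How the masks are built from landmarks is not
               shown here.
    Returns one mean green value per frame (NaN where the ROI is empty,
    e.g. when no face was detected in that frame).
    """
    frames = np.asarray(frames, dtype=float)
    roi_masks = np.asarray(roi_masks, dtype=bool)
    green = frames[..., 1]                  # channel index 1 is green in RGB
    trace = np.full(len(frames), np.nan)
    for i, (g, mask) in enumerate(zip(green, roi_masks)):
        if mask.any():
            trace[i] = g[mask].mean()
    return trace
```

The resulting trace has one value per frame, i.e. 10 values per second at the camera frame rate used here, and serves as the raw iPPG signal before pre-processing.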

Implementation for statistical analysis

We generated the ground truth with the STAPLE algorithm from Kashif et al.26 that is implemented in MATLAB (version R2021a, The MathWorks, Natick, United States) and uses the MATLAB Signal Processing Toolbox. We extracted the facial landmarks with SeetaFace237, which uses packages from OpenCV (version 4.5) and CMake (version 3.16). For the fusion approach, we used the libraries TensorFlow (version 2.3.1) and Keras (version 2.4.3) with Python (version 3.8.5) as the programming language. The evaluation is made with NumPy (version 1.19.0).

Input data

The input contains the ECG (steering wheel) and PPG signals, as well as the green channel of the RGB video. According to Chandra et al. 24, we (i) up-sampled the data to 500 Hz, (ii) applied median filtering of size 100 samples, and (iii) normalized the amplitudes to the range of \([-1,1]\). The signal quality changes over time due to the movements of the driver and vehicle, which are caused by normal driving activities (Figs. 3, 4). This leads to baseline wander, noise, and artifacts (Fig. 4).
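The three pre-processing steps can be sketched in NumPy as follows. Several details are assumptions of this sketch and are not stated above: linear interpolation for up-sampling, edge padding for the median filter, min-max scaling for the normalization, and the optional `subtract_baseline` switch (whether the filter output is used directly or subtracted as a baseline estimate is left open here). All function names are hypothetical.

```python
import numpy as np

def upsample(signal, fs_in, fs_out=500.0):
    """Resample to fs_out by linear interpolation (method is an assumption)."""
    signal = np.asarray(signal, dtype=float)
    t_in = np.arange(len(signal)) / fs_in
    duration = (len(signal) - 1) / fs_in
    n_out = int(round(duration * fs_out)) + 1
    t_out = np.arange(n_out) / fs_out
    return np.interp(t_out, t_in, signal)

def median_filter(signal, size=100):
    """Sliding median of `size` samples; edge padding keeps the length."""
    signal = np.asarray(signal, dtype=float)
    left = size // 2
    right = size - 1 - left
    padded = np.pad(signal, (left, right), mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, size)
    return np.median(windows, axis=1)

def normalize(signal):
    """Scale amplitudes linearly to [-1, 1] (min-max scaling, assumed)."""
    signal = np.asarray(signal, dtype=float)
    lo, hi = signal.min(), signal.max()
    if hi == lo:
        return np.zeros_like(signal)
    return 2.0 * (signal - lo) / (hi - lo) - 1.0

def preprocess(signal, fs_in, subtract_baseline=False):
    """Up-sample to 500 Hz, median-filter with 100 samples, normalize."""
    x = upsample(signal, fs_in)
    med = median_filter(x, size=100)
    x = x - med if subtract_baseline else med
    return normalize(x)
```

For example, `preprocess(green_trace, fs_in=10)` would bring a 10 FPS green-channel trace to the same 500 Hz grid as the ECG and PPG. For long recordings, the windowed median above is memory-hungry, and a dedicated routine such as `scipy.ndimage.median_filter` may be preferable.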

However, the recordings of the reference ECG have a high signal-to-noise ratio (SNR) and are used as the ground truth. The arbitrary unit (au) represents the unit for PPG and iPPG (Figs. 3, 4). As suggested by Chandra et al.24, we split the signal into overlapping snippets of 501 samples. The overlap is 490 and 500 samples for generating training and testing snippets, respectively. To create more training data, the overlap for the training data is reduced. We use leave-one-subject-out cross-validation: one subject is used as a test set and the remaining subjects as a training set; this is repeated 19 times, and the results are averaged.
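The snippet generation and the cross-validation split can be sketched as follows. An overlap of 490 samples corresponds to a stride of 11 samples between consecutive snippets, and an overlap of 500 samples to a stride of 1. The helper names `make_snippets` and `leave_one_subject_out` are hypothetical.

```python
import numpy as np

def make_snippets(signal, length=501, overlap=490):
    """Cut a 1-D signal into overlapping snippets of `length` samples."""
    signal = np.asarray(signal)
    stride = length - overlap
    if stride < 1:
        raise ValueError("overlap must be smaller than the snippet length")
    windows = np.lib.stride_tricks.sliding_window_view(signal, length)
    return windows[::stride]

def leave_one_subject_out(subject_ids):
    """Yield (train_ids, test_id) pairs, one fold per subject."""
    subject_ids = list(subject_ids)
    for test_id in subject_ids:
        train_ids = [s for s in subject_ids if s != test_id]
        yield train_ids, test_id

# Example with a dummy signal of 1000 samples
signal = np.arange(1000)
train_snippets = make_snippets(signal, overlap=490)   # stride 11
test_snippets = make_snippets(signal, overlap=500)    # stride 1
folds = list(leave_one_subject_out(range(1, 20)))     # 19 subjects, 19 folds
```

Splitting by subject rather than by snippet keeps all data of the test subject out of training, so heavily overlapping snippets of one person never appear on both sides of the split.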

Signal fusion

Our hybrid signal fusion approach has a CNN structure and determines the signal segments containing a heartbeat19. The model parameters are matched for driving scenarios. It includes (i) early fusion, (ii) signal-based late fusion, and (iii) sensor-based late fusion. The voting function finally determines the heartbeats’ positions19.

The input layer is the first layer, and the inputs are ECG, PPG, and iPPG (Figs. 3 and 4). The convolutional layer extracts features from the signals and generates a feature map. In line with Chandra et al.24, we use two filters with a kernel size of 20 for each sensor signal in the convolutional layer. To prevent over-fitting, the dropout layer has a dropout rate of 0.5. The pooling layer reduces unnecessary information with the function MaxPooling1D and a pool size of 2. The dense layer classifies the snippets into two classes: no heartbeat (class 0) and heartbeat (class 1). We choose the sigmoid as an activation function and an Adam optimizer with a learning rate of 0.001. The output layer generates a vector \(\hat{Y}\) of multiple labels that are either 0 or 1. The voting function is independent of the CNN and processes \(\hat{Y}\) for the final decision based on a majority vote. Here, j denotes the number of a snippet, and s stands for the signal. The output vector is19:

We compute training and testing on the high-performance computer Phoenix at TU Braunschweig42.
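To make the layer sequence concrete, the following NumPy sketch traces one 501-sample snippet of a single signal through convolution (two filters, kernel size 20), dropout (rate 0.5), max pooling (pool size 2), and a dense sigmoid unit. It is not the trained model: the weights are random, and the 'valid' padding, the linear convolution output, the single output unit, and the 0.5 decision threshold are assumptions of this sketch.

```python
import numpy as np

def init_params(snippet_len=501, n_filters=2, kernel_size=20, pool_size=2, seed=0):
    """Random (untrained) weights, only to make the shapes concrete."""
    rng = np.random.default_rng(seed)
    n_conv = snippet_len - kernel_size + 1        # 'valid' convolution: 482 steps
    n_flat = (n_conv // pool_size) * n_filters    # 241 pooled steps x 2 filters
    return {
        "kernels": rng.normal(scale=0.1, size=(n_filters, kernel_size)),
        "conv_bias": np.zeros(n_filters),
        "dense_w": rng.normal(scale=0.1, size=n_flat),
        "dense_b": 0.0,
    }

def conv1d_valid(x, kernels, bias):
    """x: (n_samples,), kernels: (n_filters, k) -> feature map (n_out, n_filters)."""
    windows = np.lib.stride_tricks.sliding_window_view(x, kernels.shape[1])
    return windows @ kernels.T + bias

def max_pool1d(features, pool_size=2):
    """Keep the maximum of every `pool_size` consecutive time steps."""
    n = (features.shape[0] // pool_size) * pool_size
    return features[:n].reshape(-1, pool_size, features.shape[1]).max(axis=1)

def forward(snippet, params, training=False, drop_rate=0.5, rng=None):
    """Return the heartbeat probability for one snippet."""
    h = conv1d_valid(np.asarray(snippet, dtype=float),
                     params["kernels"], params["conv_bias"])
    if training:                                  # dropout is inactive at test time
        if rng is None:
            rng = np.random.default_rng()
        keep = rng.random(h.shape) >= drop_rate
        h = h * keep / (1.0 - drop_rate)          # inverted dropout scaling
    h = max_pool1d(h, pool_size=2)
    z = h.reshape(-1) @ params["dense_w"] + params["dense_b"]
    return float(1.0 / (1.0 + np.exp(-z)))        # sigmoid activation

params = init_params()
snippet = np.sin(np.linspace(0, 6 * np.pi, 501))
probability = forward(snippet, params)
label = int(probability >= 0.5)                   # class 1 = heartbeat (threshold assumed)
```

In Keras, the same sequence would typically be built from the `Conv1D`, `Dropout`, `MaxPooling1D`, and `Dense` layers; the NumPy version is used here only to show how the snippet shape changes from 501 samples to a 482 x 2 feature map, a 241 x 2 pooled map, and a single label.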

Early fusion and sensor-based late fusion each have a single integrated CNN (Fig. 5). In signal-based late fusion, both CNNs receive identical input data for each signal. In contrast to sensor-based late fusion, it extracts many more parameters.

In the early fusion approach, the convolutional layer extracts features from input signals, such as the R-wave in the ECG or the systolic peak in the PPG. These features are subsequently aggregated within a feature map, which is further processed in a dropout layer. In contrast, signal-based late fusion yields a larger number of extracted parameters than sensor-based late fusion. The visualisation shows the hybrid fusion with the input of three signals. The input layer contains one or two signals for the performance comparison. The voting function operates independently of the CNN and evaluates the output vector \(\hat{Y}\) to make the final decision regarding the presence of heartbeats in a given segment. This decision is based on a majority vote, where if more than two sensor fusion approaches yield a label \(\hat{Y} = 1\) it is inferred that the segment contains a heartbeat (class 1), else not (class 0)19.
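The voting step can be sketched independently of the CNN. The function below takes the binary labels of the individual fusion approaches for each snippet and returns the fused label. The required number of votes is exposed as the parameter `min_votes`; the default of a strict majority (at least two of three approaches) is an assumption of this sketch, and the function name is hypothetical.

```python
import numpy as np

def majority_vote(labels, min_votes=None):
    """Fuse binary labels of several fusion approaches per snippet.

    labels:    array of shape (n_approaches, n_snippets) with entries 0 or 1.
    min_votes: votes needed for class 1; defaults to a strict majority.
    """
    labels = np.asarray(labels, dtype=int)
    n_approaches = labels.shape[0]
    if min_votes is None:
        min_votes = n_approaches // 2 + 1
    return (labels.sum(axis=0) >= min_votes).astype(int)

# Rows: early, signal-based late, sensor-based late fusion (dummy labels)
y_hat = np.array([
    [1, 0, 1, 0],
    [1, 0, 0, 1],
    [0, 0, 1, 1],
])
fused = majority_vote(y_hat)                  # array([1, 0, 1, 1])
unanimous = majority_vote(y_hat, min_votes=3) # array([0, 0, 0, 0])
```

A single approach that mislabels a noisy snippet is overruled as long as the other approaches agree, which is the robustness property that motivates voting over averaging for binary decisions.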

Evaluation

For evaluation, we follow the approach of Chandra et al.24. We chose this approach because it reflects the relation between false-positive (FP), false-negative (FN), and true-positive (TP) detections.

Accordingly, TP, FN, and FP determine whether an R-wave was correctly detected, missed, or a spurious spike was mistaken for an R-wave. Due to the high number of true negatives, specificity is not used. We calculate an overall performance:

that uses the positive predictive value

\[ PPV = \frac{TP}{TP + FP} \]

and the sensitivity

\[ Se = \frac{TP}{TP + FN} \]

We compare P between the different signal pairs and also the fusion of all three signals in the three scenarios city, highway, and countryside.
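As an illustration, the metrics can be computed from the counts of TP, FP, and FN as sketched below. The positive predictive value and the sensitivity follow their standard definitions. Combining them into the overall performance P as their arithmetic mean, expressed in percent, is an assumption of this sketch and should be checked against Chandra et al.24. The function names are hypothetical.

```python
def positive_predictive_value(tp, fp):
    """Share of detected heartbeats that are true R-waves."""
    return tp / (tp + fp) if (tp + fp) > 0 else 0.0

def sensitivity(tp, fn):
    """Share of true R-waves that were detected."""
    return tp / (tp + fn) if (tp + fn) > 0 else 0.0

def overall_performance(tp, fp, fn):
    """ASSUMPTION: P is the mean of PPV and sensitivity, in percent."""
    ppv = positive_predictive_value(tp, fp)
    se = sensitivity(tp, fn)
    return 100.0 * 0.5 * (ppv + se)

# Dummy counts: 80 correct detections, 20 spurious, 20 missed
p = overall_performance(tp=80, fp=20, fn=20)
```

True negatives do not enter any of the three functions, which matches the decision above not to report specificity.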

Results

Performance of a single signal

The PPG signal achieves the highest performance (\(P_{max} = 57.25\%\)) with early fusion. This means that for 57.25% of the recording time, the heartbeat position matches the ground truth (Table 1). In total, PPG delivers the highest score three times for a specific signal fusion approach. Mean\(_{P}\) denotes the mean performance for a signal fusion approach. The signal-based late approach has the highest Mean\(_{P}\) (Mean\(_{P}\) = 50.54%).

Performance of two signals

The early fusion of PPG+iPPG has the highest performance \(P=55.79\%\) (Table 2). Across all scenarios, the table shows that the ECG+PPG combination achieves the highest performance two times. In comparison, the sensor pairs ECG+iPPG and PPG+iPPG each achieve the highest score only once. The hybrid algorithm has the highest Mean\(_{P}\) (Mean\(_{P}\) = 52.61%).

Performance of three signals

The sensor-based late fusion approach achieves the highest performance twice with the scenarios city (\(P=57.23\%\)) and highway (\(P=57.50\%\)) (Table 3). The signal-based late fusion has the highest performance for the countryside (\(P=47.16\%\)). The sensor-based late fusion approach has the second-ranked performance in the countryside (\(P=42.00\%\)). Therefore, this approach yields the best performance. With the sensor-based late fusion approach, the correct heartbeat is detected on average for 52.24% of the driving time. Early fusion yields the lowest score twice: city (\(P=47.90\%\)) and highway (\(P=48.52\%\)). The scenario highway has the highest score (\(P=57.50\%\)), followed by city (\(P=57.23\%\)) and countryside (\(P=47.16\%\)).

Best performance with ECG and PPG and hybrid fusion

The distribution plot shows the performance differences during the different driving scenarios (Fig. 6). The hybrid fusion approach delivers, on average, the best performance with the ECG and PPG sensor (\(Mean_{P}=55.44\%\)) (Table 4). This is 3.2 percentage points higher than the sensor-based late fusion with ECG+PPG+iPPG. The sensor-based late fusion and early fusion have the lowest performance (Table 4).
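As a quick check of these averages, assuming that Mean\(_{P}\) is the unweighted mean over the three scenarios city, highway, and countryside, the per-scenario values reported in this paper give for the hybrid fusion with ECG+PPG

\[ Mean_{P} = \frac{51.75\% + 58.62\% + 55.96\%}{3} \approx 55.44\% \]

and for the sensor-based late fusion with ECG+PPG+iPPG

\[ Mean_{P} = \frac{57.23\% + 57.50\% + 42.00\%}{3} \approx 52.24\% \]

so that the difference is \(55.44\% - 52.24\% = 3.20\) percentage points.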

Discussion

A big challenge of continuous in-vehicle health monitoring is the poor signal quality due to the movements of the car and the driver14, (partly abrupt) changes in the illumination44, characteristics such as skin color45, hair and beard cuts, and physiological parameters: humans with a lower R-wave amplitude yield lower performance. Our sensor system is composed of ECG, PPG, and iPPG on and behind the steering wheel. For ECG, we printed polyurethane electrodes exactly in the 3D shape of the steering wheel18. Comparing the sensor pairs identifies the best-performing sensor, and the selection of sensor pairs increases the resource efficiency.

The novelty of our work is the data collection and analysis of biomedical data under real driving conditions. Moreover, we use a multimodal fusion method, which is already applied to other use cases, such as wearable IoT sensors46. Previous publications for in-vehicle health monitoring mostly focus on the analysis of a single sensor11,14,17. For instance, in 2018, Leicht et al. evaluated the capacitive ECG (cECG) in a driving simulator47. Although Walter et al. measured the cECG and BCG during driving, they reported the technical implementation rather than the fusion of signals or the portion of usable driving time14. In our previous work, we compared the R-peak positions between the ground truth and the steering wheel ECG and determined the correct heartbeat in 45.62% of the driving time18. The hybrid signal fusion of three signals outperforms these previous results for heartbeat detection under real driving conditions.

Furthermore, we integrated face recognition based on 81 landmarks, which changes with every movement, to detect the face segments. The performance of the iPPG is worse than the performance of the ECG and PPG sensors. This leads to a better performance of two instead of three sensors. However, a redundant system still improves performance and enables continuous monitoring during driving.

The current sensor system can still be improved by additional PPG sensors placed around the steering wheel, which would increase the chance of recording a signal with a good SNR. The recording of the iPPG signal is often disturbed by strong sunshine. It is also possible to optimize the structure of the signal fusion model. Further research could investigate how the performance changes with adjusted model parameters, for instance, with an increased number of convolutional layers48. Furthermore, a lower or varying learning rate of the Adam optimizer could improve the results49. Another option is a different activation function, e.g., the rectified linear unit (ReLU) or leaky ReLU function50.

Majority voting and averaging are two common techniques used in signal fusion, and their effectiveness depends on the specific context and characteristics of the signals being fused. We selected majority voting due to its robustness against outliers and noisy data points, which are less likely to influence the final decision as the majority overrules them. Majority voting is especially valuable in situations where discrete or categorical decisions are required, such as in classification tasks or binary decision-making.

The redundant system also has some limitations. We excluded the BCG sensor because the pre-test in the car showed that the SNR was low. For the pre-test, we placed the sensor at the backrest to measure ballistic forces generated by the heart. Additionally, more test subjects with different skin colors and melanin levels are needed because these factors have an impact on video-based heartbeat detection51. Another important extension is the detection of respiratory rate and temperature, which would enable the detection of a wider variety of diseases. For future work, we will integrate movement detection using depth cameras, as suggested by Fu et al.52. Furthermore, the redundant system can be extended by phonocardiography, which records acoustic signals during a cardiac cycle. Such sensors may be integrated into the seat belt to rest on top of the heart.

To detect heartbeat arrhythmia, it is important to analyse a longer signal segment with a high SNR. The signal visualisation in Fig. 3 shows that the recorded signal contains segments of such length. However, the share of longer segments with a high SNR will be lower than the approximately 55% of usable driving time. To identify such longer segments, an artifact index53 is needed, which includes CAN-BUS data54, such as the acceleration of the steering wheel and the car.

We will publish a research paper focusing on respiratory rate detection during driving, an essential vital sign for monitoring the driver’s health and detecting respiratory diseases. The study involves 15 healthy subjects and follows the same experimental design as this paper55. We present our findings in two separate papers due to the complexity of the sensor system, pre-processing, and CNN-based training parameters, which exceed a single journal paper’s scope.

Conclusion

In summary, we developed a redundant sensor system and signal fusion approaches to detect heartbeats while driving. Regarding the research question of the usable driving time for heartbeat detection, the hybrid algorithm with the sensor pair ECG and PPG delivers, on average, the best results: highway (\(P=58.62\%\)), countryside (\(P=55.96\%\)), and city (\(P=51.75\%\)).

As a take-home message, we can potentially use over half of our drive time for continuous monitoring with the ECG and PPG sensors, with a low variance between the different driving scenarios. This provides the possibility to detect symptoms of cardiovascular diseases at an earlier stage in comparison to conventional methods. With the publicly available data, it is possible to reproduce the results and apply further algorithms to detect the correct heartbeat position.

Data availability

Due to the inability to derive the identity of the subject from the ECG, PPG, and iPPG signals, we published the data anonymously in the TU Braunschweig library under CC BY 4.0 (link: https://doi.org/10.24355/dbbs.084-202207150657-0, accessed on November 16th, 2023). The dataset contains reference ECG, steering wheel ECG, and PPG (.csv format), RGB channels for face segment cheek (.mat format), and subject information (subject ID, age, height, weight, gender, and known diseases). All test persons signed a consent form and agreed on the publication of these anonymous data.

Change history

14 March 2024

A Correction to this paper has been published: https://doi.org/10.1038/s41598-024-56883-9

References

Cardiovascular diseases [Internet]. Genève: World Health Organization [cited 2023 Jul 9]; [about screen 1]. Available from: https://www.euro.who.int/en/health-topics/noncommunicable-diseases/cardiovascular-diseases/cardiovascular-diseases2.

Wolf, P., Abbott, R. & Kannel, W. Atrial fibrillation as an independent risk factor for stroke: The Framingham study. Stroke 22(8), 983–988. https://doi.org/10.1161/01.STR.22.8.983 (1991).

Middlekauff, H., Stevenson, W. & Stevenson, L. Prognostic significance of atrial fibrillation in advanced heart failure. A study of 390 patients. Circulation 84(1), 40–4. https://doi.org/10.1161/01.CIR.84.1.40 (1991).

Nattel, S. New ideas about atrial fibrillation 50 years on. Nature 415(6868), 219–226. https://doi.org/10.1038/415219a (2002).

Steinhubl, S. et al. Effect of a home-based wearable continuous ECG monitoring patch on detection of undiagnosed atrial fibrillation: The mstops randomized clinical trial. JAMA 320(2), 146–155. https://doi.org/10.1001/jama.2018.8102 (2018).

National Travel Survey: England 2018 [Internet]. Department of Transportation [cited 2022 Nov]; [about page 14]. Available from: https://www.gov.uk/government/statistics/national-travel-survey-2018.

Deserno, T. Transforming smart vehicles and smart homes into private diagnostic spaces. Conf. Proc. APIT 2020, 165–171. https://doi.org/10.1145/3379310.3379325 (2020).

Driver Alert System [Internet]. Wolfsburg: Volkswagen [cited 2023 June 20]; [about screen 1]. Available from: https://www.volkswagen.co.uk/en/technology/car-safety/driver-alert-system.html.

Driver Drowsiness Detection [Internet]. Stuttgart: Bosch [cited 2023 June 20]; [about screen 1]. Available from: https://www.bosch-mobility-solutions.com/en/solutions/assistance-systems/driver-drowsiness-detection/.

Sahayadhas, A., Sundaraj, K. & Murugappan, M. Detecting driver drowsiness based on sensors: a review. Sensors (Basel) 12(12), 16937–53. https://doi.org/10.3390/s121216937 (2012).

Leonhardt, S., Leicht, L. & Teichmann, D. Unobtrusive vital sign monitoring in automotive environments—A review. Sensors (Basel) 18(9), 3080. https://doi.org/10.3390/s18093080 (2018).

Vavrinsky, E., Tvarozek, V., Stopjakova, V., Solarikova, P. & Brezina, I. Analysis of vital signs monitoring using an IR-UWB radar. Conf. Proc. ASDAM 2010, 227–30. https://doi.org/10.1109/ASDAM.2010.5667021 (2010).

Lazaro, A., Girbau, D. & Villarino, R. Analysis of vital signs monitoring using an IR-UWB radar. PIER 100, 265–84. https://doi.org/10.2528/PIER09120302 (2010).

Walter, M., Eilebrecht, B., Wartzek, T. & Leonhardt, S. The smart car seat: Personalized monitoring of vital signs in automotive applications. J. Pers. Ubiquit Comput. 15(7), 707–15. https://doi.org/10.1007/s00779-010-0350-4 (2011).

Wang, J., Warnecke, J., Haghi, M. & Deserno, T. Unobtrusive health monitoring in private spaces: the smart vehicle. Sensors (Basel) 20(9), 2442. https://doi.org/10.3390/s20092442 (2020).

Gomez-Clapers, J. & Casanella, R. A fast and easy-to-use ECG acquisition and heart rate monitoring system using a wireless steering wheel. IEEE Sens. 12(3), 610–6. https://doi.org/10.1109/JSEN.2011.2118201 (2012).

Kuo, J., Koppel, S., Charlton, J. & Rudin-Brown, C. Evaluation of a video-based measure of driver heart rate. J. Saf. Res. 54, 55–9. https://doi.org/10.1016/j.jsr.2015.06.009 (2015).

Warnecke, J. et al. Printed and flexible ECG electrodes attached to the steering wheel for continuous health monitoring during driving. Sensors (Basel) 22(11), 4198. https://doi.org/10.3390/s22114198 (2022).

Warnecke, J. et al. Sensor fusion for robust heartbeat detection during driving. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2021, 447–450. https://doi.org/10.1109/EMBC46164.2021.9630935 (2021).

Harris, C., Bailey, A. & Dodd, T. Multi-sensor data fusion in defence and aerospace. J. Aeronaut. 102(1015), 229–244. https://doi.org/10.1017/S0001924000065271 (1998).

Yeong, D., Velasco-Hernandez, G., Barry, J. & Walsh, J. Sensor and sensor fusion technology in autonomous vehicles: A review. Sensors (Basel) 21(6), 2140. https://doi.org/10.3390/s21062140 (2021).

Münzner, S. et al. CNN-based sensor fusion techniques for multimodal human activity recognition. Conf. Proc. ACM Intern. Symp. Wearable Comput. 2017, 16–158. https://doi.org/10.1145/3123021.3123046 (2017).

Tejedor, J., García, C., Márquez, D., Raya, R. & Otero, A. Multiple physiological signals fusion techniques for improving heartbeat detection: A review. Sensors (Basel) 19(21), 4708. https://doi.org/10.3390/s19214708 (2019).

Chandra, B., Sastry, C. & Jana, S. Robust heartbeat detection from multimodal data via CNN-based generalizable information fusion. IEEE Trans. Biomed. Eng. 66(3), 710–717. https://doi.org/10.1109/TBME.2018.2854899 (2018).

Warnecke, J. et al. Registered report protocol: Developing an artifact index for capacitive electrocardiography signals acquired with an armchair. PLoS ONE 16(7), e0254780. https://doi.org/10.1371/journal.pone.0254780 (2021).

Kashif, M., Jonas, S. & Deserno, T. Deterioration of R-wave detection in pathology and noise: A comprehensive analysis using simultaneous. IEEE Trans. Biomed. Eng. 64(9), 2163–75. https://doi.org/10.1109/TBME.2016.2633277 (2018).

Pan, J. & Tompkins, W. A real-time QRS detection algorithm. IEEE Trans. Biomed. Eng. 32(3), 230–6. https://doi.org/10.1109/TBME.1985.325532 (1985).

ECG processing. R-peaks detection [Internet] Librow: Kyiv [cited 2022 Dec 20]; [about 1 screen]. Available: http://www.librow.com/articles/article-13.

Arzeno, N., Deng, Z. & Poon, C. Analysis of first-derivative based QRS detection algorithms. IEEE Trans. Biomed. Eng. 55(2), 478–84. https://doi.org/10.1109/TBME.2007.912658 (2008).

Manikandan, M. & Soman, K. A novel method for detecting R-peaks in electrocardiogram (ECG) signal. Biomed. Signal Proc. Control 7(2), 478–84. https://doi.org/10.1016/j.bspc.2011.03.004 (2008).

Lentini, M. Long ECG and pattern extraction. Conf. Proc. Appl. Math. Inform. (2013).

Sartor, M. et al. Nicht-lineare Zeitnormierung im Langzeit-EKG. Conf. Proc. BVM. https://doi.org/10.1007/978-3-642-54111-7_56 (2014).

Liu, X. et al. A novel R-peak detection method combining energy and wavelet transform in electrocardiogram signal. Biomed. Eng. Appl. Basis Commun. 26, 1450007. https://doi.org/10.4015/S1016237214500070 (2014).

Arteaga-Falconi, J., Al Osman, H. & El Saddik, A. R-peak detection algorithm based on differentiation. Conf. Proc. Symp. Intell. Signal Process. https://doi.org/10.1109/WISP.2015.7139157 (2015).

Khamis, H. et al. QRS detection algorithm for telehealth electrocardiogram recordings. IEEE Trans. Bio Med. Eng. 63, 1377–1388. https://doi.org/10.1109/TBME.2016.2549060 (2016).

Ethikkommission [Internet]. Braunschweig: TU Braunschweig [cited 2022 Sep 20]; [about screen 1]. Available from: https://www.tu-braunschweig.de/struktur/organe/kommissionen/ethik.

Seetaface2 [Internet]. Beijing: Seetatech [cited 2023 Sep 25]; [about screen 1]. Available from: https://github.com/seetafaceengine/SeetaFace2/blob/master/README_en.md.

Wu, S. et al. Funnel-structured cascade for multi-view face detection with alignment awareness. J. Neurocomput. 221, 138–145. https://doi.org/10.48550/arXiv.1609.07304 (2017).

Kamshilin, A. et al. A new look at the essence of the imaging photoplethysmography. Sci. Rep. 5, 10494. https://doi.org/10.1038/srep10494 (2015).

Zheng, K., Ci, K., Cui, J., Kong, J. & Zhou, J. Non-contact heart rate detection when face information is missing during online learning. Sensors https://doi.org/10.3390/s20247021 (2020).

Cheng, C.-H., Wong, K.-L., Chin, J.-W., Chan, T.-T. & So, R. Deep learning methods for remote heart rate measurement: A review and future research agenda. Sensors (Basel) https://doi.org/10.3390/s21186296 (2021).

TU Braunschweig, Hardware [Internet]. [cited 2023 Oct 20]; [about 1 screen]. Available from: https://www.tu-braunschweig.de/it/hpc.

Allen, M., Poggiali, D., Whitaker, K., Marshall, T. & Kievit, R. Raincloud plots: A multi-platform tool for robust data visualization. Wellcome Open Res. 63(4), 1–4. https://doi.org/10.12688/wellcomeopenres.15191.2 (2019).

Selvaraju, V. et al. Continuous monitoring of vital signs using cameras: A systematic review. Sensors (Basel) 22(11), 4097. https://doi.org/10.3390/s22114097 (2022).

Sunaga, T. et al. Measurement of the electrical properties of human skin and the variation among subjects with certain skin conditions. Phys. Med. Biol. 47, N11–N15. https://doi.org/10.1088/0031-9155/47/1/402 (2001).

Arlene, J., Redmond, S., Cardiff, B. & Deepu, J. A multimodal data fusion technique for heartbeat detection in wearable IoT sensors. IEEE Internet Things 9, 2071–2082. https://doi.org/10.1109/JIOT.2021.3093112 (2022).

Leicht, L. et al. Capacitive ECG monitoring in cardiac patients during simulated driving. IEEE Trans. Biomed. Eng. 66(3), 749–758. https://doi.org/10.1109/TBME.2018.2855661 (2018).

Li, F. et al. Feature extraction and classification of heart sound using 1d convolutional neural networks. J. Adv. Signal Process. 59, 1–11. https://doi.org/10.1186/s13634-019-0651-3 (2019).

Smith, L. N. Cyclical learning rates for training neural networks. Conf. Proc. Appl. Comput. Vis. 2017, 464–72. https://doi.org/10.1109/WACV.2017.58 (2017).

Isaac, B., Kinjo, H., Nakazono, K. & Oshiro, N. Suitable activity function of neural networks for data enlargement. Conf. Proc. Control Autom. Syst. 2018, 392–397 (2018).

Nowara, E., McDuff, D. & Veeraraghavan, A. A meta-analysis of the impact of skin type and gender on non-contact photoplethysmography measurements. Conf. Proc. IEEE Comput. Vis. Pattern Recognit. 2020, 1148–55. https://doi.org/10.1109/CVPRW50498.2020.00150 (2020).

Fu, C., Mertz, C. & Dolan, J. Lidar and monocular camera fusion: On-road depth completion for autonomous driving. Conf. Proc. IEEE Intell. Trans. Syst. (ITSC) 2019, 273–278. https://doi.org/10.1109/ITSC.2019.8917201 (2019).

Satija, U., Ramkumar, B. & Manikandan, M. A review of signal processing techniques for electrocardiogram signal quality assessment. IEEE Rev. Biomed. Eng. 11, 36–52. https://doi.org/10.1109/RBME.2018.2810957 (2018).

Deserno, T., Warnecke, J., Flormann, M. & Henze, R. Combining vehicle BUS and vital sign sensors for continuous health monitoring during driving: Concept and first results. Conf. Proc. Electron. Comput. Commun. Mech. Eng. 2022, 1–6. https://doi.org/10.1109/ICECCME55909.2022.9987896 (2022).

Warnecke, J. M., Lasenby, J. & Deserno, T. M. Robust in-vehicle respiratory rate detection using multimodal signal fusion. Sci. Rep. 13, 20435. https://doi.org/10.1038/s41598-023-47504-y (2023).

Acknowledgements

Our sincere thanks go out to all the volunteers who contributed to the data acquisition process.

Funding

Open Access funding enabled and organized by Projekt DEAL. This work is funded by the Lower Saxony Ministry of Science and Culture under Grant Number ZN3491 within the Lower Saxony “Vorab” of the Volkswagen Foundation and Center for Digital Innovations.

Author information

Contributions

Conceptualization, T.M.D. and J.M.W.; methodology, J.L., T.M.D. and J.M.W.; validation, J.L., T.M.D. and J.M.W.; formal analysis, T.M.D. and J.M.W.; investigation, T.M.D., J.L. and J.M.W.; resources, J.L. and T.M.D.; data curation, T.M.D.; writing (original draft preparation), J.M.W.; writing (review and editing), J.L. and T.M.D.; visualization, J.M.W.; supervision, T.M.D.; project administration, T.M.D.; funding acquisition, T.M.D. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The original version of this Article contained an error in Reference 55, which was incorrectly given as: Warnecke, J. M., Lasenby, J. & Deserno, T. M. Robust in-vehicle respiratory rate detection using multimodal signal fusion https://doi.org/10.21203/rs.3.rs-2828298/v1 (2023). The correct reference is: Warnecke, J. M., Lasenby, J. & Deserno, T. M. Robust in-vehicle respiratory rate detection using multimodal signal fusion. Sci. Rep. 13, 20435. https://doi.org/10.1038/s41598-023-47504-y (2023).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Warnecke, J.M., Lasenby, J. & Deserno, T.M. Robust in-vehicle heartbeat detection using multimodal signal fusion. Sci Rep 13, 20864 (2023). https://doi.org/10.1038/s41598-023-47484-z

DOI: https://doi.org/10.1038/s41598-023-47484-z
