Abstract

Cloud Computing model provides on demand delivery of seamless services to customers around the world yet single point of failures occurs in cloud model due to improper assignment of tasks to precise virtual machines which leads to increase in rate of failures which effects SLA based trust parameters (Availability, success rate, turnaround efficiency) upon which impacts trust on cloud provider. In this paper, we proposed a task scheduling algorithm which captures priorities of all tasks, virtual resources from task manager which comes onto cloud application console are fed to task scheduler which takes scheduling decisions based on hybridization of both Harris hawk optimization and ML based reinforcement algorithms to enhance the scheduling process. Task scheduling in this research performed in two phases i.e. Task selection and task mapping phases. In task selection phase, all incoming priorities of tasks, VMs are captured and generates schedules using Harris hawks optimization. In task mapping phase, generated schedules are optimized using a DQN model which is based on deep reinforcement learning. In this research, we used multi cloud environment to tackle availability of VMs if there is an increase in upcoming tasks dynamically and migrate tasks to one cloud to another to mitigate migration time. Extensive simulations are conducted in Cloudsim and workload generated by fabricated datasets and realtime synthetic workloads from NASA, HPC2N are used to check efficacy of our proposed scheduler (FTTHDRL). It compared against existing task schedulers i.e. MOABCQ, RATS-HM, AINN-BPSO approaches and our proposed FTTHDRL outperforms existing mechanisms by minimizing rate of failures, resource cost, improved SLA based trust parameters.

Similar content being viewed by others

Introduction

Cloud Computing is one of the rapid growing paradigm in IT industry renders seamless services to its users on demandly based on requirement of user’s application. Applications to be deployed in cloud paradigm are of different types and they require different computing, storage and network capacities. All these resources can be provisioned by cloud provider by using different types of services virtually as infrastructure, platform and as software to users as and when they required with different pricing models1. Every cloud user may not require same cloud deployment model for their application. Therefore, a tailor made customized models are available the cloud users and they are public, private, hybrid deployment models2. Rendering of these services to all users with different customized deployment models for different pricing models is a challenge for cloud vendor may have different customers around the world and to schedule and allocate all the requests coming from various heterogeneous resources and to allocate different types of requests to various virtual resources to compute in an effective manner without human intervention is a challenging scenario. Therefore, task scheduling plays a crucial role in cloud computing paradigm3. Task scheduling is defined as allocating all the incoming tasks to virtual resources resided in datacentres considered in this paradigm. It is a challenging problem in this paradigm as variety of requests from heterogeneous resources comes to cloud application console where the scheduler need to look up all those requests and it should assign it to an appropriate suitable VM which can process this request. Many of existing authors proposed various task scheduling algorithms such as MOABCQ4, RATSHM5, AINN-BPSO6 which are modelled based on metaheuristic approaches but still these approaches are not focused on failure rate, resource cost, SLA based trust parameters. Ineffective scheduling of tasks leads to increase in delay of processing tasks thereby increase in makespan, resource costs, execution time, energy consumption. It effects various parameters and thereby it effects cloud provider’s quality of service and thereby it violates SLA therefore trust on cloud provider also be decreased. Many Task scheduling algorithms developed by various authors using nature inspired, metaheuristic approaches as task scheduling in this paradigm is highly dynamic and it is of type NP-hard problem which cannot give solution in specific polynomial amount of time and scheduling in cloud is also the same type it is difficult to schedule variety of heterogeneous dynamic tasks on to VMs as it is difficult to predict number of tasks to come onto cloud console. When a task is not properly scheduled onto a VM by considering parameters i.e. run time processing capacity, length of task then task execution process may get delayed which impacts makespan and in some other cases task may fails due to improper assignment of VM there by rate of failures will be increased. When rate of failures increased there is a chance of impact on violation of SLA which impacts both Quality of service and trust on the cloud provider. Trust on the cloud provider depends mainly on success rate of VM, Availability of VM, turnaround efficiency of tasks. For improvement of availability of resource and to minimize resource cost we used multi cloud model and migrate tasks based on availability of resources in the corresponding resource where VMs are available by minimizing resource cost. Therefore, in this research, to minimize rate of failures and to increase trust on cloud provider we developed a task scheduler which schedules tasks in two phases i.e. selection of tasks, mapping of tasks on suitable VMs. In initial phase, tasks for scheduling is done based on all priorities of tasks, VMs collected from task manager and fed to scheduler which generates schedules with the help of Harris Hawks optimization and generated schedules are optimized using a reinforcement learning based model i.e. DQN model that minimizes makespan, rate of failures, resource cost and improving SLA based parameters.

Motivation and contributions

Task scheduling in cloud paradigm poses challenges to cloud provider as it is difficult to map tasks with different run time capacities to precise VMs. This is a challenge in cloud paradigm and improper mapping or assigning of tasks to VMs effects the QoS of cloud provider. It directly effects makespan, turnaround efficiency of tasks by delaying task execution on VMs which leads to decay of quality service. In some cases, due to ineffective mapping of tasks by scheduler i.e. if size of task or runtime processing capacity is not matched with the VM capacity then there is a chance of failure occurs in that VM. Therefore, rate of failures can also be an effected parameter for ineffective scheduling. Another parameter to be effected in cloud computing paradigm is Availability of VMs as if a task is assigned to VM and if that resource is not available at that instance of time then it directly effects the task execution by making that task to be failed. Success rate of VM effects QoS of cloud provider as if the task assignment is not done accurately onto a VM then it directly effects quality of service, SLA violations. The above reasons motivated us to take up this research while mapping tasks to accurate VMs by considering priorities of Tasks, VMs using both Harris Hawks for selecting tasks and scheduling them and DQN model to optimize generated schedules while addressing makespan, resource cost, SLA based trust parameters.

Highlights of our manuscript are indicated as below.

-

A Fault tolerant based task scheduling algorithm(FTTHDRL) for multi-cloud environment.

-

This task scheduler modelled using hybrid approach Harris hawk optimization and a DQN model based on deep reinforcement learning.

-

Scheduling of tasks to precised VMs modelled in two phases i.e. task selection and generation of schedules using Harris Hawks optimization. In task mapping phase optimization of generated schedules designed by using DQN model which works based on Deep reinforcement learning.

-

Extensive simulations are conducted on Cloudsim with fabricated and realtime computing worklgs from HPC2N, NASA.

-

Proposed FTTHDRL evaluated against existing approaches i.e. RATS-HM, MOABCQ, AINN-BPSO and evaluated parameters makespan, resource cost, rate of failures, SLA based trust parameters in multi cloud environment.

Rest of the manuscript is organized as indicated below. Section “Existing related works” discusses existing related works, Section “Fault tolerant trust aware task scheduling using Harris Hawks and DRL in multi cloud environment” discusses Proposed architecture of FTTHDRL, Section “Simulation and results” discusses methodology used for our proposed approach i.e. Harris Hawks optimization, DQN model, Section “Conclusion and future works” discusses Simulation and Results. Finally Section 6 discusses Conclusion and Future works.

Existing related works

This section clearly discusses about various existing task schedulers which addresses different parameters and techniques they used to develop schedulers in cloud computing paradigm. In7, authors focused on scheduling tasks effectively by minimizing makespan, execution time on VMs. They improved differential evolution approach to a hybrid level by incrementing scaling factors to enhance exploration, exploitation in the searching process of a solution in search space. All experimentation conducted on Cloudsim. HDE compared against state of art algorithms SMA, EO, GWO, FCFS, RR to check effectiveness of proposed HDE. Results proved that HDE dominant with respect to execution time, makespan. Penalty function which is related to SLA violations in cloud computing plays a major role in this paradigm. Authors in8, formulated adaptive task scheduling mechanism which addressed penalty function as a parameter to adjust scheduling process dynamically as per SLA made by cloud provider. Symbiotic organisms search is improved to enhance scheduling process to adapt to search space as and when more workload generated it schedules workload to VMs. This simulation conducted on Cloudsim with random workload and results proved that penalty related to SLA violation minimized with ABFSOS. Authors in9 formulated a task scheduling mechanism which uses improved version of MVO approach by adjusting average position of solution in scheduling. These simulations are conducted on Cloudsim and evaluated parameters i.e. execution time, throughput, VM processing Power. It evaluated over existing algorithms MVO, mMVO and proposed IMOMVO reveals that it improves above said parameters while compared with existing approaches. Authors in10 developed multi objective task scheduling model addressed the parameters consumption of energy, makespan. A hybrid approach was used to model task scheduler. This model works based on combining Whale optimization, differential evolution techniques for better enhancement of scheduling process in cloud paradigm. Simulations performed extensively in developing this scheduler which takes standard realtime datasets as input to algorithm i.e. curie workloads, HPC2N by varying different number of iterations. Results revealed that h-DEWOA dominates other non-differential approaches by minimizing both energy consumption, makespan. A multi objective scheduling mechanism developed to tackle parallel workloads in cloud environment. This approach aims at makespan, throughput. This mechanism modelled by using hybrid BAT approach to explore near optimal solution in search space. Real time parallel workload given as an input to algorithm and simulations are conducted on Cloudsim. Performance of hybrid BAT evaluated over classical BAT and other metaheuristic approaches. Evaluated results evident that it outperforms existing mechanisms for specified parameters. For the benefit of cloud user and service provider authors in12 proposed a task scheduling strategy which provides Quality of service and improves resource utilization. It was modelled using both IQSSA, QSSGWA algorithms which were inspired based on Quantum computing, Salp swarm algorithms. All simulations are conducted on MATALAB. Both IQSSA, QSSGWA tested against more than 10 benchmark functions to evaluate convergence of proposed approach. It evaluated against existing approaches SSA, GWO algorithms. These approaches shown huge impact over state of art algorithms for above mentioned parameters. Degree of imbalance for tasks is one of the major concern in cloud computing as they rush towards application console. This parameter efficiently tackled by authors in13. Initially they used WOA to explore its ability in local search process while horse optimization is used as global search to fine tune convergence rate. It was compared to baseline mechanisms PSO, GWO, WOA approaches to address makespan, degree of imbalance. In14, authors developed job scheduling mechanism in Fog computing i.e. DOLSSO. It was modelled based on opposition learning social spider optimization combined with a reinforced mechanism which was implemented on Ifogsim. Initially, schedules generation was populated by OLSSO and optimization of those schedules were done by reinforcement strategy used in the approach. Workflows used in simulation are generated randomly in Ifogsim. It was ratified against state of art approaches and results shown that DOLSSO dominates other approaches for utilization of CPU, consumption of energy. Resource cost is also a crucial parameter which effects scheduling impact in cloud computing paradigm. It was discussed by authors in15. They developed a task scheduling mechanism which consists of both cuckoo search and harmony search to tackle scheduling problem in cloud computing. CS acts as local search to explore solution space while HS used as global search to explore solution space. Simulations are conducted on Cloudsim tool. It was compared over CS, HS, CGSA approaches. Finally from generated results of CSHA it proved that resource cost, penalty is minimized over existing approaches. Authors in16 also focused on total execution cost in Fog computing that effects scheduling. They developed a task scheduling mechanism which is based on hyper heuristic approach which tunes convergence of solutions. It evaluated against baseline metaheuristic approaches and entire simulation conducted on Ifogsim. Results shown the effectiveness of HHS on other approaches while minimizing execution cost, latency, execution time. A Job scheduling strategy developed in17 to find a best possible VM to execute tasks which are highly dynamic in IOT applications. This mechanism was developed in two stages. In first stage, all the nodes are clustered together and grouped them together and trained with different levels of utilization. In second stage, SSA combined with DE used to optimize degree of imbalance, throughput, consumption of energy. Power consumption in datacentres effects cloud provider as if proper scheduler is not used to execute tasks, therefore there is an increase in consumption of power in cloud paradigm which effects cloud provider directly but it also effects customer because they need to pay extra pricing for services they consume. Authors in18 developed a scheduler which tackles with power consumption, execution cost, runtime. It was modelled using NSGA-III which considers an adaptive fitness function to adjust and schedule tasks to VMs appropriately. From results, it proved that NSGA-III dominated other approaches in terms of power consumption, cost, Runtime. Resource allocation optimization in cloud paradigm is challenging issue as mapping tasks to VMs is a challenge in cloud paradigm as it is a NP hard problem. For this to happen, authors in19 proposed task scheduler which developed by combining GTO, RSO. This approach initially predicts features in upcoming tasks by extracting them using PCA. These features are fed to HMEERA which allocates tasks to virtual resources by optimizing them using GTO, RSO approaches. It was compared over existing approaches and results shown dominance of HMEERA in view of response time, waiting time. Execution time, Cost are prominent concerns for task scheduling in cloud model. These parameters are addressed and tackled in20 by using hybrid technique HWOA-MBA. It was developed by enhancing RDWOA by tuning mutation of Bee’s algorithm. It was simulated on Cloudsim and ratified against MALO, IWC, BA-ABC. Results shown that proposed HWOA-MBA dominated over state of art algorithms for task completion time, execution time. In21, authors developed a task scheduler which improves makespan in cloud computing paradigm. This was developed by using IPSO which is an improved version of PSO by segregating particles as ordinary, local best particles which converges towards solution fast when compared with classical PSO. IPSO ratified over CEC 2017 benchmark and results shown huge impact over state of art algorithms for makespan, load balancing. Authors in22 proposed an AI based scheduling technique to precisely map tasks to VMs while addressing rate of task completion, energy consumption. It was modelled by extending GGCN by adding a recurrent unit to it. Simulation results of HunterPlus shown it was greatly minimizes consumption of energy by 17%, improves rate of task completion by 10%. Resource cost, makespan are addressed by authors in23 by formulating a task scheduler for cloud paradigm which is a hybridized approach by integrating adaptive weight strategy to ACO algorithm. It was compared over ACO, Min-Min, MTF-BPSO algorithms. Results of HWACO shown improvement in makespan, cost over compared approaches. In24 bio inspired task scheduling paradigm which deals with cost saving of resources. It was designed by sea gull optimization technique which adapts to cloud environment. It compared over CJS, MSDE, FUGE approaches and finally results evident that cost of virtual resources, consumption of energy minimized with SOATS. In25, task scheduling algorithm which optimizes quality of service parameters concerned with cloud computing. It was modelled as a hybridized approach by combining rider optimization with cuckoo search to get adapted to dynamic nature of cloud paradigm. It was implemented on Cloudsim tool. RCOA ratified against COA, Rider algorithm, PSO. RCOA shown huge impact while minimizing makespan. Multi objective scheduling technique developed by authors in26 using QOGSHO by combining QOBL, SHO by designing an adaptive fitness function to evaluate SLA Violation, makespan, resource utilization. This experimentation conducted on a customized cloud environment. Services for mobile computations are restricted as it is difficult to assign resources to applications in mobiles. Therefore, offloading is a technique where intensive computations are migrated and offloaded to cloud servers for computing tasks. This offloading process designed by authors in27 using African wild dog algorithm based on hunting cooperation behaviour of wild dogs. This entire simulation process conducted on Cloudsim. AWDA evaluated over existing approaches. Results of AWDA dominated other state of art algorithms by minimizing cost, delay time, energy. User satisfaction to gain trust over cloud provider is a serious concern in terms of business of cloud provider. Authors in28 hybridized PSO, GA algorithms to handle the concerns related to user satisfaction, processing efficiency. Cloudsim tool is used to conduct simulations. All simulations are conducted with uniform simulation settings for all the other algorithms to which PGSAO is ratified against them. Simulation results shown high impact over against existing state of art approaches by improving user satisfaction. Concurrent tasks are difficult to schedule tasks in VMs resided in Physical nodes. In datacentres minimizing energy consumption is a huge challenge in datacentres as tasks are raised from various resources to cloud application console29. In the first phase, OBL, PSO are integrated with WOA algorithm to enhance performance of algorithm. In second phase, OBL, PSO optimizes exploration and minimizes energy consumption, makespan over existing state of art algorithms. In30, task scheduling algorithm is formulated to address multiple objectives availability, success rate, makespan, turnaround efficiency. ICOATS is proposed quality of service scheduling algorithm which concerned with length of task, priorities. It was simulated on Cloudsim. It ratified over state of art approaches. Results of simulations dominated in terms of above mentioned parameters. Task scheduling algorithm with multiple objectives developed in31 to address makespan, execution cost, utilization of resources in integrated cloud-fog computing model. This approach modelled using IJFA by considering with variations in sizes of tasks, task speed, capacity of VMs in cloud-fog environment. Ifogsim was used as a simulation environment and ratified over state of art approaches to check the efficacy of IJFA.

From the above section “Existing related works” and Table 1 it is clearly observed that earlier authors who formulated task scheduling algorithms addressed parameters execution time, cost, utilization of resources, makespan, consumption of energy. They haven’t addressed SLA based trust parameters in a multi cloud environment with inclusion of task, VM priorities. This approach modelled by using hybridization of Harris hawks Optimization algorithm(HHOA), DQN model which is a reinforcement learning based technique to optimize generated schedules which minimize makespan, resource cost, rate of failures and improves SLA based trust parameters.

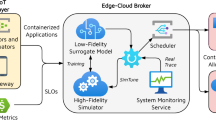

Fault tolerant trust aware task scheduling using Harris Hawks and DRL in multi cloud environment

This section discusses overall system architecture and mathematical modelling used in Fault tolerant trust aware model developed for multi cloud environment modelled by hybridizing Harris Hawks Optimization algorithm(HHOA) and DQN models which is used to check availability for multiple cloud environments to map tasks to corresponding VMs to minimize rate of failures, resource cost in this model. The subsection “FTTHDRL Problem definition and system architecture” discusses FTTHDRL mathematical modelling, problem formulation.

FTTHDRL problem definition and system architecture

In this subsection, we precisely formulated problem definition for FTTHDRL(Fault tolerant trust aware Harris Hawk and Deep reinforcement Learning) based system architecture. Assume that we have \(i\) number of tasks represented as \(\{t{a}_{1},t{a}_{2},t{a}_{3}\dots .,t{a}_{i}\}\), \(j\) number of VMs represented as \(\left\{{v}_{1},{v}_{2},\dots {v}_{j}\right\}\), \(k\) number of physical nodes represented as \(\{p{n}_{1},p{n}_{2},\dots .p{n}_{k}\}\), \(l\) number of datacentres represented as \(\{{d}_{1},{d}_{2},{d}_{3}\dots .,{d}_{l}\}\). Now problem formulation can be done as \(i\) tasks are mapped to \(j\) VMs placed in \(k\) physical nodes which are placed in \(l\) datacentres by considering priorities of both tasks, VMs while tackling all the parameters. Initially, all tasks arises from various resources which have different processing capacities. All these tasks consists of different lengths, runtime capacities. For every task which is coming from user will be submitted to cloud application console which are captured by broker included in the cloud provider module. This broker will keep track of priorities of all tasks. For all the tasks, priorities are calculated based on task length, execution time. This scheduling process considers another priority i.e. VM priority based on electricity cost. All these priorities are to be maintained in a priority queue to be fed to the scheduler module which is integrated with Harris Hawk algorithm and DQN model to tackle parameters rate of failures, resource cost, makespan, SLA based trust parameters. While scheduling each task to a VM based on priorities a task with highest priority should be mapped to a VM with highest priority means that VM resided in a datacentre which run with low electricity cost. If there is no VM suitable for the task available in the datacentre as we are using multi cloud environment it will check for the VM in the another cloud and if it is suitable it migrates tasks to another cloud environment which runs with same priority. In another case, if the task need to be mapped to a VM which scans all the VMs available in multi cloud environment and schedules task to a VM which incurs less resource cost. In the first phase, all priorities captured by task manager and fed to scheduler which generates schedules based on HHA algorithm and then all these generated schedules are optimized using DQN model to tackle above mentioned parameters.

Mathematical modelling of FTTHDRL

This subsection discusses about mathematical modelling of fault tolerant trust aware task scheduler by hybridization of HHA and DRL based DQN model. In the initial phase to generate schedules calculation of priorities for tasks, VMs to be done. In the below equation, present workload on considered VMs in this architecture are calculated using Eq. (1).

where \(loa{d}_{{v}_{j}}\) is present running tasks workload on \(j\) VMs. All these \(j\) VMs are placed in \(k\) physical nodes. Present workload on all physical nodes are calculated using Eq. (2).

where \(loa{d}_{p{n}_{k}}\) is present running tasks on \(k\) physical nodes. For calculation of task priorities processing capacities of VMs to be properly identified and they are represented in Eq. (3).

Processing capacity of all VMs are represented using below Eq. (4).

Priorities of tasks depends on two components i.e. length of task, runtime or processing capacity of a VM. All incoming length of tasks are calculated and they are represented in Eq. (5).

In the step 5, we identified length of all \(i\) tasks considered in our system architecture. Now, Priorities of all incoming tasks are represented by Eq. (6).

In Eq. (6). after calculation of task priorities, we calculated all \(j\) VM priorities to schedule all incoming \(i\) tasks to suitable VMs considered in our architecture. In order to map tasks appropriately, VM priorities based on electricity cost at datacentre location is represented by Eq. (7).

From Eqs. (6) and (7) priorities of tasks, VMs are calculated. These priorities are fed to scheduler in which high prioritized task should map to a high prioritized VM. If high prioritized VM is not available at the current cloud vendor look for the high prioritized VM in the another cloud vendor as we are using multi cloud environment. If high prioritized VMs are not available at both the cloud vendors and then look for a VM which is having next highest priority in any cloud vendor which have less resource cost. Therefore, it is necessary to calculate resource cost in cloud model and it is represented by Eq. (8). It is an important parameter need to be addressed in this model as we said earlier our model is aimed at minimization of resource cost which is an important aspect for both cloud provider and user. It is represented in Eq. (8).

Makespan is one of the important parameter which should be addressed after identifying resource cost in this model. For any task scheduler in the cloud environment it is important to calculate makespan as it impacts quality of service of cloud provider and other parameters which effects SLA violations and related to trust on the cloud provider. It is represented by Eq. (9).

In this proposed algorithm, another important objective to address is to minimize rate of failures thus by improving fault tolerance using this scheduler in multi cloud environment. It is represented by Eq. (10).

where \(mtbf\) represents mean time between failures, \(mttr\) represents mean time to repair or restore a node from a failure to restore process. We formulated fault tolerance for all \(i\) tasks in this model. Our next objective is to relate fault tolerance with SLA based trust parameters which effects Quality of service of cloud provider. SLA based trust parameters are of three types. They are Availability, Success rate, Turnaround efficiency of VMs. Thus, we calculated availability of \(j\) VMs and it is represented using Eq. (11).

Another trust based parameter need to be calculated is success rate of VMs. It is represented using Eq. (12). It is defined as rate of successful tasks of \(t{a}_{i}\) to submitted number of tasks of \(t{a}_{i}\). It is represented by Eq. (12).

Turnaround efficiency of a VM is another parameter which effects trust of cloud provider. It is represented by using Eq. (13).

After evaluation of all these SLA based trust parameters, trust on cloud provider is represented by Eq. (14).

where \({X}_{1}, {X}_{2}, {X}_{3}\) are coefficient weights which are helpful to evaluate trust on Cloud provider and this value \(\epsilon (\mathrm{0,1})\) and it is captured from37. It is calculated using co-variance mechanism The weights in the above equations are considered as \({X}_{1}=0.5,{X}_{2}=0.2, {X}_{3}=0.1\) are considered from37.

FTTHDRL fitness function for schedules generation using Harris Hawk optimization

This subsection clearly presents fitness function for generation of schedules using Harris Hawk optimization. Earlier we said we are using a hybrid approach, thus initially we generate schedules by evaluating fitness function using Eq. (15). and there after we use reward function in DQN model to optimize schedules in the second level while improving above said parameters.

where \({\upbeta }_{1}+{\upbeta }_{2}+{\upbeta }_{3}+{\upbeta }_{4}+{\upbeta }_{5}+{\upbeta }_{6}=1\). From Eq. (15). as we discussed earlier in the subsection "FTTHDRL fitness function for schedules generation using Harris Hawk optimization", we evaluate fitness function and then check the values of generated parameters using Harris Hawks algorithm.

Methodology used in FTTHDRL

This subsection clearly presents the methodology used in proposed FTTHDRL. This algorithm modelled by using hybridizing Harris Hawk algorithm and DQN model which is based on reinforcement learning. The below Section “Harris Hawk Optimization” clearly discusses about different phases of Harris Hawk optimization algorithm.

Harris Hawk optimization

In this section, initial methodology of FTTHDRL i.e. Harris hawk optimization from38 discussed. It works based on cooperative hunting behaviour of Hawks for prey. In the initial stage, it identifies location of the prey based on whether it is alone or in the group. Position of prey represented using Eq. (16).

Equation (17) represents average position of Hawk.

Now, we evaluate Prey energy and is represented using Eq. (18).

From Eq. (18), \(ENER\) represents escaping energy of prey, \(ENE{R}_{0}\) represents initial escaping energy of prey. It ranges from -1 to 1. When energy of prey is decreasing then it is easy for hawk bird to trace prey and exploit. Hawk birds exploit prey when it moves from exploration to exploitation phase but it depends on probability of escaping of prey from hawk, energy required for prey to escape from hawk bird. Prey hunting by hawk bird totally depends on probability of escaping from hawk i.e. if \(prob<0.5\) then prey can be escaped or if \(prob\ge 0.5\) then prey can be exploited by hawk. Exploitation according to 38 mentioned in two phases i.e. softly encircled around the prey by hawks if \(prob\ge 0.5 \&\& \left|ENER\right|\ge 0.5\). It is represented here in the eqns. 19, 20.

Hardly encircled by Hawk birds around the prey if \(prob\ge 0.5 \&\& \left|ENER\right|\ge 0.5\). It is represented in Eq. (21).

There is an another encircling mechanism for prey by using incremental steps by observing the previous movements of prey. It is known as soft encircling mechanism with incrementing their steps up to the prey’s location and it is represented using Eq. (22).

In the above encircling mechanism, if the steps of hawk bird is not incremental then hawk encircle prey suddenly by attacking it and represented as Eq. (23) (Fig. 1).

Soft incremental encircling with incremental steps are represented by Eq. (26).

\(X,{Z}_{0}\) calculated using Eqs. (22, 23). Hard encircling with incremental steps are represented by Eq. (27).

In above equations \({Z}_{0}, X\) are calculated in Eqs. (22,27) respectively.

\({Z}^{m}\left(T\right)\) is calculated from Eq. (17).

Reinforcement Learning based DQN model

This subsection clearly discusses about DQN(Deep Q- network) model which is a reinforcement based learning strategy used as a methodology in our research. This DQN model basically works on Q-learning model. This Q-learning39 works based on two tuples in Q-table. They are action space, state space. This is a reinforcement based strategy and therefore it doesn’t need any prior knowledge to generate output. For every iteration of model, this DQN model check for each state with respect to state space and then how it takes action against that corresponding state. A Q-learning function is to be represented using Eq. (29).

For every iteration reinforcement agent looks for Q-learning table keeping in mind that for which state which action to be generated evaluating from Reward function. The outcome of that reward function generates either positive or negative reward based on the input supplied at state space and action space tuples in Q-table but as it is a reinforcement learning based agent it learns from the previous action and improves the generated output accordingly. In Eq. (29). \(\Delta\) represents rate of learning for the model, \(\omega\) represents discount factor. \(stat{e}_{t{a}_{i}}\) represents state space of considered tasks in the model, \(Ac{t}_{t{a}_{i}}\) represents action space of considered tasks in model, \(stat{e}_{{\left(ta+1\right)}_{i}}\) represents state space of next iteration of considered tasks in the model, \(Ac{t}_{ta+1}\) represents action space of next iteration of considered tasks in the model. In this research, while training the agent we used 100 neurons are added as hidden layers in DQN model. Scheduling time for agent to generate a decision is kept as 10 Ms. Replay memory which is used to store states is represented as \(rm\). Iterations runs for different number of times and all these values are stored in replay memory and iterations ran as batches. Agent learning time represented as \(agen{t}_{time}\).Reward function for this model is represented using Eq. (30).

From the above equation, we evaluate rewards for each of the parameter whether they have improved or not for every iteration we ran in the model. Tables 2 and 3 display the notations and simulation using FTTHDRL system architecture.

Proposed FTTHDRL task scheduler in multi cloud environment

The above Fig. 2. shows the flow of our proposed FTTHDRL scheduler which is modelled by hybridization of Harris Hawk algorithm, DQN model which is based on reinforcement learning. Initially, hawk population generated randomly. After that calculation of priorities of tasks, VMs are using by eqns.6, 7. In next step, fitness function calculation using Eq. (15). Algorithm evaluates problem space in two stages by checking a condition \(\left|ENER\right|\ge 1\) if it is true then it is in exploitation stage or it is in exploration stage. After this in exploitation if \(prob\ge 0.5 \&\& \left|ENER\right|\ge 0.5\) is true then it takes soft encircling on prey otherwise it takes hard encircling. In another step, if \(prob\le 0.5 \&\& \left|ENER\right|\ge 0.5\) is true it will go into a stage i.e. Incremental soft encircling otherwise it will be in incremental hard circling. After this step, Assess the parameters and input them to DQN model to generate schedules and evaluate parameters using reward function using Eq. (30) for optimizing parameters. In the next step, assess the SLA based trust parameters. If trust is increased update trust value to existing trust value of cloud provider otherwise trust will be decreased and this process will be continued until all iterations completed. Fig. 1 indicates the physical proposed FTTHDRL system architecture.

Simulation and results

This section discusses about extensive simulations carried out on Cloudsim tool40 for proposed FTTHDRL (Fault tolerant trust based task scheduler using Harris Hawk and deep reinforcement learning). Proposed FTTHDRL simulation performed by giving input parallel worklogs of HPC2N41, NASA42. Both of these parallel worklogs consists of lengthy, medium, small worklogs. In this research, initially while evaluating and for simulating the algorithm, we fabricated the datasets manually with different statistical distributions which are uniform distributed consists of all tasks are equally distributed. Normal distribution which consists of tasks more medium number of tasks, less number of large, small number of tasks. Left skewed distribution which consists of more large, medium tasks, less number of small tasks. Right skewed presents less number of large, medium, more number of small tasks. Thus, proposed FTTHDRL evaluated in two phases by fabricating workload manually and using real time workload from HPC2N41, NASA42. In this work we represented Uniform, Normal, Left, right distributions as U01, N02, L03, R04, H05, NA06 respectively. The below Section “Configuration settings used in FTTHDRL for simulation” presents configuration settings of simulation which are used in simulation.

Configuration settings used in FTTHDRL for simulation

This Section “Configuration settings used in FTTHDRL for simulation” discusses simulation settings used in proposed FTTHDRL and we captured standard simulation settings from 43. We have installed Cloudsim tool40 in our environment. It was installed in MAC operating system, 64 GB RAM, 8-core CPU with M1 chip resided in it.

Evaluation of makespan for FTTHDRL

In this Section “Evaluation of makespan for FTTHDRL”, we carefully evaluated makespan of our proposed FTTHDRL by giving input workload from various fabricated workloads from U01, N02, L03, R04 and real time parallel worklogs from H05, NA06. Proposed FTTHDRL compared over existing RATS-HM, MOABCQ, AINN-BPSO approaches. Table 4 represents generated makespan of 100, 500, 1000 tasks. Figures 3, 4, 5, 6, 7, 8 represents makespan of U01, N02, L03, R04, H05, NA06 respectively. After observing results generated from makespan FTTHDRL improves makespan greatly over state of art algorithms.

Evaluation of rate of failures for FTTHDRL

In this Section “Evaluation of rate of failures for FTTHDRL”, we evaluated Rate of failures of our proposed FTTHDRL by giving input workload from various fabricated workloads from U01, N02, L03, R04 and real time parallel worklogs from H05, NA06. Proposed FTTHDRL compared over existing RATS-HM, MOABCQ, AINN-BPSO approaches. Table 5 represents Rate of failures for 100, 500, 1000 tasks. Figures 9, 10, 11, 12, 13, 14 represents rate of failures of U01, N02, L03, R04, H05, NA06 respectively. After observing results generated rate of failures for FTTHDRL minimizes rate of failures greatly over state of art algorithms.

Evaluation of availability for FTTHDRL

In this Section “Evaluation of availability for FTTHDRL”, we evaluated Availability of VMs of our proposed FTTHDRL by giving input workload from various fabricated workloads from U01, N02, L03, R04 and real time parallel worklogs from H05, NA06. Proposed FTTHDRL compared over existing RATS-HM, MOABCQ, AINN-BPSO approaches. Table 6 represents availability of VMs for 100, 500, 1000 tasks. Figures 15, 16, 17, 18, 19, 20 represents availability of VMs for U01, N02, L03, R04, H05, NA06 respectively. After observing results generated availability for FTTHDRL improves availability greatly over state of art algorithms.

Evaluation of success rate for FTTHDRL

In this Section “Evaluation of success rate for FTTHDRL”, we evaluated Success rate of VMs of our proposed FTTHDRL by giving input workload from various fabricated workloads from U01, N02, L03, R04 and real time parallel worklogs from H05, NA06. Proposed FTTHDRL compared over existing RATS-HM, MOABCQ, AINN-BPSO approaches. Table 7 represents Success rate of VMs for 100, 500, 1000 tasks. Figures 21, 22, 23, 24, 25, 26 represents success rate of VMs for U01, N02, L03, R04, H05, NA06 respectively. After observing results generated Success rate for FTTHDRL improves availability greatly over state of art algorithms.

Evaluation of Turnaround efficiency for FTTHDRL

In this Section “Evaluation of Turnaround efficiency for FTTHDRL”, we evaluated Turnaround efficiency of VMs of our proposed FTTHDRL by giving input workload from various fabricated workloads from U01, N02, L03, R04 and real time parallel worklogs from H05, NA06. Proposed FTTHDRL compared over existing RATS-HM, MOABCQ, AINN-BPSO approaches. Table 8 represents Turnaround efficiency for 100, 500, 1000 tasks. Figures 27, 28, 29, 30, 31, 32 represents Turnaround efficiency for U01, N02, L03, R04, H05, NA06 respectively. After observing results generated Turnaround efficiency for FTTHDRL improves Turnaround efficiency greatly over state of art algorithms.

Evaluation of resource cost for FTTHDRL

In this Section “Evaluation of resource cost for FTTHDRL”, we evaluated Resource cost for our proposed FTTHDRL by giving input workload from various fabricated workloads from U01, N02, L03, R04 and real time parallel worklogs from H05, NA06. Proposed FTTHDRL compared over existing RATS-HM, MOABCQ, AINN-BPSO approaches. Table 9 represents Resource cost for 100, 500, 1000 tasks. Figures 33, 34, 35, 36, 37, 38 represents Resource cost for U01, N02, L03, R04, H05, NA06 respectively. After observing results generated Resource cost for FTTHDRL minimizes Resource cost greatly over state of art algorithms.

Simulation analysis and discussion of results

Section “Simulation analysis and discussion of results” discusses generated result analysis and discussion about how they have improved over existing approaches. Initially, we fabricated different datasets indicated as U01, N02, L03, R04, H05, NA06. Generated results for proposed FTTHDRL evaluated over state of art approaches RATS-HM, MOABCQ, AINN-BPSO to check efficacy of our proposed algorithm. The below Table 10 represents makespan improvement for FTTHDRL, Table 11 represents improvement of rate of failures for FTTHDRL, Table 12 represents improvement of availability of VMs for FTTHDRL, Table 13 represents improvement of success rate of VMs for FTTHDRL, Table 14 represents improvement of turnaround efficiency of VMs for FTTHDRL, Table 15 represents minimization of resource cost for FTTHDRL. From these results, we can clearly observe that our proposed FTTHDRL dominates all state of art approaches for above specified parameters.

Conclusion and future works

Task scheduling is a crucial aspect in cloud computing paradigm as tasks arised from various resources and coming to cloud console need different processing capacities of virtual resources. In order to match task capacities with virtual resources an effective scheduler needed for cloud provider. Ineffective task scheduler leads to failures of tasks on VMs which doesn’t match task capacities to VM effectively. Thus, in this research to minimized failures of tasks and to match tasks appropriately to VMs we proposed a Fault tolerant trust aware task scheduler using Harris Hawk and Deep reinforcement based approach (FTTHDRL) in multi cloud environment. Initially we captured task, VM priorities to carefully map tasks to appropriate VMs. These priorities are fed to scheduler which is integrated with Harris Hawk optimization and DQN model which is a reinforcement learning approach which is a hybridized methodology used in our model. It generates schedules initially using Harris hawk algorithm and these generated schedules are optimized by DQN model to optimize parameters. Simulations are conducted on Cloudsim. For evaluating FTTHDRL, we used fabricated workload indicated as U01,N02, L03,R04. After this, we used realtime worklogs H05, NA06 used in simulation to evaluate FTTHDRL. Proposed FTTHDRL is evaluated over state of art approaches RATS-HM, AINN-BPSO, MOABCQ. From the observed results, FTTHDRL dominates existing algorithms by minimizing makespan, resource cost, rate of failures while improving trust based parameters. Shortcomings observed in our proposed research are it is not able to predict upcoming tasks thus, in future, we integrate a prediction module in the scheduler to predict tasks by using model to effectively schedule tasks in cloud paradigm.

Data availability

Researchers Supporting Project number (RSPD2023R576), King Saud University, Riyadh, Saudi Arabia.

Change history

22 February 2024

The original online version of this Article was revised: The original version of this Article contained an error in the Acknowledgements section, where the project number was incorrect.

References

Mangalampalli, S. et al. Cloud computing and virtualization, in Convergence of Cloud with AI for Big Data Analytics: Foundations and Innovation (13–40, 2023).

Hsu, P.-F., Ray, S. & Li-Hsieh, Y.-Y. Examining cloud computing adoption intention, pricing mechanism, and deployment model. Int. J. Inf. Manag. 34(4), 474–488 (2014).

Houssein, E. H. et al. Task scheduling in cloud computing based on meta-heuristics: review, taxonomy, open challenges, and future trends. Swarm Evolut. Comput. 62, 100841 (2021).

Kruekaew, B. & Kimpan, W. Multi-objective task scheduling optimization for load balancing in cloud computing environment using hybrid artificial bee colony algorithm with reinforcement learning. IEEE Access 10, 17803–17818 (2022).

Bal, P. K. et al. A joint resource allocation, security with efficient task scheduling in cloud computing using hybrid machine learning techniques. Sensors 22(3), 1242 (2022).

Alghamdi, M. I. Optimization of load balancing and task scheduling in cloud computing environments using artificial neural networks-based binary particle swarm optimization (BPSO). Sustainability 14(19), 11982 (2022).

Abdel-Basset, M. et al. Task scheduling approach in cloud computing environment using hybrid differential evolution. Mathematics 10(21), 4049 (2022).

Abdullahi, M. et al. An adaptive symbiotic organisms search for constrained task scheduling in cloud computing. J. Ambient Intell. Hum. Comput. 14(7), 8839–8850 (2023).

Otair, M. et al. Optimized task scheduling in cloud computing using improved multi-verse optimizer. Clust. Comput. 25(6), 4221–4232 (2022).

Chhabra, A. et al. Energy-aware bag-of-tasks scheduling in the cloud computing system using hybrid oppositional differential evolution-enabled whale optimization algorithm. Energies 15(13), 4571 (2022).

Bezdan, T. et al. Multi-objective task scheduling in cloud computing environment by hybridized bat algorithm. J. Intell. Fuzzy Syst. 42(1), 411–423 (2022).

Jain, R. & Sharma, N. A quantum inspired hybrid SSA–GWO algorithm for SLA based task scheduling to improve QoS parameter in cloud computing. Clust. Comput. 26, 1–24 (2022).

Saravanan, G. et al. Improved wild horse optimization with levy flight algorithm for effective task scheduling in cloud computing. J. Cloud Comput. 12(1), 24 (2023).

Kuppusamy, P. et al. Job scheduling problem in fog-cloud-based environment using reinforced social spider optimization. J. Cloud Comput. 11(1), 99 (2022).

Pradeep, K. & Jacob, T. P. A hybrid approach for task scheduling using the cuckoo and harmony search in cloud computing environment. Wirel. Pers. Commun. 101, 2287–2311 (2018).

Rahbari, D. Analyzing meta-heuristic algorithms for task scheduling in a fog-based IoT application. Algorithms 15(11), 397 (2022).

Khaleel, M. I. Efficient job scheduling paradigm based on hybrid sparrow search algorithm and differential evolution optimization for heterogeneous cloud computing platforms. Internet of Things 22, 100697 (2023).

Imene, L. et al. A third generation genetic algorithm NSGAIII for task scheduling in cloud computing. J. King Saud Univ. Comput. Inf. Sci. 34(9), 7515–7529 (2022).

Al-Wesabi, F. N. et al. Energy aware resource optimization using unified metaheuristic optimization algorithm allocation for cloud computing environment. Sustain. Comput. Inform. Syst. 35, 100686 (2022).

Manikandan, N., Gobalakrishnan, N. & Pradeep, K. Bee optimization based random double adaptive whale optimization model for task scheduling in cloud computing environment. Comput. Commun. 187, 35–44 (2022).

Pirozmand, P. et al. An improved particle swarm optimization algorithm for task scheduling in cloud computing. J. Ambient Intell. Hum. Comput. 14(4), 4313–4327 (2023).

Iftikhar, S. et al. HunterPlus: AI based energy-efficient task scheduling for cloud–fog computing environments. Internet of Things 21, 100667 (2023).

Chandrashekar, C. et al. HWACOA scheduler: Hybrid weighted ant colony optimization algorithm for task scheduling in cloud computing. Appl. Sci. 13(6), 3433 (2023).

Mansouri, N. An efficient task scheduling based on Seagull optimization algorithm for heterogeneous cloud computing platforms. Int. J. Eng. 35(2), 433–450 (2022).

Krishnadoss, P., Chandrashekar C., & Poornachary, V. K. RCOA scheduler: Rider cuckoo optimization algorithm for task scheduling in cloud computing. Int. J. Intell. Eng. Syst. 15 34(24), e7228 (2022).

Natesan, G. et al. Optimization techniques for task scheduling criteria in IAAS cloud computing atmosphere using nature inspired hybrid spotted hyena optimization algorithm. Concurr. Comput. Pract. Exp. 34(24), e7228 (2022).

Almadhor, A. et al. A new offloading method in the green mobile cloud computing based on a hybrid meta-heuristic algorithm. Sustain. Comput. Inform. Syst. 36, 100812 (2022).

Shao, K., Hui, Fu. & Wang, Bo. An efficient combination of genetic algorithm and particle swarm optimization for scheduling data-intensive tasks in heterogeneous cloud computing. Electronics 12(16), 3450 (2023).

Chhabra, A. et al. Optimizing bag-of-tasks scheduling on cloud data centers using hybrid swarm-intelligence meta-heuristic. J. Supercomput. 78, 1–63 (2022).

Tamilarasu, P., & G. Singaravel. Quality of service aware improved coati optimization algorithm for efficient task scheduling in cloud computing environment. J. Eng. Res. (2023).

Jangu, N. & Raza, Z. Improved jellyfish algorithm-based multi-aspect task scheduling model for IoT tasks over fog integrated cloud environment. J. Cloud Comput. 11(1), 1–21 (2022).

Talha, A., Bouayad, A. & Malki, M. O. C. An improved pathfinder algorithm using opposition-based learning for tasks scheduling in cloud environment. J. Comput. Sci. 64, 101873 (2022).

Malti, A. N., Hakem, M., & Benmammar, B. A new hybrid multi-objective optimization algorithm for task scheduling in cloud systems. Clust. Comput. 1–24 (2023).

Malathi, K. & Priyadarsini, K. Hybrid lion–GA optimization algorithm-based task scheduling approach in cloud computing. Appl. Nanosci. 13(3), 2601–2610 (2023).

Zubair, A. A. et al. A cloud computing-based modified symbiotic organisms search algorithm (AI) for optimal task scheduling. Sensors 22(4), 1674 (2022).

Jakwa, A. G. et al. Performance evaluation of hybrid meta-heuristics-based task scheduling algorithm for energy efficiency in fog computing. Int. J. Cloud Appl. Comput. (IJCAC) 13(1), 1–16 (2023).

Singh, A., & Chatterjee, K. A multi-dimensional trust and reputation calculation model for cloud computing environments, in 2017 ISEA Asia Security and Privacy (ISEASP). IEEE, (2017).

Heidari, A. A. et al. Harris Hawks optimization: Algorithm and applications. Future Gen. Comput. Syst. 97, 849–872 (2019).

Spano, S. et al. An efcient hardware implementation of reinforcement learning: The q-learning algorithm. IEEE Access 7, 186340–186351 (2019).

Calheiros, R. N. et al. CloudSim: A toolkit for modeling and simulation of cloud computing environments and evaluation of resource provisioning algorithms. J. Softw. Pract. Exp. 41(1), 23–50 (2011).

HPC2N: The HPC2N Seth log; 2016. http://www.cs.huji.ac.il/labs/parallel/workload/l_hpc2n/.0

https://www.cse.huji.ac.il/labs/parallel/workload/l_nasa_ipsc/

Mangalampalli, S., et al. DRLBTSA: Deep reinforcement learning based task-scheduling algorithm in cloud computing. Multimed. Tools Appl. 1–29 (2023).

Acknowledgements

Researchers Supporting Project number (RSPD2023R1060), King Saud University, Riyadh, Saudi Arabia.

Author information

Authors and Affiliations

Contributions

SM, GRK wrote the main manuscript text; SNM supervised the research work; SA, MIK, DA analysed and interpreted the data; FAA, EAAI contributed analysis tools and re-write the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mangalampalli, S., Karri, G.R., Mohanty, S.N. et al. Fault tolerant trust based task scheduler using Harris Hawks optimization and deep reinforcement learning in multi cloud environment. Sci Rep 13, 19179 (2023). https://doi.org/10.1038/s41598-023-46284-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-46284-9

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.