Abstract

Digital image processing has a wide range of uses, including robotics and the automated inspection of industrial parts. Other uses include remote sensing by satellites and other spacecraft, image transmission and storage for business applications, medical image processing, and acoustic image processing. Image enhancement is the process of highlighting particular features of interest that are hidden in an image. This can be accomplished by altering the brightness, contrast, etc., so that the output is more suitable than the original image for a particular purpose. The proposed algorithm, which is based on convolution with the coefficient bounds of a subclass \(p-\Upsilon {\mathcal {S}}^*(t,\delta ,\vartheta )\) obtained using the Mittag-Leffler type Poisson distribution, is tested on three image data sets with different dimensions and image formats (JPEG, PNG and TIFF), and the PSNR, SSIM, MSE, RMSE, PCC and MAE values are computed to assess the quality of the enhanced images.


Introduction

Image processing is the practice of applying different techniques to an image in order to enhance it or extract useful information from it. It is a form of signal processing in which an image serves as the input, and the output may be another image or features and characteristics associated with the input image. Image processing is one of the fastest-developing technologies today and a major area of study in engineering and computer science. It fundamentally consists of three phases: importing the image with image acquisition tools, analysing and editing the image, and producing a report or a modified image as the result of the analysis. Image processing techniques fall into two categories, analogue and digital. Analogue image processing is applied to hard copies such as prints and photographs, and image analysts draw on several fundamentals of interpretation when applying these visual techniques. Digital image processing uses computers to manipulate images. With a digital approach, all types of data go through three general processes: pre-processing; enhancement and display; and information extraction. Image enhancement is the process of modifying digital images so that the results are better suited for display or further image analysis; that is, the original image is processed so that the resulting image is more suitable for a given purpose. To bring out important details, the process may include removing noise, sharpening or brightening the image. The goal of image enhancement is to make it easier for viewers to understand and gain insight from the information in images. This is done by modifying the properties of the image, and many techniques can enhance a digital image without damaging the original. Image enhancement techniques fall into two main categories:

- (i) Spatial domain methods.
- (ii) Frequency domain methods.

The spatial domain refers to the image plane itself, that is, the set of all pixels that make up an image, whereas frequency domain methods are based on modifying the Fourier transform of the image. This article uses the spatial domain approach, in which a grayscale image is represented by a two-dimensional matrix whose entries are the intensities of the pixels. Spatial domain processing can be expressed as

\(v(x,y)={\mathcal {T}}[u(x,y)],\)

where u(x, y) is the input image, v(x, y) is the processed image and \({\mathcal {T}}\) is an operator on u defined over some neighborhood of the point (x, y). The operator \({\mathcal {T}}\) can also operate on a set of input images. To produce an acceptable result, image enhancement often relies on operations such as convolution. Convolution is a simple mathematical operation that forms the basis of many common image processing techniques. By “multiplying together” two arrays of numbers, typically of different sizes but of the same dimensionality, convolution produces a third array of numbers of the same dimensionality. Image processing operators whose output pixel values are simple linear combinations of certain input pixel values can be implemented in this way.
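As an illustration of the convolution described above, the following minimal pure-Python sketch convolves a grayscale image, stored as a list of rows, with a small mask. It assumes "valid" output, meaning output pixels are computed only where the mask lies entirely inside the image, and it flips the mask as true convolution requires. The function name `convolve2d` and the example values are illustrative and are not taken from the paper.

```python
def convolve2d(image, kernel):
    """'Valid'-mode 2-D convolution of a grayscale image with a small kernel.

    image and kernel are lists of rows. The kernel is flipped in both
    directions (true convolution rather than correlation), and an output
    pixel is produced only where the kernel fits entirely inside the image,
    so the result has (H - kh + 1) rows and (W - kw + 1) columns.
    """
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    flipped = [row[::-1] for row in kernel[::-1]]  # rotate kernel by 180 degrees
    out = []
    for i in range(h - kh + 1):
        row = []
        for j in range(w - kw + 1):
            # Weighted sum of the kh x kw neighbourhood whose top-left corner is (i, j).
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    acc += flipped[a][b] * image[i + a][j + b]
            row.append(acc)
        out.append(row)
    return out


# Usage: a 3x3 averaging mask applied to a 4x4 image gives a 2x2 result.
img = [[1, 2, 3, 4],
       [5, 6, 7, 8],
       [9, 10, 11, 12],
       [13, 14, 15, 16]]
box = [[1 / 9] * 3 for _ in range(3)]
print(convolve2d(img, box))  # approximately [[6, 7], [10, 11]]
```

Each output pixel is a linear combination of the nine input pixels under the mask, which is the kind of operator described above; with the averaging mask, the output is a smoothed \(2\times 2\) image.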

In this paper, we apply convolution using the coefficients of a class of analytic functions defined through the Mittag–Leffler type Poisson distribution.

This article is structured as follows:

- The previous methods for image enhancement.
- Definitions related to the proposed algorithm.
- Mathematical approach of the suggested algorithm.
- Experimental analysis and conclusion.

Related work

Different techniques, such as blur reduction and denoising, are used to improve images1. To produce images that are more suitable than the originals, most image enhancement algorithms rely on spatial operations performed on the image pixels. The idea used most frequently in image enhancement techniques is modification of the image histogram2. This concept is popular because of its simplicity and adaptability to various situations. However, when image intensities are normalised, images enhanced with this technique can look washed out. A technique based on Joint Histogram Equalisation (JHE) was proposed to address this problem; it increases visual contrast by using information from neighbouring pixels3. Guo et al.4 optimised the illumination component iteratively, starting from the image's brightness as the initial illumination component; this method yields strong enhancement with visually appealing results. Hasikin and Mat Isa5 proposed another method for improving images based on fuzzy set theory, which achieved improved image quality with little processing time. The authors proposed a contrast factor based on variations in the gray-level values of neighbouring pixels, and the method enhanced image quality while retaining image details. Roy et al.6 proposed a Laplacian-based enhancement approach using fractional calculus, designed to remove the noise generated by the Laplacian operation for text embedded in video frames. Fu et al.7 proposed a fusion-based enhancement method for weakly illuminated images that combines several techniques for adjusting image illumination, and it successfully increased the illumination of such images. Zhang et al.8 proposed a dual illumination estimation-based method for automatic image exposure correction, in which multi-exposure image fusion is used to transform an input image containing both underexposed and overexposed regions into an image that is well exposed overall. Ibrahim et al.9 proposed a model based on a class of fractional-order heat equations using a hybrid fractional integral-differential operator, which can improve image quality to some extent.

Chen et al.10 developed a fuzzy-based contrast enhancement method for infrared finger vein images used in identity recognition. Fuzzy set theory is employed to address the low contrast, blurring and speckle noise of the acquired infrared image, and vein-pattern feature extraction and recognition are performed after the gray-level enhancement. Kumar and Bhandari11 proposed a fuzzy c-means clustering method for image enhancement that enhances perceptually invisible images while preserving their color and naturalness. In this method, the image pixels are grouped into clusters and assigned membership values for those clusters; the intensity level of each pixel is then modified in the spatial domain based on its membership values. Yang et al.12 proposed fuzzy c-means clustering with a cooperation center (FCM-co) for image enhancement. Using FCM-co, the authors divided the image pixels into clusters, assigned membership values for those clusters, modified the membership values and calculated the new pixel gray levels. More recently, Priya and Sruthakeerthi13 studied texture analysis in image processing using the initial coefficient bounds for a bi-univalent class \(C_\Sigma (\lambda ,t,\nu )\) subordinate to Horadam polynomials.

The studies discussed above investigated various methods for improving images, and these techniques considerably enhance the overall appearance of images. The main idea of this article is to propose an image enhancement algorithm that convolves the image pixels with a \(3 \times 3\) mask window built from the coefficient bounds obtained for a subclass of Sakaguchi kind functions. The proposed algorithm gives good results on the images and data sets tested in this work.

A set of definitions

Let \(\mathcal {A}\) denote the family of functions f analytic in the open unit disk \(\mathbb {U}=\{\zeta \in \mathbb {C}:|\zeta |<1\}\) and normalized by the conditions \(f(0) = 0\) and \(f^{\prime }(0)=1\). Such functions are of the form

\(f(\zeta )=\zeta +\sum _{n=2}^{\infty }o_n\zeta ^n,\quad \zeta \in \mathbb {U},\qquad (1)\)

and let \(\mathcal {S}\) be the subclass of \(\mathcal {A}\) consisting of the univalent functions.

Porwal and Dixit14 defined the Mittag-Leffler type Poisson distribution as,

where \(\mathbb {Y}(\lambda ,\delta ,\vartheta )(\zeta )\) belongs to the class \(\mathcal {S}\), and

Wiman, Agarwal and others15–21 defined the probability mass function of the Mittag-Leffler type Poisson distribution as

where

Recently, Alessa et al.22 studied the Mittag-Leffler type Poisson distribution through the convolution operator defined as

where

The following definition introduces a subclass of Sakaguchi kind functions using the Mittag-Leffler type Poisson distribution:

Definition 1

A function \(f \in \mathcal {A}\) is said to be in the class \(p-\Upsilon \mathcal {S}^*(t,\delta ,\vartheta )\) if it satisfies the condition

where \(|t|\le 1,t\ne 1\).

The following theorem gives a general bound on the coefficients \(|o_n|\) for the subclass \(p-\Upsilon \mathcal {S}^*(t,\delta ,\vartheta )\).

Theorem 1

If a function \(f \in \mathcal {A}\) of the form (1) is in the class \(p-\Upsilon \mathcal {S}^*(t,\delta ,\vartheta )\), then

The above result is sharp for the function \(g(\zeta )\) given by,

By using the initial values obtained from \(o_n\) in (2), a \(3 \times 3\) mask window is constructed to process the given set of images.

Image Quality Assessment (IQA) evaluates image quality, a characteristic property of an image, by measuring the perceived degradation of the image. Degradation is usually estimated by comparison with an ideal reference image. Image quality can be described in technical terms and evaluated objectively by measuring deviations from an ideal or perfect model; it also depends on how an observer perceives or interprets an image.

In order to compare the various outcomes of our experiments when working with computer vision challenges, we must select a strategy for gauging the similarity between two images. There are numerous methods and metrics that can be used to evaluate the quality of images. The measuring techniques that are frequently employed in the evaluation of image quality are Peak Signal to Noise Ratio (PSNR), Structural Similarity Index Measure (SSIM), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Pearson Correlation Coefficient (PCC) and Mean Absolute Error (MAE).

Definition 2

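The PSNR measures the ratio between the maximum possible power of a signal and the power of the noise that distorts its representation, expressed in decibels. For two images it is given by

\(\mathrm{PSNR}=10\log _{10}\left( \frac{S^2}{\mathrm{MSE}}\right) ,\)

and

\(\mathrm{MSE}=\frac{1}{MN}\sum _{m=1}^{M}\sum _{n=1}^{N}\left[ I_O(m,n)-I_E(m,n)\right] ^2,\)

where MSE is the Mean Squared Error, which measures the average squared pixel-by-pixel difference between the estimated and the actual values, \(I_O\) and \(I_E\) are the two images being compared (here, the original and the processed image), M and N are the numbers of rows and columns in the images, and S is the maximum possible pixel value of the input image (for example, 255 for 8-bit images).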

Definition 3

The Root Mean Squared Error (RMSE) is the square root of the MSE:

\(\mathrm{RMSE}=\sqrt{\mathrm{MSE}}.\)

As with MSE, a smaller RMSE value indicates a closer match between the images, whereas a larger RMSE value indicates greater dissimilarity.
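The error-based metrics in Definitions 2 and 3 can be computed directly from their formulas. The sketch below is pure Python, treats images as equally sized lists of rows, and assumes 8-bit images, so the peak value S defaults to 255. The function names are illustrative.

```python
import math


def mse(img_a, img_b):
    """Mean squared error over all M x N pixels of two equally sized images."""
    rows, cols = len(img_a), len(img_a[0])
    total = 0.0
    for row_a, row_b in zip(img_a, img_b):
        for a, b in zip(row_a, row_b):
            total += (a - b) ** 2
    return total / (rows * cols)


def rmse(img_a, img_b):
    """Root mean squared error: the square root of the MSE."""
    return math.sqrt(mse(img_a, img_b))


def psnr(img_a, img_b, peak=255.0):
    """PSNR in decibels; `peak` plays the role of S (255 assumed for 8-bit images)."""
    err = mse(img_a, img_b)
    if err == 0:
        return math.inf  # identical images: the ratio is unbounded
    return 10 * math.log10(peak ** 2 / err)


original = [[0, 0], [0, 0]]
processed = [[2, 2], [2, 2]]
print(mse(original, processed), rmse(original, processed))  # prints 4.0 2.0
```

Returning infinity for identical images is one common convention for the zero-MSE case; some tools report a capped value instead.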

Definition 4

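The SSIM index measures the similarity between two images:

\(\mathrm{SSIM}(a,b)=\frac{(2\xi _a\xi _b+c_1)(2\varsigma _{ab}+c_2)}{(\xi _a^2+\xi _b^2+c_1)(\varsigma _a^2+\varsigma _b^2+c_2)},\)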

where \(\xi _a\) and \(\xi _b\) are the pixel means of the image windows a and b, \(\varsigma _a^2\) and \(\varsigma _b^2\) are the variances of a and b, \(\varsigma _{ab}\) is the covariance of a and b, \(c_1=(r_1\mathcal {L})^2\) and \(c_2=(r_2\mathcal {L})^2\) are two constants that stabilize the division when the denominator is small, \(\mathcal {L}\) is the dynamic range of the pixel values, and \(r_1=0.01\) and \(r_2=0.03\) by default.
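A minimal sketch of the SSIM formula above follows. It evaluates the formula once over a single window covering the whole input, using population variances and covariance; standard SSIM implementations, including MATLAB's `ssim`, instead compute the index over local (often Gaussian-weighted) windows and average it, so their values will generally differ. The function name and the default \(\mathcal {L}=255\) (8-bit images) are assumptions.

```python
def ssim_single_window(win_a, win_b, dynamic_range=255.0, r1=0.01, r2=0.03):
    """SSIM of two equally sized windows, evaluating the formula once over the whole input.

    Means, (population) variances and the covariance are taken over all
    pixels; c1 = (r1 * L)^2 and c2 = (r2 * L)^2 as in the definition.
    """
    xs = [p for row in win_a for p in row]
    ys = [p for row in win_b for p in row]
    n = len(xs)
    mean_a = sum(xs) / n
    mean_b = sum(ys) / n
    var_a = sum((x - mean_a) ** 2 for x in xs) / n
    var_b = sum((y - mean_b) ** 2 for y in ys) / n
    cov_ab = sum((x - mean_a) * (y - mean_b) for x, y in zip(xs, ys)) / n
    c1 = (r1 * dynamic_range) ** 2
    c2 = (r2 * dynamic_range) ** 2
    numerator = (2 * mean_a * mean_b + c1) * (2 * cov_ab + c2)
    denominator = (mean_a ** 2 + mean_b ** 2 + c1) * (var_a + var_b + c2)
    return numerator / denominator


a = [[10, 20], [30, 40]]
b = [[40, 30], [20, 10]]
print(ssim_single_window(a, a), ssim_single_window(a, b))
```

Identical windows give an index of 1, while the mirrored window `b`, whose pixels are anti-correlated with those of `a`, gives a negative value even though both windows share the same mean and variance.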

Definition 5

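The Pearson Correlation Coefficient (PCC) is used to compare two images in tasks such as image registration and disparity measurement:

\(r=\frac{\sum _{i}(x_i-x_m)(y_i-y_m)}{\sqrt{\sum _{i}(x_i-x_m)^2}\sqrt{\sum _{i}(y_i-y_m)^2}},\)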

where \(x_i\) and \(y_i\) denote the intensities of the \(i^{th}\) pixel in the input and output images, and \(x_m\) and \(y_m\) denote the mean intensities of the input and output images. The PCC takes the value 1 if the two images are identical, 0 if they are completely uncorrelated, and -1 if they are completely anti-correlated, for example, if one image is the negative of the other.
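The PCC formula translates directly into code. The following pure-Python sketch flattens both images, subtracts the mean intensities \(x_m\) and \(y_m\), and normalises the covariance; the function name and the 8-bit negative used in the example are illustrative assumptions.

```python
import math


def pcc(img_x, img_y):
    """Pearson correlation coefficient between the pixel intensities of two images."""
    xs = [p for row in img_x for p in row]
    ys = [p for row in img_y for p in row]
    x_m = sum(xs) / len(xs)
    y_m = sum(ys) / len(ys)
    num = sum((x - x_m) * (y - y_m) for x, y in zip(xs, ys))
    den = math.sqrt(sum((x - x_m) ** 2 for x in xs)) * math.sqrt(sum((y - y_m) ** 2 for y in ys))
    if den == 0:
        raise ValueError("PCC is undefined when an image is constant")
    return num / den


img = [[10, 20], [30, 40]]
negative = [[255 - p for p in row] for row in img]  # 8-bit negative of img
print(pcc(img, img), pcc(img, negative))  # close to 1 and -1, up to rounding
```

The explicit check for a zero denominator reflects that the coefficient is undefined for a constant image, which has zero variance.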

Definition 6

The Mean Absolute Error (MAE) measures the average absolute difference in pixel values between two images. A lower MAE indicates that the distorted image is closer in quality to the original image.

\(\mathrm{MAE}=\frac{1}{n}\sum _{i=1}^{n}|a_i-b_i|,\)

where \(a_i\) and \(b_i\) are the pixel values at the \(i^{th}\) position of the input and output image respectively and n is the total number of pixels in the images.
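A minimal pure-Python sketch of the MAE formula, treating both images as equally sized lists of rows; the function name is illustrative.

```python
def mae(img_a, img_b):
    """Mean absolute error over the n pixels of two equally sized grayscale images."""
    diffs = [abs(a - b) for row_a, row_b in zip(img_a, img_b) for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


print(mae([[0, 10], [20, 30]], [[1, 8], [20, 34]]))  # prints 1.75
```

Because the differences are not squared, MAE penalises a few large pixel errors less heavily than MSE does.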

Proposed work

In this section, we present the mathematical formulation of the proposed method, which is based on the coefficients obtained for the class \(p-\Upsilon \mathcal {S}^*(t,\delta ,\vartheta )\).

The coefficients \(o_n\) are used to enhance the images. The processed image, denoted by \(I_E(m,n)\), is obtained by the convolution

\(I_E(m,n)=I_O(m,n)*\mathcal {M},\)

where \(I_O(m,n)\) is the original image, \(\mathcal {M}\) is the \(3 \times 3\) mask window and \(*\) is the convolution operation. The mask window is represented using the coefficients \(o_1, o_2\) and \(o_3\) as follows:

The initial coefficients for the class \(p-\Upsilon \mathcal {S}^*(t,\delta ,\vartheta )\) with \(t=-0.5, \delta =1, \lambda =0.01,\) and \(p=4999\) are given by,

The enhanced pixel values are computed by sliding the mask window over the grayscale pixel values of the original image, starting at the top-left corner of the image and moving through all pixel positions at which the fractional mask fits completely inside the image.

The image enhancement procedure using the proposed mask window is summarized in the following algorithm:

- Step 1: Convert the RGB images to grayscale images.
- Step 2: Set the size of the proposed mask window to \(3 \times 3\) pixels.
- Step 3: Fix the range of \(\vartheta\) as \(0< \vartheta < 1\).
- Step 4: Convolve the original image with the fractional mask in four directions (\(0^\circ\), \(45^\circ\), \(90^\circ\) and \(135^\circ\)).
- Step 5: Calculate the quality metrics for the enhanced image.
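The steps above can be sketched in code as follows. This is a hypothetical illustration, not the authors' MATLAB implementation. The arrangement of \(o_1, o_2, o_3\) in the mask and the exact construction of the four directions are not reproduced here, so the sketch takes the mask as an input and assumes that the \(45^\circ\), \(90^\circ\) and \(135^\circ\) masks are obtained by rotating the outer ring of the \(3\times 3\) mask clockwise by one, two and three positions (the way Kirsch-style compass masks are generated). The grayscale weights follow MATLAB's `rgb2gray`, and the mask in the usage example is a placeholder, not coefficients derived from the class.

```python
# Hypothetical sketch of Steps 1, 2 and 4; the mask values are placeholders.

def to_gray(rgb):
    """Step 1: RGB (rows of (R, G, B) tuples) to grayscale with rgb2gray-style weights."""
    return [[0.2989 * r + 0.5870 * g + 0.1140 * b for (r, g, b) in row] for row in rgb]


# The 8 outer cells of a 3x3 mask, listed clockwise from the top-left corner.
RING = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]


def rotate_mask_45(mask, steps):
    """Rotate the outer ring of a 3x3 mask clockwise by `steps` x 45 degrees (assumption)."""
    out = [row[:] for row in mask]
    vals = [mask[r][c] for r, c in RING]
    for k, (r, c) in enumerate(RING):
        out[r][c] = vals[(k - steps) % 8]
    return out


def convolve_valid(image, kernel):
    """3x3 convolution evaluated only where the mask fits completely inside the image."""
    h, w = len(image), len(image[0])
    flipped = [row[::-1] for row in kernel[::-1]]
    return [[sum(flipped[a][b] * image[i + a][j + b] for a in range(3) for b in range(3))
             for j in range(w - 2)]
            for i in range(h - 2)]


def enhance(gray, mask):
    """Step 4: average the results for the mask at 0, 45, 90 and 135 degrees."""
    results = [convolve_valid(gray, rotate_mask_45(mask, s)) for s in range(4)]
    h, w = len(results[0]), len(results[0][0])
    return [[sum(res[i][j] for res in results) / 4 for j in range(w)] for i in range(h)]


# Usage with a tiny RGB image and a PLACEHOLDER mask (not coefficients from the paper).
rgb = [[(16 * i, 16 * j, 128) for j in range(4)] for i in range(4)]
gray = to_gray(rgb)
placeholder_mask = [[0.0, 0.1, 0.0],
                    [0.1, 0.6, 0.1],
                    [0.0, 0.1, 0.0]]
enhanced = enhance(gray, placeholder_mask)
print(len(enhanced), len(enhanced[0]))  # prints 2 2
```

Under these assumptions, Step 3 would correspond to rebuilding the mask for each value of \(\vartheta\) in \(0<\vartheta <1\) and repeating the call. Step 5 would compare `enhanced` with the matching interior of `gray` (rows and columns 1 to H-2 and W-2) using the quality metrics in Definitions 2 to 6.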

To check that it works as intended, the algorithm is first applied to several types of images with different pixel dimensions. For this purpose, we use the RGB images “DOG”, “ARGYLE” and “X-RAY”, which are converted to the grayscale images shown in Fig. 1.

Experimental results and analysis

This section demonstrates the effectiveness of the proposed algorithm. Performance evaluations are carried out in MATLAB Online. To assess the quality of the processed images, we use PSNR, SSIM, MSE, RMSE, PCC and MAE, which are frequently used in the literature for this purpose.

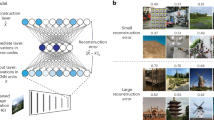

To analyze its performance, the proposed algorithm is tested on the images “DOG” of size \(1120\times 640\), the “ARGYLE” pattern of size \(1200\times 1200\) and “X-RAY” of size \(1597\times 1920\). Figures 2, 3 and 4 show the enhancement of the images “DOG”, “ARGYLE” and “X-RAY” for the angles \(0^\circ\), \(90^\circ\), \(45^\circ\) and \(135^\circ\), and for the average over all angles, at \(\vartheta =0.1\). Tables 1, 2 and 3 list the quality metrics for the images at different values of \(\vartheta\); for all three images, the best values of the quality metrics are obtained at \(\vartheta =0.1\). Figure 5 gives a graphical representation of Tables 1, 2 and 3. Figures 6, 7 and 8 show the input and enhanced images of “DOG”, “ARGYLE” and “X-RAY”, together with their mesh and histogram representations.

The algorithm is also tested on three data sets, “DAISY”, “MEDICAL” and “MISCELLANEOUS”, to check its robustness and stability. The “DAISY” data set consists of 764 images of about \(320 \times 240\) pixels with the ‘.jpg’ extension. The “MEDICAL” data set consists of 200 images of about \(1514 \times 2044\) pixels with the ‘.png’ extension. The “MISCELLANEOUS” data set consists of 44 images of about \(512 \times 512\) pixels with the ‘.tiff’ extension. The data sets were chosen to differ in number of images, image size and file format, so as to validate the proposed algorithm under varied conditions.

Table 4 lists the values of the quality metrics PSNR, SSIM, MSE, RMSE, PCC and MAE averaged over all images in each data set. The enhancement is again best at \(\vartheta =0.1\). Figures 9, 10 and 11 show the enhancement of sample images from the three data sets using the proposed algorithm.

In summary, the proposed algorithm gives good results for images of different sizes and file formats. It was tested here on JPEG, PNG and TIFF data sets; because it operates on grayscale pixel values, it can also be applied to other formats such as BMP, GIF, HEIC and DICOM once they are decoded to pixel arrays. As Fig. 5 shows, the quality metrics obtained for the test images are satisfactory. A key benefit of the algorithm is that it can be applied to images with a wide range of file extensions and large pixel dimensions.

Conclusion

The key contribution of this paper is a novel image enhancement algorithm that is simple and easy to implement. The algorithm is based on a convolution technique that uses the coefficient bounds obtained for the class \(p-\Upsilon \mathcal {S}^*(t,\delta ,\vartheta )\) of analytic functions to build a \(3\times 3\) mask window. It is observed that decreasing the parameter \(\vartheta\) improves the enhancement of the images, and the quality metrics are best at \(\vartheta =0.1\). Given the rapid development of computing and imaging technology, this work may also be applied in other areas of image processing, including image restoration, sharpening, edge detection and gamma correction.

Data availability

The following links are given for the images and data sets used in this study. Source file of “DOG”. Source file of “ARGYLE”. Source file of “X-RAY”. Source file of Data set 1—“DAISY”. Source file of Data set 2—“MEDICAL”. Source file of Data set 3—“MISCELLANEOUS”.

References

Sharma, D., Chandra, S. K. & Bajpai, M. K. Image Enhancement Using Fractional Partial Differential Equation. In 2019 Second International Conference on Advanced Computational and Communication Paradigms (ICACCP), 1–6 (IEEE, 2019).

Janan, F. & Brady, M. RICE: A method for quantitative mammographic image enhancement. Med. Image Anal. 71, 102043 (2021).

Agrawal, S., Panda, R., Mishro, P. K. & Abraham, A. A novel joint histogram equalization based image contrast enhancement. J. King Saud Univ.-Comput. Inf. Sci. 34(4), 1172–1182 (2022).

Guo, X., Li, Y. & Ling, H. LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 26(2), 982–993 (2016).

Hasikin, K. & Mat Isa, N. A. Adaptive fuzzy contrast factor enhancement technique for low contrast and nonuniform illumination images. SIViP 8(8), 1591–1603 (2014).

Roy, S. et al. Fractional Poisson enhancement model for text detection and recognition in video frames. Pattern Recogn. 52, 433–447 (2016).

Fu, X. et al. A fusion-based enhancing method for weakly illuminated images. Signal Process. 129, 82–96 (2016).

Zhang, Q., Nie, Y. & Zheng, W. S. Dual illumination estimation for robust exposure correction. Comput. Gr. Forum 38(7), 243–252 (2019).

Ibrahim, R. W., Jalab, H. A., Karim, F. K., Alabdulkreem, E. & Ayub, M. N. A medical image enhancement based on generalized class of fractional partial differential equations. Quant. Imaging Med. Surg. 12(1), 172 (2022).

Chen, L., Li, Z., Li, Z., Chen, S., Yang, Q. & Du, Y. A contrast enhancement method of infrared finger vein image based on fuzzy technique. In 2019 IEEE 14th International Conference on Intelligent Systems and Knowledge Engineering (ISKE), 307–310 (IEEE, 2019).

Kumar, R. & Bhandari, A. K. Fuzzified contrast enhancement for nearly invisible images. IEEE Trans. Circuits Syst. Video Technol. 32(5), 2802–2813 (2021).

Yang, L., Zenian, S. & Zakaria, R. Image enhancement method based on an improved fuzzy C-means clustering. Int. J. Adv. Comput. Sci. Appl. 13(8), 1 (2022).

Priya, H. & Sruthakeerthi, B. Texture analysis using Horadam polynomial coefficient estimate for the class of Sakaguchi kind function. Sci. Rep. 13(1), 14436 (2023).

Porwal, S. & Dixit, K. K. On Mittag–Leffler type Poisson distribution. Afr. Mat. 28, 29–34 (2017).

Agarwal, R. P. A propos d’une note de M. Pierre Humbert. C. R. Acad. Sci. Paris 236(21), 2031–2032 (1953).

Mahmood, T., Naeem, M., Hussain, S., Khan, S. & Altinkaya, S. A subclass of analytic functions defined by using Mittag–Leffler function. Honam Math. J. 42(3), 577–590 (2020).

Mittag-Leffler, G. M. Sur la nouvelle fonction \(E_\alpha (x)\). C. R. Acad. Sci. Paris 137, 554–558 (1903).

Mittag-Leffler, G. Sur la représentation analytique d’une branche uniforme d’une fonction monogène: cinquième note. Acta Math. 29(1), 101–181 (1905).

Mittag-Leffler, G. M. Une généralisation de l’intégrale de Laplace-Abel. C. R. Acad. Sci. Paris 137, 537–539 (1903).

Wiman, A. Über den Fundamentalsatz in der Teorie der Funktionen \(E_\alpha (x)\). Acta Math. 29(1), 191–201 (1905).

Wiman, A. Über die Nullstellen der Funktionen \(E_\alpha (x)\). Acta Math. 29(1), 217–234 (1905).

Alessa, N., Venkateswarlu, B., Reddy, P. T., Loganathan, K. & Tamilvanan, K. A new subclass of analytic functions related to Mittag–Leffler type Poisson distribution series. J. Funct. Spaces 2021, 1–7 (2021).

Author information


Contributions

All authors worked jointly on the manuscript and approved it. B.A. developed the theory and performed the computations. B.S.K. investigated and supervised the findings of this work.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aarthy, B., Keerthi, B.S. Enhancement of various images using coefficients obtained from a class of Sakaguchi type functions. Sci Rep 13, 18722 (2023). https://doi.org/10.1038/s41598-023-45938-y


DOI: https://doi.org/10.1038/s41598-023-45938-y
