Abstract

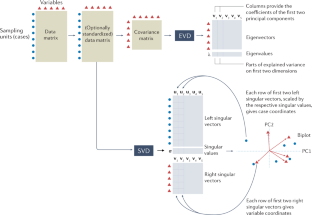

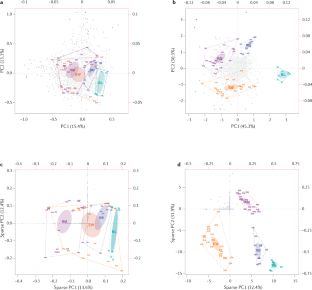

Principal component analysis is a versatile statistical method for reducing a cases-by-variables data table to its essential features, called principal components. Principal components are a few linear combinations of the original variables that maximally explain the variance of all the variables. In the process, the method provides an approximation of the original data table using only these few major components. This Primer presents a comprehensive review of the method’s definition and geometry, as well as the interpretation of its numerical and graphical results. The main graphical result is often in the form of a biplot, using the major components to map the cases and adding the original variables to support the distance interpretation of the cases’ positions. Variants of the method are also treated, such as the analysis of grouped data, as well as the analysis of categorical data, known as correspondence analysis. Also described and illustrated are the latest innovative applications of principal component analysis: for estimating missing values in huge data matrices, sparse component estimation, and the analysis of images, shapes and functions. Supplementary material includes video animations and computer scripts in the R environment.
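As a rough illustration of the ideas in the abstract, the following minimal sketch computes principal components with NumPy rather than with the R scripts that accompany the Primer. It rests on stated assumptions: the data are only column-centred (not standardized), the function name `pca_svd` and the simulated data are hypothetical, and the singular value decomposition is used as the computational route. It returns the case coordinates on the major components, the coefficients of the linear combinations, the proportions of variance explained and the low-rank approximation of the data table.

```python
import numpy as np

def pca_svd(X, n_components=2):
    """PCA of a cases-by-variables array via the SVD of the column-centred data.

    Assumption: variables are centred but not standardized.
    """
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    Xc = X - mean                                   # centre each variable
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    scores = U[:, :n_components] * s[:n_components] # coordinates of the cases
    coefs = Vt[:n_components].T                     # coefficients of the linear combinations
    explained = (s**2 / np.sum(s**2))[:n_components]  # proportion of total variance per component
    approx = mean + scores @ coefs.T                # approximation from the major components only
    return scores, coefs, explained, approx

# Simulated table (hypothetical): 100 cases, 5 variables driven by 2 latent factors plus noise
rng = np.random.default_rng(0)
latent = rng.normal(size=(100, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + 0.05 * rng.normal(size=(100, 5))

scores, coefs, explained, approx = pca_svd(X, n_components=2)
print("proportion of variance explained:", explained)
print("largest approximation error:", np.abs(X - approx).max())
```

Because the simulated table is essentially two-dimensional, two components should account for almost all of the variance, and the approximation should differ from the data only by the added noise. Plotting the two columns of `scores` and drawing the rows of `coefs` as arrows would give a basic biplot.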

Code availability

Several datasets and the R scripts that produce certain results in this Primer can be found on GitHub at: https://github.com/michaelgreenacre/PCA.

Change history

08 March 2023

A Correction to this paper has been published: https://doi.org/10.1038/s43586-023-00209-y

Acknowledgements

This review is dedicated to the memory of Professor Cas Troskie, who was the head of the Department of Statistics at the University of Cape Town, both teacher and mentor to M.G. and T.H., and who planted the seeds of principal component analysis in them at an early age. T.H. was partially supported by grants DMS2013736 and IIS1837931 from the National Science Foundation, and grant 5R01 EB001988-21 from the National Institutes of Health. E.T. was supported by the Stanford Data Science Institute.

Author information

Contributions

Introduction (M.G. & T.H.); Experimentation (M.G., P.J.F.G. & T.H.); Results (M.G., P.J.F.G., T.H. & E.T.); Applications (M.G., P.J.F.G., T.H. & E.T.); Reproducibility and data deposition (M.G., A.I.D’E. & A.M.); Limitations and optimizations (M.G., T.H., A.I.D’E., A.M. & E.T.); Outlook (M.G., T.H., A.I.D’E., A.M. & E.T.); Overview of the Primer (all authors).

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Reviews Methods Primers thanks Age Smilde, Carles Cuadras and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Related links

amap: https://CRAN.R-project.org/package=amap

elasticnet: https://CRAN.R-project.org/package=elasticnet

fdapace: https://CRAN.R-project.org/package=fdapace

irlba: https://CRAN.R-project.org/package=irlba

Musical illustration of the SVD: https://www.youtube.com/watch?v=JEYLfIVvR9I

onlinePCA: https://CRAN.R-project.org/package=onlinePCA

PCAtools: https://github.com/kevinblighe/PCAtools

pca3d: https://CRAN.R-project.org/package=pca3d

RSDA: https://CRAN.R-project.org/package=RSDA

RSpectra: https://CRAN.R-project.org/package=RSpectra

softImpute: https://CRAN.R-project.org/package=softImpute

stats: https://www.R-project.org/

symbolicDA: https://CRAN.R-project.org/package=symbolicDA

Supplementary information

43586_2022_184_MOESM2_ESM.mp4

Supplementary Video 2 A dynamic transition from the regular PCA to the PCA of the four tumour group centroids, as weight is transferred from the individual tumours to the tumour group centroids. This shows how the centroid analysis separates the groups better in the two-dimensional PCA solution, as well as how the highly contributing genes change.

43586_2022_184_MOESM3_ESM.mp4

Supplementary Video 3 A dynamic transition from the PCA of the group centroids to the corresponding sparse PCA solution. This shows how most genes are shrunk to the origin, and are thus eliminated, while the others are generally shrunk to the axes, which means they are contributing to only one PC. A few genes still contribute to both PCs.

Glossary

- Active variables: Variables used to construct the principal component analysis solution.

- Biplot: Joint representation in principal component analysis of the sampling units (usually the rows of the data matrix) and the variables (the columns). The sampling units are shown as points in a scatterplot, often using the principal components as coordinates, and the variables are shown as arrows obtained from the right singular vectors.

- Biplot axis: Axis in the direction of the variable arrow in a biplot.

- Bootstrap: Process aimed at assessing the statistical variability of a solution by repeatedly creating a bootstrap dataset, derived from the original dataset by sampling the cases with replacement, and computing the solution each time.

- Covariance matrix: Matrix containing the covariances between all pairs of variables.

- Dense: In the context of a data matrix, the presence of very few or no zeros; in the context of principal component analysis, the presence of no zeros in the principal component coefficients.

- Eigenvalue: In principal component analysis, a value indicating the variance accounted for by a principal component.

- Eigenvalue decomposition: Reconstruction of any square and symmetric matrix through a sum of rank-one matrices, each the outer product of an eigenvector with itself (vvᵀ) times the corresponding eigenvalue.

- Eigenvector: In principal component analysis, the vector that provides the linear combination for a principal component.

- Euclidean distance: The measure of distance between two points defined as the length, in the physical sense, of the shortest straight line connecting these points.

- Least-squares matrix approximation: Approximation of a data matrix such that the sum of squared differences between the values in the data matrix and the corresponding approximated values is minimized.

- Linear combination: For a set of variables, a sum of scalar coefficients times the variables.

- Low-rank matrix approximation: Approximation of a matrix by one of lower rank.

- Nonlinear multivariate analysis: General strategy that optimally assigns numerical values to the categories of a categorical variable; in the context of principal component analysis, this strategy helps to increase the variance accounted for by the principal components.

- Passive variables: Variables that are not used to determine the principal component analysis solution and are fitted into the solution afterwards, also called supplementary variables.

- Permutation test: General computational method that compares a statistic of observed data with the distribution of the statistic simulated many times using data with the values randomly permuted under a certain null hypothesis.

- Principal axis: The same as a dimension in principal component analysis; the direction along which the projections of the sampling units have maximal variance while being uncorrelated with the projections on the other principal axes.

- Principal coordinates: The coordinates of the sampling units or variables on a dimension that have average sum of squares equal to the variance accounted for by that dimension.

- Regressed: In the context of principal component analysis, using multiple regression to predict a variable from the principal components.

- Scree plot: Plot of eigenvalue by dimension, often used for selecting the number of principal component analysis dimensions as those lying above the straight line (scree) that goes approximately through the higher dimensions.

- Shrinkage penalty: A term added to the objective function in order to reduce the absolute value of certain quantities being estimated; for example, the singular values in matrix completion, or the principal component coefficients in sparse principal component analysis.

- Singular value: In principal component analysis, the square root of the variance accounted for by a principal component.

- Singular value decomposition: Reconstruction of any matrix by the weighted sum of rank-one matrices, each the outer product of a left and a right singular vector (uvᵀ) multiplied by the corresponding positive singular value.

- Singular vectors: In principal component analysis (PCA), the vectors of the singular value decomposition that lead to the row and column coordinates in a PCA biplot.

- Sparsity: In the context of a data matrix, the presence of many zeros; in the context of principal component analysis, the presence of many zeros in the principal component coefficients.

- Standard coordinates: Coordinates in a principal component analysis that are standardized to have the average sum of squares equal to 1.
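Several of the glossary terms above are linked numerically, and a small sketch may help to make the links concrete. The NumPy code below is an illustration under stated assumptions, not the Primer's own computation: the data are simulated, the centred matrix is divided by the square root of the number of cases (so that covariances use divisor n rather than n − 1), and all variable names are hypothetical. It relates singular values to eigenvalues, rebuilds the covariance matrix from rank-one terms, and forms standard and principal coordinates of the cases.

```python
import numpy as np

# Simulated cases-by-variables table (hypothetical): 50 cases, 4 correlated variables
rng = np.random.default_rng(1)
X = rng.normal(size=(50, 4)) @ rng.normal(size=(4, 4))
n = X.shape[0]

# Assumption: centre the columns and scale by 1/sqrt(n), so that Z'Z is the
# covariance matrix with divisor n
Z = (X - X.mean(axis=0)) / np.sqrt(n)
cov = Z.T @ Z

# Singular value decomposition of Z and eigenvalue decomposition of cov
U, s, Vt = np.linalg.svd(Z, full_matrices=False)
eigvals = np.linalg.eigh(cov)[0][::-1]      # eigh returns ascending order; reverse it

# Each singular value is the square root of the corresponding eigenvalue
print(np.allclose(s**2, eigvals))

# Eigenvalue decomposition: sum of eigenvalue times the outer product v v'
recon = sum(lam * np.outer(v, v) for lam, v in zip(s**2, Vt))
print(np.allclose(recon, cov))

# Standard coordinates of the cases: average sum of squares equal to 1
standard = np.sqrt(n) * U
# Principal coordinates of the cases: average sum of squares equal to the eigenvalue
principal = standard * s

print((standard**2).mean(axis=0))
print((principal**2).mean(axis=0), eigvals)
```

With divisor n − 1 instead of n, the same relationships hold after replacing `np.sqrt(n)` by `np.sqrt(n - 1)` throughout and averaging with the matching divisor.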

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Greenacre, M., Groenen, P.J.F., Hastie, T. et al. Principal component analysis. Nat Rev Methods Primers 2, 100 (2022). https://doi.org/10.1038/s43586-022-00184-w

DOI: https://doi.org/10.1038/s43586-022-00184-w
